Abstract

Node localization or positioning is essential for many position aware protocols in a wireless sensor network. The classical global poisoning system used for node localization is limited because of its high cost and its unavailability in the indoor environments. So, several localization algorithms have been proposed in the recent past to improve localization accuracy and to reduce implementation cost. One of the popular approaches of localization is to define localization as a least square localization (LSL) problem. During optimization of LSL problem, the performance of the classical Gauss–Newton method is limited because it can be trapped by local minima. By contrast, differential evolution (DE) algorithm has high localization accuracy because it has an ability to determine global optimal solution to the LSL problem. However, the convergence speed of the conventional DE algorithm is low as it uses fixed values of mutation factor and cross-over probability. Thus, in this paper, a self-adaptive mutation factor cross-over probability based differential evolution (SA-MCDE) algorithm is proposed for LSL problem to improve convergence speed. The SA-MCDE algorithm adaptively adjusts the mutation factor and cross-over probability in each generation to better explore and exploit the global optimal solution. Thus, improved localization accuracy with high convergence speed is expected from the SA-MCDE algorithm. The rigorous simulation results conducted for several localization algorithms declare that the propose SA-MCDE based localization has about (40–90) % more localization accuracy over the classical techniques.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

The use of wireless sensor network (WSN) has become quite predominant in recent years because of availability of low cost and energy efficient wireless sensor nodes [1]. Large number of such sensor nodes can be connected to form a WSN that can be used for disaster relief operation, military surveillance, environmental monitoring, medical observations, home applications and so on [2]. The sensor nodes in WSN can be deployed either randomly or in a systematic way. During sensing certain phenomena (such as temperature, humidity, pressure, position, vibration, sound, chemical concentration etc.), each node in a WSN is supposed to know its location in physical world. If the location of the event is unknown, detection of that event is not particularly useful [3].

The global poisoning system (GPS) is a popular scheme that is used for outdoor positioning and this scheme requires a clear line of sight (LOS) between the satellite and the unknown node without any obstacles [4]. However, the GPS has limitations, such as high energy consumption and expensive when it is deployed in a large quantity. Hence, it is not recommended to deploy GPS module in each node. Alternatively, few nodes can be selected as beacons (or anchors) and GPS modules can be deployed only in these beacons. Through localization process and with the aid of these beacons, remaining nodes can determine their locations. This strategy can effectively minimize energy consumption as well as the cost of implementation.

During last two decades, numerus localization techniques were introduced to minimize localization error. All these localization techniques are mainly classified as classical techniques and soft computing based techniques. The classical localization schemes are again classified as range based and range free schemes. Range-based localization techniques require distance or angle between sensor nodes to estimate node position. Using simple geometry, this information can be used to derive information about node positions. When distances between entities are used, the approach is called multilateration; when angles between nodes are used, one talks about angulation. Range-free localization algorithms do not require distance or angle information between target node and anchor node, but depends on the topological information.

Range-based algorithms provide better accuracy compared to range-free localization algorithms, but they are not so economic [5]. Examples of this range based schemes angle-of-arrival (AoA) [6], time of arrival (ToA) [7], time difference of arrival (TDoA) [8], acoustic energy [9], and received signal strength indicator (RSSI) [10, 11]. The AoA technique requires deploying an extra antenna in an appropriate direction, which may impose additional cost. Both the ToA and TDoA techniques require that all the receiving nodes to be perfectly synchronized. These techniques have high positioning accuracy but need additional hardware. Thus, among all range based techniques, the RSSI is cost effective as it does not required much equipment, but its accuracy still needs to be improved.

By contrast, the range-free techniques are based on pattern matching and Hop count but not on distance or angle. Thus their implementation cost is low at the price of low accuracy. Examples of this approach include centroid algorithm [12], DV-Hop technique [13], and amorphous algorithm [14]. In centroid algorithm, nodes infer proximity to reference points and then localize themselves to the centroid of the reference points. The DV-Hop used Hop count between nodes instead of distance estimation. Hence, when the DV-Hop is used for implementing in high dense sensor network, its poisoning error also increases consistently. Unlike DV-Hop, the amorphous algorithm computed Hop distance instead of linear distance among the nodes. Hence, it’s positioning accuracy higher than DV-Hop algorithm. There is a clear trade-off between range based and range free techniques with respect to poisoning accuracy and implementation cost. Hence localization is defined as least square localization (LSL) problem and it is solved by several soft computing techniques.

Recently, the applications of soft computing techniques had gained a great interest. Since, the mobile node localization also can be formulated as an optimization problem, the gradient technique such as Gauss–Newton algorithm is used for location in [15]. However, the gradient based techniques have a possibility of trapped by local minima and also these techniques require appropriate initial conditions of desired parameters. Thus, the gradient based techniques can be replaced by metaheuristic optimization techniques (MOTs) to obtain better localization accuracy. Various MOTs such as flower pollination algorithm (FPA), firefly algorithm (FA), grey wolf optimization (GWO), particle swarm optimization (PSO), bacterial foraging algorithm (BFA), butterfly optimization algorithm (BOA), particle filtering NLS (PF-NLS) initial optimization algorithms have used for node localization of sensor networks in the literature [16,17,18,19,20,21,22]. Similarly, the popular differential evolution (DE) algorithm is also applied for node localization in the literature [23,24,25]. Though each MOT has its own merits, the DE algorithm proposed by Storn and Price [26] has become popular because of its ability to reach global optima irrespective of initial parameters and consists of limited control parameters. Despite DE algorithm has an excellent performance, its convergence speed still seems slow because the DE algorithm is sensitive to the choice of mutation factor and cross over probability. In general, selection of such control parameters is a tedious task. The fixed control parameter does not guarantee the better individuals. As a result, a self-adaptive mutation factor and cross-over probability differential evolution (SA-MCDE) algorithm was proposed, which has self-adaptive mechanism to vary mutation factor and cross over probability in each generation [27]. The SA-MCDE algorithm thoroughly explores solution in the initial generations and exploits more the solution in the final few generations. Thus, this adaptive selection of control parameters guarantees better solutions and also provides high convergence speed. So, this paper aims at designing SA-MCDE based localization technique, that has fast convergence, accurate positioning and low implementation cost.

The paper is organized as follows. Optimization of localization problem along with Gauss–Newton method is described in Sect. 2. The proposed SA-MCDE localization technique in detail is given in Sect. 3. The simulation analyses and conclusions are presented in Sects. 4 and 5 respectively.

2 Localization problem formulation

Let us consider, in a given two dimensional sensor field, N position aware anchor nodes an, n = 1, 2,…, N and M unknown sensor nodes bm, m = 1, 2,…, M are deployed. Deployment of these nodes can be either systematic way or in a random fashion. The positions of nth anchor node and mth unknown node are given as (xn, yn) and (xm, ym) respectively. All these nodes are assumed to be with in the radio rang R, therefore an, bm ϵ R2. It is also assumed that, each unknown node have distance measurement from all anchor nodes. That means, the mth node contains Euclidean distance dnm between an and bm. This distances dnm is also assumed to be within the radio range R, because a communication link exists between an and bm only if the distances dnm is less than R. The aim of unknown node localization is to estimate the coordinates of M unknown nodes using N anchor nodes. Thus, localization problem can be formulated to determine coordinates of bm with the obtained distance measurements dnm as:

where \( \left\| {a_{n} - b_{m} } \right\| = \sum\nolimits_{n = 1}^{N} {\sqrt {\left( {x_{n} - x_{m} } \right)^{2} + \left( {y_{n} - y_{m} } \right)^{2} } } \) and \( \hat{d}_{nm} \) is the noisy measurement of the distance dnm, which is obtained from RSSI. The noisy distance measurement is given by:

Hence, the solution from localization problem can be obtained from:

A centralized Gauss–Newton method is commonly used for solving this least squares localization problem. The Gauss–Newton technique is a gradient descent technique that updates the unknown node position in the reverse direction of their gradient. The unknown node location is updated in the (i + 1)th iteration as:

Let, \( A = \nabla_{{b_{m} }} \left[ {s_{nm} (b_{m} )} \right]^{T} = \frac{\partial }{{\partial b_{m} }}F(b_{m} ) \) and \( B = \left[ {\hat{d}_{nm} } \right]^{T} \) then

Here, α is rate learning parameter.

The gradient based methods such as Gauss–Newton has high converge speed, but they can be trapped by local minima. Also, gradient techniques need appropriate choice of initial states along with differentiable and continuous cost functions. These difficulties may be overcome by replacing gradient descent techniques with MOTs [12]. The MOTs work without derivative information of the cost function and hence can accept discontinuous cost functions. The MOTs update the desired parameters from random initial positions and converge the cost function precisely to global minima.

3 Proposed SA-MCDE algorithm based localization

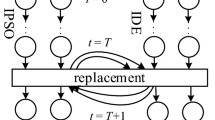

Among the various optimization techniques, the DE algorithm is found to be more appropriate due to its fast convergence and optimal solutions [26]. However, the DE algorithm is much sensitive to selection of mutation factor and cross-over probability. So, and SA-MCDE was proposed, which adapts mutation factor and cross-over probability in each generation [27]. The adaptive mutation factor may retain population diversity and adaptive cross-over probability may firmly explore the search space for global optima. So, an improved solution is expected from SA-MCDE, a thus it has been applied in several engineering applications [28,29,30]. SA-MCDE is a simple, efficient and robust optimization technique. The SA-MCDE algorithm uses four stages, namely, initialization, mutation, crossover and selection to obtain global optimum. Figure 1 shows the flow chart of the SA-MCDE employed for the node localization. The process implemented for node localization is summarized as follows.

Initialization SA-MCDE begins its search from a randomly initialized population containing P individuals, where each individual contains two variables corresponding to x and y axes of unknown node. The pth individual of the (P × 2)—dimensional population set \( \varvec{w}_{m} \) in the gth generation is expressed as:

where m refers to index of unknown node.

Adaptive Mutation and crossover Mutation operation explores the search space with an adaptive mutation factor F. In each generation, DE algorithm randomly samples three other individuals \( \varvec{w}_{m}^{{g,r_{1} }} \), \( \varvec{w}_{m}^{{g,r_{2} }} \) and \( \varvec{w}_{m}^{{g,r_{2} }} \) from the same generation and produces a mutant vector \( v_{m}^{g,p} \) by adding an appropriately scaled difference vector of two individual to the third vector. The indexes of these randomly chosen vectors are integers, mutually different and r1, r2, r3\( \in \) {1, 2,…, P}. The randomly chosen integers r1, r2 and r3 are also taken to be different from the running index p. This can be expressed mathematically as follows:

The mutation factor \( F \in (0,1] \) is a positive real-valued number and its controls the amplification of the differential variation \( \left( {\varvec{w}_{m}^{{g,r_{2} }} - \varvec{w}_{m}^{{g,r_{3} }} } \right) \).

In order to improve diversity of the perturbed vectors, crossover operation is used. The crossover operation generates a trail vector um by restoring certain parameters of the target vector wm with randomly selected parameters of mutant vector vm. The crossover operator effectively shuffles parameters with a crossover probability Cp\( \in \)(0, 1] about to increase the search space for a better solution. The obtained dth variable in the trail vector is given by:

where d = 1 or 2, that means 1 corresponding to x-axis position and 2 corresponding to y-axis position. Further, randd\( \in \) [0, 1] is the random value generated for dth variable and drand is a randomly chosen index, that is 1 or 2, which ensures that the trail vector gets at least one parameter from mutant vector.

The SADA algorithm uses self-adaptive control strategy for varying the mutation factor F and crossover probability Cp during each generation in order to find best solution with high rate of convergence. The self-adaptive control parameters \( F^{g + 1} \) and \( C_{p}^{g + 1} \) are obtained in generation g + 1 as:

where randj\( \in \) {1, 2, 3, 4}, are uniform pseudo-random values lies between 0 and 1, τ1 and τ2 are constants that are used to define F and Cp respectively. The values of τ1, τ2, F1 and F2 are taken to be 0.1, 0.1, 0.1 and 0.9, respectively according to [27]. The new F takes value from [0.1, 1.0] and the new Cp from [0, 1] randomly. The control parameter values \( F^{g + 1} \) and \( C_{p}^{g + 1} \) are updated prior to mutation as they involved in mutation, crossover, and selection operations.

Fitness evaluation In the fitness evaluation stage the trail vector \( \varvec{u}_{m}^{g,p} \) and the target vector \( \varvec{w}_{m}^{g,p} \) are fed to a fitness (cost) function to know their fitness value. The fitness values of the pth individual in the gth generation has been computed for both trail and target vectors from:

Selection The DE algorithm uses a greedy selection. The selection operation compares fitness value of each individual in both trail vectors and target vectors. The individual with minimum fitness value can be survived and carried to the next generation. So, the cost minimization problem ca be defined as:

The whole procedure will be repeated from mutation stage till termination criterion is met. Termination can be defined either based on number of generations or meeting minimum acceptable fitness value.

Optimal solution The best individual with minimum fitness value is considered as the optimal position for unknown node m at the end of termination. From this optimal solution, the position of unknown node is estimated as:

Similarly, the entire procedure is repeated for finding the optimal positions of remaining M− 1 nodes.

4 Simulation analyses and results

In this section, the computer simulations have been conducted to show effective ness of the proposed SA-MCDE Localization technique. The proposed and classical algorithms are simulated in Matlab. In these simulations, we have deployed 100 unknown sensor nodes and 10 position aware anchor nodes in a region of 100 × 100 square meters. Among 10 anchor nodes, 4 anchor nodes are placed at (25 m, 25 m), (25 m, 75 m), (75 m, 25 m), (75 m, 75 m), and remaining 6 anchor nodes are deployed randomly to increase connectivity in the network. All unknown nodes are placed at random positions. To eliminate randomness of position estimates, we have averaged the position estimates by applying each localization techniques 100 times repeatedly at each unknown node. The parameters of various localization techniques have been chosen based on extensive simulations conducted with different values. The selected parameters are listed in Table 1. The simulation results presented in this section mainly focusses on localization accuracy and convergence speed of various localization techniques. The localization accuracy can be computed mainly based on the measures namely, Localization Error (LE), Average Localization Error (ALE) and Root Mean Square Error (RMSE), which are defined in Eqs. (15), (16) and (17) respectively.

where M is number of unknown nodes, (xm, ym) is the true location of unknown node m, and \( (\hat{x}_{m} ,\hat{y}_{m} ) \) is the estimated location of unknown node m.

Figure 2 shows distribution of anchor nodes, unknown nodes and estimated positions of unknown node using various localization techniques. From this figure, it is found that the localization accuracy of the proposed SA-MCDE localization is high compared to the classical technique. Among various localization schemes, the range free technique such as DV-Hop results high localization error. So, there is a huge deviation between true positions and estimated positions. By contrast, the range based techniques are fairly performing better compared to range DV-Hop technique. Among various range-based techniques, the proposed SA-MCDE localization has high degree of accuracy, as the true node position and estimated node position almost coincides, which is show in Fig. 2e. This is due to the fact that the SA-MCDE has true global optimization ability. Further, the localization error computed for each node in the network using different localization techniques is plotted in Fig. 3. As seen in this figure, there is a large variation of localization error for different nodes while using DV-Hop and RSSI localization techniques. Because these techniques fail to estimate position of the unknown node which is located far away to all anchor nodes. By contrast, the optimization techniques can optimize the localization problem equally to all nodes irrespective to their locations. Therefore, an uninform error distribution can be observed for optimization techniques based localization as depicted in Fig. 3c–e.

Table 2 describes the accuracy comparisons in terms of both ALE and RMSE among various localization techniques for different load densities. In our simulations, low, medium and high densities are corresponding to deployment of 20, 50, and 100 unknown nodes respectively. As the load density increases, the network connectivity between sensor nodes become high and the number of anchor nodes available within the communication range increases. This leads to decrease localization error as presented in Table 2. This is further observed in Fig. 4, where the ALE of different techniques is presented while increasing number of nodes from 10 to 100. As seen in this figure, the SA-MCDE localization has the minimum localization error compared to all other techniques as the number of nodes increases. Similarly, localization error of various techniques while varying anchor nodes from 4 to 20, keeping number of unknown nodes fixed at 100 is illustrated in Fig. 5. The accuracy of the estimated position is also depends on number of deployed anchors as the connectivity between unknown node and all anchors increases with increased number of anchors. As a result the localization error decreases along with number of anchors as shown in this figure.

At last, the convergence speed in terms of number of iteration or generation to reach minimum least square distance error is measured by using different optimization technique and plotted in Fig. 6. For Gauss–Newton method, the convergence speed is measured with respective to number of iterations and for DE algorithm and SA-MCDE algorithm, the convergence speed is measured with respective to number of generations. The least square distance error is measured from Eq. (1). Though, the Gauss–newton method has high convergence speed, it is frequently trapped by local minima and results high least square error. By contrast, the DE algorithm and SA-MCDE algorithm have an ability to reach global optima and results minimum least square error. Comparing DE algorithm, the convergence speed of SA-MCDE algorithm is further high as depicted in this figure, because it has a self-controlling mechanism to adjust mutation factor and cross-over probability.

Finally, from the regions simulation study, it is understood that, there is a clear trade-off between range based and range free localization techniques. Thus, localization is defined as LSL problem. During optimizing LSL problem, compared to the Gauss–Newton algorithm the DE algorithm has better localization accuracy in terms of low ALE. The performance and convergence speed of DE algorithm is further improved by including self-adaptive control parameter selection mechanism. Thus, the SA-MCDE algorithm is consistently performing well with high convergence speed under different conditions including at various node densities and for different anchor nodes.

5 Conclusions

Many networking protocol and application require appropriate localization of nodes. The Quality of Service (QoS) of WSN is depends on localization accuracy. In this paper, we investigate SA-MCDE algorithm based localization technique and compared with DE algorithm, Gauss–Newton and classical localization algorithms. Among various localization techniques, the range based techniques have better localization accuracy but they require extra hardware. On the other hand rang free technique are cost effective but they are limited by poor accuracy. So the propose SA-MCDE algorithm localization is found to be better alternative. Through exhaustive simulation study, we found that the SA-MCDE algorithm localization is robust and has better localization accuracy with fast convergence speed.

Generally, MOTs tries to find an optimal solution considering all the controlling parameters. In several problems, these factors are enormous and expressing them in quantity and establishing relationships among these require voluminous calculations. Therefore, developing new MOTs with reduced complexity is always constitutes a promising novel research area. Further, recently, unmanned aerial vehicle (UAV) assisted node localization has become popular as they provide better distance estimates because of clear line of sight between areal anchors and unknown nodes, which may lead to better positioning. Thus, in summary, we can say that localization of WSN continues to be an important research challenge.

References

Harl H, Willig A (2005) Protocols and architectures for wireless sensor networks. Wiley, West Sussex

Akyildiz IF, Su W, Sankarasubramaniam Y, Cayirci E (2002) A survey on sensor networks. IEEE Commun Mag 40:102–114

Akyildiz IF, Su W, Sankarasubramaniam Y, Cayirci E (2002) Wireless sensor networks: a survey. Comput Netw 38:393–422

Chizari H, Poston T, Abd Razak S, Abdullah AH, Salleh S (2014) Local coverage measurement algorithm in GPS-free wireless sensor networks. Ad Hoc Netw 23:1–17

Aspnes J, Eren T, Goldenberg DK et al (2006) A theory of network localization. IEEE Trans Mob Comput 5:1663–1678

Wen F, Liang C (2015) Fine-grained indoor localization using single access point with multiple antennas. IEEE Sens J 15:1538–1544

Tarrío P, Bernardos AM, Casar JR (2012) An energy-efficient strategy for accurate distance estimation in wireless sensor networks. Sensors 12:15438–15466

Xiong H, Chen Z, Yang B, Ni R (2015) TDoA localization algorithm with compensation of clock offset for wireless sensor networks. China Commun 12:193–201

Liu Y, Hu YH, Pan Q (2012) Distributed, robust acoustic source localization in a wireless sensor network. IEEE Trans Signal Process 60:4350–4359

Maddumabandara A, Leung H, Liu M (2015) Experimental evaluation of indoor localization using wireless sensor networks. IEEE Sens J 15:5228–5237

Xu Y, Zhou J, Zhang P (2014) RSS-based source localization when path-loss model parameters are unknown. IEEE Commun Lett 18:1055–1058

Bulusu N, Heidemann J, Estrin D (2000) GPS-less low-cost outdoor localization for very small devices. IEEE Pers Commun 7:28–34

Niculescu D, Nath B (2003) DV based positioning in ad hoc networks. Telecommun Syst 22:267–280

Liu Y (2011) An adaptive multi-hop distance localization algorithm in WSN. Manuf Autom 33:161–163

Cheng BH, Vandenberghe L, Yao K (2009) Distributed algorithm for node localization in wireless ad-hoc networks. ACM Trans Sens Netw 6:8–20

Kulkarni R, Venayagamoorthy G, Cheng X, Maggie (2009) Bio-inspired node localization in wireless sensor networks. In: Proceedings of IEEE international conference on systems, man and cybernetics. https://doi.org/10.1109/ICSMC.2009.5346107

Arora S, Kaur R (2017) Nature inspired range based wireless sensor node localization algorithms. Int J Interact Multimed Artif Intell 4:7–17

Arora S, Singh S (2017) Node localization in wireless sensor networks using butterfly optimization algorithm. Arab J Sci Eng 42:3325–3335

Rajakumar R, Amudhavel J, Dhavachelvan P, Vengattaraman T (2017) GWO-LPWSN: grey wolf optimization algorithm for node localization problem in wireless sensor networks. J Comput Netw Commun. https://doi.org/10.1155/2017/7348141

Nagireddy V, Parwekar P, Mishra TK (2018) Velocity adaptation based PSO for localization in wireless sensor networks. Evol Intel. https://doi.org/10.1007/s12065-018-0170-4

Ni J, Li Z, Qi Y (2018) A gas source localization algorithm based on NLS initial optimization of particle filtering. Evol Intel. https://doi.org/10.1007/s12065-018-0191-z

Elbes M, Alzubi S, Kanan T (2019) A survey on particle swarm optimization with emphasis on engineering and network applications. Evol Intel. https://doi.org/10.1007/s12065-019-00210-z

Moravec J, Pošík P (2014) A comparative study: the effect of the perturbation vector type in the differential evolution algorithm on the accuracy of robot pose and heading estimation. Evol Intel 6:171–191

Harikrishnan R, Jawahar Senthil Kumar V, Sridevi P (2016) A comparative analysis of intelligent algorithms for localization in wireless sensor networks. Wirel Pers Commun 87:1057–1069

Annepu V, Rajesh A (2017) An efficient differential evalutionary algorithm based localization in wireless sensor networks. In: Proceedings of international conference on microelectronic devices, circuits and systems. https://doi.org/10.1109/ICMDCS.2017.8211560

Storn R, Price KV (1997) Differential evolution—a simple and efficient heuristic for global optimization over continuous spaces. J Glob Optim 11:341–359

Brest J, Greiner S, Boskovic B, Mernik M, Zumer V (2006) Self-adapting control parameters in differential evolution: a comparative study on numerical benchmark problems. IEEE Trans Evolut Comput 10:646–657

Qin AK, Huang VL, Suganthan PN (2009) Differential evolution algorithm with strategy adaptation for global numerical optimization. IEEE Trans Evolut Comput 13:398–417

Zhang J, Sanderson AC (2009) JADE: adaptive differential evolution with optional external archive. IEEE Trans Evolut Comput 13:945–958

Brest J, Maucec MS (2011) Self-adaptive differential evolution algorithm using population size reduction and three strategies. Soft Comput 15:2157–2174

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Annepu, V., Rajesh, A. Implementation of self adaptive mutation factor and cross-over probability based differential evolution algorithm for node localization in wireless sensor networks. Evol. Intel. 12, 469–478 (2019). https://doi.org/10.1007/s12065-019-00239-0

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s12065-019-00239-0