Abstract

Hyperspectral imaging is built with the aggregation of imaging, spectroscopy and radiometric techniques. This technique observes the sample behaviour when it is exposed to light and interprets the properties of the biological samples. As hyperspectral imaging helps in interpreting the sample at the molecular level, it can distinguish very minute changes in the sample composition from its scatter properties. Hyperspectral data collection depends on several parameters such as electromagnetic spectrum wavelength range, imaging mode and imaging system. Spectral data acquired using a hyperspectral imaging system contain variations due to external factors and imaging components. Moreover, food samples are complex matrices with conditions of surface and internal heterogeneities, which may lead to variations in acquired data. Hence, before extracting information, these variations and noises must be reduced from the data using reference-dependent or reference-independent spectral pre-processing techniques. Using of the entire hyperspectral data for information extraction is tedious and time-consuming. In order to overcome this, exploratory data analysis techniques are used to select crucial wavelengths from the excessive hyperspectral data. Using appropriate chemometric techniques (supervised or unsupervised learning techniques) on this pre-processed hyperspectral data, qualitative or quantitative information from sample can be obtained. Qualitative information for analysing of the chemical composition, detecting of the defects and determining the purity of the food product can be extracted using discriminant analysis techniques. Quantitative information including variation in chemical constituents and contamination levels in food and agricultural sample can be extracted using categorical regression techniques. In combination with appropriate spectra pre-processing and chemometric technique, hyperspectral imaging stands out as an advanced quality evaluation system for food and agricultural products.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

Introduction

The revolutions in computer, optical and image sensor technologies and their applications in the field of agriculture have led to sensing techniques like imaging systems which are capable of automated and quick quality analysis on the processing line, with very little human interference (Zayas et al. 1985; Sapirstein et al. 1987; Symonds and Fulcher 1988; Brosnan and Sun 2004; Du and Sun 2004; Gowen et al. 2007). Initial applications of image processing to the food and agricultural products were the use of red-blue-green colour vision system for colour and size grading and later for identifying defects (Chen et al. 2002; Paliwal et al. 2005; Ramalingam et al. 2011; Singh et al. 2012). Although colour imaging can perform the quality analysis, when it comes to the identification of minor constituents or contaminants, it is ineffective. Multispectral imaging systems have been developed using few narrow wavelengths generally less than 20, to detect features of interest (Gowen et al. 2007; Yang 2012). These wavelengths may range from visible to near infrared. The number of wavelengths used to constitute a multispectral image cube may range based on the imaging system, number of filters used and features of interest. For instance, if the feature of interest is already known, the research acquires images in those wavelength ranges that can accurately provide information about that feature. Novales et al. (1999) used multispectral fluorescence imaging for the identification of maize, peas, soybean and wheat. Their multispectral imaging system was built to take 12 images and was able to identify each of these food products with classification accuracy up to 98.8 %. Liu et al. (2014) used multispectral imaging to take images at 19 wavelengths ranging from 405 to 970 nm for determining various quality attributes (firmness, total soluble solids (TSS)) and ripeness of strawberry fruits. Multispectral imaging has proven to be advantageous over conventional chemical analysis routines like gas chromatography (GC) and high-performance liquid chromatography (HPLC) which are destructive in nature. Multispectral imaging finds itself as the basis for the evolution of hyperspectral imaging system, which is capable of acquiring images over a wide range of wavelengths along the electromagnetic spectrum and correlating the spectral variation with the chemical constituent of interest. The multispectral imaging typically involves acquiring images in the three to six spectral bands that range from visible to near-infrared (NIR) range (Jensen 2007). Acquiring images in such a few narrow wavelengths is the primary disadvantage of multispectral imaging. Acquiring images in this small wavelength range provides gaps in the spectral band to exploit the entire signature. Hyperspectral imaging fills this gap by acquiring images in a broader wavelength range of more than 200 spectral bands that range from visible to NIR section. Hyperspectral imaging is an advanced technique for the quality evaluation in the food and agricultural industry, which is built with the combination of regular imaging, radiometry and spectroscopic principles. Radiometry is the measure of the amount of electromagnetic energy (generally expressed in common energy units, W) present within a specific wavelength range. Typical radiometer is designed with only one sensor with a filter installed to just to select the intended wavelength range. Spectrometry is a measure of light’s intensity (generally expressed in W/m2) within specific wavelength range. Unlike radiometer, spectrometers use diffraction grating or prisms or multiple sensors to divide the wavelength range into different wavelength bands. Goetz et al. (1985) used the word ‘hyperspectral imaging’ for the first time when they were discussing their remote sensing of earth using spectroscopic imaging techniques. The term ‘hyper’, meaning too much, has a negative implication in the medical sense, like hypertension, but in image processing, ‘hyperspectral’ imaging means acquiring images at ‘many bands’ along the electromagnetic spectrum. Chemicals when available in pure form and to be identified uniquely do not require hundreds of spectral bands spread over several octaves of the electromagnetic spectrum (Goetz 2009). But biological materials exist as a complex system of chemical compounds with interactions and bonds between them. Application of image processing in combination with spectroscopic technique makes it possible for automatic target detection and measurement of the analytical composition of that material; hence, hyperspectral imaging can be effectively applied for quantitative and qualitative analyses, as it can identify the presence of the material as well as their spatial location.

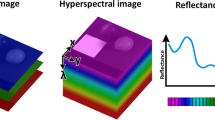

Hyperspectral imaging is an appropriate technique for many operations as it can generate both a spatial map and spectral variation (ElMasry and Sun 2010). Data acquired from hyperspectral imaging system are three-dimensional structures made up of one spectral and two spatial dimensions, commonly known as the ‘hypercubes’ or the ‘datacubes’ (Fig. 1) (Schweizer and Moura 2001; Chen et al. 2002; Kim et al. 2002; Mehl et al. 2004).

Goetz et al. (1985) originally developed the hyperspectral imaging for the remote sensing; later, its applications were expanded to various fields like agriculture (Du et al. 2013; Suzuki et al. 2012; Gracia and León 2011; Pérez-Marín et al. 2011), environment (Wang et al. 2016), food science (Lu 2003; Cheng et al. 2004; Nicolai et al. 2006; Gowen et al. 2007; ElMasry et al. 2007; Qu et al. 2016; Xu et al. 2016a,b; Xie et al. 2016, Wold 2016, Cheng et al. 2016), material science (Neville et al. 2003; Tatzer et al. 2005), biomedicine (Zheng et al. 2004; Kellicut et al. 2004; Liu et al. 2012; Akbari et al. 2012; Kiyotoki et al. 2013), astronomy (Zhang et al. 2008; Soulez et al. 2011; Nguyen et al. 2013) and pharmacy (Amigo and Ravn 2009; Lopes et al. 2010; Amigo 2010; Vajna et al. 2011). In the field of food and agriculture, hyperspectral imaging is used to determine the quality in fruits (Lu 2003; Mehl et al. 2004; Xing et al. 2005; Lee et al. 2005; Nicolai et al. 2006; Lefcourt et al. 2006; Qin et al. 2008; Bulanon et al. 2013; Pu and Sun 2016; Pu et al. 2016), vegetables (Cheng et al. 2004; Ariana et al. 2006; Gowen et al. 2009; Taghizadeh et al. 2011; Wang et al. 2012; Su and Sun 2016), meat and meat products (Park et al. 2002; Park et al. 2006; Sivertsen et al. 2009; Qu et al. 2016; Xu et al. 2016a,b; Xie et al. 2016; Wold 2016; Cheng et al. 2016; Chen et al. 2016; Ma et al. 2016), cereals (Cogdill et al. 2004; Zhang et al. 2007; Mahesh et al. 2008; Choudhary et al. 2009; Singh et al. 2010) and diseases in crops (Smith et al. 2004; Keulemans et al. 2007; Qin et al. 2009; Kumar et al. 2012; Xie et al. 2015; Kuska et al. 2015). Hyperspectral data collection is the most important step to understand the properties of the sample. Significant scientific literature has been published in recent years regarding the data acquisition using hyperspectral imaging system (Varshney and Arora 2004; Borengasser et al. 2007; Park and Lu 2015; Vadivambal and Jayas 2016).

Hyperspectral Data Collection

Understanding the behaviour of food sample at molecular level on its exposure to the light photons is the basis for the hyperspectral imaging (ElMasry and Sun 2010). Hyperspectral imaging deals with the sample at the molecular level; therefore, it can distinguish even slight changes in the sample composition. Biological materials continuously absorb or emit energy (radiation) by lowering or raising its molecules from one energy level to another. The strength and wavelength of this radiation depend on the nature of the material (ElMasry and Sun 2010). Hyperspectral imaging generally measures the intensity of the absorbed or emitted radiation over a range of spectral band along the electromagnetic spectrum. Data collected using a hyperspectral imaging system are the absorbed or emitted radiation values and are stored in the form of a hypercube. The hypercube is a complex data unit, which contains abundant information about the physical and chemical properties of the sample. Data collection using a hyperspectral imaging system depends on various parameters such as electromagnetic spectrum wavelength range used for imaging, type of the imaging system and type of the imaging mode.

Imaging Spectrum Range

Electromagnetic spectrum (Fig. 2) is the distribution of all types of electromagnetic radiation arranged in one spatial scale ranging from very short wavelength (very high frequency and energy) gamma radiation to the long wavelength (very low frequency and energy) radio waves. Electromagnetic radiation is a wave of energy packets called photons with both magnetic and electrical properties which when interact with matter produce a spectrum. Gamma radiation (wavelength less than 0.01 nm) has very high energy and good penetration potential. In food and agricultural industry, it is used for microbial safety as it can kill the microorganisms by disrupting their DNA bonds. X-rays are classified into two forms, hard X-rays (wavelength between 0.01 and 0.1 nm) and soft X-rays (wavelength between 0.1 and 10 nm), and are used in food applications for detection of hidden infestation in grains (Karunakaran et al. 2003) and defects in fruits and vegetables (Schatzki et al. 1997). There is a limitation in the use of gamma rays and X-rays for biological materials as their high energy damages the structure and components of biological materials. The use of ultraviolet light (wavelength between 10 and 100 nm) is well established in the food industry for water treatment, air disinfection and surface decontamination due to its low penetration power (Koutchma 2008). Microwaves (wavelength range from 106 to 109 nm) and radio waves (wavelength range from 109 to 1017 nm) have been used for grain disinfestation (Vadivambal et al. 2007) and to study the grain moisture distribution (Ghosh et al. 2007) due to their high transmission power. Visible range (400 to 700 nm) imaging is used mainly for colour-based sorting of agricultural and food materials. Visible light is generally used in combination with near-infrared radiations (400–1000 nm range) to achieve a robust hyperspectral range for quality identification in various foods. This range is called the visible-infrared (Vis-IR) region. Kim et al. (2002) effectively detected faecal contamination on apples using hyperspectral imaging in the Vis-IR region (450 to 851 nm). Mehl et al. (2004) applied Vis-IR hyperspectral imaging within the spectrum of 430 to 900 nm for the detection of surface defects such as side rots, bruises, flyspeck, scrubs and moulds, fungal infection and soil contamination on apples. Delwiche et al. (2011) applied Vis-IR hyperspectral imaging (400–1000 nm) to find Fusarium head blight damage in wheat kernels. Infrared radiation with a wavelength range of 900 to 12,000 nm falls within electromagnetic spectrum between the visible and microwave regions and is the most explored region for hyperspectral image analysis. Infrared spectrum that is closest to visible light is called NIR (ranging from 900 to 1700 nm) spectrum. Hyperspectral instrument designed for use in NIR region will have a very high signal-to-noise ratio (10,000:1) (Hans 2003). In recent years, NIR hyperspectral imaging has been extensively applied for understanding the quality characteristics of food and agricultural materials. Vermeulen et al. (2011) applied NIR hyperspectral imaging (900–1700 nm) to identify the presence of ergot bodies in wheat kernels. NIR hyperspectral spectral imaging was used to identify the level of Fusarium infection in maize using 720 to 940 nm range (Firrao et al. 2010) and in wheat using 400 to 1000 nm range (Bauriegel et al. 2011). Dacal-Nieto et al. (2011) applied NIR hyperspectral imaging for the identification of hollow heart in potato using 900 to 1700 nm range. Short-wave infrared (SWIR) (970–3000 nm) hyperspectral imaging technique has been used for identification of undesirable substances in food and feed (Pierna et al. 2012), wheat kernels damaged by insects (Singh et al. 2010), moisture content of cereals like barley, wheat and sorghum grain (McGoverin et al. 2011). Mid-wave infrared (MWIR) (3000 to 5000 nm) and long-wave infrared (LWIR) (8000 to 12,000 nm) hyperspectral imaging has been used for the identification of hot gases and minerals, respectively, from the earth, using remote sensing techniques (Anonymous 2013).

Imaging System

The three-dimensional hypercube (x, y, λ), which is a pile of two-dimensional spatial images acquired at various wavelengths can be obtained in any one of these three approaches:

-

1.

Intensity data collected at all the wavelengths for one pixel at a time.

-

2.

Intensity data collected at all the wavelengths for one row of pixels at a time.

-

3.

Intensity data collected at one wavelength for all the pixels at a time.

In a hypercube, a spatially arranged image at each wavelength contains an equal number of pixels. Each pixel contains the spectrum, which relates to the chemical composition of the sample at that individual pixel. The three-dimensional hyperspectral image datacube can be acquired using any one of these four approaches (Wang and Paliwal 2007):

-

1.

A point-to-point spatial pattern (whiskbroom method).

-

2.

Fourier transform imaging.

-

3.

A line-by-line spatial scan pattern (line scan or pushbroom imaging system).

-

4.

Wavelength tuning with filters (area scan or staring array imaging system).

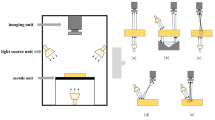

The line scan and area scan imaging system are better suited for quality analysis of food and agricultural materials (Mehl et al. 2004) (Fig. 3).

Line Scan or Pushbroom Imaging System

Line scan or pushbroom imaging system is proficient by collecting spectral data of single spatial line at a time and progressively constructing the hypercube (Wang and Paliwal 2007). The light intensity of the narrow line of the sample along all the wavelength range is imaged onto one row of the hypercube using two-dimensional dispersing element and a two-dimensional detector array. This imaging system generally operates with an imaging lens having a slit-shaped opening aperture. The pushbroom hyperspectral imaging system uses wavelength dispersive system that uses a diffraction grating (transmissive or reflective) or prism techniques. Pushbroom imaging works either by physically moving the sample (Martinsen et al. 1999) or by directing the beam and detector to the region of interest (Nicolai et al. 2007). Unlike area scan imaging system, spectral record of the entire imaging session can be controlled in the pushbroom technique. Pushbroom imaging system has been adopted in food and agricultural industries because of its speed and adaptability (Manley et al. 2009).

Area Scan or Staring Array Imaging System

Area scan or staring array imaging system acquires the entire spatial image of the sample at one wavelength and steps through the next wavelength. This technique is also called wavelength scanning or tunable filter scanning or focal plane scanning. Generally, it uses tunable filters to collect images at one wavelength at one time. Tran (2005) had provided an extensive review of the principles, instrumentation and application of filters in infrared multispectral imaging systems. The most frequently used tuning filters in staring array hyperspectral imaging system are filter wheels, liquid crystal tunable filter (LCTF) and acousto-optic tunable filter (AOTF) (Fig. 4).

Filter wheels are used in the most basic form of multispectral imaging systems, where a mechanical filter wheel is incorporated into the optical path during imaging. The number of imaging wavelengths is determined by the number of windows in the filter wheel. This technique is restricted to a limited number of fixed wavelengths, which is initially determined by the prior knowledge about the sample’s spectral behaviour (Fong and Wachman 2008). This technique is mechanically restricted to change wavelength at a relatively slow pace. Filter wheels are only used in inexpensive imaging systems (Wang and Paliwal 2007). AOTFs are crystals with optical properties, and the wavelengths are controlled by sending acoustic waves through them. These sound waves are created by applying radiofrequency electrical signals, AOTF wavelength and bandwidth that can be regulated electronically. LCTFs, also known as Lyot filters, can select various band passes from the sample (band-sequential scanning system), as they are generated by alternative layers of crystal quartz plates and liquid crystal polarizer. LCTFs generally work in between visible and SWIR of the electromagnetic spectra. Use of these filters with hyperspectral imaging system increased the speed of data acquisition, made auto wavelength change and random wavelength selection possible, but, in turn, increased the overall cost of the system. The staring array imaging system is applicable for many operations where the sample is fixed at one point, for example, these tunable filters are used to change the excitation and emission wavelengths electronically to achieve excitation-emission matrix in florescence imaging (El Masry and Sun 2010). The two main drawbacks of staring array imaging systems when compared to the pushbroom imaging system are

-

1.

Because of lengthy imaging time and continuous exposure to the illumination system, the sample gets heated up and sometimes loses moisture.

-

2.

It is not useful for online sensing or real-time application in industrial scale, as stationary samples are needed.

Imaging Mode

Understanding the theory of interaction of light with the surface of the studied material is important for understanding the concept of hyperspectral imaging. Generally, hyperspectral imaging is carried out in any one of the optical modes (reflectance, absorbance and transmittance) or in florescence mode. According to the optical property of the sample, when the electromagnetic radiation is incident on this sample, the radiation is reflected, absorbed and transmitted. The absorbed light can sometime be re-emitted at lower energy and longer wavelength, and this aspect is known as fluorescence. The fluorescence and optical properties are the unified functions of the wavelength and angle of the incident radiation as well as physical and chemical characteristics of the sample (Chen et al. 2002; ElMasry et al. 2012a). According to Beer-Lambert law, the absorbance of the sample is directly proportional to the concentration of the chemical constituent of that sample. This law had laid the base for the concept of chemical composition analysis using hyperspectral imaging.

Reflectance

Reflection is a process where the fraction of the electromagnetic radiation (radiant flux) incident on the surface of the sample returns into the same hemisphere whose base is the surface of the sample and which contains the incident radiation. The reflectance of light occurs in three main types: specular (reflects back from the surface at the same angle as that of incoming light), diffuse (scattered or reflected at many different angles) or a combination of both. Most of the biological materials reflect light in the diffusion mode. The general mathematical definition for reflectance ρ is given by the radiant flux reflected ϕ r divided by the radiant flux incident ϕ i :

Reflectance is the most common hyperspectral imaging mode used for the analyses of food quality and safety. Reflectance mode measurements are easy to conduct without any contact with the substance, and light level is reasonably high in relation to the sample (El Masry et al. 2012a). Hyperspectral reflectance imaging is commonly applied in Vis-NIR (400–1000 nm) or NIR (1000–1700 nm) spectrum and has been applied to identify defects, quantify contaminants and analyse quality attributes of fruits, vegetables and meat products (Gowen et al. 2007; Wu and Sun 2013b). In particular, it has been used to classify cereals (Mohan et al. 2005; Gorretta et al. 2006; Mahesh et al. 2008), identify fungal infection in agricultural products (Wang et al. 2003; Pearson et al. 2001) and determine quality of fruits and vegetables (Kim et al. 2002).

Transmittance

Transmittance is a process by which the fraction of the electromagnetic radiation (radiant flux) leaves the surface of the sample from the side (usually opposite) other than that of incident radiation. Within the electromagnetic spectral range, transmittance is more commonly measured in the 700 to 1100-nm NIR region (Givens et al. 1997; Ariana and Lu 2010). Although in transmittance, the extent of light passing through the sample is relatively small; it contains significant valuable information to detect internal defects and estimate the internal ingredient concentration of the food more accurately (Schaare and Fraser 2000; Shenderey et al. 2010). As it requires a strong light source and a sensitive detector, transmission mode is not always preferred when compared to the reflectance mode (ElMasry et al. 2012a). In agricultural and food applications, transmittance hyperspectral system was used to extract the data from cucumbers for detection of insects, using a moving sample (Lu and Ariana 2013) and other vegetables and soybeans (Huang et al. 2012b), from cod fish sample for detection of parasite load (Heia et al. 2007; Sivertsen et al. 2011), from tart cherries for detection of pits (Qin and Lu 2005) and from corn for estimation of moisture content and oil of kernels (Cogdill et al. 2004).

Interactance

Interactance is an image sensing mode where the light is allowed to fall on the sample and detected using a detector lying on the same side of the sample (Wu and Sun 2013a). The part of light that interacts with the sample and re-emitted is analysed in interactance mode. Due to exploration of this property of light in interactance hyperspectral imaging, it can deliver more valuable information about the deeper properties of the sample compared to reflectance. It is also advantageous than transmittance due to reduced influence of thickness of the sample. Unlike transmittance, where a special setup is required for sealing the light to prevent it from entering directly into the detector, interactance is also advantageous in its simple setup (Nicolai et al. 2007). Interactance imaging is widely used for the determination of fat content of humans and animals. Interactance hyperspectral imaging system application for food and agricultural products has been limited, but it was used for detection of fat content of beef (Wold et al. 2011), ham (Gou et al. 2013) and pork (O’Farrell et al. 2010) and nematodes in cod fish (Sivertsen et al. 2012). Wang et al. (2013) and Schaare and Fraser (2000) compared the reflectance, interactance and transmittance hyperspectral imaging for the quality assessment of the fruits and vegetables.

Absorbance

Absorbance is the process by which a portion of incident electromagnetic radiation (radiant flux) is retained in the sample without complete reflectance or transmittance. In this case, the incident energy is converted into different form and preserved within the sample. Amount of energy converted into different form is dependent on chemical constituents of the sample. The general mathematical definition of absorbance α is the ratio of the radiant flux absorbed ϕ a to the radiant flux incident ϕ i :

Extrinsic characteristics like size, geometry, appearance and colour and chemical compositions like water content, fat, protein and carbohydrate of the sample can be identified using hyperspectral absorbance imaging (Lu and Chen 1999). Most biological materials have stronger absorption in the spectral range of 600–1000 nm (Kavdir et al. 2009). Although the use of hyperspectral absorbance imaging mode is very limited when compared to the reflectance mode, effective literature in the field of food and agriculture was identified. For cereal grain research, spectroscopic absorbance imaging was used for categorization of vitreous and non-vitreous kernels (Dowell 2000), detecting insect infestation (Baker et al. 1999) and quantifying essential nutrients and chemical constituents (Wang et al. 2004) of various cereals. In general, hyperspectral data acquired using any of the radiometric properties (reflectance, absorbance or transmittance) can be exchanged between each other.

Fluorescence

Fluorescence is a technique in which light absorbed at a given wavelength by a chromophore of the sample is retained and later emitted at higher wavelength (Kim et al. 2001). This difference in absorption and emission wavelengths is called Stokes shift. The phenomenon of fluorescence is observed in various samples in the visible region (400–700 nm), when excited with ultraviolet (UV) region radiation (Chappelle et al. 1991; Lang et al. 1992). In the areas of cell biology, medicine, forensic and environmental sciences, steady-state fluorescence imaging is regarded as a sensitive optical technique and used for scientific research (Harris and Hartly 1976; Chappelle et al. 1984; Albers et al. 1995). Changes in fluorescence emission of food due to contaminations with faecal material and infection with pathogens serve as a basis for application of fluorescence hyperspectral imaging for foods. Despite these advantages, the use of fluorescence imaging for the online quality and safety assessment in food industry is not fully explored due to the complexity in this phenomenon (Kim et al. 2001). Fluorescence hyperspectral imaging system was used for assessing various quality parameters like skin and flesh colour, firmness, soluble solids and titrateable acidity (TA) in apples (Noh and Lu 2007), for detecting skin tumours in chicken carcasses (Kim et al. 2004). This technique was also used for detection of the faecal contamination on apples (Kim et al. 2002; Lefcourt et al. 2006) and cantaloupes (Vargas et al. 2005).

Hyperspectral Imaging Sample Complexities

In comparison with the traditional imaging, hyperspectral imaging is complex, as it involves working with more number of images at the same time in both spatial and spectral dimensions. In order to say that hyperspectral imaging results are the perfect representation of the objective results, there is a need to consider the drawbacks and issues that arise due to sample complexity. In this section, the most common sample complexities that are to be considered while working with hyperspectral images are as follows:

Variations in Sample Surface

Food materials are complex and show very high variations in the sample surface. In reflectance mode, incident radiation in the form of photons falls on the sample surface. Based on the sample surface condition, this radiation may enter the sample or get reflected from the surface. The amount of the photons that reflected back to the sensor is based on the incident radiation wavelength and the nature of the sample surface (Clark and Sasic 2006). The reflected radiation that reached the detector contains both the sample composition at different depths and location within the sample. This depth of penetration depends on the condition of the sample surface. Hence, while conducting hyperspectral imaging, it is important to consider the sample surface variations to arrive at an accurate analysis in the later stages.

Asymmetry in Sample Surface

Considering the focus nature of the hyperspectral imaging systems, the use of flat-surfaced materials for imaging is preferable. The use of flat-surfaced sample makes the sample surface parallel to the imaging plane (Amigo 2010). In contrast to these food materials, sample surfaces are irregular with variations in colour and surface conditions. Most of the dry food and agricultural materials are having large variations in the surface roughness, which creates incident light to scatter. Hence, the collected information may not be the exact representative of the sample spectra. While acquiring hyperspectral images, it is important to keep in mind the asymmetry and roughness of sample surface.

Sample Representativeness

Hyperspectral imaging involves extraction of information due to the interaction of incident light mostly with the surface and very little with the penetration of the food sample. In most cases, penetration depth is negligible as the food samples are complex and opaque. As the food sample matrix is very complex, it can be imagined that if the sample is sliced into infinite number of thin slices, the composition of each sliced surface will show that each sliced surface itself will be very different due to variation in composition and particle sizes in each layer. Keeping this sample complexity in mind, it can be concluded that the one single sample spectra cannot be considered as the signature of the sample at that particular condition (Amigo 2010). Spectral signature can only be constructed by acquiring hyperspectral images of large number of sample (replicates) under that particular condition, to increase the sample representativeness.

Hyperspectral Data Pre-Processing Before Extraction of the Information

Unlike conventional imaging, hyperspectral imaging is more subjected to variations due to external factors and imaging components and complexities in food. Food samples being complex matrices with conditions like surface inhomogeneities bring variations in acquired data. Incident light on the food material experiences both scattering and absorption effect due to the complexity in its interaction with various components in food material. This scatter effect is due to physical properties of food material like cellular structure, particle size, density, etc., and absorption effect is due to chemical composition like carbohydrates, protein, fat, etc. Hence, before applying any modelling method, the homogeneity among the input data should be ascertained, as data are affected by outliers, subgroups or clusters (Centner et al. 1996). To reduce these variations and to extract the useful information from hyperspectral images, spectral pre-processing tools are generally used. In spectral data analysis, the most important function of the pre-processing techniques is to reduce the undesirable variation and noise during hyperspectral data acquisition and make the data analysis more meaningful. If fair results are expected from the hyperspectral data analysis, the pre-processing step is often of vital importance (ElMasry and Sun 2010).

Hyperspectral imaging involves the application of Beer-Lambert law which shows the interaction between the incident light and chemical properties of the sample through which this light passes. Beer-Lambert law can be mathematically represented as

where ‘a’ is the absorbance of the sample, ‘ε’ is the molar absorptivity of the sample, ‘b’ is the effective path length and ‘c’ is the constituent concentration in the sample. As the product of ‘ε × b’ remains constant, spectral pre-processing techniques aim to maintain the relationship between absorbance a and constituent concentration c to be linear and making the measured absorbance a perfect representation of constituent concentration. This linear relationship is affected by undesirable phenomena such as particle size effects, scattering of light, morphological differences like surface roughness and detector artefacts. For instance, if the spectrum of a sample is affected by light scattering, Beer-Lambert law is not true and this kind of spectrum need to be pre-processed in order to eliminate this effect before model development (Stordrange et al. 2002). Pre-processing techniques are applied on spectral data to promote the linear relationship between hyperspectral data and concentration of sample constituent and to compensate for these deviations. Pre-processing techniques that are established on feature selection or extraction always intend to reduce or transform the primary feature space into another space of lower dimensionality (Melgani and Bruzzone 2004). Pre-processing and collection of accurate auxiliary data are the most important requirements to extract qualitative information from the hyperspectral data.

Pre-processing techniques are used on the hyperspectral data for the following reasons:

-

1.

Identification and removal of trends, outliers and noise in data.

-

2.

Improvement of the performance of the subsequent qualitative and quantitative analyses.

-

3.

Enhancement of data interpretation.

-

4.

Simplified machine learning using pre-processed data.

-

5.

Removal of inappropriate and unnecessary information from the data and reduction of the scale of data mining step.

For more systematic understanding, different spectral pre-processing techniques can be categorized into reference-dependent and reference-independent groups.

Reference-Dependent Pre-Processing Techniques

Reference-dependent techniques use pre-determined reference values for spectral pre-processing. The main categories of reference-dependent techniques are orthogonalization, optimized scaling and spatial interface subtraction.

Orthogonal Signal Correction

Hyperspectral data are generally analysed as multivariate data. Application of partial least squares (PLS) to obtain projections for the latent structures is one of the main methods to analyse these multivariate data, where measurable relationship between a raw data matrix X and the response matrix Y is needed. PLS is a useful tool in various areas like multivariate calibration, classification, discriminate analysis and pattern recognition (Martens and Naes 1992). PLS is very successful in the multivariate data analysis because it can handle the data with collinearity among the variables, missing data and noisy data. Wold et al. (1998) had proposed the orthogonal signal correction (OSC) as a reference-dependent pre-processing technique. OSC technique removes all the variations in X that are not corresponding to Y, in order to attain exceptional models in multivariate data analysis (Trygg and Wold 2002). From the general understanding of hyperspectral dataset, most of the spectral variance is of very little or even nil analytical importance. Therefore, for better analysis, it is important to remove the variance that is orthogonal to the component of interest from the dataset. The number of orthogonal factors to be eliminated from the dataset must be decisive, and the more the number of factors eliminated, the greater is the reduction of orthogonal variance (Boysworth and Booksh 2007). In order to achieve orthogonal correction models, three important criteria must be met:

-

1.

Component of interest should involve the large systematic variation in X.

-

2.

Component of interest must be predictive by X.

-

3.

Component of interest must be orthogonal to Y (Trygg and Wold 2002).

These three criteria are met by performing an OSC on X, which will remove most of the components of X that are unrelated to the model developed from Y. The main disadvantages of OSC pre-processing technique are

-

1.

Time-consuming, as OSC methods follow a number of internal iterations to identify the orthogonal components of X.

-

2.

Additional time for cross-validation.

-

3.

Difficult to estimate the correct number of components that are needed to be eliminated from X, those are not correlated to Y.

Raw hyperspectral data before PLS modelling are generally pre-processed using OSC method to eliminate the variance from X that is not related to Y. OSC is also used for instrument standardization by removing the variability which is inappropriate to the predicted variables.

Orthogonal Projections to Latent Structures

Orthogonalization is not a single pre-processing technique; rather, it is a group of algorithms and programs, with a goal to separate the variation in the sample spectrum, which will not correlate with the reference value (Rinnan et al. 2009). When the factors are too many and well collinear, PLS is used to develop predictive models. PLS was first developed by Herman Wold in 1960, as a statistical technique for economics. Since then, it was subjected to continuous improvement and has been used in many different fields for multivariate calibration, regression, classification, discriminate analysis and pattern recognition. Projections to latent structures by means of PLS is one of the important methods to analyse the multivariate data, where measurable relationship between a raw data matrix X and the response matrix Y is needed (Trygg and Wold 2002). PLS is a very robust technique in handling collinearity, noises and missing data in both descriptor and response matrices. PLS was subjected to continuous improvement like development of non-linear iterative partial least square (NIPALS) method, partial least square discriminate analysis (PLS-DA), OSC, etc., since the time it was proposed. Orthogonal projections to latent structures (O-PLS) is a relatively new technique, which is developed as a spectral pre-processing technique to enhance the interpretation of PLS models and relatively reduce the model complexities. Similar to OSC, the objective of O-PLS is to eliminate the precise variations in X that are not correlated to Y. In O-PLS, systematic variability in matrix X is separated into Y predictive components and Y orthogonal components. Components containing the variation that is commonly correlated with X and Y are represented as Y predictive components; these variations are in linear correlation with Y. Components that have specific variation for X that is uncorrelated or orthogonal to Y are represented as Y orthogonal (Cloarec et al. 2005; Trygg et al. 2007; Stenlund et al. 2009).

The main advantages of O-PLS method are

-

1.

O-PLS-treated data are easier to interpret, as they have limited components.

-

2.

Data are more relevant because it not only separates non-correlated variation from the dataset but also provides an opportunity to study and analyse this non-correlated variation.

-

3.

Removal of non-correlated variance from the data before modelling makes the prediction of component of interest simple.

-

4.

Data prediction ability of the model also increases.

Optimized Scaling

Optimized scaling (OS) was first introduced by Karstang and Manne (1992), as a theoretical-based method for the linear calibration of spectral data, when it does not have a fixed intensity range. They proposed two calibration methods for spectral datasets. Initially, this method gained little attention, as it is advantageous only when one constituent calibration data are available. But the second method is more generalized. Multiplicative scatter correction method uses reference spectrum (usually mean spectrum) for the spectral calibration, but these problems are not faced when optimized scaling is used. Karstang and Manne (1992) had shown the successful application of optimized scaling for the data from X-ray diffraction data of mixture of minerals, infrared spectra of organic liquids and NIR spectra of various food products. They also proposed that care must be taken while applying optimized scaling on the data with additive or baseline effects.

Spectral Interference Subtraction

Target chemical constituent identification using hyperspectral imaging is a demanding task. Hyperspectral data obtained for the analysis of chemical components is generally affected by unidentified components, component interaction, temperature, light scattering, etc. Spectral interfaces are generally caused by interference (impurities) that interacts with the analyte or constituent of interest. Spectral interference subtraction (SIS) method involves the removal or elimination of certain additive interferences from the input spectra. Martens and Stark (1991) developed SIS method as a spectral pre-processing technique for near-infrared spectroscopy and then used it for the interference correction in the field of biomedicine for speech and language processing (Hu and Wang 2011), electrocardiography (Levkov et al. 2005) and trace elements using X-rays (Donovan et al. 1993). The three main purposes of this technique are

-

1.

To remove the additive interferences caused by the presence of known constituents from the spectra.

-

2.

To maintain the changes as small as possible to the spectral data.

-

3.

To make the later regression analysis effortless to interpret.

The use of SIS as a pre-processing technique not only makes the data more effective for chemical analysis but also decreases the analysis charges and enhances the accountability of the subsequent multivariate regression analysis technique. The effective use of SIS pre-processing technique can be identified in online process control (industrial scale) where there is a difficulty to generate real calibration samples for spectral correction.

Reference-Independent Pre-Processing Techniques

Reference-independent pre-processing techniques provide more generalized tools suitable for exploratory studies, where there is no reference value available (Rinnan et al. 2009). The most commonly used spectral pre-processing techniques are broadly divided into four categories: scatter correction, normalization, derivatives and baseline correction.

Scatter Correction

Scatter correction is a statistical method to remove the scatter variations in the spectral data. Multiplicative scatter correction, standard normal variate and detrending are the most common scatter correction techniques.

Multiplicative scatter correction (MSC) also called multiplicative signal correction is the most common pre-processing technique used for scatter correction of NIR and IR spectra (Fig. 5a). Martens et al. (1983) developed MSC as a multivariate linearity transformation technique; later, it was elaborated and applied to NIR reflectance of meat by Geladi et al. (1985). The main purpose of MSC is to reduce the scatter in the spectra that is caused by various particles in the sample. Initially, it was developed as a multiplicative scatter correction technique to handle the multiplicative problems that arise from scatter alone, but later, it was used to handle diverse problems, and the abbreviation was changed to multiplicative signal correction (Rinnan et al. 2009). The MSC technique initially calculates the correction factor for the original spectra (Fig. 5b) using reference spectra (generally mean spectrum) and then corrects the original spectra using this correction factor by back transformation. It is mathematically represented as

Near-infrared reflectance intensity spectra of 10 and 18 % moisture content of Canada Western Red Spring (CWRS) wheat: a raw reflectance intensity, b multiplicative scatter corrected reflectance intensity, c standard normal variate reflectance intensity, d normalized reflectance intensity and e Savitzky-Golay smoothing and derivative corrected reflectance intensity

where x i ORG, x REF and x i CORR are the original, reference and corrected spectrum, respectively.

a i and b i are the correction coefficients of the ith sample, that are estimated by least square regression of the sample’s original and reference spectrum. In other words, a i and b i are the intercept and slope of the correction coefficient curve drawn between reference spectrum on x-axis and original spectrum on y-axis. From the mathematical derivation, it is clear that MSC does not eliminate the scatter but decreases the inter-sample variance of the scatter by implementing an additive and multiplicative transformation of the individual spectrum into the simple idealized reference or mean spectrum (Andersson 1999). Initial use of MSC was to choose the range from the spectra with no chemical information, but in NIR spectra, it is difficult to identify such a region without chemical information at all (Stordrange et al. 2002), so Martens et al. (1983) proposed that whole spectral range should be used to determine the correction coefficients. MSC is restricted by assuming that there is no chemical variation between original and reference spectrum (Wang et al. 2011). If this assumption is not made, there might be error in the estimation of correction coefficient (Chen et al. 2006). This problem was addressed by Martens and Stark (1991) and Martens et al. (2003) in their extended MSC (EMSC) technique by including the wavelength correction to the MSC. Isaksson and Kowalski (1993) had proposed piecewise multiplicative scatter correction (PMSC) by introducing spectral value correction at each wavelength and independent intercept and slope correction terms to the traditional MSC technique.

Standard normal variate (SNV) was first proposed by Barnes et al. (1989) as a spectral pre-processing technique, with an aim to remove the multiplicative interferences due to particle size of the sample and scatter and to account for the differences in the curvilinearity and baseline shift in the reflectance spectra (Buddenbaum and Steffens 2012) (Fig. 5c). The mathematical model for SNV is given as

where x i CORR and x i ORG are the SNV corrected and original spectrum, respectively.

a i and b i are the mean and standard deviation of the ith sample, respectively.

As the correction factors used in this technique are the mean and standard deviation, SNV is also called a z-transformation, centering or scaling (Otto 1998). Although SNV-corrected spectrum is technically similar to MSC (Dhanoa et al. 1994), SNV does not need any reference spectrum for the calculation of correction factors; hence, the user loses the grip on computation. As there is no least square step, SNV technique is more affected by noise than the traditional MSC (Rinnan et al. 2009). This technique makes the data more understandable as corrected spectrum has a greater linear relationship between spectrum and constituent concentration.

Trend is a statistical technique that helps to interpret the spectral data. If the data follows a particular trend, this may strongly confuse or superimpose the changes of interest. Detrending is the most popular mathematical or statistical method of removing the trends from the data. Barnes et al. (1989) introduced the detrending along with SNV technique for the NIR reflectance spectra pre-processing and which was further applied by Buddenbaum and Steffens (2012). For instance, detrending is applied for removing the long-term spectral changes in spectral time series. One of the simple detrending methods is by subtracting mean values of spectrum from each column of that spectrum. More complicated detrending methods aim at disintegrating the spectral changes into the trend (low-frequency components) and the spectra of interest (which are generally characterized by the higher frequency component) (Lasch 2012).

Normalization

Normalization is a scatter correction technique applied to scale up the spectra within its similar range (Fig. 5d). Normalization was used in vibrational spectroscopy for correcting the spectra that was affected with differences in optical path length (light path) or difference in sample quality (Lasch 2012). In normalization to equal length, spectra are generally divided by their own norms, and eventually, the length of the spectra becomes 1 (Stordrange et al. 2002):

Another method of normalization is to sum the spectral data to 1. This technique is not commonly used as the data can be misinterpreted. For example, when summing up the positive and negative regions, improper zeros result in the corrected data. Normalization had shown an increase in accuracy and efficiency of the spectral distance measurement modelling (Heraud et al. 2006).

Derivative

Resolution of IR spectra is well enhanced by spectral derivatives. Savitzky-Golay smoothing and derivative and Norris-Williams derivation are the most common spectral derivative techniques used with an aim to resolve and remove the overlapping bands in complex IR spectral signals.

Savitzky-Golay (SG) smoothing and derivative technique was first proposed by Savitzky and Golay (1964) and further elaborated by Steinier et al. (1972). Initially, this technique was used for continuous and equally spaced data. Evaluation of derivative is achieved by executing the data through a window-wise symmetric filter (Brown et al. 2000) (Fig. 5e). In this technique, the spectrum is elaborated with a window having 2d + 1 points, where each window is used for the estimation of the centre point and with d points on each side. These 2d + 1 points are fitted by a polynomial of a given order, and the coefficients found by this fit are used for the estimation of the new value at this wavelength (either just by smoothing or by both smoothing and differentiation) (Rinnan et al. 2009). The main benefit of SG technique is that it can carry out smoothing, noise reduction and computing derivatives in one single step. The method proposed by Savitzky and Golay (1964) contains some numerical errors which were addressed and corrected by Steinier et al. (1972).

Norris (1983) introduced the derivative technique, which was later modified by Norris and Williams (1984). This technique was named the Norris-Williams (NW) spectral derivative technique (Norris 1983; Norris and Williams 1984). The principle behind NW technique is to smoothen the spectral data based on a moving average (Massart et al. 1997) over data points, and the gap between these data points is used to estimate the derivatives, and then, finite difference is calculated based on this smoothing spectrum. Unlike the SG method, the NW method is less prone to high-frequency noise, as it uses both smoothing by moving average and gap size for derivative. Application of NW pre-processing technique (gap size for derivatives part) for spectral data is not properly defended as the data are not presented in a time domain.

Baseline Correction

Reflectance or transmittance IR spectra often contain unwanted background features or noise additionally to the desired signal (Schulze et al. 2005). Spectral noise is caused due to scattering, external factors causing variations in data acquisition (illumination, temperature, etc.) and instrumental noises. To acquire proper information from the spectral data, it is important to remove this noise or background features from the signal. Baseline correction is a pre-processing technique used to eliminate these background noises from the spectral data, thus making a more easy illustratable signal. It also generates more accurately predictable spectral parameters like band positions and intensity values (Mazet et al. 2005). Most common baseline correction techniques are offset correction, detrending (Barnes et al. 1989), SG technique (Savitzky and Golay 1964; Steinier et al. 1972) and iterative polynomial baseline correction (IPBC) (Lieber and Mahadevan-Jansen 2003). As SG technique and detrending are discussed in previous sessions, the focus is on remaining techniques in this section. Offset correction is the easiest baseline correction technique. Offset correction is conducted by subtracting a linear horizontal baseline from the original spectrum. This horizontal baseline value is selected in such a way that no less than one point of the corrected spectrum equals to 1 and spectra remain unscaled (Lasch 2012). Instead of using simple straight horizontal baseline as offset correction, an nth-order iterative polynomial function is used by IPBC in order to fit the spectral data points. To prevent baseline correction artefacts, a lower order polynomial is recommended for IPBC (Lasch 2012).

Types of Information Extracted from Hyperspectral Data

The hypercube or datacube collected using high-performance hyperspectral imaging can be visualized in two different ways: one along all wavelengths of the imaging spectrum to obtain spectral data and another at each wavelength to obtain spatial data. Using these spectral and spatial data, either sample qualitative (e.g., detection of defects) or quantitative information (e.g., quantification of chemical composition and contamination) can be gathered.

Qualitative Analysis

Qualitative analysis of food and agricultural products includes analysis of chemical composition of the materials, detection of defects and purity of materials present. Hyperspectral imaging technique has proved to be a non-destructive analytical technique for the non-destructive analysis of food quality and safety (Wu and Sun 2013b). Qualitative information about the sample can be obtained when spectral data of a hypercube are analysed using proper chemometric techniques. Using the entire spectra for the qualitative analysis is time-consuming and burdensome on the statistical software. In order to overcome this, exploratory data analysis techniques are used to select indispensable wavelengths from the excessive data for multispectral analysis. When selecting the indispensable wavelengths, care should be taken to avoid loss of crucial data. Qualitative parameters are generally analysed using data exploratory techniques or supervised or unsupervised classification techniques. Out of all chemometric techniques, classification methods are the basis for the qualitative analysis of chemical composition and purity of the component in the food and agricultural sample. Classification methods are machine learning techniques that develop mathematical models that can recognize each member of the sample to its appropriate class on the grounds of some set model parameters. Once the model is developed, it has the capability to read the unknown sample using its model parameters and later assign it to one of the classes. Classification techniques are extensively used in the fields of chemistry, process monitoring, medicine, pharmacy, social sciences, economics and food science (Ballabio and Todeschini 2009). Rapid quality inspection is of major concern in automated food and agricultural industries. Classification modelling is the most appropriate technique to handle these concerns.

Quantitative Analysis

Initially, the use of hyperspectral imaging in the field of food and agriculture started to assess the quality of the product. Quantification of various chemical constituents in food and agricultural samples can be analysed using categorical regression techniques. Hyperspectral regression models are developed using known concentrations of chemical constituents, and once the model is developed and validated, analysis of an unknown sample is simple using this model. Detection of various materials using hyperspectral imaging involves identification and quantification of those in a spatial image using their respective spectral features. Every pixel of the identical sample in an image has identical spectral signature. Identification and quantification of various materials using hyperspectral imaging is a challenging task. Hyperspectral imaging applied along with image processing and chemometrics makes the detection and quantification of materials possible. Chemometric techniques like principal component regression (PCR), multilinear regression (MLR) and partial least square regression (PLSR) are generally used to measure various food components quantitatively. Regression models are quantitative feedbacks on the basis of a set of descriptive variables (Ballabio and Todeschini 2009). Regression analysis helps to develop an association between the hyperspectral data and the measured property of the material. This mathematical expression relating the systematic responses or signals to the constituent concentration of the sample is also known as the calibration equation (Mark and Workman 2003).

Chemometric Tools for the Extraction of Information from Hyperspectral Data

Exploratory Data Analysis

Exploratory data analysis technique when used as a statistical method can recognize the main characteristics of the data and see what the data tells us beyond classification modelling and regression analysis. Exploratory data analysis technique was promoted by John Tukey as a method to see what the data can offer for the researcher other than the general modelling for performing qualitative analysis (Tukey 1980). In a broad sense, these methods are used for detecting the data errors, cross validating the assumptions and selecting a suitable model. In hyperspectral imaging, these are also used to reduce the amount of information in the data. Most commonly used exploratory data analysis methods are principal component analysis (PCA) and independent component analysis (ICA).

Principal component analysis is a method to transform the dataset linearly into smaller dimension dataset with variables that are uncorrelated with each other. It was proposed for the first time by Pearson (1901) and Hotelling (1933) to explore the invisible structures in the data that assist in more accurate classification. In order to achieve this, the data are rotated in the sample space of observation. PCA is an important step for the identification of key wavelengths and reduces the massive hyperspectral imaging dataset. This reduction in data makes it possible to use in online sensing. PCA is used for feature reduction by changing data to a new set of axes and creating subsets, and these subsets show higher differentiability (when compared to the original data subsets) (Jiang et al. 2010). Projection-based methods like PCA are generally applied for data dimension reduction and feature selection problems.

Principal components are the new components that are generated by orthogonal linear transformation of the original dataset. The new components are formed by rotating the data so that the first principal component has the highest variance by any projections of the dataset; the second principal component has the second highest variance by any projection of the dataset and so on. Hyperspectral dataset (hypercube) H with X × Y × λ dimensions is decomposed using PCA into a set of scores and loadings (Amigo 2010). Transformed dataset can be represented in matrix form as

where H I the transformed dataset with dimension XY × λ, S is the score surface with dimension XY × F, L S is the loading profiles with dimension F × λ and E is the residual matrix with dimension XY × λ. The main advantage of PCA is that the number of principal components can be ascertained from the principal component score, variance and factor loading results. The loading profile and the score surface of each principal component can be an association of pure principal component, a physical effect or even a combination of both (Amigo et al. 2008). These properties of PCA make it a very robust technique to handle the massive hyperspectral data. PCA is the most useful exploratory data analysis technique for food and agricultural materials (Xing et al. 2007; Vargas et al. 2005; Barbin et al. 2012; Cho et al. 2013). Van Der Maaten et al. (2009) provided a comparative review between linear (PCA) and non-linear (multidimensional scaling, maximum variance unfolding, isomap, diffusion maps, multilayer autoencoders, kernel PCA, manifold charting and locally linear coordination) dimensionality reduction techniques and concluded that non-linear techniques, although they have large variance, are not capable of outperforming the PCA technique.

Independent component analysis (ICA) is another exploratory data analysis and hyperspectral image feature selection method. It is also used for feature extraction, pattern recognition and unsupervised classification. It was proposed for the first time by Herault and Jutten (1986) and further developed by Comon (1994). It is a method to separate spectral signal into a number of additive subcomponents. These subcomponents are believed to be statistically independent (occurrence on a subcomponent does not affect the other) of one another and are not following Gaussian function. It obtains the independent source signals by searching for a linear or non-linear transformation that maximizes statistical independence between components (Jiang et al. 2010). Before application of the ICA algorithm on the hyperspectral data, it is recommended to centre, whiten and reduce the dimension of the data (Polder et al. 2003). Data centring is done to simplify the ICA algorithm and is attained by subtracting the mean of the components of the vector from every component. Whitening transformation technique transforms the data so that they have the identity covariance which suggests that all dimensions of the matrix are statistically independent and the variance along each of the dimension is equal to 1. Whitening and data reduction can be done using PCA; hence, data are processed using PCA before applying ICA. Du et al. (2003) and Botchko et al. (2003) had used ICA for hyperspectral image processing primarily to reduce the dimensionality and select the specific bands for feature extraction. Polder et al. (2003) had applied ICA for tomato sorting using visible spectral images in the spectral range of 400 to 710 nm. Application of ICA for hyperspectral images was not as successful as PCA (Table 1), as it is necessary for defining the number of independent components prior to the computational analysis for ICA, which is not a usual practice with PCA.

Unsupervised Learning

Unsupervised machine learning techniques are used to explore the hidden structures in a hyperspectral dataset. The main difference that separates unsupervised from supervised machine learning techniques is that unsupervised techniques when dealing with the unlabelled data deliver an underlying structure of that data. As there are no generated response data for comparison of results, this technique cannot generate the error terms. Unsupervised learning will only have the original input data to work on. Two most common unsupervised machine learning methods are dimensionality reduction and clustering. PCA and ICA are the classic examples of dimensionality reduction techniques. PCA and ICA disintegrate the spectral data into a number of components (principal or independent) in order to distinguish the key directions of variability in the high-dimensional data space (Wu and Sun 2013b). The first few components of PCA and ICA carry most of the information that can accurately distinguish between the samples.

The K-means, fuzzy and hierarchical clustering are the classic examples of clustering techniques. The K-means clustering technique clusters input data into k cluster groups of equal variance. Samples belong to their respective cluster group by minimizing their distance to the cluster centroid. K-means uses the centroid of the cluster as the criterion to assign the cluster for each sample and is achieved by minimizing the sum of squared errors (Ding and He 2004). K-means is also called hard clustering as it verifies whether the object belongs to the cluster or not and the number of clusters is initially specified. In contrast, fuzzy clustering method assigns the samples to different clusters simultaneously with varying degrees of membership (Amigo et al. 2008). Hierarchical clustering is a method to build a hierarchy of clusters that is normally presented in the form of a binary tree diagram, commonly known as dendrogram. The main principle of hierarchical clustering is to group the sample to a cluster by measuring the distance between the two consecutive samples. The hierarchical clustering is not suitable for large datasets (Wu and Sun 2013b). Among the clustering techniques, fuzzy clustering is more prominently used for hyperspectral data analysis. Although clustering techniques are very robust, their use for hyperspectral data analysis is very limited as they lack the ability to guess the correct number of clusters to be developed (Amigo et al. 2008; Lopes and Wolff 2009). Table 2 shows the limited application of unsupervised learning techniques for food and agricultural products using hyperspectral spectral imaging technique.

Supervised Learning

Supervised learning is a machine leaning technique which groups the unknown samples into the known predefined groups as per their measured features (Wu and Sun 2013b). Supervised learning is a process of understanding a set of rules from the instance (training set) with an aim to create a classifier that can be used upon new instance (Kotsiantis 2007). A training set consists of pairs of input vectors and desired output. The output of the classifier can be a model to predict unknown samples (for regression) or can anticipate a class label of the input vectors (for classification). In other words, supervised learning evaluates input testing set to develop function that can be used for mapping the new set (testing set). Supervised learning is the most successful statistical technique used along with hyperspectral imaging in food and agricultural applications. Kotsiantis (2007) provided an extensive review on different supervised machine learning techniques and their use in classification of various real-world problems. Table 3 shows the extensive application of supervised learning techniques for food products using hyperspectral spectral imaging technique.

Discriminant Analysis

Discriminant analysis is by far the well-known and extensively used classification method (McLachlan 1992). It is a supervised learning technique which uses discriminant analysis function to assign a dataset to one of the previously established groups. Discriminant analyses are very robust, but they overfit the multicollinear data (correlated among themselves) (Hand 1997). Data reduction using stepwise discriminant analysis (SWDA) (Jennrich 1977) or PCA (Pearson 1901; Hotelling 1933) can resolve this problem. Quadratic, linear and partial least square discriminant analyses are a few important discriminant analysis techniques.

Like PCA, discriminant analysis technique separates samples into different classes by maximizing the variance between classes and minimizing the variance within a class. Mathematical relationship of this statement can be framed from considering a classic classification problem, where a test sample x i is designated to one of the prior defined class C based on j measurements [x i = (x i1 , x i2 .....x ij )T]. The discriminant function (d f ) (classification score) is given below, and the test sample is designated to the class which has the minimum classification score (Wu et al. 1996):

The first two terms of this equation express the between the test sample x i and centroid x̄ c (Mahalanobis 1936) where Σ c is the class C’s covariance matrix and calculated by

x̄ c is the centroid of class C and calculated by

πc is the class probability of class C and calculated by

where n c and n are the total number of samples in class C and training set.

Quadratic discriminant analysis (QDA) classifies the samples into the classes with quadratic-shaped boundaries and assuming that multivariate normal distribution is common in each class (Ballabio and Todeschini 2009). Mahesh et al. (2008) and Choudhary et al. (2009) applied QDA for the classification of wheat classes using hyperspectral imaging, and Singh et al. (2009, 2010) applied QDA for the classification of midge-damaged and insect-damaged wheat using hyperspectral imaging.

Linear discriminant analysis (LDA) is considered as the special case of discriminant analysis, first proposed by Fisher (1936). LDA follows all the principles of discriminant analysis, but it uses pooled covariance Σ p as it assumes that the covariance matrices of the class Σ c are equal.

Pooled covariance matrix of the class is given as

For classification of samples into different classes, LDA develops linear projections of independent variables (Wu and Sun 2013b). Although it is similar to PCA in considering the independent variables, LDA also contains class information of samples. Like QDA, LDA also assumes that multivariate normal distribution is common in each class, but the covariance matrices of the classes are equal; hence, both LDA and QDA are assumed to work well if the sample classes are normal. The main disadvantage of LDA is that it does not hold well with the condition where the number of samples is less than that of number of variables, as in this condition, the inversion of covariance matrices becomes difficult. Hence with LDA, it is always preferred to have more samples than the number of variables. As QDA generates covariance for each class, it can handle or require more number of samples than LDA. QDA and LDA are the most commonly used classification techniques of hyperspectral imaging data from food and agricultural products. Wang and Paliwal (2006) used LDA, QDA, k-nearest neighbour (KNN), probabilistic neural network (PNN) and least squares support vector machines (LS-SVM) for the classification of hyperspectral images of six Canadian wheat classes and confirmed that LDA gave the highest classification accuracy. Gowen et al. (2009) developed a technique with the combination of LDA and hyperspectral imaging method for accurately identifying freeze-damaged mushrooms.

PLS-DA is a supervised learning technique that combines the regression power of PLS and classification power of discriminant analysis. PLS-DA based on their spectral fingerprint assigns the unknown samples to one (and only one) of the available categories. This technique is derived from PLS regression (Wold et al. 1983) and is referred as a secondary data analytical step involving the construction of statistical classification models (Baker and Rayens 2003). PLS was designed for regression analysis (Wold 1975), but recently, it was combined with LDA and used as a discriminant technique. PLS-DA coincides with the inverse least square approach to LDA and gives the same result as that of LDA but with less noise and the advantage of variable selection (Ballabio and Todeschini 2009). There are two kinds of PLS algorithms: PLS1 algorithm deals with one dependent Y variable, and PLS2 deals with more than one dependent Y variable. PLS-DA is a PLS2 algorithm (many Y variables) that hunts for abstract (or latent) variables that have maximum covariance with Y variables (qualitative in nature). The Y block represents whether the sample is classified correctly or not. In PLS-DA classification with two classes, Y variable will be given 1 if the sample is classified correctly or else given 0 if it is wrongly classified. When dealing with multiclass classification, for each sample, a PLS2 algorithm for multivariate qualitative response is applied, and it will return prediction value between 0 and 1 for each class. If the value is closer to 1, then it indicates that the sample belongs to that particular class or else not. A threshold between 0 and 1 is used to assign a class for the sample. The use of PLS-DA as a classification tool for hyperspectral imaging of food and agricultural products is a recent development. Pearson et al. (2001) used NIR spectroscopy and PLS-DA for accurately (95 %) detecting aflatoxin-contaminated corn kernels. Serranti et al. (2013) used PLS-DA for accurate (100 %) classification of groat and oat kernels using hyperspectral imaging in the near-infrared range from 1006 to 1650 nm. Vermeulen et al. (2011) used PLS-DA for the online identification of contaminants in cereals by near-infrared hyperspectral imaging.

Categorical Regression

Regression is a statistical procedure which determines the relation between dependent variable and independent variable. Categorical regression calibrates the data by accrediting numerical values to classes, which results in optimal linear regression equation for the transformed variables. PCR and PLSR are the best-known categorical regression techniques used for extracting information from hyperspectral imaging data.

Multicollinearity is a statistical procedure where several independent variables participating in multiple regression modelling are highly correlated to one another. Due to multicollinearity, least square of the variables is unbiased, but they have more variance between them. PCR is a multivariate regression analysis technique for analysing data with high multicollinearity between their variables. PCR reduces the standard error by adding bias to the regression estimates and is hoped that more reliable estimates will be achieved due to this overall effect. The simple matrix notation of PCR is given as follows:

where Y is the dependent variable matrix (concentration matrix), X is the independent variable matrix (hyperspectral imaging data), Ḇ is the coefficient of regression and E is the error or the residual matrix. As PCR considers the variances between variables, more freedom is provided in variable selection, and hence, it avoids the problem during further mathematical calculations. PCR model developed using the independent and dependent variables sometimes gives a random error or noise rather than giving the anticipated relationship (model outfitting). This can be avoided by choosing optimal number of PCs. The main problem with PCR is that the PCs that describe the independent variable matrix (hyperspectral imaging data) may not be the perfect PCs for predicting the concentration of the sample. This problem can be resolved by applying an intermediate correlation coefficient calculation step between sample concentrations and the PC scores and selecting those components that are significantly correlated for subsequent regression (Romia and Bernardez 2009). Cogdill et al. (2004) developed PCR models to accurately predict the moisture content and oil content of single corn kernels using hyperspectral absorbance spectra in the wavelength region of 750–1090 nm.

PLSR is a well-known chemometric tool that is used to estimate the biological and chemical properties of the sample from their hyperspectral spectrum. PLSR is extensively used for the study of massive hyperspectral data and was introduced for the first time by Wold (1975) for the field of econometric as an alternative to the general least square regression to handle the data with variables showing high collinearity. Similar to PCs of PCR, when applied to create a relationship between concentration matrix Y and hyperspectral matrix X, PLSR develops latent variables known as PLSR components (PLSRCs) which are in linear combinations with variables in matrix X. Unlike PCR, PLSR ensures that the first few of its PLSRCs contain as much information as possible to classify the matrices X and Y. They also manage to explain most of the X and Y variances during calibration and compression. As PLSRCs are corrected for maximizing the prediction capability of matrix Y, they will not match fully with the direction of maximum variation (Romia and Bernardez 2009). The simple matrix notation of PLSR is given as follows:

where Y is the dependent variable matrix (concentration matrix), X is the independent variable matrix (hyperspectral imaging data), X p and Y p are the matrices of the X scores and Y scores, respectively, P and Q are the orthogonal loading matrices and E 1 and E 2 are the error or residual matrices. Similar to PLS-DA, PLSR also has two kinds of algorithms: PLS1 to handle only one response variable and PLS2 to handle more than two (multiple) response variables. PLS1 is generally used to determine concentration of only one component of Y, and PLS2 is used to determine the concentration of multiple components of Y. In the recent past, extensive literature on the application of PLSR for extraction of useful information from hyperspectral images of food and agricultural materials is available. Wu and Sun (2013b) reviewed the use of PLSR with hyperspectral imaging system for meat and meat products, fish (Xu et al. 2016a,b), fruits and vegetables’ quality and safety analysis.

Naïve Bayes Classifier