Abstract

Hyperspectral systems can acquire a large number of images at consecutive wavebands. To ensure reliable and repeatable results with this kind of optical sensor, the intensity shown by the objects in the different spectral images must be independent of the differences in the sensitivity of the system at the different wavelengths. The spectral efficiency of the acquisition devices and the spectral emission of the lighting system vary across the spectrum, and therefore the images and the results can reproduce these variations if the system is not properly calibrated and corrected. This is particularly complex when several liquid crystal tuneable filter (LCTF) devices are used to cover large spectral ranges. This work presents the development of a hyperspectral system based on two liquid crystal tuneable filters for the acquisition of images of spherical fruits. It also proposes a methodology for acquiring and segmenting images of citrus fruits in order to detect decay, which correctly classified 98 % of pixels as rotten or non-rotten and 95 % of fruit.

Introduction

In recent years, optical devices and sensors have been introduced as nondestructive tools for inspecting food in industry (Vadivambal and Jayas 2011; Lorente et al. 2012a; Pathare et al. 2013). Depending on how it is used, this technology can serve several purposes in fruit inspection, the most common being the estimation of internal properties such as sugar content or acidity (Vélez-Rivera et al. 2013). On the other hand, the detection of external defects that are difficult to detect with traditional computer vision systems based on colour imaging is still a challenge (Cubero et al. 2011). Traditionally, the systems used to estimate food quality in the industry have been based on the visible range of the electromagnetic spectrum, although near-infrared (NIR) has occasionally been included (Aleixos et al. 2002; Zhu et al. 2012; Wu et al. 2012). This is mainly because such systems are designed with the intention of imitating the human eye, and because they are relatively inexpensive. Machine vision, however, offers a series of possibilities that go far beyond the capabilities of the human eye. Certain organoleptic characteristics, some kinds of damage or the presence of contaminating agents cannot be seen with the naked eye (Lorente et al. 2012a), and therefore are rather difficult to detect using traditional cameras based on RGB (red, green, blue). Yet they can often be observed in particular regions of the spectrum or at certain specific wavelengths. One way of enhancing the capabilities of machine vision systems is to capture and analyse images at specific wavelengths using multispectral systems (Blasco et al. 2009), since they are the most powerful and flexible methods of detecting and estimating characteristics that are difficult to detect with RGB-based systems (Sun 2010).

Until recently, one of the most widely used ways to acquire chemical information about food was based on spectroscopy (Magwaza et al. 2011; Karoui and Blecker 2011). However, because the point detector is limited in size, conventional spectroscopy systems cannot cover a large area, or a small area with high spatial resolution. Therefore, the technique does not provide the spatial information that is increasingly required for food inspection (Sun 2009; 2010). By integrating the main features of imaging and spectroscopy, hyperspectral imaging can simultaneously acquire both the spatial and the spectral information that is critical to the detection of food safety problems and the evaluation of food quality attributes. The cost of hyperspectral equipment has gradually gone down, and this has allowed it to be incorporated as a valuable tool in many laboratories devoted to developing hyperspectral computer vision applications for agriculture (Sun 2010). Today, three types of technology are basically used to acquire hyperspectral images for fruit analysis: image spectrophotometers (Menesatti et al. 2009; ElMasry et al. 2008), acousto-optical tunable filters (AOTF) (Bei et al. 2004; Vila-Francés et al. 2010) and liquid crystal tunable filters (LCTF) (Peng and Lu 2006; Kokawa et al. 2012). Image spectrophotometers are characterised by the fact that they acquire spectral data about a scene line by line using the relative movement of that scene with respect to the instrument. These systems offer a good spectral resolution, but they do not allow the complete image to be acquired without synchronising the image acquisition with the movement of the sample by means of a step motor or by using a mirror-scan (Lorente et al. 2012a). Systems based on AOTF are characterised by offering good tuning times (around 50 μs) and good frequency selectivity, but they have a limited field of vision due to the small size of the crystal used in this type of filter (Vila-Francés et al. 2011). On the other hand, the main advantage of LCTF-based systems in comparison to the previous two is that they are more compact and offer a wider field of vision. Nonetheless, their main drawback is that more time is needed to tune them (Hecht 2003).

The acquisition of hyperspectral images using LCTF entails a series of problems (e.g. spectral response, sensitivity, uniformity and spatial resolution) that have to be minimised before acquiring images (Wang et al. 2012). These problems increase when more than one filter has to be used to cover a larger spectral domain than is possible with only one, and the image acquisition needs to be synchronised with the tuning of all the filters. The acquisition of the whole spectral range provided by two tuneable filters (i.e. one for the visible region and one for the NIR) requires a setup that allows both to be used successively on the same scene without the need to assemble and disassemble them on each acquisition, which would make it difficult to acquire images automatically. Furthermore, the filters need to be tuned to each of the bands of interest to capture the corresponding monochromatic image. Normally, the software applications provided by the manufacturers of the equipment do not allow the two elements to be synchronised or a complete hyperspectral image to be acquired in the range covered by both filters. Therefore, a special arrangement is needed to ensure coherent images across the spectral range under study (Erives and Fitzgerald 2005). One alternative is to acquire a set of images in one spectral range using one filter and later another set of images using the other filter, which yields different views of the object for the two ranges. Another alternative is to change the filter manually for each fruit after acquiring the first range, which makes the acquisition of the images a repetitive manual task with a high risk of moving the camera or altering the scene while the filter is being changed.

In addition, the spectral efficiency of the acquisition devices is not uniform across the spectrum, which can produce results that depend more on the devices used than on the intrinsic properties of the phenomena or the samples, if proper calibration and correction are not performed (Geladi 2007; López-Álvarez et al. 2009). Hence, it is necessary to ensure that the different components of the system (i.e. the camera lens, the lighting source or the electric power supply used with the lighting system) are chosen and calibrated properly. The work carried out in this research was aimed at describing and overcoming these problems, and included tasks such as designing and assembling the inspection chamber, setting up a mechanical system for exchanging the two LCTF, developing specific software for controlling and synchronising the different elements of the system, and determining the operating parameters of the hyperspectral vision system, such as the heat-up time of the lighting system or the integration times (exposure) of the charge-coupled device (CCD) in acquiring each band in order to obtain a uniform response. All this development is used to propose a methodology for acquiring hyperspectral images of spherical-shaped fruits or vegetables using two LCTF. An application is developed for acquiring and segmenting spectral images of citrus fruits in order to detect fungal infections caused by Penicillium italicum Wehmer, since they cause very serious postharvest losses around the world (Palou et al. 2008), and the external damage caused by this decay is hardly visible to the human eye and is therefore not detected by standard automatic systems (Moltó et al. 2010; Vidal et al. 2012). It has been tested on citrus fruits because of the high economic importance of this fruit and the recent efforts to develop spectral systems to detect diseases caused by fungi in this fruit. Gómez-Sanchis et al. (2012) used the minimum redundancy maximal relevance method to select a reduced number of bands in order to detect decay lesions in citrus caused by P. italicum and P. digitatum. They then used a multilayer perceptron (MLP) and classification and regression trees (CART) to build classification models that achieved a correct classification rate of 98 %, with MLP performing slightly better than CART. Qin et al. (2009) detected citrus canker and other common defects of citrus fruits by means of the spectral information divergence classification method. This procedure was based on quantifying the spectral similarities using a predetermined canker reference spectrum in the spectral region that ranged from 450 to 930 nm. This work was extended to create a real-time system to detect citrus canker using two wavelengths (730 and 830 nm). A success rate of 95 % was achieved, but the throughput was limited (five fruits per second), and the system could be influenced by the presence of other types of defects. Lorente et al. (2013) proposed the use of the area under the receiver operating characteristic curve as a method to optimise the selection of bands to detect decay lesions in citrus, achieving success rates ranging from 87 %, when the problem included different types of sound skin, common defects and two kinds of decay lesions, to 95 %, when the problem included only sound and decaying fruit. This work was later expanded to compare the proposed method with eight other feature selection methods (Lorente et al. 2012b).

Materials and Methods

Lighting System

An ideal lighting system for acquiring hyperspectral images should fulfil a series of requirements, such as offering a uniform emission from the spectral point of view. Furthermore, it should have adequate spatial homogeneity and, most importantly, it must emit over the whole spectral range in which the images are going to be acquired. There are many different kinds of light sources, including incandescent, fluorescent, mercury vapour, sodium vapour, xenon, LEDs and so on. Each of them has a different spectral emission. For example, daylight-type fluorescent tubes seldom go beyond 700 nm. In contrast, incandescent lamps offer a high degree of efficiency in the NIR. In any case, it is important to bear in mind the emission spectrum if the images obtained with the system are to be analysed properly.

In addition, it is important to take into account the shape of the object to be analysed. For instance, the typical illumination technique based on the 45/0° geometry is more appropriate for flat objects. If used to illuminate spherical objects, this type of illumination would produce bright spots on the object due to its curvature, and in these cases a system based on a hemispherical dome is more adequate. The vision system used in our experiments consisted of ten halogen spotlights that lit the scene indirectly by means of reflection inside a hemispherical dome (Fig. 1). This setup was chosen because it was to be used to inspect citrus fruits, which are spherical. The inner surface of the aluminium dome was painted white in order to maximise its reflectivity. Moreover, this coating had a rough texture in order to minimise the directional reflections that cause the bright spots. This lighting system prevents unwanted bright spots from appearing, while also providing highly homogeneous illumination.

One key point when it comes to choosing the light source is the spectral response. Depending on the application and the spectral range of the work, it is better to use one type of illumination or another. Several types of lighting that could be expected to provide a more uniform type of light, such as “daylight-type” fluorescent tubes, were ruled out because of their low efficiency in the near infrared. In this system, the lighting was based on tungsten filament halogen bulbs (Philips Brilliantline Pro 20 W, 12 V). These lamps provide good light efficiency within the NIR region of the electromagnetic spectrum. The possibility of powering the lighting system with conventional AC ballasts (those recommended by the manufacturer) was considered, but this would have given rise to flickering in the acquisition of hyperspectral images, modulated by the alternating current frequency (50–60 Hz). Because the supply needed to remain steady over time, the solution that was finally adopted was to power the lighting system with a 350 W 12 V DC power supply.

In order to evaluate whether the lighting system provided a constant light intensity, an experiment was conducted to study the repeatability of the radiance using a white reference with a mean reflectance of 99 %. A hyperspectral image of the white reference was acquired 5, 10, 20, 30, 60 and 90 min after switching on the lighting system, and the images thus obtained were compared. To do so, the average reflectance of the whole white reference was calculated for each of the working bands of the system at each of these moments. The average difference between the data from the successive acquisitions was then calculated in order to estimate the time needed by the lamps to emit stable radiation. The time needed by the lamps to reach a stable emission was 30 min.
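The comparison described above can be sketched as follows. This is only an illustration under stated assumptions: the function names and the `tolerance` threshold are hypothetical (the text does not give a numerical stability criterion), and each acquisition is reduced to a list with the mean value of the white reference per band.

```python
def mean_abs_difference(a, b):
    """Average absolute difference between two per-band lists of mean values."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def stabilisation_time(times_min, band_means, tolerance):
    """Return the first acquisition time from which the emission stays stable.

    times_min  : acquisition times in minutes, in ascending order
    band_means : one list per acquisition with the mean white-reference
                 value of every band
    tolerance  : hypothetical stability threshold (not given in the text)
    """
    last = len(times_min) - 1
    for i in range(last):
        # Stable from times_min[i] if every later consecutive pair of
        # acquisitions differs by less than the tolerance.
        if all(
            mean_abs_difference(band_means[j], band_means[j + 1]) < tolerance
            for j in range(i, last)
        ):
            return times_min[i]
    return None  # the emission never settled within the observed period
```

The function returns `None` when no stable period is found, which would indicate that the observation period should be extended.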

Hyperspectral Image Acquisition Hardware

Like the lighting system, the camera also had to fulfil certain requirements. The most important of these was that it had to be sensitive within the working range in which the images were going to be acquired. A standard CCD is sensitive up to about 900 nm, which can impose restrictions on the system that prevent it from taking full advantage of the possibilities of the filters. In this system, the camera that was used was a CoolSNAP ES (Photometrics, USA). This camera is fitted with a Sony ICX285 CCD, which offers a 1392 × 1040 pixel matrix with a pixel size of 6.45 × 6.45 μm. This CCD uses interline-transfer technology, which provides high quantum efficiencies in both the visible and the NIR regions of the electromagnetic spectrum. However, the quantum efficiency of the CCD decreases sharply beyond 1,030 nm, which imposes an upper bound on the range of spectral acquisition of the hyperspectral vision system (Fig. 2).

Another important element is the lens. A standard lens presents a high degree of chromatic dispersion in the infrared region of the electromagnetic spectrum because of the different optical paths taken by the infrared components of the light source. This is due to the variation in the refraction index of the lens depending on the wavelength. This means that the focus of the image can vary considerably between bands that are separated in the spectrum (for example, between the bands corresponding to visible and infrared), thus giving rise to images that are focused in some bands and out of focus in others. To avoid this problem, a Xenoplan 1.4/17 mm C-Mount lens (Schneider-Kreuznach, Germany) was used. This model is made with low-dispersion lenses so that images can be acquired in both the visible and the NIR (400–1,000 nm) without any significant variations in focus. Furthermore, the transmittance of the lens is practically uniform over the whole of the abovementioned range.

A filter is designed by the manufacturer to fit a camera and work individually, thus limiting the spectral range of acquisition. When working with two filters, it is necessary to use two cameras or to change the filter every time we intend to obtain an image that covers the spectral range of both of them. Changing the filter, however, has a serious drawback: it is difficult to ensure that the acquired scene does not vary from one filter to the other. It is therefore necessary to design a system that allows the filters to be exchanged quickly and without altering the acquired scene.

To solve this problem, a guidance system for changing the filters was designed. This solution consists in using a tailor-made box that holds the filters inside and moves by means of a set of runners (Fig. 3). Two mechanical limits fix the position of each filter when they move under the camera, which makes it possible to quickly change from one filter to another. This ensures that exactly the same scene is acquired with both filters, since the camera is not touched, and therefore there is no risk of changing its position.

CCD Exposure Times

Given the same radiance, a lower apparent reflectance is obtained in a spectral band in which the filter provides a lower degree of absolute efficiency than in another in which its efficiency is higher. To obtain robust and valid results, it is very important to acquire comparable images that are not altered by the efficiency of the sensor at different wavelengths. This can be achieved in two ways: (1) by characterising the system by means of a white reference and correcting the images spectrally (thereby obtaining the reflectance relative to the white reference) or (2) by setting different exposure times for each band according to its spectral efficiency. In this work, the second solution has been adopted because, in addition to obtaining a uniform system transmittance, it also avoids having to work with exposure times in which the signal-to-noise ratio of the sensor is inappropriate.
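For reference, option (1) is commonly implemented as a per-pixel, per-band normalisation with a white and a dark reference image. The sketch below assumes that standard formula, (raw − dark) / (white − dark); the text does not spell out the exact expression used, and the function name is hypothetical.

```python
def relative_reflectance(raw, white, dark):
    """Reflectance of one band relative to the white reference.

    raw, white, dark: equally sized lists with the pixel values of the
    sample image, the white reference and the dark image of that band.
    Assumes the usual correction (raw - dark) / (white - dark).
    """
    corrected = []
    for r, w, d in zip(raw, white, dark):
        span = w - d
        # Guard against pixels where white and dark coincide (no signal).
        corrected.append((r - d) / span if span > 0 else 0.0)
    return corrected
```

With option (2), which is the one adopted here, the exposure time of each band compensates for the efficiency differences at acquisition time, so that the recorded signal itself is already comparable between bands.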

A CCD sensor with an electronic shutter is capable of imaging at variable integration times (Ando 1990), and the pixel values are linearly related to the integration time when the images are acquired within the linear range of the camera (Pang et al. 2012). The integration time of the sensor for a particular wavelength band is defined as the time that the CCD takes to acquire the image in this spectral band. Each component of the whole hyperspectral sensor has a different spectral efficiency. Thus, these times will be inversely proportional to the efficiency of the system in each band.

To determine the integration time for each band, a series of images of the white reference was acquired for each band in the working spectral range using integration times from 1 to 5,000 ms in increments of 1 ms. The procedure for each band was as follows. First, the band is tuned in the LCTF, and a monochromatic image of the white reference is acquired using an integration time of 1 ms. This image is then analysed to obtain the average value of all the pixels of the white reference, and this value is stored. The integration time is increased by 1 ms, and the process is repeated until an integration time of 5,000 ms is reached. This yields the curve that relates the average pixel value to the integration time for that particular band. The integration time selected for a particular band was the minimum needed to obtain, on the fitted curve, an average pixel value of the white reference higher than 82 % of the dynamic range of the camera.
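The selection rule can be illustrated with a short sketch. It assumes a straight-line least-squares fit of the mean white-reference value against the integration time, computed only from unsaturated samples, and it rounds the result up to the 1 ms step of the sweep. The function names and default arguments are hypothetical and are not taken from the software developed in this work.

```python
import math


def fit_line(times_ms, mean_values):
    """Ordinary least-squares fit: mean_value = slope * time + intercept."""
    n = len(times_ms)
    mean_t = sum(times_ms) / n
    mean_v = sum(mean_values) / n
    sxx = sum((t - mean_t) ** 2 for t in times_ms)
    sxy = sum((t - mean_t) * (v - mean_v) for t, v in zip(times_ms, mean_values))
    slope = sxy / sxx
    return slope, mean_v - slope * mean_t


def select_integration_time(times_ms, mean_values, target_fraction=0.82,
                            full_scale=255, step_ms=1):
    """Smallest integration time (multiple of step_ms) at which the fitted
    line reaches target_fraction of the dynamic range."""
    slope, intercept = fit_line(times_ms, mean_values)
    if slope <= 0:
        return None  # no usable response in this band
    target = target_fraction * full_scale
    exact_ms = (target - intercept) / slope
    # Round up so that the fitted value is not below the target.
    return max(step_ms, math.ceil(exact_ms / step_ms) * step_ms)
```

Bands in which the system is less efficient produce a shallower fitted slope and therefore a longer selected integration time, which is the inverse relationship described above.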

The coefficient of determination (R²) of the least-squares fits of the curves showed that the linear range of the image sensor was between 10 and 90 % of the dynamic range (the dynamic range being defined as the values of pixel intensity between 0 and 255). An R² value of 0.98 was found in this range, while, for instance, it fell to 0.71 for the range between 5 and 95 %. However, at the maximum of this linear range (90 %), there were still saturated pixels in the image. Hence, to select the threshold, we decided empirically to include a safety margin of 10 % with respect to the linear range, which resulted in a threshold of 82 % of the dynamic range of the camera. Using this value with citrus fruits makes it possible to exploit the sensitivity of the sensor while ensuring that the image remains unsaturated, which could not be guaranteed if higher values were used.
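A linearity check of this kind can be sketched by fitting a straight line only to the samples whose mean value lies inside a candidate range and computing R² for that fit. The names below are hypothetical, and the sketch is not the routine used to obtain the values reported above.

```python
def r_squared(xs, ys):
    """Coefficient of determination of a least-squares straight-line fit."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    ss_res = sum((y - (slope * x + intercept)) ** 2 for x, y in zip(xs, ys))
    ss_tot = sum((y - mean_y) ** 2 for y in ys)
    return 1.0 - ss_res / ss_tot


def r_squared_in_range(times_ms, mean_values, low, high, full_scale=255):
    """R² of the fit restricted to samples whose mean value lies between
    low and high, both expressed as fractions of the dynamic range."""
    pairs = [(t, v) for t, v in zip(times_ms, mean_values)
             if low * full_scale <= v <= high * full_scale]
    if len(pairs) < 3:
        raise ValueError("at least three samples are needed inside the range")
    return r_squared([t for t, _ in pairs], [v for _, v in pairs])
```

Comparing the value returned for several candidate ranges (for example 10–90 % against 5–95 %) shows where the response of the sensor departs from linearity.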

To perform this task, a specific algorithm was developed and programmed in C. The main capabilities of the application also include the initialisation of the camera and the filters, the acquisition of dark and white references to correct the hyperspectral images and calibration options for determining the exposure time using different light sources.

Figure 4a shows the exposure times obtained for each band (each curve). More specifically, it depicts the curves obtained for each of the bands tuned in the part of the spectrum captured by each of the filters. For the VIS-07 filter, it can be seen that the blue bands are the ones that present a shallower slope, which increases as the wavelength advances towards red. This finding is in agreement with the efficiencies introduced into the system by the VIS-07 filter and the other parts of the system. The results obtained for the NIR-07 filter offer a system response that is the inverse of the one presented in the visible region. This fact can be confirmed by observing Fig. 4b, which shows a graph with the exposure times per band that were determined by means of the procedure described for the VIS-07 and NIR-07 filters. The same figure also shows that the NIR-07 curve has a tendency to grow, unlike the curve of the VIS-07 filter. This is due to the fact that as we go further into the NIR zone, the overall efficiency of the system diminishes due to the reduced efficiency of the CCD in this region. After studying the spectral characteristics of the different parts of the hyperspectral vision system, it was concluded that the optimal range of spectral acquisition for this system is between 460 and 1,020 nm.

Specific Software for Controlling the Hyperspectral Vision System

To control the acquisition of images, a software application was developed to handle and tune the LCTF within the desired band, as well as offering the possibility of scanning across the whole working region of the spectrum. The software also synchronises the filters with the image acquisition during the preestablished exposure time for each band. All the software was developed using the programming language C. The main capabilities incorporated into the application include automatic initialisation of the camera and the filters, the acquisition of hyperspectral images or live capture, the possibility of working with one or two synchronised filters, the acquisition of a dark and white reference to correct the hyperspectral images and calibration options for determining the exposure time using different light sources.
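The synchronisation logic can be illustrated with simulated devices. Everything in the sketch below is hypothetical: the class and method names do not correspond to the actual filter or camera drivers, the original software was written in C, and the wavelength limits passed to the filters in any usage are placeholders. The sketch only shows the order of operations: for each filter in turn, tune a band, expose for the time calibrated for that band and store the image.

```python
class SimulatedFilter:
    """Stand-in for an LCTF driver (the real control API is not described)."""

    def __init__(self, name, min_nm, max_nm):
        self.name = name
        self.min_nm = min_nm
        self.max_nm = max_nm
        self.tuned_nm = None

    def covers(self, wavelength_nm):
        return self.min_nm <= wavelength_nm <= self.max_nm

    def tune(self, wavelength_nm):
        if not self.covers(wavelength_nm):
            raise ValueError(f"{self.name} cannot be tuned to {wavelength_nm} nm")
        self.tuned_nm = wavelength_nm


class SimulatedCamera:
    """Stand-in for the camera driver; it only records each request."""

    def __init__(self):
        self.log = []

    def expose(self, filter_name, wavelength_nm, exposure_ms):
        self.log.append((filter_name, wavelength_nm, exposure_ms))
        return {"band_nm": wavelength_nm, "exposure_ms": exposure_ms}


def acquire_cube(camera, filters, exposures_ms):
    """Acquire one image per band, using the per-band exposure time.

    filters      : filters in the order in which they are placed under the camera
    exposures_ms : dict mapping wavelength (nm) to calibrated exposure (ms)
    """
    cube = {}
    for flt in filters:  # one pass per filter, so the filter is exchanged once
        for wavelength_nm in sorted(exposures_ms):
            if wavelength_nm in cube or not flt.covers(wavelength_nm):
                continue
            flt.tune(wavelength_nm)
            cube[wavelength_nm] = camera.expose(
                flt.name, wavelength_nm, exposures_ms[wavelength_nm])
    missing = sorted(set(exposures_ms) - set(cube))
    if missing:
        raise ValueError(f"no filter covers these bands: {missing}")
    return cube
```

Grouping the bands by filter keeps the number of filter exchanges to one per hyperspectral image, which is the purpose of the guidance system described earlier.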

Detection of Fungal Infections in Multispectral Images of Citrus Fruits

With the aim of evaluating our LCTF-based hyperspectral vision system, we proposed a case study involving a system for segmenting hyperspectral images of citrus fruits damaged by fungal infections. The early detection of fungal infections in the post-harvest processing of citrus fruits is especially important for the industry, since a small number of infected fruits can contaminate a whole batch, thus resulting in considerable economic losses. Even though many efforts are made to prevent fungal attacks on fruits (Valencia-Chamorro et al. 2011), the most important post-harvest damage in citrus fruit warehouses is caused by the Penicillium family of fungi (Eckert and Eaks 1989). At present, rotten citrus fruits are detected by workers by means of the fluorescence induced by ultraviolet (UV) light, because the damage to the fruit is difficult to see under visible light with the naked eye. However, exposing people to this kind of lighting is potentially hazardous for human health, and it is therefore very important to develop a technology that allows the use of UV light to be avoided. One possible solution to this problem could be the use of hyperspectral computer vision systems (Gómez-Sanchis et al. 2008a).

Fruit Used in the Experiments

The experiments were carried out using two groups of mandarins (Citrus clementina Hort. ex Tanaka) cultivar ‘Clemenules’ collected at random from the packing line of a citrus trading company. The first group was composed of a total of 50 mandarins of excellent or good quality that included fruit free of defects and fruit with slight surface scarring produced by the grazing of the peel as it is rubbed against the branches by the wind (“wind scarring”). The second group was composed of another 50 fruits that were superficially injured in the rind and inoculated with spores of P. italicum isolate NAV-7, from the fungal culture collection of the IVIA CTP (Palou et al. 2008). The fruit were stored for 3 days in a controlled environment at 20 °C and 65 % relative humidity. After this period, all the inoculated fruit presented decay lesions with an average diameter of 20 mm.

Fruit at different stages of maturity were used (with external colour varying from green to orange). Scarring or other common defects are easily visible, whereas in their early stages, decay lesions have almost the same colour as the healthy peel. Hence, as stated above, visual discrimination of the damage caused by this fungus is difficult under visible light.

Hyperspectral images with a size of 801 × 651 pixels were acquired of all the fruit following the parameters and methodology described. The images were acquired in the 20 bands proposed by Gómez-Sanchis et al. (2008a), which are 460, 480, 520, 560, 590, 600, 620, 630, 680, 730, 740, 760, 800, 820, 870, 880, 950, 960, 980 and 1,010 nm. However, the effect introduced by the reflection of light on a spherical surface results in a darkening of the edges of the object, while the central part appears brighter, and therefore, the hyperspectral images of the citrus fruits were pre-processed using the method described in Gómez-Sanchis et al. (2008b), consisting in correcting the intensity of the light reflected by the fruit depending on the estimated curvature of the surface.

Labelled Dataset

In this work, an application specifically developed for this purpose was used to select, in a supervised way, pixels in the hyperspectral images belonging to the different predefined classes. The application allowed particular regions of interest in the image to be selected with the computer mouse and stored on disk the values of the 20 bands for each pixel in the selected region, together with the class it belongs to, for later use. This process can be repeated for different classes and different images. The dataset used in the experiments was composed of a total of 80,015 pixels that were manually selected from the images of the fruit and classified into two classes: pixels belonging to non-rotten skin (class “non-rotten”) and pixels belonging to decay lesions (class “rotten”). The pixels belonging to the “non-rotten” class were selected from the images of the fruit in the first group (sound fruit and fruit with scars), while the pixels belonging to the “rotten” class were selected from the decay lesions in the images of the fruit in the second group. Segmentation of the hyperspectral images was then performed using a method based on artificial neural networks.

This dataset was divided randomly into three subsets, i.e. training set (20 %), validation set (20 %) and test set (60 %). The first two were used to construct the neural model, and the last one was employed to assess the model’s capacity to generalise. The proposed segmentation model is based on an MLP with one hidden layer of 17 neurons, an architecture that had proved effective in a previous trial-and-error experiment carried out with a totally different set of fruit (data not shown). The learning algorithm that was chosen was backpropagation, and 100 random initialisations of the synaptic weights of the neurons were performed with the aim of avoiding local minima that did not provide the optimum solution.
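The random partition and the repeated initialisations can be sketched as follows. The helper names are hypothetical, the seed is arbitrary, and selecting the best of the restarts by its validation error is an assumption, since the selection criterion is not stated. The training routine itself is passed in as a function and is not shown.

```python
import random


def split_dataset(samples, fractions=(0.2, 0.2, 0.6), seed=0):
    """Shuffle the samples and split them into training, validation and test sets."""
    shuffled = list(samples)
    random.Random(seed).shuffle(shuffled)
    n_train = round(fractions[0] * len(shuffled))
    n_val = round(fractions[1] * len(shuffled))
    train = shuffled[:n_train]
    validation = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]  # the remainder, about 60 %
    return train, validation, test


def best_of_restarts(train_once, validation_error, n_restarts=100):
    """Train from several random initialisations and keep the best model.

    train_once(seed)        -> trained model (supplied by the caller)
    validation_error(model) -> error on the validation set (assumed criterion)
    """
    best_model, best_error = None, float("inf")
    for seed in range(n_restarts):
        model = train_once(seed)
        error = validation_error(model)
        if error < best_error:
            best_model, best_error = model, error
    return best_model, best_error
```

For the 80,015 labelled pixels, this partition gives 16,003 training pixels, 16,003 validation pixels and 48,009 test pixels.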

Results

Segmentation of Pixels Belonging to Decay Lesions

Table 1 shows the statistics for the segmentation carried out with the proposed method for the training, validation and test sets. The overall accuracy on the test data is the same as that obtained in the training set (98.6 % right and 1.4 % wrong). This finding reveals that the capacity for generalisation of the proposed model (i.e. the capacity to correctly classify samples that the classifier has never seen) is good and extends to all the elements in the confusion matrix. The proposed segmentation method obtained a very similar success rate for the “non-rotten” class (98.5 %) and the “rotten” class (98.6 %), which shows that the classifier is not biased towards either of the two classes.
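For clarity, the per-class success rates and the overall accuracy follow from a confusion matrix as sketched below. The function name and the dictionary layout are hypothetical choices made for this illustration.

```python
def class_rates(confusion):
    """Per-class success rate and overall accuracy.

    confusion[actual][predicted] holds the number of pixels of class
    `actual` that were assigned to class `predicted`.
    """
    rates = {}
    total = 0
    correct = 0
    for actual, row in confusion.items():
        row_total = sum(row.values())
        rates[actual] = row[actual] / row_total  # fraction of this class recovered
        total += row_total
        correct += row[actual]
    return rates, correct / total
```

Similar rates for both classes, as reported here, indicate that the errors are not concentrated in one of them.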

Note that these data correspond to the accuracy of the pixel segmentation method, not to the classification of the fruit, which is a subsequent step that involves a decision algorithm and is out of the scope of this work. However, the model has been applied to segment the whole multispectral images of the fruit with good visual results.

Fruit Classification

There is no standard criterion to decide whether a fruit should be considered as presenting decay lesions and therefore be removed from the production line. In principle, even the smallest decay lesion detected should imply that the fruit has to be discarded. In practice, however, the smallest unit to be considered in the images is the pixel, and a fruit with a single pixel or very few pixels segmented as decay should obviously not be classified as decayed, since errors in the segmentation of pixels occur. Otherwise, most of the inspected fruit would be erroneously classified as decayed. Therefore, a criterion is needed to set a threshold. A fruit was considered as presenting a decay lesion if at least 5 % of its total pixels (area) were classified as “rotten”. This threshold was already used by Gómez-Sanchis et al. (2008a), while in other works the threshold is not stated (Qin et al. 2009, 2012).
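The decision rule is simple enough to be written in a few lines. The sketch below assumes that the list of labels contains only the pixels of the fruit (background already excluded); the function name is hypothetical.

```python
def is_decayed(pixel_labels, threshold=0.05):
    """Classify a fruit as decayed if at least `threshold` of its pixels are rotten.

    pixel_labels: labels of the pixels belonging to the fruit only.
    """
    if not pixel_labels:
        raise ValueError("the fruit contains no pixels")
    rotten = sum(1 for label in pixel_labels if label == "rotten")
    return rotten / len(pixel_labels) >= threshold
```

Raising the threshold makes the decision more tolerant of isolated segmentation errors, at the cost of missing smaller lesions.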

Table 2 shows the classification results of fruit for the training and test sets. The success rate is around 95 %, which is similar to the results obtained in other works aimed at detecting decay lesions or serious defects in the skin of citrus fruits (Gómez-Sanchis et al. 2008b; Qin et al. 2009, 2012). In contrast, Kim et al. (2009) achieved a success rate of 100 % in the detection of canker, but they inspected only small regions of interest containing the defect rather than the entire fruit.

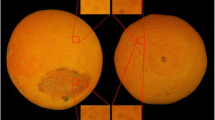

Figure 5 shows the segmentation performed on ten example fruits from the database. These examples are representative of a wide range of situations, in our case fruits in different stages of ripeness, with common damage and with rotting. The defects observed in the fruit were labelled manually by an expert. The areas marked in yellow show scars, spots with an abnormal colour or stems. The areas marked in blue are those affected by early decay lesions. The segmented images show the areas that the system classified as “non-rotten” in orange and those classified as “rotten” in purple.

RGB images and segmented images of mandarins with decay lesions. In the segmented images, the orange pixels represent the healthy areas of the fruit, while the purple areas represent zones that the system has classified as rotten. The blue outlines are the areas that an expert classified visually as decay lesions

Conclusions

This work presents the main design aspects that a researcher in an applied field should take into account in order to set up a hyperspectral vision system based on LCTF technology from its fundamental components. Details are given of the main points involved in designing this system, which is used to acquire images of spherical fruits (in this particular case, citrus fruits). The design of each part of the system is described, and a methodology for the correct acquisition of images is also offered.

Several auxiliary elements were designed. These included the dome-shaped inspection chamber and the filter guidance and positioning system for acquiring hyperspectral images in a systematic manner using the two LCTF filters (VIS-07 and NIR-07), which made it possible to carry out the trials with a large number of samples.

We have justified the need to develop a software application that allows the different elements of the system to be synchronised, while also performing tasks related to calibration and the systematic acquisition of hyperspectral images. In addition, we determined the optimum working parameters of the hyperspectral vision system (working spectral range, resolution, heat-up time of the system and exposure times per band) that were needed to obtain high-quality hyperspectral images. A methodology has been put forward for unifying the spectral response of the system using variable exposure times, assuming that the response of the CCD is linear with the exposure time. Lastly, a protocol for acquiring hyperspectral images using the system developed in this work has also been proposed.
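As a rough illustration of how variable exposure times can flatten the spectral response under the linearity assumption, the sketch below computes one exposure time per band from a white-reference measurement. It is a simplified sketch and not the procedure used in this work: the function name, the dictionaries of per-band levels, the reference exposure and the target level are all hypothetical. If the dark-corrected signal is proportional to the exposure time, then the exposure needed in a band is the reference exposure multiplied by the ratio of the target level to the measured level.

```python
from typing import Dict


def unified_exposure_times(
    white_levels: Dict[int, float],
    dark_levels: Dict[int, float],
    reference_exposure_ms: float,
    target_level: float,
) -> Dict[int, float]:
    """Return an exposure time (ms) per band so that the dark-corrected
    white-reference signal reaches `target_level` in every band.

    Assumes a linear sensor: signal - dark = gain(band) * exposure.
    All inputs are hypothetical measurements taken at `reference_exposure_ms`.
    """
    exposure_times = {}
    for band_nm, white in white_levels.items():
        signal = white - dark_levels[band_nm]  # remove the dark offset
        if signal <= 0:
            raise ValueError(f"no usable signal in band {band_nm} nm")
        # Linear scaling: a band with half the signal needs twice the exposure.
        exposure_times[band_nm] = reference_exposure_ms * target_level / signal
    return exposure_times


# Example with two made-up bands measured at a 10 ms reference exposure.
times = unified_exposure_times(
    white_levels={460: 120.0, 700: 220.0},
    dark_levels={460: 20.0, 700: 20.0},
    reference_exposure_ms=10.0,
    target_level=200.0,
)
```

In this made-up example the 460 nm band, where the system is less sensitive, receives a longer exposure than the 700 nm band. In practice the computed times would also have to respect the exposure limits of the camera, which are not modelled here.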

The system described above was used to detect rotting in citrus fruits, since this is a problem that has still not been solved using conventional vision systems based on colour images. Our results show that the proposed technique allows a good segmentation of decay lesions in multispectral images of citrus fruits, which makes it possible to detect rotting in the early stages of its development.

References

Aleixos, N., Blasco, J., Navarrón, F., & Moltó, E. (2002). Multispectral inspection of citrus in real-time using machine vision and digital signal processors. Computers and Electronics in Agriculture, 33(2), 121–137.

Ando, F. (1990). Multi-functional solid state imaging techniques. Journal of the Institute of Television Engineering, 44(2), 127–131.

Bei, L., Dennis, G. I., Miller, H. M., Spaine, T. W., & Carnahan, J. W. (2004). Acousto-optic tunable filters: fundamentals and applications as applied to chemical analysis techniques. Progress in Quantum Electronics, 28(2), 67–87.

Blasco, J., Aleixos, N., Gómez-Sanchis, J., & Moltó, E. (2009). Recognition and classification of external skin damage in citrus fruits using multispectral data and morphological features. Biosystems Engineering, 103(2), 137–145.

Cubero, S., Aleixos, N., Moltó, E., Gómez-Sanchis, J., & Blasco, J. (2011). Advances in machine vision applications for automatic inspection and quality evaluation of fruits and vegetables. Food and Bioprocess Technology, 4(4), 487–504.

Eckert, J., & Eaks, I. (1989). Postharvest disorders and diseases of citrus. CA, USA: The citrus industry, University California Press.

ElMasry, G., Wang, N., Vigneault, C., Qiao, J., & ElSayed, A. (2008). Early detection of apple bruises on different background colours using hyperspectral imaging. LWT- Food Science and Technology, 41(2), 337–345.

Erives, H., & Fitzgerald, G. J. (2005). Automated registration of hyperspectral images for precision agriculture. Computers and Electronics in Agriculture, 47(2), 103–119.

Geladi, P. L. M. (2007). Calibration standards and image calibration. In H. F. Grahn & P. Geladi (Eds.), Techniques and applications of hyperspectral image analysis, pp 203–220. Chichester, England: John Wiley & Sons.

Gómez-Sanchis, J., Gómez-Chova, L., Aleixos, N., Camps-Valls, G., Montesino-Herrero, C., Moltó, E., et al. (2008a). Hyperspectral system for early detection of rottenness caused by Penicillium digitatum in mandarins. Journal of Food Engineering, 89(1), 80–86.

Gómez-Sanchis, J., Moltó, E., Camps-Valls, G., Gómez-Chova, L., Aleixos, N., & Blasco, J. (2008b). Automatic correction of the effects of the light source on spherical objects. An application to the analysis of hyperspectral images of citrus fruits. Journal of Food Engineering, 85(2), 191–200.

Gómez-Sanchis, J., Martín-Guerrero, J. D., Soria-Olivas, E., Martínez-Sober, M., Magdalena-Benedito, R., & Blasco, J. (2012). Detecting rottenness caused by Penicillium in citrus fruits using machine learning techniques. Expert Systems with Applications, 39(1), 780–785.

Hecht, E. (2003). Optics (4th ed.). Reading, USA: Addison Wesley.

Karoui, R., & Blecker, C. (2011). Fluorescence spectroscopy measurement for quality assessment of food systems—a review. Food and Bioprocess Technology, 4(3), 364–386.

Kim, D. G., Burks, T. F., Qin, J., & Bulanon, M. D. (2009). Classification of grapefruit peel diseases using colour texture feature analysis. International Journal of Agricultural and Biological Engineering, 2(3), 41–50.

Kokawa, M., Sugiyama, J., Tsuta, M., Yoshimura, M., Fujita, K., Shibata, M., Araki, T., & Nabetani, H. (2012). Development of a quantitative visualisation technique for gluten in dough using fluorescence fingerprint imaging. Food and Bioprocess Technology. DOI: 10.1007/s11947-012-0982-7 (In press).

López-Álvarez, M., Hernández-Andrés, J., Romero, J., Campos, J., & Pons, A. (2009). Calibrating the elements of a multispectral imaging system. Journal of Imaging Science and Technology, 53(3), 31102-1-31102-10.

Lorente, D., Blasco, J., Serrano, A. J., Soria-Olivas, E., Aleixos, N., & Gómez-Sanchis, J. (2012b). Comparison of ROC feature selection method for the detection of decay in citrus fruit using hyperspectral images. Food and Bioprocess Technology. DOI: 10.1007/s11947-012-0951-1 (In press).

Lorente, D., Aleixos, N., Gómez-Sanchis, J., Cubero, S., García-Navarrete, O. L., & Blasco, J. (2012a). Recent advances and applications of hyperspectral imaging for fruit and vegetable quality assessment. Food and Bioprocess Technology, 5(4), 1121–1142.

Lorente, D., Aleixos, N., Gómez-Sanchis, J., Cubero, S., & Blasco, J. (2013). Selection of optimal wavelength features for decay detection in citrus fruit using the ROC curve and neural networks. Food and Bioprocess Technology, 6(2), 530–541.

Magwaza, L. S., Opara, U. L., Nieuwoudt, H., Cronje, P. J. R., Saeys, W., & Nicolaï, B. (2011). NIR Spectroscopy applications for internal and external quality analysis of citrus fruit—a review. Food and Bioprocess Technology, 5(2), 425–424.

Menesatti, P., Zanella, A., D’Andrea, S., Costa, C., Paglia, G., & Pallottino, F. (2009). Supervised multivariate analysis of hyper-spectral NIR images to evaluate the starch index of apples. Food and Bioprocess Technology, 2(3), 308–314.

Moltó, E., Blasco, J., & Gómez-Sanchis, J. (2010). Analysis of hyperspectral images of citrus fruits. In D.-W. Sun (Ed.), Hyperspectral Imaging for food quality analysis and control (pp. 321–348). San Diego, California, USA: Academic Press/Elsevier.

Palou, L., Smilanick, J. L., & Droby, S. (2008). Alternatives to conventional fungicides for the control of citrus postharvest green and blue moulds. Stewart Postharvest Review, 4(2), 1–16.

Pang, Z., Laplante, N. E., & Filkins, R. J. (2012). Dark pixel intensity determination and its applications in normalising different exposure time and autofluorescence removal. Journal of Microscopy, 246(1), 1–10.

Pathare, P. B., Opara, U. L., & Al-Said, F. A. (2013). Colour measurement and analysis in fresh and processed foods: a review. Food and Bioprocess Technology, 6(1), 36–60.

Peng, Y., & Lu, R. (2006). An LCTF-based multispectral imaging system for estimation of apple fruit firmness; part I. acquisition and characterization of scattering images. Transactions of ASAE, 49(1), 259–267.

Qin, J., Burks, T., Ritenour, M., & Bonn, W. (2009). Detection of citrus canker using hyperspectral reflectance imaging with spectral information divergence. Journal of Food Engineering, 93(2), 183–191.

Qin, J., Burks, T. F., Zhao, X., Niphadkar, N., & Ritenour, M. A. (2012). Development of a two-band spectral imaging system for real-time citrus canker detection. Journal of Food Engineering, 108(1), 87–93.

Sun, D.-W. (Ed.). (2009). Infrared spectroscopy for food quality analysis and control. San Diego, California, USA: Academic Press/Elsevier.

Sun, D.-W. (Ed.). (2010). Hyperspectral imaging for food quality analysis and control. San Diego, California, USA: Academic Press/Elsevier.

Vadivambal, R., & Jayas, D. S. (2011). Applications of thermal imaging in agriculture and food industry—a review. Food and Bioprocess Technology, 4(2), 186–199.

Valencia-Chamorro, S. A., Palou, L., del Río, M. A., & Pérez-Gago, M. B. (2011). Performance of hydroxypropyl methylcellulose (HPMC)-lipid edible coatings with antifungal food additives during cold storage of ‘Clemenules’ mandarins. LWT- Food Science and Technology, 44(10), 2342–2348.

Vélez-Rivera, N., Blasco, J., Chanona-Pérez, J. J., Calderón-Domínguez, G., Perea-Flores, M. J., Arzate-Vázquez, I., Cubero, S., & Farrera-Rebollo, R. (2013). Computer vision system applied to classification of ‘Manila’ mangoes during ripening process. Food and Bioprocess Technology. DOI: 10.1007/s11947-013-1142-4 (In press).

Vidal, A., Talens, P., Prats-Montalbán, J. M., Cubero, S., Albert, F., & Blasco, J. (2012). In-line estimation of the standard colour index of citrus fruits using a computer vision system developed for a mobile platform. Food and Bioprocess Technology. DOI: 10.1007/s11947-012-1015-2 (In press).

Vila-Francés, J., Calpe-Maravilla, J., Gómez-Chova, L., & Amorós-López, J. (2010). Analysis of acousto-optic tunable filter performance for imaging applications. Optical Engineering, 49(11), 113203.

Vila-Francés, J., Calpe-Maravilla, J., Gómez-Chova, L., & Amorós-López, J. (2011). Design of a configurable multispectral imaging system based on an AOTF. IEEE Transactions on Ultrasonics, Ferroelectrics, and Frequency Control, 58(1), 259–262.

Wang, W., Li, C., Tollner, E. W., Rains, G. C., & Gitaitis, R. D. (2012). A liquid crystal tunable filter based shortwave infrared spectral imaging system: calibration and characterization. Computers and Electronics in Agriculture, 80, 145–154.

Wu, D., Wang, S., Wang, N., Nie, P., He, Y., Sun, D. -W., & Yao, J. (2012). Application of time series hyperspectral imaging (TS-HSI) for determining water distribution within beef and spectral kinetic analysis during dehydration. Food and Bioprocess Technology. DOI 10.1007/s11947-012-0928-0 (In press).

Zhu, F., Zhang, D., He, Y., Liu, F., & Sun, D. -W. (2012). Application of visible and near infrared hyperspectral imaging to differentiate between fresh and frozen–thawed fish fillets. Food and Bioprocess Technology. DOI 10.1007/s11947-012-0825-6 (In press).

Acknowledgements

This work has been partially funded by the Instituto Nacional de Investigación y Tecnología Agraria y Alimentaria de España (INIA) through research project RTA2012-00062-C04-01 and RTA2012-00062-C04-03 with the support of European FEDER funds, the Universitat de València through project UV-INV-AE11-41271, and the UPV-IVIA through collaboration agreement UPV-2013000005.

Cite this article

Gómez-Sanchis, J., Lorente, D., Soria-Olivas, E. et al. Development of a Hyperspectral Computer Vision System Based on Two Liquid Crystal Tuneable Filters for Fruit Inspection. Application to Detect Citrus Fruits Decay. Food Bioprocess Technol 7, 1047–1056 (2014). https://doi.org/10.1007/s11947-013-1158-9

DOI: https://doi.org/10.1007/s11947-013-1158-9