Abstract

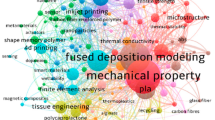

In this review, some of the latest applicable methods of machine learning (ML) in additive manufacturing (AM) have been presented and the classification of the most common ML techniques and designs for AM have been evaluated. Generally, AM methods are capable of creating complex designs and have shown great efficiency in the customization of intricate products. AM is also a multi-physical process and many parameters affect the quality in the development. As a result, ML has been considered as a competent modeling tool for further understanding and predicting the process of AM. In this work, most commonly implemented AM methods and practices that have been paired with ML methods along with their specific algorithms for optimization are considered. First, an overview of AM and ML techniques is provided. Then, the main steps in AM processes and commonly applied ML methods, as well as their applications, are discussed in further detail, and an outlook of the future of AM in the fourth industrial revolution is given. Ultimately, it was inferred from the previous papers that the most widely applied AM techniques are powder bed fusion, direct energy deposition, and fused deposition modeling. Also, there are other AM methods which are mentioned. The application of ML in each of the renowned techniques are reviewed more explicitly. It was found that, the lack of training data due to the novelty of AM, limitations of available materials to be applied in AM methods, non-standardization in AM data and process, and computational capability were some of the constraints of the application of ML in AM methods.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

1.1 Additive Manufacturing

The practice of manufacturing has been one of the key components of human development and progress through the ages. Some of the most significant methods include metal forming, machining, joining, casting, powder metallurgy, and three-dimensional printing or additive manufacturing (AM) [1]. AM is already in high demand in many industries such as aerospace and medical engineering [2, 3]. Moreover, considered to be an integral aspect leading to industry 4.0 [4]. In medical applications, AM has become the most commonly applied manufacturing method in hearing aid gadgets, dental implants and prosthetic bones and cartilages [5]. With the availability and commercialization of this technology, even novice, non-technical household applications have been reported to be functional and practical for either maintenance or self-customization [6]. The methods used in AM can create objects with sophisticated geometries layer after layer [7,8,9,10,11,12,13,14,15,16,17]. Although there are distinct methods under the class of AM, generally the process steps of each method follow the same stages and each step of different methods of AM fall under the same process step as depicted below (Fig. 1) [18].

The process steps in AM [18]

The most applied AM methods are powder bed fusion (PBF), direct energy deposition (DED), and binder jetting (BJ) [19]. A classification of AM processes is presented below (Fig. 2) [20]. Each technique is different in terms of used material, layer formation and printed product. For each material and manufacturing method, different measures and considerations need to be taken to finish the product and to achieve the highest quality. The considerations and steps required to establish a solid database are distinctive based on their difference in printing. Therefore, sampling, testing and material analysis in 3D printing methods are explained. Since each printing method is chosen based on the properties and applications of printed parts, properties differ in terms of surface smoothness, strength, durability, dimension preciseness and geometrical complexity [21].

Classification of notable methods of AM [20]

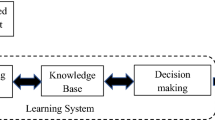

Overall, AM has lots of distinct advantages over traditional manufacturing methods. However, the preliminary parameters are quite hard to tune since they may significantly affect the microstructure of parts being printed and the overall quality of printed objects. Understanding process structure property performance (PSPP) relationship for 3D printing by using novel numerical and analytical modeling is a hurdle on its own, and nowadays, artificial intelligence (AI), particularly machine learning (ML) and neural networks (NN), are capable of performing advanced regression analyses and complex pattern recognition without the need to create analytical and physical models [22]. In this review, the aim is to identify and discuss the applications of ML in some commonly used AM processes. The general process is depicted in Fig. 3 and gives an intuition of how these two methods could work together along with their functionalities and considerations[23]. The application of ML techniques, identification of challenges and advantages, and possibility of improvement in future works as well as the integration of these two concepts (ML and AM) are of utmost importance for a thorough understanding. Also, different provisions need to be considered for the maintenance of the aforementioned AM installments due to rapid growth of AM. Therefore, to tackle this shortcoming, a practice known as prognostics and health management (PHM) which is a combined practice of state monitoring for refining the accessibility and competence of high-value industrial apparatus and plummeting the maintenance expenses [24].As it happens, a method known as quantum ML technique is very helpful for health monitoring purposes of the installments and other approaches which will be discussed in the AM outlook section of the paper. Conventional ML methods are shown to be not competent when it comes to handling large amounts of data in real-time [25].

Graphical representation of the steps taken in the application of ML in AM [23]

In the following, AM methods are evaluated as a preliminary introduction for painting a general picture regarding the capabilities of different AM methods.

1.1.1 Powder Bed Fusion

PBF is a state-of-the-art AM method that has progressed through many research works and advancements in industry [26]. PBF is subdivided into laser beam melting (LBM) or laser powder bed fusion (LPBF), electron beam melting (EBM) and selective laser sintering (SLS). Generally, in PBF, energy source directly melts and sinters the materials which are typically in powdered form [27]. Both metal and polymer-based procedures can be implemented for end-use manufactured parts and typically, this method is very demanding in terms of energy consumption [28]. Figure 4 [29] shows the aforementioned process. LPBF is particularly one of the well-established methods which has made notable progress to become fully commercialized [30,31,32].

Laser powder bed fusion device setting [29]

1.1.2 Directed Energy Deposition

DED technique allows manufacturing objects by liquidizing materials while being deposited. This method is mostly used for metallic powders. DED can be easily paired with conventional subtractive methods for machining. Furthermore, DED is very applicable in maintenance and repairing since it has high deposition rate that could be used in repairing large components [33, 34]. This process generates three-dimensional parts by melting materials as it is placed by means of intense thermal energy such as electron beam, laser, or plasma arc. A scaffold system or robotic arm operates both energy source and material nozzle. A portable compartment is fixed along with a laser emitting source. Metal powder is concurrently directed into the nozzle to the required area; laser melts the powder which is then solidified to form a layer. Portable compartments are not fixed at specific axes and have the freedom to move along different orientations. Some of the notable hindrances are low building resolution of manufactured parts, high cost of manufacturing, and supportless structures [35]. Figure 5 shows a DED laser process [36].

Laser DED installment [36]

1.1.3 Material Extrusion/Fused Deposition Modeling

FDM, sometimes referred to as fused filament fabrication (FFF), (Fig. 6) method is extensively used for manufacturing geometrically complex objects in a noticeably short time span to suit customer needs [37]. With control and command over processing parameters due to the enhanced capability of operating machines, customized biomedical parts are becoming easier to produce [38]. In FDM, an uninterrupted strand of a thermoplastic polymer is used to 3D print layers of materials. The process of FDM is generally described as the extrusion of heated feedstock plastic filaments via a nozzle tip to deposit multiple layers onto a platform to build up the structure layer after layer from a digital CAD model of the part [19, 39]. However, FDM full-scale use is compromised by limited materials available in the market. As a result, it is important to fix process parameters in the stage of fabrication [40, 41].

Fused deposition modelling process of polymer material [42]

1.1.4 Vat-photopolymerization

Vat-photopolymerization (VATP) methods are considered as one of the low-cost AM processes in terms of required energy input [43, 44]. Moreover, this method has been very effective because of its high resolution and printing speed, especially in drug delivery and bespoke medical devices [45]. The very essence of material formation is based on a chemical process known as photopolymerization, which occurs under light exposure in photocurable polymers resulting in cross-linking of the material to a 3D form. Some different approaches include mask-image-projection-based stereolithography, laser writing stereolithography, and continuous light interface process (CLIP) [46,47,48,49,50]. In this section, we put CLIP approach under scope due to its potential for continuous AM and discrete layer segmentation. In CLIP approach, the most important parameter to monitor is continuous elevation speed which is shown by letter V in Fig. 7. Non-accurate speed elevation results in poor bonding of solidified materials if CLIP is too fast and adhesion to oxygen preamble window occurs if CLIP is too slow. The knowledge behind the proper elevation speed is mostly derived from experimental data and because printing geometry differs for each design purpose. The data from the empirical trial and error methods could not always be helpful, thus propelling researchers to consider data-driven and ML approaches to set the right parameters for each design [51].

A CLIP process for prototyping microneedle species. The orientation of the most important parameter ‘V’ is shown in orange [52]

1.1.5 Jetting Based AM Processes

Several distinct jetting processes based on the classification made by ASTM [53] are binder jetting and material jetting. In general, droplets of build material are jetted to a build platform similar to two dimensional inkjet printing, either jetted countinusly or with drop on demand method. Material, and for binder jetting, liquid bonding agent, is jetted to bind powder materials. While both material jetting and binder jetting share some similar traits, they have their own applications, advantages and strategies. Material jetting is very accurate with a minimum amount of waste and a variation of material parts and hues of color could be printed under one process, but there are only waxes and some polymers that could be used as print material whereas in binder jetting, a plethora of materials with much better and reliable mechanical properties such as metals, ceramics and polymers are used. Furthermore, in binder jetting method, there are many possibilities in the creation of parts with different mechanical properties while material jetting method does not have this important advantage [54].

-

i.

Binder Jetting

BJ printing is a process where a binder material and a base material which are typically powder, are treated. Liquid binders are extended through a jetting nozzle which is referred to as inkjet printer. Inkjet printers spread powders and powdered materials are selectively combined into a solidified layer; needless to say the bound layer is only two dimensional [55].

-

ii.

Material Jetting

Material jetting (MJ), was first established by Objet Geometries Ltd. in 2000 and was later adopted by the company Stratasys in 2012 [56]. In accordance with the standards set by ISO/ASTM52900, the printing method is explained as droplets selectively deposited from the feedstock. There are variances between the steps printing devices operate based on, but the general process remains the same. This method has other variations such as Electrohydrodynamic jet printing, where instead of heat, pneumatic or piezo electric force is used. The electrical field exerts the liquid out of the nozzle, which helps immensely in printing electronic apparatuses [57]. In order to store the photopolymer materials, an air-excluding tanker is implemented to exert deposits of aforementioned materials from the tank to the nozzle to form a very thin layer on the construction platform. Afterward, ultra violet (UV) light with wavelengths of 190 and 400 nm is used as post-processing for curing [58, 59]. When curing is done, the construction platform is lowered until a certain layer thickness is achieved and then, the process gets repeated until the desired part is constructed. Furthermore, the support materials for overhung parts are a sort of gel-like material that can be removed by sonication in a vat of sodium hydroxide, heating, or waterjet [60, 61]. Figure 8 shows how MJ operation takes place [62].

A general depection of a material jetting process [62]

1.1.6 Sheet Lamination

Sheet lamination (SL) is a sub-branch of AM which was coined by Helisys of Torrance, in 1991. SL is sometimes also referred to as selective deposition lamination. However, the most prominent mode of SL is ultrasonic AM, which could have steps of other manufacturing techniques such as CNC milling, ultrasonic welding, and laminated object manufacturing. Generally, in SL methods, to make partitions, cuts are made via laser and contrariwise, In order to make bonds between sheets, typically ultrasound waves are implemended [63]. Figure 9 depicts how the process is undertaken [64].

A sheet lamination installment [64]

This method mostly consists of a mechanism in which sheets are directed above the build platform. A roller which acts as a heater applies the necessary pressure to attach the sheet above to the one below. After performing laser cut to the layer, build platform is lowered to the thickness of the pre-existing sheet, which is mostly between 0.002 and 0.020 inches. Thus, one iteration is completed and another sheet advances on the top. The former sheet is deposited, while the platform rises again and the roller heating source enforces pressure to make another bond. As mentioned, this method has a number of names used by academia and industry. To the best of the authors knowledge, there is no explicit research done relating to the optimization of SL by ML and data-driven approaches. In general, it is shown that SL has high fabrication speed and there is no need for support structures. It also has low warping and internal stress. Moreover, multi-material and multi-color modes can be used. However, the shortcomings of this approach which require further study and improvement, are high amount of waste creation and issues with removing support entrapped in internal cavities. Moreover, thermal cutting produces harmful gasses and the lamination is likely to happen due to the heat of laser [63].

1.2 Machine Learning

ML techniques function as a medium for understanding complex patterns. Visual words and histogram of oriented gradients (HOG) are some of the features that are used in ML for image analysis [65]. These features mostly fall in the unsupervised category, hindering the proper consistency of the outcome of recognition task. In this approach, ML applies NN and convolutional deep neural network (CDNN) methods with the aid of supervised learning. This method picks up underlying patterns that could not be identified easily by automatic image processing. This method, which is exclusively inspired by the visual cortex of brain, has proven to be effective in inspection, motion detection, etc. [66,67,68]. While implementing ML, some assumptions are considered initially. The underlying premises and assumptions applied to develop an ML model need to be thoroughly understood to avoid any misconception. Figure 10 shows a classification of some of the most important assumptions in this type of modeling.

ML models should be applied according to the assumptions considered in the model. Thus, resulting in a thorough assessment of the development of the model [69]. It is often believed that when a machine changes its configurations over time in a way that it performs better and more optimally, it has articulated learning behavior. Changes could vary between enhancement of an already applicable system or developing a totally new system. What a machine considers to change based on its experience could be a set of data or a structure of a program relating to the feedback it receives from its interaction with external information. ML is a branch of AI relating to changes that perform objectives in a more efficient way and it is associated with the AI. These tasks could be prediction, forecasting, diagnoses, robot control or planning Based on most classifications, ML could be classified into three paradigms (Fig. 11) [70].

Main paradigms of ML [70]

1.2.1 Supervised ML

Supervised learning is the most commonly implemented ML method. In this method, ML models need to learn functions in a way that inputs fit the outputs. Then, the function reveals information from categorized training data and each input is related to its assigned value. The algorithm embedded in a ML model is capable of making novel observations never made before or uncovering patterns in a training data set [71]. Some considerations need to be made and some initial steps are required in order to perform this task [72,73,74].

-

Acquiring a dataset and data processing

-

Feature selection (target variable)

-

Splitting the dataset (training, cross-validation, testing)

-

Hyperparameter tuning and prediction

Figure 12 shows the structure of a supervised ML model [72]. One of the most approached predictive statistical analysis in supervised method is linear regression [75]. Each analysis is carried out by a specific algorithm which should be chosen with prior knowledge based on linearity or non-linearity of the problem. However, by comparing error metrics of each regression, best algorithm could be identified [76].

General schematic diagram of a supervised ML model processing data related to cancer [72]

In the following, the aforementioned steps are discussed in more detail.

-

i.

Dataset acquisition and processing

Data acquisition can be regarded as a concept where physical events that happened in real world gets transformed into electrical signals, converted, and scaled in digital format for further analysis, processing, and storage within the computer memory storage. In general data acquisition systems are not only for gathering data but also for operating on the data [77]. Having complete data is very important for ML models to perform better and get a robust analysis [78]. Nowadays, DL models can even operate as good as real ophthalmologists in detecting diabetic eye issues from an image, all owning to the computational power of models and large amount of data to train the models [79]. With modernization and new fields of science used in the industry, lack of prior data is a problem that should be dealt with, particularly with deep learning models that require even more data than traditional ML. Initially, data acquisition approaches are used to harvest, augment, or generate of novel data sets. Afterwards, data labeling should be done and then, training the already achieved or improve the labeling and accuracy of the gathered dataset. In this aspect, ML engineers and data scientists and data managers should work together. In the following, a diagram of steps in data collection, acquisition and processing is presented (Fig. 13) [80].

Study landscape of data collection for ML [80]

-

ii.

Target variable selection

As the name indicates, the target variable is the feature that we aim to get or achieve in the ML task. Whether classification or regression, the features should be clear, such as the target variable. Target variables in the form of labeled targets are the pivotal point where supervised ML algorithms use historical data to pick apart patterns and discover relations amongst the other unknown features of the dataset and the set target variable. Without properly labeled data, supervised ML tasks would not be able to plot data to outcomes [81].

-

iii.

Splitting the dataset

Based on most references and as a convention, it is understood that it is best to split the dataset to prevent overestimation and overfitting. In the following, we discuss the most noticeable sets of grouping: training set, cross-validation set, and testing test. The training test is mostly the largest set. The model trains based on the insight that it gains from the data that is fed from the training set. The training set is basically the subset of the whole data set available. In this phase, we can forecast the weights, the bias of the model if it’s a NN. Therefore, we can optimize the hyperparameters, which are the parameters that control the initial setting of the system. They are immensely important because after setting them, they cannot be changed like the weights or biases or the parameters of the system. In the cross-validation phase, we estimated the loss function or error of the system, and therefore minimizing it to get the best prediction. And then finally, we use the testing set, which is the smallest of the aforementioned sets and results in a non-bias result because the testing data are new to the model. This stage acts as a close simulation to a real life occurrence and demonstrates how the model would operate in a real situation [82].

-

iv.

Hyperparameter tuning and prediction

Hyperparameters are very important due to the fact that they should be set before each iteration, and they define the very fundamentals of an ML model, unlike process parameters that can be manipulated while data learning process is in process. In the case of a DNN, a part of it is determining the number of hidden layers, nods, neurons, step size, and batch size. One needs to differentiate between the hyperparameters that are related to the algorithm, such as the aforementioned step size, batch size, and the ones that are related to the structure of the model, such as the number of hidden layers, method of nods connecting to each other and the number of nods. As is maintained, hyperparameters are constant while in operation, but process parameters can change. The progression to tuning or optimization of hyperparameters could be achieved when enough number of tests runs and trials are undertaken. The pace of training of a DL model is determined by the rate of convergence. There are methods known as super convergence methods where the crucial foundations of super-convergence Are training with a singular learning rate cycle and a hefty maximum learning rate [83, 84]. By comparison of the results of the test runs and making vigilant comparisons to real values of each data iteration, the accuracy of the model can be evaluated, and thereafter, we gain insight as to find the best values for the system to make a better combination of hyperparameters and more accurate predictions. A hyperparameter metric is a personal specification of a single target variable that is specified by choice of a human operator. The model accuracy is defined by a metric value and, therefore, can be determined if it is maximization or minimization that is the desired goal for our specified metric to fulfill [85].

1.2.2 Unsupervised ML

Methods classified as unsupervised ML are capable tools for the detection of similarities, thus drawing conclusions out of unclassified data by clustering them based on their similarities. When high dimensional problems are needed to be dealt with, these methods act fantastically in terms of finding correlations and patterns in such unclustered and vast environments and their visualization of many clusters that they classified. Furthermore, telling irrelevant inputs in models apart and eventually finding ways to produce materials under the same conditions and with the same quality are some features of unsupervised ML [86].

In industrial applications, a specific type of unsupervised ML, known as unsupervised transfer learning (UTL), has been found to be effective such that it could be considered as a robust anomaly detector that could be updated based on changing operating conditions. Needless to say, these models need comprehensive datasets [87]. Also, unsupervised learning could be used in cases where cyber-physical attack uncovering in AM is required [88].

1.2.3 Reinforced ML

ML approaches are very effective in dealing with repetitive tasks. Reinforced learning (RL) is very capable in the environments that machines are gathering field knowledge. There is no specific need for direct programming, and no human intervention is involved. The way this process undergoes is by defining objectives for the machine as rewards in the case of positive progress and punishments should the machine regresses away from the set objective. Figure 14 is a general workflow of a RL agent [89].

Generic depiction of process steps of a reinforced ML model [89]

As the illustration above shows, there is an iteration shown as the observation phase \(O_{t}\), in this iteration, everything that needs to be observed and acknowledged by the agent will be monitored and unveiled and the consensus is that there will be no information which will be concealed in this iteration. The embedded policy within the learning process signifies the agent’s decisions and reaction to the observation that it conveyed. There are typically two form of policies, deterministic and stochastic. A deterministic policy is a precise action over a current state of observation \(a = \pi (.|Ot)\). Contrariwise, a stochastic policy is a distribution of actions over a current state of observation \(a = \mu \left( {Ot} \right).\) Moreover, a reward signal is the objective of the RL problem and it’s directly influenced by the ongoing observation state and the actions taken in \(r = R\left( {Ot,a} \right)\). The ultimate aspiration of the system is to maximize the reward it accumulates and maximization of the return rate Gt with discount rate γ ∈ [0 1].

Modelling of the environment allows for a deeper understanding and accurate postulation as to how the environment would behave. The dynamics of the environment could be mnemonically expressed as P, that could be either deterministic (Ot = p(Ot, a)) or stochastic Ot = p(Ot, a) [90].

There are many possible applications of this ML approach, for instance if we consider an engineering design process, each decision taken in the process of design could have either positive or negative impact depending on the design objective. Numerous actions and approaches will be taken until the satisfactionary results will be achieved and each one of them has their rewards or punishments in the development of the process. There have been numerous reports of novel applications of RL method. In robotics, RL is a promising prospect for optimization and further enhancing robotic manipulations [90]. RL models are still somewhat considered as black box models. However, with methods to elucidate the intricate decision making of RL models and agent’s behavior, RL models could help in acquiring scientific insight on the complex approaches it takes [91]. In a study in order to make Corrections in the framework for process control of robotic wire arc AM (WAAM) RL was used and the results signified that by using RL, learning architecture can be used in conjunction with real parts printing giving better chance of in-situ study, therefore minimizing the obligatory training time and material depletion. The proposed learning framework is applied on an actual robotic WAAM system and empirically assessed [92].

1.2.4 Evaluation of ML Model Performance

In ML model performance, availability of data is an important factor. Tasks regarding voice recognition, natural language processing or image recognition have vast data availability, and in contrast, in a field like bio-informatics where data acquisition tasks are very hard to come by and are generated at quite a high price. In ML, there are three particular options to tackle the model evaluation (predictor). Independent dataset test, cross-validation test, and re-substituting. In the independent dataset approach for model evaluation, all the data sets are divided in a random fashion between two uneven parts, meaning that one part is deliberately smaller and the other larger, reason being that the larger part is designated as the training data and the smaller part for the final testing of the model. This approach is sometimes referred to as sub-sampling as well. The method however, operates on a small testing pool of datasets, which would result in volatile results on each testing evaluation. In simple terms, in each test, the results could be different from one another, which will result in inconsistency in results between real life results and test results, often higher or lower. In the resubstituting test, there is no difference between the testing pool and the training pool of data, and that becomes problematic as the model evaluation results are often far too optimistic and not very accurate. For example, a 99% accuracy could mean that the model is overestimated and rendered inoperable in real applications. The next type of model evaluation is known as cross-validation. Even though all the data available are used for testing, the way they are used are far more different compared to the resubstituting test, such that the whole data set is haphazardly divided in an arbitrary segmentation, denoted as ‘n’ part of equal magnitude. Furthermore, the testing and training operation will be carried out for ‘n’ times and in an arbitrary number of times where the objective is reached, denoted as ‘kth’ time, the ‘kth’ part will be used as the testing set while the remaining n-1 parts are still in training, and finally, after n rounds, every set of samples are used for testing phase for just once and by averaging the whole forecasting results over all the dataset, a better result for evaluation would be achieved (Fig. 15) [93].

A depiction of n-fold cross-validation [93]

Some other important measures of accuracy and precision of an ML model are described by confusion matrix and it could be expressed in four statements, as false negative (FN), false positive (FP), true negatives (TN), true positives (TP). Accuracy is therefore defined by how truthful the prediction of a model is. And it can be calculated as,

Precision is the amount of correct/ true positive cases divided by the number of real (true positive + false positive) examples forecasted by the classifier. The recall is the number of true positive instances divided by the number of predicted (true positive + false negative) instances by the classifier [94].

1.2.5 Deep Learning and Neural Networks Approach

In the past 40 years, ML and deep learning (DL) approaches to ML have proven to be very efficient and have made a huge impact in multiple industries [95]. Overall, both ML and DL belong to the much broader science of AI. The reason deep learning is addressed by this name is merely because of the multiple numbers of layers incorporated between the input layer, which is the first layer, and the last layer, which is the output layer [96]. Conventional NN models or multi-layer perceptrons have a singular nature that makes them well suited for binary problems via feeding them given inputs, which is inspired by the human’s brain learning and perception pattern. Dong et al. [97] generally break DL models down as ‘merely a great many classifiers working organized, which are grounded on linear regression followed by some activation functions. A variation of DL, known as convolutional neural networks (CNN), is proven to be very suitable for image recognition tasks. Image recognition data are multi-layered data and are well designed for such tasks [98]. This DL method has been applied in many different fields [99,100,101,102]. For the case of 3D topology optimization [103], a CNN method known as one-shot has been successfully used [104] . Furthermore, because of the broad usage and variety of applications of DL models, the following will be dedicated to the breakdown of different DL models. Additionally, Recurrent neural networks (RNN) are very complicated and intricated due to the fact that aside from the inputs that they are fed with, an internal addition task is also carried out, which is nothing but some older internal tasks which are recognized as a part of the output for the system [105].

-

i.

Classical neural networks (multi-layer perceptrons)

Conventional NN models or multi-layer perceptrons have a singular nature that makes them well suited for binary problems via feeding them given inputs, which is inspired by the human’s brain learning and perception pattern. Figure 16 [106] depicts genesis of the aforementioned models. This method was initially developed by an American psychology practitioner by the name of Frank Rosenblatt in 1958. Typically, a NN model with two sets of inner layers is a classical NN.

A single artificial neuron [106]

This method is best used for its adaptability and flexibility to different types of data, regression or classification task, under the condition when a real set of values are fed to it as inputs and are also very well implemented for datasets that are in the form of vectors or matrices [107, 108].

-

ii.

Convolutional neural networks

CNN can be considered as a more complicated and advanced form of classic NN. Capable of more complex pre-processing and data computation, these models have been used for image processing and handwritten digits (Fig. 17) [109] for the most part. However, it can also reach satisfactory results with non-image data as well. How this model makes its calculations is that the initial layers will pick apart traits like curves, edges, and lines of an image, the layers in between will group and assemble them and the final layers will recreate the image from the scratch all over. Moreover, there are some aspects where we can differentiate the CNN from its classic counterpart. Namely, the CNN models do not have to be directly connected to all out puts from the previous layers; or in other words, they have local connections. Second, the overlapping of input fields, within each layer. the neurons have the same weight in the whole layer and instead of the sigmoid function, it uses a non-linear function known as rectified linear function (RLF). Pooling layers will be combined with convolutional layers and the nominalization layers will be existent to keep the received signals in each level at the desired level. Overall, backpropagation will be used for training [110, 111].

A CNN sequence to classify handwritten digits [109]

-

iii.

Recurrent neural network

Recurrent neural networks (RNN) are very complicated and intricated due to the fact that aside from the inputs that they are fed with, an internal addition task is also carried out, which is nothing but some older internal tasks which are recognized as a part of the output for the system (Fig. 18) [105]. Therefore, the RNN treats a part of its output as input for the next reoccurring step, and we could postulate that this is where the origin of the name Recurrent came from. The embedded algorithm selects the part of the output that is going to be used as an input. This internal state is also referred to as memory. This extra step as memory has proven to be very effective in chronological data, such as audio, text, time series, and allows to handle data and inputs of different sequence span. The long-short term memory (LSTM) and the gated recurrent unit (GRU) are some the famous algorithm applied and for accounting in for the inference on an output, only the network's output after the last phase of step is applied. Time series forecast has many applications in astro-and geophysics where many stimulating systems have unseen conditions that cannot be determined due to their novelty and need past observations to be determined [112].

A RNN, where x represent the input words from the text, y represent the predicted next words and h hold the info for the former input words [105]

1.2.6 Common Loss Functions in Machine Learning

During the recent decades, ML methods have achieved great feats and one of the most influential aspects of this achievement is the performance of their corresponding algorithms. As a result, one of the functions that keeps the algorithms in check is known as the loss function. In this section, we discuss some of the most pragmatic and known loss functions in ML. Basically, ML algorithms could be grouped in two sets, supervised and unsupervised. Within the supervised learning set, the most important tasks to be carried out are regression and classification tasks. In regression, a continuous value is under scope, but a discrete target value in classification tasks. In simple terms, the common objective of regression tasks is to learn about an arbitrary function f(x) that derives the minimum loss value related to all training data. Table 1 compares mathematical statement for regression and binary classification tasks [113].

Furthermore, it is noteworthy to bear in mind that each loss function results demand a different ML approach. For example, the famous support vector machine (SVM) algorithm[114] needs the hinge loss to operate, exponential loss [115] leads to the classic boosting method, and logistic loss function lead to logistic loss function. The logistic loss function is a classification-oriented loss function that is very prominent in ML problems and the mathematical expression for logistic regression goes as follows:

where \(\left( {x,y} \right) \in D\) is a set of data containing a great number of arbitrary labels, y is a label assigned to an example, and owning to the fact that logistic regression is a classification task, then y should be 1 or 0, and \(y^{/}\) is the estimated value which could be any value between 0 and 1 [116]. Also, another important loss function that is very useful and worth mentioning is the cross-entropy loss function. This particular type of loss function is very useful for probability estimating of rare instances and stochastic environments and networks [117]. This powerful method has been nominated for applications such as importance sampling and optimal control [118] and probability density estimation [119].

2 Application of ML in AM

So far, the most commonly used ML method is supervised learning. This is due to its convenience in application and the fact that AM processes encompass several variables and complexities, which is a hurdle in exploiting full potential and advantages of ML. However, other ML methods are tested and implemented as well. For example, reports of using RL for toolpath optimization [120], unsupervised learning for anomaly detection [121], as well as finding trends in high dimensional data sets, constructing an analytical map of features detected in a manufacturing process, out of the inputs to the output responses for different problems, and finding patterns in dimensionally vast environments, are available [122]. ML models are classified as surrogate models and they are capable tools for studying non-linearities and could deliver good results with both simulated or experimental datasets [123]. Generally, ML modeling is often considered as surrogation modeling that could significantly speed up numerical simulation development [50]. Nonetheless, numerical methods such as finite element methods are quite common for the modeling of parameters such as heat source, feedstock, and melt pool dynamics [124,125,126]. Finite volume methods could also be implemented [127]. However, there are records of modifications on finite element analysis to best suit 3D printing process, such as quit/inactive method and other variations [128,129,130]. The most important aspect of ML is that it can be tuned by training data. In the case of AM, since it is a complex multi-physics process and a complex progression, there are many variables and parameters at play and therefore, considerable investments have been authorized to develop databases enriched with data informatics applicable within ML framework combined with legacy physics-based and Integrated Computational Materials Engineering (ICME). this combination had resulted in the development of tools to make predictive analysis regarding crack propagation due to fatigue and the nucleation, one example is known as \({\text{DigitalClone}}^{\textregistered }\) for AM which in a broader sense, is merely an ICME tool [131]. Consequently, this results in models with better process-structure property. Accurate grouping of each iteration of designed experiments improves and optimizes materials and designs [132]. ML models could only be efficient if it is fed with proper training data. Generally, the steps needed to be taken in an AM process could be divided into four major steps (Table 2) [133] and each step requires different considerations along its distinct ML method and algorithms. Considering the classifications presented in Fig. 19 [134], related common ML methods to the main process steps of AM are further described in Table 2.

Main process steps in AM [134]

2.1 AM Design

Typically, design for AM is a topic that could be divided into two segments; object design and the optimization of AM design. Also, owing to the novelty of the AM design, different materials and geometries need to be studied. Overall, matters related to topology optimization, design feature recommendation, shape deviations, material analysis for AM, and cost estimation fall within the scope of AM design. Therefore, studies regarding the aforementioned topics will be discussed in this section. The shape and dimensions of any desired part are among the initial phases that must be measured and considered. How efficiently and optimally the geometry of a shape is designed could go a long way and has major influences on price and even environmental pollution. An example of the importance of an optimal shape design is predominant in aeronautics, where reducing the volume of the material while still maintaining the quality and reliability can result in less fuel consumption. This can result in cheaper flights, less pollution, and lighter overall aeronautics [135]. One of the capabilities of AM methods is creating complex geometries and designs with intricate lattice structures. Also, the mass distribution of material [136, 137] in a part is well controlled and engineered in an AM design process. This new frontier of design poses its own challenges, including overhung structures [138, 139].

2.1.1 Topology Optimization

Topology optimization (TO) has started to gain attraction starting from the late 20’th century [140]. It became more popular as a reliable computational method for designing with less weight of parts being made in many fields including automotive and aerospace industries [135]. The main purpose of this step is to improve geometry to maximize load-bearing capacity while keeping stiffness and longevity at desired standard using as few materials as possible. TO methods also consider the optimization of natural frequency maximization and constraints and the minimization of constrictions. TO methods are applicable and have proven to be effective (Fig. 20) [141].

a Basic structural forms of problem, b TO executed on the part, c 3D printed object [141]

Nevertheless, TO faces many hurdles when paired with 3D printing. One of the main reasons stems from complex part shapes with overhung extensions, unique potential sections of each product with different geometries and cross-section areas and angles exceeding their threshold vertical to printing bed. Depending on the type of material considered to be applied in printing process and the geometrical shape of part as well as its actual functionality, support structures are selected and used (Fig. 21) [142].

a An additively manufactured part, b The support structure holding the shape upright, shown in blue [142]. (Color figure online)

However, the removal of support structure is an extra effort; that is why although AM and TO are theoretically compatible, most TO considerations end up slightly challenging when a part is 3D printed [143, 144]. Thus, designing the shape to eliminate the need for support structure will result in a non-optimal shape. Therefore, a balance between the application of support structure while decreasing its significance in designing is a favorable tactic. Figure 22 [145] is an attempt to achieve such a goal by conducting analysis on the assemblage and the prediction made by ML on strength and toughness. Overall, the premise is to introduce the angles that are overhung, regarded as a penalty term, which could be considered as constraints in the design space. Also, linear regression can be applied to all voids in solid interfaces to detect the areas which need support structure. It needs to be mentioned that self-supporting designs mostly hinder the design from being practical. One commonly used approach to tackle this issue is assigning a soft penalty label to the amount of effort required to remove support structure to the design objective of choice with small controllable coefficients. The proposed method is to start solving in an unconstrained design space followed by further reorganization of the solution iteratively to minimize the so-called soft penalties with respect to design objective [134].

One of the capabilities of TO in AM designs is the ability to optimize heat and fluid transfer via TO methods. Optimizations could also be implemented to generate more enhanced heat sink designs [147]. AM has improved the distribution of diverse lattice assemblies in designs (Fig. 23) [148]. Also, because of design freedom, the ability to include multiple materials in a part is practical and practiced today (Fig. 24) [149].

Complete scale recreation for the design made by a simulated design and b fixed design [148]

Negative Poisson’s ratio metamaterial designs with different volumetric ratios: a 20% for soft material and 40% for hard materials, green parts indicate hard materials and red parts present soft materials; b 35% green material and 25% red material; c 30% both hard and soft materials [149]

Shape and size optimization should also considered in TO [150,151,152,153,154]. Design and shape specifications related to each cell or voxel in each design could often be trained via ML methods by programming different material voxels as different features related to their assigned numbers (Fig. 25) [155].

Design of an AM product processed and fed to a ML model, each colored unit cell indicates complex assemblages of microstructures, giving each cell a number for the identification of each cell [155]

In a case when buckling due to enforcing maximum load to a desired area is likely to happen, a particle swarm optimization (PSO) method was used in order to ensure shape optimization. The process could be stated in the following general form:

where function F(X) is the objective to be minimized, and by optimization of variable X and the lower and upper bounds enforced to the variable are set as \(a_{j}\) and \(b_{j}\) In general, PSO is regarded as a gradient-free optimization technique. A definite number of randomly chosen particles, also stated as designs, are introduced over a specified design domain so that each and every particle or design are processed based on the initial objective function. Upgrading is perceptible in the form of their social and individual variances, meaning alterations they receive as a particle or in a batch compared to pre-upgrading state. Depending on the best global value of each particle in the swarm and individual initial optimal value of interest. In this instance of the application of this optimization (Fig. 26) [156], shape X and shape design variables were successfully optimized via PSO [156]. Further details were also provided in a study by Singh et al. [157]. The right side of Fig. 15 illustrates a feature knows as Rhino. Python was used to assess the buckling load applied to the stiffened panel-shaped part under load for each particle of the optimized method. Furthermore, this feature could be used for the creation of panel geometry. When geometry is generated with a different set of parameters optimized by PSO, in order to automate the generation of geometry while PSO is being operated. Then, the prepared geometry is transferred to be processed in MSC Nastran software environment for buckling analysis [156, 158].

Shape optimization via swarm particle optimization coupled with a trained DL model [156]

In other attempts to optimize TO, Sosnovik et al. [159] tried using CNN which resulted in significant positive results and overall acceleration in the process. The overall achievements were as following,

-

After just a few numbers of iterations done by the algorithm known as penalization of solid isotropic materials (SIMP), the proper input volume was delivered.

-

The SIMP algorithm delivered the output volume only after 100 iterations.

In the accounts of application of SIMP algorithm shown by Sosnovik et al. [159]. it was revealed that the computational effort was significantly reduced while results were ranging from 92% accuracy from 5 iterations to 99.2% after 80 iterations. Also, Harish et al. developed an algorithmic DL/ML based approach for TO for getting the optimized structure with a given condition of the structure and for that purpose, they trained a CNN model variant of DL with a decoder, encoder structure with decent results [160]. In another research, which was concerning the application of 3D cantilever beams, Banga et al. [103] reached the binary accuracy of 96%. This indicated that with significantly less time TO could be done with the same quality. Furthermore, higher resolution TO was used effectively in the practice phase therefore, leading to better quality outputs and finally, higher complexity was achieved leading to optimized structure without supports [161].

2.1.2 Design Feature Recommendation

Design feature recommendation is the very foundation of a successful AM process, as it is indicative of how efficient the print process would be in terms of cost, preciseness, amount of post-processing and usage of support structure [122]. ML approaches also have the history of being used to determine how complex or simple the process of manufacturing of a computer-aided design (CAD) model would be, based on its features, such as multi-scale clustering and heat kernel signature methods. This capability is very helpful in order to prevent futile designs early on and also in setting the proper build orientation. CNN, has shown significantly better accuracy in part mass estimation and build time forecasting than conventional linear regression modeling approaches [162, 163]. In a work done by Yao et al. [164], a method of hybrid approach was presented in order to come up with the best design features, using unsupervised and supervised techniques as the form of Hierarchical clustering (HC) and SVM, in a way that initial AM design features and targeted parts and components are coded and thereafter, HC method is applied on the coded design features resulting in an initial plotting, then the SVM classification is applied to iteratively optimize the plotting made by HC. the byproduct of the hybrid ML approach contains the recommended design features. In order to proceed with the proposed hybrid ML approach, four general steps were considered. These general steps contain a route to fully use unsupervised learning clustering and classification made by supervised learning. The first step is to code the design features and targeted components to be stored and classified in a referable dataset. Then, the second step would be to execute HC to all design features and targeted components within the database, resulting in plotting of hierarchical tree structure. The third step is to commence SVM classification by training on real industrial data, and in the fourth step, the classifier that was trained in the previous step is employed to form an algorithm capable of identification of recommended design features via cutting and selection of branches formed on the hierarchical tree shaped in the second step. The fundamental step toward the proposed approach is the multi-categorial coding which grants better intuition as to how the HC and SVM would function. The authors differentiated 3 distinct categories for initial coding of target components and design features, the “loading’’ category, “objectives’’ category and “properties’’ category. Moreover, these 3 categories are found to be co-related, such that either a target component or an AM design feature which is under a static tension load might be having resisting linear distortion as design objective, while Young's modulus and tensile strength are the important properties. The coding considerations are shown in Table 3 and the complete mapping of the aforementioned 4 steps is depicted in the Fig. 27 [164].

Process steps to hybrid ML design [164]

2.1.3 Geometric and Shape Deviation

There exist many aspects leading to geometry and shape deviation. In the process of converting the CAD model to standard input files, numerous inaccuracies and geometric deviations occur. Also, errors of the machine would result in shape and geometric inaccuracies and shape shrinkage. For getting into more specifications of this issue, Huang et al. [165] achieved optimal shape dimensions for 3D printed objects of polygon and cylindrical shapes, a new statistical model was established and their model delivered promising results in shape prediction where it was capable of compensation and prediction of 75% of deviations of a dodecagon shape deviations based on statistical approaches. In another research, a deviation modeling method was suggested by Zhu et al. [166] for making accurate forecasting of the shape and geometric deviation trends in AM. For realizing this purpose, Bayesian inference was used. Based on the data from different shapes, the models perform and thus, more accurate tolerancing of AM parts would be achieved.

Furthermore, in a study by Ghadai et al. [167] DL is used to learn from 3D CAD models and make better suggestions on DFAM, without the need for additional shape information. The layer-wise stacking process in AM and the fact that materials undergo repeated thermal expansion and shrinkage causes some issues in preciseness of the shape and geometry, causing the influence of deviations to be existent inside each layer or in between each layer. Nonetheless, other factors such as errors due to geometrical approximation, mostly because of converting the 3D CAD model to the standard file input, should be accounted for. In The approach by Huang et al. [165], the mathematical expression to address deviations is in 2D domain, due to negligible width of each layer is presumed. thus, resulting in an expression based on the original objective dimensions comparing with the shape that was attained after the process. In a 2D layer, the axes in which deviations could occur, based on the cartesian coordinate system are in the X, Y and the rotational expression with respect to the original shape. These expressions are used to make transformational expressions. However, the aforementioned expression could only account for overall in-plain shape deviations and not the more complex error sources such as location-dependent surfaces along the shape boundary. The authors assume that such complexity, which could not be expressed by parametric function, could be related to the uniqueness of the shape. As a result, the need to apply a data-driven model comes from the complexity of this multifaceted error source, and the multi-task Gaussian process (GP) was chosen to learn, characterize, and predict the deviations. GP is a supervised learning ML technique. This method is known for its probabilistic predictions and its capabilities in real-life applications. The more the number of training points increases, complexity in computations gets updated, and predictions improve [168]. Supposing that our objective is to model the deviations of M number shapes, formula (6) that expresses GP is as follows;

where M is the index of the shape, l = 1, 2, 3…M. \(f_{l} \left( {\theta ;\psi_{l} } \right)\) = the parametric function for expressing systematic deviation of shape l. where \(g_{l} \left( \theta \right)\) is a zero-mean and GP models the local variation of shape ‘l’. Eventually, random noise is learned while all M shapes are accessed via multi-task learning algorithm.

Overall, the relationship between occurring errors to shape and geometry deviations are far too non-linear to be modeled empirically from a set of samples. Therefore using data-driven ML techniques has proven to be a proper choice, as the results are shown in Fig. 28 [166].

Accuracy of the prediction in deviation forecasting compared to real observed deviation [166]

2.1.4 Materials in AM

In AM, specific manufacturing techniques are used for each type of material. Material types in AM could vary depending on the material scale (at mesoscale, macro-scale, or nano), type of material, which could be metallic, ceramic, polymer. Also, process parameters for printing the material are very influential when it comes to material quality. One of the reasons which makes AM unique compared to previous manufacturing methods like subtractive manufacturing is the fact that material inheritance is kept intact and unaffected. For example, in machining, only a block of material is subtracted and shaped to the manufacturer's desire. Therefore, this is only physical manipulation of the shape of the aforesaid block that is happening. On the other hand, in AM, the material is being transformed by a chemical or thermal process all the while geometry of the manufactured part is being established. The very materials implemented, such as powder or polymers, are quite influential. However, process parameters, such as printing speed, printing scalability and printing resolution, also play a significant role in material characteristics [169, 170]. Owning to this new niche in material manufacturing in AM, a large number of variables for material analytics are generated, and storing, clustering, and gaining insight from such vast amount of data is a cumbersome task. For example, in a case of powder chemistry of a certain metal or alloy, such as the case of Ti-6Al-4 V alloy powder, the chemistry could vary drastically because of the impurities like oxygen, carbon, iron, or nitrogen. This variation can affect the tensile properties, which could alter the configuration of each set [171]. As a result, ML methods can be used for better material composition and the usage of sensors such as pyrometers or acoustic sensors [172, 173]. In general, this study overlaps with the material science aspect, so it would be helpful to mention the most prominent ML method used in material science (Table 4).

In a case study in bone tissue engineering, conducted by Guo et al. [174], it was shown that the microstructure of the required porous geometry could be further improved by the control which would bring about possibilities such as precision in intricate micro and macro porous parts for printing bone tissues. These can be realized only via 3D printing (Fig. 29). They studied a case of printing Ti6Al4V titanium alloy and concluded that ML method could immensely aid in the following aspects of bone tissue printing:

-

Gradually updating the process-microstructure performance relationship based on repeated training

-

Providing guidance for process parameter selection for a higher quality of printed material

-

Minimizing the unwanted porosity caused by measly hole instability

-

Reduction in fusion defects due to inadequate overlapping of adjacent scanning routes

A wide-ranging deception of paring ML in printing bio-engineered materials [174]

In another attempt to construct a Bayesian framework based on GPR and Bayesian optimization approach, unification of nanocomposite design and part construction was done by Liu et al. [175]. The researchers combined the decent surface quality forecast and process parameter optimization to gain improved surface roughness. It was reported that GPR performed better than other targeted benchmark methods in terms of convergence with the highest coefficient of determination (R2) value (R2 = 0.84), and the model accuracy with the smallest mean absolute percentage error value (MAPE = 0.13) and root mean square error value (RMSE = 2.66). It can be stated that the type of material and complexity of the respected material is a challenge. To tackle this issue, an unsupervised method was suggested to reduce the amount of vast data generated from metallic material for the AM process [176].

2.1.5 Product Cost Estimation

In general, to determine whether a product development would result in financial gains, the product should neither be overpriced nor undervalued. The determination of such quality of products for manufacturing is product cost estimation related to many factors, which will be addressed in the following section. In a paper by Busachi et al. [177], three general approaches for product cost estimation were distinguished, “Intuitive Techniques”, “Analytical Techniques” and “Analogical Techniques”. In intuitive techniques the past successful designs are used as data for applying to new designs. In analytical approach, the cost of each product is estimated based on the features that the product is set to have, namely complexity of design, mass of the object or certain objective standards which the product should have. This approach has little flexibility and is better suited for the final stages of the design and the analogical techniques. In this technique, the most indicative factor is the amount of data, as the robustness of the historical data set indicates the accuracy of the analysis. Regression techniques are used to predict the cost of the novel designs. Well-structured mathematical relationships and background are very deterministic in this approach, and the weight each variable might have in the overall cost is derived. ANN modeling has proven to be very effective in cost estimation even without a comprehensive dataset. It can investigate in non-linear environments, and they are insightful tools for the early stages of the process [178, 179]. At large, ML algorithms are used for monitoring and system diagnosis and machine condition monitoring. In the coming years, owing to the rise in computational power and advancement in hardware, ML approaches have grown even faster as DL approaches are capable of making calculations on millions of parameters [180]. Methods such as least square SVM were used by Deng and Yeh et al. [181] to predict the production cost. Least squares support vector machines for the airframe structures manufacturing cost estimation, and K-nearest neighbors and meteorological parameter section for assembly of cost features in the form of vectors [182] are also used. In a suggested framework proposed by Chan et al. [183], maintaining consistent cost estimation is directly linked to processing the related feature extraction form the proposed model to the analysis of dynamical clustering. Also, in the proposed framework, the extracted features are considered to be the main factor in the amount of processing time and complexity of the design. For instance, in injection molding, features are size and number of cavities, venting system, surface quality, and the overall dimension of the objective part the material of choice and etc. However, in this approach, the method of choice is FDM (FFF). From a stereolithography format file (STL). Features that determine the cost could be obtained, such as print path length, printing duration, the volume and number of prints made and so on. The aforementioned features extracted from the 3D model are crucial in the overall print cost and are assembled in the form of feature vectors. The process of cost estimation is depicted in Fig. 30. The next phase is to process the extracted vectors and coding. the information will be in a G-code format, regardless of being geometry-related or non-geometric. The generated data will be stored in the Cassandra storage system. Afterward, in order to increase the preciseness of the cost forecasting, the data are first grouped based on their similarities, and regression models are embedded within each cluster that is constructed dynamically as a method to reduce variance. while prediction is ongoing, a cluster which shares the most similarity with the new generated model is imported. afterwards, two methods of regression known as least absolute square shrinkage (LASSO) and elastic net (EN). These regression models work well in high dimensional spaces while input variables are highly dependent upon one another. They show high selectivity towards correlations between inputs and outlier data. This suggested forecasting method to determine production cost estimation, collects a comprehensive data set for more accurate results to make cost predictions regarding a new task based on the history of previous tasks of similar merit. it is elucidated that G-codes generated could be a means to make cost estimation while geometry features could be extracted from the G-codes. geometrical features, non-geometrical features such as temperature settings could also be accessed, however, other features such as labor cost, print machine selection and type are decided by technicians [183].

Process steps to proposed cost estimation approach [183]

2.2 Process Parameters and Performance Optimization

Different ML models have different sets of parameters that rule the process of printing. After tuning the geometrical parameters, the next step is the selection of suitable process parameters. Different ML models have different sets of parameters that rule the process of printing. Process parameter optimization is studied in cases where either new materials or a novel approach is considered to be implemented [122]. Determining process parameters has a very big impact on AM products [184]. The relationship between process parameters are highly complicated, none-linear, vast dimensional space and even non-convex at times [185]. In an attempt by Rosen et al. a modeling framework for process-structure properties has been presented, shown in Fig. 31 [186]. In the following section, extensive case studies of process parameters and performance optimization-based application of aforesaid optimization approach on specific AM method is discussed in details.

Process structure property problem framework [186]

2.2.1 Implementation of Genetic Algorithm for Process Parameter Optimization

Typically, FDM consists of polymers extruded through the nozzle layer after layer. In this method, parameters such as layer height, printing speed and material flow from the cartridge cartilage are critical and choosing proper parameters with correct values could tremendously optimize the AM design (Fig. 32) [187]. In Table 5, proper parameter selection in FDM and applied ML approach is shown. in laser fused AM methods which are somewhat similar to FDM in terms of being layer-based, parameters such as laser scanning strategy, laser power, and laser speed are pretty influential as is shown in Table 6. overall, It has been found that genetic algorithms (GA) [188] used in ML modeling can influence the quality of 3D printed parts. Through a criterion known as fitness function, the first set of parameters also known as parent generation set is accessed. However, if the target is not reached, the process keeps repeating until the optimal results are generated and the objective is fulfilled. An instance of the implementation of GA is with the design of experiments (DOE) [189]. The objective is to find the optimal combination of process parameters that could potentially minimize the surface roughness and porosity of the manufactured objects. In this case, the parts were created by FDM method, the material used in the process was acrylonitrile butadiene styrene (ABS) and the process parameters of the study were slice thickness, road width, nozzle temperature, and air gap. At the beginning of the process, DOE operation was set to achieve the porosity the roughness of surface from a number of combinations of above-mentioned parameters. Then, based on the obtained results and in order to get fitness function, a methodology known as response surface method (RSM) was applied. This method is basically a selection of statistical and mathematical technical approaches applied for both approximation and optimization of stochastic modelling in manufacturing [190] extracted as a second-degree polynomial equation of the set of process parameters. Then, GA was used to forecast the optimal process parameter sets. Finally, it was concluded that minimal surface roughness was obtained at the smallest determined slice thickness, road width and air gap with an intermediate nozzle temperature, which was consistent with both experimental and GA model[188].

Optimization of process parameters suited for thin walled applications a The original setting of printing speed, b The original setting of extrusion multiplier, c Printing speed with an optimized setting, and d Optimized settings for extrusion multiplier to preform according to optimized process parameters [187]

2.2.2 Implementation of ANN for Process Parameter Optimization

Setting the process parameters could be better performed by using ANN models (Fig. 33) [203]. In general, there are two levels of quality indication in ML models which are related to the main process parameters of interest. The first one is related to mechanical properties which is at macroscale level and the other scope of analysis is at mesoscale which is linked to melt pool geometries, relative density and pores. Furthermore, in order to better identify the process, ML is used to lay down mapping of processes which is a helpful visualization tool [202]. Figure 34 shows a few of such instances [201].

A Support vector machines used for the prediction of macro scale properties of electron beam melting built products, B The cross-section views of defects, C Pores in the form of black spaces and the optimization process mapping on the right [201]

In a study on DED with Ti-6A1-4 V which is an alloy with remarkable strength, resistance corrosive agents and remarkable fracture toughness [204]. There exist reports regarding experiments on process parameters in SLM laser PBF and PBF technologies that are indicative of aforementioned occurrences [205,206,207,208]. Conversely, there are not many reports regarding alterations in part quality related to process parameters. It is a daunting task to select the proper process parameters while refraining from the costs experimentations could result to. thus, researchers are resorting to statistical approaches, namely Taguohi design approaches to design experiments for AM methods [209, 210]. Today, ML methods are used in many aspects of AM, such as melt pool signature, and overall defect generation. The primary objective of these methods is to reach a state capable of mutually corelating dependent factors at parameters and the overall generalization of complex problems between parameters to function as a map for conducting real experiments [211]. In this case study, for the purpose of mapping the indicted input into objective output, an ANN model has been developed. The major inputs of study are laser power, scan speed, rate of powder feed and layer thickness, and the density of the alloy material and the build height are designated to be the outputs. Based on trial and error and model tuning, minimized prediction error is understood and thereafter, the number of neurons and hidden layers are better determined. The general depiction of the model implemented in this study is shown in Fig. 35.

The proposed ANN model with indicated input, hidden, and output layers [211]

The steps to completion of ANN model begins with synchronizing the empirical values with an ANN model, resulting in minimum amount of error in the developed model [212]. For training stage, sigmoid back propagation algorithm was activated. The main objectives to be reached are the least amount of mean square error and average prediction error, that are expressed in (7) and (8) formulas,

where \(E_{tr} \left( y \right)\) is the mean error in output prediction (related to parameter y), N is the number of data sets, \(T_{i} \left( y \right)\) is targeted output and \(o_{j} \left( y \right)\) is processed output. In the case of the ongoing ANN model, pattern and variations in occurrences of errors and defects dictate the number of neurons and hidden layers. The model is set to have 2 hidden layers with 2–15 neurons in hidden layers. Figure 36 shows the value of mean square error and mean error in the output, on par with variations of these values while using one and two hidden layers. Furthermore, it was observed that by increasing the number of hidden neurons, mean error value was decreased. Ultimately, the 15-neuron ANN structure delivered the lowest overall error. The next step in the development of the model is to optimize the number of instances for iterations. Based on the results depicted in Fig. 41c, the initial number of iterations started from a range of 500–25,000. Nonetheless, when the number of iterations reached 18,000, the error values (MSE = 0.000001 and \(E_{tr}\) = 0.001124) were fixed when model was trained again. ANN hyperparameters such as momentum (α) and learning rate (η) both ranged from 0.1 to 0.9 and when momentum was set at 0.9, the minimum error value was reached (Fig. 35). An observation made at a momentum of 0.6 was a drastic shooting of error value which was a sign of overfitting. Similarly, in a case reported by Reddy et al. [212], it was shown that high learning rate could also lead to overfitting. At last, an ANN model of two hidden layers, 15 neurons, trained for 18,000 iterations with hyperparameters of 0.9 for momentum and learning rate of 0.7 was recognized as the optimal model for the estimation of process parameters related to DED AM.

Process of plotting the evolution of ANN model, a The optimal number of hidden neurons with respect to error value, b Number of hidden neurons with respect to error value, c Number of iterations and d Number of iterations and learning rate respectively [211]

In order to investigate the effects of process parameters, especially build height and density, as indicated in Figs. 37a and b, the areas shown in red indicate poor densities and unbalanced build heights, which are in proportion to scan speed and power. However, some process parameters such as thickness of layer (0.3 mm) and the fed rate of powder (2 g/min) were kept constant. Overall, it was construed that depending on the type of material and fabricability, in the case of specific alloy which is used in particular, it is most suitable to keep the power for deposition at a high output while keeping the scan speed at a low pace for avoiding lack of fusion defects [204]. Generally, scan speed and temperature gradient dictate laser power density, which consequently alters the build height of deposited material. Based on the results of this study, the best combination values of process parameters are for laser power and scan speed to be in the ranges of 320–400 w and 0.75–1.2 m/min, respectively. Other combinations that could lead to relatively optimal results are illustrated within the bounds of green area in Fig. 37. Additionally, high feed rate results in low layer thickness and conversely low feed rate and low layer thickness leads to increase in build height, whereas combination of low feeding rate /layer thickness will transfer the heat to the previous layers and reheats them. Also, regardless of layer thickness of as high as 0.3 mm, build height decreases owning to its insufficient energy expansion, further hindering the precision of the design. The green region also shows the best build height values. In summary, ANN model is evolved such that it can find proper density and build height for Ti-6AI-4v alloy, by DED and AM methods and the suggested set of parameters led to high quality printed products [211].

Process parameters plotted based on ANN predictions and green boundaries are shown as the areas where the combination of process parameters had optimal results. a shows density while b indicates build height of DED Ti-6Al-4V alloy based on considering power and scan speed, c shows density plot of and as powder feed rate and layer thickness d build hight related to process parameters such as powder feed rate and layer thickness [211]