Abstract

Composite laminates have found wide-ranging applications in various areas of structural, marine and aerospace industries. Their design and optimization is a challenging task due to involvement of a large number of design variables. Because of high accuracy of the laminate modeling theories and presence of numerous design variables, laminate design and optimization is primarily carried out in silico. Integratin the high accuracy of these laminate modeling theories using numerical solvers, like finite element method, boundary element method etc. with the iterative improvement capability of different optimization algorithms is a well-established approach and can be broadly referred to as high-fidelity optimization. However, in recent times with the advent of machine learning and statistical approaches, metamodel-based optimization has gained significant prominence, primarily due to its less computational time and effort. In this review paper, the essence of nearly 300 research articles (about 26% and 50% of them are from last 5 and 10 years respectively.) on high-fidelity and metamodel-based optimization of composite laminates is comprehensively assessed and presented. Special emphasis is provided on the discussion of various metamodels. The methodology and key outputs of each research article are concisely presented in this paper, which would make it an asset for the future researchers and design engineers.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Composite laminates are usually fabricated by overlaying several layers of composite materials. Each of these layers is commonly referred to as lamina. Many such laminae are held together by a resin and combined, thereby constituting a laminate. The overall sequence of orientations of each lamina in the laminate is called as the lamination scheme or stacking sequence [1, 2]. For a constant thickness, altering the stacking sequence of a laminate can significantly influence the in-plane stiffness and bending stiffness of the laminate due to the directional properties of each lamina. Each ply angle of the laminate has also a direct (but non-linear) effect on the in-plane stiffness and bending stiffness.

Optimization is a mathematical approach for making the ‘best’ possible use of available resources to achieve the desired target/goal [3]. Generally, the task of an optimization method is to maximize or minimize a desired target property, expressed in the form of an objective function. Additionally, locating a specific point or zone of the target property may also be a goal of optimization. A typical optimization problem can be stated as below:

where xi is the ith design variable (i = 1,2,…,k), k is the maximum number of design variables, and ximin and ximax are the lower and upper bounds of the ith design variable respectively.

An optimization algorithm is a technique that is employed iteratively while comparing the previously derived solutions with the current one until an optimal or a satisfactory solution is achieved. With the advancement of high-speed computing facilities, optimization has become an intricate part of computer-aided design. There are mainly two distinct types of optimization algorithms:

-

(a)

Deterministic algorithms: They employ specific rules for moving from one solution to the other. Given a particular input, they would produce the same output solution even when these algorithms are executed multiple times. In fact, these algorithms would pass through the same sequence of states.

-

(b)

Stochastic algorithms: These algorithms rely on probabilistic translation rules. They are gaining much popularity due to certain critical properties that the deterministic algorithms do not have. They can efficiently deal with inherent system noise and can take care of the models or systems that are highly nonlinear, high dimensional, or otherwise inappropriate for classical deterministic algorithms [4].

All the optimization algorithms can further be classified as single-objective or multi-objective techniques based on the number of objective functions to be dealt with. If the goal of the algorithm is to optimize only a single objective function at a time, it is referred to as single-objective optimization technique. On the other hand, if it has to optimize multiple objective functions simultaneously, it is called as multi-objective optimization technique. However, it is almost impossible to find out the global optima for all types of design-related optimization problems by applying the same optimization procedure since the objective function in a design optimization problem and the associated design variables largely vary from one problem to the other. One optimization algorithm suitable for a particular problem may completely fail or may even be counterproductive to another separate problem. The basic formulation of any typical optimization process is shown in Fig. 1.

1.1 Single-Objective Optimization

The basic aim of a single-objective optimization technique is to discover the ‘best’ solution, which corresponds to the minimum or maximum value of a single objective function. They are the simplest optimization techniques, and have found huge popularity among the decision makers due to their simplicity and apprehensiveness. Although, they can provide sufficient new insights about the nature of a problem, but usually, they have limited significance. Most of the design optimization problems need simultaneous consideration of a number of objectives which may conflict with each other. Thus, using single-objective optimization techniques, it is almost impossible to find out an optimal combination of the design variables that can effectively optimize all the considered objectives.

1.2 Multi-Objective Optimization

Numerous practical combinatorial optimization problems require simultaneous fulfillment of several objectives, like minimization of risk, deviation from the target level, cost; maximization of reliability, efficiency etc. Multi-objective optimization is generally considered as an advanced design technique in structural optimization [5], because most of the practical problems require information from multiple domains and thus are much complex in nature. Additional complexity arises due to involvement of multiple objectives which often contradict with each other. One of the main reasons behind wide applicability of multi-objective optimization techniques is their intrinsic characteristic to allow the concerned decision maker to actively take part in the design selection process even after formulation of the corresponding mathematical model. Since each structural optimization problem consists of multiple independent design variables significantly affecting the final solution, selection of the design variables, objectives and constraints are supposed to play pivotal roles. Sometimes, a multi-objective optimization problem may be replaced by an optimization problem having only one dominating objective function with the use of appropriate equality and inequality constraints. However, selection of limits of various constraints may be another challenging task in real-world design problems. When numerous contending objectives appear in a realistic application, the decision maker often faces a problem where he/she must find out the most suitable compromise solution among the conflicting objectives.

A multi-objective optimization problem can be converted into an equivalent single-objective optimization problem by aggregating multiple objective functions into a single one [6]. Reduction of a multi-objective optimization problem into a single-objective optimization problem is commonly known as scalarization. A classical scalarization technique is the weighted sum method where an auxiliary single objective function is formulated as follows:

where wi is the weight assigned to ith objective function and m is the number of objective functions.

Simplicity of the weighted sum scalarization method is indeed one of its major advantages [7]. However, in this method, values of the optimal solutions depend on the choice of the weight assigned to each of the objective functions. In absence of any prior knowledge with respect to the weights, it is desirable to have a set of equally feasible solutions. Each solution in the set should provide the best possible compromise among the objectives. This set of non-dominated solutions is referred to as Pareto optimal solutions or Pareto front. The Pareto optimality implies that no other solution can exist in the feasible range that is at least as good as some other member of the Pareto set, in terms of all the objectives, and strictly better in terms of at least one [8]. Thus, in the Pareto front, solution of one objective function can only be improved by worsening at least one of the other objective functions.

2 State-of-the-Art in High-Fidelity Design Optimization of Composite Laminates

Excellent mechanical properties of the composite laminates are mainly responsible for their widespread popularity in structural applications. However, to exploit the fullest potential of composite structures, optimal selection of shape, size, fiber angles, material etc. is essential which makes it a complex design optimization problem. This complexity arises not only due to involvement of various design variables, but also due to multimodal output response and large design space with unfeasible or expensive derivatives.

This section mainly categorizes and compares various optimization methods employed in optimal lay-up selection of composite laminates. The goal of the comprehensive literature review presented in this section is to offer a ready reference for choosing the suitable optimization techniques for a given problem. However, due to paucity of space, details of the adopted optimization algorithms are not explained here. Only their applications in composite laminate optimization are focused on.

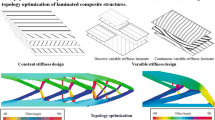

In the literature, several categorizations for optimization of composite laminates have been suggested. For example, Fang and Springer [9] identified four groups of optimization approaches, e.g. (a) analytical procedures, (b) enumeration methods, (c) heuristic schemes and (d) non-linear programming. From a more structure-specific context, Abrate [10] categorized laminate optimization applications based on the objective function that could be either one or a combination of in-plane properties, flexural rigidity, buckling load, natural frequency and thermal effects. Venkataraman and Haftka [11] recommended categorization of the design methods as (a) single laminate design and (b) stiffened plate design, whereas, Setoodeh et al. [12] suggested classifying the literature on optimization of composite laminates as constant stiffness design and variable stiffness design. In context of this paper, some prominent literature are briefly reviewed and the adopted optimization techniques are grouped into three broad classes, i.e. gradient-based methods, specialized algorithms and direct search methods.

2.1 Gradient-Based Methods

Gradient-based methods are based on the gradients of the objective and constraints, whose functions can be approximated when the corresponding mathematical closed form expressions are not available. However, they are computationally expensive. Generally, these methods are unable to locate the global optima, but have quicker convergence rate as compared to direct and heuristic methods.

The most common approach to search out a stationary point of an objective function is to set its first gradient to zero. This approach was adopted by Sandhu [13] to predict the optimal layer angle of a composite lamina. Its main advantage is the fastness to locate all the stationary points of the objective function just in one run. However, it depends on the expression of objective function as a closed form equation. Moreover, it performs only for single-variable, unconstrained optimization problems, which imposes a serious bottleneck to its practical applications.

Another popular gradient-based method is the steepest descent technique that performs, at each step, a line-search in the opposite direction of the gradient of the objective function. For composite structure stacking sequence design problem, it may be used as a standalone technique [14] or as an aid for other optimization techniques [15]. Initially, steepest descent technique has quick convergence, however, as it approaches closer towards the global optima, it becomes sluggish. It is known to be got trapped in the local optima and its inability to deal with discrete variables is its serious drawback.

Hirano [16] employed Powell’s conjugate gradient (CG) method for maximizing buckling load in laminated plate structures under axial compression, which could work only on unimodal functions, requiring no gradient information.

Newton (or Newton–Raphson) methods require second-order gradient information and are seldom used for optimization of laminated composite design problems. Quasi-Newton (QN) methods, on the other hand, are frequently applied as they allow determining the Hessian without using second-order derivatives. Davidon, Fletcher and Powell (DFP) [17] applied QN techniques for predicting the optimal lay-up of laminated composites. The DFP-QN method, originally proposed by Fletcher and Powell [18], was adopted by Waddoups et al. [19] and Kicher and Chao [20] for design of the optimal composite cylindrical shells. A quadratic interpolation of the objective function, including strength and buckling failure, was considered in the one-dimensional minimization problem. Kim and Lee [21] also applied DFP method for optimization of a curved actuator with piezoelectric fibers. The QN methods generally have higher convergence rate than CG method, although their performance is problem dependent and may change from one case to another.

Method of feasible directions (MFD) attempts to find out a move to a better point without violating any of the constraints. Since a composite lay-up design problem usually includes several inequality constraints, MFD has been a good candidate for solving this problem [22]. However, like other gradient-based methods, it is not always able to search out the global optima. It has been adapted to be used in combination with finite element analyses [23].

2.2 Specialized Algorithms

These methods are explicitly developed for optimizing composite laminates while exploiting a number of their properties to simplify the optimization process. Often developed for a particular application, they generally simplify the problem by restricting the design space with respect to allowable lay-up, loading condition and/or objective function. Since they are tailored to a specific design problem, they occasionally lose robustness when applied to a general optimization problem. However, when designed for a particular problem, they can be much faster than other optimization techniques.

Using lamination parameters [24], which are integrated trigonometric functions based on thickness of a laminate instead of lay-up variables, has the advantage of reducing the number of parameters required to express a laminate’s properties to a maximum of 12, regardless of the number of layers [25, 25].

Besides the promising advantage of using lamination parameters, the challenge in dealing with those parameters is that they are not independent and cannot be arbitrarily prescribed. Several authors, such as Fukunaga and Vanderplaats [27], and Grenestedt and Gudmundson [28] suggested the necessary conditions for different combinations of lamination parameters, but the complete set of sufficient conditions for all the 12 parameters is still unknown [29]. Miki [30] proposed a method to visualize the admissible range of lamination and their corresponding lay-up parameters. Just like the in-plane lamination diagram, the flexural lamination diagrams were also developed [31]. Fukunaga and Chou [32] adopted a similar graphical technique for laminated cylindrical pressure vessels. Lipton [33] developed an analytical method to find out the configuration of a three-ply laminate under in-plane loading conditions. Autio [34], Kameyama and Fukunaga [35], and Herencia et al. [36] employed GA to solve the inverse problem.

A layer-wise optimization technique optimizes the overall performance of a composite laminate by sequentially considering one or some of the layers within a laminate. This method performs with one layer or a subset of layers in the laminate, first requiring selection of the best initial laminate and then addition of the layer that best improves the laminate performance, which is usually achieved by an enumeration search [37]. Lansing et al. [15] determined the initial laminate by assuming the layers with ply angles of 0°, 90° and ± 45° carrying all the longitudinal, transverse and shear stresses respectively. Starting with a one-layer laminate, Massard [38] determined the best fiber orientation for single-ply laminate. Todoroki et al. [39] proposed two other approaches to find out the initial laminate. Narita [40], and Narita and Hodgkinson [41] endeavored to solve this problem while starting with a laminate having hypothetical layers with no rigidity. From the outermost layer, all the layers were sequentially replaced by an orthotropic layer and the optimal fiber orientation angle was determined by enumeration. The first solution derived was subsequently applied as an initial approximation for the next cycle. Farshi and Rabiei [42] proposed a method for minimum thickness design consisting of two steps. The first step aimed at introducing new layers to the laminate, while the second one examined the probability of replacing higher quality layers with weaker materials. Ghiasi et al. [43] applied layer separation technique to keep the locations of different layers unchanged when a layer had been added.

2.3 Direct Search Methods

While the analytical methods are known for their fast convergence rate, direct search methods have the advantage of requiring no gradient information of the objective function and constraints. This feature has a significant benefit because in composite laminate design, derivative calculations or their approximations are often costly or impossible to obtain. Direct search methods systematically lead to the optimal solution only by using function values from the preceding steps. As a result, several of these techniques have become popular for optimization of composite lay-up design, as described in the following paragraphs. Stochastic search algorithms, a sub-class of direct search methods “[…] are better alternatives to traditional search techniques […] they have been used successfully in optimization problems having complex design spaces. However, their computational costs are very high in comparison to deterministic algorithms” [44].

One of the first attempts in optimal design of composite laminates is the application of enumeration search, consisting of trying all the possible combinations of design variables and simply selecting the best combination. Although cumbersome, this technique was adopted to find out the lightest composite laminate during the 1970s [45]. Nelder and Mead (NM) method was employed by Tsau et al. [46] for optimal stacking sequence design of a laminated composite loaded with tensile forces, while evaluation of stresses was performed by an FEM. It has been reported by Tsau and Liu [47] that the NM method is faster and more accurate than a QN method for lay-up selection problems with smaller number of layers (i.e. less than 4). Foye [48] was the first researcher who employed a random search to determine the optimal ply orientation angles of a laminated composite plate. Graesser et al. [49] also adopted a random search, called improving hit and run (IHR), to find out a laminate with minimum number of plies that could safely sustain a given loading condition.

The SA technique, which mimics the annealing process in metallurgy, globalizes the greedy search process by permitting unfavorable solutions to be accepted with a probability related to a parameter called ‘temperature’. The temperature is initially assigned a higher value, which corresponds to more probability of accepting a bad solution and is gradually reduced based on a user-defined cooling schedule. Retaining the best solution is recommended in order to preserve the good solution [50]. It is the most popular method just after GA for stacking sequence optimization of composite laminates [51, 52]. Generation of a sequence of points that converges to a non-optimal solution is one of the major problems in SA. To overcome this shortcoming, several modifications of SA have been proposed, such as increasing the probability of sampling points far from the current point by Romeijn et al. [53] or employing a set of points at a time instead of only one point by Erdal and Sonmez [50]. To increase the convergence rate, Genovese et al. [54] proposed a two-level SA, including a ‘global annealing’ where all the design variables were perturbed simultaneously and a ‘local annealing’ where only one design variable was perturbed at a time. In order to prevent re-sampling of solutions, Rao and Arvind [55] embedded a Tabu search in SA, obtaining a method called Tabu embedded simulated annealing (TSA). Although SA is a good choice for the general case of optimal lay-up selection; however, it cannot be programmed to take advantage of the particular properties of a given problem.

GA is more flexible in this respect, although it is often computationally more time consuming [51]. In terms of [56], “GAs are excellent all-purpose optimization algorithms because they can accommodate both discrete and continuous valued design variables and search through nonlinear or noisy search spaces by using payoff (objective function) information only”. Callahan and Weeks [57], Nagendra et al. [58], Le Riche and Haftka [59], and Ball et al. [60] are among the first few researchers who adopted GA for stacking sequence optimization of composite laminates. It was employed for different objective functions, such as strength [59], buckling loads [56], dimensional stability [61], strain energy absorption [62], weight (either as a constraint or as an objective function to be minimized) [63], bending/twisting coupling [56], stiffness [62], fundamental frequencies [63], deflection [64] or finding out the target lamination parameters [65]. It was also applied for design of a variety of composite structures ranging from simple rectangular plates to complex geometries, such as sandwich plates [66], stiffened plates [58], bolted composite lap joints [67], laminated cylindrical panels [64] etc. GA can often be combined with finite element packages to analyze stress and strain characteristics of composite structures [64].

One of the main drawbacks of GA is its high computational intensity and premature convergence, which may happen if the initial population is not appropriately selected. Sargent et al. [51] compared GA with some other greedy algorithms (i.e. random search, greedy search and SA) and noticed that GA could provide better solutions than greedy searches, which in some instances, were unable to determine an optimal solution.

The PSO technique was applied by Suresh et al. [68] for optimal design of a composite box-beam of a helicopter rotor blade. Kathiravanand Ganguli [69] compared PSO with a gradient-based method for maximization of failure strength of a thin-walled composite box-beam, considering ply orientation angles as the design variables. Lopez et al. [70] illustrated the application of PSO for weight minimization of composite plates.

GA [71], ACO [72], PSO [73] and ABC [74] are the some of the most commonly used stochastic search algorithms in composite laminate optimization. However, there are only a few comparative studies on the performance of different stochastic search algorithms in composite laminate frequency parameter optimization. Apalak et al. [74] proposed the application of ABC algorithm to maximize the fundamental frequency of composite plates considering fiber angles as the design variables. It was observed that despite ABC algorithm having a simpler structure than GA, it was as effective as GA. Ameri et al. [71] adopted a hybrid NM algorithm and a GA technique to find out the optimal fiber angles to maximize fundamental frequency. It was concluded that the hybrid NM algorithm was faster and more accurate than GA. However, it is hard to state whether the superior performance of the NM algorithm was genuinely due to algorithmic superiority or because the authors chose to incorporate the design variables as continuous in NM algorithm, whereas, in GA, their discrete values were considered. Similarly, Koide et al. [72] presented the application of an ACO algorithm to maximize the fundamental frequency in cylindrical shells and compared the optimal solutions with GA-based solutions derived from the literature. It was noted that the optimal solutions obtained using ACO were almost comparable with those of GA technique. Tabakov and Moyo [75] compared the relative performance of GA, PSO and Big Bang-Big Crunch (BB-BC) algorithm while considering a burst pressure maximization problem in a composite cylinder. Hemmatian et al. [76] applied ICA techniques along with GA and ACO to simultaneously optimize weight and cost of a rectangular composite plate. It was reported that ICA would outperform both GA and ACO with respect to the magnitude of the objective function and constraint accuracy.

2.4 Discussions

Tables 1 and 2 provide a comprehensive list of research works on single-objective optimization of composite laminates, while some important works on multi-objective optimization of composite laminates are presented in Table 3. It can be observed from these tables that FEM has been the most preferred solver because of its ability to simulate laminates of various shapes and sizes. Additionally, various types of load conditions, discontinuities and boundary conditions can also be easily simulated in FEM to mimic real-world applications. It provides enormous flexibility in choosing from a wide array of elements. The degrees of freedom and order of elements can also be effortlessly adjusted.

The FSDT has been noticed to the most popular plate theory among the researchers during high-fidelity optimization of composite laminates. It is much more accurate as compared to CLPT and far less complicated than HSDT. However, it requires a good guess for the shear correction factor, which would be essential to account for the strain energy of shear deformation. Nevertheless, with a suitable value of shear correction factor, FSDT can estimate plate solutions that are comparable to HSDT, especially for thin and moderately thick plates. Majority of the works in the literature (and real-world applications) are either on thin plates or moderately thick ones, which have made FSDT so much popular.

Ply angles are the most preferred design variables in high-fidelity design and optimization of laminates. In most of the real-world applications, other parameters, like length, width, thickness, curvature of the laminate etc. cannot be easily altered as changing their values may generally require significant modifications in the plate design as well as associated components. Further, material variation may not always be feasible due to specialized nature of composite applications. For example, the composite material suitable for a structural load-bearing laminate may be unsuitable for an acoustics absorbent application or a rotor-blade application. From solution viewpoint, optimization of ply angles is an NP-hard problem. Further, the large design space of ply angles (± 90˚) poses significant challenges during the optimization phase. These reasons have encouraged the past researchers to attempt developing efficient strategies and algorithms to solve lay-up orientation optimization problems. For example, most researchers now treat lay-up orientation as a discrete optimization problem where ply angles with specific increments (say 5°, 15° or 45°) are only searched out during the optimization phase. This is not only computationally efficient but also resonates well with the traditional laminate manufacturing technologies that are unable to deal with arbitrary angles (say 19.21°). Lamination parameters are a convenient alternative to bypass discrete stacking sequence optimization. Moreover, lamination parameter optimization is a convex problem whose search space is a 12th-dimesnion hypercube with ± 1 bounds [26].

Weight reduction, buckling load maximization and frequency maximization have been the most common objective functions in high-fidelity optimization of laminates. It can also be noticed that majority of the researches have been conducted on rectangular composite plates. GA technique has been the most popular metaheuristic applied to high-fidelity optimization of laminates. However, gradient-based approaches have also been quite popular among the researchers. Researches on multi-objective high-fidelity optimization of laminates are much scarce which may be due to tremendous computational costs involved in such studies. Multi-objective GA has been the most popular optimizer employed for Pareto optimization of laminates.

3 State-of-the-Art in Metamodel-Based Design Optimization of Composite Laminates

High-fidelity design optimization is an important, accurate and powerful approach for determining the optimal parameters of a design problem. However, the finite element-based optimization strategy is quite time consuming and thus, computationally expensive. Based on the observations of Venkataraman and Haftka [11], optimization-related computational costs would depend on three indices, i.e. model complexity, analysis complexity and optimization complexity (see Fig. 2). For example, while evaluating a typical FEM run, say an 8-layer symmetric laminate using a 4 × 4 mesh, a 9-node isoparametric element-based Fortran program would require about 1/10th second for one function evaluation. However, an optimization trial of 50,000 function evaluations of the same FEM coupled with GA would roughly take 98 min, meaning that about 85–90% time would be consumed in objective function evaluations by the FEM core. The computation time would become a serious problem while considering the probabilistic nature of metaheuristic algorithms, each such optimization trial must be repeated multiple times to develop sufficient confidence in the predicted solutions. It has been noticed that despite continual advances in computing power, complexity of the analysis codes, such as finite element analysis (FEA) and computational fluid dynamics (CFD) seems to keep pace with the computing advancements [181]. In the past two decades, approximation methods and approximation-based optimization have attracted intensive attention of the researchers. These approaches approximate computation intensive functions with simple analytical models. This simple model is often called a metamodel and the process of developing a metamodel is known as metamodeling. Based on a developed metamodel, different optimization techniques can then be applied to search out the optimal solution, which is therefore referred to as metamodel-based design optimization (MBDO). The advantages of using a metamodel are manifold [182].

-

(a)

Efficiency of optimization is greatly improved with metamodels.

-

(b)

Because the approximation is based on sample points, which can be obtained independently, parallel computation (of sample points) is supported.

-

(c)

It can deal with both continuous and discrete variables.

-

(d)

The approximation process can help study the sensitivity of design variables, thus providing engineers insights into the problem.

Schematic showing types of complexity encountered in composite structure soptimization [11]

Considering all these advantages, it is thus advisable to deploy MBDO instead of high-fidelity design optimization when a little sacrifice in accuracy does not impose a serious problem. In fact, MBDO is now being widely recommended and employed for different applications in composite laminate structures (see Fig. 3) and research on this topic has gained significant interest recently.

Metamodeling and its role in support of engineering design optimization [182]

3.1 Metamodeling

A metamodel is a mathematical description developed based on a dataset of input and the corresponding output from a detailed simulation model, i.e. a model of a model (see Fig. 4). Once the model is developed, the approximate response (output) at any sample location can be evaluated and used in MBDO. The general form of a metamodel is provided as below:

where y(x) is the true response obtained from the developed model,\(\,\hat{y}(x)\,\,\) is the approximate response from the metamodel and ε is the approximation error. Typically, the following steps are involved in metamodeling (see Fig. 5):

Metamodel of a computational analysis for optimization applications produces approximations of the objective functions and constraints [183]

Concept of building a metamodel of a response for two design variables; a design of experiments, b function evaluations and c metamodel [184]

-

(a)

Choosing an appropriate sampling method for generation of data.

-

(b)

Choosing a model to represent the data.

-

(c)

Fitting the model to the observed data and its validation.

3.1.1 Sampling Strategy (Design of Experiments)

The process of identifying the desired sample points in a design space is often called the design of experiments (DOE). It can also be referred to as sampling plan [185]. Any metamodel generation process starts with a DOE, i.e. way to carefully plan experiments/simulations in advance so that the derived results are meaningful as well as valid. Ideally, any experimental design plan should describe how participants are allocated to experimental groups. A common method is a completely randomized design, where participants are assigned to groups at random. A second method is randomized block design, where participants are divided into homogeneous blocks before being randomly assigned to groups. The experimental design should minimize or eliminate confounding variables, which may offer alternative explanations for the experimental results. It should allow the decision maker to draw inferences about the existent relationship between independent and dependent variables. DOE reduces the variability to make it easier to find out differences in treatment outcomes. The most important principles in experimental design are mentioned as below:

-

(a)

Randomization: The random process implies that every possible allotment of treatments has the same probability, i.e. the order in which samples are drawn must not have any effect on the outcome of the metamodel. The purpose of randomization is to remove bias and other sources of uncontrollable extraneous variation. Another advantage of randomization (accompanied by replication) is that it forms the basis of any valid statistical test. Thus, with the help of randomization, there is a chance for every individual in the sample to become a participant in the study. This contributes to distinguishing a ‘true and rigorous experiment’ from an observational study and quasi-experiment [186].

-

(b)

Replication: The second principle of an experimental design is replication, which is a repetition of the basic experiment. While repeating an experiment multiple times, a more accurate estimate of the experimental error can be obtained. However, in context of in silico simulations, it has no consequence on the overall outcome, since FEM simulation-based data would have no variation even when repeated multiple times. Experimental error does not occur in high-fidelity FEM simulations because when the same experiment is run multiple times, same outputs are obtained.

-

(3)

Local control: It has been observed that all the extraneous sources of variation cannot be removed by randomization and replication. This necessitates a refinement of the experimental technique. In other words, a design needs to be chosen in such a manner that all the extraneous sources of variation are brought under control. The main purpose of local control is to increase efficiency of an experimental design by decreasing the experimental error. Simply stated, controlling sources of variation in the experimental results is local control. Again, in context of in silico simulations, it has no effect.

The DOE starts by choosing a training dataset. It refers to a set of observations used by the computer algorithms to train themselves to predict the process behavior. The computer algorithms learn from this dataset, and thus find relationships, develop understanding, make decisions and evaluate their confidence from the training data. Generally, better is the training data, better is the performance of a metamodel. In fact, quality and quantity of the training data have as much to do with the success of a metamodel as the algorithms themselves. In Kalita et al. [187], it has been shown how the quality of data would become an important factor in achieving a robust metamodel. A comprehensive list of various sampling strategies is reported in Fig. 6. Widely used ‘classic’ experimental designs include factorial or fractional factorial design [188], central composite design (CCD) [189], Box-Behnken [189], D-optimal design [190] and Plackett–Burman design [189].

3.1.2 Metamodeling Strategy

The act of developing an approximate model to fit a set of training data is the core of any metamodeling strategy. Metamodeling evolves from the classical DOE theory, where polynomial functions are used as response surfaces or metamodels. Besides the commonly used polynomial functions, Sacks et al. [191] proposed the use of a stochastic model, called kriging [192], to treat the deterministic response as a realization of a random function with respect to the actual system response. Neural networks have also been applied for generating response surfaces for system approximation [193]. Other types of models include RBFs [194], MARS [195], least interpolating polynomials [196] and inductive learning [197]. A combination of polynomial functions and ANNs has also been archived in [198]. Giunta and Watson [199] compared the performance of kriging model and PR model for a test problem, but no conclusion could be drawn with respect to the superiority of one model over the other. A comprehensive list of various metamodeling strategies is presented in Fig. 7. Additionally, Fig. 8 depicts the suitability of each traditional sampling method in various metamodeling strategies.

Surrogate modeling methods and corresponding sampling techniques [200]

3.1.3 Metamodel Validation

Validation of the accuracy of a metamodel with respect to the actual model or experiment is a prime task in completing the entire process of metamodeling. The objective of any metamodel is to represent the true model most accurately. Any metamodel should exhaustively and precisely capture all the information in the training dataset. In general, the performance of a metamodel representing the true model is validated based on the residuals. The difference between the metamodel value (yi) and true model value \((\hat{y}_{i} )\) is termed as residual.

where i represents the sample point among a total of n sample points. The algebraic sum of squares of residuals for the entire set of sample points is called SSR (squared sum of residuals).

Similarly, the total sum of squares (SST) is calculated using the following equation:

where \(\overline{y}\) represents the mean value of the sample points. The sum of squares for the model (SSM) can now be calculated as follows:

SSM = SST–SSR.

From the above equations, it is clear that the sum of squares of residuals is the fitting error. Thus, it is always desirable that it should be close to zero. Its zero value indicates that the metamodel perfectly fits the training data. But, it should be always kept in mind that a perfectly fit model does not guarantee that it would perform with the same accuracy on unknown design samples.

-

(a)

Goodness-of-fit metrics

Goodness-of-fit or how well the metamodel fits the training data is a common approach among the researchers to validate the accuracy of metamodels. The coefficient of determination (R2) is a statistic that provides some information about the goodness-of-fit of a model. Its value can be estimated using the following equation:

As shown in Kalita et al. [187], the inherent assumption of R2 is that all the model terms are made up of independent parameters and have an influence on the dependent parameter, which is not necessarily true. The R2adj corrects this presumption to a certain extent by penalizing the model when insignificant terms are added to the model.

where k is the number of variables. The R2pred goes a step further by constructing the model using all the data except the one that it predicts:

where \(\hat{y}_{i/i}\) is the observed \(\hat{y}_{i}\) value calculated by the model when the ith sample point is left out from the training set. This corresponds to the leave-one-out cross validation.

-

(b)

External validation metrics

All the three model accuracy metrics, i.e. R2, R2adj and R2pred are based on use or reuse of the training data. In Kalita et al. [187], the drawbacks of using R2s, and the importance of using independent testing data to have informed decisions regarding selection of the metamodels and their predictive power are discussed.

Thus, additional external validation metrics, like Q2F1 [201], Q2F2 [202] and Q2F3 [203] may also be used. The three metrics can be expressed as follows:

Equations (9) and (10) differ only in the treatment of the mean term. In Eq. (9), Q2F1 employs the mean value of the training data, whereas, mean value of the testing data is used in the calculation of Q2F2. This implies that Q2F2 contains no information regarding the training set since only testing dataset is used. On the other hand, Q2F3 attempts to remove any bias introduced in the estimations due to sample size, by dividing the total squared residual sum by the number of test samples and dividing the total squared sum of training data by the number of training samples. Consonni et al. [203] recently highlighted certain drawbacks of Q2F1 and Q2F2 in describing the predictive power of metamodels.

-

(c)

Error metrics

The R2-based metrics only provide an estimate of how much variation in a particular dataset is explained by the model. They render no information regarding the precision of the models. Precision, which determines, e.g. whether a model predicts frequencies with a standard error of 1 Hz or 10 Hz, is of great practical relevance in appraising quality of a metamodel. Root-mean-squared error (RMSE) is the standard deviation of residuals from the model [204]. It can be calculated from the test data using the following expression:

To calculate RMSE for training dataset, the errors in Eq. (12) are calculated for the training data and their squared sum is divided by ntrain. The RMSE can be a useful metric in identifying an appropriate metamodel, as a superior metamodel is always required to obtain an RMSE of 1 Hz for a lay-up metamodel encompassing (± 90°) range as opposed to one having a very small domain, say (± 10°). Since the residuals are squared in Eq. (12), a large residual for a particular sample point would have a greater influence on RMSE as compared to a sample point having a small residual in the same dataset. Thus, the calculation process for RMSE would provide more weight to the few samples with higher prediction error. This explicates why the researchers often tend to leave out 5% outliers in an effort to make better interpretations regarding the model. Due to this imbalanced nature of information provided by RMSE, a number of researchers have insisted on using mean absolute error (MAE) [205]. The MAE provides an absolute measure of prediction error in metamodels. It can be calculated for test data using the following equation:

A series of structural engineering test problems is solved in Kalita et al. [187] to identify the appropriate criteria for accepting or rejecting a metamodel. Additional insight into the predictive power of all these metrics is also included in Kalita et al. [187]. However, as stated by Chai and Draxler [206] “Every statistical measure condenses a large number of data into a single value […], any single metric provides only one projection of the model errors and, therefore, only emphasizes a certain aspect of the error characteristics. A combination of metrics […] is often required to assess model performance”.

3.2 Metamodel-Based Design Optimization

Any optimization algorithm can be coupled with metamodels to form the basic MBDO framework. Once a metamodel is identified, selection of the optimization algorithm becomes trivial because even less efficient algorithm becomes easily affordable. However, superior optimization algorithms would still outperform the inefficient ones.

Wang and Shan [182] classified the MBDO strategies into three types (see Fig. 9). The first strategy is the traditional sequential approach, i.e. fitting a global metamodel and then using it as a surrogate of the expensive function. This approach employs a relatively large number of sample points at the outset. It may or may not include a systematic model validation stage. In this approach, cross-validation is usually applied for the validation purpose. Its application is found in [189]. The second approach involves validation and/or optimization in the loop in deciding the re-sampling and re-modeling strategies. In [207], samples were generated iteratively to update the approximation to maintain the model accuracy. Osio and Amon [208] developed a multi-stage kriging strategy to sequentially update and improve the accuracy of surrogate approximations as additional sample points were obtained. Trust regions were also employed in developing several other methods to deal with the approximation models in optimization [209]. Schonlau et al. [210] described a sequential algorithm to balance local and global searches using approximations during constrained optimization. Sasena et al. [211] applied kriging models for disconnected feasible regions. Modeling knowledge was also incorporated in the identification of attractive design space [212].

Metamodel-based design optimization strategies: a sequential approach, b adaptive MBDO and c direct sampling approach [182]

Wang and Simpson [213] developed a series of adaptive sampling and metamodeling methods for optimization, where both optimization and validation were employed in forming the new sample set. The third approach is quite recent and it directly generates new sample points towards the optimal with the guidance of a metamodel [214]. Different from the first two approaches, the metamodel is not used in this approach as a surrogate in a typical optimization process. The optimization is realized by adaptive sampling alone and no formal optimization process is required. The metamodel is used as a guide for adaptive sampling and therefore, the demand for model accuracy is reduced. Its application needs to be explored for high-dimensional problems. If a metamodel is used instead of a true model, the optimization problem, stated in Eq. (1), would become:

subject to the constraint \(x_{i}^{\min } \, \le \,x_{i} \, \le \,x_{i}^{\max }\) where the tilde symbol denotes the metamodel for the corresponding function in Eq. (1). Often a local optimizer is applied to Eq. (14) to derive the optimal solution. A few methods have also been developed for metamodel-based global optimization.

One successful development can be found in [210], where the authors applied the Bayesian method to estimate a kriging model, and subsequently identified points in the space to update the model and perform the optimization. The proposed method, however, has to pre-assume a continuous objective function and a correlation structure among the sample points. A Voronoi diagram-based metamodeling method was also proposed where the approximation was gradually refined to smaller Voronoi regions and the global optimal could be obtained [215]. Since Voronoi diagram arises from computational geometry, the extension of this idea to problems with more than three variables may not be efficient. Global optimization based on multipoint approximation and intervals was performed in [216]. Metamodeling was also employed to improve the efficiency of GAs [217, 218]. Wang et al. [219, 220] developed an adaptive response surface method (ARSM) for global optimization. A so-called Mode-Pursuing Sampling (MPS) method was developed in [214], where no existing optimization algorithm was applied. The optimization was realized through an iterative discriminative sampling process. The MPS method demonstrated high efficiency for optimization with expensive functions on a number of benchmark tests and low-dimensional design problems.

Recent approaches to solve multi-objective optimization problems with black-box functions need to approximate each single objective function or directly approximate the Pareto optimal frontier [221, 221, [221, 221]. Wilson et al. [222] adopted the surrogate approximation in lieu of the computationally expensive analyses to explore the multi-objective design space and identify the Pareto optimal points, or the Pareto set from the surrogate. Li et al. [223] applied a hyper-ellipse surrogate to approximate the Pareto optimal frontier for bi-criteria convex optimization problems. If the approximation is not sufficiently accurate, the Pareto optimal frontier obtained using the surrogate approximation would not be a good approximation of the actual frontier. Recent work by Yang et al. [224] proposed the first framework dealing with the approximation models in multi-objective optimization (MOO). In that framework, a GA-based method was employed with a sequentially updated approximation model. It differed from [222] by updating the approximation model in the optimization process. The fidelity of the identified frontier solutions, however, would still depend on the accuracy of the approximation model. The work in [224] also suffered from the problems of GA-based MOO algorithm, i.e. the algorithm had difficulty in finding out the frontier points near the extreme points (the minimum obtained by considering only one objective function). Shan and Wang [225] recently developed a sampling-based MOO method where metamodels were employed only as a guide. New sample points were generated towards or directly on the Pareto frontier.

In all the MBDO methods which are often presented as a viable alternative to high-fidelity optimization, developing the accurate and reliable metamodels forms the basic goal. This is because by using a metamodel, the computation cost becomes inconsequential and thus even a less efficient metaheuristic search algorithm becomes affordable. The estimation power of the metamodel determines the effectiveness of the optimization task, because if the design space is not accurately modeled, the metaheuristic may locate a false global optimal.

3.3 Discussions

Considering the above facts, the literature on composite laminate metamodeling other than the optimization applications, like stochastic application, reliability analysis, damage identification etc. is also reviewed to better understand the metamodeling process. However, unlike in conventional literature review, this literature review is reported in tabulated form (see Tables 4 and 5).

Since the last few years, metamodels have gained immense popularity in structural analysis of laminates. Low computational requirement and abundance of machine learning algorithms to choose from have been the prime motivators for the researchers. As observed from Table 4, significant number of metamodel-based studies has been carried out on uncertainty quantification (UQ). The micromechanical properties (like elastic modulus, shear modulus, Poisson’s ratio etc.) and ply angles of the laminates have been generally considered as the sources of stochasticity by the researchers. Most of the works have considered Latin hypercube method for sampling the training data. Almost all the works have relied on FEM and FSDT to simulate the necessary data for training the metamodels. However, it should be pointed out that the metamodels for UQ studies are generally local in nature, i.e. they are trained for only a small section of the possible design space of the parameters. Thus, in most of the cases, remarkable accuracy (error < 1%) of the metamodels has been achieved. A handful of works on damage detection, predictive modelling and reliability analysis has also been available in the literature.

Table 5 summarizes the works on metamodel-based optimization of laminates. In most of the cases, response surface methodology (RSM) (polynomial regression) has been employed by the past researchers. Traditional DOEs, like CCD, BBD and D-optimal designs have been used in those works. The accuracy of such metamodels, especially those considering ply angles as the design variables, is bound to be low, primarily due to small training dataset and insufficient sampling capacity of the traditional DOEs to accurately map the complex landscape. However, it should be noted that most of those studies have reported excellent accuracy on training data. Further, in most of those RSM-based metamodeling studies, no independent testing data has been provided that makes it difficult to accurately gauge the overall accuracy of those metamodels. Some recent studies have adopted neural networks for metamodel-based laminate optimization. GA has been the most popular optimizer for single-objective optimization studies. A few studies on Pareto optimization have also been available, mostly dealing with multi-objective GA technique

3.4 Limitations

Selection of an appropriate metamodeling algorithm is a key step in any MBDO process. Many comparative studies have been made over the years to guide the selection of metamodel types, e.g. Dey et al. [200], Jin et al. [287], Clarke et al. [288], Kim et al. [289], Li et al. [290] and Shi et al. [291]. Despite this, it is not possible to draw any decisive conclusion regarding the all-purpose superiority of any of the metamodel types. In fact, efficiency and generalization of metamodels for each application is constrained due to the inherent assumptions and algorithms used [292].

However, as noticed from the literature survey, in structural engineering applications, LR, PR (RSM) and ANN are commonly employed in MBDO studies. The LR is simple to perform and a number of ready-to-use software platforms are available to implement it, thereby making it extremely popular. However, it is not useful for modelling of non-linear data [293]. Similarly, PR, despite its simplicity and widespread applicability, is often restricted in the literature to second-order [292]. It is seldom preferred for higher-order polynomials as the adequacy of the model is solely determined by systematic bias in deterministic situations [293]. ANNs are particularly suitable for deterministic applications and can be quickly deployed once trained. However, ANNs have relatively higher training time than LR and PR, and suffer from improper training if suitable hyperparameters are not selected [294]. In case of all the metamodels, a trade-off between the accuracy desired from the metamodel and time available to develop it needs to be decided. Thus, there is clearly no universally superior metamodel. In fact, each metamodel has its own advantages and disadvantages which coupled with the size, complexity and level of non-linearity of the problem (or phenomena to be modelled) can pose a serious decision making question to the user regarding which algorithm to choose.

Since the metamodels are dependent on high-fidelity data from physical experiments or simulation models, selection of suitable sampling points is a critical task [295]. If the training data used in metamodeling is skewed or does not adequately represent the true nature of the system or phenomena to be modelled, it would lead to bias and hence, inaccurate predictions. In general, for metamodeling, space filling sampling methods, like Latin hypercube sampling, Hammersley sampling etc. are found to be better than classical design of experiments, like factorial design, Box Behnken, CCD etc. [296, 296]. Moreover, the economic cost associated with physical experiments or computational expensiveness of high-fidelity data also needs to be addressed [298].

Another challenge of metamodels lies in its approximate nature which would introduce an added element of uncertainty to the analysis [293]. This problem is more in complex use cases, like structural engineering where the design space to be modelled is often too vast and complex. Any optimization search process when conducted on an ill-fitted metamodel would lead to erroneous optimal parameter prediction.

The lack of generalizability of metamodels is a serious hindrance to its real world applicability. Most metamodels have excellent interpolation but lack extrapolation capability [298]. In addition, there are often several parameters that must be tuned when a metamodel is developed. This signifies that the results can differ considerably depending on how well those parameters are tuned, and consequently, the results would also depend on the approach deployed to develop the metamodel. The lack of interpretability in many machine learning-based metamodels is also a serious hindrance in MBDO [299].

4 Conclusions

Optimizing composite structures to exploit their maximum potential is a realistic application with promising returns. In this paper, the majority of publications on optimization of composite laminated structures are reviewed and compiled. Based on the application of optimization techniques, the reviewed research papers are primarily classified into high-fidelity optimization and metamodel-based optimization. While high-fidelity optimization is characterized by excellent accuracy of the numerical solutions and is generally time consuming; the metamodel-based optimization can be quickly deployed and is cost-efficient, but it sacrifices some amount of numerical accuracy. Overall, from the comprehensive review of the literature, it can be concluded that:

-

(a)

FEM is by far the most popular numerical solver for modeling of composite structures. It is primarily due to its ability to model various complex geometries and boundary conditions. The liberty to choose from a plethora of elements with adjustable degrees of freedom according to the requirements also makes FEM extremely versatile.

-

(b)

FSDT is the most widely employed shear deformation theory in optimization of laminate structures. This is because it is less complex and has comparable accuracy with HSDT for thin and moderately thick plates.

-

(c)

Ply angle or stacking sequence is the most favored design variable for custom designing of laminates. This is perhaps because, for a given application, the other parameters, like geometry, thickness, material etc. are hard-to-change variables, i.e. changing their values may need extensive design changes in the structure and associated components. Moreover, lay-up orientation optimization is an NP-hard problem and the range of ply angles is ± 90˚, which makes the search space quite huge. Thus, most likely, any design methodology that succeeds to optimize lay-up orientations should conveniently succeed on material-as-design variable and geometry-as-design variable problems.

-

(d)

For high-fidelity design optimization, most of the pioneering works were carried out using gradient-based or mathematical direct search methods. However, subsequent researches have mostly used metaheuristics (90% of them being GA) to find out superior results and in cases, have shown the lacuna of gradient-based approaches in tackling local optima.

-

(e)

Metamodels for laminate modeling have become extremely popular since the last decade with majority of the works being concentrated in UQ and optimization. The computational cost of UQ-based studies involving multiple geometric, material and ply angle parameters is astronomical and thus, metamodels are the most promising option. However, majority of UQ-based studies have employed very small design parameter ranges, thereby making the metamodels local but with extremely high accuracy.

This review paper may have the following future scopes:

-

(a)

In allied fields, several recent metaheuristics, like GWO, WOA etc. have been appeared to be more efficient as compared to older generation metaheuristics. High-fidelity optimization studies involving those metaheuristics may yield better results leading to computational cost saving.

-

(b)

Despite their significant practical applications, studies involving laminated structures with holes, discontinuities or cut-outs are non-existent. This may be due to astronomical cost of high-fidelity optimization or inability to build high-accuracy global metamodels when such discontinuities are considered. Works towards using machine learning techniques to develop global metamodels for such cases may lead to promising results.

-

(c)

Optimization of laminated structures under uncertainties has gained limited attention. Probabilistic and non-probabilistic optimization studies on laminated plates and shells are the need of the hour.

-

(d)

Further research is also required on designing better sampling strategies which can more accurately represent the complexity of design landscape in stacking sequence optimization problems.

-

(e)

Detailed research on the impact of assumptions during metamodeling, effectiveness of hybrid metamodels and ensemble metamodels is also lacking in the literature. Owing to the curse of dimensionality, most machine learning-based metamodels are complex for high-dimensional problems and still treated as black-box type approaches. By integrating the designer’s domain knowledge and leveraging the knowledge derived from the mechanics of the problem, the black-box MBDO problems can perhaps be transformed to grey-box or white-box problems.

In essence, while high-fidelity design optimization methodology has overwhelming accuracy, the metamodel-based design optimization methodology has trifling computational time. As such, it is difficult to recommend one approach over the other. The final decision lies with the design engineer, who after carefully considering the application and its possible ramifications, should answer, what is more important—accuracy or computational time?

Abbreviations

- ABC:

-

Artificial bee colony

- ACO:

-

Ant colony optimization

- aeDE:

-

Adaptive elitist differential evolution

- ALO:

-

Ant lion optimization

- ANN:

-

Artificial neural network

- BBD:

-

Box Behnken design

- CBO:

-

Colliding bodies optimization

- CBT:

-

Classical beam theory

- CCD:

-

Central composite design

- CLPT:

-

Classical laminated plate theory

- CS:

-

Cuckoo search

- DA:

-

Dragonfly algorithm

- DE:

-

Differential evolution

- DL:

-

Deep learning

- DMO:

-

Discrete material optimization

- DMS:

-

Direct multi search

- DNN:

-

Deep neural network

- DQM:

-

Differential quadrature method

- DT:

-

Decision tree

- EDMS:

-

Evolutionary direct multi search

- ESL:

-

Equivalent single-layer laminate

- FEM:

-

Finite element method

- FSDT:

-

First-order shear deformation theory

- GA:

-

Genetic algorithm

- GBM:

-

Gradient-based method

- GBNM:

-

Globalized bounded Nelder-Mead algorithm

- GDA:

-

Gradient descent algorithm

- GHDMR:

-

General high dimensional model representation

- GLODS:

-

Global and local optimization using direct search

- GP:

-

Genetic programming

- GPR:

-

Gaussian process regression

- GRG:

-

Generalized reduced gradient

- GS:

-

Golden search

- GWO:

-

Grey wolf optimizer

- HS:

-

Harmony search

- HSDT:

-

Higher-order shear deformation theory

- ICA:

-

Imperialist competitive algorithm

- IP:

-

Integer programming

- LHS:

-

Latin hypercube sampling

- LOA:

-

Layerwise optimization approach

- LR:

-

Linear regression

- MARS:

-

Multivariate adaptive regression splines

- MCS:

-

Monte Carlo simulation

- MEGO:

-

Multi-objective efficient global optimization

- MFD:

-

Modified feasible direction

- MFO:

-

Moth flame optimization

- MLS:

-

Moving least square

- MOGA:

-

Multi-objective genetic algorithm

- MOPSO:

-

Multi-objective particle swarm optimization

- MP:

-

Mathematical programming

- MVO:

-

Multi-verse optimizer

- NLPQL:

-

Non-linear programming by quadratic Lagrangian

- NSGA-II:

-

Nondominated sorting genetic algorithm II

- PCE:

-

Polynomial chaos expansion

- PC-Kriging:

-

Polynomial chaos-kriging

- PNN:

-

Polynomial neural network

- PR:

-

Polynomial regression

- PSO:

-

Particle swarm optimization

- RBF:

-

Radial basis function

- RFR:

-

Random forest regression

- R-R:

-

Rayleigh Ritz method

- RS:

-

Random sampling

- RS-HDMR:

-

Random sampling-high dimensional model representation

- SA:

-

Simulated annealing

- S-FEM:

-

Smoothed finite element method

- SLP:

-

Sequential linear programming

- SPS:

-

Sequential permutation search

- SQP:

-

Sequential quadratic programming

- SSA:

-

Salp swarm algorithm

- SVR:

-

Support vector regression

- TLBO:

-

Teaching–learning-based optimization

- TSDT:

-

Third-order shear deformation theory

- WOA:

-

Whale optimization algorithm

References

Daniel IM, Ishai O, Daniel IM, Daniel I (1994) Engineering mechanics of composite materials. Oxford University Press, New York

Jones RM (1998) Mechanics of composite materials. CRC Press, London

Rao SS (2009) Engineering optimization: theory and practice. John Wiley & Sons, Hoboken, NJ

Spall JC (2012) Stochastic optimization. In: Handbook of Computational Statistics, Springer, 173–201

Eschnauer H, Koski J, Osyczka A (1990) Multicriteria design optimization: procedures and application. Springer-Verlag, Berlin

Savic D (2002) Single-objective vs. multiobjective optimisation for integrated decision support. Integr Assess Decis Support 1:7–12

Pardalos PM, Žilinskas A, Žilinskas J (2017) Non-convex multi-objective optimization. Springer, London

Mukherjee R, Chakraborty S, Samanta S (2012) Selection of wire electrical discharge machining process parameters using non-traditional optimization algorithms. Appl Soft Comput 12(8):2506–2516

Fang C, Springer GS (1993) Design of composite laminates by a Monte Carlo method. J Compos Mater 27:721–753

Abrate S (1994) Optimal design of laminated plates and shells. Compos Struct 29:269–286

Venkataraman S, Haftka RT (1999) Optimization of composite panels—a review. In: Proceedings—American Society for Composites, 479–488

Setoodeh S, Abdalla MM, Gürdal Z (2006) Design of variable stiffness laminates using lamination parameters. Compos B Eng 37:301–309

Sandhu RS (1971) Parametric study of optimum fiber orientation for filamentary sheet. Air Force Flight Dynamics Lab., AFFDL/FBR WRAFB, TM-FBC-71–1, Ohio, USA

Cairo RP (1970) Optimum design of boron epoxy laminates. In: TR AC-SM-8089, Grumman Aircraft Engineering Corporation Bethpage, New York

Lansing W, Dwyer W, Emerton R, Ranalli E (1971) Application of fully stressed design procedures to wing and empennage structures. J Aircr 8:683–688

Hirano Y (1979) Optimum design of laminated plates under axial compression. AIAA J 17:1017–1019

Davidon WC (1991) Variable metric method for minimization. SIAM J Optim 1:1–17

Fletcher R, Powell MJD (1963) A rapidly convergent descent method for minimization. Comput J 6:163–168

Waddoups ME, McCullers LA, Olsen FO, Ashton JE (1970) Structural synthesis of anisotropic plates. In: Proc. of AIAA/ASME 11th Structural Dynamics and Materials Conference, Denver, Colorado 1–8

Kicher TP, Chao TL (1971) Minimum weight design of stiffened fiber composite cylinders. J Aircr 8:562–569

Kim C, Lee DY (2003) Design optimization of a curved actuator with piezoelectric fibers. Int J Mod Phys B 17:1971–1975

Saravanos DA, Chamis CC (1990) An integrated methodology for optimizing the passive damping of composite structures. Polym Compos 11:328–336

Ha SK, Kim DJ, Sung TH (2001) Optimum design of multi-ring composite flywheel rotor using a modified generalized plane strain assumption. Int J Mech Sci 43:993–1007

Tsai SW (1992) Theory of composites design. Think composites Dayton, Ohio, 6–13

Gürdal Z, Haftka RT, Hajela P (1999) Design and optimization of laminated composite materials. John Wiley & Sons, New York

Macquart T, Maes V, Bordogna MT, Pirrera A, Weaver PM (2018) Optimisation of composite structures-enforcing the feasibility of lamination parameter constraints with computationally-efficient maps. Compos Struct 192:605–615

Fukunaga H, Vanderplaats GN (1991) Stiffness optimization of orthotropic laminated composites using lamination parameters. AIAA J 29:641–646

Grenestedt JL, Gudmundson P (1993) Layup optimization of composite material structures. Optimal design with advanced materials. Elsevier, Amsterdam, pp 311–336

Hammer VB, Bendsoe MP, Lipton R, Pedersen P (1997) Parametrization in laminate design for optimal compliance. Int J Solids Struct 34:415–434

Miki M (1984) Material design of fibrous laminated composites with required flexural stiffness. Mechanical behaviour of materials. Elsevier, Stockholm, pp 465–471

Miki M, Sugiyamat Y (1993) Optimum design of laminated composite plates using lamination parameters. AIAA J 31:921–922

Fukunaga H, Chou TW (1988) Simplified design techniques for laminated cylindrical pressure vessels under stiffness and strength constraints. J Compos Mater 22:1156–1169

Lipton R (1994) On optimal reinforcement of plates and choice of design parameters. Control Cybern 23:481–493

Autio M (2000) Determining the real lay-up of a laminate corresponding to optimal lamination parameters by genetic search. Struct Multidiscip Optim 20:301–310

Kameyama M, Fukunaga H (2007) Optimum design of composite plate wings for aeroelastic characteristics using lamination parameters. Comput Struct 85:213–224

Herencia JE, Weaver PM, Friswell MI (2007) Optimization of long anisotropic laminated fiber composite panels with T-shaped stiffeners. AIAA J 45:2497–2509

Kere P, Koski J (2002) Multicriterion optimization of composite laminates for maximum failure margins with an interactive descent algorithm. Struct Multidiscip Optim 23:436–447

Massard TN (1984) Computer sizing of composite laminates for strength. J Reinf Plast Compos 3:300–345

Todoroki A, Sasada N, Miki M (1996) Object-oriented approach to optimize composite laminated plate stiffness with discrete ply angles. J Compos Mater 30:1020–1041

Narita Y (2003) Layerwise optimization for the maximum fundamental frequency of laminated composite plates. J Sound Vib 263(5):1005–1016

Narita Y, Hodgkinson JM (2005) Layerwise optimisation for maximising the fundamental frequencies of point-supported rectangular laminated composite plates. Compos Struct 69(2):127–135

Farshi B, Rabiei R (2007) Optimum design of composite laminates for frequency constraints. Compos Struct 81(4):587–597

Ghiasi H, Pasini D, Lessard L (2008) Layer separation for optimization of composite laminates. In: Proc. of International Design Engineering Technical Conferences and Computers and Information in Engineering Conference, Brooklyn, 1247–1253

Kayikci R, Sonmez FO (2012) Design of composite laminates for optimum frequency response. J Sound Vib 331(8):1759–1776

Waddoups ME (1969) Structural airframe application of advanced composite materials-analytical methods. Air Force Materials Laboratory, Wright-Patterson Air Force Base, Ohio

Tsau LR, Chang YH, Tsao FL (1995) The design of optimal stacking sequence for laminated FRP plates with inplane loading. Comput Struct 55:565–580

Tsau LR, Liu CH (1995) A comparison between two optimization methods on the stacking sequence of fiber-reinforced composite laminate. Comput Struct 55:515–525

Foye R (1969) Advanced design for advanced composite airframes. Airforce Materials Laboratory, Wright-Patterson Air Force Base, Ohio, AFML TR-69–251.

Graesser DL, Zabinsky ZB, Tuttle ME, Kim GI (1991) Designing laminated composites using random search techniques. Compos Struct 18:311–325

Erdal O, Sonmez FO (2005) Optimum design of composite laminates for maximum buckling load capacity using simulated annealing. Compos Struct 71:45–52

Sargent PM, Ige DO, Ball NR (1995) Design of laminate composite layups using genetic algorithms. Eng Comput 11:59–69

Lombardi M, Haftka R, Cinquini C (1992) Optimization of composite plates for buckling by simulated annealing. In: Proc. of 33rd Structures, Structural Dynamics and Materials Conference, Dallas, 2552–2563s

Romeijn HE, Zabinsky ZB, Graesser DL, Neogi S (1999) New reflection generator for simulated annealing in mixed-integer/continuous global optimization. J Optim Theory Appl 101:403–427

Genovese K, Lamberti L, Pappalettere C (2005) Improved global—local simulated annealing formulation for solving non-smooth engineering optimization problems. Int J Solids Struct 42:203–237

Rao ARM, Arvind N (2007) Optimal stacking sequence design of laminate composite structures using tabu embedded simulated annealing. Struct Eng Mech 25:239–268

Soremekun G, Gürdal Z, Haftka RT, Watson LT (2001) Composite laminate design optimization by genetic algorithm with generalized elitist selection. Comput Struct 79(2):131–143

Callahan KJ, Weeks GE (1992) Optimum design of composite laminates using genetic algorithms. Compos Eng 2:149–160

Nagendra S, Haftka RT, Gürdal Z (1992) Stacking sequence optimization of simply supported laminates with stability and strain constraints. AIAA J 30:2132–2137

Le Riche R, Haftka RT (1993) Optimization of laminate stacking sequence for buckling load maximization by genetic algorithm. AIAA J 31:951–956

Ball NR, Sargent PM, Ige DO (1993) Genetic algorithm representations for laminate layups. Artif Intell Eng 8:99–108

Le Riche R, Gaudin J (1998) Design of dimensionally stable composites by evolutionary optimization. Compos Struct 41:97–111

Potgieter E, Stander N (1998) The genetic algorithm applied to stiffness maximization of laminated plates: review and comparison. Struct Optim 15:221–229

Sivakumar K, Iyengar NGR, Deb K (1998) Optimum design of laminated composite plates with cutouts using a genetic algorithm. Compos Struct 42(3):265–279

Walker M, Smith RE (2003) A technique for the multiobjective optimisation of laminated composite structures using genetic algorithms and finite element analysis. Compos Struct 62:123–128

Todoroki A, Haftka RT (1998) Stacking sequence optimization by a genetic algorithm with a new recessive gene like repair strategy. Compos B Eng 29(3):277–285

Lin CC, Lee YJ (2004) Stacking sequence optimization of laminated composite structures using genetic algorithm with local improvement. Compos Struct 63(3–4):339–345

Kradinov V, Madenci E, Ambur DR (2007) Application of genetic algorithm for optimum design of bolted composite lap joints. Compos Struct 77:148–159

Suresh S, Sujit PB, Rao AK (2007) Particle swarm optimization approach for multi-objective composite box-beam design. Compos Struct 81:598–605

Kathiravan R, Ganguli R (2007) Strength design of composite beam using gradient and particle swarm optimization. Compos Struct 81(4):471–479

Lopez RH, Lemosse D, de Cursi JES, Rojas J, El-Hami A (2011) An approach for the reliability based design optimization of laminated composites. Eng Optim 43(10):1079–1094

Ameri E, Aghdam MM, Shakeri M (2012) Global optimization of laminated cylindrical panels based on fundamental natural frequency. Compos Struct 94(9):2697–2705

Koide RM, França GVZD, Luersen MA (2013) An ant colony algorithm applied to lay-up optimization of laminated composite plates. Latin Am J Solids Struct 10(3):491–504

Bargh HG, Sadr MH (2012) Stacking sequence optimization of composite plates for maximum fundamental frequency using particle swarm optimization algorithm. Meccanica 47(3):719–730

Apalak NK, Karaboga D, Akay B (2014) The artificial bee colony algorithm in layer optimization for the maximum fundamental frequency of symmetrical laminated composite plates. Eng Optim 46(3):420–437

Tabakov PY, Moyo S (2017) A comparative analysis of evolutionary algorithms in the design of laminated composite structures. Sci Eng Compos Mater 24(1):13–21

Hemmatian H, Fereidoon A, Shirdel H (2014) Optimization of hybrid composite laminate based on the frequency using imperialist competitive algorithm. Mech Adv Composite Struct 1(1):37–48

Haftka RT, Walsh JL (1992) Stacking-sequence optimization for buckling of laminated plates by integer programming. AIAA J 30(3):814–819

Grierson DE, Pak WH (1993) Optimal sizing, geometrical and topological design using a genetic algorithm. Struct Optim 6(3):151–159