Abstract

Image stitching has a wide range of applications in computer vision/graphics and virtual reality. Seam estimation is one of the key steps in image stitching. This step can relieve ghosts and artifacts that were generated by misalignment or moving objects in the overlap region. This paper presents a fast and robust seam estimation method (FARSE) by defining gray-weighted distance and gradient-domain region of differences to avoid visible seams and ghosting. The optimal seam is estimated by searching in two weighted matrices, namely cost matrix and value matrix. The proposed method could be simply implemented. Results indicate that the FARSE method is scale-invariant and it is fast and more robust than the other methods.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Image alignment and stitching is one of the most important fields in the computer vision and graphics [1]. Since most cameras have narrow viewing angle, generating panoramic images is essential that can show large range view of the environment. Due to some problems, misalignment, illumination difference and moving objects, visible seams can occur in overlap region (OR). Image stitching algorithms blend images in a seamless manner. To this, the variation in exposure and the existence of moving objects in OR between images should be examined and corrected [2,3,4,5,6,7,8,9,10,11,12].

In this paper, a new seam estimation method is introduced to find the optimal seam. FARSE uses linear search between values in two weighted matrices. These are calculated based on image differences in RGB and gradient domain. Gray-weighted distances are calculated in gradient domain, so the estimated seam is scale-invariant and same seam could be calculated by downscaling.

The paper was structured as follows. In Sect. 2, the previous works were briefly described. Section 3 mainly reports the main steps of the proposed method. Section 4 illustrates registration method, and Sect. 5 depicts seam estimation algorithm. In Sect. 6, the results of the proposed method are compared with the other methods and finally the paper is concluded.

2 Related Work

There are numerous research literature and several commercial applications about the image stitching [1,2,3,4,5,6,7,8,9,10,11,12, 42,43,44]. Image stitching algorithms blend the images in a seamless manner, so the variation in exposure and the existence of moving objects in OR should be examined and corrected.

The simplest way to minimize visible seams is smoothing the OR between the images. Feathering and center-weighting or alpha blending approaches [13,14,15,16,17,18,19,20,21] take a simple or weighted average value at each pixel, heavy weight pixels near image center and down weight pixels near the edge. Also taking a median filter can remove visible movement in OR [13].

These methods do not work very well, because illumination differences, misalignment, blurring, and ghosting are visible. Weighted averaging with a distance map is often called feathering [1]. Some methods select pixels values in OR from images by increasing distance map values [22, 23]. These methods decide which pixels from which images will be shown in a panoramic image. However, some artifacts can occur (Fig. 16e). Other approaches define a seam between regions where intensity difference between the images in OR is minimum. Some methods used dynamic programming and search to find seam by starting from one edge to another in OR [4, 24]. Uyttendaele et al. [19] defined region of difference in OR where the images are dissident and then a graph is constructed with the region of differences. In this graph, edges indicate artifacts. So minimum vertices set could be removed by weighted vertex cover algorithm (Fig. 16f) [25, 27]. Agarwala et al. [14] proposed the interactive system that optimizes the sum of two objective functions. The first function is the image-objective that user selects it and indicates which pixels produce the good result. The second function is the interaction penalty that shows seam cost of placing a seam between pixels. Different optimization methods have been developed to minimize the sum of the two objective functions, including simulated annealing, graph cuts, and so on [26,27,28].

A lot of optimal seam finding methods were introduced [14, 27,28,29,30,31,32,33] which used various seam cost and minimized it by graph cut algorithm. Kwatra et al. [29] used the Euclidean metric color difference in energy function while Agarwala et al. [14] used gradient difference in their energy function. Zhang et al. [33] proposed a new method to eliminate misaligned areas with similar colors by combining alignment errors and a Gaussian-metric color difference.

Some recent methods combined homography warp with minimum seam cost, which improves warping to find best images alignment [34,35,36]. Li et al. [37] proposed a new method based on energy minimization in the seam estimation. They considered nonlinearity and the nonuniformity of human perception in energy minimization, so the estimated seam is not visible in OR.

3 Overview of the Proposed Method

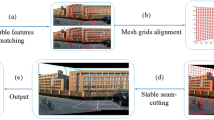

As shown in Fig.1, the proposed system takes input images and the SIFT [38] is used to find image features and nearest neighbor distance is applied to match them. Then, outlier features are removed, and best homography matrix between the pair images is found by RANSAC [39] algorithm.

Once the images are registered, in order to de-ghosting in OR, the optimal seam is estimated. Two weighted matrices are calculated, namely cost and value matrices, to find an optimal seam. The cost matrix specifies the pixels in which the optimal seam can be selected (candidate pixels), and value matrix specifies which pixel can be selected from candidate pixels.

At the end of the process, two images have been mapped to a final composite surface by the estimated seam.

4 Image registration

In the first step of the panoramic image creation, the correspondence relationships among images with varying degrees of overlap should be discovered. To this, at the first step, distinctive features are extracted from images and are matched to establish a global correspondence, and then the geometric transformation between the images is estimated.

4.1 Feature extraction and matching

To extract key points from images, the SIFT [38] feature is used. These feature points are invariant to image rotation and scale changes and are applied to provide a robust matching.

Nearest neighbor is used to match features, and ambiguous matches are rejected. A match is considered ambiguous when it is not significantly better than the second-best match. In Fig. 2, extracted SIFT [38] features from two images with OR are shown, (a) is all extracted features and (b) is matched features.

a, b Images with OR [45]. c, d Overlap regions. e Calculated mask (images differences)

4.2 Homography matrix

After finding image features and matching them, the transformation matrix between pair images is computed. But the all matched features are not inlier, so inlier matched features should be selected to find the best motion parameters between pair images. To this, the best consistent set of matches under a projective transformation is founded by RANSAC [39]. Random sample consensus (RANSAC) uses a set of randomly selected samples to estimate the best image transformation parameters by the direct linear transformation (DLT) method [40].

5 Stitching

To address the problems, such as blurring or ghosting, scene movement, and varying image exposures, stitching algorithm is applied. So after finding the images correspondences, image stitching algorithms blend the images in a seamless manner.

5.1 Seam estimation

Once the registration parameters are calculated, we need to decide which pixels and from which images should be selected to put in the final stitched image, and how to combine these pixels to minimize visible seams and ghosting. An effective method is to put the seams in areas where the images are similar so that transitions from one source to another are not visible [1].

In order to use all information about the images in the final stitched image, seam must be passed through the intersection of the images (Fig. 3).

Calculate GrDist and Value matrix The problem at hand is the search in the space of all the possible paths from one intersection point to another in the OR. If we consider the search space as a matrix, then finding the path between points is difficult, because there exist areas which the seam cannot cross. To simplify the procedure, the distances of all the OR points from one of the intersection points are calculated. These calculated distances are stored in a matrix (GrDist), which is the same size as the OR matrix. While the GrDist demonstrates how to move to the intersection points from another point, another matrix (Value) is needed to show the cost of crossing from points. The following is the description of the proposed method to calculate GrDist and Value matrices.

Eq. (1) is used to determine points which cannot be selected in the seam

where \({\textit{ImgDif}}\) is absolute differences of images in OR, \(\textit{max} \left( {{\textit{ImgDif}}_r } \right) \), \(\textit{max} \left( {{\textit{ImgDif}}_c } \right) \), \(\textit{min} \left( {{\textit{ImgDif}}_r } \right) \), and \(\textit{min} \left( {{\textit{ImgDif}}_c } \right) \) are maximum and minimum values in the row (subscript r) and column (subscript c) of image differences, respectively. The \({\textit{mean}}\) is the average and \({\textit{mean}}_{i\left( {{\textit{RGB}}} \right) } \) are the average in each separated R, G, and B spectrum. \({\textit{Thr}}_{{\textit{RGB}}} \) is the maximum value in OR, and mask is the image differences specifying the points which cannot be selected as seam (Fig. 4e).

To determine GrDist matrix, the geodesic time between points in a gray-scale image is used that was proposed by Soille [41]. To increase moving domain between points in OR, City Block distance is used. The City Block distance between points \(\left( {\hbox {x}_1 ,\hbox {y}_1 } \right) \) and \(\left( {\hbox {x}_2 ,\hbox {y}_2 } \right) \) is \(\left| {\hbox {x}_1 -\hbox {x}_2 } \right| +\left| {\hbox {y}_1 -\hbox {y}_2 } \right| \). One of the parameters of the graydist function is a gray image (GrImg), which is calculated using Eq. (2). Figure 5a depicts the calculated GrImg.

where \({\textit{grImg}}_i \) is image gradient and \(x\, {\textit{and}}\, y\) are the coordinates of one of the intersecting points in OR. The graydist function calculates gray-weighted distance from pixel (x, y) (Figs. 5b, 6).

By using GrDist matrix, we can move toward the (x, y) point. However, among all the possible points to move toward, the point which has the lowest cost should be selected. This cost is determined by Value matrix. To calculate Value matrix, Eq. (3) is used:

where \({\textit{Img}}_i \) are the images with OR and \({\textit{ImgDif}}\) is the absolute value of the images difference in RGB domain, and \({\textit{ImgDif}}_{{\textit{max1}}}\, and\, {\textit{ImgDif}}_{{\textit{max2}}} \) are matrices maximum values and middle values between R, G, and B domain, respectively. Value matrix and estimated seam are shown in Fig. 7.

Search to find seam Fig. 8 shows the pseudo-code of the search algorithm to find the optimal seam in OR.

In order to determine the path from one point to another, the neighboring points are selected in GrDist matrix which their values are less than the center point (Fig. 9a), and among these selected points, the point which has the lowest value in Value matrix will be selected as the next point (Fig. 9c). The value less than the center point value always exists so the new point will be selected in each iteration.

In each iteration of the pseudo-code, two points along the path are determined so that the existing extra path (if available) gets eliminated (Fig. 10).

Proposed method is linear search, so in the worst case, search time is O(max(m, n)) in the OR with \(\hbox {m}\times \hbox {n}\) size, the average time is \(\hbox {O}(5\times \hbox {max(m, n)})\), because the probability of each point selection is 1/5 (Fig. 9a). Since the proposed method is the scale-invariant, the algorithm can be used in downscale and after seam estimation, it is possible to resize the estimated seam.

The result of seam estimation method is shown in Fig. 11.

Final composites computed by a variety of algorithms: a average. b median. c feathered average. d p-norm p = 10. e Vornoi. f weighted ROD vertex cover with feathering. g graph cut seams with Poisson blending. h and pyramid blending [1]. i images boundary and estimated seams from proposed method. j stitched images with out blending

5.2 Scale variation in seam estimation

Value is calculated based on images differences in RGB domain, and GrImg is calculated based on gradient domain and is used to calculate the geodesic time between points (Figs. 5 b, 6) in OR; therefore, estimated seam is invariant to different scales. As is shown in Fig. 12, estimated seams from images (Fig. 2a, b) in various downscaling are similar, so proposed algorithm can be used in downscale to reduce search domain and elapsed time.

6 Evaluation

The proposed algorithm has been tested by various pair images with different illumination and moving objects and has been compared with preexisting solutions from both the commercial field and the research literature.

Image datasets from Visualize Web site [45] were used to evaluate the proposed method.

Many applications were proposed for image alignment and stitching, but we had compared the proposed method with two best methods, Panorama Maker [43] and ICE [44]. In Fig. 13, results of three methods are shown and faulty regions are separated with yellow circles.

The proposed method was tested on datasets from [35]. In most cases, our result was comparable with two methods that introduced by Zhang and Liu [35] and Lin et al. [36]. Figure 14 shows results of the three mentioned methods.

Also, FARSE has been tested on pair images [36, 45] with downscaling. Experiments showed that estimated seams were found in downscaling until 0.5 times of original sizes of images and were similar to the seams that were found for images in the original scale (Fig. 12). Also elapsed times to find the seams in different scales were shown in Fig. 15.

Figure 16 shows final composites computed by a variety of algorithms [1]. The results clearly show that FARSE is able to find a good seam and reduce artifacts in OR.

7 Discussion and Conclusion

In this paper, simple implementation of seam finding was introduced that is robust to deal with large exposure differences and ghosting, by defining two matrices, namely value and cost matrices, and using a simple search to estimate the optimal seam in OR.

Quality of estimated seam by FARSE and SEGUALL [36]

There are a lot of paths between the intersection points (Fig. 3) in the OR that they could be selected as an optimal seam. Proposed method tries to eliminate misalignment regions, and it selects one of them as an optimal seam.

Experiments show that FARSE can select a good seam in pair images with many moving objects and create a unique composite surface.

Figure 17 shows the qualities of seams that have been calculated by the proposed function in SEAGULL [36]. In SEAGULL, structure-preserving warp was used for images alignment, so structures had been matched closely to another in OR. In some cases that images have large parallax, using homography matrix to align the images may cause many mismatches. Although FARSE had used global homography matrix to images alignment, it can be seen in Fig. 17 that the quality of estimated seam is good.

Seam estimation methods are sensitive to the quality of images alignment. In the future, we will use warping methods for images alignment besides the proposed seam estimation method (FARSE). Also, it might be possible to remove value matrix and just compute gray-weighted distance for needed pixels instead of computing all in OR.

Abbreviations

- OR:

-

Overlap Region

- FARSE:

-

Fast and robust seam estimation.

References

Szeliski, R.: Image alignment and stitching: a tutorial. Found. Trends\(\textregistered \) Comp. Gr. Vis. J. 2(1), 1–104 (2006)

Peleg, S.: Elimination of seams from photo mosaics. Comput. Gr. Image Process. 16(1), 90–94 (1981)

Duplaquet, M.-L.: Building large image mosaics with invisible seam lines, in aerospace/defense sensing and controls. Int. Soc. Opt. Photonics 3387, 369–377 (1998)

Davis, J.: Mosaics of scenes with moving objects. In: Proceedings IEEE Conference on Computer Vision Pattern Recognition, pp. 354–360 (1998)

Fang, X., Zhu, J., Luo, B.: Image mosaic with relaxed motion. SIViP 6(4), 647–667 (2012)

Efros, A. A., Freeman, W. T.: Image quilting for texture synthesis and transfer. In: Proceedings of the 28th Annual Conference on Computer Graphics and Interactive Techniques, ser. SIGGRAPH ’01 (341–346). ACM (2001)

Mills, A., Dudek, G.: Image stitching with dynamic elements. Image Vis. Comput. 27(10), 1593–1602 (2009)

Burt, P.J., Adelson, E.H.: A multi resolution spline with application to image mosaics. ACM Trans. Gr. 2(4), 217–236 (1983)

Zeng, L., Zhang, W., Zhang, S., Wang, D.: Video image mosaic implement based on planar-mirror-based catadioptric system. SIViP 8(6), 1007–1014 (2014)

Pérez, P., Gangnet, M., Blake, A.: Poisson image editing. ACM Trans. Gr. 22(3), 313–318 (2003)

Zaragoza, J. Chin, T.-J. Brown, M. S., Suter, D.: As-projective as- possible image stitching with moving DLT. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp. 2339–2346 (2013)

Levin, A. Zomet, A. Peleg, S., Weiss, Y.: Seamless image stitching in the gradient domain. In: Proceedings 8th European conference computer vision, pp. 377–389 (2004)

Irani, M., Anandan, P.: Video indexing based on mosaic representations. Proc. IEEE 86(5), 905–921 (1998)

Agarwala, A., Dontcheva, M., Agrawala, M., Drucker, S., Colburn, A., Curless, B., Salesin, D., Cohen, M.: Interactive digital photomontage. ACM Trans. Gr. (TOG) 23(3), 294–302 (2004)

Borgefors, G.: Distance transformations in digital images. Comput. Vis. Gr. Image Process. 34(3), 227–248 (1986)

Danielsson, P.E.: Euclidean distance mapping. Comput. Gr. Image Process. 14(3), 227–248 (1980)

Szeliski, R., Shum, H.-Y.: Creating full view panoramic image mosaics and texture mapped models. In: Proceedings computer graphics (SIGGRAPH’97), pp. 251–258 (1997)

Chen, C. Y., Klette, R.: Image stitching—comparisons and new techniques. In: Computer analysis of images and patterns (CAIP’99), pp. 615–622 (1999)

Uyttendaele, M. Eden, A., Szeliski, R.: Eliminating ghosting and exposure artifacts in image mosaics. In: IEEE Computer society conference on computer vision and pattern recognition (CVPR’2001), pp. 509–516 (2001)

Porter, T., Duff, T.: Compositing digital images. Comput. Gr. (SIGGRAPH’84) 18(3), 253–259 (1984)

Blinn, J.F.: Jim Blinn’s corner: compositing, part 1—Theory. IEEE Comput. Gr. Appl. 14(5), 83–87 (1994)

Wood, D.N., Finkelstein, A., Hughes, JF., Thayer, CE., Salesin, DH.: Multi perspective panoramas for cel animation. In: Computer graphics proceedings, annual conference series, pp. 243–250 (1997)

Peleg, S., Rousso, B., Rav-Acha, A., Zomet, A.: Mosaicing on adaptive manifolds. IEEE Trans. Pattern Anal. Mach. Intell. 22(10), 1144–1154 (2000)

Efros, A.A., Freeman, W.T.: Image quilting for texture synthesis and transfer. In: Fiume, E. (ed.) SIGGRAPH, Computer Graphics Proceedings, pp. 341–346. ACM Press, New York (2001)

Herley, C.: Automatic occlusion removal from minimum number of images. In: Processing of the International Conference on Image, pp. 1046–1049 (2005)

Boykov, Y., Veksler, O., Zabih, R.: Fast approximate energy minimization via graph cuts. IEEE Trans. Pattern Anal. Mach. Intell. 23(11), 1222–1239 (2001)

Gemanand, S., Geman, D.: Stochastic relaxation, Gibbs distribution, and the Bayesian restoration of images. IEEE Trans. Pattern Anal. Mach. Intell. 6(6), 721–741 (1984)

Sun, J., Zheng, N., Shum, H.: Stereo matching using belief propagation. IEEE Trans. Pattern Anal. Mach. Intell. 25(7), 787–800 (2003)

Kwatra, V., Schödl, A., Essa, I., Turk, G., Bobick, A.: Graphcut textures: image and video synthesis using graph cuts. ACM Trans. Gr. 22(3), 277–286 (2003)

Boykov, Y., Kolmogorov, V.: An experimental comparison of mincut/ max-flow algorithms for energy minimization in vision. IEEE Trans. Pattern Anal. Mach. Intell. 26(9), 1124–1137 (2004)

Eden, A., Uyttendaele, M., Szeliski, R.: Seamless image stitching of scenes with large motions and exposure differences. Proc. IEEE Conf. Comput. Vis. Pattern Recogn. 2, 2498–2505 (2006)

Jia, J., Tang, C.-K.: Image stitching using structure deformation. IEEE Trans. Pattern Anal. Mach. Intell. 30(4), 617–631 (2008)

Zhang, G., He, Y., Chen, W., Jia, J., Bao, H.: Multi-viewpoint panorama construction with wide-baseline images. IEEE Trans. Image Process. 25(7), 3099–3111 (2016)

Gao, J., Li, Y., Chin, T.-J., Brown, M.S.: Seam-driven image stitching. In: Eurographics, pp. 45–48 (2013)

Zhang, F., Liu, F.: Parallax-tolerant image stitching. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 3262–3269 (2014)

Lin, K. Jiang, N. Cheong, L.-F. Do, M., Lu, J.: Seagull: Seam-guided local alignment for parallax-tolerant image stitching. In: Proceedings 14th European Conference on Computer Vision, pp. 370–385 (2016)

Li, N. Liao, T., Wang, C.: Perception-based energy functions in seam-cutting. arXiv preprint arXiv:1701.06141 (2017)

Lowe, D.: Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vis. 60(2), 91–110 (2004)

Fischler, M.A., Bolles, R.C.: Random sample consensus: a paradigm for model fitting with applications to image analysis and automated cartography. Commun. ACM 24(6), 381–95 (1981)

Hartley, R., Zisserman, A.: Multiple View Geometry in Computer Vision, 2nd edn. Cambridge University Press, Cambridge (2004)

Soille, P.: Generalized geodesy via geodesic time. Pattern Recogn. Lett. 15(12), 1235–40 (1994)

Rzhanov, Y.: Photo-mosaicing of images of pipe inner surface. Signal Image Video Process. 7(5), 865–871 (2013)

Panorama-Maker. http://www.arcsoft.com/panorama-maker/ (2013). Accessed 11 Aug 2015

ICE. http://research.microsoft.com/en-us/um/redmond/groups/ivm/ice/ (2015) Accessed 23 May 2016

VisualSize. http://www.visualsize.com/; (2011) Accessed 16 May 2015

Acknowledgements

The research leading to these results has received funding from the Bu-Ali Sina University Research Affairs by Grant Number 32-1178.

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Hejazifar, H., Khotanlou, H. Fast and robust seam estimation to seamless image stitching. SIViP 12, 885–893 (2018). https://doi.org/10.1007/s11760-017-1231-3

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11760-017-1231-3