Abstract

In this paper, C-means algorithm is fuzzified and regularized by incorporating both local data and membership information. The local membership information is incorporated via two membership relative entropy (MRE) functions. These MRE functions measure the information proximity of the membership function of each pixel to the membership average in the immediate spatial neighborhood. Then minimizing these MRE functions pushes the membership function of a pixel toward its average in the pixel vicinity. The resulting algorithm is called the Local Membership Relative Entropy based FCM (LMREFCM). The local data information is incorporated into the LMREFCM algorithm by adding to the standard distance a weighted distance computed from the locally smoothed data. The final resulting algorithm, called the Local Data and Membership Relative Entropy based FCM (LDMREFCM), assigns a pixel to the cluster more likely existing in its immediate neighborhoods. This provides noise immunity and results in clustered images with piecewise homogeneous regions. Simulation results of segmentation of synthetic and real-world noisy images are presented to compare the performance of the proposed LMREFCM and LDMREFCM algorithms with several FCM-related algorithms.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Image segmentation is a principle process in many image, video, scene analysis and computer vision applications [1]. It is the process of assigning a label to every pixel in an image such that pixels with the same label share certain characteristics [2]. Several image segmentation methods have been developed but still no satisfactory performance attained especially in noisy images [2–7], which makes development of segmentation algorithms to handle noise an active area of research. The existing segmentation algorithms can be categorized into threshold-based, region-based and edge-based, probabilistic-based, artificial neural-network-based and clustering-based methods [2–5]. Metaheuristic algorithms such as artificial bee colony (ABC) and genetic-based fuzzy ones have been used for segmentation to add diversity to the algorithms [6, 7]. Clustering and fuzzy-based clustering techniques have been widely adopted by many researchers since clustering needs no training examples [8–12].

C-means clustering algorithm is unsupervised approach in which data are basically partitioned based on locations and distance between various data points. Partitioning the data into C-clusters is carried out by compacting data in the same clusters and separating data in different ones. C-means clustering provides crisp segmentation which does not take into account fine details of infrastructure such as hybridization or mixing of data [13].

Fuzzy C-means (FCM) is one of the methods widely used for image segmentation. FCM’s success is chiefly attributed to the introduction of fuzzy sets and membership of belonging [14, 15]. Compared with the C-means algorithm which yields hard or crisp segmentation, the FCM one is able to provide soft one by incorporating membership degree of belonging [16]. However, one disadvantage of the standard FCM is not considering any spatial or local information in image context, which makes it very sensitive to noise and other imaging artifacts. To solve this problem different techniques have been developed [11, 12, 16–19]. Spatial or local data information has been involved for the enhancement and regularization of the performance of the standard FCM [11, 12, 16]. Local membership information has also been employed to generate a parameter to weight the membership function in order to give more weight to the pixel membership if the immediate neighborhood pixels are of the same cluster [16]. In [17–20], membership entropy and relative entropy have been used to fuzzify the hard C-means algorithm. In [6] a spatial fuzzy genetic algorithm (SFGA) has been presented for the segmentation of color images. The algorithm is not taking into account any spatial data information. The algorithm seeks the cluster-centers by employing the GA algorithm for optimizing both compactness and separation of classes. However, the membership functions are computed by the FCM algorithm after the cluster-centers are obtained. In [7], the artificial bee colony (ABC) search algorithm has been used for image segmentation. The ABC algorithm searches for the cluster-centers based on the minimization of the membership-weighting distances as a fitness measure. The membership functions are computed based on the obtained cluster-centers as type-2 fuzzy set.

In this paper, C-means clustering algorithm is modified by incorporating both local membership relative entropy (MRE) and local spatial data information. The modified objective clustering function consists of minimizing the standard C-means function plus two MRE functions for fuzzification and regularization. The rest of the paper is organized as follows. In Sect. 2, several FCM-related clustering algorithms are presented. In Sect. 3, the proposed Local Data and Membership Relative Entropy based FCM (LDMREFCM) algorithm is discussed. In Sect. 4, simulation results of clustering and segmentation of synthetic and real-world images are presented. In Sect. 5, the conclusion is drawn.

2 FCM-related algorithms

2.1 Conventional FCM

The standard fuzzy C-means (FCM) clustering objective function is given by [14, 15]

where \(d_{in}\) is the Euclidean distance given by \(d_{in}={(x_n-v_i)}^2\), \(u_{in}\in \ U=\{u_{in}\in [0,1],\sum _{i=1}^C u_{in}=1\) \( \forall n\ ,0<\sum _{n=1}^N u_{in}<N \) \(\forall i\}\) represents the membership of the nth pixel to the ith cluster and \(m > 1\) is an exponent number to control fuzziness. If \(m=1\), the FCM algorithm reduces to the hard C-means one. The membership \(u_{in}\) and the cluster-center \(v_i\in V=\{v_1, v_2,...,v_C\}\) that minimize the standard FCM function in (1), are given by [14, 15]

It is obvious from (2) and (3) that no local data information is involved in the computation of the pixel memberships and the cluster-centers. This makes classification of the nth pixel is independent of its neighbors and therefore prone to noise.

2.2 Membership Entropy based FCM (MEFCM)

In [20], the entropy of the membership and the entropy of the complement of the membership have been incorporated into the hard clustering version of the FCM (i.e., FCM with \(m=1\)) for fuzzification. The Membership Entropy based FCM (MEFCM) is given by [20]

where \(\gamma \) is a weighting parameter that controls the amount of fuzziness. The membership and the cluster-center functions obtained by the minimization of (4) are given by

It is obvious from (5) and (6) that the membership and cluster-center functions are independent of the local data and spatial membership information. This implies that additive noise can deteriorate their values. This has motivated us to seek modifying the MEFCM algorithm.

2.3 Spatial based fuzzy C-means (SFCM)

The SFCM [11] is a direct modification of the conventional FCM by replacing \(d_{in}\) in (1) by \(D_{in}\) given by

where \(\lambda \in [0,1]\) is an experimentally selected weight, and \(f_{in}\) is a local data function given by [11]

The membership \(u_{in}\) associated with the SFCM method is given by replacing \(d_{in}\) by \(D_{in}\) in (2). The cluster-center \(v_i\) is given exactly by (3).

3 Proposed FCM algorithms

3.1 Local Membership Relative Entropy based FCM (LMREFCM)

In [17, 19], a single Kullback–Leibler (KL) membership distance (i.e., a membership relative entropy (MRE) function) has been used for fuzzifying and regularizing the hard C-means clustering algorithm. Here, we propose to add a second MRE function for more fuzziness and regularization. The objective function of the proposed Local Membership Relative Entropy based FCM (LMREFCM) clustering algorithm is given by

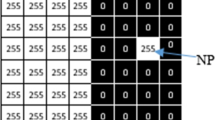

where \(\gamma \) is a parameter weighting fuzziness of the MRE terms; \(\widehat{u_{in}}=1-u_{in}\) is the complement of \(u_{in}\); \(\pi _{in}\) and \( \widehat{\pi _{in}} \) are the spatial moving averages of the membership and the membership-complement functions \(u_{in}\) and \(\widehat{u_{in}}\), respectively, computed by

Clustering of the synthetic image: a noise-free image; b the image plus zero-mean and 0.08 variance WGN; c FCM; d MEFCM; e SFCM; f LMREFCM; g LDMREFCM. It is obvious that the clustered images in panels f and g, generated by the LMREFCM and LDMREFCM algorithms, show a very small number of misclassified pixels (almost noise-free clustered images) compared with the other three clustered images. Clustering validation coefficients are summarized in Table 1

where \(N_n\) is a set of pixels falling in a neighboring square window around the nth pixel with the nth pixel itself excluded from the set and \(N_k\) is the cardinality of it. The first membership relative entropy term in (9) measures the proximity between the membership of a pixel in a cluster and its local average, while the second term ensures the proximity of the complement membership and its local average. This second term provides more fuzzification and regularization. This is since the first term in (9) pulls the membership toward \(\{0,1\}\), the second (the first MRE) term pulls the membership toward \(\{0,\pi _{in}\}\), and the third (the second MRE) term pulls the membership toward \(\{1,\pi _{in}\}\). Besides, computing \(u_{in}\) based on the local average membership \(\pi _{in}\) of the immediate neighborhood pixels can smooth out additive noise and bias the solution to piecewise homogeneous labeling. Thus, this leads to a segmented image with piecewise homogeneous regions. The minimization of the LMREFCM objective function in (9) yields

It is obvious from (12) that if \(\gamma \longrightarrow \infty \) (i.e., infinite fuzzification), then \(u_{in}\longrightarrow \pi _{in}\). In this case, \(u_{in}^{(t)}=\frac{1}{N_k}\sum _{k\varepsilon N_n}u_{ik}^{(t-1)}\) and \(u^{(t)}_{in}=\frac{{u^{(t)}_{in}}}{\sum _{j=1}^C{u^{(t)}_{jn}}}\), with t is the iteration number and is the twofold process for the computation of the membership function \(u_{in}\). This computation is indeed independent of or not influenced by the data to be clustered but dependent on the random process assigned to the initial membership \(u^{0}_{in}\). If \(u^{0}_{in}\) is generated from a random process with mean greater than zero, then \(u^{(\infty )}_{in}\) converges, because of recursive averaging and normalizing, to a normal distribution variable with mean equal to 1 / C. This has been proved experimentally by using a synthetic image of 4 clusters and \(\gamma =10^{10}\). Finally, as shown by (13), the computation of the cluster-centers is still not involving any local data information.

3.2 Local Data and Membership Relative Entropy based FCM (LDMREFCM)

For more noise robustness, local spatial data information can be incorporated into the LDMREFCM algorithm described above. The proposed LDMREFCM is given by

where \( \overline{d}_{in}={(\overline{x}_n-v_i)}^2 \) is a new distance of the locally smoothed pixel \(\overline{x}_n\) computed in advance prior to iterating the minimization of (14) and \(\alpha \) is a weighting parameter. The membership \(u_{in}\) and cluster-center \(v_i\) provided by (14) are, respectively, given by

The difference between the LMREFCM and the LDMREFCM algorithms is that the later incorporates the locally smoothed data in the computation of the membership and cluster-center functions which can handle additive noise. In the next section, the simulation results are presented. We will examine the benefit of using both the two local membership relative entropy fuzzification functions. We will also examine and present a procedure for initializing the membership and cluster-center functions of both the LMREFCM and LDMREFCM algorithms.

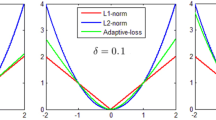

The average versus noise variance of the accuracy (a); sensitivity (b); specificity (c); right pointed triangle FCM; plus symbol MEFCM; star symbol SFCM; box LMREFCM; \(\circ \), LDMREFCM. It is clear that the proposed LMREFCM and LDMREFCM algorithms provide the superior performance among the five algorithms and the LDMREFCM one provides the highest noise robustness

4 Simulation results

4.1 Clustering validity

To evaluate the performance of a fuzzy clustering algorithm, several quantitative measures have been adopted in [21] and references therein. Among these measures are the partition coefficient \(V_{\mathrm{PC}}\) and the partition entropy \(V_{\mathrm{PE}}\) given, respectively, by

The best clustering is achieved when \(V_{\mathrm{PC}}=1\) and \(V_\mathrm{PE}=0\). In synthetic image where the labels of pixels are known, in addition to \(V_{\mathrm{PC}}\) and \(V_\mathrm{PE}\) coefficients, several measures have also been used such as the accuracy, sensitivity and specificity [18].

4.2 Synthetic image

The noisy images used in this simulation are generated by adding zero-mean white Gaussian noise (WGN) with different variances to the noise-free synthetic one shown in Fig. 1a. The noisy image for 0.08 noise variance is shown in Fig. 1b. The performance of the conventional FCM, MEFCM, the spatial distance-weighted FCM (SFCM), LMREFCM and LDMREFCM algorithms with \(m=2\) and \(C=4\) in segmenting these noisy images has been studied. For MEFCM, \(\gamma =1000\); SFCM, \(\lambda =0.5\); LMREFCM and LDMREFCM, \(\gamma =10{,}000\). These values have been selected experimentally. The neighboring window of size \(3\times 3\) has been used to compute the locally smoothed data \(\overline{x}_{n}\). The same window size has been used for computing the locally smoothed membership function \(\pi _{in}\). For the FCM algorithm, the initial values of the membership functions U are generated from a uniformly distributed random process with 0.5 mean and the initial values of the cluster-centers V are uniformly distributed random process with mean equal to the image mean. We have executed 25 runs of each algorithm. In each run, the initial values of U and V of the FCM are new samples, while the ones of the rest algorithms are generated by the FCM algorithm after a small number of iterations. Simulation results, omitted for space limitation, have shown that with these initial values the algorithms provide better performance than with the randomly generated ones. Also in each run, a new random sample of WGN is added to the noise-free image. Figure 1c–g shows the resulting clustered images produced by the five algorithms for noise case of 0.08 variance. It is obvious that the LMREFCM and LDMREFCM algorithms provide almost noise-free segmented images (i.e., a very small number of misclassified pixels). Also, the LDMREFCM algorithm provides the superior segmented image. Table 1 summarizes the means and variances of the clustering validation measures \(V_{\mathrm{PC}}\) and \(V_\mathrm{PE}\) (i.e., \(\mu \pm \sigma \)). The LMREFCM and LDMREFCM algorithms provide the superior \(V_{\mathrm{PC}}\) and \(V_\mathrm{PE}\) values.

The averages of the accuracy, sensitivity and the specificity performance measures of all algorithms have been studied against noise variance. These measures are shown in Fig. 2. It is seen that both the LMREFCM and the LDMREFCM algorithms provide the superior performance and the LDMREFCM algorithm offers the highest noise robustness.

The average runtime T of each algorithm has been measured via simulation. In each run, all algorithms are set to start from the same initial conditions, randomly generated once and used for all algorithms and to stop at the same fixed point. Figure 3 shows T versus noise variance. It is seen that the proposed two algorithms need more runtime than the other algorithms and the LDMREFCM one offers shorter runtime among the two algorithms. This implies that incorporating local data information as done in the LDMREFCM algorithm is vital for noise handling as well as for convergence rate improvement.

Segmentation of simulated MRI: a noise-free image; b the image in (a) plus WGN with zero-mean and 0.005 variance. Segmented images: c FCM; d MEFCM; e SFCM; f LMREFCM, g LDMREFCM. It is obvious that the clustered images in panels f and g, offered by the LMREFCM and LDMREFCM algorithms, show a very small number of misclassified pixels compared with the other three clustered images. The clustering validation coefficients summarized in Table 1 show that the LMREFCM and LDMREFCM provide the maximum \(V_{\mathrm{PC}}\) and the minimum \(V_\mathrm{PE}\)

Segmentation of real MRI: a noise-free image; b the image in (a) plus salt and pepper with variance of 0.05. Segmented images: c FCM; d MEFCM; e SFCM; f LMREFCM, g LDMREFCM. It is apparent that the clustered images in panels (f) and (g) provided by the LMREFCM and LDMREFCM algorithms show a small number of misclassified pixels compared with the other three clustered images. The clustering validation coefficients summarized in Table 1 show that the LMREFCM and LDMREFCM provide the maximum \(V_{\mathrm{PC}}\) and the minimum \(V_\mathrm{PE}\)

Segmentation of Lena image: a noise-free image; b the image in (a) plus WGN of zero-mean and 0.05 variance. Segmented images: c FCM; d MEFCM; e SFCM; f LMREFCM, g LDMREFCM. It is visible that the LMREFCM and LDMREFCM algorithms provide less noisy clustered images. The clustering validation coefficients summarized in Table 1 show that the LMREFCM and the LDMREFCM algorithms provide the superior \(V_{\mathrm{PC}}\) and \(V_\mathrm{PE}\)

Segmentation of real-world images [24]: a noise-free image; b the image in (a) plus WGN with zero-mean and 0.01 variance (top) and salt and pepper noise with 0.04 variance (bottom). Segmented images: c FCM; d MEFCM; e SFCM; f LMREFCM, g LDMREFCM. Top \(C=2\); bottom \(C=3\)

4.3 MRI

A simulated magnetic resonance image (MRI) from [22], shown in Fig. 4a, has been considered as a noise-free image. Additive WGN with zero-mean and 0.005 variance has been added to generate the noisy MRI shown in Fig. 4b. This noisy MRI has been segmented by the FCM, SFCM, MEFCM, LMREFCM and the LDMREFCM algorithms. The parameters of all algorithms have been taken similar to the ones used in the synthetic image simulation except \(\gamma =200\) for the MEFCM algorithm and \(\gamma =1000\) for both the LMREFCM and LDMREFCM algorithms. We have also executed 25 runs of each algorithm. The initial values of \(u_{in}\) and \(v_{i}\) have been set as mentioned in the synthetic image simulation. Figure 4c–g shows the resulting clustered images produced by the five algorithms in a single run, and Table 1 summarizes the clustering validation measures (\(\mu \pm \sigma \)) of the five algorithms. It is obvious that the LMREFCM and LDMREFCM provide the less noisy segmented images, the maximum \(V_{\mathrm{PC}}\) and the minimum \(V_\mathrm{PE}\).

Real magnetic resonance image (MRI) from [23], shown in Fig. 5a, has been used as a noise-free image. Additive salt and pepper noise with 0.05 variance has been added to generate the noisy MRI shown in Fig. 5b. This noisy MRI has been segmented by the five algorithms. The parameters of all algorithms have been set equal to the ones used with the synthetic image simulation except \(\gamma =300\) for the MEFCM algorithm and \(\gamma =800\) for both the LMREFCM and LDMREFCM algorithms. We have also executed 25 runs of each algorithm. The initial values of \(u_{in}\) and \(v_{i}\) have been adjusted as mentioned in the synthetic image simulation. Figure 5c–g shows the resulting clustered images of a single run. Table 1 summarizes the clustering validation coefficients. It is shown that the LMREFCM and LDMREFCM algorithms provide the smallest number of misclassified pixels, the maximum \(V_{\mathrm{PC}}\) and the minimum \(V_\mathrm{PE}\).

4.4 Lena image

The Lena image shown in Fig. 6a has been used as a noise-free image. Additive WGN noise with zero-mean and 0.01 variance has been used to generate the noisy image shown in Fig. 6b. All parameters of the five algorithms have been adjusted similar to the previous simulations except \(C=2\), \(\gamma =1000\) for the MEFCM algorithm and \(\gamma =2000\) for the LMREFCM and the LDMREFCM algorithms. We have also executed 25 Monte Carlo runs of each algorithm as explained above. Figure 6c–g shows the resulting segmented images obtained by the five algorithms. It is seen that the proposed LMREFCM and LDMREFCM algorithms provide the less noisy segmented images. The clustering validation measures \(V_{\mathrm{PC}}\) and \(V_\mathrm{PE}\) summarized in Table 1 show that the proposed two algorithms still provide the superior performance.

4.5 More images

Two images from Berkeley database [24] degraded by additive WGN and salt and pepper noise have been segmented by the five algorithms. The initial values have been adjusted as aforementioned, and the algorithms’ parameters have been experimentally selected. The resulting segmented images illustrated by Fig. 7 show that the proposed two algorithms continue providing the superior segmented images.

5 Conclusion

The C-means algorithm has been fuzzified by incorporating local spatial membership information via two functions. The first one measures the relative entropy between the membership of a pixel and its average in the immediate pixel vicinity. The second function measures the relative entropy between the complement of the membership and its average over the immediate neighboring pixels. For regularization, the local data information has been incorporated by modifying the pixel distance to be computed from both the original and locally smoothed image data. Incorporating both types of local information imposes classifying each pixel in correlation with its immediate neighbors. This handles additive noise and yields segmented images with piecewise homogeneous regions. Results of segmentation of synthetic and real-world images have been presented. These results have shown that the proposed LMREFCM and LDMREFCM algorithms outperform several widely used FCM-related ones. The LDMREFCM algorithm has shown more noise robustness and shorter runtime than the LMREFCM one.

References

Zhang, Y.-J.: Advances in Image and Video Segmentation. IGI Global, Hershey (2006)

Zhang, H., Fritts, J.E., Goldman, S.A.: Image segmentation evaluation: a survey of unsupervised methods. Comput. Vis. Image Underst. 110(2), 260–280 (2008)

Pham, D.L., Xu, C., Prince, J.L.: Current methods in medical image segmentation 1. Annu. Rev. Biomed. Eng. 2(1), 315–337 (2000)

Friedman, N., Russell, S.: Image segmentation in video sequences: a probabilistic approach. In: Proceedings of the Thirteenth conference on Uncertainty in artificial intelligence. Morgan Kaufmann Publishers Inc., pp. 175–181 (1997)

Khanna, A., Sood, M., Devi, S.: Us image segmentation based on expectation maximization and gabor filter. Int. J. Model. Optim. 2(3), 230–233 (2012)

Khan, A., Ullah, J., Jaffar, M.A., Choi, T.-S.: Color image segmentation: a novel spatial fuzzy genetic algorithm. SIViP 8(7), 1233–1243 (2014)

Bose, A., Mali, K.: Fuzzy-based artificial bee colony optimization for gray image segmentation. SIViP 10(6), 1–8 (2016)

Miyamoto, S., Ichihashi, H., Honda, K.: Algorithms for fuzzy clustering. In: Kacprzyk, J. (ed.) Methods in c-Means Clustering with Applications. Springer, Berlin (2008)

Ahmed, M.N., Yamany, S.M., Mohamed, N., Farag, A., Moriarty, T., et al.: A modified fuzzy c-means algorithm for bias field estimation and segmentation of MRI data. IEEE Trans. Med. Imaging 21(3), 193–199 (2002)

Cai, W., Chen, S., Zhang, D.: Fast and robust fuzzy c-means clustering algorithms incorporating local information for image segmentation. Pattern Recogn. 40(3), 825–838 (2007)

Guo, Y., Liu, K., Wu, Q., Hong, Q., Zhang, H.: A new spatial fuzzy c-means for spatial clustering. WSEAS Trans. Comput. 14, 369–381 (2015)

Gong, M., Liang, Y., Shi, J., Ma, W., Ma, J.: Fuzzy c-means clustering with local information and kernel metric for image segmentation. IEEE Trans. Image Process. 22(2), 573–584 (2013)

Wagstaff, K., Cardie, C., Rogers, S., Schrödl, S., et al.: Constrained k-means clustering with background knowledge. In: ICML, vol. 1, pp. 577–584 (2001)

Bezdek, J.C.: Pattern Recognition with Fuzzy Objective Function Algorithms. Springer, Berlin (2013)

Zadeh, L.A.: Fuzzy sets. Inf. Control 8, 338–353 (1965)

Chuang, K.-S., Tzeng, H.-L., Chen, S., Wu, J., Chen, T.-J.: Fuzzy c-means clustering with spatial information for image segmentation. Comput. Med. Imaging Graph. 30(1), 9–15 (2006)

Gharieb, R.R., Gendy, G.: Fuzzy c-means with a local membership kl distance for medical image segmentation. In: Biomedical Engineering Conference (CIBEC), 2014 Cairo International, pp. 47–50, IEEE (2014)

Zarinbal, M., Zarandi, M.F., Turksen, I.: Relative entropy fuzzy c-means clustering. Inf. Sci. 260, 74–97 (2014)

Gharieb, R.R., Gendy, G.: Fuzzy c-means with local membership based weighted pixel distance and KL divergence for image segmentation. J. Pattern Recogn. Res. 1, 53–60 (2015)

Yasuda, M., Furuhashi, T.: Fuzzy entropy based fuzzy c-means clustering with deterministic and simulated annealing methods. IEICE Trans. Inf. Syst. 92(6), 1232–1239 (2009)

Wang, W., Zhang, Y.: On fuzzy cluster validity indices. Fuzzy Sets Syst. 158(19), 2095–2117 (2007)

Online simulated brain web (2015)

Internet brain segmentation repository (ibsr)

Martin, D., Fowlkes, C., Tal, D., Malik, J.: A database of human segmented natural images and its application to evaluating segmentation algorithms and measuring ecological statistics. In: Proceedings of 8th Int’l Conference Computer Vision, vol. 2, pp. 416–423 (July 2001)

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Gharieb, R.R., Gendy, G. & Abdelfattah, A. C-means clustering fuzzified by two membership relative entropy functions approach incorporating local data information for noisy image segmentation. SIViP 11, 541–548 (2017). https://doi.org/10.1007/s11760-016-0992-4

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11760-016-0992-4