Abstract

Recently, a blind resolution enhancement method that uses a two-dimensional and single-input multiple-output extension of the constant modulus algorithm has been developed for pure translational motion. The method works well in case of low bit depth unobserved true images, but its performance decreases for high bit depth true images. In this work, we propose a refined scheme in which complex representation of images and a set of complex deconvolution FIR filters are used. Simulations show that the refined method succeeds in reconstructing the low and high bit depth true images without the knowledge of blur parameters. Visual results for the restoration case (single image, no subsampling) are also given. No assumption is made about the blurs except that they have low-pass characteristics. Also, they do not have to be the same for the observed low-resolution images and they do not need to be shift invariant.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Super-resolution image reconstruction (or image resolution enhancement) can be defined as the process of reconstructing a high-quality and high-resolution image by combining several shifted, degraded, and under-sampled ones. Super-resolution is proven to be useful if multiple frames of the same scene can be obtained from a single sensor or from multiple sensors. For example, in areas such as medical imaging and satellite imaging, acquiring multiple images to form a higher-resolution image is possible although the sensor resolution quality is limited. Also, a number of low-resolution frames in a video sequence can be utilized to improve the resolution for frame freeze or zooming purposes.

The first attempt to combine several low-resolution images to construct a higher-resolution one goes back to the frequency domain approach of Tsai and Huang [1]. Since then, an abundant number of super-resolution techniques have been proposed. Early works include iterative back-projection [2], projection onto convex sets (POCS) approach [3, 4], stochastic reconstruction methods such as maximum a posteriori (MAP) or maximum likelihood estimations [5, 6], hybrid MAP/POCS super-resolution algorithm [7], among others. More recently, edge-preserving stochastic methods that perform adaptive smoothing based on the local properties of the image are studied [8]. Kang and Lee [9] provided a least-squares solution with regularization. In [10], a super-resolution algorithm that takes into account inaccurate estimates of the point-spread function and the registration parameters is presented. Frequency domain implementations of expectation–maximization (EM) and maximum a posteriori methods are given in [11], which also includes the shift estimation into the super-resolution routine. Other works on the topic concentrate on super-resolution of compressed video [12, 13], space–time super-resolution [14], etc. Comprehensive tutorials about the subject with emphasis on difficulties and future directions can be found in [15, 16]. Also a number of special journal issues on super-resolution image reconstruction provide collections of recent work on the topic [17, 18].

In almost all above methods, the blur and the motion operators should be known in advance in order to construct the high-resolution image. Although the motion parameters are estimated a priori to some extent, as known to the authors, the blur operators (point-spread function, PSF) are just assumed to be in hand. However, this is usually not the case in practice. The blur parameters must be estimated prior to the super-resolution stage, or the high-resolution image must be constructed without the need for the blur parameters. Super-resolution in case of unknown blur is called blind image super-resolution.

The blind super-resolution work up to date can be classified broadly into three categories: learning-based techniques, techniques that can handle parametric PSFs and techniques that can handle arbitrary PSFs. The methods that fall in the first class store several high-resolution images in the database in order to perform the training stage [19, 20], which obviously depends on the existence of such high-resolution images. The methods of the second category assume that the PSFs are of a special form and that only one parameter is needed to fully characterize the blur operators. Unfortunately, this assumption is not realistic for most of the applications. The methods based on generalized cross-validation Gauss quadrature theory [21], iterative expectation–maximization algorithm [22], and soft maximum a posteriori (MAP) estimation framework [23] fall into this category. The blind super-resolution techniques that do not belong to the first two categories constitute the third category which represents the most realistic case. In [24], a three-stage method for blind multi-channel reconstruction of high-resolution images is presented. The stages are a blind multi-channel restoration, a wavelet-based image fusion, and a maximum entropy image interpolation. The method is claimed to estimate a high-resolution image in the case of relatively co-prime blurring operators. In [25, 26], the super-resolution problem, which is single input multiple output (SIMO) in nature, is turned into multiple-input multiple-output (MIMO) case by using poly-phase components. In [27], nonparametric blind image super-resolution is achieved by building a regularized energy function and minimizing it with respect to the original image and blurs. In [28], a unified method for blind image super-resolution and single- or multi-image blind deconvolution is proposed. It is based on alternating minimization of a cost function that is based on the Huber–Markov random field (HMRF) model.

Blind image restoration is the process of reconstructing the original image when only a blurred version is available. Thus, blind image restoration can be considered as a special case of blind image super-resolution where there is no subsampling and only one image is available. Comprehensive reviews and references about the subject can be found in [29, 30]. The blind super-resolution algorithm presented here is also applicable to the blind restoration case.

Constant modulus algorithm (CMA) is a popular tool in the area of blind equalization in communications, where the aim is to suppress the inter-symbol interference (ISI) [31, 32]. If the source is of constant modulus or from a finite alphabet, then CMA can be used in the receiver to reduce the channel impairments on the transmitted signal. Vural and Sethares utilized the property of images that each pixel is represented by a finite number of bits to develop a CMA-based single-image blind blur removal algorithm [33]. In [34], this work was extended to cover the blind resolution enhancement problem such that the high-resolution image is estimated by superposing the degraded images after they passed through distinct adaptive finite impulse response (FIR) filters whose coefficients were updated by using the 2D version of the CMA. The method worked for pure translational motion only. However, both in [33, 34], it was mentioned that as the bit number per pixel increased, the performance of the methods decreased and that this was a consequence of increasing image kurtosis.

The work presented here is in fact based on [34], but to work out the stated problem, two significant modifications are made. The first one is the assumption that the image pixel values are complex numbers of constant modulus. As opposed to the previous method, which assumed that the image pixel values had odd integer values between \(-\)(\(L-1\)) and \(+\)(\(L-1\)) inclusive, where L is the number of gray levels, the kurtosis of the image does not increase as the bit number increases. Apparently, the image pixels are not complex-valued, but a pre-processing scheme in which the real image pixel values are mapped to some complex numbers via a complex mapping diagram can be utilized to fulfill the complex-value assumption. The second modification is the use of complex-valued FIR reconstruction filters instead of using real-valued ones. This is a direct consequence of using a complex-valued cost function and utilizing a gradient-descent-based optimization method.

The paper is organized as follows: In Sect. 2, the observation model that links the high-resolution image to the observed low-resolution images is presented. If the motion between the low-resolution images consists of only pure translational motion, then the model can be simplified. Based on the simplified model, the super-resolution problem is formulated. In Sect. 3, the improved CMA-based high-resolution image reconstruction algorithm is developed. In Sect. 4, experimental results are presented for single image (restoration) and multiple subsampled image (super-resolution) cases. Finally, some conclusions are drawn in Sect. 5. Preliminary versions of this work have been presented at two previous conferences [35, 36].

2 System description

Super-resolution is an inverse problem, where the desired unknown high-resolution image is to be constructed from the observed low-resolution ones. The desired and observed images are linked through linear operations such as geometric warp, blur, decimation, and additive noise [7]. The geometric warp operator is the representation of the scene/camera motion with respect to one reference frame in fractional-pixel units. It may consist of global or local translation, rotation, etc. The blurring process results from factors such as relative motion between the imaging system and the scene, out of focus, point-spread function of the sensor, and so on. It is generally modeled as a linear shift-invariant two-dimensional FIR filter to be convolved with the original (warped) image. Although some super-resolution frameworks assume that the blur is the same, the more general and accurate case would be that it is different for all low-resolution observations. Unlike in the single-image restoration problem, where the source of the blur is optical factors or motion, the super-resolution problem also deals with the blur caused by the finiteness of the dimensions of low-resolution sensors. This is incorporated in the model as spatial averaging. The aliased low-resolution image is then generated by the sensor by subsampling the warped and blurred high-resolution image. The model also assumes additive noise which may be caused by quantization errors, model errors, sensor measurement, etc. As a result, the image formation model can be summarized as follows:

where the true image is represented by x, the observed low-resolution images are represented by \(\mathbf{y}_{i}\), \(\mathbf{b}_{i}\) denotes the blur operators, \(i= 1, 2, {\ldots }, M\), and M is the number of low-resolution images. S denotes the subsampling operator, W shows the warping process, and \(\mathbf{v}_{i}\) is the additive noise. * is the 2D convolution operator.

In some applications, where the motion is controlled and there is no local movement, the only type of motion within the low-resolution image sequences is translational motion. For example, the scanner resolution can be increased by scanning the document more than once with slightly changed initial points. Also in some video sequences, the scene is static and image sequences are obtained by translational motion of the video camera. There are works in the literature which consider this special super-resolution case [37, 38]. Here, a framework which assumes this specific property is presented.

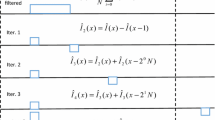

If the only motion within the observed low-resolution images is global translational motion, then the warp and the blur operators can be merged into a single blur operator [34] and the observation model described above can be reduced to what is seen in Fig. 1a.

For now, let us pretend that the subsampling operator does not exist. In this case, to recover the high-resolution and distortion-free image, a set of FIR complex reconstruction filters is applied to the low-resolution images as in Fig. 1b. By using FIR reconstruction filters instead of IIR (infinite impulse response) ones, we avoid the problem of instability. Also in [39], it is reported that using IIR reconstruction filters for the blind image deconvolution case requires recursion within a recursion, which is computationally complex. Based on these facts, FIR filters are chosen over IIR filters. In the ideal case, the impulse response sequence of the reconstruction filters, \(\mathbf{g}_{i}\), satisfies

In Eq. (2), \(\alpha \), \(S_{1}\) and \(S_{2}\) represent the gain and shift ambiguities that exist in the described blind image super-resolution setup just like in the blind image deconvolution problem. Thus, the purpose of the proposed blind super-resolution scheme can be stated as follows: Construct a set of 2D FIR filters to be applied on the observed low-resolution images in order to reconstruct a scaled and shifted version of the original high-resolution and high-quality image without the explicit knowledge of the incorporated blur operators.

3 Algorithm development

The constant modulus (CM) cost is a popular tool in communications for blind equalization of communication signals over dispersive channels [31, 32]. Recently, it has been reformulated for use in 2D in the area of blind image deconvolution [33] and blind image super-resolution [34]. For a true image \(x(n_{1}, n_{2})\) which has zero mean and whose elements are i.i.d. (independent and identically distributed), where \((n_{1},n_{2})\) shows high-resolution pixel locations, the 2D version of the CM cost can be stated as:

where \(\gamma \) and \(\kappa _{x}\) are the dispersion constant and the normalized kurtosis of the true 2D image, respectively. They are defined by

where \(\gamma =\sigma _{x}^{2}\kappa _{x}\) . The impact of \(J_\mathrm{CM}\) on the estimation of the true image \(x(n_{1}, n_{2})\) is twofold. First, it penalizes the deviations of \(\hat{{x}}^{2}(n_1 ,n_2)\) from the dispersion constant \(\gamma \), where the dispersion is caused by the PSF. Second, when the true image does not suffer from the effect of the PSF, its output histogram tends to be wide. Blurring the image has the effect of forcing the histogram to be narrower. By applying the constant modulus algorithm on the image, its histogram is constrained to be wider resulting in a sharper image. Only the types of PSFs that have low-pass characteristics have the effect of narrowing the image histogram, so the constant modulus algorithm cannot operate with less regular kernels such as the blur caused by camera shake.

The CM cost assumes two specific properties for the true image. The first one is the zero-mean assumption. In [33, 34], the high-resolution image pixels are thresholded to have odd integer values between \(-\)(\(L-1\)) and \(+\)(\(L-1\)) inclusive, where L is the number of gray levels in the original high-resolution image, to fulfill this assumption. Unfortunately, in this approach, the CM cost surface flattens as the number of gray levels (bit number per pixel) increases as a result of increasing image kurtosis. Hence, the algorithm that utilizes the CM cost with this approach shall have problems when the bit number per pixel is high, which is the case in [33, 34]. To figure out the stated problem, in this work, the true image pixel values are assumed to have complex values of constant modulus. A mapping diagram like the one shown in Fig. 2 can be used to validate this assumption. The diagram in Fig. 2 is for four-bit images. The diagrams for other bit levels can be constructed in a similar way. In the figure, the true image pixel values are represented by {.}, and the bold black dots represent the complex values for the pixels after mapping. Complex mapping also provides approximately zero-mean images if each pixel value is equally likely in an image. With this approach, the cost surface does not flatten as the bit number per pixel increases, because the image kurtosis is constant (equal to 1) for all bit levels in contrary with the previous approach.

The second assumption is that each gray level of the true image is an independent and identically distributed random variable. This assumption does not state that the pixels are i.i.d., which is also not the case in reality. It states that the gray levels are equally likely, which may not be true for a single image, but which may be a suitable assumption for a general image processing framework.

Because a closed-form solution does not exist for minimizing \(J_\mathrm{CM}\), a stochastic gradient-descent (GD) minimization method is used. A CM cost surface is generated by plotting the CM cost versus the (complex) adaptive filter parameters. The minimization algorithm tries to minimize the cost by starting at some point on the surface and then following the trajectory of the steepest descent. An instantaneous estimate of \(J_\mathrm{CM}\) is given by

In Fig. 1b, it is shown that the degraded images \(y_{i}(n_{1}, n_{2})\) are applied to a set of 2D adaptive complex FIR filters \(g_{i}(n_{1}, n_{2})\) which try to remove the blur and generate the high-resolution image (note that the subsampling process is still ignored). The true image estimate is then used to obtain a better estimate of the adaptive filter coefficients for the next spatial location in an adaptive manner. An estimate of the true image is obtained as the output of the complex adaptive filters at the jth iteration:

where \(A\times B\) is the support of the adaptive filters and \(g_{i,j}(a, b)\) are the coefficients for the ith adaptive filter at the jth iteration. The derivative of J with respect to the adaptive filter coefficients is needed in order to implement the GD minimization. Let \(\mathbf{g}_{j}\) denote the lexicographically ordered vector which is composed of the coefficients of the adaptive filters at the jth iteration:

and let \(\mathbf{y}(n_{1}, n_{2})\) be the regressor vector for the (\(n_{1}, n_{2})\)th pixel at the jth iteration:

where \(i= 1,2,\dots , M\). The estimate of the true image for the (\(n_{1}, n_{2})\)th pixel at the jth iteration can be written as

The derivative of J with respect to \(\mathbf{g}_{j}\) is given by

\((^{{\bullet }})\) denotes complex conjugate. The adaptive filters are updated according to

where \(\mu \) is a small positive step size that guarantees the algorithm stability. Unlike in [33, 34], where smaller step sizes must be chosen as the bit number per pixel increases, it can have a fixed value independent of the bit level here. In the simulations, something in the order of \(10^{-3}\) is chosen as the step size parameter. There is a trade-off in choosing the step size, setting it to a large value provides fast convergence with a risk of instability of the algorithm. It is determined empirically to obtain fastest possible convergence while maintaining stability.

The discussion above assumes that the subsampling operator does not exist. But obviously this is not the case in the super-resolution problem. To obtain resized versions of the observed low-resolution images as inputs to the adaptive filter set, the low-resolution images are registered on the high-resolution grid and zero values are assumed for the non-occupied pixels.

The CM cost is not convex; hence, the algorithm may result in a local minimum instead of the global minimum depending on how the adaptive filters are initialized. Initialization of each adaptive filter is made by using a 2D spike characterized by a nonzero coefficient whose location is determined by the motion vector of the corresponding filter input as the real part and zero as the imaginary part, i.e.,

This eliminates the effect of the motion at the beginning by initially shifting the interpolated low-resolution images to their original motion-free positions. Because only translational motion is assumed, the motion vector can be estimated using a simple block-matching method that considers sub-pixel movement [40, 41].

Based on the above discussion, the proposed CMA-based blind image super-resolution algorithm is given in Table 1. In the table, \((l_{1}, l_{2})\) shows low-resolution pixel locations. Note that bold case \(\mathbf{g}_{j}\) and y denote the lexicographically ordered vectors, while non-bold italic \(g_{i,j}\) and \(y_{i}\) denote scalar-valued 2D functions. The algorithm terminates when the average difference between successive iterations of the adaptive filter parameters falls below a pre-specified value. The image may be scanned more than once if the termination condition of the algorithm is not satisfied by processing of all pixels. Because the instantaneous gradient of the cost function is used to update the adaptive filter parameters, the proposed blind super-resolution scheme has the capability of coping with spatially varying blur filters also, if they change slowly and smoothly as a function of pixel location. The algorithm can also be used as a solution to the blind single-image deconvolution problem, where there is no subsampling and there is only one image.

4 Simulation results

In this section, some simulation results of the proposed method are provided for the restoration and super-resolution cases. To prevent ambiguity, all the algorithms mentioned are given names. For the restoration case, the previous (real-valued) method [33] is called CMA2, and the proposed method is called CMA2-C (to indicate that it uses complex values and complex mapping). For the super-resolution case, the previous method is called CMA2-SR, and the new method is called CMA2-C-SR.

4.1 Blind restoration

To evaluate the performance of CMA2-C for the single-image blind deconvolution case, a simulation is performed on a boat image (which is given in Fig. 3a). First, histogram equalization is applied to the 8-bit boat image, and then, complex-valued pixels are formed by using the mapping diagram described in Sect. 3. After this, a Gaussian blur of size \(7\times 7\) and variance 1 is applied on the original (complex-valued) image. Gaussian PSF is a common type of blur that arises especially in aerial imaging and remote sensing because of the long-term exposure through the atmosphere. Finally, the degraded image is obtained by adding varying levels of white Gaussian noise. The blurred and noisy image for blurred signal-to-noise ratio (BSNR) of 30 dB is shown in Fig. 3b.

Visual results for CMA2, CMA2-C, the maximum likelihood blind image deconvolution method [42], and the non-blind Lucy–Richardson method [43] are given in Fig. 3c–f, respectively, with the corresponding improvement in signal-to-noise ratio (ISNR) values. The image shown in Fig. 3b is used as the input. ISNR is a frequently used metric in image restoration [44] which is given by:

where \(x(n_{1}, n_{2})\) and \(y(n_{1}, n_{2})\) are the original image and the degraded image, respectively, and \(\hat{{x}}^{2}(n_1 ,n_2)\) is the reconstructed image. ISNR cannot be used when the original image is not known, but it can be used to compare performances of different methods when it is known. Both the visual results and the ISNR values suggest that CMA2-C has superior performance over CMA2. This is attributed to the fact that with the aid of complex mapping, the cost surface does not flatten and there is no need to choose a smaller step size as the bit number per pixel increases (8 bits in this case). Because CMA2 suffers from these effects, the improvement in image quality for high bit levels is minimal when compared to CMA2-C. Moreover, CMA2-C performs better than the maximum likelihood blind method and competes well against the Lucy–Richardson method both in visual and ISNR terms although the latter has the advantage of having the PSF parameters beforehand.

The ISNR values of CMA2-C and CMA2 for different bit depths and noise levels are given in Table 2. When the input is a 1-bit image, the performances of the methods are similar because for the 1-bit case, CMA2-C is essentially similar to CMA2. As the bit depth increases, the performance does not decrease much for CMA2-C although there is a significant performance loss for CMA2. As for the noise level, when the BSNR is about 10 dB, the ISNR drops below 2 dB and there is not a significant visual improvement in the reconstructed image. Hence, it can be said that the method is mostly useful when the BSNR is above 10 dB.

4.2 Blind super-resolution

To simulate the performance of the proposed method in the blind super-resolution case, two images (Lena and car) are used with two different kinds of blur (Gaussian and 2D uniform). Two-dimensional uniform blur is a representation of an out-of-focus blur. Both Lena and car images are 8-bit images, and their sizes are \(128 \times 128\) and \(176 \times 132\), respectively. To simulate the low-resolution and degraded image formation model along with the assumptions that are made throughout this work, first histogram equalization is applied on the Lena image, and then, the image is reduced to the desired bit level by using uniform quantization. After this, complex-valued pixels are formed by using the mapping diagram described in Sect. 3. Then, (i) global translation, (ii) Gaussian blur of size \(5 \times 5\) and variance 1, (iii) subsampling by two in both directions, and (iv) additive noise are applied on the image to obtain a low-resolution one. Stages (i) through (iv) are repeated three more times to have four low-resolution images. These images are the inputs to the algorithms under consideration. A similar process is also applied to the car image except that the blur is a uniform 2D blur with size \(5 \times 5\).

The visual results for the Gaussian blur case are given in Fig. 4, and the ISNR values for different bit and noise levels are given in Table 3. For comparison purposes, the visual result for the bilateral shift-and-add method [45] which uses the blind Lucy deconvolution algorithm [46] is also given in Fig. 4e. The parameters of the bilateral shift-and-add method and the iteration number for the blind Lucy deconvolution algorithm are chosen to give the best visual performance. The visual results are given only for the 8-bit case; the results for other bit levels are not shown in order to save space. Both the visual results and the ISNR values show that CMA2-C-SR outperforms CMA2-SR because of the same reasons for the single-image case. At first sight, the visual results for CMA2-C-SR and the bilateral shift-and-add method looks similar, but when the details are examined carefully, it can be seen that CMA2-C-SR is more successful in removing the blur. Besides, as seen in Table 3, there is not a significant improvement in SNR when the BSNR is at or below 20 dB. As a result, it can be said that CMA2-C-SR blind image super-resolution method can be utilized when the BSNR values of the images are above 20 dB. Similar results were obtained using the car images, but the figures are removed from the paper to save some space.

Finally, CMA2-C-SR is tested using a set of real images. Figure 5a shows four low-resolution images, and Fig. 5b shows one of the low-resolution images (interpolated and thresholded to 2 bits). The motion parameters are calculated by a simple correlation-based block-matching method which takes into account sub-pixel motion. The results of CMA2-SR and CMA2-C-SR are given in Fig. 5c, d, respectively, and the result for the bilateral shift-and-add method is given in Fig. 5e. CMA2-C-SR outperforms CMA2-SR in terms of readability, and it also competes well against the result of the bilateral shift-and-add method.

5 Conclusion and future work

In this work, a new blind image restoration and super-resolution method that is based on a 2D constant modulus algorithm and complex filtering is developed. This method does not suffer from the problem of degradation of performance as the bit number increases, which was the case in our previous method, because due to the complex mapping, kurtosis does not increase as the bit number per pixel increases. The method can remove the blur and achieve increase in resolution for any-bit images if the PSFs have low-pass characteristics.

An important drawback of the method is that it works only for pure translational motion. More general motion models include affine motion and local movement within images. Some studies are being performed to cover the affine motion model as well as pure translational motion. Another important disadvantage is the difficulty in applying the method to real-world distorted images. The reason for this situation is that during simulations, the true image is first mapped to the complex plane, and then, the degradations are simulated in the complex domain. However, for a true application, the distorted image(s) should be directly mapped to the complex plane and the algorithm must be run afterward. The results for this case are not as successful as the simulated ones, but this does not mean that the method cannot be used for real distorted images. As observed in the true image simulations, the method provides significant improvement over other methods including our previous method. Maybe if some means for incorporating the complex mapping mechanism to the imaging hardware can be found, then real-world applications of the method can become more available.

Normally, carrying out the iterations to scan the image a few times is enough for convergence, but for some cases, scanning the image several times becomes necessary resulting in a major increase in processing time. Hence, the method is more suitable for offline applications. Also the convergence analysis is not done properly, and the method can stuck in a local minimum at some point. Work is still being carried out to analyze and improve the convergence properties of the method.

References

Tsai, R.Y., Huang, T.S.: Multiple frame image restoration and registration. In: Advances in Computer Vision and Image Processing, pp. 317–339. JAI Press Inc., Greenwich (1984)

Irani, M., Peleg, S.: Improving resolution by image registration. CVGIP Graph. Models Image Process. 53, 231–239 (1991)

Stark, H., Oskoui, P.: High-resolution image recovery from image-plane arrays, using convex projections. J. Opt. Soc. Am. A 6, 1715–1726 (1989)

Patti, A.J., Sezan, M.I., Tekalp, A.M.: Superresolution video reconstruction with arbitrary sampling lattices and nonzero aperture time. IEEE Trans. Image Process. 6(8), 1064–1076 (1997)

Schultz, R.R., Stevenson, R.L.: Extraction of high-resolution frames from video sequences. IEEE Trans. Image Process. 5(6), 996–1011 (1996)

Tom, B.C., Katsaggelos, A.K.: Reconstruction of a high-resolution image by simultaneous registration, restoration, and interpolation of low-resolution images. In: Proceedings of 1995 IEEE International Conference Image Processing, vol. 2, pp. 539–542. Washington (1995)

Elad, M., Feuer, A.: Restoration of a single superresolution image from several blurred, noisy, and undersampled measured images. IEEE Trans. Image Process. 6(12), 1646–1658 (1997)

Farsiu, S., Robinson, D., Elad, M., Milanfar, P.: Fast and robust multi-frame super-resolution. IEEE Trans. Image Process. 13(10), 1327–1344 (2004)

Kang, M., Lee, E.: Regularized adaptive high-resolution image reconstruction considering inaccurate subpixel registration. IEEE Trans. Image Process. 12(7), 826–837 (2003)

He, H., Kondi, L.P.: An image super-resolution algorithm for different error levels per frame. IEEE Trans. Image Process. 15(3), 592–603 (2006)

Woods, N.A., Galatsanos, N.P., Katsaggelos, A.K.: Stochastic methods for joint registration, restoration, and interpolation of multiple undersampled images. IEEE Trans. Image Process. 15(1), 201–213 (2006)

Segall, C., Katsaggelos, A.K., Molina, R., Mateos, J.: Bayesian resolution enhancement of compressed video. IEEE Trans. Image Process. 13(7), 898–911 (2004)

Gunturk, B.K., Altunbasak, Y., Mersereau, R.M.: Super-resolution reconstruction of compressed video using transform-domain statistics. IEEE Trans. Image Process. 13(1), 33–43 (2004)

Shechtman, E., Caspi, Y., Irani, M.: Space-time super-resolution. IEEE Trans. Pattern Anal. Mach. Intell. 27(4), 531–545 (2005)

Park, S.C., Park, M.K., Kang, M.G.: Super-resolution image reconstruction—a technical overview. IEEE Signal Process. Mag. 20(3), 21–36 (2003)

Farsiu, S., Robinson, D., Elad, M., Milanfar, P.: Advances and challenges in super-resolution. Int. J. Imaging Syst. Technol. 14(2), 47–57 (2004)

Bose, N.K., Chan, R.H., Ng, M.K.: Special issue on high resolution image reconstruction. Int. J. Imaging. Syst. Tech. 14(2), 35 (2004). doi:10.1002/ima.20005

Ng, M., Chan, T., Kang, M.G., Milanfar, P.: Special issue on super-resolution imaging: analysis, algorithms, and applications. EURASIP J. Adv. Signal Process. 2006(1), 1–2 (2006). doi:10.1155/ASP/2006/90531

Baker, S., Kanade, T.: Limits on super-resolution and how to break them. IEEE Trans. Pattern Anal. Mach. Intell. 24(9), 1167–1183 (2002)

Capel, D., Zisserman, A.: Super-resolution from multiple views using learnt image models. In: Proceedings of IEEE Conference Computer Vision and Pattern Recognition, pp. 627–634 (2001)

Nguyen, N., Milanfar, P., Golub, G.: Efficient generalized cross-validation with applications to parametric image restoration and resolution enhancement. IEEE Trans. Image Process. 10(9), 1299–1308 (2001)

Woods, N., Galatsanos, N., Katsaggelos, A.K.: EM-based simultaneous registration, restoration, and interpolation of super-resolved images. In: Proceedings of IEEE International Conference Image Processing, pp. 303–306 (2003)

He, Y., Yap, K.H., Chen, L., Chau, L.P.: A soft MAP framework for blind super-resolution image reconstruction. Image Vis. Comput. 27, 364–373 (2009)

El-Khamy, S.E., Hadhoud, M.M., Dessouky, M.I., Salam, B.M., Abd El-Samie, F.E.: Blind multichannel reconstruction of high-resolution images using wavelet fusion. Appl. Opt. 44, 7349–7356 (2005)

Wirawan, P.D., Maitre, H.: Multi-channel high resolution blind image estimation. In: Proceedings of IEEE ICASSP, pp. 3229–3232 (1999)

Yagle: Blind superresolution from undersampled blurred measurements. In: Proceedings of Advanced Signal Processing Algorithms, Architectures, Implementation XIII, pp. 299–309 (2003)

Sroubek, F., Cristobal, G., Flusser, J.: A unified approach to superresolution and multichannel blind deconvolution. IEEE Trans. Image Process. 16(9), 2322–2332 (2007)

Faramarzi, E., Rajan, D., Christensen, M.P.: Unified blind method for multi-image super-resolution and single/multi image blur deconvolution. IEEE Trans. Image Process. 22(6), 2101–2114 (2013)

Kundur, D., Hatzinakos, D.: Blind image deconvolution. IEEE Signal Process. Mag. 13(3), 43–64 (1996)

Jiang, M., Wang, G.: Development of blind image deconvolution and its applications. J. X-ray Sci. Technol. 11, 13–19 (2003)

Godard, D.: Self-recovering equalization and carrier tracking in two dimensional data communication systems. IEEE Trans. Commun. 28(11), 1867–1875 (1980)

Treichler, J.R., Agee, B.G.: A new approach to multipath correction of constant modulus signals. IEEE Trans. Commun. 31(2), 459–473 (1983)

Vural, C., Sethares, W.A.: Blind image deconvolution via dispersion minimization. Digit. Signal Process. 16, 137–148 (2006)

Kara, F., Vural, C.: Blind image resolution enhancement based on a 2D constant modulus algorithm. Inverse Probl. (2008). doi:10.1088/0266-5611/24/1/015010

Kara, F., Vural, C.: Blind image deconvolution based on complex mapping. In: IEEE 15th Signal Processing and Communications Applications Conference (SIU) (2007)

Kara, F., Vural, C.: Complex mapping-based blind image super-resolution. In: IEEE 16th Signal Processing and Communications Applications Conference (SIU) (2008)

Elad, M., Hel-Or, Y.: A fast super-resolution reconstruction algorithm for pure translational motion and common space-invariant blur. IEEE Trans. Image Process. 10(8), 1187–1193 (2001)

Gillette, J.C., Stadtmiller, T.M., Hardie, R.C.: Aliasing reduction in staring infrared images utilizing subpixel techniques. Opt. Eng. 34(11), 3130–3137 (1995)

Vural, C., Sethares, W.A.: Recursive blind image deconvolution via dispersion minimization. Int. J. Adapt. Control Signal Process. 19(8), 601–622 (2005)

De Haan, G., Biezen, P.: Sub-pixel motion estimation with 3-D recursive search block-matching. Signal Process. Image Commun. 9, 229–239 (1994)

Kilthau, S.L., Drew, M.S., Moller, T.: Full search content independent block matching based on the fast Fourier transform. In: IEEE International Conference on Image Processing, Rochester (2002)

Nakajima, N.: Blind deconvolution using the maximum likelihood estimation and the iterative algorithm. Opt. Commun. 100, 59–66 (1993)

Richardson, W.H.: Bayesian-based iterative method of image restoration. J. Opt. Soc. Am. 62, 55–59 (1972)

Banham, M.R., Katsaggelos, A.K.: Digital image restoration. IEEE Signal Process. Mag. 14(2), 24–41 (1997)

Farsiu,S., Robinson, D., Elad, M., Milanfar, P.: Robust shift and add approach to super-resolution. In: Proceedings of the 2003 SPIE Conference on Applications of Digital Signal and Image Processing, pp. 121–130 (2003)

Tsumuraya, F., Miura, N., Baba, N.: Iterative blind deconvolution method using Lucy’s algorithm. Astron. Astrophys. 282, 699–708 (1994)

Acknowledgments

The authors would like to thank Prof. Peyman Milanfar for kindly providing the MDSP Resolution Enhancement Software. This work was supported by Scientific and Technological Research Council of Turkey (TUBITAK) under Project Number 107E193.

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Kara, F., Vural, C. Blind restoration and resolution enhancement of images based on complex filtering. SIViP 10, 1159–1167 (2016). https://doi.org/10.1007/s11760-016-0872-y

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11760-016-0872-y