Abstract

A new enhancement technique based on a fuzzy intensity measure is proposed in this study to address the non-uniform illumination and low contrast often encountered in recorded images. The proposed algorithm, namely adaptive fuzzy intensity measure, is capable of selectively enhancing dark regions without increasing illumination in bright regions. A fuzzy intensity measure is calculated to determine the intensity distribution of the original image and distinguish between bright and dark regions. Image illumination is improved, whereas local contrast of the image is increased to ensure detail preservation. Implementation of the proposed technique on grayscale and color images with non-uniform illumination shows that in most cases (i.e., except for processing time), the proposed technique is superior compared with other state-of-the-art techniques. The proposed technique produces images with homogeneous illumination. In addition, the proposed method is computationally fast (i.e., \(<\)1 s) and thus can be utilized in real-time applications.


1 Introduction

Advancements in image processing have enabled the analysis of digital images in most computer vision applications [1–4], video surveillance [5–7], and biomedical engineering [8–14]. Digital images are often low in quality and suffer from non-uniform illumination or brightness, loss of details, and poor contrast. These problems become critical when the foreground of interest is difficult to distinguish from the background, which worsens the segmentation problem and allows false recognition and detection to occur.

The human visual system has far larger dynamic ranges than most commercial cameras and video cameras. These devices have limited dynamic ranges; thus, recorded images obtained from these devices are usually non-homogeneous and low in contrast. Improper lighting condition and external disturbances, which worsen the aforementioned problems, are inevitable during image acquisition.

In this respect, most of the images acquired through commercial cameras and video cameras exhibit problems in non-uniform illumination and low contrast. Although these images contain significant information, such information is not visible because the images suffer from lack of sharpness and are easily influenced by noise. Image enhancement plays an important role as a preprocessing task that can significantly improve image quality. The basic idea of image enhancement is to increase the contrast of the bright and dark regions in order to attain better image quality. The visual information of the image is increased for better interpretation and perception to provide a clear image to the eye or assist in feature extraction processing in computer vision systems [15–18].

Various image enhancement algorithms have been proposed to enhance the degraded images in different applications. Image enhancement can be categorized into three broad types, namely transform, spatial, and fuzzy domains. The related studies on these three enhancement methods are discussed and presented in the succeeding section.

This paper is organized as follows. Related studies on image enhancement based on transform, spatial, and fuzzy domain approaches are elaborated in Sect. 2. Section 3 presents the proposed enhancement algorithm, and Sect. 4 explains the optimization procedure employed to obtain an optimum fuzzification factor. Sections 5 and 6 present the application of the proposed algorithm in color images and image analysis, respectively. The proposed algorithm is tested on non-uniform grayscale and color images in Sect. 7. The test images are compared in terms of visual representation and quantitative measures. Section 8 provides the conclusions of this paper based on the conducted analyses.

2 Related studies

The first method of image enhancement, namely the transform or frequency domain approach, is conducted by modifying the frequency transform of the image. Several enhancement techniques in the transform domain have been reported recently to solve the problem of non-uniform image illumination in face recognition and fingerprint enhancement applications [19–23]. In both applications, images normally exhibit non-uniform illumination; the details in the dark region of the images are less discernible. Enhancement is performed on the frequency transform of the image, and then the inverse transform is computed to obtain the resultant image. The intensities of the image are modified according to the transformation function [24, 25].

Although enhancement in the frequency domain produces good results, the low- and high-frequency components in the image are not easily constructed. This is because the intensity values for low-contrast and non-uniform illumination images are mostly vague and uncertain. As a result, spatial information of the intensity values is insufficient; thus, image representation based on frequency components is not easily constructed. Furthermore, images enhanced by frequency domain methods are normally compressed, which results in the loss of valuable information and details. Computing a two-dimensional transform for images with different sizes is very time consuming even with fast transformation techniques; such a procedure is not suitable for real-time processing [26].

The second class of image enhancement methods modifies pixels directly. Histogram equalization (HE) represents a prime example of an enhancement technique in the spatial domain. Although HE is suitable for overall contrast enhancement, a few limitations exist. Enhancement by HE causes level saturation (i.e., clipping) effects as a result of pushing intensity values toward the left or right side of the histogram in HE [27]. Saturation effects not only degrade the appearance of the image but also lead to information loss. Furthermore, the excessive change in the brightness level induced through HE leads to the generation of annoying artifacts and unnatural appearance of the enhanced image.
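As a rough illustration of the global HE described above, the following minimal sketch remaps gray levels through the normalized cumulative histogram. It is not taken from the cited works: the function name `equalize_histogram`, the list-of-lists image layout, and the choice to map the darkest occupied level to 0 are assumptions made for this example.

```python
def equalize_histogram(image, levels=256):
    """Global histogram equalization for a 2-D list of integer gray levels."""
    # Count how many pixels fall on each gray level.
    hist = [0] * levels
    for row in image:
        for m in row:
            hist[m] += 1

    # Build the cumulative histogram.
    total = sum(hist)
    cdf = []
    running = 0
    for count in hist:
        running += count
        cdf.append(running)

    # Smallest non-zero cumulative count, so the darkest occupied level maps to 0.
    cdf_min = next(c for c in cdf if c > 0)
    if total == cdf_min:
        # Only one gray level is present: nothing to equalize.
        return [row[:] for row in image]

    # Look-up table that stretches the cumulative histogram over [0, levels - 1].
    lut = [
        round((c - cdf_min) / (total - cdf_min) * (levels - 1)) if c > 0 else 0
        for c in cdf
    ]
    return [[lut[m] for m in row] for row in image]


# A low-contrast patch occupying levels 100..103 is pushed to both ends of the range.
patch = [[100, 101], [102, 103]]
equalized = equalize_histogram(patch)
```

In this toy patch the four neighboring levels end up spread over the full range, which shows how HE can push intensity values toward the ends of the histogram.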

Several brightness and detail-preserving modifications on HE techniques, which include adaptive HE techniques [28–35] as well as histogram specification [30, 36, 37], have been widely utilized to overcome these limitations in enhancing non-uniform illumination image. Adaptive methods provide better identification of different gray level regions through analysis of histogram in the local neighborhood window of every pixel. One example of modified HE approach is multi-histogram equalization technique [32, 38]. In this approach, image histogram is partitioned into multiple segments based on its illumination. The bright and dark regions in each segment are equalized independently. The techniques involve remapping the peaks, which produces perceivable changes in mean image brightness.

Ibrahim and Kong [34] proposed brightness preserving dynamic histogram equalization (BPDHE) to address the peak remapping problem. BPDHE utilizes Gaussian smoothing kernel to smooth peak fluctuations. The valley regions are then segmented, and the dynamic equalization is then performed on each segmented histogram.

Histogram equalization (HE) has furthermore been used in the context of tone mapping (TM) [39] in order to enhance images with non-uniform illumination and low contrast. At first, global histogram adjustment is conducted based on the TM operator. Subsequently, the image is segmented, and adaptive contrast adjustment with the TM operator is performed to increase the local contrast of the image and produce high-quality images.

The retinex approach was introduced by Land [40] to address problem with degraded images that exhibit non-uniform illumination and uneven brightness. This approach compensates for non-uniform illumination by separating illumination from reflectance in the given image.

Enhancement of images with non-uniform illumination can also be possibly conducted through mathematical morphology operation of top hat transform. Top hat transform is a mathematical morphology approach that utilizes structural elements to extract multi-scale bright and dark regions. The image is enhanced by enlarging the extracted bright and dark regions [41].

Another approach that addresses the non-uniform illumination of the image has been proposed by Eschbach [42]. A new parameter “exposure” was introduced and altered by iteratively comparing image intensity with a pair of preset thresholds of bright and dark regions. The image is processed until the threshold conditions are satisfied.

Although attempts have been made to enhance images by modifying every pixel in the spatial domain, vagueness in intensity values, which is caused by non-uniform lighting, has not been efficiently addressed. Therefore, a fuzzy enhancement technique is employed to overcome the aforementioned problem. Pixels are converted and modified in the fuzzy domain, which is the third category of image enhancement. The fuzzy system tool is adopted in image enhancement because this tool can mimic human reasoning and is beneficial in dealing with ambiguous situations that occur in images with non-uniform illumination.

Fuzzy image enhancement was introduced as early as 1981 by Pal and King [43]. The smoothing algorithm of a linear non-recursive filter is employed. This filter acts as a defocusing tool in which a part of the intensity of each pixel is distributed to its neighbors. The image is enhanced by optimizing objective parameters, namely index of fuzziness and entropy. The fuzzy set theory concept is widely adopted in image enhancement either globally, locally [44, 45], or combined with other approaches such as fuzzy histogram adjustment.

Sheet et al. [46] incorporated fuzzy set theory in histogram modification of digital images, and its performance was compared with the BPDHE approach. This new approach exhibited improved performance compared with BPDHE because the former involves computations employing an appropriate fuzzy membership function. Thus, the imprecision of gray levels is handled well, and histograms appear smoother in the sense that they do not exhibit random fluctuations. The new approach helps obtain meaningful bright and dark regions for brightness preserving equalization.

The fuzzy concept has been adopted by a few researchers [26, 47, 48]. The “exposure” parameter is further exploited, and its role in fuzzy enhancement is improved. The exposure is calculated and clustered into overexposed and underexposed regions. Two different functions of the modified fuzzy triangular membership function and power-law transformation are utilized to specifically enhance the overexposed and underexposed regions.

The non-uniform illumination problem was further investigated and improved by Verma et al. [48]. The image was categorized into three regions, namely underexposed, overexposed, and mixed regions. Enhancement was performed on color images, in which the luminance component was modified with specific functions according to the three aforementioned regions. In this approach, the quantitative measure of exposure is optimized through an iterative procedure to improve image quality [47, 49–51]. However, this approach requires a complicated optimization process, which adds to the existing complexity of the enhancement process in order to achieve a good quality image.

Although numerous studies focus on the development of enhancement algorithms, either local or global, which enhancement process produces images of optimum quality remains debatable. An optimally enhanced image refers to a well-illuminated image with uniform brightness and preserved details in which existing noise is not enhanced.

A new approach in fuzzy enhancement is proposed in this study to address these problems and to efficiently enhance images with non-uniform illumination and low contrast. The enhancement techniques proposed by the authors in [52, 53] successfully enhanced images with non-uniform illumination. However, the details of the image are not well preserved, and significant features are not fully enhanced, which causes a significant reduction in the clarity of the image. Therefore, the new fuzzy intensity measure proposed in this study involves computations that consider the mean and deviation of the histogram intensity distribution. The threshold that distinguishes between dark and bright regions is then determined. The image is clustered into two regions using the fuzzy membership function. The image is enhanced separately in each region to obtain an image with better quality.

3 Proposed algorithm

The proposed algorithm for adaptive fuzzy intensity measure (AFIM) is presented in this section. Considering that image information is vague, the pixel values that constitute the images with non-uniform illumination (i.e., non-uniform intensity and brightness of the image) may not be precise; inherent imprecision is possibly embedded in the images. Determining whether the pixels should be made darker or brighter than their original intensity level during enhancement is difficult. Visual assessment by a human observer is subjective, and quantitative analysis of the image contrast does not represent well the improvement that has been made in the original image. This is because the image contrast is quantitatively calculated by measuring the deviation in the intensity values. This situation justifies the scenario of having high value of image contrast while in terms of qualitative evaluation, the image appears over-enhanced and unnatural. The quantitative measurement of the image contrast only calculates the deviation of the intensity values without considering whether the image is naturally enhanced or unnaturally enhanced. The proposed approach thus adopts the fuzzy approach which addresses vagueness and image uncertainty to enhance the image. The process is performed by associating a degree of belonging to a particular cluster in the fuzzy membership function.

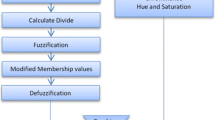

Fuzzy image enhancement has three main stages, namely image fuzzification, modification of membership value for enhancement process, and image defuzzification (Fig. 1). The intensity levels (i.e., pixels values) are converted from spatial to fuzzy domain in the image fuzzification process. Each pixel is assigned either to the dark or bright regions based on a predetermined threshold. The membership values of each pixel are computed.

We consider an image with non-uniform illumination of size \(R \times C\) denoted as \(A\) with intensity level \(m\) at pixel position \((i,j)\) in the range of \([0~L-1]\) in the image fuzzification stage. \(R\) and \(C\) are the number of rows and columns in the image, respectively. \(L\) is the total number of gray levels in the image. \(\mu (m)\) denotes the membership value of the pixels of image \(A\). \(\mu (m)\) is calculated for every pixel, and in this case, the \(\mu (m)\) is calculated globally to enhance the original image.

For the purpose of fuzzification, the intensity distributions in both regions (i.e., dark and bright regions) are assumed to be Gaussian. That is, the intensity histogram is assumed to follow a Gaussian shape in which most intensity values accumulate in the middle of the histogram (i.e., the middle region of intensity values). This assumption is made because, in low-contrast and non-uniform illumination images, most of the intensity values are concentrated in the middle of the histogram distribution. This can be observed in Fig. 2a, where the histogram has high amplitude at the middle region of the intensity values.

Therefore, a modified Gaussian membership function is utilized to determine the membership values of the pixels in the image, which lie in the range [0,1]. The Gaussian membership function is selected in this study because, even though separate functions are utilized to enhance the bright and dark regions, a smooth transition between both regions is required. The Gaussian membership function provides this transition through its continuously differentiable curve, whereas other membership functions such as triangular or trapezoidal membership functions do not possess this property (Fig. 2).

A certain region in the image with non-uniform illumination appears darker or brighter than the other regions in the image. Thus, a parameter called fuzzy intensity measure is introduced. This parameter considers the mean and deviation of histogram intensity distribution, which is provided by Eqs. (1)–(3). These equations are calculated to determine the non-homogeneous intensity distribution of the image. The calculated fuzzy intensity measure is then utilized to determine a threshold \(T\), which clusters the image into bright and dark regions based on Eq. (4). The dark region is clustered in the range of \([0~T-1]\), whereas the bright region is clustered in the range of \([T, L-1]\).

where \(m\) is the intensity of the pixel at position \((i,j)\) and \(p(m)\) represents the number of pixels in the histogram of the entire image. \(g_{\mathrm{d}}\) and \(g_{a}\) are deviation and mean intensity distributions, respectively.
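The histogram statistics and the dark/bright split described above can be sketched as follows. This is only an illustration under stated assumptions: Eqs. (1)–(4) are not reproduced here, so the mean and deviation are taken to be the ordinary mean and standard deviation of the gray-level histogram (an assumed reading of \(g_{a}\) and \(g_{\mathrm{d}}\)), and the threshold \(T\) is passed in by the caller rather than derived. The function names are hypothetical.

```python
import math


def histogram_mean_and_deviation(image, levels=256):
    """Return (mean, deviation) of the gray-level histogram of a 2-D list image.

    Assumption: ordinary histogram mean and standard deviation are used here;
    the paper's own Eqs. (1)-(3) may define these quantities differently.
    """
    hist = [0] * levels
    for row in image:
        for m in row:
            hist[m] += 1
    total = sum(hist)
    mean = sum(m * hist[m] for m in range(levels)) / total
    variance = sum((m - mean) ** 2 * hist[m] for m in range(levels)) / total
    return mean, math.sqrt(variance)


def split_by_threshold(image, threshold):
    """Cluster pixel positions into dark [0, T-1] and bright [T, L-1] regions.

    The threshold T is supplied by the caller; Eq. (4) is not reproduced here.
    """
    dark, bright = [], []
    for i, row in enumerate(image):
        for j, m in enumerate(row):
            (dark if m < threshold else bright).append((i, j))
    return dark, bright


toy = [[0, 0], [4, 4]]
g_a, g_d = histogram_mean_and_deviation(toy, levels=8)
dark, bright = split_by_threshold(toy, threshold=2)
```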

After the image is divided into two regions (dark and bright regions) based on the value of \(T\), fuzzification is performed in each region separately. The modified Gaussian membership function is utilized for the fuzzification of the dark region as follows:

where \(\mu _{\mathrm{d}}~(m)\) is the membership function in the dark region and \(m\) is the intensity value in the dark region in the range of \([0~T-1]\). \(m_{\mathrm{avg}}\) and \(m_{\mathrm{max}}\) are the average intensity and maximum intensity of the image, respectively. \(\zeta _{\mathrm{d}}\), the fuzzifier function of the dark region, is provided by:

where \(\sigma _{m}\) is the standard deviation of intensity of the entire image, \({{m_{\mathrm{d}}}_{\mathrm{avg}}}\) is the average intensity of the dark pixels, and \(m_{\mathrm{d}}\) and \(p(m_{\mathrm{d}})\) are the intensities and histogram of the dark region, respectively.

The mirror function of the aforementioned Gaussian membership function is utilized to fuzzify the bright region of the image for \(m \ge T\) as follows:

where \(\mu _{\mathrm{b}}~(m)\) is the membership function of bright region. \(\zeta _{\mathrm{b}}\) is the fuzzifier function in the bright region.

where \({{m_{\mathrm{b}}}_{\mathrm{avg}}}\) is the average intensity of the bright pixels, \(m_{\mathrm{b}}\) is the intensity of the bright region, and \(p(m_{\mathrm{b}})\) is the histogram of the bright pixels.

The fuzzifier functions of \(\zeta _{\mathrm{d}}\) and \(\zeta _{\mathrm{b}}\) calculate the intensity deviation in the dark and bright regions, respectively. \(\alpha \) is the fuzzification factor that depends on the intensity values of the input image. The selection of \(\alpha \) will be explained in details in the succeeding section.

Once fuzzification is completed, the original input pixels that exhibit non-uniform illumination and low contrast are transformed into Gaussian distributed pixels. The local contrast of the image is based on intensity difference in a small region, and it is computed to preserve the details of the image. Local contrasts are defined for the dark and bright regions as:

where \(\mu _{\mathrm{d}}~(i,j)\) and \(\mu _{\mathrm{b}}~(i,j)\) represent the \(3\times 3\) local fuzzified image (i.e., output image obtained after fuzzification process) of \(\mu _{\mathrm{d}}\) and \(\mu _{\mathrm{b}}\), respectively, which are centered at position \((i,j)\). Max \((\mu _{\mathrm{d}}~(i,j))\) and max \((\mu _{\mathrm{b}}~(i,j))\) represent the maximum gray level values of the local fuzzified image for dark and bright regions, respectively. Min \((\mu _{\mathrm{d}}~(i,j))\) and min \((\mu _{\mathrm{b}}~(i,j))\) denote the minimum gray level values of the local fuzzified image for dark and bright regions, respectively.
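A local contrast built from the maximum and minimum of a \(3\times 3\) window can be sketched as below. The exact form of Eqs. (9) and (10) is not reproduced here, so the ratio (max − min)/(max + min) is an assumption chosen for illustration (a common Michelson-style definition); the function name, the border handling by window cropping, and the small `eps` guard are likewise assumptions.

```python
def local_contrast(mu, eps=1e-12):
    """Per-pixel contrast of a fuzzified image over a 3x3 neighborhood.

    Assumed form: (max - min) / (max + min) of the membership values in the
    window centered at (i, j). Windows are cropped at the image border.
    """
    rows, cols = len(mu), len(mu[0])
    contrast = [[0.0] * cols for _ in range(rows)]
    for i in range(rows):
        for j in range(cols):
            window = [
                mu[r][c]
                for r in range(max(0, i - 1), min(rows, i + 2))
                for c in range(max(0, j - 1), min(cols, j + 2))
            ]
            hi, lo = max(window), min(window)
            # eps avoids division by zero when the whole window is zero.
            contrast[i][j] = (hi - lo) / (hi + lo + eps)
    return contrast


flat = [[0.3] * 3 for _ in range(3)]
spot = [[0.2, 0.2, 0.2], [0.2, 0.6, 0.2], [0.2, 0.2, 0.2]]
flat_contrast = local_contrast(flat)
spot_contrast = local_contrast(spot)
```

A flat window yields zero contrast, whereas a window containing a single brighter membership value yields a positive contrast, which is the property used to preserve detail.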

Modification of the fuzzified image is performed once the aforementioned steps are executed. Modification is performed to enhance the fuzzified image based on the dark and bright regions, which include the local contrast of the image as shown in Eqs. (11) and (12), respectively.

where \(\mu _{\mathrm{d}}^{\prime }\) and \(\mu _{\mathrm{b}}^{\prime }\) are the modified membership functions in the dark and bright regions, respectively. \(C_{L_{\mathrm{d}}}\) and \(C_{L_{\mathrm{b}}}\) are the local contrast of dark and bright regions, respectively, which are computed to preserve the details in the image.

The above functions modify the original membership functions of \(\mu _{\mathrm{d}}~(m)\) and \(\mu _{\mathrm{b}}~(m)\). The modified functions are then defuzzified with the respective inverse membership functions as shown in Eq. (13). Both regions are combined to obtain the enhanced image. The pixels in the dark region are scaled back to the range \([0~T-1]\), whereas the bright region is translated and scaled back to the range \([T,~L-1]\).

where \(M\) is the enhanced image obtained from the defuzzification process.

4 Optimization of fuzzification factor

The fuzzification factor differs with different input images as discussed in the previous section. As a result, the optimum parameter value of \(\alpha \) must be selected to obtain a pleasant image. Results obtained from simulation on 300 images with non-uniform illumination consisting of 150 grayscale images and 150 color images show that the optimum value of \(\alpha \) is set to the parameter value that yields the maximum image quality index \((Q)\). \(Q\) is computed by modeling any image distortion as a combination of three factors, namely loss of correlation, luminance, and contrast distortions as shown in Eq. (14).

The original and enhanced images are assumed to contain \(m = \{m_{y} {\vert } y = 1,2{\ldots }Z \}\) and \(M = \{M_{y} {\vert } y = 1,2{\ldots }Z\}\), respectively. \(m_{y}\) and \(M_{y}\) are the intensity levels of the original and enhanced images, respectively. The best value of ‘1’ is achieved if and only if \(m_{y} = M_{y}\). \(Q\) is defined as [54]:

where

Figure 3 shows three images with non-uniform illumination, in which the illumination and intensity distribution are non-homogeneous. The plots of \(Q\) in Fig. 3a–c illustrate the changes in \(Q\) as the fuzzification factor \(\alpha \) varies from 0 to 30. Automated tuning is conducted until a homogeneous image is obtained. The homogeneous image is attained when \(Q\) reaches its maximum value. Figure 3 shows that \(Q\) reaches its highest value when \(\alpha \) is 8, 5, and 4 as circled in Fig. 3d–f, respectively.
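For reference, an index that combines loss of correlation, luminance distortion, and contrast distortion can be sketched as follows. This assumes that [54] refers to the widely used universal image quality index and computes it once over the whole image; Eq. (14) itself is not reproduced here, so the formula, the function name `quality_index`, and the convention for a zero denominator are assumptions.

```python
def quality_index(original, enhanced):
    """Universal-quality-index style Q between two flat lists of intensities.

    Assumed form: Q = 4 * cov * mean_m * mean_M /
                      ((var_m + var_M) * (mean_m**2 + mean_M**2)),
    which factors into correlation, luminance, and contrast terms.
    """
    n = len(original)
    if n != len(enhanced) or n < 2:
        raise ValueError("inputs must have equal length of at least 2")

    mean_m = sum(original) / n
    mean_M = sum(enhanced) / n
    var_m = sum((a - mean_m) ** 2 for a in original) / (n - 1)
    var_M = sum((b - mean_M) ** 2 for b in enhanced) / (n - 1)
    cov = sum((a - mean_m) * (b - mean_M)
              for a, b in zip(original, enhanced)) / (n - 1)

    denom = (var_m + var_M) * (mean_m ** 2 + mean_M ** 2)
    if denom == 0:
        # Index is undefined here; convention for this sketch only.
        return 1.0 if list(original) == list(enhanced) else 0.0
    return 4 * cov * mean_m * mean_M / denom


x = [1, 2, 3, 4]
q_same = quality_index(x, x)                      # identical images
q_scaled = quality_index(x, [2 * v for v in x])   # brightness and contrast doubled
```

Under this assumed form the index equals 1 only when the two images are identical, which agrees with the statement that the best value of ‘1’ is achieved if and only if \(m_{y} = M_{y}\).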

The optimal procedure for selecting \(\alpha \) is described as follows. For a given input image (i.e., original image), the value of \(\alpha \) is varied from a minimum of 1 to a maximum of 30. For each value of \(\alpha \), the following automated tuning procedures are performed:

1. Apply the algorithm presented in Sect. 3 to generate an enhanced image.
2. Calculate \(Q\) with Eq. (14).
3. After the two aforementioned steps, select the parameter value that produces the maximum \(Q\) as the optimum value of \(\alpha \).

The enhanced image is generated by adopting the optimum \(\alpha \) according to the enhancement process in Sect. 3 to produce the final output. Simulations are performed on 300 test images with non-uniform illumination to validate the automatic selection of \(\alpha \). Examples of the automatic selection of \(\alpha \) are presented in Fig. 3.
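The tuning procedure above amounts to an exhaustive search over \(\alpha \). A minimal sketch is given below; the callables `enhance` and `quality` are hypothetical placeholders for the enhancement algorithm and the index \(Q\), and are not implementations from this paper.

```python
def select_alpha(image, enhance, quality, alphas=range(1, 31)):
    """Return (best_alpha, best_q) by trying every candidate alpha.

    enhance(image, alpha) -> enhanced image   (hypothetical placeholder)
    quality(image, enhanced) -> Q value       (hypothetical placeholder)
    """
    best_alpha, best_q = None, float("-inf")
    for alpha in alphas:
        q = quality(image, enhance(image, alpha))
        # Keep the first alpha that attains the largest Q seen so far.
        if q > best_q:
            best_alpha, best_q = alpha, q
    return best_alpha, best_q


# Toy stand-ins so the search can be run end to end; they are not the real methods.
def toy_enhance(image, alpha):
    return [v + alpha for v in image]


def toy_quality(image, enhanced):
    # Peaks when the enhanced image is exactly 8 levels brighter than the input.
    return -abs((enhanced[0] - image[0]) - 8)


best_alpha, best_q = select_alpha([10, 20, 30], toy_enhance, toy_quality)
```

Because the search visits only 30 candidates, its cost is roughly 30 times that of a single enhancement plus one evaluation of \(Q\) per candidate.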

5 Application in color images

The aforementioned algorithm can also be applied to color images by modifying gray level values. Enhancement for color images is conducted by converting Red, Green, and Blue (RGB) color space into Hue, Saturation, and Intensity (HSI) color space. This conversion is performed because direct enhancement in RGB may produce color artifacts. HSI color space is able to separate chromatic from achromatic information, thus ensuring that the original color of the image is not distorted.
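The RGB-to-HSI conversion mentioned above can be sketched with the standard textbook formulas. The paper does not state which HSI variant it uses, so this particular formulation, the function name `rgb_to_hsi`, and the convention of returning hue 0 for gray pixels are assumptions.

```python
import math


def rgb_to_hsi(r, g, b):
    """Convert r, g, b in [0, 1] to (hue in degrees, saturation, intensity).

    Standard textbook HSI formulas; an assumed variant, not necessarily the
    one used in the paper.
    """
    intensity = (r + g + b) / 3.0
    if intensity == 0:
        return 0.0, 0.0, 0.0  # pure black: hue and saturation are undefined

    saturation = 1.0 - min(r, g, b) / intensity

    numerator = 0.5 * ((r - g) + (r - b))
    denominator = math.sqrt((r - g) ** 2 + (r - b) * (g - b))
    if denominator == 0:
        hue = 0.0  # gray pixel: hue is undefined, 0 by convention here
    else:
        # Clamp to guard against rounding slightly outside [-1, 1].
        ratio = max(-1.0, min(1.0, numerator / denominator))
        theta = math.degrees(math.acos(ratio))
        hue = theta if b <= g else 360.0 - theta
    return hue, saturation, intensity


red = rgb_to_hsi(1.0, 0.0, 0.0)
green = rgb_to_hsi(0.0, 1.0, 0.0)
gray = rgb_to_hsi(0.5, 0.5, 0.5)
```

The gray pixel has zero saturation and carries all of its information in the intensity channel, which is why modifying the intensity alone leaves the chromatic components untouched.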

Enhancement is performed by preserving the hue component (H) and transforming the intensity component (I) based on the algorithm presented in Sect. 3. The saturation (S) component is modified with a power transformation function as shown in Eqs. (20)–(22).

where \({\overline{m}_{W_{i,j}}}\) is the local average gray level value in the \(W_{i,j}\) window and \({{\sigma _{m}}_{W_{i,j}}}\) is the local intensity deviation in the \(W_{i,j}\) window. CF is the contrast factor that is calculated to enhance the local contrast of the image. \(S_{\mathrm{d}}'(m)\) and \(S_{\mathrm{b}}' (m)\) are the modified saturation values of the dark and bright regions in the HSI color space, respectively. \(S_{\mathrm{d}}~(m)\) and \(S_{\mathrm{b}}~(m)\) are the corresponding saturation components for the dark and bright regions, respectively. \(\tau _{\mathrm{d}}\) and \(\tau _{\mathrm{b}}\) are the saturation intensifier and de-intensifier, respectively, which are selected experimentally.

In order to ensure the contrast and details in the local neighborhood window are enhanced, the S component is modified. The modification of S component is conducted by considering the local average gray level value and local intensity deviation as shown in Eqs. (20)–(22). The HSI color space is converted back to RGB color space after the S and I components are adjusted to enhance the image.

6 Image analysis

The quantitative measures for image analysis are presented in this section. Image quality measurement is an important research area. Establishing a correct and effective measure to quantify the quality of the enhanced images is difficult. The proposed algorithm as a new enhancement technique is expected to significantly improve the quality of the image while preserving the details. The dark pixels should be enhanced, and noises should not be amplified.

The performances of the proposed algorithm are evaluated and compared based on four quantitative measures, namely the image quality index \((Q)\), contrast (C), clarity index (PL) [41], and computational time \((t)\).

\(Q\) is computed with Eq. (14) as discussed in Sect. 4. The image quality index, called color fidelity metric, \(Q_{\mathrm{color}}\) proposed by [55], is utilized for color images to observe quality improvement during enhancement. The enhanced image, which is in RGB, is transformed to LAB color space. \(Q_{\mathrm{color}}\) is defined as:

where \(Q_{l}, Q_{\alpha }\), and \(Q_{\beta }\) represent the fidelity factors of \(l,\,\alpha \), and \(\beta \) channels, respectively. \(w_{l}, w_{\alpha }\), and \(w_{\beta }\) are the corresponding weights attributed to the perceived distortions in each of these channels.

As an addendum to the computed \(Q,\,C\) is employed as the contrast enhancement measurement of the sample images. Large \(C\) indicates that the enhancement technique successfully attained appropriate contrast. \(C\) is calculated with Eq. (24).

where \(M_{y},\,\overline{M}\), and \(p(M_{y})\) are the intensity of the enhanced image, mean intensity of the enhanced image, and histogram of the enhanced image, respectively.

The measure of PL [41] is calculated to measure both noise and clarity in the image. PL is computed by considering the peak signal-to-noise ratio (PSNR) and index of fuzziness \((\gamma )\) in the image. PL is defined as:

A large value of PSNR indicates that the corresponding algorithm enhances the image appropriately and produces minimal noise. \(\gamma \) is employed in the analysis because \(\gamma \) is commonly utilized to measure the clarity of the enhanced images. A small value of \(\gamma \) indicates that the enhanced result is clear and that enhancement of the corresponding algorithm produces a good quality image. Dividing the PSNR by \(\gamma \) generates a measure that includes noise condition and image clarity. A large value of PL indicates that the enhanced image contains minimal noise and that the clarity of the image is increased. PSNR and \(\gamma \) are calculated with Eqs. (26) and (28), respectively.

where

where \(M\) and \(M_{\mathrm{max}}\) are the intensity and maximum intensity of the enhanced image, respectively.
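A rough sketch of how PL could be computed as the ratio of PSNR to \(\gamma \) is given below. Eqs. (25)–(28) are not reproduced here, so several choices are assumptions: PSNR uses the conventional peak of 255 and the mean squared error between the original and enhanced images, \(\gamma \) is taken as the linear index of fuzziness, and the membership of each pixel is taken as \(M/M_{\mathrm{max}}\). The function names are hypothetical.

```python
import math


def psnr(original, enhanced, peak=255.0):
    """Conventional PSNR in dB between two flat lists of intensities (assumed form)."""
    mse = sum((a - b) ** 2 for a, b in zip(original, enhanced)) / len(original)
    if mse == 0:
        return float("inf")  # identical images
    return 10.0 * math.log10(peak ** 2 / mse)


def index_of_fuzziness(enhanced):
    """Linear index of fuzziness with membership mu = M / M_max (assumed form)."""
    m_max = max(enhanced)
    if m_max == 0:
        return 0.0  # all-black image: every membership is 0
    memberships = [m / m_max for m in enhanced]
    return 2.0 / len(enhanced) * sum(min(mu, 1.0 - mu) for mu in memberships)


def clarity_index(original, enhanced):
    """PL taken as PSNR divided by the index of fuzziness."""
    gamma = index_of_fuzziness(enhanced)
    if gamma == 0:
        return float("inf")  # perfectly crisp image in this sketch
    return psnr(original, enhanced) / gamma


noise_db = psnr([100, 100], [110, 90])
gamma = index_of_fuzziness([50, 100])
pl = clarity_index([100, 100], [50, 100])
```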

Computational time \((t)\) is investigated to measure the computational complexity of the enhancement algorithm. \(t\) is defined as the total time required to completely process the input image. It changes dynamically depending on the size of the image which is closely related to the total number of pixels of the image.

7 Results and discussions

The performance of the proposed enhancement technique is presented in this section. Quantitative and qualitative results obtained from the proposed technique are also compared with other fuzzy techniques such as BPDFHE [46] and fuzzy quantitative measure (FQM) presented in [48].

Brightness preserving dynamic fuzzy histogram equalization (BPDFHE) is utilized for comparison in this section because this method considers the crisp histogram of the image, which is beneficial for the enhancement process. FQM is also utilized for comparison because it is related to the proposed method, which computes the quantitative measures of gray levels to enhance the image.

The proposed enhancement technique is also compared with three other non-fuzzy techniques. The techniques include TM presented in [39], which involves the enhancement of non-uniform illumination in high dynamic range images. Discrete cosine transform (DCT), which is conducted in the frequency domain [56], is also included in the analysis. The gamma correction (GC) approach [24] is likewise adopted for comparison.

The experimental results of this study are obtained by implementing and processing the degraded images with Matlab R2010a and Intel(R) Core(TM) i3 2100 3.10 GHz and 4 GB RAM. The degraded images utilized for comparative analysis include standard images with non-uniform illumination (size of \(400\times 264\)), that are obtained from the California Institute of Technology Computational Vision Database.

Subjective appearance evaluation is performed on several grayscale images with non-uniform illumination as shown in Figs. 4, 5, and 6. Comparative analysis includes observing whether the techniques are able to enhance an entire image without over-enhancing or under-enhancing certain regions in the image. The details of the images are observed to ensure that no information loss occurs in the enhanced image. The quantitative results from each image are also presented in Figs. 4, 5, and 6. The original images presented in Figs. 4, 5, and 6 exhibit non-uniform illumination. These images suffer from uneven lighting, where the dark regions accumulate on Man 1 and Man 2’s faces in Figs. 4 and 5. Meanwhile, the foreground (i.e., Man 3’s face) in Fig. 6 appears brighter than the background.

Figure 4 shows that most of the enhancement techniques are able to enhance the image and significantly improve its overall brightness/illumination. The performance of the enhancement techniques can be analyzed by observing the brightness of the foreground (i.e., big rectangular area) and background (i.e., small rectangular area). The TM technique attains the best-enhanced and best-illuminated foreground, in which Man 1’s face (Fig. 4f) appears the brightest compared with the other techniques. However, over-enhancement is apparent in the background of the images enhanced by this method. This occurs because the image pixels in the background are clipped to white; thus, the details of the image are not preserved. Similar situations are observed in the enhanced images produced by FQM (Fig. 4c) and GC (Fig. 4g). Although these methods improve the overall brightness of the image, details of the images are lost during the enhancement process. This scenario occurs because, although specific functions are utilized and enhancement is conducted separately for bright and dark regions, the local contrast of the image is not considered, resulting in loss of details.

Discrete cosine transform (DCT) and BPDFHE improve image illumination while maintaining the background details. However, both techniques produce dark regions on Man 1’s face. BPDFHE amplifies the existing noise during enhancement, as exhibited by the lowest value of PL (i.e., 22.63). This finding indicates that BPDFHE does not reduce fuzziness in the original image and that the existing noise is amplified rather than attenuated.

The enhanced image produced by the proposed AFIM method (Fig. 4b) exhibits appropriate contrast; the edges of the wall and tree in the background are clear. Furthermore, the edges on Man 1’s face are clear and smooth compared with the original image. As discussed in Sects. 3 and 6, \(Q\) is computed by considering the luminance (i.e., illumination) and contrast distortion as well as loss of correlation between the original and enhanced images. The proposed method attains the highest \(Q\) of 0.97, which implies that overall image quality is improved without causing any saturation effect. Local contrast is enhanced, whereas noise is suppressed.
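A quality index that combines loss of correlation, luminance distortion, and contrast distortion can be sketched as follows. The sketch assumes that \(Q\) takes the form of the widely used universal image quality index, \(Q = 4\sigma_{xy}\bar{x}\bar{y} / [(\sigma_x^2+\sigma_y^2)(\bar{x}^2+\bar{y}^2)]\); the exact definition used in Sect. 6 is not reproduced here, and the function name `quality_index` is hypothetical. The index is computed globally over the whole image for brevity, whereas it is commonly evaluated in sliding windows and averaged.

```python
import numpy as np

def quality_index(original, enhanced):
    """Global quality index between two same-sized images (assumed form).

    Combines correlation, luminance similarity, and contrast similarity
    into one value in [-1, 1]; 1 is reached only for identical images.
    """
    x = np.asarray(original, dtype=np.float64).ravel()
    y = np.asarray(enhanced, dtype=np.float64).ravel()
    if x.size != y.size:
        raise ValueError("images must have the same number of pixels")

    mean_x, mean_y = x.mean(), y.mean()
    var_x, var_y = x.var(ddof=1), y.var(ddof=1)
    cov_xy = np.sum((x - mean_x) * (y - mean_y)) / (x.size - 1)

    denom = (var_x + var_y) * (mean_x ** 2 + mean_y ** 2)
    if denom == 0:
        raise ValueError("index is undefined for constant or all-zero images")
    return float(4.0 * cov_xy * mean_x * mean_y / denom)

# Toy data (not taken from the paper): a small ramp and a doubled copy.
ramp = np.arange(1, 17, dtype=np.float64).reshape(4, 4)
print(quality_index(ramp, ramp))        # identical images
print(quality_index(ramp, 2.0 * ramp))  # same structure, changed luminance and contrast
```

Under this assumed form, an identical pair scores 1, while a uniformly doubled image remains perfectly correlated but is penalized by the luminance and contrast terms.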

Although the TM technique produces the brightest image (Fig. 4f), unnecessary enhancement is applied to the existing bright region (i.e., background), thus causing over-brightness in the background. Meanwhile, the proposed AFIM method provides a more suitable approach in which the illumination of the image is adjusted using the fuzzifier and membership functions presented in Eqs. (5) and (6) for the dark region and Eqs. (7) and (8) for the bright region. In addition, the local contrast parameter in Eqs. (9) and (10) can be modified to preserve the image details.

Another example of non-uniform illumination is presented in Fig. 5. The foreground of the image (i.e., Man 2’s face) is dark, whereas the background is dominated by the bright region. The brightness on Man 2’s face (i.e., dark region) is increased with the function presented in Eq. (5). The proposed method successfully enhances the image, preserves the details of the image, and enhances local contrast as shown in Eq. (9). Figure 5b illustrates that the details of the tree in the background are enhanced without causing any saturation. The enhanced image produced by the proposed method exhibits an increase in image contrast and looks natural.

Fuzzy quantitative measure (FQM) causes saturation in the background. The foreground is darker than in the original image, causing the enhanced image to appear unnatural. FQM has the lowest value of \(Q\), which is 0.55. TM and GC over-enhance the existing bright regions (i.e., the background of the image), which causes loss of details in the background. DCT and BPDFHE are able to improve image illumination; however, the foreground is darker, and the edges are less smooth compared with the image enhanced by the proposed method.

Another image with non-uniform illumination is presented in Fig. 6. In contrast to Figs. 4 and 5, the foreground (i.e., Man 3’s face) in this figure is brighter than the background. The TM operator over-enhances the existing bright region on Man 3’s face. The same effect also occurs with FQM, where illumination of the enhanced image is uneven and non-homogeneous. Unwanted intensity saturations are avoided by the proposed method, DCT, and BPDFHE.

Figure 6 also shows that the proposed AFIM method yields the best enhancement result with smooth edges and details, as shown in the small rectangle in the figure. Image illumination is enhanced with Eqs. (11) and (12) for the dark and bright regions, respectively. The PL value of the proposed algorithm, 49.85, is larger than those of the other algorithms. This result verifies that the proposed AFIM algorithm enhances non-uniform illumination while suppressing existing noise. In addition, the proposed AFIM algorithm improves image quality as exhibited by the highest \(Q\) and \(C\) values of 0.95 and 65.48, respectively.

Apart from the grayscale images presented in Figs. 4, 5, and 6, the enhanced images produced by the proposed method in comparison with other techniques are presented in Table 3, “Appendix 1”. Twenty supplementary images are illustrated, and their respective quantitative analyses are tabulated in Tables 5, 6, 7, and 8, “Appendix 2”. In terms of overall performance, the proposed AFIM method outperforms the other enhancement techniques by producing most images with either the highest or second highest \(C\) and the highest \(Q\). The capability of the proposed AFIM method to consistently produce high PL values indicates its advantage in producing images with improved clarity and minimal noise. In addition, the proposed AFIM method requires \(<\)0.5 s (in most cases) to compute, which is comparable with the other enhancement techniques.

The performance of the proposed algorithm in enhancing grayscale images is evaluated by quantitative analysis of 150 grayscale images with non-uniform illumination, as tabulated in Table 1. Comparison is based on the average and standard deviation values of \(Q,\,C\), PL, and processing time, \(t\). The best results are presented in bold for each analysis. The error bars of the quantitative analysis presented in Table 1 are also plotted in Figs. 10, 11, 12, and 13 in “Appendix 3”, which give a better representation of the tabulated values. Each quantitative measure (i.e., \(C,\,Q\), PL and \(t\)) is plotted with error bars and compared with the other enhancement techniques. Figures 10, 11, and 12 show that the proposed AFIM method attains the highest \(Q\) and PL with the lowest standard deviation. Meanwhile, the proposed AFIM method ranks second in terms of \(C\). As elaborated in Sect. 6, high \(C\) and \(Q\) indicate that the image is successfully enhanced with better contrast and improved image quality. In addition, a high PL indicates that the enhancement technique is capable of enhancing the image without enhancing the existing noise.
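The aggregation behind such a comparison (per-method average and standard deviation, followed by selection of the best average) can be sketched as follows. The function names `summarize` and `best_method`, the method labels, and the scores are hypothetical placeholders and are not values from Table 1. For \(Q\), \(C\), and PL a higher average is treated as better, whereas for processing time \(t\) a lower average is treated as better.

```python
import statistics

def summarize(scores):
    """Map each method name to (mean, sample standard deviation) of its scores."""
    return {
        method: (statistics.mean(values), statistics.stdev(values))
        for method, values in scores.items()
    }

def best_method(summary, higher_is_better=True):
    """Return the method with the best average for the given measure."""
    pick = max if higher_is_better else min
    return pick(summary, key=lambda method: summary[method][0])

# Placeholder per-image scores for two hypothetical methods.
toy_scores = {
    "method_A": [1.0, 2.0, 3.0],
    "method_B": [4.0, 4.0, 4.0],
}

summary = summarize(toy_scores)
for method, (mean, std) in summary.items():
    print(f"{method}: mean={mean:.2f}, std={std:.2f}")

print("best (higher is better):", best_method(summary))
print("best (lower is better): ", best_method(summary, higher_is_better=False))
```

The mean and standard deviation pairs are also what an error-bar plot displays, so the same summary could feed both a table and a figure.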

The comparison presented in Table 1 indicates that in terms of the average and standard deviation values, the proposed AFIM method attains the highest values of \(Q\) and PL and outperforms the other methods. Meanwhile, the proposed method is able to preserve the details by considering the local contrast of the dark and bright regions in Eqs. (9) and (10), respectively. Image information is retained, and overall contrast is improved. The proposed method achieves the second highest overall contrast after BPDFHE. The proposed AFIM method successfully reduces the fuzziness of the image, resulting in the highest value of PL as indicated by Eq. (25). Furthermore, the proposed AFIM method involves less complex computations and is comparably easier (i.e., ranked fourth) to execute compared with other enhancement techniques.

Comparison of enhancement performance is also conducted for color images (Figs. 7, 8, and 9) to examine the enhancement effect produced by the proposed method. Figure 7a shows the original image with non-uniform illumination where the foreground (i.e., Man 4’s face) is darker than the background. The details of the image are lost, and the intensities in the dark regions are decreased, resulting in a dim region surrounding the man’s face. Enhancement is conducted to improve the quality of the original image and to ensure that the processed image exhibits homogeneous illumination.

The proposed AFIM method increases illumination in the dark region (i.e., around the man’s face) without causing any intensity saturation, in contrast to FQM. The image enhanced by the proposed method has better illumination and improved image details without any color saturation. The intensifier and de-intensifier presented in Eqs. (21) and (22) are utilized in the color enhancement process to ensure that the enhanced image appears natural with more homogeneous illumination. In addition, the proposed AFIM method produces an enhanced image with the highest \(Q,\,C\) and PL of 0.78, 153.39, and 118.68, respectively. The proposed algorithm outperforms the other enhancement algorithms, produces an image with uniform illumination and less noise, and increases image clarity.

Tone mapping (TM) produces a natural-looking enhanced image, comparable to the image enhanced by the proposed AFIM method. However, the TM operator over-enhances the existing bright region in the image, thereby producing an over-bright region in the background of the image. BPDFHE is able to improve overall image illumination; however, the foreground is darker than the rest of the regions in the image. BPDFHE also increases contrast and attains the second highest \(C\), which is 115.06.

Another example of non-uniform illumination is depicted in Fig. 8. The bright regions are mainly distributed in the foreground (i.e., around Man 5’s face), and the background of the image is dark. Although this figure clearly shows that most of the methods enhance the original image, over-enhancement still occurs in the existing bright regions of the images produced by DCT, BPDFHE, TM, and GC. Although FQM de-enhances the bright region accumulated on Man 5’s face, the enhanced image appears unnatural and more blurred than the original image presented in Fig. 8a. This finding is supported by the fact that FQM has the lowest value of \(Q\), which is only 0.66. The proposed method, on the other hand, has a \(Q\) value of 0.90.

Figure 9 presents another example of an image with non-uniform illumination. The visual appearance in this figure indicates that the proposed AFIM method produces a better-enhanced image in which the intensity values in the bright region are decreased accordingly and important features are maintained. The saturation intensifier and de-intensifier are applied with Eqs. (21) and (22) for the dark and bright regions, respectively. A natural-looking enhanced image is attained, as supported by the second highest value of \(Q\), which is 0.82.

The discrete cosine transform (DCT), FQM, and GC approaches enhance the existing bright regions in the image background, causing the background to be over-enhanced and blurred. The edges and details of the image appear deteriorated, resulting in lower values of \(Q\) and \(C\) compared with the enhanced image obtained through the proposed method. TM produces the brightest image; however, the image appears unnatural and saturated, especially in the foreground. The performance of the proposed AFIM method is further depicted in Table 4, “Appendix 1”, in which five color images with non-uniform illumination are tabulated. This table shows that the proposed AFIM method successfully enhances those color images without causing loss of details in the image.

The qualitative results presented in Figs. 7, 8, and 9 are supported by the average and standard deviation values presented in Table 2. As highlighted in Table 2, the proposed method attains the highest values of \(Q,\,C\), and PL. This result indicates that the proposed method successfully enhances the image while preserving the details and suppressing existing noise. The results for 150 color images are plotted in Figs. 14, 15, 16, and 17 for the different enhancement techniques. The results show that the proposed AFIM method obtains the highest \(C,\,Q\) and PL for most of the color images. In addition, the error bars in these figures show that the proposed method produces the best results, with the highest average and low standard deviation values for most of the analyses.

8 Conclusions

A novel enhancement technique based on the fuzzy intensity measure was proposed in this study to solve problems regarding degraded images with non-uniform illumination and low contrast. Image illumination was improved by applying a specific Gaussian function for dark and bright regions. The local contrast of the image was enhanced with a sigmoid function to ensure that the details of the image were preserved. The intensifier and de-intensifier were also applied to the saturation level of the color images to avoid over-saturation. Comparative analyses were performed to evaluate the performance of the proposed AFIM technique. The visual quality of the obtained images was compared, and their quantitative measures were computed. Qualitatively, the proposed technique enhanced the dark region without enhancing the existing noise. The qualitative findings were also supported by quantitative analysis, which indicated that the proposed technique outperformed the other techniques in terms of improving non-uniform image illumination. The findings reveal that the proposed method surpassed the other techniques and obtained the best image quality index. The proposed algorithm was comparably fast because it selectively enhanced the image according to its corresponding bright or dark regions. Existing noise was successfully attenuated compared with the other techniques. Therefore, the proposed technique is suitable for use in real-time applications.

Notes

In the GC approach, the value of gamma is chosen based on the optimization procedure as presented in Sect. 4. However, for this approach, the gamma values are incremented from 0.1 to 1.0, and the gamma value that produces the maximum \(Q\) is chosen as the optimum value of gamma.
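The gamma search described in this note can be sketched as follows. The increment of 0.1 is an assumption (only the range 0.1 to 1.0 is stated), the power-law transform on 8-bit intensities is the standard form of gamma correction, and the function names and the `score` callback are hypothetical; in practice `score` would be whatever implementation of \(Q\) is in use.

```python
import numpy as np

def gamma_correct(image, gamma):
    """Apply the power-law transform s = 255 * (r / 255) ** gamma to an 8-bit image."""
    normalized = np.asarray(image, dtype=np.float64) / 255.0
    corrected = 255.0 * normalized ** gamma
    return np.clip(np.round(corrected), 0, 255).astype(np.uint8)

def select_gamma(image, score, gammas=None):
    """Return (gamma, score) for the candidate gamma with the maximum score.

    `score(original, enhanced)` is a caller-supplied quality measure.
    """
    if gammas is None:
        # Assumed grid: 0.1, 0.2, ..., 1.0 (the step size is not stated in the text).
        gammas = [round(0.1 * k, 1) for k in range(1, 11)]

    best_gamma, best_score = None, float("-inf")
    for gamma in gammas:
        current = score(image, gamma_correct(image, gamma))
        if current > best_score:
            best_gamma, best_score = gamma, current
    return best_gamma, best_score

# Toy usage with a placeholder score (negative mean absolute difference),
# used only so that the example runs without a real quality index.
def toy_score(original, enhanced):
    diff = original.astype(np.float64) - enhanced.astype(np.float64)
    return -float(np.mean(np.abs(diff)))

toy_image = np.arange(256, dtype=np.uint8).reshape(16, 16)
print(select_gamma(toy_image, toy_score))
```

With the placeholder score, the search returns gamma = 1.0 because that value leaves the image unchanged; with a real quality index the selected gamma would depend on the image.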

References

Bumbaca, F., Smith, K.C.: A practical approach to image restoration for computer vision. Comput. Vis. Graph. Image Process. 42(2), 220–233 (1988)

Huang, K.-Q., Wang, Q., Wu, Z.-Y.: Natural color image enhancement and evaluation algorithm based on human visual system. Comput. Vis. Image Underst. 103(1), 52–63 (2006)

Chan, F.W.Y.: Measurement sensitivity enhancement by improved reflective computer vision technique for non-destructive evaluation. NDT & E Int. 43(3), 210–215 (2010)

Xue-bo, J., Hai-jiang, Z., Jing-jing, D., Ya-ming, W.: S-Retinex brightness adjustment for night-vision image. Procedia Eng. 15, 2571–2576 (2011)

Shieh, W.-Y., Huang, J.-C.: Falling-incident detection and throughput enhancement in a multi-camera video-surveillance system. Med. Eng. Phys. 34(7), 954–963 (2012)

Kim, S., Shin, J., Paik, J.: Real-time iterative framework of regularized image restoration and its application to video enhancement. Real-Time Imaging 9(1), 61–72 (2003)

Wu, Y., Sun, Y., Zhang, H.: A fast video illumination enhancement method based on simplified VEC model. Procedia Eng. 29, 3668–3673 (2012)

Bhaskar, H., Singh, S.: Live cell imaging: a computational perspective. J. Real-Time Image Process. 1(3), 195–212 (2007)

Feng, J., Xiong, N., Yang, L., Yang, Y.: A low distortion image enhancement scheme based on multi-resolutions analysis in next generation network. Multimed. Tools Appl. 56(2), 227–243 (2012)

Hasikin, K., Isa, N.A.M.: Fuzzy enhancement for nonuniform illumination of microscopic Sprague Dawley rat sperm image. In: Medical Measurements and Applications Proceedings (MeMeA), 2012 IEEE International Symposium on, pp. 1–6 (2012)

Kimori, Y.: Mathematical morphology-based approach to the enhancement of morphological features in medical images. J. Clin. Bioinform. 1(1), 33 (2011)

Mittal, D., Kumar, V., Saxena, S., Khandelwal, N., Kalra, N.: Enhancement of the ultrasound images by modified anisotropic diffusion method. Med. Biol. Eng. Comput. 48(12), 1281–1291 (2010)

Shiraishi, J., Li, Q., Appelbaum, D., Doi, K.: Computer-aided diagnosis and artificial intelligence in clinical imaging. Semin. Nucl. Med. 41(6), 449–462 (2011)

Shiraishi, J., Li, Q., Appelbaum, D., Pu, Y., Doi, K.: Development of a computer-aided diagnostic scheme for detection of interval changes in successive whole-body bone scans. Med. Phys. 34(1), 25–36 (2007)

Lasaponara, R., Masini, N.: Image enhancement, feature extraction and geospatial analysis in an archaeological perspective. In: Lasaponara, R., Masini, N. (eds.) Satellite Remote Sensing. Remote Sensing and Digital Image Processing, vol. 16, pp. 17–63. Springer, Netherlands (2012)

Pan, X.-B., Brady, M., Bowman, A.K., Crowther, C., Tomlin, R.S.O.: Enhancement and feature extraction for images of incised and ink texts. Image Vis. Comput. 22(6), 443–451 (2004)

Ryu, C., Kong, S.G., Kim, H.: Enhancement of feature extraction for low-quality fingerprint images using stochastic resonance. Pattern Recognit. Lett. 32(2), 107–113 (2011)

Islam, M.R., Sayeed, M.S., Samraj, A.: Technology review: image enhancement, feature extraction and template protection of a fingerprint authentication system. J. Appl. Sci. 10(14), 1397–1404 (2010)

Baradarani, A., Jonathan Wu, Q.M., Ahmadi, M.: An efficient illumination invariant face recognition framework via illumination enhancement and DD-DTWT filtering. Pattern Recognit. 46(1), 57–72 (2013)

Chen, T., Wotao, Y., Xiang Sean, Z., Comaniciu, D., Huang, T.S.: Total variation models for variable lighting face recognition. IEEE Trans. Pattern Anal. Mach. Intell. 28(9), 1519–1524 (2006)

Eleyan, A., Ozkaramanli, H., Demirel, H.: Dual-tree and single-tree complex wavelet transform based face recognition. In: Signal Processing and Communications Applications Conference, 2009. SIU 2009. IEEE 17th, 9–11 April 2009, pp. 536–539 (2009)

Yun, E.-K., Cho, S.-B.: Adaptive fingerprint image enhancement with fingerprint image quality analysis. Image Vis. Comput. 24(1), 101–110 (2006)

Bal, U., Engin, M., Utzinger, U.: A multiresolution approach for enhancement and denoising of microscopy images. SIViP. (2013). doi:10.1007/s11760-013-0510-x

González, R.C., Woods, R.E.: Digital Image Processing. Prentice Hall, Englewood Cliffs, NJ (2002)

Cherifi, D., Beghdadi, A., Belbachir, A.H.: Color contrast enhancement method using steerable pyramid transform. SIViP 4(2), 247–262 (2010)

Hanmandlu, M., Jha, D.: An optimal fuzzy system for color image enhancement. IEEE Trans. Image Process. 15(10), 2956–2966 (2006)

Qing, W., Ward, R.K.: Fast image/video contrast enhancement based on weighted thresholded histogram equalization. IEEE Trans. Consum. Electron. 53(2), 757–764 (2007)

Chen Hee, O., Isa, N.A.M.: Quadrants dynamic histogram equalization for contrast enhancement. IEEE Trans. Consum. Electron. 56(4), 2552–2559 (2010)

Chen Hee, O., Isa, N.A.M.: Adaptive contrast enhancement methods with brightness preserving. IEEE Trans. Consum. Electron. 56(4), 2543–2551 (2010)

Sen, D., Pal, S.K.: Automatic exact histogram specification for contrast enhancement and visual system based quantitative evaluation. IEEE Trans. Image Process. 20(5), 1211–1220 (2011)

Joung-Youn, K., Lee-Sup, K., Seung-Ho, H.: An advanced contrast enhancement using partially overlapped sub-block histogram equalization. IEEE Trans. Circuits Syst. Video Technol. 11(4), 475–484 (2001)

Chao, W., Zhongfu, Y.: Brightness preserving histogram equalization with maximum entropy: a variational perspective. IEEE Trans. Consum. Electron. 51(4), 1326–1334 (2005)

Sengee, N., Sengee, A., Heung-Kook, C.: Image contrast enhancement using bi-histogram equalization with neighborhood metrics. IEEE Trans. Consum. Electron. 56(4), 2727–2734 (2010)

Ibrahim, H., Kong, N.S.P.: Brightness preserving dynamic histogram equalization for image contrast enhancement. IEEE Trans. Consum. Electron. 53(4), 1752–1758 (2007)

Lim, S., Isa, N.M., Ooi, C., Toh, K.: A new histogram equalization method for digital image enhancement and brightness preservation. SIViP. (2013). doi:10.1007/s11760-013-0500-z

Thomas, G., Flores-Tapia, D., Pistorius, S.: Histogram specification: a fast and flexible method to process digital images. IEEE Trans. Instrum. Meas. 60(5), 1565–1578 (2011)

Wang, C., Peng, J., Ye, Z.: Flattest histogram specification with accurate brightness preservation. Image Process. IET 2(5), 249–262 (2008)

Abdullah-Al-Wadud, M., Kabir, M.H., Dewan, M.A.A., Oksam, C.: A dynamic histogram equalization for image contrast enhancement. IEEE Trans. Consum. Electron. 53(2), 593–600 (2007)

Duan, J., Bressan, M., Dance, C., Qiu, G.: Tone-mapping high dynamic range images by novel histogram adjustment. Pattern Recognit. 43(5), 1847–1862 (2010)

Land, E.: The retinex. Am. Sci. 52(2), 247–264 (1964)

Bai, X., Zhou, F., Xue, B.: Image enhancement using multi scale image features extracted by top-hat transform. Opt. Laser Technol. 44(2), 328–336 (2012)

Eschbach, R.: Image-dependent exposure enhancement, Google Patents (1995)

Pal, S.K., King, R.A.: Image enhancement using smoothing with fuzzy sets. IEEE Trans. Syst. Man Cybern. 11(7), 494–501 (1981)

Chen, H.-C., Wang, W.-J.: Efficient impulse noise reduction via local directional gradients and fuzzy logic. Fuzzy Sets Syst. 160(13), 1841–1857 (2009)

Cheng, H.D., Xu, H.: A novel fuzzy logic approach to mammogram contrast enhancement. Inf. Sci. 148(1–4), 167–184 (2002)

Sheet, D., Garud, H., Suveer, A., Mahadevappa, M., Chatterjee, J.: Brightness preserving dynamic fuzzy histogram equalization. IEEE Trans. Consum. Electron. 56(4), 2475–2480 (2010)

Hanmandlu, M., Verma, O.P., Kumar, N.K., Kulkarni, M.: A novel optimal fuzzy system for color image enhancement using bacterial foraging. IEEE Trans. Instrum. Meas. 58(8), 2867–2879 (2009)

Verma, O.P., Kumar, P., Hanmandlu, M., Chhabra, S.: High dynamic range optimal fuzzy color image enhancement using Artificial Ant Colony System. Appl. Soft Comput. 12(1), 394–404 (2012)

Vlachos, I., Sergiadis, G.: The role of entropy in intuitionistic fuzzy contrast enhancement. In: Melin, P., Castillo, O., Aguilar, L., Kacprzyk, J., Pedrycz, W. (eds.) Foundations of Fuzzy Logic and Soft Computing. Lecture Notes in Computer Science, vol. 4529, pp. 104–113. Springer, Berlin (2007)

Vlachos, I.K., Sergiadis, G.D.: Parametric indices of fuzziness for automated image enhancement. Fuzzy Sets Syst. 157(8), 1126–1138 (2006)

Cheng, H.D., Chen, J.-R.: Automatically determine the membership function based on the maximum entropy principle. Inf. Sci. 96(3–4), 163–182 (1997)

Hasikin, K., Isa, N.A.M.: Enhancement of the low contrast image using fuzzy set theory. In: Computer Modelling and Simulation (UKSim), 2012 UKSim 14th International Conference on, 28–30 March 2012, pp. 371–376 (2012)

Hasikin, K., Isa, N.A.M.: Adaptive fuzzy contrast factor enhancement technique for low contrast and nonuniform illumination images. SIViP. (2012). doi:10.1007/s11760-012-0398-x

Aarnink, R., Pathak, S.D., de la Rosette, J.J.M.C.H., Debruyne, F.M.J., Kim, Y., Wijkstra, H.: Edge detection in prostatic ultrasound images using integrated edge maps. Ultrasonics 36(1–5), 635–642 (1998)

Toet, A., Lucassen, M.P.: A new universal colour image fidelity metric. Displays 24(4–5), 197–207 (2003)

Jain, A.K.: Fundamentals of Digital Image Processing. Prentice Hall, Englewood Cliffs, NJ (1989)

Additional information

This project is supported by the Ministry of Science, Technology & Innovation Malaysia through Sciencefund Grant entitled “Development of Computational Intelligent Infertility Detection System based on Sperm Motility Analysis”.

Appendices

Appendix 1

Appendix 2

Appendix 3

1.1 Quantitative analysis for grayscale images

Appendix 4

1.1 Quantitative analysis for color images

Cite this article

Hasikin, K., Mat Isa, N.A. Adaptive fuzzy intensity measure enhancement technique for non-uniform illumination and low-contrast images. SIViP 9, 1419–1442 (2015). https://doi.org/10.1007/s11760-013-0596-1

DOI: https://doi.org/10.1007/s11760-013-0596-1