Abstract

In many real classification problems a monotone relation between some predictors and the classes may be assumed when higher (or lower) values of those predictors are related to higher levels of the response. In this paper, we propose new boosting algorithms, based on LogitBoost, that incorporate this isotonicity information, yielding more accurate and easily interpretable rules. These algorithms are based on theoretical developments that consider isotonic regression. We show the good performance of these procedures not only on simulations, but also on real data sets coming from two very different contexts, namely cancer diagnostic and failure of induction motors.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

The classical problem of classifying observations in one of K groups using a rule defined from a sample of observations for which the true group is known (training sample) is known as supervised classification. This problem has been receiving a lot of attention in the last decades due to its applicability in a very wide range of problems in different scientific fields such as economy, engineering, medicine or molecular biology. In fact, it could be said that the problem can appear in any scientific field. As a consequence, many methods and techniques to build classification rules have been developed, from the classic linear or quadratic rules to the more recent K Nearest Neighbors (KNN), Support Vector Machines (SVM) or decision trees. Even more recently, methods based on so-called weak classifiers (Schapire 1990) have been developed and a family of procedures called boosting procedures has been defined.

In practice, it is quite frequent to have some a priori knowledge on monotone relations among the predictors and the response groups. For example, in a cancer trial, it may be known that higher values of a predictor are associated with more advanced stages of the illness (Conde et al. 2012). One way of representing this information is to include order restrictions among the means of the predictors in the groups. This scheme allows to define rules (Fernández et al. 2006; Conde et al. 2012) that improve the performance of linear discriminant rules. Bootstrap estimators of the performance of these rules have been provided in Conde et al. (2013) and the rules have been implemented in the R package dawai presented in Conde et al. (2015).

There are some other procedures where isotonicity has been considered to define classification procedures, or regression models that can be adapted for classification. In Auh and Sampson (2006) a logistic rule is defined isotonizing the boundaries of the original rule. Ghosh (2007) proposes a semiparametric regression model, first smoothing and then isotonizing the components, to evaluate biomarkers, while Meyer (2013) considers a more general semiparametric additive constrained regression model with more efficient estimation and inferential procedures. Another approach is that of Hofner et al. (2016), where a unified framework to incorporate restrictions for univariate and bivariate effects estimates using P-splines is proposed. Finally, Pya and Wood (2014) considers a penalized spline method for generalized shape-restricted (under monotonicity and concavity) additive models and Chen and Samworth (2016) proposes a non-parametric estimator of the additive components under the same setting. The problem of building isotonic classification rules has also attracted the attention of the machine learning community where it is known as monotonic ordinal classification. Many of the procedures developed from that area of research require that the training data set satisfies the monotonicity relationships and others consider preprocessing algorithms to “monotonize” the data set. See Cano and García (2017) and Cano et al. (2019) and references therein for a complete overview of these procedures and how they work. Here, we will define procedures that neither need any preprocessing of the training data nor are obtained modifying a previous rule to obtain monotonicity. Their performance properties will come from the direct incorporation of monotonicity in their design.

The purpose of this paper is to develop isotonic boosting algorithms yielding classification rules that satisfy the monotone relations between the predictors and the response. Boosting algorithms are widely used nowadays. They are a family of iterative procedures that combine simple rules that may perform slightly better than random, to build an ensemble where the performance of the simple members is improved (“boosted”). Among these algorithms, we have decided to select LogitBoost (Friedman et al. 2000) as starting procedure in the development of our algorithms for the following reasons. In that paper, the authors explain the performance of AdaBoost, the first boosting algorithm of practical use (Freund and Schapire 1996, 1997), considering the algorithm as a stage-wise descent procedure in an exponential loss function. In the same paper, they also design LogitBoost replacing the loss function by a logistic loss function, so that the binomial log-likelihood is directly optimized. This leads to changes in the weights assigned to misclassified observations in such a way that the misclassified observations that are further from the boundaries are given in LogitBoost a smaller weight. Consequently, the procedure is less sensitive to outliers and noise than AdaBoost (Dietterich 2000; McDonald et al. 2003) and we expect it to perform better in monotonic classification problems.

In this paper we develop two isotonic boosting procedures for binary classification based on LogitBoost, yielding rules that follow the known monotone relations in the problem at hand and are therefore more easily interpretable and efficient. The first procedure, that we call Simple Isotonic LogitBoost (SILB), selects in each step the variable that best fits the appropriate weighted regression problem taking isotonicity into account where needed. In the second, Multiple Isotonic LogitBoost (MILB), the whole problem is refitted in each step, so that all predictors change their role in the rule in each step, also considering isotonicity where needed.

Moreover, multiclass rules are also developed in this paper. Instead of decomposing this problem in multiple binary problems, which makes more difficult the consideration of isotonicity, we develop theoretical results that allow us to define procedures for the multinomial log-likelihood, based on two ordinal logistic models, namely the adjacent categories model and the cumulative probabilities model (see Agresti 2010). For these two models again we develop simple and multiple isotonic LogitBoost algorithms that, following the previous notation, we call Adjacent-categories Simple Isotonic LogitBoost (ASILB), Adjacent-categories Multiple Isotonic LogitBoost (AMILB), Cumulative probabilities Simple Isotonic LogitBoost (CSILB) and Cumulative probabilities Multiple Isotonic LogitBoost (CMILB).

The layout of the paper is as follows. In Sect. 2 we recall the LogitBoost algorithm and present the new boosting algorithms developed from it, both for the binary and multiclass problems. We devote Sect. 3 to simulation studies showing that the new rules perform better than other up-to-date procedures in different scenarios. In Sect. 4 the results for two real data problems are presented. Finally, the discussion and future developments are exposed in Sect. 5.

2 Isotonic boosting classification rules

Let us consider \(K\ge 2\) classes and a training sample \(\{(\mathbf{x} _i, y_i), i = 1, \dotsc , n\}\), where \(\mathbf{x} _i\) is a d-dimensional vector of predictors and \(y_i \in \{1,\dots ,K\}\) the variable identifying the class. The aim is to classify a new observation \(\mathbf{x} \) into one of the K classes.

Moreover we assume that it is known that higher values of some of the predictors are associated to higher values of the class variable y (monotone increasing) and that higher values of other predictor variables are associated with lower values of y (monotone decreasing). We denote as I the set of indexes of the former set of predictors and as D the set of indexes of the latter one. Obviously, \(I\cup D\subseteq \{1,\dots ,d\}\).

2.1 Binary classification rules

Here, we develop two new boosting algorithms based on LogitBoost that incorporate the known monotonicity information between the predictors and the response. Let us define \(y_i^*=y_i-1 \in \{0,1\}, i = 1,\dotsc ,n\) and \(p(\mathbf{x} )=p(y^*=1|\mathbf{x} )\). For the two-class problem LogitBoost is based on the logistic model:

The general version of a LogitBoost procedure considers \(F(\mathbf{x} )=\sum _{m=1}^M f_m(\mathbf{x} )\) where M is the number of iterations of the procedure and \(f_m(\mathbf{x} )\) are the functions obtained in each iteration. In the most common versions of LogitBoost, each \(f_m(\mathbf{x} )\) function depends on a single predictor. These univariate functions make it easier to incorporate the isotonicity restrictions. For our first isotonic procedure, SILB, in model (1) we consider \(F(\mathbf{x} )=\sum _{m=1}^M f_m(x_{j_m})\), while for MILB in (1) we consider \(F(\mathbf{x} )=\sum _{j=1}^d f_j(x_j)\) where d is the number of predictors. In both cases, we impose that \(f_m(x_s)\) or \(f_j(x_s)\) is monotone increasing if \(s\in I\) or decreasing if \(s\in D\). These constraints imply that higher values of variables in I are associated with higher values of \(p(\mathbf{x} )\), i.e. of y, and that higher values of variables in D are associated with lower values of \(p(\mathbf{x} )\), i.e. of y.

Let us begin recalling the LogitBoost algorithm:

LogitBoost

-

1.

Start with weights \(w_i =1/n, i=1,\dotsc ,n,\) \(F(\mathbf{x} )=0\) and probability estimates \(p(\mathbf{x} _i)=\frac{1}{2}\) for \(i=1,\dotsc ,n.\)

-

2.

Repeat for \(m=1,\dotsc ,M\):

-

(a)

Compute \(w_i = p(\mathbf{x} _i)(1-p(\mathbf{x} _i)),z_i = \frac{y_i^*-p(x_i)}{w_i}, i=1,\dotsc ,n.\)

-

(b)

Fit \(f_m(\mathbf{x} )\) by a weighted least-squares regression of \(z_i\) to \(\mathbf{x} _i\) using weights \(w_i\).

-

(c)

Update \(F(\mathbf{x} ) = F(\mathbf{x} ) + f_m(\mathbf{x} )\) and \(p(\mathbf{x} )=\frac{1}{1+e^{-F(\mathbf{x} )}}\).

-

(a)

-

3.

Classify in class 0 if \(p(\mathbf{x} ) < 0.5\), in class 1 if \(p(\mathbf{x} ) \ge 0.5\).

Although \(f_m(\mathbf{x} )\) in 2(b) can be obtained using any weighted least-squares regression method, Friedman et al. (2000) uses 2 and 8 terminal node regression trees when comparing the performances of LogitBoost and other algorithms. For simplicity, we will use 2 terminal node trees (stumps) when comparing the performances. If stumps are used, only one predictor variable is incorporated in each \(f_m(\mathbf{x} )\). In this way we can reformulate the algorithm to incorporate the additional ordering information.

Next, two isotonic algorithms are proposed. We first present SILB, an algorithm based on LogitBoost, with 2(b) modified to incorporate the additional information as follows. For those variables j for which an monotonicity restriction holds (i.e. \(j\in I\cup D\)) a weighted isotonic regression, using the well known PAVA algorithm (Barlow et al. 1972; Robertson et al. 1988), is fitted instead of the usual weighted regression stump. Then, as usual, we choose for \(f_m(\mathbf{x} )\) the variable yielding the best weighted least squares fit among all.

Simple Isotonic LogitBoost (SILB)

-

1.

Start with weights \(w_i =1/n, i=1,\dotsc ,n,\) \(F(\mathbf{x} )=0\) and probability estimates \(p(\mathbf{x} _i)=\frac{1}{2}\) for \(i=1,\dotsc ,n.\)

-

2.

Repeat M times:

-

(a)

Compute \(w_i = p(\mathbf{x} _i)(1-p(\mathbf{x} _i)),z_i = \frac{y_i^*-p(\mathbf{x} _i)}{w_i}, i=1,\dotsc ,n. \)

-

(b)

Repeat for \(j=1,\dotsc ,d\):

-

If \(j \in I\cup D\), fit a weighted isotonic regression \(f_j(x)\) of \(z_i\) to \(x_{ij}\) using weights \(w_i\).

-

If \(j \notin I\cup D\), fit a 2 terminal node regression stump \(f_j(x)\) by weighted least-squares of \(z_i\) to \(x_{ij}\) using weights \(w_i\).

-

-

(c)

Consider \(h=arg\text { }min_{j\in \{1,\dotsc ,d\}} \sum \nolimits _{i=1}^n w_i(z_i-f_j(x_{ij}))^2\), and update \(F(\mathbf{x} ) = F(\mathbf{x} ) + f_h(x_h)\) and \(p(\mathbf{x} )=\frac{1}{1+e^{-F(\mathbf{x} )}}\).

-

(a)

-

3.

Classify in class 0 if \(p(\mathbf{x} ) < 0.5\), in class 1 if \(p(\mathbf{x} ) \ge 0.5\).

As in LogitBoost, the performance of the algorithm is expected to improve with the number of iterations M, although (Mease and Wyner 2008) suggests that LogitBoost can also be prone to overfitting if M is large enough, which could as well happen with SILB.

Now we present MILB. This algorithm is also based on LogitBoost but in this case in each step we fit the whole model using a backfitting algorithm (Härdle and Hall 1993) and considering weighted isotonic regression where needed. In LogitBoost (and subsequently in SILB), each new value of \(F(\mathbf{x} )\) was the sum of the old value of \(F(\mathbf{x} )\) plus the weighted expectation of the Newton step (see Friedman et al. 2000). For this algorithm, as in Hastie and Tibshirani (2014), each new value of \(F(\mathbf{x} )\) is the weighted expectation of the sum of the old value of \(F(\mathbf{x} )\) plus the Newton step.

Multiple Isotonic LogitBoost (MILB)

-

1.

Start with weights \(w_i =1/n, i=1,\dotsc ,n\), \(F(\mathbf{x} )=0\) and probability estimates \(p(\mathbf{x} _i)=\frac{1}{2}\) for \(i=1,\dotsc ,n.\)

-

2.

Repeat until convergence:

-

(a)

Compute \(w_i = p(\mathbf{x} _i)(1-p(\mathbf{x} _i))\), \(z_i = F(\mathbf{x} _i) + \frac{y_i^*-p(\mathbf{x} _i)}{w_i}, i=1,\dotsc ,n.\)

-

(b)

Using the backfitting algorithm, fit an additive weighted regression \(F(\mathbf{x} )=\sum _{j=1}^d f_j(x_j)\) of \(z_i\) to \(\mathbf{x} _i\) with weights \(w_i\) with \(f_j(x)\) being the isotonic regression if \(j \in I\cup D\), or the linear regression if \(j \notin I\cup D\), \(j=1,\dotsc ,d\).

-

(c)

Update \(p(\mathbf{x} )=\frac{1}{1+e^{-F(\mathbf{x} )}}\).

-

(a)

-

3.

Classify in class 0 if \(p(\mathbf{x} ) < 0.5\), in class 1 if \(p(\mathbf{x} ) \ge 0.5\).

Notice that, as \(F(\mathbf{x} )\) is now the addition of a fixed number of d terms, overfitting is not expected to appear.

2.2 Multiclass classification

Let us denote \(p_k(\mathbf{x} )=P(y=k|\mathbf{x} )\), \(y_k^*=I_{\left[ y=k\right] },k=1,\dotsc ,K\), and I and D as in Sect. 2.1. The most common way of tackling multiclass classification problems is to split the problem in multiple binary problems. Among the several ways of doing this, the most common (Allwein et al. 2000; Holmes et al. 2002) are: One-against-rest, where K binary classifications for each class against all others are considered, and One-against-one, which considers every pair of classes and performs \(\left( {\begin{array}{c}K\\ 2\end{array}}\right) \) binary classifications. However, direct multiclass procedures that fit a single model can also be used. They exhibit a performance comparable to (if not better than) those of these multiple binary problems strategies, and are more appropriate for the incorporation of the information about monotone relationships since they allow considering the full monotonicity existing among all the classes and not only the pairwise order. For these reasons, in this paper we will consider multiclass procedures instead of combinations of binary ones.

Moreover, models such as the baseline category model (see Agresti 2002) where each category is compared with a baseline are not appropriate to incorporate monotone relationships among the categories. In our setting, the response variable is ordinal and models where this characteristic is taken into account should be considered. The two most widely used models for ordinal responses are the cumulative logit and the adjacent-categories logit models (Agresti 2010).

The adjacent-categories logit model is

while the cumulative logit model is

It is known (see Agresti 2010, p. 70) that the cumulative logit model (3) has structural problems as the cumulative probabilities may be out of order at some setting of the predictors. To avoid this problem we will adopt, as in the above reference, the parallelism restriction for this model and assume that, in this case, \(F_k(\mathbf{x} )=\alpha _k+F(\mathbf{x} )\), \( k=2,\ldots ,K\).

The choice between these models is an interesting question that has been considered not only in Agresti (2010) but also in more recent books as Fullerton and Xu (2016). There are some technical reasons for preferring each model. For example, in the cumulative probabilities model the sample size used for fitting each equation does not change among equations, while the adjacent categories model belongs to the exponential family. However, according to the above-mentioned authors and from a practical point of view, the main reason for choosing among the models is the probability of interest in the problem. If the individual response categories are of substantive interest (as, for example, with Likert scales) the adjacent categories model is recommended, while the cumulative probabilities model is preferred when these cumulative probabilities are of interest. For these reasons, both models have been used in health research (Marshall 1999; Fullerton and Anderson 2013), cumulative probabilities models in, among many other, studies about worker attachment (Halaby 1986) or attitudes towards science (Gauchat 2011), and adjacent categories models in, for example, occupational mobility (Sobel et al. 1998) or credit scoring (Masters 1982).

Now, we develop our isotonic multiclass boosting procedures. We start with the adjacent-categories model (2). For this model the constraints imply that the higher the values of variables in I the greater the \(p_k(\mathbf{x} )\) with respect to \(p_{k-1}(\mathbf{x} )\), and the higher the values of variables in D the lower the \(p_k(\mathbf{x} )\) with respect to \(p_{k-1}(\mathbf{x} )\), so that high values of variables in I are associated with high values of y and high values of variables in D are associated with low values of y. As in the binary case, we propose two procedures for this model. These proposals require the development of theoretical results that can be found in “Appendix A.1”.

Our first proposal for this model is the ASILB procedure, where we assume that \(F_k(\mathbf{x} )=\sum _{m=1}^M f_{km}(x_{j_{km}})\), imposing \(f_{km}(x)\) to be isotonic if \(j_{km}\in I\cup D\) and we add terms fitting the new quasi-Newton steps according to the results in “Appendix A.1”. The reason to use quasi-Newton steps instead of full Newton steps in this case is the use of stumps when the predictor \(j\notin I\cup D\).

Adjacent-categories Simple Isotonic LogitBoost (ASILB)

-

1.

Start with \(F_k(\mathbf{x} )=0, k=1,\dots ,K,\) and \(p_k(\mathbf{x} )=\frac{1}{K},k=1,\dotsc ,K\).

-

2.

Repeat M times:

-

(a)

Let \(\mathbf{W} _i\) be a diagonal \((K-1)\times (K-1)\) matrix, where each diagonal element is \(W_{i_{kk}}= (\sum _{j=k}^K p_j(\mathbf{x} _i))(1-\sum _{j=k}^K p_j(\mathbf{x} _i))\), \(2\le k \le K\). Define also vector \(\mathbf{S} _i\) with \(S_{ik}=\sum _{j=k}^K (y_{ij}^*-p_j(\mathbf{x} _i))\) for \(2\le k \le K\) and compute \(\mathbf{z} _i= \mathbf{W} _i^{-1}{} \mathbf{S} _i, i=1,\dotsc ,n\).

-

(b)

For \(k=2,\dotsc ,K:\) Repeat for \(j=1,\dotsc ,d\):

-

If \(j \in I\cup D\), fit a weighted isotonic regression \(f_j(x)\) of \(z_{ik}\) to \(x_{ij}\) using weights \(W_{i_{kk}}\).

-

If \(j \notin I\cup D\), fit a 2 terminal node regression stump \(f_j(x)\) by weighted least-squares of \(z_{ik}\) to \(x_{ij}\) using weights \(W_{i_{kk}}\).

Consider \(h=arg\text { }min_{j\in \{1,\dotsc ,d\}} \sum \limits _{i=1}^n W_{i_{kk}}(z_{ik}-f_j(x_{ij}))^2\), and update \(F_k(\mathbf{x} ) = F_k(\mathbf{x} ) + f_h(x_h)\).

-

-

(c)

Update \(p_k(\mathbf{x} )=\frac{\exp (\sum _{j=1}^k F_j(\mathbf{x} ))}{\sum _{k=1}^K \exp (\sum _{j=1}^k F_j(\mathbf{x} ))},k=1,\dotsc ,K.\)

-

(a)

-

3.

Classify in class \(h=arg\text { }max_{k\in \{1,\dotsc ,K\}} p_k(\mathbf{x} )\).

For our second proposal for model (2), AMILB, we assume that \(F_k(\mathbf{x} )=\sum _{j=1}^d f_{kj}(x_j)\), imposing \(f_{kj}(x)\) to be isotonic if \(j_{km}\in I\cup D\). As in MILB, we fit the whole model in each iteration using a backfitting algorithm and the results obtained in “Appendix A.1”. In this case, instead of using a quasi-Newton step, as in ASILB, we use the full Newton step, since in this algorithm we consider linear or isotonic regression for which non-diagonal weights can be easily included. For this reason in this algorithm the weight matrix is not diagonal.

Adjacent-categories Multiple Isotonic LogitBoost (AMILB)

-

1.

Start with \(F_k(\mathbf{x} )=0, k=1,\dots ,K,\) and \(p_k(\mathbf{x} )=\frac{1}{K},k=1,\dotsc ,K\). Let \(\mathbf{F} (\mathbf{x} )=[F_2(\mathbf{x} ),\dots , F_K(\mathbf{x} )]'.\)

-

2.

Repeat until convergence:

-

(a)

Let \(\mathbf{W} _i\) be a \((K-1)\times (K-1)\) matrix, where each element is:

$$\begin{aligned} W_{i_{km}}=\left\{ \begin{array}{l} (\sum _{j=m}^K p_j(\mathbf{x} _i)) (1-(\sum _{j=k}^K p_j(\mathbf{x} _i))\text {, if }m\ge k\\ (\sum _{j=k}^K p_j(\mathbf{x} _i)) (1-(\sum _{j=m}^K p_j(\mathbf{x} _i)) \text {, if }m < k \end{array}\right. \end{aligned}$$for \(2\le k,m \le K,\) and for \(i=1,\dotsc ,n\) compute \(\mathbf{z} _i=\mathbf{F} (\mathbf{x} _i) +\mathbf{W} _i^{-1} \mathbf{S} _i\) with \(\mathbf{S} _i\) the vector defined in step 2(a) of the ASILB algorithm .

-

(b)

Using the backfitting algorithm, fit an additive weighted regression \(\mathbf{F} (\mathbf{x} )=\sum _{j=1}^d f_j(x_j)\) of \(\mathbf{z} _i\) to \(\mathbf{x} _i\) with weights matrices \(\mathbf{W} _i\), where \(f_j(x)\) is the isotonic regression if \(j \in I\cup D\) or the linear regression if \(j \notin I\cup D\), \(j=1,\dotsc ,d\).

-

(c)

Update \(p_k(\mathbf{x} )=\frac{\exp (\sum _{j=1}^k F_j(\mathbf{x} ))}{\sum _{k=1}^K \exp (\sum _{j=1}^k F_j(\mathbf{x} ))}, k=1,\dotsc ,K.\)

-

(a)

-

3.

Classify in class \(h=arg\text { }max_{k\in \{1,\dotsc ,K\}} p_k(\mathbf{x} )\).

Now, we consider the cumulative model (3) and describe the corresponding CSILB and CMILB algorithms. In this case, due to the parallelism assumption, we have a single function \(F(\mathbf{x} )\) but we additionally have to estimate the \(\alpha _k\), \(k=2,\dots ,K\) parameters. For this reason the algorithms are more involved. Their theoretical justification can be found at “Appendix A.2”.

Cumulative probabilities Simple Isotonic LogistBoost (CSILB)

-

1.

Start with \(F(\mathbf{x} )=0\) and \(p_k(\mathbf{x} )=\frac{1}{K},k=1,\dotsc ,K,\) so that \(\alpha _k=-\log \frac{k-1}{K-k+1},k=2,\dotsc ,K\). Denote as \(\varvec{\alpha }=(\alpha _2,\dots ,\alpha _K)'\) and let \(\gamma _k(\mathbf{x} )=\sum _{j=k}^K p_j(\mathbf{x} ),k=1,\dotsc ,K\) with \(\gamma _{K+1}(\mathbf{x} )=0\).

-

2.

Repeat M times:

-

(a)

Compute \(w_i=\sum _{k=1}^K y_{k,i}^* [\gamma _k(\mathbf{x} _i)(1-\gamma _k(\mathbf{x} _i)) + \gamma _{k+1}(\mathbf{x} _i)(1-\gamma _{k+1}(\mathbf{x} _i))]\), \(s_i=\sum _{k=1}^K y_{k,i}^*[1-\gamma _k(\mathbf{x} _i) - \gamma _{k+1}(\mathbf{x} _i)],i=1,\dotsc ,n\) and \(z_i=w_i^{-1}s_i.\)

-

(b)

Repeat for \(j=1,\dotsc ,d\):

-

If \(j \in I\cup D\), fit a weighted isotonic regression \(f_j(x)\) of \(z_i\) to \(x_{ij}\) using weights \(w_i\).

-

If \(j \notin I\cup D\), fit a 2 terminal node regression stump \(f_j(x)\) by weighted least-squares of \(z_i\) to \(x_{ij}\) using weights \(w_i\).

Consider \(h=arg\text { }min_{j\in \{1,\dotsc ,d\}} \sum \nolimits _{i=1}^n w_i(z_i-f_j(x_{ij}))^2\), and update \(F(\mathbf{x} ) = F(\mathbf{x} ) + f_h(x_h)\).

-

-

(c)

Consider the \(K-1\) dimensional score vector \(\mathbf{S} =(s_k)\) defined in (4) and the \((K-1)\times (K-1)\) Hessian matrix H defined in (5) to (7) in “Appendix A.2”. Compute \(\varvec{\alpha }=\varvec{\alpha }-\mathbf{H} ^{-1}{} \mathbf{S} \).

-

(d)

Update \(\gamma _k(\mathbf{x} ) = \frac{\exp (\alpha _k+F(\mathbf{x} ))}{1+\exp (\alpha _k+F(\mathbf{x} ))}\) for \(k=2,\dotsc ,K,\) and \(p_k(\mathbf{x} )=\gamma _k(\mathbf{x} )-\gamma _{k+1}(\mathbf{x} )\) for \(k=1,\dotsc ,K.\)

-

(a)

-

3.

Classify in class \(h=arg\text { }max_{k\in \{1,\dotsc ,K\}} p_k(\mathbf{x} )\).

Finally, we present the corresponding CMILB algorithm also based on the results developed at “Appendix A.2”. In this case the algorithm is more similar to CSILB than what happened for model (2).

Cumulative probabilities Multiple Isotonic LogistBoost (CMILB)

-

1.

As in CSILB, start with \(F(\mathbf{x} )=0\) and \(p_k(\mathbf{x} )=\frac{1}{K},k=1,\dotsc ,K,\) so that \(\alpha _k=-\log \frac{k-1}{K-k+1},k=2,\dotsc ,K\). Let \(\gamma _k(\mathbf{x} )=\sum _{j=k}^K p_j(\mathbf{x} ),k=1,\dotsc ,K,\) and \(\gamma _{K+1}(\mathbf{x} )=0\).

-

2.

Repeat until convergence:

-

(a)

Compute \(w_i\) and \(s_i\) as in CSILB step 2(a) and let \(z_i=F(x_i)+w_i^{-1}s_i\).

-

(b)

Using the backfitting algorithm, fit an additive weighted regression \(F(\mathbf{x} )=\sum _{j=1}^d f_j(x_j)\) of \(z_i\) to \(\mathbf{x} _i\) with weights \(w_i\) with \(f_j(x)\) being the isotonic regression if \(j \in I\cup D\), or the linear regression if \(j \notin I\cup D\), \(j=1,\dotsc ,d\).

-

(c)

Perform same computations as in CSILB step 2(c).

-

(d)

Update \(\gamma _k(\mathbf{x} )\) and \(p_k(\mathbf{x} )\) as in CSILB step 2(d).

-

(a)

-

3.

Classify in class \(h=arg\,max_{k\in \{1,\dotsc ,K\}} p_k(\mathbf{x} )\).

It can be checked that the multiclass simple LogitBoost rules (ASILB and CSILB) and the multiclass multiple LogitBoost rules (AMILB and CMILB) defined in this subsection coincide, respectively, in the case \(K=2\) with the corresponding SILB and MILB two class rules defined in the Sect. 2.1.

2.3 Rules implementation in R

We have developed an R (R Core Team 2019) package, called isoboost (Conde et al. 2020) and available from the Comprehensive R Archive Network (CRAN), which provides the functions asilb, csilb, amilb and cmilb implementing the correspoding procedures developed in this paper.

These functions depend on the R packages rpart (Therneau and Atkinson 2019) for performing weighted regression trees with functions rpart and predict.rpart, Iso (Turner 2019) for performing isotonic regression with function pava, and isotone (De Leeuw et al. 2009) for performing weighted isotonic regression with function gpava.

3 Simulation study

In this section we present the results of the simulation studies we have performed to evaluate the behavior of the new proposed methods. The full set of methods used in the simulation study can be found in Table 1 and the R code used to perform them is contained in the Supplementary material section of the paper.

These methods include not only standard up-to-date procedures such as LDA, logistic regression random forest, SVM or Logitboost, but also methods that account for monotonicity such as restricted LDA (Conde et al. 2012) or the monotone version of XGBoost (Chen and Guestrin 2016), which is one of the procedures more widely used nowadays. The R packages considered for these procedures are as follows. MASS (Venables and Ripley 2002) has been used for performing LDA, nnet (Venables and Ripley 2002) for performing LOGIT, randomForest (Liaw and Wiener 2002) for performing RF, e1071 (Meyer et al. 2019) for performing SVM, caTools (Jarek Tuszynski 2019) for performing LGB, dawai (Conde et al. 2015) for performing RLDA, and xgboost (Chen et al. 2019) for performing MONOXGB.

To compare the results from the procedures considered we have used several performance criteria. First, we have considered the total misclassification probability (TMP), i.e. the percentage of misclassified observations, which is equivalent to using a 0–1 loss and is the most commonly used performance measure. We have also considered the mean absolute error (MAE). MAE is a performance measure frequently used (see Cano et al. 2019) when evaluating monotone procedures as it also takes into account the “distance” between the observed and predicted values of the response. It is computed as \(MAE=\frac{1}{n}\sum _{i=1}^n\left| y_i-\hat{y}_i\right| \), where \(\hat{y}_i\) is the predicted class for observation i. Obviously, MAE equals TMP in the binary case. The third performance measure we have considered is the well-known area under the ROC curve (AUC). The multiclass version of this measure is defined in Hand and Till (2001). Notice that, while lower values of TMP and MAE indicate a better performance of the rule, the opposite happens for AUC.

Two different schemes of simulations designed following the lines considered in other related papers are considered. The first one is based on the adjacent categories model (2), while, for the second, a model where the means of the predictors in the groups follow a known order is considered. Table 2 shows the characteristics of each of these two schemes. Notice that, in each scheme, we generate a total of 100 datasets for each combination of number of classes K and predictors d.

The first scheme of simulations is based on model (2) instead of on the cumulative logit model (3) since the former is more flexible, not requiring the parallelism between the \(F_j(\mathbf{x} )\) functions. The functions considered are given in Table 3. Different monotone increasing additive functions in \(\mathbf{x} \) (polynomial, logarithmic, exponential) have been included. Simulations schemes similar to this one can be found, for example, in Bühlmann (2012), Chen and Samworth (2016), Dettling and Bühlmann (2003) and Friedman et al. (2000). For the predictors in this scheme we have generated d-dimensional vectors \(\mathbf{x} \) from a \(U(-1,1)^d\) distribution. For the response we consider the \(F_j(\mathbf{x} )\) functions and compute \(p_k(\mathbf{x} )\) for \(k=1,\dotsc ,K\) (see Table 2). Then, we generate a single observation from a multinomial distribution with probability vector \((p_1(\mathbf{x} _i),\dotsc ,p_K(\mathbf{x} _i))\) and take Y as the index where the observation appears.

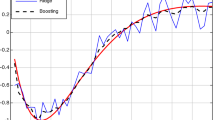

The mean results obtained with the three performance measures considered (TMP, MAE and AUC) are qualitatively similar and, for this reason, in the main text we only detail the TMP results while the full numerical values of the three measures are given in “Appendix A.3” to improve the readability of the paper. The TMP results for the first simulations scheme are shown in Fig. 1. Figure 1 shows, especially for \(K=2\) and \(K=5\), that the rules that incorporate additional information (ASILB, AMILB, CSILB, CMILB and RLDA) outperform not only the rules that do not account for this information (RF, SVM, LogitBoost, logistic regression and LDA) but also MONOXGB. We can also see that rules CSILB and CMILB perform as well as ASILB and AMILB although the cumulative logit model (3) under which the former algorithms were developed is not the one used for the simulations.

For the second set of simulations, uniform distributions for the predictors, as in Fang and Meinshausen (2012) or Bühlmann (2012), have been considered. Independent \(U(-1,1)+0.2h\) distributions are used to generate the predictors \(X_j\), \(j=1,\dots ,d\) for observations in class \(Y=h\), representing a simple order among the means all d predictors with respect to the K classes. Full details are given in Table 2.

As in the first scheme, the results with the three performance measures are qualitatively similar and we only detail here the mean TMP results while we include the full numerical mean results for all measures in “Appendix A.3”. The mean TMP results obtained in this second set of simulations are shown in Fig. 2.

We can see that the rules defined in this paper outperform clearly again the ones that do not take into account the additional information present in the data, and that they also improve over RLDA and MONOXGB, which are rules that take into account the monotonicity information available on the order of the means. In fact, under this scheme the new defined rules improve over RLDA more than they did for the first scheme where the difference with the new rules was smaller.

4 Real data examples

In this section we evaluate the performance of the new rules in two problems we have encountered in our statistical practice. The first appears in a medical context where we were trying to find a non-invasive diagnostic kit for bladder cancer. In the second, we were considering an interesting industrial engineering problem, namely the diagnostic of electrical induction motors.

4.1 Bladder cancer diagnostic

The correct diagnostic and classification of cancer patients in the appropriate class is essential to provide them with the correct treatment. It is also very relevant that this diagnostic can be done with a procedure as non-invasive as possible. These were the main motivations of the work that we developed with Proteomika S.L. and Laboratorios SALVAT S.A. as industrial and pharmaceutical partners. In that work we tried to build diagnostic kit for several types of cancer. The bladder cancer data, already considered in Conde et al. (2012), is analyzed here. Neither the values nor the names of the predictors considered are given for confidential issues.

The patients were initially classified in 5 classes. The first level is the control level (patients that did not have the illness) and the other four levels are Ta, T1G1, T1G3 and T2, corresponding to increasingly advanced levels of illness. As a first step, a pilot study with a moderate number of patients was developed previous to a possible larger scale multicenter study. First, we received a 141 patient dataset that we consider as training set and later on a second sample of 149 different patients that we will use as test set was provided. As the sample sizes in some groups were small compared with those of the others, and according to our partners, we decided to merge the initial 5 levels in 3 groups, namely the control group, the Ta+T1G1 group and the T1G3 \(+\) T2 group. Also according to information given by our partners we decided to consider 5 proteins as predictors. The mean values of each of these proteins were expected to increase with the illness level. The performance results for the test set obtained with the different methods considered throughout the paper appear in Table 4 with the best results marked in bold.

The results for RLDA, ASILB, AMILB, CSILB and CMILB, that take into account the order restrictions are much better than the rest. The main reason is that some of the predictors did not verify the restrictions in the training set. Therefore, we can see that these new methods are able to cope with a ‘bad’ training sample and obtain reasonable results without dropping any observations or manipulating the original data in that sample. This does not happen with MONOXGB. We can also see that the new methods also outperform RLDA.

4.2 Diagnostic of electrical induction motors

Electrical induction motors are widely used in industry. In fact, as Garcia-Escudero et al. (2017) points out, it is estimated that these motors account for 80% of energy converted in trade and industry. For this reason, and for the losses that a possible unexpected shutdown might yield, it is important to be able to detect possible failures in these machines as early as possible.

There are many techniques to diagnose a faulty motor, see Choudhary et al. (2019) and references therein. The most widespread is based on the spectral analysis of the stator current and is usually known as Motor Current Signature Analysis. The underlying principle is that motor faults cause an asymmetry that is reflected as additional harmonics in the current spectrum. Therefore, side bands around the main frequency are considered and amplitudes of these side bands around odd harmonics are measured as predictors of damage severity of the motor. Lower values of the amplitudes are expected to be related to higher levels of damage severity (see Fig. 3 for a graphic description of these variables). Four condition states were considered with state 1 corresponding to an undamaged motor, state 2 to a motor with an incipient fault, state 3 with a moderately damaged motor and state 4 with a severely damaged motor.

Here, we consider a sample of 280 motor observations, for which the real motor state was known, that were recorded at the Electrical Engineering Department laboratory of the Universidad de Valladolid. The distribution of these observations among the different groups appears in Table 5. Three variables, namely the amplitudes of the first lower and upper side bands around harmonic 1 and the first lower side band around harmonic 5 are the predictors. Three different classifications problems are solved. The first one considers the four different states, the second considers three states, joining states 2 and 3, and in the third the problem is to classify in group 1 vs the rest of groups. The second problem is interesting from the industrial point of view since in this case we are distinguishing healthy and incipiently or moderately damaged motors from those in a state that may cause operative problems. In the third undamaged motors are distinguished from those with any kind of damage. As no test sample is available here, the TMP estimators are obtained using tenfold crossvalidation. The code used for performing this analysis is contained in the Supplementary material section of the article while the data can be found in the isoboost (Conde et al. 2020) package.

The results for these three classification problems appear in Table 6. We can see again that the methods proposed in this paper perform very well in this case and that the best result is obtained with one of these new methods.

In this case the isotonic boosting methods perform much better than RLDA which in turn yields same results than LDA. This happens when the training sample fulfills the isotonicity restrictions imposed. Therefore, we can see that the new isotonic methods proposed here improve even when the training sample verifies the restrictions. We can also see that, in this example, the unrestricted methods perform well from the AUC point of view and that some of them perform slightly better than the monotone methods.

5 Discussion

In this paper, classification problems in scenarios where there are monotone relationships among predictors and classes are considered, and the idea of using isotonic regression, instead of standard regression, in boosting classification rules, is exploited.

From a methodological point of view, the specific contribution of this paper is the definition of novel rules developed for binary and multiclass classification problems. Theoretical results that endorse the classification rules based on maximum likelihood estimation are developed and simulations results, performed under different scenarios, validate the rules.

From a practical point of view, two real problems in different contexts have been efficiently solved using the new rules. In the first one, where cancer patients are classified in different diagnostic groups, a deficient training sample that does not verify the expected monotone relationships is available, so that standard procedures yield very high misclassification errors. In this case, the incorporation of the isotonicity information is compulsory, not only to get more efficient results but also to obtain meaningful ones. The new rules reduce the error rates between \(33\%\) and \(66\%\). In the second case, that deals with the diagnostic of induction motors, the training sample fulfills the expected monotone relationships and the error rates are quite low. Also in this case the new rules manage to reduce the error rates significantly.

The question of computational efficiency and scalability of statistical procedures is also interesting nowadays. We have performed a study recording the time consumed by the procedures considered in the paper in the simulations and we have found that the new procedures have a behavior similar to the other ones considered when the sample size of the dataset or the number of predictors is increased. When the number of classes increases we have found that CSILB and CMILB are also competitive when compared with previously existent procedures and more efficient than ASILB and AMILB.

Future developments that involve the procedures exposed here will include new ways of expressing the additional information, the incorporation of other type of additional information, such as concavity, and the consideration of other methodology, for example isotonic regression splines, in the design of the rules.

References

Agresti A (2002) Categorical data analysis. Wiley, Hoboken

Agresti A (2010) Analysis of ordinal categorical data, 2nd edn. Wiley, Hoboken

Allwein EL, Schapire RE, Singer Y (2000) Reducing multiclass to binary: a unifying approach for margin classifiers. J Mach Learn Res 1:113–141

Auh S, Sampson AR (2006) Isotonic logistic discrimination. Biometrika 93(4):961–972

Barlow RE, Bartholomew DJ, Bremner JM, Brunk HD (1972) Statistical inference under order restrictions. Wiley, New York

Bühlmann P (2012) Bagging, boosting and ensemble methods. In: Handbook of computational statistics, Springer. Chapter, vol 33, pp 985–1022

Cano JR, García S (2017) Training set selection for monotonic ordinal classification. Data Knowl Eng 112:94–105

Cano JR, Gutiérrez PA, Krawczyk B, Wozniak M, García S (2019) Monotonic classification: an overview on algorithms, performance measures and data sets. Neurocomputing 341:168–182

Chen Y, Samworth RJ (2016) Generalized additive and index models with shape constraints. J R Stat Soc B 78:729–754

Chen T, Guestrin C (2016) XGBoost: a scalable tree boosting system. In: Proceedings of the ACM SIGKDD international conference on knowledge discovery and data mining, pp 785–794

Chen T, He T, Benesty M, Khotilovich V, Tang Y, Cho H, Chen K, Mitchell R, Cano I, Zhou T, Li M, Xie J, Lin M, Geng Y, Li Y (2019) xgboost: Extreme Gradient Boosting. R package version 0.82.1 https://CRAN.R-project.org/package=xgboost

Choudhary A, Goyal D, Shimi SL, Akula A (2019) Condition monitoring and fault diagnosis of induction motors: a review. Arch Comput Methods Eng 1:2. https://doi.org/10.1007/s11831-018-9286-z

Conde D, Fernández MA, Rueda C, Salvador B (2012) Classification of samples into two or more ordered populations with application to a cancer trial. Stat Med 31(28):3773–3786

Conde D, Salvador B, Rueda C, Fernández MA (2013) Performance and estimation of the true error rate of classification rules built with additional information: an application to a cancer trial. Stat Appl Gen Mol Biol 12(5):583–602

Conde D, Fernández MA, Salvador B, Rueda C (2015) dawai: an R package for discriminant analysis with additional information. J Stat Softw 66(10):1–19

Conde D, Fernández MA, Rueda C, Salvador B (2020) isoboost: isotonic Boosting Classification Rules. R package version 1.0.0 https://CRAN.R-project.org/package=isoboost

De Leeuw J, Hornik K, Mair P (2009) Isotone optimization in R: pool-adjacent-violators algorithm (PAVA) and active set methods. J Stat Softw 32(5):1–24

Dettling M, Bühlmann P (2003) Boosting for tumor classification with gene expression data. Bioinformatics 19(9):1061–1069

Dietterich TG (2000) An experimental comparison of three methods for constructing ensembles of decision trees: bagging, boosting, and randomization. Mach Learn 40(2):139–157

Fang Z, Meinshausen N (2012) LASSO isotone for high-dimensional additive isotonic regression. J Comput Graph Stat 21(1):72–91

Fernández MA, Rueda C, Salvador B (2006) Incorporating additional information to normal linear discriminant rules. J Am Stat Assoc 101:569–577

Freund Y, Schapire RE (1997) A decision-theoretic generalization of on-line learning and an application to boosting. J Comput Syst Sci 55(1):119–139

Freund Y, Schapire RE (1996) Experiments with a new boosting algorithm. In: ICML’96 Proceedings of the thirteenth international conference on international conference on machine learning, pp 148–156

Friedman J, Hastie T, Tibshirani R (2000) Additive logistic regression: a statistical view of boosting. Ann Stat 28(2):337–407

Fullerton AS, Anderson KF (2013) The role of job insecurity in explanations of racial health inequalities. Sociol Forum 28(2):308–325

Fullerton AS, Xu J (2016) Ordered regression models: parallel, partial, and non-parallel alternatives. CRC Press, Boca Raton

Garcia-Escudero LA, Duque-Perez O, Fernandez-Temprano M, Moriñigo-Sotelo D (2017) Robust detection of incipient faults in VSI-fed induction motors using quality control charts. IEEE Trans Ind Appl 53(3):3076–3085

Gauchat G (2011) The cultural authority of science: public trust and acceptance of organized science. Public Understand Sci 20(6):751–770

Ghosh D (2007) Incorporating monotonicity into the evaluation of a biomarker. Biostatistics 8(2):402–413

Halaby CN (1986) Worker attachment and workplace authority. Am Sociol Rev 51(5):634–649

Hand DJ, Till RJ (2001) A simple generalisation of the area under the ROC curve for multiple class classication problems. Mach Learn 45:171–186

Härdle W, Hall P (1993) On the backfitting algorithm for additive regression models. Stat Neerl 47:43–57

Hastie T, Tibshirani R (2014) Generalized additive models. In: Wiley StatsRef: Statistics Reference Online. Wiley-Interscience. https://doi.org/10.1002/9781118445112.stat03141

Hofner B, Kneib T, Hothorn T (2016) A unified framework of constrained regression. Stat Comput 26(1–2):1–14

Holmes G, Pfahringer B, Kirkby R, Frank E, Hall M (2002) Multiclass alternating decision trees. In: European conference on machine learning. Springer, Berlin

Jarek Tuszynski (2019) caTools: tools: moving window statistics, GIF, Base64, ROC, AUC, etc. R package version 1.17.1.2 https://CRAN.R-project.org/package=caTools

Liaw A, Wiener M (2002) Classification and Regression by random. Forest R News 2(3):18–22

Marshall RJ (1999) Classification to ordinal categories using a search partition methodology with an application in diabetes screening. Stat Med 18:2723–2735

Masters GN (1982) A Rasch model for partial credit scoring. Psychometrika 47:149–174

McDonald R, Hand D, Eckley I (2003) An empirical comparison of three boosting algorithms on real data sets with artificial class noise. In: MSC2003: multiple classifier systems, pp 35–44

Mease D, Wyner A (2008) Evidence contrary to the statistical view of boosting. J Mach Learn Res 9:131–156

Meyer MC (2013) Semi-parametric additive constrained regression. J Nonparametr Stat 25(3):715–730

Meyer D, Dimitriadou E, Hornik K, Weingessel A, Leisch F (2019) e1071: Misc functions of the department of statistics, probability theory group (Formerly: E1071), TU Wien. R package version 1.7-1 https://CRAN.R-project.org/package=e1071

Pya N, Wood SN (2014) Shape constrained additive models. Stat Comput 25(3):543–559

R Core Team (2019) R: a language and environment for statistical computing. R Foundation for Statistical Computing, Vienna, Austria. https://www.R-project.org/

Robertson T, Wright FT, Dykstra R (1988) Order restricted statistical inference. Wiley, New York

Schapire RE (1990) The strength of weak learnability. Mach Learn 5(2):197–227

Sobel ME, Becker MP, Minick SM (1998) Origins, destinations, and association in occupational mobility. Am J Sociol 104(3):687–721

Therneau T, Atkinson B (2019) rpart: recursive partitioning and regression trees. R package version 4.1-15 https://CRAN.R-project.org/package=rpart

Turner R (2019). Iso: functions to perform isotonic regression. R package version 0.0-18 https://CRAN.R-project.org/package=Iso

Venables WN, Ripley BD (2002) Modern applied statistics with S, 4th edn. Springer, New York

Acknowledgements

The authors thank the Associate Editor and two anonymous reviewers for suggestions that led to this improved version of the paper.

Funding

Funding was provided by Ministerio de Economía, Industria y Competitividad, Gobierno de España (Grant No. MTM2015-71217-R).

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix

Appendix

1.1 A.1 Theoretical justification for algorithms under the adjacent categories model

Let \(\mathbf{x} \in \mathbb {R}^d,y\in \{1,\dots ,K\}, y_k^*=I_{\left[ y=k\right] },k=1,\dotsc ,K\) and assume the adjacent probabilities model (2). Denote further \(F_1(\mathbf{x} )=0\), so the a posteriori probabilities are:

Now, the expected log-likelihood is:

Conditioning on \(\mathbf{x} \), the score vector \(\mathbf{S}(x) =(s_k(\mathbf{x} ))\) for the population Newton algorithm is:

The Hessian is a \((K-1)\times (K-1)\) matrix, \(\mathbf{H} (\mathbf{x} )=(H_{km}(\mathbf{x} ))\), \(2\le k,m\le K\), where each element \(H_{km}(\mathbf{x} )\) is:

If \(\mathbf{W} (\mathbf{x} )=-diag(\mathbf{H} (\mathbf{x} ))\), a quasi-Newton update for the ASILB algorithm is:

The full Newton update, which is implemented in the AMILB algorithm, is:

1.2 A.2 Theoretical justification for algorithms under the cumulative probabilities model

In this case we have to update the function \(F(\mathbf{x} )\) and the parameters \(\alpha _k,k=2,\dotsc ,K\). We will perform a “two step” update, first on \(F(\mathbf{x} )\) and then on the \(\alpha \) parameters.

Let us denote \(\gamma _k(\mathbf{x} )=\sum _{j=k}^K p_j(\mathbf{x} ),k=1,\dotsc ,K\), and assume the cumulative probabilities model (3). For this model

with \(\gamma _1(\mathbf{x} )=1\) and \(\gamma _{K+1}(\mathbf{x} )=0.\)

First, we perform the \(F(\mathbf{x} )\) update. Here, as in the previous model, we consider a single observation as this step is used for updating the weights and the values to be adjusted. The expected log-likelihood is:

Conditioning on \(\mathbf{x} \), the first and second derivatives for the population Newton algorithm are:

and

so that the Newton update for \(F(\mathbf{x} )\) is

As for the parameters \(\alpha _k,k=2,\dotsc ,K\), let us denote \(\varvec{\alpha }=(\alpha _2,\dots ,\alpha _K)'\). Now, we use all the \(\mathbf{x} _i\) observations as in this case we are going to perform a Newton step for updating the \(\alpha \)’s which do not depend on \(\mathbf{x} \). Then, the log-likelihood is:

The score for the Newton algorithm \(\mathbf{S} =(s_2,\dots ,s_K)'\) is:

And the Hessian is a tri-diagonal symmetric \((K-1)\times (K-1)\) matrix \(\mathbf{H} =(H_{km})\) with \(H_{km} = \frac{\partial ^2 l(\varvec{\alpha })}{\partial \alpha _k\partial \alpha _m},\) for \(2\le k,m\le K\), such that

In these conditions the Newton update is \(\varvec{\alpha } \leftarrow \varvec{\alpha } - \mathbf{H} ^{-1} \mathbf{S} .\)

1.3 A.3 Full numerical results for the simulations performed

This subsection contains the Tables showing the full numerical mean results for TMP, MAE and AUC for the two sets of simulations. In Tables 7, 8 and 9 appear the results for the first set of simulations performed under model 2 for the different F functions appearing in Table 3. Tables 10, 11 and 12 contain the mean results for the simulations performed under the uniform order-restricted predictors scheme. In all cases the best results appear in bold. Notice that there are no results for CSILB and CMILB when \(K=2\) as in that case those algorithms coincide with ASILB and AMILB. For this case the TMP and MAE values also coincide. They are given in both tables for completeness.

Rights and permissions

About this article

Cite this article

Conde, D., Fernández, M.A., Rueda, C. et al. Isotonic boosting classification rules. Adv Data Anal Classif 15, 289–313 (2021). https://doi.org/10.1007/s11634-020-00404-9

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11634-020-00404-9