Abstract

Based on the memoryless BFGS quasi-Newton method, a family of three-term nonlinear conjugate gradient methods are proposed. For any line search, the directions generated by the new methods are sufficient descent. Using some efficient techniques, global convergence results are established when the line search fulfills the Wolfe or the Armijo conditions. Moreover, the r-linear convergence rate of the methods are analyzed as well. Numerical comparisons show that the proposed methods are efficient for the unconstrained optimization problems in the CUTEr library.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

In this paper, we consider the unconstrained optimization problem

where \(f{:}\,\mathbb {R}^n\rightarrow \mathbb {R}\) is continuously differentiable and its gradient g(x) is available. Among different kinds of numerical methods for solving problem (1.1), nonlinear conjugate gradient (CG) methods comprise a class of efficient approaches, especially for large-scale problems, due to their low memory requirements and good global convergence properties. A CG method generates a sequence of iterates \(x_0\), \(x_1\), \(x_2\), \(\ldots \) by using the recurrence

where \(\alpha _k\) is a positive steplength computed by a line search and \(d_k\) is the search direction generated by the rule

where \(g_k=g(x_k)\) and \(\beta _k\) is a CG parameter. Different choices for the CG parameter \(\beta _k\) correspond to different CG methods. So far, the researches on the CG methods have made great progress. There have been many famous CG methods, for example, see [5, 6, 9,10,11,12, 14, 19, 22, 23, 33], etc. In the survey paper [13], Hager and Zhang gave a comprehensive review of the development of different versions of CG methods, with special attention given to their global convergence properties. We refer to the survey paper for more details.

In this paper, we are particularly interested in the Hestenes–Stiefel (HS) [14] method, the Polak–Ribière–Polyak (PRP) [22, 23] method and the Liu–Storey (LS) [19] method, in which the CG parameters are specified by

where \(y_{k-1}=g_{k}-g_{k-1}\) and \(\Vert .\Vert \) is the Euclidean norm. These methods have been regarded as the most efficient CG methods and studied extensively, see [7, 11, 12, 14, 17, 22,23,24,25, 29, 30, 32, 33] etc. However, in summary, the convergences of these methods for general nonlinear functions are still uncertain. In [24], Powell designed a 3 dimensional example and showed that, when the function is not strongly convex, the PRP method may not converge, even with an exact line search. Moreover, by Powell’s example [24], the HS method may not converge for a general nonlinear function as well, since \(\beta _k^{\mathrm{HS}} = \beta _k^{\mathrm{PRP}}\) holds with an exact line search. In [24], for the PRP method, Powell also suggested to restrict the CG parameter to be non-negative, namely, \(\beta _k^{\mathrm{PRP+}} = \max \{\beta _k^{\mathrm{PRP}}, 0\}\). Inspired by Powell’s work, Gilbert and Nocedal [11] gave an elegant analysis and proved that the PRP+ method is globally convergent when the search direction satisfies the sufficient descent condition \(g_k^Td_k \le -c\Vert g_k\Vert ^2\) and the stepsize \(\alpha _k\) is determined by the standard Wolfe line search. This technique can also be used to analyze the global convergence of the HS+ method in which \(\beta _k^{\mathrm{HS+}} = \max \{\beta _k^{\mathrm{HS}}, 0\}\).

Recently, based on the standard HS, PRP and LS method, some descent CG methods have been developed, see [4, 12, 16, 29, 32, 33], etc. The first one is the well-known CG_DESCENT method proposed by Hager and Zhang [12, 13], in which the parameter \(\beta _k\) is defined by

An attractive property of this method is that, for any line search, the direction \(d_k\) satisfies the sufficient descent condition

Hager and Zhang dynamically adjusted the lower bound on \(\beta _k^{\mathrm{HZ}}\) by letting

and established the global convergence of their method when the line search fulfills the Wolfe conditions

where \(0<\delta \le \sigma <1\).

Similar to the CG_DESCENT method, there are some modified PRP and LS methods, such as the modified LS (MLS) method in [16] and the descent PRP (DPRP) method in [29]. In these two methods, the CG parameters are defined by

and

where \(\theta _k>\frac{1}{4}\) is a constant. It is clear that the formulas (1.5), (1.9) and (1.10) have similar structures. More interestedly, both MLS method and DPRP method satisfy the sufficient descent condition (1.6). Based on some conditions, the global convergent results were established.

Based on the secant condition often satisfied by quasi-Newton method, Zhang, Zhou and Li developed sufficient descent three-term PRP and HS methods [32, 33]. In the three-term PRP method [33], the search direction is defined by

Similarly, in the modified HS method [32], they set

A remarkable feature of these methods is that the sufficient descent condition \(g_k^Td_k = -\Vert g_k\Vert ^2\) will always hold. Zhang et al. [32, 33] analyzed the global convergence of their methods with suitable line search.

Among the descent CG methods above, the CG_DESCENT method is the most famous one. When the exact line search is used, the method will reduce to the standard HS method. Interestedly, it can also be seemed as a member of the Dai–Liao [5] CG methods, in which

where \(s_{k-1}=\alpha _{k-1}d_{k-1}\). The CG_DESCENT method can be viewed as an adaptive version of DL method corresponding to

Due to the existence of the parameter t, the DL method has been seemed as a family of CG methods and studied extensively, see [1, 2, 15, 28, 31], etc. Very recently, to seek an optimal choice of the parameter t in the DL method, Dai and Kou [4] provided the following family of CG methods for unconstrained optimization

where the parameter \(\tau _k\) is corresponding to the scaling parameter in the scaled memoryless BFGS method proposed by Perry [21] and Shanno [27]. Dai and Kou [4] established the global convergence of their method with a improved Wolfe line search.

Inspired by the Dai–Kou method and the descent CG methods introduced above, in this paper, we attempt to structure a family of three-term CG methods, in which the search directions are close to the directions of the memoryless BFGS method in [20, 26]. The rest of the paper is organized as follows: in the next section, we propose the algorithm. In Sects. 3 and 4, we analyze the convergence of the proposed methods. In the last section, we present some numerical results to test the efficiency of the algorithms.

2 The algorithm

The limited memory BFGS method [18, 20] is an adaptation of the BFGS method for large-scale problems. It requires minimal storage and often provides a fast rate of linear convergence. The direction of the self-scaling memoryless BFGS method [21, 27] is defined by

where

where I denotes the identity matrix and \(\tau _k\) is a scaling parameter. Therefore, the direction can be rewritten as

Dai and Kou [4] derived the new formula by seeking the conjugate gradient direction which is closest to \(d_k^{\mathrm{PS}}\).

In \(d_k^{\mathrm{PS}}\), if set \(\tau _k=1\), we will get the memoryless BFGS method proposed by Nocedal [20] and Shanno [26], in which

We note that the direction \(d_k^{\mathrm{LBFGS}}\) is in fact a linear combination of \(g_k\), \(d_{k-1}\) and \(y_{k-1}\), since \(s_{k-1} = \alpha _{k-1}d_{k-1}\). Based one the strategies used to design the the Dai–Kou method [4], we think it is reasonable to replace the term \((y_{k-1} - s_{k-1})\) in \(d_k^{\mathrm{LBFGS}}\) by \(t_ky_{k-1}\) to construct a new search direction. Here, the scalar \(t_k\) is a suitable parameter. Combining the similar structure of formulas (1.5), (1.9) and (1.10), we give the following scheme

where \(z_k\) is a scalar to be specific, and \(\overline{t}\) is a constant to guarantee the sufficient descent property of the new search direction. In practical computation, we set \(\overline{t}=0.3\) and calculate \(t_k\) by

In the formula above, the scalar \((1-\frac{y_{k-1}^Ts_{k-1}}{\Vert y_{k-1}\Vert ^2})\) is the solution of the univariate minimal problem

We calculate \(t_k\) by (2.4) to make the direction defined by (2.1) close to the direction of the memoryless BFGS method.

Lemma 1

If the sequences \(\{x_{k}\}\) and \(\{d_k\}\) are generated by (1.2) and (2.1), then

Proof

Since \(d_0=-g_0\), we have \(g_0^Td_0=-\Vert g_0\Vert ^2\), which satisfies (2.5) since \(0\le t_k\le \overline{t}<1\). Multiplying (2.1) by \(g_k^T\), we have

which completes the proof. \(\square \)

In the scheme (2.1)–(2.3), different choices for the scalar \(z_k\) in \(\beta _k^{\mathrm{NEW}}\) will correspond to different modified CG methods. In this paper, we are mainly interested in the following three cases.

-

For \(z_k=d_{k-1}^Ty_{k-1}\), we get a modified HS method and the parameter \(\beta _k^{\mathrm{NEW}}\) equal to \(\beta ^{\mathrm{HZ}}\) with \(\theta _k=1\). If an exact line search is used, this method will reduce to the standard HS method since \(g_k^Td_{k-1}=0\) holds for \(k>0\).

-

For \(z_k=\Vert g_{k-1}\Vert ^2\), we get a descent PRP method.

-

For \(z_k=-d_{k-1}^Tg_{k-1}\), we get a modified LS method.

It follows from Lemma 1 that the new methods are sufficient descent. However, how to prove the global convergence is still a problem, especially when the Armijo or the standard Wolfe line search is used. To establish the global convergences of CG methods, a common technique is to use the truncated technique in [11] to restrict the the parameter \(\beta _k\) to be nonnegative. Differently, in this paper, we will use a new technique to set the lower bound for \(z_k\) to guarantee the global convergence of the proposed methods.

-

We set \(z_k=\max \{\tau \Vert d_{k-1}\Vert , d_{k-1}^Ty_{k-1}\}\) with some constant \(\tau >0\) to get a modified HS method, which we call the THS method.

-

We let \(z_k=\max \{\tau \Vert d_{k-1}\Vert , \Vert g_{k-1}\Vert ^2\}\) to obtain a modified PRP method which we call the TPRP method.

-

We choose \(z_k=\max \{\tau \Vert d_{k-1}\Vert , -d_{k-1}^Tg_{k-1}\}\) to get a LS type method which we call the TLS method.

In these methods, we always have \(z_k\ge \tau \Vert d_{k-1}\Vert \). This inequality is very helpful to establish the global convergence of the proposed methods. In the rest of this paper, for simplicity, we will only analyze the global convergence of the TPRP method. The conclusion can be extended to other methods in a similar way. Based on the precess above, we present concrete steps of the TPRP method as follows:

Algorithm 2.1

(The TPRP method)

-

Step 0. Initiate \(x_{0}\in \mathbb {R}^{n}\), \(\varepsilon >0\), \(\tau >0\) and \(0<\delta \le \sigma <1\). Set \(k:=0\);

-

Step 1. Stop if \(\Vert g_{k}\Vert _\infty \le \varepsilon \); Otherwise go to the next step;

-

Step 2. Compute \(d_k\) by (2.1) with \(z_k=\max \{\tau \Vert d_{k-1}\Vert , \Vert g_{k-1}\Vert ^2\}\);

-

Step 3. Determine the steplength \(\alpha _k\) by a line search.

-

Step 4. Let \(x_{k+1}=x_{k}+\alpha _kd_k\);

-

Step 5. Set \(k:=k+1\) and go to Step 1.

It is not difficult to establish the global convergence of the TPRP method when the Wolfe or Armijo line search is used. However, the numerical performance of the TPRP method in practical computation is not as good as we expect. In the new method, we still can not guarantee the scalar \(\beta _k^{\mathrm{NEW}}\) to be nonnegative. When \(\beta _k^{\mathrm{NEW}}<0\), we think that the term \(\beta _k^{\mathrm{NEW}}d_{k-1}\) in (2.1) will reduce the efficiency of \(d_k\), since \(d_{k-1}\) is a sufficient descent direction of f at \(x_{k-1}\) which is close to \(x_k\). Therefore, we give a truncated TPRP (TPRP+) method by setting

where \(d_k^{\mathrm{TPRP}}\) is the direction defined in the TPRP method. It is clear that the TPRP+ method is sufficient descent and satisfies (2.5). Similarly, we can define THS+ and TLS+ methods by using this truncated technique.

In the next sections, we will establish the global convergence of the TPRP method when the Wolfe or the Armijo line search is used. All the convergence results of the TPRP method can be extended to the TPRP+ method in a similar way. From now on, throughout the paper, we always suppose the following assumption holds.

Assumption A

-

(I)

The level set \(\varOmega =\{x\in \mathbb {R}^n{:}\,f(x)\le f(x_0)\) is bounded.

-

(II)

In some neighborhood N of \(\varOmega \), function f is continuously differentiable and its gradient is Lipschitz continuous, namely, there exists a constant \(L>0\) such that

$$\begin{aligned} \Vert g(x)-g(y)\Vert \le L\Vert x-y\Vert ,\quad \quad \forall x,y\in N. \end{aligned}$$(2.6)

The assumption implies that there are positive constants B and \(\gamma _1\) such that

3 Convergence analysis of the TPRP method with Wofle line search

In this section, we devote to the global convergence of the TPRP method when the Wolfe line search is used. The following useful lemma was essentially proved by Zoutendijk [34].

Lemma 2

Suppose that the conditions in Assumption A hold, \(\{g_k\}\) and \(\{d_k\}\) are generated by the TPRP method with the Wolfe line search (1.7)–(1.8), then

Theorem 1

Suppose that the conditions in Assumption A hold and \(\{g_k\}\) is generated by the TPRP method with the Wolfe line search, then

Proof

It follows from the descent condition (2.5) that \(\Vert d_{k-1}\Vert \ne 0\) holds for \(k>1\). Since \(z_k=\max \{\tau \Vert d_{k-1}\Vert , \Vert g_{k-1}\Vert ^2\}\), we have

Combining this with (2.2), (2.6) and (2.7) gives

Namely,

Combining this with (3.1) gives

This implies \(\lim _{k\rightarrow \infty }\Vert g_k\Vert =0\). The proof is completed. \(\square \)

4 Convergence analysis of the TPRP method with Armijo line search

In this section, we analyze the global convergence of the TPRP method with the Armijo line search, that is, the steplength satisfies

where \(\alpha _k=\max \{\alpha _0\rho ^i,i=0,1,2,\ldots \}\) with \(0<\rho ,\delta <1\), \(\alpha _0\in (0,1]\) is an initial guess of the steplength. If the conditions in Assumption A hold, it follows directly from (3.3) and (2.7) that

Theorem 2

Suppose that the conditions in Assumption A hold, \(\{g_k\}\) is generated by the TPRP method with the Armijo line search (4.1). Then either \(\Vert g_k\Vert =0\) for some k or

Proof

Suppose for contradiction that \(\liminf _{k\rightarrow \infty } \Vert g_k\Vert >0\) and \(\Vert g_k\Vert \ne 0\) for all \(k\ge 0\). Define

Then

From (2.5), (4.1) and the conditions in Assumption A, we have

On one hand, if \(\liminf _{k\rightarrow \infty } \alpha _k>0\), (4.5) gives \(\liminf _{k\rightarrow \infty }\Vert g_k\Vert =0\), which contradicts (4.4). On the other hand, if \(\liminf _{k\rightarrow \infty } \alpha _k=0\), then there exists a infinite index set \(\mathbb {K}\) such that

When \(k\in \mathbb {K}\) is large enough, the Armijo line search rule implies that \(\rho ^{-1}\alpha _k \) does not satisfy (4.1), namely

We get from the mean value theorem and (2.6) that, there is a \(\xi _{k}\in [0,1]\), such that

This together with (4.7), (4.2) and (2.5) gives

Combining this with (4.6) gives \(\liminf _{k\in \mathbb {K},k\rightarrow \infty }\Vert g_k\Vert =0\). This also yields contradiction and the proof is completed. \(\square \)

5 Linear convergence rate

In this section, we analyze the r-linear convergence rate of the TPRP method when the objective function f is twice continuously differentiable and uniformly convex, that is, there are positive constants \(m\le M\) such that

where \(\nabla ^2f(x)\) denotes the Hessian of f at x.

Lemma 3

Suppose that f is twice continuously differentiable and uniformly convex. Then the problem (1.1) has a unique solution \(x^*\) and

Moreover, for any \(x_0\in {\mathbb {R}}^n\), the level set \(\varOmega _0 \triangleq \{x\in {\mathbb {R}}^n: f(x)\le f(x_0)\}\) is bounded and there is a constant \(B_0>0\) such that

Based on the assumptions above, it is no difficult to prove the following convergence theorem.

Theorem 3

Suppose that f is twice continuously differentiable and uniformly convex. If \(\{x_k\}\) is generated by the TPRP method with the Wolfe or the Armijo line search, then this sequence converges to the unique solution of problem (1.1).

In order to prove the r-linear convergence of the TPRP method, we first give the following lemma, which gives a lower bound of the stepsize \(\alpha _k\).

Lemma 4

Suppose that f is twice continuously differentiable and uniformly convex, the sequence \(\{x_k\}\) is generated by the TPRP method with the Wolfe or the Armijo line search. Then there is a constant \(C_1>0\) such that

Proof

Since \(z_k=\max \{\tau \Vert d_{k-1}\Vert , \Vert g_{k-1}\Vert ^2\}\), we get from (2.1), (2.2), (2.3), (5.5), (5.6) that

Therefore

When the Wolfe line search is used, we get from (2.5), (1.8) and (5.5) that

By (5.8),

When the Armijo line search is used. By the line search rule, \(\alpha _k\ne \alpha _0\) implies \(\rho ^{-1}\alpha _k\) dose not satisfy (4.1). Similar to the proof of Theorem 2, we can prove that

So we can get (5.7) by setting

The proof is completed. \(\square \)

Theorem 4

Suppose that f is twice continuously differentiable and uniformly convex, \(x^*\) is the unique solution of problem (1.1) and the sequence \(\{x_k\}\) is generated by the TPRP method with the Wolfe or the Armijo line search. Then there are constants \(a>0\) and \(r\in (0,1)\) such that

Proof

From the Wolf condition (1.7) or the Armijo condition (4.1), we have

Then we get,

where

Combining this with (5.2) gives

Hence we can obtain (5.9) by letting \(a = \sqrt{2\left( f(x_{0}) - f(x^*)\right) /m}\). The proof is completed. \(\square \)

6 Numerical results

In this section, we report some numerical results of the proposed methods and compare their numerical performances with that of the CG_DESCENT method [12]. The test problems are the unconstrained problems in the CUTEr library [3] with dimensions varying from 50 to 1000. We excluded the problems for which different solvers converge to different local minimizers. We often ran two versions of the test problem for which the dimension could be chosen arbitrarily. Table 1 lists the names (Prob) and dimensions (Dim) of the 152 valid test problems.

All the methods were coded in Fortran and ran on a PC with 3.7 GHz CPU processor and 4 GB RAM. The code for our methods are modifications of the subroutine of CG_DESCENT method. We terminated the iteration when \(\Vert g_k\Vert _\infty \le 10^{-6}\). Detailed numerical results are omitted here since the data is too much.

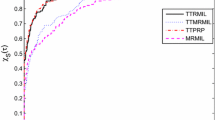

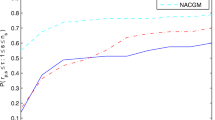

Figures 1, 2, 3 and 4 plot the performances of the methods relative to the total number of iterations and the CPU time by using the profiles of Dolan and Moré [8]. The curves in the figures have the following meanings:

-

“CG_DESCENT” stands for the CG_DESCENT method with the approximate Wolfe line search [12]. We use the Fortran code (Version 1.4, November 14, 2005) from Prof. Hager’s web page: http://www.math.ufl.edu/~hager/ and the default parameters there.

-

“TPRP” stands for the TPRP method with the same line search as “CG_DESCENT”. We set \(\tau = 10^{-6}\) for the scalar \(z_k\) in \(\beta _k^{\mathrm{NEW}}\) and the parameter \(t_k\) in (2.3) is calculated (2.4) with \(\overline{t}=0.3\).

-

“TPRP+”, “THS”,“THS+”,“TLS”,“TLS+” denote the TPRP+, THS, THS+, TLS and TLS+ methods with the same line search and parameters as “TPRP”, respectively.

We observe from Figs. 1, 2, 3 and 4 that the performances of the TPRP+, THS+ and TLS+ methods are close and obviously better than that of the CG_DESCENT method. Since all the methods are implemented with the same line search, we conclude that, on average, the TPRP+, THS+ and TLS+ methods seem to generate more efficient search directions. We also observe that, the performance of the TPRP+, THS+ and TLS+ methods are better than that of the TPRP, THS and TLS methods, correspondingly. This means that the nonnegative restriction on the CG parameter \(\beta _k^{\mathrm{NEW}}\) is benefit to improve the efficiency of the methods.

References

Andrei, N.: Open problems in nonlinear conjugate gradient algorithms for unconstrained optimization. Bull. Malays. Math. Sci. Soc. 34, 319–330 (2011)

Babaie-Kafaki, S., Reza, G.: A descent family of Dai–Liao conjugate gradient methods. Optim. Methods Softw. 21, 1–9 (2013)

Bongartz, I., Conn, A., Gould, N., Toint, P.: CUTE: constrained and unconstrained testing environments. ACM Trans. Math. Softw. 21, 123–160 (1995)

Dai, Y., Kou, C.: A nonlinear conjugate gradient algorithm with an optimal property and an improved Wolfe line search. SIAM J. Optim. 23, 296–320 (2013)

Dai, Y., Liao, L.: New conjugate conditions and related nonlinear conjugate gradient methods. Appl. Math. Optim. 43, 87–101 (2001)

Dai, Y., Yuan, Y.: A nonlinear conjugate gradient method with a strong global convergence property. SIAM J. Optim. 10, 177–182 (2000)

Dai, Z.: Two modified HS type conjugate gradient methods for unconstrained optimization problems. Nonlinear Anal. 74, 927–936 (2011)

Dolan, E., Moré, J.: Benchmarking optimization software with performance profiles. Math. Program. 91, 201–213 (2002)

Fletcher, R.: Practical Method of Optimization, Vol. 1: Unconstrained Optimization. Wiley, New York (1987)

Fletcher, R., Reeves, C.: Function minimization by conjugate gradients. Comput. J. 7, 149–154 (1964)

Gilbert, J., Nocedal, J.: Global convergence properties of conjugate gradient methods for optimization. SIAM J. Optim. 2, 21–42 (1992)

Hager, W., Zhang, H.: A new conjugate gradient method with guaranteed descent and an efficient line search. SIAM J. Optim. 16, 170–192 (2005)

Hager, W., Zhang, H.: A survey of nonlinear conjugate gradient methods. Pac. J. Optim. 2, 35–58 (2006)

Hestenes, M., Stiefel, E.: Methods of conjugate gradients for solving linear systems. J. Res. Natl. Bur. Stand. 49, 409–436 (1952)

Li, G., Tang, C., Wei, Z.: New conjugacy condition and related new conjugate gradient methods for unconstrained optimization. J. Comput. Appl. Math. 202, 523–539 (2007)

Li, M., Feng, H.: A sufficient descent LS conjugate gradient method for unconstrained optimization problems. Appl. Math. Comput. 218, 1577–1586 (2011)

Li, M., Liu, J., Feng, H.: The global convergence of a descent PRP conjugate gradient method. Comput. Appl. Math. 31(1), 59–83 (2012)

Liu, D., Nocedal, J.: On the limited memory BFGS method for large-scale optimization. Math. Program. 45, 503–528 (1989)

Liu, Y., Storey, C.: Efficient generalized conjugate gradient algorithms, part 1: theory. J. Optim. Theory Appl. 69, 177–182 (1991)

Nocedal, J.: Updating quasi-Newton matrices with limited storage. Math. Comput. 35, 773–782 (1980)

Perry, J.M.: A class of conjugate gradient algorithms with a two-step variable-metric memory. Discussion Paper 269, Center for Mathematical Studies in Economics and Management Sciences, Northwestern University, Evanston, Illinois (1977)

Polak, B., Ribière, G.: Note sur la convergence de méthodes de directions conjuguées. Rev. Francaise Inform. Recherche. Opérat. 16, 35–43 (1969)

Polyak, B.: The conjugate gradient method in extreme problems. USSR Comput. Math. Math. Phys. 9, 94–112 (1969)

Powell, M.: Nonvonvex minimization calculations and the conjugate gradient method. In: Lecture Notes in Mathematics, vol. 1066. Springer, Berlin (1984)

Powell, M.: Convergence properties of algorithms for nonlinear optimization. SIAM Rev. 28, 487–500 (1986)

Shanno, D.: Conjugate gradient methods with inexact searches. Math. Oper. Res. 3, 244–256 (1978)

Shanno, D.F.: On the convergence of a new conjugate gradient algorithm. SIAM J. Numer. Anal. 15, 1247–1257 (1978)

Yabe, H., Takano, M.: Global convergence properties of nonlinear conjugate gradient methods with modified secant condition. Comput. Optim. Appl. 28, 203–225 (2004)

Yu, G., Guan, L.: Modified PRP methods with sufficient descent property and their convergence properties. Acta Scientiarum Naturalium Universitatis Sunyatseni 45(4), 11–14 (2006). (Chinese)

Yuan, G.: Modified nonlinear conjugate gradient methods with sufficient descent property for large-scale optimization problems. Optim. Lett. 3, 11–21 (2009)

Zhang, J., Deng, N., Chen, L.: New quasi-Newton equation and related methods for unconstrained optimization. J. Optim. Theory Appl. 102, 147–167 (1999)

Zhang, L.: New versions of the Hestenes–Stiefel nonlinear conjugate gradient method based on the secant condition for optimization. Comput. Appl. Math. 28, 1–23 (2009)

Zhang, L., Zhou, W., Li, D.: A descent modified Polak–Ribière–Polyak conjugate gradient method and its global convergence. IMA J. Numer. Anal. 26, 629–640 (2006)

Zoutendijk, G.: Nonlinear programming, computational methods. In: Abadie, J. (ed.) Integer and Nonlinear Programming, pp. 37–86. North-Holland, Amsterdam (1970)

Acknowledgements

This work is supported by the Chinese NSF Grant (No. 11401242) and the SFED Grant (No. 14B139) of Hunan Province.

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Li, M. A family of three-term nonlinear conjugate gradient methods close to the memoryless BFGS method. Optim Lett 12, 1911–1927 (2018). https://doi.org/10.1007/s11590-017-1205-y

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11590-017-1205-y