Abstract

In this paper, we introduce a modified relaxed projection algorithm and a modified variable-step relaxed projection algorithm for the split feasibility problem in infinite-dimensional Hilbert spaces. The weak convergence theorems under suitable conditions are proved. Finally, some numerical results are presented, which show the advantage of the proposed algorithms.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Let \(C\) and \(Q\) be the nonempty closed convex subsets of the real Hilbert spaces \(\mathcal H _1\) and \(\mathcal H _2\), respectively. The split feasibility problem (SFP) is formulated as finding a point \(x^*\) with the property

where \(A :\mathcal{H }_1\rightarrow \mathcal{H }_2\) is a bounded linear operator. The SFP in finite-dimensional Hilbert spaces was first introduced by Censor and Elfving [1] for modeling inverse problems which arise from phase retrievals and in medical image reconstruction [2]. Many researchers studied the SFP and introduced various algorithms to solve it (see [2–12] and references therein).

For the split feasibility problem in the finite-dimensional real Hilbert spaces, Byrne [2, 3] presented the so-called CQ algorithm for solving the SFP, that does not involve matrix inverses. Take an initial guess \(x_0\in \mathcal{H }_1\) arbitrarily, and define \(\{x_n\}\) recursively as

where \(0 < \gamma < 2/L\), and where \(P_C\) denotes the metric projection onto \(C\) and \(L\) is the spectral radius of the operator \(A^*A\). Then the sequence \(\{x_n\}\) generated by (2) converges strongly to a solution of SFP whenever \(\mathcal{H }_1\) is finite-dimensional and whenever there exists a solution to SFP (1). In the CQ algorithm, Byrne assumed that the projections \(P_C\) and \(P_Q\) are easily calculated. However, in some cases it is impossible or needs too much work to exactly compute the metric projection. Yang [13] presented a relaxed CQ algorithm, in which \(P_C\) and \(P_Q\) are replaced by \(P_{C_n}\) and \(P_{Q_n}\), which are the metric projections onto two halfspaces \(C_n\) and \(Q_n\), respectively. Clearly, Yang’s algorithm is easy to implement.

Recently, based on work of Yang [13] and Qu and Xiu [14], Wang et al. [15] presented two relaxed inexact projection methods and a variable-step relaxed inexact projection method. The second relaxed inexact projection methods (Algorithm 3.2 in [15]) is to find \(x_{n+1}\) satisfying

So \(x_{n+1}\) is in the ball \(B(s,r)\) with \(s=P_{C_n}(x_n-\gamma A^*(I-P_{Q_n})Ax_n)\) and \(r =\epsilon _n\Vert (I-P_{Q_n})Ax_n\Vert \). In the proofs of the convergence theorems, Wang et al. [15] used the fact that, from (3) and \(\lim _{n\rightarrow \infty }\Vert (I-P_{Q_n})Ax_n\Vert =0\), it follows that for sufficiently large \(n,\,x_{n+1}\) is the projection of \(x_{n}\) in \(C_n\). However, it is obvious that \(x_{n+1}\) may not in \(C_n\) if \(P_{C_n}(x_n-\gamma A^*(I-P_{Q_n})Ax_n)\in \partial C_n,\) since \(C_n\) is a half-space. To overcome the gap, we modify the algorithms of [13] and [14] and proposed a relaxed projection algorithm as follows

and a variable-step relaxed projection algorithm in infinite-dimensional real Hilbert space. From the following remark, it is easy to see that the algorithm (4) is the special case of (3) proposed by Wang et al. [15].

Remark 1.1

It follows, from (4),

Let \(\epsilon _n=\alpha _n\gamma \sqrt{L}\) and then (3) is derived.

This paper is organized as follows. In Sect. 2, we review some concepts and existing results. In Sect. 3, we present a modified relaxed projection methods for the SFP and establish the convergence of the algorithm. A modified variable-step relaxed inexact projection algorithm is presented in Sect. 4. In Sect. 5, we report some computational results with the proposed algorithms.

2 Preliminaries

In this section, we review some definitions and lemmas which will be used in this paper.

Definition 2.1

Let \(F\) be a mapping from a set \(\Omega \subset \mathcal{H }\) into \(\mathcal{H }\). Then

-

\(F\) is said to be monotone on \(\Omega \), if

$$\begin{aligned} \langle F(x)-F(y),x-y\rangle \ge 0,\quad \forall x,y\in \Omega ; \end{aligned}$$ -

\(F\) is said to be \(\alpha \)-inverse strongly monotone (\(\alpha \)-ism), with \(\alpha >0\), if

$$\begin{aligned} \langle F(x)-F(y),x-y\rangle \ge \alpha \Vert F(x)-F(y)\Vert ^2,\quad \forall x,y\in \Omega ; \end{aligned}$$ -

\(F\) is said to be Lipschitz continuous on \(\Omega \) with constant \(\lambda > 0\), if

$$\begin{aligned} \Vert F(x)-F(y)\Vert \le \lambda \Vert x-y\Vert ,\quad \forall x,y\in \Omega . \end{aligned}$$

The projection is an important tool for our work in this paper. Let \({\Omega }\) be a closed convex subset of real Hilbert space \(\mathcal{H }\). Recall that the (nearest point or metric) projection from \(H\) onto \(\Omega \), denoted \(P_{\Omega }\), is defined in such a way that, for each \(x\in \mathcal{H },\,P_{\Omega }x\) is the unique point in \(\Omega \) such that

The following two lemmas are useful characterizations of projections.

Lemma 2.1

Given \(x \in \mathcal{H }\) and \(z \in \Omega \). Then \(z = P_{\Omega }x\) if and only if

Lemma 2.2

For any \(x, y\in \mathcal{H }\) and \(z \in \Omega \), it holds

-

(i)

\(\Vert P_{\Omega }(x)-P_{\Omega }(y)\Vert ^2 \le \langle P_{\Omega }(x)-P_{\Omega }(y), x-y\rangle ;\)

-

(ii)

\(\Vert P_{\Omega }(x)-z\Vert ^2\le \Vert x-z\Vert ^2-\Vert P_{\Omega }(x)-x\Vert ^2\).

Remark 2.1

From Lemma 2.2 (i), we know that \(P_{\Omega }\) is a monotone, 1-ism and nonexpansive (i.e., \(\Vert P_{\Omega }(x)-P_{\Omega }(y)\Vert \le \Vert x-y\Vert \)) operator. Moreover, it is easily verified that the operator \(I- P_{\Omega }\) is also 1-ism, where \(I\) denotes the identity operator, i.e., for all \(x, y \in \mathcal{H }\):

Let \(F\) denote a mapping on \(\mathcal{H }\). For any \(x \in \mathcal{H }\) and \(\alpha > 0\), define:

From the nondecreasing property of \(\Vert e(x,\alpha )\Vert \) on \(\alpha > 0\) by Toint [16] (see Lemma 2(1)) and the nonincreasing property of \(\Vert e(x,\alpha )\Vert /\alpha \) on \(\alpha > 0\) by Gafni and Bertsekas [17] (see Lemma 1a), we immediately conclude a useful lemma.

Lemma 2.3

Let \(F\) be a mapping on \(\mathcal{H }\). For any \(x \in \mathcal{H }\) and \(\alpha > 0\), we have:

For the SFP, we firstly assume that the following conditions are satisfied:

-

(1)

The solution set of the SFP denoted by \(\Gamma :=\{x\in C: Ax\in Q\}\) is nonempty.

-

(2)

The set \(C\) is given by

$$\begin{aligned} C =\{x\in \mathcal{H }_1 : c(x) \le 0\}, \end{aligned}$$where \(c :\mathcal{H }_1\rightarrow \mathbb R \) is a convex function, and \(C\) is nonempty. The set \(Q\) is given by

$$\begin{aligned} Q=\{y\in \mathcal{H }_2 : q(y) \le 0\}, \end{aligned}$$where \(q :\mathcal{H }_2\rightarrow \mathbb{R }\) is a convex function, and \(Q\) is nonempty.

-

(3)

\(c\) and \(q\) are subdifferentiable on \(C\) and \(Q\). (Note that the convex function is subdifferentiable everywhere in \(\mathbb R ^N\).) For any \(x\in \mathcal{H }_1\), at least one subgradient \(\xi \in \partial c(x)\) can be calculated, where \(\partial c(x)\) is defined as follows:

$$\begin{aligned} \partial c(x)=\{z\in \mathcal{H }_1:c(u)\ge c(x)+ \langle u-x,z\rangle ,\quad \text{ for} \text{ all}\,\,u\in \mathcal{H }_1\}. \end{aligned}$$For any \(y\in \mathcal{H }_2\), at least one subgradient \(\eta \in \partial q(y)\) can be calculated, where

$$\begin{aligned} \partial q(y)=\{w\in \mathcal{H }_2:q(v)\ge q(y)+ \langle v-y,w\rangle ,\quad \text{ for} \text{ all}\,\, v\in \mathcal{H }_2\}. \end{aligned}$$ -

(4)

\(c\) and \(q\) are bounded on bounded sets. (Note that this condition is automatically satisfied if \(\mathcal{H }_1\) and \(\mathcal{H }_2\) are finite dimensional.)

From Banach-Steinhaus Theorem (see Theorem 2.5 in [18]), it is easy to get the following result.

Remark 2.2

Assumption (4) guarantees that if \(\{x_n\}\) is a bounded sequence in \(\mathcal{H }_1\) (resp. \(\mathcal{H }_2\)) and \(\{x_n^*\}\) is a sequence in \(\mathcal{H }_1\) (resp. \(\mathcal{H }_2\)) such that \(x_n^*\in \partial c(x_n)\) (resp. \(x_n^*\in \partial q(x_n)\)) for each \(n\), then \(\{x_n^*\}\) is bounded.

Lemma 2.4

[19] Let \(\{a_n\}\) be a sequence of nonnegative number such that

where \(\{\lambda _n\}\) satisfies \(\sum _{n=1}^{\infty }\lambda _n<+\infty \). Then \(\lim _{n\rightarrow \infty }a_n\) exists.

Lemma 2.5

[20] Let \(K\) be a nonempty closed convex subset of a Hilbert space \(\mathcal{H }\). Let \(\{x_n\}\) be a bounded sequence which satisfies the following properties:

-

every weak limit point of \(\{x_n\}\) lies in \(K\);

-

\(\lim _{n\rightarrow \infty }\Vert x_n-x\Vert \) exists for every \(x \in K\).

Then \(\{x_n\}\) converges weakly to a point in \(K\).

3 A modified relaxed projection algorithm

In this section we propose a modified relaxed algorithm and prove the weak convergence of the proposed algorithm.

Algorithm 3.1

Let \(x_0\) be arbitrary. Let \(\{\alpha _n\}\subset (0,\infty )\), and

where \(\gamma \in (0, M),\) \(M:=\min \{2/\Vert A\Vert ^2,{\sqrt{2}}/{\Vert A\Vert }\}\), and \(\{C_n\}\) and \(\{Q_n\}\) are the sequences of closed convex sets constructed as follows:

where \(\xi _n\in \partial c(x_n)\), and

where \(\eta _n\in \partial q(Ax_n)\).

Remark 3.1

By the definition of subdifferentials, it is clear that \(C \subset C_n\) and \(Q \subset Q_n\). Also note that \(C_n\) and \(Q_n\) are half-space, thus, the projections \(P_{C_n}\) and \(P_{Q_n}\) have closed-form expression.

Theorem 3.1

Let \(\{x_n\}\) be the sequence generated by the Algorithm 3.1 Let \(\{\alpha _n\}\subset (0,\infty )\) satisfy

Then \(\{x_n\}\) converges weakly to a solution of SFP(1).

Proof

Set \(\sigma =\gamma ^2\Vert A\Vert ^2-2\gamma ,\) then \(\sigma <0.\) Let \(\delta _n=\sqrt{-\frac{2}{\sigma }}\alpha _n.\) From (6), we have

Take arbitrarily \(x^*\in \Gamma \), then we have

From Cauchy-Schwarz inequality and arithmetic and geometric means inequality, it is follows

Substituting (9) into (8), we obtain

Since \(x^*\in {C_n}\), we have

It is easily seen that

Since \(Ax^*\in Q\subset Q_n\), by Lemma 2.1, we get

Applying (7), (10) and (14), we obtain

which with \(\sigma <0\) and \(\gamma \le \sqrt{2}/\Vert A\Vert \) implies

So, using Lemma 2.4, we have \(\lim _{n\rightarrow \infty }\Vert x_{n}-x^*\Vert \) exists, and hence \(\{x_n\} \) is bounded. From (15), it follows that

Consequently we get by \(\alpha _n\rightarrow 0\),

Set

We next demonstrate that

To see this, we note the identity

On the other hand, using \(x_{n+1} = \alpha _nP_{C_n}x_n+(1-\alpha _n)P_{C_n}(x_n-\gamma u_n)\), we have

Since \(x^*\in C\subset C_{n},\) we have by Lemma 2.1

Therefore,

Since \(\{x_n\}\) is bounded, by \(\alpha _n\rightarrow 0\) and (18), (20), (21), we get

which by (19) and existence of \(\lim _{n\rightarrow \infty }\Vert x_n-x^*\Vert \) yields

Since \(\{x_n\}\) is bounded, which implies that \(\{\xi _n\}\) is bounded, we see that the set of weak limit points of \(\{x_n\}\), \(\omega _w(x_n)\), is nonempty. We now show\(\square \)

Claim

\(\omega _w(x_n)\subset \Gamma \).

Indeed, assume \(\hat{x}\in \omega _w(x_n)\) and \(\{x_{n_j}\}\) is a subsequence of \(\{x_n\}\) which converges weakly to \(\hat{x}\). Since \(x_{n_j+1}\in C_{n_j}\), we obtain

Thus,

where \(\xi \) satisfies \(\Vert \xi _{n_j}\Vert \le \xi \) for all \(n\). By virtue of the lower semicontinuity of \(c\), we get by (22)

Therefore, \(\hat{x}\in C.\)

Next we show that \(A\hat{x}\in Q.\) To see this, by (17), set \(y_n=Ax_n-P_{Q_n}Ax_n\rightarrow 0\) and let \(\eta \) be such that \(\Vert \eta _n\Vert \le \eta \). Then, since \(Ax_{n_j}-y_{n_j}=P_{Q_{n_j}}Ax_{n_j}\in Q_{n_j},\) we get

Hence,

By the weak lower semicontinuity of \(q\) and the fact that \(Ax_{n_j}\rightarrow A\hat{x}\) weakly, we arrive at the conclusion

Namely, \(A\hat{x}\in Q.\)

Therefore, \(\hat{x}\in \Gamma \). Now we can apply Lemma 2.5 to \(K:=\Gamma \) to get that the full sequence \(\{x_n\}\) converges weakly to a point in \(\Gamma \).

4 A modified variable-step relaxed projection algorithm

In the Algorithm 3.1, the stepsizes \(\gamma \) are all fixed. In this section, based on the algorithm of Qu and Xiu [14], we present a modified variable-step projection method which needs not compute the spectral radius of the operator \(A^*A\), and the objective function can sufficiently decrease at each iteration.

For every \(n\), using \(Q_n\) we define the function \(F_n : \mathcal{H }_1\rightarrow \mathcal{H }_1\) by

The variable-step relaxed projection algorithm is defined as follows:

Algorithm 4.1

Given constants \(\gamma > 0,\,l\in (0, 1),\,\mu \in (0, \sqrt{2}/2)\). Let \(x_0\) be arbitrary. Let \(\{\alpha _n\}\subset (0,\infty )\), and let

where \(\beta _n=\gamma l^{m_n}\) and \(m_n\) is the smallest nonnegative integer \(m\) such that

Let

where \(\{C_n\}\) and \(\{Q_n\}\) are the sequences of closed convex sets constructed as follows:

where \(\xi _n\in \partial c(x_n)\), and

where \(\eta _n\in \partial q(Ax_n)\).

Although \(C_n, Q_n\) and \(F_n\) depend on \(n\), we have the following two nice lemmas (see [14]).

Lemma 4.1.

For all \(n = 0, 1, 2,\ldots ,\) \(F_n\) is Lipschitz continuous on \(\mathcal{H }_1\) with constant \(L\) and \(1/L\)-ism on \(\mathcal{H }_1\), where \(L\) is the spectral radius of the operator \(A^*A\). Therefore, Armijo-like search rule (23) is well defined.

Lemma 4.2

For all \(n=0,1,\ldots ,\)

Theorem 4.1

Let \(\{x_n\}\) be the sequence generated by the Algorithm 4.1 Let \(\{\alpha _n\}\subset (0,\infty )\) satisfy

Then \(\{x_n\}\) converges weakly to a solution of SFP(1).

Proof

From (23) and (24), it is easily seen that

Let \(x^*\) be a solution of the SFP (1), using the similar procedure in the proof of Theorem 3.1, we obtain

Following the line of the proof of (7) in [14], we have

which with \(\mu \in (0, \sqrt{2}/2)\) yields

So, using Lemma 2.4, we get that \(\lim _{n\rightarrow \infty }\Vert x_{n}-x^*\Vert \) exists, and hence \(\{x_n\} \) is bounded. From (28), it follows that

Consequently we get

We next demonstrate that

Using (23), we obtain

Since \(\{x_n\}\) is bounded, we see that the set of weak limit points of \(\{x_n\},\,\omega _w(x_n)\), is nonempty and \(\{Ax_n\}\) is bounded which implies that \(\{\eta _n\}\) is bounded. Let \(\eta \) be such that \(\Vert \eta _n\Vert \le \eta .\) We now show \(\square \)

Claim

\(\omega _w(x_n)\subset \Gamma \).

Assume \(\hat{x}\in \omega _w(x_n)\) and \(\{x_{n_i}\}\) is a subsequence of \(\{x_n\}\) which converges weakly to \(\hat{x}\). Using the similar procedure in the proof of Theorem 3.1, by (31), we get \(\hat{x}\in C.\)

Next we show that \(A(\hat{x})\in Q.\) Define

Then from Lemmas 2.3 and 4.2, and Eq. (30), we have:

which implies

Using Lemma 2.1 and \(x^*\in C\subset C_{n_i}\), we have for all \(i = 1,2,\ldots ,\)

which implies

which implies

From (5), it follows

Combining (33) and (34), we have

Since

and \(\{x_{n_i}\}\) is bounded, the sequence \(\{F_{n_i}(x_{n_i})\}\) is also bounded. Therefore, from (32) and (35), we get

By \(P_{Q_{n_i}}(Ax_{n_i})\in Q_{n_i}\), we have

Hence,

By the weak lower semicontinuity of \(q\) and the fact that \(Ax_{n_j}\rightarrow A\hat{x}\) weakly, we arrive at the conclusion

Namely, \(A\hat{x}\in Q.\)

Therefore, \(\hat{x}\in \Gamma \). Now we can apply Lemma 2.5 to \(K:=\Gamma \) to get that the full sequence \(\{x_n\}\) converges weakly to a point in \(\Gamma \).

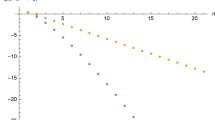

5 Numerical results

In this section, we will present the results of numerical tests for a problem (see [15]). We consider the following problem:

(P) Let \(C=\{x\in R^{10}|c(x)\le 0 \}\) where \(c(x)=-x_1+x_2^2+\cdots +x_{10}^2 \) and \(Q=\{y\in R^{20}|q(y)\le 0\}\) where \(q(x)=y_1+y_2^2+\cdots +y_{20}^2-1\). Note that \(C\) is the set above the function \(x_1=x_2^2+\cdots +x_{10}^2 \) and \(Q\) is the set below the function \(y_1=-y_2^2-\cdots -y_{20}^2+1\). \(A\in R^{20\times 10}\) is a random matrix where every element of \(A\) is in \((0,1)\) satisfying \(A(C)\cap Q\ne \emptyset \). Let \(x_0\) be a random vector in \(R^{10}\) where every element of \(x_0\) is in \((0,1)\).

The terminal condition is that \(\Vert x_n-x^*\Vert \le \epsilon \), where \(x^*\) is a solution of (P). Let \(\alpha _n = 1/n^2, n = 0,1,\ldots ,\) in the Algorithms 3.1 and 4.1 In the tables, \(\epsilon \) denotes the tolerance of the solution point of the problem, ’Iter.’ and ’InIt’ denote the terminating iterative numbers and the number of total iterations of finding suitable \(\beta _n\) in (23), respectively. \(C(x)\) and \(Q(Ax)\) denote the value of \(c(x)\) and \(q(Ax)\) at the terminal point, respectively.

We compare the algorithms 3.1 and 4.1 with the relaxed CQ algorithm in [13] and the variable-step CQ algorithm in [14], respectively.

Firstly, we compare the modified relaxed projection algorithm 3.1 (MRCQ) with relaxed CQ algorithm (RCQ) in [13] applied to (P). Let \(\gamma =0.99M\) where \(M=\min \{2/\Vert A\Vert ^2,\sqrt{2}/\Vert A\Vert \}\). In Table 1 we display the iteration history when using three different values of \(\epsilon \) and for each \(\epsilon \) four different start vectors (chosen at random). We see from Table 1 that none of the two tested algorithms come out as the best. We next use three different values of the stepsize \(\gamma \). For each test the same start vector was used. The results, Table 2, show that ’Iter.’ decreases slowly as the stepsize approaches \(M\).

Secondly, we compare the modified variable-step relaxed projection 4.1 (MVRCQ) with variable-step CQ algorithm (VRCQ) in [14] applied to (P). Let \(\gamma =0.5, l=0.4, \mu =0.7.\) From Table 3, we first note that ’Iter.’ and ’InIt.’ are slightly smaller for (MVRCQ) than that of (VRCQ); secondly we see that the iteration is not sensitive to the tolerance. It is also observed that the numbers of steps needed in Table 3 are larger than that of Table 2. This phenomenon might be caused by the choice of \(\beta _n\) in (23) which maybe be much smaller than \(M\). This issue is left for future studies.

6 Concluding remarks

In this paper, a modified relaxed projection algorithm and a modified variable-step relaxed projection algorithm with Armijo-like searches for solving the split feasibility problem have been presented. For the two algorithms, the orthogonal projections can be calculated directly. The algorithm 4.1 does not need to compute or estimate the norm of the matrix and the stepsize can be computed using a number of steps of the power method. Moreover, the objective function can decrease significantly at each iteration in these algorithms. In the feasible case of the SFP, the corresponding convergence properties have been established. We perform some numerical experiments, which have confirmed the theoretical results obtained.

References

Censor, Y., Elfving, T.: A multiprojection algorithm using Bregman projections in a product space. Numer. Algorithms 8, 221–239 (1994)

Byrne, C.L.: Iterative oblique projection onto convex sets and the split feasibility problem. Inverse Probl. 18, 441–453 (2002)

Byrne, C.L.: A unified treatment of some iterative algorithms in signal processing and image reconstruction. Inverse Probl. 20, 103–120 (2004)

Cegielski, A.: Generalized relaxations of nonexpansive operators and convex feasibility problems. Contemp. Math. 513, 111–123 (2010)

Censor, Y., Bortfeld, T., Martin, B., Trofimov, A.: A unified approach for inversion problems in intensitymodulated radiation therapy. Phys. Med. Biol. 51, 2353–2365 (2006)

Censor, Y., Elfving, T., Kopf, N., Bortfeld, T.: The multiple-sets split feasibility problem and its applications for inverse problems. Inverse Probl. 21, 2071–2084 (2005)

Censor, Y., Motova, A., Segal, A.: Perturbed projections and subgradient projections for the multiple-sets split feasibility problem. J. Math. Anal. Appl. 327, 1244–1256 (2007)

Censor, Y., Segal, A.: The split common fixed point problem for directed operators. J. Convex Anal. 16, 587–600 (2009)

Wang, F., Xu, H.K.: Cyclic algorithms for split feasibility problems in Hilbert spaces. Nonlinear Anal. 74, 4105–4111 (2011)

Xu, H.K.: Iterative methods for the split feasibility problem in infinite-dimensional Hilbert spaces. Inverse Probl. 26, 105018 (2010)

Dang, Y., Gao, Y.: The strong convergence of a KM-CQ-like algorithm for a split feasibility problem. Inverse Probl. 27, 015007 (2011)

Yu, X., Shahzad, N., Yao, Y.: Implicit and explicit algorithms for solving the split feasibility problem. Optim. Lett. doi:10.1007/s11590-011-0340-0

Yang, Q.: The relaxed CQ algorithm solving the split feasibility problem. Inverse Probl. 20, 1261–1266 (2004)

Qu, B., Xiu, N.: A note on the CQ algorithm for the split feasibility problem. Inverse Probl. 21, 1655–1665 (2005)

Wang, Z., Yang, Q., Yang, Y.: The relaxed inexact projection methods for the split feasibility problem. Appl. Math. Comput. 217, 5347–5359 (2011)

Toint, PhL: Global convergence of a class of trust region methods for nonconvex minimization in Hilbert space. IMA J. Numer. Anal. 8, 231–252 (1988)

Gafni, E.M., Bertsekas, D.P.: Two-metric projection methods for constrained optimization. SIAM J. Control Optim. 22, 936–964 (1984)

Rudin, W.: Functional Analysis, 2nd edn. McGraw-Hill, New York (1991)

Osilike, M.O., Aniagbosor, S.C.: Weak and strong convergence theorems for fixed points of asymptotically nonexpansive mappings. Math. Comput. Model. 32, 1181–1191 (2000)

Bauschke, H.H., Borwein, J.M.: On projection algorithms for solving convex feasibility problems. SIAM Rev. 38, 367–426 (1996)

Acknowledgments

The authors express their thanks to the reviewers, whose constructive suggestions led to improvements in the presentation of the results.

Author information

Authors and Affiliations

Corresponding author

Additional information

Supported by the NSFC Tianyuan Youth Foundation of Mathematics of China (No. 11126136) and Fundamental Research Funds for the Central Universities (No. ZXH2011C002).

Rights and permissions

About this article

Cite this article

Dong, QL., Yao, Y. & He, S. Weak convergence theorems of the modified relaxed projection algorithms for the split feasibility problem in Hilbert spaces. Optim Lett 8, 1031–1046 (2014). https://doi.org/10.1007/s11590-013-0619-4

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11590-013-0619-4