Abstract

Epilepsy is a chronic disorder associated with abnormal neuronal activity in the brain; it causes unusual perceptions, muscle spasms, sometimes seizures, and loss of awareness. The goal of this study is to investigate how changes in effective connectivity (EC) can be used to predict unexpected seizures, so that patients can be warned in time to take precautions and avoid risk. We test the hypothesis that EC variables change significantly near a seizure, so that seizures can be predicted from this variation. We introduce two time-variant coefficients based on the standard deviation of EC, computed with the directed transfer function (DTF) and Granger causality (GC) methods on the Freiburg EEG dataset, and compare the changes of these indices over time in five frequency bands. Multivariate and bivariate analyses are also compared. With the proposed methods, the seizure occurrence period (the interval within which seizure onset is expected) is approximately 50 min, the maximum sensitivity approaches 80%, and the false prediction rate is 0.33 FP/h. The designed system achieves greater accuracy and sensitivity than other works evaluated under the same conditions, although these results are not yet sufficient for clinical application. In general, the best results are obtained with the DTF method in the gamma and beta frequency bands.


Introduction

Epilepsy is the fourth most common neurological disorder worldwide, and one-fourth of patients suffer from drug-resistant epilepsy. According to previous research, seizure-related changes begin minutes to hours before clinical onset, so the transition from the interictal state (the period between seizures) to the ictal state (the seizure) is not abrupt (Lehnertz et al. 2007). EEG, a method for recording the electrical activity of the brain, is used to analyze epileptic activity (Iasemidis et al. 2005; D’Alessandro et al. 2003). The primary purpose of this investigation is to design a system, based on EC changes, that issues a warning signal to increase patient safety. Effective connectivity is defined as the influence one neural system exerts over another; in neurophysiology it was originally assessed through the analysis of spike trains obtained from multi-electrode recordings (Aertsen and Preissl 1991).

Investigations of brain interactions are not limited to effective or functional connectivity. Some studies assess brain function on the basis of entropy and particle trajectories and of the relationships between brain time series (Déli et al. 2017). GC has been applied not only to fMRI (Parhizi et al. 2018) but also to EEG analysis, for example to study causal connectivity between time series with spectral measures of linear Granger causality (He et al. 2014), to measure connectivity across brain regions (Coben and Mohammad-Rezazadeh 2015), and to study causal flow during motor imagery (MI) using scalp electroencephalograms (Hu et al. 2015). Liu and Molenaar (2016) introduced phase resampling to make statistical inferences about Granger causality in frequency-domain time series analysis (multivariate EEG and skin conductance data). Staljanssens et al. (2017) showed that the seizure onset zone (SOZ) can be estimated with high accuracy from clinical or low-density scalp EEG using electrical source imaging (ESI) followed by functional connectivity analysis based on Granger causality. McBride et al. (2015) found that scalp-EEG-based causality measures have different distributions across cognitive groups and can therefore distinguish normal control (NC), mild cognitive impairment (MCI), and Alzheimer’s disease (AD) participants.

Conditional Granger causality (cGC) and partitioned Granger causality (pGC) have also been applied to EEG data to analyze neural information (Malekpour and Sethares 2015). Marshall et al. (2014) introduced a method for measuring directed connectivity by applying the Granger causality framework to phase shift events, which can identify connectivity patterns in situations similar to EEG recordings. Georgiou and Nicolaou (2014) proposed a system that extracts Granger causality (GC) features from patients’ EEG and uses a neural network classifier to discriminate between “anesthesia” and “awareness”. Kong et al. (2015) analyzed drivers’ mental states, such as alertness and drowsiness, using Granger-causality-based effective brain networks built from EEG, with the aim of finding a neurometric indicator of fatigue. Siggiridou et al. (2015) assessed a large number of Granger causality measures used to form causality networks from multivariate time series and considered their application to epilepsy. Finally, GC analysis has the potential to help localize ictal networks from interictal iEEG (Park and Madsen 2018).

GC has also been applied to electrocorticogram (ECoG) and stereotactic EEG (SEEG) recordings to study temporal lobe seizures (Franaszczuk and Bergey 1998), to reveal directional connections between multichannel local field potentials (LFP) (Cadotte et al. 2010), and to determine connectivity between brain regions during ictal periods (Coben and Mohammad-Rezazadeh 2015). In addition, GC has been used to study the propagation of epileptic spikes measured by MEG and to measure changes in propagation paths in the time and frequency domains (Lin et al. 2009). DTF has been applied to compare seizure flow patterns across different sites of origin and to locate dynamic flow patterns (Franaszczuk and Bergey 1998). Its usefulness as a measure of information flow in multivariate systems has been demonstrated in many articles (Kaminski et al. 2001; Kaminski 2005). The method has been applied to the localization of epileptic foci (Franaszczuk and Bergey 1998) and to the study of epileptogenesis (Medvedev and Willoughby 1999). The short-time direct directed transfer function (SdDTF), which is based on the idea of Granger causality, has been used to determine the direction and strength of high-frequency (70–175 Hz) propagation during ictal and interictal recordings (Korzeniewska et al. 2014).

GC has also been applied in other investigations of epileptic patients, for example to identify distinct spatiotemporal causal connectivity (CC) patterns in children with epilepsy and in a control group during a working memory task (Protopapa et al. 2016). Connectivity research is not limited to these areas: functional connectivity measures have been used to investigate brain networks during positive and negative emotions (Dasdemir et al. 2017). Causal relationships can also be modeled by a multilayer perceptron neural network that generates an input–output mapping (Talebi et al. 2018). Beyond prediction, seizure detection is an active research topic as well, for example using robust machine learning classification (Hussain 2018) or wavelet-packet-based log and norm entropies with a recurrent Elman neural network (REN) for automated detection (Raghu et al. 2017). Furthermore, Li et al. (2019) investigated the transition of brain activity from the interictal to the preictal state by combining EEG network and clustering analyses in different frequency bands. In addition, a study showed that brain connectivity during emotional processing differs between women and men (Guntekin et al. 2017).

The paper is organized as follows. The first section is the introduction. The second section presents the methods used for effective connectivity estimation, and the results and discussion are provided in the third and fourth sections, respectively.

Materials and methods

Research on brain connectivity has been expanding since the early 1960s (Adey et al. 1961). Throughout this period, developing methods that determine brain connectivity precisely has been a challenging problem. This section gives an overview of the most widely used techniques and describes some of the main measures of effective connectivity.

Database

The proposed methods are evaluated on the Freiburg EEG database (http://epilepsy.uni-freiburg.de/freiburg-seizure-prediction-project/eeg-database), a publicly available database of electrocorticogram (ECoG), or intracranial EEG (iEEG), recordings from 21 patients with intractable focal epilepsy. The data were acquired with a digital video-EEG system at the Epilepsy Center of the University Hospital of Freiburg, Germany, at a sampling rate of 256 Hz with a 16-bit analog-to-digital converter. In total, the database contains 83 seizures, 504 h of interictal data, and 73 h of preictal or ictal data. For each patient, signals from six electrodes are available: three near the seizure focus (focal) and three distal to it (afocal). The recordings are divided into preictal, interictal, postictal and ictal phases, all labeled by the University of Freiburg; seizure onset times and artifacts were identified by a certified epileptologist, as illustrated in Fig. 1. This study analyzes the preictal (the period immediately before a seizure) and interictal periods. For each of the 21 patients, at least 24 h of seizure-free interictal recordings, 2–5 seizures, and preictal recordings covering at least 50 min before each seizure are available.

A short annotated segment of the invasive EEG of an epileptic seizure. The four states (preictal, ictal, postictal and interictal) are coded with different colors. The EEG signals belong to patient 2 of the Freiburg EEG Database, and the figure was produced with the EEGLAB software (EEGLAB 2011)

Granger Causality

The basic idea of Granger causality can be traced back to Wiener (1956), who proposed that if the past of one time series improves the prediction of another, the first series can be said to have a causal influence on the second. Granger (1969, 1980) formalized Wiener’s idea using linear regression models. Parametric Granger causality measures are therefore computed from autoregressive (AR) models (Chatfield 2003).
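The regression idea above can be made concrete with a short sketch. This Python code is a minimal illustration, not the authors’ MATLAB/SIFT implementation: it fits two least-squares AR models of order p to a target series y, a restricted one that uses only y’s own past and a full one that also uses the past of x, and reports the log ratio of their residual variances as the time-domain GC from x to y. The function names are hypothetical, and the default p = 5 simply mirrors the model order used later in this paper.

```python
import numpy as np

def _ar_residual_var(y, regressors, p):
    """Least-squares fit of y[t] on lags 1..p of each regressor; return residual variance."""
    T = len(y)
    cols = [np.ones(T - p)]  # intercept
    for r in regressors:
        for k in range(1, p + 1):
            cols.append(r[p - k:T - k])  # r[t - k] for t = p..T-1
    X = np.column_stack(cols)
    target = y[p:]
    beta, *_ = np.linalg.lstsq(X, target, rcond=None)
    resid = target - X @ beta
    return resid.var()

def granger_causality(x, y, p=5):
    """Time-domain GC from x to y: log(restricted residual var / full residual var)."""
    restricted = _ar_residual_var(y, [y], p)   # y predicted from its own past only
    full = _ar_residual_var(y, [y, x], p)      # y predicted from its own past and x's past
    return np.log(restricted / full)

# Toy example: x drives y with a one-sample lag, y does not drive x
rng = np.random.default_rng(0)
n = 2000
x = rng.standard_normal(n)
y = np.zeros(n)
for t in range(1, n):
    y[t] = 0.8 * x[t - 1] + 0.5 * rng.standard_normal()
print(granger_causality(x, y), granger_causality(y, x))
```

A value near zero means that the past of x adds no predictive information about y; larger values indicate a stronger directed influence from x to y.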

Directed Transfer Function

DTF is a multivariate method based on a multivariate autoregressive (MVAR) model that can determine causal relations between signals and identify the directed propagation of EEG activity (Kaminski and Blinowska 1991). It is a frequency-domain characterization of connectivity that can be regarded as a factorization of the coherence between pairs of time series. DTF also indicates directional signal propagation even when that propagation is only indirect.
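To show how DTF follows from an MVAR model, the sketch below uses the usual normalized definition from Kaminski and Blinowska (1991): the transfer matrix is \( H(f) = A(f)^{-1} \) with \( A(f) = I - \sum_{k=1}^{p} A_k e^{-i 2\pi f k / f_s} \), and each squared entry \( |H_{ij}(f)|^2 \) is divided by the sum of row i. It is a hedged Python illustration rather than the SIFT code used in the paper; the function name and the coefficient layout (p, n, n) are assumptions.

```python
import numpy as np

def dtf(coefs, freqs, fs):
    """Normalized DTF from MVAR coefficients.

    coefs: array (p, n, n) with x[t] = sum_k coefs[k-1] @ x[t-k] + e[t]
    Returns (len(freqs), n, n); entry [f, i, j] is the flow from channel j to channel i.
    """
    p, n, _ = coefs.shape
    out = np.empty((len(freqs), n, n))
    for idx, f in enumerate(freqs):
        # A(f) = I - sum_k A_k exp(-i 2 pi f k / fs)
        A_f = np.eye(n, dtype=complex)
        for k in range(1, p + 1):
            A_f -= coefs[k - 1] * np.exp(-2j * np.pi * f * k / fs)
        H = np.linalg.inv(A_f)                    # transfer matrix H(f)
        power = np.abs(H) ** 2
        # Normalize each row so the inflows to channel i sum to 1
        out[idx] = power / power.sum(axis=1, keepdims=True)
    return out

# Two channels: channel 0 drives channel 1, but not the reverse
A1 = np.array([[0.5, 0.0], [0.8, 0.5]])
freqs = np.linspace(1, 127, 50)
D = dtf(A1[None, :, :], freqs, fs=256)
```

Because H(f) stays lower triangular in this example, the DTF from channel 1 to channel 0 is zero at every frequency, while the flow from 0 to 1 is positive.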

Methods

Because the ictal data are separated from the preictal and interictal data, a stationarity check is not strictly required; stationarity would need to be checked only for data that include the ictal phase. In this project, changes in effective connectivity over time are used as an index to forecast epileptic seizures, so only the preictal and interictal data are considered. We use a data preparation framework in which the data are divided into separate training and test sets. Since the recordings are labeled, the first hour of each patient’s interictal recordings is used as the training set, and the remaining interictal and preictal data are used as the test set.
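The per-patient split described above can be sketched as follows. This is an illustrative Python helper, not code from the study: it assumes the interictal recording is a (channels, samples) NumPy array sampled at 256 Hz, and the function name is hypothetical.

```python
import numpy as np

FS = 256  # Hz, sampling rate of the Freiburg recordings

def split_interictal(interictal, fs=FS, train_seconds=3600):
    """Return (training, remainder): the first hour of interictal data and the rest.

    interictal: array of shape (channels, samples). The remainder is used,
    together with the preictal data, for testing.
    """
    n_train = int(train_seconds * fs)
    if interictal.shape[-1] <= n_train:
        raise ValueError("need more than one hour of interictal data")
    return interictal[..., :n_train], interictal[..., n_train:]
```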

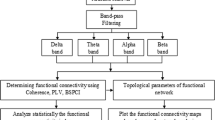

Fig. 2 presents a flowchart of the proposed method. The analysis was carried out in MATLAB (version R2013a, http://www.mathworks.com) with the EEGLAB SIFT toolbox (version 0.1 alpha, https://sccn.ucsd.edu/wiki/SIFT).

Flowchart of the signal analysis process. A sliding-window technique with a 2.5 s overlap is applied to the data. Next, normalization is applied as a pre-processing step to neutralize the effect of signal amplitudes on the calculations. After a model (order = 5) is fitted to the data, EC matrices are extracted and their standard deviations are calculated. Thresholds obtained from formula (2) are then compared with the SD matrices to form binary matrices, and Binary Thresholds (BT) are defined. The total of components (ToC) of each binary matrix is compared with the BT: if the ToC exceeds the BT, an approaching seizure is detected and an alarm is raised; otherwise, processing continues with the next window

Sliding-window technique

Step (1) Most prediction methods published to date use a sliding-window technique: one or more (linear or non-linear) characterizing measures are calculated from a window of EEG data of predefined length, then the next window is analyzed, and so forth. Window lengths usually range from 10 to 40 s, depending on whether the measure describes a single EEG channel (a univariate measure) or the relations between two or more channels (a bivariate or multivariate measure). Following previous studies (Cadotte et al. 2010; Niknazar 2013; Bandarabadi et al. 2015), 5-s windows are used in this study. A 50% overlap (step size = 2.5 s) is applied to increase the number of windows and to fit the model to the data more accurately.
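The windowing in Step (1) can be sketched in a few lines. This Python helper is an illustration under stated assumptions (data stored as a (channels, samples) array, 256 Hz sampling, trailing samples that do not fill a whole window discarded); the function name is hypothetical.

```python
import numpy as np

def sliding_windows(data, fs=256, win_s=5.0, step_s=2.5):
    """Split (channels, samples) data into overlapping windows.

    Returns an array (n_windows, channels, win_len). At least one full window
    is required; trailing samples that do not fill a window are dropped.
    """
    win = int(round(win_s * fs))     # 1280 samples for 5 s at 256 Hz
    step = int(round(step_s * fs))   # 640 samples for 2.5 s (50% overlap)
    n_samples = data.shape[-1]
    starts = range(0, n_samples - win + 1, step)
    return np.stack([data[:, s:s + win] for s in starts])
```

For 20 s of six-channel data (5120 samples), this yields floor((5120 − 1280)/640) + 1 = 7 windows of shape (6, 1280).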

Pre-processing

Step (2) Normalization is performed on the data as an important pre-processing step. A variable that measures the deviation from the mean in units of the standard deviation is called a standardized variable; it is dimensionless and is given by

\[ z = \frac{X - \bar{X}}{S} \]

where z is the standard score, X is a data value, \( \bar{X} \) is the mean of the data, and S is the standard deviation of the data.

When deviations from the mean are expressed in units of the standard deviation, they are said to be expressed in standard scores, which are useful for comparing distributions (Spiegel and Stephens 1999). Standardization is used here to eliminate the effect of different amplitudes across channels: after this step the channels have comparable amplitudes, so only the signal dynamics affect the calculations.
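Applied per channel within a window, the standard score takes only a few lines. The Python sketch below is an assumption-labeled illustration: it standardizes each row of a (channels, samples) window using the sample standard deviation (ddof = 1), and it does not guard against a perfectly flat channel, which would cause a division by zero.

```python
import numpy as np

def zscore_channels(window):
    """Standardize each channel (row) of a (channels, samples) window: z = (x - mean) / S."""
    mean = window.mean(axis=1, keepdims=True)
    std = window.std(axis=1, ddof=1, keepdims=True)  # sample standard deviation S
    return (window - mean) / std
```

After this step, every channel has zero mean and unit standard deviation, so channels recorded with very different amplitudes contribute on an equal footing to the model fit.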

Fitting model

Step (3) Next, an appropriate model is fitted to the data (an MVAR model for the DTF method and an AR model for the GC method). A segmentation-based adaptive MVAR (AMVAR) approach is adopted, similar in concept to the short-time Fourier transform and other windowing techniques. A window of length W is extracted from the multivariate dataset, and a VAR[p] model is fitted to the data in it (as in the dataset, six channels are included in the MVAR model). The window is then shifted by a small step Q, and the procedure is repeated until the start of the window exceeds T − W, where T is the length of the data. This produces floor((T − W)/Q + 1) VAR coefficient matrices that describe the evolution of the VAR[p] model over time. The rationale is that, within a sufficiently small window, the data are locally stationary and therefore suitable for VAR modeling, while overlapping windows (small Q) yield coefficients that change smoothly over time. Figure 3 illustrates a schematic of the sliding-window AMVAR approach.
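The segmentation-based AMVAR procedure can be sketched with an ordinary least-squares VAR fit applied to successive windows. This Python code is a simplified stand-in for the SIFT estimator used in the paper, not a reproduction of it: it assumes zero-mean (standardized) data and therefore omits an intercept, and the function names are hypothetical.

```python
import numpy as np

def fit_var(window, p=5):
    """Least-squares VAR[p] fit on one (channels, samples) window.

    Returns coefs (p, n, n) with x[t] ~ sum_k coefs[k-1] @ x[t-k],
    and the residual covariance (n, n). Assumes zero-mean data (no intercept).
    """
    n, T = window.shape
    # Row block k-1 of Z holds x[t-k] for t = p..T-1
    Z = np.vstack([window[:, p - k:T - k] for k in range(1, p + 1)])  # (n*p, T-p)
    Y = window[:, p:]                                                 # (n, T-p)
    B = np.linalg.lstsq(Z.T, Y.T, rcond=None)[0].T                    # (n, n*p)
    coefs = B.reshape(n, p, n).transpose(1, 0, 2)                     # (p, n, n)
    resid = Y - B @ Z
    cov = resid @ resid.T / (T - p)
    return coefs, cov

def sliding_var(data, W, Q, p=5):
    """Fit VAR[p] in windows of W samples shifted by Q: floor((T - W)/Q) + 1 models."""
    T = data.shape[-1]
    n_windows = (T - W) // Q + 1
    return [fit_var(data[:, s * Q:s * Q + W], p) for s in range(n_windows)]
```

With W = 1280 and Q = 640 samples (5 s and 2.5 s at 256 Hz), each returned coefficient array can be passed to a frequency-domain measure such as DTF.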

In practice, a model is fitted to the data in each 5-s window. As explained in the Results section, the window size is fixed at 5 s, while the data are divided into intervals of different lengths (300, 600 and 900 s) for a precise comparison. To check whether the selected model order is acceptable, the Akaike Information Criterion (AIC) was computed with the SIFT toolbox on randomly selected segments of the data. The window length, step size and model order are 5 s, 2.5 s and 5, respectively, and the validity of the model order is supported by the AIC, as shown in Fig. 4.
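A model-order check of this kind can be sketched with the common VAR form of the criterion, \( \mathrm{AIC}(p) = \ln\det\hat{\Sigma}_p + 2pn^2/N \), where n is the number of channels and N the number of fitted samples. This Python sketch is an assumption: SIFT’s exact AIC variant may differ, and for simplicity each order is fitted on its own effective sample size T − p.

```python
import numpy as np

def var_residual_cov(window, p):
    """Residual covariance of a least-squares VAR[p] fit (no intercept)."""
    n, T = window.shape
    Z = np.vstack([window[:, p - k:T - k] for k in range(1, p + 1)])
    Y = window[:, p:]
    B = np.linalg.lstsq(Z.T, Y.T, rcond=None)[0].T
    resid = Y - B @ Z
    return resid @ resid.T / (T - p)

def select_order_aic(window, max_order=15):
    """Return the order minimizing AIC(p) = ln det(Sigma_p) + 2 p n^2 / N, and all scores."""
    n, T = window.shape
    scores = {}
    for p in range(1, max_order + 1):
        _, logdet = np.linalg.slogdet(var_residual_cov(window, p))
        scores[p] = logdet + 2 * p * n * n / (T - p)
    return min(scores, key=scores.get), scores
```

Plotting `scores` against p gives a curve comparable in spirit to Fig. 4: the order is acceptable when the criterion has flattened out or reached its minimum.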

Extracting EC matrices

Step (4) In each 5-s window, a 6 × 6 EC matrix corresponding to the six channels is obtained. For an accurate comparison, all calculations are performed in five frequency bands (delta: 1–4 Hz, theta: 4–8 Hz, alpha: 8–13 Hz, beta: 13–32 Hz, and gamma: 32–127 Hz). For both methods, the data are divided into intervals before the calculations to accelerate them and to avoid slowdowns that might otherwise occur. Note that the EC time courses themselves did not prove informative for prediction; the next stage is therefore based on the standard deviation of the EC variables.
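One way to obtain one EC matrix per frequency band is to average a frequency-resolved measure (such as DTF) over the bins of each band. The Python sketch below assumes averaging (the text does not state how bins are combined) and assigns bins to half-open intervals [lo, hi), including the upper edge of the gamma band so that 127 Hz is kept; the names are hypothetical.

```python
import numpy as np

# Band limits in Hz, as listed in Step (4)
BANDS = {"delta": (1, 4), "theta": (4, 8), "alpha": (8, 13),
         "beta": (13, 32), "gamma": (32, 127)}

def band_average(spectral_ec, freqs, bands=BANDS):
    """Collapse a frequency-resolved EC array (n_freqs, n, n) into one (n, n) matrix per band."""
    freqs = np.asarray(freqs)
    names = list(bands)
    out = {}
    for i, name in enumerate(names):
        lo, hi = bands[name]
        if i == len(names) - 1:
            mask = (freqs >= lo) & (freqs <= hi)   # keep the top edge for the last band
        else:
            mask = (freqs >= lo) & (freqs < hi)
        out[name] = spectral_ec[mask].mean(axis=0)
    return out
```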

Calculating standard deviation of EC matrices

Step (5) As mentioned, EC itself, as a time-varying variable, is not suitable for the methods, whereas the standard deviation (SD) of EC proves to be an informative variable. At this stage, therefore, the SD variations of the EC matrices obtained by the two methods (DTF and GC) are defined as time-varying indicators. Epileptic seizures can then be predicted from the way these features change over time as a seizure approaches in the preictal data.
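The text does not specify exactly how the SD is taken, so the following Python sketch makes one plausible assumption explicit: the SD is computed element-wise over the EC matrices of all sliding windows that fall within one data interval (300, 600 or 900 s). With 5-s windows and a 2.5-s step, a 300-s interval contains (300 − 5)/2.5 + 1 = 119 windows. The function name is hypothetical.

```python
import numpy as np

def ec_std_per_interval(ec_series, windows_per_interval):
    """Element-wise SD of EC matrices within consecutive, non-overlapping intervals.

    ec_series: (n_windows, n, n) EC matrices from successive sliding windows.
    Returns (n_intervals, n, n); an incomplete trailing interval is dropped.
    """
    n_int = ec_series.shape[0] // windows_per_interval
    trimmed = ec_series[: n_int * windows_per_interval]
    grouped = trimmed.reshape(n_int, windows_per_interval, *ec_series.shape[1:])
    return grouped.std(axis=1, ddof=1)
```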

Extracting thresholds and binary matrices

Next, the thresholds are calculated. The thresholding technique requires the first hour of each patient’s interictal data, which serves as the training set. The method is patient-specific, and thresholding is applied to the variation of the indices over time, so that seizures can be forecast by tracking how the defined variables change as a seizure approaches.

Two time-variant coefficients are defined, GCECI and DTFECI, which describe the changes over time in the standard deviation (SD) of effective connectivity obtained by the GC and DTF methods, respectively. The indices are compared with EC thresholds obtained from the training (interictal) dataset. To track changes over time, the matrices containing the average index changes in each interval of the interictal and preictal data are compared.

Step (6) Thresholds are defined individually for each patient according to formula (2), in which Average is the mean of EC and Standard Deviation is the standard deviation of EC over the first hour of the patient’s interictal data. The Threshold Index is set to 300 to obtain a higher-resolution image; the thresholds change in steps determined by this number, and choosing a different number would change the image resolution accordingly. The resulting maximum and minimum thresholds (the threshold distance) for each patient are then applied to all time-variant indices. As shown in Fig. 5, the changes of the indicators over time are compared against the threshold distance. For each frequency band, each component is stored as 0 or 1 depending on whether it lies inside or outside the threshold distance. In this way, a binary matrix is obtained for each interval.

Changes of the time-variant coefficients over a segment of data. As indicated in the corner of the image, a threshold distance (consisting of a maximum and a minimum threshold) is defined for each variable characterizing the variation between channels. If the change leaves the defined threshold distance, a 1 is recorded in the binary matrix, indicating seizure detection. The binary matrices are therefore the basis for detecting, or not detecting, seizures over time

The distance factor is set to 0.01. Matrices of the defined indices are obtained and compared with the threshold distances.

Step (7) Comparing the connectivity matrices with the thresholds yields binary matrices of 36 components (6 × 6). Each connectivity component is replaced by 0 or 1, where 1 indicates that the component has left the threshold distance, that is, that the signal pattern has changed in a way that suggests an approaching seizure. For each interval, the ones in the binary matrix are then summed. A criterion called the Binary Threshold (BT) is defined, with values 5, 10, 15, 20, 25 and 30 (the largest BT, 30, stays below the 36 components of a binary matrix). If the total number of ones in a binary matrix exceeds the BT, an approaching seizure is detected and an alarm is raised. Otherwise, the process moves to the next window and repeats the calculations, meaning that no approaching seizure has been recognized in the current window.
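Steps (6) and (7) reduce to a simple decision rule once the threshold distance is known. Formula (2) for the thresholds is not reproduced here, so the Python sketch below takes the lower and upper thresholds as given (6 × 6) matrices; it marks every component outside [lower, upper] with 1, sums the ones (ToC), and raises an alarm when the ToC exceeds the BT. The function names are hypothetical.

```python
import numpy as np

def binary_matrix(index, lower, upper):
    """1 where the time-variant index leaves the threshold distance [lower, upper], else 0."""
    return ((index < lower) | (index > upper)).astype(int)

def raise_alarm(index, lower, upper, bt):
    """Alarm when the total of ones (ToC) in the binary matrix exceeds the Binary Threshold."""
    toc = int(binary_matrix(index, lower, upper).sum())
    return toc > bt, toc

# Example: 12 of the 36 components leave the threshold distance
lower, upper = np.zeros((6, 6)), np.ones((6, 6))
index = np.full((6, 6), 0.5)
index.flat[:12] = 2.0
print(raise_alarm(index, lower, upper, bt=10))
```

Sweeping `bt` over 5, 10, 15, 20, 25 and 30 trades sensitivity against false alarms: a lower BT fires more often, a higher BT fires only when many channel pairs change together.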

Results

In this section, the results of the proposed approach are first compared with those of random and periodic predictors, and the calculation of sensitivity and false prediction rate is explained. The effect of dividing the data into intervals of 300, 600 and 900 s on the method’s performance is then illustrated.

As described above, a total exceeding the defined BT is considered an alarm. Because the data consist of two groups (preictal and interictal), the alarms are recorded separately for each group: alarms on the interictal data are used to calculate the false prediction rate (FPR), and alarms on the preictal data are used to calculate sensitivity. In other words, sensitivity is estimated from the relative number of correct predictions on the preictal segments, while the FPR is determined on the interictal segments. The performance of the method is evaluated with these two statistical criteria, defined in (3) and (4):

\[ \text{Sensitivity} = \frac{TP}{TP + FN} \quad (3) \]

\[ \text{FPR} = \frac{FP}{T_{\text{interictal}}} \quad (4) \]

where FP is the number of false alarms and \( T_{\text{interictal}} \) is the duration of the interictal recordings in hours.

An ideal prediction algorithm raises a warning before the seizure and also specifies the exact time of onset. To account for the uncertainty in the onset time, a seizure occurrence period (SOP) is defined as the interval within which onset is expected, and a seizure prediction horizon (SPH) is defined as the interval between the alarm and the beginning of the SOP, as shown in Fig. 6. A false alarm occurs when no component of the EC matrices lies between the threshold distances in the dataset, whereas a true alarm corresponds to the existence of such a component. Specifically, true positives (TP) are correct alarms within the SOP, and false negatives (FN) are seizures in the SOP that the system misses.
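The SPH/SOP convention can be turned into a small scoring function. This Python sketch is a simplification, not the paper’s evaluation code: it scores alarms and seizure onsets on a single timeline in minutes, counts an alarm at time t as correct if an onset falls in (t + SPH, t + SPH + SOP], and divides the unmatched alarms by a given number of recording hours. The function name and the half-open interval boundary are assumptions.

```python
import numpy as np

def evaluate(alarm_times, onsets, sph, sop, hours):
    """Sensitivity and false prediction rate (FP/h) under the SPH/SOP convention.

    Times are in minutes; `hours` is the recording duration used to normalize false alarms.
    """
    alarm_times = np.asarray(alarm_times, dtype=float)
    onsets = np.asarray(onsets, dtype=float)
    lag = onsets[None, :] - alarm_times[:, None]        # onset minus alarm time
    hit = (lag > sph) & (lag <= sph + sop)              # hit[i, j]: alarm i predicts seizure j
    tp = int(hit.any(axis=0).sum())                     # seizures with at least one correct alarm
    fn = len(onsets) - tp                               # missed seizures
    fp = int((~hit.any(axis=1)).sum())                  # alarms that predict no seizure
    sensitivity = tp / (tp + fn) if len(onsets) else float("nan")
    return sensitivity, fp / hours

# Two seizures at 100 and 300 min, SPH = 10 min, SOP = 50 min, 4 h of recording
print(evaluate([60, 200, 290], [100, 300], sph=10, sop=50, hours=4))
```

Here only the alarm at 60 min falls in a valid window, so one of two seizures is predicted and two alarms count as false.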

Performance of a prediction method during an interictal and a preictal period. Vertical lines mark seizure onset. a EEG recordings; b an example time course of a feature extracted by a seizure prediction algorithm, with the horizontal line indicating the threshold for raising alarms; c a prediction, characterized by alarm events and two consecutive time intervals, the seizure prediction horizon (SPH) and the seizure occurrence period (SOP). Note that the time scales of the EEG data and of the feature time series differ

To illustrate the performance of the proposed method, Fig. 7 shows sensitivity versus FPR for the GC and DTF methods, compared with random and periodic predictors. These figures show that the proposed method performs considerably better. The periodic predictor raises alarms at constant time intervals, whereas the random predictor generates false predictions according to a homogeneous Poisson process.
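Reference curves for these two baselines are commonly computed in closed form: a Poisson predictor with rate FPR raises at least one alarm within an SOP with probability \( 1 - e^{-\mathrm{FPR}\cdot\mathrm{SOP}} \), and a periodic predictor with period 1/FPR hits a fraction \( \min(1, \mathrm{FPR}\cdot\mathrm{SOP}) \) of seizures. This Python sketch assumes those standard approximations (used, for example, in the seizure prediction characteristic of Winterhalder et al. 2003); whether Fig. 7 was produced exactly this way is an assumption.

```python
import math

def random_predictor_sensitivity(fpr_per_hour, sop_minutes):
    """Poisson (random) predictor: probability of at least one alarm within one SOP."""
    return 1.0 - math.exp(-fpr_per_hour * sop_minutes / 60.0)

def periodic_predictor_sensitivity(fpr_per_hour, sop_minutes):
    """Periodic predictor: alarms every 1/FPR hours hit a fraction SOP * FPR of seizures."""
    return min(1.0, fpr_per_hour * sop_minutes / 60.0)
```

Under these approximations, an FPR of 0.33 FP/h and an SOP of 50 min give a chance-level sensitivity of about 24% for the random predictor and 27.5% for the periodic one, so a useful method should clearly exceed both at the same FPR.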

Results based on the lower BT values are not included in the final results. However, all BTs are used when calculating FPR and sensitivity, so the graphs provide a precise comparison between the proposed approach and the other predictors. For the rest of the paper, we therefore present only how dividing the data into intervals affects the method’s performance.

To compare the results, we test the hypothesis that the length of the data intervals significantly affects the calculations. As mentioned in the previous sections, the window size is fixed at 5 s, while for simplicity the data are divided into intervals of different lengths (300, 600 and 900 s) for a precise comparison. The results in Table 1 support this hypothesis and confirm an impact on the forecasting horizon: SPH and SOP are set to 10 and 50 min for data lengths (DL) of 300 and 600 s, and to 15 and 45 min for DL = 900 s. In this way, we investigate the ability of the method under various conditions of DL and forecasting horizon. Note also that a DL greater than 900 s would complicate the calculations and delay the alarm warnings.

Table 1 reports the maximum sensitivity of the GC and DTF methods. According to this table, with SPH held constant at 10 min, shrinking the data length enhanced the sensitivity of the system, which reached 66.27% and 79.76%, respectively, for the 300 s and 600 s data lengths. When the DL is halved, the next data segment becomes available after a shorter time. Consequently, as long as the alarm delay remains negligible, the sensitivity of the proposed method improves significantly. To evaluate the method further, we used DL = 900 s with SPH changed to 15 min. A longer SPH is clinically more useful and improves the practical value of the designed system: it gives patients a safe period in which to avoid hazardous tasks or dangerous places, or to take medication, since no seizure is expected during this period. In addition, for the same SPH, how quickly the next data segment becomes available noticeably affects sensitivity, despite the well-established role of SPH length.

Discussion

There are several reasons why electrophysiological signals are the most appropriate means of studying effective connectivity. First, they measure neuronal activity at an aggregate level. Second, their temporal resolution matches the millisecond time scale of neuronal processing (Schoffelen and Gross 2009). These signals can be measured invasively or non-invasively. Invasive acquisition through electrodes implanted on the brain provides high signal quality and spatial accuracy. Non-invasive techniques, on the other hand, are widely used because of their high sampling frequency, and source reconstruction techniques can increase their signal-to-noise ratio and spatial resolution (Brookes et al. 2012).

We trained and tested our algorithm on the Freiburg dataset because we assumed that intracranial EEG recordings are more accurate than scalp EEG recordings. Zhang and Parhi (2016) showed that scalp EEG recordings, such as the MIT (sEEG) database, yield lower sensitivity and a higher FP rate than the Freiburg (iEEG) database. This is because intracranial recordings usually have greater spatial resolution and signal-to-noise ratio owing to their proximity to neural activity. Scalp EEG is therefore a noisier measure of neural activity, and the external environment is more likely to interfere with the results than with iEEG, which decreases sensitivity and increases the FP rate. Nevertheless, because iEEG is invasive, acquiring iEEG recordings carries a risk of infection, and the surgery to implant the electrodes can be costly. In addition, scalp EEG covers a larger area of the brain than iEEG. Each type of dataset can therefore be practical depending on time, cost and accuracy, while results obtained on iEEG, as in our study, are likely to be among the most accurate for predicting epileptic seizures.

The features extracted from the 21-patient dataset should be sufficient to achieve the technical results reported for the new approach. Several studies have shown that the Freiburg dataset can yield scientific outcomes of practical value, in both clinical and research contexts, for epileptic seizure prediction (Yildirim and Ozdemir 2014; Ghaderyana et al. 2014; Zhang and Parhi 2014; Mirowski et al. 2009; Donos et al. 2018; Wang and Lyu 2015; Schulze-Bonhage et al. 2011; Truong et al. 2018; Sharif and Jafari 2017; Zhang et al. 2014).

As noted at the beginning of this article, epilepsy is an important neurological disorder, and in recent years new methods have been proposed to predict seizures. In this research, interactions between brain regions were studied using the Granger causality and directed transfer function methods, and the hypothesis that a variable changes near an epileptic seizure was evaluated. Although EC itself did not provide significant results, the standard deviation of EC proved to be a useful fluctuating variable over time. For this purpose, we introduced two time-varying indices (GCECI and DTFECI) whose variations are evaluated with the thresholding technique.

Note that the binary matrices are defined based on the changes in each channel over time. The results can therefore be interpreted as the probability of seizure occurrence, surveyed in each channel and in each of the five frequency bands. These findings support the hypothesis that seizures can be predicted accurately by tracking the changes of the variable. A longer SPH gives better results and gives patients time to take precautions, which can also help them feel psychologically safe, whereas a longer data length affects the forecasting horizon negatively. Shorter data lengths are therefore more convenient, because the next part of the data becomes available sooner.

Our findings confirm that EC variables change markedly near seizures, which can be useful for seizure prediction. Our results also agree with the literature on the advantage of multivariate models over bivariate ones. It is not surprising that the multivariate model performs better: the results of the DTF method are better than those of GC. An AR model is adequate for a single time series, whereas an MVAR model should be used to model multiple time series jointly. It is generally accepted that multivariate models reduce the spurious indirect connections that appear in bivariate analysis, and may therefore be more practical than bivariate models. This hypothesis is supported by defining matrices of the two indexes (GCECI and DTFECI) over time and applying the thresholding technique. Based on these results, we consider this approach acceptable and promising.
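To make the bivariate/multivariate contrast concrete, here is an independent NumPy sketch, not the implementation used in the study: an MVAR model fitted by ordinary least squares, the normalized DTF of Kaminski and Blinowska (1991) computed from it as γ_ij²(f) = |H_ij(f)|² / Σ_m |H_im(f)|² (flow j → i), and a time-domain bivariate Granger causality, ln(restricted residual variance / full residual variance). The model order, sampling rate and simulated data are illustrative assumptions.

```python
import numpy as np


def fit_mvar(x, p):
    """Least-squares MVAR fit of x (n_ch, n_samples) with model order p.

    Returns A with shape (p, n_ch, n_ch) such that
    x[:, t] is approximated by sum_k A[k - 1] @ x[:, t - k].
    """
    x = np.asarray(x, dtype=float)
    x = x - x.mean(axis=1, keepdims=True)
    n_ch, n = x.shape
    # Row for time t holds the lagged values [x(t-1), x(t-2), ..., x(t-p)].
    X = np.hstack([x[:, p - k : n - k].T for k in range(1, p + 1)])
    Y = x[:, p:].T
    B, *_ = np.linalg.lstsq(X, Y, rcond=None)
    return np.stack([B[k * n_ch : (k + 1) * n_ch].T for k in range(p)])


def dtf(A, freqs, fs):
    """Normalized DTF: out[f, i, j] = |H_ij|^2 / sum_m |H_im|^2 (flow j -> i)."""
    p, n_ch, _ = A.shape
    lags = np.arange(1, p + 1)
    out = np.empty((len(freqs), n_ch, n_ch))
    for fi, f in enumerate(freqs):
        z = np.exp(-2j * np.pi * f * lags / fs)
        # A(f) = I - sum_k A_k exp(-2 pi i f k / fs); H(f) = A(f)^-1
        A_f = np.eye(n_ch) - np.tensordot(z, A, axes=1)
        power = np.abs(np.linalg.inv(A_f)) ** 2
        out[fi] = power / power.sum(axis=1, keepdims=True)
    return out


def bivariate_gc(x_from, x_to, p):
    """Time-domain GC x_from -> x_to using only this pair of channels."""
    def residual_var(target, regressors):
        n = len(target)
        X = np.column_stack([r[p - k : n - k] for r in regressors
                             for k in range(1, p + 1)])
        y = target[p:]
        beta, *_ = np.linalg.lstsq(X, y, rcond=None)
        return np.var(y - X @ beta)

    x_from = np.asarray(x_from, float) - np.mean(x_from)
    x_to = np.asarray(x_to, float) - np.mean(x_to)
    return np.log(residual_var(x_to, [x_to]) / residual_var(x_to, [x_to, x_from]))


# Simulated example: channel 0 drives channel 1 with a one-sample lag.
rng = np.random.default_rng(0)
n = 5000
x0 = rng.standard_normal(n)
x1 = np.empty(n)
x1[0] = rng.standard_normal()
x1[1:] = 0.8 * x0[:-1] + 0.6 * rng.standard_normal(n - 1)
x = np.vstack([x0, x1])

freqs = np.linspace(1, 100, 50)
D = dtf(fit_mvar(x, p=2), freqs, fs=256.0)
print(D[:, 1, 0].mean(), D[:, 0, 1].mean())  # flow 0 -> 1 clearly dominates
print(bivariate_gc(x0, x1, 2), bivariate_gc(x1, x0, 2))
```

With only two channels the bivariate and multivariate views coincide; the difference appears with three or more channels, where the MVAR fit conditions every pair on all remaining channels.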

The results show high sensitivity, especially in the gamma and beta frequency bands. The strongest and most significant values were obtained with the DTF method, which is based on fitting a multivariate model to the data.
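One simple way to obtain band-wise values such as these is to average a spectral measure like DTF over the frequency bins of each band. The section does not list the band edges, so the limits in `BANDS` below are conventional values chosen as an assumption (the 80 Hz gamma upper limit in particular), and `band_average` is a hypothetical helper.

```python
import numpy as np

# Assumed band edges in Hz; the source text does not specify them.
BANDS = {
    "delta": (1, 4),
    "theta": (4, 8),
    "alpha": (8, 13),
    "beta": (13, 30),
    "gamma": (30, 80),
}


def band_average(spec, freqs, bands=BANDS):
    """Average spec (n_freqs, n_ch, n_ch) over the bins of each band.

    Bands are half-open intervals [lo, hi) so adjacent bands share no bin.
    """
    spec = np.asarray(spec, dtype=float)
    freqs = np.asarray(freqs, dtype=float)
    out = {}
    for name, (lo, hi) in bands.items():
        mask = (freqs >= lo) & (freqs < hi)
        if not mask.any():
            raise ValueError(f"no frequency bins fall in band {name!r}")
        out[name] = spec[mask].mean(axis=0)
    return out


# Example: a dummy 2-channel "spectrum" whose value equals the frequency.
freqs_demo = np.arange(100.0)
spec_demo = freqs_demo[:, None, None] * np.ones((1, 2, 2))
print(band_average(spec_demo, freqs_demo)["beta"])  # 2x2 array of 21.0
```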

Several epileptic seizure prediction studies have used the Freiburg dataset and a thresholding approach, but they differ from ours in the number of patients, the lengths of the SPH and SOP, and other settings. Studies with fewer patients tend to report higher sensitivity; for example, Bedeeuzzaman et al. (2014) and Zhang and Parhi (2016) achieved 100% sensitivity with their proposed techniques. By contrast, studies that used the 21-patient dataset, namely Winterhalder et al. (2003), Maiwald et al. (2004), Mamaghanian et al. (2008) and Niknazar (2013), reported sensitivities of 42%, 13–42%, 42%, and 67.94%, respectively. Although the methods differ, the maximum sensitivity in our study, 79.76%, is substantially higher than these previous results under relatively similar conditions.
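Sensitivity and false prediction rate depend on how alarms are scored against the SPH and SOP. The sketch below uses a simplified form of the seizure prediction characteristic (Winterhalder et al. 2003): an alarm at time a counts as correct if a seizure onset falls in [a + SPH, a + SPH + SOP], and otherwise counts as a false alarm. Normalizing false alarms by total recording hours (rather than interictal hours), the helper name `score_alarms` and the example timings are simplifying assumptions for illustration.

```python
def score_alarms(alarm_times, seizure_onsets, sph, sop, record_hours):
    """Simplified SPH/SOP scoring; all times in minutes.

    Returns (sensitivity, false predictions per hour). False alarms are
    divided by total recording hours, a simplification of the usual
    interictal-hours normalisation.
    """
    if not seizure_onsets:
        raise ValueError("need at least one seizure to compute sensitivity")
    predicted = set()
    false_alarms = 0
    for a in alarm_times:
        # Indices of seizures whose onset lies in this alarm's SOP window.
        hits = [i for i, s in enumerate(seizure_onsets)
                if a + sph <= s <= a + sph + sop]
        if hits:
            predicted.update(hits)
        else:
            false_alarms += 1
    return len(predicted) / len(seizure_onsets), false_alarms / record_hours


# Illustrative numbers only: seizures at 100 and 400 min, 8 h of recording,
# SPH = 10 min and SOP = 50 min.
sens, fph = score_alarms([45, 200], [100, 400], sph=10, sop=50, record_hours=8)
print(sens, fph)  # 0.5 0.125
```

Because a longer SOP widens each alarm's acceptance window, sensitivity and FP/h reported under different SPH/SOP settings are not directly comparable, which is why such settings matter when comparing studies.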

Limitations of the study

This study has some limitations. In particular, all processing was performed offline. Further research is needed to accelerate the computations so that prediction can run in real time, which clinical applications require. In future work, we also plan to compare the results of the proposed method on invasive and non-invasive datasets. Overall, our results support previous studies that highlight the distinctive role of effective connectivity changes in EEG recordings of epileptic seizures.

Notes

GCECI: Granger Causality Effective Connectivity Index.

DTFECI: Directed Transfer Function Effective Connectivity Index.

References

Adey WR, Walter DO, Hendrix CE (1961) Computer techniques in correlation and spectral analyses of cerebral slow waves during discriminative behavior. Exp Neurol 3(6):501–524

Aertsen A, Preissl H (1991) Dynamics of activity and connectivity in physiological neuronal networks. In: Schuster HG (ed) Nonlinear dynamics and neuronal networks. VCH Publishers, New York, pp 281–301

Bandarabadi M, Teixeira CA et al (2015) Epileptic seizure prediction using relative spectral power features. Clin Neurophysiol 126(2):237–248

Bedeeuzzaman M, Fathima T et al (2014) Seizure prediction using statistical dispersion measures of intracranial EEG. Biomed Signal Process Control 10:338–341

Brookes MJ, Woolrich MW, Barnes GR (2012) Measuring functional connectivity in MEG: a multivariate approach insensitive to linear source leakage. Neuroimage 63:910–920

Cadotte AJ, DeMarse TB, Mareci TH, Parekh MB, Talathi SS, Hwang DU, Ditto WL, Ding M, Carney PR (2010) Granger Causality relationships between local field potentials in an animal model of temporal lobe epilepsy. J Neurosci Methods 189(1):121–129

Chatfield C (2003) The analysis of time series: an introduction. CRC texts in statistical science, 6th edn. Chapman & Hall, London

Coben R, Mohammad-Rezazadeh I (2015) Neural connectivity in epilepsy as measured by Granger causality. Front Hum Neurosci 9:194

D’Alessandro M, Esteller R, Vachtsevanos G, Hinson A, Echauz J, Litt B (2003) Epileptic seizure prediction using hybrid feature selection over multiple intracranial EEG electrode contacts. IEEE Trans Biomed Eng 50(5):603–615

Dasdemir Y, Yildirim E, Yildirim S (2017) Analysis of functional brain connections for positive–negative emotions using phase locking value. Cogn Neurodyn 11:487–500

Déli E, Tozzi A, Peters JF (2017) Relationships between short and fast brain timescales. Cogn Neurodyn 11:539–552

Donos C, Maliia MD, Dumpelmann M (2018) Seizure onset predicts its type. Epilepsia 59:650–660

EEGLAB open source toolbox (2011) http://sccn.ucsd.edu/eeglab/. Accessed May 18 2014

Franaszczuk PJ, Bergey GK (1998) Application of the directed transfer function method to mesial and lateral onset temporal lobe seizures. Brain Topogr 11(1):13–21

Georgiou J, Nicolaou N (2014) Neural network-based classification of anesthesia/awareness using Granger causality features. Clin EEG Neurosci 45(2):77–88

Ghaderyana P, Abbasia A, Sedaaghi MH (2014) An efficient seizure prediction method using KNN-based undersampling and linear frequency measures. J Neurosci Methods 232:134–142

Granger CWJ (1969) Investigating causal relations by econometric models and cross-spectral methods. Econometrica 37(3):424–438

Granger C (1980) Testing for causality: a personal viewpoint. J Econ Dyn Control 2:329–352

Guntekin B, Femir B, Golbas BT, Tulay E, Basar E (2017) Affective pictures processing is reflected by an increased long-distance EEG connectivity. Cogn Neurodyn 11:355–367

He F, Wei H-L, Billings SA, Sarrigiannis PG (2014) A nonlinear generalization of spectral Granger causality. IEEE Trans Biomed Eng 61:1693–1701

Hu S, Wang H, Zhang J, Kong W, Cao Y, Kozma R (2015) Comparison analysis: Granger causality and new causality and their applications to motor imagery. IEEE Trans Neural Netw Learn Syst

Hussain L (2018) Detecting epileptic seizure with different feature extracting strategies using robust machine learning classification techniques by applying advance parameter optimization approach. Cogn Neurodyn 12:271–294

Iasemidis LD, Shiau DS, Chaovalitwongse W, Sackellares JC, Pardalos PM, Principe JC et al (2003) Adaptive epileptic seizure prediction system. IEEE Trans Biomed Eng 50:616–627

Iasemidis LD, Shiau DS, Pardalos PM, Chaovalitwongse W, Narayanan K, Prasad A et al (2005) Long-term prospective on-line real-time seizure prediction. Clin Neurophysiol 116(3):532–544

Kamiński M (2005) Determination of transmission patterns in multichannel data. Phil Trans R Soc Lond Ser B Biol Sci 360(1457):947–952

Kaminski MJ, Blinowska KJ (1991) A new method of the description of the information flow in brain structures. Biol Cybern 65(3):203–210

Kamiński M, Ding M, Truccolo WA, Bressler SL (2001) Evaluating causal relations in neural systems: Granger causality, directed transfer function and statistical assessment of significance. Biol Cybern 85(2):145–157

Kong W, Lin W, Babiloni F, Hu S, Borghini G (2015) Investigating driver fatigue versus alertness using the Granger causality network. Sensors 15:19181–19198

Korzeniewska A, Cervenka MC, Jouny CC, Perilla JR, Harezlak J, Bergey GK, Franaszczuk PJ, Crone NE (2014) Ictal propagation of high frequency activity is recapitulated in interictal recordings: effective connectivity of epileptogenic networks recorded with intracranial EEG. Neuroimage 101:96–113

Lehnertz K et al (2007) State-of-the-art of seizure prediction. J Clin Neurophysiol 24(2):147–153

Li F, Liang Y, Zhang L, Yi C, Liao Y, Jiang Y, Si Y, Zhang Y, Yao D, Yu L, Xu P (2019) Transition of brain networks from an interictal to a preictal state preceding a seizure revealed by scalp EEG network analysis. Cogn Neurodyn 13(2):175–181

Lin FH, Hara K, Solo V, Vangel M, Belliveau JW, Stufflebeam SM, Hamalainen MS (2009) Dynamic Granger–Geweke causality modeling with application to interictal spike propagation. Hum Brain Mapp 30(6):1877–1886

Liu S, Molenaar P (2016) Testing for Granger causality in the frequency domain: a phase resampling method. Multivar Behav Res 51(1):53–66

Maiwald T, Winterhalder M, Aschenbrenner-Scheibe R et al (2004) Comparison of three nonlinear seizure prediction methods by means of the seizure prediction characteristic. Phys D Nonlinear Phenom 194:357–368

Malekpour S, Sethares WA (2015) Conditional Granger causality and partitioned Granger causality: differences and similarities. Biol Cybern 109:627–637

Mamaghanian H, Shamsollahi MB, Hajipour S (2008) Tracking dynamical transition of epileptic EEG using particle filter. In: IEEE international symposium on signal processing and information technology, ISSPIT, pp 270–274. https://doi.org/10.1109/isspit.4775727

Marshall WJ, Lackner CL, Marriott P, Santesso DL, Segalowitz SJ (2014) Using phase shift Granger causality to measure directed connectivity in EEG recordings. Brain Connect 4(10):826–841

McBride JC, Zhao X, Munro NB, Jicha GA, Schmitt FA, Kryscio RJ, Smith CD, Jiang Y (2015) Sugihara causality analysis of scalp EEG for detection of early Alzheimer’s disease. NeuroImage Clin 7:258–265

Medvedev A, Willoughby JO (1999) Autoregressive modeling of the EEG in systemic kainic acid-induced epileptogenesis. Int J Neurosci 97(3–4):149–167

Mirowski P, Madhavan D, LeCun Y, Kuzniecky R (2009) Classification of patterns of EEG synchronization for seizure prediction. Clin Neurophysiol 120:1927–1940

Niknazar H (2013) Epileptic seizures prediction using EEG signal similarity index. Master’s thesis, Shahed University, Tehran, Iran

Parhizi B, Daliri MR, Behroozi M (2018) Decoding the different states of visual attention using functional and effective connectivity features in fMRI data. Cogn Neurodyn 12(2):157–170

Park E-H, Madsen JR (2018) Granger causality analysis of interictal iEEG predicts seizure focus and ultimate resection. Neurosurgery 82(1):99–109

Protopapa F, Siettos CI, Myatchin I, Lagae L (2016) Children with well controlled epilepsy possess different spatio-temporal patterns of causal network connectivity during a visual working memory task. Cogn Neurodyn 10:99–111

Raghu S, Sriraam N, Pradeep Kumar G (2017) Classification of epileptic seizures using wavelet packet log energy and norm entropies with recurrent Elman neural network classifier. Cogn Neurodyn 11:51–66

Schoffelen JM, Gross J (2009) Source connectivity analysis with MEG and EEG. Hum Brain Mapp 30:1857–1865

Schulze-Bonhage A, Feldwisch-Drentrup H, Ihle M (2011) The role of high-quality EEG databases in the improvement and assessment of seizure prediction methods. Epilepsy Behav 22:S88–S93

Sharif B, Jafari AH (2017) Prediction of epileptic seizures from EEG using analysis of ictal rules on Poincaré plane. Comput Methods Programs Biomed 145:11–22

Siggiridou E, Koutlis C, Tsimpiris A, Kimiskidis VK, Kugiumtzis D (2015) Causality networks from multivariate time series and application to epilepsy. In: 2015 37th annual international conference of the IEEE engineering in medicine and biology society (EMBC). https://doi.org/10.1109/embc.2015.7319281

Spiegel MR, Stephens LJ (1999) Schaum’s outline of theory and problems of statistics, 3rd edn. ch 4, p 94

Staljanssens W, Strobbe G, Van Holen R, Keereman V, Gadeyne S, Carrette E, Meurs A, Pittau F, Momjian S et al (2017) EEG source connectivity to localize the seizure onset zone in patients with drug resistant epilepsy. NeuroImage Clin 16:689–698

Swartz Center for Computational Neuroscience (SCCN), Source Information Flow Toolbox (SIFT) (2011) A center of the Institute for Neural Computation, the University of California San Diego

Talebi N, Nasrabadi AM, Mohammad-Rezazadeh I (2018) Estimation of effective connectivity using multi-layer perceptron artificial neural network. Cogn Neurodyn 12(1):21–42

Truong ND, Nguyen AD, Kuhlmann L, Bonyadi MR, Yang J, Ippolito S, Kavehei O (2018) Convolutional neural networks for seizure prediction using intracranial and scalp electroencephalogram. Neural Netw 105:104–111

University of Freiburg (2008) Seizure Prediction Project Freiburg: EEG database. http://epilepsy.uni-freiburg.de/freiburg-seizure-prediction-project/eeg-database. Accessed Oct 18 2016

Wang N, Lyu MR (2015) Extracting and selecting distinctive EEG features for efficient epileptic seizure prediction. IEEE J Biomed Health Inform 19(5):1648–1659

Wiener N (1956) In: Beckenbach EF (ed) The theory of prediction. Modern Mathematics for the Engineer. McGraw-Hill, New York, pp 165–190

Winterhalder M et al (2003) The seizure prediction characteristic: a general framework to assess and compare seizure prediction methods. Epilepsy Behav 4:318–325

Yildirim N, Ozdemir E (2014) Patient specific seizure prediction system using Hilbert spectrum and Bayesian networks classifiers. Comput Math Methods Med 2014:572082

Zhang Z, Parhi KK (2014) Seizure detection using wavelet decomposition of the prediction error signal from a single channel of intra-cranial EEG. In: 2014 36th annual international conference of the IEEE engineering in medicine and biology society

Zhang Z, Parhi KK (2016) Low-complexity seizure prediction from iEEG/sEEG using spectral power and ratios of spectral power. IEEE Trans Biomed Circuits Syst 10(3):693–706

Zhang Y, Zhou W, Yuan Q, Wu Q (2014) A low computation cost method for seizure prediction. Epilepsy Res 108:1357–1366

Acknowledgements

We are immensely grateful to all experts for their assessments and opinions on the manuscript, although any errors are our own and should not tarnish the reputations of these esteemed professionals. The authors are very grateful to the Epilepsy Center of the University Hospital of Freiburg, Germany, for their consent to use the invasive EEG recordings in this work.


Cite this article

Hejazi, M., Motie Nasrabadi, A. Prediction of epilepsy seizure from multi-channel electroencephalogram by effective connectivity analysis using Granger causality and directed transfer function methods. Cogn Neurodyn 13, 461–473 (2019). https://doi.org/10.1007/s11571-019-09534-z


DOI: https://doi.org/10.1007/s11571-019-09534-z