Abstract

A novel fuzzy 3D filter designed to suppress impulsive noise in color video sequences is proposed. In contrast to other state-of-the-art algorithms, the proposed approach uses the sequence data of the three RGB channels, analyzes eight fuzzy gradient values (one for each of eight directions), and processes two temporally neighboring frames concurrently. Numerous simulation results confirm that this novel 3D framework performs well for different color sequences, both in terms of objective criteria (PSNR, MAE, NCD and SSIM) and in subjective visual assessment. An efficiency analysis of several promising 3D algorithms was performed on a DSP, and the computation times of the various techniques are presented.

1 Introduction

In image processing, different types of noise degrade the performance of digital systems, so developing methods that reduce the number of spurious pixels is a priority in the field. Noise is introduced during the acquisition, transmission or storage of color image sequences. Therefore, it is important to filter each frame of a color sequence before further processing [1, 2]. It is difficult to design techniques that reduce noise while preserving image content such as edges, fine details and chromaticity characteristics [3, 4].

Filters that adapt their characteristics to the current image and noise data have proven effective in restoring images that contain various types of noise with different distributions and image textures [2–6]. The filtering algorithm must be able to discriminate between local pixel variations caused by noise and natural variations in the image structure.

Many filters are based on order statistics, in particular vector order statistics [1–5], [7–10, 20–23]. Recently, fuzzy logic techniques have been used to design several 2D impulsive noise suppression filters [2, 5, 11–19]. These filtering techniques can be applied frame by frame to color sequences. However, ignoring temporal correlations produces temporal inconsistencies. For color sequences, 2D filters do not perform as well as 3D filtering frameworks [24, 31].

Several proposed frameworks exploit the temporal correlations between neighboring frames [6, 9, 10, 25–27]. Algorithms designed for color sequences should use the data from all three channels and may apply vector-based methods [13, 19, 28–34].

To avoid filtering noise-free pixels, which causes blurring, techniques based on weights and switching have been designed [2, 4, 8, 9, 14, 28, 35–41].

Filtering based on fuzzy sets is used in the proposed framework. A special feature of fuzzy filters is their ability to adapt to local image data. The goal of a fuzzy filter is to remove noise from spurious pixels while preserving edges, fine features, noise-free pixels, and texture and chromaticity characteristics. For a noisy pixel, the filter output is either a selected pixel or the result of a filtering procedure that applies fuzzy rules to several neighboring pixels. When several frames are processed, the filter should be able to distinguish among object movement, fine details and corrupted pixels. Fuzzy sets and fuzzy rules form the knowledge base of a fuzzy rule-based reasoning system. Fuzzy sets are a generalization of classical sets. Classical sets over a universe X can be modeled by mappings from X to {0, 1}, whereas fuzzy sets are characterized by mappings from X to [0, 1] (membership functions). In classical set theory, an element \(x \in X\) either is or is not a member of a set. In fuzzy set theory, a more gradual transition between membership and non-membership is allowed, and the degree of membership lies between 0 and 1. Therefore, fuzzy sets are useful for processing human knowledge expressed with linguistic variables (e.g., large, small). For example, a difference in grey level can be described as large, not large, or large to some degree.
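The difference between a classical and a fuzzy set can be illustrated with the linguistic variable "large grey-level difference". The crisp threshold of 50 and the linear ramp from 20 to 80 below are arbitrary, hypothetical choices for this illustration only. They are not parameters of the proposed filter.

```python
def crisp_large(diff, threshold=50):
    """Classical set: a grey-level difference either is (1) or is not (0) 'large'."""
    return 1 if diff >= threshold else 0


def fuzzy_large(diff, low=20, high=80):
    """Fuzzy set: membership rises linearly from 0 at `low` to 1 at `high`."""
    if diff <= low:
        return 0.0
    if diff >= high:
        return 1.0
    return (diff - low) / (high - low)


for d in (10, 50, 100):
    print(d, crisp_large(d), fuzzy_large(d))
```

A difference of 50 is fully "large" for the crisp set, but it is large only to degree 0.5 for the fuzzy set.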

Fuzzy rules are linguistic IF-THEN constructions of the general form "IF A THEN B", where A and B are collections of propositions containing linguistic variables. The IF part of the rule, A, is called the premise or antecedent, and B is the consequent of the rule.

A fuzzy membership function defines how each point in the input space is mapped to a membership value (degree of membership) between 0 and 1. The function can take any form and is chosen by the user on the grounds of simplicity, convenience and efficiency.

Fuzzy filters are based on the observation that noise causes a small fuzzy derivative, while a large fuzzy derivative is caused by the presence of fine details or edges. Fuzzy rules are applied in each direction and take into account local variations, edges and fine features. In image denoising, the fuzzy rules distinguish among noisy pixels, flat areas, edges and fine image features, so that the main characteristics of an image are preserved. In color video sequences, inter-channel processing and motion detection are used to preserve fine details and edges, so that only corrupted pixels are filtered.

In this paper, we propose an efficient fuzzy approach for impulsive noise suppression in color sequences. In contrast to current state-of-the-art fuzzy filters, the proposed framework:

- gathers the sequence data of the red (R), green (G) and blue (B) channels;
- uses fuzzy logic to analyze a basic gradient value and several related gradient values of each pixel in eight directions;
- processes two neighboring frames concurrently.

The results of numerous simulations demonstrate that the proposed 3D filtering framework performs well in terms of objective criteria (PSNR, MAE, NCD and SSIM) and in a subjective visual analysis of the frames of the color sequences. In addition, an efficiency analysis of several promising 3D algorithms was performed on a DSP and in MATLAB, and the computation times of the various techniques are presented.

The paper is organized as follows: Sect. 2 presents the noise model and performance criteria; Sect. 3 explains the proposed framework; Sect. 4 presents and analyzes the simulation results; and Sect. 5 concludes the paper.

2 Impulsive noise model and performance criteria

In all models of impulsive noise in an image, noise appears as color spots with very small or very large values. There are several models of noise contamination. We use a simple model that is the most severe one from the point of view of image degradation [30, 37]. This model requires only prior information about the probability of random spike appearance, \(p_n\), which is independent in each of the three RGB channels. We assume that the impulse amplitudes are random and uniformly distributed over the range of admissible values [0, 255]. This model is employed independently in each color channel of an image:

\[ E(i,j) = \begin{cases} n(i,j), & \text{with probability } p_n,\\ e(i,j), & \text{with probability } 1-p_n. \end{cases} \tag{1} \]

Here, e(i, j) is the original image (or a frame of a video sequence), n(i, j) is the noise value that appears with probability \(p_n\), and E(i, j) is the corrupted image.
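The impulsive noise model described above can be simulated with a short sketch. It assumes that frames are stored as nested lists of (R, G, B) tuples with 8-bit values. The function name `add_random_impulse_noise` is hypothetical.

```python
import random


def add_random_impulse_noise(frame, p_n, rng=None):
    """Corrupt each channel of each pixel independently with probability p_n.

    frame: list of rows, each row a list of (R, G, B) tuples with values 0..255.
    A corrupted channel is replaced by an amplitude drawn uniformly from 0..255.
    """
    if rng is None:
        rng = random.Random()
    noisy = []
    for row in frame:
        noisy_row = []
        for pixel in row:
            noisy_row.append(tuple(
                rng.randint(0, 255) if rng.random() < p_n else value
                for value in pixel))
        noisy.append(noisy_row)
    return noisy


# Usage: a flat 50 x 50 test frame corrupted at p_n = 0.1 (seeded for repeatability).
frame = [[(10, 20, 30)] * 50 for _ in range(50)]
noisy = add_random_impulse_noise(frame, 0.1, random.Random(0))
```

Because each channel is tested separately, about 10 % of the channel samples, rather than 10 % of the pixels, are replaced at \(p_n = 0.1\).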

The performance of the filtered image can be analyzed using different objective criteria. We employ the following objective measures:

- the peak signal-to-noise ratio (PSNR), which measures the ability of a chosen algorithm to suppress noise;
- the mean absolute error (MAE), which characterizes the extent to which edges and fine details are preserved.

Both metrics are defined in the RGB color space:

\[ \mathrm{PSNR} = 10\log_{10}\left(\frac{255^2}{\mathrm{MSE}}\right), \tag{2} \]

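where the mean squared error (MSE) is defined as follows:

\[ \mathrm{MSE} = \frac{1}{3MN}\sum_{i=1}^{M}\sum_{j=1}^{N}\left[(R(i,j)-R_e(i,j))^2+(G(i,j)-G_e(i,j))^2+(B(i,j)-B_e(i,j))^2\right]. \tag{3} \]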

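The MAE is given as:

\[ \mathrm{MAE} = \frac{1}{3MN}\sum_{i=1}^{M}\sum_{j=1}^{N}\left(|R(i,j)-R_e(i,j)|+|G(i,j)-G_e(i,j)|+|B(i,j)-B_e(i,j)|\right). \tag{4} \]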

For both the MSE and the MAE, R(i, j), G(i, j) and B(i, j) represent the RGB color components of the original image e(i, j), and \(R_e(i,j)\), \(G_e(i,j)\) and \(B_e(i,j)\) are the RGB color components of the filtered output image or frame. The normalized color difference (NCD) is commonly used to measure color preservation and is defined in the L*u*v* color space [1, 3, 37]. To calculate the NCD criterion, the image must first be converted to the L*u*v* color space. The error between two color vectors, \(\Delta E_{Luv} = [(\Delta L^\ast)^2+(\Delta u^\ast)^2+(\Delta v^\ast)^2]^{\frac{1}{2}}\), is used to calculate the NCD measure:

\[ \mathrm{NCD} = \frac{\sum_{i=1}^{M}\sum_{j=1}^{N}\|\Delta E_{Luv}(i,j)\|}{\sum_{i=1}^{M}\sum_{j=1}^{N}\|e^\ast_{Luv}(i,j)\|}, \tag{5} \]

where \(e^\ast_{Luv} = [(L^\ast)^2+(u^\ast)^2+(v^\ast)^2]^{\frac{1}{2}}\) is the norm (magnitude) of the uncorrupted original pixel vector in the L*u*v* space, and M and N are the image dimensions.
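The RGB-space metrics and the NCD can be computed as in the sketch below. It assumes that the MSE and MAE are averaged over all M × N pixels and the three channels (a 1/(3MN) normalization). It also assumes that the conversion to L*u*v* has already been done, since the conversion is not shown. The function names are hypothetical.

```python
import math


def mse_rgb(orig, filt):
    """MSE over all M x N pixels and the three RGB channels (divides by 3MN)."""
    total, count = 0.0, 0
    for row_o, row_f in zip(orig, filt):
        for po, pf in zip(row_o, row_f):
            total += sum((a - b) ** 2 for a, b in zip(po, pf))
            count += 3
    return total / count


def psnr_rgb(orig, filt, peak=255.0):
    """PSNR in dB; infinite when the two images are identical."""
    mse = mse_rgb(orig, filt)
    return math.inf if mse == 0 else 10.0 * math.log10(peak ** 2 / mse)


def mae_rgb(orig, filt):
    """MAE over all M x N pixels and the three RGB channels (divides by 3MN)."""
    total, count = 0.0, 0
    for row_o, row_f in zip(orig, filt):
        for po, pf in zip(row_o, row_f):
            total += sum(abs(a - b) for a, b in zip(po, pf))
            count += 3
    return total / count


def ncd(orig_luv, filt_luv):
    """NCD for images already converted to (L*, u*, v*) triplets."""
    num = den = 0.0
    for row_o, row_f in zip(orig_luv, filt_luv):
        for po, pf in zip(row_o, row_f):
            num += math.dist(po, pf)   # ||Delta E_Luv|| between the two pixels
            den += math.hypot(*po)     # ||e*_Luv|| of the original pixel
    return num / den
```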

In some cases, standard quality metrics such as the MSE and PSNR can be misleading. Therefore, metrics that are more consistent with human perception are also used to characterize the performance of the algorithm, e.g., the structural similarity index measure (SSIM). For monochrome images, the SSIM metric is defined as follows [42, 43]:

\[ \mathrm{SSIM}_\beta(e,E) = l_\beta(e,E)\cdot c_\beta(e,E)\cdot s_\beta(e,E), \tag{6} \]

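where l, c and s are calculated for each color channel as follows:

\[ l_\beta(e,E) = \frac{2\mu_e\mu_E + C_1}{\mu_e^2+\mu_E^2+C_1}, \tag{7} \]

\[ c_\beta(e,E) = \frac{2\sigma_e\sigma_E + C_2}{\sigma_e^2+\sigma_E^2+C_2}, \tag{8} \]

\[ s_\beta(e,E) = \frac{\sigma_{eE} + C_3}{\sigma_e\sigma_E+C_3}. \tag{9} \]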

In Eqs. (7–9), E is the filtered image, e is the original (uncorrupted) image, \(\mu\) and \(\sigma^2\) are the sample mean and sample variance of E or e, and \(\sigma_{eE}\) is the sample covariance between E and e. The index \(\beta\) denotes the R, G or B color channel. For the chosen channel, l denotes the luminance similarity, c the contrast similarity, and s the structural similarity. The justification of the SSIM index can be found in [42, 43]. The constants \(C_1\), \(C_2\) and \(C_3\) stabilize the metric when the means and variances are very small; usually \(C_1 = C_2 = C_3 = 1\). The SSIM index is based on the fact that natural images are highly structured. The structural correlation between the original (uncorrupted) and the filtered image is therefore an important measure of overall visual quality. Further, the SSIM index measures quality locally, so it captures local dissimilarities better than global quality measures such as the MSE and PSNR. Although the form of Eq. (6) is more complicated than the MSE, it is analytically tractable. The final quality measure is the average of the local SSIM values across the image, also called the mean SSIM (MSSIM):

\[ \mathrm{MSSIM}(e,E) = \frac{1}{K}\sum_{k=1}^{K}\mathrm{SSIM}(e_k,E_k), \tag{10} \]

where \(e_k\) and \(E_k\) are the contents of the k-th of K local windows.
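A per-channel SSIM for one local window can be sketched from the luminance, contrast and structure terms described above. The sketch uses \(C_1 = C_2 = C_3 = 1\) as defaults. It assumes sample statistics with an N − 1 denominator. The window extraction (size, stride) is left open, and the function names are hypothetical.

```python
import math


def ssim_window(e, E, C1=1.0, C2=1.0, C3=1.0):
    """SSIM = l * c * s for one color channel of one local window.

    e, E: flat lists with the original and filtered pixel values of the window.
    """
    n = len(e)
    mu_e, mu_E = sum(e) / n, sum(E) / n
    var_e = sum((x - mu_e) ** 2 for x in e) / (n - 1)
    var_E = sum((x - mu_E) ** 2 for x in E) / (n - 1)
    cov = sum((x - mu_e) * (y - mu_E) for x, y in zip(e, E)) / (n - 1)
    sd_e, sd_E = math.sqrt(var_e), math.sqrt(var_E)
    l = (2 * mu_e * mu_E + C1) / (mu_e ** 2 + mu_E ** 2 + C1)   # luminance
    c = (2 * sd_e * sd_E + C2) / (var_e + var_E + C2)             # contrast
    s = (cov + C3) / (sd_e * sd_E + C3)                           # structure
    return l * c * s


def mean_ssim(window_pairs, **constants):
    """Average SSIM over a list of (original_window, filtered_window) pairs."""
    return sum(ssim_window(e, E, **constants) for e, E in window_pairs) / len(window_pairs)
```

With a reversed window, l and c both stay at 1 while s becomes negative. The structure term is therefore what penalizes structural changes that the mean and variance cannot see.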

To compare the noise suppression and detail preservation capabilities of several filters, we also use a subjective visual criterion, presenting the filtered frames of different color video sequences and/or their error images. Visual inspection of the filtered images reveals the spatial distortions and artifacts introduced by the different filters, as well as the noise suppression quality of each algorithm.

3 Proposed 3D fuzzy framework

The goal of the proposed denoising method is to provide better results than other state-of-the-art fuzzy filters. The method is divided into three steps:

1. In the first step, whose output is denoted by \(E_{out}(i,j)_1\), each pixel is assigned a degree to which it is considered noise-free and a degree to which it is considered noisy. For this purpose, a basic gradient value and four related gradient values are calculated.
2. In the second step, whose output is denoted by \(E_{out}(i,j)_2\), the correlation between the R, G and B components of each frame is used to detect noise, based primarily on the information in the three RGB color channels.
3. In the third step, whose output is denoted by \(E_{out}(i,j)_3\), spatial and temporal color information from the current and previous frames is used to remove any remaining noise.

Figure 1 summarizes the principal operations of the proposed method.

3.1 First processing step: channel detection and filtering

As an illustrative example, we explain the sequential procedures only for the red color component; the procedures for the green and blue components are similar. Assuming that a 3 × 3 sliding window is located at the center of a 5 × 5 sliding window, a gradient value can be calculated in eight different directions with respect to the neighboring pixels in the 3 × 3 window (see Fig. 2). In this framework, the gradient values are absolute differences that represent the similarity level between neighboring pixels. Two hypotheses must be resolved: the central pixel is either noisy or noise-free. The differences with respect to the central element, \(E^\beta_C\), are defined as \(\nabla^\beta_{(k,l)} = |E^\beta_C(i,j) - E^\beta(i+k, j+l)|\). They are calculated for each direction, \(\gamma \in \{\mathrm{N, E, S, W, NW, NE, SE, SW}\}\), where the point (i, j) = (0, 0) marks the central pixel of the sliding window. The parameter \(\beta\) denotes the chosen color channel in the RGB space, and (k, l) denotes the direction of the gradient; k and l can take the values {−1, 0, 1} (Fig. 2). For any direction, the basic gradient value and the four related gradient values are described by (k, l) values from {−2, −1, 0, 1, 2} [9]. The related gradients are introduced to avoid smearing edges and fine features. Eight basic gradient values, \(\nabla^\beta_\gamma\), are defined, one for each direction \(\gamma\) and color component \(\beta\). Figure 2 shows the pixels used for the basic gradient and the four related gradients in the SE direction. The basic gradient value for the SE direction is \(\nabla^\beta_{(1,1)} = \nabla^\beta_{\mathrm{SE}(B)}\), and the four related gradient values are \(\nabla^\beta_{(0,2)} = \nabla^\beta_{\mathrm{SE}(R_1)}\), \(\nabla^\beta_{(2,0)} = \nabla^\beta_{\mathrm{SE}(R_2)}\), \(\nabla^\beta_{(-1,1)} = \nabla^\beta_{\mathrm{SE}(R_3)}\) and \(\nabla^\beta_{(1,-1)} = \nabla^\beta_{\mathrm{SE}(R_4)}\).
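The gradient definition above can be sketched for one color channel. The sketch assumes that `channel[i][j]` indexes rows top to bottom and columns left to right, so the offset (1, 1) points to the SE neighbor. Only the SE offsets listed in the text are encoded; the offsets of the other seven directions are not stated here and are left out. The names `SE_OFFSETS`, `gradient` and `se_gradients` are hypothetical.

```python
# Offsets (k, l) of the basic (B) and related (R1..R4) gradients for the SE
# direction, as listed in the text; other directions are not encoded here.
SE_OFFSETS = {"B": (1, 1), "R1": (0, 2), "R2": (2, 0), "R3": (-1, 1), "R4": (1, -1)}


def gradient(channel, i, j, k, l):
    """nabla^beta_(k,l) = |E^beta_C(i, j) - E^beta(i + k, j + l)| for one channel."""
    return abs(channel[i][j] - channel[i + k][j + l])


def se_gradients(channel, i, j):
    """Basic and related SE gradients of pixel (i, j); needs a 2-pixel border."""
    return {name: gradient(channel, i, j, k, l) for name, (k, l) in SE_OFFSETS.items()}


# Usage: an impulse of 200 in the center of a flat 5 x 5 window of 50s.
window = [[50] * 5 for _ in range(5)]
window[2][2] = 200
print(se_gradients(window, 2, 2))
```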

To estimate the noise contamination of the central pixel of a 5 × 5 sliding window, we introduce the fuzzy sets LARGE and SMALL (Fig. 2). A large membership degree (i.e., close to 1) in the fuzzy set SMALL indicates that the central pixel is noise-free. A large membership degree in the fuzzy set LARGE indicates that the central pixel is likely to be noisy. Membership functions can be built from basic functions, e.g., piecewise linear functions, the sigmoid curve, quadratic and cubic polynomials, or the Gaussian function. Because of their simplicity and convenience, we use Gaussian membership functions [9, 10] to compute the membership degrees of the fuzzy gradients:

The values of the parameters used in Eqs. (11) and (12) were determined from the optimal values of the PSNR and MAE criteria (see Sect. 4.1). The following fuzzy rules decide whether a central component is noisy, noise-free, or part of coarse details (e.g., an edge or fine features). Fuzzy Rule 1 defines the membership of the fuzzy gradient value for direction \(\gamma\), \(\nabla^{\beta F}_\gamma\), in the fuzzy set LARGE. A color component of a pixel is considered noisy if its basic gradient value is similar to related gradient values 3 and 4 and differs from related gradient values 1 and 2 (Fig. 2).
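The exact form of Eqs. (11) and (12) is an assumption in the following sketch. It uses a Gaussian shape common in this family of fuzzy filters:

- LARGE has membership 1 at or above \(\nabla_1\);
- SMALL has membership 1 at or below \(\nabla_2\);
- both fall off as a Gaussian with variance \(\sigma^2\) elsewhere.

The default values \(\nabla_1 = 60\), \(\nabla_2 = 9\) and \(\sigma^2 = 1000\) are those reported in Sect. 4.1 for the spatial stage.

```python
import math


def mu_large(x, nabla1=60.0, sigma2=1000.0):
    """Assumed LARGE membership: 1 at or above nabla1, Gaussian fall-off below it."""
    if x >= nabla1:
        return 1.0
    return math.exp(-((x - nabla1) ** 2) / (2.0 * sigma2))


def mu_small(x, nabla2=9.0, sigma2=1000.0):
    """Assumed SMALL membership: 1 at or below nabla2, Gaussian fall-off above it."""
    if x <= nabla2:
        return 1.0
    return math.exp(-((x - nabla2) ** 2) / (2.0 * sigma2))


for g in (0, 9, 30, 60, 150):
    print(g, round(mu_large(g), 3), round(mu_small(g), 3))
```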

Fuzzy Rule 1.

Defining the fuzzy gradient value \(\nabla_\gamma^{\beta F}\) in the fuzzy set LARGE: IF (\(\nabla^\beta_\gamma\) is LARGE AND \(\nabla^\beta_{\gamma R_1}\) is SMALL AND \(\nabla^\beta_{\gamma R_2}\) is SMALL AND \(\nabla^\beta_{\gamma R_3}\) is LARGE AND \(\nabla^\beta_{\gamma R_4}\) is LARGE) THEN the fuzzy gradient value \(\nabla_\gamma^{\beta F}\) is LARGE.

Here, the conjunction is the algebraic product: (A AND B) = A · B.

Fuzzy Rule 2 defines the noisy factor, \(r_\beta\), which is determined from the fuzzy gradient values obtained with Fuzzy Rule 1 for each direction \(\gamma\).

Fuzzy Rule 2.

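Defining the noisy factor \(r_\beta\): IF MAX(\(\nabla^{\beta F}_{\mathrm N}\) is LARGE, \(\nabla^{\beta F}_{\mathrm S}\) is LARGE, \(\nabla^{\beta F}_{\mathrm E}\) is LARGE, \(\nabla^{\beta F}_{\mathrm W}\) is LARGE, \(\nabla^{\beta F}_{\mathrm{SW}}\) is LARGE, \(\nabla^{\beta F}_{\mathrm{NE}}\) is LARGE, \(\nabla^{\beta F}_{\mathrm{NW}}\) is LARGE, \(\nabla^{\beta F}_{\mathrm{SE}}\) is LARGE) THEN the noisy factor \(r_\beta\) is LARGE.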

Here, the disjunction is implemented by the MAX operator: (A OR B) = MAX(A, B).

The noisy factor \(r_\beta\) is computed from the information in the eight directions and is used as a measure to distinguish between noisy and noise-free pixels. As the maximum of the directional fuzzy gradient values, it gives the membership degree of the processed sample in the fuzzy set LARGE. It thus indicates whether the central pixel is corrupted: the pixel is treated as noisy if \(r_\beta\) ≥ Th1. Section 4.1 presents the experimental justification for the threshold Th1, which was chosen according to the optimal PSNR and MAE criteria.
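Fuzzy Rules 1 and 2 can be sketched with the product for AND, MAX for the aggregation over the eight directions, and Th1 = 0.3 from Sect. 4.1. The gradients are taken as given numbers per direction, with key `"B"` for the basic gradient and `"R1"` to `"R4"` for the related ones; this data layout is hypothetical. The membership functions use an assumed Gaussian shape: 1 beyond the parameter, and a Gaussian fall-off with variance \(\sigma^2\) elsewhere.

```python
import math


def mu_large(x, nabla1=60.0, sigma2=1000.0):
    # Assumed Gaussian form of the LARGE membership function.
    return 1.0 if x >= nabla1 else math.exp(-((x - nabla1) ** 2) / (2.0 * sigma2))


def mu_small(x, nabla2=9.0, sigma2=1000.0):
    # Assumed Gaussian form of the SMALL membership function.
    return 1.0 if x <= nabla2 else math.exp(-((x - nabla2) ** 2) / (2.0 * sigma2))


def fuzzy_gradient(g):
    """Fuzzy Rule 1: basic LARGE, R1 and R2 SMALL, R3 and R4 LARGE (product AND)."""
    return (mu_large(g["B"]) * mu_small(g["R1"]) * mu_small(g["R2"])
            * mu_large(g["R3"]) * mu_large(g["R4"]))


def noisy_factor(gradients_by_direction):
    """Fuzzy Rule 2: r_beta is the MAX of the eight directional fuzzy gradients."""
    return max(fuzzy_gradient(g) for g in gradients_by_direction.values())


def is_noisy(gradients_by_direction, th1=0.3):
    """The central component is treated as noisy when r_beta >= Th1."""
    return noisy_factor(gradients_by_direction) >= th1
```

A single direction that matches the rule pattern is enough to mark the pixel as noisy, because MAX acts as the fuzzy OR across directions.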

In the filtering procedure, the fuzzy gradient values of the corrupted pixels are used as weights. The weights are proportional to the noisy pixel value. If a pixel is corrupted (\(r_\beta\) ≥ Th1), the fuzzy weights in the fuzzy set NO LARGE (no noise) are obtained with the standard negator, \(\rho^\beta_\gamma = 1 - \rho^\beta_{\gamma,\mathrm{LARGE}}\). The replacement value is then found in three stages:

1. The pixels of each color component, including the central pixel (i, j), \(\zeta^\beta_\gamma = \{\zeta^\beta_{\mathrm{SW}}, \ldots, \zeta^\beta_{(i,j)}, \ldots, \zeta^\beta_{\mathrm{NE}}\}\), are ranked in ascending order of magnitude, each carrying its fuzzy weight \(\rho^\beta_\gamma\). Pixels whose values lie sufficiently far from the central pixel are removed.
2. The fuzzy weight of the central component in the fuzzy set NO LARGE is determined as \(\rho^\beta_C = M\sqrt{1-r_\beta}\), with M = 3.
3. The ordered pixels \(\rho^\beta_{\gamma(l)}\) are examined as candidates to replace the corrupted central pixel by decreasing l from 9 (l = 9, 8, …, 3). This continues until the cumulative weight satisfies \(\sum_{q=l}^{9}\rho^\beta_q \geq \rho^\beta_0\), where \(\rho^\beta_0 = \left(\sum_\gamma \rho^\beta_{\gamma(l)} + M\sqrt{1-r_\beta}\right)/2\) and M = 3. The l-th ordered value at which this condition is first met is the output, \(E^\beta_{out}(i,j)_1 = E^\beta_{\gamma(l)}\).
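Under our reading of the procedure above, the selection step behaves like a weighted median: ordered values are accumulated from the largest downwards until half of the total weight is reached. The sketch below follows that reading. Neighbor weights are obtained with the standard negator 1 − (membership in LARGE), and the central weight is \(M\sqrt{1-r_\beta}\) with M = 3. The removal of outlying pixels is omitted, and the function name is hypothetical.

```python
import math


def fuzzy_weighted_select(center, neighbors, neighbor_large, r_beta, M=3.0):
    """Weighted-median-style replacement value for a corrupted central component.

    neighbors: values of the 8 neighbors; neighbor_large: their memberships in LARGE.
    """
    weights = [1.0 - m for m in neighbor_large]           # standard negator
    rho_c = M * math.sqrt(1.0 - r_beta)                    # weight of the center
    # Rank the 9 values (neighbors + center) in ascending order with their weights.
    ranked = sorted(zip(list(neighbors) + [center], weights + [rho_c]))
    rho_0 = (sum(weights) + rho_c) / 2.0                   # half of the total weight
    accumulated = 0.0
    for value, weight in reversed(ranked):                 # l = 9, 8, ..., 1
        accumulated += weight
        if accumulated >= rho_0:
            return value
    return ranked[0][0]
```

With \(r_\beta = 1\), the center receives zero weight, so an impulse of 255 surrounded by values between 10 and 14 is replaced by 12.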

3.2 Second processing step: inter-channel detection and filtering

3.2.1 Impulse noise detection

In this step, the noise detection is based mainly on the correlations between the R, G and B image (frame) components. Therefore, the fuzzy membership degree will be assigned for each color channel separately. Noisy pixels are detected by determining local differences of the R component with respect to the G and B components. Correlations between color components occur if a minimum number of the local differences coincide with neighbors.

The similarity degrees of the other color components with respect to their neighbors, \(\rho^G\) and \(\rho^B\), are also included. The algorithm is applied independently to each color component, and each component is assigned a fuzzy membership degree in the fuzzy set noise-free. The detection procedure checks two relationships between the central color component and its neighboring color components:

1. whether each color component value is similar to its neighbors in the same color band;
2. whether the magnitude differences in each color channel correspond to the magnitude differences in the other color channels.

The color pixel at position (i, j) is a vector \(E_{i,j}\) with components R, G and B. Each pixel is written as \(\zeta_i = (\zeta_i^R, \zeta_i^G, \zeta_i^B)\), and the central pixel is \(\zeta_0 = (\zeta_C^R, \zeta_C^G, \zeta_C^B)\). In a 3 × 3 sliding window, the neighbors of the central pixel \(\zeta_C\) are \(\zeta_1, \zeta_2, \ldots, \zeta_8\).

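The absolute magnitude differences between the central pixel, \(\zeta_C\), and its color neighbors are computed as follows [13]:

\[ \Delta\zeta^R_\gamma = |\zeta^R_C - \zeta^R_\gamma|,\quad \Delta\zeta^G_\gamma = |\zeta^G_C - \zeta^G_\gamma|,\quad \Delta\zeta^B_\gamma = |\zeta^B_C - \zeta^B_\gamma|, \tag{13} \]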

where \(\gamma = 1, \ldots, 8\), and \(\Delta\zeta^R_\gamma\), \(\Delta\zeta^G_\gamma\) and \(\Delta\zeta^B_\gamma\) are the differences for the R, G and B components, respectively.

The membership degree in the fuzzy set SMALL is defined according to the desired behavior: a relatively small difference yields a large membership degree. We employ the same Gaussian membership function, Eq. (12). The parameter values \(\nabla_{2,\mathrm{inter}} = 9\) and \(\sigma^2_{2,\mathrm{inter}} = 750\) used in Eq. (12) at this filtering stage were chosen experimentally from numerous simulations according to the optimal values of the PSNR and MAE criteria (see Sect. 4.1).

The membership degrees \(\rho(\Delta\zeta^R_\gamma)\), \(\rho(\Delta\zeta^G_\gamma)\) and \(\rho(\Delta\zeta^B_\gamma)\) in the fuzzy set SMALL are used to decide whether \(\zeta^R_C\), \(\zeta^G_C\) and \(\zeta^B_C\) are similar to their neighbors. Below, only the red component is explained; similar procedures are used for the G and B channels. To ensure that the most relevant differences are taken into account, the values \(\rho(\Delta\zeta^R_\gamma)\) are ranked in descending order. By applying Eq. (12), the similarities between \(\zeta^R_C\) and its closest neighbors are:

Next, the similarity between the differences in the chosen color channel and the corresponding differences in the other two color channels is calculated, i.e., \(|\rho(\Delta\zeta^R_\gamma) - \rho(\Delta\zeta^G_\gamma)|\) and \(|\rho(\Delta\zeta^R_\gamma) - \rho(\Delta\zeta^B_\gamma)|\). For this purpose, the membership degree in the next fuzzy set is computed using the experimentally determined parameter values \(\nabla_{2,\beta_1\beta_2} = 0.01\) and \(\sigma^2_{\beta_1\beta_2} = 0.0021\):

The membership degrees \(\rho^{RG}_\gamma\) and \(\rho^{RB}_\gamma\) indicate whether the local difference between the central pixel and the pixel in position \(\gamma\) in the R component is similar to the corresponding local differences in the G and B components. The computed \(\rho^{RG}_\gamma\) and \(\rho^{RB}_\gamma\) are ranked in descending order, and the similarity measure is calculated as follows:
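The inter-channel similarity computation for one 3 × 3 window can be sketched in two parts. First, the per-channel absolute differences defined above are mapped to SMALL memberships with \(\nabla = 9\) and \(\sigma^2 = 750\). Second, \(|\rho(\Delta\zeta^R_\gamma) - \rho(\Delta\zeta^G_\gamma)|\) is mapped to a SMALL membership with \(\nabla = 0.01\) and \(\sigma^2 = 0.0021\). The Gaussian shape is an assumption. The sketch stops before the aggregation with Q = 2, whose exact form is not given here, and the function names are hypothetical.

```python
import math


def mu_small(x, nabla2, sigma2):
    # Assumed Gaussian SMALL membership (1 at or below nabla2).
    return 1.0 if x <= nabla2 else math.exp(-((x - nabla2) ** 2) / (2.0 * sigma2))


def channel_similarities(center, neighbors, nabla2=9.0, sigma2=750.0):
    """Membership in SMALL of |center - neighbor| per channel and direction.

    center: (R, G, B); neighbors: list of 8 (R, G, B) tuples.
    Returns {"R": [...], "G": [...], "B": [...]} in neighbor order gamma = 1..8.
    """
    return {name: [mu_small(abs(center[c] - n[c]), nabla2, sigma2) for n in neighbors]
            for c, name in enumerate("RGB")}


def cross_similarity(rho_a, rho_b, nabla2=0.01, sigma2=0.0021):
    """Membership in SMALL of |rho_a(gamma) - rho_b(gamma)|, e.g. rho^RG_gamma."""
    return [mu_small(abs(a - b), nabla2, sigma2) for a, b in zip(rho_a, rho_b)]


# Usage: only the red channel of the center is an impulse.
sims = channel_similarities((200, 100, 100), [(100, 100, 100)] * 8)
rho_rg = sorted(cross_similarity(sims["R"], sims["G"]), reverse=True)  # ranked
```

In this example, R disagrees with its neighbors while G and B agree. As a result, \(\rho^{RG}_\gamma\) is close to 0, which is the cue that the red component alone is corrupted.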

The following fuzzy rule defines the condition when the R component is noise-free.

Fuzzy Rule 3

Defining the noise-free degree \(N_{\zeta^R_C}\) of the red component \(\zeta^R_C\): IF (\(\rho^R\) is LARGE AND \(\rho^{RG}\) is LARGE AND \(\rho^G\) is LARGE) OR (\(\rho^R\) is LARGE AND \(\rho^{RB}\) is LARGE AND \(\rho^B\) is LARGE) THEN the noise-free degree of \(\zeta^R_C\) is LARGE. The conjunction (A AND B) = A · B and the disjunction (A OR B) = A + B − A · B determine the membership degree in the noise-free fuzzy set and its weight as follows:
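Fuzzy Rule 3 maps directly onto arithmetic with the stated product conjunction and probabilistic-sum disjunction. The minimal sketch below assumes the inputs are the already aggregated similarity degrees. The function names are hypothetical.

```python
def f_and(*values):
    """Conjunction as the algebraic product."""
    result = 1.0
    for v in values:
        result *= v
    return result


def f_or(a, b):
    """Disjunction as the probabilistic sum A + B - A*B."""
    return a + b - a * b


def noise_free_degree(rho_r, rho_rg, rho_g, rho_rb, rho_b):
    """Fuzzy Rule 3 for the red component."""
    return f_or(f_and(rho_r, rho_rg, rho_g), f_and(rho_r, rho_rb, rho_b))
```

Either the green or the blue branch alone can certify the red component as noise-free. However, a red component that is dissimilar to its own neighbors (\(\rho^R = 0\)) is never noise-free.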

3.2.2 Impulse noise suppression

The next step of impulse noise suppression is to find the fuzzy weights used for filtering in a sliding window (Fig. 1). If the central pixel is noisy, the weights \(W(\zeta^R_{\alpha,\mathrm{free}})\) of the noise-free pixels are used. The weights \(W(\zeta^R_\alpha)\) of the other pixels \(\zeta^R_\alpha\) are computed according to Fuzzy Rules 4 and 5, given here for the R component; similar fuzzy rules are applied to the G and B components. The membership degree in the fuzzy set SMALL (noise) of any noisy pixel \(\zeta_\alpha\) is defined with the negator: \(N(\zeta^R_\alpha) = 1 - W(\zeta^R_{\alpha,\mathrm{free}})\).

Fuzzy Rule 4

Defining the weight \(W_{\zeta^R_C}\) of the red component \(\zeta^R_C\): IF \(N_{\zeta^R_C}\) is LARGE THEN \(W_{\zeta^R_C}\) is LARGE.

Fuzzy Rule 5

Defining the weight \(W_{\zeta^R_\gamma}\) of the neighbor \(\zeta^R_\gamma\) of the red component: IF (\(N_{\zeta^R_C}\) is not LARGE AND \(N_{\zeta^R_\gamma}\) is LARGE AND \(\rho(\Delta\zeta^G_\gamma)\) is LARGE AND \(N_{\zeta^G_\gamma}\) is LARGE) OR (\(N_{\zeta^R_C}\) is not LARGE AND \(N_{\zeta^R_\gamma}\) is LARGE AND \(\rho(\Delta\zeta^B_\gamma)\) is LARGE AND \(N_{\zeta^B_\gamma}\) is LARGE) THEN \(W_{\zeta^R_\gamma}\) is LARGE.
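Fuzzy Rules 4 and 5 can be sketched with the product for AND, the probabilistic sum for OR, and the standard negator for "not LARGE". Reading Rule 4 as "the central weight equals the noise-free degree of the center" is an assumption. The function names are hypothetical.

```python
def f_not(a):
    """Standard negator."""
    return 1.0 - a


def f_or(a, b):
    """Probabilistic-sum disjunction."""
    return a + b - a * b


def center_weight(n_center):
    """Fuzzy Rule 4, read as: the center's weight equals its noise-free degree."""
    return n_center


def neighbor_weight(n_center, n_r, rho_dg, n_g, rho_db, n_b):
    """Fuzzy Rule 5 for a red neighbor zeta^R_gamma (product AND)."""
    via_green = f_not(n_center) * n_r * rho_dg * n_g
    via_blue = f_not(n_center) * n_r * rho_db * n_b
    return f_or(via_green, via_blue)
```

Neighbors receive weight only to the extent that the center is not noise-free. A fully trusted center therefore suppresses all neighbor contributions.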

3.3 Third processing step: spatio-temporal denoising

In the spatio-temporal stage, the threshold Th2 applied to the fuzzy noisy factor \(r_\beta\) is adjusted to avoid smoothing fine image details. The value Th2 = 0.5 was chosen according to the best PSNR and MAE values (Sect. 4.1). A procedure similar to the first processing step (Fig. 1) is employed: IF \(r_\beta\) ≥ Th2, THEN the denoising procedure using the fuzzy membership degrees obtained for the fuzzy set LARGE is applied; otherwise, the filter output is \(E^\beta_{out} = E^\beta_C\).

The remaining noisy pixels are then processed using temporal data gathered from two neighboring frames. In areas with no movement, the corresponding pixels in the previous frame can help detect the remaining corrupted pixels. The current frame, \(E^{t,\beta}(i,j)\), and the previous frame, \(E^{t-1,\beta}(i,j)\), of a video sequence are placed in a 5 × 5 × 2 sliding window. Analogously to the first processing step explained for the R component, the two neighboring frames are analyzed concurrently to find noise-free pixels in the current frame (t). The differences between frames (t) and (t − 1) are calculated as follows:

where \(\delta E^\beta_{(k,l)}\) denotes the frame difference, and \((k,l) \in \{-2,-1,0,1,2\}\).

The frame difference \(\delta E^\beta_{(k,l)}\) is used to obtain the current error frame (t). The absolute difference gradient values \(\Delta^\beta_\gamma\) of the central pixel with respect to its neighbors in a 5 × 5 × 1 window are calculated and processed as in Sect. 3.1. The absolute difference for the SE (basic) direction is \(\Delta^\beta_{\mathrm{SE(basic)}} = |\delta E^\beta_{(0,0)} - \delta E^\beta_{(1,1)}|\). A similar procedure is repeated for all basic and related gradient values in every direction.
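The frame difference and the basic SE gradient on the difference frame can be sketched for one channel. The sign convention of the frame difference is an assumption here: a signed difference \(E^t - E^{t-1}\) is used. With this choice, a uniform brightness change between the two frames cancels out in the gradient. Frames are nested lists of one channel's values, and the function names are hypothetical.

```python
def frame_difference(current, previous, i, j):
    """Signed (assumed) difference between frames t and t-1 in the 5 x 5 neighborhood.

    Returns {(k, l): E^t(i+k, j+l) - E^(t-1)(i+k, j+l)} for k, l in -2..2.
    """
    return {(k, l): current[i + k][j + l] - previous[i + k][j + l]
            for k in range(-2, 3) for l in range(-2, 3)}


def se_basic_temporal_gradient(delta):
    """Delta^beta_SE(basic) = |deltaE_(0,0) - deltaE_(1,1)|."""
    return abs(delta[(0, 0)] - delta[(1, 1)])


prev = [[50] * 5 for _ in range(5)]
brighter = [[60] * 5 for _ in range(5)]   # uniform change between frames, no impulse
spiked = [row[:] for row in prev]
spiked[2][2] = 255                         # impulse present only in frame t
```

For the static and uniformly brightened frames, the temporal gradient is 0. For the frame with an impulse at the center, it is 205.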

The Gaussian membership functions presented in Eqs. (11) and (12) are employed using parameters that have been adjusted for the difference frame, \(\delta E ^\beta_{(k,l)}\), according to the optimal values of the PSNR and MAE criteria (Sect. 4.1). The fuzzy rules in this final stage use the absolute differences of the neighboring frames to distinguish among a noisy pixel, local movement, and a noise-free pixel in this 5 × 5 × 1 sliding window.

Fuzzy Rule 6

Determines the first fuzzy gradient difference \((\nabla_\gamma^{\beta F})_{\rm I}\) by repeating Fuzzy Rule 1, in this case for the gradient values \(\nabla^\beta_\gamma\), \(\nabla^\beta_{\gamma R_1}\), \(\nabla^\beta_{\gamma R_2}\), \(\nabla^\beta_{\gamma R_3}\) and \(\nabla^\beta_{\gamma R_4}\) obtained from Eq. (18). This rule defines the movement-noise confidence of the fuzzy gradient differences in a given direction \(\gamma\).

Fuzzy Rule 7

Determines the fuzzy gradient difference \((\nabla_\gamma^{\beta F})_{\rm II}\): IF (\(\nabla^\beta_\gamma\) is SMALL AND \(\nabla^\beta_{\gamma R_1}\) is SMALL AND \(\nabla^\beta_{\gamma R_2}\) is SMALL) THEN \((\nabla_\gamma^{\beta F})_{\rm II}\) is SMALL. This rule defines the no-movement, no-noise confidence of the fuzzy gradient differences in direction \(\gamma\), distinguishing between homogeneous and non-homogeneous regions.

Fuzzy Rule 8

Computes the fuzzy noisy factor \(r_\beta\) by repeating the operations of Fuzzy Rule 2, with \(\nabla_\gamma^{\beta F}\) replaced by \((\nabla_\gamma^{\beta F})_{\rm I}\).

Finally, Fuzzy Rule 9 defines the confidence in the no-movement event by introducing the factor \(\eta_\beta\):

Fuzzy Rule 9

IF MAX(\((\nabla_{\mathrm N}^{\beta F})_{\rm II}\) is SMALL, \((\nabla_{\mathrm S}^{\beta F})_{\rm II}\) is SMALL, \((\nabla_{\mathrm E}^{\beta F})_{\rm II}\) is SMALL, \((\nabla_{\mathrm W}^{\beta F})_{\rm II}\) is SMALL, \((\nabla_{\mathrm{SW}}^{\beta F})_{\rm II}\) is SMALL, \((\nabla_{\mathrm{NE}}^{\beta F})_{\rm II}\) is SMALL, \((\nabla_{\mathrm{NW}}^{\beta F})_{\rm II}\) is SMALL, \((\nabla_{\mathrm{SE}}^{\beta F})_{\rm II}\) is SMALL) THEN \(\eta_\beta\) is SMALL.

According to Fuzzy Rules 6–9, a pixel is considered noisy when \(r_\beta \geq 0.5\). In that case, a denoising procedure similar to the one described after Fuzzy Rule 2 in Sect. 3.1 is applied to each current frame, using the fuzzy weights calculated for the R, G and B color components (Fig. 1). The parameters are \(\rho_C^\beta = M\sqrt{1-r_\beta} + \eta_\beta\), \(\rho_\gamma^\beta = 1 - (\nabla_\gamma^{t\beta})_{\rm I} + (\nabla_\gamma^{t\beta})_{\rm II}\) and \(\rho_0^\beta = \left(\sum_\gamma \rho_{\gamma(j)}^\beta + M\sqrt{1-r_\beta} + \eta_\beta\right)/2\), with M = 5.
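The parameters of the spatio-temporal stage can be sketched as follows. The per-direction fuzzy values of Fuzzy Rules 6 and 7 are taken as inputs, \(\eta_\beta\) is computed by MAX as in Fuzzy Rule 9, and M = 5. The dictionary layout and the function name are hypothetical. The resulting weights would then feed the same weighted selection as in the first step.

```python
import math


def temporal_weights(fg_I, fg_II, r_beta, M=5.0):
    """Weights of the spatio-temporal stage.

    fg_I, fg_II: {direction: value} from Fuzzy Rules 6 and 7.
    Returns (rho_C, {direction: rho_gamma}, rho_0, eta).
    """
    eta = max(fg_II.values())                              # Fuzzy Rule 9 (MAX)
    rho_c = M * math.sqrt(1.0 - r_beta) + eta              # weight of the center
    rho = {d: 1.0 - fg_I[d] + fg_II[d] for d in fg_I}      # neighbor weights
    rho_0 = (sum(rho.values()) + rho_c) / 2.0              # half of the total weight
    return rho_c, rho, rho_0, eta
```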

4 Simulation results and performance evaluation

4.1 Parameter selection

First, the thresholds Th1 and Th2 (Fig. 1) and the parameters of the membership functions given in Eqs. (11) and (12) are determined. The optimal parameters, i.e., those yielding the largest PSNR and the smallest MAE, were obtained from frames of the Salesman, Flowers, Stefan and Miss America sequences. In these frames, each color channel was corrupted by random impulse noise with intensities ranging from 0 to 30 %. The PSNR and MAE values obtained by the proposed framework for all four color video sequences are given in Tables 1 and 2. According to these values, the optimal thresholds are Th1 = 0.3 and Th2 = 0.5.

Similar simulations were performed to obtain the parameters \(\nabla_1\), \(\nabla_2\) and \(\sigma^2\) of the membership functions given in Eqs. (11) and (12). The adopted values are \(\nabla_1 = 60\), \(\nabla_2 = 9\) and \(\sigma^2 = 1000\) (Tables 3, 4). For the temporal stage of filtering, the adjusted values are \(\nabla_1 = 0.1\), \(\nabla_2 = 0.01\) and \(\sigma^2 = 0.1\). The remaining parameters were also chosen according to the optimal values of the PSNR and MAE measures (Tables 5, 6): \(\nabla_{2,\mathrm{inter}} = 9\), \(\sigma^2_{2,\mathrm{inter}} = 750\), \(\nabla_{2,\beta_1\beta_2} = 0.01\) and \(\sigma^2_{\beta_1\beta_2} = 0.0021\), used in the membership function of Eq. (12), and Q = 2 in Eqs. (14) and (16). All of these parameters are fixed constants, which makes a real-time implementation of the proposed technique on a DSP simpler.

4.2 Performance analysis of promising filters

The color video sequences Miss America and Salesman (176 × 144, QCIF format) and Flowers and Stefan (352 × 288, CIF format) (Fig. 3) were used to evaluate the 3D fuzzy algorithms. The frames were artificially contaminated, independently in each color channel, by random impulsive noise of different intensities (0–30 %). Several methods were used to process the color video sequences.

During the first stage of processing in the proposed framework (Fig. 1), edges and fine details are detected using fuzzy techniques. To preserve image features during filtering, an algorithm must detect edges and fine features accurately. The images in Fig. 4 were obtained before the filtering procedure of the first stage of the algorithm, for the color sequences Flowers (40th frame) and Miss America (57th frame). The results obtained with the FRINR_seq filter [24] and with the proposed technique, FMINS, show the detection ability of these algorithms. Ideally, no image details should appear in these images, because every image detail (edge, sharp transition, etc.) should be recognized and not marked as noise. Nevertheless, a few details and edges are detected as noisy pixels and can be observed in the images (Fig. 4). In the first, spatial, filtering step, the proposed framework preserves edges and fine features better than the FRINR_seq filter.

Each row corresponds to a different noise intensity (first row, 0 %; second row, 10 %). Regardless of the amount of noise in a frame, the edges are detected well when the FMINS filter is employed.

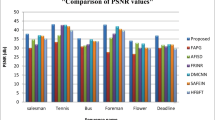

To demonstrate that the proposed filter takes advantage of temporal information, we compare it with 2D impulse noise reduction methods for color images. As shown in recently published articles [24, 31], the INRC [13] and 2D FD [10, 35] filters outperform all other state-of-the-art 2D techniques. Therefore, comparing the simulation results of the FRINR_seq, 3D FD and FMINS 3D filtering techniques illustrates the comparative performance of the FMINS 3D framework. Table 7 presents the PSNR, MAE, NCD and SSIM metrics averaged over 100 frames for the FMINS 3D framework, FRINR_seq [24] and 3D FD [31]. For the Miss America, Salesman, Flowers and Stefan color video sequences, FMINS 3D outperforms the other methods. Because the textures and chromaticity properties of these sequences differ, this result shows the robustness of the novel filter. According to all four objective criteria, the proposed algorithm performs best over a wide range of noise intensities. At a noise intensity of 30 %, the FRINR_seq filter sometimes performs slightly better than the proposed technique in terms of the objective criteria. This can be explained by the difference in temporal processing: the proposed framework employs only two neighboring frames, whereas the FRINR_seq filter processes three. The performance of all three methods varies from frame to frame when they are applied to the Miss America, Salesman, Flowers and Stefan sequences with random impulse noise levels from 0 to 30 % in each color channel. The per-frame PSNR and MAE values are presented in Figs. 5 and 6, respectively, and the designed approach outperforms the other methods. The SSIM measure captures human perception better than the traditional PSNR, MAE and NCD; it is well known that objective metrics do not always correspond to human perception.

Figures 7, 8, 9 and 10 show the filtered frames and the corresponding error images obtained by applying the three methods to the 40th frame of Flowers (zoomed part), the 57th frame of Miss America (zoomed part), the 50th frame of Stefan and the 59th frame of Salesman, respectively.

The error images of the proposed framework contain fewer residual edges and fine details (e.g., the letters and lines in the Stefan frame) and less residual noise than those of the other filters.
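Error images such as those in Figs. 7–10 are commonly formed as the absolute difference between the noise-free and the filtered frame. The exact convention used for these figures is not stated, so the sketch below assumes a per-channel absolute difference with an optional display gain; the function names and the `gain` parameter are hypothetical. The fraction of pixels with a nonzero residual gives one crude numerical counterpart to the visual comparison.

```python
def error_image(original, filtered, gain=1):
    """Per-channel |original - filtered| for frames stored as rows of (R, G, B)
    tuples, multiplied by `gain` (to make small residuals visible) and clipped to 255."""
    return [[tuple(min(255, gain * abs(o - f)) for o, f in zip(o_px, f_px))
             for o_px, f_px in zip(o_row, f_row)]
            for o_row, f_row in zip(original, filtered)]


def residual_fraction(err):
    """Fraction of pixels whose error is nonzero in at least one channel."""
    pixels = [px for row in err for px in row]
    return sum(1 for px in pixels if any(px)) / len(pixels)
```

A filter that leaves fewer edges, details and impulses in its error image will, under this convention, also show a smaller `residual_fraction`, although the measure ignores the magnitude of the residuals.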

The proposed fuzzy filter combines good detail preservation with good noise removal and appears to outperform the other filters over a wide range of noise intensities. Note that the novel filter employs only two neighboring frames, in contrast to FRINR_seq, which uses three. In fast-moving regions this can be an advantage during spatio-temporal processing, since the content of more distant frames is less likely to match the current frame when motion is fast.

4.3 Some remarks on the computational efficiency of the proposed algorithm

The proposed filtering framework outperforms other state-of-the-art filters for video sequences that have been corrupted by random impulse noise. Its development focused on filtering quality rather than on computational complexity. To quantify the complexity, we compare our method with previously designed techniques that have been implemented on a DSP and in MATLAB. Several promising algorithms have been implemented on a Texas Instruments digital signal processor, a fixed-point device (EVM DM642) running at 720 MHz. The processing times were measured for QCIF frames of 176 × 144 pixels in RGB565 format (5 bits for the R, 6 bits for the G and 5 bits for the B channel) corrupted by random impulse noise (Table 8). Because the processing times of the fuzzy algorithms vary with the frame texture and the noise intensity, they were averaged over 20 frames.
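To make the frame format above concrete, the sketch below packs and unpacks RGB565 pixels (5 bits for R, 6 for G, 5 for B) and computes the size of one QCIF frame. The function names are hypothetical, and expanding the 5- and 6-bit values back to 8 bits by bit replication is one common convention assumed here; the DSP code behind Table 8 may handle the conversion differently.

```python
def pack_rgb565(r, g, b):
    """Pack 8-bit R, G, B values into one 16-bit RGB565 word
    (R in bits 15-11, G in bits 10-5, B in bits 4-0)."""
    return ((r >> 3) << 11) | ((g >> 2) << 5) | (b >> 3)


def unpack_rgb565(word):
    """Expand a 16-bit RGB565 word back to 8-bit R, G, B."""
    r5 = (word >> 11) & 0x1F
    g6 = (word >> 5) & 0x3F
    b5 = word & 0x1F
    # Bit replication (an assumed convention): copy the high bits into the
    # vacated low bits so that full scale (0x1F or 0x3F) maps back to 255.
    return (r5 << 3) | (r5 >> 2), (g6 << 2) | (g6 >> 4), (b5 << 3) | (b5 >> 2)


# One QCIF frame (176 x 144 pixels) at 16 bits per pixel.
QCIF_WIDTH, QCIF_HEIGHT = 176, 144
BYTES_PER_FRAME = QCIF_WIDTH * QCIF_HEIGHT * 2  # 50688 bytes
```

Truncation to 5 bits discards the three least significant bits of R and B (a quantization error of at most 7 levels) and two bits of G (at most 3 levels), which is worth keeping in mind when comparing results obtained on RGB565 frames with those obtained on 24-bit frames.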

The recently proposed 3D FD filter [31] requires approximately 7.5 s per frame for 176 × 144 images. This filter computes the required membership functions and other fuzzy parameters using operations similar to those of the FMINS filter. In addition, the 3D FD filter calculates arccos functions to estimate the angles between color pixels and combines them with gradient values in its fuzzy rules. The designed filter, in turn, has an additional stage in which the inter-channel similarities are calculated, and this stage does present a considerable computational expense. Nevertheless, in MATLAB simulation experiments in which the FMINS filter was applied to the Miss America and Flowers color video sequences corrupted by impulsive noise of 10 % intensity, the execution times were 18 % and 15 % lower, respectively, than those of the 3D FD filter. Assuming that these ratios carry over to the DSP, they give an approximate processing time for the novel filter in a DSP implementation of about 6.2 s and 6.4 s per frame (7.5 s × 0.82 ≈ 6.2 s; 7.5 s × 0.85 ≈ 6.4 s). Information about the speed of the FRINR_seq filter can be found in Table 3 of reference [24]. In general, fuzzy-based algorithms implemented on a DSP take much more time than traditional algorithms, which use simpler mathematical operations. Because the detection and filtering of each pixel are independent of the results obtained for other pixels in a frame, the computation time of the proposed filter can be reduced by performing the detection and filtering stages in parallel.
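The observation that each pixel can be processed independently suggests a simple data-parallel decomposition: split the frame into horizontal bands and process the bands concurrently. The Python sketch below illustrates only this decomposition. The per-pixel function `process_pixel` is a hypothetical stand-in (a per-channel low median over the 3 × 3 window of the current frame plus the co-located pixel of the previous frame), not the FMINS fuzzy detection and filtering rules, and all names are assumptions. Because CPython threads share a global interpreter lock, this example is unlikely to run faster than the serial version; on a DSP or in compiled code the bands would map onto real hardware parallelism.

```python
from concurrent.futures import ThreadPoolExecutor
from statistics import median_low


def process_pixel(prev, cur, y, x):
    """Hypothetical stand-in for the per-pixel detection/filtering stage (NOT the
    FMINS rules): per-channel low median of the 3x3 window of the current frame
    (borders clamped) plus the co-located pixel of the previous frame."""
    h, w = len(cur), len(cur[0])
    samples = [cur[min(max(y + dy, 0), h - 1)][min(max(x + dx, 0), w - 1)]
               for dy in (-1, 0, 1) for dx in (-1, 0, 1)]
    samples.append(prev[y][x])
    return tuple(median_low([px[c] for px in samples]) for c in range(3))


def process_rows(prev, cur, rows):
    # Each output pixel reads only the input frames, never other output pixels,
    # so any subset of rows can be processed independently of the others.
    return [[process_pixel(prev, cur, y, x) for x in range(len(cur[0]))]
            for y in rows]


def filter_frame_serial(prev, cur):
    return process_rows(prev, cur, range(len(cur)))


def filter_frame_parallel(prev, cur, workers=4):
    h = len(cur)
    rows_per_band = -(-h // workers)  # ceiling division
    bands = [range(start, min(start + rows_per_band, h))
             for start in range(0, h, rows_per_band)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map returns results in input order, so concatenating the bands
        # reassembles the frame in the original row order.
        results = list(pool.map(lambda rows: process_rows(prev, cur, rows), bands))
    return [row for band_rows in results for row in band_rows]
```

Because every output pixel reads only the unmodified input frames, the banded result is identical to the serial one; this equality is the property that a parallel DSP implementation would also have to preserve.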

5 Conclusions

The proposed 3D filtering framework is based on fuzzy set logic combined with the basic gradient values, four related gradient values in different directions, inter-channel correlations, and the previous and current temporal frames. We have demonstrated that this novel filter performs better than the best fuzzy and non-fuzzy filters considered in this study. In color video sequences, the proposed method successfully suppressed impulsive noise over a wide range of noise intensities while preserving edges and fine features. Based on the PSNR, MAE, NCD and SSIM criteria and a visual analysis of the filtered video sequences, the novel approach was highly effective at reproducing the chromatic characteristics of the images. In addition, several promising 3D algorithms were implemented on a DSP platform, and their processing speeds and computation times were analyzed. Future work will focus on improving the current method by adding fuzzy rules that incorporate more information, in order to better distinguish noisy pixels from fine image features. Further effort will be devoted to increasing the speed of the algorithm by processing several pixels in parallel.

References

Plataniotis, K.N., Androutsos, D., Venetsanopoulos, A.N.: Multichannel filters for image processing. Signal Process. Image Commun. 9(2), 143–158 (1997)

Morillas, S., Gregori, V., Peris-Fajarnés, G.: Isolating impulsive noise pixels in color images by peer group techniques. Comput. Vis. Image Understand. 110(1), 102–116 (2008)

Ponomaryov, V.I., Gallegos-Funes, F.J., Rosales-Silva, A.: Real-time color imaging based on RM-filters for impulsive noise reduction. J. Imaging Sci. Technol. 49(3), 205–219 (2005)

Lukac, R.: Adaptive vector median filtering. Pattern Recogn. Lett. 24(12), 1889–1899 (2003)

Jin, L., Li, D.: A switching vector median filter based on the CIELAB color space for color image restoration. Signal Process. 87(6), 1345–1354 (2007)

Yin, H.B., Fang, X.Z., Wei, Z., Yang, X.K.: An improved motion-compensated 3-D LLMMSE filter with spatio-temporal adaptive filtering support. IEEE Trans. Circuits Syst. Video Technol. 17(12), 1714–1727 (2007)

Camacho, J., Morillas, S., Latorre, P.: Efficient impulse noise suppression based on statistical confidence limits. J. Imaging Sci. Technol. 50(5), 427–436 (2006)

Ponomaryov, V., Rosales-Silva, A., Golikov, V.: Adaptive and vector directional processing applied to video colour images. Electron. Lett. 42(11), 623–624 (2006)

Ponomaryov, V., Rosales, A., Gallegos, F.: 3D filtering of colour video sequences using fuzzy logic and vector order statistics. In: Proceedings of International Conference, LNCS 5807, pp. 210–221 (2009)

Kravchenko, V.F., Ponomaryov, V.I., Pustovoit, V.I.: Three-dimensional filtration of multichannel video sequences on the basis of fuzzy-set theory. Doklady Phys. 55(2), 58–63 (2010)

Schulte, S., De Witte, V., Nachtegael, M., Vander Weken, D., Kerre, E.E.: Fuzzy random impulse noise reduction method. Fuzzy Sets Syst. 158, 270–283 (2007)

Schulte, S., Nachtegael, M., De Witte, V., Vander Weken, D., Kerre, E.E.: A fuzzy impulse noise detection and reduction method. IEEE Trans. Image Process. 15(5), 1153–1162 (2006)

Schulte, S., Morillas, S., Gregori, V., Kerre, E.E.: A new fuzzy color correlated impulse noise reduction method. IEEE Trans. Image Process. 16(10), 2565–2575 (2007)

Morillas, S., Gregori, V., Peris-Fajarnés, G., Latorre, P.: A fast impulsive noise color image filter using fuzzy metrics. Real Time Imaging 11(5–6), 417–428 (2005)

Russo, F.: Hybrid neuro-fuzzy filter for impulse noise removal. Pattern Recogn. 32, 1843–1855 (1999)

Chan, R.H., Hu, C., Nikolova, M.: An iterative procedure for removing random-valued impulse noise. IEEE Signal Process. Lett. 11(12), 921–924 (2004)

Morillas, S., Gregori, V., Peris-Fajarnés, G., Latorre, P.: A fast impulsive noise color image filter using fuzzy metrics. Real Time Imaging 11(5–6), 417–428 (2005)

Morillas, S., Gregori, V., Hervás, A.: Fuzzy peer groups for reducing mixed Gaussian-impulse noise from color images. IEEE Trans. Image Process. 18(7), 1452–1466 (2009)

Camarena, J.G., Gregori, V., Morillas, S., Sapena, A.: Some improvements for image filtering using peer group techniques. Image Vis. Comput. 28(1), 188–201 (2010)

Smolka, B., Plataniotis, K.N., Venetsanopoulos, A.N.: Nonlinear techniques for color image processing. In: Barner, K.E., Arce, G.R. (eds.) Nonlinear Signal and Image Processing: Theory, Methods, and Applications, pp. 445–505. CRC Press, Boca Raton (2004)

Smolka, B., Venetsanopoulos, A.N.: Noise reduction and edge detection in color images. In: Color Image Processing: Methods and Applications, pp. 75–102. CRC Press, Boca Raton (2006)

Celebi, M.E., Kingravi, H.A., Aslandogan, Y.A.: Nonlinear vector filtering for impulsive noise removal from color images. J. Electron. Imaging 16(3), 033008 (2007)

Lukac, R., Smolka, B., Martin, K., Plataniotis, K.N., Venetsanopoulos, A.N.: Vector filtering for color imaging. IEEE Signal Process. Mag. 22(1), 74–86 (2005)

Melange, T., Nachtegael, M., Kerre, E.: Fuzzy random impulse noise removal from color image sequences. IEEE Trans. Image Process. 20(4), 959–970 (2011)

Melange, T., Nachtegael, M., Kerre, E.E., Zlokolica, V., Schulte, S., De Witte, V., Pizurica, A., Philips, W.: Video denoising by fuzzy motion and detail adaptive averaging. J. Electron. Imaging 17(4), 043005 (2008)

Melange, T., Nachtegael, M., Schulte, S., Kerre, E.: A fuzzy filter for the removal of random impulse noise in image sequences. Image Vis. Comput. 29, 407–419 (2011)

Jovanov, L., Pizurica, A., Schulte, S., Schelkens, P., Kerre, E., Philips, W.: Combined wavelet-domain and motion-compensated video denoising based on video codec motion estimation methods. IEEE Trans. Circuits Syst. Video Technol. 19(3), 417–421 (2009)

Lukac, R.: Adaptive color image filtering based on center-weighted vector directional filters. Multidimens. Syst. Signal Process. 15(2), 169–196 (2004)

Lukac, R., Plataniotis, K.N., Venetsanopoulos, A.N., Smolka, B.: A statistically-switched adaptive vector median filter. J. Intell. Robot. Syst. 42(4), 361–391 (2005)

Kravchenko, V., Perez, H., Ponomaryov, V.: Adaptive signal processing of multidimensional signals with applications. FizMatLit Edit, Moscow (2009)

Rosales Silva, A., Gallegos Funes, F., Ponomaryov, V.: Fuzzy directional (FD) filter for impulse noise reduction in colour video sequences. J. Visual Commun. Image Represent. 23(1), 143–149 (2012)

Lukac, R., Smolka, B., Plataniotis, K.N.: Sharpening vector median filters. Signal Process. 87(9), 2085–2099 (2007)

Celebi, M.E.: Real-time implementations of order-statistics-based directional filters. IET Image Process. 3(1), 1–9 (2009)

Smolka, B.: Adaptive edge enhancing technique of impulsive noise removal in color digital images. Lect. Notes Comput. Sci. 6626, 60–74 (2011)

Ponomaryov, V., Gallegos-Funes, F., Rosales-Silva, A.: Fuzzy directional (FD) filter to remove impulse noise from colour images. IEICE Trans. Fundam. Electron. Commun. Comput. Sci. E93-A(2), 570–572 (2010)

Morillas, S., Gregori, V., Hervás, A.: Fuzzy peer groups for reducing mixed Gaussian-impulse noise from color images. IEEE Trans. Image Process. 18(7), 1452–1466 (2009)

Ponomaryov, V.: Real-time 2D-3D filtering using order-statistics-based algorithms. J. Real Time Image Process. 1(3), 173–194 (2007)

Lukac, R., Plataniotis, K.N.: A taxonomy of color image filtering and enhancement solutions. In: Hawkes, P.W. (ed.) Advances in Imaging and Electron Physics, vol. 140, pp. 187–264. Academic Press, New York (2006)

Ng, P., Ma, K.K.: A switching median filter with boundary discriminative noise detection for extremely corrupted images. IEEE Trans. Image Process. 15(6), 1506–1516 (2006)

Celebi, M.E., Aslandogan, Y.A.: Robust switching median filter for impulse noise removal. J. Electron. Imaging 17(4), 043006 (2008)

Smolka, B.: Peer group switching filter for impulse noise reduction in color images. Pattern Recogn. Lett. 31(6), 484–495 (2010)

Wang, Z., Bovik, A.C., Sheikh, H.R., Simoncelli, E.P.: Image quality assessment: from error visibility to structural similarity. IEEE Trans. Image Process. 13(4), 600–612 (2004)

Wang, Z., Bovik, A.C.: Mean squared error: love it or leave it? A new look at signal fidelity measures. IEEE Signal Process. Mag. 26(1), 98–117 (2009)

Ponomaryov, V., Rosales, A., Gallegos, F., Loboda, I.: Adaptive vector directional filters to process multichannel images. IEICE Trans. Fundam. Electron. Commun. Comput. Sci. E90B(2), 429–430 (2007)

Zlokolica, V., Philips, W., Van De Ville, D.: A new non-linear filter for video processing. In: Proceedings of the Third IEEE Benelux Signal Processing Symposium, pp. 221–224 (2002)

Acknowledgments

The authors would like to thank the National Polytechnic Institute of Mexico and CONACYT for their support of this work.

About this article

Cite this article

Ponomaryov, V., Montenegro, H., Rosales, A. et al. Fuzzy 3D filter for color video sequences contaminated by impulsive noise. J Real-Time Image Proc 10, 313–328 (2015). https://doi.org/10.1007/s11554-012-0262-9

DOI: https://doi.org/10.1007/s11554-012-0262-9