Abstract

Purpose

Propose a fully automatic 3D segmentation framework to segment liver on challenging cases that contain the low contrast of adjacent organs and the presence of pathologies from abdominal CT images.

Methods

First, all of the atlases are weighted in the selected training datasets by calculating the similarities between the atlases and the test image to dynamically generate a subject-specific probabilistic atlas for the test image. The most likely liver region of the test image is further determined based on the generated atlas. A rough segmentation is obtained by a maximum a posteriori classification of probability map, and the final liver segmentation is produced by a shape–intensity prior level set in the most likely liver region. Our method is evaluated and demonstrated on 25 test CT datasets from our partner site, and its results are compared with two state-of-the-art liver segmentation methods. Moreover, our performance results on 10 MICCAI test datasets are submitted to the organizers for comparison with the other automatic algorithms.

Results

Using the 25 test CT datasets, average symmetric surface distance is \(1.09 \pm 0.34\) mm (range 0.62–2.12 mm), root mean square symmetric surface distance error is \(1.72 \pm 0.46\) mm (range 0.97–3.01 mm), and maximum symmetric surface distance error is \(18.04 \pm 3.51\) mm (range 12.73–26.67 mm) by our method. Our method on 10 MICCAI test data sets ranks 10th in all the 47 automatic algorithms on the site as of July 2015. Quantitative results, as well as qualitative comparisons of segmentations, indicate that our method is a promising tool to improve the efficiency of both techniques.

Conclusion

The applicability of the proposed method to some challenging clinical problems and the segmentation of the liver are demonstrated with good results on both quantitative and qualitative experimentations. This study suggests that the proposed framework can be good enough to replace the time-consuming and tedious slice-by-slice manual segmentation approach.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

Introduction

Segmentation of the liver is regarded as a primary and essential step in liver quantitative analysis and may help clinician assess the progress of liver diseases [1]. However, liver segmentation from CT images is still a challenging task due to the low contrast of adjacent organs, the presence of pathologies, and the highly varying shapes between subjects [2, 3]. Thus conventional segmentation techniques are often insufficient to segment liver from a CT dataset.

Previous work on liver segmentation can be roughly classified into two categories. The first approach segments liver by considering pure image information, e.g., thresholding [4], region growing [5], and clustering [6]. The main limitation of these methods is the tendency to leak into neighboring organs with similar intensity values to liver tissue.

The second approach is based on model-based methods for liver segmentation. The basic idea behind these algorithms is to incorporate local and global liver shape prior knowledge for overcoming the limitation of the aforementioned methods [4–6]. They can be further divided into: (1) active and statistical shape models [7–9], (2) atlas-based segmentation [10, 11], and (3) level set-based segmentation [9, 11, 12].

Active shape model (ASMs) was first proposed by Cootes et al. [13]. Since its publication, active shape models have become one of the most active and successful research areas in medical image segmentation. In ASM-based approach, statistical shape models (SSMs) are usually employed to learn the shape prior models [8–10]. However, SSMs tend to overly constrain the shape deformations and overfit the training data due to the small size of training samples. These approaches result in shape prior models that have low generalization ability, that is, they cannot be adapted accurately to the finer local details of shapes in new images.

Atlas-based segmentation was first applied to abdominal organ segmentation by Park et al. [14]. They constructed probabilistic atlas (PA) of organ location for multi-organ segmentation. Okada et al. [15] statistically analyzed the shape of organs and constructed hierarchical multi-organ statistical PAs. Oda et al. [16] divided an atlas database into several clusters to generate multiple PAs of organ location. Shimizu et al. [17] proposed a PA-guided segmentation algorithm with a post-process of an expectation maximization (EM) algorithm to segment 12 organs simultaneously. Since PA was constructed prior to the segmentation process in most of the previous works, all target images shared the same PA for the segmentation process. In recent work, patient-specific PA-based methods have been proposed [18]. Different from the previous works, patient-specific PA is dynamically generated by registering multiple atlases to each new target image dynamically.

Level set methods are also used in medical image segmentation [18–21], and they take into account the local gradient features or/and region features. Gradient-based level set methods are popular for detecting regions of interests when the boundaries are relatively distinct from neighboring structures, for example, in the lungs and pelvis [18]. Oliveira et al. [19] proposed a gradient-based level set model with a new optimization of parameter weighting for liver segmentation. Region-based level set models are often applied to segment detailed anatomical structure such as liver and its vessels [20]. Li et al. [21] suggested a combination of gradient and region properties to improve level set segmentation.

Nevertheless, each of the existing techniques in the literature has limitations when used on challenging cases. The main challenges may be outlined as follows:

-

(i)

Liver tissues and neighboring organs (e.g., kidney, heart, gallbladder, stomach, and the muscles) have similar gray levels. This is particularly challenging for automatic liver extraction.

-

(ii)

Liver tissues containing severe pathological abnormalities are more difficult to handle. In such situations, a false segmentation might occur.

The existing methods cannot be used straightforwardly to achieve satisfactory segmentation result in the aforementioned cases, since challenges involved are different. Alleviating the above difficulties is exactly what we are concerned with in this paper.

We develop a fully automatic framework to segment liver from abdominal CT images. It starts by calculating the similarities between all atlases and the test dataset to dynamically generate a subject-specific probabilistic atlas, and followed by determining the most likely liver region (MLLR) of the test image based on the generated atlas. A maximum a posteriori (MAP) classification of probability map is described to perform the rough segmentation, and a shape–intensity prior level set is presented to produce the final liver segmentation inside the MLLR. Our method is evaluated and demonstrated on 25 test CT datasets, and its results are compared with two closely related approaches [12, 22]. Moreover, our performance results on 10 MICCAI test datasets are submitted to the organizers for comparison with the other automatic algorithms.

Methods

In this section, we describe the proposed segmentation framework in detail. It is a multistep approach that gradually accumulates information until the final result is produced. The flowchart of the segmentation framework is depicted in Fig. 1. It is subdivided into a training and test phase. In the rest of this section, we further describe its individual steps and explain how to segment the liver automatically from abdominal CT images combining probabilistic atlas and probability map constrains.

Preprocessing of CT datasets

The preprocessing stage contains three steps. All the preprocessed steps are applied to both training and test datasets. First, all volumes are regularized by rotating based on the centers of mass of lungs to reduce the individual change with respect to the body pose and position. The lung regions are extracted automatically from each image by thresholding and connected component analysis. Second, a 3D anisotropic diffusion filter introduced by [23] is utilized for reducing noise while preserving liver contour. Third, all the volumes are resampled in the transverse direction to the same number of slices using trilinear interpolation scheme since the principal component analysis (PCA) input vector requires a fixed number of elements.

In the next section, we will present the segmentation scheme, which consists of three major steps. The first step describes the determination of the most likely liver region (MLLR) of the test image based on the generated atlas, as shown in Fig. 2a. The second step illustrates the rough segmentation strategies based on the MAP classification of probability map, as shown in Fig. 2b–d. The third step provides a procedure for refining the rough boundary by using shape–intensity prior level set method, as shown in Fig. 2e, f.

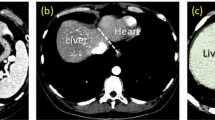

Illustration of liver segmentation steps. a The most likely region (MLLR) generated by the patient-specific weighted probabilistic atlas (PA). After constructing the patient-specific weighted PA for the input dataset, one binary mask is generated by thresholding the PA image to represent the most likely region. b The intensity histogram generated by the samples of the trained masks of each class. To generate probability map, we divide the CT intensities inside the MLLR into five classes: heart, liver, right kidney, spleen, and bone excluding the background. c Liver probability map in the MLLR of the selected slice. High probabilities are shown in white. d The segmented liver region after discarding erroneous subvolumes. e Image of initial contour voxels overlaid on original image. Initial contour is used for subsequent constrained level set evolution. f Final liver contour obtained by the constrained level set

Determination of most likely liver region by subject-specific weighted PA

For an input test dataset, the patient-specific weighted probability atlas (PA) is dynamically generated using the following steps. We first compute image similarities between all atlases and the test dataset, and sort the atlases in ascending order with respect to the similarities. The image similarity is evaluated by normalized cross-correlation (NCC) [16], and a weighted PA is calculated by the previous method [11].

After constructing the patient-specific weighted PA for the input dataset, one binary mask is generated by thresholding the PA image to represent the region of interest (ROI) for liver parenchyma. This ROI is the most likely liver region (MLLR see Fig. 2a). To reduce the estimation error of MLLR as much as possible, we perform a morphological dilation with a size of \(5 \times 5 \times 5\) spherical structuring element.

Rough segmentation based on the MAP classification of probability map

To generate probability map, we divide the CT intensities inside the MLLR into five classes ht (heart), lr (liver), rd (right kidney), sn (spleen), and be (bone) excluding the background, because we limit the region to be processed from voxels in the vicinity of the liver parenchyma mask where the prior probability of liver is greater than zero. The intensity histogram of each class is generated by the samples of the trained masks of each class in the training phase (see Fig. 2b). Likelihood function for each class \(p(I(\mathbf x )|l)(l={ ht,lr,rd,sn,be})\) is estimated convolving the intensity histogram of each class with a Gaussian kernel (SD of 2.5), where \(I(\mathbf x )\) is the CT intensity at a position \(\mathbf x =(x,y,z)\). \(p(I(\mathbf x )|l)\,{=}\,\sum \nolimits _{s=I_{\mathrm{min}}}^{I_{\mathrm{max}}}h(s)/H\times g_{\sigma }(I(\mathbf x )-s), g_{\sigma }(\mathbf y )\,{=}\,\exp (-\mathbf y ^2/(2 {\sigma }^2)), H\,{=}\,\sum _{s=I_{\mathrm{min}}}^{I_{\mathrm{max}}}h(s)\), with h(s) denoting the histogram of the observed intensities and \(s\in [I_{\mathrm{min}},I_{\mathrm{max}}]\). Figure 2c shows a generated liver probability map.

After the probability map generation step, the current MLLR volume may be split into several subvolumes, where each subvolume belongs to one of the aforementioned five classes. The liver parenchyma class calculated by a MAP classification of probability map \(P({ lr})\) may contain erroneous segmentations since liver and its adjacent organs, such as heart and right kidney show similar intensities in the CT datasets. Based on the fact that the liver is the biggest abdominal organ, hence by an additional morphological filling-holes operation, we determine the liver part by connected component analysis. In this way, some subvolumes that are wrongly classified as liver are discarded (Fig. 2d).

Refinement of the rough segmentation by shape–intensity prior level set method

MAP framework with shape–intensity prior

Considering a target image I that has an object \(\varphi \) of interest, a MAP framework can be used to realize image segmentation combining shape information and image intensity information. Our main concern is the segmentation of the object \(\hat{\varphi }\), so we assume that the synthetic image \(I_{\varphi }\) is very close to the real image I, thus \(I_{\varphi } \approx I\), and we can obtain the following equation:

where \(p(I| \varphi , I_{\varphi })\) is the image intensity information based term. \(p(\varphi , I_{\varphi })\) is the joint density function of shape \(\varphi \) and image intensity I. Assuming intensity homogeneity within the object, we use the following imaging model:

where \(c_1\) and \(\sigma _1\) are the average and variance of I inside \(\varphi \); \(c_2\) and \(\sigma _2\) are the average and variance of I outside \(\varphi \) but also inside a certain domain \(\varOmega _\varphi \) that contains \(\varphi \). \(p(\varphi ,I)\) is the joint density function of shape \(\varphi \) and image intensity I. It contains the shape prior information, the intensity prior information, as well as their relation.

Shape–intensity prior model

Consider a training set of n aligned images \(\{I_1, I_2, \ldots , I_n\}\), with an object of interest in each image. The boundaries of the n objects in the training set are embedded as the zero level sets of n separate higher-dimensional level set functions \(\{\varPsi _1,\varPsi _2,\ldots ,\varPsi _n\}\) with negative distances assigned to the inside and positive distances assigned to the outside of the object. We use vector \([\varPsi _i^\mathrm{T},I_i^\mathrm{T}]^\mathrm{T}\) as the representation of the object and intensity values. Each of the \(I_i\) and \(\varPsi _i\) is placed as a column vector with \(N_1 \times N_2 \times m\) elements where m is the number of slices and \(N_1 \times N_2\) is the number of pixels in each slice. Thus, the corresponding shape–intensity training set is \(\{[\varPsi _1^\mathrm{T},I_1^\mathrm{T}]^\mathrm{T},[\varPsi _2^\mathrm{T},I_2^\mathrm{T}]^\mathrm{T},\ldots ,[\varPsi _n^\mathrm{T},I_n^\mathrm{T}]^\mathrm{T} \}\). Using the technique developed in [20], we compute the mean shape–intensity pair \([\bar{\varPsi }^\mathrm{T},{\bar{I}}_i^\mathrm{T}]^\mathrm{T}=(\frac{1}{n})\sum _{i=1}^{n}[\bar{\varPsi }_i^\mathrm{T}, \bar{I}_i^\mathrm{T} ]^\mathrm{T}\). To extract the shape–intensity variabilities, \([\varPsi ^\mathrm{T},I^\mathrm{T}]^\mathrm{T}\) is subtracted from each of the training set and the result is placed as a column vector in a \(N \times n\) matrix S (where \(N = 2N_1 \times N_2 \times m\)). An estimate of the shape–intensity pair \([\varPsi ^\mathrm{T},I^\mathrm{T}]^\mathrm{T}\) can be represented by k principal components and a k-dimensional vector of coefficients \(\alpha \) (where \(k < n\)):

where \(U_k\) is a \(N \times k\) matrix consisting of the first k columns of matrix U.

Under the assumption of a Gaussian distribution of a shape–intensity pair represented by \(\alpha \), the joint probability \(p(\varphi ,I)\) of a certain shape \(\varphi \) and the related image intensity I, is incorporated to add robustness against noisy data \(p_B(\varphi )\), can be represented by

where \(\mu \) is a scalar factor. Substitute Eqs. (2) and (4) into Eq. (1), we derive \(\hat{\varphi }\), which is the minimizer of the following energy functional \(E(\varphi )\) shown below

This minimization problem can be formulated and solved using the level set method.

Level set formulation of the model and surface evolution with probability map constrained

In the level set method, \(\varphi \) is the zero level set of a higher-dimensional level set function \(\phi \), i.e., \(\varphi = \{\mathbf{x }|\phi (\mathbf{x } = 0)\}\). The evolution of the boundary is given by the zero-level surface at time t of the function \(\phi (t, \mathbf x )\). We define \(\phi \) to be positive outside \(\varphi \) and negative inside \(\varphi \).

For the level set formulation of our model, using the technique developed in [7], we replace \(\varphi \) with \(\phi \) in the energy functional in Eq. (5) using regularized versions of the Heaviside function H and the Dirac function \(\delta \), denoted by \(H_{\varepsilon }\) and \(\delta _{\varepsilon }\):

where \(\varOmega \) denotes the image domain, \(\varOmega _{\varphi }\) denotes the region of bounding box of MLLR, \(G(\cdot )\) is an operator to form the column vector of a matrix by column scanning. \(H_{\varepsilon }\) and \(\delta _{\varepsilon }\) are defined by Chan et al. [7]. The mean and the variance are given by

We set up \(p(\alpha )\) from the training set using the principal component analysis. Given the surface \(\phi \) at time t, we first compute the constants \(c_1(\phi ^t), \sigma _1(\phi ^t), c_2(\phi ^t), \sigma _2(\phi ^t)\) and then update \(\phi ^{t+1}\). This process is repeated until convergence. An example of the final converged result is shown in Fig. 2f.

Experimental results

Twenty clinical volumes with reference segmentations from the training set of the MICCAI’s 2007 workshop [24] are used to construct liver shape intensity models. Our test experiments are divided into three parts. In the first part, 25 hepatic CT cases from our partner site were used for evaluating the segmentation accuracy. These 25 selected datasets include two difficult situations: (1) 10 cases containing small liver tumors (tumor volume being \(<\)20 % of the whole liver volume; 4 at the border, 6 inside; 8 homogeneous intensity, 2 heterogeneous intensity); and (2) 15 cases having neighboring organs with similar gray values. Note that tumor volume estimations are made by segmenting the tumor region [25] and counting the number of voxels of the tumor region.

In the second part, 7 hepatic CT cases with large liver tumors were used for showing the influence of the tumor size on the segmentation result. These tumor volume percentages (ratio of tumor volume/liver volume) ranged from 23.2 to 47.3 %. In these two stages, CT images were generated by a Brilliance 64 of the Philips Medical Systems. All the patients were imaged by a common protocol (120 kV/Auto mA, helical pitch: 1.35/1). The image size varied from \(512 \times 512 \times 210\) to \(512 \times 512 \times 540\) voxels, with pixel sizes varying from 0.51 to 0.87 mm and interslice distance ranging from 0.8 to 3.0 mm.

In the final part, our method is tested on 10 MICCAI test datasets, and its results are compared with the other automated algorithms on the site as of July 2015. Parameters used in our experiment are all set to be the following values: \(\lambda = 0.2, \omega = 0.8, \mu = 0.0001 \times 255 \times 255\).

Test on 25 hepatic CT cases

In this section, our method is evaluated using three quality measures: average symmetric surface distance (ASD), root mean square symmetric surface distance (RMSD), and maximum symmetric surface distance (MSD) [26], and its results are compared with two related methods (Linguraru’s and Wolz’s methods). Moreover, we demonstrate further visual segmentation results of three different methods (Linguraru’s, Wolz’s and our methods) on some challenging datasets. These datasets include two difficult situations: (1) liver tissue containing severe pathological abnormalities; and (2) liver tissue having neighboring organs with similar gray values.

Quantitative analysis of segmentation accuracy

Figure 3a shows the ASD error using 25 datasets for each of three different segmentation methods. The average ASD is 1.57 \(\pm \) 0.34 mm (range 1.12–2.57 mm) by Linguraru’s method, 1.48 \(\pm \) 0.35 mm (range 1.02–2.47 mm) by Wolz’s method, and 1.09 \(\pm \) 0.34 mm (range 0.62–2.12 mm) by our method.

Figure 3b shows the RMSD error using 25 datasets for each of three different segmentation methods. The average RMSD error is 2.33 \(\pm \) 0.47 mm (range 1.57–3.48 mm) by Linguraru’s method, 2.23 \(\pm \) 0.46 mm (range 1.46–3.41 mm) by Wolz’s method, and 1.72 \(\pm \) 0.46 mm (range 0.97–3.01 mm) by our method.

Figure 3c shows the MSD errors using 25 datasets for each of three different segmentation methods. The average MSD error is 21.11 \(\pm \) 4.6 mm (range 14.81–33.01 mm) by Linguraru’s method, 20.1 \(\pm \) 4.45 mm (range 13.39–31.37 mm) by Wolz’s method, and 18.04 \(\pm \) 3.51 mm (range 12.73–26.67 mm) by our method.

Quantitative results show that the proposed method achieved the highest segmentation accuracy among the three approaches. This is because Linguraru’s and Wolz’s methods are actually an atlas-based method, and they require a large amount of training data for obtaining a satisfactory segmentation result. In the present study, 20 clinical volumes with reference segmentations from the MICCAI’s 2007 workshop are used as the training set. These limited training data cannot present all possible shape variations. Conversely, our method does not need a large amount of training data for obtaining a satisfactory segmentation result. Because the weighted probabilistic atlas was used for obtaining a rough liver region, a constrained active contour model is proposed to refine the segmentation.

Visual segmentation results on hepatic cases containing severe pathological abnormalities

Figure 4 shows the 2D images of segmentation results obtained from the three methods (Linguraru’s, Wolz’s and our methods) on three representative cases containing severe pathological abnormalities. Our method produced the liver segmentations that have level of variability similar to those obtained from the manual segmentation. It is observed that in comparison with our method, Linguraru’s and Wolz’s methods gave the under-segmentation of the livers due to the influence of tumors.

2D images of segmentation results on three representative liver tissues containing tumor. The first row, the second row and third row illustrate three different liver cases with tumor, respectively. First column shows the Linguraru’s segmentation, the second column shows the Wolz’s segmentation, and the third column shows our segmentation. The segmentation results obtained from each of computational methods (Linguraru’s, Wolz’s and our methods) and manual segmentations (ground truth segmentations) are shown in blue and red, respectively. The black arrows indicate liver tumors

3D visual representation of livers segmented by our method on three representative liver tissues containing tumor. 3D views of our segmentation results are of the same segmentation seen from the third column of Fig. 4. Graphs indicate the ASD error between liver surface segmented by our method and ground truth segmentation. Color bars are in mm. Red and green indicate large and small ASD errors, respectively

Figure 5 depicts the 3D visual results of the proposed method for the same segmentation seen from the third column of Fig. 4. The 3D visualization of errors is based on the average ASD error between our segmentation result and manual segmentation (ground truth segmentations). In the figure, the ASD distance errors were 1.31, 1.22 and 1.46 mm for the three datasets (from left to right), respectively.

2D images of segmentation results on five representative cases that liver tissue is adjacent to the other organ. Linguraru’s segmentation is in the first column, the second column shows the Wolz’s segmentation, the third column shows our segmentation. The segmentation results obtained from each of three computational methods and the ground truth segmentations are shown in blue and red, respectively. a Liver being adjacent to the kidney. The black arrows indicate the kidney. b Liver being adjacent to the heart. The black arrows indicate the heart. c Liver being adjacent to the gallbladder. The black arrows indicate the gallbladder. d Liver being adjacent to the stomach. The black arrows indicate the stomach. e Liver being adjacent to the muscles. The black arrows indicate the muscles

Visual segmentation results on hepatic cases having neighboring organs with similar gray values

In Fig. 6, we demonstrate the 2D visual segmentation results of three different methods (Linguraru’s, Wolz’s and our methods) on five challenging datasets. In these difficult cases, the liver tissue has to be separated from neighboring organs with similar intensity values, which include the kidney (Fig. 6a), the heart (Fig. 6b), the gallbladder (Fig. 6c), the stomach (Fig. 6d) and the muscles (Fig. 6e), respectively. As shown in Fig. 6, Linguraru’s and Wolz’s methods easily leaked into these neighboring organs with similar intensity values and over-segmented the live tissue, while our method successfully excluded them and achieved the higher accuracy.

Figure 7 shows the 3D visual results of the proposed method for the same segmentation seen from the third column of Fig. 6. Segmentation of the image data demonstrated the ASD errors between our segmentation result and ground truth segmentations in five cases, the ASD errors of the five cases ranged from 0.71 to 1.25 mm (mean 0.92 \(\pm \) 0.28 mm).

Influence of the tumor size on the segmentation result

Seven hepatic CT cases with large liver tumor were used for showing the influence of the tumor size on the segmentation result. These tumor volume percentages (ratio of tumor volume/liver volume) ranged from 23.2 to 47.3 %. One of these cases and its visual comparisons among three methods are shown in Fig. 8.

Visual representation of 3D livers segmented by our method on five representative cases that liver tissue is adjacent to the other organ. 3D views of our segmentation results are of the same segmentation seen from the third column of Fig. 6. Graphs indicate the ASD error between liver surface segmented by our method and ground truth segmentation. a Liver being adjacent to the kidney. b Liver being adjacent to the heart. c Liver being adjacent to the gallbladder. d Liver being adjacent to the stomach. e Liver being adjacent to the muscles. Color bars are in mm. Red and green indicate large and small ASD errors, respectively

2D images of segmentation results on one representative cases that tumor size accounts for about 42 %. The segmentation results obtained from each of three computational methods and ground truth segmentations are shown in blue and red, respectively. a Linguraru’s result. b Wolz’s result. c Our result

We also calculated the ASD, RMSD and MSD errors using seven datasets with large liver tumor for each of three different segmentation methods, respectively (Fig. 9). We find that when the tumor volume is 35 % or more, three methods introduce large segmentation errors. The reason for this is because large tumor changes the internal homogeneity and CT intensity value of liver tissue considerably. Thus, our method cannot evolve correctly toward the desired contour. Moreover, Linguraru’s and Wolz’s methods require highly accurate intensity-based registration, which may be itself a difficult task.

Evaluation on 10 MICCAI test datasets

Evaluation of MICCAI test data was performed by the organizers of the “SLIVER07” Web site. These organizers applied the same tools and scoring system that they used in the MICCAI workshop for liver segmentation in 2007, and this tool calculates the same five measures as “SLIVER07.” The evaluation framework consists of three categories that describe the amount of user interaction required: Automatic method, methods with minimum user interaction (semi-automatic) and interactive methods. Our evaluation results are summarized in Table 1 with an average overall score of 74.9, which ranks 10th in all the 47 automatic algorithms on the site as of July 2015.

Discussion

We improved the level set method by taking account into the statistical model information as shape energy. The sign distance function was applied to represent the implicit surface representation which allowed the PCA construction without the need of landmark correspondence.

All the methods have been programmed with MATLAB R2010a in an Intel (R) Core (TM) i7-4770 computer, 3.40 GHz, 16G RAM. The processing time will be a little less in Microsoft Visual C++ compiler environment than that in MATLAB environment. However, for an experienced radiologist it is required to take more than 2 h to segment the liver from abdominal CT images manually in a scan with slice by slice, so our approach is fast and suitable for medical application. In our experiments, the execution time for performing automatic segmentation on a personal computer was \(<\)7 min in MATLAB environment, which was mainly exhausted on the minimization problem solved by suing the level set method. The processing time for the 25 patient datasets was about 2 s per slice.

Probabilistic atlas-based methods were introduced to describe and capture more a priori information on the intensity distribution, shape, size, or position of abdominal organs and brain regions [27, 28]. The probabilistic atlas provides prior statistical information as partial prior knowledge under the Bayesian framework. However, some liver tissues are affected by hepatic diseases that may change its shape, so it is difficult to construct atlas of liver tissue with diseases. For example, the morphological change in cirrhosis is extensive, and a partially transplanted liver does not have a regular shape. Therefore, constructing such an atlas may lose some possible shape variations.

To overcome this problem, Okada et al. [8] used both a probabilistic atlas (PA) and a statistical shape model (SSM). Voxel-based segmentation with a PA is first performed to obtain a liver region, and then the obtained region is used as the initial region for subsequent SSM fitting to 3D CT images. Li et al. [28] propose a new joint probabilistic model of shape and intensity to segment multiple abdominal organs simultaneously. The model is based on the hypothesis that the shape distribution and intensity distribution of a specific type of organ can be statistically modeled as finite Gaussian distributions. This model is estimated by using maximum a posteriori (MAP) under a Bayesian framework. The variations of the object are captured through an implicit low-dimensional PCA. Li et al. use the probabilistic principle component analysis (PCA) optimized by EM to estimate the variations from a large number of training datasets based on the signed distance function representation of volumetric data.

In the present study, the most likely liver region is determined based on a subject-specific probabilistic atlas, and a MAP classification of probability map is performed to obtain a rough liver region in the most likely liver region. In the subsequent step, to capture the shape variations, we define a joint probability distribution over the variations of the object shape and the gray levels contained in a set of training images. By estimating the MAP shape of the object, we formulate the shape–intensity model in terms of level set functions. The shape–intensity joint prior information constrain is incorporated into an active contour model based on the level set method to steer the surface evolution. The contour evolves both according to the shape–intensity joint prior information and the image gray level information.

Conclusion

This paper proposed a shape–intensity prior level set method for liver segmentation from abdominal CT images using probabilistic atlas and probability map constrains. The applicability of the proposed method to some challenging clinical problems and the segmentation of the liver are demonstrated with good results on both quantitative and qualitative experimentations; our segmentation algorithm can delineate liver boundaries that have level of variability similar to those obtained manually.

In future, we plan to test our algorithm on a larger database and extend our work to multi-organ segmentation.

References

Meinzer HP, Thorn M, Crdenas CE (2002) Computerized planning of liver surgery—an overview. Comput Graph 26(4):569–576

Masumoto J, Hori M, Sato Y, Murakami T, Johkoh T, Nakamura H, Tamura S (2003) Automated liver segmentation using multislice CT images. Syst Comput 34(9):71–82

Shiffman S, Rubin GD, Napel S (2000) Medical image segmentation using analysis of isolable-contour maps. IEEE Trans Med Imaging 19(11):1064–1074

Bae KT, Giger ML, Chen CT, Kahn CE Jr (1993) Automatic segmentation of liver structure in CT images. Med Phys 20(1):71–78

Ruskó L, Bekes G, Fidrich M (2009) Automatic segmentation of the liver from multi- and single-phase contrast-enhanced CT images. Med Image Anal 13(6):871–882

Selver MA, Kocaoǧlu A, Demir GK, Doanǧ H, Dicle O, Güzeliş C (2008) Patient oriented and robust automatic liver segmentation for pre-evaluation of liver transplantation. Comput Biol Med 38(7):765–784

Chan TF, Vese LA (2001) Active contours without edges. IEEE Trans Image Process 10(2):266–277

Okada T, Shimada R, Hori M, Nakamoto M, Chen Y-W, Nakamura H, Sato Y (2008) Automated segmentation of the liver from 3D CT images using probabilistic atlas and multilevel statistical shape model. Acad Radiol 15(11):1390–1403

So R, Chung A (2009) Multi-level non-rigid image registration using graph-cuts. In: IEEE international conference on acoustics, speech and signal processing, 2009. ICASSP 2009. IEEE, pp 397–400

Wimmer A, Soza G, Hornegger J (2009) A generic probabilistic active shape model for organ segmentation. In: MICCAI. Springer, pp 26–33

Chu C, Oda M, Kitasaka T, Misawa K, Fujiwara M, Hayashi Y, Wolz R, Rueckert D, Mori K (2013) Multi-organ segmentation from 3D abdominal CT images using patient-specific weighted-probabilistic atlas. In: SPIE medical imaging. International Society for Optics and Photonics, pp 86693–86697

Linguraru MG, Sandberg JK, Li Z, Pura JA, Summers RM (2009) Atlas-based automated segmentation of spleen and liver using adaptive enhancement estimation. In: MICCAI. Springer, pp 1001–1008

Cootes TF, Taylor CJ, Cooper DH, Graham J (1995) Active shape models-their training and application. Comput Vis Image Underst 61(1):38–59

Park H, Bland PH, Meyer CR (2003) Construction of an abdominal probabilistic atlas and its application in segmentation. IEEE Trans Med Imaging 22(4):483–492

Okada T, Yokota K, Hori M, Nakamoto M, Nakamura H, Sato Y (2008) Construction of hierarchical multi-organ statistical atlases and their application to multi-organ segmentation from CT images. In: MICCAI. Springer, pp 502–509

Oda M, Nakaoka T, Kitasaka T, Furukawa K, Misawa K, Fujiwara M, Mori K (2012) Organ segmentation from 3D abdominal CT images based on atlas selection and graph cut. In: Abdominal Imaging. Computational and clinical applications. Springer, pp 181–188

Shimizu A, Ohno R, Ikegami T, Kobatake H, Nawano S, Smutek D (2007) Segmentation of multiple organs in non-contrast 3D abdominal CT images. Int J Comput Assist Radiol Surg 2(3–4):135–142

Wolz R, Chu C, Misawa K, Mori K, Rueckert D (2012) Multi-organ abdominal CT segmentation using hierarchically weighted subject-specific atlases. In: MICCAI. Springer, pp 10–17

Oliveira DA, Feitosa RQ, Correia MM (2011) Segmentation of liver, its vessels and lesions from CT images for surgical planning. Biomed Eng Online 10(1):30

Yang J, Duncan JS (2004) 3D image segmentation of deformable objects with joint shape–intensity prior models using level sets. Med Image Anal 8(3):285–294

Li BN, Chui CK, Chang S, Ong SH (2011) Integrating spatial fuzzy clustering with level set methods for automated medical image segmentation. Comput in Biol Med 41(1):1–10

Linguraru MG, Richbourg WJ, Watt JM, Pamulapati V, Summers RM (2012) Liver and tumor segmentation and analysis from CT of diseased patients via a generic affine invariant shape parameterization and graph cuts. In: Abdominal imaging. Computational and clinical applications. Springer, pp 198–206

Perona P, Malik J (1990) Scale-space and edge detection using anisotropic diffusion. IEEE Trans Pattern Anal Mach Intell 12(7):629–639

Van Ginneken B, Heimann T, Styner M (2007) 3D segmentation in the clinic: a grand challenge. 3D segmentation in the clinic: a grand challenge, pp 7–15

Chi Y, Zhou J, Venkatesh SK, Huang S, Tian Q, Hennedige T, Liu J (2013) Computer-aided focal liver lesion detection. Int J Comput Assist Radiol Surg 8(4):511–525

Heimann T, Van Ginneken B, Styner M et al (2009) Comparison and evaluation of methods for liver segmentation from CT datasets. IEEE Trans Med Imaging 28(8):1251–1265

Zhou X, Kitagawa T, Hara T, Fujita H, Zhang X, Yokoyama R, Kondo H, Kanematsu M, Hoshi H (2006) Constructing a probabilistic model for automated liver region segmentation using non-contrast X-ray torso CT images. In: MICCAI. Springer, pp 856–863

Li C, Wang X, Li J, Eberl S, Fulham M, Yin Y, Feng DD (2013) Joint probabilistic model of shape and intensity for multiple abdominal organ segmentation from volumetric CT images. IEEE Trans Inf Technol Biomed 17(1):92–102

Acknowledgments

The authors would like to thank the anonymous reviewers for their valuable comments and help suggestions that greatly improved the papers quality. This work was supported in part by the National Natural Science Foundation of China under Grant No. 61571158; the Scientific Research Fund of Heilongjiang Provincial Education Department (No. 12541164), and the Nature Science Foundation of Heilongjiang Province of China (No. F2015005).

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

There is no conflict of interest in this study.

Rights and permissions

About this article

Cite this article

Wang, J., Cheng, Y., Guo, C. et al. Shape–intensity prior level set combining probabilistic atlas and probability map constrains for automatic liver segmentation from abdominal CT images. Int J CARS 11, 817–826 (2016). https://doi.org/10.1007/s11548-015-1332-9

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11548-015-1332-9