Abstract

Artificial intelligence (AI) is entering the clinical arena, and in the early stage, its implementation will focus on automating tasks, improving diagnostic accuracy, and reducing reading time. Many studies investigate the potential role of AI in supporting cardiac radiologists in their day-to-day work, assisting in segmentation, quantification, and reporting tasks. In addition, AI algorithms can also be used to optimize image reconstruction and image quality. Since these algorithms will play an important role in the field of cardiac radiology, it is increasingly important for radiologists to be familiar with the potential applications of AI. The main focus of this article is to provide an overview of cardiac-related AI applications for CT and MRI studies, as well as non-imaging-based applications for reporting and image optimization.

Introduction

The concept of artificial intelligence (AI) was first introduced in the 1950s [1, 2]. The field has made tremendous progress since then, especially in the last few decades, owing to growth in computing power and the increased availability of data. Along with these technological developments, attention to AI research and development from governments, academia, and the private sector has increased significantly, resulting in greater investment of resources [3,4,5,6,7]. In recent years, the medical community has joined these efforts to develop and implement AI applications for medical purposes. Radiology, in which pattern recognition is a major focus, has proven to be an especially well-suited field for AI applications. AI is well on its way to becoming an integral part of daily clinical practice and has the potential to reduce workload and cost while increasing efficiency and improving patient care.

Within radiology, and especially cardiac radiology, the volume of imaging examinations per day has increased steeply. The clinical acceptance of, and recommendations for, the standardized use of coronary computed tomography angiography (CCTA) and calcium scoring, together with interest in screening programs [8,9,10,11,12,13,14], are expected to further increase the number of cardiac examinations. This increased workload places a heavy demand on radiologists; studies estimate that an average radiologist may have to interpret up to one image every 3–4 s over an 8-h workday [15, 16].

Current cardiac AI applications are mostly designed to be integrated into the existing radiology workflow, with the main goal of assisting radiologists with routine tasks, reducing workload, and increasing the efficiency of patient care. Recently, a variety of studies have been published on AI applications for cardiac imaging. AI can offer solutions for several parts of the imaging workflow, see Fig. 1. Although AI-based applications can play a role in many steps of the radiology workflow, they currently cannot replace a radiologist entirely. Depending on the application, human support or supervision is needed [17].

This article provides an overview of cardiac-related AI applications for the analysis of CT and MRI images, as well as some general applications for reporting and image optimization. Its main aim is to summarize the status of AI in the field of cardiac imaging in order to pave the way for clinical implementation of these applications.

Basic AI principles

To understand how AI applications function, some basic knowledge of the core pillars of AI is helpful. The core ingredients of AI are computing resources and infrastructure, data, and algorithms.

Computing resources and infrastructure

The amount of computational power used in the largest AI training runs has doubled every 3.4 months since 2012 [18]. Progress in the field has been made possible by faster CPUs, the use of graphics processing units (GPUs), and better software infrastructure for distributed computing. In addition, when on-site computing power is not available, cloud computing services can be used for off-site training.

Data

In order to create clinically relevant AI algorithms, it is essential to have access to large datasets. Imaging-related tasks in particular require large amounts of data to reach good accuracy. The ideal dataset should include data from all manufacturers, scanner systems, and clinical settings (e.g., hospitals and outpatient imaging centers) and be a good representation of the clinical population, to allow generalization over multiple centers, cities, and countries. For the training phase, it is essential that these images are labeled according to their reference standard. Currently, there are no specific guidelines for this labeling process.

Ideally, separate training, validation, and test sets are used for AI application development. The training set is used to learn the parameters of the algorithm and form its general structure. After training, the validation set is used to optimize the learning behavior of the algorithm (for example, its hyperparameters). The test set is used to evaluate the performance of the optimized algorithm. Often only training and test sets are defined, where 70–80% of the data is used for model development (training and validation combined) and 20–30% for performance evaluation. It is essential that the test set is independent of the data used for training and validation, without overlapping observations [19].
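In imaging studies, one patient often contributes several images or series, so independence of the test set is usually enforced at the patient level rather than the image level. The following is a minimal sketch of such a split, assuming samples are given as hypothetical `(patient_id, image_id)` pairs and a 70/15/15 ratio; it is illustrative only and not taken from any of the cited studies.

```python
import random
from collections import defaultdict


def split_by_patient(samples, train=0.70, val=0.15, seed=42):
    """Split (patient_id, image_id) pairs into train/val/test sets.

    All images of one patient go to the same set, so no patient
    appears in more than one split (no overlapping observations).
    """
    by_patient = defaultdict(list)
    for patient_id, image_id in samples:
        by_patient[patient_id].append(image_id)

    patients = sorted(by_patient)             # deterministic order before shuffling
    random.Random(seed).shuffle(patients)     # reproducible random assignment

    n = len(patients)
    n_train = round(train * n)
    n_val = round(val * n)
    groups = {
        "train": patients[:n_train],
        "val": patients[n_train:n_train + n_val],
        "test": patients[n_train + n_val:],   # remaining patients
    }
    # Expand patient IDs back into their individual images
    return {name: [(p, img) for p in ids for img in by_patient[p]]
            for name, ids in groups.items()}


# Hypothetical dataset: 20 patients with 3 images each
samples = [(f"P{p:02d}", f"img{i}") for p in range(20) for i in range(3)]
splits = split_by_patient(samples)
print({name: len(rows) for name, rows in splits.items()})
```

Splitting on images instead of patients would let near-identical slices of the same patient leak into the test set and inflate the reported performance.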

Algorithms

A term commonly used in AI-related research is machine learning (ML), which refers to the ability of machines to “learn” from data. In recent years, the term deep learning (DL) has been introduced in the field of AI. DL can be considered a subset of ML and represents the next step in its evolution. Conventional ML requires features to be manually defined and provided to the algorithm, whereas DL learns these features automatically from the data. DL approaches, however, require considerably larger amounts of data and computing power. For a more elaborate overview of AI algorithms, tasks, and development, we refer to a dedicated review paper [20].

Non-imaging applications

Workflow and image reconstruction

Several AI algorithms have been developed with the main goal of optimizing image quality and reconstruction. In these algorithms, a diagnostic-quality or “clean” data sample is the desired output, while a corrupted data sample is given as input.

Image reconstruction applications utilizing AI can improve the quality of images acquired with lower-quality settings (e.g., a low-dose CT acquisition). For example, Wolterink et al. trained a generator convolutional neural network (CNN) to transform low-dose CT images into images with quality nearly equivalent to routine-dose CT, using an anthropomorphic phantom and non-contrast cardiac CT images acquired at 20% and 100% of the routine radiation dose [21]. They reported excellent results and were able to perform accurate calcium analysis on the AI-reconstructed images. Shan et al. designed a modularized neural network for the reconstruction of low-dose CT acquisitions and compared it with commercial iterative reconstruction methods from three leading CT vendors. Their results show that the AI approach performs better than or comparably to commercial iterative reconstruction in terms of noise suppression and structural fidelity, while being much faster [22].

For cardiac magnetic resonance imaging (MRI), these algorithms mainly focus on the reconstruction of undersampled images and the detection of corrupted or incorrectly segmented data. Qin et al. developed a convolutional recurrent neural network (CRNN) architecture that reconstructs high-quality cardiac MR images (cardiac cine MRI SSFP acquisitions) from highly undersampled k-space data and outperformed existing MRI reconstruction methods in terms of reconstruction accuracy and speed [23, 24]. Using these types of AI applications may allow substantially accelerated cardiac MRI acquisitions.

In cardiac MRI, image quality and acquisition time depend on the ability to optimize acquisition parameters for the individual patient, which in turn helps prevent or reduce artifacts from cardiac and respiratory motion. Tarroni et al. proposed an AI-based quality control pipeline for cardiac MRI, specifically validated on 2D cine SSFP short-axis images. Their algorithm performs heart coverage estimation, inter-slice motion detection, and image contrast estimation in the cardiac region, all of which are important aspects of quality control. The results show that the pipeline can correctly detect incomplete or corrupted scans, allowing timely exclusion of these scans and, if needed, triggering a new acquisition within the same examination [25].

Reporting

With increasing imaging volumes and complexity, there is significant clinical interest in optimizing the reporting process to improve workflow efficiency and reduce the time spent on reporting.

Considering the increasing number of CCTA examinations and the low prevalence of coronary artery disease (CAD) reported in patients referred for CCTA [26,27,28], a large number of CCTA examinations will be negative for CAD. It would therefore be beneficial to reduce the time radiologists spend reporting CCTA. Additionally, structured reporting is increasingly used across all modalities to improve communication with referring providers and to guide management. For this purpose, the Coronary Artery Disease-Reporting and Data System (CAD-RADS) was developed by the Society of Cardiovascular Computed Tomography (SCCT), the American College of Radiology (ACR), and the North American Society for Cardiovascular Imaging (NASCI) in 2016. CAD-RADS has been endorsed by the American College of Cardiology (ACC) [29].

Therefore, in the near future, we expect AI applications to increasingly focus on optimizing reporting and reducing the time needed for this process.

Accordingly, AI applications aimed at optimizing CAD-RADS reporting have been developed and published. Muscogiuri et al. developed a deep learning-based algorithm able to discriminate patients without CAD (CAD-RADS 0) from patients with CAD (CAD-RADS > 0) in 1.40 min. The reported sensitivity, specificity, negative predictive value, positive predictive value, accuracy, and area under the curve were 66%, 91%, 92%, 63%, 86%, and 89%, respectively [30]. The authors therefore speculate that in the future this approach could be helpful in clinical practice to preselect the CCTA acquisitions that need to be reported in detail for positive CAD findings [30].
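The performance measures quoted throughout this review all derive from a 2 × 2 confusion matrix of algorithm output against the reference standard. A minimal sketch follows; the counts in the example are hypothetical and are not data from the cited study. They illustrate why, in a population with low CAD prevalence, a high negative predictive value can coexist with modest sensitivity.

```python
def diagnostic_metrics(tp, fp, tn, fn):
    """Standard diagnostic performance measures from a 2x2 confusion matrix."""
    return {
        "sensitivity": tp / (tp + fn),  # true-positive rate
        "specificity": tn / (tn + fp),  # true-negative rate
        "ppv": tp / (tp + fp),          # positive predictive value
        "npv": tn / (tn + fn),          # negative predictive value
        "accuracy": (tp + tn) / (tp + fp + tn + fn),
    }


# Hypothetical counts: 30 patients with CAD, 100 without (low prevalence)
metrics = diagnostic_metrics(tp=20, fp=10, tn=90, fn=10)
for name, value in metrics.items():
    print(f"{name}: {value:.1%}")
```

Because PPV and NPV depend on disease prevalence while sensitivity and specificity do not, predictive values from one cohort transfer only approximately to populations with a different pretest probability.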

Imaging applications

CT

Calcium scoring

Coronary artery calcium (CAC) scoring is a robust technique for CAD identification and risk stratification and is considered an independent predictor of adverse cardiovascular events [31,32,33,34,35,36]. CAC can be quantified using the Agatston scoring method applied to non-contrast ECG-gated coronary CT images. The Agatston score increases with calcification volume and/or density [37]. Semiautomatic segmentation and quantification of the Agatston score, calcium volume, and mass is time-consuming, while automatic quantification is not always accurate and reproducible [38]. Moreover, image quality can be degraded by image noise, motion artifacts, or blooming artifacts from extensively calcified vessels or devices. Overall, CAC scoring is often time-consuming, making it an ideal candidate for time-saving AI applications.
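The Agatston method weights the area of each calcified lesion on each slice by a factor derived from its peak attenuation (1 for 130–199 HU, 2 for 200–299 HU, 3 for 300–399 HU, 4 for ≥ 400 HU) and sums the products. The sketch below assumes lesions have already been segmented (manually, semiautomatically, or by an AI model) and uses a 1 mm² minimum lesion area, a common convention that is assumed here rather than taken from the cited studies.

```python
def density_weight(peak_hu):
    """Agatston density weighting factor from a lesion's peak attenuation (HU)."""
    if peak_hu >= 400:
        return 4
    if peak_hu >= 300:
        return 3
    if peak_hu >= 200:
        return 2
    if peak_hu >= 130:
        return 1
    return 0  # below the 130 HU calcium threshold


def agatston_score(lesions, min_area_mm2=1.0):
    """Sum of area x density weight over all lesions on all slices.

    lesions: iterable of (area_mm2, peak_hu), one entry per lesion per slice,
    assumed to come from standard non-contrast ECG-gated slices.
    """
    return sum(area * density_weight(peak)
               for area, peak in lesions
               if area >= min_area_mm2)  # ignore tiny, noise-like foci


# Hypothetical lesions: (area in mm^2, peak HU)
lesions = [(4.0, 150), (2.5, 320), (0.5, 500)]
print(agatston_score(lesions))  # 4.0*1 + 2.5*3 = 11.5; the 0.5 mm^2 focus is excluded
```

The density weighting explains why the score rises with both calcification volume and density, and why blooming or motion artifacts that inflate apparent area or peak attenuation propagate directly into the score.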

Sandstedt et al. reported excellent results applying AI-based automatic calcium score evaluation to non-contrast CT images in 315 patients, using semiautomatic software as the reference [39]. Excellent agreement was described for Agatston score, volume score, mass score, and the number of calcified coronary lesions, with ICCs of 0.996, 0.996, 0.991, and 0.977, respectively. Zhang et al. [40] described a deep CNN trained on CT images in a phantom study to correct motion artifacts. Three artificial calcified coronary arteries were mounted on a moving robotic arm, and the Agatston score was taken as the output. An accuracy of 88.3 ± 4.9% was reported for heavily calcified plaques, and the sensitivity for detecting calcifications increased from 65% to 85% using this AI approach. Thus, the CNN was able to classify motion-blurred images and correct calcium scores, reducing Agatston score variation. Išgum et al. developed an automated method for coronary calcium detection for automated risk assessment of CAD on non-contrast, ECG-gated CT scans of the heart. They reported a detection rate of 73.8% for coronary calcifications; after calculation of the calcium score, 93.4% of patients were classified into the correct risk category [41].
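Agreement in risk category, as reported by Išgum et al., is assessed by mapping automatic and reference scores into categories and counting matches. The sketch below assumes the commonly used Agatston categories 0, 1–10, 11–100, 101–400, and > 400; the cited study may have used different cut-offs, and the scores in the example are hypothetical.

```python
def agatston_category(score):
    """Map an Agatston score to a commonly used risk category (assumed cut-offs)."""
    if score < 0:
        raise ValueError("Agatston score cannot be negative")
    if score == 0:
        return "0"
    if score <= 10:
        return "1-10"
    if score <= 100:
        return "11-100"
    if score <= 400:
        return "101-400"
    return ">400"


def category_agreement(automatic, reference):
    """Fraction of patients whose automatic score falls in the reference category."""
    if len(automatic) != len(reference) or not automatic:
        raise ValueError("need two non-empty lists of equal length")
    agree = sum(agatston_category(a) == agatston_category(r)
                for a, r in zip(automatic, reference))
    return agree / len(automatic)


# Hypothetical scores for four patients (automatic vs. reference)
print(category_agreement([0, 5, 50, 500], [0, 12, 60, 450]))  # 0.75
```

Category agreement is more forgiving than score agreement: large absolute errors at high scores rarely change the category, whereas small errors near a cut-off (5 vs. 12 above) do.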

The calcium score may also be used as an initial step in the assessment of CAD. Raw data from calcium score acquisitions have also been used to predict the severity of plaque burden and to exclude the presence of obstructive plaques. Using a training population of 435 patients with low-to-moderate risk of CAD, Glowacki et al. [42] created a gradient boosting machine (GBM) to predict obstructive CAD and validated the algorithm in a control population of 126 consecutive patients. A sensitivity and specificity of 100% and 69.8% were reported, while the NPV and PPV were 100% and 38%, respectively.

Although the previous studies used dedicated calcium scoring scans, the increasing number of non-gated chest CT scans performed for lung cancer screening has led several studies to show the feasibility of CAC scoring in non-cardiac scans as well. Takx et al. applied a machine learning approach that identified coronary calcifications and calculated the Agatston score on low-dose, non-contrast-enhanced, non-ECG-gated chest CT within a lung cancer screening program, using a supervised pattern recognition system with k-nearest neighbor and support vector machine classifiers. Their fully automated algorithm performed CAC scoring on non-gated chest CT with acceptable reliability and agreement. However, the absolute calcium volume was underestimated compared with dedicated ECG-triggered CAC score acquisitions [43].
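In supervised pattern recognition pipelines of this kind, each candidate calcification is described by a feature vector and assigned a label by a classifier. The sketch below shows only the k-nearest neighbor step, using two hypothetical, pre-scaled features and toy labels; it is not the feature set or classifier configuration of the cited study.

```python
import math
from collections import Counter


def knn_predict(training_set, query, k=3):
    """Label a candidate by majority vote among its k nearest training examples.

    training_set: list of (feature_vector, label); features are assumed
    to be scaled to comparable ranges beforehand.
    """
    nearest = sorted(training_set,
                     key=lambda item: math.dist(item[0], query))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


# Hypothetical, pre-scaled features: (lesion volume, mean attenuation)
training_set = [
    ((1.0, 1.1), "coronary"),
    ((1.2, 0.9), "coronary"),
    ((0.9, 1.0), "coronary"),
    ((5.0, 4.8), "non-coronary"),
    ((4.7, 5.2), "non-coronary"),
    ((5.3, 5.1), "non-coronary"),
]
print(knn_predict(training_set, (1.1, 1.0)))  # coronary
```

Only candidates labeled as coronary would then contribute to the Agatston score; misclassifying adjacent aortic or valvular calcium is one reason automatic and reference scores can diverge.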

Additionally, calcium score quantification can be performed on CCTA images using AI algorithms. Wolterink et al. [44] described a supervised learning method to directly identify and quantify calcified plaques in 250 patients for whom both calcium scoring CT and CCTA acquisitions were available. The first step was a bounding box algorithm combining three CNNs, each detecting the heart in a different orthogonal plane (axial, sagittal, coronal), developed on a training dataset of 50 patients. The remaining 200 patients were divided into training (100) and validation (100) sets to evaluate the model for calcium detection. The best configuration achieved a sensitivity of 72%, and the intraclass correlation between calcium scoring CT and CCTA was 0.944. This approach might reduce radiation exposure by deriving calcium information directly from the CT angiographic acquisition.

A recent study by van Velzen et al. [45] evaluated the performance of a DL method, using two consecutive CNNs, for automatic calcium scoring across a wide range of CT examination types. The study included 7240 participants who underwent various types of non-enhanced cardiac/chest CT examinations, including CAC scoring CT, PET attenuation correction CT, radiation therapy treatment planning CT, and low-dose and standard-dose CT of the chest. Regardless of the type of acquisition, their AI approach showed good correlation with manual CAC scoring, with ICCs ranging between 0.79 and 0.97 [45]. This study indicates that AI algorithms can assist in the quantification of coronary calcium in a clinical setting using a wide variety of CT acquisitions, supporting their clinical potential.
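Agreement between automatic and manual scores is often summarized with an intraclass correlation coefficient (ICC). Several ICC forms exist; the sketch below implements ICC(2,1) (two-way random effects, absolute agreement, single measurement, after Shrout and Fleiss) as one plausible choice. The cited studies may have used a different form, and the example ratings are hypothetical.

```python
def icc_2_1(ratings):
    """ICC(2,1): two-way random effects, absolute agreement, single rater.

    ratings: one row per subject, each row holding k ratings
    (e.g. [manual_score, automatic_score]).
    """
    n, k = len(ratings), len(ratings[0])
    if n < 2 or k < 2:
        raise ValueError("need at least 2 subjects and 2 raters")
    grand = sum(sum(row) for row in ratings) / (n * k)
    row_means = [sum(row) / k for row in ratings]
    col_means = [sum(row[j] for row in ratings) / n for j in range(k)]

    ss_rows = k * sum((m - grand) ** 2 for m in row_means)   # between subjects
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)   # between raters
    ss_total = sum((x - grand) ** 2 for row in ratings for x in row)
    ss_error = ss_total - ss_rows - ss_cols                  # residual

    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))
    return (ms_rows - ms_error) / (
        ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n)


# Hypothetical Agatston scores: [manual, automatic] per patient
ratings = [[0, 0], [10, 12], [55, 50], [120, 130], [410, 400]]
print(round(icc_2_1(ratings), 3))
```

Because ICC(2,1) measures absolute agreement, a systematic offset between methods (such as the volume underestimation on non-gated scans noted above) lowers it even when the two methods are perfectly correlated.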

CCTA stenosis degree and plaque morphology

The role of CCTA in the evaluation of coronary stenosis has been extensively investigated, both quantitatively and functionally [46,47,48,49,50]. CCTA allows excellent visualization of the coronary arteries with high spatial resolution, but its analysis for manifestations of coronary artery disease can be time-consuming and susceptible to inter-observer variability.

The recent development of AI-based algorithms in cardiac applications, including plaque characterization, may accelerate the clinical implementation of quantitative automated imaging technology aiding the diagnosis and prognosis of CAD.

The management of patients with CAD may differ based on plaque morphology and percent stenosis. Zreik et al. used a 3D convolutional neural network to extract coronary artery features, with 98 patients in the training set and 65 patients in the validation set [51]. Two simultaneous multi-class classification tasks were then performed to detect and characterize the type of coronary artery plaque and to determine the anatomical significance of stenosis, with accuracies of 0.77 and 0.80, respectively [51].

Utsunomiya and colleagues [52] used a random forest algorithm to identify ischemia-related lesions. Several quantitative features were analyzed, including severity of stenosis, lesion length, CT attenuation value, and calcium quantification. All were significantly associated with ischemia-related lesions on univariate analysis, whereas only severity of stenosis and lesion length remained significantly associated with ischemia on multivariate analysis; the random forest analysis yielded an AUC of 0.95 [52].

Kang et al. [53] developed an automated method for detecting coronary artery lesions using a two-step algorithm based on support vector machines. The method was compared against three experienced readers in 42 CCTA datasets and showed promising results for coronary stenosis detection, with a sensitivity, specificity, diagnostic accuracy, and AUC of 93%, 95%, 94%, and 0.94, respectively [53].

ML-based approaches could reduce inter-operator variability in stenosis detection. Recently, Yoneyama et al. investigated stenosis detection using an artificial neural network (ANN) in a hybrid study combining CCTA and myocardial perfusion SPECT [54]. The model showed diagnostic accuracy similar to that of an experienced reader, with a sensitivity and specificity of 84.7% and 72.2%, respectively [54].

Besides the detection and quantification of coronary stenoses, deriving plaque characterization parameters to predict the hemodynamic significance of a stenosis is another highly time-consuming and operator-dependent task. Hell et al. used AI-based software (AUTOPLAQ) to derive the contrast density difference (CDD), defined as the maximum percent difference of contrast densities within an individual lesion, to help predict the hemodynamic relevance of a given coronary artery lesion [55]. CDD was significantly increased in hemodynamically relevant lesions and predicted hemodynamic significance with a specificity of 75% and a negative predictive value of 73% compared with invasive FFR. Using the same software, another group provided imaging features to a LogitBoost algorithm to generate an integrated ischemia risk score for predicting the hemodynamic significance of coronary stenoses [56]. This approach resulted in a higher AUC (0.84) than manual CCTA analysis of individual features.

Another approach to evaluating stenosis significance was shown by Van Hamersvelt et al. [57], who analyzed the myocardium to detect hemodynamically significant stenosis using FFR as the reference. The authors highlight the possibility of detecting ischemia on a rest CCTA acquisition with higher diagnostic accuracy than standard anatomical stenosis evaluation on CCTA (AUC 0.76 vs. 0.68) [57]. This finding is interesting because it shifts ischemia detection on a rest CCTA acquisition from anatomical evaluation of the coronary arteries to analysis of the myocardium.

Currently, several vendors are developing AI-based software packages for comprehensive cardiac CT evaluation, including CAC scoring and stenosis evaluation, see Fig. 2. Although vendor-specific, these packages offer workflow-integrated options for fully automated quantitative analysis.

CT-FFR

Invasive fractional flow reserve (FFR), measured during invasive coronary angiography (ICA), is considered the reference standard for assessing the hemodynamic significance of coronary lesions. FFR derived from CCTA images (CT-FFR) is gaining an important role by providing anatomic and functional information in a single noninvasive examination [58,59,60,61]. In addition, CT-FFR combined with plaque morphology and composition can provide additional diagnostic value [62, 63].
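Invasive FFR is the ratio of mean distal coronary pressure to mean aortic pressure under maximal hyperemia, and a lesion is commonly considered hemodynamically significant at FFR ≤ 0.80, the cut-off also used in the studies below. The sketch shows this definition and threshold only; CT-FFR methods estimate the same quantity from CCTA without a pressure wire, and their internals are not represented here. The pressures in the example are hypothetical.

```python
FFR_THRESHOLD = 0.80  # commonly used cut-off for lesion-specific ischemia


def fractional_flow_reserve(distal_mmhg, aortic_mmhg):
    """FFR = mean distal coronary pressure / mean aortic pressure at maximal hyperemia."""
    if aortic_mmhg <= 0:
        raise ValueError("aortic pressure must be positive")
    return distal_mmhg / aortic_mmhg


def is_hemodynamically_significant(ffr_value, threshold=FFR_THRESHOLD):
    """A lesion is usually considered ischemia-causing when FFR <= threshold."""
    return ffr_value <= threshold


# Hypothetical invasive measurement: Pd = 68 mmHg, Pa = 92 mmHg
value = fractional_flow_reserve(68, 92)
print(f"FFR = {value:.2f}, significant: {is_hemodynamically_significant(value)}")
```

Dichotomizing a continuous value at 0.80 means that small estimation errors near the cut-off flip the classification, which is one reason CT-FFR and invasive FFR disagree most often in the 0.75–0.85 range.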

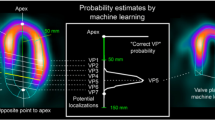

Recently, novel approaches that compute CT-FFR using AI methods have been described as “machine learning-based” CT-FFR (CT-FFRML), see Fig. 3 [63,64,65,66]. Initial results for CT-FFRML were described in the retrospective multicenter MACHINE registry, in which a machine learning approach was applied to 525 vessels from 5 sites in the USA, Europe, and Asia. CT-FFR showed higher diagnostic accuracy and sensitivity than CCTA alone (78% vs. 58% and 76% vs. 38%, respectively) [66]. After the MACHINE registry results were published, several studies investigated potential applications of CT-FFRML. Tesche et al. [63] compared CT-FFRML with coronary CT angiography and quantitative coronary angiography (QCA), showing a per-lesion sensitivity and specificity of 79% and 94%, respectively. Von Knebel Doeberitz et al. [67] investigated deep machine learning-based FFR for identifying lesion-specific ischemia, using invasive FFR as the reference standard. A total of 103 plaques were identified, of which 32 (31.1%) were hemodynamically significant. A CT-FFRML value of ≤ 0.80 was significantly associated with lesion-specific ischemia (OR 0.81, p < 0.01), with an AUC of 0.89 for discriminating significant from nonsignificant plaques and a sensitivity, specificity, PPV, and NPV of 82%, 94%, 88%, and 92%, respectively.

A machine learning approach may also guide therapeutic decision-making and correlate with patient outcomes. Qiao et al. [68] investigated the impact of CT-FFRML on treatment decisions and patient outcomes in 1121 consecutive patients and showed a superior prognostic value of CT-FFRML for severe stenosis (HR 6.84, p < 0.001) compared with CCTA (HR 1.47, p = 0.045) and invasive coronary angiography (ICA) (HR 1.84, p = 0.002). In addition, the proposed therapeutic management changed in 167 patients (14.9%) once CT-FFRML results were available; the authors concluded that CT-FFRML may guide therapeutic decision-making and potentially improve the utilization of ICA [68]. Following these results, Kurata et al. [69] demonstrated that machine learning CT-FFR improved diagnostic accuracy compared with standard CCTA, from 66% to 85% [30]. On per-vessel analysis, the AUC was significantly greater for CT-FFR than for CCTA stenosis ≥ 50% or ≥ 70% [69].

Combining CT-FFR with machine learning provides anatomical and functional information in a single noninvasive test, which may increase diagnostic accuracy for hemodynamically significant stenoses and CAD severity. However, AI-based CT-FFR is currently available only as research prototypes and is mostly vendor-specific, hampering widespread use. Because the software is run locally and requires user interaction, results may vary between users, and per-center accuracy can depend on user experience.

Prognosis

Besides quantification and automatization tasks, AI can also play a role in the prognostication of cardiovascular outcomes based on imaging data combined with clinically available risk predictors.

Motwani et al. [70] evaluated an AI-based approach for prognostication in a large population of 10,030 patients with suspected CAD who underwent CCTA imaging and 5-year follow-up, with mortality as the main outcome of interest. Selected clinical and imaging parameters were used in an iterative LogitBoost algorithm to generate a regression model. Their AI-based prediction method showed a higher AUC (0.79) than the Framingham Risk Score (0.61) or CCTA severity scores alone (0.62–0.64) for predicting 5-year mortality. Van Rosendael et al. [71] used the multicenter CONFIRM registry, which included 8844 patients with complete CCTA risk score information and at least 3 years of follow-up for myocardial infarction and death. A boosted ensemble method was used to predict mortality from multiple CCTA risk scores. The AUC was significantly better for the AI-based approach (0.77) than for the individual risk scores (0.69–0.70).
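The AUC values used to compare AI models with conventional risk scores can be read as the probability that a randomly chosen patient with the outcome receives a higher predicted risk than a randomly chosen patient without it (the Mann–Whitney formulation, with ties counted as one half). The minimal sketch below uses hypothetical predicted risks; it is a direct pairwise computation, adequate for illustration but quadratic in sample size.

```python
def auc_mann_whitney(scores_pos, scores_neg):
    """AUC = P(score of a random positive > score of a random negative); ties count 1/2."""
    if not scores_pos or not scores_neg:
        raise ValueError("need at least one positive and one negative case")
    wins = 0.0
    for p in scores_pos:
        for q in scores_neg:
            if p > q:
                wins += 1.0
            elif p == q:
                wins += 0.5
    return wins / (len(scores_pos) * len(scores_neg))


# Hypothetical predicted risks for patients who died vs. survived
died = [0.9, 0.8, 0.4]
survived = [0.7, 0.3, 0.2]
print(round(auc_mann_whitney(died, survived), 3))
```

Because the AUC depends only on the ranking of predictions, it measures discrimination but not calibration; a model can rank patients well while systematically over- or underestimating absolute risk.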

MRI

Function

Cardiac MRI (CMR) function parameters have been shown to play an important role in a wide variety of settings for patients with various cardiac diseases [72,73,74,75,76]. Cine images are considered fundamental CMR sequences and allow evaluation of wall motion abnormalities, myocardial thickness, and ventricular volumes [73, 74, 77, 78]. Commonly, biventricular function is calculated by transferring the images to an offline workstation and manually segmenting the endocardial and epicardial borders [79]. However, this approach is extremely time-consuming, often requiring 20–30 min per patient [80, 81]. Semi-automated segmentation and training programs aim to increase reader efficiency and reduce inter-operator variability [80, 81]. Semi-automated and fully automated methods for analyzing cine CMR images have developed significantly [82,83,84,85,86,87].
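Once endocardial contours are available, whether drawn manually or produced by an AI model, ventricular volumes are typically obtained by summation of discs: each short-axis cavity area is multiplied by the slice spacing and the contributions are summed. The sketch below assumes hypothetical cavity areas and a 10 mm spacing (for example, 8 mm slices with a 2 mm gap) and derives the ejection fraction from end-diastolic and end-systolic volumes.

```python
def ventricular_volume_ml(slice_areas_mm2, slice_spacing_mm):
    """Summation-of-discs volume from short-axis cavity areas (mm^2) -> mL."""
    return sum(slice_areas_mm2) * slice_spacing_mm / 1000.0  # 1 mL = 1000 mm^3


def ejection_fraction(edv_ml, esv_ml):
    """Ejection fraction in percent from end-diastolic and end-systolic volumes."""
    if edv_ml <= 0:
        raise ValueError("end-diastolic volume must be positive")
    return 100.0 * (edv_ml - esv_ml) / edv_ml


# Hypothetical LV cavity areas per short-axis slice, base to apex
ed_areas = [1800, 2200, 2400, 2200, 1800, 1200, 400]
es_areas = [900, 1100, 1300, 1100, 800, 500, 100]
edv = ventricular_volume_ml(ed_areas, slice_spacing_mm=10)  # 120.0 mL
esv = ventricular_volume_ml(es_areas, slice_spacing_mm=10)  # 58.0 mL
print(f"EDV {edv:.0f} mL, ESV {esv:.0f} mL, EF {ejection_fraction(edv, esv):.1f}%")
```

Since every slice contributes area × spacing, contour disagreements at the most basal slices (where the valve plane and hinge points must be judged) have a large effect on volumes, which is where manual and automated segmentations tend to differ.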

Several recent competitions have been conducted to identify the best AI approach for automatic segmentation [88]. For example, in the “Automatic Cardiac Diagnosis Challenge” (ACDC), held during the 20th International Conference on Medical Image Computing and Computer Assisted Intervention (MICCAI), Isensee et al. [89] developed a combined two-dimensional (2D) and three-dimensional (3D) U-Net model that achieved the highest Dice coefficients in diastole for the left ventricle (0.96), right ventricle (0.94), and myocardium (0.90), and Dice coefficients of 0.89–0.93 in systole [46]. Using the ACDC data, Bernard et al. [88] tested deep learning methods provided by nine research groups for the segmentation task and four groups for the classification task. The best-performing methods achieved a correlation of 0.97 for the automatic extraction of clinical indices. However, the authors also noted that although the results for the LV are competitive, the results for the right ventricle and the myocardium are still sub-optimal [88].
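The Dice coefficient used to rank segmentation methods is twice the overlap between two masks divided by the sum of their sizes, ranging from 0 (no overlap) to 1 (identical masks). A minimal sketch on small hypothetical binary masks follows; real evaluations apply the same formula per structure (LV cavity, myocardium, RV) over full 2D or 3D label maps.

```python
def dice_coefficient(mask_a, mask_b):
    """Dice = 2|A ∩ B| / (|A| + |B|) for two binary masks (nested lists of 0/1)."""
    flat_a = [v for row in mask_a for v in row]
    flat_b = [v for row in mask_b for v in row]
    if len(flat_a) != len(flat_b):
        raise ValueError("masks must contain the same number of pixels")
    intersection = sum(1 for a, b in zip(flat_a, flat_b) if a and b)
    total = sum(1 for a in flat_a if a) + sum(1 for b in flat_b if b)
    return 1.0 if total == 0 else 2.0 * intersection / total  # both empty: perfect match


# Hypothetical 2x3 masks: AI segmentation vs. manual reference
ai_mask = [[0, 1, 1],
           [0, 1, 0]]
manual_mask = [[0, 1, 0],
               [0, 1, 1]]
print(round(dice_coefficient(ai_mask, manual_mask), 3))
```

Because Dice is normalized by structure size, the same boundary error lowers it more for a thin structure such as the myocardium than for a large cavity, which helps explain why myocardial Dice values are consistently lower than LV cavity values.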

Despite the high accuracy of the methods tested during the MICCAI competitions, a major limitation was the small sample size used to train the segmentation networks [88]. Bai et al. [90] overcame this limitation by training a segmentation CNN on UK Biobank data. This large dataset allowed them to train a fully convolutional network (FCN) on cine images of 4875 patients [90]. Training labels were obtained by manually drawing the ventricular contours and exporting the annotations as XML (eXtensible Markup Language) files. The pixel-wise FCN segmentation method was evaluated on 600 patients, showing high Dice coefficients for the left ventricular cavity (0.94), left ventricular myocardium (0.88), and right ventricle (0.90) [90]. Interestingly, a head-to-head comparison of the trained FCN and manual segmentation showed differences of 6.1 ml, 5.3 ml, 6.9 g, 8.5 ml, and 6.2 ml for left ventricular end-diastolic volume, left ventricular end-systolic volume, left ventricular mass, right ventricular end-diastolic volume, and right ventricular end-systolic volume, respectively [90].

Tao et al. showed that a CNN can quantify LV function parameters with high accuracy compared with experts, depending on dataset heterogeneity, with a correlation of 0.98 and an average perpendicular distance of approximately 1 mm, comparable to intra- and inter-observer variability [91]. In this multivendor, multicenter study, increasing the variability of the training dataset improved the generalizability of the algorithm's performance.

Regardless of the strategies adopted for the aforementioned methods, the common aim of these AI applications is to shorten reporting time without loss of diagnostic accuracy or reproducibility (Fig. 4).

A 52-year-old patient underwent cardiac magnetic resonance (CMR) after acute myocardial infarction. (a) Segmentation of both right and left ventricles in the end-diastolic phase using the deep learning approach. (b) Manual segmentation. The only significant difference between the manual (b) and AI (a) segmentations was the presence of hinge points.

Tissue characterization

Reliable tissue characterization represents one of the main advantages of CMR [92,93,94]. Until recently, tissue characterization with cardiac MRI was confined to T1 or T2 black blood images and late gadolinium enhancement (LGE) sequences [95,96,97,98,99]. T1 black blood (T1BB) images are useful for tissue characterization of cardiac masses and evaluation of myocardial fibrofatty infiltration or replacement [100, 101], while T2 black blood (T2BB) sequences are more often used to depict water content in cardiac masses or damaged myocardium [101, 102]. LGE images are acquired 10–15 min after administration of a gadolinium-based contrast agent [103, 104]. In appropriately acquired LGE images, normal myocardium is black, the normal blood pool is bright, and damaged myocardium shows enhancement. This enhancement reflects the slow accumulation of gadolinium contrast agent in damaged tissue and may be an expression of replacement fibrosis [105].

Despite the clinical adoption of T1BB and T2BB sequences, these sequences are becoming less used. Their major limitation is that they allow only qualitative analysis of myocardial tissue abnormalities [95]. T1 mapping and T2 mapping techniques overcome this limitation by providing a quantitative approach to myocardial tissue characterization [95, 96, 106, 107]. Increased native T1 values can reflect an increase in extracellular space (amyloidosis, acute inflammation, replacement fibrosis), while decreased native T1 values are associated with myocardial diseases such as Fabry disease or iron overload [108]. Increased T2 values, in turn, are highly sensitive and specific for edema [109].

In some specific cardiomyopathies, quantification of LGE could play a key role in determining prognosis [110]; therefore, accurate quantification could be fundamental. Several strategies have been developed for accurate LGE quantification; however, all currently used strategies require manual segmentation and are therefore time-consuming.
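One widely used conventional (non-AI) strategy is the n-standard-deviation (n-SD) threshold: myocardial pixels brighter than the mean of a manually placed remote-myocardium region plus n times its standard deviation are counted as enhanced. The sketch below assumes n = 5 and hypothetical signal intensities; the manual steps it presupposes (myocardial contours and remote region of interest) are exactly the time-consuming part that AI methods aim to remove.

```python
import statistics


def lge_fraction_nsd(myocardium_si, remote_si, n_sd=5):
    """Percentage of myocardial pixels above mean(remote) + n_sd * SD(remote).

    myocardium_si: signal intensities of all pixels inside the myocardial contour.
    remote_si: signal intensities of a manually chosen remote (normal) region.
    """
    threshold = statistics.mean(remote_si) + n_sd * statistics.stdev(remote_si)
    enhanced = sum(1 for v in myocardium_si if v > threshold)
    return 100.0 * enhanced / len(myocardium_si)


# Hypothetical signal intensities (arbitrary units)
remote = [100, 102, 98, 101, 99]                              # threshold ~107.9
myocardium = [100, 99, 150, 160, 101, 108, 104, 140, 98, 97]
print(lge_fraction_nsd(myocardium, remote))  # 40.0 (4 of 10 pixels enhanced)
```

The result depends strongly on the chosen n and on where the remote region is placed, which is one source of the reader dependence that automated segmentation seeks to reduce.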

The application of AI to tissue characterization is mainly focused on reducing analysis time and improving quantification by optimizing image acquisition and reducing artifacts [111,112,113,114,115]. Focusing on LGE quantification, Zabihollahy et al. [116] developed a 3D CNN-based algorithm for segmentation of 3D LGE in the left ventricle. Comparing the AI results with manual segmentation, the authors found a Dice similarity coefficient (DSC) of 0.94, confirming that the 3D CNN approach and manual segmentation provide similar results [116]. Moccia et al. [112] also developed two fully convolutional networks (FCNs) for automated LGE segmentation in an ischemic population. Comparing the FCNs with manual segmentation, the authors found a sensitivity, specificity, accuracy, and DSC of 88.1%, 97.9%, 96.8%, and 71.3%, respectively [112]. Another interesting AI approach to LGE evaluation was described by Zhang et al. [117]. Whereas the two previous studies applied AI to the segmentation of LGE images, Zhang et al. derived LGE information from non-contrast cine images, using both a single end-diastolic cine image and the whole cine imaging dataset [117]. Using a fully connected CNN, LGE depiction on the non-contrast cine dataset showed a sensitivity, specificity, and AUC of 89.8%, 99.1%, and 0.94, while on a single non-enhanced cine image the sensitivity, specificity, and AUC were 38.6%, 77.4%, and 0.58 [117]. Interestingly, quantification of LGE in the infarcted area using cine images did not differ significantly from standard LGE. The possibility of obtaining LGE images from non-contrast cine images could become a cornerstone of future myocardial tissue characterization without the use of gadolinium-based contrast agents.

Besides LGE in the left ventricle, another interesting application of AI is left atrial scar segmentation [118, 119]. The association between left atrial scar and the development of atrial fibrillation is well described [120]; therefore, improved segmentation using AI may be useful in certain clinical scenarios. Li et al. [118] developed a network that detected atrial scar with a mean accuracy of 0.86 ± 0.03 and a mean DSC of 0.70 ± 0.07.

Baessler et al. [111] combined machine learning and texture analysis of non-contrast T1-weighted images to evaluate hypertrophic cardiomyopathy (HCM). Using the texture feature gray-level non-uniformity (GLevNonU), the authors could distinguish patients with HCM from healthy controls with a sensitivity and specificity of 94% and 90%, respectively [111]. Because the AI algorithm could be biased by the presence of LGE, the authors combined it with textural analysis, increasing sensitivity to 100% at a specificity of 90% for the identification of HCM and capturing even subtle myocardial changes [111].
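GLevNonU is a run-length texture feature. This is a minimal sketch, assuming the common radiomics definition GLN = Σ_i (Σ_j R(i, j))² / N_r, where R(i, j) is the number of runs of gray level i with length j and N_r is the total number of runs. It uses horizontal runs only. The sketch does not reproduce the software, image discretization, or directions used by Baessler et al., and the function names are hypothetical.

```python
from collections import defaultdict


def run_length_matrix(image):
    """Count horizontal runs of identical gray levels in a 2D list.

    Returns a dict mapping (gray_level, run_length) -> number of runs.
    Only the 0-degree direction is used here for brevity.
    """
    counts = defaultdict(int)
    for row in image:
        if not row:
            continue
        current, length = row[0], 1
        for value in row[1:]:
            if value == current:
                length += 1
            else:
                counts[(current, length)] += 1  # close the finished run
                current, length = value, 1
        counts[(current, length)] += 1  # close the last run in the row
    return counts


def gray_level_nonuniformity(image):
    """GLN = sum over gray levels of (runs at that level)^2, divided by total runs."""
    runs_per_level = defaultdict(int)
    for (level, _length), n in run_length_matrix(image).items():
        runs_per_level[level] += n
    total_runs = sum(runs_per_level.values())
    if total_runs == 0:
        raise ValueError("image contains no pixels")
    return sum(n * n for n in runs_per_level.values()) / total_runs


homogeneous = [[1, 1, 1, 1], [1, 1, 1, 1]]
checkerboard = [[1, 2, 1, 2], [2, 1, 2, 1]]
print(gray_level_nonuniformity(homogeneous))   # 2 runs, all at level 1 -> 2.0
print(gray_level_nonuniformity(checkerboard))  # 8 runs split over 2 levels -> 4.0
```

The raw value grows with the number of runs. Dividing once more by N_r gives a normalized variant (1.0 for the homogeneous patch, 0.5 for the checkerboard) that instead reflects how evenly runs are spread across gray levels.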

Prognosis

Cardiac MRI plays a fundamental role in the management of some cardiomyopathies [110, 121]. Tissue characterization combined with assessment of ventricular function can be used to predict outcomes and stratify patients at risk of death and sudden cardiac events [121, 122]. As with CCTA-based prognostication and risk stratification combined with clinical risk factors, AI can also be applied to cardiac MR for prognostic purposes. Dawes et al. [123] proposed an AI algorithm trained on patients with known pulmonary hypertension to predict adverse events and mortality. The patients underwent cardiac MRI, right heart catheterization, and a 6-min walk test, and had a median follow-up of 4 years [123].

Chen et al. [124] used an AI-based approach to predict 1-year mortality in patients with dilated cardiomyopathy. Their algorithm was built using clinical data (including pharmacological data), ECG data, cardiac MRI, and echocardiography images [124]. The prognostic accuracy of the AI algorithm was significantly higher, with an AUC of 0.88 compared with 0.59 for the MAGGIC score [125] and 0.50 for LVEF [124]. Despite these promising results, the study is limited by its small sample size; however, a larger study evaluating the prognostic value of AI in dilated cardiomyopathy is ongoing [126]. Beyond cardiomyopathies, Diller et al. recently demonstrated the prognostic value of an AI-based approach in patients with repaired tetralogy of Fallot, showing that median right atrial area and right ventricular long-axis strain were accurate predictors of outcome [127].
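The AUC values above summarize how well each score ranks patients who experienced the event above those who did not. An AUC of 0.50, as reported for LVEF, corresponds to chance-level discrimination. This is a minimal sketch of the rank-based (Mann-Whitney) AUC estimate. The function name `roc_auc` and the scores and labels are hypothetical and are not taken from the cited study.

```python
def roc_auc(scores, labels):
    """Area under the ROC curve via the Mann-Whitney U statistic.

    scores: predicted risk per patient (higher = more likely to have the event).
    labels: 1 if the patient had the event (e.g. died within 1 year), else 0.
    Each (event, non-event) pair counts 1 if ranked correctly and 0.5 if tied.
    """
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative case")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


model_scores = [0.9, 0.4, 0.5, 0.2, 0.1]
died = [1, 1, 0, 0, 0]
print(roc_auc(model_scores, died))  # 5 of 6 pairs ranked correctly, about 0.833
print(roc_auc([0.5] * 5, died))     # uninformative constant score: 0.5
```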

Beyond conventional imaging parameters used for prognostication and risk stratification, AI algorithms show promise for the prognostic evaluation of myocardial diseases.

Future directions

As has happened many times before, the field of cardiothoracic imaging is again facing new technological developments with the potential to fundamentally change it. With increasing imaging volumes and new insights into quantitative biomarkers, AI offers the possibility to improve radiology workflow by providing pre-reads for the detection of abnormalities, accurate quantification, and prognostication. However, many of the applications described above are currently used only in research settings and are still far from implementation in standard care. Because AI technology develops so quickly, most algorithms are developed and validated by a single group before that group moves on to a new and improved approach. Thorough validation is essential before these algorithms can be used clinically, and AI development can follow two pathways to achieve it. The first uses transfer learning: an algorithm is developed and trained on a specific dataset and then distributed to other centers, where it can be further trained and refined to optimize accuracy for the local population. This approach is especially convenient when population differences are large or when data sharing is difficult, for example, for privacy reasons. The second pathway shares data instead of algorithms. Increasingly, national and international collaborations are creating large, standardized, and accurately labeled open-source databases, which will allow the development of more accurate and generalizable algorithms.
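The transfer-learning pathway can be illustrated with a toy model. A classifier is first trained on a large dataset from one center, then fine-tuned on a small dataset from a second center whose population differs, starting from the first model's weights. The sketch is a deliberately simplified assumption: it uses a one-feature logistic regression, synthetic data, and hypothetical names such as `make_site`, and it is not an implementation of any cardiac AI product. Real systems fine-tune deep networks, but the principle of reusing learned weights as the starting point is the same.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_logistic(data, weights=None, epochs=200, lr=0.5):
    """Fit y ~ sigmoid(w * x + b) by batch gradient descent on the log loss.

    Passing pretrained `weights` as the starting point turns plain training
    into fine-tuning.
    """
    w, b = weights if weights is not None else (0.0, 0.0)
    n = len(data)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in data:
            err = sigmoid(w * x + b) - y
            grad_w += err * x
            grad_b += err
        w -= lr * grad_w / n
        b -= lr * grad_b / n
    return w, b


def log_loss(data, weights):
    """Mean binary cross-entropy of a (w, b) model on (x, y) pairs."""
    w, b = weights
    eps = 1e-12  # avoids log(0) for extremely confident predictions
    total = 0.0
    for x, y in data:
        p = sigmoid(w * x + b)
        total -= y * math.log(p + eps) + (1 - y) * math.log(1 - p + eps)
    return total / len(data)


def make_site(n, threshold, rng):
    """Hypothetical center: label is 1 when a noisy feature exceeds a site-specific threshold."""
    data = []
    for _ in range(n):
        x = rng.uniform(-3.0, 3.0)
        y = 1 if x + rng.gauss(0.0, 0.5) > threshold else 0
        data.append((x, y))
    return data


rng = random.Random(0)
site_a = make_site(500, threshold=0.0, rng=rng)        # large development dataset
site_b = make_site(100, threshold=1.0, rng=rng)        # small local dataset, shifted population
site_b_test = make_site(1000, threshold=1.0, rng=rng)  # held-out local data

pretrained = train_logistic(site_a)
fine_tuned = train_logistic(site_b, weights=pretrained, epochs=300)
print("pretrained only:", log_loss(site_b_test, pretrained))
print("fine-tuned:     ", log_loss(site_b_test, fine_tuned))
```

On the local test data, the fine-tuned model should reach a lower log loss than the unadapted one, because fine-tuning moves the decision threshold toward the second center's population. The data-sharing pathway would instead correspond to training once on the pooled datasets.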

However, for AI to truly play a role in the daily clinical practice of the cardiothoracic imaging community, several issues require attention. First, AI applications need to be tested and validated in clinical settings, assessing their true efficiency and accuracy within clinical workflows and in representative populations. The rise of AI applications in medicine also brings the need for stringent protection of patient privacy and cybersecurity. The medical and AI communities are currently working together to optimize the legal framework for clinical AI applications.

Currently, in the USA, AI applications that only assist with quantitative analysis tasks are regulated by the U.S. Food and Drug Administration (FDA) and require only 510(k) clearance [128, 129]. However, an AI application used for the clinical interpretation of radiological images requires FDA premarket approval (PMA), which demands extensive evidence of accuracy and safety from clinical trials. The European Commission's white paper on artificial intelligence, published in February 2020 [20, 130], stated that current EU regulations already provide a high level of protection through medical device and data protection laws. In addition, the Commission proposes specific requirements for high-risk AI applications, such as medical AI, covering training data, record-keeping of the datasets used, transparency, robustness and accuracy, and human oversight.

There are also ethical considerations, such as discrimination along racial or socioeconomic lines due to disparities in training populations, and the effects AI may have on healthcare insurance. It is imperative that the medical field learns to balance the benefits and risks of AI technology and ensures that these are shared equally by everyone. A statement by the joint European and North American multisociety task force discusses these issues in detail, emphasizing that more research is needed on how to implement AI optimally in clinical practice [131].

Conclusion

In summary, AI is increasingly used for cardiac radiological applications. Current applications, most of which have only been tested in research settings, focus on workflow and image reconstruction optimization, biomarker quantification, and prognostication. While CT is at the forefront of these developments, mostly due to the rapidly increasing number of CCTA and calcium scoring examinations, cardiac MRI is rapidly following, with its main focus on segmentation and functional analysis. Future steps for cardiac AI applications should focus on clinical implementation by assessing clinical accuracy in relevant representative populations, cost-effectiveness, and time savings in clinical practice. Cardiac imagers, together with AI specialists, are responsible for creating the framework for the next steps of AI implementation, ensuring that AI is used in ways that improve clinical workflows and benefit both radiologists and patients.

References

Turing A (2009) Computing machinery and intelligence amplification. “Parsing the Turing Test.”. Comput Intell Expert Speak. https://doi.org/10.1109/9780470544297.ch3

McCarthy J (1990) Artificial intelligence, logic and formalizing common sense. Philos Log Artif Intell 1990:161–190

Singh G, Al’Aref SJ, Van Assen M et al (2018) Machine learning in cardiac CT: basic concepts and contemporary data. J Cardiovasc Comput Tomogr 12:192–201. https://doi.org/10.1016/j.jcct.2018.04.010

Obermeyer Z, Emanuel EJ (2016) Predicting the future—big data, machine learning, and clinical medicine. N Engl J Med 375(13):1216–1219. https://doi.org/10.1056/NEJMp1606181

Mukherjee S (2017) A.I. vs M.D. What happens when diagnosis is automated. New Yorker, New York

Frost & Sullivan (2015) Cognitive computing and artificial intelligence systems in healthcare. Ramping up a $6 billion dollar market opportunity. Frost & Sullivan, New York

Cabitza F, Rasoini R, Gensini GF (2017) Unintended consequences of machine learning in medicine. JAMA. https://doi.org/10.1001/jama.2017.7797

Hecht HS, Shaw L, Chandrashekhar YS, Bax JJ, Narula J (2019) Should NICE guidelines be universally accepted for the evaluation of stable coronary disease? A debate. Eur Heart J 40(18):1440–1453. https://doi.org/10.1093/eurheartj/ehz024

Piepoli MF, Hoes AW, Agewall S et al (2016) 2016 European guidelines on cardiovascular disease prevention in clinical practice. Eur Heart J 37(29):2315–2381. https://doi.org/10.1093/eurheartj/ehw106

Sousa-Uva M, Neumann FJ, Ahlsson A et al (2019) 2018 ESC/EACTS guidelines on myocardial revascularization. Eur J Cardio-thoracic Surg 55(1):4–90. https://doi.org/10.1093/ejcts/ezy289

Sousa-Uva M, Ahlsson A, Alfonso F et al (2018) 2018 ESC/EACTS guidelines on myocardial revascularization. Eur Heart J 00:1–96. https://doi.org/10.1093/eurheartj/ehy394

Knuuti J, Wijns W, Saraste A et al (2019) ESC guidelines for the diagnosis and management of chronic coronary syndromes. Eur Heart J 2019:1–71. https://doi.org/10.1093/eurheartj/ehz425

Ibanez B, James S, Agewall S et al (2018) 2017 ESC guidelines for the management of acute myocardial infarction in patients presenting with ST-segment elevation. Eur Heart J 39(2):119–177. https://doi.org/10.1093/eurheartj/ehx393

Arnett DK, Blumenthal RS, Albert MA et al (2019) 2019 ACC/AHA guideline on the primary prevention of cardiovascular disease: executive summary: a report of the American College of Cardiology/American Heart Association Task Force on Clinical Practice Guidelines. J Am Coll Cardiol 74(10):1376–1414. https://doi.org/10.1016/j.jacc.2019.03.009

Hosny A, Parmar C, Quackenbush J, Schwartz LH, Aerts HJWL (2018) Artificial intelligence in radiology. Nat Rev Cancer 18(8):500–510. https://doi.org/10.1038/s41568-018-0016-5

McDonald RJ, Schwartz KM, Eckel LJ et al (2015) The effects of changes in utilization and technological advancements of cross-sectional imaging on radiologist workload. Acad Radiol 22(9):1191–1198. https://doi.org/10.1016/j.acra.2015.05.007

Coppola F, Faggioni L, Regge D et al (2020) Artificial intelligence: radiologists’ expectations and opinions gleaned from a nationwide online survey. Radiol Med. https://doi.org/10.1007/s11547-020-01205-y

Hernandez D, Amodei D, Sastry G, Clark J, Brockman G, Sutskever I (2019) AI and compute. OpenAI. https://openai.com/blog/ai-and-compute/#addendum. Accessed 3 Nov 2020

Summers RM, Handwerker LR, Pickhardt PJ et al (2008) Performance of a previously validated CT colonography computer-aided detection system in a new patient population. Am J Roentgenol 191(1):168–174. https://doi.org/10.2214/AJR.07.3354

van Assen M, Lee SJ, De Cecco CN (2020) Artificial intelligence from A to Z: from neural network to legal framework. Eur J Radiol. https://doi.org/10.1016/j.ejrad.2020.109083

Wolterink JM, Leiner T, Viergever MA, Išgum I (2017) Generative adversarial networks for noise reduction in low-dose CT. IEEE Trans Med Imaging 36(12):2536–2545. https://doi.org/10.1109/TMI.2017.2708987

Shan H, Padole A, Homayounieh F et al (2019) Competitive performance of a modularized deep neural network compared to commercial algorithms for low-dose CT image reconstruction. Nat Mach Intell 1(6):269–276. https://doi.org/10.1038/s42256-019-0057-9

Tao Q, Lelieveldt BPF, Van Der Geest RJ (2020) Deep learning for quantitative cardiac MRI. Am J Roentgenol 214:529–535

Qin C, Schlemper J, Caballero J, Price AN, Hajnal JV (2018) Convolutional recurrent neural networks for dynamic MR image reconstruction. IEEE Trans Med Imaging. https://doi.org/10.1109/tmi.2018.2863670

Tarroni G, Oktay O, Bai W et al (2018) Learning-based quality control for cardiac MR images. IEEE Trans Med Imaging. https://doi.org/10.1109/tmi.2018.2878509

Chow BJW, Small G, Yam Y et al (2011) Incremental prognostic value of cardiac computed tomography in coronary artery disease using CONFIRM: coronary computed tomography angiography evaluation for clinical outcomes: an international multicenter registry. Circ Cardiovasc Imaging. https://doi.org/10.1161/CIRCIMAGING.111.964155

Foldyna B, Udelson JE, Karády J et al (2019) Pretest probability for patients with suspected obstructive coronary artery disease: re-evaluating Diamond-Forrester for the contemporary era and clinical implications: insights from the PROMISE trial. Eur Heart J Cardiovasc Imaging. https://doi.org/10.1093/ehjci/jey182

Papachristidis A, Vaughan GF, Denny SJ et al (2020) Comparison of NICE and ESC proposed strategies on new onset chest pain and the contemporary clinical utility of pretest probability risk score. Open Heart 7(1):1–9. https://doi.org/10.1136/openhrt-2019-001081

Cury RC, Abbara S, Achenbach S et al (2016) Coronary Artery Disease-Reporting and Data System (CAD-RADS) an expert consensus document of SCCT, Endorsed by the ACC. JACC-Cardiovasc Imaging 9(9):1099–1113. https://doi.org/10.1016/j.jcmg.2016.05.005

Muscogiuri G, Chiesa M, Trotta M et al (2019) Performance of a deep learning algorithm for the evaluation of CAD-RADS classification with CCTA. Atherosclerosis. https://doi.org/10.1016/j.atherosclerosis.2019.12.001

Becker CR, Majeed A, Crispin A et al (2005) CT measurement of coronary calcium mass: impact on global cardiac risk assessment. Eur Radiol. https://doi.org/10.1007/s00330-004-2528-5

Caruso D, De Cecco CN, Schoepf UJ et al (2016) Correction factors for CT coronary artery calcium scoring using advanced modeled iterative reconstruction instead of filtered back projection. Acad Radiol. https://doi.org/10.1016/j.acra.2016.07.015

Greenland P, Bonow RO, Brundage BH et al (2007) ACCF/AHA 2007 clinical expert consensus document on coronary artery calcium scoring by computed tomography in global cardiovascular risk assessment and in evaluation of patients with chest pain. A report of the American College of Cardiology Foundation Cl. J Am Coll Cardiol 49(3):378–402. https://doi.org/10.1016/j.jacc.2006.10.001

Greenland P, Blaha MJ, Budoff MJ, Erbel R, Watson KE (2018) Coronary calcium score and cardiovascular risk. J Am Coll Cardiol 72(4):434–447. https://doi.org/10.1016/j.jacc.2018.05.027

Ó Hartaigh B, Valenti V, Cho I et al (2016) 15-Year prognostic utility of coronary artery calcium scoring for all-cause mortality in the elderly. Atherosclerosis. https://doi.org/10.1016/j.atherosclerosis.2016.01.039

Tesche C, Duguay TM, Schoepf UJ et al (2018) Current and future applications of CT coronary calcium assessment. Expert Rev Cardiovasc Ther. https://doi.org/10.1080/14779072.2018.1474347

Agatston AS, Janowitz WR, Hildner FJ, Zusmer NR, Viamonte M, Detrano R (1990) Quantification of coronary artery calcium using ultrafast computed tomography. J Am Coll Cardiol 15(4):827–832. https://doi.org/10.1016/0735-1097(90)90282-T

de Vos BD, Wolterink JM, Leiner T, de Jong PA, Lessmann N, Isgum I (2019) Direct automatic coronary calcium scoring in cardiac and chest CT. IEEE Trans Med Imaging 38(9):2127–2138. https://doi.org/10.1109/TMI.2019.2899534

Sandstedt M, Henriksson L, Janzon M et al (2020) Evaluation of an AI-based, automatic coronary artery calcium scoring software. Eur Radiol. https://doi.org/10.1007/s00330-019-06489-x

Zhang Y, van der Werf NR, Jiang B, van Hamersvelt R, Greuter MJW, Xie X (2020) Motion-corrected coronary calcium scores by a convolutional neural network: a robotic simulating study. Eur Radiol. https://doi.org/10.1007/s00330-019-06447-7

Išgum I, Rutten A, Prokop M, Van Ginneken B (2007) Detection of coronary calcifications from computed tomography scans for automated risk assessment of coronary artery disease. Med Phys 34(4):1450–1461. https://doi.org/10.1118/1.2710548

Głowacki J, Krysiński M, Czaja-Ziółkowska M, Wasilewski J (2019) Machine learning-based algorithm enables the exclusion of obstructive coronary artery disease in the patients who underwent coronary artery calcium scoring. Acad Radiol. https://doi.org/10.1016/j.acra.2019.11.016

Takx RAP, De Jong PA, Leiner T et al (2014) Automated coronary artery calcification scoring in non-gated chest CT: agreement and reliability. PLoS ONE. https://doi.org/10.1371/journal.pone.0091239

Wolterink JM, Leiner T, de Vos BD, van Hamersvelt RW, Viergever MA, Išgum I (2016) Automatic coronary artery calcium scoring in cardiac CT angiography using paired convolutional neural networks. Med Image Anal 34:123–136. https://doi.org/10.1016/j.media.2016.04.004

van Velzen SGM, Lessmann N, Velthuis BK et al (2020) Deep learning for automatic calcium scoring in CT: validation using multiple cardiac CT and chest CT protocols. Radiology 295(1):66–79. https://doi.org/10.1148/radiol.2020191621

Apfaltrer P, Schoepf UJ, Vliegenthart R et al (2011) Coronary computed tomography-present status and future directions. Int J Clin Pract. https://doi.org/10.1111/j.1742-1241.2011.02784.x

Meinel FG, Bayer RR II, Zwerner PL, De Cecco CN, Schoepf UJ, Bamberg F (2015) Coronary computed tomographic angiography in clinical practice: state of the art. Radiol Clin North Am 53(2):287–296. https://doi.org/10.1016/j.rcl.2014.11.012

Newby D (2015) CT coronary angiography in patients with suspected angina due to coronary heart disease (SCOT-HEART): an open-label, parallel-group, multicentre trial. Lancet. https://doi.org/10.1016/S0140-6736(15)60291-4

Williams MC, Moss AJ, Dweck M et al (2019) Coronary artery plaque characteristics associated with adverse outcomes in the SCOT-HEART study. J Am Coll Cardiol. https://doi.org/10.1016/j.jacc.2018.10.066

Andrew M, John H (2015) The challenge of coronary calcium on coronary computed tomographic angiography (CCTA) scans: effect on interpretation and possible solutions. Int J Cardiovasc Imaging 31(2):145–157. https://doi.org/10.1007/s10554-015-0773-0

Zreik M, Van Hamersvelt RW, Wolterink JM, Leiner T, Viergever MA, Išgum I (2019) A recurrent CNN for automatic detection and classification of coronary artery plaque and stenosis in coronary CT angiography. IEEE Trans Med Imaging. https://doi.org/10.1109/TMI.2018.2883807

Utsunomiya D, Nakaura T, Oda S (2016) Artificial intelligence for the interpretation of coronary computed tomography angiography: can machine learning improve diagnostic performance? J Clin Exp Cardiol. https://doi.org/10.4172/2155-9880.1000473

Kang D, Dey D, Slomka PJ et al (2015) Structured learning algorithm for detection of nonobstructive and obstructive coronary plaque lesions from computed tomography angiography. J Med Imaging. https://doi.org/10.1117/1.jmi.2.1.014003

Yoneyama H, Nakajima K, Taki J et al (2019) Ability of artificial intelligence to diagnose coronary artery stenosis using hybrid images of coronary computed tomography angiography and myocardial perfusion SPECT. Eur J Hybrid Imaging. https://doi.org/10.1186/s41824-019-0052-8

Hell MM, Dey D, Marwan M, Achenbach S, Schmid J, Schuhbaeck A (2015) Non-invasive prediction of hemodynamically significant coronary artery stenoses by contrast density difference in coronary CT angiography. Eur J Radiol. https://doi.org/10.1016/j.ejrad.2015.04.024

Dey D, Gaur S, Ovrehus KA et al (2018) Integrated prediction of lesion-specific ischaemia from quantitative coronary CT angiography using machine learning: a multicentre study. Eur Radiol. https://doi.org/10.1007/s00330-017-5223-z

Van Hamersvelt RW (2018) Deep learning analysis of left ventricular myocardium in CT angiographic intermediate-degree coronary stenosis improves the diagnostic accuracy for identification of functionally significant stenosis. Eur Radiol 29(5):2350–2359

Min JK, Leipsic J, Pencina MJ et al (2012) Diagnostic accuracy of fractional flow reserve from anatomic CT angiography. JAMA 308(12):1237. https://doi.org/10.1001/2012.jama.11274

Kruk M, Wardziak Ł, Demkow M et al (2016) Workstation-based calculation of CTA-based FFR for intermediate stenosis. JACC Cardiovasc Imaging 9(6):690–699. https://doi.org/10.1016/j.jcmg.2015.09.019

Kitabata H, Leipsic J, Patel MR et al (2018) Incidence and predictors of lesion-specific ischemia by FFRCT: learnings from the international ADVANCE registry. J Cardiovasc Comput Tomogr 12(2):95–100. https://doi.org/10.1016/j.jcct.2018.01.008

Lu MT, Ferencik M, Roberts RS et al (2017) Noninvasive FFR derived from coronary CT angiography: management and outcomes in the PROMISE trial. JACC Cardiovasc Imaging 10(11):1350–1358. https://doi.org/10.1016/j.jcmg.2016.11.024

Tesche C, De Cecco CN, Caruso D et al (2016) Coronary CT angiography derived morphological and functional quantitative plaque markers correlated with invasive fractional flow reserve for detecting hemodynamically significant stenosis. J Cardiovasc Comput Tomogr 10(3):199–206. https://doi.org/10.1016/j.jcct.2016.03.002

Tesche C, De Cecco CN, Baumann S et al (2018) Coronary CT angiography–derived fractional flow reserve: machine learning algorithm versus computational fluid dynamics modeling. Radiology 288:64–72. https://doi.org/10.1148/radiol.2018171291

Tesche C, De Cecco CN, Albrecht MH et al (2017) Coronary CT angiography—derived fractional flow reserve. Radiology 285(1):17–33. https://doi.org/10.1148/radiol.2017162641

Coenen A, Lubbers MM, Kurata A et al (2015) Fractional flow reserve computed from noninvasive CT angiography data: diagnostic performance of an on-site clinician-operated computational fluid dynamics algorithm. Radiology 274(3):674–683. https://doi.org/10.1148/radiol.14140992

Coenen A, Kim Y-H, Kruk M et al (2018) Diagnostic accuracy of a machine-learning approach to coronary computed tomographic angiography-based fractional flow reserve. Circ Cardiovasc Imaging 11(6):e007217. https://doi.org/10.1161/CIRCIMAGING.117.007217

von Knebel Doeberitz PL, De Cecco CN, Schoepf UJ et al (2019) Coronary CT angiography—derived plaque quantification with artificial intelligence CT fractional flow reserve for the identification of lesion-specific ischemia. Eur Radiol. https://doi.org/10.1007/s00330-018-5834-z

Qiao HY, Tang CX, Schoepf UJ et al (2020) Impact of machine learning-based coronary computed tomography angiography fractional flow reserve on treatment decisions and clinical outcomes in patients with suspected coronary artery disease. Eur Radiol. https://doi.org/10.1007/s00330-020-06964-w

Kurata A, Fukuyama N, Hirai K et al (2019) On-site computed tomography-derived fractional flow reserve using a machine-learning algorithm: clinical effectiveness in a retrospective multicenter cohort. Circ J. https://doi.org/10.1253/circj.CJ-19-0163

Motwani M, Dey D, Berman DS et al (2017) Machine learning for prediction of all-cause mortality in patients with suspected coronary artery disease: a 5-year multicentre prospective registry analysis. Eur Heart J 38(7):500–507. https://doi.org/10.1093/eurheartj/ehw188

van Rosendael AR, Maliakal G, Kolli KK et al (2018) Maximization of the usage of coronary CTA derived plaque information using a machine learning based algorithm to improve risk stratification; insights from the CONFIRM registry. J Cardiovasc Comput Tomogr. https://doi.org/10.1016/j.jcct.2018.04.011

Marwick TH (2018) Ejection fraction pros and cons: JACC state-of-the-art review. J Am Coll Cardiol 72(19):2360–2379. https://doi.org/10.1016/j.jacc.2018.08.2162

Bluemke DA, Kronmal RA, Lima JAC et al (2008) The relationship of left ventricular mass and geometry to incident cardiovascular events. The MESA (Multi-Ethnic Study of Atherosclerosis) Study. J Am Coll Cardiol 52(25):2148–2155. https://doi.org/10.1016/j.jacc.2008.09.014

Moss MD, Bigger JT, Case R, Gillespie MD, Goldstein RE (1983) Risk stratification and survival after myocardial infarction. N Engl J Med 309(6):331–336

Di Cesare E, Cademartiri F, Carbone I et al (2013) [Clinical indications for the use of cardiac MRI. By the Working Group of the SIRM Cardiac Radiology Section]. Radiol Med 118(5):752–798. https://doi.org/10.1007/s11547-012-0899-2

Francone M, Carbone I, Agati L et al (2011) [Utility of T2-weighted STIR sequences in cardiac magnetic resonance: spectrum of clinical applications in various ischemic and nonischemic heart diseases]. Radiol Med 116(1):32–46. https://doi.org/10.1007/s11547-010-0594-0

Muscogiuri G, Suranyi P, Eid M et al (2019) Pediatric cardiac MR imaging: practical preoperative assessment. Magn Reson Imaging Clin N Am. https://doi.org/10.1016/j.mric.2019.01.004

Fratz S, Chung T, Greil GF et al (2013) Guidelines and protocols for cardiovascular magnetic resonance in children and adults with congenital heart disease: SCMR expert consensus group on congenital heart disease. J Cardiovasc Magn Reson 15(1):1–26. https://doi.org/10.1186/1532-429X-15-51

Varga-Szemes A, Muscogiuri G, Schoepf UJ et al (2016) Clinical feasibility of a myocardial signal intensity threshold-based semi-automated cardiac magnetic resonance segmentation method. Eur Radiol. https://doi.org/10.1007/s00330-015-3952-4

Petitjean C, Dacher JN (2011) A review of segmentation methods in short axis cardiac MR images. Med Image Anal 15(2):169–184. https://doi.org/10.1016/j.media.2010.12.004

Suinesiaputra A, Bluemke DA, Cowan BR et al (2015) Quantification of LV function and mass by cardiovascular magnetic resonance: multi-center variability and consensus contours. J Cardiovasc Magn Reson 17(1):1–8. https://doi.org/10.1186/s12968-015-0170-9

Avendi MR, Kheradvar A, Jafarkhani H (2016) A combined deep-learning and deformable-model approach to fully automatic segmentation of the left ventricle in cardiac MRI. Med Image Anal 30:108–119. https://doi.org/10.1016/j.media.2016.01.005

Ngo TA, Lu Z, Carneiro G (2017) Combining deep learning and level set for the automated segmentation of the left ventricle of the heart from cardiac cine magnetic resonance. Med Image Anal. https://doi.org/10.1016/j.media.2016.05.009

Ma Y, Wang L, Ma Y, Dong M, Du S, Sun X (2016) An SPCNN-GVF-based approach for the automatic segmentation of left ventricle in cardiac cine MR images. Int J Comput Assist Radiol Surg. https://doi.org/10.1007/s11548-016-1429-9

Tan LK, Liew YM, Lim E, McLaughlin RA (2017) Convolutional neural network regression for short-axis left ventricle segmentation in cardiac cine MR sequences. Med Image Anal. https://doi.org/10.1016/j.media.2017.04.002

Curiale AH, Colavecchia FD, Mato G (2019) Automatic quantification of the LV function and mass: a deep learning approach for cardiovascular MRI. Comput Methods Programs Biomed. https://doi.org/10.1016/j.cmpb.2018.12.002

Liao F, Chen X, Hu X, Song S (2017) Estimation of the volume of the left ventricle from MRI images using deep neural networks. IEEE Trans Cybern. https://doi.org/10.1109/tcyb.2017.2778799

Bernard O, Lalande A, Zotti C et al (2018) Deep learning techniques for automatic MRI cardiac multi-structures segmentation and diagnosis: is the problem solved? IEEE Trans Med Imaging 37(11):2514–2525. https://doi.org/10.1109/TMI.2018.2837502

Isensee F, Jaeger PF, Full PM, Wolf I, Engelhardt S, Maier-Hein KH (2018) Automatic cardiac disease assessment on cine-MRI via time-series segmentation and domain specific features. In: Lecture notes in computer science (including subseries lecture notes in artificial intelligence and lecture notes in bioinformatics). https://doi.org/10.1007/978-3-319-75541-0_13

Bai W, Sinclair M, Tarroni G et al (2018) Automated cardiovascular magnetic resonance image analysis with fully convolutional networks. J Cardiovasc Magn Reson 20(1):1–12. https://doi.org/10.1186/s12968-018-0471-x

Tao Q, Yan W, Wang Y et al (2018) Deep learning-based method for fully automatic quantification of left ventricle function from cine MR images: a multivendor, multicenter study. Radiology. https://doi.org/10.1148/radiol.2018180513

Muscogiuri G, Suranyi P, Schoepf UJ et al (2018) Cardiac magnetic resonance T1-mapping of the myocardium: technical background and clinical relevance. J Thorac Imaging. https://doi.org/10.1097/RTI.0000000000000270

Kammerlander AA, Marzluf BA, Zotter-Tufaro C et al (2016) T1 mapping by CMR imaging from histological validation to clinical implication. JACC Cardiovasc Imaging. https://doi.org/10.1016/j.jcmg.2015.11.002

White SK, Sado DM, Flett AS, Moon JC (2012) Characterising the myocardial interstitial space: the clinical relevance of non-invasive imaging. Heart. https://doi.org/10.1136/heartjnl-2011-301515

De Cecco CN, Muscogiuri G, Varga-Szemes A, Schoepf UJ (2017) Cutting edge clinical applications in cardiovascular magnetic resonance. World J Radiol. https://doi.org/10.4329/wjr.v9.i1.1

Kellman P, Hansen MS (2014) T1-mapping in the heart: accuracy and precision. J Cardiovasc Magn Reson 16(1):1–20. https://doi.org/10.1186/1532-429X-16-2

Ugander M, Oki AJ, Hsu LY et al (2012) Extracellular volume imaging by magnetic resonance imaging provides insights into overt and sub-clinical myocardial pathology. Eur Heart J 33(10):1268–1278. https://doi.org/10.1093/eurheartj/ehr481

Yamada A, Ishida M, Kitagawa K, Sakuma H (2018) Assessment of myocardial ischemia using stress perfusion cardiovascular magnetic resonance. Cardiovasc Imaging Asia 2(2):65–75. https://doi.org/10.22468/cvia.2017.00178

Hamlin SA, Henry TS, Little BP, Lerakis S, Stillman AE (2014) Mapping the future of cardiac MR imaging: case-based review of T1 and T2 mapping techniques. Radiographics 34:1594–1611. https://doi.org/10.1148/rg.346140030

Rastegar N, Te Riele ASJM, James CA et al (2016) Fibrofatty changes: incidence at cardiac MR imaging in patients with arrhythmogenic right ventricular dysplasia/cardiomyopathy. Radiology. https://doi.org/10.1148/radiol.2016150988

Buckley O, Madan R, Kwong R, Rybicki FJ, Hunsaker A (2011) Cardiac masses, part 1: imaging strategies and technical considerations. Am J Roentgenol

Edwards NC, Routledge H, Steeds RP (2009) T2-weighted magnetic resonance imaging to assess myocardial oedema in ischaemic heart disease. Heart. https://doi.org/10.1136/hrt.2009.169961

Kim RJ, Wu E, Rafael A et al (2000) The use of contrast-enhanced magnetic resonance imaging to identify reversible myocardial dysfunction. N Engl J Med. https://doi.org/10.1056/NEJM200011163432003

Thomson LEJ, Kim RJ, Judd RM (2004) Magnetic resonance imaging for the assessment of myocardial viability. J Magn Reson Imaging. https://doi.org/10.1002/jmri.20075

Satoh H (2014) Distribution of late gadolinium enhancement in various types of cardiomyopathies: significance in differential diagnosis, clinical features and prognosis. World J Cardiol. https://doi.org/10.4330/wjc.v6.i7.585

O h-Ici D, Jeuthe S, Al-Wakeel N et al (2014) T1 mapping in ischaemic heart disease. Eur Heart J Cardiovasc Imaging 15(6):597–602. https://doi.org/10.1093/ehjci/jeu024

Florian A, Jurcut R, Ginghina C, Bogaert J (2011) Cardiac magnetic resonance imaging in ischemic heart disease: a clinical review. J Med Life 4(4):330–345

Muscogiuri G, Suranyi P, Schoepf UJ et al (2017) Cardiac magnetic resonance T1-mapping of the myocardium. J Thorac Imaging. https://doi.org/10.1097/RTI.0000000000000270

Gannon MP, Schaub E, Grines CL, Saba SG (2019) State of the art: evaluation and prognostication of myocarditis using cardiac MRI. J Magn Reson Imaging. https://doi.org/10.1002/jmri.26611

Chan RH, Maron BJ, Olivotto I et al (2014) Prognostic value of quantitative contrast-enhanced cardiovascular magnetic resonance for the evaluation of sudden death risk in patients with hypertrophic cardiomyopathy. Circulation. https://doi.org/10.1161/CIRCULATIONAHA.113.007094

Baeßler B, Mannil M, Maintz D, Alkadhi H, Manka R (2018) Texture analysis and machine learning of non-contrast T1-weighted MR images in patients with hypertrophic cardiomyopathy—preliminary results. Eur J Radiol. https://doi.org/10.1016/j.ejrad.2018.03.013

Moccia S, Banali R, Martini C et al (2019) Development and testing of a deep learning-based strategy for scar segmentation on CMR-LGE images. Magn Reson Mater Phys Biol Med. https://doi.org/10.1007/s10334-018-0718-4

Yang F, Zhang Y, Lei P et al (2019) A deep learning segmentation approach in free-breathing real-time cardiac magnetic resonance imaging. Biomed Res Int. https://doi.org/10.1155/2019/5636423

Zabihollahy F, Rajchl M, White JA, Ukwatta E (2020) Fully automated segmentation of left ventricular scar from 3D late gadolinium enhancement magnetic resonance imaging using a cascaded multi-planar U-Net (CMPU-Net). Med Phys. https://doi.org/10.1002/mp.14022

Fahmy AS, Rausch J, Neisius U et al (2018) Fully automated quantification of cardiac MR LV mass and scar in hypertrophic cardiomyopathy using deep learning. Circulation 138(Suppl_1):A15085

Zabihollahy F, White JA, Ukwatta E (2019) Convolutional neural network-based approach for segmentation of left ventricle myocardial scar from 3D late gadolinium enhancement MR images. Med Phys. https://doi.org/10.1002/mp.13436

Zhang N, Yang G, Gao Z et al (2019) Deep learning for diagnosis of chronic myocardial infarction on nonenhanced cardiac cine MRI. Radiology. https://doi.org/10.1148/radiol.2019182304

Li L, Wu F, Yang G et al (2020) Atrial scar quantification via multi-scale CNN in the graph-cuts framework. Med Image Anal. https://doi.org/10.1016/j.media.2019.101595

Li L, Yang G, Wu F et al (2019) Atrial scar segmentation via potential learning in the graph-cut framework. In: Lecture notes in computer science (including subseries lecture notes in artificial intelligence and lecture notes in bioinformatics). https://doi.org/10.1007/978-3-030-12029-0_17

Vergara GR, Marrouche NF (2011) Tailored management of atrial fibrillation using a LGE-MRI based model: from the clinic to the electrophysiology laboratory. J Cardiovasc Electrophysiol. https://doi.org/10.1111/j.1540-8167.2010.01941.x

Halliday BP, Baksi AJ, Gulati A et al (2019) Outcome in dilated cardiomyopathy related to the extent, location, and pattern of late gadolinium enhancement. JACC Cardiovasc Imaging. https://doi.org/10.1016/j.jcmg.2018.07.015

Halliday BP, Gulati A, Ali A et al (2017) Association between midwall late gadolinium enhancement and sudden cardiac death in patients with dilated cardiomyopathy and mild and moderate left ventricular systolic dysfunction. Circulation. https://doi.org/10.1161/CIRCULATIONAHA.116.026910

Dawes TJW, De Marvao A, Shi W et al (2017) Machine learning of three-dimensional right ventricular motion enables outcome prediction in pulmonary hypertension: a cardiac MR imaging study. Radiology. https://doi.org/10.1148/radiol.2016161315

Chen R, Lu A, Wang J et al (2019) Using machine learning to predict one-year cardiovascular events in patients with severe dilated cardiomyopathy. Eur J Radiol. https://doi.org/10.1016/j.ejrad.2019.06.004

Pocock SJ, Ariti CA, McMurray JJV et al (2013) Predicting survival in heart failure: a risk score based on 39,372 patients from 30 studies. Eur Heart J. https://doi.org/10.1093/eurheartj/ehs337

Guaricci AI, Masci PG, Lorenzoni V, Schwitter J, Pontone G (2018) CarDiac MagnEtic Resonance for Primary Prevention Implantable CardioVerter DefibrillAtor ThErapy international registry: design and rationale of the DERIVATE study. Int J Cardiol. https://doi.org/10.1016/j.ijcard.2018.03.043

Diller GP, Orwat S, Vahle J et al (2020) Prediction of prognosis in patients with tetralogy of Fallot based on deep learning imaging analysis. Heart. https://doi.org/10.1136/heartjnl-2019-315962

ACR-DSI (2019) FDA cleared AI algorithms. DSI-ACR. https://www.acrdsi.org/DSI-Services/FDA-Cleared-AI-Algorithms. Accessed 30 Jan 2020

Zuckerman DM, Brown P, Nissen SE (2011) Medical device recalls and the FDA approval process. Arch Intern Med 171(11):1006–1011. https://doi.org/10.1001/archinternmed.2011.30

European Commission (2020) White paper on artificial intelligence

Geis JR, Brady AP, Wu CC et al (2019) Ethics of artificial intelligence in radiology: summary of the Joint European and North American Multisociety Statement. Can Assoc Radiol J 70(4):329–334. https://doi.org/10.1016/j.carj.2019.08.010

Ethics declarations

Conflict of interest

Dr. De Cecco receives institutional research funding from Siemens Healthineers. Dr. Laghi receives funding or financial compensation from speakers' bureau, GE Healthcare, Guerbet, Bayer, Merck, and Bracco. All other authors have no conflicts of interest.

Ethics approval

For this review study, no animals or patients were included; therefore, no ethical approval was required and no informed consent was needed.

Research involving human participants and/or animals

For this review study, no animals or patients were included.

Informed consent

For this review study, no animals or patients were included.

About this article

Cite this article

van Assen, M., Muscogiuri, G., Caruso, D. et al. Artificial intelligence in cardiac radiology. Radiol med 125, 1186–1199 (2020). https://doi.org/10.1007/s11547-020-01277-w

DOI: https://doi.org/10.1007/s11547-020-01277-w