Abstract

The purpose of this paper is twofold. We first present a methodological framework for the analysis of embodied interaction with technology captured through video recording. The framework brings together a social semiotic approach to multimodality with the philosophical and theoretical roots of embodied cognition. We then demonstrate the application of the framework by exploring how the computational thinking of two fifth grade learners emerged as an embodied phenomenon during an educational robotics activity. The findings suggest that, for young children, computational thinking was extended to include the structures in the environment and guided by their embodiment of mathematical concepts. Specifically, the participants repeatedly used their bodies to simulate different possibilities for action while incorporating both perceptual and formal multiplicative reasoning strategies to conceptualize the robot’s movements. Implications for the design of embodied educational robotics activities and future application of the methodological framework are discussed.

There is growing interest in studying cognition and learning from an embodied perspective (Calvo and Gomila 2008; Rowlands 2010). Traditional perspectives view cognition as a computational process in which sensory input is transformed into representations that are stored and retrieved (Richey et al. 2010; Rowlands 2010; Shapiro 2019). From this perspective, the brain is the primary entity associated with cognition (Newell and Simon 1972; Paivio 1986). Embodied perspectives challenge the traditional role of the brain in cognition, arguing instead that cognition takes place through an intimate coupling of brain, body, and environment (Chemero 2009; Gibson 1977, 1986; Goodwin 2007; Merleau-Ponty 1962; Thelen and Smith 1994). This environmental coupling moves away from information processing and instead suggests that cognition is a reactive, reciprocal process in which one’s interaction with the environment shapes how one perceives the environment (Gibson 1977, 1986; Goodwin 2007). Knowledge is gained through “achieving the more immediate goal of guiding behavior in response to the system’s changing surroundings” (Milkowski 2013, p. 4). Brain and body work together to perceive the environment and this perception, in turn, affects how one thinks about and takes action within the environment.

A number of studies in mathematics education (e.g., Abrahamson and Lindgren 2014; Alibali and Nathan 2012; Lakoff and Núñez 2000; Nathan and Walkington 2017; Williams 2012) and language acquisition (e.g., Glenberg and Kaschak 2002; Gallese and Lakoff 2005; Lakoff and Johnson 2008; Gordon et al. 2019) offer empirical evidence that cognition is embodied for both children and adults. An embodied perspective is therefore viewed as a way to improve teaching and learning because it engages all aspects of the learner (i.e., brain, body, and environment) and more fully captures the way learners draw on their bodily experience to understand abstract concepts (Hall and Nemirovsky 2012; Abrahamson and Lindgren 2014). This is both timely and relevant in the field of learning, design, and technology. Applications of mobile technologies such as augmented reality, real-time data collection and analysis, and virtual reality offer an opportunity to create learning environments that integrate brain and body with the environment as a coupled unit when learning (Johnson-Glenberg and Megowan-Romanowicz 2017; Lan et al. 2018).

A common way to study embodiment is through gestures. Gestures represent a connection between one’s way of thinking and one’s bodily experience within the world (Hostetter and Alibali 2008; Alibali and Nathan 2012; Atit et al. 2016). According to Alibali and Nathan (2012), gestures offer evidence that “the body is involved in thinking and speaking about the ideas expressed in those gestures” (p. 248). The underlying idea is that abstract thinking becomes an observable and bodily-based phenomenon that can be studied through one’s hand gestures and bodily movements (Nemirovsky and Ferrara 2009; Nathan and Walkington 2017). In this way, gestures serve as an indicator of the coupling that is taking place among mind, body, and environment.

Gestures, however, are only one indicator that cognition is embodied. More recent literature suggests that there are multiple forms of embodied interaction that reveal the way cognition is embodied (Goodwin 2007; Nemirovsky and Ferrara 2009). Embodied interactions are the social, verbal, and non-verbal aspects of human interaction that occur as people come together within a given space and interact with each other and the environment to solve problems or take action (Goffman 1964; Goodwin 2007; Streeck et al. 2011). The underlying idea of embodied interaction is that cognition is understood through the different ways that people come together – that is, through the “mutual orientation of the participants” (Streeck et al. 2011, p. 2). This mutual orientation reflects the way that people use their bodies and the structures in the environment to make meaning: “Like gestures, these displays of mutual orientation are co-constructed through embodied signs” (Streeck et al. 2011, p. 2). While gestures are one indicator that brain and body are intimately coupled with the environment, embodied interactions can also be evidenced through one’s spoken words, gaze, and use of tools within the environment (Goodwin 2007; Goffman 1964). This has led scholars to begin exploring how multimodal perspectives can be drawn upon to study embodied cognition (e.g., Nemirovsky and Ferrara 2009; Hutchins and Nomura 2011; Flood et al. 2016; Malinverni et al. 2019).

The purpose of this paper is to present scholars with a robust methodological framework that can guide the analysis of embodied interaction with technology. We first situate Bezemer’s (2014) methodological approach to multimodal transcription in the philosophical and theoretical roots of embodied cognition. We then apply the framework in the context of K-12 education. Drawing on a social semiotic perspective of multimodality, we conducted a fine-grained analysis of two fifth-grade children who engaged in an educational robotics activity over a two-day period. Our analysis was guided by an overarching question about computational thinking:

In what ways do young children experience computational thinking as an embodied phenomenon during an educational robotics activity?

By presenting and applying the framework in the same paper, our goal is to offer scholars a robust methodological framework that can be built upon to study the embodiment of computational thinking or other learning phenomena currently of interest to the field.

Literature review

Gibson’s (1979, 1986) ecological approach to visual perception is considered foundational to embodied perspectives of cognition. In that work, Gibson introduces the notion of affordance, which represents the opportunities or possibilities for action that are available within the environment (see also Rietveld and Kiverstein 2014). These possibilities can come from the properties of an object; for example, “a flat horizontal surface affords standing and walking, a graspable rigid object affords throw” (Hirose 2002, p. 290). At the same time, possibilities for action are also determined by one’s perception. Although a flat horizontal surface might afford standing and walking for some, it might represent a place to lie down or sleep for others. This ambiguity is an essential piece of Gibson’s (1986) work:

...an affordance is neither an objective property nor a subjective property; or it is both if you like. An affordance cuts across the dichotomy of subjective-objective and helps us to understand its inadequacy. It is equally a fact of the environment and a fact of behavior. It is both physical and psychical, yet neither. An affordance points both ways, to the environment and to the observer. (p. 121)

Gibson’s point, that an affordance points both ways, acknowledges how our perception of an object is based on both the subjective properties as well as the cultural and situational meaning that develops within a local context.

Gibson drew on Merleau-Ponty’s (1962) phenomenological notions of embodiment, where brain and body are considered inseparable from both each other and the world. Merleau-Ponty presented embodiment as a dialectical and ongoing relationship between the objects in that world and one’s experience with the cultural aspects of that world. Dreyfus (1996) later explained this dialectical relationship through Gibson’s example of a postbox, noting how cultures that use a postbox daily perceive the purpose and meaning of that object differently than cultures that do not. His point was that social context and culture affect how one perceives the meaning of an object—that although objects in the world have objective properties, the way we experience those properties cannot be separated from our experience and, by extension, perception of the world (see also Romdenh-Romluc 2010). This helps explain Gibson’s phrase “points both ways.” It suggests an ongoing, emergent ‘back-and-forth’ dynamic between person and environment as one interacts with different objects and people in the world. This notion sets embodied cognition apart from information processing in that cognition is based on our ongoing, bodily perception of the world (i.e., brain and body) rather than the way we store, process, and retrieve information in our brains.

Gestures and embodied cognition

Gestures are considered action-based evidence that the body is involved in cognition and reasoning (Alibali and Nathan 2012). The body (i.e., sensory system) becomes an affordance in the environment, simulating past concepts or ideas as if they were occurring at this moment (Alibali and Nathan 2012; Weisberg and Newcombe 2017). Gestures therefore have meaning beyond communicative purposes (Goodwin 2007), revealing how one’s thinking and perception is grounded in bodily experience (Lakoff and Núñez 2000).

There is growing evidence that gestures serve as a physical manifestation of one’s thinking and perception, particularly for young children in the area of mathematics (Alibali and Nathan 2012; Hall and Nemirovsky 2012; Alibali et al. 2014a, b). For example, Alibali and Nathan (2012) used McNeill’s (1992) typology of gestures to understand what kinds of gestures emerge as mathematics teachers and learners communicate their ideas. Research on elementary school children further suggests that employing hand gestures can improve mathematical reasoning and performance (Hostetter and Alibali 2008; Nathan 2008; Howison et al. 2011). Howison et al. (2011) designed the Mathematical Imagery Trainer (MIT) to teach proportional equivalence to elementary and middle school children; they reported that engaging learners in proportional hand gesturing enhanced their conceptual understanding of proportionality. Others have similarly found that sensory-motor activity plays an important role in the way young children make sense of and work with abstract mathematical concepts (Williams 2012; Abrahamson and Lindgren 2014; Alibali et al. 2014a, b).

Embodied interaction

One concern over studying embodied cognition through gesturing is that gestures alone do not fully reveal how the brain and body are coupled with the environment during embodied interaction, or how that coupling develops over time (Goodwin 2007). This concern is relevant to the study of technologies for learning, where the interaction between people, technology, and the environment is a central focus. Streeck et al. (2011) argued that embodied interactions with technology are best understood through multiple modalities:

Rather than just aiding and supplementing verbal communication, every communication technology - just like each bodily modality that is deployed along with speech or in which speech is embedded—comes with its own affordances and constraints and reconfigures the ecology of action and interaction within which the parties operate. (p.17)

Streeck et al.’s argument connects back to Gibson’s notion of affordances. Tools and technology become an extension of (Hirose 2002) or even an inseparable part of our cognition (Hutchins 1995) which, in turn, offers new possibilities for action. As a result, any analysis of embodied interaction with technology requires that tool and user are treated as an inseparable unit that is coupled with the environment (Harlow et al. 2018). While gestures are one way in which this coupling can be evidenced and understood, embodied cognition can also manifest through interactions such as utterances, tool use, stance, proximity to others, and facial expression (Hutchins and Nomura 2011; Goodwin 2007).

The idea that embodied interactions are environmentally coupled is not new. McNeill’s (1992) seminal work explained how gesture and language mutually advance one another in a complementary fashion. Drawing on Goffman (1964), Goodwin (2007) similarly argued that gestures cannot be understood in isolation because they are intimately tied to both the discourse and tools within the environment. Participants co-construct meaning during social interaction; their bodily activities, use of objects/tools, and discourse constitute a “small ecology” that shapes and reflects that meaning (Goodwin 2007, p.199).

This co-construction of meaning can be seen in several studies of embodiment. Nemirovsky and Ferrara (2009) focused on various modalities (e.g., facial expression, gesture, tone of voice) to examine how high school students co-constructed geometric concepts through their bodies and environment. Alibali and Nathan (2012) similarly acknowledged that the use of objects and tools in the environment can serve as evidence of the way learners embody their thinking. Overall, these studies suggest that any analysis of embodied interaction, both with and without technology, should reveal the meaning behind one’s bodily movement. In other words, it is important to understand both how one interacts with technology and what the meaning is behind that interaction. Studying technology from this perspective therefore requires an approach that emphasizes the complementarity of different modes of human interaction so that the meaning behind those interactions can be understood.

Methodological framework

This paper presents a methodological framework for the analysis of embodied interactions with technology that emphasizes the complementarity of different modes of human interaction. Bezemer and Mavers (2011) noted how growing access to digital technology has led to an increased need for and interest in multimodal, ethnographic methods in the social sciences. As a result, scholars have begun to explore multimodal perspectives when studying how people use technology as part of their learning, particularly through video-based research (e.g., Bezemer 2014; Jewitt et al. 2016). When transcribing video data, the overall goal is to bring together various modes of interaction (e.g., gestures, body position, tool use, discourse) in a way that visually reveals the meaning behind that interaction (Bezemer and Mavers 2011; Jewitt et al. 2016; Mondada 2018).

Multimodal transcription

Bezemer’s (2014) framework for multimodal analysis offers a powerful starting point for transcribing and analyzing embodied interactions with technology through video data. Bezemer sought to analyze social interaction through images and text that aligned different modalities (e.g., speech, gaze, gesture) simultaneously. After reviewing current literature on multimodal methods, Bezemer ultimately created a framework that contained five common steps: (1) Choose a methodological framework, (2) Define purpose and focus of transcript, (3) Design transcript, (4) Read transcript, and (5) Draw conclusions. Bezemer recommended first adopting a methodological framework that aligns with the research question being asked (Step 1), then selecting episodes that help answer those questions (Step 2). He also recommended employing transcription conventions such as bold and italicized text during the design of the transcript (Step 3) to aid analysis when reading and annotating the transcript (Step 4) in that they visually represent different modalities taking place simultaneously (e.g., gesture and gaze with spoken words) or in rapid sequence. In the final step, Bezemer recommended addressing the driving research questions by returning to the literature to explain the findings.

Analyzing embodied interaction with technology

Bezemer’s (2014) framework for multimodal analysis aligns with current perspectives of embodied interaction. Goodwin (2007) and others (Nemirovsky and Ferrara 2009; Flood and Abrahamson 2015) have noted how embodied interaction is a temporally organized event in which different modalities (e.g., dialogue, gesture) help reveal the meaning behind participants’ actions. However, Bezemer’s (2014) framework was originally developed to study medical activity through conversation analysis. Our goal was to bring his framework into the context of embodied cognition and embodied interaction with technology. To achieve this goal, we drew on a social semiotic approach to multimodality. This helped us align Bezemer’s framework more strongly with the philosophical and theoretical roots of embodied cognition while offering a tool for analyzing embodied interactions with technology through video data.

Step 1: choose a methodological framework

We drew on a social semiotic approach to multimodality because it focuses on revealing how meaning is constructed with and through different modalities during social interaction (Streeck et al. 2011; Jewitt et al. 2016). The general idea is that the “micro-observation of modal features” (Jewitt et al. 2016, p. 74) can reveal the socially and culturally embedded aspects of a phenomenon. This aligns with Gibson’s notion of affordances in that each modality helps reveal the meaning behind participants’ perception of each other and the objects in the environment. This perspective strongly guided the design and reading of our transcript and helped us determine what each modality revealed about our participants.

Step 2: focus and purpose

To demonstrate the application of the framework in the context of embodied interaction with technology, we explored a topic of current interest in educational research: children’s computational thinking during educational robotics. Computational thinking is a process that engages students in “solving problems, designing systems, and understanding human behavior, by drawing on the concepts fundamental to computer science” (Wing 2006, p. 33). Learners typically engage in four practices as part of computational thinking: (1) Problem decomposition, (2) pattern recognition, (3) algorithmic thinking, and (4) debugging after testing a solution. These practices are considered essential for later success in STEM disciplines (Wing 2011; Grover and Pea 2013); they are important components of computer science, which has become the focus of various educational initiatives in recent years (National Research Council 2010; National Science and Technology Council 2018).

Educational robotics activities are one way to support children’s development of computational thinking skills and promote computer science. Robotics requires students to decompose a larger task, develop potential solutions, apply mathematical concepts as they program the robot, and debug problems as they negotiate the challenge at hand (Bers et al. 2014; Chen et al. 2017; Kopcha et al. 2017). In theory, it is likely that young children will experience these activities as an embodied phenomenon. Recent evidence suggests that children draw on their bodily understanding of the world when engaging in mathematics (e.g., Ferrara 2014) as well as educational robotics (Sung et al. 2017). More importantly, programming the robot supports learners in using their bodies to visualize and program potentially complex movements that will be enacted by the robot (Yuen et al. 2015).

Current studies of children’s computational thinking, however, do not fully address the embodied aspects of their thinking. Scholars more often focus on computational thinking as a ‘correct’ answer or solution than on the development of a dynamic problem-solving process (e.g., Bers et al. 2014; Román-González et al. 2018). There is a current interest in exploring how children might embody computational thinking to better understand the cognitive aspects of the construct (Brennan and Resnick 2012; Grover and Pea 2013; Yasar 2018). The question that served as our focus, then, was: In what ways do young children experience computational thinking as an embodied phenomenon during an educational robotics activity?

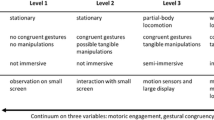

To answer this question, we transcribed 60 minutes of video in which a pair of 5th grade children completed an educational robotics activity over a two-day period. The activity was the culmination of a two-week unit that supported computational thinking through a hands-on STEM-integrated problem-solving experience (see Kopcha et al. 2017). As shown in Fig. 1, learner activity was recorded both at the computer (Fig. 1a), where the pair completed the activity and programmed the robot, and at a larger 3’ × 3’ grid (Fig. 1b) where the pair repeatedly tested their programming. In this way, we captured the embodied interactions of the learners through multiple modalities as they used technology as part of their learning. Analyzing learner behavior and interaction during computer programming activity would offer additional insight into children’s computational thinking as an embodied process (Brennan and Resnick 2012; Kopcha and Ocak 2019).

Step 3: design the transcript

To design a multimodal transcript from our video data, we combined the social semiotic approach described above with a fine-grained analysis of video data. The goal was not to create a verbatim report of the dialogue but rather engage in “re-making of observed activities in a transcript” (Bezemer and Mavers 2011, p.196) to reveal “fresh insight” (p. 196) into the embodied nature of young children’s computational thinking during educational robotics. Emphasis was therefore placed on examining both verbal and non-verbal interactions such as gestures, bodily actions, and mimicry (Garcez 1997; Jaspers 2013). Our overall approach was to extract and transcribe various forms of participant interaction in a way that revealed the meaning and complex dynamics behind that interaction (Baker et al. 2008; Garcez 1997).

We began by transcribing the audio data alongside images of the participants’ interactions such that the timing of the images and dialogue matched the time code in the video. Our selected images focused specifically on moments where the participants interacted with each other through gesture and/or different tools (e.g., the robot, the computer). Arranging the images and dialogue in this way helped us preserve the temporal organization of the modalities presented in the video and understand the nuanced meaning revealed through the participants’ bodies, their spoken words, and the elements of the environment over time. Table 1 contains an example of the side-by-side organization of our transcript, which included the transcribed dialogue (first column) and associated images (second column). The final transcript contained over 20 pages of text and images.
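The time-code alignment described above can be illustrated with a minimal sketch. It is not the procedure used in the study: the class names, the `align` helper, the two-second window, and the sample rows are all hypothetical and chosen only to show how dialogue and images might be paired by time code.

```python
from dataclasses import dataclass


@dataclass
class Utterance:
    seconds: float   # time code of the spoken words in the video
    speaker: str     # hypothetical speaker label
    text: str        # transcribed dialogue


@dataclass
class Frame:
    seconds: float   # time code of the still image
    description: str # what the image shows


def align(utterances, frames, window=2.0):
    """Pair each utterance with the frames whose time code lies within
    `window` seconds of it, keeping rows in temporal order.
    The window size is an assumption made for illustration."""
    rows = []
    for u in sorted(utterances, key=lambda item: item.seconds):
        nearby = [f for f in frames if abs(f.seconds - u.seconds) <= window]
        rows.append((u, nearby))
    return rows


# Invented placeholder data, not taken from the study's transcript.
utterances = [
    Utterance(12.0, "Learner A", "move forward"),
    Utterance(30.0, "Learner B", "then turn"),
]
frames = [
    Frame(12.5, "learner steps forward"),
    Frame(31.0, "learner pivots in place"),
]
rows = align(utterances, frames)
```

Each resulting row holds one utterance and the images that occurred at about the same moment, which mirrors the side-by-side layout of the first two transcript columns.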

Step 4: read the transcript

According to Bezemer (2014), “transcripts don’t speak for themselves” (p.163), meaning that researchers must engage in reading the transcript in a way that addresses the guiding research question. To achieve this goal, we employed a process called transduction. Transduction is part of a social semiotic approach to multimodality in that it emphasizes the “remaking of meaning involving a move across modes” (Jewitt et al. 2016, p. 72). In other words, transduction represents an effort on the researcher’s part to look across the different modalities of interaction and develop insight about the research question. An important part of this process is the use of transcript conventions, such as notes in brackets, sign systems, and variation in font styles. This helps guide the reader’s attention to the most salient aspects of the transcript.

Our transduction appears in the third column of our transcript (see Table 1). The transduction process entailed constructing a single narrative that described how different modalities emerged and supported the participants’ computational thinking. This required us to treat the dialogue (first column) and related images (second column) as a single unit of analysis – meaning that they were analyzed together rather than separately. We then analyzed the transduction to identify major themes (e.g., CT was extended, embodiment of mathematical concepts). Themes were identified through consensus building. In this study, consensus was established through four cycles of independently reading the transcripts followed by group meetings in which the researchers determined the consistent patterns that were present in the data (Baxter and Jack 2008).

To aid consensus building, we drew on Grover and Pea (2013) and Wing (2006) to establish a shared understanding of the various computational thinking skills. For example, we agreed that decomposition was evident whenever the participants discussed the sub-steps associated with their overall goal. Algorithmic thinking was evident whenever the participants used precise, step-by-step calculations to solve the task at hand, whereas pattern recognition referred to the way that participants applied the knowledge gained from previous experience to the current situation. Debugging and testing involved moments in which the learners tested their computer program or fixed the program based on their test.

To aid our analysis, we employed two primary conventions. First, we incorporated a description of the participants’ gestures and body movements in the second column. Each description appears in a set of brackets (e.g., [holds up four fingers]). Second, we noted the specific computational thinking skills present during the participants’ embodied interaction. These appear in brackets in the third column (e.g., [→ algorithmic thinking]). The right-facing arrow (→) was used to indicate the skill that participants were moving toward. Incorporating these conventions into our multimodal transcript helped reveal how computational thinking emerged as an embodied phenomenon for our participants.
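A short sketch shows how these two conventions could be read back out of transcript text. It is an illustration only, not a tool used in the study: the regular expressions and function names are hypothetical, and the sample sentences around the two bracketed examples are invented.

```python
import re

# Bracketed gesture or body-movement description, e.g. [holds up four fingers].
# Bracketed text containing the arrow is excluded so skill tags are not
# mistaken for gestures.
GESTURE = re.compile(r"\[([^\]→]+)\]")

# Skill annotation introduced by the right-facing arrow, e.g. → algorithmic thinking.
SKILL = re.compile(r"→\s*([^\]\)\n]+)")


def gestures(text):
    """Return the bracketed gesture descriptions found in a transcript cell."""
    return [g.strip() for g in GESTURE.findall(text)]


def skills(text):
    """Return the computational thinking skills tagged with an arrow."""
    return [s.strip() for s in SKILL.findall(text)]


# The bracketed examples come from the conventions above; the surrounding
# words are placeholders.
image_cell = "Learner counts aloud [holds up four fingers]"
transduction_cell = "The pair works out each step in turn [→ algorithmic thinking]"

found_gestures = gestures(image_cell)
found_skills = skills(transduction_cell)
```

Keeping gesture descriptions and skill tags in distinct, machine-readable forms makes it straightforward to count how often a given skill co-occurs with a given kind of gesture, although the analysis reported here was carried out by reading the transcript rather than by automated parsing.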

Step 5: draw conclusions

Once we completed our transduction, we addressed our research question and drew conclusions about our findings as they related to the literature. Those findings and discussion of our conclusions are presented below.

Findings and discussion

Our multimodal analysis of video data revealed two major themes about the nature of young children’s computational thinking: (1) computational thinking was extended to include both the participants’ bodies and structures in the environment and (2) computational thinking was guided by participants’ embodiment of mathematical concepts. With regard to the extended nature of cognition, the participants used their bodies and resources in the environment to simulate the robot’s actions while away from the larger 3’ × 3’ grid by acting as if they, themselves, were the robot. As part of their computational thinking, the participants also embodied the mathematical concept of proportionality. The participants first established a set of unit movements for controlling the robot based on the relationship between distance, speed, and time. The participants then used multiplicative reasoning to manipulate the forward unit movement by halves and doubles. This helped them decompose the overall task and anticipate the success of the robot’s movements through pattern recognition and algorithmic thinking. Table 2 contains a summary and description of each theme; those themes are also described in detail below.

Computational thinking was extended

The first theme addresses the way that the participants’ computational thinking was extended onto their own bodies and the structures in the environment. Favela and Chemero (2016) explained how cognition is extended in that “cognition is not restricted to the boundaries of the organism” (p. 61) but rather is a matter of “what it does and how it relates to other aspects of a system” (p. 60). Simply put, extended cognition focuses on the dominant interactions between an organism and its environment. These interactions, which can occur spontaneously, are not restricted to one’s brain or even brain and body. Rather, they are part of an interacting system in which the brain, body, and the structures in the environment play an essential role in one’s reasoning (Richardson and Chemero 2014, p. 48).

In this study, the extended nature of cognition was reflected in the way that the robot and other resources in the environment became an integral aspect of the participants’ computational thinking. As shown in Fig. 2, the participants used their bodies to communicate and interact with each other, acting as if they, themselves, were the robot moving in a specific direction (Fig. 2a), making a 90° turn (2b), and pivoting in place (2c).

The participants used their bodies to simulate (a) the robot moving forward, (b) the robot’s wheels when turning, and (c) the robot pivoting when turning. They also used their hands and bodies to indicate (d) numerical precision, (e) linear distance (e.g., pulling hands apart), and (f) the revolutions of the robot’s wheels (e.g., rolling hands).

As shown in Fig. 3, the participants’ interaction with the environment also included a small map that contained their overall solution path at the larger grid (Fig. 3a). The small map helped the participants develop numerically precise movements (3b) and make a connection between the robot, themselves, and the components of the computer program that controlled the robot (3c and d). Taken together, these interactions suggest how the participants’ cognitive system extended beyond their own bodies to include the resources and structures available in the environment (Favela and Chemero 2016).

The way that participants coordinated their physical activity with the robot and structures in the environment is an important finding. Maneuvering the robot is a complex task that is highly spatial in nature; one must conceptualize the robot’s movement without immediately seeing the movement play out, then convert that movement into block-based programming. For young children, such activities are cognitively demanding due to their abstract nature (Liben 2012; Sung et al. 2017). In this study, the participants negotiated these demands by acting as if they, themselves, were the robot when interacting with each other, the small map, and the computer program. By taking on the characteristics of the robot with their own bodies, the participants engaged in what Papert (1980) termed “analogizing oneself to a computer” (p. 155). The robot became an object to think with—that is, the robot became an object that helped ground and align the participants’ knowledge and thinking with their own bodily experience (Papert 1980; see also Reinholtz et al. 2010). By embodying the characteristics of the robot, the participants were able to simulate the robot’s actions while away from the larger grid and convert that action into a computer program. This finding supports other scholars who have suggested that working with a robot can support young children’s reasoning when engaged in computational thinking and computer science (Liben 2012; Sung et al. 2017).

This finding also offers insight into the results reported by Sung et al. (2017), who found that children who used their bodies to support their thinking were more successful than those who did not when programming a robot. The authors of that study attributed their results to the way that learners shifted their perspective from themselves to the robot, which in turn helped them focus on sequencing the robot’s actions with accuracy. Our findings expand on Sung et al.’s explanation, suggesting that their participants were doing more than taking the perspective of the robot. Rather, it is likely that their participants were including the objects in the environment, such as the robot, as an integral part of their cognitive system. When those participants acted out the robot’s movements, the robot became an embodied artifact, an object-to-think-with, that helped them simulate the robot’s actions and convert those actions into a block-based program more successfully than the participants who did not.

Computational thinking was guided by embodied mathematical concepts

The second theme addresses the way that the participants’ computational thinking was guided by their embodiment of mathematical concepts. As shown earlier in Fig. 2, a number of mathematical concepts were represented through gestures; the participants used their hands and bodies to indicate moving forward one unit square (Fig. 2a), communicate angle measurement such as a right angle (2b), express numerical precision (2d), and represent distance (2e and f). The way that the participants used gestures to communicate and engage with mathematical concepts was not unexpected. Mathematics is considered integral during computational thinking (Wing 2006, 2011). Given growing evidence that mathematical thinking is embodied for young children (Alibali and Nathan 2012; Abrahamson 2017), it makes sense that the participants in this study used their hands and bodies as part of their thinking.

Of greater interest was the way that the participants drew on proportional reasoning to guide their computational thinking when working away from the larger 3’ × 3’ grid. Proportional reasoning involves making “multiplicative comparisons between quantities” (Lannin et al. 2013). It involves understanding the way two numbers relate to one another and how that relationship can be used to construct smaller or larger units that uphold the underlying relationship. In this study, the participants first engaged in proportional reasoning by creating unit movements to control the robot. They then used those unit movements to create novel movements by thinking in multiplicative quantities. The way proportional thinking emerged is explained below.

Unit movements

The participants’ proportional reasoning first became evident as they established two unit movements for controlling the robot: one for moving the robot forward and one for turning the robot. With regard to moving forward, the participants learned that the robot would move forward two unit squares in one second on the large grid if it moved at speed five (i.e., 2 units : 1 second). Next, they learned to turn the robot 90° by moving the wheels opposite of each other (i.e., one forward, one backward) for 0.4 seconds at speed four. Those unit movements played an essential role in the way the participants conceptualized and communicated about the movement of the robot while away from the larger 3’ × 3’ grid.
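The forward unit movement can be read as a fixed rate. The following is a minimal sketch of that reading, not part of the study's materials: the names `FORWARD_UNIT_SQUARES`, `FORWARD_UNIT_SECONDS`, and `seconds_for_squares` are hypothetical, and the sketch assumes that distance scales linearly with time at speed five, which is an idealization of how the robot actually behaved.

```python
from fractions import Fraction

# Forward unit movement reported in the study: 2 unit squares per 1 second at speed five.
# Exact fractions keep the ratio free of floating-point rounding.
FORWARD_UNIT_SQUARES = Fraction(2)
FORWARD_UNIT_SECONDS = Fraction(1)


def seconds_for_squares(squares):
    """Return the time in seconds needed to travel `squares` unit squares.

    Assumption: distance is proportional to time at a fixed speed setting.
    """
    return Fraction(squares) * FORWARD_UNIT_SECONDS / FORWARD_UNIT_SQUARES


if __name__ == "__main__":
    # Print the idealized time for a few whole-square distances.
    for squares in (1, 2, 4):
        print(squares, "unit squares ->", float(seconds_for_squares(squares)), "seconds")
```

Under this assumption, any distance on the grid maps to a time value through the same 2 : 1 ratio, which is the relationship the participants drew on when working away from the larger grid.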

In the moment displayed in Table 3, the pair returns from testing the robot at the larger grid. The boy recounts the last movement made, then proposes the goal of moving the robot forward two unit squares. To do this, he draws on the unit movement for moving forward: “Now we need to go forward one second at speed 5.” As he speaks, he points at the small map. This suggests he is decomposing the task while also determining the speed and time values needed to move the robot with precision (i.e., algorithmic thinking).

In the rest of the moment displayed in Table 3, the girl considers the proposed action, pointing at the computer screen while the boy explains the mathematical concept underlying his thinking. This time he recalls the unit movement for making a turn while simulating the action with his body: “We are doing less time but more speed...so change that so the [time] is 0.4.” He then raises four fingers, suggesting that his body is involved in his understanding of the relationship between time and distance.

The way that participants established and called on unit movements reveals how their computational thinking emerged as an embodied phenomenon. From an embodied perspective, cognition is a tool for action; we use the information currently available (i.e., perception) to take action (Hirose 2002; Wilson 2002). Our bodies play an important role in this process: our ability to reason in a given moment draws on previous experiences that have become internalized as simulated action (Lakoff and Johnson 2008; Nathan and Alibali 2012; Abrahamson and Lindgren 2014). This helps reduce the cognitive work required to take action in a way that helps accomplish our immediate goals (Wilson 2002).

In this study, the unit movements served as the foundation for simulating possible action that repeatedly supported the participants’ computational thinking. For example, the boy used unit movements to decompose the overall task and conceptualize an action that would move the robot two units (i.e., “go forward one second”) and then turn. The boy’s body helped him simulate those possibilities for action so that he could achieve his immediate goals. Rather than generating new speed and time values for each proposed action, he used pattern recognition to recall the values previously established for each unit movement. He used his body to simulate the robot’s movements, coordinating that movement with the small map and the computer screen. This ultimately helped him conceptualize whether the proposed action would help move the robot the desired amount.

The unit movements also helped reveal the participants’ process of computational thinking. Recent trends in the computational thinking literature suggest that computational thinking is a complex, dynamic interaction among different systems (e.g., person, technology, environment) that supports effective problem solving (Berland and Wilensky 2015; Yasar 2018). Any change in one system affects each of the others, making computational thinking a reactive process in which learners must consider and respond to what emerges over time (see also Richardson and Chemero 2014).

The reactive nature of computational thinking was evident in the participants’ interactions. At one point, the boy uses decomposition to conceptualize a possibility for action while at the same time engaging in pattern recognition and algorithmic thinking to bring accuracy to the action: “We need to go forward one second, at speed five.” The girl then reacts to what the boy suggested. She first considers the boy’s suggestion, repeating, “No, wait—we go forward one second,” while pointing at the computer. This suggests she is coordinating her brain and body with the computer program to consider the boy’s suggestion. She then immediately decomposes the next move: “...and then...turn.” Her response suggests that she has accepted the boy’s suggestion and is ready to continue. The boy reacts, engaging again in algorithmic thinking and pattern recognition to establish the appropriate time value (i.e., “change the [time] to 0.4”).

The unit movements played a critical role in supporting the participants’ reasoning during these interactions. Thinking in units helped the boy simulate the robot’s actions in one moment (i.e., moving his hands like the wheels of the robot making a turn) while also anticipating the results of that action in the next. Pattern recognition and algorithmic thinking served to bring precision to his thinking, which helped the pair decide whether the action was viable or not. The process was not linear or organized but rather reactive; the participants drew upon computational thinking skills as they were needed in the moment. This pattern supports the perspectives of Papert (1980) and others (Wing 2011; Grover and Pea 2013; Kong et al. 2019), who argued that computational thinking is more than computer programming; rather, it is a complex process of problem solving that is responsive to what emerges in the system over time.

Multiplicative reasoning

As the participants continued working on their task, they used the unit movements to create new movements through multiplicative reasoning. Multiplicative reasoning is related to proportional reasoning in that it involves thinking about quantities in terms of groups and the underlying relationship between groups (Lannin et al. 2013). It represents more formal mathematics in that it builds on children’s innate ability to think in additive terms (e.g., adding one object to another yields a group of two objects). An example of multiplicative reasoning is when children use the relationship between speed and time (e.g., 30 miles per hour) to predict the distance travelled over differing amounts of time. Multiplicative reasoning is therefore a precursor to more advanced mathematical operations such as algebraic functions and proportional reasoning.

Multiplicative reasoning: a perceptual approach

In this study, multiplicative reasoning first emerged just after the moment presented earlier in Table 3. The participants return from testing and realize that the original unit movement (i.e., 2 square units for 1 second) did not move the robot far enough to achieve their goal. As shown in Table 4, the girl conceptualizes a possible solution in which the robot needs to “go faster,” where “faster” refers to the timing of the movement (i.e., “...not [speed] faster”). She anticipates that making a small increase in time (i.e., 0.3 seconds) will result in a corresponding small increase in the distance the robot travels. Her approach relies on a perceptual strategy that develops in early childhood in which information about one length or distance is coded in a way that preserves a corresponding relation to another length or distance without the need for discrete or complex mathematical calculation (Boyer and Levine 2015). Her thinking is multiplicative in that she upholds the underlying relationship between distance and time while holding speed constant.

The boy considers the possible action, working between the small map and the computer program to simulate the robot’s movement. He first retraces the robot’s movement on the small map, forming a 90° angle with his arms: “Yea, that’s right...we already made it [past that turn].” He then acknowledges that “the [time] needs to go higher.” His body suggests that he is conceptualizing the same underlying relationship as the girl. His finger moves upward while also moving forward, indicating that he associates an increase in time with an increase in the distance the robot will travel.

Multiplicative reasoning: halves and doubles

After testing their idea at the larger grid, the participants use multiplicative halves and doubles to create new movements based on the unit movement. Table 5 presents the participants’ use of halving; it begins as the pair attempts to move the robot one square unit on the large grid. The girl recognizes this as a portion of a full forward unit movement, suggesting: “we wanna go forward for 25 hundredths [of a second].” Although the suggested time value will not achieve her goal, it reflects her multiplicative reasoning. The value 0.25 comes from the repeated reduction of the original unit movement by halves (i.e., 2 units : 1 sec → 1 unit : ½ sec → ½ unit : ¼ sec). Her body plays an integral role in her thinking; she rolls both hands forward, simulating the wheels of the robot moving the desired distance. This suggests that she is using her bodily experiences to conceptualize the results of the suggested movement.

The boy checks his notes about the original unit movement and realizes that the girl has made an error—she has halved the original unit movement too many times. He then suggests that the time should be “half a second,” which would move the robot one square unit (i.e., half of the original unit movement).

Table 6 presents the participants’ use of multiplicative doubling; it begins as the pair attempts to move the robot forward four unit squares. The boy recognizes the distance as being two forward unit movements and doubles the time value: “Because two [units] would be one second, so four would be two [seconds].” At the same time, he holds up four fingers, then points at the small map. These actions suggest that he is performing a mathematical calculation while also conceptualizing the effects of that calculation at the same time.
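The halving and doubling described in Tables 5 and 6 can be sketched as repeated scaling of the unit movement by a common factor. This is only an illustration under the assumption that distance and time scale together; the helper `scale_movement` and the variable names are hypothetical and do not come from the participants' program.

```python
from fractions import Fraction


def scale_movement(squares, seconds, factor):
    """Scale distance and time by the same factor, so their ratio is preserved."""
    factor = Fraction(factor)
    return Fraction(squares) * factor, Fraction(seconds) * factor


# Original forward unit movement: 2 unit squares in 1 second.
unit = (Fraction(2), Fraction(1))

# One halving gives the boy's corrected value: 1 square in 1/2 second.
half = scale_movement(*unit, Fraction(1, 2))
# A second halving gives the girl's suggested 0.25 seconds, which covers only 1/2 square.
quarter = scale_movement(*half, Fraction(1, 2))
# Doubling gives the movement in Table 6: 4 squares in 2 seconds.
double = scale_movement(*unit, 2)

for squares, seconds in (unit, half, quarter, double):
    # The ratio squares / seconds is the same for every derived movement.
    print(f"{squares} squares : {seconds} s (ratio {squares / seconds})")
```

Each derived movement keeps the same distance-to-time ratio as the original unit movement, which is why the girl's value reflects sound multiplicative reasoning even though it halves the movement one time too many.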

The embodied nature of the participants’ reasoning can be seen in the way they used their bodies and the environment to support more formal, symbolic forms of mathematics. This is most evident in Tables 5 and 6, where the boy repeatedly refers to his small map while using his body to simulate the robot’s actions as he multiplies and divides the original unit movement by twos. This highly coordinated activity is consistent with the findings of Reinholtz et al. (2010), who found that children come to understand proportions by first grounding the concept in their own bodies. The underlying principle, called dynamical conservation, suggests that “the learner needs to discover an action pattern...that maintains a constant property of the system.” In Reinholtz et al.’s (2010) study, children lifted two handheld devices above a table surface to discover an underlying proportional relationship (e.g., the left hand raised double the distance of the right). The bodily experience associated with discovering that action pattern later served to support more formal, symbolic mathematical calculations in the absence of the devices.

In our study, the robot may have helped to support the principle of dynamical conservation. Similar to the handheld devices in Reinholtz et al. (2010), the robot served as a body-based referent (i.e., an object-to-think-with) that helped the boy engage in a pattern of action that maintained the properties of the system. Simulating the robot’s movements with his body helped him uphold the underlying relationship between distance and time while also decomposing the task and calculating mathematically precise time values (i.e., algorithmic thinking). The way that the robot supported dynamical conservation is important. Children often struggle with multiplicative reasoning due to a lack of authentic learning activities that draw on their bodily understanding of proportion (Reinholtz et al. 2010; Abrahamson and Trninic 2015). Our findings suggest working with a robot may be one way to engage young children in a pattern of bodily action that supports more formal multiplicative reasoning.

The embodied nature of the participants' reasoning was also evident in the way they varied their approach based on the complexity of the task. They initially used a perceptual strategy, moving the robot a small amount more by increasing the time a corresponding small amount (see Table 4). Later, they manipulated the original unit movement by halves and doubles to create new movements (see Tables 5 and 6). The most likely explanation is that the participants were responding to the information in the environment in a way that efficiently and effectively met their immediate goal. Mathematics in grades 3–5 builds on children’s existing understanding of one-half as it relates to other fractions (Barth et al. 2009). Precisely halving and doubling the original unit movement, then, would have been somewhat easy because it builds on this focus. In contrast, moving the robot a small distance more would have been more difficult. It would have required the participants to first measure the desired distance, then create a fractional representation of the desired distance that could be used to calculate the proportional time value needed to move the corresponding distance. When faced with this mathematical complexity, it is likely that the participants instead chose the strategy that required less cognitive work.

The participants’ varied use of multiplicative reasoning makes sense from an embodied perspective. Embodied perspectives suggest that humans seek to reduce the cognitive work required to make decisions efficiently and effectively (see Wilson 2002; Shapiro 2019). Rather than analyze and process every small detail of the situation, humans make small adjustments to their actions based on their perception. In our study, the participants selected the strategy that best balanced their need for precision with their goal of being efficient. When the mathematical calculations required to move the robot became too complex, they instead made quick adjustments based on perception. This is consistent with other studies that found young children typically prefer visually comparing quantities over performing more complex mathematical calculations (Jeong et al. 2007; Boyer and Levine 2015).

Implications for practice

One implication of our study is that children’s computational thinking during educational robotics may be embodied. Our participants repeatedly used their bodies, the robot, and other structures in the environment to decompose the overall task, engage in algorithmic thinking, and develop unit movements that supported them in anticipating the results of their thinking. The design of our robotics activity likely supported those outcomes. Our participants were challenged to reason about the robot’s movement across two different representations of the same space — the larger 3’ × 3’ grid and the smaller map. As noted by Möhring et al. (2018), working between two representations of the same space is a cognitive challenge for young children. It requires them to conceptualize, visualize, and accurately predict how objects and movement in one space might be represented at a different scale in another space. This, in turn, naturally encourages children to engage in proportional reasoning as part of their computational thinking (see also Abrahamson and Lindgren 2014).

The use of a coordinate grid was also an important aspect of our design. Children often struggle with developing more formal, discrete mathematical understandings of proportion (Boyer and Levine 2015; Jeong et al. 2007). Working with a coordinate grid, however, can offer a referent that helps children encode their perceptual, bodily understanding of proportion with formal mathematical calculations (Abrahamson 2017; Möhring et al. 2018). This appears to be the case in our study. Our participants supported their computational and mathematical thinking by working in discrete units that corresponded with the unit squares on both the larger grid and small map. This likely helped them connect their embodied understanding of multiplicative reasoning with the symbolic manipulation of unit movements by half and double. Duijzer et al. (2017) and others (Reinholtz et al. 2010; Abrahamson and Trninic 2015) similarly found that children were better able to engage in formal mathematics when they connected their bodily experience of proportionality with square units on a grid. Future research might examine this design principle more fully, exploring how using a coordinate grid during robotics activity can help children use their bodies to conceptualize the robot’s movements and engage in a more formal understanding of proportion.

Our findings also hold implications for the study of computational thinking. Computational thinking is often assessed through scenarios in which there is ultimately a correct solution, and evidence of computational thinking is measured by how close participants come to the correct solution (e.g., Bers et al. 2014; Atmatzidou and Demetriadis 2016; Weintrop et al. 2016). Our findings suggest that it may be as important, if not more, to examine computational thinking through children’s embodied interactions. This would focus less on whether children generate a correct or incorrect solution and more on the ways that they draw on their bodies and the environment to learn to achieve their immediate goals with efficiency and accuracy. Future research might therefore focus on the ways that children’s use of gestures and other structures in the environment can reflect their ongoing development of computational thinking as an embodied process of problem solving. Studying computational thinking in this way would help address current issues with computational thinking, bringing additional insight into the cognitive aspects of computer science and how computer science skills develop over time (Brennan and Resnick 2012; Yasar 2018).

Implications for research

For scholars interested in studying the way that learning is embodied, our framework offers a strong methodological starting point for the study of learning phenomena currently of interest to our field. For example, learning through games and play is naturally embodied in that the learner determines how best to engage with the physical and/or social dynamics present in a game (Sutton-Smith 2009). Augmented and virtual reality draw on embodiment in that technology plays a critical role in coordinating learning through one’s brain and body, and blending real and virtual resources in the local environment (e.g., Gordon et al. 2019). User experience and user interface design (UX/UI) similarly assume an embodied perspective in that they focus on the way participants interact with objects in the environment and the meaning of that interaction for the participant. Our framework offers an adaptable approach to future research in these areas that focuses on learners’ embodied interactions with technology.

At the same time, there is no single way to transcribe embodied interaction. The purpose of our framework was to provide a structure for demonstrating through words and images how meaning develops during interaction with others and the environment (Mondada 2018). Scholars who draw on our framework must therefore take care to establish the temporal and spatial organization of both verbal and non-verbal interactions in a given context (Jewitt et al. 2016; Mondada 2018). This requires that scholars make clear the alignment between their phenomenon of interest, the roots of embodiment, and the “representational choices” made when transcribing and analyzing embodied interactions with technology through multimodal transcription of video data (see Bezemer and Mavers 2011, p. 194). While our framework was grounded in Gibson’s and Goodwin’s work, notions of self-organization and emergence in nested systems (see Richardson and Chemero 2014) might align better with studies of the embodied nature of workplace dynamics or in-the-moment decision making. Thus, situating one’s purpose and focus not only helps others understand the perspectives that informed the temporal and spatial organization of the data, but also helps explain the decisions made throughout the analysis (e.g., selection of episodes, images used, focus of the transduction). This clarity is critical for establishing the credibility of multimodal transcripts based on video data; it helps others “assess whether inferences drawn from the data are plausible” (Blikstad-Balas 2017, p. 515).

Limitations

Several limitations are worth noting. First, video data is dense and full of details that reveal the meaning behind one’s words and actions. While our transcript revealed some of the ways that computational thinking might be embodied, these are not necessarily all of the ways young children might embody this type of thinking. There are, by necessity, layers of detail that are lost during transcription—some modes of interaction were prioritized over others because they helped address our focus and driving question. It is important to remember, then, that the findings of this study offer insight into what is possible — the goal being to better understand the nature of a phenomenon of interest. To that end, we engaged in practices that improve the validity and trustworthiness of our findings. For example, we explained the theory and the perspectives that drove our methodological decisions, established inter-rater reliability when coding our transcript, and employed a three-column structure that presented dialogue, images, and our transduction in a temporal fashion. These practices are essential for conducting high-quality multimodal transcript analysis (Bezemer 2014; Mondada 2018).

It is also important to note that the framework presented in this study does not represent the only way to engage in multimodal analysis of embodied interaction through video data. As Mondada (2018) explained, high-quality multimodal transcription is not the result of following a set of steps; rather, it is the result of upholding specific requirements:

They must be able to accommodate a variety of resources, including unique, ad hoc, and locally situated ones, besides more conventional ones. In other words, they must be able to represent the specific temporal trajectories of a diversity of multimodal details, including talk where this is relevant, but also silent embodied action when talk is not the main resource or activity. (p. 88)

The framework we present reflects these requirements. It encourages others to attend to the locally situated aspects of embodied interactions and the modalities through which they emerge. It is not a prescription but rather a flexible, modifiable structure that can consistently support scholars in analyzing the meaning behind both verbal and non-verbal interactions from a multimodal perspective.

Conclusion

While embodied cognition is still relatively in its infancy with regard to learning (Hall and Nemirovsky 2012), there is growing sentiment that the question is no longer if learning is embodied but how (Ferrara 2014; Flood and Abrahamson 2015). This study is timely, then, in that it offers insight into how young children’s computational thinking is embodied, and how such thinking can be studied through children’s embodied interactions with technology and others. Our hope is that scholars will find both the methodological framework and the findings presented in this paper useful as our field continues to explore how embodied interaction with technology might be leveraged to enrich our work in various learning contexts.

References

Alibali, M. W., Boncoddo, R., & Hostetter, A. B. (2014). Gesture in reasoning: An embodied perspective. In The Routledge handbook of embodied cognition (pp. 168–177). Routledge.

Abrahamson, D. (2017). Embodiment and mathematical learning. The SAGE encyclopedia of out-of-school learning. New York: SAGE.

Abrahamson, D., & Lindgren, R. (2014). Embodiment and embodied design. In R.K. Sawyer (Ed.), The Cambridge handbook of the learning sciences (2nd ed.). Cambridge, MA: Cambridge University Press.

Abrahamson, D., & Trninic, D. (2015). Bringing forth mathematical concepts: Signifying sensorimotor enactment in fields of promoted action. ZDM, 47(2), 295–306. https://doi.org/10.1007/s11858-014-0620-0.

Alibali, M. W., Nathan, M. J., Wolfgram, M. S., Church, R. B., Jacobs, S. A., Johnson Martinez, C., et al. (2014). How teachers link ideas in mathematics instruction using speech and gesture: A corpus analysis. Cognition and Instruction, 32(1), 65–100. https://doi.org/10.1080/07370008.2013.858161.

Alibali, M. W., & Nathan, M. J. (2012). Embodiment in mathematics teaching and learning: Evidence from learners' and teachers' gestures. Journal of the Learning Sciences, 21(2), 247–286. https://doi.org/10.1080/10508406.2011.611446.

Atit, K., Weisberg, S. M., Newcombe, N. S., & Shipley, T. F. (2016). Learning to interpret topographic maps: Understanding layered spatial information. Cognitive Research: Principles and Implications, 1(2), 1–18. https://doi.org/10.1186/s41235-016-0002-y.

Atmatzidou, S., & Demetriadis, S. (2016). Advancing students’ computational thinking skills through educational robotics: A study on age and gender relevant differences. Robotics and Autonomous Systems, 75, 661–670. https://doi.org/10.1016/j.robot.2015.10.008.

Baker, W. D., Green, J. L., & Skukauskaite, A. (2008). Video-enabled ethnographic research: A microethnographic perspective. How to do educational ethnography, 76–114.

Barth, H., Baron, A., Spelke, E., & Carey, S. (2009). Children’s multiplicative transformations of discrete and continuous quantities. Journal of Experimental Child Psychology, 103(4), 441–454. https://doi.org/10.1016/j.jecp.2009.01.014.

Baxter, P., & Jack, S. (2008). Qualitative case study methodology: Study design and implementation for novice researchers. The qualitative report, 13(4), 544-559. https://www.nova.edu/ssss/QR/QR13-4/baxter.pdf

Berland, M., & Wilensky, U. (2015). Comparing virtual and physical robotics environments for supporting complex systems and computational thinking. Journal of Science Education and Technology, 24(5), 628–647. https://doi.org/10.1007/s10956-015-9552-x.

Bers, M. U., Flannery, L., Kazakoff, E. R., & Sullivan, A. (2014). Computational thinking and tinkering: Exploration of an early childhood robotics curriculum. Computers & Education, 72, 145–157. https://doi.org/10.1016/j.compedu.2013.10.020.

Bezemer, J. (2014). Multimodal transcription: A case study. Interactions, images and texts: A reader in multimodality, 11, 155–170.

Bezemer, J., & Mavers, D. (2011). Multimodal transcription as academic practice: A social semiotic perspective. International Journal of Social Research Methodology, 14(3), 191–206. https://doi.org/10.1080/13645579.2011.563616.

Blikstad-Balas, M. (2017). Key challenges of using video when investigating social practices in education: Contextualization, magnification, and representation. International Journal of Research & Method in Education, 40(5), 511–523. https://doi.org/10.1080/1743727X.2016.1181162.

Boyer, T. W., & Levine, S. C. (2015). Prompting children to reason proportionally: Processing discrete units as continuous amounts. Developmental Psychology, 51(5), 615. https://doi.org/10.1037/a0039010.

Brennan, K., & Resnick, M. (2012). New frameworks for studying and assessing the development of computational thinking. Paper presented in the proceedings of the 2012 annual meeting of the American Educational Research Association, Vancouver, Canada.

Calvo, P., & Gomila, T. (Eds.). (2008). Handbook of cognitive science: An embodied approach. Amsterdam: Elsevier.

Chemero, A. (2009). Radical embodied cognition. Cambridge, MA: MIT Press.

Chen, G., Shen, J., Barth-Cohen, L., Jiang, S., Huang, X., & Eltoukhy, M. (2017). Assessing elementary students’ computational thinking in everyday reasoning and robotics programming. Computers & Education, 109, 162–175. https://doi.org/10.1016/j.compedu.2017.03.001.

Dreyfus, H. L. (1996). The current relevance of Merleau-Ponty’s phenomenology of embodiment. The Electronic Journal of Analytic Philosophy, 4(4), 1–16.

Duijzer, C. A., Shayan, S., Bakker, A., Van der Schaaf, M. F., & Abrahamson, D. (2017). Touchscreen tablets: Coordinating action and perception for mathematical cognition. Frontiers in Psychology, 8, 144. https://doi.org/10.3389/fpsyg.2017.00144.

Favela, L. H., & Chemero, A. (2016). The animal-environment system. In Y. Coello & M. H. Fischer (Eds.), Foundations of embodied cognition: Perceptual and emotional embodiment (Vol. 1, pp. 59–74). New York: Routledge.

Ferrara, F. (2014). How multimodality works in mathematical activity: Young children graphing motion. International Journal of Science and Mathematics Education, 12(4), 917–939. https://doi.org/10.1007/s10763-013-9438-4.

Flood, V. J., & Abrahamson, D. (2015). Refining mathematical meanings through multimodal revoicing interactions: The case of “faster”. In Annual Meeting of the American Educational Research Association, Chicago, April (pp. 16–20).

Flood, V. J., Harrer, B. W., & Abrahamson, D. (2016). The interactional work of configuring a mathematical object in a technology-enabled embodied learning environment. Singapore: International Society of the Learning Sciences.

Gallese, V., & Lakoff, G. (2005). The brain’s concepts: The role of the sensory-motor system in conceptual knowledge. Cognitive Neuropsychology, 22(3–4), 455–479. https://doi.org/10.1080/02643290442000310.

Garcez, P. M. (1997). Microethnography. In Encyclopedia of language and education (pp. 187–196). Springer, Dordrecht.

Gibson, J. J. (1977). The theory of affordances. In R. E. Shaw & J. Bransford (Eds.), Perceiving, Acting, and Knowing. Hillsdale, NJ: Lawrence Erlbaum Associates.

Gibson, J. J. (1986). The ecological approach to visual perception. New York, NY: Taylor & Francis Group. (Original work published in 1979).

Glenberg, A. M., & Kaschak, M. P. (2002). Grounding language in action. Psychonomic Bulletin & Review, 9(3), 558–565. https://doi.org/10.3758/BF03196313.

Goffman, E. (1964). The neglected situation. American Anthropologist, 66(6), 133–136.

Goodwin, M. H. (2007). Participation and embodied action in preadolescent girls' assessment activity. Research on Language and Social Interaction, 40(4), 353–375. https://doi.org/10.1080/08351810701471344.

Gordon, C. L., Shea, T. M., Noelle, D. C., & Balasubramaniam, R. (2019). Affordance compatibility effect for word learning in virtual reality. Cognitive Science, 43(6), 1–17.

Grover, S., & Pea, R. (2013). Computational thinking in K–12: A review of the state of the field. Educational Researcher, 42(1), 38–43. https://doi.org/10.3102/0013189X12463051.

Hall, R., & Nemirovsky, R. (2012). Introduction to the special issue: Modalities of body engagement in mathematical activity and learning. Journal of the Learning Sciences, 21(2), 207–215. https://doi.org/10.1080/10508406.2011.611447.

Harlow, D. B., Dwyer, H. A., Hansen, A. K., Iveland, A. O., & Franklin, D. M. (2018). Ecological design-based research for computer science education: Affordances and effectivities for elementary school students. Cognition and Instruction, 36(3), 224–246. https://doi.org/10.1080/07370008.2018.1475390.

Hirose, N. (2002). An ecological approach to embodiment and cognition. Cognitive Systems Research, 3(3), 289–299. https://doi.org/10.1016/S1389-0417(02)00044-X.

Hostetter, A. B., & Alibali, M. W. (2008). Visible embodiment: Gestures as simulated action. Psychonomic Bulletin & Review, 15(3), 495–514. https://doi.org/10.3758/PBR.15.3.495.

Howison, M., Trninic, D., Reinholz, D., & Abrahamson, D. (2011). The Mathematical Imagery Trainer: From embodied interaction to conceptual learning. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (pp. 1989–1998).

Hutchins, E. (1995). Cognition in the wild. MIT Press.

Hutchins, E., & Nomura, S. (2011). Collaborative construction of multimodal utterances. In J. Streeck, C. Goodwin, & C. LeBaron (Eds.), Embodied interaction: Language and body in the material world (pp. 29–43). Cambridge University Press.

Jaspers, J. (2013). Interactional sociolinguistics and discourse analysis. In The Routledge handbook of discourse analysis (pp. 161–172). Routledge.

Jeong, Y., Levine, S. C., & Huttenlocher, J. (2007). The development of proportional reasoning: Effect of continuous versus discrete quantities. Journal of Cognition and Development, 8(2), 237–256. https://doi.org/10.1080/15248370701202471.

Jewitt, C., Bezemer, J., & O'Halloran, K. (2016). Introducing multimodality. Routledge.

Johnson-Glenberg, M. C., & Megowan-Romanowicz, C. (2017). Embodied science and mixed reality: How gesture and motion capture affect physics education. Cognitive Research: Principles and Implications, 2(1), 24. https://doi.org/10.1186/s41235-017-0060-9.

Kong, S. C., Abelson, H., & Lai, M. (2019). Introduction to computational thinking education. In Computational Thinking Education (pp. 1–10). Springer, Singapore.

Kopcha, T. J., McGregor, J., Shin, S., Qian, Y., Choi, J., Hill, R., et al. (2017). Developing an integrative STEM curriculum for robotics education through Educational Design Research. Journal of Formative Design in Learning, 1(1), 31–44. https://doi.org/10.1007/s41686-017-0005-1.

Kopcha, T., & Ocak, C. (2019). Embodiment of computational thinking during collaborative robotics activity. In Proceedings of Computer Supported Collaborative Learning, International Society of the Learning Sciences. https://repository.isls.org//handle/1/1604

Lakoff, G., & Johnson, M. (2008). Metaphors we live by. University of Chicago Press.

Lakoff, G., & Núñez, R. E. (2000). Where mathematics comes from: How the embodied mind brings mathematics into being. New York, NY: Basic Books.

Lan, Y. J., Fang, W. C., Hsiao, I. Y., & Chen, N. S. (2018). Real body versus 3D avatar: The effects of different embodied learning types on EFL listening comprehension. Educational Technology Research and Development, 66(3), 709–731.

Lannin, J. K., Chval, K. B., & Jones, D. (2013). Putting essential understanding of multiplication and division into practice in grades 3–5. Reston, VA: National Council of Teachers of Mathematics.

Liben, L. S. (2012). Embodiment and children’s understanding of the real and represented world. In W. F. Overton, U. Müller, & J. L. Newman (Eds.), Developmental perspectives on embodiment and consciousness (pp. 207–240). New York: Lawrence Erlbaum Press.

Malafouris, L. (2013). How things shape the mind. MIT Press.

Malinverni, L., Schaper, M. M., & Pares, N. (2019). Multimodal methodological approach for participatory design of full-body interaction learning environments. Qualitative Research, 19(1), 71–89. https://doi.org/10.1177/1468794118773299.

McNeill, D. (1992). Hand and mind: What gestures reveal about thought. University of Chicago Press.

Merleau-Ponty, M. (1962). Phenomenology of Perception. Trans. by C. Smith. London: Routledge & Kegan Paul.

Milkowski, M. (2013). Explaining the computational mind. Cambridge, MA: MIT Press.

Möhring, W., Frick, A., & Newcombe, N. S. (2018). Spatial scaling, proportional thinking, and numerical understanding in 5- to 7-year-old children. Cognitive Development, 45, 57–67. https://doi.org/10.1016/j.cogdev.2017.12.001.

Mondada, L. (2018). Multiple temporalities of language and body in interaction: challenges for transcribing multimodality. Research on Language and Social Interaction, 51(1), 85–106. https://doi.org/10.1080/08351813.2018.1413878.

Nathan, M. J. (2008). An embodied cognition perspective on symbols, grounding, and instructional gesture. In M. DeVega, A. M. Glenberg, & A. C. Graesser (Eds.), Symbols and embodiment: Debates on meaning and cognition (pp. 375–396). Oxford, England: Oxford University Press.

Nathan, M. J., & Walkington, C. (2017). Grounded and embodied mathematical cognition: Promoting mathematical insight and proof using action and language. Cognitive Research: Principles and Implications, 2(1), 9. https://doi.org/10.1186/s41235-016-0040-5.

National Research Council. (2010). Report of a workshop on the scope and nature of computational thinking. National Academies Press.

National Science & Technology Council. (2018). Charting a course for success: America’s strategy for STEM education. https://www.whitehouse.gov/wp-content/uploads/2018/12/STEM-Education-Strategic-Plan-2018.pdf

Nemirovsky, R., & Ferrara, F. (2009). Mathematical imagination and embodied cognition. Educational Studies in Mathematics, 70, 159–174. https://doi.org/10.1007/s10649-008-9150-4.

Newell, A., & Simon, H. A. (1972). Human problem solving. Englewood Cliffs, NJ: Prentice-Hall.

Paivio, A. (1986). Mental representations: A dual coding approach. New York: Oxford University Press.

Papert, S. (1980). Mindstorms. New York: Basic Books.

Reinholz, D., Trninic, D., Howison, M., & Abrahamson, D. (2010). It's not easy being green: Embodied artifacts and the guided emergence of mathematical meaning. In Proceedings of the thirty-second annual meeting of the North-American chapter of the international group for the psychology of mathematics education (PME-NA 32) (Vol. 6, pp. 1488–1496). Columbus, OH: PME-NA.

Richardson, M. J., & Chemero, A. (2014). Complex dynamical systems and embodiment. In The Routledge handbook of embodied cognition (pp. 57–68). Routledge.

Rietveld, E., & Kiverstein, J. (2014). A rich landscape of affordances. Ecological Psychology, 26(4), 325–352. https://doi.org/10.1080/10407413.2014.958035.

Richey, R. C., Klein, J. D., & Tracey, M. W. (2010). The instructional design knowledge base: Theory, research, and practice. Routledge.

Román-González, M., Pérez-González, J. C., Moreno-León, J., & Robles, G. (2018). Can computational talent be detected? Predictive validity of the Computational Thinking Test. International Journal of Child-Computer Interaction, 18, 47–58. https://doi.org/10.1016/j.ijcci.2018.06.004.

Romdenh-Romluc, K. (2010). Routledge philosophy guidebook to Merleau-Ponty and phenomenology of perception. Routledge.

Rowlands, M. (2010). The new science of the mind: From extended mind to embodied phenomenology. MIT Press.

Shapiro, L. (2019). Embodied cognition. Routledge.

Streeck, J., Goodwin, C., & LeBaron, C. (2011). Embodied interaction in the material world: An introduction. In J. Streeck, C. Goodwin, & C. LeBaron (Eds.), Embodied interaction: Language and body in the material world (pp. 1–26). Cambridge University Press.

Sung, W., Ahn, J. H., Kai, S. M., & Black, J. (2017, March). Effective planning strategy in robotics education: An embodied approach. In Society for Information Technology & Teacher Education International Conference (pp. 1065–1071). Association for the Advancement of Computing in Education (AACE). https://www.learntechlib.org/primary/p/177387/

Sutton-Smith, B. (2009). The ambiguity of play. Harvard University Press.

Thelen, E., & Smith, L. B. (1994). A dynamic systems approach to the development of perception and action. Cambridge, MA: MIT Press.

Weintrop, D., Beheshti, E., Horn, M., Orton, K., Jona, K., Trouille, L., et al. (2016). Defining computational thinking for mathematics and science classrooms. Journal of Science Education and Technology, 25(1), 127–147. https://doi.org/10.1007/s10956-015-9581-5.

Weisberg, S. M., & Newcombe, N. S. (2017). Embodied cognition and STEM learning: Overview of a topical collection in CR:PI. Cognitive Research: Principles and Implications, 2(38). https://doi.org/10.1186/s41235-017-0071-6

Williams, R. F. (2012). Image schemas in clock-reading: Latent errors and emerging expertise. Journal of the Learning Sciences, 21(2), 216–246. https://doi.org/10.1080/10508406.2011.553259.

Wilson, M. (2002). Six views of embodied cognition. Psychonomic Bulletin & Review, 9(4), 625–636. https://doi.org/10.3758/BF03196322.

Wing, J. (2006). Computational thinking. Communications of the ACM, 49(3), 33–35.

Wing, J. (2011). Research notebook: Computational thinking—What and why? The Link Magazine, Spring. Carnegie Mellon University, Pittsburgh. https://link.cs.cmu.edu/article.php?a=600

Yasar, O. (2018). Computational thinking, redefined. In Society for Information Technology & Teacher Education International Conference (pp. 72–80). Association for the Advancement of Computing in Education (AACE). https://www.learntechlib.org/primary/p/182505/

Yuen, T. T., Stone, J., Davis, D., Gomez, A., Guillen, A., Price Tiger, E., & Boecking, M. (2015). A model of how children construct knowledge and understanding of engineering design within robotics focused contexts. International Journal of Research Studies in Educational Technology, 5(1). https://www.learntechlib.org/p/152295/

Funding

This study received no funding.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Ethical approval

This study was approved by our institution’s Institutional Review Board (IRB).

About this article

Cite this article

Kopcha, T.J., Ocak, C. & Qian, Y. Analyzing children’s computational thinking through embodied interaction with technology: a multimodal perspective. Education Tech Research Dev 69, 1987–2012 (2021). https://doi.org/10.1007/s11423-020-09832-y

DOI: https://doi.org/10.1007/s11423-020-09832-y