Abstract

Collaborative filtering (CF) is one of the dominant techniques used in modern recommender systems. Traditional CF-based methods suffer from issues of data sparsity and cold start. Therefore, side information has been widely utilized by researchers to address these problems. Most side information is typically heterogeneous and in the form of the graph structure. In this work, we propose a deep end-to-end recommendation framework named GSIRec to make full use of the graph side information. Specifically, GSIRec derives a multi-task learning approach that introduces a side information task to assist the recommendation task. The key idea is that we design a delicate knowledge assistance module to be the bridge between tasks, which captures useful knowledge to complement each task. Also, we utilize a graph attention method to exploit the topological structure of side information to enhance recommendation. To show the wide application and flexibility of our framework, we integrate side information from two aspects: social networks (for users) and knowledge graphs (for items). We apply GSIRec in two recommendation scenarios: social-aware recommendation and knowledge-aware recommendation. To evaluate the effectiveness of our framework, we conduct extensive experiments with four real-world public datasets. The results reveal that GSIRec consistently outperforms the state-of-the-art methods on the rating prediction task and top-K recommendation task. Moreover, GSIRec can alleviate data sparsity and cold start issues to some extent.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

In the era of information explosion, recommender systems have been playing an indispensable role in meeting user preferences by recommending relevant items [22, 46, 51, 69, 74]. Collaborative filtering (CF) [21] is one of the most widely used techniques, which usually projects users and items in a low-dimensional vector space for predicting users’ preferences for items. Since traditional CF-based methods rely heavily on user-item interaction data to learn ID-based embeddings, they are often impeded by data sparsity and cold start problems. To mitigate these drawbacks, side information is widely used by a large body of research. Users and items are always associated with various side information. In this work, we mainly consider two typical kinds of side information with graph structure: social networks (for users) and knowledge graphs (for items). We focus on two recommendation scenarios: social-aware recommendation and knowledge-aware recommendation.

Social-aware recommendation

Social networks contain social relationships (e.g. friendship or trust) between users. According to the social influence theory [1, 3, 31], users’ preferences are influenced by their neighbors in the social network. For example, in Figure 1(left), shopping may become User A’s interest, since most of her friends are shopping fans. Social-aware recommendation aims at harnessing social networks to improve the performance of recommendation. Previous research has been attempted to use matrix factorization [68, 73, 78], regularization [39], and trust propagation [40] to learn the preference of users. As deep learning has grown in prominence, researchers merged social networks into recommendation by using different deep learning technologies including multi-layer perceptron [14], autoencoder [43], graph neural networks [15, 67].

Knowledge-aware recommendation

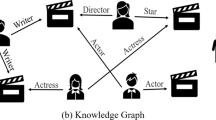

Knowledge graphs provide rich facts and relations about items. Exploiting the semantic information from knowledge graphs is particularly helpful to understand users and items. For instance, in Figure 1(right), User A is fond of music, and one of her favorite songs is linked with its namesake Song A in the knowledge graph. The singer Person A of Song A is also the lyricist of Song B. In this case, it would make sense that User A would like to listen to Song B. We also find Person A is the director and actor of Movie A and Movie B, and hence User A may love these two movies due to her preference for movies. Knowledge-aware recommendation integrates knowledge graphs into recommender systems and has attracted considerable interest. Some researchers derive different meta-paths [72] or meta-graphs [77] to mine semantic information from knowledge graphs. Another line of work [62, 76] uses knowledge graph embedding methods to integrate knowledge graphs into recommendation. Recently, researchers use propagation-based methods [55, 65] to consider semantic information and connectivity information in the whole knowledge graph.

Our approach

Nevertheless, we argue that most methods lack techniques to take full advantage of the graph side information. Most methods utilize the same user (item) representation in both recommendation and side information domains, which is likely to restrict user (item) representation learning in their respective domain and may further cause a negative knowledge transfer from the side information. To address the aforementioned shortcoming, for each user, we employ two kinds of embedding vectors to encode representations of users in the recommendation task and social network embedding task in social-aware recommendation. Similar to the user, item representations are also mapped into two low-dimensional vector spaces in the recommendation task and knowledge graph embedding task in knowledge-aware recommendation.

In this paper, we propose our multi-task learning framework GSIRec that combines the recommendation task with a side information task to enhance the recommendation performance. Specifically, GSIRec introduces the social network embedding task and knowledge graph embedding task for social-aware recommendation and knowledge-aware recommendation, respectively. The users are connected in the social network and the items they love may appear as corresponding entities in the knowledge graph, hence side information tasks have great relevance to the recommendation task. To transfer the knowledge between the recommendation task and side information tasks, we design a special knowledge assistance module as the bridge between these tasks. Such a module captures useful knowledge from latent representations in related tasks and integrates the knowledge to assist each task in improving its performance. There are two advantages of utilizing multi-task learning: (i) leveraging the training signals of related tasks can enhance performance for each task; and (ii) learning related tasks at a time reduces the risk of overfitting compared with single-task learning [45]. To further exploit the topological structure of the side information, we use graph attention network in the recommendation task. We design an attention mechanism to consider node information of the user (item) neighbors in graphs. The recommendation task and side information tasks are optimized alternatively, which enables the whole framework to be more flexible.

Contribution and organization

In summary, our contributions in this paper are as follows:

-

We proposed a deep end-to-end framework GSIRec for graph side information recommendation.

-

We provide a knowledge assistance module that can fully transfer knowledge and capture the interplay between related tasks to assist each task in enhancing its performance.

-

Extensive experiments demonstrate that GSIRec consistently outperforms the state-of-the-art recommendation solutions in different recommendation scenarios. Also, GSIRec shows advantages in mitigating the data sparsity and cold start issues.

The remainder of this paper is organized as follows. Our framework GSIRec is described in Section 2. In Section 3, we conduct experiments on four real-world datasets and present the experimental results. In Section 4, we review work related to our method GSIRec, followed by conclusions and future work in Section 5.

2 Methodology

In this section, we firstly introduce key mathematical notations and formulate the problems, then describe our GSIRec framework and define each component in more depth.

2.1 Notations and problem formulation

Table 1 depicts key notations in this paper. In a recommendation scenario, we suppose there are N users U = \(\left \{ u_{1},u_{2},\ldots ,u_{N} \right \}\) and M items V = \(\left \{ v_{1},v_{2},\ldots ,v_{M} \right \}\). We define R \(\in \mathbb {R}^{N \times M}\) as the user-item interaction matrix whose element rio indicates the rating score or implicit feedback (0 or 1) from ui to vo. In addition, we consider social networks and knowledge graphs as two kinds of side information. We define the matrix S \(\in \mathbb {R}^{N \times N}\) as the social network among users U, where its element sij = 1 if ui trusts uj and zero otherwise. As for the knowledge graph, it consists of real-world entities \(\mathcal {E}\) = \(\left \{ e_{1},e_{2},\ldots ,e_{W} \right \}\) and relations \(\mathcal {R}\) = \(\left \{ r_{1},r_{2},\ldots ,r_{C} \right \}\) among these entities. Since an item v ∈ V is likely to appear as an entity \(e \in \mathcal {E}\) in the knowledge graph G, entity set \(\mathcal {E}\) consists of items \(V (V \subseteq \mathcal {E})\) and non-items \(\mathcal {E}\setminus V\). Formally, we define the knowledge graph as \(\textbf {G} = \left \{ \left (e_{o}, r_{h}, e_{p}\right ) | e_{o}, e_{p} \in \mathcal {E}, \ r_{h} \in \mathcal {R} \right \}\) whose the i-th element \(\left (e_{o}, r_{h}, e_{p}\right )_{i}\) is a triple indicating that there is a relation rh between entity eo and entity ep. For user ui, \(\textbf {u}_{i}^{r} \in \mathbb {R}^{d}\) and \(\textbf {u}_{i}^{s} \in \mathbb {R}^{d}\) denote user embeddings corresponding to the recommendation domain and social domain respectively, where d is the embedding size. For item vo (corresponding to entity eo), we utilize \(\textbf {e}_{o}^{r} \in \mathbb {R}^{d}\) and \(\textbf {e}_{o}^{k} \in \mathbb {R}^{d}\) to denote its embeddings corresponding to the recommendation domain and knowledge graph domain respectively. In addition, we use \(\textbf {r}_{h} \in \mathbb {R}^{d}\) to denote embedding for relation rh.

The goal of GSIRec is to use the user-item interaction matrix R and the side information to predict user’s personalized interests in items. Specifically, for social-aware recommendation, given the user-item interaction information R and social network S, GSIRec aims to learn a prediction function \(\hat {r}_{u v} = \mathcal {F}(u, v | {{\varTheta }}, \mathbf {R}, \mathbf {S})\), where \(\hat {r}_{u v}\) is the predicted rating or preference probability from user u to item v, and Θ is the framework parameters of function \(\mathcal {F}\). Similarly, for knowledge-aware recommendation, given the user-item interaction information R and knowledge graphs G, GSIRec aims to learn a prediction function \(\hat {r}_{u v} = \mathcal {F}(u, v | {{\varTheta }}, \textbf {R}, \textbf {G})\).

Figure 2 shows the overview of the architecture of GSIRec in two recommendation scenarios: social-aware recommendation (left) and knowledge-aware recommendation (right). The whole framework consists of two tasks: recommendation task and side information task (social network embedding or knowledge graph embedding). Two tasks are bridged by the knowledge assistance modules. In what follows, we introduce the knowledge assistance module, then apply GSIRec in social-aware recommendation and knowledge-aware recommendation, finally discuss the learning algorithm for GSIRec.

2.2 Knowledge assistance module

To obtain communication and interaction between recommendation task and social network embedding task (or recommendation task and knowledge graph embedding task), we design a delicate knowledge assistance module, as shown in Figure 2 (the pink blocks). The design of the knowledge assistance module is motivated by feature sharing in multi-task learning [24, 70]. Specifically, the knowledge assistance module takes two user embeddings \(\mathbf {u}_{i}^{r}\) and \(\mathbf {u}_{i}^{s}\) of the user ui as inputs (or takes the two entity embeddings \(\mathbf {e}_{o}^{r}\) and \(\mathbf {e}_{o}^{k}\) of an item vo as inputs), enables them to capture useful knowledge from each other, and update themselves. Note that we set the knowledge assistance modules in the low-level layers in both recommendation task and side information task, because sharing of high-level feature layers may result in negative transfer problems in multi-task learning according to recent research [36, 64, 70]. We devise two kinds of knowledge assistance modules: attention-based (left) and gate-based (right), which are shown in Figure 3. Without loss of generality, we denote x1, x2 as two inputs and y1, y2 as two outputs of a knowledge assistance module:

where \(\mathcal {K}\) is the knowledge assistance module and we use a suffix \(\left \langle x_{1}\right \rangle \) or \(\left \langle x_{2}\right \rangle \) to obtain one of its outputs. We will elaborate on these two kinds of knowledge assistance modules in the following.

2.2.1 Attention-based knowledge assistance module

Inspired by [54], we first utilize a key matrix \(M_{k} \in \mathbb {R}^{d \times d}\) and a value matrix \(M_{v} \in \mathbb {R}^{d \times d}\) to map input vectors x1, x2, and then match them as follows:

where ⊙ is the element-wise product of vectors.

Then we use an attention mechanism to obain the final output representations y1 and y2:

where α. and β. are the attention weights which characterize the importance of corresponding to the vectors. Specifically, α. and β. are defined as:

where \(w_{.} \in \mathbb {R}^{d}\) is the parameter of the attention mechanisms and dot denotes the inner product of two vectors.

2.2.2 Gate-based knowledge assistance module

First, we utilize two trainable weight matrices \(M_{1} \in \mathbb {R}^{d \times d}\), \(M_{2} \in \mathbb {R}^{d \times d}\) to map input vectors x1, x2 into new spaces:

Then we perform concatenation operations to get two fusion vectors F1 and F2 as follows:

Next F1 and F2 pass through two non-linear transformations and get two gated structures: input gate Ii and transform gate Ti:

where \(w_{..} \in \mathbb {R}^{2d \times d}, b_{..} \in \mathbb {R}^{d}\) are the weight and bias parameters, and σ denotes the sigmoid function \(\sigma \left (x\right ) = 1/\left (1 + e^{-x} \right )\). With these gates structures, we will get the final outputs y1 and y2:

2.2.3 Comparing two knowledge assistance modules

We compare our two knowledge assistance modules from four aspects, including expressive power, output space, the number of parameters, and computational complexity.

Expressive power

For the attention-based knowledge assistance module, we explicitly utilize the attention mechanisms to calculate the transfer weights. Specifically, we utilize learnable matrices Mk and Mv to make enough interaction and communication between input vectors, and then utilize the attention mechanisms to calculate the transfer weights α⋅, β⋅ between feature vectors. As for the gate-based knowledge assistance module, we introduce gated structures I⋅,T⋅ to control the error flow from related tasks and reduce the risk of negative transfer in multi-task learning to a certain extent. Both knowledge assistance modules utilize learnable weight matrices for more fine-grained knowledge transfer between tasks. In the experiments section, we will further evaluate the effectiveness of these two knowledge assistance modules.

Output space

Both gate-based knowledge assistance module and attention-based knowledge assistance module take two embeddings as inputs (i.e., one of them belongs to the recommendation task, and the other belongs to the side information task), enables these two embeddings to capture useful knowledge from each other and update themselves. After the update, both gate-based knowledge assistance module and attention-based knowledge assistance module output the updated embeddings. The updated embeddings will send back the corresponding task. Through knowledge assistance modules, representations from related tasks can complement each other, assisting related tasks in improving generalization.

Space complexity

For an attention-based knowledge assistance module, we introduce two learnable matrices \(M_{k} \in \mathbb {R}^{d\times d}\), \(M_{v} \in \mathbb {R}^{d\times d}\), and attention weights \(w_{1}\in \mathbb {R}^{d}\), \(w_{2}\in \mathbb {R}^{d}\). Therefore, the number of parameters of an attention-based knowledge assistance module is \(\theta _{a}=\left \{ M_{k},M_{v},w_{1},w_{2} \right \}\). For a gate-based knowledge assistance module, we introduce two learnable matrices \(M_{1} \in \mathbb {R}^{d\times d}\) and \(M_{2} \in \mathbb {R}^{d\times d}\), and gated structure weights \(w_{1i} \in \mathbb {R}^{2d\times d}, w_{1t}\in \mathbb {R}^{2d\times d}, w_{2i}\in \mathbb {R}^{2d\times d}, w_{2t}\in \mathbb {R}^{2d\times d}, b_{1i}\in \mathbb {R}^{d}, b_{1t}\in \mathbb {R}^{d}, b_{2}i\in \mathbb {R}^{d}, b_{2}t\in \mathbb {R}^{d}\). Therefore, the number of parameters of a gate-based knowledge assistance module is \(\theta _{g}=\left \{ M_{1},M_{2},w_{1i},w_{1t},w_{2i},w_{2t},b_{1i},b_{1t},b_{2i},b_{2t} \right \}\). Compare the number of parameters of two knowledge assistance modules, gate-based knowledge assistance module introduces more parameters.

Time complexity

For an attention-based knowledge assistance module, it takes two embeddings \(x_{1}\in \mathbb {R}^{d},x_{2}\in \mathbb {R}^{d}\) as inputs, the time consumption in mapping and matching process is O(8 × d2 + 4 × d). The time consumption in the attention mechanism is O(4 × d). Therefore, the time complexity of a attention-based knowledge assistance module is O(8 × d2 + 8 × d) ⇒ O(d2). For a gate-based knowledge assistance module, it takes two embeddings \(x_{1}\in \mathbb {R}^{d},x_{2}\in \mathbb {R}^{d}\) as inputs, the time consumption in mapping process is O(2 × d2). The time consumption in gated structures is O(8 × d2 + 4 × d). Therefore, the space complexity of a gate-based knowledge assistance module is O(10 × d2 + 4 × d) ⇒ O(d2). We can find that the two knowledge assistance modules have similar time complexity.

2.2.4 Advantage

Recently, many recommenders leverage multi-task learning and design different transfer units to enhance recommendation performance, such as the cross-connection unit [24] and the cross-compress unit [64]. These transfer units and our method GSIRec follow the parameter sharing paradigm for multi-task Learning [45]. However, our methodology is different from theirs. Compared with the above transfer units, GSIRec uses attention mechanisms and gated structures to design completely different but more effective transfer units for knowledge transfer between related tasks.

The advantages of the knowledge assistance module are two-fold: (i) attention mechanism and gated structures in the knowledge assistance module can capture and integrate useful information to update representations in a more fine-grained way; and (ii) enough interaction and communication between embedding vectors enable related tasks to complement each other and enhance their performance instead of improving one task at the expense of poor performance on other tasks. We will further compare our knowledge assistance modules with different transfer units in the experiments.

2.3 Social-aware recommendation

In social-aware recommendation, we introduce a social network embedding task to assist the recommendation task.

2.3.1 Recommendation task

Given user ui and item vo, such task predicts rating or preference probability \(\hat {r}_{io}\) from user ui to item vo. We will learn two user embeddings from different perspectives. We first utilize L knowledge assistance modules to obtain social enhanced user embedding \(\mathbf {u}_{i}^{r(s)}\) as follows:

In addition, we consider neighbors of user ui to further exploit the topological structure of the social network. A common way to aggregate neighbor information is the mean pooling operation. However, such an operation ignores different weights of neighbors. In our paper, we utilize the attention mechanism to study the importance of different neighbors in the graph for better neighbor aggregation. Specifically, we aggregate the user ui’s neighbor information by linear combination as follows:

where nei(ui) is the ui’s neighbor set. att is function to study the importance of different neighbors for the given user, which is defined as follows:

In social network, the size of nei(⋅) is different for different users. Following the approaches in [19, 65], we sample a fixed-size set neis(u) for each user’s neighbors to keep training the whole framework more efficient, where |neis(u)| = ns. Such sampling strategy can facilitate computation on large graphs. In this way, we compute the neighbor enhanced embedding \(\mathbf {u}_{i}^{r(n)}\) for user ui by replacing nei(⋅) with neis(⋅):

where \(w \in \mathbb {R}^{d \times d}\) is the weight and \(\mathcal {A}\) is the activation function.

Through a single neighbor aggregation operation, neighbor enhanced embedding \(\mathbf {u}_{i}^{r(n)}\) is dependent on itself as well as the direct social neighbors. We can further extend neighbor aggregation operation to multi-hop neighbors. More formally, to obtain J-order neighbor information (J is a pre-defined value), neighbor enhanced embedding \(\mathbf {u}_{i(J)}^{r(n)}\) is defined as:

Then two user embeddings \(\mathbf {u}_{i}^{r(s)}\) and \(\mathbf {u}_{i(J)}^{r(n)}\) are fused by an attention mechanism as follows:

where α and β are defined as:

Finally, we utilize a multi-layer perceptron (MLP) to output the final prediction \(\hat {r}_{io}\) as follows:

where \({\mathscr{M}}(x)\) = \(\mathcal {A}\left (w x + b\right )\) is the non-linear transformation for vector x (with weight matrix w and bias vector b), and H is the number of layers in the MLP. If the user-item interaction matrix R is constructed as an implicit feedback matrix, \(\hat {r}_{io}\) will be further operated by the sigmoid function to obtain the preference probability from user ui to item vo.

2.3.2 Social network embedding task

Such a task is used for link prediction which predicts the trust relationships between users. We encode the social relations into low-dimensional representations by using an embedding-based method. For a relationship between user ui and user uj, we obtain their recommendation enhanced social embedding \(\mathbf {u}_{i}^{s(r)}\) and \(\mathbf {u}_{j}^{s(r)}\) through knowledge assistance modules:

Finally, leveraging two MLPs, the probability of the trust relationship \(\hat {s}_{ij}\) between user ui and user uj is calculeted as follows:

where S is the similarity function between two real feature vectors. In this paper, we use the cosine function \(S(a, b) = \frac {dot(a, b)}{\left \| a \right \| \left \| b \right \|}\) as our similarity function. One can design other similarity metrics here such as normalized inner product S(a,b) = σ(a⊤b).

2.4 Knowledge-aware recommendation

In knowledge-aware recommendation, we design a knowledge graph embedding task to assist the recommendation task.

2.4.1 Recommendation task

Similar to the recommendation task in social-aware recommendation, such task predicts rating or preference probability \(\hat {r}_{io}\) from user ui to item vo. We first learn two item embeddings: knowledge enhanced embedding \(\mathbf {e}^{r(k)}_{o}\) and neighbor enhanced embedding \(\mathbf {e}^{r(n)}_{o(J)}\) as follows:

where neis(vo) is the vo’s sampled fixed-size neighbor set in the knowledge graph, where |neis(vo)| = nk. att is the function to study the importance of different neighbors for the given item vo, which is defined as follows:

Then we utilize an attention mechanism to weigh two item embeddings \(\mathbf {e}^{r(k)}_{o}\) and \(\mathbf {e}^{r(n)}_{o(J)}\) as follows:

where α and β are defined as:

Finally, we utilize a MLP to predict final ratings \(\hat {r}_{io}\) from user ui to item vo as follows:

Note that if we focus on users’ implicit feedback to items, \(\hat {r}_{io}\) will be further operated by the sigmoid function to obtain the preference probability from user ui to item vo.

2.4.2 Knowledge graph embedding task

Knowledge graph embedding methods can be categorized into two groups: translational distance models and semantic matching models [57]. We utilize a deep semantic matching method for the triple classification task which predicts whether a triple is correct or not. Note that, one can employ or redesign other knowledge graph embedding methods, such as SLM [49] and SME [4]. For a triple \(\left (e_{o}, r_{w}, e_{p}\right )_{i}\), we first utilize knowledge assistance modules to obtain recommendation enhanced entity embedding \(\mathbf {e}_{o}^{k(r)}\) and \(\mathbf {e}_{p}^{k(r)}\):

Then we integrate relation rw in our method and use MLPs to predicte probability \(\hat {sco}_{i}\):

We consider \(\hat {sco}_{i}\) as the score of the triple \(\left (e_{o}, r_{w}, e_{p}\right )_{i}\) which indicates the plausibility of the fact in the knowledge graph.

2.5 Optimization

To estimate model parameters, we have two following objective functions for GSIRec according to kinds of side information:

\({\mathscr{L}}_{OSS}^{S}\) and \({\mathscr{L}}_{OSS}^{K}\) are for social-aware recommendation and knowledge-aware recommendation, respectively. \({\mathscr{L}}_{REC}\) measures the loss in the recommendation task. Depending on the ways of constructing of the user-item interaction matrix R (rating score or implicit feedback), we use different loss functions for \({\mathscr{L}}_{REC}\), as follows:

The first one is for the rating prediction task, where \(\mathcal {O}_{r}\) denotes the observed values in R. The second is for recommendation with implicit feedback. We denotes \(\mathcal {I}_{i}\) is the item set which user i has interacted with, and \(\mathcal {D}_{r}\) can be defined as:

\({\mathscr{L}}_{SNE}\) and \({\mathscr{L}}_{KGE}\) measure the loss in the social network embedding task and knowledge graph embedding task, respectively:

For \({\mathscr{L}}_{SNE}\), it uses binary cross-entropy function to calculate the loss, where \(\mathcal {D}_{s}\) is defined as:

As for \({\mathscr{L}}_{KGE}\), we let observed triples in knowledge graph G tend to have higher scores and decreasing the scores for all unobserved triples.

The last term in loss function is the L2 regularization term to control the model complexity and avoid over-fitting. γs, γk and λ are the balancing parameters in the objective function.Footnote 1 We utilize an alternating strategy to optimize our framework, which enables the whole framework to be more flexible. The overall logic of GSIRec is summarized in Algorithm 1.

3 Experiment

In this section, we conduct extensive experiments to evaluate our GSIRec framework through addressing the following research questions:

-

(Q1) Compared with the state-of-the-art baselines, how does our framework GSIRec perform?

-

(Q2) Can GSIRec effectively alleviate data sparsity and cold start issues?

-

(Q3) Do the settings of key hyper-parameters and designs affect the performance of GSIRec?

-

(Q4) Can the recommendation task be helpful for the side information task in GSIRec?

In what follows, we first introduce the experimental settings, then answer the above four questions.

3.1 Experiment setup

In this subsection, we introduce the datasets, baselines, the choice of hyper-parameters, and evaluation protocols.

3.1.1 Datasets

Four datasets Ciao,Footnote 2 Epinions,Footnote 3 DBbook,Footnote 4 MovieLensFootnote 5 are used in our experiments. Ciao and Epinions are for social-aware recommendation, and DBbook and MovieLens are used for knowledge-aware recommendation.

-

Ciao dataset was obtained from a real-world social media website. The dataset contains both users’ explicit ratings (ranging from 1 to 5) on items and users’ social relations.

-

Epinions dataset was obtained from a popular review website. The dataset contains rating data (ranging from 1 to 5) and user-user trust relationships.

-

DBbook is a public dataset in book recommendations, which contains users’ explicit ratings (ranging from 1 to 5) on books. The knowledge graph for DBbook is released by [5].

-

MovieLens dataset is a widely used movie dataset and it contains users’ explicit feedbacks (ranging from 1 to 5). The knowledge graph MovieLens is released by [64].

In the experiment, we evaluate our method GSIRec in both rating prediction task and top-K recommendation task. Similar to the related work [61, 64], for top-K recommendation, we transform the explicit rating as implicit feedback indicating that the user has rated the item, and generate a negative sample set for each user, which is of equal size with the rated ones. The statistics of the four datasets are summarized in Table 2.

3.1.2 Baselines

We apply GSIRec in social-aware recommendation and knowledge-aware recommendation. Therefore, we compare GSIRec with the following four classes of baselines: (i) pure collaborative filtering method GCMC [2]; (ii) feature enhanced methods including NFM [20] and DeepFM [16]; (iii) social enhanced methods which take users’ social influences into consideration including DeepSoR [14], GraphRec [15] and DiffNet [67]; (iv) knowledge graph enhanced methods which take knowledge graph into consideration including KGCN [65], MKR [64] and KGAT [55]. The characteristics of the comparison methods are listed as follows:

-

GCMC is a graph-based recommendation framework, which adopts a graph auto-encoder in the user-item bipartite graph to learn user and item embeddings for rating prediction. In our experiment, we treat GCMC as a plain collaborative filtering method that only leverages user-item interactions for recommendation.

-

NFM is a factorization model that improves FM [44] by using a neural network to capture high-order feature interaction. Here we utilize all the side information as additional input features. Specifically, we concatenate the user embedding, item embedding, and the average embeddings of user neighbors in the social network (or average embeddings of item neighbors in the knowledge graph) as the inputs.

-

DeepFM is a general feature enhanced factorization model, which combines factorization machines and deep neural networks for recommendation. We provide the same inputs as NFM for DeepFM.Footnote 6

-

DeepSoR combines a neural network to learn latent preferences of users from social networks with PMF [42].

-

GraphRec is a state-of-the-art social recommender, which considers the user-item graph and user-user graph for social recommendation.

-

DiffNet is a state-of-the-art method for social recommendation, which utilizes graph convolutional networks and designs layer-wise influence diffusion structure for users to learn user embeddings.

-

KGCN is a state-of-the-art model for knowledge-aware recommendation, which uses graph attention convolutional methods to capture semantic information in the knowledge graph.

-

MKR is a state-of-the-art multi-task learning framework, which employs knowledge graph embedding tasks to enhance collaborative filtering tasks.

-

KGAT is a state-of-the-art propagation-based model, which utilizes graph attention networks for knowledge-aware recommendation. Compared with KGCN, KGAT combines the user-item interaction graph and knowledge graph as a unified graph for feature learning.

-

GSIRec(s)-a is our model with attention-based knowledge assistance modules for social-aware recommendation.

-

GSIRec(s)-g is our model with gate-based knowledge assistance modules for social-aware recommendation.

-

GSIRec(k)-a is our model with attention-based knowledge assistance modules for knowledge-aware recommendation.

-

GSIRec(k)-g is our model with gate-based knowledge assistance modules for knowledge-aware recommendation.

3.1.3 Parameter settings

We implemented GSIRec framework with Pytorch which is a Python library for deep learning. For each dataset, we randomly split it into training, validation, and test sets following 6 : 2 : 2. We repeated each experiment 5 times and reported the average performance. The model parameters are first initialized according to the Gaussian distribution. We utilized ReLU as the activation function and Adam [28] for optimization with an initial learning rate of 0.001. For the numbers of layer J in neighbor aggregation operation, we find that when J= 1 is good enough and similar results can be found in many other studies [2, 65]. The hyper-parameters were tuned on the validation set. The coefficient of L2 regularization λ was tuned in [ 10− 7, 10− 6, 10− 5, 10− 4, 10− 3 ]. For all neural components, we implement two hidden layers by default. In addition, we sampled one negative instance per positive instance in the social network embedding task or knowledge graph embedding task. Table 3 shows our hyper-parameters settings. The parameters of the baselines are carefully tuned to achieve the best performance for fair comparisons. We will study the impact of hyper-parameters in the following subsection.

3.1.4 Evaluation protocols

We evaluate our method GSIRec in the rating prediction task and top-K recommendation. For the rating prediction task, we adopt Root Mean Square Error (RMSE) and Mean Absolute Error (MAE) to evaluate the performance of the methods. Smaller values of these two metrics indicate the better recommendation. For the top-K recommendation, we choose Precision@K and Recall@K to evaluate. We perform item ranking on all items to guarantee that the evaluation process is unbiased [30]. In our experiment, we set K = 5.

3.2 Performance comparison (Q1)

Tables 4 and 5 show the performance of all compared methods in social-aware recommendation. Tables 6 and 7 show the performance of all compared methods in knowledge-aware recommendation respectively. ** denotes the best values among all methods, and * denotes the best values among all baselines. According to the results, we have the following main observations:

-

Feature enhanced methods NFM and DeepFM outperform pure collaborative filtering method GCMC in most cases, which indicates that side information is complementary to user-item interaction data in recommendations.

-

Since DeepSoR, GraphRec, and DiffNet utilize social information to improve recommendation, they outperform pure collaborative filtering model GCMC. In addition, GraphRec and DiffNet generally achieve better performance than other baselines in social-aware recommendation in most cases. These results imply that the graph convolutional methods are powerful for graph side information recommendation.

-

For knowledge-aware recommendation, KGCN and KGAT stronger than other baselines, indicating the power of graph convolutional networks in graph data. In addition, MKR performs much better than GCMC, which suggests the cross-compress units capture useful knowledge in knowledge graph embedding tasks.

-

GSIRec leverages multi-task learning and graph attention method to learn user and item representations for recommendation. In general, GSIRec with gate-based knowledge assistance modules stronger than GSIRec with attention-based knowledge assistance modules. Without special mention, we show the results of GSIRec with gate-based knowledge assistance modules in the following experiment. In both rating prediction task and top-K recommendation, our method GSIRec achieves the best results on all metrics compared with other methods. Specifically, for the rating prediction task, GSIRec improves over the state-of-the-art baselines w.r.t. RMSE by 1.81%, 0.60%, 0.97% and 1.05% in Ciao, Epinions, DBbook, and MovieLens respectively. For the top-K recommendation, GSIRec improves over the strongest baselines w.r.t. Precision by 3.17%, 4.06%, 0.99% and 4.12% in Ciao, Epinions, DBbook, and MovieLens respectively.

3.3 Cold start and data sparsity issues (Q2)

As mentioned in the introduction section, data sparsity and cold start are two challenges faced by most recommenders. In this subsection, we investigated the ability of our model in handling these two issues.

3.3.1 Results in data sparse scenarios

We first evaluate the capabilities of addressing data sparsity by respective competitors. The experiments were conducted by utilizing different ratios (from 80% to 20%) of the training set on four datasets and keeping fixed the validation and test set during the experiment. Figures 4 and 5 show the results of all methods with respect to different proportions of the training set in social-aware recommendation and knowledge-aware recommendation, respectively. For all datasets, we observe that as the increase of sparsity level, the overall performance decreases among all models. Our method GSIRec outperforms other baselines in most cases. It is worth mentioning that our method consistently outperforms all baselines when the ratio is 20%, which verifies that GSIRec can maintain a good performance when data are extremely sparse.

3.3.2 Results in cold start scenarios

According to the side information of the datasets, we consider addressing the cold-start user problem on Ciao and Epinions datasets, and addressing the cold-start item problem on DBbook and MovieLens datasets. We treat those who have rated x or fewer ratings as cold start users and those that have been rated less than x as cold start items. Followed by other related works [25, 35], we set x= 5. Figure 6 illustrates the RMSE results of our method GSIRec and several strong baselines in cold-start scenarios on four datasets. We can see that our method GSIRec is beneficial to the relatively inactive users and items in recommendation scenarios.

3.4 Model analysis (Q3)

In order to gain more insight into our method, we study an in-depth model analysis in this subsection. We first investigate the effect of the knowledge assistance module, then analyze the sensitivity of key hyper-parameters, and finally explore whether the combination of the user side information task and item side information task can enhance the performance.

3.4.1 Effect of knowledge assistance module

In order to further explore the utility of the knowledge assistance module, we replace our knowledge assistance module with other four feature enhanced modules: cross-stitch unit [41], cross-connection unit [24], cross-compress unit [64], and feature evolution unit [66]. These feature enhanced units are utilized to be the connection between the recommendation task and the side information task for multi-task feature learning, and they are related to our knowledge assistance modules. We will further discuss them in the related work section. The comparison results are shown in Figures 7 and 8. According to the results, our proposed feature enhanced module achieves the best results indicates that our knowledge assistance modules can capture useful knowledge from the side information task to assist the recommendation.

The results of Precision on GSIRec with different feature enhanced modules. KAM(a): attention-based knowledge assistance module; KAM(g): gate-based knowledge assistance module; C-Stitch: cross-stitch unit; C-Connection: cross-connection unit; C-Compress: cross-compress unit; FEU: feature evolution unit

3.4.2 Effect of hyper-parameters

We explore the effect of two hyper-parameters: embedding size d and neighbor sample size ns(or nk) in side information tasks. The results are shown in Figures 9 and 10. We have the following observations: (i) In general, we find that the performance is improved as the embedding size d increases because large embedding sizes mean more expressive. However, when d further increases, our methods degrade the performance, because a too large dimension increases the complexity of our method and may overfit the datasets. (ii) In addition, we find that our method achieves the best performance when ns = 7 in social-aware recommendation and nk = 5 knowledge-aware recommendation. The reason is that a too small neighbor sample size does not have enough capacity to aggregate neighbor information, while a too large neighbor sample size may introduce noises.

3.4.3 Results on the unified framework

GSIRec is designed for side information recommendation. In the above experiments, we have validated the effectiveness of GSIRec in social-aware recommendation and knowledge-aware recommendation. In this subsection, we would like to apply our method to the scenario where both users and items are associated with the side information. We further explore whether the combination of the user side module and item side module can enhance the performance. For this purpose, we plug the user side module and item side module into the unified framework GSIRec. The objective function for the unified framework GSIRec can be defined as follows:

where γs and γk are the balancing parameters for user side information task and item side information task, respectively. Because we didn’t find the suitable datasets containing both social networks and knowledge graphs information that can be used for both the rating prediction task and top-K recommendation, we construct top-n semantic friends (implicit social information) for each user in the knowledge-aware datasets (DBbook and MovieLens datasets) according to the approach in [75]. The research reveals that semantic friends (implicit social information) can identify with whom a user has similar preferences [37, 75]. In this way, both users and items in these two datasets are associated with side information. In our experiment, we set n = 5,γs = 10− 5,γk = 10− 5. One can utilize other methods to generate implicit user friends, such as the methods mentioned in [37] and [27]. To evaluate the performance of the unified framework GSIRec, we compare it with its two variants: GSIRec(s) (only using user side information) and GSIRec(k) (only using item side information). Figure 11 shows the performance of different methods. From the results, we find that GSIRec which leverages both user and item side information performs better than two variants GSIRec(s) and GSIRec(k), indicating that leveraging both user side and item side information can enhance the recommendation performance.

3.5 Results on side information tasks (Q4)

The goal of multi-task learning is to utilize useful information contained in related tasks to help enhance the performance of all the tasks [6]. Therefore, we would like to investigate whether the recommendation task benefits the side information tasks in GSIRec. Specifically, we conducted the following experiments of link prediction tasks to show performance differences between the two settings: (i) only side information task is trained and (ii) side information task and recommendation task are trained together. We split the graph data into training and test sets following 6:4 and use F1-score as the evaluation metric. Figure 12 presents the performance comparison on four datasets. From the results, we find that making use of the recommendation task improves the F1-score of link prediction in the social network embedding task and knowledge graph embedding task, respectively. The results indicate knowledge assistance module can indeed capture useful information from related tasks to assist each task in enhancing its performance.

The results of F1-score on the side information task. “None” means only side information task is trained, while “KAM-a” and “KAM-g” means side information task and recommendation task are trained together using attention-based knowledge assistance module and gate-based knowledge assistance module, respectively

4 Related work

4.1 Social-aware recommendation

With the prevalence of online social media, many E-commerce sites have become popular social platforms in which users can not only select items they love but also follow other users. According to the social influence theory [1, 3, 31], users’ preferences are influenced by their social neighbors. Therefore, researchers propose using social networks as another information stream to mitigate the lack of user-item interaction data and improve recommendation, also known as social recommendation.

The most common approach in social recommendation is to design loss terms for social influence and integrate both social loss and recommendation loss into a unified loss function for jointly optimizing [32, 39, 52]. For instance, SoReg assumes that social neighbors share similar feature representations and design regularization terms in the matrix factorization framework [39]. CSR models the characteristics of social relations and designs a characterized regularization term to improve SoReg [32]. SoDimRec exploits the heterogeneity of social relations and weak dependency connections to regularization terms [52]. In addition to the above-mentioned regularization-based social recommenders, a more explicit and straightforward way is to explicitly models social relations in the predictive model [7, 17, 38]. For example, TrustSVD extended SVD++ [29] by treating the preferences of social neighbors as auxiliary implicit feedbacks [17].

With the development of deep learning, more and more social recommenders utilize neural components to enhance performance. For instance, SAMN considers both aspect-level and friend-level differences and utilizes memory network and attention mechanisms for social recommendation [10]. EATNN utilizes attention mechanisms to assigns a personalized transfer scheme for each user [12]. In recent years, Graph Neural Networks (GNNs) have achieved state-of-the-art performance of graph representation learning. Since the social network can be represented as a user-user social graph, researchers develop social recommenders based on GNNs to combine the user representation with the user’s social neighbors [9, 15, 34, 67]. For example, HOSR [34] and DiffNet [67] stack more graph convolutional layers and propagate user embeddings along the social network to capture high-order neighbor information.

In this work, GSIRec utilizes the graph attention method to learn social neighbor enhanced representations in social-aware recommendation. In addition, GSIRec derives a multi-task learning approach that introduces a social network embedding task to assist the recommendation task. The social network embedding task can be seen as the constraint term explicitly to provide regularization for recommender systems.

4.2 Knowledge-aware recommendation

Knowledge-aware recommendation has been shown effective in alleviating data sparsity issues for recommender systems by using the knowledge graph as the side information. The utilization of the knowledge graph can be roughly categorized into three groups [18, 56]: (i) the embedding-based method; (ii) the path-based method; and (iii) the propagation-based method.

The embedding-based method utilizes knowledge graph embedding algorithms to preprocess the knowledge graph and incorporates pre-trained entity embeddings in the recommendation task [5, 62, 64, 76]. For instance, CKE [76] adopts TransR [33] to learn entity embeddings with the involvement of the knowledge graph. KTUP [5] adopts TransH [60] to design a translation-based recommender model that jointly learns the task of recommendation and knowledge graph completion. DKN [62] treats entity embeddings (learned from TransD [26]) and word embeddings as different channels and designs a CNN framework to incorporate knowledge-level and semantic-level representations of news for news recommendation.

The path-based method leverages multiple patterns of connections among entities in knowledge graphs for recommendation [8, 23, 48, 50, 58, 71]. For example, PER [72] and FMG [77] treat knowledge graphs as heterogeneous information networks and design different meta-paths or meta-graphs to extract semantic information for recommendation.

The propagation-based method, which is based on the idea of embedding propagation, considers both semantic information and connectivity information in knowledge graphs [47, 53, 55, 56, 59, 61, 63, 65]. For instance, RippleNet [61] introduces the concept of preference propagation which propagates users’ potential preferences along links in knowledge graphs to enrich user representations. KGCN [65] adopts graph convolutional networks to aggregate item neighbor information in the knowledge graph to enhance item representations. KGCN-LS [63] further enhances the performance of KGCN [65] by adding a label smoothness mechanism. KGAT [55] combines the user-item interaction graph and knowledge graph as a unified graph and utilizes graph attention networks for feature learning. KGPolicy [59] adopts graph convolutional networks to prepare high-quality representations of nodes in knowledge graphs and uses reinforcement learning to explore high-quality negative items for positive user-item interactions. CKAN [56] explicitly encodes the collaborative signals by embedding propagation and combine the collaborative signals with knowledge associations in an end-to-end manner.

Our method GSIRec is a hybrid method that combines the embedding-based method and propagation-based method. GSIRec designs the knowledge assistance module to capture useful knowledge from the knowledge graph embedding task. In addition, GSIRec utilizes a graph attention method to exploit semantic information from knowledge graphs.

4.3 Multi-task learning for recommendation

Multi-task learning (MTL) is an approach that improves learning for one task by using the knowledge contained in the training signals of other related tasks [6]. Recently, many recommenders leverage MTL to improve recommendation performance [5, 12, 24, 64, 66]. KTUP [5] jointly trains the tasks of recommendation and knowledge graph completion and both tasks show excellent performance. JNSKR [11] designs an efficient negative sampling optimization for knowledge-aware recommendation which integrates the recommendation task and the knowledge graph embedding task in a multi-task learning framework. MKR [64] designs a cross&compress unit to be the connection between the collaborative filtering task and knowledge graph embedding task, and two tasks share their latent features in the cross&compress unit for knowledge-aware recommendation. CoNet [24] replaces the trainable transfer scalars in cross-stitch units [41] with trainable transfer matrices, and designs the cross-connection unit to transfer the knowledge between the source task and target task. EATNN [12] integrates both the subtasks of item domain and social domain into a unified multi-task learning framework for social-aware recommendation. TrustEV [66] designs feature evolution units to enable the user social embeddings and user recommendation embeddings to exchange their features for social recommendation.

In this work, we proposed a multi-task learning framework GSIRec that can be applied in social-aware recommendation and knowledge-aware recommendation. We proposed completely different knowledge assistance modules from the above-mentioned transfer units. The knowledge assistance modules use attention mechanisms and gated structures to capture useful knowledge from related tasks for enhancing the performance of each task.

5 Conclusions and future work

In this work, we advance the graph side information recommendation. We presented a novel framework called GSIRec, which can leverage multi-task learning and graph neural networks to enhance the recommendation task. Extensive experimental results on four real-world datasets demonstrate GSIRec not only outperforms the state-of-the-art recommendation solutions but also has advantages in handling data sparsity and cold start issues. For future work, we plan to (i) investigate GSIRec’s theoretical performance thoroughly; and (ii) do the running time analysis of GSIRec.

Notes

Following the related work [64], γ⋅ can be seen as the ratio of two learning rates for the recommendation task and side information task.

MovieLens: https://grouplens.org/datasets/movielens/1m/

References

Anagnostopoulos, A., Kumar, R., Mahdian, M.: Influence and correlation in social networks. In: Proceedings of the 14th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, pp 7–15 (2008)

Berg, R.V.D., Kipf, T.N., Welling, M.: Graph convolutional matrix completion. arXiv:1706.02263 (2017)

Bond, R.M., Fariss, C.J., Jones, J.J., Kramer, A.D., Marlow, C., Settle, J.E., Fowler, J.H.: A 61-million-person experiment in social influence and political mobilization. Nature 489(7415), 295–298 (2012)

Bordes, A., Glorot, X., Weston, J., Bengio, Y.: A semantic matching energy function for learning with multi-relational data. Mach. Learn. 94 (2), 233–259 (2014)

Cao, Y., Wang, X., He, X., Hu, Z., Chua, T.S.: Unifying knowledge graph learning and recommendation: Towards a better understanding of user preferences. In: The World Wide Web Conference, pp 151–161 (2019)

Caruana, R.: Multitask learning. Mach. Learn. 28(1), 41–75 (1997)

Chaney, A.J., Blei, D.M., Eliassi-Rad, T.: A probabilistic model for using social networks in personalized item recommendation. In: Proceedings of the 9th ACM Conference on Recommender Systems, pp 43–50 (2015)

Chen, H., Li, Y., Sun, X., Xu, G., Yin, H.: Temporal meta-path guided explainable recommendation. In: WSDM, pp 1056–1064 (2021)

Chen, H., Yin, H., Chen, T., Wang, W., Li, X., Hu, X.: Social boosted recommendation with folded bipartite network embedding. IEEE Trans. Know. Data Eng. (2020)

Chen, C., Zhang, M., Liu, Y., Ma, S.: Social attentional memory network: Modeling aspect-and friend-level differences in recommendation. In: Proceedings of the Twelfth ACM International Conference on Web Search and Data Mining, pp 177–185 (2019)

Chen, C., Zhang, M., Ma, W., Liu, Y., Ma, S.: Jointly non-sampling learning for knowledge graph enhanced recommendation. In: SIGIR (2020)

Chen, C., Zhang, M., Wang, C., Ma, W., Li, M., Liu, Y., Ma, S.: An efficient adaptive transfer neural network for social-aware recommendation. In: Proceedings of the 42nd International ACM SIGIR Conference on Research and Development in Information Retrieval, pp 225–234 (2019)

Cheng, H.T., Koc, L., Harmsen, J., Shaked, T., Chandra, T., Aradhye, H., et al: Wide & deep learning for recommender systems. In: Proceedings of the 1st Workshop on Deep Learning for Recommender Systems, pp 7–10 (2016)

Fan, W., Li, Q., Cheng, M.: Deep modeling of social relations for recommendation. In: Thirty-Second AAAI Conference on Artificial Intelligence, pp 8075–8076 (2018)

Fan, W., Ma, Y., Li, Q., He, Y., Zhao, E., Tang, J., Yin, D.: Graph neural networks for social recommendation. In: The World Wide Web Conference, pp 417–426 (2019)

Guo, H., Tang, R., Ye, Y., Li, Z., He, X.: DeepFM: a factorization-machine based neural network for CTR prediction. arXiv:1703.04247 (2017)

Guo, G., Zhang, J., Yorke-Smith, N.: Trustsvd: Collaborative filtering with both the explicit and implicit influence of user trust and of item ratings. In: AAAI, pp 123–125 (2015)

Guo, Q., Zhuang, F., Qin, C., Zhu, H., Xie, X., Xiong, H., He, Q.: A survey on knowledge graph-based recommender systems. arXiv:2003.00911 (2020)

Hamilton, W., Ying, Z., Leskovec, J.: Inductive representation learning on large graphs. In: Advances in Neural Information Processing Systems, pp 1024–1034 (2017)

He, X., Chua, T.S.: Neural factorization machines for sparse predictive analytics. In: Proceedings of the 40th International ACM SIGIR conference on Research and Development in Information Retrieval, pp 355–364 (2017)

He, X., Liao, L., Zhang, H., Nie, L., Hu, X., Chua, T.S.: Neural collaborative filtering. In: Proceedings of the 26th International Conference on World Wide Web, pp 173–182 (2017)

He, X., Zhang, H., Kan, M.Y., Chua, T.S.: Fast matrix factorization for online recommendation with implicit feedback. In: Proceedings of the 39th International ACM SIGIR conference on Research and Development in Information Retrieval, pp 549–558 (2016)

Hu, B., Shi, C., Zhao, W.X., Yu, P.S.: Leveraging meta-path based context for top-n recommendation with a neural co-attention model. In: Proceedings of the 24th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, pp 1531–1540 (2018)

Hu, G., Zhang, Y., Yang, Q.: Conet: Collaborative cross networks for cross-domain recommendation. In: Proceedings of the 27th ACM International Conference on Information and Knowledge Management, pp 667–676 (2018)

Jamali, M., Ester, M.: A matrix factorization technique with trust propagation for recommendation in social networks. In: Proceedings of the Fourth ACM Conference on Recommender Systems, pp 135–142 (2010)

Ji, G., He, S., Xu, L., Liu, K., Zhao, J.: Knowledge graph embedding via dynamic mapping matrix. In: Proceedings of the 53rd Annual Meeting of the Association for Computational Linguistics and the 7Th International Joint Conference on Natural Language Processing, pp 687–696 (2015)

Jiang, X., Hu, B., Fang, Y., Shi, C.: Multiplex Memory Network For Collaborative Filtering. In: Proceedings of the 2020 SIAM International Conference on Data Mining, pp 91–99 (2020)

Kingma, D.P., Ba, J.: Adam: A method for stochastic optimization. arXiv:1412.6980 (2014)

Koren, Y.: Factorization meets the neighborhood: a multifaceted collaborative filtering model. In: Proceedings of the 14th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, pp 426–434 (2008)

Krichene, W., Rendle, S.: On sampled metrics for item recommendation. In: KDD, pp 1748–1757 (2020)

Lewis, K., Gonzalez, M., Kaufman, J.: Social selection and peer influence in an online social network. Proc. Natl. Acad. Sci. 109(1), 68–72 (2012)

Lin, T.H., Gao, C., Li, Y.: Recommender systems with characterized social regularization. In: Proceedings of the 27th ACM International Conference on Information and Knowledge Management, pp 1767–1770 (2018)

Lin, Y., Liu, Z., Sun, M., Liu, Y., Zhu, X.: Learning entity and relation embeddings for knowledge graph completion. Google Scholar Google Scholar Digital Library Digital Library (2015)

Liu, Y., Chen, L., He, X., Peng, J., Zheng, Z., Tang, J.: Modelling high-order social relations for item recommendation. arXiv:2003.10149 (2020)

Liu, B.Y.Y.L.D., Liu, J.: Social collaborative filtering by trust. In: Proceedings of the Twenty-Third International Joint Conference on Artificial Intelligence. IEEE (2013)

Long, M., Cao, Z., Wang, J., Philip, S.Y.: Learning multiple tasks with multilinear relationship networks. In: Advances in Neural Information Processing Systems, pp 1594–1603 (2017)

Ma, H.: An experimental study on implicit social recommendation. In: Proceedings of the 36th International ACM SIGIR Conference on Research and Development in Information Retrieval, pp 73–82 (2013)

Ma, H., King, I., Lyu, M.R.: Learning to recommend with social trust ensemble. In: Proceedings of the 32nd International ACM SIGIR Conference On Research and Development in Information Retrieval, pp 203–210 (2009)

Ma, H., Zhou, D., Liu, C., Lyu, M.R., King, I.: Recommender systems with social regularization. In: Proceedings of the fourth ACM International Conference on Web Search and Data Mining, pp 287–296 (2011)

Massa, P., Avesani, P.: Trust-aware recommender systems. In: Proceedings of the 2007 ACM Conference on Recommender Systems, pp 17–24 (2007)

Misra, I., Shrivastava, A., Gupta, A., Hebert, M.: Cross-stitch networks for multi-task learning. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp 3994–4003 (2016)

Mnih, A., Salakhutdinov, R.R.: Probabilistic matrix factorization. In: Advances in Neural Information Processing Systems, pp 1257–1264 (2008)

Pan, Y., He, F., Yu, H.: Learning social representations with deep autoencoder for recommender system. World Wide Web pp. 1–21 (2020)

Rendle, S.: Factorization machines. In: 2010 IEEE International Conference on Data Mining, pp 995–1000 (2010)

Ruder, S.: An overview of multi-task learning in deep neural networks. arXiv:1706.05098 (2017)

Sarwar, B., Karypis, G., Konstan, J., Riedl, J.: Item-based collaborative filtering recommendation algorithms. In: Proceedings of the 10th International Conference on World Wide Web, pp 285–295 (2001)

Sha, X., Sun, Z., Zhang, J.: Attentive knowledge graph embedding for personalized recommendation. arXiv:1910.08288 (2019)

Shi, C., Zhang, Z., Ji, Y., Wang, W., Philip, S.Y., Shi, Z.: SemRec: a personalized semantic recommendation method based on weighted heterogeneous information networks. World Wide Web 22(1), 153–184 (2019)

Socher, R., Chen, D., Manning, C.D., Ng, A.: Reasoning with neural tensor networks for knowledge base completion. In: Advances in Neural Information Processing Systems, pp 926–934 (2013)

Sun, Z., Yang, J., Zhang, J., Bozzon, A., Huang, L.K., Xu, C.: Recurrent knowledge graph embedding for effective recommendation. In: Proceedings of the 12th ACM Conference on Recommender Systems, pp 297–305 (2018)

Tang, Y., Guo, K., Zhang, R., Xu, T., Ma, J., Chi, T.: ICFR: An effective incremental collaborative filtering based recommendation architecture for personalized websites. World Wide Web 23(2), 1319–1340 (2020)

Tang, J., Wang, S., Hu, X., Yin, D., Bi, Y., Chang, Y., Liu, H.: Recommendation with social dimensions. In: AAAI, pp 251–257 (2016)

Tang, X., Wang, T., Yang, H., Song, H.: AKUPM: Attention-enhanced knowledge-aware user preference model for recommendation. In: Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, pp 1891–1899 (2019)

Vaswani, A., Shazeer, N., Parmar, N., Uszkoreit, J., Jones, L., Gomez, A.N., Kaiser, L., Polosukhin, I.: Attention is all you need. In: Advances in Neural Information Processing Systems, pp 5998–6008 (2017)

Wang, X., He, X., Cao, Y., Liu, M., Chua, T.S.: Kgat: Knowledge graph attention network for recommendation. In: Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, pp 950–958 (2019)

Wang, Z., Lin, G., Tan, H., Chen, Q., Liu, X.: CKAN: Collaborative knowledge-aware attentive network for recommender systems. In: Proceedings of the 43rd International ACM SIGIR Conference on Research and Development in Information Retrieval, pp 219–228 (2020)

Wang, Q., Mao, Z., Wang, B., Guo, L.: Knowledge graph embedding: A survey of approaches and applications. IEEE Trans. Knowl. Data Eng. 29(12), 2724–2743 (2017)

Wang, X., Wang, D., Xu, C., He, X., Cao, Y., Chua, T.S.: Explainable reasoning over knowledge graphs for recommendation. In: Proceedings of the AAAI Conference on Artificial Intelligence, pp 5329–5336 (2019)

Wang, X., Xu, Y., He, X., Cao, Y., Wang, M., Chua, T.S.: Reinforced negative sampling over knowledge graph for recommendation. In: Proceedings of The Web Conference 2020, pp 99–109 (2020)

Wang, Z., Zhang, J., Feng, J., Chen, Z.: Knowledge graph embedding by translating on hyperplanes. In: AAAI, pp 1112–1119 (2014)

Wang, H., Zhang, F., Wang, J., Zhao, M., Li, W., Xie, X., Guo, M.: Ripplenet: Propagating user preferences on the knowledge graph for recommender systems. In: Proceedings of the 27th ACM International Conference on Information and Knowledge Management, pp 417–426 (2018)

Wang, H., Zhang, F., Xie, X., Guo, M.: DKN: Deep knowledge-aware network for news recommendation. In: Proceedings of the 2018 World Wide Web Conference, pp 1835–1844 (2018)

Wang, H., Zhang, F., Zhang, M., Leskovec, J., Zhao, M., Li, W., Wang, Z.: Knowledge-aware graph neural networks with label smoothness regularization for recommender systems. In: Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, pp 968–977 (2019)

Wang, H., Zhang, F., Zhao, M., Li, W., Xie, X., Guo, M.: Multi-task feature learning for knowledge graph enhanced recommendation. In: The World Wide Web Conference, pp 2000–2010 (2019)

Wang, H., Zhao, M., Xie, X., Li, W.J., Guo, M.Y.: Knowledge graph convolutional networks for recommender systems. In: Proceedings of the 27th International World Wide Web Conference, pp 3307–3313 (2019)

Wu, Q., Jiang, L., Gao, X., Yang, X., Chen, G.: Feature evolution based multi-task learning for collaborative filtering with social trust. In: Proceedings of the 28th International Joint Conference on Artificial Intelligence, pp 3877–3883 (2019)

Wu, L., Sun, P., Fu, Y., Hong, R., Wang, X., Wang, M.: A neural influence diffusion model for social recommendation. In: Proceedings of the 42nd International ACM SIGIR Conference on Research and Development in Information Retrieval, pp 235–244 (2019)

Yang, B., Lei, Y., Liu, J., Li, W.: Social collaborative filtering by trust. IEEE Trans. Pattern Anal. Mach. Intell. 39(8), 1633–1647 (2016)

Ying, H., Wu, J., Xu, G., Liu, Y., Liang, T., Zhang, X., Xiong, H.: Time-aware metric embedding with asymmetric projection for successive POI recommendation. World Wide Web 22(5), 2209–2224 (2019)

Yosinski, J., Clune, J., Bengio, Y., Lipson, H.: How transferable are features in deep neural networks?. In: Advances in Neural Information Processing Systems, pp 3320–3328 (2014)

Yu, X., Ren, X., Gu, Q., Sun, Y., Han, J.: Collaborative filtering with entity similarity regularization in heterogeneous information networks. In: IJCAI (2013)

Yu, X., Ren, X., Sun, Y., Gu, Q., Sturt, B., Khandelwal, U., Norick, B., Han, J.: Personalized entity recommendation: A heterogeneous information network approach. In: Proceedings of the 7th ACM International Conference on Web Search and Data Mining, pp 283–292 (2014)

Zhang, Z., Liu, Y., Zhang, Z., Shen, B.: Fused matrix factorization with multi-tag, social and geographical influences for POI recommendation. World Wide Web 22(3), 1135–1150 (2019)

Zhang, H., Shen, F., Liu, W., He, X., Luan, H., Chua, T.S.: Discrete collaborative filtering. In: Proceedings of the 39th International ACM SIGIR conference on Research and Development in Information Retrieval, pp 325–334 (2016)

Zhang, C., Yu, L., Wang, Y., Shah, C., Zhang, X.: Collaborative User Network Embedding For Social Recommender Systems. In: SDM, pp 381–389 (2017)

Zhang, F., Yuan, N.J., Lian, D., Xie, X., Ma, W.Y.: Collaborative knowledge base embedding for recommender systems. In: Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, pp 353–362 (2016)

Zhao, H., Yao, Q., Li, J., Song, Y., Lee, D.L.: Meta-graph based recommendation fusion over heterogeneous information networks. In: Proceedings of the 23rd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, pp 635–644 (2017)

Zheng, X., Luo, Y., Sun, L., Ding, X., Zhang, J.: A novel social network hybrid recommender system based on hypergraph topologic structure. World Wide Web 21(4), 985–1013 (2018)

Acknowledgments

This work was supported by the National Natural Science Foundation of China under Grant No. 61876069; Jilin Province Key Scientific and Technological Research and Development Project under Grant Nos. 20180201067GX and 20180201044GX; and Jilin Province Natural Science Foundation under Grant No. 20200201036JC.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Competing interests

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Li, A., Yang, B. GSIRec: Learning with graph side information for recommendation. World Wide Web 24, 1411–1437 (2021). https://doi.org/10.1007/s11280-021-00910-6

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11280-021-00910-6