Abstract

This paper proposes a novel approach to safeguarding location privacy for GNN (group nearest neighbor) queries. Given the locations of a group of dispersed users, the GNN query returns the location that minimizes the total or the maximal distance for all group users. The returned location is typically a meeting place such as a cinema or coffee shop where the group would like to meet. In our work, we highlight the challenges for private GNN queries and propose a general framework that have two key features: (i) it ensures privacy in a decentralized manner and (ii) can compute an optimal location for GNN query that maximizes the group’s overall preference for the meeting place. To mask their precise locations, we assume that user locations are given as regions to a location-based service provider (LSP). The LSP computes then a set of candidate answers (i.e., meeting places) for the GNN query. We identify two privacy attacks on the user locations, the distance intersection attack and the rank disclosure attack. These attacks are possible when the answer of a GNN query is determined from the candidate answers in a straightforward manner. We develop private filters that prevent these attacks and compute the GNN from the retrieved candidate answers. Our decentralized approach ensures that neither the users nor the LSP can learn the location of any group member. Our algorithms compute from the candidate set an optimal meeting place given the group members’ imprecise locations. Our key insight to an efficient computation is to prune the meeting places that cannot be GNNs given the locations of the group members within the search region. A comprehensive experimental evaluation shows the effectiveness of our approach to answering private GNN queries.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

The advent of social networking sites such as Facebook [9], Google+ [17], Loopt [39], Friend Finder [49] has enabled location-based services (LBSs) for a group instead of a single user. Consider a scenario, where a group of friends decide to have their dinner together while participating in a chat using Facebook. The group may want to meet at a restaurant that minimizes their total travel distance to the restaurant. Facebook allows users to share their locations with others. Thus, it is not hard to imagine that Facebook or a third party application in Facebook can offer an LBS to find the suitable nearest restaurant for the group based on user locations. A group nearest neighbor (GNN) query allows friends to meet at their nearest place such as restaurant, shopping center, pizza place or movie theater. In military applications, a GNN query can be used if a group of soldiers wants to meet in the shortest possible time.

Along with the benefits, location-based applications also bring privacy concerns among the users [7, 11]. For example, if a group of students wants to meet at the nearest library and one of them is located at a student counseling center at that time then this student might not want to reveal that location to others. The fact that a student is stressed or seeks professional help is personal information that the student might not want to disclose. Recently, Microsoft has conducted a survey [41] to assess the state of LBSs. The study showed that though 92% of the users consider LBSs as valuable services, 52% of the users are worried about privacy risks that could arise from accessing LBSs. The main concerns of the users come from sharing location data with unspecified organizations or people. Nowadays, not only large corporations like Google or Microsoft offer LBSs but also small developers, companies or employers can run their location-based applications via Apple’s iPhone OS and Google’s Android operating systems. The privacy threat is exacerbated if the available information from the access of LBS is linked with other data sources; in the example, if the location-based service provider (LSP) corroborates with the counseling center, then more specific information about the user could be revealed. Due to an increasing awareness of privacy risks, users might refrain from using LBSs, which would hinder the use of these valuable services [36, 51].

Existing research mainly focuses on developing techniques (e.g., [5, 20, 42, 48, 54]) that preserve user privacy during the request of nearest neighbor (NN) queries. These researchers address the problem of answering GNN queries while preserving privacy for all group members, which we call the private GNN query. Our approach for private GNN queries does not disclose a user’s location to others including the LSP and other group members thus ensuring privacy all the participants of LBS.

Formally, in a GNN query [44, 45], a group of users provide their current locations, and the LSP returns the location (e.g., a meeting place) that minimizes an aggregate distance for the group. Depending upon the application scenarios, the aggregate functions could be sum and max, which return the total distance and the maximum distance from the users to the meeting location. Minimizing the total distance allows users to optimize their combined travel costs and minimizing the maximum distance enables users to meet at the shortest possible time.

In this paper, we introduce a new type of GNN query, a GNN query based on a weighted-rank (w-rank), that maximizes the overall preference of the group. In a GNN query based on w-rank, the meeting places are independently ranked by the users of the group and a set of weights are set by the group, where a weight is associated with each rank to express a group’s level of cost for a meeting place with that rank. The meeting place that has the minimum total weighted-rank is considered as the GNN for the group. A weight representing the group’s cost increases for a lower rank meeting place and is inverse to the group’s preference. We formally define the GNN query based on w-rank in Section 2.

The ranking of meeting places can be done using a single or multiple criteria, for example, the travel distance or food choice could be the factors affecting the ranking of the restaurants. A user’s cost for traveling to a meeting place such as a restaurant is minimal if the other group members come at her nearest restaurant; the cost might be the time or the transportation cost to reach the meeting place. Thus, every user has the highest preference for the closest meeting place. A location is less preferred if the group decides to meet at the user’s second nearest restaurant instead of the first one. In this paper, we focus on rankings that are based on the proximity of meeting places, an important factor for users for selecting any type of meeting place, since protecting user privacy for GNN queries based on proximity ranking is the most challenging among different criteria.

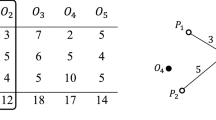

Figure 1a shows an example scenario, where \(\{u_{1}, u_{2}, \dots , u_{11}\}\) are user locations, and \(p_{1}\) and \(p_{2}\) are two meeting places. Figure 1b shows the distances of \(u_{1}, u_{2}, \dots , u_{11}\) to the meeting places. A GNN query that is based on traditional aggregate functions sum or max and minimizes the total and maximum distance of the group to the meeting place, respectively, returns \(p_{2}\) as the answer. On the other hand, \(p_{1}\) is the closest to the majority (i.e., 9 users \(u_{1}\) to \(u_{9}\)) of the users. Thus, a GNN query based on w-rank returns \(p_{1}\) as the answer. A group may select \(p_{1}\) over \(p_{2}\) since \(p_{1}\) maximizes the preferences of the group, and as a result the total and the maximum travel distance of the group increases slightly. The query answer varies depending on the users involved in a group. For example, if a group consists of users \(\{u_{1}, u_{2}, \dots , u_{9}\}\) then the returned answer is same for a GNN query based on sum, max and w-rank. There are also scenarios, where the answer of a GNN query based on w-rank and sum is the same but different from the answer based on max, e.g., for the group \(\{u_{2}, u_{2}, \dots , u_{10}\}\), and vice versa, the answer based on w-rank and max is different to sum, e.g., for the group \(\{u_{1}, u_{3}, u_{6}, u_{7}, u_{9}, u_{11}\}\). In summary, depending on the user locations in a group, a GNN query based on w-rank can return the same or different answer from a GNN query based on aggregate functions sum and max but always maximizes the overall preference of the group. Thus, a group can request a GNN query based on w-rank, sum or max to find the appropriate meeting place according to their requirements. In this paper, we develop privacy preserving approaches for GNN queries based on sum and max, and w-rank.

1.1 Research problem

Recent research (e.g., [12, 18, 20]) has shown that sending a user’s imprecise instead of exact location to the LSP while accessing LBSs is an effective model of protecting a user’s location privacy from the LSP. Usually, the LSP returns a candidate answer set to the user that includes the answer for a LBS (e.g., a nearest neighbor (NN) query) for every location of the imprecise location. The user finds the query answer for her actual location from the candidate answer set. However, this straightforward technique does not work if the answer of a location-based query like a GNN query depends on the actual locations of a group of users instead of a single user. More specifically, if the LSP returns a candidate answer set to the group that includes the answer for a GNN query based on the group’s required aggregate function (sum, max or w-rank) considering that a group member’s location can be anywhere within her imprecise location, none of the group members can identify the actual GNNs without knowing the locations of other members.

Not only the LSP, but also a group member may invade the privacy of other members. There are occasions where group members may wish to hide their current locations from other members for personal reasons. For example, a user who is at a job interview may wish to hide this location from a group of co-workers. To ensure a user’s privacy, no involved party should have access to the locations of others. Thus the key research challenge in a private GNN query is to determine the actual GNN without allowing the LSP or other members to determine locations of users in the group.

1.2 Contribution

We identify two new privacy attacks: distance intersection attack and rank disclosure attack on a user’s location while processing a private GNN query. In particular, we will show in Section 5.1 that a straightforward technique to determine the actual GNN based on sum and max that does not share the actual user locations with other users, is prone to a privacy attack, which we call the distance intersection attack. In this technique, each user updates the distances for the retrieved candidate answers with respect to the user’s actual location. However, from these updates it is possible to identify the distance of users to the candidate answers. The distance intersection attack uses the identified distances to the locations of the candidate answers to triangulate the user’s location. A user is located at the intersection point of three circles having centers at the locations of any three candidate answers and radii equal to the user’s distances from the locations of the corresponding candidate answers. We propose a private filter technique to address this attack. Our private filter technique passes the retrieved candidate GNN answers in an aggregated form to each user in the group. Based on each user’s location, the answers are modified in such a way that no group member can derive other member locations but the actual GNN can still be computed.

For a GNN query based on w-rank, the actual GNNs are determined from the candidate answer set based on group members’ ranks instead of distances for the candidate answers. We will show in Section 7.1 that if group members straightforwardly reveal their ranks for the candidate answers, where the ranks are computed based on user distances to the meeting places, then the group members’ locations can be approximated using the concept of Voronoi diagram [10]. We call such an attack on the user’s location the rank disclosure attack. If the nearest meeting place of a user is known then the user’s location can be refined in the area represented by the Voronoi cell of the meeting place within the Voronoi diagram. If the meeting places with the next ranks (e.g., the second nearest, the third nearest) are also known than the user’s location can be further refined using a higher order Voronoi diagram.

Similar to GNN queries based on sum and max, we propose a private filter technique for a GNN query based on w-rank that prevents the rank disclosure attack by updating group members’ ranks for the candidate answers in an aggregate form. From the aggregate form, no party can identify a user’s ranks for the candidate answers and thereby approximate the user’s location. To date, there exists no algorithm for an LSP to evaluate a GNN query based on w-rank even for a set of point locations. Thus, an additional challenge for a GNN query based on w-rank is to develop an algorithm for the LSP to evaluate a GNN query based on w-rank with respect to a set of regions.

In summary, in this paper, we identify how the privacy of group members can be invaded participating in GNN queries and develop a novel approach to preserve their privacy. Our contributions are:

-

Proposal of a framework to preserve each group member’s privacy during the access of LBSs as a group. The unique advantage of our framework is its decentralized architecture, which does not require any trusted intermediary server.

-

Provision of novel techniques that find the actual GNN based on aggregate functions sum and max from a set of candidate GNNs without disclosing a member’s location to others. Our techniques prevent the distance intersection attack. We extend the existing GNN algorithm [45] for point locations to regions, which is a necessary component to provide the candidate GNNs efficiently while preserving the privacy of group members.

-

Introduction of GNN queries based on w-rank and a privacy preserving solution to access the query. We develop a private filter technique that overcomes the rank disclosure attack while finding the GNNs from the candidate answer set. We propose an algorithm for the LSP to evaluate GNN queries based on w-rank with respect to a set of regions.

-

Evaluation of our techniques in extensive experiments and showing its efficacy.

This paper extends our previous paper [21], where we proposed the first privacy preserving approach to access GNN queries using the aggregate functions sum and max. In this paper, we extend our work by introducing GNN queries based on w-rank that maximize the level of preference for the group. We present an approach to protect user privacy while accessing GNN queries based on w-rank. More specifically, (i) we identify the rank disclosure attack on a user locations that arise if the users disclose their ranks for meeting locations to others, (ii) we develop algorithms to prevent the attack and to process GNN queries based on w-rank with respect to a set of regions, and (iii) we present a new set of experimental analysis to verify the efficiency of our algorithms.

2 Problem setup

We formally define GNN queries in Section 2.1 and give an overview of privacy preserving GNN queries in Section 2.2. In Section 2.3, we discuss the privacy model including possible adversaries and privacy attacks for GNN queries.

2.1 GNN queries

We assume a system architecture where the users and the LSP are connected through a network (e.g., the cellular network or the Internet). Given a group of n users \(u_{1}, u_{2}, \dots , u_{n}\) located at points \(l_{1}, l_{2}, \dots , l_{n}\), respectively, issue a query for the group nearest data point (GNN) such as a cinema or coffee shop. The formal definition of the GNN query is given below:

Definition 1 (GNN Query)

Let D be a set of data points in a 2-dimensional space, Q be a set of n query points \(\{l_{1}, l_{2}, \dots , l_{n}\}\) and f be an aggregate function. The GNN query finds a data point p from D, such that for any \(p^{\prime } \in D-\{p\}\), \(f(Q,p) \leq f(Q,p^{\prime })\).

A GNN query can be extended to a k group nearest neighbor (kGNN) query that returns k data points having the k smallest aggregate distances. For example, a group may want to know the locations of k group nearest restaurants and then select one from k options based on other parameters like budget or food preference. We develop privacy preserving solutions for k GNN queries.

In this paper, we first focus on two aggregate functions sum and max, which return the total distance and the maximum distance from the users to a data point, respectively. Then we consider a GNN query based on weighted-rank (w-rank), which is described below.

The aggregate function w-rank returns the total weight of a group for a data point, where the total weight represents the cost associated with a group for a data point. The total weight of a data point is computed by adding the weights for all group members based on their ranks for the data point, where the ranks are computed based on user distances to the data points. A weight \(w_{i}\) is added to the total weight of a data point \(p_{h}\) if a user’s rank for \(p_{h}\) is i. Since a user has the highest preference for her nearest data point, and a weight represents the cost associated with a rank of the data point, the weight increases with the decrease of the rank, i.e., \(w_{i + 1} > w_{i}\) (e.g., a user’s nearest data point has the smallest rank 1 and the lowest cost \(w_{1}\)).

The group sets the rules to compute the set of weights according to their requirements. For example, the ratio between two consecutive weights \(w_{i + 1}\) and \(w_{i}\) can be predefined; if it is set to 2 then \(w_{i + 1}= 2 \times w_{i}\). The ratio can be also predefined with respect to the weight \(w_{1}\); if the ratio between \(w_{1}\) and \(w_{i}\) is set to i, then \(w_{i}\) is computed as \(i \times w_{1}\). The GNN query based on w-rank returns the data point that minimizes the total weight (i.e., maximizes the preferences) for the group. For simplicity, in this paper, we assume that all group members use a same rule to compute the weights. In general, in a GNN query based on w-rank, users can predefine their rules to compute weights independently according to their preferences.

Although distance is the most widely used metric to represent travel cost, in practice there are also cases where the cost is not directly proportional to the distance. For example, many big cities (e.g., Melbourne) are divided into zones for the public transport system; if a user travels within a zone using a public transport then the transport cost is same irrespective of the actually traveled distance. Similarly, in a postal service, usually the local postage cost is same for any distance. There are scenarios where the cost for a train ticket increases with increasing number of crossed stations to reach the destination though the distances between two consecutive stations may not be the same. In this paper, we focus on weighted user ranks for the data points in addition to distance based aggregate functions sum and max to provide the group with an option of maximizing their preferences for the data points.

Note that if the weight given by a user for a data point is equal to the distance of the user to the data point then w-rank maps to sum. Thus, the aggregate function w-rank is a more generalized function; the weights can be used to represent costs for different metrics such as distance, rank, or the number of train stations a user needs to cross to reach at a data point.

2.2 Privacy preserving GNN queries

When a user requests a location-based query the user reveals her location, identity and the requested query to the LSP. From the revealed information the LSP can derive sensitive and private information about the user. A popular privacy model to hide a user’s location from the LSP is to send a cloaked area [16, 52], which is typically a rectangle containing the user’s location, instead of the user’s location. The higher the spatial imprecision is, the more private is the user’s location; the probability is higher that a larger rectangle area contains a diverse range of locations. On the other hand, if a user wants to hide her identity then the rectangle is computed based on K-anonymity [12, 18], which ensures that the rectangle includes the locations of at least \(K-1\) other users in addition to the user’s location so that the user becomes K-anonymous.

In our proposed privacy preserving (private) k GNN queries, the users in the group do not reveal their exact locations and identities to the LSP; instead they provide rectangles \(R_{1}, R_{2}, \dots , R_{n}\) that contain user actual locations \(l_{1}, l_{2}, \dots , l_{n}\), respectively in addition to the \(K-1\) other user locations. Thus the LSP needs to return a set of candidate data points A with respect to the provided rectangles that include the k GNNs for the actual user locations. Afterwards the actual k GNNs has to be computed from A without revealing the location of any user to any group member.

As we have seen in Section 1, providing an answer for privacy preserving k GNN queries has two parts: (i) The group of users use a technique, called a private filter (privacy preserving filter), to find the actual k GNNs from the retrieved set of candidate answers without compromising their privacy, and (ii) the LSP evaluates the k GNN query with respect to a set of rectangles to provide the candidate answers for the private filter while preserving user privacy. We formally define the private filter and the k GNN query with respect to rectangles as follows.

Definition 2 (Private Filter)

Let \(\{u_{1}, u_{2}, \dots , u_{n}\}\) be a group of n users, A be a set of candidate data points, f be an aggregate function, and k be a positive integer. The precise location \(l_{i}\) of a user \(u_{i}\) is only known to \(u_{i}\). A private filter is a mechanism that computes the k GNNs from A and provides k smallest values for f with respect to the set \(\{l_{1}, l_{2}, \dots , l_{n}\}\) without revealing a user \(u_{i}\)’s location \(l_{i}\) to others.

Definition 3 (kGNN Query with respect to Rectangles)

Let D be a set of data points in a 2-dimensional space, \(\{R_{1}, R_{2}, \dots , R_{n}\}\) be a set of n query rectangles, \(r_{i}\) be any point in \(R_{i}\) for \(1 \leq i \leq n\), and f be an aggregate function. The kGNN query with respect to rectangles returns the set of candidate data points A that includes all data points having the \(j^{th}\) smallest (1 ≤ j ≤ k) value for f with respect to every point set \(\{r_{1}, r_{2}, \dots , r_{n}\}\).

The symbols used in this paper are summarized in Table 1.

2.3 Privacy model: adversaries and privacy attacks

We consider the LSP and the users involved in processing k GNN queries as potential adversaries. In our approach, the group reveals users’ imprecise locations (i.e., rectangles) to the LSP anonymously. On the other hand, since k GNN queries are used by the users to meet at a place, we assume that a user is not concerned to hide her identity from other group members. Instead the user does not disclose her location in any form to other group members to protect her privacy. We make the following assumptions for the privacy model in our approach:

-

The LSP and the group members do not collude with each other to violate a user’s privacy. We assume a semi-honest model, where an involved entity in the k GNN query processing follows the rules of our proposed approach and tries to infer private information of other members only from the obtained information during the execution of our proposed approach.

-

The adversaries do not have any background information about user locations and do not know any probabilistic distribution of users locations.

A privacy attack, in general, refers to an intrusive inference through which an adversary is able to derive a user’s sensitive and private information that the user does not want to reveal. For example, if a user reveals a rectangle as her location and an adversary can refine the user’s location within the rectangle then an inference attack can be successful. The privacy attacks that the LSP can apply to violate user privacy are already identified and addressed in the literature. We use the approach proposed in [20] to hide user identities and locations from the LSP. In [20], each user computes a rectangle that includes \(K-1\) other users’ rectangles in addition to the user’s exact location, and anonymously sends to the LSP. In Section 3.1, we elaborate the superiority of [20] over other existing approaches for hiding user identities and locations from the LSP. In addition, we propose efficient algorithms for the LSP to evaluate the candidate k GNNs with respect to a set of rectangles.

In this paper, we focus on how to protect a user’s location privacy from others while finding the actual k GNNs from the returned candidate answer set by the LSP. In Sections 5.1 and 7.1, we show that if a user straightforwardly reveals her distance or rank for the candidate data points then other group members can apply the distance intersection attack or the rank disclosure attack, respectively, to identify the user’s location. We have developed private filter techniques that prevent these attacks and protect user privacy while processing k GNN queries.

3 Related work

In Section 3.1, we present the solutions for protecting location privacy of users accessing LBSs, and in Section 3.2, we discuss the existing work related to GNN queries.

3.1 User privacy in LBSs

Most research (e.g., [12, 18, 42, 53]) to preserve user privacy in LBSs is based on a centralized architecture, where an intermediary trusted server performs all tasks for the privacy protection of users. The limitations of a centralized architecture is a single point of failure, bottlenecks due to communication overheads, and privacy threats as the intermediary server stores all information in a single place. Therefore, a few decentralized approaches (e.g., [8, 13, 20, 22]) preserve a user’s privacy in cooperation with her peers and do not involve an intermediary trusted server. However, all approaches, centralized or decentralized, focused on LBSs involving a single user.

A centralized architecture for LBSs involving a group like private GNN queries can be implemented in a similar way, where an intermediary trusted server transforms exact locations of users to regions (e.g., a rectangle or a circle) and forwards the GNN query with regions instead of the exact user locations to the LSP. The LSP evaluates the candidate answers that include GNNs for any position of the users within their regions and returns the candidate answers to the intermediary server. The intermediary server computes the actual GNN for the exact locations of the users from the candidate answers and forwards the actual answer to the group. To overcome the limitations of a centralized architecture, we propose a framework based on a decentralized architecture for a private GNN query.

Most of the decentralized techniques (e.g., [8, 13, 14, 20, 29]) hide a user’s location from the LSP by exploiting P2P network (e.g., Bluetooth, WiFi). In these techniques, an imprecise location of the user (i.e., a rectangle or a circle) includes \(K-1\) other users’ locations in addition to the location of the user requesting the query so that the user’s location becomes K-anonymous. In [8, 13, 14], the users need to trust their peers with their locations for computing their imprecise locations.

In [29], K users in the proximity form a group and then using the proximity information, the group progressively finds a bounding box as their rectangle that covers all users’ locations. In this technique, all users in a group use the same rectangle for accessing LBSs. Though the users do not share their actual locations with anyone, not even their peers, a user’s rectangle is revealed to others in the group. Since our proposed private filter requires that the user’s rectangle sent to the LSP is not revealed to anyone, and therefore we do not use the technique proposed in [29] to compute the user’s rectangle for a private k GNN query.

The solution proposed in [20] is a two step process. In the first step, a local imprecise location (i.e., a rectangle) is randomly computed by each user such that the rectangle includes the user’s location and the area of the rectangle is set according to the user’s privacy requirement. In the second step, when a user requires to access a LBS, the user’s global imprecise location for the LSP is computed as a minimum bounding rectangle that includes \(K-1\) others’ local imprecise locations (i.e., rectangles) in addition to the user’s exact location. Since neither the user’s actual location nor the rectangle sent to the LSP is revealed to others, we use this technique to request a private GNN query.

Besides K-anonymity [50] and imprecision [25], space transformation [33] and private information retrieval techniques [15] are also used to preserve the privacy of the users. Although the architecture of both of these approaches eliminates an intermediary trusted server, these approaches incur cryptographic overheads and cannot use existing spatial indexing techniques to store data on the server. In this paper, we assume that the users in a group disclose their imprecise locations to the LSP, which evaluates the query based on these imprecise locations on a database, where the data points are indexed using a spatial indexing technique such as R-tree [19].

In [23], the authors have proposed a privacy preserving approach that eliminates the role of the LSP, and evaluates GNN queries with crowd sourced data points. However, the limitation of this approach is that the considered POI set is small and can be incomplete. Thus, the group nearest data points identified with this approach may not be the actual GNNs. Recently three cryptographic approaches, [2, 3, 30], have been developed to address user privacy for GNN queries. In [2, 3], the authors have developed a cryptographic technique to find the centroid of all group members’ locations and the LSP evaluates the GNN query with respect to the centroid. Thus, the LSP cannot return the actual group nearest data point with respect to the group, instead the LSP has to approximate the query answer, which is the main limitation of their approach. On the other hand, in [30], the authors have not provided any algorithm to evaluate the candidate answer set for a GNN query from which the group can find the actual query answer. Cryptographic approaches, in general, incur high processing overheads. We develop a lightweight approach to evaluate GNN queries in a privacy preserving manner. We note that our system assumes that each user collaborates with others. If users are malicious, our approach can be complemented with secure multi-party protocols (e.g., [6]).

3.2 Group nearest neighbor queries

In [44], Papadias et al. first proposed k GNN queries for the aggregate function SUM. In [45], the authors have extended the proposed solution [44] for GNN queries for minimizing the minimum and maximum distance of group members in addition to minimizing the total distance of group members, and called the GNN query as the aggregate nearest neighbor query. Both depth first search (DFS) [47] and best first search (BFS) [27] algorithms can be used to implement the proposed solutions [44, 45], where R-tree [19] is used to index the data points.

Among the three methods, MQM (multiple query method), SPM (single point method) and MBM (minimum bounding method), shown in [44, 45], MBM outperforms both MQM and SPM in terms of the query processing overhead. The key idea of MBM is to traverse the R-tree in order of the minimum aggregate distances of R-tree nodes with respect to the set of query points. The search terminates when k data points with k smallest aggregate distances have been identified. To increase the efficiency of MBM, the authors have proposed strategies to prune the R-tree nodes/data points using the minimum bounding box of the set of query points.

In [34], Li et al. have approximated the query distribution area using an ellipse and used a distance or a minimum bounding box derived from the ellipse to prune the R-tree nodes/data points for processing k GNN queries. In [40], Luo et al. have only considered non-indexed data points and developed a GNN algorithm based on projection-based pruning strategies, and in [43], Namnandorj et al. have estimated the search space using a vector property and proposed GNN algorithms for both indexed and non-indexed data points. In [35], Li et al. have developed exact and approximation algorithms for GNN queries that minimize the maximum distance of the data points from a set of points. The authors have used convex hull, minimum enclosing ball and furthest Voronoi diagram in their algorithms to prune R-tree nodes/data points. However, their work does not consider an important and popular aggregate function sum that minimizes the total travel distance of the group. We do not extend the point-based GNN query evaluation algorithm [35] for the set of rectangles because it is not straightforward to extend the concept of furthest Voronoi diagram for rectangles.

Our paper is the first study to propose an algorithm for processing a k GNN query with respect to a set of regions instead of a set of points in order to preserve user privacy. In our algorithm for GNN queries with respect to a set of rectangles for aggregate functions sum and max, we extend the concept of the minimum bounding box proposed in [34, 44, 45] to prune R-tree nodes/data points. To the best of our knowledge, there exists no algorithm in the literature for processing GNN queries based on w-rank with respect to a set of points or rectangles.

The work proposed in [37] considers a new type of GNN query that minimizes the total travel distance and the maximum distance for a fixed size of a subgroup. For example, a group may request for a data point that have the minimum total distance from any 60% users in the group. Formally, a group provides their locations and the subgroup size and the LSP returns the subgroup and the data point that result in an optimal answer for aggregate functions sum and max. In this paper, we focus on GNN queries that involve all group members to determine the answer. In [38], the authors have proposed an efficient solution to evaluate GNN queries for moving groups, whereas in our work, we assume that the groups are static.

Besides GNN queries, researchers have also focused on other applications that involve data from more than one user or entity like group trip planning queries [24, 26], group trip scheduling queries [32], flexible group spatial keyword queries [1], personalized app recommendation [46], distributed outlier detection [55], and emerging topic tracking in microblog stream [31].

4 Framework based on a decentralized architecture

In this section, we first present a framework for processing a private GNN query based on a decentralized architecture, which eliminates the need for any intermediary trusted server.

In our proposed framework a coordinator for the group is selected randomly before a query request. The coordinator assists in processing the private GNN query and can be a group member or anyone outside the group who does not participate in the query. Note that the coordinator differs from an intermediary server in a centralized architecture because the coordinator can be different for every GNN query. Moreover, the coordinator only knows the user identities in the group and the type of query requested but has no knowledge about their locations. The total process of accessing a private k GNN query is performed in three steps: (i) sending the query, (ii) evaluating the query, and (iii) finding the answer. We detail these components in the following subsections.

4.1 Sending the query

Each user in the group first registers to the coordinator with their identities (e.g., IP address, phone number) and receives a query identity (QID) from the coordinator. Each group member sends her imprecise location and the QID anonymously to the LSP using either a pseudonym service [14] in the Internet or through a randomly selected peer [8, 20] connected in a wireless personal area network (e.g., Bluetooth or 802.11). These techniques hide the users’ identities from the LSP as well as from the cellular infrastructure provider. The coordinator only sends the k GNN query for the required service, which includes the QID, the description of the required service, the value for k and the number of users in the group to the LSP.

4.2 Evaluating the query

After receiving the request, the LSP evaluates the k GNN query with respect to the set of rectangles. Since the LSP does not know the exact user locations, it cannot determine the actual k GNNs. Therefore, it returns a set of candidate answers that include the actual GNNs to the coordinator.

4.3 Finding the answer

The final step is to determine the actual k GNNs without revealing the user locations to anyone. The retrieved answer set has to go through all users of the group. Each user updates the distance to the candidate GNN answers with respect to her actual location. The communication between the users in the group can be done with or without the coordinator.

In the first case (with the coordinator), the coordinator randomly selects one of the user identities in the group and sends the answer set to that user. After receiving the modified answer set, the coordinator marks that user’s identity as visited. The coordinator repeats this procedure with the remaining unmarked user identities.

In the second case (without the coordinator), the coordinator forwards the retrieved answer set together with the list of identities of all participants to a randomly selected user in the group. The selected user modifies the candidate answers and marks her identity as visited. Then she randomly selects a user with an unmarked identity and forwards the updated answer set and the list of identities to the next selected user.

After the answer set has been modified by all users, the coordinator (in the first case) or the last selected user (in the second case) sends the actual GNNs to all users in the group.

5 Private filters

5.1 Minimizing the total distance

Without loss of generality, consider an example scenario for a private k GNN query with a group of five users and \(k = 2\). The users \(\{u_{1},u_{2},\dots ,u_{5}\}\) provide their query rectangles \(\{R_{1},R_{2},\dots ,R_{5}\}\), respectively, to an LSP. The LSP returns the locations of a set of data points A: \(\{p_{1},p_{2},\dots ,p_{8}\}\) that includes the 2 GNNs that minimize the total travel distance with respect to the actual locations \(\{l_{1},l_{2},\dots ,l_{5}\}\) of the users (Please see Section 6 for the algorithm to compute A). The locations of the data points in A, the actual and imprecise locations of the users are shown in Figure 2, and the actual distances of the users to all data points in A are presented in Table 2.

First, we show a straightforward technique to determine the actual GNNs and the privacy attack associated with this technique. As mentioned in Section 4, A has to be updated by all users in the group with respect to their exact locations for finding the actual GNNs from A. Suppose user \(u_{1}\) first receives A from the coordinator c. Then \(u_{1}\) updates A: \(\{p_{1},p_{2},\dots ,p_{8}\}\) by inserting a new distance field for each data point in A and initializing the fields with her actual distances from those data points as A:\(\{(p_{1},3),(p_{2},8.5)\dots ,(p_{8},14)\}\). Then, A is forwarded to a randomly selected user \(u_{2}\), either directly or via c. The user \(u_{2}\) adds her actual distances for all data points in A with those of \(u_{1}\) and forwards them to another user. This process continues until all users have added their actual distances for all data points in A. After all updates, the final value of the distance field for each data point in A represents the total distance of that data point to all group members (see Table 3). Thus, the last user (u5 in this example) or the coordinator c can determine the \(1^{st}\) and \(2^{nd}\) group nearest data points \(p_{3}\) and \(p_{1}\), and sends them to all participant users in the group.

However, the privacy of users can be violated in this technique using the distance intersection attack. The distance intersection attack is based on 2D trilateration. If a user’s distance from a known location is revealed, then the user’s location has to be on the circle centered at the known location with the radius of the revealed distance. If a user’s distances from two known locations are revealed, the user’s location is one of the two intersection points of the circles. If a user’s distances from three or more known locations are revealed, the user’s exact location is the intersection point of all circles.

In our example, if \(u_{2}\) receives a message from \(u_{1}\) directly, the message includes A, the identities of \(\{u_{1},u_{2},u_{3},u_{4},u_{5}\}\), and the identity of \(u_{1}\) marked as visited. Inspecting the visited field, \(u_{2}\) knows that she is the second randomly selected user who receives A. Since \(u_{2}\) also knows that the distances in A are the actual distances of \(u_{1}\) to \(\{p_{1},p_{2},\dots ,p_{8}\}\), the unknown location \(l_{1}\) of \(u_{1}\) can be computed from any of the three revealed distances using the distance intersection attack (Figure 3). In the case that the communication among the group members is done via a coordinator, \(u_{2}\) can again determine a location from the intersection point of the circles. However, \(u_{2}\) does not know which user is located at that intersection point, because \(u_{2}\) has no access to the list of identities showing that only the identity \(u_{1}\) is marked as visited. In this case, the coordinator c can compute the exact locations of all users using the distance intersection attack. The coordinator c monitors A before sending it to \(u_{i}\) and after receiving it from \(u_{i}\) and then computes the actual distances of \(u_{i}\) for all data points in A. For example, the actual distance of \(u_{4}\) to \(p_{4}\) is found by deducting 22 (observed before sending A to \(u_{4}\)) from 32 (observed after receiving A from \(u_{4}\)) in Table 3.

We present now our private filter technique that counters the distance intersection attack on the users’ privacy. Let n be the number of users in the group, where \(n>2\), and \(MaxDist(R_{i},p_{h})\) be a function that returns the maximum Euclidean distance between a user’s rectangle \(R_{i}\) and a data point \(p_{h}\) for a positive integer h. In our private filter technique, the LSP returns for each data point \(p_{h} \in A\) the sum of the maximum distances of \(p_{h}\) to the query rectangles \(d_{max}(p_{h})\), expressed as:

On receiving A, a user \(u_{i}\) in the group updates \(d_{max}\) for all data points with respect to her actual position \(l_{i}\). Let the function \(Dist(l_{i},p_{h})\) return the Euclidean distance between a user’s actual location \(l_{i}\) and \(p_{h}\). The user \(u_{i}\) computes \(d^{\prime }_{max}(p_{h})\) for a data point \(p_{h}\) using the following equation:

Then \(u_{i}\) updates \(d_{max}(p_{h})\) by assigning \(d^{\prime }_{max}(p_{h})\) to \(d_{max}(p_{h})\) for a data point \(p_{h}\). After completing the updates for all data points, \(u_{i}\) forwards A to another user, either directly or via the coordinator. Each user updates \(d_{max}\) for all data points in A using this procedure.

Let X represent a subset of users in the group who have already updated \(d_{max}\) for all data points in A and Y represent the remaining users in the group who have not yet received and updated A. In every step of the private filter technique, \(d_{max}(p_{h})\) can be in general expressed by the following equation:

As a result, when \(d_{max}(p_{h})\) of a data point \(p_{h}\) has been updated with respect to all users’ exact locations, it represents the aggregate distance (\({\sum }_{i = 1}^{n}{Dist(l_{i},p_{h})}\)) from \(p_{h}\) to the group. Table 4 shows the steps for updating \(d_{max}\) by every user for the given example. After the updates of \(u_{5}\), \(d_{max}(p_{1}),d_{max}(p_{2}),\dots ,d_{max}(p_{8})\) represent the actual aggregate distance of \(\{p_{1},p_{2},\dots ,p_{8}\}\) from the group of users \(\{u_{1},u_{2},\dots ,u_{5}\}\) (see the last row of Table 4). Depending on the communication method used, \(u_{5}\) or the coordinator c forwards 2 GNNs, \(p_{3}\) and \(p_{1}\), to all users in the group.

In this technique, the privacy of all users is preserved in both scenarios: communication without or with the coordinator c. In the first scenario, the second randomly selected user \(u_{2}\) cannot compute the actual distances of the first randomly selected user \(u_{1}\) for the data points in A as the actual distance of \(u_{i}\) from the data points are hidden in the revealed \(d_{max}\)s as shown in (3). In the second scenario, although c can monitor the change in \(d_{max}\)s for the data points in A before sending it to \(u_{i}\) and after receiving it from \(u_{i}\), c cannot determine the actual distance of \(u_{i}\) from any data point in A because the coordinator does not know the locations of the users’ rectangles. For example, c monitors the change of \(d_{max}(p_{4})\) from 54 to 52 before sending A to \(u_{4}\) and after receiving A from \(u_{4}\), respectively (see Table 4). However, as the location of \(R_{4}\) is unknown to c, c cannot determine \(MaxDist(R_{4},p_{4})\) to compute \(Dist(l_{4},p_{4})\). To remind the readers, as we already mentioned in Section 2.3, we assume that the LSP and the group members do not corroborate among themselves to retrieve any extra information about a user; our approach is based on a semi-honest model, where an involved entity in the k GNN query processing follows the rules of our proposed approach and tries to infer private information of other members only from the obtained information during the execution of our proposed approach.

The private filter technique discussed so far cannot perform any pruning of the data points from A until all users in the group update A with respect to their actual locations. We call this private filter for sum a final pruning private filter (sum_FPPF). In the next step, we propose an incremental pruning private filter for sum (sum_IPPF) that allows each user to perform a local pruning of those data points from the answer set that cannot be the actual GNNs.

In sum_IPPF, the LSP provides the sum of the minimum distances from the query rectangles \(d_{min}\) in addition to the sum of the maximum distances from the query rectangles \(d_{max}\) for all data points in A. The addition of \(d_{min}\) allows a user to perform a local pruning of the data points from A after the update and to send a smaller answer set to the next user. Let \(MinDist(R_{i},p_{h})\) be a function that returns the minimum Euclidean distance between \(R_{i}\) and \(p_{h}\). The LSP computes \(d_{min}(p_{h})\) as follows:

On receiving A, each user updates both \(d_{min}\) and \(d_{max}\) for all data points in A. Similar to \(d^{\prime }_{max}(p_{h})\), a user computes \(d^{\prime }_{min}(p_{h})\) for a data point \(p_{h}\) using the following equation:

Afterwards the user updates \(d_{min}(p_{h})\) by assigning \(d^{\prime }_{min}(p_{h})\) to \(d_{min}(p_{h})\).

Algorithm 1 summarizes the steps performed by a user on receiving A for the aggregate function sum. After updating \(d_{min}\) and \(d_{max}\) for all data points in A, the user finds the \(k^{th}\) smallest of all \(d_{max}\) as \(maxdist_{k}\) using the function \(kMin\). Then \(d_{min}\) of every data point in A is compared with \(maxdist_{k}\). If \(d_{min}(p_{h})\) of a data point \(p_{h}\) is greater than \(maxdist_{k}\), then \(p_{h}\) is removed from A as \(p_{h}\) can never be one of the k nearest data point from the group. Table 5 shows the steps for updating \(d_{min}\) and \(d_{max}\), and the pruning of data points by every user in our example. From Table 5, we see that the user \(u_{2}\) determines \(maxdist_{k}\) as 42 for \(k = 2\) and removes \(p_{6}\) (dmin(p6) = 46.5), \(p_{7}\) (dmin(p7) = 45), and \(p_{8}\) (dmin(p8) = 48) from A. Hence, the next user \(u_{3}\) can process a smaller answer set, and more importantly, the local pruning reduces the communication overhead among the users.

For sum_IPPF, a special case may arise if a data point \(p_{h}\) overlaps with all rectangles \(\{R_{1},R_{2},\dots ,R_{n}\}\). In this case, the retrieved \(d_{min}(p_{h})\) (i.e., \({\sum }_{i = 1}^{n}MinDist(R_{i},p_{h})\)) from the LSP is 0 and if the users communicate via the coordinator, the coordinator learns each user’s distances to \(p_{h}\). Therefore if any \(d_{min}(p_{h})\) is 0, users communicate directly to avoid the distance intersection attack.

In summary, for any group size \(n>2\), the discussed private filter techniques find the actual GNNs without revealing users’ locations to others. However for a group of two users (i.e., \(n = 2\)), an extra attention is required: if users communicate directly for \(n = 2\), a user \(u_{2}\) determines herself as the second user by observing the list of identities with one identity marked as visited. Then for every data point \(p_{h}\) in the answer set, \(u_{2}\) can determine \(Dist\)\(({\kern -.8pt}l_{1}{\kern -.8pt},p_{h}{\kern -.8pt})\) by subtracting \(M{\kern -.8pt}a{\kern -.8pt}x{\kern -.8pt}D{\kern -.8pt}i{\kern -.8pt}s{\kern -.8pt}t({\kern -.8pt}R_{2}{\kern -.8pt},p_{h}{\kern -.8pt})\) from \(d_{max}(p_{h})\) as shown in (3). Therefore, \(u_{2}\) can apply the distance intersection attack to find \(u_{1}\)’s precise location \(l_{1}\). On the other hand, if the second user \(u_{2}\) receives the candidate data points from the coordinator then she does not know that she is the second user as she does not have the list of identities with one identity marked as visited. Thus, \((MaxDist(R_{1},p_{h})+ MaxDist(R_{2},p_{h}))\) or \((Dist(l_{1},p_{h})+ MaxDist(R_{2},p_{h}))\) could be \(d_{max}(p_{h})\), and user \(u_{2}\) cannot discover \(Dist(l_{1},p_{h})\). Hence for \(n = 2\), users need to communicate via the coordinator to find the actual GNNs.

The proof of correctness of our private filter is elaborated in [21].

5.2 Minimizing the maximum distance

In this section, we consider private k GNN queries that minimize the maximum distance of a group of users from the data points. Similar to the case of minimizing the total distance, we cannot use the straightforward technique due to its vulnerability of the distance intersection attack.

We can use both techniques, FPPF and IPPF proposed in Section 5.1, with some modifications for finding the data point that has the minimum maximum distance from the group of users. In this case, the LSP uses the aggregate function max instead of sum to compute \(d_{min}(p_{h})\) and \(d_{max}(p_{h})\) for a data point \(p_{h}\) as shown in the following two equations:

Algorithm 2 shows the steps of max_IPPF. In case of the aggregate function max, a user \(u_{i}\) only updates \(d_{min}(p_{h})\) as \(Dist(l_{i},p_{h})\) when \(Dist(l_{i},p_{h})\) is larger than the current \(d_{min}(p_{h})\) for a data point \(p_{h}\) (Lines 2.2-2.3). By construction, there is always at least a user in the group whose distance from \(p_{h}\) is equal to or greater than \(d_{min}(p_{h})\).

On the other hand, a user cannot modify \(d_{max}(p_{h})\) even if \(Dist(l_{i},p_{h})\) is smaller than the current \(d_{max}(p_{h})\) as the other users in the group can also have distances from \(p_{h}\) equal to \(d_{max}(p_{h})\). Thus, in contrast to Algorithm 1, \(maxdist_{k}\) never needs to be updated and remains constant. Therefore, the LSP computes \(maxdist_{k}\) from \(d_{max}\) of the data points in A and directly sends \(maxdist_{k}\) instead of sending \(d_{max}\) for each data point.

If a user updates \(d_{min}(p_{h})\) in Algorithm 2, it represents the user’s actual distance from \(p_{h}\) (Line 2.3). If the communication among the users is done via a coordinator c for filtering the retrieved answer set from the LSP, c can observe \(d_{min}\) for each data point in A before sending A to a user \(u_{i}\) and after receiving it back from \(u_{i}\), and determine which \(d_{min}\)s have been updated by \(u_{i}\). The changed \(d_{min}(p_{h})\) denotes the actual distance of \(u_{i}\) from \(p_{h}\). Thus c can compute the location of \(u_{i}\) with the distance intersection attack using \(d_{min}\)s changed by \(u_{i}\). Thus in our proposed private filter for the aggregate function max, users avoid the coordinator and communicate directly.

In the direct communication, after performing the update a user sends A directly to another user in the group whose identity has not been yet marked as visited. In order to apply the distance intersection attack for revealing a user’s unknown location, we need to know the user’s distances from known locations. A user who receives A knows the location of the data points in A and the distances in the form of \(d_{min}\) for each data point in A. The user also knows that a \(d_{min}(p_{h})\) represents either the distance of \(p_{h}\) from a user’s actual location or the distance of \(p_{h}\) returned by the LSP. However, the user does not know which \(d_{min}(p_{h})\) corresponds to which user’s actual distance from \(p_{h}\) as she has no knowledge about the previous states of A and the order in which the identities are marked as visited. Thus, the user who receives A cannot discover others’ locations in the group using the distance intersection attack.

We know that the second user who receives A can easily identify the user who has received A before her by inspecting the visited field. We also know that if a subset of \(d_{min}\)s are the actual distances from the same user, then the circles with radii equal to those \(d_{min}\)s and centers at the corresponding locations of the data points must intersect at a single point. Using these observations, one may argue that if the second user finds from the received \(d_{min}\)s that a number of circles intersect at a single point, then she would be able to identify the intersection point as the location of the first user. However, it is not guaranteed that the intersection point is the location of the first user, because the values of \(d_{min}\)s that are assigned by the LSP may have caused the intersection point and they might not have been changed by the first user at all.

Figure 4 shows some examples, where \(d_{min}\)s computed by the LSP are shown with dashed lines and the intersection point of all circles are shown with a black dot. In Figure 4a, the intersection point of all circles does not refer to any user’s location, and in Figure 4b and c, the intersection point is the location of a user’s provided rectangle which is not ensured to be the actual location of any user in the group as a user’s actual location can be anywhere within the rectangle.

In contrast to sum_FPPF, for max_FPPF, the LSP returns \(d_{min}\) instead of \(d_{max}\) for each data point in A. This is because if the LSP returns \(d_{max}\) for the data points in A and a user \(u_{i}\) updates \(d_{max}(p_{h})\) as \(Dist(l_{i},p_{h})\) if \(Dist(l_{i},p_{h}) < d_{max}(p_{h})\), then after the update of A by the first user \(u_{1}\), each \(d_{max}\) represents \(Dist(l_{1},p_{h})\). As a result, the user who receives A as a second user can determine \(u_{1}\)’s precise location \(l_{1}\) using the distance intersection attack. In max_FPPF, the users update \(d_{min}\) for each data point in A as shown in Lines 2.2-2.3 of Algorithm 2 and determine the actual GNN after A has been updated by all users in the group.

There is a limitation of our proposed private filter techniques for the aggregate function max. It does not work for \(n = 2\), which we leave for further investigation in our future research. For \(n = 2\), after the update by the first user \(u_{1}\) each \(d_{min}(p_{h})\) represents either \(Dist(l_{1},p_{h})\) or \(MinDist(R_{2},p_{h})\), where \(R_{2}\) is the rectangle of the second user \(u_{2}\). When \(u_{2}\) receives A she can determine that she is the second user by observing the visited field in the list of identities and determine whether \(d_{min}(p_{h})\) represents \(Dist(l_{1},p_{h})\) as she knows \(MinDist(R_{2},p_{h})\). This allows \(u_{2}\) to apply the distance intersection attack if \(d_{min}(p_{h})\) has been modified by \(u_{1}\).

From the above discussion, we summarize that FPPF and IPPF enable users to request k GNN queries without revealing their locations to anyone with any group size for sum and with a group size greater than two for max.

6 k GNN queries with respect to regions

In this section, we propose an algorithm for the LSP to process k GNN queries with respect to a set of rectangles (i.e, regions). Our algorithm uses a modified best first search (BFS) to find the candidate answers that include the k GNNs for any position of the users in their provided rectangles. We assume that the data points are indexed using an \(R^{\ast }\)-tree [4] in the database. Since the query is based on a set of rectangles instead of a set of points, the distance between a data point or an \(R^{\ast }\)-tree node and a query rectangle is defined with a range bounded by the minimum and maximum values.

We summarize the notation used in this section as follows:

-

M: the minimum bounding box that encloses the given set of n query rectangles \(\{R_{1},R_{2},\dots ,R_{n}\}\).

-

\(MinDist(q,p)\) (MaxDist(q,p)): the minimum (maximum) Euclidean distance between q and p, where q represents \(R_{i}\) or M and p represents a data point or a minimum bounding rectangle of an \(R^{\ast }\)-tree node.

-

\(d_{min}(p)\) (dmax(p)): the aggregate distance (i.e., the total or maximum distance) of p computed from the minimum (maximum) distances between p and all query rectangles, where p again represents a data point or a minimum bounding rectangle of an \(R^{\ast }\)-tree node.

-

\(maxdist[k]\): the \(k^{th}\) smallest distance of already computed \(d_{max}(p)\)s.

The algorithm starts the search from the root of the \(R^{\ast }\)-tree and inserts the root together with its \(d_{min}(root)\) and \(d_{max}(root)\) into a priority queue \(Q_{p}\), where \(d_{min}(root)= 0\) and \(d_{max}(root)=f_{i = 1}^{n}({MaxDist(R_{i},root)})\), f being sum or max. The elements of \(Q_{p}\) are stored in order of their minimum \(d_{min}\). Then the algorithm removes an element p from \(Q_{p}\) and checks whether p is an \(R^{\ast }\)-tree node or a data point. If p represents an \(R^{\ast }\)-tree node, then it retrieves its child nodes and enqueues them into \(Q_{p}\) as they might contain one of the candidate answers with respect to the set of rectangles. On the other hand, if p is a data point it is added to A until all data points have been found that are candidates for one of the k GNNs with respect to the set of rectangles.

In the case of k GNN queries for a set of points, the algorithm terminates as soon as k data points have been dequeued from \(Q_{p}\). However, for a set of rectangles the termination is not as simple, because the total or maximum distance of a data point from the query rectangles is a range \([d_{min}, d_{max}]\) instead of a fixed value. The following lemma describes the termination condition of the algorithm as no other data point can further qualify as a candidate answer once the condition is true.

Lemma 1

Let p be a data point or an \(R^{\ast }\)-treenode dequeued from \(Q_{p}\).The algorithm terminates if \(d_{min}(p)> maxdist[k]\).

Note that not all visited data points or \(R^{\ast }\)-tree nodes are inserted to \(Q_{p}\). Before inserting p into \(Q_{p}\), the algorithm checks if p can be pruned with respect to the current \(maxdist[k]\). As \(d_{min}\) and \(d_{max}\) involve a large number of distance computations, similar to [44, 45], the algorithm checks if p can be pruned according to the following lemma.

Lemma 2

A data point or an \(R^{\ast }\)-treenode p can be pruned if \(n \times MinDist(M,p) > maxdist[k]\)for \(f=\textsc {sum}\)andif \(MinDist(M,p) > maxdist[k]\)for \(f=\textsc {max}\).

If p is not pruned using Lemma 2, then the algorithm uses the tighter condition \(d_{min}(p)> maxdist[k]\) of Lemma 1 to check if p can be discarded before inserting it into \(Q_{p}\). Since \(n \times MinDist(M,p) \leq d_{min}(p)\) for \(f=\textsc {sum}\) and \(MinDist(M,p) \leq d_{min}(p)\) for \(f=\textsc {max}\), it may happen that p is not pruned using the condition of Lemma 2 but satisfies the condition of Lemma 1 and is pruned.

Note that for sum the LSP directly returns A, a set of candidate answers with their \(d_{min}\)s and \(d_{max}\)s, to the coordinator. On the other hand, for max the LSP removes \(d_{max}\) of each data point from A, and returns \(maxdist[k]\) and A that includes a set of candidate answers with their \(d_{min}\)s to the coordinator.

Although we present our algorithm for a set of the query rectangles, our algorithm can evaluate k GNN queries for a set of query regions with any geometric shape.

The detail pseudocode and the proof of correctness of the algorithm is shown in [21].

7 Private k GNN queries based on weighted-rank

In a private k GNN queries based on weighted-rank, a group of users provide their query rectangles and specify the number of required group nearest data points k, and a rule \(\mathcal {R}\) to compute weights representing the costs associated with the ranks of the data points to the LSP. Let a weight \(w_{i}\) represent the cost associated with rank i for \(i>0\) and \(w_{i + 1}>w_{i}\). The weight \(w_{i}\) can be defined according to the preference of the group; for example, the rule \(\mathcal {R}\) to compute the weights can be with respect to \(w_{1}\) as \(i \times w_{1}\) or with respect to \(w_{i-1}\) as \(rt \times w_{i-1}\) for any positive integer \(rt>0\). The LSP evaluates the query and returns the set of data points A as candidate answers. To determine the actual GNN from the returned set of data points by the LSP, each user in the group needs to reveal her ranks for the data points in the candidate answers. Since a user does not need to update her actual distance for each data point in the candidate answers for private k GNN queries based on w-rank, there is no possibility of violating the user’s privacy with the distance intersection attack. However, we will show that the user’s privacy could be still violated with the rank disclosure attack, if the user straightforwardly provides her rank information.

In Section 7.1, we discuss the rank disclosure attack and in Section 7.2, we present a final pruning private filter technique to overcome the rank disclosure attack. In Section 7.3, we propose an algorithm to evaluate k GNN queries based on w-rank with respect to a set of rectangles. In Section 7.4, we show why it is not possible to design an incremental pruning private filter technique for k GNN queries based on w-rank.

7.1 Rank discloser attacks

Users need to disclose their ranks for data points in A to determine the actual k GNNs. Let the first user \(u_{1}\) that receive A from the coordinator c, insert and initialize a weight with 0, denoted as \(w(p_{h})\), for each data point \(p_{h} \in A\). Then the user finds her ranks for the data points in A and updates \(w(p_{h})\) according to the following equation.

Note that, \(l_{1}\) is the first user \(u_{1}\)’s actual location and \(rank(l_{1},p_{h})\) is the \(u_{1}\)’s rank for \(p_{h}\) computed with respect to \(l_{1}\); for example if \(p_{h}\) is the user’s \(3^{rd}\) nearest data point then \(rank(l_{1},p_{h})\) is 3 and the cost associated with \(rank(l_{1},p_{h})\), \(w_{3}\), is assigned to \(w(p_{h})\). The answer set A is forwarded to the next user randomly either directly or via c. The process continues until all users have updated the w field for all data points in A. On receiving A, each user \(u_{i}\) computes \(w^{\prime }(p_{h})\) for the data points with respect to her actual location \(l_{i}\) as follows:

Then the user updates \(w(p_{h})\) for those data points by assigning \(w^{\prime }(p_{h})\) to \(w(p_{h})\). After all users’s updates, the value of \(w(p_{h})\) of a data point \(p_{h}\) represents the cost of the group for that data point, and the data point with the minimum weight (cost) is selected as the GNN. Similarly, the k data points in A with k smallest weights are selected as k GNNs.

Thus, if the users communicate directly, then the second user can know the first user’s ranks for the data points by observing visited field of user identities. On the other hand, when the users communicate via c then c can monitor the change of \(w(p_{h})\) before sending and after receiving back from a user and thereby know each user’s ranks for the data points. Learning the ranks for the data points of a user in the group enables the coordinator c or a group member to approximate the user’s location by applying the rank disclosure attack.

The underlying idea of the rank disclosure attack on a user’s location is based on the well known concept of ordered Voronoi diagram [10]. The first order Voronoi diagram for the data points is the division of the total space into subspaces, called Voronoi cells, where each Voronoi cell corresponds to a data point \(p_{h}\) such that \(p_{h}\) is the nearest data point for every location on that cell. Figure 5a shows an example of 1-order Voronoi Diagram of candidate answers \(A:\{p_{1},p_{2},\dots ,p_{8}\}\). Since the location of candidate answers are known to the group member and c, if they also know the nearest data point of a user then they can easily identify the user’s location as the Voronoi cell corresponding to that data point. For example, if \(p_{2}\) is a user’s nearest data point then the user must be located in the Voronoi cell shown with ash color in Figure 5a.

For an m-order Voronoi diagram, each Voronoi cell corresponds to a fixed rank for m data points. Figure 5b and c show 2-order Voronoi diagram and 3-order Voronoi diagram, respectively, for the same candidate answers used in Figure 5a. If \(p_{2}\) and \(p_{3}\) are auser’s \(1^{st}\) and \(2^{nd}\) nearest data points, respectively, then the user must be located in the ash Voronoi cell shown in Figure 5b. Similarly, if \(p_{2}\), \(p_{3}\), and \(p_{7}\) are a user’s \(1^{st}\), \(2^{nd}\), and \(3^{rd}\) nearest data points, respectively, then the user must be located in the ash Voronoi cell shown in Figure 5c. From the construction of order Voronoi diagram, we know that the Voronoi cells of higher order diagram are originated from the recursive division of Voronoi cells of lower order diagram. Thus, the more information about a user’s rank is known (i.e., the larger the value for m), the more precise location of the user could be determined by constructing a higher order Voronoi diagram.

7.2 Private filter

To avoid the rank disclosure attack, similar to the k GNN queries for the aggregate function sum, we propose a final pruning private filter (FPPF) technique for private k GNN queries based on w-rank. We call the private filter as w-rank_FPPF. For k GNN queries based on w-rank, it is not possible to apply an incremental pruning private filter (IPPF) technique as IPPF cannot overcome the rank disclosure attack, which is discussed in Section 7.4. In w-rank_FPPF, each user’s update of rank information remains hidden in an aggregate form from others but at the end \(w(p_{h})\) represents the actual cost of \(p_{h}\) for the group.

For w-rank_FPPF, in addition to returning the location of the set of data points as candidate answers, the LSP returns \(w(p_{h})\) for each data point \(p_{h} \in A\). The LSP initializes \(w(p_{h})\) as the sum of the maximum distances of \(p_{h}\) to the query rectangles:

Note that, \(R_{i}\) is a user \(u_{i}\)’s query rectangle and n is the group size. On receiving A, each user computes \(w^{\prime }(p_{h})\) as follows:

Then the user updates \(w(p_{h})\) for those data points by assigning \(w^{\prime }(p_{h})\) to \(w(p_{h})\). Algorithm 3 summarizes the steps for w-rank_FPPF.

Table 6 shows the ranks of the users for the data points in A shown in Figure 2. Table 7 shows the steps for updating \(w(p_{h})\) by every user for the given example, where without loss of generality, the rule \(w_{i}=i \times w_{1}\) is used for \(w_{1}= 1\). After the updates of \(u_{5}\), \(w(p_{1}),w(p_{2}),\dots ,w(p_{8})\) represent the actual aggregate weight (i.e., cost) of \(\{p_{1},p_{2},\dots ,p_{8}\}\) of the group of users \(\{u_{1},u_{2},\dots ,u_{5}\}\) (see the last row of Table 7). Depending on the communication method used, \(u_{5}\) or the coordinator c forwards 2 GNNs, \(p_{2}\) and \(p_{1}\) (or \(p_{3}\)), to all users in the group.

In this private filter technique, similar to FPPF for the aggregate function sum, an individual user’s costs for the ranks of the data points remain hidden in aggregate form in both scenarios: communication without or with the coordinator. Since a group member or the coordinator cannot identify a user’s added weights (i.e., cost) for the data points, the group member or the coordinator cannot also infer the ranks of the user for the data points. Thus, w-rank_FPPF finds the GNNs without revealing the ranks of the users for the data points to others and prevents the rank discloser attack.

Let X represent a subset of users in the group who have already updated w for all data points in A and Y represent the remaining users in the group who have not yet received and updated A. In every step of the private filter technique, \(w(p_{h})\) can be in general expressed by the following equation:

For a group size of \(n = 2\), the user \(u_{y}\) who receives directly from the user \(u_{x}\) can know the identity of \(u_{x}\) by observing the list of entities and determine \(w_{rank(l_{x},p_{h})}\) (thus, \(rank(l_{x},p_{h})\)), since \(u_{y}\) knows her own \(MaxDist(R_{y},p_{h})\) in (12). Therefore, similar to the private filter for sum, for \(n = 2\), the users communicate via the coordinator to avoid the rank disclosure attack.

We omit the proof of correctness for w-rank_FPPF due to its similarity with sum_FPPF.

Note that for w-rank_FPPF, the LSP does not provide the minimum and maximum weights with respect to the set of rectangles for each data point in the candidate answer set. As a result, users cannot perform any local pruning of the data points from the candidate answer set after updating the weight of each data point, which is a property of incremental private pruning filter (IPPF) technique. In Section 7.4, we will show the possible privacy attacks on a user’s location that can arise from the IPPF technique for k GNN queries based on weighted-rank.

7.3 k GNN queries based on weighted-rank with respect to regions

In this section, we present an algorithm, \(\textsc {region}\_{\textit {k}}GNN\_rank\), tol evaluate k GNN queries based on w-rank with respect to a set of rectangles. The algorithm performs the evaluation in two parts. In the first part, the algorithm finds a set of data points that includes k GNNs based on w-rank with respect to a set of rectangles. Then, in the second part, the algorithm computes the minimum and maximum weight of those data points with respect to the set of rectangles and prunes the data points based on the computed weights that cannot be the part of candidate answers. Algorithm 4, \(\textsc {region}\_{\textit {k}}GNN\_rank\), shows the evaluation process of k GNN queries based on w-rank with respect to a set of rectangles. The input to Algorithm 4 are a set of rectangles \(\{R_{1},R_{2},\dots ,R_{n}\}\), the number of required data points k, and the weights associated with ranks \(\{w_{1},w_{2},\dots \}\). The output is the candidate answers A with their weights, where a weight of a data point is the sum of the maximum distances of the data point to the rectangles.

In contrast to the algorithm for evaluating k GNN queries with respect to a set of rectangles for the aggregate function sum and max in Section 6, the first part of Algorithm 4 cannot compute the candidate answer set directly, instead it computes a superset of the candidate answer set. In the algorithm for evaluating k GNN queries with respect to a set of rectangles for the aggregate function sum and max in Section 6, at the same time of searching the data points on the database, the aggregate function sum or max is used to prune the data points that cannot be candidate answers. In Algorithm 4, however, the weight of a data point cannot be computed before knowing the ranks of the data points for every rectangle. Thus, pruning the data points based on the weight is performed in the second part of Algorithm 4 (Line 4.37) using the function \(Refine\_Answers\).

In the first part, the algorithm finds a set of data points that includes k nearest data points with respect to every point of each rectangle since a user can be located anywhere within her rectangle. Then the algorithm extends the search until a certain distance, i.e., enddist (defined later in this section), that ensures that the set of data points include k GNNs based on w-rank with respect to the set of rectangles. To evaluate k nearest data points with respect to every point of a rectangle, any existing algorithm [28, 42] can be used. However, executing those algorithms separately for each rectangle requires multiple searches on the database and incurs high IOs and query processing overhead. Our algorithm finds k nearest data points for all rectangles with a single search on the database. The first part of Algorithm 4 (Lines 4.1-4.36) is based on a concept similar to the algorithm that evaluates GNN queries with respect to a set of rectangles for the aggregate function sum and max.

We use the notations M, \(MinDist(q,p)\), \(MaxDist(q,p)\), \(maxdist[k]\) as defined in Section 6. We define enddist and redefine \(d_{min}(p)\) and \(d_{max}(p)\) for k GNN queries based on w-rank as follows.

-

enddist: the maximum of the maximum distances between query rectangles \(\{R_{1},R_{2},\dots ,R_{n}\}\) and the data points in A, i.e., the maximum of \(\bigvee _{i} \bigvee _{p_{h} \in A} \{MaxDist(R_{i},p_{h})\}\).

-

\(d_{min}(p)\) (dmax(p)): the minimum (maximum) distance of p computed from the minimum (maximum) distances between p and all query rectangles, where p represents a data point or a minimum bounding rectangle of an \(R^{\ast }\)-tree node.

The algorithm starts the search from the root of the \(R^{\ast }\)-tree and inserts the root together with its \(d_{min}(root)\) and \(d_{max}(root)\) into a priority queue \(Q_{p}\), where \(d_{min}(root)= 0\) and \(d_{max}(root)=\max _{i = 1}^{n}({MaxDist(R_{i},root)})\). The elements of \(Q_{p}\) are stored in order of their minimum \(d_{min}\)s. The search continues until k nearest data points with respect to every point of each rectangle have been dequeued from \(Q_{p}\). To preserve a user’s privacy while finding the actual GNNs from A through the group of users (the private filter technique discussed in Section 7.2), the weight of each data point p in A is initialized as the sum of the maximum distances of p to the rectangles (Line 4.11). Note that similar to the algorithm for evaluating k GNN queries with respect to a set of rectangles for the aggregate function sum and max, Lemma 1 is used in the algorithm to check the termination condition of the search for k nearest data points with respect to the set of rectangles. After k NNs with respect to every point of each rectangle have been found, the algorithm computes enddist (Line 4.20) and finds all data points whose \(d_{min}\)s are less than or equal to enddist (Lines 4.21-4.36). The extension of search for the data points until enddist ensures that A includes k GNNs based on w-rank with respect to the set of rectangles.

Without loss of generality, we can provide an example that shows why it is required to extend the search until enddist in order to ensure that k GNNs based on w-rank with respect to every point set within the rectangles are included in A. Let \(R_{1}\) and \(R_{2}\) be two rectangles and \(r_{1}\) and \(r_{2}\) be two points in \(R_{1}\) and \(R_{2}\), respectively. Assume that for \(k = 1\), the data points \(p_{1}\) and \(p_{2}\) are in A, where \(p_{1}\) is the \(1^{st}\) and \(5^{th}\) NN for \(r_{1}\) and \(r_{2}\), respectively and \(p_{2}\) is the \(4^{th}\) and \(1^{st}\) NN for \(r_{1}\) and \(r_{2}\), respectively. Let \(p_{3}\) be another data point that is not in A and is the \(3^{rd}\) NN for both \(r_{1}\) and \(r_{2}\). For the ratio between two consecutive weights \(rt= 2\), i.e., \(w_{i + 1} = 2 \times w_{i}\), \(p_{3}\) has a smaller weight than the weights of \(p_{1}\) and \(p_{2}\), i.e., \(p_{3}\) is the GNN based on w-rank with respect to points \(r_{1}\) and \(r_{2}\). Thus, we observe that simply finding k NNs for \(r_{1}\) and \(r_{2}\) cannot ensure that the actual GNN based on w-rank, i.e., \(p_{3}\), is included in A. To address the above mentioned scenario, the algorithm extends the search until enddist and includes all data points that have higher ranks (e.g., rank 1 is higher than rank 2) with respect to a point in the rectangles than the ranks of the data points already included in A.

The second part of Algorithm 4 is to prune the data points from A that cannot be part of the candidate answer based on the weight with respect to the set of rectangles. The function \(Refine\_Answers\) (Line 4.37) prunes the data set. To compute the weight of a data point with respect to a set of rectangles, we need to know the rank of that data point with respect to each individual rectangle, which is not straightforward to compute. Since a user can be located anywhere within her rectangle, the rank of a data point with respect to a rectangle is a range instead of a fixed rank, which is defined with the minimum and maximum values. Therefore, the weight of a data point with respect to a set of rectangles is also computed as a range with the minimum and maximum bounds.