Abstract

The present technology, based on laser and visual SLAM navigation and positioning, does not apply to the event of a fire in a room where there is a large amount of smoke, for its increasingly obvious defects. In addition, traditional track deduction technology based on photoelectric encoder has accumulated error, and noise disturbance exists in the INS inertial navigation measurement technology, and the UWB positioning technology is vulnerable to NLOS disturbance caused by site occlusion. To solve the problem of accurate positioning of Autonomous Mobile fire-fighting robot in smoke scenes, the system design is optimized by adopting the following methods: using IMU-assisted residual chi-square criterion to detect whether there is NLOS in UWB, introducing IMU instantaneous compensation positioning data and adopting Chan algorithm fitting of the second multiplication to ensure the stability and accuracy of UWB data; meanwhile, a tight combination model of navigation and positioning is designed: via the improved Kalman filter algorithm, fused with the magnetic encoder track estimation pose, UWB absolute and IMU heading angle pose to realize the accurate positioning of the fire robot in the smoke scene. Finally, the fusion simulation model and algorithm are verified by MATLAB, as it shows, the method has an average positioning accuracy of 98.63% in the X-axis direction, 99.52% in the Y-axis direction, and 97.24% in the heading angle, which solves the inherent physical defects of a single positioning sensor and serves as a reliable and accurate solution for indoor robot positioning.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

With the development of robotics technology, the demand for special robots such as indoor welcoming robots, fire rescue robots [1,2,3], nursing robots [4], and sweeping robots is increasing rapidly.

The autonomous movement of indoor robots rely on precise positioning and navigation technology. The positioning process is to determine the position of the robot in its working environment. At present, the indoor robot positioning technology is basically mature. The main applications are positioning system based on Bluetooth [5], WIFI [6], Radio frequency identification [7], Ultra wide band [8], near field communication [9], ZigBee [10], indoor positioning system based on inertial navigation dead reckoning [11], and positioning system based on vision [12] At present, the research of multi-sensor fusion positioning is mainly based on the fusion mode dominated by vision and lidar [13,14,15,16].

Robot positioning technology falls into two categories: absolute positioning and relative positioning technology. Navigation beacon, active or passive identification, map matching or satellite navigation technology are mainly adopted in absolute positioning. However, for the comparatively high cost of constructing and maintaining beacons or signs, the slow processing speed of map matching technology, and the reason that the satellite positioning can only be used outdoors, three-view-angle method, three-line-of-sight method and model matching algorithm are applied in calculating the absolute position. Relative positioning is to determine the current position of the robot by measuring its initial distance and direction relatively, which is usually called the track estimation algorithm. Frequently used sensors include odometer and inertial navigation system (speed gyroscope, accelerometer, etc.). The advantage of the track estimation algorithm is that the pose of the robot is self-calculated and does not require the perception of the external environment. The disadvantage is that the drift error will accumulate over time and is not suitable for precise positioning [17]. From above mentioned, absolute positioning and relative positioning have their own advantages and disadvantages.

Although some scientific research achievements have been made in the exploration and research of single- sensor-adopted indoor positioning technology, many shortcomings have also been detected. Among them, how to solve the inherent cumulative error caused by the inertial sensor, which results in low positioning accuracy and reliability is a key research problem. Since the PDR positioning system [18] can only provide relative position information, the error will accumulate over time, thus absolute position information is required to correct it [19, 20].

The literature [21] focuses on the study of Kinect vision sensor-based SLAM, which constructs rich two-dimensional maps. However, due to the low measurement accuracy of Kinect, the maps are inaccurate. Literature [22] does research on laser-sensor-based SLAM, which can construct accurate two-dimensional maps. However, the laser sensor can only detect obstacle information on a specific plane, so maps cannot provide complete information. In addition, when there is a large amount of smoke in an indoor fire, the SLAM technology is prone to large errors or failures, as a result, this technology is invalid when an active fire-fighting robot is applied.

In recent years, research on indoor positioning mainly focuses on wireless-sensor-networks-based and inertial-sensors-based ones. RSSI (signal strength measurement), TDOA (Time Difference of Arrival), TOA (Time of arrival), AOA (Arrival of angle) and other algorithms to obtain absolute position information [23] are used in the WIFI-network-based indoor positioning; [24] A feasible method is proposed for the determination of wireless signal strength switching. However, relying only on wireless positioning technology will cause wireless signal multipath effects, scattering, diffraction and other phenomena due to occlusion and metal interference, and there will be a certain instantaneous measurement error. Therefore, some studies have integrated inertial navigation technology. Literature [25] points out using extended Kalman to integrate UWB and IMU, and designing fault detection, improving identification and isolation functions in extended Kalman help to reduce the interference of obstacles, multipath and other factors to wireless ranging. However, due to the large amount of noise interference in the inertial navigation technology, measurement jitter errors often occur. Recently, there are trajectory prediction and fusion methods based on tensor and data analysis [26], the real effect needs to be verified.

In view of the above-mentioned problems of indoor robot positioning technology in the smoke state, this paper introduces a positioning method based on multi-source information technology fusion, which effectively overcomes the disadvantages of using single sensor technology for indoor positioning and realizes the complementary advantages of different positioning methods, improves the accuracy of positioning and makes it more feasible to adopt robots to actively fight fire in special scenes of heavy smoke.

When both relative and absolute positioning methods are combined, the simplest method is to perform a weighted average of different sensor data, but this method has low reliability and severe limitation. Therefore, some researchers have proposed statistics-based Kalman filtering, Markov method, and Monte Carlo method, etc., all of which have got certain effect, but not an ideal one in the smoke scene. Therefore, by considering the elements regarding the robot, its features and different scenes, this paper brings forward a new approach to make residual chi-square judgement and improve Chan algorithm to compensate and correct the UWB positioning data, and then fuse the gyroscope and encoder navigation data via improved Kalman filter algorithm Methods.

1 Robot control structure

The relative positioning method can iteratively recursive the robot pose at each moment according to the kinematics model of the mobile robot and achieve better short-term accuracy without depending on the external environment information.

The mobile chassis of the fire-fighting robot adopts two-wheel differential drive with the support of front and rear universal wheels. By changing the rotation speed of the two driving wheels, the car body can be controlled to move in a straight line and make turns. In the track calculation algorithm, the relationship between the speed of the two wheels, the linear velocity and angular velocity needs to be clarified to make an analysis of mobile chassis kinematic.

The motion model of the robot is shown in Fig. 1, where point O stands for the instantaneous center of velocity of the mobile chassis in the current state of motion, and it is assumed that the chassis is traveling in an ideal state without considering factors such as slippage. According to the kinematics analysis, the speed at point C of the chassis can be calculated as:

The angular velocity of the chassis is θ, the moving chassis moves clockwise in Fig. 1(b), we get:

Then the angular velocity of the mobile chassis can be figured out:

To estimate the pose of the mobile chassis at a certain moment, it is necessary to set up a model which shows the movement of the mobile chassis. The position and posture change of the robot is assumed to accord with the Markov process, that is to say, the current posture of the robot xk only depends on the posture xk−1 and the control uk at the previous moment, which as nothing to do with the posture in the past moment.

Therefore, the mobile chassis motion model can be calculated as:

In the formula, f(xk-1,uk) represents the nonlinear state transition equation. As is shown in Fig. 1(b), it is the model for calculating the track of the moving chassis. From time k-1 to time k, the mobile chassis moves from position M to position N, and the mobile chassis state is xk(X′, Y′, θ′). In the figure, Lk, Rk are the trajectories of the left and right driving wheels of the moving chassis from time k to time k-1, so uk can be expressed as (Lk, Rk, ∆θ). Due to the small time interval, the distance from M to N can be approximated as (Lk + Rk)/2. Mobile chassis motion models are depicted as follows:

2 Track deduction positioning method

The odometer information is measured according to the encoder fixed at the end of the motor shaft, and the angle that the motor has rotated during the sampling time is calculated by analyzing the motion model of the two-wheel differential mobile chassis, so that the current position and azimuth angle of the mobile chassis are calculated. Since the encoder code disc selected is an incremental code one, the resolution of the odometer is defined here as:

In the formula, R is the radius of the driving wheel of the mobile chassis, P is the number of pulses per revolution of the encoder (number of encoder lines × number of phases), and η is the reduction ratio of the harmonic gear reducer. The sampling period of the code disc is ∆t. During this period, the pulse numbers of the left and right drive wheel encoders collected by the lower computer are NL and NR respectively, then the displacements of the two wheels are:

The driving wheelbase of the mobile chassis is W, the arc length of its displacement within Δt is ΔLt, and the azimuth angle change is Δθt, then the position change of the mobile chassis from t-1 to t is:

If the cumulative error and drift caused by the sliding of the mobile chassis wheel are not considered, the odometer model can be expressed as:

The random error model of odometer includes translation model and rotation model. The translation model assumes that the mobile chassis only completes translational motion in a small amount of time, and the rotation includes both position and posture changes, which is consistent with the actual situation. For the mobile chassis in this paper, by calculating the above-mentioned odometer and track deduction model, it is possible to obtain the accumulation of the position and attitude changes from the origin of the motion to the end of the motion during the motion time, and the final position and posture can be derived. But if it only relies on the code wheel, the slip of the wheel and the change of circumference will cause the drift of the odometer model and the accumulation of errors. It can be seen from Fig. 1 that in translational motion, the measurement error in the direction perpendicular to the direction of motion increases faster, while the increase in measurement error of rotary motion is not perpendicular to the direction of motion.

In order to overcome the influence of odometer modeling error and random wheel sliding, some researches have proposed various positioning methods based on probability and statistics for various uncertainties in the process of system modeling and sensor measurement.

One is the positioning method based on filtering estimation. By linearizing the system equations, Kalman filtering and its variants are used to filter and estimate the position of the robot, such as: Extended Kalman Filter (EKF), Kalman Filter (KF), Unscented Kalman Filter (UKF) positioning method [27,28,29].

The other is the positioning method based on Bayesian inference, which adopts grids and particles to describe the robot position space, and recursively calculates the probability distribution in the state space, such as Markov Localization (MKV), Monte Carlo Localization (MCL) positioning methods.

Although various optimization algorithms have played a certain effect, all the trajectory deduction models are designed under the ideal state of dual differential wheels, and when one or more universal wheel supports are added, the navigation model errors will turn to be relatively large. In particular, there is a certain relationship between the steering angle and the initial orientation of the universal wheel during track deduction, which determines the error scale of the deduced heading angle, and the error is highly random. Therefore, it is difficult to fundamentally solve the system errors caused by physical reasons such as robot wheel slip, double-wheel diameter deviation, and encoder accuracy from the perspective of algorithm optimization alone.

This paper designs a robot track deduction model, verifies the effect of robot encoder track deduction through experiments, and collects and analyzes the deduction data. As is shown in Table 2 in the track deduction pose 1, this data is used for later algorithm fusion comparison verification.

3 Inertial navigation system (INS)

INS integrates the angular velocity and acceleration output by the gyroscope and accelerometer, and can output navigation information such as the position, velocity, and attitude of the carrier, and does not require any information exchange with the outside world. It is completely autonomous and shows good dynamic performance. The MPU6050 six-axis gyroscope (IMU) is selected in the design, and its heading angle is served as the observation quantity, and the heading angle derived from the encoder track is used as the control quantity. Using an improved Kalman filter for fusion can output a more stable and accurate heading angle. The system angle fusion uses the linear system state equation, through the input and output observation data, to estimate the optimal algorithm of the system state. Since the observation data involves the influence of noise and interference, the optimal estimation can also be regarded as a filtering process.

3.1 Course angle model design

Angle is the final filtered heading angle put out by the gyroscope. However, this angle is the same as the angle without filtering, and has no specific heading angle nature, that is, this is just a data, from 0 to positive infinity or negative infinity, and will not reset to zero when rotated 360°, thus, it needs to be dealt with a mathematical model Performing processing to make it have angle nature in order to be used in the plane coordinate system.

According to the attitude conversion principle of quaternion space rotation, the quaternion attitude matrix equation of the gyroscope is presented as follows:

In the process of coordinate system conversion, gyroscope rotates to the final posture in the order of z-y-x, and the rotation is shown in Eq. (11).

The Euler angle is calculated by the rotation matrix \({\text{M}}\left( {\beta ,\alpha ,\gamma } \right)\) = \({C}_{b}^{R}\):

Via this method, the heading angle information of the IMU can be calculated. The experiment is carried out in-situ rotation test in the two-dimensional space of the robot. The results of multiple tests are shown in Table 1.

It can be seen from Table 1 that it is quite accurate to get the heading angle information by the mobile robot in the two-dimensional plane space through the IMU. In Fig. 2, Z represents the offset angle of the heading angle. When the robot only rotates 360° leftwards and rightwards on the two-dimensional plane, the heading angle does not drift and can accurately return to 0°, as is shown in position 1 in Fig. 2. However, when the robot walks, the heading angle will shift when the pitch angle and roll angle are jittered, as is shown in position 2 in Fig. 2. After testing, the offset angle reaches 5.63°, which cannot be reset to zero, causing subsequent random error.

3.2 Attitude position model

According to the acceleration of the IMU, the position coordinate information of the robot can be obtained by double integration. Supposing the IMU carrier robot coordinate system is the positioning coordinate system, and the instantaneous position of the robot is represented by x, y, and z. Using IMU's three-axis acceleration fx, fy, fz, the robot's three-axis speed is vx, vy, vz, and the robot's three-axis instantaneous position is x, y, z, and the instantaneous position formula is shown in (13). However, the position coordinates obtained by this method have large errors, and the accumulated errors increase over time.

By the analysis, it is found that the error is mainly caused by two points:

-

a.

The influence of gravity cannot be removed for the improper pose, making the error keeping accumulated;

-

b.

The drift of the device itself cannot be removed. For example, if acc goes from static to motional state then to static, the speed obtained by acc integration should be 0, but the actual speed is not 0; the influence of gravity, for the acc + gyr method, the acc can only get the attitude at rest It cannot be in motion, so the attitude is inaccurate in the case of motion, and the influence of gravity cannot be removed; for acc + gyr + mag, you can use mag to obtain the attitude to improve the accuracy of the attitude. When there is magnetic interference, this method also causes problems: the drift problem itself. The general resolution is to use high-pass filtering to remove, but after high-pass filtering, only the relative motion relationship is obtained, and accurate position cannot be obtained.

The main advantage of the inertial sensor-based positioning method is that it can realize the positioning of the mobile robot without relying on external conditions, and the short-term accuracy is better. The disadvantage is that it will drift with time, and the relatively small constant error will become infinitely larger after being integrated. It can be seen that the track estimation method based solely on inertial sensors cannot meet the precise positioning requirements of mobile robots traveling long distances.

The effect and test data of the robot based on INS navigation are shown in θu of UWB + gyro pose 2 in Table 2. This data is used for later algorithm fusion comparison verification. The posture position model is used to compensate and fit when the robot is positioned based on UWB when NLOS occurs in the data.

4 UWB positioning method

UWB positioning is similar to GPS positioning to a large extent. The base station is like a GPS positioning satellite. The position of each base station is set in advance. Therefore, the constantly moving tags can be located via radio signals. Through radio transmission, the linear distance from each base station to the tag can be measured at any time. The position of the tag in the base station coordinate system can be calculated through geometric knowledge, and the relative base station positioning information can be obtained. In the laboratory scenario, the UWB positioning system adopts the structure of 1 tag + 4 base stations, and the layout is shown in Fig. 3.

Carrying 4 Anchors (base stations) indoors, the unit of coordinate axis is m, Anchor is abbreviated by A, set the coordinates Tag: (x, y, z), A0: (x0, y0, z0), A1: (x1, y1, z1), A2: (x2, y2, z2), A3: (x3, y3, z3), the distances of base stations A0, A1, A2, and A3 are calculated as dis0, dis1, dis2, and dis3 respectively. Then you can list the spherical equations to each base station, the joint equation.

According to the system model, the positioning equations can be obtained, as is shown in formula (14).

In the formula, by subtracting any equation from the last equation and expressing it in matrix form, Eq. 15 can be obtained.

Solving the equation can get:

By solving the equation, the two-dimensional coordinates (xu,yu) of the real-time absolute position of the label can be obtained. The actual layout of the base station and tags is shown in Fig. 4.

The robot walking space is transformed into a two-dimensional map as is shown in Fig. 5. The black area represents obstacles on the table, which is the area where the robot cannot walk. The white part represents the road surface, which is the area where the robot can travel. The blue triangle indicates the location of the UWB base station. The yellow track is the driving track of the UWB positioning robot.

In the actual application test, two problems are found in UWB positioning. First, when the label position is placed at the top position 1 of the robot in Fig. 4, the robot travel path is shown in Fig. 5(a), and the path travel location is completely consistent with the actual position. When the label position is placed in the bottom position 2 of the robot in Fig. 4, the robot travel path is shown in Fig. 5(b). It can be seen that when the robot walks close to the tables and chairs on both sides of the indoor path, the base station are blocked by obstacles such as the robot tables and chairs. The wireless signal between the tags generates NLOS, which causes errors in the positioning data. In this case, a large range of jitter is reflected in the figure.

When the robot label is set in position 1, Fig. 4 in static state, the actual coordinates of the measurement are (3.80, 4.00), and the system is designed with a limited sliding serial port filter algorithm. The positioning result is shown in Fig. 6(a). The data analysis shows that the positioning data is stable and the measurement error is within ± 5 cm. However, when a man passes the robot, the data shows obvious jitter, as is shown in Fig. 6(b). When this phenomenon is placed in the motion state of a mobile robot, it is hard to tell whether it is the real motion trajectory or disturbing the jitter data by simply analyzing the data itself. Therefore, other sensors need to be added for NLOS determination and fusion compensation.

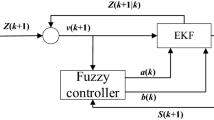

In this paper, IMU information is used to determine the pseudo-movement of UWB system, and IMU short-time sampling double integration is used as UWB compensation fitting. The tight combination mode of IMU and UWB is designed as is shown in Fig. 7. The characteristic of the model lies in obtaining the pseudo-range rate through pseudo-range differentiation and at the same time realizing the amplification of the measurement noise, which is helpful for distinguishing and fitting compensation of NLOS error.

The model stipulates that when the UWB coordinate data deviates from the planned path data, it should be judged whether the heading angle of the IMU has a corresponding deflection, and if the corresponding deflection occurs, the residual mean value is further used to determine whether there is a NLOS disturbance [30]. The literature [24] proposes a wireless network joint anti-interference method that has valuable reference significance. The sampling frequency of UWB and IMU are both set to 20 ms. Since the fastest moving speed of the robot is 2 m/s, the judgment threshold of NLOS ldes can be set to 4 cm according to the sampling rate.

In the above formula, \({x}_{u,t}\), \({y}_{u,t}\) are the coordinates of UWB at the current time t,\({P}_{u,t}\) is the current position of UWB, and \(\Delta {P}_{i,t-1}\) is the rate of change of IMU position at the previous time.

By introducing IMU as NLOS determination and fusion compensation, if the residual chi-square is within the threshold range, the UWB positioning data at that moment does not need fusion compensation; if the residual chi-square exceeds the threshold range, the UWB data at the previous moment plus the previous one The instantaneous displacement measured by MEMS at the moment is used as the result of fusion compensation [31]. Through design, the system can effectively correct UWB data errors caused by non-line-of-sight and base station loss, thus ensuring the accuracy of UWB absolute coordinates.

In the laboratory scenario, the robot's UWB navigation effect and collected data are shown as xu, yu in UWB + gyro pose 2 in Table 2. The data is used for the verification of the later multi-sensor algorithm fusion comparison.

5 Multi-source perception fusion

Indoor positioning methods can be roughly divided into two, one is relative positioning; the other is absolute positioning. Although the relative positioning method has a cumulative error, the error between two adjacent moments is very small; although the absolute positioning method has no cumulative error, its instantaneous error will be relatively large.

Therefore, this design uses an encoder based on the mobile robot to calculate the relative position information of the robot1. On the other hand, the distance measurement module DWM1000 and gyroscope based on UWB positioning technology are used to calculate the position of the robot through model calculation2. Finally, an improved Kalman filter (KF) algorithm is used to fuse the two data to obtain the estimated position information of the robot. The actual posture information of the robot is obtained by manual and accurate measurement, as is shown in xr, yr, θr in Table 2. This design adopts a combination of relative positioning method and absolute positioning method, and uses improved Kalman filter to correct the position information obtained by the two methods, thereby further reducing the cumulative error in the relative positioning method, and also correcting the absolute The instantaneous error in the positioning method, which has significantly improved the indoor positioning accuracy of the mobile robot.

5.1 System structure of indoor positioning integration

First, obtain the robot position information 1 through the encoder on the mobile robot. Then, the Infinite Future's I-UWB-LPS PA module and an external gyroscope are used to measure the position information of the mobile robot 2. Later, the improved Kalman filter algorithm is utilized to fuse the position information 1 and the position information 2 as the estimated position information of the robot. According to the needs, the system designs the positioning fusion compact combination model, and the structure is shown in Fig. 7.

5.2 Location information fusion

In the design of the tight combination model in this paper, the position information obtained by the encoder 1, this data information contains the cumulative error. There is a certain instantaneous error in the position information 2 obtained by UWB and gyroscope together, and there is no cumulative error. The error of position information 1 agrees with Gaussian distribution, and both position information 1 and position information 2 meet the conditions of using Kalman filter algorithm. Therefore, in order to reduce these two errors, improved Kalman filter algorithm to data are adopted to process the data to achieve high-precision indoor positioning.

The improved Kalman filter algorithm model is as follows, set U(t)T = (\({x}_{e}\),\({y}_{e}\),\({\theta }_{e}\)),Z(t)T = (\({x}_{u}\),\({y}_{u}\),\({\theta }_{u}\)),X(t)is each n × Δt time Estimated location information within.

In the above formula, U(t)T is the gyroscope navigation pose information 1 at a certain moment, ΔU(t) is the difference between the previous and current moment pose information 1, and Z(t)T is the UWB + IMU at the same time Pose information 2, W(t) and V(t) are the noise when obtaining pose information 1 and pose information 2, Q and R are the noise variances of W(t) and V(t), P is The prediction error of X(t), U(t), Z(t), W(t), V(t), Q, R, P are all three-dimensional column vectors. In the algorithm, pose information 1 is used as the control variable array, and pose information 2 is used as the observation array [32].

-

(1)

Initialize the system first, and reset the estimated pose;

-

(2)

Use formula (22) to obtain robot position information;

-

(3)

Obtain position information 2 of the robot position information Via Formula (23);

-

(4)

In formula (24), the estimated position information at time t is predicted by the estimated position information at time t-1;

-

(5)

Predict the prediction error at time t by the prediction error at time t-1 in formula (25);

-

(6)

Calculate the Kalman gain Kg at time t by formula (26);

-

(7)

Obtain the estimated position information at time t by combining the predicted position information and the observed position information through formula (27);

-

(8)

Calculate the prediction error at time t by formula (28).

Through the above improved Kalman filter algorithm, the influence of noise and measurement error is reduced, therefore, high-precision mobile robot’s estimated position information is acquired. The flow of location information fusion algorithm is shown in Fig. 8.

5.3 Simulation to obtain estimated position information

After completing the design of the system’s tight combination positioning model, this paper employs MATLAB to perform the fusion simulation of the experimental data on the improved Kalman filter algorithm, As shown in Table 2, the analysis results can get the following information. In the simulation experiment, MATLAB obtains the estimated position information. After the simulation, 3 sets of position information can be obtained, that is, the time information is included, and the position information measured by the encoder, gyroscope and UWB indoor positioning module is processed. After the system is initialized, the position information 1 measured by the encoder installed on the mobile robot and the position information 2 measured by the UWB indoor positioning system module and gyroscope will be obtained respectively, and the estimated position information after fusion with Kalman filter algorithm.

After simulation, 3 sets of position information can be obtained, that is, images and data containing time information. By comparing the position information 1, the position information 2, the fusion position information, and the actual position information at the same time, the accuracy of the positioning can be obtained. The calculation of is shown in formula (29).

Here ε is the accuracy, H is the position information obtained by measurement or the position information after fusion, and L is the actual position information of the mobile robot. Four groups of coordinates and heading angle of the position information are placed on a coordinate system to intuitively reflect the position information. The location information simulation is shown in Fig. 9.

As Table 3 shows, the experimental results acquired by means of filter algorithm and the improved Kalman filter algorithm are compared regarding the accuracy. It can be seen that the accuracy of the improved Kalman filter algorithm designed in this paper has been improved to a certain extent.

6 Conclusion

In this paper, a tight combination model of indoor positioning and navigation based on encoder track deduction + UWB + IMU is designed. the NLOS judgment basis and compensation method based on IMU are applied in the model to ensure the accuracy of UWB absolute coordinate data and improve its anti-interference ability. Finally, the multi-source positioning pose data fusion is carried out by improving the Kalman filter algorithm.

The fusion effect is shown in Fig. 9 and Table 2. Through the analysis, it can be seen that the cumulative error of the coordinates measured by the encoder gradually increases with time, and as time changes, the error of the coordinates measured by the UWB + IMU positioning system module has a large jitter and the error begins to increase. The accuracy of the robot's pose after the fusion of this algorithm is significantly improved than when a single sensor is used. In particular, the accuracy of the fused coordinate position information in the Y-axis direction reached 99.52%, and the posture of the robot after fusion positioning is basically the same as the actual posture, achieving a good fusion effect. And, it can be seen that the accuracy of the heading angle after the algorithm fusion is not very obvious, which will be conducted in the future.

References

Niculescu, A., Dijk, B. V., & Nijholt, A., et al. (2012). The influence of voice pitch on the evaluation of a social robot receptionist. In 2011I nternational conference on user science and engineering (pp. 18–23).

Shadrin, V.A., Lisakov, S.A., & Pavlov, A.N., et al. (2016). Development of fire robot based on quadcopter. In International conference of young specialists on micro/ nanotechnologies and electron devices (pp. 364–369).

Hashimoto, T., & Kobayashi, H. (2009). Study on natural head motion in waiting state with receptionist robot SAYA that has human-like appearance. In IEEE Workshop on Robotic Intelligence in Informationally Structured Space, 2009, 93–98.

Lu, F., Wang, X., & Tian, G. (2012). The structure and application of intelligent space system oriented to home service robot. In International conference on information and automation (pp. 289–294).

Davidson, P., & Piche, R. (2016). A survey of selected indoor positioning methods for smartphones. IEEE Communications Surveys & Tutorials, 19(2), 1347–1370.

Kandel, L.N., & Yu, S. (2019). Indoor localization using commodity Wi-Fi APs: techniques and challenges. In 2019 international conference on computing, networking and communications (ICNC). IEEE (pp. 526–530).

Sakpere, W. E, Mlitwa, N. B. W., & Oshin, M.A., et al. (2017). Towards an efficient indoor navigation system: A Near Field Communication approach. Journal of Engineering Design & Technology 15, 505–527.

Mazhar, F., Khan, M., & Sallberg, B. (2017). Precise indoor positioning using UWB: A review of methods, algorithms and Implementations. In Wireless Personal Communications, 97(3), 4467–4491.

Sakpere, W., Adeyeye-Oshin, M., & Mlitwa, N. B. W. (2017). A state-of-the-art survey of indoor positioning and navigation systems and technologies. South African Computer Journal, 29(3), 145–197.

Liu, F.T., Chen, C.H., Kao, & Y.C., et al. (2017). Improved ZigBee module based on fuzzy model for indoor positioning system. In 2017 International Conference on Applied System Innovation (ICASI). IEEE (pp. 1331–1334).

Tao, Z., Chengcheng, W., & Jie, Y. (2018). Research on Indoor Positioning Technology Based on MEMS IMU (pp. 143–156). Artificial Intelligence and Robotics. Springer: Cham.

Li, J., Wang, C., & Kang, X., et al. (2019). Camera localization for augmented reality and indoor positioning: A vision-based 3D feature database approach. International Journal of Digital Earth (pp. 1–15).

Bo, Z. H. O. U., Kun, Q. I. A. N., Xu-dong, M. A., & Xian-zhong, D. A. I. (2017). Multi-sensor fusion for mobile robot indoor localization based on a set-membership estimator. Control Theory & Applications, 34(4), 541–550.

Zifei, S. U. N., Kun, Q. I. A. N., Xudong, M. A., & Xianzhong, D. A. I. (2017). Self-localization of mobile robot in dynamic environments based on localizability estimation with multi-sensor observation. CAAI Transactions on Intelligent Systems, 12(4), 443–449.

Zhengjia, W. A. N. G., Zhengqiao, X. I. A., Chujie, S. U. N., Xing, W. A. N. G., & Ming, L. I. (2019). Method of autonomous tracking location and obstacle avoidance based on multi-sensor information fusion. Chinese Journal of Sensors and Actuators, 23(5), 723–727.

Xiaolong, X., Shen, B., Yin, X., Khosravi, M., Huaming, W., Qi, L., & Wan, S. (2020). Edge server quantification and placement for offloading social media services in industrial cognitive IoV. IEEE Transactions on Industrial Informatics. https://doi.org/10.1109/TII.2020.2987994.

Yan-hui, W. E. I., Sheng, L. I. U., & Yan-bin, G. A. O. (2010). Research on integrated positioning method of two-wheeled self -balancing mobile robot. Machinery & Electronics, 10, 58–63.

Choh, K. Y. (2016). RSS-based indoor localization with PDR location tracking for wireless sensor networks. AEU-International Journal of Electronics and Communications 70(3), 250–256.

Zampella, F., Antonior, J.R., & Seco, F. (2014). Robust indoor positioning fusing PDR and RF technologies: The RFID and UWB case. In International conference on indoor positioning and indoor navigation. IEEE, (pp. 1–10).

Xu, X., Mo, R., Dai, F., Lin, W., Wan, S., Dou, W. (2020). Dynamic resource provisioning with fault tolerance for data-intensive meteorological workflows in Cloud. IEEE Transactions on Industrial Informatics 16(9), 6172–6181.

Oliver, A., Kang, S, & Macdonald, B. (2012). Using the kinect as a navigation sensor for mobile robotics. In: The Proceedings of 27th New Zealand Conference on Image and Computer Vision.New York: IEEE, 2012: pp. 509–514.

Li, Y., Yuan, P., & Han, W., et al. (2015). A mapping method based on EKF and point-segment matching for indoor environment.Industrial Electronics and Applications.New York: IEEE, 945–950.

Chengchegn, J. (2013). Based on the received signal strength measurements of indoor positioning technology research. JINAN: Shandong Normal University.

Zhao, Y., Fang, X., Huang, R., & Fang, Y. (January 2014). Joint Interference Coordination and Load Balancing for OFDMA Multi-hop Cellular Networks. IEEE Transactions on Mobile Computing, 13(1), 89–101.

Yao, L., Wu Y.W.A., & Yao, L., et al. (2017). An integrated IMUand UWB sensor based indoor positioning system. In International conference on indoor position-ing &indoor navigation. IEEE.

Xu, X., Qi, W., Qi, L., Dou, W., Sang-Bing Tsai, Md., & Bhuiyan, Z. A. (2020). Trust-aware Service Offloading for Video Surveillance in Edge Computing Enabled Internet of Vehicles. IEEE Transactions on Intelligent Transportation Systems. https://doi.org/10.1109/TITS.2020.2995622.

Xu, Z., & Zhuang, Y. (2009). Comparative study of methods for mobile robot localization. Journal of System Simulation 21 (07), 1891–1896.

Xian, W. (2016). Research on localization method of mobile robots based on multi-sensor data fusion. Beijing: Beijing Jiaotong Univer-Siyt.

Xiaolong, X., Zhang, X., Liu, X., Jiang, J., Lianyong Qi, Md., & Bhuiyan, Z. A. (2020). Adaptive Computation Offloading with Edge for 5G-Envisioned Internet of Connected Vehicles. IEEE Transactions on Intelligent Transportation Systems. https://doi.org/10.1109/TITS.2020.2982186.

Zhu, J., Zhao, J., Cui, C., & Liu C. (2019). Robustness design of UWB/IMU tightly integrated navigation and positioning system based on EKF. Computer Simulation (vol. 11).

Zhu, C., Zhao, D., Peng, S., Zhang, L. (2017). Indoor positioning method of micro inertial sensor aiding UWB. Journal of Henan Polytechnic University(Natural Science) 36(5), 100–105.

Zhang, B., Wang, Y., & Shao, X, Qin ,Q, & Fu X. (2018). Precise robot indoor localization based on information fusion of heterogeneous sensors. Control Engineering of China, 25(7), 1335–1343.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Liu, Yt., Sun, Rz., Zhang, Xn. et al. An autonomous positioning method for fire robots with multi-source sensors. Wireless Netw 30, 3683–3695 (2024). https://doi.org/10.1007/s11276-021-02566-6

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11276-021-02566-6