Abstract

Wireless sensor networks (WSNs) have recently received increasing attention in defense and civil applications. Automatic WSN fault detection and diagnosis is essential to assure system reliability. Proactive WSN fault diagnosis approaches use embedded functions that scan sensor nodes periodically to monitor the health condition of WSNs, but this may speed up the depletion of the limited energy in each sensor node. Thus, there is increasing interest in passive diagnosis approaches. In this paper, a WSN anomaly detection model based on an autoregressive (AR) model and Kuiper test-based passive diagnosis is proposed. First, an AR model with optimal order is developed from the normal working condition of WSNs using the Akaike information criterion. The AR model then acts as a filter to process future incoming signals from different unknown conditions. A health indicator based on the Kuiper test, which tests the similarity between the training error of the normal condition and the residual of the test conditions, is derived to indicate the health condition of the WSN. In this study, synthetic WSN data under different cases/conditions were generated and used to validate the approach. Experimental results show that the proposed approach can differentiate normal WSN conditions from faulty conditions. Overall, the results presented in this paper demonstrate that the approach is effective for WSN anomaly detection.

1 Introduction

Recently, wireless sensor networks (WSNs) have emerged as an important technology for different research areas [1, 2]. WSNs are also increasingly deployed to provide a rich, multi-dimensional view of different environments in military, scientific, and commercial applications [1]. A WSN typically consists of a large number of small, inexpensive, low-power sensor nodes working together to monitor and measure the surrounding environment. Most often, these sensor nodes are deployed in harsh environments, where they are vulnerable to physical damage, environmental interference, and power depletion. With a large amount of data being acquired by each sensor node, transmitting the collected data to the sink node is expensive, and congestion may often occur during data transfer [3–7]. A large-scale WSN therefore needs a fault management solution that is both cost effective and energy effective. In order to enhance the overall reliability of the system with limited power consumption, the aim of this study is to detect anomalies and faults during WSN operation. The signal used for the analysis and detection is based on the operation information provided by the Zigbee communication protocol [8, 9].

There are numerous studies on anomaly detection and fault diagnosis in industrial electronics [10–16], but few of them focus on WSNs [17]. Due to the self-organizing and ad-hoc working mechanism of WSNs, it is often difficult to observe the structures, interactions, and operations of WSNs from an external monitoring system. Existing approaches to WSN fault diagnosis are mostly proactive [10, 11, 18–22], because they can be embedded in a system to detect faulty sensor nodes and scan the health condition periodically. For example, Luo et al. [23] propose a fault-tolerant detection scheme to address the problem of measurement error and sensor fault. Their method also optimizes the neighborhood size to relieve the problem of limited energy in each sensor node. A cross-validation-based technique is proposed to detect sensor faults on-line [24]. Zhao et al. [25] propose a residual energy scan (eScan) technology that provides an overview of the energy resource distribution for monitoring WSN health conditions. A more recent technique, called Sympathy, enables WSNs to collect system metrics on connectivity and data flow for detecting and analyzing sensor node failures [26]. Although they monitor sensor conditions effectively, these proactive approaches often have the undesirable effect of speeding up energy depletion, which in turn reduces the lifespan of the whole WSN.

In order to overcome the disadvantages of proactive approaches, passive diagnosis techniques, which incur neither additional energy consumption on WSN nodes nor extra traffic load on the network, appear to have significant advantages. Zaidi et al. [27] introduce a passive diagnosis scheme based on principal component analysis (PCA) and the Chi square test to detect WSN anomalies in real time. Liu et al. [17] propose an online passive approach based on a probabilistic inference model to diagnose WSNs from the data collected at the sink node. Zhao et al. [28] diagnose sensor nodes by analyzing the output signals of WSNs with a chaos particle swarm optimization (PSO) algorithm and a support vector machine (SVM). They show that their approach obtains a relatively high detection accuracy compared to other methods, such as PSO–SVM and back-propagation neural networks. However, compared with the studies on proactive approaches, studies on passive diagnosis approaches for WSNs are limited. Recent research findings show that approaches based on non-parametric tests and the autoregressive (AR) model are useful in machine health monitoring [29–31]. As the AR model is well known for highlighting fault-affected signals effectively, and non-parametric tests are reliable for classifying different operating conditions, we propose in this paper a passive diagnosis approach based on a non-parametric test and an AR model for anomaly detection in WSNs. Specifically, an AR model with optimal order is first built from normal data. It then acts as a filter that processes the testing data to highlight the fault-affected signals for comparative analysis. The training error of the normal condition and the residual of the test conditions are compared using the Kuiper test (a non-parametric test), and the degree of healthiness or faultiness is measured by a statistical distance. Anomaly detection is conducted by comparing the statistical distances corresponding to different conditions. Our proposed method poses no additional energy load on the sensors; it is deployed only at the sink node, the central control center where energy supply is never a concern. Hence, the energy consumption of our approach is not a problem.

The contributions of this paper can be summarized as follows: A new WSN passive diagnosis approach is introduced. A health indicator based on the Kuiper test is proposed to indicate the health conditions of WSNs. Extensive synthetic WSN data are used to validate the effectiveness of the method. Simulation results show that the health indicator performs better than standard statistical parameters. It is also found that Kuiper’s statistic has the best performance, in that the separation between the faulty conditions and the reference normal condition of WSNs is clear, which is useful for anomaly detection.

The rest of this paper is organized as follows. In Sect. 2, the theoretical background of the Kolmogorov–Smirnov (K–S) test, the Kuiper test, and the AR model is briefly reviewed. The numerical simulation of WSNs and the proposed approach for WSN fault diagnosis are introduced in Sect. 3. Diagnosis of congestion in WSNs and anomaly detection of faulty nodes in WSNs are reported and discussed in Sects. 4 and 5, respectively. Finally, conclusions are drawn in Sect. 6.

2 Theoretical background

This section presents a brief overview of the methods used in the article: the K–S test, the Kuiper test, and the AR model. The AR model is used to filter out normal signals and thereby highlight fault-affected signals. The filtered signals are then compared using the K–S test and the Kuiper test to measure their similarity. The fundamentals and details of these methods are well described in Kay [32] and Press et al. [33].

2.1 Kolmogorov–Smirnov test and Kuiper test

The K–S test and the Kuiper test are non-parametric, distribution-free statistical tests used to determine whether two given distributions are similar or significantly different. With the test results, it is possible to compare test states with known healthy states and determine whether or not they are statistically similar. Note that applying a non-parametric test to fault diagnosis assumes that anomalies in WSNs, such as node failures and congestion, change the cumulative distribution function (CDF) of the data travel time (TT) series.

Suppose \( x_{1}, x_{2}, \ldots, x_{N} \) are independent and identically distributed (iid) random variables. The empirical CDF \( F_{N}(x) \) of the sample can then be defined as

\[ F_{N}(x) = \frac{1}{N}\sum_{i=1}^{N} I(x_{i} \le x), \]

where \( I(\cdot) \) is the indicator function, equal to 1 when its argument is true and 0 otherwise.

For comparing two different CDFs \( F_{{N_{1} }} (x) \) and \( F_{{N_{2} }} (x) \), the K–S statistic D quantifies the maximum value of the absolute difference between them. Thus, the K–S statistic is defined as follows:

\[ D = \max_{-\infty < x < \infty} \left| F_{{N_{1} }}(x) - F_{{N_{2} }}(x) \right| . \]

The function used to compute the probability associated with D is defined as follows [33]:

\[ Q_{KS}(\lambda) = 2\sum_{j=1}^{\infty} (-1)^{j-1} e^{-2 j^{2} \lambda^{2}}, \]

where \( \lambda = \left( {\sqrt {N_{e} } + 0.12 + \frac{0.11}{{\sqrt {N_{e} } }}} \right)D \) and \( N_{e} = \frac{{N_{1} N_{2} }}{{N_{1} + N_{2} }} \). Here \( N_{e} \) is the effective number of data points, and \( N_{1} \) and \( N_{2} \) are the numbers of data points in the first and second distributions, respectively. \( Q_{KS}(\lambda) \) is a monotonic function with limits \( Q_{KS}(0) = 1 \) and \( Q_{KS}(\infty) = 0 \).
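As a rough illustration of the two-sample K–S computation described above, the following Python sketch evaluates D and the associated probability using only the standard library. The function names are hypothetical, and the series for Q KS (λ) is the standard form given in Press et al. [33]; it is a sketch under those assumptions, not the authors' implementation.

```python
import math
from bisect import bisect_right


def ks_statistic(sample1, sample2):
    """Two-sample K-S statistic D = max |F_N1(x) - F_N2(x)|."""
    s1, s2 = sorted(sample1), sorted(sample2)
    n1, n2 = len(s1), len(s2)
    # Both empirical CDFs are step functions, so the maximum difference
    # is attained at one of the observed data points.
    return max(
        abs(bisect_right(s1, x) / n1 - bisect_right(s2, x) / n2)
        for x in s1 + s2
    )


def q_ks(lam, max_terms=100000, tol=1e-12):
    """Q_KS(lambda) = 2 * sum_{j>=1} (-1)^(j-1) * exp(-2 j^2 lambda^2)."""
    if lam <= 0.0:
        return 1.0  # limiting value Q_KS(0) = 1
    total, sign = 0.0, 1.0
    for j in range(1, max_terms + 1):
        term = math.exp(-2.0 * j * j * lam * lam)
        total += sign * term
        if term < tol:
            break
        sign = -sign
    # Clamp to [0, 1] to absorb truncation and rounding error.
    return min(1.0, max(0.0, 2.0 * total))


def ks_probability(d, n1, n2):
    """Probability that D exceeds the observed value under the null hypothesis."""
    ne = n1 * n2 / (n1 + n2)  # effective number of data points
    lam = (math.sqrt(ne) + 0.12 + 0.11 / math.sqrt(ne)) * d
    return q_ks(lam)
```

A small D (and a probability close to 1) suggests that the two samples come from the same distribution, whereas a large D (and a probability close to 0) suggests that they differ.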

The main characteristic of the K–S test is that it is invariant under rescaling of x. It tends to be more sensitive around the median value and less sensitive at the extreme ends of the distribution. Differentiating small changes is therefore difficult and remains a major challenge [29]. One way to improve the performance of the K–S test on the tails is to replace D by a so-called stabilized statistic, such as Kuiper’s statistic [33], which is defined as

\[ V = D_{+} + D_{-} = \max_{-\infty < x < \infty} \left[ F_{{N_{1} }}(x) - F_{{N_{2} }}(x) \right] + \max_{-\infty < x < \infty} \left[ F_{{N_{2} }}(x) - F_{{N_{1} }}(x) \right] . \]

The function used to compute the probability associated with V is defined as follows [33]:

\[ Q_{KP}(\lambda) = 2\sum_{j=1}^{\infty} \left( 4 j^{2} \lambda^{2} - 1 \right) e^{-2 j^{2} \lambda^{2}}, \]

where \( \lambda = \left( {\sqrt {N_{e} } + 0.155 + \frac{0.24}{{\sqrt {N_{e} } }}} \right)V \) and \( N_{e} = \frac{{N_{1} N_{2} }}{{N_{1} + N_{2} }} \). Here \( N_{e} \) is the effective number of data points, and \( N_{1} \) and \( N_{2} \) are the numbers of data points in the first and second distributions, respectively. \( Q_{KP}(\lambda) \) is a monotonic function with limits \( Q_{KP}(0) = 1 \) and \( Q_{KP}(\infty) = 0 \). In this article, the K–S statistic, D, and Kuiper’s statistic, V, are both computed and compared for decision-making.
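The difference between the two statistics can be seen in a small Python sketch. Kuiper's statistic is taken here as the sum of the largest positive and largest negative deviations between the two empirical CDFs, and the series for Q KP (λ) follows Press et al. [33]; the function names and the cut-off below λ = 0.4 (where the probability is treated as 1, as in that reference) are assumptions of this sketch.

```python
import math
from bisect import bisect_right


def kuiper_statistic(sample1, sample2):
    """Two-sample Kuiper statistic V = D+ + D-."""
    s1, s2 = sorted(sample1), sorted(sample2)
    n1, n2 = len(s1), len(s2)
    d_plus = d_minus = 0.0
    for x in s1 + s2:
        diff = bisect_right(s1, x) / n1 - bisect_right(s2, x) / n2
        d_plus = max(d_plus, diff)     # largest excess of F_N1 over F_N2
        d_minus = max(d_minus, -diff)  # largest excess of F_N2 over F_N1
    return d_plus + d_minus


def q_kp(lam, terms=100):
    """Q_KP(lambda) = 2 * sum_{j>=1} (4 j^2 lambda^2 - 1) exp(-2 j^2 lambda^2)."""
    if lam < 0.4:
        return 1.0  # the probability is 1 to high accuracy for small lambda
    total = 0.0
    for j in range(1, terms + 1):
        a2 = j * j * lam * lam
        total += (4.0 * a2 - 1.0) * math.exp(-2.0 * a2)
    return min(1.0, max(0.0, 2.0 * total))


def kuiper_probability(v, n1, n2):
    """Probability that V exceeds the observed value under the null hypothesis."""
    ne = n1 * n2 / (n1 + n2)  # effective number of data points
    lam = (math.sqrt(ne) + 0.155 + 0.24 / math.sqrt(ne)) * v
    return q_kp(lam)
```

For example, for the samples `[4, 5, 6, 7]` and `[1, 2, 9, 10]`, which share a similar center but differ in both tails, the empirical CDFs differ by at most 0.5 in each direction, so D is 0.5 while V is 1.0. This illustrates why V responds more strongly to changes in the tails.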

2.2 Autoregressive model

The AR model specifies a linear relationship between the output variable and its own previous values, and describes a random process [30, 31, 34]. Following the sign convention of Kay [32], the AR model is defined as below:

\[ x[n] = -\sum_{i=1}^{p} a[i]\,x[n-i] + e[n], \]

where a[i] are the coefficients of the model, p is the order of the AR model, and e[n] is white noise with zero mean and variance \( \sigma^{2} \). In this article, the coefficients of the AR model are estimated using the Levinson–Durbin recursion (LDR) method, because LDR is more efficient than Gaussian elimination and its order-recursive property is favorable for selecting the order of the AR model [34].
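The Levinson–Durbin recursion mentioned above can be sketched in a few lines of Python. This sketch assumes the sign convention x[n] = −Σ a[i] x[n−i] + e[n] (as in Kay [32]) and a biased autocorrelation estimate; both choices, and the function names, are assumptions made for illustration. Because the recursion produces the prediction error variance of every lower order along the way, it is convenient for order selection.

```python
def autocorr(x, max_lag):
    """Biased sample autocorrelation r[0..max_lag] of the mean-removed series."""
    n = len(x)
    mean = sum(x) / n
    xc = [v - mean for v in x]
    return [
        sum(xc[t] * xc[t + k] for t in range(n - k)) / n
        for k in range(max_lag + 1)
    ]


def levinson_durbin(r, order):
    """Solve the Yule-Walker equations recursively.

    Returns (a, variances) where a[0] = 1 and a[1..order] are the AR
    coefficients, and variances[m] is the prediction error variance of
    the order-m model for m = 0..order.
    """
    a = [1.0]
    err = r[0]
    variances = [err]
    for m in range(1, order + 1):
        # Reflection coefficient for the step from order m-1 to order m.
        acc = r[m] + sum(a[i] * r[m - i] for i in range(1, m))
        k = -acc / err
        # Update the coefficients of orders 1..m-1 and append the new one.
        a = [1.0] + [a[i] + k * a[m - i] for i in range(1, m)] + [k]
        err *= 1.0 - k * k
        variances.append(err)
    return a, variances
```

For the exact autocorrelation sequence of an AR(1) process with coefficient 0.5, `levinson_durbin([1.0, 0.5, 0.25], 2)` yields coefficients close to `[1, -0.5, 0]`: the second-order term vanishes because the first-order model already explains the correlation.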

It is critical to select the optimal order of the AR model, because spectral peaks will be smoothed if the order is too small, while statistical instability and spurious spectral peaks will appear if the order is too large [32]. Many existing methods can be used to determine the optimal order, such as the Akaike information criterion (AIC), the Bayesian information criterion (BIC), and the Hannan–Quinn criterion (HQ). It is worth noting that the AIC method can usually determine an accurate model order [35]. Thus, the popular AIC method is used, and the optimal AR model order is the one at which AIC attains its minimum value as a function of p. The AIC function is defined as follows:

\[ \text{AIC}(p) = N\ln \left( \sigma^{2} \right) + 2p, \]

where N is the number of samples, and \( \sigma^{2} \) and p are the estimated variance of the white noise and the order of the AR model, respectively. In this paper, normal WSN data are used to construct the AR model, and the AR technique is used to pre-whiten the TT series data for testing.
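A minimal sketch of AIC-based order selection is given below. It assumes the common form AIC(p) = N ln(σ²) + 2p, with σ² taken from a Levinson–Durbin recursion on a biased autocorrelation estimate; the synthetic AR(2) series, the seed, and all function names are hypothetical and serve only to exercise the code.

```python
import math
import random


def autocorr(x, max_lag):
    """Biased sample autocorrelation r[0..max_lag] of the mean-removed series."""
    n = len(x)
    mean = sum(x) / n
    xc = [v - mean for v in x]
    return [
        sum(xc[t] * xc[t + k] for t in range(n - k)) / n
        for k in range(max_lag + 1)
    ]


def prediction_error_variances(r, max_order):
    """Levinson-Durbin recursion; returns sigma^2 for orders 0..max_order."""
    a = [1.0]
    err = r[0]
    variances = [err]
    for m in range(1, max_order + 1):
        acc = r[m] + sum(a[i] * r[m - i] for i in range(1, m))
        k = -acc / err
        a = [1.0] + [a[i] + k * a[m - i] for i in range(1, m)] + [k]
        err *= 1.0 - k * k
        variances.append(err)
    return variances


def select_order_aic(x, max_order):
    """Return (best_order, aic_values) with AIC(p) = N ln(sigma_p^2) + 2p."""
    n = len(x)
    variances = prediction_error_variances(autocorr(x, max_order), max_order)
    aic = [n * math.log(v) + 2 * p for p, v in enumerate(variances)]
    best = min(range(len(aic)), key=aic.__getitem__)
    return best, aic


# Synthetic stand-in for a training series: an AR(2) process (hypothetical data).
rng = random.Random(0)
series = [0.0, 0.0]
for _ in range(3000):
    series.append(0.6 * series[-1] - 0.3 * series[-2] + rng.gauss(0.0, 1.0))

order, aic = select_order_aic(series, max_order=10)
print("selected order:", order)
```

Because the penalty term 2p is small relative to N for long series, AIC can select an order somewhat above the true one; the sketch therefore reports the minimizing order rather than asserting that it equals 2.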

3 Numerical simulation of WSN and proposed diagnosis approach

3.1 Numerical simulation of WSN

A Zigbee-based WSN is used to verify the proposed approach in this article [8, 9]. The topology is randomly generated from an adjacency matrix with transmission costs ranging from 1 to 200, so that it includes both dense and less dense clusters. It is drawn with Graphviz, as shown in Fig. 1. A network size of 100 nodes is considered a practical choice for most WSN applications. There is always one sink node (central control) in the network. It operates with an unlimited power supply, and its ID is denoted as 0 (blue hexagon). The other sensor node IDs are labeled from 1 to 100 and are displayed as green circles [36].

Data are randomly picked up, packed, and transmitted from the source nodes to the sink node along their own shortest routes defined by AODV routing. Because successfully transmitted packets always follow the shortest available path under the AODV routing protocol, TT changes when anomalies occur, which improves the sensitivity and performance of our proposed algorithm. Two parameters of the WSN model, the traffic condition and the number of faulty nodes, are controlled to simulate different network conditions. The other parameters, including a 127-byte packet size, an 8 kb buffer size, 3 backoffs, 2 retransmissions, and the maximum communication range, are fixed for evaluating the performance of the proposed method. When one or more sensors fail, the data links that involve the faulty nodes are broken, and the nodes using a broken link have to find another route. The newly determined route is still the shortest available route, but it is longer than the original route because of the faulty nodes. As a result, a longer TT is recorded. In this paper, the different conditions of WSNs, including the normal condition (no failure) and the 1-node, 2-node, 3-node, 4-node, and 5-node faulty conditions, are thoroughly examined. As sensors with higher data rates, such as accelerometers, may be used in WSNs, three traffic conditions, namely the ideal case (no congestion), light congestion, and heavy congestion, are considered in the simulations in order to provide TT data for a more comprehensive assessment. The data are collected randomly under different traffic conditions to simulate congestion in the network. Congestion causes packet loss in data transmission to the sink: there is 1–5 % packet loss in the light congestion case and 50–60 % packet loss in the heavy congestion case.
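The effect of a node failure on the route length can be illustrated with a toy example. The sketch below uses Dijkstra's algorithm as a simple stand-in for route discovery and treats the accumulated link cost as a proxy for TT; the four-node graph, its costs, and the function name are hypothetical and are not taken from the simulated 100-node network.

```python
import heapq


def shortest_cost(graph, source, sink, failed=frozenset()):
    """Cost of the cheapest route from source to sink that avoids failed nodes.

    graph maps each node to a dict {neighbor: link_cost}.
    Returns float('inf') if no route exists.
    """
    inf = float("inf")
    if source in failed or sink in failed:
        return inf
    dist = {source: 0}
    heap = [(0, source)]
    while heap:
        d, u = heapq.heappop(heap)
        if u == sink:
            return d
        if d > dist.get(u, inf):
            continue  # stale heap entry
        for v, w in graph[u].items():
            if v in failed:
                continue  # links through a faulty node are broken
            nd = d + w
            if nd < dist.get(v, inf):
                dist[v] = nd
                heapq.heappush(heap, (nd, v))
    return inf


# Toy topology: node 0 is the sink, node 3 is the source (hypothetical costs).
toy_graph = {
    0: {1: 10, 2: 50},
    1: {0: 10, 3: 20},
    2: {0: 50, 3: 30},
    3: {1: 20, 2: 30},
}

normal_cost = shortest_cost(toy_graph, 3, 0)              # route 3 -> 1 -> 0
faulty_cost = shortest_cost(toy_graph, 3, 0, failed={1})  # route 3 -> 2 -> 0
print(normal_cost, faulty_cost)
```

In this toy network the route cost rises from 30 to 80 when node 1 fails, which mirrors the longer TT recorded under faulty conditions in the simulation.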

In this study, TT is defined as the time required for a data packet to be transmitted from a source node to the sink node. Extensive simulations were conducted to generate the TT data for analysis. In the normal condition, over 100 k data samples were generated. These data are used for training the AR model and as the reference in non-parametric testing. In each faulty condition, ten groups of data were simulated, each with over 2 k data samples. These data are used to assess the performance of the proposed WSN diagnosis method.

3.2 Kuiper test and autoregressive model-based WSN diagnosis approach

Recent research findings show that approaches based on non-parametric tests and the AR model are useful techniques for machine health monitoring [29–31, 34, 37]. For example, Kar and Mohanty show that the K–S test can deliver reliable results for diagnosing bearing faults through vibration signals [29]. The AR model, together with the K–S test, has also been shown to be able to perform bearing fault diagnosis and gear performance prognosis [30, 31].

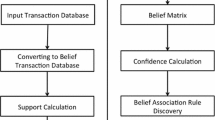

Bearings and gears are both rotating machine components, and their characteristics are very different from those of WSNs. In this paper, we extend the framework based on AR modeling and non-parametric tests to WSN fault analysis. The overall framework of the proposed methodology is shown in Fig. 2. The TT of the data acquired by a node and transferred to the sink node of the WSN is analyzed to indicate the health condition of the WSN. Since the estimated AR parameters are inconsistent when too low a model order is selected, while the variance increases significantly when too high a model order is used [38], the optimal AR model order of the TT process is selected using the AIC method in the normal condition. For performance assessment, the AR model with the optimal order is used as a linear prediction filter to pre-whiten the test condition data. Since anomalous conditions in a WSN, such as congestion and faulty nodes, cause its signals to deviate from the normal ones, the training error and the prediction error are compared using the K–S test and the Kuiper test to indicate the condition of the test data.
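The overall flow described above (train an AR model on normal data, filter the test data, compare the residuals with the Kuiper test) can be sketched end to end as follows. This is only an illustration under stated assumptions: the AR order is fixed at 4 instead of being selected by AIC, the data are a synthetic AR(1) series rather than simulated TT data, the "faulty" series simply has a larger noise level, and all names are hypothetical.

```python
import random
from bisect import bisect_right


def fit_ar(x, order):
    """Fit an AR model by Levinson-Durbin; returns (training_mean, a), a[0] = 1."""
    n = len(x)
    mean = sum(x) / n
    xc = [v - mean for v in x]
    r = [sum(xc[t] * xc[t + k] for t in range(n - k)) / n for k in range(order + 1)]
    a, err = [1.0], r[0]
    for m in range(1, order + 1):
        k = -(r[m] + sum(a[i] * r[m - i] for i in range(1, m))) / err
        a = [1.0] + [a[i] + k * a[m - i] for i in range(1, m)] + [k]
        err *= 1.0 - k * k
    return mean, a


def ar_residual(x, mean, a):
    """Pre-whiten x with the trained filter: e[n] = sum_i a[i] * (x[n-i] - mean)."""
    p = len(a) - 1
    xc = [v - mean for v in x]
    return [sum(a[i] * xc[n - i] for i in range(p + 1)) for n in range(p, len(xc))]


def kuiper_statistic(sample1, sample2):
    """Two-sample Kuiper statistic V = D+ + D-, used here as the health indicator."""
    s1, s2 = sorted(sample1), sorted(sample2)
    n1, n2 = len(s1), len(s2)
    d_plus = d_minus = 0.0
    for x in s1 + s2:
        diff = bisect_right(s1, x) / n1 - bisect_right(s2, x) / n2
        d_plus, d_minus = max(d_plus, diff), max(d_minus, -diff)
    return d_plus + d_minus


def simulate(n, noise_std, seed):
    """Synthetic AR(1) series standing in for a TT series (hypothetical data)."""
    rng = random.Random(seed)
    x = [0.0]
    for _ in range(n - 1):
        x.append(0.7 * x[-1] + rng.gauss(0.0, noise_std))
    return x


normal_train = simulate(5000, 1.0, seed=1)
normal_test = simulate(2000, 1.0, seed=2)
faulty_test = simulate(2000, 2.0, seed=3)  # larger noise mimics an anomaly

mean, a = fit_ar(normal_train, order=4)
train_error = ar_residual(normal_train, mean, a)
hi_normal = kuiper_statistic(train_error, ar_residual(normal_test, mean, a))
hi_faulty = kuiper_statistic(train_error, ar_residual(faulty_test, mean, a))
print("normal:", round(hi_normal, 3), "faulty:", round(hi_faulty, 3))
```

The health indicator of the normal test series stays close to zero, while that of the altered series is markedly larger, which is the kind of separation the proposed indicator relies on.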

4 Diagnosis of the congestion in WSNs

In practical applications, WSNs have different traffic conditions and traffic patterns. When there is little data, packet transmission is not delayed by congestion, which is called the ideal case in this paper. Transmission congestion happens when a large amount of data must be transmitted to the sink node at the same time. Traffic congestion becomes increasingly worse as the data rate increases.

Data of normal condition under ideal case are used to train the AR model. AIC method is used to determine the optimal order of the AR model. In this study, it is noticed that using the normal condition data under ideal case, the AIC function attains its minimum value at an order of 38. Thus, an AR(38) model is selected, and is used to filter the testing TT data.

In theory, the testing TT data under the ideal case should exhibit characteristics similar to those of the reference data, while sample data corresponding to the congestion cases should deviate from the reference data pattern. The results shown in Figs. 3, 4, and 5 corroborate this statement: the ideal case has a small K–S statistic and Kuiper’s statistic, whereas the congestion cases exhibit a large K–S statistic and Kuiper’s statistic. The heavier the congestion in the WSN is, the higher the K–S statistic and Kuiper’s statistic are. Although the K–S test and the Kuiper test exhibit similar characteristics in differentiating the traffic conditions, Kuiper’s statistic achieves significantly better performance than the K–S statistic, in that larger deviations are found between the different cases. For example, between light and heavy congestion, the mean deviation is 0.1 for Kuiper’s statistic while it is only 0.04 for the K–S statistic. Standard statistics from the different traffic conditions, such as skewness, kurtosis, and crest factor, were also studied, as shown in Fig. 6. These standard statistics have larger variations and overlap heavily, so they are unreliable for distinguishing WSN traffic conditions. It is also worth noting that the standard statistical measures are unable to classify the severity of congestion.
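For reference, the standard statistics mentioned above can be computed as in the following Python sketch. The definitions used here are common ones (skewness and kurtosis from central moments, with kurtosis not reduced by 3, and crest factor as peak absolute value over root mean square); whether these exact variants match the ones used for Fig. 6 is an assumption.

```python
import math


def central_moment(x, k):
    """k-th central moment of the sample."""
    mean = sum(x) / len(x)
    return sum((v - mean) ** k for v in x) / len(x)


def skewness(x):
    """Third standardized moment: m3 / m2^(3/2)."""
    return central_moment(x, 3) / central_moment(x, 2) ** 1.5


def kurtosis(x):
    """Fourth standardized moment: m4 / m2^2 (about 3 for Gaussian data)."""
    return central_moment(x, 4) / central_moment(x, 2) ** 2


def crest_factor(x):
    """Peak absolute value divided by the root mean square."""
    rms = math.sqrt(sum(v * v for v in x) / len(x))
    return max(abs(v) for v in x) / rms
```

Each of these measures condenses the whole series into a single moment-based number, whereas the K–S and Kuiper statistics compare entire empirical distributions.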

5 Detection of fault sensor node(s) in WSNs

During the operation of WSNs, sensor node(s) may fail. Ten groups of data corresponding to different conditions, such as normal (no faulty node), 1-node fault, 2-node fault, 3-node fault, 4-node fault, and 5-node fault, in three different traffic cases were simulated. Normal data in different traffic cases are used to train the AR model, and detection of faulty conditions is conducted in individual traffic case.

5.1 Ideal case

The AR(38) model is used to filter the test sample data in the ideal case. The K–S test and the Kuiper test are used to compare the similarity between the prediction error and the training error of the AR model. The results are shown in Figs. 7 and 8. The K–S statistics corresponding to different conditions overlap heavily, and there is also a large variation in the K–S statistic for the same number of failed sensor nodes. Kuiper’s statistic shows results similar to those of the K–S statistic; nevertheless, it separates the normal condition from the faulty conditions clearly. Although an increasing trend in the mean statistic is observed in Fig. 9, both the K–S statistic and Kuiper’s statistic fail to differentiate among the faulty conditions. Figure 10 shows the standard statistics, such as skewness, kurtosis, and crest factor, for the different faulty conditions under the ideal case. None of these measures can identify the different conditions reliably. They also fail to separate the normal condition from the faulty conditions.

5.2 Light congestion case

Data of the normal condition under light congestion are used to train the AR model. The optimal order of the AR model is 24. Thus, an AR(24) model is used to filter the test sample data under light congestion. The K–S test and the Kuiper test are then used to compare the similarity between the prediction error and the reference.

The results are shown in Figs. 11, 12, and 13. Symptoms similar to those of the ideal case are found: a large variation in the statistics exists, and the statistics fail to differentiate among the faulty conditions. Kuiper’s statistic performs better than the K–S statistic because a larger and clearer deviation between the normal condition and the faulty conditions can be found. Figure 14 shows the standard statistics of the different faulty conditions under the light congestion case. The results indicate that none of these measures can identify the different conditions reliably. They also fail to separate the normal condition from the faulty conditions.

5.3 Heavy congestion case

Normal condition data under heavy congestion are used to train the AR model. The optimal order of the model is 28, and an AR(28) model is then used to filter the test sample data. The K–S test and the Kuiper test are used to compare the similarity between the prediction error and the reference.

The results are shown in Figs. 15, 16, 17 and 18. Results similar to those of the ideal case and the light congestion case are observed: a large variation in the statistics exists, and the statistics fail to differentiate among the faulty conditions. Kuiper’s statistic performs better than the K–S statistic: it indicates a much larger and clearer deviation between the normal condition and the faulty conditions.

6 Conclusion

As WSNs usually involve a large number of sensors working in a harsh environment, automatic detection and diagnosis of faulty nodes is critical for maintaining stable WSN operation. This paper demonstrates that sensor node fault detection can be effectively performed by using the Kuiper test together with an AR model. Our study shows that the prediction error of an AR model can serve as a reliable measure for indicating abnormal conditions of WSNs. The statistics of the K–S test and the Kuiper test have also been thoroughly studied in this paper, and our analysis shows that both statistics can indicate the health conditions of WSNs. Comparing the two in detail, Kuiper’s statistic clearly performs better than the K–S statistic, in the sense that it provides a clear separation between the faulty conditions and the normal condition. Our results also indicate that other widely used statistical parameters, such as skewness, kurtosis, and crest factor, fall short in classifying traffic congestion and faulty node(s) in WSNs. The results obtained in this study show that the proposed approach is capable of detecting abnormal conditions such as the degree of traffic congestion and the failure of sensor nodes. It is important to note that the proposed approach does not consume additional energy at the sensor nodes, which is essential for maintaining robust and reliable WSN operation. However, the proposed method is still unable to determine the number of faulty nodes. Future work will thus involve developing a probabilistic or statistical model for estimating the number of faulty sensor nodes.

References

Akyildiz, I., Su, W., Sankarasubramaniam, Y., & Cayirci, E. (2002). Wireless sensor networks: a survey. Computer Networks, 38(4), 393–422.

Megerian, S., Koushanfar, F., Qu, G., Veltri, G., & Potkonjak, M. (2002). Exposure in wireless sensor networks: theory and practical solutions. Wireless Networks, 8, 443–454.

Ni, K., & Pottie, G. (2012). Sensor network data fault detection with maximum a posteriori selection and Bayesian modeling. ACM Transactions on Sensor Networks, 8(3), 23:1–23:21.

Yu, M., Mokhtar, H., & Merabti, M. (2007). Fault management in wireless sensor networks. IEEE Wireless Communications, 14(6), 13–19.

Liu, W., Zhang, Y., Lou, W., & Fang, Y. (2006). A robust and energy-efficient data dissemination framework for wireless sensor networks. Wireless Networks, 12, 465–479.

Rosberg, Z., Liu, R. P., Dinh, T. L., Dong, Y. F., & Jha, S. (2010). Statistical reliability for energy efficient data transport in wireless sensor networks. Wireless Networks, 16, 1913–1927.

Anisi, M. H., Abdullah, A. H., & Razak, S. A. (2013). Energy-efficient and reliable data delivery in wireless sensor networks. Wireless Networks, 19, 495–505.

Sung, T.-W., Wu, T.-T., Yang, C.-S., & Huang, Y.-M. (2010). Reliable data broadcast for Zigbee wireless sensor networks. International Journal on Smart Sensing and Intelligent Systems, 3(3), 504–520.

Zigbee: Setting standards for energy-efficient control networks, Schneider, http://www2.schneider-electric.com/documents/support/white-papers/40110601_Zigbee_EN.pdf.

AboElFotoh, H. M. F., Iyengar, S. S., & Chakrabarty, K. (2005). Computing reliability and message delay for cooperative wireless distributed sensor networks subject to random failures. IEEE Transactions on Reliability, 54(1), 145–155.

AboElFotoh, H. M. F., Elmallah, E., & Hassanein, H. (2006). On the reliability of wireless sensor networks. In IEEE international conference on communications (pp. 3455–3460).

Jin, X., Ma, E. W. M., Cheng, L. L., & Pecht, M. (2012). Health monitoring of cooling fan based on Mahalanobis distance with mRMR feature selection. IEEE Transactions on Instrumentation and Measurement, 61(8), 2222–2229.

Jin, X., & Chow, T. W. S. (2013). Anomaly detection of cooling fan and fault classification of induction motor using Mahalanobis–Taguchi system. Expert Systems with Applications, 40(15), 5787–5795.

Son, J.-D., Niu, G., Yang, B.-S., Hwang, D.-H., & Kang, D.-S. (2009). Development of smart sensors system for machine fault diagnosis. Expert Systems with Applications, 36(9), 11981–11991.

Wang, Y., Miao, Q., Ma, E. W. M., Tsui, K.-L., & Pecht, M. G. (2013). Online anomaly detection for hard disk drive based on Mahalanobis distance. IEEE Transactions on Reliability, 62(1), 136–145.

Rabatel, J., Bringay, S., & Poncelet, P. (2011). Anomaly detection in monitoring sensor data for preventive maintenance. Expert Systems with Applications, 38(6), 7003–7015.

Liu, Y., Liu, K., & Li, M. (2010). Passive diagnosis for wireless sensor networks. IEEE/ACM Transactions on Networking, 18(4), 1132–1144.

Paradis, L., & Han, Q. (2007). A survey of fault management in wireless sensor networks. Journal of Network and Systems Management, 15(2), 171–190.

Jiang, P. (2009). A new method for node fault detection in wireless sensor networks. Sensors, 9(2), 1282–1294.

Krishnamachari, B., & Iyengar, S. (2004). Distributed bayesian algorithms for fault-tolerant event region detection in wireless sensor networks. IEEE Transactions on Computers, 53(3), 241–250.

Lee, M.-H., & Choi, Y.-H. (2008). Fault detection of wireless sensor networks. Computer Communications, 31(14), 3469–3475.

Wang, S.-S., Yan, K.-Q., Wang, S.-C., & Li, C.-W. (2011). An integrated intrusion detection system for cluster-based wireless sensor networks. Expert Systems with Applications, 38(12), 15234–15243.

Luo, X., Dong, M., & Huang, Y. (2006). On distributed fault-tolerant detection in wireless sensor networks. IEEE Transactions on Computers, 55(1), 58–70.

Koushanfar, F., Potkonjak, M., & Sangiovanni-Vincentelli, A. (2003). On-line fault detection of sensor measurements. In Proceedings of IEEE sensors (pp. 974–979).

Zhao, Y. J., Govindan, R., & Estrin, D. (2002). Residual energy scan for monitoring sensor networks. In IEEE wireless communications and networking conference (pp. 356–362).

Ramanathan, N., Chang, K., Kapur, R., Girod, L., Kohler, E., & Estrin, D. (2005). Sympathy for the sensor network debugger. In Proceedings of the 3rd international conference on embedded networked sensor systems (pp. 255–267).

Zaidi, Z. R., Hakami, S., Landfeldt, B., & Moors, T. (2010). Real-time detection of traffic anomalies in wireless mesh networks. Wireless Networks, 16, 1675–1689.

Zhao, C., Sun, X., Sun, S., & Jiang, T. (2011). Fault diagnosis of sensor by chaos particle swarm optimization algorithm and support vector machine. Expert Systems with Applications, 38(8), 9908–9912.

Kar, C., & Mohanty, A. R. (2004). Application of KS test in ball bearing fault diagnosis. Journal of Sound and Vibration, 269(1–2), 439–454.

Cong, F., Chen, J., & Pan, Y. (2011). Kolmogorov–Smirnov test for rolling bearing performance degradation assessment and prognosis. Journal of Vibration and Control, 17(9), 1337–1347.

Wang, X., & Makis, V. (2009). Autoregressive model-based gear shaft fault diagnosis using the Kolmogorov–Smirnov test. Journal of Sound and Vibration, 324(3–5), 413–423.

Kay, S. M. (1988). Modern spectral estimation: theory and application. New Jersey: Prentice Hall.

Press, W. H., Flannery, B. P., Teukolsky, S. A., & Vetterling, W. T. (1992). Numerical recipes in Fortran 77: The art of scientific computing. Cambridge: Cambridge University Press.

Wang, W., & Wong, A. K. (2002). Autoregressive model-based gear fault diagnosis. Journal of Vibration and Acoustics, 124, 172–179.

Shittu, O., & Asemota, M. (2009). Comparison of criteria for estimating the order of autoregressive process: a Monte Carlo approach. European Journal of Scientific Research, 30(3), 409–416.

Lau, C. P. (2013). Failure detection of wireless sensor network. Master’s thesis, City University of Hong Kong, Hong Kong.

Gong, R., & Huang, S. H. (2012). A Kolmogorov–Smirnov statistic based segmentation approach to learning from imbalanced datasets: with application in property refinance prediction. Expert Systems with Applications, 39(6), 6192–6200.

Shibata, R. (1976). Selection of the order of an autoregressive model by Akaike’s information criterion. Biometrika, 63(1), 117–126.

Acknowledgments

The work described in this paper was supported in part by a collaborative project associated with China Electronic Product Reliability and Environmental Testing and Research Institute (CEPREI) from Guangdong Provincial Department of Science and Technology (Project number: 2011A011302002), in part by National Natural Science Foundation of China under Grant No. 51275474, in part by Zhejiang Provincial Natural Science Foundation of China under Grant No. LZ12E05002, and in part by the Open Research Project of the State Key Laboratory of Industrial Control Technology, Zhejiang University, China (No. ICT1443). The authors would like to thank Zhang Fan and Li Dong of CEPREI for providing technical support in this project, and would also like to thank the reviewers for their valuable comments and constructive suggestions to improve this paper.

Cite this article

Jin, X., Chow, T.W.S., Sun, Y. et al. Kuiper test and autoregressive model-based approach for wireless sensor network fault diagnosis. Wireless Netw 21, 829–839 (2015). https://doi.org/10.1007/s11276-014-0820-0

DOI: https://doi.org/10.1007/s11276-014-0820-0