Abstract

Water utilities render a public service that strongly influences sensitive domains such as human health, society, the economy, the environment and policy. In the context of diversification of water uses, scarcity of water resources, pollution of surface and underground resources, energy optimisation and public funding cuts, improving water utility performance represents a real challenge. A water utility is an organisation of human and material resources whose purpose is to use water resources to deliver drinking water to the population, which implies a trade-off between technical, economic, financial and organisational aspects. Performance is commonly measured only partially, by considering each aspect separately, so a real challenge consists in developing a methodology that includes all the aspects of water utility performance using multiple indicators. The current paper is based on an original conceptual vision of the water utility as an organisation focused on the customer and built on four fundamental pillars: human resources, financial resources, assets and environment. The proposed approach uses an elicitation procedure involving the decision maker to co-build comprehensive core indicators belonging to each pillar and then calibrates them according to the context of the water utility, decision maker preferences and ad-hoc performance thresholds. Finally, performance is assessed at both the individual and the overall scale. A specific metric is defined for the overall performance calculation; it should be monitored over a specified time period to check its trend and to redefine the implemented policy if the situation deteriorates. An illustration of the methodology is given for the water utility of Grenoble (France).

1 Introduction

Performance assessment represents a real challenge whose purpose is to guarantee the sustainability of any type of organisation, especially in a context of change characterised by natural resource depletion, funding cuts and global change. Aydin et al. (2014) discussed links between performance and the sustainability of water distribution systems. They developed a methodology for determining sustainability indices for pressure and water age. These sustainability indices are based on performance indicators including reliability, resiliency and vulnerability. Farmani and Butler (2013) investigated the relationship between urban form and the performance of a water distribution system. They concluded that the rate and type of urban development have major implications for the redesign and operation of existing water infrastructures in terms of total cost, water quality and system resilience.

For Nudurupati et al. (2011), performance measurement can be defined as the process of quantifying the effectiveness and efficiency of actions. It aims at helping decision makers to build strategies by monitoring performance trends and the effectiveness of past or potential actions. According to Eccles (1991), a fundamental evolution in performance measurement occurs as a result of the involvement of new categories of non-financial parameters as relevant factors for the performance assessment, with a weight similar to that of financial measures in the decision making process. Nazif et al. (2013) discussed the necessity of having a performance evaluation and monitoring system to provide the expected level of security in water distribution systems. They proposed using a Physical Performance Index (SPVI).

The need to develop methodologies for overall performance measurement seems relevant in order to take the multidimensional nature of performance assessment into account. This is even more valid for public utilities and for water utilities in particular. Priorities change, especially in developed countries, where the primary objective of providing the population with drinking water has been achieved. Water utilities have to adapt their organisation so that it is consistent with the new paradigm of water management, which encourages the integrated management of water resources by involving all of the concerned stakeholders at the level of the watershed (Varis 2005). Water utilities have to be aware of pressures that could harm their performance and that require continuous adaptation. In fact, the evolution of user behaviours, the diversification of water uses, water scarcity, funding cuts and asset ageing, combined with the decrease in water consumption and more restrictive legislation concerning environmental and human health protection, constitute serious obstacles to performance that should be properly addressed and managed.

Vilanova et al. (2015) give an interesting overview of practices all around the world in terms of the definition of performance measurements and indicators. The authors highlight the importance of considering the efficiency of resources, the effectiveness of services provided and the involvement of all of the stakeholders in addition to technical actors, according to collaborative processes that revolve around performance goals. The sustainability and environmental dimensions become relevant, in addition to the traditional dimensions of performance. For Berg (2007), the need for performance assessment and benchmarking metrics concerns all stakeholders: managers, policy makers and customers. The use of empirical and structured benchmarking procedures involving multiple water utilities makes it possible to quantify the specific and the relative performance of each one, leading to the identification of gaps and potential areas to be improved. Information availability appears to be a prerequisite for performance measurement. Berg (2007) considers that knowledge is power since providing information to stakeholders disturbs the status quo. For him, information is a catalyst to reform. Within this scope, the emergence of information systems (IS) encouraged by the development of informatics, networks and systems dedicated to data analysis leads to the generation of a large quantity of data that need to be computed according to specific indicators in order to provide decision aid for utility managers. Data collection could be carried out at either the utility or the utility network level for mutualisation and benchmarking purposes. 
Awareness of the data required to improve the decision making process has encouraged public authorities and water stakeholders, at both the European and the international scale, to implement a series of internal and external initiatives and programmes for data collection and for the definition of core indicators dedicated to water utility performance assessment. According to the ISO (2007), an indicator is a parameter or a value derived from a parameter, which provides information about a subject matter with a significance extending beyond that directly associated with a parameter value. In France, the information system on water and wastewater public services (Système d’information sur les services publics d’eau et d’assainissement, SISPEA) was created in 2008. It constitutes a national observatory that collects and compares mandatory performance indicators. The key step in this initiative was the definition of a common list of shared indicators (Guérin-Schneider and Nakhla 2003; Canneva and Guérin-Schneider 2011).

Other initiatives concern the creation of cross-border groups or networks such as the European Benchmarking Co-operation (EBC), an industry-based, not-for-profit benchmarking initiative for water services that helps water utilities to improve their performance.

Other benchmarking water utility networks can be cited: Africa’s Water Utilities Partnership (WUP), South East Asia Water Utility Network (SEAWUN) and PAWWA (Pacific Water and Wastes Association). Networks of utilities regulators such as the “Asociación de Entes Reguladores de Agua y Saneamiento de las Américas” (Association of Water and Sanitation Regulatory Entities of the Americas, ADERASA) also exist. Most benchmarking networks include the World Bank’s International Benchmarking Network for Water and Sanitation Utilities (IBNET, http://www.ib-net.org) that aims at developing core indicators and data collection approaches by promoting and supporting national and regional initiatives in order to make performance comparison possible and to create links between utilities. An overview of regional initiatives for performance benchmarking is given in Berg (2010, p. 63). The International Water Association (IWA), an organisation of water professionals, supports and encourages performance benchmarking initiatives and programmes. One of the relevant contributions in this field was the manual developed by the IWA task force on ‘performance indicators’ dedicated to performance indicators for water supply services (Alegre et al. 2000). The manual adopts an exhaustive approach by defining several indicators that cover large domains of water utility management, including both operation and investment activities. For each indicator, details about calculation formula, input data, time scale and frequency are provided. The diversity of built indicators constitutes a real advance in terms of data processing and valorisation for decision aid. The standardised approach used to define these indicators led to a broad consensus on water utilities worldwide. Even if the proposed indicators enhance the level of knowledge about water utilities, they reveal a disparity between utilities in terms of their ability to access information systems capable of gathering a large quantity of data. 
It appears that access to reliable information and the capacity to manage it in a reliable manner constitute a handicap for most utilities. The large palette of indicators should not be considered as a constraint but, instead, should provide the decision maker with a wide range of relevant indicators to choose from and the possibility of adapting them according to context and needs. The selection of indicators remains a challenge for utilities, especially small ones. To facilitate this task, national initiatives were conducted to guide water utilities in their choice by publishing national best practice manuals and by determining a set of mandatory indicators that water utilities must provide. Most of them are a breakdown of IWA indicators (Van de Berg and Danielko 2011, p. 135; ISO 2007; PAWWA 2012; CWWA 2009).

In the French context, the decree of 2 May 2007 (MEDD 2007) related to annual reports on price and service quality of water utilities establishes a list of mandatory indicators that must be provided. This legal obligation leads to a harmonisation of assessed indicators and allows comparison between utilities.

Romano and Guerrini (2011) list four main types of models and methods dedicated to performance assessment of water and wastewater utilities. The first type concerns models based on key performance indicators (KPI) reported using scorecards, which are addressed by cited initiatives for building specific or mandatory indicators. However, the processing and interpretation of measured indicators remains a dimension that is not completely addressed, and the lack of definition of a clear limit or threshold for indicators does not facilitate the decision making process.

The second type of model focuses on financial performance, based on financial ratios and indexes that measure profits or efficiency. In our opinion, it constitutes a partial vision of performance and deserves to be improved by involving other dimensions. The third type of method is based on production and cost frontier functions for assessing the productive efficiencies of operating units using parametric or non-parametric mathematical methods such as linear programming, statistical analysis and regression. The literature review carried out by Berg and Marques (2011) indicates that Data Envelopment Analysis (DEA) (Thanassoulis 2000) is the most commonly used method for benchmarking multiple water utilities. Romano and Guerrini (2011) indicate that most studies used operating expenditures or derived components, the number of employees or the length of the water mains as input variables. Concerning output variables, the produced, delivered or billed volume of water seems to be the most commonly used. These methods have the advantage of benchmarking multiple utilities, but only on partial dimensions of performance and with regard to productive efficiency, which, in our opinion, constitutes a shortfall in the overall performance measurement.

The fourth type of model is based on the aggregation of individual or partial performance indicators in order to obtain a single performance score. It seems more appropriate to investigate and improve the work done in this domain in order to develop a comprehensive methodology for the overall performance assessment of water utilities (Stahre and Adamsson 2002; Coulibaly and Rodriguez 2004; Kulshrestha 2006; Bhanoji 2009, p. 6; Sadiq et al. 2010; PAWWA 2012).

Considering all of the elements provided in the literature review, the proposed work aims at improving existing methods for performance measurement by going beyond the financial and technical aspects or the production efficiency of water utilities and by involving other factors. A way to deal with the problem of overall performance assessment is therefore to clearly establish the dimensions of performance. For us, overall performance deals with the condition of the technical system and its ability to sustain the delivery of water to customers at the required service level and in optimised technical and financial conditions. Three other dimensions also seem to be relevant: (i) the involvement of human resources and their ability to handle users’ complaints, as well as their technical skills to efficiently manage assets (operational dimension); (ii) the ability of the utility to ensure an economic equilibrium between income and expenditure and to self-finance the rendered service; and (iii) environmental aspects dealing with energy optimisation, natural resources management and protection against pollution.

The proposed work aims at developing a methodology for overall performance assessment based on an elicitation process involving stakeholders in order to: (i) select the most appropriate existing indicators (IWA indicators, mandatory indicators or adaptations of them) or co-build ad-hoc indicators with regard to the four listed pillars of performance; (ii) define a specific mathematical utility or performance function that normalises the value of each selected indicator and sets intrinsic thresholds depending on the context of the utility and its performance goals, in order to address the difficulty of interpreting an absolute indicator value; (iii) define a specific metric for the year-by-year ex post calculation of the overall performance of a single utility, based on the aggregation of individual core indicators, that differs from weighted sum methods and maintains legibility across all of the performance dimensions considered, given the lack of consensus on the most appropriate aggregation method; and (iv) analyse the historical trend of overall performance over a specific time period in order to adjust current policy. The novelty of the paper lies in the development of an adaptive and comprehensive methodology for defining and assessing the overall performance of water utilities by means of a specific metric based on co-built indicators. These indicators are normalised with specific mathematical functions according to a cognitive process that involves decision makers and aims at making their preferences in terms of performance levels explicit.

The present paper is divided into three main sections. After the first section has described the challenges and issues involved in understanding and assessing the overall performance of water utilities, the second section details the methodological concepts and main steps of the developed approach by highlighting the relevant dimensions of performance and how indicators are co-built and calibrated. The last section describes the implementation of the method for the assessment of the overall performance of the water utility of Grenoble in southeastern France.

2 Methodology

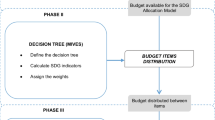

The developed approach aims at providing water utilities with a step-by-step comprehensive and adaptive methodology capable of measuring overall performance based on existing French mandatory indicators and those defined in Alegre et al. (2000) to build an innovative set of individual core indicators for performance assessment and annual reporting. Core indicators have to be co-built with decision makers and should be selected in such a way as to take account of all decision levels: strategic, tactical and operational. Profiles of involved decision makers should include governance, management and technical skills. The choice of stakeholders is relevant to ensure the exhaustiveness of defined indicators to handle all performance dimensions. The construction of indicators should be consistent with the conceptual definition of a water utility that considers that the existence of the utility is motivated by the delivery of the service to the user, who is the focal point, as illustrated in Fig. 1. Indicators are therefore built according to four pillars of overall performance: (i) Human; (ii) Finance & Economy; (iii) Patrimony and Assets; and (iv) Environment.

The pillars described here are close to the six domains for partial indicator calculation (customer satisfaction, quality, availability, environment, organisation/personnel and economy) proposed in Stahre and Adamsson (2002) to assess overall performance indicators and those defined in Alegre et al. (2000) dealing with water resources, assets, personnel and operational management, quality of service and finance. The PAWWA (2012, p. 2) considers six key areas (water production, customer satisfaction, operational performance, human resources, health and environment and financial performance) for performance assessment and benchmarking. This appears to be a consensus of involved areas that are consistent with those recommended by the ISO (2007). Indicator construction is performed separately for each pillar according to an iterative and cognitive process based on interaction with decision makers. It is performed according to the current level of knowledge derived from data availability and experience feedback. The construction process is conducted according to the procedure illustrated in Fig. 2:

The first steps consist in identifying existing indicators and in co-building ad-hoc indicators with the decision maker(s). The combination of these indicators establishes an exhaustive list of potential indicators. Among the listed indicators, some are validated based on their relevance and on the ability of the utility to assess and monitor them. After validation, each indicator is clearly defined by its recommended calculation formula, its input data, the periodicity of calculation and its sense of preference, which may be increasing (preference for high values) or decreasing (preference for low values). Following value calculation, each indicator is fitted based on the preference of the decision maker(s) and the desired level of performance.

A performance or utility function is calibrated for each indicator in order to clearly define the relationship between levels or thresholds of measured indicators and the desired level of performance.

It also serves to normalise the indicator according to an increasing scale from 0 to 1 (or 0 to 100 %), where 1 (or 100 %) indicates the highest level of performance. Once the indicators are normalised, they are plotted with the help of a radar diagram. The overall performance reflects the total surface of the radar by weighting all indicators according to their relevance for the decision maker(s).

It is clear that the existence of an IS where data are managed in a reliable way permits the assessment of a variety of core indicators. The availability, accuracy and reliability of data are a prerequisite for indicator calculation and monitoring.

This aspect is not discussed in this paper but is widely addressed in Berg et al. (2006), CWWA (2009) and Alegre et al. (2000). Based on the assumption of availability of required data, the following sections detail the main steps of the methodology.

2.1 Indicator Construction and Validation

The construction of core indicators is based on a cognitive process with decision makers who are selected according to various skills in order to ensure the exhaustiveness of defined indicators. The set of indicators is a combination between mandatory ones and co-built ad-hoc indicators. The construction follows the predefined four pillars that constitute the overall performance.

2.1.1 Finance and Economy

The financial pillar includes all indicators that make it possible to assess the financial and economic sustainability of the water utility. Indicators measure the ability of the utility to support operating and capital expenditures without necessarily increasing the water price in the context of a decrease in water consumption. Six indicators, designated \(F_i\), with i varying from 1 to 6, are defined in Table 1.

2.1.2 Human Resources

The proposed conceptual approach considers human resources as key factors for the sustainability of water utilities and their performance. For this pillar, indicators assess the ability of the water utility to dispose of required human resources in order to ensure the desired service level and to sustain water delivery.

This corresponds to the availability of skilled employees to deal with the technical dimension of the water network and to address customers’ complaints. This pillar includes six indicators, designated \(H_i\), as listed in Table 2:

2.1.3 Patrimony and Assets

Assets represent the principal means of delivering drinking water to customers. Their maintenance and renewal are recommended to guarantee service continuity within the required level. This pillar includes indicators that make it possible to assess the condition of structural and functional assets and the ability of the water utility to maintain it. This pillar measures the annual effort devoted to maintenance, renewal and related benefits.

It contains seven indicators, designated \(P_i\), as described in Table 3:

2.1.4 Environment

This pillar deals with some relevant environmental aspects that could potentially harm raw water and the customer’s health. It makes it possible to assess the pressure on raw water and its potential pollution or a decrease in its quality. Energy savings are also addressed by a specific indicator. Other mandatory indicators regarding public health safety and raw and drinking water quality are also included. This pillar contains six indicators, designated \(E_i\), as described in Table 4:

The indicator co-building process shows that decision makers consider that all performance pillars are equivalent in terms of importance in reaching the required level of service, which is why the number of indicators per pillar is approximately the same and oscillates between six and seven for each. The proposed methodology leads to the validation of 26 indicators. It should be noted that the validation process assumes that data required for calculation and monitoring are available and seem to be reliable. The next step consists in fitting specific mathematical functions that measure decision makers’ preferences and perceptions of the relationship between the indicator value and a normalised scale that indicates the level of performance.

2.2 Performance Function

One of the main added values of the proposed methodology is its capacity to translate decision makers’ expectations in terms of performance through a comprehensive elicitation process. One way to deal with this aspect is to fit ad-hoc mathematical functions for the set of validated performance indicators, inspired by a utility function that establishes a relationship between the intrinsic value of the indicator and a normalised scale that measures the level of performance. Because indicators are disparate and assess several dimensions of performance, they require a specific approach to be used for overall performance assessment. The calibration of the performance function allows: (i) the establishment of an ad-hoc relationship between the value of an indicator and performance assessment in order to take decision maker preference and the context of the utility into account, which allows interpretation of each individual indicator; (ii) the harmonisation of the indicators’ preference sense, since some of them are decreasing (expenditure) and others increasing (income); and (iii) the normalisation of the indicator in order to limit a bias related to the scale. The proposed normalised scale assumes that performance varies between the lowest value 0 and the highest value 1, or 100 % in the case of a percentage scale.

Let \(F_i(x)\) designate the performance function of indicator i, where x is the value of the indicator. Two senses of preference are taken into account: (i) the increasing sense for indicators that should be maximised; and (ii) the decreasing sense for indicators to be minimised. \(F_i(x)\) allows scale changes based on the variation of performance values, and the shape of the function depends on the type of performance aspect measured and on the decision maker’s preference. One possible function is a linear function defined by a certain slope. This function could be used in the case of a lack of information or limited knowledge about the indicator measured.
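As an illustration of the linear case, the following minimal Python sketch maps an indicator value onto the 0 to 1 scale. The function name and the two thresholds `worst` and `best` are assumptions introduced here for illustration; they are not parameters defined in the paper.

```python
def linear_performance(x, worst, best):
    """Map an indicator value x onto [0, 1] with a straight line.

    worst: indicator value scored 0 (hypothetical threshold)
    best:  indicator value scored 1 (hypothetical threshold)
    best > worst gives an increasing preference sense,
    best < worst gives a decreasing preference sense.
    """
    if best == worst:
        raise ValueError("worst and best thresholds must differ")
    score = (x - worst) / (best - worst)
    # Clamp so that values beyond the thresholds stay on the 0-1 scale.
    return max(0.0, min(1.0, score))


# Increasing sense: higher values are preferred.
print(linear_performance(75, worst=50, best=100))
# Decreasing sense: lower values are preferred.
print(linear_performance(30, worst=40, best=20))
```

Swapping the two thresholds is enough to switch the sense of preference, so a single function covers both cases.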

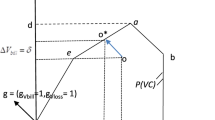

The linear function can be improved by taking account of the threshold effects that characterise most performance dimensions. One way to deal with this is to use the survival function proposed by Herz (1996) as a performance function. This function, which is close to a logistic function, offers the advantage of being calibrated according to three parameters. These parameters can be interpreted as thresholds that express specific performance levels. The calibration is achieved through an interactive process with decision makers to fit the performance thresholds that are used as the parameter values a, b and c, where a > b > c.

The parameter “a” indicates a threshold of performance above which the normalised performance reaches a permanent or constant value (asymptote). The value of parameter “b” is obtained based on any point of the function curve. It is generally chosen as the value of the indicator corresponding to a normalised performance equal to 0.5 or 50 % (in the case of a percentage scale).

The mathematical expression of the performance function varies depending on the sense of preference; both cases are considered by the function defined in Eq. (1):

Based on Eq. (1), the value of parameter “b” can be calculated according to Eq. (2):

Figure 3 illustrates the shape of the performance function depending on the sense of preference and the calibrating parameters a, b and c.

Notice that the proposed performance function with a mathematical formulation close to the logistic function assumes that the performance level is characterised by three levels: low, average and high, which is not always valid. For more flexibility and to be more exhaustive with regard to possible variations and perceptions of some indicators, the mathematical formulation of performance function could be adapted or chosen by the user of the methodology according to considered indicators, the context of the study and decision maker preferences.
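To illustrate how a user-chosen S-shaped function can be calibrated from thresholds, the sketch below uses a plain two-parameter logistic curve. It is not the Herz-based function of Eq. (1): the names `midpoint` and `saturation` and the default `level` of 0.99 are assumptions made here for illustration only.

```python
import math


def logistic_performance(x, midpoint, saturation, level=0.99):
    """Logistic performance curve on the 0-1 scale (illustrative stand-in).

    midpoint:   indicator value scored 0.5 (hypothetical threshold)
    saturation: indicator value scored `level`, i.e. close to 1
    saturation > midpoint gives an increasing preference sense,
    saturation < midpoint gives a decreasing one.
    """
    if saturation == midpoint:
        raise ValueError("midpoint and saturation must differ")
    if not 0.5 < level < 1.0:
        raise ValueError("level must lie strictly between 0.5 and 1")
    # Steepness chosen so that the curve passes through (saturation, level).
    k = math.log(level / (1.0 - level)) / (saturation - midpoint)
    z = -k * (x - midpoint)
    if z > 700.0:
        # Avoid overflow in exp(); the score is effectively 0 here.
        return 0.0
    return 1.0 / (1.0 + math.exp(z))


# Increasing sense: 50 scores 0.5 and 80 scores 0.99.
print(logistic_performance(65, midpoint=50, saturation=80))
# Decreasing sense: 20 scores 0.5 and 10 scores 0.99.
print(logistic_performance(15, midpoint=20, saturation=10))
```

Two elicited points fix the whole curve, which keeps the calibration dialogue with decision makers short, at the cost of a symmetric shape around the midpoint.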

The calibration of performance functions offers the possibility to harmonise indicators in the same preference sense (increasing or decreasing) and to normalise them for overall performance assessment. The interpretation of indicator values should follow a cost-benefit approach that compares the effort (action or cost) required to reach a certain target with the obtained (or desired) gain in performance. Efficiency is not acceptable at any cost; cost-benefit balancing is recommended. One way to address this aspect is to calculate the relative slope of the portion of the performance curve between two points, \(x_1\) and \(x_2\), representing indicator values with the performance levels \(F(x_1)\) and \(F(x_2)\). We designate this indicator as “Gain-Effort” (GE), and it should be greater than 1. GE is calculated by Eq. (3):

GE estimates the potential enhancement of performance due to an improvement per unit of effort. The value \( \left(\frac{1}{GE}\right) \) indicates the percentage of effort required for one unit of performance improvement.
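A numerical reading of this indicator can be sketched as follows. Because the expression of Eq. (3) is not reproduced in this text, the formula used here is an assumption: the "relative slope" is taken as the relative gain in performance divided by the relative change in the indicator, with the effort counted as positive whatever the sense of preference. The function name is hypothetical.

```python
def gain_effort(x1, x2, f1, f2):
    """Assumed Gain-Effort ratio between two points of a performance curve.

    x1, x2: indicator values before and after the action
    f1, f2: normalised performance levels F(x1) and F(x2)
    """
    if x1 == 0 or f1 == 0:
        raise ValueError("relative changes are undefined when x1 or F(x1) is 0")
    if x1 == x2:
        raise ValueError("the two indicator values must differ")
    relative_gain = (f2 - f1) / f1
    # Absolute value: lowering a "decreasing" indicator is still an effort.
    relative_effort = abs((x2 - x1) / x1)
    return relative_gain / relative_effort


# A 10 % change in the indicator that lifts performance from 0.5 to 0.6.
ge = gain_effort(100, 110, 0.5, 0.6)
print(ge, 1.0 / ge)
```

Under this reading, a value above 1 means that performance improves proportionally faster than the effort invested.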

The overall performance assessment can be treated as a multi-criteria problem by considering indicators as criteria to be aggregated. Multi-criteria decision aid is widely dealt with in the literature (Roy 1985). An overall score could be calculated using a weighted sum approach or another additive method.

As mentioned in Berg et al. (2006), SEAWUN and SUNASS assign equal weights to partial indicators and aggregate them to assess the overall performance indicator. However, the graphical dimension seems important to be able to detect possible distortions between the considered performance dimensions. Therefore, the analysis of sub-performance per pillar could be helpful for decision makers to clearly identify the dimension to be improved. The use of a radar diagram seems relevant because it makes it possible to show the sub-surface formed by the indicators belonging to it for each pillar, as shown in Fig. 4. It provides a holistic representation of all of the indicators and the shape formed makes it possible to clearly identify dimensions to be improved. It also makes it possible to compare two or more overall performance assessments by comparing obtained charts.

The choice of an aggregating method or any other method for overall performance calculation remains sensitive; it should take all of the indicators into consideration without diluting information about performance. Another relevant aspect concerns the relative importance of each indicator for the decision makers: the weighting of an indicator constitutes a real challenge. This dimension is partially addressed in the current work where, in fact, all of the indicators are considered to be equivalent in the case study. Assigned weights are assumed to be equal to the inverse of the number of indicators.
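For reference, the equal-weight additive baseline mentioned above can be sketched in a few lines of Python. The function names are hypothetical, and this weighted sum is shown only as a point of comparison; it is not the aggregation metric retained in this work.

```python
def equal_weights(n):
    """Weights equal to the inverse of the number of indicators."""
    if n <= 0:
        raise ValueError("at least one indicator is required")
    return [1.0 / n] * n


def weighted_sum(scores, weights=None):
    """Additive aggregation of normalised scores (each in [0, 1])."""
    if weights is None:
        weights = equal_weights(len(scores))
    if len(weights) != len(scores):
        raise ValueError("one weight per indicator is required")
    if abs(sum(weights) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1")
    return sum(w * s for w, s in zip(weights, scores))


# Three normalised indicators aggregated with equal weights.
print(weighted_sum([0.2, 0.4, 0.9]))
```

A single number of this kind hides which indicator is lagging, which is the dilution of information the text warns about.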

The overall performance calculation should be performed with a method that is understood and approved by decision makers. The next section details the chosen aggregating approach for overall performance calculation.

2.3 Overall Performance Assessment

Coulibaly and Rodriguez (2004) define an overall performance indicator within the scope of a comparative performance analysis of ten small drinking water utilities in Quebec (Canada). The developed multivariable weighted additive indicator includes 18 partial indicators that cover the following aspects: agricultural land use, raw water quality, disinfection, infrastructure and maintenance, and tap water quality. Sadiq et al. (2010) extend the work done by Coulibaly and Rodriguez (2004) by applying OWA (Ordered Weighted Averaging) operators for the aggregation of partial indicators to better encompass decision preferences on the basis of OWA weight values. PAWWA (2012) defines an “Overall Efficiency Index” (OPI) based on financial performance that indicates the percentage of water produced that generates actual utility revenue.

According to Bhanoji (2009, p. 6), the SEAWUN Benchmarking Programme in Asia has developed an OPI based on two sub-indicators: (i) a service delivery index (SDI) that involves coverage (COV), water consumption (CON) and water availability (AVI) indicators; and (ii) an Operational Efficiency Index (OEI) that involves water that is unaccounted for (UNW), an operating ratio (OR), accounts receivable in months (ARM) and Staff Strength per connection (ST/C). All indicators are normalised on the 0–100 scale. The overall performance is equal to the average of SDI and OEI, which corresponds to an average sum of all individual indicators.

Stahre and Adamsson (2002) benchmarked the performance of water utilities in six cities in Sweden. The authors developed an interesting diagram—the so-called “fingerprint model”—to illustrate overall performance. It is based on the plot of relevant individual indicators in such a way that they are normalised according to a reference value, e.g., the average value for the six-city group.

The general principle of the diagram is that the longer the “beams” are, the worse the conditions are. Even if the approach seems useful, no details were provided about the metric used for overall performance calculation. The benchmarking seems to be exclusively based on graph comparison. It appears from the literature review that the analysis of overall performance should include individual contradictory core indicators within an adequate metric, as well as illustrate the performance by a diagram that gives an overview of both individual and overall performance. Even if identified indicators aim at integrating some similar individual indicators, the metric is different. For all these reasons, the metric developed in the current work is based on the calculation of the total area of a polygon drawn from the set of validated indicators (see Fig. 4).

The total surface is obtained by summing up the sub-areas (S k ), as illustrated in Fig. 4. Let us consider the plotted indicators as vectors. The sub-surface S k comprised between two successive indicators, I k and I k+1 (weighted by w k and w k+1, respectively, where \( \sum_{k=1}^{n} w_k = 1 \) for n indicators), can then be estimated by the area of the triangle spanned by the two vectors, which corresponds to half of the magnitude of their cross product, as shown by Eq. (4):
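Written out explicitly, the sub-area could take the form below. This is a sketch under one assumption that the text does not state: the n indicator axes are equally spaced, so that two successive axes are separated by an angle \( \theta = 2\pi/n \). The symbol \( \theta \) and the vector notation are introduced here for illustration only.

\[
S_k = \frac{1}{2}\,\bigl\lVert w_k \vec{I}_k \times w_{k+1} \vec{I}_{k+1} \bigr\rVert
    = \frac{1}{2}\, w_k\, w_{k+1}\, I_k\, I_{k+1}\, \sin\theta ,
\qquad \theta = \frac{2\pi}{n}
\]

With this reading, a low value on either of two neighbouring indicators shrinks the sub-area they share, which is how the metric limits compensation between indicators.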

According to Eq. (4), indicators are not necessarily weighted in the same way, and distortions appear in the shape of the sub-surfaces depending on how relevant the decision maker considers each indicator to be. Such a distortion can decrease or increase the value of the sub-surface derived from two successive indicators, and it influences the value of overall performance. The overall performance OP(t) for the past year t can be calculated from sub-surfaces S k as follows:

The value obtained is dimensionless because it is assessed from normalised indicators. It should be noted that the indicators are harmonised so that the sense of preference is increasing for all of them. As a result, overall performance has to be maximised. However, the interpretation of a result is not an easy task because no reference values of this indicator exist. The absolute value alone is therefore not sufficient to diagnose the level of the water utility’s overall performance. One possible way to address this is to compare the value to a theoretical one that represents the best possible (utopian) performance.

This situation corresponds to the maximum surface that could be formed by indicators. The maximum surface is reached when all indicators have the value of 1 or 100 %. The value of maximum theoretical performance is calculated by the following equation:

where R is the radius of the radar chart that becomes a circle when the maximum value of the indicator is reached, which could be equal to 1 or 100 %. The relative overall performance OP r (t) allows comparison between the overall performance and a reference threshold defined by the maximum theoretical performance. It is calculated by the following equation:

The relative overall performance has to be maximised. It is easier to interpret because values are comprised between a minimum value of 0 and a maximum value of 1 or 100 %, depending on the chosen scale.

The relative overall performance value is assessed for a given year t. It can be monitored over a specified period of time. It allows overall performance comparison and mitigation in order to adapt or improve water utility management and governance. A simple and interesting overall indicator can be defined to assess the overall relative potential margin of progression or improvement, designated OI r (t), as follows:
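The chain of quantities defined in this section, OP(t), OP r(t) and OI r(t), can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the axes are assumed to be equally spaced, the last indicator is paired with the first to close the polygon, the reference area is taken as the polygon obtained when every indicator equals R (the text describes the limiting shape as a circle of radius R, which would give a different constant), and OI r(t) is assumed to be the complement of OP r(t). All function names and the sample values are hypothetical.

```python
import math


def sub_areas(values, weights=None):
    """Triangle areas between successive (weighted) indicators on a radar chart.

    Assumptions (not taken from the paper): axes are equally spaced, the last
    indicator is paired with the first to close the polygon, and weights
    default to 1/n.
    """
    n = len(values)
    if n < 3:
        raise ValueError("a polygon needs at least three indicators")
    if weights is None:
        weights = [1.0 / n] * n
    theta = 2.0 * math.pi / n  # assumed angle between neighbouring axes
    scaled = [w * v for w, v in zip(weights, values)]
    return [
        0.5 * scaled[k] * scaled[(k + 1) % n] * math.sin(theta)
        for k in range(n)
    ]


def overall_performance(values, weights=None):
    """OP(t): sum of the sub-areas S_k."""
    return sum(sub_areas(values, weights))


def relative_performance(values, weights=None, radius=1.0):
    """OP_r(t): OP(t) divided by the area reached when every indicator equals `radius`."""
    reference = overall_performance([radius] * len(values), weights)
    return overall_performance(values, weights) / reference


def improvement_margin(values, weights=None, radius=1.0):
    """OI_r(t), assumed here to be the complement 1 - OP_r(t)."""
    return 1.0 - relative_performance(values, weights, radius)


# Hypothetical normalised indicator values for one year (not case-study data).
indicators = [0.9, 0.6, 0.8, 0.7, 1.0, 0.5]
print(round(relative_performance(indicators), 3))
print(round(improvement_margin(indicators), 3))
```

Because each sub-area couples two neighbouring indicators, the result of such a metric depends on the order in which the indicators are placed around the chart, so the ordering should be kept fixed when comparing successive years.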

The next section illustrates the use of the proposed method on an actual case study.

3 Case Study

The developed approach was implemented on the public water utility of Grenoble in southeastern France. It delivers some 11 million m³ of water to approximately 51,000 customers through an urban distribution network 260 km long. The overall performance analysis was performed over the 5 years from 2007 to 2011.

This section focuses on two main steps of the proposed approach: (i) the calibration of the performance function for individual indicators; and (ii) the assessment of overall performance based on the proposed indicators. It should be noted that all indicators (26) were suggested for performance assessment.

3.1 Input Data

The calculation of validated indicators assumes the availability of reliable data in the IS. Lack of data is not addressed in this paper but could constitute a real handicap for the implementation of the developed approach.

Before the indicators were calculated, data coherence and reliability were checked by comparing various sources and files of similar information. For some data, the decision makers were asked to check their pertinence in terms of values and to determine if they really corresponded to their order of magnitude, especially for financial and technical data.

It appears that a specific control scheme is required to assess the validity of data. Many errors were discovered, most of which were generated when the databases were constituted. The coherence of values must therefore be verified; this can be done either manually or automatically, since both approaches appear to be relevant.

3.2 Implementation and Results

After data control, the indicators were computed. The next step consists in fitting an ad-hoc performance function according to the sense of preference of each indicator. The calibration is carried out through an interactive process with the decision makers. In the case of the Grenoble utility, two decision maker profiles were chosen to cover the technical, tactical and strategic decision scales: the head of the utility (strategic profile) and the technical director (tactical and technical profile). The interactive process makes it possible to define the sense of preference of the indicators and to estimate the value of the performance function parameters a, b and c. Some indicators, such as P 4, are already normalised with an increasing sense of preference, so they do not require calibration: their performance function is derived directly from the indicator values.

Let us consider the indicator P 5 of the patrimony pillar. The interaction with decision makers indicates that the sense of preference of this indicator is decreasing, which means that lower values are preferred. They consider that if the network ages by less than 6 months each year (c = 6 months), the performance should be maximum; otherwise, it decreases, reaching 0 when the network ages by 12 months each year (a = 12 months). The value of b is derived from Eq. (2) by considering that a performance of F(x) = 0.5 corresponds to 8 months, as recommended by decision makers (Fig. 5).

Decision makers consider that the indicator P 6 that estimates the average annual rate of pipe renewal has an increasing sense of preference. They assume that the minimum value should be equal to c = 0.005 (0.5 %) of annual renewal. The maximum performance is reached for a threshold of at least a = 0.03 (3 %) of annual renewal. The value of b = 186.83 is obtained by considering that if the rate is equal to 0.01, the performance reaches 0.60, according to the decision makers (Eq. (2)) (Fig. 6).
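The calibration of b for P 6 can be checked numerically. The sketch below assumes a logistic-type performance function, F(x) = 1 − (a + 1)/(a + exp(b(x − c))) for x > c. This form is an assumption, chosen because it reproduces the reported value of b from the three elicited quantities, and Eq. (2) may be written differently. The function names are hypothetical.

```python
import math


def performance_increasing(x, a, b, c):
    """Assumed logistic-type performance function (increasing sense of preference).

    Returns 0 at or below the minimum threshold c and tends towards 1 as x grows.
    """
    if x <= c:
        return 0.0
    return 1.0 - (a + 1.0) / (a + math.exp(b * (x - c)))


def calibrate_b(a, c, x_ref, f_ref):
    """Solve F(x_ref) = f_ref for b under the assumed functional form."""
    return math.log((a + 1.0) / (1.0 - f_ref) - a) / (x_ref - c)


# Values elicited for P 6 in the text: c = 0.005, a = 0.03, F(0.01) = 0.60.
b = calibrate_b(a=0.03, c=0.005, x_ref=0.01, f_ref=0.60)
print(round(b, 2))  # matches the reported b = 186.83 to two decimals
print(round(performance_increasing(0.03, 0.03, b, 0.005), 2))
```

Under this assumed form, the performance at the 3 % threshold comes out very close to, but not exactly, 1, which is compatible with the threshold being read as the point where maximum performance is practically reached.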

This process is repeated for the 26 indicators in order to determine the parameter values of the performance function. Table 5 gives an overview of the fitted parameter values for the patrimony pillar.

The calibration of parameter values is performed in the same way for all indicators of the human, financial and environmental pillars. This allows the normalisation of all indicators and the plotting of the radar chart for overall performance assessment. The plots obtained from the indicators assessed over the period 2007 to 2011 are illustrated in Fig. 7.

The radar chart offers an overview of the performance of the policy implemented between 2007 and 2011. It clearly appears that the level of performance is not completely homogeneous between pillars. For example, the environmental pillar seems to be better addressed than the other pillars. The chart makes it possible to identify shortfalls such as F 4, P 2, P 3 and P 6. Improvement of the performance of the patrimony pillar seems to be a priority. The comparison of individual indicator values shows a real improvement for some of them, such as E 1, which rises from 50 to 99 %. Stagnation is observed for some others, such as F 4 and P 3.

The use of the surface of the radar as a specific metric for overall performance assessment makes it possible to calculate OP(t) and OP r(t), and to deduce OI r(t) from 2007 until 2011, which are summarised in Table 6.

The overall performance allows benchmarking from year to year but does not clearly reflect the level of performance for a given year. The relative overall performance offers a normalised scale of performance between 0 and 1 (0 to 100 %). The use of normalised weighted sums overestimates the value of performance because of the compensatory effect between indicators. It appears that the overall performance oscillates between 0.69 and 0.79, with an average value over the 5 years equal to 0.75. The margin of progression to theoretical performance is comprised between 0.20 and 0.31. A real progression is possible to improve the overall performance, especially for the patrimony pillar. Moreover, it is interesting to measure the cost of progression by calculating the marginal cost of improvement. In the context of Grenoble, overall performance satisfies the decision makers, despite the potential improvement, and the trend of overall performance seems stable at around 0.70. This indicates a certain coherence of the implemented policy with homogeneous benefits over the specified time period.

Analysis of the results shows a certain stagnation of indicators P 3, P 4 and P 6. For P 3 and P 4, this could be explained by the fact that both count the occurrence of failures on pipes and connections, which does not depend exclusively on preventive maintenance or renewal programs. Meteorological conditions can explain some of the observed failures, especially winter failures that are due entirely to temperature decrease. These indicators should include climate variables to correct the annual assessment, and benchmarking from year to year should consider the variation of temperature over the specified time period.

P 6 reflects the annual renewal effort, which actually seems low at around 1 %, corresponding to a performance of 0.60 according to the decision makers’ preferences. The analysis of the performance function (Fig. 6) shows that the annual renewal rate should be increased from 1 to 1.5 % to improve the performance from 0.60 to 0.80, i.e., to increase performance by 33 %, the renewal effort should be increased by 50 % (GE = 0.66, meaning that an additional 1 % of effort increases performance by only 0.66 %). This value reaches 100 % of supplementary effort if the target is 3 %, which represents a performance gain of 40 % to reach a performance level of 1 (GE = 0.40). The definition of a target by the decision makers should therefore consider the marginal effort required to reach a certain level of performance. In some cases, the effort could be considerable compared to the gain in performance. The calculation of GE allows trade-offs to be made between indicators in order to direct the effort to where the improvement of performance is the most suitable. The amount of effort required in terms of cost and adaptation of actual policy must not create an imbalance for the water utility, especially in terms of finances. For example, an increase in capital or operating expenditures could require an increase in water prices that could penalise customers.
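The first GE figure can be reproduced with a short calculation. The sketch below assumes that GE is the ratio of the relative performance gain to the relative increase in effort; the text does not define GE formally, so this reading and the function name are assumptions.

```python
def gain_effort_ratio(perf_from, perf_to, effort_from, effort_to):
    """Relative performance gain divided by relative effort increase (assumed definition of GE)."""
    relative_gain = (perf_to - perf_from) / perf_from
    relative_effort = (effort_to - effort_from) / effort_from
    return relative_gain / relative_effort


# Renewal rate raised from 1 % to 1.5 %, performance rising from 0.60 to 0.80.
ge = gain_effort_ratio(perf_from=0.60, perf_to=0.80, effort_from=0.01, effort_to=0.015)
print(ge)  # about two thirds, reported as 0.66 in the text
```

A ratio below 1 indicates that performance grows more slowly than the effort invested, which is the situation described for the renewal indicator.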

4 Conclusion

The current work discusses the necessity to go beyond technical boundaries for performance assessment and to involve all aspects that could downgrade the level of service. The main added value of the proposed approach is the definition of a structured step-by-step method based on existing and co-built individual indicators. It establishes links between the relevant dimensions of overall performance and assesses it in an original way with regard to the level of knowledge of the water utility and the decision maker’s preferences. The proposed indicators could be adapted or replaced by others involving the multiple dimensions of performance, but they should still be calibrated as recommended, by defining an ad-hoc performance function through an interactive cognitive process with the stakeholders. The involvement of decision makers in the implementation of the methodology is clearly a key factor because it makes it possible to describe preferences, to establish thresholds for performance analysis and to adapt the existing indicators to be assessed and monitored. Another important aspect concerns the evolution of knowledge about the water utility and how the IS could be improved, especially for small utilities.

In fact, data collection and management constitute a potential obstacle for the implementation of this type of method. However, implementation can be progressive.

The set of indicators is enriched over time within the scope of the knowledge management improvement process to enhance the water utility performance. In other words, the proposed method is a pedagogical learning guide that helps utilities to improve their performance assessment. The number of indicators involved depends on the level of knowledge about the water utility and on the dimensions to be considered, which remain specific to each utility. This ad-hoc specificity offers a certain degree of freedom for water utilities to adapt their performance assessment to their context. The approach developed here seems to be more relevant for year-by-year benchmarking of an individual utility’s performance than for comparing utilities among themselves, since the calibration and the performance indicators involved are not necessarily the same.

The assessment of overall performance could be considered as a multi-criteria issue. The selection of a pertinent aggregating method is not an easy task because there is no consensus as to the most appropriate one. The relative importance of each indicator was considered to be similar within the context of the study, which is not always true. When it is not, a weighting process involving stakeholders should be implemented in order to account for the non-homogeneity of indicator weights.

It appears that performance should be improved on the basis of a cost-benefit process that ensures a trade-off between the required effort and the targeted performance. A satisfactory level of performance describing consensus between stakeholders should be defined in order to ensure the sustainability of the service being delivered. The developed approach contributes to more effectively understanding and monitoring the ex post overall performance of water utilities. It provides an overview of the general performance trend resulting from past decisions, including the potential inertia of past policies that continue to have an impact for several years afterwards, which is why the performance analysis should be done over successive years. A question naturally arises concerning the use of the method for ex ante performance assessment, which constitutes one possible improvement of the methodology.

References

Alegre H, Hirner W, Baptista JM, Parena R (2000) Performance indicators for water supply services. Manual of Best Practice Series, IWA Publishing, London, ISBN 1 90022202702. 146p

Aydin NY, Mays L, Schmitt T (2014) Sustainability assessment of urban water distribution systems. Water Resour Manag 28(12):4373–4384. doi:10.1007/s11269-014-0757-1

Berg SV (2007) Conflict resolution: benchmarking water utility performance. Public Adm Dev 27(1):1–11

Berg SV (2010) Water utility benchmarking: measurement, methodologies, and performance incentives. IWA Publishing. pp 1–80

Berg SV, Marques RC (2011) Quantitative studies of water and sanitation utilities: a literature survey, Water Policy, Forthcoming

Berg SV, Corton ML, Lin C, Sabbioni G, Jiang L, Jones A (2006) Water benchmarking support system: survey of benchmarking methodologies. PURC report. 97p. http://warrington.ufl.edu/centers/purc/purcdocs/PAPERS/TRAINING/Jamaica/July2006/advancedreadings/Benchmarking_Survey_Report.pdf. Accessed Mar 2015

Bhanoji R (2009) A ranking scheme for Asian utilities. Institute of Water Policy, Lee Kuan Yew School of Public Policy, National University of Singapore. Working Paper Series, Serial No IWP/WP/No.9/2009. http://lkyspp.nus.edu.sg/iwp/wp-content/uploads/sites/3/2013/04/A_Ranking_Scheme_for_Asian_Water_Utilities.pdf. Accessed Mar 2015

Canadian Water and Wastewater Association, CWWA (2009). Water conservation and efficiency performance measures and benchmarks within the municipal sector. An identification of current practices and an assessment of the feasibility of expanding their use. 50p. http://www.cwwa.ca/pdf_files/Final%20Project%20Report%20MOE%20Performance%20Indicators-%202009-03-31.pdf. Accessed Mar 2015

Canneva G, Guérin-Schneider L (2011) National monitoring of water utility performance in France. Water Sci Technol Water Supply 11(6):745–753

Coulibalya HD, Rodriguez MJ (2004) Development of performance indicators for small Quebec drinking water utilities. J Environ Manag 73:243–255

Eccles RG (1991) The performance measurement manifesto. Harv Bus Rev 69:131–137

Farmani R, Butler D (2013) Implications of urban form on water distribution systems performance. Water Resour Manag 28:83–97. doi:10.1007/s11269-013-0472-3

Guérin-Schneider L, Nakhla M (2003) Les indicateurs de performance : une évolution clef dans la gestion et la régulation des services d’eau et d’assainissement. Flux 52(53):55–68

Herz R (1996) Ageing processes and rehabilitation needs of drinking water distribution networks. J Water Supply Res Technol-Aqua 45(5):221–231

ISO (2007) ISO 24512, Activities relating to drinking water and wastewater services — Guidelines for the management of drinking water utilities and for the assessment of drinking water services. 54p. http://www.iso.org. Accessed Mar 2015

Kulshrestha M (2006) A scorecard for measuring the water supply utility performance: the case of large Indian metropolises. Int J Manag Decis Making 7(4):418–437

Ministère de l’écologie et du développement durable, MEDD (2007) Décret no 2007–675 du 2 mai 2007 pris pour l’application de l’article L. 2224–5 et modifiant les annexes V et VI du code général des collectivités territoriales. Journal Officiel de la République Française

Nazif S, Karamouz M, Yousefi M, Zahmatkesh Z (2013) Increasing water security: an algorithm to improve water distribution performance. Water Resour Manag 27:2903–2921. doi:10.1007/s11269-013-0323-2

Nudurupati SS, Bititci US, Kumar V, Chan FTS (2011) State of the art literature review on performance measurement. Comput Ind Eng 60(2):279–290

Pacific Water and Wastes Association, PAWWA (2012) Pacific water and wastewater utilities: benchmarking report. 108p. http://www.ib-net.org/docs/PWWA.pdf. Accessed Mar 2015

Romano G, Guerrini A (2011) Measuring and comparing the efficiency of water utility companies: a data envelopment analysis approach. Util Policy 19(3):202–209

Roy B (1985) Méthodologie multicritère d’aide à la décision. Economica, Paris, 423 p

Sadiq R, Rodríguez MJ, Tesfamariam S (2010) Integrating indicators for performance assessment of small water utilities using ordered weighted averaging (OWA) operators. Expert Syst Appl 37:4881–4891

Smets H (2008) De l’eau potable à un prix abordable, la pratique des États, Académie de l’Eau, 254 p. http://www.water-tariff-for-vunerable.com/documents/Case_Studies/FR_Eau%20potable%20prix%20abordable%20smets%20La%20pratique%20des%20etats.pdf. Accessed Mar 2015

Stahre P, Adamsson J (2002) Performance benchmarking – A powerful management tool for water and wastewater utilities, 23p. www.urbaneconomics.ru/eng/download.php?dl_id=59. Accessed Mar 2015

Thanassoulis E (2000) DEA and its use in the regulation of water companies. Eur J Oper Res 1:1–13

Varis O (2005) Water and sustainable development: Paradigms, challenges and the reality. University partnerships for international development, Finnish development knowledge. Finland future research center, Helsinki, pp 34–60

Ven der Berg C, Danielko A (2011) The IBNET Water Supply and Sanitation Performance Blue Book The International Benchmarking Network for Water and Sanitation Utilities Databook. The World Bank. 152 p. http://www.seawun.org/uploads/Focus%20Programs/IBNet-Blue%20Book.pdf. Accessed Mar 2015

Vilanova MRN, Filho PM, Balestieri JAP (2015) Performance measurement and indicators for water supply management: Review and international cases. Renewable and Sustainable Energy Reviews 43:1–12

Acknowledgments

The work presented was carried out within the framework of a specific collaboration contract funded by the water utility of Grenoble. We are grateful to all of the utility employees who helped us with our research and provided us with data, information and advice.

Cite this article

Nafi, A., Tcheng, J. & Beau, P. Comprehensive Methodology for Overall Performance Assessment of Water Utilities. Water Resour Manage 29, 5429–5450 (2015). https://doi.org/10.1007/s11269-015-1127-3
