Abstract

Linear regression for Hidden Markov Model (HMM) parameters is widely used for adaptive training in time series pattern analysis, especially in speech processing. The regression parameters are usually shared among sets of Gaussians in HMMs, where the Gaussian clusters are represented by a tree. This paper realizes a fully Bayesian treatment of linear regression for HMMs that takes this regression tree structure into account by using variational techniques. We analytically derive the variational lower bound of the marginalized log-likelihood of the linear regression. By using the variational lower bound as an objective function, we can algorithmically optimize the tree structure and hyper-parameters of the linear regression rather than heuristically tweaking them as tuning parameters. Experiments on large vocabulary continuous speech recognition confirm the generalizability of the proposed approach, especially when the amount of adaptation data is limited.


1 Introduction

Hidden Markov Models (HMMs) have been widely used for time series analysis (e.g., speech, text, and image processing). HMM parameters can be estimated by statistical methods, the effectiveness of which depends on the quality and quantity of the available data, which should be distributed according to the statistical characteristics of the intended signal space or conditions. As there is no sure way of collecting sufficient data to cover all conditions, adaptive training of HMM parameters, from a set of previously obtained parameters to a new set that befits a specific environment using a small amount of new data, is an important research issue.

In speech recognition, one approach is to view the adaptation of model parameters to new data as a transformation problem; that is, the new set of model parameters is a transformed version of the old set: \(\lambda_{n+1} = f(\lambda_{n}, \{x\}_{n})\), where \(\{x\}_{n}\) denotes the new set of data available at moment n for the existing model parameters \(\lambda_{n}\) to adapt to. Most frequently and practically, the function f is chosen to be an affine transformation [1, 2]: \(\lambda_{n+1} = A \lambda_{n} + b\), when various parts of the model parameters, e.g., the mean vectors or the variances, are envisaged in a vector space. The adaptation algorithm therefore involves deriving the affine map components, A and b, from the adaptation data \(\{x\}_{n}\). A number of algorithms have been proposed for this purpose (see [3, 4] for details; there are many variants of transformation types for HMMs, e.g., [5-9]). Some techniques bear the name "linear regression", and our paper also uses this name by convention. The adaptive training of HMMs has many applications beyond speech recognition (e.g., speech synthesis [10], speaker recognition [11], face recognition [12], and activity recognition [13]).

For automatic speech recognition, the number of the Gaussian distributions or simply Gaussians, which are used as component distributions in forming state-dependent mixture distributions, is typically in the thousands or more. If each mean vector in the set of Gaussians is to be modified by a unique transformation matrix, the number of “adaptation parameters” can therefore be quite large. The main problem of this method is thus how to improve “generalization capability” by avoiding the over-training problem when the amount of adaptation data is small. To solve the problem, there are mainly two approaches: 1) model selection and 2) prior knowledge utilization.

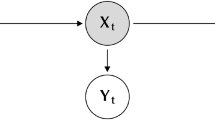

The model selection approach was originally proposed within the estimation of linear transformation parameters by using the maximum likelihood EM algorithm (called Maximum Likelihood Linear Regression (MLLR)). MLLR shares one linear transformation among a cluster of many Gaussians in the HMM set, thereby effectively reducing the number of free parameters, which can then be trained with a small amount of adaptation data. The Gaussian clusters are usually organized in a tree structure, as shown in Fig. 1, which is pre-determined and fixed throughout adaptation.

This tree (called a regression tree) is constructed by a centroid splitting algorithm, described in [14]. This algorithm first creates two centroid vectors by randomly perturbing the global mean vector computed from the Gaussians assigned to a target leaf node. Then, it splits the set of these Gaussians according to the Euclidean distance between the Gaussian mean vectors and the two centroid vectors. The two resulting sets of Gaussians are assigned to child nodes, and this procedure is repeated until the tree is built.
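The splitting procedure can be illustrated with a minimal sketch. It is not the implementation of [14]: the perturbation scale, the depth limit, and all function names (`split_gaussians`, `build_tree`, `leaves`) are assumptions made for illustration, and only the Gaussian mean vectors are used.

```python
import numpy as np

def split_gaussians(means, rng, scale=0.1):
    """Split Gaussian means (N x D) into two groups; True marks the first child."""
    center = means.mean(axis=0)                       # global mean of the node
    offset = scale * rng.standard_normal(center.shape)
    centroids = np.stack([center + offset, center - offset])  # two perturbed centroids
    # Euclidean distance from every mean to each centroid: shape (N, 2)
    dist = np.linalg.norm(means[:, None, :] - centroids[None, :, :], axis=2)
    return dist[:, 0] <= dist[:, 1]

def build_tree(means, indices, rng, max_depth=3, depth=0):
    """Recursively split the Gaussians listed in `indices` into a binary tree."""
    node = {"gaussians": indices, "children": []}
    if depth >= max_depth or len(indices) < 2:
        return node
    go_first = split_gaussians(means[indices], rng)
    first, second = indices[go_first], indices[~go_first]
    if len(first) == 0 or len(second) == 0:           # degenerate split: keep as leaf
        return node
    node["children"] = [build_tree(means, first, rng, max_depth, depth + 1),
                        build_tree(means, second, rng, max_depth, depth + 1)]
    return node

def leaves(node):
    """Collect the Gaussian index sets held by the leaf nodes."""
    if not node["children"]:
        return [node["gaussians"]]
    return [leaf for child in node["children"] for leaf in leaves(child)]

rng = np.random.default_rng(0)
means = rng.standard_normal((40, 3))                  # toy set of 40 mean vectors
tree = build_tree(means, np.arange(40), rng)
leaf_sets = leaves(tree)
```

Every Gaussian ends up in exactly one leaf, so the leaves partition the full Gaussian set while each internal node holds the union of its descendants.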

The utility of the tree structure is commensurate with the amount of adaptation data; namely, if we have a small amount of data, it uses only coarse clusters (e.g., the root node of a tree in the top layer of Fig. 1) where the number of free parameters in the linear transformation matrices is small. On the other hand, if we have a sufficiently large amount of data, it can use fine clusters where the number of free parameters in the linear transformation matrices is large, potentially improving the precision of the estimated parameters. This framework needs to select appropriate Gaussian clusters according to the amount of data, i.e., it needs an appropriate model selection function. Usually, the model selection is performed by setting a threshold value manually (e.g., the total number of speech frames assigned to a set of Gaussians in a node).
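The threshold-based selection described above can be sketched as follows. This is a simplified illustration under assumed names (`select_nodes`, the `frames` field, and the toy tree are hypothetical): a node is split into its children only when every child has at least `threshold` frames, whereas practical systems may let individual Gaussians back off to different ancestors.

```python
def select_nodes(node, threshold):
    """Return the nodes whose transforms are used for a given frame-count threshold."""
    children = node.get("children", [])
    # Descend only if every child has enough adaptation frames.
    if children and all(c["frames"] >= threshold for c in children):
        return [n for c in children for n in select_nodes(c, threshold)]
    return [node]

# Toy tree: frame counts are the number of speech frames assigned to each node.
tree = {"name": "root", "frames": 1000, "children": [
    {"name": "A", "frames": 700, "children": [
        {"name": "A1", "frames": 650, "children": []},
        {"name": "A2", "frames": 50, "children": []}]},
    {"name": "B", "frames": 300, "children": []}]}

coarse = [n["name"] for n in select_nodes(tree, threshold=500)]
fine = [n["name"] for n in select_nodes(tree, threshold=200)]
```

With the higher threshold only the root transform is used; with the lower one the two clusters A and B each receive their own transform. The choice of threshold is exactly the manual tuning that the proposed approach aims to replace.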

While the regression tree in MLLR can be considered one form of prior knowledge, i.e., how various Gaussian distributions are related, another approach is to explicitly construct and use prior knowledge of regression parameters in an approximated Bayesian paradigm. For example, Maximum A Posteriori Linear Regression (MAPLR) [15] and quasi-Bayes linear regression [16] replace the ML criterion with the MAP and quasi-Bayes criteria, respectively, in the estimation of regression parameters. With the explicit prior knowledge acting as a regularization term, MAPLR appears to be less susceptible to over-fitting. MAPLR has been extended to structural MAP (SMAP) [17] and structural MAPLR (SMAPLR) [18], both of which fully utilize the Gaussian tree structure used in the model selection approach to efficiently set the hyper-parameters of the prior distributions. In SMAP and SMAPLR, the hyper-parameters of the prior distribution in a target node are obtained from the statistics in its parent node. Since the total number of speech frames assigned to the set of Gaussians in the parent node is always larger than that in the target node, the statistics obtained in the parent node are more reliable than those in the target node, and they can serve as good prior knowledge for transformation parameter estimation in the target node. Another extension of MAPLR is to replace the MAP approximation with a fully Bayesian treatment of latent models, called variational Bayes (VB). VB has been developed in the machine learning field based on a variational technique [19-23], and has been successfully applied to HMM training in speech recognition [24-31]. VB has also been applied to the estimation of the linear transformation parameters of HMMs [32, 33] to achieve further generalization capabilities.

This paper also employs VB for the linear regression problem, but we focus on model selection and efficient prior utilization at the same time, in addition to the estimation of the linear transformation parameters of HMMs proposed in previous work [32, 33]. In particular, we consistently use the variational lower bound as the optimization criterion for the model structure and hyper-parameters, in addition to the posterior distributions of the transformation parameters and the latent variables (see Footnote 1). Since this optimization theoretically drives the approximated variational posterior distributions toward the true posterior distributions in the sense of minimizing the Kullback-Leibler divergence between them, this consistent approach leads to improved generalization capability [20, 22, 23]. To do this, this paper provides an analytical solution to the variational lower bound by marginalizing all possible transformation parameters and latent variables introduced in the linear regression problem. The solution is based on a variance-normalized representation of Gaussian mean vectors, which simplifies the solution in the same way as normalized-domain MLLR. As a result of the variational calculation, we can marginalize the transformation parameters in all nodes used in the structural prior setting. This is a part of the solution of the variational message passing algorithm [34], which is a general framework of variational inference in a graphical model. Furthermore, the optimization of the model topology and hyper-parameters in the proposed approach yields an additional improvement in generalization capability. For example, the proposed approach infers the linear regression without treating the Gaussian cluster topology and hyper-parameters as tuning parameters. Thus, linear regression for HMM parameters is accomplished without excessive parameterization in a Bayesian sense.

This paper is organized as follows. It first introduces the conventional MLLR framework in Section 2. Then, we provide a formulation of the Bayesian linear regression framework in Section 3. Based on the formulation, Section 4 introduces a practical model selection and hyper-parameter optimization scheme in terms of optimizing the variational lower bound. Section 5 reports unsupervised speaker adaptation experiments for a large vocabulary continuous speech recognition task, and confirms the effectiveness of the proposed approach. The mathematical notations used in this paper are summarized in Table 1.

2 Linear Regression for Hidden Markov Models Based on Variance Normalized Representation

This section briefly explains a solution for the linear regression parameters for HMMs within a maximum likelihood EM algorithm framework. This paper uses a solution based on a variance normalized representation of Gaussian mean vectors to simplify the solution (see Footnote 2). In this paper, we focus only on the transformation of Gaussian mean vectors in HMMs.

2.1 Maximum Likelihood Solution Based on EM Algorithm and Variance Normalized Representation

First, we explain the basic EM algorithm of the conventional HMM parameter estimation to set the notational convention and to align with the subsequent development of the proposed approach. Let \(\mathbf{O} \triangleq \{\mathbf{o}_{t} \in \mathbb{R}^{D} | t = 1, \cdots, T\}\) be a sequence of D-dimensional feature vectors for T speech frames. The latent variables in a continuous density HMM are composed of HMM states and mixture components of GMMs. A sequence of HMM states is represented by \(\mathbf{S} \triangleq \{s_{t} | t = 1, \cdots, T\}\), where the value of \(s_{t}\) denotes an HMM state index at frame t. Similarly, a sequence of mixture components is represented by \(\mathbf{Z} \triangleq \{z_{t} | t = 1, \cdots, T\}\), where the value of \(z_{t}\) denotes a mixture component index at frame t. The EM algorithm deals with the following auxiliary function as an optimization function instead of directly using the model likelihood:

where Θ is a set of HMM parameters. The brackets 〈·〉 denote the expectation, i.e., \(\langle g(y) \rangle_{p(y)} \equiv \int g(y) p(y) dy\) for a continuous random variable y and \(\langle g(n) \rangle_{p(n)} \equiv \sum_{n} g(n) p(n)\) for a discrete random variable n. \(p(\mathbf{O}, \mathbf{S}, \mathbf{Z}|\boldsymbol{\Theta})\) is the complete data likelihood given Θ. \(p(\mathbf{S}, \mathbf{Z}|\mathbf{O}; \hat{\boldsymbol{\Theta}})\) is the posterior distribution of the latent variables given the previously estimated HMM parameters \(\hat{\boldsymbol{\Theta}}\). Eq. 1 is an expected value, and is efficiently computed by using the forward-backward algorithm as the E-step of the EM algorithm.

The M-step of the EM algorithm estimates HMM parameters, as follows:

The E-step and the M-step are performed iteratively until convergence, and finally we obtain the HMM parameters as a close approximation of a stationary point solution.
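Under the definitions above, the auxiliary function (Eq. 1) and the M-step (Eq. 2) can be sketched in the standard EM form. The symbol \(\bar{\boldsymbol{\Theta}}\) for the M-step estimate is an assumed notation, chosen to parallel the bar notation used for the optimal transformation matrix later in this section:

\[
Q(\boldsymbol{\Theta}|\hat{\boldsymbol{\Theta}}) = \left\langle \log p(\mathbf{O}, \mathbf{S}, \mathbf{Z}|\boldsymbol{\Theta}) \right\rangle_{p(\mathbf{S}, \mathbf{Z}|\mathbf{O}; \hat{\boldsymbol{\Theta}})}, \qquad
\bar{\boldsymbol{\Theta}} = \mathop{\mathrm{argmax}}_{\boldsymbol{\Theta}} \, Q(\boldsymbol{\Theta}|\hat{\boldsymbol{\Theta}}).
\]

Each iteration then sets \(\hat{\boldsymbol{\Theta}} \leftarrow \bar{\boldsymbol{\Theta}}\) before recomputing the posterior of the latent variables.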

Now we focus on the linear transformation parameters within the EM algorithm. We prepare a transformation parameter matrix \(\mathbf{W}_{j}\) for each leaf node j in a Gaussian tree. Here, we assume that the Gaussian tree is pruned by a model selection approach as a model structure m, and the set of leaf nodes in the pruned tree is represented as \({\mathcal {J}}_{m}\). Hereinafter, we use V to denote a joint event of S and Z (i.e., V ≜ {S, Z}). This greatly simplifies the following development pertaining to the adaptation of the mean and the covariance parameters. Similar to Eq. 1, the auxiliary function with respect to a set of transformation parameters \(\boldsymbol {\Lambda }_{{\mathcal {J}}_{m}} = \{\mathbf {W}_{j} | j = 1, \cdots, |{\mathcal {J}}_{m}| \} \) can be represented as follows:

k denotes a unique mixture component index of all Gaussians in the target HMMs (for all phoneme HMMs in a speech recognition case), and K is the total number of Gaussians. \(\zeta _{k, t} \triangleq p(v_{t} = k|\mathbf {O}; \boldsymbol {\Theta }, \hat {\boldsymbol {\Lambda }}_{{\mathcal {J}}_{m}})\) is the posterior probability of mixture component k at t, derived from the previously estimated transformation parameters \(\hat {\boldsymbol {\Lambda }}_{{\mathcal {J}}_{m}}\) (see Footnote 3). \(\boldsymbol {\mu }_{k}^{ad}\) is a transformed mean vector with \(\boldsymbol {\Lambda }_{{\mathcal {J}}_{m}}\), and the concrete form of this vector is discussed in the next paragraph. In the Q function, we disregard the parameters of the state transition probabilities and the mixture weights since they do not depend on the optimization with respect to \(\boldsymbol {\Lambda }_{{\mathcal {J}}_{m}}\). \({\mathcal {N}}(\cdot | \boldsymbol {\mu }, \mathbf {\Sigma })\) denotes a Gaussian distribution with mean parameter μ and covariance matrix parameter Σ, and is defined as follows:

where tr[·] and ′ mean the trace and transposition operations of a matrix, respectively. g(Σ k ) is a normalization factor, and is defined as follows:

In the following paragraphs, we derive Eq. 3 as a function of \(\boldsymbol {\Lambda }_{{\mathcal {J}}_{m}}\) to optimize \(\boldsymbol {\Lambda }_{{\mathcal {J}}_{m}}\), similar to Eq. 2.

We consider the concrete form of the transformed mean vector \(\boldsymbol{\mu}_{k}^{ad}\) based on the variance normalized representation. We first define the Cholesky decomposition matrix \(\mathbf{C}_{k}\) as follows:

\(\mathbf{C}_{k}\) is a D×D triangular matrix. If Gaussian k is included in the set of Gaussians \({\mathcal {K}}_{j}\) in leaf node j (i.e., \(k \in {\mathcal {K}}_{j}\)), the affine transformation of a Gaussian mean vector in the covariance normalized space, \((\mathbf{C}_{k})^{-1} \boldsymbol{\mu}_{k}^{ad}\), is represented as follows:

\(\boldsymbol{\xi}_{k}\) is an augmented normalized vector of the initial (non-adapted) Gaussian mean vector \(\boldsymbol{\mu}_{k}^{ini}\). \(\mathbf{W}_{j}\) is a D×(D+1) affine transformation matrix, and j is a leaf node index that holds a set of Gaussians. Namely, the transformation parameter \(\mathbf{W}_{j}\) is shared among the set of Gaussians \({\mathcal {K}}_{j}\). The clustered structure of the Gaussians is usually represented as a binary tree where a set of Gaussians belongs to each node.

The Q function of \(\boldsymbol {\Lambda }_{{\mathcal {J}}_{m}}\) is represented by substituting Eqs. 7 and 4 into Eq. 3 as follows:

where Ξ j and Z j are 0th and 1st order statistics of linear regression parameters defined as:

Here Z j is a D×(D+1) matrix and Ξ j is a (D+1)×(D+1) symmetric matrix. ζ k , ν k , and S k are defined as follows:

These are the 0th, 1st, and 2nd order sufficient statistics of Gaussians in HMMs, respectively.

Since Eq. 8 is a quadratic form with respect to \(\mathbf{W}_{j}\), we can obtain the optimal \(\bar{\mathbf{W}}_{j}\), similar to Eq. 2. By differentiating the Q function with respect to \(\mathbf{W}_{j}\), we can derive the following equation (see Footnote 4):

Thus, we can obtain the following analytical solution:

Therefore, the optimized mean vector parameter is represented as:

Therefore, μ k ad is analytically obtained by using the statistics (Z j and Ξ j in Eq. 9) and initial HMM parameters (C k and ξ k ). This solution corresponds to the M-step of the EM algorithm, and the E-step is performed by the forward-backward algorithm, similarly to that of HMMs, to compute these statistics.
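The M-step described in this section can be sketched numerically. The forms used below are inferred from the surrounding text rather than quoted from it, and should be read as assumptions: \(\boldsymbol{\Sigma}_k = \mathbf{C}_k \mathbf{C}_k'\) with a lower-triangular \(\mathbf{C}_k\), \(\boldsymbol{\xi}_k = [1, (\mathbf{C}_k^{-1}\boldsymbol{\mu}_k^{ini})']'\), \(\mathbf{\Xi}_j = \sum_{k} \zeta_k \boldsymbol{\xi}_k \boldsymbol{\xi}_k'\), \(\mathbf{Z}_j = \sum_{k} \mathbf{C}_k^{-1}\boldsymbol{\nu}_k \boldsymbol{\xi}_k'\), and \(\bar{\mathbf{W}}_j = \mathbf{Z}_j \mathbf{\Xi}_j^{-1}\). All function names are illustrative.

```python
import numpy as np

def augmented_mean(C, mu):
    """xi_k: a leading 1 stacked on the variance-normalized initial mean."""
    return np.concatenate(([1.0], np.linalg.solve(C, mu)))

def regression_stats(C_list, xi_list, zeta, nu):
    """Accumulate Xi_j ((D+1)x(D+1)) and Z_j (Dx(D+1)) over the Gaussians of one node."""
    D = C_list[0].shape[0]
    Xi = np.zeros((D + 1, D + 1))
    Z = np.zeros((D, D + 1))
    for C, xi, z, n in zip(C_list, xi_list, zeta, nu):
        Xi += z * np.outer(xi, xi)                 # occupancy-weighted outer product
        Z += np.outer(np.linalg.solve(C, n), xi)   # normalized first-order statistic
    return Xi, Z

def ml_transform(Xi, Z):
    """W = Z Xi^{-1}, computed by a linear solve (Xi is symmetric)."""
    return np.linalg.solve(Xi, Z.T).T

# Toy node with K Gaussians whose data are generated by a known transform.
rng = np.random.default_rng(0)
D, K = 2, 5
covs = []
for _ in range(K):
    A = rng.standard_normal((D, D))
    covs.append(A @ A.T + np.eye(D))               # random positive definite covariance
C_list = [np.linalg.cholesky(S) for S in covs]     # lower triangular, S = C C'
mu_ini = [rng.standard_normal(D) for _ in range(K)]
xi_list = [augmented_mean(C, m) for C, m in zip(C_list, mu_ini)]
W_true = np.hstack([rng.standard_normal((D, 1)),
                    np.eye(D) + 0.1 * rng.standard_normal((D, D))])
zeta = rng.uniform(10, 50, size=K)                 # 0th-order statistics (occupancies)
# Noise-free 1st-order statistics: frame mean equals the transformed mean.
nu = [z * (C @ W_true @ xi) for z, C, xi in zip(zeta, C_list, xi_list)]

Xi, Z = regression_stats(C_list, xi_list, zeta, nu)
W_hat = ml_transform(Xi, Z)
mu_ad = [C @ W_hat @ xi for C, xi in zip(C_list, xi_list)]  # adapted means
```

In this noise-free toy case the estimate recovers the generating transform. With real adaptation data, \(\mathbf{\Xi}_j\) can be poorly conditioned when a node holds few frames, which is the over-training problem that motivates the Bayesian treatment in the next section.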

3 Bayesian Linear Regression

This section provides an analytical solution for Bayesian linear regression by using a variational lower bound. Whereas the previous section considers only a regression matrix in leaf node \(j \in {\mathcal {J}}_{m}\), this section also considers a regression matrix in any leaf or non-leaf node \(i \in {\mathcal {I}}_{m}\) of the Gaussian tree given model structure m. We then focus on the set of regression matrices in all nodes, \(\boldsymbol{\Lambda}_{{\mathcal{I}}_{m}} = \{\mathbf{W}_{i} | i = 1, \cdots, |{\mathcal{I}}_{m}|\}\), instead of \(\boldsymbol {\Lambda }_{{\mathcal {J}}_{m}}\), and marginalize \(\boldsymbol{\Lambda}_{{\mathcal{I}}_{m}}\) in a Bayesian manner. This extension involves the structural prior setting as proposed in SMAP and SMAPLR [17, 18].

In this section, we mainly deal with:

- the prior distribution of model parameters \(p(\boldsymbol {\Lambda }_{{\mathcal {I}}_{m}}; m,\boldsymbol { \Psi }) \)
- the true posterior distribution of model parameters and latent variables \(p (\boldsymbol {\Lambda }_{{\mathcal {I}}_{m}}, \mathbf {V} | \mathbf {O}; m, \mathbf {\Psi })\)
- the variational posterior distribution of model parameters and latent variables \(q (\boldsymbol {\Lambda }_{{\mathcal {I}}_{m}}, \mathbf {V} | \mathbf {O}; m, \mathbf {\Psi })\)
- the output distribution \(p(\mathbf {O}, \mathbf {V}|\boldsymbol {\Lambda }_{{\mathcal {I}}_{m}}; \boldsymbol {\Theta })\)

For simplicity, we omit some conditional variables in these distribution functions, as follows:

3.1 Variational Lower Bound

With regard to the variational Bayesian approaches, we first focus on the following marginalized log-likelihood \(\log p(\mathbf{O}; \boldsymbol{\Theta}, m, \boldsymbol{\Psi})\) with a set of HMM parameters Θ, a set of hyper-parameters Ψ, and a model structure m (see Footnotes 5 and 6):

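where \(p(\mathbf {O}, \mathbf {V}|\boldsymbol {\Lambda }_{{\mathcal {I}}_{m}}; \boldsymbol {\Theta })\) is the output distribution of the transformed HMM parameters with transformed mean vectors \(\boldsymbol{\mu}_{k}^{ad}\). \(p(\boldsymbol{\Lambda}_{{\mathcal{I}}_{m}}; m, \boldsymbol{\Psi})\) is a prior distribution of the transformation matrices \(\boldsymbol{\Lambda}_{{\mathcal{I}}_{m}}\). In the following explanation, we omit Θ, m, and Ψ in the prior distribution and output distribution for simplicity, i.e., \(p(\boldsymbol{\Lambda}_{{\mathcal{I}}_{m}}; m, \boldsymbol{\Psi}) \rightarrow p(\boldsymbol{\Lambda}_{{\mathcal{I}}_{m}})\), and \(p(\mathbf{O}, \mathbf{V}|\boldsymbol{\Lambda}_{{\mathcal{I}}_{m}}; \boldsymbol{\Theta}) \rightarrow p(\mathbf{O}, \mathbf{V}|\boldsymbol{\Lambda}_{{\mathcal{I}}_{m}})\).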

The variational Bayesian approach focuses on the lower bound of the marginalized log likelihood \({\mathcal {F}} (m, \mathbf { \Psi })\) with a set of hyper-parameters Ψ and a model structure m, as follows:

The inequality in Eq. 15 follows from Jensen's inequality: \(\log \langle X \rangle_{p(X)} \ge \langle \log X \rangle_{p(X)}\). \(q(\boldsymbol {\Lambda }_{{\mathcal {I}}_{m}}, \mathbf {V})\) is an arbitrary distribution, and is optimized by using a variational method to be discussed later. For simplicity, we omit m, Ψ, and O from the distributions. The variational lower bound is a better approximation of the marginalized log-likelihood than the auxiliary functions of the maximum likelihood EM and maximum a posteriori EM algorithms, which point-estimate model parameters, especially for small amounts of training data [21-23]. Therefore, variational Bayes can mitigate the sparse data problem that the conventional approaches must confront.

The variational Bayes regards the variational lower bound \({\mathcal {F}} (m, \mathbf { \Psi })\) as an objective function for the model structure and hyper-parameter, and an objective functional for the joint posterior distribution of the transformation parameters and latent variables [22,23]. In particular, if we consider the true posterior distribution \(p (\boldsymbol {\Lambda }_{{\mathcal {I}}_{m}}, \mathbf {V} | \mathbf {O})\) (we omit conditional variables m and Ψ for simplicity), we obtain the following relationship:

This equation means that maximizing the variational lower bound \({\mathcal {F}} (m, \mathbf { \Psi })\) with respect to \(q(\boldsymbol {\Lambda }_{{\mathcal {I}}_{m}}, \mathbf {V})\) corresponds to indirectly minimizing the Kullback-Leibler (KL) divergence between \(q(\boldsymbol {\Lambda }_{{\mathcal {I}}_{m}}, \mathbf {V})\) and \(p (\boldsymbol {\Lambda }_{{\mathcal {I}}_{m}}, \mathbf {V} | \mathbf {O})\). Therefore, this optimization finds a \(q(\boldsymbol {\Lambda }_{{\mathcal {I}}_{m}}, \mathbf {V})\) that approaches the true posterior distribution (see Footnote 7).
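The relationship between the lower bound and the KL divergence is the standard decomposition of the marginalized log-likelihood. A sketch in the notation of this section (with m, Ψ, and Θ omitted inside the distributions, and the KL definition written out as an assumption about the convention used) is:

\[
\log p(\mathbf{O}; \boldsymbol{\Theta}, m, \boldsymbol{\Psi})
= \mathcal{F}(m, \boldsymbol{\Psi})
+ \mathrm{KL}\left[ q(\boldsymbol{\Lambda}_{\mathcal{I}_m}, \mathbf{V}) \,\middle\|\, p(\boldsymbol{\Lambda}_{\mathcal{I}_m}, \mathbf{V}|\mathbf{O}) \right],
\qquad
\mathrm{KL}[q \| p] \triangleq \left\langle \log \frac{q}{p} \right\rangle_{q} \ge 0.
\]

Because the left-hand side does not depend on q, any increase of \(\mathcal{F}(m, \boldsymbol{\Psi})\) with respect to q is matched by an equal decrease of the KL term.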

Thus, in principle, we can straightforwardly obtain the (sub) optimal model structure, hyper-parameters, and posterior distribution, as follows:

These optimization steps are performed alternately and finally lead to locally optimal solutions, similar to the EM algorithm. However, it is difficult to deal with the joint distribution \(q(\boldsymbol {\Lambda }_{{\mathcal {I}}_{m}}, \mathbf {V})\) directly, and we propose to factorize it by utilizing the Gaussian tree structure. In addition, we also set a conjugate form of the prior distribution \(p(\boldsymbol {\Lambda }_{{\mathcal {I}}_{m}})\). This procedure is a typical VB recipe for making the solution mathematically tractable, similar to the classical Bayesian adaptation approach.

3.2 Structural Prior Distribution Setting in a Binary Tree

We utilize a Gaussian tree structure to factorize the prior distribution \(p(\boldsymbol {\Lambda }_{{\mathcal {I}}_{m}})\). We consider a binary tree structure, but the formulation is applicable to a general non-binary tree. We define the parent node of i as p(i), the left child node of i as l(i), and the right child node of i as r(i), as shown in Fig. 2, where a transformation matrix is prepared for each corresponding node i. If we define W 1 as the transformation matrix in the root node, we assume the following factorization for the prior distribution \(p(\boldsymbol {\Lambda }_{{\mathcal {I}}_{m}})\),

To make the prior distribution a product form in the last line of Eq. 18, we define \(p(\mathbf{W}_{1}) \triangleq p(\mathbf{W}_{1}|\mathbf{W}_{{\mathsf{p}}(1)})\). As seen, the effect of the transformation matrix in a target node propagates to its child nodes.

This prior setting is based on the intuitive assumption that the statistics in a target node are highly correlated with the statistics in its parent node. In addition, since the total number of speech frames assigned to the set of Gaussians in the parent node is always larger than that in the target node, the statistics obtained in the parent node are more reliable than those in the target node, and they can serve as good prior knowledge for the transformation parameter estimation in the target node.

With a Bayesian approach, we need to set a practical form of the above prior distributions. A conjugate distribution is preferable as far as obtaining an analytical solution is concerned, and we set a matrix variate normal distribution similar to Maximum A Posteriori Linear Regression (MAPLR [15]). A matrix variate normal distribution is defined as follows:

where \(\mathbf{M}_{i}\) is a D×(D+1) location matrix, \(\mathbf{\Omega}_{i}\) is a (D+1)×(D+1) symmetric scale matrix, and \(\mathbf{\Phi}_{i}\) is a D×D symmetric scale matrix. \(\mathbf{\Omega}_{i}\) represents the correlation of column vectors, and \(\mathbf{\Phi}_{i}\) represents the correlation of row vectors. These are hyper-parameters of the matrix variate normal distribution. There are many hyper-parameters to be set, which complicates the implementation. In this paper, we try to find another conjugate distribution with fewer hyper-parameters than Eq. 19. To obtain a simple solution for the final analytical results, we use a spherical Gaussian distribution that has the following constraints on \(\mathbf{\Omega}_{i}\) and \(\mathbf{\Phi}_{i}\):

where I D is the D×D identity matrix. ρ i indicates a precision parameter. Then, Eq. 19 can be rewritten as follows:

where \(h(\rho_{i}^{-1} \mathbf{I}_{D+1})\) is a normalization factor, defined as

This approximation means that the matrix elements are mutually uncorrelated, which yields simple solutions for Bayesian linear regression (see Footnote 8).

Based on the spherical matrix variate normal distribution, the conditional prior distribution \(p(\mathbf{W}_{i}|\mathbf{W}_{{\mathsf{p}}(i)})\) in Eq. 18 is obtained by setting the location matrix to the transformation matrix \(\mathbf{W}_{{\mathsf{p}}(i)}\) in the parent node with the precision parameter \(\rho_{i}\), as follows:

Note that in the following sections \(\mathbf{W}_{i}\) and \(\mathbf{W}_{{\mathsf{p}}(i)}\) are marginalized. In addition, we set the location matrix in the root node as the deterministic value \(\mathbf{W}_{{\mathsf{p}}(1)} = [\mathbf{0}, \mathbf{I}_{D}]\). Since \(\boldsymbol {\mu }_{k}^{ad} = \mathbf {C}_{k} \mathbf {W}_{{\mathsf {p}}(1)} \boldsymbol {\xi }_{k} = \boldsymbol {\mu }_{k}^{ini}\) from Eq. 7, this hyper-parameter setting means that the initial mean vectors are not changed if we only use the prior knowledge. This makes sense when the amount of data is small, since it keeps the HMM parameters at their initial values; in a sense, it also inherits the philosophical background of Bayesian adaptation, although the objective function has been changed from the a posteriori probability to a lower bound of the marginalized likelihood. Therefore, we just have \(\{\rho _{i} | i = 1, \cdots, |{\mathcal {I}}_{m}|\}\) as the set of hyper-parameters Ψ, which will also be optimized in our framework.
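The effect of the root prior location can be checked numerically. The sketch below assumes that \(\boldsymbol{\xi}_k\) stacks a leading 1 on top of \(\mathbf{C}_k^{-1}\boldsymbol{\mu}_k^{ini}\) and that \(\mathbf{C}_k\) is the lower-triangular Cholesky factor of the covariance matrix; both are inferred from the text rather than quoted, and the variable names are illustrative.

```python
import numpy as np

D = 3
rng = np.random.default_rng(1)
A = rng.standard_normal((D, D))
C = np.linalg.cholesky(A @ A.T + np.eye(D))        # Cholesky factor of a toy covariance
mu_ini = rng.standard_normal(D)                    # initial (non-adapted) mean vector

# Augmented, variance-normalized mean (assumed layout: bias term first).
xi = np.concatenate(([1.0], np.linalg.solve(C, mu_ini)))

# Prior location for the root node: zero bias column followed by the identity.
W_root_prior = np.hstack([np.zeros((D, 1)), np.eye(D)])

mu_ad = C @ W_root_prior @ xi                      # transformed mean under the prior alone
```

The transformed mean equals the initial mean, so with no adaptation data the prior leaves the HMM unchanged.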

3.3 Variational Calculus

In VB, we also assume the following factorization form to the posterior distribution \(q (\mathbf {V}, \boldsymbol {\Lambda }_{{\mathcal {I}}_{m}})\):

Then, from the variational calculation for \({\mathcal {F}} (m, \mathbf { \Psi })\) with respect to q(W i ), we obtain the following (sub) optimal solution for q(W i ):

where we use Eqs. 18 and 24 to rewrite the equation. Operation \(\propto \) denotes the proportional relationship between the left and the right hand sides of the probabilistic distribution functions. It is a useful expression since we do not have to write normalization factors explicitly, which are disregarded in the following calculations. In Eq. 25, ∝ is also used in the logarithmic domain where normalization factors can be represented as constant terms.

In this expectation, we can consider the following two cases of variational posterior distributions:

3.3.1 1) Leaf Node

We first focus on the prior term of Eq. 25. If i is a leaf node, we can disregard the expectation with respect to \(\prod_{i' \in {\mathcal{I}}_{m} \setminus i} q(\mathbf{W}_{i'})\) in nodes other than the parent node p(i) of the target leaf node. Thus, we obtain the following simple solution:

3.3.2 2) Non-Leaf Node (with child nodes)

Similarly, if i is a non-leaf node, in addition to the parent node p(i) of the target node, we also have to consider the child nodes l(i) and r(i) of the target node for the expectation, as follows:

In both cases, the posterior distribution of the transformation matrix in the target node depends on those in the parent and child nodes. Therefore, the posterior distributions are iteratively calculated. This inference is known as a variational message passing algorithm [34], and Eqs. 26 and 27-1 are specific solutions of the variational message passing algorithm to a binary tree structure. The next section provides a concrete form of the posterior distribution of the transformation matrix.

3.4 Posterior Distribution of Transformation Matrix

We first focus on Eq. (27), which generalizes Eq. 26 by adding terms based on the child nodes. Equation (27-4) is based on the expectation with respect to \(\prod_{i' \in {\mathcal{I}}_{m} \setminus i} q(\mathbf{W}_{i'})\) and q(V). The term with q(V) is represented by the following expression, similar to Eq. 8:

Here, the sufficient statistics (\(\zeta_{k}\), \(\mathbf{S}_{k}\), \(\mathbf{\Xi}_{i}\), and \(\mathbf{Z}_{i}\) in Eqs. 9 and 10) are computed by the VB E-step (e.g., \(\zeta_{k,t} = q(v_{t} = k)\)), which is described in the next section. This form means that the term can be factorized by node i. This factorization property is important for the following analytic solutions and algorithm. Indeed, by considering the expectation with respect to \(\prod_{i' \in {\mathcal{I}}_{m} \setminus i} q(\mathbf{W}_{i'})\), we can integrate out the terms that do not depend on \(\mathbf{W}_{i}\), as follows:

Next, we consider Eq. (27-1). Since we use a conjugate prior distribution, \(q(\mathbf{W}_{{\mathsf{p}}(i)})\) is also represented by the following matrix variate normal distribution, which belongs to the same distribution family as the prior distribution.

Note that the posterior distribution has a unique form in which the first covariance matrix is an identity matrix while the second one is a symmetric matrix. We discuss this form later, together with the analytical solution.

By substituting Eqs. 21 and 30 into Eq. (27-1), Eq. (27-1) is represented as follows:

To solve the integral, we use the following matrix distribution formula:

Then, by disregarding the terms that do not depend on W i , Eq. 31 can be solved as the logarithmic function of the matrix variate normal distribution that has the posterior distribution parameter M p(i) as a hyper-parameter.

Similarly, Eqs. (27-2) and (27-3) are solved as follows:

Thus, the expected value terms of the three prior distributions in Eq. (27) are represented as the following matrix variate normal distribution:

It is an intuitive solution, since the location parameter of W i is represented as a linear interpolation of the location values of the posterior distributions in the parent and child nodes. The precision parameters control the linear interpolation ratio.
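The interpolation can be made concrete with a small numerical sketch. It assumes, by completing the square over the three spherical Gaussian prior terms, that the combined precision is \(\hat{\rho}_i = \rho_i + \rho_{\mathsf{l}(i)} + \rho_{\mathsf{r}(i)}\) and that the combined location is the precision-weighted average of the parent and child locations; these forms and the function names are assumptions for illustration, not quotations of the omitted equations.

```python
import numpy as np

def combine_priors(rho_i, M_parent, rho_l, M_left, rho_r, M_right):
    """Precision-weighted interpolation of parent and child location matrices."""
    rho_hat = rho_i + rho_l + rho_r
    M_hat = (rho_i * M_parent + rho_l * M_left + rho_r * M_right) / rho_hat
    return rho_hat, M_hat

def neg_log_prior_terms(W, rho_i, M_parent, rho_l, M_left, rho_r, M_right):
    """Sum of the three quadratic terms in W, up to an additive constant."""
    return 0.5 * (rho_i * np.sum((W - M_parent) ** 2)
                  + rho_l * np.sum((W - M_left) ** 2)
                  + rho_r * np.sum((W - M_right) ** 2))

rng = np.random.default_rng(0)
D = 2
M_p, M_l, M_r = (rng.standard_normal((D, D + 1)) for _ in range(3))
rho_i, rho_l, rho_r = 2.0, 0.5, 1.5
rho_hat, M_hat = combine_priors(rho_i, M_p, rho_l, M_l, rho_r, M_r)

def gap(W):
    """Difference between the three-term sum and the single combined quadratic."""
    combined = 0.5 * rho_hat * np.sum((W - M_hat) ** 2)
    return neg_log_prior_terms(W, rho_i, M_p, rho_l, M_l, rho_r, M_r) - combined

W_a = rng.standard_normal((D, D + 1))
W_b = rng.standard_normal((D, D + 1))
```

The gap is the same for any two matrices, which confirms that the three terms collapse into one spherical Gaussian in \(\mathbf{W}_i\). A larger precision on one neighbor pulls the combined location toward that neighbor.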

Similarly, we can also obtain the expected value term of the prior term in Eq. 26, and we summarize the prior terms of the non-leaf and leaf node cases as follows:

where

Thus, the effect of prior distributions becomes different depending on whether the target node is a non-leaf node or leaf node. The solution is different from our previous solution [37] since the previous solution does not marginalize the transformation parameters in non-leaf nodes. In the Bayesian sense, this solution is stricter than the previous solution.

Based on Eqs. 28 and 36, we can finally derive the quadratic form of W i as follows:

where we disregard the terms that do not depend on W i . Thus, by defining the following matrix variables

we can derive the posterior distribution of W i analytically. The analytical solution is expressed as

where

The posterior distribution also becomes a matrix variate normal distribution since we use a conjugate prior distribution for \(\mathbf{W}_{i}\). From Eq. 39, \(\tilde{\mathbf{M}}_{i}\) is determined by the hyper-parameter \(\hat{\mathbf{M}}_{i}\) and the first-order statistics \(\mathbf{Z}_{i}\) of the linear regression matrix. \(\hat{\rho}_{i}\) controls the balance between the effects of the prior distribution and the adaptation data. This solution is the M-step of the VB EM algorithm and corresponds to that of the ML EM algorithm in Section 2.1.
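The balance controlled by \(\hat{\rho}_i\) can be illustrated with a minimal sketch. It assumes the posterior parameters \(\tilde{\mathbf{\Omega}}_i = (\hat{\rho}_i \mathbf{I}_{D+1} + \mathbf{\Xi}_i)^{-1}\) and \(\tilde{\mathbf{M}}_i = (\hat{\rho}_i \hat{\mathbf{M}}_i + \mathbf{Z}_i)\tilde{\mathbf{\Omega}}_i\), which follow from completing the square of the quadratic form with a spherical prior; the function name and the toy statistics are hypothetical.

```python
import numpy as np

def vb_posterior(Xi, Z, M_hat, rho_hat):
    """Posterior location and column scale matrix of W_i (assumed conjugate update)."""
    size = Xi.shape[0]
    Omega = np.linalg.inv(rho_hat * np.eye(size) + Xi)
    M_tilde = (rho_hat * M_hat + Z) @ Omega
    return M_tilde, Omega

rng = np.random.default_rng(0)
D = 2
B = rng.standard_normal((D + 1, D + 1))
Xi = B @ B.T + np.eye(D + 1)                       # toy, well-conditioned statistic
W_ml = rng.standard_normal((D, D + 1))             # transform the data alone would give
Z = W_ml @ Xi                                      # so that Z Xi^{-1} equals W_ml
M_hat = np.hstack([np.zeros((D, 1)), np.eye(D)])   # prior location [0, I_D]

M_weak, _ = vb_posterior(Xi, Z, M_hat, rho_hat=1e-9)    # prior almost ignored
M_strong, _ = vb_posterior(Xi, Z, M_hat, rho_hat=1e9)   # prior dominates
M_mid, Omega_mid = vb_posterior(Xi, Z, M_hat, rho_hat=1.0)
```

A vanishing precision recovers the ML solution of Section 2.1, while a very large precision keeps the transform at the prior location; intermediate values interpolate between the two according to the amount of data reflected in \(\mathbf{\Xi}_i\).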

Compared with Eq. 21, Eq. 40 keeps the first covariance matrix as a diagonal matrix, while the second covariance matrix \(\tilde {\mathbf {\Omega }}\) has off-diagonal elements. This means that the posterior distribution only considers the correlation between column vectors in W. This unique property comes from the variance normalized representation introduced in Section 2, which makes multivariate Gaussian distributions in HMMs uncorrelated, and this relationship carries over to the VB solutions.

Although the solution for a non-leaf node would make the prior distribution robust by taking account of the child node hyper-parameters, this structure makes the dependency of the target node on the other linked nodes complex. Therefore, in the implementation step, we approximate the hyper-parameters of the posterior distribution for a non-leaf node by those for a leaf node, i.e., \(\hat{\mathbf{M}}_{i} \approx \mathbf{M}_{{\mathsf{p}}(i)}\) and \(\hat{\rho}_{i} \approx \rho_{i}\) in Eq. 37, to keep the algorithm simple. We will evaluate the effect of the non-leaf node solution in future work.

The next section explains the E-step of the VB EM algorithm, which computes the sufficient statistics \(\zeta_{k}\), \(\mathbf{S}_{k}\), \(\mathbf{\Xi}_{i}\), and \(\mathbf{Z}_{i}\) in Eqs. 9 and 10. These are obtained by using \(\tilde {q}(\mathbf {W}_{i})\), whose mode \(\tilde{\mathbf{M}}_{i}\) is used for the Gaussian mean vector transformation.

3.5 Posterior Distribution of Latent Variables

From the variational calculation of \({\mathcal {F}} (m, \mathbf { \Psi })\) with respect to q(V), we also obtain the following posterior distribution:

By using the factorization form of the variational posterior (Eq. 24), we can disregard the expectation with respect to the variational posteriors other than that of the target node i. Therefore, to obtain the above VB posteriors of the latent variables, we have to consider the following integral:

Since the Gaussian mean vectors are only updated in the leaf nodes, node i in this section is regarded as a leaf node. By substituting Eqs. 40 and 4 into Eq. 43, the equation is represented as (see Appendix):

where

The analytical result is almost equivalent to the E-step of conventional MLLR, which means that the computation time is almost the same as that of the conventional MLLR E-step.

Note that the Gaussian mean vectors are updated in the leaf nodes in this result, while the posterior distributions of the transformation parameters are updated for all nodes.

3.6 Variational Lower Bound

By using the factorization form (Eq. 24) of the variational posterior distribution, the variational lower bound defined in Eq. 15 is decomposed as follows:

The second term, which consists of q(V), is an entropy value and is calculated at the E-step in the VB EM algorithm. The first term (\({\mathcal {L}} (m, \mathbf {\Psi })\)) is a logarithmic evidence term for m and \(\boldsymbol {\Psi } = \{\rho _{i} | i = 1, \cdots , |{\mathcal {I}}_{m}|\}\), and we can obtain an analytical solution of ℒ(m, Ψ). Because of the factorization forms in Eqs. 24, 18, and 28, ℒ(m, Ψ) can be represented as the summation over i, as follows:

where

Note that this factorization form depends on the parent and child node parameters through Eqs. 37 and 39. To derive an analytical solution, we first consider the expectation with respect to only q(V) for cluster i. By substituting Eqs. 8, 21, and 40 into ℒ i (ρ i , ρ l(i) , ρ r(i) ), and by using Eq. 39, the expectation can be rewritten as follows:

The obtained result does not depend on W i . Therefore, the expectation with respect to q(W i ) can be disregarded in ℒ i (ρ i , ρ l (i), ρ r(i)). Consequently, we can obtain the following analytical result for the lower bound:

The first line of the obtained result corresponds to the likelihood value given the amount of data and the covariance matrices of the Gaussians. The other terms consider the effect of the prior and posterior distributions of the model parameters. This is used as an optimization criterion with respect to the model structure m and the hyper-parameters Ψ. Note that the objective function can be represented as a summation over i because of the factorization form of the prior and posterior distributions. This representation property is used for our model structure optimization in Section 4.2 for a binary tree structure representing a set of Gaussians used in the conventional MLLR.

4 Optimization of Hyper-Parameters and Model Structure

In this section, we describe how to optimize hyper-parameters Ψ and model structure m by using the variational lower bound as an objective function. Once we obtain the variational lower bound, we can obtain an appropriate model structure and hyper-parameters at the same time that maximize the lower bound as follows:

In this paper, we use two approximations for the variational lower bound to make the inference algorithm practical. First, we fix latent variables V during the above optimization. Then, \(\langle \log q(\mathbf {V}) \rangle _{q(\mathbf {V})}\) in Eq. 46 is also fixed for m and Ψ, and can be disregarded in the objective function. Thus, we can focus only on ℒ(m, Ψ) in the optimization step, which reduces the computational cost greatly, as follows:

This approximation is widely used in acoustic model selection (likelihood criterion [38] and Bayesian criterion [26]). Second, as we discussed in Section 3.4, the solution for a non-leaf node (Eq. 36) makes the dependency of the target node on the other linked nodes complex. Therefore, in the implementation step, we approximate the posterior distribution for a non-leaf node by that for a leaf node to keep the algorithm simple, i.e., ℒ i (ρ i , ρ l(i) , ρ r(i) ) ≈ ℒ i (ρ i ) with ρ̂ i ≈ ρ i and so on, where ℒ i (ρ i ) is defined in the next section.

4.1 Hyper-Parameter Optimization

Even though we marginalize all transformation matrices (W i ), we still have to set the precision hyper-parameters ρ i for all nodes. Since we can derive the variational lower bound, we can optimize the precision hyper-parameters and thereby remove their manual tuning. This is an advantage of the proposed approach over SMAPLR [18], since SMAPLR requires hand-tuning of its hyper-parameters corresponding to {ρ i } i .

Based on the leaf node approximation for the variational posterior distributions, in addition to the fixed latent variable approximation (\({\mathcal {F}} (m, \mathbf {\Psi }) \approx {\mathcal {L}} (m, \mathbf {\Psi })\)), the method we implement in this paper approximately optimizes the precision hyper-parameter as follows:

where

This approximation makes the algorithm simple because we can optimize the precision hyper-parameter within the target and parent nodes, and do not have to consider the child nodes. Since we only have one scalar parameter for this optimization step, we simply used a line search algorithm to obtain the optimal precision hyper-parameter. If we consider a more complex precision structure (e.g., a precision matrix instead of a scalar precision parameter in the prior distribution setting Eq. 20), the line search algorithm may not be adequate. In that case, we need to update the hyper-parameters by using some other optimization technique (e.g., gradient descent).
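A one-dimensional line search of this kind can be sketched as follows. The paper does not state which line search was used, so golden-section search is an assumption here, as are the search over the logarithm of the precision (to keep it positive), the function names, and the toy objective, which merely stands in for the per-node lower bound.

```python
import math


def golden_section_maximize(objective, lower, upper, tol=1e-6):
    """Maximize a unimodal scalar function on [lower, upper]."""
    ratio = (math.sqrt(5.0) - 1.0) / 2.0
    a, b = lower, upper
    c = b - ratio * (b - a)
    d = a + ratio * (b - a)
    fc, fd = objective(c), objective(d)
    while (b - a) > tol:
        if fc > fd:
            # The maximum lies in [a, d]; reuse c as the new upper probe.
            b, d, fd = d, c, fc
            c = b - ratio * (b - a)
            fc = objective(c)
        else:
            # The maximum lies in [c, b]; reuse d as the new lower probe.
            a, c, fc = c, d, fd
            d = a + ratio * (b - a)
            fd = objective(d)
    return (a + b) / 2.0


def toy_objective(log_rho):
    """Hypothetical stand-in for the lower bound as a function of log(rho)."""
    return -(log_rho - 1.0) ** 2


best_log_rho = golden_section_maximize(toy_objective, -5.0, 5.0)
best_rho = math.exp(best_log_rho)
```

Golden-section search needs only function evaluations and assumes a single maximum inside the bracket, which is why it would not extend directly to a full precision matrix.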

4.2 Model Selection

The remaining tuning parameter in the proposed approach is the number of clusters. This is a model selection problem, and we can also obtain the number of clusters automatically by optimizing the variational lower bound. In the binary tree structure, we focus on a subtree composed of a target non-leaf node i and its child nodes l(i) and r(i). We compute the following difference based on the value of Eq. 54 for the parent node and those for the child nodes (see the last note in Notes):

This difference function is used as a stopping criterion in a top-down clustering strategy. If the sign of Δℒ i is negative, the target non-leaf node is regarded as a new leaf node determined by the model selection in terms of optimizing the lower bound, and we prune the child nodes l(i) and r(i). By checking the signs of Δℒ i for all possible nodes, and pruning the child nodes whenever Δℒ i is negative, we obtain the pruned tree structure, which corresponds to maximizing the variational lower bound locally. This optimization is efficiently accomplished by using a depth-first search. This approach is similar to the tree-based triphone clustering based on VB [26].
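The pruning rule can be sketched as a top-down, depth-first traversal. This is a minimal sketch under stated assumptions: the `Node` class, the field `bound` (a per-node lower-bound value), the toy numbers, and the sign convention "children minus parent" for the difference are all hypothetical and chosen only to match the description above.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    name: str
    bound: float  # hypothetical per-node lower-bound value
    left: Optional["Node"] = None
    right: Optional["Node"] = None


def prune(node: Node) -> List[str]:
    """Prune the tree in place and return the names of its leaf nodes."""
    if node.left is None or node.right is None:
        return [node.name]
    # Assumed convention: gain of splitting = children bounds - parent bound.
    delta = node.left.bound + node.right.bound - node.bound
    if delta < 0:
        # Splitting lowers the bound, so the node becomes a new leaf.
        node.left = None
        node.right = None
        return [node.name]
    return prune(node.left) + prune(node.right)


def build_toy_tree() -> Node:
    a = Node("a", -45.0, Node("a1", -30.0), Node("a2", -20.0))
    b = Node("b", -50.0, Node("b1", -20.0), Node("b2", -25.0))
    return Node("root", -100.0, a, b)


leaves = prune(build_toy_tree())
```

In this toy tree the split of `a` is rejected and the split of `b` is kept, so the decision at each node uses only its own value and those of its two children.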

Thus, by optimizing the hyper-parameters and model structure, we can avoid setting any tuning parameters. We summarize this optimization in Algorithms 1, 2, and 3. Algorithm 1 prepares a large Gaussian tree with a set of nodes \({\mathcal {I}}\), prunes the tree based on the model selection (Algorithm 2), and transforms the HMMs (Algorithm 3). Algorithm 2 first optimizes the precision hyper-parameters Ψ, and then the model structure m. Algorithm 3 transforms the Gaussian mean vectors in the HMMs at the new leaf nodes of the pruned tree \({\mathcal {I}}_{m}\) obtained by Algorithm 2.

5 Experiments

This section shows the effectiveness of the proposed approach through experiments with large vocabulary continuous speech recognition. We used the Corpus of Spontaneous Japanese (CSJ) task [39].

5.1 Experimental Condition

The training data for constructing the initial (non-adapted) acoustic model consists of 961 talks from the CSJ conference presentations (234 hours of speech data), and the training data for the language model construction consists of 2,672 talks from the complete CSJ speech data (6.8M word transcriptions). The test set consists of 10 talks (2.4 hours, 26,798 words). Table 2 provides information on the acoustic and language models used in the experiments [40]. We used context-dependent models with continuous density HMMs. The HMM parameters were estimated based on a discriminative training (Minimum Classification Error: MCE) approach [41]. Lexical and language models were also obtained by employing all the CSJ speech data. We used a 3-gram model with a Good-Turing smoothing technique. The OOV rate was 2.3 % and the test set perplexity was 82.4. The acoustic model construction, LVCSR decoding, and the following acoustic model adaptation procedures were performed with the NTT speech recognition platform SOLON [42].

5.2 Experimental Result

To check whether the proposed approach steadily increases the variational lower bound for each optimization in Section 4, Fig. 3 examines the values of the variational lower bound for each condition. Namely, we compare the proposed approach, which optimizes both the model structure and the hyper-parameters as discussed in Section 4, with variants that optimize only one of them or neither, in terms of the ℒ(m, Ψ) value. Figure 3 shows that the proposed approach indeed steadily increases the ℒ(m, Ψ) value. Therefore, this result indicates that the optimization works well by obtaining appropriate hyper-parameters and model structure.

Next, Fig. 4 compares the proposed approach with MLLR based on the maximum likelihood estimation, and SMAPLR based on the approximate Bayesian estimation, as regards the Word Error Rate (WER) for various amounts of adaptation data. With a small amount of adaptation data, the proposed approach outperforms the conventional approaches by about 1.0 %, while with a large amount of adaptation data, the accuracies of all approaches are comparable. This property is theoretically reasonable since the variational lower bound would be tighter than the EM-based objective function for a small amount of data, while it would asymptotically approach the EM-based objective function for a large amount of data. Therefore, we conclude that this improvement comes from the optimization of the hyper-parameters and the model structure of the proposed approach, in addition to the mitigation of the sparse data problem based on the Bayesian approach.

Thus, from the values of the lower bound and the recognition result, we show the effectiveness of the proposed approach.

6 Summary and Future Work

This paper presents a fully Bayesian treatment of linear regression for HMMs by using variational techniques. The derived lower bound of the marginalized log-likelihood can be used for optimizing the hyper-parameters and model structure, which was confirmed by speech recognition experiments. One promising extension is to apply the proposed approach to advanced adaptation techniques. In fact, [43, 44] provide a fully Bayesian solution for standard transformation parameters (rather than the variance normalized representation used in this paper), and apply it to both the feature space and model parameter transformations. The model structure and hyper-parameters are also optimized automatically during adaptation. Thus, feature space normalization and model space adaptation are consistently performed based on a variational Bayesian approach without tuning any parameters.

Another important plan for future work is the joint optimization of HMM parameters and linear regression parameters in a Bayesian framework. This paper assumes that the HMM parameters are fixed during the estimation process of the linear regression parameters. These parameters depend on each other, and the variational approximation can deal with the problem (in the sense of local optimum solutions). However, to consider the model selection in this joint optimization, we have to think of many possible combinations of HMM and linear regression topologies. One promising approach to this problem is to consider a non-parametric Bayesian approach (e.g., variational inference for Dirichlet process mixtures [45] in the VB framework), which can efficiently search for an appropriate model structure among the many possible combinations.

Finally, how to integrate Bayesian approaches with discriminative approaches theoretically and practically is also important future work. One promising approach in this direction is the marginalization of model parameters and margin variables to provide Bayesian interpretations of discriminative methods [46]. However, applying [46] to acoustic models requires some extensions to deal with large-scale structured data problems [47]. This extension would enable more robust regularization of discriminative approaches, and would allow structural learning by combining Bayesian and discriminative criteria.

Notes

Strictly speaking, since transformation parameters are not observables and are marginalized in this paper, these can be regarded as latent variables in a broad sense, similar to HMM states and mixture components of Gaussian Mixture Models (GMMs). However, these have different properties, e.g., transformation parameters can be integrated out in the VB-M step, while HMM states and mixture components are computed in the VB-E step, as discussed in Section 3. Therefore, to distinguish transformation parameters from HMM states and mixture components clearly, this paper only treats HMM states and mixture components as latent variables, which follows the terminology in the variational Bayes framework [22].

k denotes a combination of all possible HMM states and mixture components. In the common HMM representation, k can be represented by these two indexes.

We use the following matrix formulas for the derivation: \(\begin {array}{rll} \frac {\partial }{\partial \mathbf {X}} \text {tr} [\mathbf {X}' \mathbf {A}] &=& \mathbf {A}\\ \frac {\partial }{\partial \mathbf {X}} \text {tr} [\mathbf {X}' \mathbf {X} \mathbf {A}] &=& 2 \mathbf {X} \mathbf {A} \quad (\mathbf {A} \text { is a symmetric matrix}) \end {array} \)
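These two identities can be checked numerically with central finite differences. The sketch below is only a sanity check under stated assumptions: the helper names and the toy matrices are hypothetical, and plain Python lists are used instead of a linear algebra library to keep the example self-contained.

```python
def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def transpose(a):
    return [list(col) for col in zip(*a)]


def trace(a):
    return sum(a[i][i] for i in range(len(a)))


def numerical_gradient(f, x, eps=1e-6):
    """Central-difference gradient of a scalar function f of a matrix x."""
    grad = [[0.0] * len(x[0]) for _ in x]
    for i in range(len(x)):
        for j in range(len(x[0])):
            plus = [row[:] for row in x]
            minus = [row[:] for row in x]
            plus[i][j] += eps
            minus[i][j] -= eps
            grad[i][j] = (f(plus) - f(minus)) / (2.0 * eps)
    return grad


def max_abs_diff(a, b):
    return max(abs(p - q) for ra, rb in zip(a, b) for p, q in zip(ra, rb))


X = [[1.0, -2.0, 0.5], [0.0, 3.0, 1.5]]
A = [[0.3, 1.0, -1.0], [2.0, 0.0, 0.7]]                   # same shape as X
S = [[1.0, 0.2, 0.0], [0.2, 2.0, 0.3], [0.0, 0.3, 1.5]]  # symmetric

# d/dX tr[X' A] should equal A.
grad_linear = numerical_gradient(lambda x: trace(matmul(transpose(x), A)), X)
# d/dX tr[X' X S] should equal 2 X S when S is symmetric.
grad_quadratic = numerical_gradient(
    lambda x: trace(matmul(matmul(transpose(x), x), S)), X)
two_x_s = [[2.0 * v for v in row] for row in matmul(X, S)]

error_linear = max_abs_diff(grad_linear, A)
error_quadratic = max_abs_diff(grad_quadratic, two_x_s)
```

Both errors should be at the level of floating-point rounding, since the two functions are linear and quadratic in X and central differences are exact for them up to rounding.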

Ψ and m can also be marginalized by setting their distributions. This paper point-estimates Ψ and m by a MAP approach.

We can also marginalize the HMM parameters Θ. This corresponds to jointly optimizing the HMM and linear regression parameters.

The following sections assume factorization forms of \(q(\boldsymbol {\Lambda }_{{\mathcal {I}}_{m}}, \mathbf {V})\) to make the solutions mathematically tractable. However, this factorization assumption weakens the relationship between the KL divergence and the variational lower bound. For example, if we assume \(q(\boldsymbol {\Lambda }_{{\mathcal {I}}_{m}}, \mathbf {V}) = q(\boldsymbol {\Lambda }_{{\mathcal {I}}_{m}}) q(\mathbf {V})\), and focus on the KL divergence between \(q(\boldsymbol {\Lambda }_{{\mathcal {I}}_{m}})\) and \(p (\boldsymbol {\Lambda }_{{\mathcal {I}}_{m}} | \mathbf {O})\), we obtain the following inequality:

\( \text {KL} [ q(\boldsymbol {\Lambda }_{{\mathcal {I}}_{m}}) || p (\boldsymbol {\Lambda }_{{\mathcal {I}}_{m}} | \mathbf {O}) ] \leq \log p(\mathbf {O}; \boldsymbol {\Theta }, m, \mathbf { \Psi }) - {\mathcal {F}} (m, \mathbf { \Psi }). \)

Compared with Eq. 16, the relationship between the KL divergence and the variational lower bound is less direct because it holds only as an inequality. In general, the factorization assumption distances the optimal variational posteriors from the true posterior within the VB framework.

The matrix variate normal distribution in Eq. 19 is also represented by the following multivariate normal distribution [36]:

\( \begin {aligned} &{\mathcal {N}}(\mathbf {W}_{i}|\mathbf {M}_{i}, \mathbf {\Phi }_{i}, \mathbf {\Omega }_{i})\\ &\quad \propto \exp \left (- \frac {1}{2}\, \text {vec}(\mathbf {W}_{i} - \mathbf {M}_{i})' (\mathbf {\Omega }_{i} \otimes \mathbf {\Phi }_{i})^{-1} \text {vec}(\mathbf {W}_{i} - \mathbf {M}_{i}) \right ), \end {aligned} \)

where vec(W i −M i ) is a vector formed by the concatenation of the columns of (W i −M i ), and ⊗ denotes the Kronecker product. Based on this form, the VB solution in this paper could be extended, following [16], to the case without the variance normalized representation.
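The equivalence between the vectorized quadratic form and the trace form of the matrix variate normal exponent can be checked numerically. The sketch below is an illustration under stated assumptions: it works directly with the precision matrices Φ⁻¹ and Ω⁻¹ (using the standard identity (Ω ⊗ Φ)⁻¹ = Ω⁻¹ ⊗ Φ⁻¹) to avoid a matrix inversion routine, and all function names and toy values are hypothetical.

```python
def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def transpose(a):
    return [list(col) for col in zip(*a)]


def trace(a):
    return sum(a[i][i] for i in range(len(a)))


def vec(a):
    """Stack the columns of a matrix into one flat list."""
    return [a[i][j] for j in range(len(a[0])) for i in range(len(a))]


def kron(a, b):
    """Kronecker product: block (i, j) of the result is a[i][j] * b."""
    rows_b, cols_b = len(b), len(b[0])
    return [[a[i // rows_b][j // cols_b] * b[i % rows_b][j % cols_b]
             for j in range(len(a[0]) * cols_b)]
            for i in range(len(a) * rows_b)]


def quad_form_vec(x, prec_phi, prec_omega):
    """vec(x)' (prec_omega kron prec_phi) vec(x)."""
    k = kron(prec_omega, prec_phi)
    v = vec(x)
    kv = [sum(k[i][j] * v[j] for j in range(len(v))) for i in range(len(v))]
    return sum(p * q for p, q in zip(v, kv))


def quad_form_trace(x, prec_phi, prec_omega):
    """tr[x' prec_phi x prec_omega]."""
    return trace(matmul(matmul(transpose(x), prec_phi), matmul(x, prec_omega)))


# Toy values: x plays the role of W - M with D = 2, so x is D x (D + 1).
x = [[1.0, -2.0, 0.5], [0.0, 3.0, 1.5]]
prec_phi = [[2.0, 0.5], [0.5, 1.0]]                               # D x D
prec_omega = [[1.0, 0.2, 0.0], [0.2, 2.0, 0.3], [0.0, 0.3, 1.5]]  # (D+1) x (D+1)

gap = abs(quad_form_vec(x, prec_phi, prec_omega)
          - quad_form_trace(x, prec_phi, prec_omega))
```

The two quadratic forms agree up to rounding, which is why the matrix variate density can be handled as an ordinary multivariate normal over the stacked columns.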

Since we approximate the posterior distribution for a non-leaf node to that for a leaf node, the contribution of the variational lower bounds from the non-leaf nodes to the total lower bounds can be disregarded, and Eq. 55 is used as a pruning criterion. If we don’t use this approximation, we just compare the difference between the values ℒ i (ρ i , ρ l (i), ρ r (i)) of the leaf and non-leaf node cases in Eq. 50.

References

Leggetter, C.J., & Woodland, P.C. (1995). Maximum likelihood linear regression for speaker adaptation of continuous density hidden Markov models. Computer Speech and Language, 9, 171–185.

Digalakis, V., Rtischev, D., Neumeyer, L. (1995). Speaker adaptation using constrained reestimation of Gaussian mixtures. IEEE Transactions on Speech and Audio Processing, 3, 357–366.

Lee, C.-H., & Huo, Q. (2000). On adaptive decision rules and decision parameter adaptation for automatic speech recognition. In Proceedings of the IEEE (Vol. 88, pp. 1241–1269).

Shinoda, K. (2010). Acoustic model adaptation for speech recognition. IEICE Transactions on Information and Systems, 93(9), 2348–2362.

Sankar, A., & Lee, C.-H. (1996). A maximum-likelihood approach to stochastic matching for robust speech recognition. IEEE Transactions on Speech and Audio Processing, 4(3), 190–202.

Chien, J.-T., Lee, C.-H., Wang, H.-C. (1997). Improved Bayesian learning of hidden Markov models for speaker adaptation. In Proceedings of ICASSP (Vol. 2, pp. 1027–1030). IEEE.

Chen, K.-T., Liau, W.-W., Wang, H.-W., Lee, L.-S. (2000). Fast speaker adaptation using eigenspace-based maximum likelihood linear regression. In Proceedings of ICSLP (Vol. 3, pp. 742–745).

Mak, B., Kwok, J.T., Ho, S. (2005). Kernel eigenvoice speaker adaptation. IEEE Transactions on Speech and Audio Processing, 13(5), 984–992.

Delcroix, M., Nakatani, T., Watanabe, S. (2009). Static and dynamic variance compensation for recognition of reverberant speech with dereverberation preprocessing. IEEE Transactions on Audio, Speech and Language Processing, 17(2), 324–334.

Tamura, M., Masuko, T., Tokuda, K., Kobayashi, T. (2001). Adaptation of pitch and spectrum for HMM-based speech synthesis using MLLR. In Proceedings of ICASSP (Vol. 2, pp. 806–808).

Stolcke, A., Ferrer, L., Kajarekar, S., Shriberg, E., Venkataraman, A. (2005). MLLR transforms as features in speaker recognition. In Proceedings of Interspeech (pp. 2425–2428).

Sanderson, C., Bengio, S., Gao, Y. (2006). On transforming statistical models for non-frontal face verification. Pattern Recognition, 39(2), 288–302.

Maekawa, T., & Watanabe, S. (2011). Unsupervised activity recognition with user’s physical characteristics data. In Proceedings of international symposium on wearable computers (ISWC 2011), (pp. 89–96).

Young, S., Evermann, G., Gales, M., Hain, T., Kershaw, D., Liu, X., Moore, G., Odell, J., Ollason, D., Povey, D. (2006). The HTK book (for HTK version 3.4). Cambridge: Cambridge University Engineering Department.

Chesta, C., Siohan, O., Lee, C.-H. (1999). Maximum a posteriori linear regression for hidden Markov model adaptation. In Proceedings of Eurospeech (Vol. 1, pp. 211–214).

Chien, J.-T. (2002). Quasi-Bayes linear regression for sequential learning of hidden Markov models. IEEE Transactions on Speech and Audio Processing, 10(5), 268–278.

Shinoda, K., & Lee, C.-H. (2001). A structural Bayes approach to speaker adaptation. IEEE Transactions on Speech and Audio Processing, 9, 276–287.

Siohan, O., Myrvoll, T.A., Lee, C.H. (2002). Structural maximum a posteriori linear regression for fast HMM adaptation. Computer Speech & Language, 16(1), 5–24.

MacKay, D.J.C. (1997). Ensemble learning for hidden Markov models. Technical report, Cavendish Laboratory: University of Cambridge.

Neal, R.M., & Hinton, G.E. (1998). A view of the EM algorithm that justifies incremental, sparse, and other variants. Learning in Graphical Models, 355–368.

Jordan, M.I., Ghahramani, Z., Jaakkola, T.S., Saul, L.K. (1999). An introduction to variational methods for graphical models. Machine Learning, 37(2), 183–233.

Attias, H. (1999). Inferring parameters and structure of latent variable models by variational Bayes. In Proceedings of uncertainty in artificial intelligence (UAI) (Vol. 15, pp. 21–30).

Ueda, N., & Ghahramani, Z. (2002). Bayesian model search for mixture models based on optimizing variational bounds. Neural Networks, 15, 1223–1241.

Watanabe, S., Minami, Y., Nakamura, A., Ueda, N. (2002). Application of variational Bayesian approach to speech recognition. NIPS 2002: MIT Press.

Valente, F., & Wellekens, C. (2003). Variational Bayesian GMM for speech recognition. In Proceedings of Eurospeech (pp. 441–444).

Watanabe, S., Minami, Y., Nakamura, A., Ueda, N. (2004). Variational bayesian estimation and clustering for speech recognition. IEEE Transactions on Speech and Audio Processing, 12, 365–381.

Somervuo, P. (2004). Comparison of ML, MAP, and VB based acoustic models in large vocabulary speech recognition. In Proceedings of ICSLP (Vol. 1, pp. 830–833).

Jitsuhiro, T., & Nakamura, S. (2004). Automatic generation of non-uniform HMM structures based on variational Bayesian approach. In Proceedings of ICASSP (Vol. 1, pp. 805–808).

Hashimoto, K., Zen, H., Nankaku, Y., Lee, A., Tokuda, K. (2008). Bayesian context clustering using cross valid prior distribution for HMM-based speech recognition. In Proceedings of Interspeech.

Ogawa, A., & Takahashi, S. (2008). Weighted distance measures for efficient reduction of Gaussian mixture components in HMM-based acoustic model. In Proceedings of ICASSP (pp. 4173–4176).

Ding, N., & Ou, Z. (2010). Variational nonparametric Bayesian hidden Markov model. In Proceedings of ICASSP (pp. 2098–2101).

Watanabe, S., & Nakamura, A. (2004). Acoustic model adaptation based on coarse/fine training of transfer vectors and its application to a speaker adaptation task. In Proceedings of ICSLP (pp. 2933–2936).

Yu, K., & Gales, M.J.F. (2006). Incremental adaptation using bayesian inference. In Proceedings of ICASSP (Vol. 1, pp. 217–220).

Winn, J., & Bishop, C.M. (2006). Variational message passing. Journal of Machine Learning Research, 6(1), 661.

Gales, M.J.F., & Woodland, P.C. (1996). Variance compensation within the MLLR framework, Technical Report 242: Cambridge University Engineering Department.

Dawid, A.P. (1981). Some matrix-variate distribution theory: notational considerations and a Bayesian application. Biometrika, 68(1), 265–274.

Watanabe, S., Nakamura, A., Juang, B.H. (2011). Bayesian linear regression for hidden Markov model based on optimizing variational bounds. In Proceedings of MLSP (pp. 1–6).

Odell, J.J. (1995). The use of context in large vocabulary speech recognition. PhD thesis: Cambridge University.

Maekawa, K., Koiso, H., Furui, S., Isahara, H. (2000). Spontaneous speech corpus of Japanese. In Proceedings of LREC (Vol. 2, pp. 947–952).

Nakamura, A., Oba, T., Watanabe, S., Ishizuka, K., Fujimoto, M., Hori, T., McDermott, E., Minami, Y. (2006). Evaluation of the SOLON speech recognition system: 2006 benchmark using the corpus of spontaneous japanese. IPSJ SIG Notes, 2006(136), 251–256. (in Japanese).

McDermott, E., Hazen, T.J., Le Roux, J., Nakamura, A., Katagiri, S. (2007). Discriminative training for large-vocabulary speech recognition using minimum classification error. IEEE Transactions on Audio, Speech, and Language Processing, 15(1), 203–223.

Hori, T. (2004). NTT speech recognizer with outlook on the next generation: SOLON. In Proceedings of NTT workshop on communication scene analysis (Vol. 1, p. SP-6.)

Hahm, S.J., Ogawa, A., Fujimoto, M., Hori, T., Nakamura, A. (2012). Speaker adaptation using variational Bayesian linear regression in normalized feature space. In Proceedings of Interspeech (pp. 803-806).

Hahm, S.J., Ogawa, A., Fujimoto, M., Hori, T., Nakamura, A. (2013). Feature space variational Bayesian linear regression and its combination with model space VBLR. In Proceedings of ICASSP (pp. 7898-7902).

Blei, D.M., & Jordan, M.I. (2006). Variational inference for Dirichlet process mixtures. Bayesian Analysis, 1(1), 121–144.

Jebara, T. (2004). Machine learning: discriminative and generative (Vol. 755). Springer.

Kubo, Y., Watanabe, S., Nakamura, A., Kobayashi, T. (2010). A regularized discriminative training method of acoustic models derived by minimum relative entropy discrimination. In Proceedings of Interspeech (pp. 2954–2957).

Additional information

The work was mostly done while Shinji Watanabe was working at NTT Communication Science Laboratories.

Appendix: Derivation of Posterior Distribution of Latent Variables

This section derives the posterior distribution of latent variables \(\tilde {q}(\mathbf {V}_{i})\), introduced in Section 3.5, based on the VB framework. To obtain VB posteriors of latent variables, we consider the following integral (this is the same equation as Eq. 43).

In this derivation, we omit the indexes i, k, and t for simplicity. By substituting the concrete form (Eq. 4) of the multivariate Gaussian distribution into Eq. 56, the equation is represented as:

where we use

Now, we focus on the quadratic form (∗1) in the second line of Eq. 57. By considering Σ = C(C)′ in Eq. 6, (∗1) is rewritten as follows:

where we use the cyclic and transpose properties of the trace, as follows:

We also define (D+1) × (D+1) matrix Γ, D × (D+1) matrix Y, and D × D matrix U in Eq. 59 as follows:

The integral of Eq. 59 over W can be decomposed into the following three terms:

where we use the following property:

and use Eq. 58 in the third term of the second line in Eq. 63.

We focus on the integrals (∗2) and (∗3). Since \(\tilde {q}(\mathbf {W})\) is a scalar value, (∗3) is rewritten as follows:

Here, we use the following matrix properties:

Thus, the integral is finally solved as

where we use

Similarly, we also rewrite (∗2) in Eq. 63 based on Eqs. 66 and 67, as follows:

Thus, the integral is finally solved as

where we use

Thus, we solve all the integrals in Eq. 63.

Finally, we substitute the integral results of (∗2) and (∗3) (i.e., Eqs. 71 and 68) into Eq. 63, and rewrite Eq. 63 based on the concrete forms of Γ, Y, and U defined in Eq. 62 as follows:

Then, by using the cyclic property in Eq. 60 and Σ = C(C)′ in Eq. 6, we can further rewrite Eq. 63 as follows:

Thus, we obtain a quadratic form with respect to o, which has the form of a multivariate Gaussian distribution. By recovering the omitted indexes i, k, and t, and substituting the integral result in Eq. 74 into Eq. 57, we finally solve Eq. 43 as:

Here, we use the concrete form of the multivariate Gaussian distribution in Eq. 4.

Cite this article

Watanabe, S., Nakamura, A. & Juang, B.-H. Structural Bayesian Linear Regression for Hidden Markov Models. J Sign Process Syst 74, 341–358 (2014). https://doi.org/10.1007/s11265-013-0785-8
