Abstract

Unsupervised person re-identification has achieved great success through the self-improvement of individual neural networks. However, limited by the lack of diversity of discriminant information, a single network has difficulty learning sufficient discrimination ability by itself under unsupervised conditions. To address this limit, we develop a population-based evolutionary gaming (PEG) framework in which a population of diverse neural networks are trained concurrently through selection, reproduction, mutation, and population mutual learning iteratively. Specifically, the selection of networks to preserve is modeled as a cooperative game and solved by the best-response dynamics, then the reproduction and mutation are implemented by cloning and fluctuating hyper-parameters of networks to learn more diversity, and population mutual learning improves the discrimination of networks by knowledge distillation from each other within the population. In addition, we propose a cross-reference scatter (CRS) to approximately evaluate re-ID models without labeled samples and adopt it as the criterion of network selection in PEG. CRS measures a model’s performance by indirectly estimating the accuracy of its predicted pseudo-labels according to the cohesion and separation of the feature space. Extensive experiments demonstrate that (1) CRS approximately measures the performance of models without labeled samples; (2) and PEG produces new state-of-the-art accuracy for person re-identification, indicating the great potential of population-based network cooperative training for unsupervised learning. The code is released on github.com/YunpengZhai/PEG.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Person re-identification (re-ID) aims to match persons in an image gallery collected from non-overlapping camera networks, which has attracted increasing interest thanks to its wide applications in security and surveillance. Though supervised re-ID methods (Yang et al., 2020; Zheng et al., 2016) have achieved very decent results, they are largely dependent on sufficient data with expensive manual annotation, which also require substantial personal identity information and entail privacy issues. By contrast, unsupervised re-ID not only reduces the cost of labeling but also protects personal privacy without checking images manually. Commonly, unsupervised re-ID can be divided into two categories: unsupervised domain adaptation (UDA) (Zhai et al., 2020; Zhong et al., 2020) and fully unsupervised re-ID (FU) (Chen et al., 2021; Lin et al., 2019) depending on whether using extra labeled data. In this study, we will mainly focus on the fully unsupervised setting which learns directly from unlabeled images and allows for more scalability in real-world deployments.

Feature distribution of the same samples with different methods where each color denotes a person identity. Single model training (b) uses the self-learning mechanism only to enhance the discrimination ability it already has before training (a) and still suffers from inaccurate pseudo-labels. However, multi-model training (c) explores and exploits the complementary information among different models (marked by corresponding colored boxes) and achieves more discrimination

To address the challenges of unsupervised re-ID, recent efforts concentrate on training individual neural networks by means of a self-improvement strategy (Song et al., 2018; Ge et al., 2020). They attempt to learn better representations based on self-predicted pseudo-labels via clustering algorithms (Caron et al., 2018) or graph neural networks (Ye et al., 2017). However, a single model can use such a self-learning mechanism only to enhance the discrimination ability it already has and cannot tackle the incorrectly predicted pseudo-labels, which prevents it from maximizing its discrimination. Due to the lack of diversity of single models, incorrect pseudo-labels are likely to remain the same after unsupervised training such as the false positive samples where images of different persons are clustered into the same group or the false negative samples where the images of the same person are clustered into different groups, as shown in Fig. 1. Importantly, since models learn diverse discrimination with different architectures, the incorrect pseudo-labels predicted by a model may be predicted correctly by another model, marked by boxes in Fig. 1b. In this paper, we attempt to address unsupervised re-ID by multiple model training, in which the complementary information of different models can be integrated and utilized effectively to explore the various latent knowledge contained in unlabeled data (the quantitative analysis is shown in Sect. 4.4.1).

However, multiple model training still faces two challenging issues: (1) How to learn diverse discrimination with multiple different models? (2) How to select a set of better models from many diverse models for training? To tackle these issues, we propose a population-based evolutionary gaming (PEG), which selects and trains discriminative models by exploration and exploitation of their diversity. PEG trains a population of models concurrently by iterative selection, reproduction, mutation, and population mutual learning of neural networks, as shown in Fig. 2. Specifically, selection adapts the whole population to the unlabeled data by selecting and preserving the optimal subset of networks with complementary discrimination ability while abandoning other networks out of the subset. This combinatorial optimization of networks in selection is modeled as a multi-agent cooperative game and solved by the best response dynamics, in which each agent attempts to learn the best response to the other agents’ action and thus leads to Nash equilibrium. Then, reproduction and mutation are performed on the selected population to increase its diversity by making multiple copies of each network and applying a stochastic disturbance to their hyper-parameters. Selection and reproduction jointly maintain the size of the population. Afterward, population mutual learning is conducted among networks to assemble and further explore the discrimination capacity via knowledge distillation within populations. Each network learns representations from both population-shared pseudo-labels and soft-labels predicted by other individual networks. Utilizing periodically performing selection, reproduction and mutation, population mutual learning, the evolutionary gaming process enables favorable traits and knowledge of neural networks to be transmitted through successive generations.

In the evolution gaming, a core issue is to define the utility function of the game, that is, the criterion of network selection in the evolution. However, the evaluation of CNN models without labeled datasets has not been well studied. Here, we propose cross-reference scatter (CRS), which can approximately evaluate the quality of networks using unlabeled samples. Generally, the pseudo-labels predicted by better networks are more accurate; however, their accuracy cannot be directly evaluated when the ground truth is unavailable. Moreover, models trained by more accurate pseudo-labels tend to achieve larger intra-cluster cohesion and inter-cluster separation in the feature space because incorrect labels will enforce models to separate samples of the same class or aggregate samples of different classes. Motivated by this phenomenon, we indirectly evaluate a network according to the feature cohesion and separation of a reference model that is trained by pseudo-labels of the evaluated network. Hence, the CRS is defined by the ratio of the inter-cluster and intra-cluster variance of features to measure both separation and cohesion. We demonstrate that the CRS approximately reflects the discrimination capacity of models without ground truth data and thus promotes the evolution gaming to learn better representations.

A preliminary version of this work has been partially published (Zhai et al., 2020), which has demonstrated the effectiveness of mutual learning among multiple networks in unsupervised conditions. Based on that version, this manuscript has made great improvements, including: (1) We propose a novel population-based evolutionary gaming (PEG) framework (Sect. 3.1). The previous algorithm works passively only on given networks, and cannot adaptively select the most suitable models from the model base. Based on the mutual learning, PEG additionally contains an iterative selection of networks via a multi-agent cooperative game preventing the weak networks to distract the overall discrimination capability (Sect. 3.1.1). (2) We propose a new cross-reference scatter (CRS) to approximately measure re-ID models without labeled data. To evaluate the model discrimination, the previous version introduced inter-/intra-cluster scatter to roughly modulate the weights of models during mutual learning. However, it cannot be considered as the utility function of the cooperative game in PEG due to the lack of capability to accurately evaluate models. This paper improves inter-/intra-cluster scatter to cross-reference scatter by adding a cross-reference evaluation (CR) scheme (Sect. 3.1.1). (3) More qualitative and quantitative experiments are conducted to evaluate the effectiveness of the method, including but not limited to the validation and analysis of CRS, the cooperative game, and PEG.

In summary, our contribution is as follow:

-

It proposes a novel population-based evolutionary gaming framework for unsupervised person re-ID which trains a diverse population of neural networks by iterative selection, reproduction, mutation and mutual learning.

-

It introduces a multi-agent cooperative game for the selection of networks in the PEG, which aims to find and preserve an optimal subset of the population on unlabeled data.

-

It investigates the evaluation of re-ID models using unlabeled data and proposes a cross-reference scatter which approximately measures a model’s discrimination capability by indirectly estimating its predicted pseudo-labels according to the cohesion and separation of feature space.

-

Experiments show that PEG outperforms state-of-the-art methods on large-scale datasets, indicating the great potential of population-based multiple model training.

2 Related Works

2.1 Unsupervised Person Re-ID

Unsupervised person re-ID can be categorized into Unsupervised Domain Adaptation (UDA) and Fully Unsupervised Re-ID (FU). UDA methods try to train a re-ID model by unlabeled target data together with labeled source data, while FU methods attempt to train models with only unlabeled data after pre-training. Despite the different data conditions, most UDA and FU methods adopt similar learning strategies which can be summarized into two categories. A line of works are mainly based on alignment to reduce distribution shift between cameras or domains in pixel level, such as SPGAN (Deng et al., 2018), CamStyle (Zhong et al., 2019b), HHL (Zhong et al., 2018), ECN (Zhong et al., 2019), ATNet (Liu et al., 2019), PDA-Net (Li et al., 2019), DG-Net++ (Zou et al., 2020) and GCL (Chen et al., 2021), or feature level, such as TJ-AIDL (Wang et al., 2018), DAAM (Huang et al., 2019), UCDA-CCE (Qi et al., 2019) IICS (Xuan & Zhang, 2021) and CAP (Wang et al., 2020). This line of methods sufficiently utilize the reliable information of camera or domain styles but ignore the latent relationship among unlabeled samples, which hinders them from better performance. Another line of works are based on pseudo label discovery, which rely on the iteration of pseudo-label mining and model fine-tuning (Fan et al., 2018; Song et al., 2018; Zhang et al., 2019; Jin et al., 2020; Zhao et al., 2020; Zheng et al., 2021), such as BUC (Lin et al., 2019), SSG (Fu et al., 2019; Zhai et al., 2020), HCT (Zeng et al., 2020) and SpCL (Ge et al., 2020). Recent works mainly focus on label generation, label refinery, the assistance of extra information, and optimization of representation. BUC (Lin et al., 2019) proposed a bottom-up clustering approach to generate pseudo labels. To reduce pseudo label noise, DCML (Chen et al., 2020) selected credible training samples and MMT (Ge et al., 2020) proposed a mutual learning scheme for better pseudo labels. JVTC (Li & Zhang, 2020) and CycAs (Wang et al., 2020) explore temporal information to refine visual similarity. Contrastive learning with feature memory bank has been widely used in many works to learn more robust representation (Zheng et al., 2021; Chen et al., 2021). SpCL (Ge et al., 2020) progressively generated more reliable clusters for the unified contrastive loss. Cluster Contrast (Dai et al., 2021) proposed to store feature vectors and compute contrast loss in the cluster level. Although great success has been made, this line of methods usually leverage a single model to learn the knowledge that it already has, making it hard to learn sufficient capability due to the lack of diverse discrimination. To alleviate this problem, we propose PEG based on multi-model training where diversity of discrimination can be explored and exploited by the evolution of networks.

2.2 Multiple Model Ensemble

There is a considerable number of previous works on ensembles with neural networks. Explicit ensemble methods often train a series of base-level networks and average the predictions across them as the final result, which have low efficiency during both training and testing (Hansen & Salamon, 1990; Perrone & Cooper, 1992; Krogh & Vedelsby, 1994; Dietterich, 2000; Huang et al., 2017; Lakshminarayanan et al., 2017). Recently, implicit ensemble methods are explored to tackle this problem. A typical approach (Srivastava et al., 2014; Wan et al., 2013; Huang et al., 2016; Singh et al., 2016) generally create a series of networks with shared weights during training and then implicitly ensemble them at test time. Another approach (Shen et al., 2019) focuses on label refinery by distilling and transferring knowledge from a variety of trained networks to a single network for higher discrimination capability. However, these supervised methods cannot be directly used on unsupervised re-ID tasks, especially when the training set and the testing set share non-overlapping label space. On the other hand, existing methods accomplish the ensemble on all base-level networks while they ignore the problem that a very weak base-level network could drag down the overall performance when included. Commonly, “All” is not the “Best”. In this work, we propose a cooperative game in the selection phase of the framework to find and preserve the optimal combination of base-level networks using the unlabeled data and obtain progressive ensemble by an iterative population evolutionary gaming under unsupervised conditions.

2.3 Algorithmic Game Theory

Machine learning methods with multi-agent game are proposed to address various tasks, such as image generation (Goodfellow et al., 2014), attacks and defenses for deep learning (Yuan et al., 2019), playing computer games (Vinyals et al., 2019; Peng et al., 2020), etc. SVM can be considered as a game between two agents where one agent challenges the other to find the best hyper-plane after providing the most difficult points for classification. Generative adversarial networks (GANs) (Goodfellow et al., 2014) train two networks, the discriminator and the generator, against each other in order to generate images that can pass for real data. These methods are designed for non-cooperative games where agents have contrary rewards. However, in this work, the selection of networks is modeled as a multi-agent cooperative game, where rewards are global and shared by all agents. Although methods with cooperative games have been explored for reinforcement learning (Peng et al., 2020), they can not be used for such a computer vision task. Our approach consider the Best-response dynamics in cooperative game theory to solve a Nash equilibrium of model selection strategy.

2.4 Unsupervised Evaluation Metrics of Models

Metrics used in person re-ID always depend on samples with ground truth, such as mean Average Precision (mAP) and Cumulative Match Characteristic (CMC) curve, which are calculated between model prediction and the corresponding ground truth labels. However, these supervised metrics are not available during unsupervised learning when labels of data are unknown, therefore, they cannot be used as the criterion of the model selection in our PEG framework. On the other hand, several unsupervised evaluation metrics which require no data label have been designed to measure the performance of clustering algorithms as internal evaluation metrics (Davies & Bouldin, 1979; Baker & Hubert, 1975; Hubert & Levin, 1976; Maulik & Bandyopadhyay, 2002; Halkidi et al., 2002). For example, the silhouette coefficient (Rousseeuw, 1987) estimates the average distance between each point in one cluster and points in the nearest neighboring cluster. The Dunn index (Dunn, 1973) calculates the ratio of the minimum of inter-cluster distance to the maximum of intra-cluster distance between samples. Nevertheless, these cluster validations cannot be directly used for the evaluation of re-ID models, for example, by the quality of clustering with their extracted features under the same clustering algorithm. That’s because the distribution of feature clusters cannot measure the performance of models, especially in unsupervised settings. For instance, the metrics may estimate well clustering of features even when the model is poor but only is trained to overfit on its inaccurate labels. In this paper, we propose a cross-reference scatter which approximately measures a model’s discrimination capability by indirectly estimating its predicted pseudo-labels. It utilizes the pseudo-labels to train a reference network for a few iterations and then observes the cohesion and separation of its feature space to estimate the discrimination of the evaluated model. This method mines the latent visual relationships between image samples and so can approximately estimate models’ discrimination on unlabeled data.

2.5 Population-Based Evolutionary Training

Population-based evolution has been widely studied to solve real-valued optimization problems. For distance metric learning, a related task of re-ID, EDML (Fukui et al., 2013) and its variants (Kalintha et al., 2019; Ali et al., 2020) were proposed to optimize a linear or non-linear transformation using differential evolution. However, these approaches cannot address the training of deep neural networks in re-ID due to the large scale of learnable parameters. Our approach is inspired by and built upon another line of Population Based Training (PBT) (Jaderberg et al., 2017), which is originally proposed for optimization of hyperparameters of networks. PBT trains a population of networks and performs periodically a process of exploiting and exploring, leading to automatic learning of the best configurations. It has been proved effective for a suite of challenging problems, including Atari and StarCraft II of reinforcement learning (Vinyals et al., 2019; Jaderberg et al., 2019), training Generative Adversarial Network (GAN) (Jaderberg et al., 2017) and data augmentation (Ho et al., 2019). However, such a population-based training of networks has not been explored in unsupervised conditions, in which the criterion of network selection is difficult to determine. On the other hand, existing PBT approaches follow the principle of best individual selection, while our method selects and preserves optimal groups of networks that are more complementary. We additionally incorporate mutual learning within the population into the framework, leading to superior performance on the unsupervised re-ID.

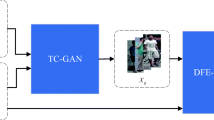

Population-based evolutionary gaming framework. PEG iteratively performs selection, reproduction and mutation and population mutual learning to learn diverse and discriminative models under unsupervised conditions. In every generation, (1) selection preserves the optimal combination of models through a cooperative game with a set of selector agents to maximize the utility function (CRS). (2) Reproduction and mutation clone models and fluctuate their hyper-parameters to explore more diversity. (3) Population mutual learning trains models with mutated hyper-parameers by knowledge distillation from each other to enhance and assemble their discrimination

3 Methodology

3.1 Population-Based Evolutionary Gaming

Due to the lack of diversity of individual networks, sufficient discrimination for unsupervised person re-ID is difficult to achieve. In contrast to previous works that use a single network for self-training, we propose a PEG that concurrently trains a diverse population of neural networks through an evolutionary game. In our formulation, the population \(\mathcal {P}\) contains K networks, each of which is denoted as \(\mathcal {M}(\theta , \phi )\). \(\theta \) is the learnable parameters, and \(\phi \) is its hyper-parameters including the learning rate and loss ratios. The proposed training algorithm consists of three iterative phases, namely, selection to preserve adaptive networks, reproduction and mutation to learn more diversity, and population mutual learning to assemble knowledge, as illustrated in Fig. 2. The procedure of PEG is also described in Algorithm 1.

3.1.1 Selection

Since poor models may drag down the performance in multiple model training, we first propose a selection phase to preserve better models in PEG. Given a population \(\mathcal {P}\) of K neural network models \(\{\mathcal {M}^1,\ldots ,\mathcal {M}^K\}\), selection aims to find an optimal subset of the population that is more adaptive to the given data, as shown in Fig. 2. Then, networks of the subset are preserved for later training, while other networks are abandoned to reduce the population size. The selection scheme is considered as a multi-agent cooperative game among L selector agents characterized by \((\mathcal {A}_1,\mathcal {A}_2,\ldots ,\mathcal {A}_L, u)\), where \(\mathcal {A}_l\) is the action space of agent l; and \(u:\mathbf {A}\rightarrow \mathbb {R}\) denotes the utility function of the joint action \(\mathbf {A} \in \mathcal {A}_1 \times \mathcal {A}_2 \times \ldots \times \mathcal {A}_L\). The action of each agent \(\mathbf {a}_l \in \mathcal {A}_l\) is to select one neural network from the population \(\mathcal {P}\), \(\mathcal {A}_l = \{\mathcal {M}_1,\ldots ,\mathcal {M}_K\}\). The number of agents is restricted due to the limitation of computational resources. In the cooperative game, agents pursue the same goal to maximize their team utility u. To maximize the discrimination and complementarity of the preserved networks, we define the utility function u by the performance of the ensemble model. However, a model’s performance is difficult to estimate without labeled testing data. To address this problem, we design cross-reference scatter \(J_{cr}\) to evaluate the ensemble model and consider it the formula of the utility function, \(u(\mathbf {A})=J_{cr}(\vartheta (\mathbf {A}))\), where \(\vartheta \) denotes the ensemble model produced by the networks currently selected by the agents. The detailed description of the cross-reference scatter will be provided in Section 3.1.1. Since there are approximately \(K^L\) possible action combinations, a global optimal solution is impossible to derive by enumerating all the possibilities. Therefore, we turn to obtain a Nash equilibrium solution \({\tilde{\mathbf {A}}}=\{{\tilde{\mathbf {a}}}_l\}\), where each agent attempts to learn the best response to the other agents’ actions:

where \(\mathbf {a}'_l\) is any unilateral deviation, \({\tilde{\mathbf {A}}}_{-l}=\{{\tilde{\mathbf {a}}}_{l'}\}_{l'\ne l}\) and \(-l\) represents all agents except \(\mathbf {a}_{l}\). We solve Eq. 1 via best-response dynamics. Each agent acts in a circular manner until it falls into a Nash equilibrium, where the action of each agent is the best response to the other agents. Below, we provide the detailed procedure of the cooperative selection game.

-

(1)

Initialization of agent actions: randomly initialize \(\mathbf {a}_l \in \mathcal {A}_l\) for \(l=1,\ldots ,L\).

-

(2)

For agent l, solve the optimal action of agent \(\mathbf {a}_l\) in response to the actions of the other \(L-1\) agents. The objective for optimization of \(\mathbf {a}_l\) can be formulated as,

$$\begin{aligned} \mathbf {a}_l^*= \mathop {\arg \max }_{\hat{\mathbf {a}}_l\in \mathcal {A}_l} u(\hat{\mathbf {a}}_l,\mathbf {a}_{-l}), \end{aligned}$$(2)where \(\mathbf {a}_{-l}\) means all agents except \(\mathbf {a}_{l}\).

-

(3)

Then update the joint action to \((\mathbf {a}_l^*, \mathbf {a}_{-l})\), as \(\mathbf {a}_l^*\) is a best response to \(\mathbf {a}_{-l}\).

$$\begin{aligned} \mathbf {A} \leftarrow (\mathbf {a}_l^*, \mathbf {a}_{-l}). \end{aligned}$$(3) -

(4)

Repeat steps 2 to 3 for agent \(l+1\).

-

(5)

If the joint action \(\mathbf {A}\) has not changed in the last \(L-1\) optimization rounds, the utility falls into Nash equilibrium, where every agent implements the best response to all other agents. In this case, we stop the optimization process, preserve the selected networks for the next generation, and abandon the other networks.

Cross-reference Scatter

A core issue is to estimate a model’s performance using unlabeled data in the selection phase of the proposed evolutionary game; however, such a measurement has not been explored. In this study, we propose a cross-reference scatter (CRS) for the approximate evaluation of re-ID models using unlabeled samples. Generally, the pseudo-labels predicted by better networks are more accurate, but the specific accuracy of the labels cannot be directly evaluated without the ground truth. However, models trained by more accurate pseudo-labels tend to achieve larger intra-cluster cohesion and inter-cluster separation in the feature space because incorrect labels will enforce models to separate samples of the same class or aggregate samples of different classes, which is difficult to accomplish. Therefore, it is reasonable to indirectly evaluate a network according to the feature cohesion and separation of a reference model that is trained by pseudo-labels which are predicted with the evaluated model.

First, we introduce an inter-/intra-cluster scatter (ICS) to estimate the separation and cohesion of clusters in the feature space. Although existing metrics such as DBI (Davies & Bouldin, 1979), SC (Rousseeuw, 1987) have been studied to estimate clustering, they usually pay more attention to the hard edge samples of clusters while ignoring the overall distribution, and thus are not applicable to measure re-ID models. Inspired by the objective of linear discriminant analysis, that is, to maximize the ratio of the between-class variance and the within-class variance, the inter-/intra-cluster scatter is defined as the ratio of the inter-cluster variance and intra-cluster variance in the clustered feature space. Given the set of images represented by feature vectors \(\mathbf {f}(X|\varTheta )\), where \(\varTheta \) denotes the parameters of the feature extractor network, we cluster all samples into M groups as \(\mathbb {C}\). We measure the cohesion of each cluster by the variance of features assigned to it. The intra-cluster scatter of cluster \(\mathbb {C}_i\) is defined as

where \(\mu _i=\sum _{x \in \mathbb {C}_i} \mathbf {f}(x|\varTheta )/n_i\) is the centroid of cluster \(\mathbb {C}_i\) (with \(n_i\) samples). Then, the intra-cluster scatter of all clusters is computed as

To measure the separation of feature clusters, the inter-cluster scatter is defined as the variance of cluster centroids

where \(\mu =\sum _{n=1}^{N} {\textbf {f}}(x_n|\varTheta )/N\) is the center of the entire dataset. Considering both the separation and cohesion of feature clusters, the inter-/intra-cluster scatter \(J(X|\varTheta )\) is defined as the ratio of the inter-cluster scatter and intra-cluster scatter

\(J(X|\varTheta )\) increases when the inter-cluster scatter is larger and the intra-cluster scatter is smaller, which entails larger separation and cohesion within feature clusters.

Utilizing inter-/intra-cluster scatter (ICS), we attempt to evaluate a model by indirectly estimating its predicted pseudo-labels in a cross-reference (CR) manner. Given a network model with parameter \(\varTheta \) for evaluation, we first implement the model to extract the convolutional features of all samples \(\mathbf {f}(X|\varTheta )\). Then, minibatch k-means clustering is performed on \(\mathbf {f}(X|\varTheta )\) to classify all samples into M different clusters. After clustering, the produced cluster IDs are used as pseudo-labels \(\widetilde{Y}(\varTheta )\) for samples X. To estimate the accuracy of the predicted pseudo-labels \(\widetilde{Y}(\varTheta )\), we adopt them as supervision to train a reference model with parameters \(\theta ^{ref}\) by optimizing the cross-entropy loss with label smoothing and the softmax triplet loss for a certain number of iterations, and then measure the separation and cohesion of the reference model by computing the inter-/intra-cluster scatter \(J(X|\theta ^{ref})\) as cross reference scatter (CRS) \(J_{cr}(\varTheta )\). The value of CRS is then used for model evaluation, where a larger CRS indicates better discrimination capability of the evaluated model \(\varTheta \). The evaluation scheme is illustrated in Fig. 3 and the detailed process is shown in Algorithm 2.

Importantly, we use the k-means clustering because the fair comparison of CRS among models requires the same cluster number during clustering. Specifically, since CRS is defined by the ratio of intra-cluster variance and inter-cluster variance, it is relative to the cluster numbers. For example, a larger cluster number may lead to a larger CRS value due to the smaller intra-cluster variances. And the cluster numbers by other clustering algorithms, i.e. DBSCAN, with different evaluated feature models are likely to be different, making it unfair to compare their CRS for model selection.

For fast and fair evaluation, we adopt a slight network, OSNet, with the same initial parameters as the reference model to evaluate different models. The number of training iterations of it is set to a small value from 500 to 1000 according to the number of samples.

3.1.2 Reproduction and Mutation

Reproduction and mutation provide more diversity within the population by reproducing networks and mutating their hyper-parameters, including the learning rate and loss ratios after selection. In the reproduction and mutation phase, each network reproduces multiple descendants, one of which preserves the original hyper-parameters while the others apply a stochastic disturbance to their hyper-parameters to attempt to learn different information and increase the diversity of the population. Specifically, the mutated hyper-parameters \(\phi '\) are sampled from a uniform distribution \(\mathbf {U}((1-r)\phi , (1+r)\phi )\) that fluctuates within r of the original value. The steps 5-11 in Algorithm 1 summarize the process of reproduction and mutation. Note that the mutation does not immediately change the weight parameters of neural networks. Changes occur to them when networks are trained by their mutated hyper-parameters in mutual learning.

3.1.3 Population Mutual Learning

After mutation, population mutual learning is performed among networks in the population \(\mathcal {P}\) to access and assemble diverse discrimination capability using unlabeled data in an iteratively collaborative way, as shown in Fig. 2. Each network accomplishes learning from the whole population by means of its own hyper-parameters acquired from mutation. The learning scheme consists of a clustering-based pseudo-label prediction procedure and a mutual feature learning procedure. In each iterative epoch, pseudo-labels are first predicted for all samples via clustering and then utilized to fine-tune the networks of the population. In this phase, networks learn representations of images in two ways: from the shared pseudo-labels predicted by the whole population via clustering and from the output of other networks as soft labels via knowledge distillation. The procedure of this population mutual learning is described in Algorithm 3.

Pseudo-label prediction. Pseudo-labels are predicted at the beginning of each iterative epoch. In order to predict reliable pseudo-labels, the framework utilizes all networks in the population \(\{\mathcal {M}^1,\ldots ,\mathcal {M}^K\}\) jointly as a combinatorial model \(\vartheta (\mathcal {P})\) to extract features for sample clustering. The clustering-based pseudo-label prediction procedure consists of three steps in total: (1) First, ensemble features of unlabeled samples \(\{\mathbf {X}\}\) are obtained by concatenating features that are individually extracted by all networks, \(\mathbf {f}(\mathbf {X}|\vartheta (\mathcal {P})) = [ \mathbf {f}(\mathbf {X}|\theta ^{1});\ldots ;\mathbf {f}(\mathbf {X}|\theta ^{k})]\); (2) Then, DBSCAN (Ester et al., 1996) clustering is performed on \(\mathbf {f}(\mathbf {X}|\vartheta (\mathcal {P}))\)to classify all unlabeled samples into M different clusters. (3) The produced cluster IDs are used as pseudo-labels \(\widetilde{Y}\) for the training samples \(\mathbf {X}\). The steps 2 and 3 in Algorithm 3 summarize this clustering process.

Different from CRS, we use DBSCAN here for model learning to generate more accurate pseudo-labels, since DBSCAN has been proven effective and efficient for a lot of clustering-based unsupervised person re-identification (Ge et al., 2020; Chen et al., 2021). Compared with the k-means cluster algorithm, DBSCAN mines sample relations more accurately according to their density without setting the number of clusters and then helps learn more discriminative models.

Mutual feature learning Utilizing the predicted pseudo-labels, the framework aims to organize networks within the population to learn from each other and enhance themselves in a mutual learning manner, as shown in Fig. 2. In each training iteration, the same batch of images with different random augmentations is first fed to all the networks in the population parameterized by \(\{\theta ^{k}\}\) to predict the classification confidence predictions \(\{\mathbf {p}(x_{n}|\theta ^{k})\}\) and feature representations \(\{\mathbf {f}(x_{n}|\theta ^{k})\}\). The classification confidence predictions are computed by a linear transformation of the feature representations followed by a softmax function. To transfer knowledge from one network to others, the outputs of each network serve as soft labels for training other networks. However, directly using the current predictions as soft labels to train each model decreases the independence of the model outputs, which might result in error amplification. To avoid this issue, the temporally averaged model (Tarvainen & Valpola, 2017) of each network, which preserves more original knowledge, is used to generate reliable soft pseudo-labels for supervision. The parameters of the temporally averaged model of network \(\theta ^{k}\) at current iteration t are denoted as \(\varTheta _t^{k}\), which is updated as

where \(\alpha \in [0,1]\) is the scale factor, and the initial temporal average parameters are \({\varTheta }_{0}^{k} = \theta ^{k}\). For each network \(\mathcal {M}^{k}\), three loss functions are computed as optimization objectives: mutual identity loss, mutual triplet loss and voting loss. The mutual identity loss (Zhang et al., 2018) of models learned by a certain network \(\mathcal {M}^{e}\) is defined as the cross entropy between the ID prediction of the student network \({\mathcal {M}^k}\) and the teacher network \(\mathcal {M}^{e}\)

The mutual triplet loss (Ge et al., 2020) of models learned by a certain network \(\mathcal {M}^{e}\) is defined as the binary cross entropy

where \(\mathcal {P}_n({\theta }^{k})\) denotes the softmax of the feature distance between negative sample pairs

where \(x_{n+}\) denotes the hardest positive sample of anchor \(x_{n}\) according to the pseudo-labels \(\widetilde{Y}\) and \(x_{n-}\) denotes the hardest negative sample. \(\Vert \cdot -\cdot \Vert \) denotes \(L_2\) distance.

To learn stable and discriminative knowledge from the pseudo-labels obtained by clustering, we introduce voting loss, which consists of the classification loss and triplet loss. For each model \({\mathcal {M}^k}\), the classification loss is defined as the cross entropy with label smoothing (Szegedy et al., 2016)

where \(\widetilde{\mathbf {q}}\) is the smoothing label according to pseudo-labels \(\widetilde{Y}\). Each element is calculated by

The softmax triplet loss is defined as:

where \(x_{n+}\) denotes the hardest positive sample of anchor \(x_{n}\) according to the pseudo-labels and \(x_{n-}\) denotes the hardest negative sample. The voting loss is defined by summarizing the classification loss and the triplet loss

where \(w_{id}\) and \(w_{tri}\) are the loss ratios. For each model \({\mathcal {M}^k}\), the overall optimized objective is defined by

Each model is trained by its own hyper-parameters \(\phi =\{ \varepsilon , w_{id}, w_{tri}, w_{mid}, w_{mtri} \}\) to explore different information. In addition, direct descendants of the same networks do not learn from each other in the mutual learning phase since they acquire similar knowledge. Note that training of a large-size population requires unaffordable computational resources. To address this problem, we use a random sampling strategy of networks. Specifically, for each batch of data, mutual learning is performed only on a randomly sampled subset of networks, as shown in step 5 in Algorithm 3.

3.2 Analysis of Escaping Capacity

Here we analyze the escaping capacity from the local optimum of our approach. The optimization of our approach can be formulated into two interactive phases. The first one is to optimize the label assignment of samples according to feature models

where \(\widetilde{Y}\) is the assigned labels and \(f_{a}\) is the objective loss function of the phase which is determined by the used clustering algorithm. \(\varTheta \) and X denote the model parameters and input samples, respectively. The second phase is to optimize the parameters of feature models according to the label assignment

\(f_{m}(\cdot |\phi )\) is loss function to train the models \(\varTheta \) as Eq. 16, and \(\phi \) is the hyper-parameters. The two optimization phases interact as a two-agent game and the local optimum occurs when the game halts at a Nash equilibrium:

In our approach, function \(f_{m}(\cdot |\phi )\) will be changed when the hyper-parameters \(\phi \) are mutated to new values \(\phi '\), leading to the shift of the local optimal model parameters \(\varTheta ^*\). Therefore the Nash equilibrium between \(\widetilde{Y}\) and \(\varTheta \) will be broken, and the local optimum at \((\widetilde{Y}^*, \varTheta ^*)\) will not exist exactly since the mutation changes the condition of the Nash equilibrium.

4 Experiments

4.1 Datasets and Evaluation Metrics

We evaluate the proposed method on three large-scale person re-identification benchmarks including Market-1501 (Zheng et al., 2015), DukeMTMC-reID (Ristani et al., 2016; Zheng et al., 2017) and MSMT17 (Wei et al., 2018).

Market-1501 This dataset contains 32,668 images of 1,501 identities from 6 disjoint cameras, among which 12,936 images from 751 identities form a training set, 19,732 images from 750 identities (plus a number of distractors) form a gallery set, and 3,368 images from 750 identities form a query set.

DukeMTMC-reID This dataset is a subset of the DukeMTMC. It consists of 16,522 training images, 2228 query images, and 17,661 gallery images of 1812 identities captured using 8 cameras. Of the 1812 identities, 1404 appear in at least two cameras and the rest (distractors) appear in a single camera.

MSMT17 contains 126,441 images of 4,101 IDs captured from a 15-camera network. The training set has 32,621 images of 1,041 identities, and the testing set has 93,820 images of 3,060 identities. During inference, 11,659 images are selected as query and the other 82,161 images are used as gallery from the testing set.

Evaluation Metrics: We use the Cumulative Matching Characteristic (CMC) curve and mean average precision (mAP) for performance evaluations and comparisons.

4.2 Implementation Details

Model settings. We adopt eight models with architectures of similar-weight parameters to initialize the population, including DenseNet-121 (Huang et al., 2017), DenseNet-169, IBN-DenseNet-121 (Pan et al., 2018), IBN-DenseNet-169, Inception-v3 (Szegedy et al., 2016), ResNet-50, IBN-ResNet-50a and IBN-ResNet-50b. All model are pretrained using ImageNet (Deng et al., 2009). In every model, the convolutional feature output by the last pooling layer is used for image representation.

PEG settings. The maximum size of networks in the selection phase L is set to 3 for experiments. A lightweight OSNet (Zhou et al., 2019) is used as the reference model of CRS for faster training. In addition, we conduct minibatch k-means clustering for CRS, and the number of clusters M is set to 500 for Market-1501 and DukeMTMC-reID following MMT (Ge et al., 2020). In the reproduction and mutation phase, each network reproduces 3 networks with mutation factor \(r=0.5\). The whole population evolves for 3 generations in total. Our method is trained on 4 GPUs under PyTorch framework. During testing, we use only one network which is selected by CRS in the population for feature representations.

Training settings. In mutual learning, we calculate k-reciprocal Jaccard distance (Zhong et al., 2017) for clustering, where \(k_1, k_2\) are set to 6 and 30, respectively. We set the minimum cluster samples to 4 and a distance threshold to 0.6 for DBSCAN (Ester et al., 1996). During training, the input image is resized to \(256\times 128\), and traditional image augmentation is performed via random flipping and random erasing. For each class from the training set, a mini-batch of 256 is sampled with P = 16 randomly selected classes and K = 16 randomly sampled images for computing the hard batch triplet loss. We use the Adam (Kingma & Ba, 2014) with weight decay 0.0005 to optimize parameters. In population mutual learning, the learning rate is fixed to 0.00035 for the overall 15 epochs. In each epoch, the temporal ensemble momentum \(\alpha \) in Eq. 8 is set to 0.999.

4.3 Comparison with State-of-the-Arts

We compare PEG with state-of-the-art person re-ID methods in Tables 1 and 2 on Market-1501, DukeMTMC-reID and MSMT17 datasets, respectively. The performance of our full approach is reported as PEG(Full). In addition, we also evaluate PEG using the same backbone of ResNet50 as most of the other methods since backbones are important for feature learning. However, the backbones of models are automatically selected in our selection phase. To guarantee a model with ResNet50 is preserved in the population, we limit PEG to choose at least one ResNet50 network at every time of selection. The results tested by ResNet50 are reported as PEG/ResNet50.

Previous unsupervised methods can be categorized into unsupervised domain adaptation (UDA) and fully unsupervised (FU) methods. State-of-the-art UDA methods are first listed and compared in Tables 1 and 2, including MMCL (Wang & Zhang, 2020), JVTC (Li & Zhang, 2020), DG-Net++ (Zou et al., 2020), ECN++ (Zhong et al., 2020), AD-Cluster (Zhai et al., 2020), MMT (Ge et al., 2020), DCML (Chen et al., 2020), MEB-Net (Zhai et al., 2020), MetaCam-DSCE (Yang et al., 2021), SpCL (Ge et al., 2020) and GLT (Zheng et al., 2021). All these methods usually rely on an annotated source domain to provide basic discrimination and transfer it to the target domain. Without any identity annotation from source domains, our proposed PEG outperforms all of them on Market-1501, DukeMTMC-reID datasets, and most of them on MSMT17 dataset except SpCL. The results indicate the better capacity of PEG to explore the information of the unlabeled data by exploiting the diversity of multiple models. On the other hand, although other approaches have also been proposed to utilize multiple models, such as MMT and MEB-Net, our PEG still surpasses them by exploring and exploiting the diversity of multiple models through evolutionary gaming. With mutation to provide more diverse discrimination, it automatically finds and preserves the optimal combination of networks from the population in every generation and thus achieves better performance in the end.

State-of-the-art fully unsupervised methods are then listed and compared in Tables 1 and 2 including BUC (Lin et al., 2019), SSL (Lin et al., 2020), JVTC (Li & Zhang, 2020), MMCL (Wang & Zhang, 2020), MPRD (Ji et al., 2021), HCT (Zeng et al., 2020), CycAs (Wang et al., 2020), GCL (Chen et al., 2021), UGA (Wu et al., 2019), IICS (Xuan & Zhang, 2021), IN unsup. (Fu et al., 2021), SpCL (Ge et al., 2020), OPLG (Zheng et al., 2021), CAP (Wang et al., 2020), ICE (Chen et al., 2021) and ClusterContrast (Dai et al., 2021). Especially, ICE (aware) denotes using extra camera information, and ICE (agnostic) denotes not using it. The compared approaches mainly rely on the pseudo-label discovery of single networks. Among them, methods tagged by “*” denote that elaborate extra temporal information is additionally used to improve the discrimination, such as CycAs and UGA, while our approach only considers person appearance similarity. The performance of these methods is provided just for reference since it is not our point to explore the extra temporal information, and our method does not use any of them. The fully unsupervised methods are separated into two groups, including linear classifier based methods and memory bank based methods:

(1) Comparison with linear classifier based methods For the linear classifier based methods, our approach with ResNet50 achieves better performance than most of them only except CycAs and UGA on the MSMT17 dataset, as shown in Tables 1, and 2. Different from the other two datasets, CycAs and UGA with extra temporal information achieves better performance on MSMT17 because images in the dataset are more diverse and harder to cluster accurately, making the elaborate extra temporal information particularly important. Nevertheless, these methods still suffer from the lack of diversity in single model training, which prevented them from maximizing their discrimination under unsupervised conditions. The superior performance of PEG can be attributed to the multiple model training, which improves the networks’ discriminative capability by mutual learning among diverse networks. And it can also be attributed to the selection of PEG, which preserves the more discriminative models in every generation and achieves the better performance of them. In addition, our full approach PEG(Full) further improves the re-ID performance by automatically selecting better architectures.

(2) Comparison with memory bank based methods Memory banks (Ge et al., 2020) were employed in many recent methods (Dai et al., 2021) to replace the linear classifier before the softmax cross-entropy loss function to improve unsupervised re-ID performance. Specifically, memory bank based methods can be further categorized into two groups including i) ClusterContrast, SpCL, and OPLG that learn general memories for all cameras, and ii) ICE and CAP that design specific memories for each camera. Since our research mainly focuses on the problem of training multiple models, it is independent of these methods for training single models. And they are not contradictive with our main contribution and are compatible with our method. To verify this, we additionally report our performance on the two typical stronger baselines of ClusterContrast as PEG+CCL, and ICE as PEG+ICE respectively in Tables 1 and 2.

For the camera-general memory bank based methods, PEG+CCL / ResNet50 surpasses most of other state-of-the-art methods only except ClusterContrast for Rank-1 accuracy on DukeMTMC-reID. For ClusterContrast, the relatively poor Rank-1 on DukeMTMC-reID dataset shows its weakness for some hard negative samples which were mistakenly identified as the same persons, because the soft mutual losses in mutual learning lack the certainty of labels and may not learn strong capability to separate hard negative samples. However, robust improvement of PEG is mainly shown by other metrics, especially the higher mAP on all benchmarks, indicating that PEG deals better with those hard positive samples, which is more important for the practical application of security. Furthermore, our full approach of PEG + CCL (Full) produces a new state-of-the-art performance on Market1501 and DukeMTMC-reID. The better performance can be attributed to the fact that the diverse population provides more reliable supervision for each other. The improved results also demonstrate that our evolution gaming approach is easily combined with different loss functions and can be further improved by more effective losses.

For the camera-specific memory bank based methods, PEG+ICE / ResNet50 outperforms all the compared methods on the three datasets and produces a new state-of-the-art performance on MSMT17 dataset. The superior performance to the PEG+CCL on MSMT17 can be attributed to that camera-specific memories alleviate the strong camera variance in the dataset which has 15 cameras. However, camera-specific memories are complementary with our PEG framework and can be further improved for better performance.

4.4 Ablation Study

4.4.1 Evaluation of Components

Detailed ablation studies are performed to evaluate the components of PEG as shown in Table 3.

Effectiveness of multiple model training Multiple model training usually achieves better performance than single model training because of the complementary discrimination of different models. In this section, we first introduce a baseline multi-model ensemble without mutual learning for comparison that only uses voting loss in Eq. 15 to train networks jointly, denoted as Multi-model. With eight networks used for ensemble, pseudo-labels are predicted by concatenating the features outputted from all networks and then used to supervise the training of each network individually by optimizing the voting loss. We also report the result of the single model baseline using the best architecture, ResNet50-IBNa. As shown in Table 3, Multi-model outperforms the single model training by large margins, indicating that more accurate pseudo-labels can be predicted using multiple models.

Effectiveness of population mutual learning Population mutual learning conducts knowledge distillation among all base models for the better ensemble. Compared with the baseline ensemble as Multi-model, models achieve better performance with mutual learning among themselves, as Multi-model + PML in Table 3. For example, the mAP is improved by 2.1% and 2.7% on Market-1501 and DukeMTMC-reID, respectively. The improvement can be attributed to that models additionally learn the distribution predicted by other models which contain more discriminative information.

In addition, more detailed ablation studies are performed to evaluate the components of mutual learning as shown in Table 5. In this experiment, three networks (DenseNet-121-IBNa, DenseNet-169-IBNa, and ResNet-50-IBNa) are trained concurrently. We first validate the temporally average model by removing it, denoted as PML w/o \(\varTheta _T\). For this experiment, we directly use the prediction of the current networks parameterized by \(\theta _T\) instead of the temporally average networks with parameters \(\varTheta _T\) as soft labels. As Table. 5 shows, distinct drops are observed, indicating that networks tend to degenerate to be homogeneous without using temporally average models, which substantially decreases the learning capability. Then we evaluate the mutual loss in Sect. 3.1.3 from two aspects: the mutual identity loss and the mutual triplet loss. The former is denoted as PML w/o \(\mathcal {L}_{mid}\). Results show that mAP drops from 79.2 to 77.2% on Market-1501 dataset and from 66.9 to 65.1% on DukeMTMC-reID dataset. Similar drops can also be observed when studying the mutual triplet loss, which is denoted as PML w/o \(\mathcal {L}_{mtri}\). The effectiveness of the mutual learning, including both two mutual losses, can be largely attributed to that it enhances the discrimination capability of all networks. Overall, the performance of the mutual learning ensemble largely outperforms the baseline ensemble. We also compare the mutual learning ensemble with two supervised upper bounds, which are trained using ground truths. The Single Model denotes evaluation using the best single model, and the Ensemble Feature denotes evaluation using feature ensemble among multiple networks. Our mutual learning ensemble is relatively close to them with evaluation using a single model.

Effectiveness of selection Selection phase in PEG finds and preserves an optimal subset of base networks for better multi-model training. The experiment with mutual learning and selection is denoted as Multi-model + PML + Sel. in Table 3. For this experiment, the selection is performed to preserve a combination of 3 networks from all 8 networks using the cooperative game in Sect. 3.1.1, then the preserved models are trained by mutual learning. Experimental results show that the selection phase improves the performance of Multi-model + PML even using fewer models. The superior performance indicates that some models may be redundant and cannot provide more discrimination but require more computation during training. However, the selection effectively preserves better models with the proposed cooperative gaming while abandoning weak models that could even degrade the overall discrimination capability of the whole ensemble. Without those weaker models, models will achieve better discrimination from more reliable and efficient mutual learning.

Effectiveness of reproduction and mutation Reproduction and mutation drive the PEG framework to train more diverse models by mutating their hyper-parameters, which is the key to the exploration of model diversity in our evolution process. With this component, PEG achieves the best performance in Table 3 as Multi-model + Sel. + Rep.& Mut.. The effectiveness of reproduction and mutation can be attributed to the exploration of training more diverse models with selection preserving the better ones of them after mutual learning. Beneficial from the iteration of reproduction, mutation, and selection, PEG keeps exploring and exploiting diverse and discriminative capacity for better re-ID models. In addition, multiple network ensemble with different architectures is also used to exploit their diversity. To further validate the effectiveness for the diversity of reproduction and mutation, we evaluate PEG with only a single model to initialize the population with reproduction and mutation, as Single architecture + PEG in Table 3. Compared with the single model baseline, the experiment improves the accuracy by large margins, and it also outperforms the multi-model without reputation and mutation. The results demonstrate that reputation and mutation are more important for exploring diversity. Moreover, our full approach of PEG with both multiple architectures and reputation &mutation performs the best, demonstrating that the diversities from the two components are complementary.

Generalization Analysis To validate the generalization of our approach with different baseline training methods, ablation studies on a stronger baseline of cluster contrast loss are evaluated as shown in Table 4. Compared with the single model, it performs not good when directly using multiple models for pseudo-label prediction, denoted as CCL-Multi. The distinct degradation of performance indicates that the weak models make a negative impact on such a stronger baseline. The models converge quickly to the inaccurate pseudo label partially predicted by the weak models and can no longer be improved. However, the performance of the multi-model is largely improved using the selection before training. It demonstrates that the selection still preserves better models effectively and abandons the weak models which are harmful to the ensemble. Furthermore, CCL-Multi + PEG produces the best performance on both datasets, validating the effectiveness of the mutation and reproduction. The superior results show that our PEG is effective and generalizable for different baseline methods.

4.4.2 Evaluation of Cross-Reference Scatter

In this section, we first validate the basic motivation of the cross-reference scatter, the phenomenon that more accurate labels lead to larger intra-cluster cohesion and inter-cluster separation in the trained feature space. We use inter-/intra-cluster scatters (ICS) to measure the separation as well as the cohesion over the feature space of models. As shown in Fig. 4, a larger ICS means larger inter-cluster separation and intra-cluster cohesion. We evaluate the ICS of models trained by labels with different accuracy. Specifically, the label accuracy is controlled by replacing a part of the ground truth with randomly incorrect labels. The results shown in Fig. 5 indicate a positive correlation between the ICS and the label accuracy, which confirms the basic hypothesis of CRS.

We also evaluate the cross-reference scatter with different metrics for clustering algorithm to compare the performance of re-ID models without ground truth. All comparison models with different architectures were first pre-trained in DukeMTMC-reID dataset and then evaluated in Market-1501 by the metrics. To demonstrate whether a metric can show the relative performance between models, we evaluate the correlation between the metric scores and the re-ID performance for all metrics, as shown in Fig. 6. Since CRS measures models at the start of every generation in PEG but aims to select the models that perform better after training, the metric scores were calculated before training and the re-ID performance is evaluated with mAP after the model has been unsupervised trained on the unlabeled data from Market1501, which represents more latent performance of models. We first compare ICS with two metrics for clustering algorithm including Davies–Bouldin Index (DBI) and Silhouette Coefficient (SC), as shown in the first line of Fig. 6. All three metrics are calculated directly on the feature space of the evaluated models by performing k-means clustering. For each metric, we used Spearman’s Rank Correlation (\(\rho \)) (Spearman, 1961) and Kendall’s Rank Correlation (\(\tau \)) (Kendall, 1938) to measure the correlation between the metric scores and the re-ID performance. However, we clearly see the poor correlation of the metrics with the re-ID performance according to the small \(\rho \) and \(\tau \), indicating that the distribution of features before training can not show the real performance of models. Then we evaluate the three metrics using our proposed cross-reference (CR) evaluation where metrics are calculated on the feature distribution of a reference model trained by predicted labels. As illustrated in the second line of Fig. 6, correlations are consistently improved by CR, which validates its effectiveness. Importantly, our CRS (ICS+CR) performs the highest correlation with \(\rho =0.93\) and \(\tau =0.86\) among all six compared metrics. Besides, we also present the rankings of models under different metrics in Fig. 7. Compared with the ground truth ranking result in the last column, CRS achieves a similar ranking of models while other metrics fail to rank them well. The superior performance of CRS can be attributed to two reasons. One reason is the cross-reference evaluation that measures the accuracy of predicted labels can better reflect models’ performance, and another reason is the ICS which better measures the convergence degree of the reference model. Specifically, both DBI and SC focus on the distribution of the difficult edge samples of clusters while they ignore the overall distribution and thus cannot measure well the degree of model convergence.

Comparison with different unsupervised measures on the correlation between the measures and re-ID performance (mAP after unsupervised learning) over different models (point a–h) on Market-1501 dataset. For each measure, we use Spearman’s Rank Correlation (\(\rho \)) (Spearman, 1961) and Kendall’s Rank Correlation (\(\tau \)) (Kendall, 1938) to measure the correlation between the metric and mAP values. A higher absolute value of \(\rho \) (or \(\tau \)) indicates a stronger correlation. Our proposed CRS shows a stronger correlation, indicating that it better reflects the performance of re-ID models

We also evaluate CRS with different clustering algorithms such as DBSCAN. In our work, DBSCAN is adopted in model learning to generate more accurate pseudo-labels like many recent unsupervised re-ID works. However, it is not applicable for CRS because the fair comparison of CRS among models requires the same cluster number during clustering, while DBSCAN cannot guarantee that. Specifically, CRS defined by the ratio of intra-/inter-cluster variance is relative to the cluster numbers. And the cluster numbers by DBSCAN with different evaluated feature models are likely to be different, making it unfair to compare their CRS for model selection. In our work, we use kmeans with the same cluster number k for all evaluated models. To validate its effectiveness, we evaluate the correlation between CRS and model performance using different clustering algorithms, as shown in Fig. 8. Compared with kmeans (M = 500), DBSCAN achieves a much lower Spearman’s Rank Correlation (\(\rho \)) (Spearman, 1961) and Kendall’s Rank Correlation (\(\tau \)) (Kendall, 1938) between the metric and mAP values. The results show that CRS with DBSCAN fails to measure the models, and KMeans does it better. Therefore, we use kmeans with the same cluster number for CRS.

Furthermore, we study the number of clusters M for k-means in CRS, which is hard to fix in the real world. We first compare the correlation between CRS and re-ID performance with different values of M, as shown in Fig. 8. CRS shows stronger correlations when M is set to 500 or 700, which is close to the number of person IDs in the datasets. The good performance of this cluster number is consistent with other kmeans based methods like MMT (Ge et al., 2020). When M is larger, the correlation will be weaker. But the CRS still basically reflects the performance of re-ID models, indicating it is robust to the cluster number. On the other hand, we also evaluate the performance of our full method with different M on both Market1501 and DukeMTMC-reID datasets. As shown in Table 6, the re-ID performance is generally consistent with the correlations of CRS. PEG performs best when M is set to 700, where CRS also achieves the highest \(\rho \) and \(\tau \), making selection able to select better models.

To validate CRS for very weak evaluated models which predict mostly wrong pseudo labels, we estimate CRS at different noise levels. Although its predicted labels are partially wrong for each evaluated model, we add extra noises by disrupting the label order of a particular portion of samples. As shown in Fig. 9, CRS maintains a stronger correlation between its values and model performance with the increase of the noise ratio, indicating its robustness for the wrong labels. When the noise level is too high such as 0.8, the correlation visibly deteriorated. However, higher CRS can still roughly reflect the better models.

In addition, we evaluate CRS with different architectures of the reference model. Four models are compared with fewer parameters to more parameters including OSNet, DenseNet-121, ResNet-50, and ResNet-101. For fair, all reference models are trained for 500 iterations during the evaluation of CRS. As shown in Table 7, we observe that models easy to converge such as OSNet and ResNet-50 show better measurement for higher correlation \(\rho \) and \(\tau \), while models hard to converge, like DenseNet-121 and ResNet-101, don’t perform well. Specifically, DenseNet using a dynamic architecture and ResNet-101 have deep layers and amounts of parameters, therefore they both require much more time to train. Since only a few training iterations are performed in CRS, the two architectures cannot show a sufficiently differentiable difference in feature distribution when evaluating different models. Moreover, we evaluate PEG for CRS with other light-weight networks as the reference model, including MobileNet and ResNet18. Different from OSNet specially designed for re-ID, the other two architectures are designed for general purpose. Table 8 shows the re-ID performance of PEG with different reference models for CRS. Our method achieves comparable re-ID performance consistently on Market-1501 and DukeMTMC-reID datasets. The results indicate that our CRS metric is model-general for reference models, which is not limited to certain architectures. In this work, we adopt the OSNet as the reference model of CRS in all other experiments for less time-consuming and more accurate measurement.

4.4.3 Analysis of Selection

Comparison with different model selection strategies For the method of selection of networks in PEG, we first compare our cooperative gaming (using the best-response dynamics according to CRS) with different selection strategies of network architectures, for example, using some of the deepest or weight-heaviest networks since deeper or weight-heavier networks generally achieve better performance. Considering that these strategies can not select networks from ones with the same architecture, in this experiment, we perform the selection from networks with different architectures only once and then train them by mutual learning before testing. The experiment results shown in Table 9 indicate that our approach selects better models which achieve higher performance through mutual learning. The better selection can be attributed to that the CRS approximately measures the discriminative capability of models by efficiently using the unlabeled data.

Moreover, we compare our group selection with the individual selection in PEG. For individual selection, we evaluated the CRS of every single network and accordingly preserved the best L networks. While for group selection, we use the cooperative game to find and preserve the group of L networks with the highest overall CRS. Through the iterative evolutionary game, the group selection performs better, as shown in Table 10. The superior performance indicates that networks preserved by the group selection are more complementary and it helps to achieve a better population in later evolutionary training.

Convergence analysis of cooperative selection gaming. We now discuss the convergence of the cooperative gaming of selection. Note that in every iteration of best response dynamics in Eq. 2, the outcome of the utility function strictly increases. Thus, no cycles are possible. Since the game is finite by assumption, it eventually ends, necessarily at a Nash equilibrium. The convergence of the cooperative game is illustrated in Fig. 10, where each game eventually halts at a Nash equilibrium.

4.4.4 Parameter Analysis

Analysis of agent number L in the selection. The agent number L in the cooperative game of selection determines the maximal size of the selected subset of networks. Here we evaluate the performance of our method and computational cost of the selection over different values of L, as shown in Fig. 11. Usually, a smaller L will lead to a lack of diversity of the population since only a small number of networks can be preserved during selection. However, L should not be very large because it will waste much more computational resources for solving the best-response dynamics. On the other hand, a larger L also means the larger size of the population in the next generation, which will cost more time for mutual learning among the networks. Taken together, a L of 3 is proper in our experiments, which achieves good performance without consuming too many computational resources.

Analysis of mutation factor r The mutation factor r in Sect. 3.1.2 will affect the diversity of populations and so the evolutionary training processes. We studied this parameter by setting it to different values and checking the mAP performance of all networks in the populations. Fig. 12 shows experimental results on Market-1501, where each circle point denote a single model. Using a larger r usually leads to a higher diversity within populations, which further leads to a higher possibility of achieving better performance. Specifically, a larger r results in a higher upper bound (maximized performance) and a similar average value. Notably, the average values do not represent the final performance. Although PEG aims to train a population of diverse networks, only one network is selected automatically according to Cross Reference Scatter for inference at the end of training, which is probably to be the better one. Therefore, the final performance of our method doesn’t depend on the average values of the population but depends on the performance of the selected model. On the other hand, a population with a higher upper bound is more likely to select a better model. For example, when r is set to 0.05 the best model in the population achieves 83.6% mAP, but when r is set to 0.5, there are 1/4 models in the population that achieve mAP higher than 83.6%, which may be selected for superior performance. Importantly, the final model is selected according to Cross Reference Scatter which is to estimate model performance by unlabeled training data. Experimental results in Sect. 4.4.2 demonstrated that better models are likely to have higher CRS values to be selected. And when \(r=0.5\) it provides more better models as candidates for the final selection. However, a larger r will also bring larger variance and instability of network performance within populations because it may reproduce very weak networks that drag down the overall discrimination capability of the whole population by mutual learning. Given all of that, we set r to 0.5 for both performance and stability.

Analysis of the number of generations To analyze the number of generations, we provide the model performance in every generation for 5 generation evolution, as shown in Fig. 13. The performance of models is boosted rapidly in the first and second generation, and the boost slows down gradually as the generation increases. After three generations of evolution, the performance is nearly convergent, and models achieve stable results.

4.4.5 Multiple Models versus Heavyweight Models

Heavyweight networks are more likely to learn discriminative representations than lightweight models since they have deeper architectures and more parameters. However, models with heavyweights require more time and computational resources during both the training and inference stages, making them infeasible in practice. Our experiments show that through PEG, lightweight models can surpass heavyweight models with IBN that are individually trained under unsupervised conditions. Take the market-1501 dataset as an example. After the evolutionary game of the population of lightweight networks, all member networks of the population achieve better performance than heavyweight networks, such as ResNet-101, as shown in Fig. 14. Specifically, one of the networks achieves a much higher mAP of 84.3% than ResNet-101 with only 1/3 of the parameters. The superior results can be attributed to two aspects. One is the mutation and selection that sufficiently explore and exploit the population. Mutation makes networks learn diverse knowledge, and selection maintains the optimal model groups and abandons the others. The models in the selected and preserved groups are complementary, so they produce more accurate and robust pseudo-labels for the next training phase and learn more discriminative features. The second reason is the mutual learning performed among all networks in the whole population. Since the models preserved by mutation and selection are diverse and complementary, each contains only a small part of the knowledge of the whole population. Through mutual learning, the knowledge of the population is assembled into each network by distillation, which equips the models with more discriminative capability. The PEG method explores the potential of lightweight networks and searches for the approximate global optimal solution and thus outperforms the heavyweight models.

4.5 Computational Cost

To evaluate the efficiency and effectiveness of the computational cost, we evaluate a series of large single models for reference as shown in Table 11. Experiments are conducted on four V100 GPUs. From the perspective of training, our method requires comparable computational cost with the large single models (such as ResNetrs420) while achieving significant performance improvement. And from the perspective of testing, our method requires as less computational cost as the small single models (such as IBN-DenseNet169) and surpasses them by large margins. More detailed descriptions are listed below.

(1) The improvement by increasing parameters is limited for single models on re-ID performance, and our PEG largely surpasses the best single model with comparable computations, demonstrating the cost meaningful. Specifically, we evaluate five architectures from lightweight to heavyweight including IBN-DenseNet169, IBN-ResNet50, IBN-ResNext101, ResNet200 and ResNetrs420. As parameters increase, single models usually achieve better performance, whereas they require more computational complexity and time for training. However, the improvement is limited when the parameters are very large, i.e., ResNetrs420 cannot surpass ResNet200 even though more than two times of parameters and training time are used. Compared with single models, PEG improves the accuracy by large margins. Although population-based training demands more cost, the cost is worth and affordable. Importantly, PEG provides further improvement that cannot be achieved by simply increasing model parameters.

To reduce the cost, we provide two implementation versions of PEG: small and large, with different sizes of the population. Specifically, PEG (small) maintains a lightweight population for efficient training, which selects only two models during selection and every model reproduces to 2 times. And PEG (large) follows the original settings according to the implementation details. Significantly, the provided PEG (small) achieves comparable performance with PEG (large) and requires only half the training cost. It also outperforms the best single architecture ResNet200 for more than 10% of mAP while costing comparable computing resources, which is more affordable and efficient than the large version. We suggest the version of PEG (small) for application in resource-limited environments.

(2) PEG requires less computational cost for testing, making it more applicable and valuable in practice. As is shown in Table 11, the computational complexity during testing of PEG is largely less than the large single models, even nearly 1/5 of the best one, ResNet200. It only requires as less computational cost as small single models such as IBN-DenseNet169 while surpassing its performance by large margins. It is because only one network in the population is selected in the end for evaluation. Since the training procedure is only conducted once, while the test will be continuously repeated in the actual re-ID system, PEG with less testing cost is applicable and valuable in practice.

To further analyze the training time of every procedure in our approach, we illustrated the training process over time in Fig. 15. Among the total training time, model learning accounts for the largest proportion. Model learning is performed by data loading, feedforward of all networks, backward of losses, and updating of parameters. This part of time is relative to the number and depth of networks. For example, the time of model learning in Generation 2 is shorter than in the other generations because there are only four networks in the population. The time of the selection stage is different in the three generations. On the one hand, it is affected by the number of candidate networks. On the other hand, it is affected by the convergence of the best-response dynamics. Moreover, clustering costs the least time, only for the extraction of features and execution of clustering algorithms.

5 Conclusion

The paper proposed a population-based evolutionary gaming which trains concurrently a population of networks for unsupervised person re-ID. We demonstrate that the population can evolve and achieve progressive discrimination through iterative selection to preserve adaptive networks, reproduction and mutation to provide more diversity, and mutual learning to assemble knowledge. Moreover, our proposed cross-reference scatter can approximately estimate the performance of networks using unlabeled data and thus is utilized as the utility of cooperative game in the selection phase. Our approach not only produces a new state-of-the-art accuracy on multiple benchmarks but also provided a fresh insight for population-based multi-network training.

Data Availability

The datasets generated during and/or analysed during the current study are available from the corresponding author on reasonable request.

References

Ali, B., Moriyama, K., Kalintha, W., Numao, M., & Fukui, K. I. (2020). Reinforcement learning based metric filtering for evolutionary distance metric learning. Intelligent Data Analysis, 24(6), 1345–1364.

Baker, F. B., & Hubert, L. J. (1975). Measuring the power of hierarchical cluster analysis. Journal of the American Statistical Association, 70(349), 31–38.

Caron, M., Bojanowski, P., Joulin, A., & Douze, M. (2018). Deep clustering for unsupervised learning of visual features. In Proceedings of the European conference on computer vision (ECCV)(pp. 132–149).

Chen, G., Lu, Y., Lu, J., & Zhou, J. (2020). Deep credible metric learning for unsupervised domain adaptation person re-identification. In Computer vision–ECCV 2020: 16th European conference, Glasgow, UK, August 23–28, 2020, Proceedings, Part VIII 16 (pp. 643–659). Springer

Chen, H., Lagadec, B., & Bremond, F. (2021a). Ice: Inter-instance contrastive encoding for unsupervised person re-identification. arXiv:2103.16364