Abstract

Providing relevant personalized recommendations for new users is one of the major challenges in recommender systems. This problem, known as the user cold start has been approached from different perspectives. In particular, cross-domain recommendation methods exploit data from source domains to address the lack of user preferences in a target domain. Most of the cross-domain approaches proposed so far follow the paradigm of collaborative filtering, and avoid analyzing the contents of the items, which are usually highly heterogeneous in the cross-domain setting. Content-based filtering, however, has been successfully applied in domains where item content and metadata play a key role. Such domains are not limited to scenarios where items do have text contents (e.g., books, news articles, scientific papers, and web pages), and where text mining and information retrieval techniques are often used. Potential application domains include those where items have associated metadata, e.g., genres, directors and actors for movies, and music styles, composers and themes for songs. With the advent of the Semantic Web, and its reference implementation Linked Data, a plethora of structured, interlinked metadata is available on the Web. These metadata represent a potential source of information to be exploited by content-based and hybrid filtering approaches. Motivated by the use of Linked Data for recommendation purposes, in this paper we present and evaluate a number of matrix factorization models for cross-domain collaborative filtering that leverage metadata as a bridge between items liked by users in different domains. We show that in case the underlying knowledge graph connects items from different domains and then in situations that benefit from cross-domain information, our models can provide better recommendations to new users while keeping a good trade-off between recommendation accuracy and diversity.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Cross-domain recommendation has recently emerged as a potential solution to the cold start problem in recommender systems (Cantador et al. 2015), aiming to mitigate the lack of data by exploiting user preferences and item attributes in domains distinct but related to the target domain. In this line, most of the cross-domain approaches proposed so far are based on collaborative filtering (Cremonesi et al. 2011), exploiting user preferences as a bridge to relate source and target domains, and ignoring the content of the items. Hence, they benefit from the fact that they do not need to perform any kind of analysis of item contents, which are in general highly heterogeneous across domains, and whose inter-domain relationships may be difficult to establish.

These difficulties, however, can be addressed nowadays thanks to the Semantic Web initiative (Shadbolt et al. 2006), and more specifically to its reference implementation the Linked Open Data (LOD) project (Bizer et al. 2009), which has originated a large number of inter-linked knowledge repositories publicly available in the Web, following the Semantic Web standards for data representation and access. Hence, in the current Web there is a wide array of structured data sources with information of items belonging to a variety of domains, such as history, arts, science, industry, media and sports, to name a few. This information not only consists of particular multimedia contents and associated metadata, but also explicit, semantic relations between items and metadata.

Motivated by the availability of large amounts of item metadata and semantic relations in the Linked Data cloud, we aim to address the cross-domain recommendation problem not only focusing on user preferences and item attributes, but also exploiting content-based relations between items from different domains. More specifically, we propose to use the set of LOD semantic features and relations as inter-domain links for supporting knowledge transfer across domains, enabling cross-domain item similarities, and providing recommendations for cold start users in the target domain.

Previous work has proposed graph-based algorithms to address the recommendation problem in heterogeneous datasets (Yu et al. 2014; Di Noia et al. 2016), analyzing the topology of semantic networks to jointly exploit user preferences and item metadata. These approaches have been shown to be effective for recommendation, but suffer from computational issues caused by the size of the semantic networks, which are in general very large. Differently, we avoid these issues by working in two steps. First, we exploit the semantic networks to compute inter-domain similarities that link items from different domains. Then, we leverage the computed similarities in hybrid matrix factorization (MF) models for recommendation, which no longer need to deal with the whole networks.

Therefore, the main contribution of this work is the development of novel, effective hybrid matrix factorization models that jointly exploit user preferences and item metadata for cross-domain recommendation. Moreover, we adapt a fast learning algorithm by Pilászy et al. (2010) for efficiently building our models, and evaluate them in cold start scenarios in several domains, in terms of both precision and diversity.

We evaluate the performance of the proposed models using a dataset of FacebookFootnote 1likes about movies, music and books. In order to obtain semantic metadata for the different items, we first mapped the items in our dataset to entities in LOD by means of SPARQL queries, and then extracted their attributes and relations to enhance the item profiles.

In a first experiment, we compared several state-of-the-art semantic similarity metrics for content-based recommendation, aiming to understand which is more suitable for later injecting in our cross-domain MF models, and achieving the best results using the link-based approach by Milne and Witten (2008). Then, we evaluated the ranking precision and diversity of the recommendations computed by the proposed models. We show that, depending on the involved source and target domains, our models generate more accurate suggestions than a number of baselines in severe cold start situations. Moreover, the proposed approaches provide a better trade-off between accuracy and diversity, which are in general difficult to balance.

We point out that the presented approaches can be effectively used if the underlying LOD knowledge graph encodes direct or indirect connections between items in different domains. In fact, we need to compute semantic similarities between items not belonging to the same domain. These connections are quite common for knowledge domains with some degree of information overlap, such as in the case of books, movies and music but, in case they are missing or rare, this may result as a limitation for the performances of the approaches we introduce here.

The reminder of the paper is structured as follows. In Sect. 2, we revise related work on cross-domain recommender systems, focusing on those approaches that are based on Matrix Factorization. For the sake of completeness, we provide an overview of the standard Matrix Factorization technique in Sect. 3. In Sect. 4, we present the developed cross-domain hybrid matrix factorization models. Next, in Sect. 5, we report and analyze the empirical results achieved in the experiments conducted to analyze user cold start situations. Finally, in Sect. 6 we end with some conclusions and future research lines.

2 Related work

In this section, we survey the state of the art on cross-domain recommender systems. First, in Sect. 2.1 we describe the cross-domain recommendation problem and present a categorization of the approaches, giving representative examples of each category. Next, in Sect. 2.2 we focus on those cross-domain recommendation approaches that use the matrix factorization technique to bridge the source and target domains.

2.1 Cross-domain recommender systems

Nowadays, the majority of recommender systems suggest items belonging to a single domain. For instance, NetflixFootnote 2 recommends movies and TV shows, SpotifyFootnote 3 recommends songs and music albums, and Barnes & NobleFootnote 4 recommends books. These domain-specific systems have been successfully deployed by numerous web platforms, and the single-domain recommendation functionality is not perceived as a limitation, but rather pitched as a focus on a certain market.

Nonetheless, in large e-commerce sites such as Amazon.comFootnote 5 and eBayFootnote 6 users often provide feedback for items from multiple domains, and in social networks like FacebookFootnote 7 and TwitterFootnote 8 users express their tastes and interests for a variety of topics. It may, therefore, be beneficial to leverage all the available user data provided in various systems and domains in order to generate more encompassing user models and better recommendations. Instead of treating each domain (e.g., movies, music and books) independently, knowledge acquired in a source domain could be transferred to and exploited in another target domain. The research challenge of transferring knowledge, and the business potential of delivering recommendations spanning across multiple domains, have triggered an increasing interest in cross-domain recommendations.

The cross-domain recommendation problem has been addressed from various perspectives in different research areas. It has been handled by means of user preference aggregation and mediation strategies for cross-system personalization in User Modeling (Abel et al. 2013; Berkovsky et al. 2008b; Shapira et al. 2013), as a potential solution to mitigate the cold start and sparsity problems in Recommender Systems (Cremonesi et al. 2011; Shi et al. 2011; Tiroshi et al. 2013), and as a practical application of knowledge transfer in Machine Learning (Gao et al. 2013; Li et al. 2009a; Pan et al. 2010). Focusing on how knowledge is exploited by cross-domain recommender systems, in Cantador et al. (2015) we categorized existing works according a two-level taxonomy.

-

Aggregating knowledge Knowledge from various source domains is aggregated to perform recommendations in a target domain. Depending on the stage in the recommendation process where the aggregation is performed we can further distinguish three cases. First, we find approaches that merge user preferences e.g., ratings, tags, transaction logs, and click-through data. The aggregation can be done by means of a multi-domain rating matrix (Berkovsky et al. 2007a; Sahebi and Brusilovsky 2013), using a common representation for user preferences such as social tags (Szomszor et al. 2008a; Abel et al. 2013; Fernández-Tobías et al. 2013) or semantic concepts (Kaminskas et al. 2013), linking the preferences via a multi-domain graph (Cremonesi et al. 2011; Tiroshi et al. 2013), or mapping user preferences to domain-independent features such as personality traits (Cantador et al. 2013) or user–item interaction features (Loni et al. 2014). In the second case, user modeling data from various recommender systems is mediated to improve target recommendations. For instance, Berkovsky et al. (2007a), Tiroshi and Kuflik (2012) and Shapira et al. (2013) import user neighborhoods and user–user similarities computed in the source domain into the target. Finally, some approaches directly combine single-domain recommendations, e.g. rating estimations (Berkovsky et al. 2007a; Givon and Lavrenko 2009) and rating probability distributions (Zhuang et al. 2010).

-

Linking and transferring knowledge Knowledge linkage or transfer between domains is established to support recommendations. In this case, we find methods that (1) link domains by a common knowledge such as item attributes (Chung et al. 2007), association rules (Cantador et al. 2013), semantic networks (Fernández-Tobías et al. 2011; Kaminskas et al. 2013), and inter-domain correlations (Zhang et al. 2010; Shi et al. 2011; Sahebi et al. 2017); methods that (2) share latent features between source and target domains factor models, either by using same model parameters (Pan et al. 2011; Hu et al. 2013; He et al. 2018) in both factorizations, or by introducing new parameters that extend the factorizations (Enrich et al. 2013; Fernández-Tobías and Cantador 2014); and methods that (3) transfer rating patterns extracted by co-clustering the source domain rating matrix and exploit them in the target domain (Li et al. 2009a; Gao et al. 2013; Cremonesi and Quadrana 2014). After defining the problem, in Pan (2016) three different knowledge transfer strategies for collaborative recommendation with auxiliary data (TL-CRAD) are introduced: (1) adaptive knowledge transfer, (2) collective knowledge transfer and (3) integrative knowledge transfer. Then, for each of them the author surveys related work with reference to different knowledge strategies with an emphasis on: transfer via prediction rule, transfer via regularization and transfer via constraint.

In terms of the goals addressed by cross-domain recommenders, we find great diversity among the reviewed approaches. Most proposals focus on improving accuracy by reducing data sparsity (Li et al. 2009a; Shi et al. 2011; Cao et al. 2010; Zhang et al. 2010; Pan et al. 2010; Tiroshi et al. 2013; Loni et al. 2014; Zhu et al. 2018). In many domains, the average number of ratings per user and item is low, which may negatively affect the quality of the recommendations. Data collected outside the target domain can increase the rating density, and thus may upgrade the recommendation quality. Others seek to enhance user models, which may have personalization-oriented benefits such as (1) discovering new user preferences for the target domain (Stewart et al. 2009; Szomszor et al. 2008b), (2) enhancing similarities between users and items (Abel et al. 2011; Berkovsky et al. 2008b), and (3) measuring vulnerability in social networks (Goga et al. 2013; Jain et al. 2013). Cross-domain methods have been also applied to bootstrap recommender systems by importing preferences from another source outside the target domain (Shapira et al. 2013), and have been proposed to improve the diversity of recommendations by providing better coverage of the range of user preferences (Winoto and Tang 2008). Finally, a few approaches have dealt with the new user problem (Hu et al. 2013; Sahebi and Brusilovsky 2013; Tiroshi and Kuflik 2012; Enrich et al. 2013). When a user starts using a recommender system, it has no knowledge of the user’s tastes and interests, and cannot produce personalized recommendations. This may be solved by exploiting the user’s preferences collected in a different source domain.

We observe that addressing the cold-start has been barely investigated, as in Liu et al. (2017) where the authors present a neighborhood-based algorithm for the dual cold-start problem. The generalization of users and items into a cluster level to obtain high-quality relations also in cold start scenario is the focus of Mirbakhsh and Ling (2015). They first employ biased matrix factorization to map rating matrix into lower-dimension latent spaces. After this step, they apply the k-means clustering algorithm to categorize users and items. The cold-start is also the main topic of Wongchokprasitti et al. (2015), where the authors propose a novel approach to cross-system personalization based on two assumptions: the existence of a user model that could be shared among platforms, and that a specific system can maintain (and provide) the user models built by its system.

As we shall present in Sect. 4, we aim to deal with the cold-start problem by means of novel matrix factorization models that jointly exploit user ratings and item metadata. Before, in Sect. 2.2, we revise state-of-the-art cross-domain recommender systems based on matrix factorization.

2.2 Matrix factorization-based cross-domain recommender systems

Although matrix factorization models can be applied in cross-domain approaches based on knowledge aggregation—essentially as a standard recommendation problem once the user preferences from both domains are combined—, they have been mostly used in knowledge linkage and transfer approaches. In these settings, latent factors from source and target domains are either shared or related in order to establish the bridge between the domains.

One way of linking domains explored in previous works exploits inter-domain similarities by integrating them into the probabilistic matrix factorization model (Salakhutdinov and Mnih 2007). Specifically, such similarities are imposed as constraints over user or item latent factors when jointly factorizing rating matrices. For instance, Cao et al. (2010) proposed an approach in which inter-domain similarities are implicitly learned from data, as model parameters in a non-parametric Bayesian framework. Since user feedback is used to estimate the similarities, user overlap between the domains is required. Addressing the sparsity problem, Zhang et al. (2010) adapted the probabilistic matrix factorization method to include a probability distribution of user latent factors that encodes inter-domain correlations. One strength of this approach is that user latent factors shared across domains are not needed, allowing more flexibility in capturing the heterogeneity of domains. Instead of automatically learning implicit correlations in the data, Shi et al. (2011) argued that explicit common information is more effective, and relied on shared social tags to compute cross-domain user-to-user and item-to-item similarities. Similarly to previous approaches, rating matrices from the source and target domains are jointly factorized; but in this case user, and item latent factors from each domain are restricted, so that their product is consistent with the tag-based similarities.

Latent factors shared between domains can be exploited to support cross-domain recommendations. In this context, two types of approaches have been studied to perform the actual transfer of knowledge; namely, adaptive and collective models. In the former, latent factors are learned in the source domain, and are integrated into a recommendation model in the target domain, while in the latter, latent factors are learned simultaneously optimizing an objective function that involves both domains. Pan et al. (2010) addressed the sparsity problem in the target domain following the adaptive approach, proposing to exploit user and item information from auxiliary domains where user feedback may be represented differently. In particular, they studied the case in which users express binary like/dislike preferences in the source domain, and utilize 1–5 ratings in the target domain. Their approach performs singular value decomposition (SVD) in each auxiliary domain in order to separately compute user and item latent factors, which are then shared with the target domain. Specifically, transferred factors are integrated into the factorization of the rating matrix in the target domain, and added as regularization terms so that specific characteristics of the target domain can be captured. Latent factors can also be shared in a collective way, as studied by Pan et al. (2011). In this case, instead of learning latent features from the source domains and transferring them to the target domain, the authors proposed to learn the latent features simultaneously in all the domains. Both user and item factors are assumed to generate the observed ratings in every domain, and, thus, their corresponding random variables are shared between the probabilistic factorization models of each rating matrix. Moreover, the factorization method is further extended by incorporating another set of factors that capture domain-dependent information, resulting in a tri-factorization scheme. A limitation of the proposed approach is that the users and items from the source and target domains have to be identical. Instead of focusing on sharing latent factors, Enrich et al. (2013) and Fernández-Tobías and Cantador (2014) studied the influence of social tags on rating prediction, as a knowledge transfer approach for cross-domain recommendations. The authors presented a number of models based on the SVD++ algorithm (Koren 2008) to incorporate the effect of tag assignments into rating estimation. The underlying hypothesis is that information about item annotation in a source domain can be exploited to improve rating prediction in a target domain, as long as a set of common tags between the domains exists. In the proposed models, tag factors are added to the latent item vectors, and are combined with user latent features to compute rating estimations. The difference between these models is in the set of tags considered for rating prediction. In all the models, knowledge transfer is performed through the shared tag factors in a collective way, since these are computed jointly for the source and the target domains. Hu et al. (2013) presented a more complex approach that takes domain factors into account. There, the authors argue that user–item dyadic data cannot fully capture the heterogeneity of items, and that modeling domain-specific information is essential to make accurate predictions in a setting where users typically express their preferences in a single domain. They referred to this problem as the unacquainted world, and proposed a tensor factorization algorithm to exploit the triadic user–item-domain data. In that method, rating matrices from several domains are simultaneously decomposed into shared user, item, and domain latent factors, and a genetic algorithm automatically estimates optimal weights of the domains. In a recent work, Zhu et al. (2018) propose a two-step approach where the latent factors, learned via MF for both the source and target domains, are linked by training a deep neural network (DNN) representing their connections. Interestingly, the training process of the DNN is driven by the sparsity degrees of individual users and items in the source and target domains. Contextual and content-based information is exploited in Taneja and Arora (2018) to cluster users in the source domain prior to a tensor factorization. The proposed Cross Domain-Multi Dimensional Tensor Factorization (CD-MDTF) mitigates the sparsity and cold-start problem by transferring the aggregated knowledge from the source domain to target domain. An approach based on linking and transferring knowledge is proposed in Zhao et al. (2017), where the main assumption is that correspondences among entities in different domains are unknown, but can be computed with a cost. Starting from this assumption, the authors propose a unified framework aimed at actively mapping entities in different domain and then transferring knowledge via collaborative filtering. This latter step leverages partial mappings among entities for knowledge transfer. The authors also show how to integrate in their framework various extended matrix factorization techniques in a transfer learning manner. An emphasis on the meaningfulness of the knowledge extracted from the source domain to the target domain is the main topic in Zhang et al. (2017). A clustering step among users and items is performed both in the source and target before a matrix factorization. Then, by comparing the resulting matrices, it is possible to evaluate the consistency of the information transfer.

Rather than sharing user or item latent factors for knowledge transfer, a different set of approaches analyzes the structure of rating data at the community level. These methods are based on the hypothesis that even when their users and items are different, close domains are likely to have user preferences sampled with the same population. Therefore, latent correlations may exist between preferences of groups of users for groups of items, which are referred to as rating patterns. In this context, rating patterns can act as a bridge that relates the domains, such that knowledge transfer can be performed in either adaptive or collective manners. In the adaptive setting, rating patterns are extracted from a dense source domain (Li et al. 2009a; Gao et al. 2013). In the collective setting, data from all the domains are pulled together and jointly exploited, even though users and items do not overlap across domains (Li et al. 2009b). In He et al. (2018), the authors propose to alleviate the data sparsity problem in a target domain by transferring rating patterns from multiple incomplete source domains. The proposed approach extracts rating patterns from a sparse source domain that are eventually combined with collaborative filtering to approximate the target domain and predict missing values. In particular, they take into account the effects related to negative transfer to obtain more robust recommendations.

3 Matrix factorization-based collaborative filtering

Matrix Factorization (MF) models are among the most popular approaches to collaborative filtering, and have been actively investigated since they were introduced in the context of the Netflix prize competition (Bell and Koren 2007a). As opposed to classic user- and item-based collaborative filtering heuristics (Herlocker et al. 1999; Linden et al. 2003), MF methods train a statistical model from the available data using machine learning techniques. Specifically, they perform a dimensionality reduction of the highly sparse rating matrix into a subspace of latent factors, which aim to capture implicit properties of users and items. In order for MF to be effective, the dimension k of the latent subspace is assumed to be much smaller than the number of users and items, \(k \ll \min (\vert U \vert , \vert I \vert )\), essentially acting as a bottleneck that compresses the sparse input while retaining enough information to explain the observed user–item interactions.

In this section we review the classical matrix factorization models for explicit and implicit feedback, which serve as the building blocks for our proposed models presented in Sect. 4, as well as the state-of-the-art approaches BPR and FISM that we use as baselines in our experiments.

3.1 Matrix factorization models for rating prediction

Recommendation models based on MF have their roots on the Latent Semantic Analysis (LSA) technique (Deerwester et al. 1990), widely used in Natural Language Processing and Information Retrieval. LSA attempts to automatically infer concepts implicit in text documents by approximating the term-document matrix with a truncated Singular Value Decomposition (SVD) of lower rank. The first MF approaches for recommendation borrowed the same idea, and applied it to the user–item matrix in the rating prediction task (Sarwar et al. 2000). In contrast to LSA, the SVD is not well defined for sparse matrices as those commonly found in recommender systems, and hence the above approaches relied on imputation techniques to fill the missing matrix entries before applying SVD.

Rather than filling the rating matrix, which may introduce inaccurate information, subsequent approaches aimed to only factorize observed ratings instead of the whole matrix. One of the first and most popular methods in this line is the model proposed by Funk (2006), in which each user u is assigned a vector \(\mathbf {p}_u \in {\mathbb {R}}^k\) of latent features automatically inferred from the data, and similarly each item i is assigned a vector \({\mathbf {q}}_i \in {\mathbb {R}}^k\) in the same subspace. Intuitively, latent features aim to capture properties implicit in the data—such as the amount of comedy or action in the case of movies—, but does not need to be interpretable at all, as this is not enforced in the model (Koren and Bell 2015). Ratings are then estimated as the dot product of latent feature vectors:

Equivalently, the rating matrix \(\mathbf {R}\) is factorized as \(\mathbf {R} \approx \mathbf {P} \mathbf {Q}^\top \), where \(\mathbf {P}\) is a \(\vert U \vert \times k\) matrix with the user vectors \(\mathbf {p}_u\) as rows, and respectively \(\mathbf {Q}\) is \(\vert I \vert \times k\) contains the \({\mathbf {q}}_i\) as rows. The values of these matrices are automatically estimated from the data, by minimizing the Mean Squared Error of the ratings predicted against the ratings observed in a training set. That is, \(\mathbf {P}\) and \(\mathbf {Q}\) are chosen to minimize to following loss function:

where \({\mathcal {R}}\) is the set of observed ratings, i.e., the set of non-zero entries of the rating matrix \(\mathbf {R}\), and \(\lambda >0\) is a regularization hyper-parameter used to prevent overfitting.

In (Funk 2006) this function is minimized using Stochastic Gradient Descent, a widely used optimization technique that iteratively updates the parameters in the opposite direction of the gradient. When applied to Eq. (2), this technique yields the following update rules for the parameters \(\mathbf {p}_u\) and \({\mathbf {q}}_i\) for each rating \(r_{ui}\) in the training set:

The learning rate\(\eta \) is a hyper-parameter that controls the extent to which the model parameters are updated in each iteration, and is carefully chosen; too large values may make the algorithm fail to converge, while too small values may make its convergence very slow. \(e_{ui}\) is the prediction error, and is defined as \(e_{ui} \triangleq r_{ui} - {\hat{r}}(u,i)\).

In addition to Stochastic Gradient Descent, other optimization techniques have been explored in the literature, such as Alternating Least Squares (Bell and Koren 2007b), which is the standard technique followed in MF models for positive-only feedback (Sect. 3.2).

The basic SVD model by Funk (2006) is easily extensible, and has served as a building block for more complex matrix factorization models. For instance, Koren (2008) proposed the SVD++ model, which includes additional parameters to account for implicit feedback in rating predictions. Further extensions of SVD introduce temporal variables to capture the evolution of user preferences through time (Koren and Bell 2015).

3.2 Matrix factorization models for positive-only feedback

The core ideas behind the standard Matrix Factorization model for collaborative filtering have also been applied to the item ranking task when positive-only feedback is available instead of numeric ratings. Recommendation models designed for this type of data must take into account its particular characteristics, most notably the absence of negative feedback, but also the possible uncertainty in the positive feedback, as an observed user–item interaction may not necessarily indicate a preference of the user towards the item.

In one of the most representative works in this direction, Hu et al. (2008) proposed an adaptation of the rating-based MF model described previously to deal with positive-only feedback. As opposed to the rating-based SVD, which only considers the observed ratings, Hu et al.’s method models the full set of \(\vert U \vert \cdot \vert I \vert \) interactions. Since negative feedback is not available in this scenario, the authors argue that the algorithm also has to model the missing information as an indirect source of negative user preferences. For such purpose, they introduce a parameter \(c_{ui}\) for each possible user–item pair that measures the confidence on the corresponding interaction, whether observed or not:

where \(k_{ui}\) is the count of implicitly collected interactions between user u and item i—such as number of clicks on a product web page on an e-commerce site, and the number of listening records of a given song in an online music provider—, and \(\alpha >0\) is a scaling parameter. When no interaction is observed, \(k_{ui}=0\) and the model assigns minimum confidence to the user–item pair, as it is unknown whether the lack of interaction is because the user really does not like the item, or just because the user does not know the item. Likewise, the more interactions are collected and the greater \(k_{ui}\), the larger is the confidence on that observation. Moreover, focusing on the item ranking task, Hu et al.’s approach only aims to predict if the user will interact with the item, rather than the actual number of observations \(k_{ui}\). Hence, a new set of variables is introduced so that \(x_{ui}=1\) if \(k_{ui}>0\), and \(x_{ui}=0\) otherwise.

Similarly to the SVD model for ratings, the recommendation score of item i for user u is estimated as the dot product of their corresponding latent feature vectors:

The model parameters \(\mathbf {p}_u\) and \({\mathbf {q}}_i\) are again automatically learned by minimizing the mean squared error for the score predictions, but now accounting for the different confidence levels and the full set of possible user–item pairs:

Again, the loss function can be minimized with different numerical optimization techniques such as Stochastic Gradient Descent, but in Hu et al. (2008) the authors propose an Alternating Least Squares (ALS) procedure that efficiently handles the greater cost of accounting for the missing values. Clearly, the loss function in Eq. (7) involves many more terms than that of Eq. (2), as the number of observed entries in the user–item matrix is usually very small due to the data sparsity.

The key observation behind ALS is that when one set of parameters is fixed, the optimization problem in Eq. (7) is convex and analytically solvable using ordinary least-squares estimation. In particular, fixing the \({\mathbf {q}}_i\) parameters and setting the gradient with respect to \(\mathbf {p}_u\) to zero yields the solution

where \(\mathbf {I}\) is the \(k \times k\) identity matrix, \(\mathbf {C}^u\) is a \(\vert I \vert \times \vert I \vert \) diagonal matrix with the \(c_{ui}\) values, and \(\mathbf {x}_u\) is a column vector of length \(\vert I \vert \) with the \(x_{ui}\) values. The same procedure can be applied by fixing the user factors, and optimizing the item factors, leading to the solution

Similarly, \(\mathbf {C}^i\) is a \(\vert U \vert \times \vert U \vert \) diagonal matrix with the \(c_{ui}\) confidence values, and \(\mathbf {x}_i\) is a column vector of length \(\vert U \vert \) containing the binary values of \(x_{ui}\).

As pointed out by the authors, the products \(\mathbf {Q}^\top \mathbf {C}^u \mathbf {Q}\) and \(\mathbf {P}^\top \mathbf {C}^i \mathbf {P}\) require time \({\mathcal {O}}(k^2\vert U \vert )\) and \({\mathcal {O}}(k^2\vert I \vert )\) for each user and item, respectively, and represent a computational bottleneck during the training phase. However, these terms can be computed more efficiently noting that \(\mathbf {Q}^\top \mathbf {C}^u \mathbf {Q} = \mathbf {Q}^\top \mathbf {Q} + \mathbf {Q}^\top (\mathbf {C}^u - \mathbf {I}) \mathbf {Q}\), where \(\mathbf {Q}^\top \mathbf {Q}\) is independent of the user and thus can be precomputed, and \(\mathbf {C}^u - \mathbf {I}\) only has non-zero entries in the diagonal for the \(\vert I(u) \vert \) items with \(k_{ui}>0\), which is much smaller than \(\vert I \vert \). Considering the computation of the matrix inverse, the total complexity of Eq. (8) for a single user is \({\mathcal {O}}(k^2\vert I(u) \vert + k^3)\). Likewise, the complexity for Eq. (9) is \({\mathcal {O}}(k^2\vert U(i) \vert + k^3)\).

The main advantage of ALS is that the optimal factors for each user in Eq. (8) can be computed in parallel once the item factors are fixed (P step). Symmetrically, once the user factors are obtained and fixed, the item factors in Eq. (9) can be found for each item in parallel (Q step). This observation leads to the alternating nature of ALS, respectively fixing one set of parameters and optimizing the other until convergence is reached or for a given number of iterations, as illustrated in Algorithm 1.

The ALS-based method by Hu et al. (2008) became the standard baseline for matrix factorization models with positive-only feedback, and has been extended and improved in subsequent works since it was first proposed. One notable paper by Pilászy et al. (2010) presents a new training procedure to boost the time complexity of the P step of each user to \({\mathcal {O}}(k^2 + k\vert I(u) \vert )\), and analogously the Q step. In order to achieve this significant improvement, the authors propose an approximate solution to the least-squares problem in each step. Rather than directly finding the k-dimensional solution as in Eqs. (8) and (9), which involves the costly computation of a matrix inverse, their approach iteratively solves k one-dimensional least squares problems, one for each latent dimension, much less expensive to solve. As reported in the paper, the loss of accuracy due to the approximate algorithm is small compared to the saved time for training. In subsequent work, Takács and Tikk (2012) extended ALS to a ranking-based MF approach that learns to predict the relative ordering of items instead of individual point-wise scores. More recently, Paquet and Koenigstein (2013) proposed a graph-based Bayesian model that is able to capture the meaning of missing values, distinguishing between a user disliking an item or being unaware of it.

3.3 Bayesian personalized ranking criterion

Matrix Factorization models can be easily trained to reduce the prediction error via gradient descent methods, alternating least-squares (ALS), and MCMC. If the problem is formulated as a top-N recommendation task, they can be trained using a learning to rank approach like the Bayesian Personalized Ranking Criterion (BPR) from Rendle et al. (2009). Given \(\varTheta \) as the model parameters, and \(\succ _u\) the unknown preference structure we want to learn for user u, the optimal personalized ranking using Bayesian formulation can be found optimizing the posterior probability:

The BPR criterion is optimized using a stochastic gradient descent algorithm on a set \(D_S\) of triples (u, i, j), with \(i \in I^u\) and \(j \not \in I^u\), selected through a random sampling from a uniform distribution. From the former, the overall optimization criterion BPR-OPT can be derived defining the individual probability with a sigmoid function \(\sigma (\cdot )\):

Given the sigmoid function, the update step is defined as:

where \(\alpha \) is the chosen learning rate, and the values \({\hat{x}}_{uij}\) are set to the difference of the predicted values, between a positive element (which is present in a user’s history), and a negative element (randomly extracted from the remaining items). In the classical BPR formulation, a Biased Matrix Factorization model (Koren and Bell 2015) is adopted in which the prediction \({\hat{x}}_{ui}\) corresponds to:

where \(b_u\) and \(b_i\) are, respectively, the learned user and item biases. The difference of the predicted values, \({\hat{x}}_{uij}\), is thus:

By computing the partial derivatives, the updates of the parameters for the model are:

Using Eqs. (14–18) the model parameters can be iteratively updated to maximize the BPR-OPT criterion. The algorithm updates sequentially the model using each sampled triple and continues the training until it reaches the convergence (usually the provided number of iterations).

3.4 FISM

Matrix factorization has also been exploited to alleviate the sparsity problem in generating the item similarity matrix. In FISM, Kabbur et al. (2013) proposed to learn the item similarity matrix as the product of two low dimensional latent factor matrices. Given two items i and j, the similarity sim(i, j) between them is computed as the dot product between the corresponding factors from \(\mathbf{P }\) and \(\mathbf{Q }\), i.e., \(sim(i, j) = \langle {\mathbf {p}}_j,{\mathbf {q}}_i\rangle \). The rating for a given user u on item i is estimated as

In order to solve the top-N recommendation problem, the authors used a ranking loss inspired by Bayesian Personalized Ranking (BPR) (Rendle et al. 2009), which optimizes the area under the curve (AUC). The consequent model, FISMauc, is learned solving the following minimization problem:

where the vectors \(b_i\) correspond to the vector of item biases. The regularization terms are used to prevent overfitting, and \(\beta \) and \(\gamma \) are the regularization weights for latent factor matrices, and item bias vector respectively.

4 Proposed matrix factorization models for cross-domain recommendation

In contrast to previous works that rely on graph-based methods for exploiting semantic metadata, the approach proposed in this paper first computes inter-domain content-based similarities between the items, and then exploits these similarities to regularize the joint learning of matrix factorizations in the source and target domains. In particular, we present three alternative hybrid models that make different assumptions about the relationships between source and target domain item latent factors, simultaneously exploiting user preferences and item metadata.

Moreover, in the paper we detail our adaptations of the fast alternating least squares training algorithm for matrix factorization proposed by Pilászy et al. (2010), in order to deal with the increased complexity of our models, which not only learn the auxiliary source domain user preferences, but also the item metadata used to bridge the domains.

Items from different domains tend to have very diverse attributes that are not straightforward related. For instance, a book may be characterized by its author or by its book genres, and a movie can be described using its cast, director or movie genres. In fact, content-based features are often different between domains, and even when they refer to related concepts, such as book genres and movie genres, the features may not be directly aligned, e.g., funny movies vs. comedy books.

In order to overcome the heterogeneity of features of items from different domains, we propose to exploit Linked Data for linking entities from multiple and diverse domains. Specifically, we map the items in our datasets to entities in DBpedia (Lehmann et al. 2015), a multi-domain repository that provides a semantic-based, structured representation of knowledge in Wikipedia.Footnote 9 In Sect. 5.1 we shall describe the process of mapping items to semantic entities from DBpedia. Once the items are mapped to their corresponding entities, we use the DBpedia graph to compute semantic similarities between such entities, mining both the attributes and the structure of the graph with semantic relations. More specifically, we exploit the information in DBpedia to compute a semantic similarity matrix \(\mathbf {S} \in {\mathbb {R}}^{\vert {\mathcal {I}}_S \vert \times \vert {\mathcal {I}}_T \vert }\) between the source domain items \({\mathcal {I}}_S\) and the target domain items \({\mathcal {I}}_T\):

In Sect. 5.4 we shall report recommendation performance results by using several semantic similarity metrics from the state of the art.

The computed inter-domain item similarities are then used to link the domains for cross-domain recommendation. In the cold-start, when a user has rated a few (if any) items in the target domain, a recommender system could suggest the user items in the target domain that are semantically similar to those the user liked in the source domain. Hence, the system could be effective only if there is an overlap of users between the domains. Moreover, even cold start users in the target domain should have some preferences in the source domain.

In the next subsections we present our three recommendation models based on the exploitation of semantic similarities to regularize item factors in MF, so that similar items from different domains tend to have similar parameters. In this way, even if the user’s preferences in the target domain are unknown, a recommender system could suggest the user with target items that are most similar to those she preferred in the source.

4.1 Regularization through similarity prediction

The first semantic-based matrix factorization cross-domain model we propose is based on the assumption that latent vectors of related items should explain the items semantic similarities, in addition to the users’ preferences. That is, we not only seek to predict the preferences \(r_{ui} \approx \langle \mathbf {p}_u, {\mathbf {q}}_i \rangle \), but also the inter-domain similarities \(s_{ij} \approx \langle {\mathbf {q}}_i, {\mathbf {q}}_j \rangle \), where \(i \in {\mathcal {I}}_S\) and \(j \in {\mathcal {I}}_T\).

Hence, our model jointly factorizes the rating and inter-domain item similarity matrices that link the source and target domains. Let \({\mathcal {U}}= {\mathcal {U}}_S \cup {\mathcal {U}}_T\) be the set of all users, which we assume overlaps between the domains, and let \({\mathcal {I}}= {\mathcal {I}}_S \cup {\mathcal {I}}_T\) be the set of all items, which we assume do not overlap. Our model learns a latent vector \(\mathbf {p}_u \in {\mathbb {R}}^k\) for each user \(u \in {\mathcal {U}}\), but separately models source and target domain items \({\mathbf {q}}_i\) and \({\mathbf {q}}_j\), with \(i \in {\mathcal {I}}_S\) and \(j \in {\mathcal {I}}_T\), as follows:

where \(\mathbf {Q}_S\) and \(\mathbf {Q}_T\) are matrices containing the item latent vectors as rows from the source and target domains, respectively. We note that the summation in the first term iterates over all items \(a \in {\mathcal {I}}\) from both domains, as we want to factorize the source and target user–item preference matrices simultaneously. The cross-domain regularization parameter \(\lambda _C > 0\) controls the contribution of the inter-domain semantic similarities; large values of the parameter will force items to have too similar latent vectors, whereas low values will result in limited transfer of knowledge between domains.

As in standard matrix factorization, we train our model using Alternating Least Squares. First, we fix \(\mathbf {Q}_S\) and \(\mathbf {Q}_T\), and solve analytically for each \(\mathbf {p}_u\) by setting the gradient to zero. Since the user factors do not appear in the additional cross-domain regularization term, we obtain the same solution as for the baseline MF model (see Eq. 8):

In order to simplify the notation, we have defined the matrix \(\mathbf {Q}\) as the row-wise concatenation of \(\mathbf {Q}_S\) and \(\mathbf {Q}_T\). The matrix \(\mathbf {C}^u\) is a diagonal matrix with the confidence values \(c_{ua}\) for all \(a \in {\mathcal {I}}\), and the vector \(\mathbf {r}_u\) contains the preferences of user u, again for all items \(a \in {\mathcal {I}}\).

Next, we fix the user factors \(\mathbf {P}\) and the target domain item factors \(\mathbf {Q}_T\), and compute the optimal values for the source domain item factors. Again, by setting the corresponding gradient to zero and solving analytically we obtain:

As previously, the vector \(\mathbf {r}_i\) contains the preferences assigned to item i, and \(\mathbf {s}_i\) is the ith row of the inter-domain semantic similarity matrix \(\mathbf {S}\). Finally, we proceed as before fixing \(\mathbf {P}\) and \(\mathbf {Q}_S\) to compute the optimal solution for the target domain item latent vectors:

The computation of the optimal factors can be parallelized within each step, but the larger number of items to consider and the extra step required for the source domain greatly increase the training time with respect to the MF baseline. In order to address this issue, we adapt the fast RR1 training algorithm for ALS proposed by Pilászy et al. (2010). Since the computation of the user factors is the same as in the original MF model, the procedure remains the same for the P-step. For the source domain Q-step, by inspecting Eqs. (22) and (24), we note that the additional terms that arise from the inter-domain similarities can be treated just like user preferences as follows. For each source item i:

-

1.

Generate examples for each rating \(r_{ui}\) as for baseline MF [see Pilászy et al. (2010)]

-

2.

For each target item \(j \in {\mathcal {I}}_T\):

-

Generate an input example \(\mathbf {x}_j := {\mathbf {q}}_j\).

-

Use the similarity as the dependent variable, \(y_j := s_{ij}\).

-

Use a constant confidence value \(c_j := \lambda _C\).

-

The parameter to optimize is \(\mathbf {w} := {\mathbf {q}}_j\).

-

The above procedure will produce the similarity terms of Eq. 24, which can be defined by means of the confidence matrix \(\tilde{\mathbf {C}}^i = \lambda _C \mathbf {I}\). The procedure for the target domain Q-step is completely analogous.

4.2 Regularization based on item neighborhoods

Our second semantic-based matrix factorization cross-domain model exploits the item semantic similarities in a different fashion. Instead of forcing pairwise item interactions to reproduce the observed similarity values, the approach we present here leverages \(\mathbf {S}\) to regularize the item latent vectors, so that feature vectors of similar items are pushed together in the latent space. Intuitively, items that are semantically similar should also have similar latent parameters.

As previously, let \({\mathcal {U}}= {\mathcal {U}}_S \cup {\mathcal {U}}_T\) and \({\mathcal {I}}= {\mathcal {I}}_S \cup {\mathcal {I}}_T\) be the sets of all users and items, respectively. Our approach jointly factorizes the source and target domain rating matrices, and regularizes similar item factors proportionally to the items similarity. However, instead of considering all the potentially similar source domain items, we limit the regularization of a target domain item \(j \in {\mathcal {I}}_T\) to its neighborhood, i.e., to the set \(N(j) \subseteq {\mathcal {I}}_S\) of the top-n most similar source domain items:

We note that items with greater similarity values are more heavily regularized, whereas items with values of \(s_{ij} \approx 0\) in their neighborhoods are barely affected. However, it may still be convenient to regularize such items so that they benefit from cross-domain information, and thus may be eligible for recommendation to cold start users. Therefore, we also experiment normalizing the similarity scores in the item neighborhoods so that \(\sum _{i \in N(j)} s_{ij} = 1\). In this way all target items are equally regularized, but each is affected by its source domain neighbors proportionally to their similarity scores.

By assigning latent vectors to target domain items close to those of similar source domain items, our model is able to generate recommendations in cold start settings. Specifically, let \({\mathbf {q}}_j\) be the latent vector learned for target item \(j \in {\mathcal {I}}_T\), and let \({\mathbf {q}}_i\) be the latent vector of source item \(i \in {\mathcal {I}}_S\), which we assume is semantically similar to j. Our model will regularize both factors so that their distance \(\left||{\mathbf {q}}_j - {\mathbf {q}}_i \right||\) is small, or equivalently, \({\mathbf {q}}_j \approx {\mathbf {q}}_i\). Consider now a cold start user u who only provided preferences in the source domain, so that her corresponding latent vector \(\mathbf {p}_u\) is therefore only adjusted using source domain preferences. In standard MF, it is not guaranteed that \(\mathbf {p}_u\) will extrapolate to the target domain, and will provide an accurate prediction for \({\mathbf {q}}_j\). In contrast, our model ensures that \(\langle \mathbf {p}_u, {\mathbf {q}}_j \rangle \approx \langle \mathbf {p}_u, {\mathbf {q}}_i \rangle \), i.e., target domain items yield relevance prediction scores close to that of similar source domain items. Hence, u will be recommended with a target domain item j if the user liked the source domain item i, or if i would be recommended to u in the source domain.

Once more, we train our neighborhood-based matrix factorization model using Alternating Least Squares. As in the previous model, the user factors are not affected by the extra regularization, and can be computed again using Eq. (23), leaving the P-step unchanged. For the target domain item factors \({\mathbf {q}}_j\) we proceed as usual, fixing the user and source item factors, and finding the values such that \(\frac{\partial {\mathcal {L}}}{\partial {\mathbf {q}}_j} = 0\), which yields the solution:

Repeating the same procedure for the source item factors \({\mathbf {q}}_i\) we obtain:

where \(N^{-1}(i)\) is the inverse neighborhood of item i, i.e., the set of target domain items that have i among their neighbors: \(N^{-1}(i) = \{ j \in {\mathcal {I}}_T | i \in N(j) \}\).

Unlike the model presented in the previous section, we cannot apply RR1 directly by treating the new similarity terms as additional user preferences. Instead, we derive again the update rules for each component of the source and target domain item parameters. As mentioned before, user parameters remain unchanged. Let \(j \in {\mathcal {I}}_T\) be a target item, and consider the optimization of the \(\alpha \)th component \(q_{j\alpha }\) of its corresponding latent vector \({\mathbf {q}}_j\). We can rewrite the loss in Eq. (26) as a function only of \(q_{j\alpha }\) as follows:

where \(e_{uj} \triangleq r_{uj} - \sum _{\beta \ne \alpha } p_{u\beta } q_{j\beta }\), and the constant includes terms that do not depend on \(q_{j\alpha }\). If we set the derivative \(\frac{\mathrm {d} {\mathcal {L}}_\alpha }{\mathrm {d} q_{j\alpha }} = 0\), we obtain:

Using the optimizations described in Pilászy et al. (2010), the computational cost of the above formula for all items is \({\mathcal {O}}(k^2\vert {\mathcal {U}} \vert + k\vert {\mathcal {R}} \vert + n\vert {\mathcal {I}}_T \vert )\), since all the neighborhoods are formed using the top n most similar items, \(\vert N(j) \vert \le n\). Applying the same procedure to the source domain item factor \({\mathbf {q}}_i\) we obtain:

The main difference with respect to Eq. (30) is that the sets \(N^{-1}(i)\) are not bounded, as a source item can potentially be the neighbor of an arbitrary number of target items, so that \(\vert N^{-1}(i) \vert \le \vert {\mathcal {I}}_T \vert \), resulting in a theoretical worst-case cost of \({\mathcal {O}}(k^2\vert {\mathcal {U}} \vert + k\vert {\mathcal {R}} \vert + \vert {\mathcal {I}}_S \vert \vert {\mathcal {I}}_T \vert )\). We observe, however, that in practice most of the source items appear only in a few neighborhoods and that the algorithm is still very efficient.

4.3 Regularization based on item centroids

When neighbor source domain items are mutually diverse, the neighborhood-based model presented in the previous section may struggle to regularize a target domain item that has to be simultaneously close to all its neighbors. The model we propose in this section works like the neighborhood-based model, but, instead of using the neighbor source domain items individually in the regularization, it uses their centroid (average) latent vector:

The same considerations regarding the neighborhood N(j) and the normalization of the similarity scores also apply to this model. However, the effect on the item relevance predictions for cold start users is different. Let \({\mathbf {q}}_j\) be an item in the target domain, and let N(j) be its neighborhood of most similar source domain items. The regularization scheme in our centroid-based approach aims to minimize the distance \(\left||{\mathbf {q}}_j - \sum _{i \in N(j)} s_{ij} {\mathbf {q}}_i \right||\), so that the latent vector of item j is close, on average, to those of the source items in N(j), i.e., \({\mathbf {q}}_j \approx \sum _{i \in N(j)} s_{ij} {\mathbf {q}}_i\). Let u be a cold start user in the target domain that has some preferences in the source domain. Again, her feature vector \(\mathbf {p}_u\) is only learned using the user’s source preferences, and may not be reliable for computing relevance predictions for target domain items in standard MF. Our model, however, ensures that

That is, the predicted relevance score is roughly the average of the relevance scores for the neighbor source domain items, weighted by their corresponding semantic similarity.

As in the previous models, the user parameters are not affected by the item regularization terms, and can be computed in the standard fashion using Eq. (23). For the target domain item factors \({\mathbf {q}}_j, j \in {\mathcal {I}}_T\), we set the gradient of Eq. (32) to zero to obtain:

Comparing the above to Eq. (27) we observe that both are equivalent when \(\sum _{i \in N(j)} s_{ij} = 1\), i.e., normalizing the similarity values has the same effect of the centroid-based regularization on the target domain item factors. The solution for source item factors \({\mathbf {q}}_i\), in contrast, has a different form:

where we have defined \(\mathbf {z}_{j{\backslash }i} = \sum _{l \in N^{-1}(i), l \ne i} s_{lj} {\mathbf {q}}_l\) to simplify the notation. We note that, differently to the previous models, the computation of the source domain latent vectors cannot be parallelized, as the value of \({\mathbf {q}}_i, i \in {\mathcal {I}}_S\) depends on the values of other \({\mathbf {q}}_l, l \in {\mathcal {I}}_S\) through the parameter \(\mathbf {z}_{j{\backslash }i}\). As a result, the training process can be slow when the set of source domain items is large. In our experiments, however, we observed that the time penalty of computing the source factors sequentially is usually compensated by the faster RR1 algorithm, although we do not provide any quantitative analysis as it falls out of the scope of this work.

In order to apply RR1 to our centroid-based approach, we derive again the solutions for each \(\alpha \)th coordinate separately. Once more, the solution for the user factors remains the same as it is not affected by the regularization terms. For the target domain item factors \({\mathbf {q}}_j\), we consider the loss in Eq. (32) as a function only of the \(\alpha \)th component \(q_{j\alpha }\):

As previously, the constant includes terms that do not depend on \(q_{j\alpha }\), and \(e_{uj}\) is defined as in Eq. (29). Setting the derivative \(\frac{\mathrm {d} {\mathcal {L}}_\alpha }{\mathrm {d} q_{j\alpha }} = 0\) yields:

We note, once again, the similar form of the above solution with respect to the previous model in Eq. (30). If we apply the same procedure to the source domain item factors, we obtain:

The computational complexity for the target domain factors is equivalent to the model from the previous section, whereas for the source domain factors it is \({\mathcal {O}}(k^2\vert {\mathcal {U}} \vert + k\vert {\mathcal {R}} \vert + n \vert {\mathcal {I}}_S \vert \vert {\mathcal {I}}_T \vert )\) in the worst case, which is similar to the neighborhood-based model since the size of the neighborhoods n is in general small.

5 Experiments

In a first experiment, we compared several state-of-the-art semantic similarity metrics for content-based recommendation, aiming to understand which is more suitable for later injecting in our cross-domain MF models, and achieved the best results using the link-based approach by Milne and Witten (2008). Second, we evaluated the ranking precision and diversity of the recommendations computed by the proposed models. We show that, depending on the involved source and target domains, our models generate more accurate suggestions than the baselines in severe cold start situations. Moreover, the proposed approaches provide a better trade-off between accuracy and diversity, which are in general difficult to balance.

5.1 Dataset

Our datasetFootnote 10 initially consisted of a large set of likes assigned by users to items on Facebook. Using the Facebook Graph API, a user’s like is retrieved in the form of a 4-tuple with the following information: the identifier, name and category of the liked item, and the timestamp of the like creation, e.g., {id: “35481394342”, name: “The Godfather”, category: “Movie”, created_time: “2015-05-14T12:35:08+0000”}. The name of an item is given by the user who created the Facebook page of such item. In this context, distinct names may exist for a particular item, e.g., The Godfather, The Godfather: The Movie, The Godfather - Film series, etc. Users thus may express likes for different Facebook pages which actually refer to the same item. Aiming to unify and consolidate the items extracted from Facebook likes, we developed a method that automatically maps the items names with the unique URIs of the corresponding DBpedia entities, e.g., http://dbpedia.org/resource/The_Godfather for the identified names of The Godfather movie.

Linking items to DBpedia entities Given a particular item, we first identified DBpedia entities that are labeled with the name of the item. For such purpose, we launched a SPARQL query targeted on the subjects of triples that have rdfs:labelFootnote 11 as property and the item title as object. The next query is an example for The Matrix 2 title:

To resolve ambiguities in those names that correspond to multiple items belonging to different domains, we specify the type of the item we wanted to retrieve in each case. Specifically, the previous query includes a triple clause with rdf:typeFootnote 12 (or dbo:typeFootnote 13) as property. Hence, in the given example, the subject The Matrix 2 refers to the “movie” type, which is associated to the dbo:Film class in DBpedia. The item types were set from the item categories provided in Facebook, and their associated DBpedia and YAGOFootnote 14 classesFootnote 15 were identified by manual inspection of the rdf:type values of several entities. Table 1 shows the list of item types and DBpedia/YAGO classes we considered for the three domains of our dataset.

Moreover, running the previous query template we observed that a number of items were not linked to DBpedia entities because the labels corresponded to Wikipedia redirection webpages. In these cases, to reach the appropriate entities the query makes use of the dbo:wikiPageRedirects property. The result of the previous query for The Matrix 2 is http://dbpedia.org/resource/The_Matrix_Reloaded, which actually is the DBpedia entity of the second movie in The Matrix saga. Here, it is important to note that thanks to the Wikipedia page redirect component we were able to link items whose names do not have a direct syntactic match with the label of its DBpedia entity, but with the label of a redirected entity, e.g., the Matrix 2 title matches the The Matrix Reloaded entity.

Final semantically annotated dataset For every linked entity, we finally accessed DBpedia to retrieve the metadata that afterward will be used as input for the recommendation models. In this case, we launched a SPARQL query asking for all the properties and objects of the triples that have the target entity as subject. Following the example given before, such a query would be:

This query returns all the DBpedia property-value pairs of the dbr:The_Matrix_ReloadedFootnote 16 entity. However, since our ultimate goal is item recommendation, we should only exploit metadata that may be relevant to relate common preferences of different users. Thus, we filtered the query results by considering certain properties in each domain. Specifically, Table 2 shows the list of DBpedia properties selected for each of the three domains of our dataset. Hence, for example, for the movie items, we would have as metadata the movies genres, directors, and actors, among others.

The items and relations shown in the table thus represent a semantic network that is automatically obtained from DBpedia for each particular domain. Table 3 shows statistics of the dataset for the three domains of interest, namely books, movies, and music. Additionally, users may express preferences in more than one domain. Table 4 shows the number of users shared between each pair of domains.

Semantically enriched item profiles Fixing books, movies, musicians and bands as the target items to be recommended, we can distinguish the following three types of item metadata obtained:

-

attributes, which correspond to item-attribute entities associated to the considered item types of Table 2, and are distinct to the entities of target items, e.g., the genre(s), director(s) and actors of a particular movie.

-

related items, which correspond to the item-item properties in Table 2 that derive related entities, e.g., the novel a movie is based on (dbo:basedOn property), the prequel/sequel of a movie (dbo:previousWork/dbo:subsequentWork properties), or the musicians belonging to a band (dbo:bandMember property).

-

extended attributes, which correspond to attribute-attribute properties that generate extended item attributes, originally not appearing as metadata, e.g., the subgenres of a particular music genre (dbo:musicSubgenre property).

The above three types of item metadata constitute the semantically enriched item profiles that we propose to use in our recommendation models. We note that they differ from the commonly used content-based item profiles composed of plain attributes. We also note that in the conducted experiments, the results achieved by exploiting the enriched profiles were better than those achieved by only using item attributes.

5.2 Evaluation methodology and metrics

The evaluation of the proposed models was conducted utilizing a modified user-based five-fold cross-validation strategy, based on the methodology by Kluver and Konstan (2014) for cold-start evaluation. Our goal is to understand how the different approaches perform as the number of observed likes in the target domain increases. First, we divide the set of users into five subsets of roughly equal size. In each cross-validation stage, we keep all the data from four of the groups in the training set. Then, for each user u in the fifth group—the test users—we randomly split her likes into three subsets, as depicted in Fig. 1:

-

1.

Training data initially filled with u’s likes and iteratively downsampled discarding one by one to simulate different cold start profile sizes,

-

2.

Validation data containing the set of likes used for tuning hyperparameters, and

-

3.

Testing data used to compute the performance metrics.

Overview of the cold start evaluation setting in a given cross-validation fold. The box indicates the test users in the current fold, whose profiles are split into training, validation, and testing sets. Different cold start profle sizes are simulated by sequentially adding likes to their training sets—four in the figure

The above procedure was modified for the cross-domain scenario by extending the training set with the full set of likes from the auxiliary domain, in order to obtain the actual training data for the predictive models. For each cold start profile size, we built the recommendation models using the data in the final training set. Then, for each test user, we generated a ranked list of the top 10 suggested items from the set of target domain items in the training set that are not yet known to the user. The performance is estimated from the output of each model and the test set using rank-based metrics. We note that in our evaluation, any item ranked after position 10 by the model is considered not relevant when computing the metrics, as we are interested in the more realistic setting where the user only examines a limited subset of the recommendations.

Regarding the metrics, we used the Mean Reciprocal Rank (MRR) to evaluate the ranking accuracy of the recommendations, which computes the average reciprocal rank of the first relevant item in the recommendation list. We also examined other metrics such as Precision and MAP, but we found similar behavior to MRR so for brevity we omit them in our results. Binomial Diversity Framework (BinomDiv) (Vargas et al. 2014) was used to evaluate the individual diversity, namely the degree of diversity in the recommendation lists based on item genres extracted from DBpedia.

5.3 Evaluated methods

We compared the performance of our proposed methods against the following baseline algorithms:

-

POP Non personalized baseline that always recommends the most popular items not yet liked by the user. Popularity is measured as the number of users in the dataset that liked the item.

-

UNN User-based nearest neighbors with Jaccard similarity. The size of the neighborhood is tuned for each dataset using a validation set.

-

INN Item-based nearest neighbors with Jaccard similarity and indefinite neighborhood size.

-

iMF Matrix factorization method for positive-only feedback (Hu et al. 2008) trained using the fast ALS technique by Pilászy et al. (2010).

-

BPR Bayesian personalized ranking from implicit feedback (Rendle et al. 2009). We used for our experiments the implementation available in LibRec (Guo et al. 2015).

-

FISM Factored item similarity model by Kabbur et al. (2013). We used the implementation of the FISMauc variant optimized for the item ranking problem available in LibRec (Guo et al. 2015).

-

HeteRec Graph-based recommender system proposed in Yu et al. (2014), based on a diffusion method of user preferences following different meta-paths.

-

SPRank Originally proposed in Di Noia et al. (2016), it implements a hybrid approach to compute recommendations with LOD datasets. We used a publicly available implementation of SPRank.Footnote 17

With the exception of POP (which only uses target domain data) and SPRank, we considered the application of all the baselines to both single- and cross-domain scenarios. We were not able to compute meaningful results for SPRank by using DBpedia properties shown in Table 2 due to the structure of the connections between domains in the underlying knowledge graph. All the paths calculated by SPRank to link items in different domains resulted in being not very relevant thus bringing to shallow performances of the algorithm. Moreover, given the datasets adopted for the experimental evaluation, we were not able to generate all the meta-paths needed to compute recommendations. We used machines with up to 3 TB of disk space but it was not sufficient.

Hereafter we use the prefix CD- to indicate that the algorithm is operating in cross-domain mode using the union of the rating matrices from the source and target domains. We did not consider for our evaluation the SemanticSVD++ method by Rowe (2014), as it is designed for rating prediction rather than item ranking. Moreover, preliminary tests showed that its performance was much lower than the other methods, and that its training time was about one order of magnitude larger.

We measured the performance of the three methods presented in this paperFootnote 18 against the previous baselines:

-

SimMF Our matrix factorization model regularized with similarity prediction described in Sect. 4.1.

-

NeighborMF Our proposed matrix factorization model with neighborhood-based regularization from Sect. 4.2.

-

CentroidMF Our matrix factorization model from Sect. 4.3 that uses the neighbor’s centroid to regularize the target domain item factors.

We tuned the hyperparameters of the considered recommendation models using a held-out validation set of likes, as we explain in the next section. For UNN, we only had to select the size of the user neighborhoods. For the matrix factorization models, in contrast, the number of hyperparameters is larger, namely, the dimensionality of the latent factor space k, the amount of regularization \(\lambda \), and the confidence parameter for positive-only feedback \(\alpha \). Moreover, the models proposed in this paper also include the cross-domain regularization rate \(\lambda _C\), which controls the contribution of the inter-domain item similarities. Finally, for NeighborMF and CentroidMF, we tuned the size n of the item neighborhoods N(j), and the possibility to normalize the neighbors’ similarities so that the sum to 1, as explained in Sect. 4.2.

The high number of parameters to tune rules out the possibility of performing a grid search for the best values. Hence, we used Bayesian Optimization techniques (Snoek et al. 2012) that train Machine Learning models to predict candidate values that are likely to maximize a given function while simultaneously reducing the uncertainty over unknown parameter values.

We tuned the parameters of the single-domain methods for each profile size only on the target domain. For simplicity, we used the same values for their cross-domain variants due to the combinatorial explosion of possible configurations. A quick inspection on books-movies and movies-music with profile size 3 did not reveal significant differences between the parameters on average. However, we acknowledge that our experiments could be improved by individually tuning each algorithm in each configuration, which we leave for future work. For UNN, the optimal number of neighbors was \(n = 50\) for books, and \(n = 100\) for movies and music. For iMF we obtained the optimal parameters \(k = (10, 29, 21), \lambda = (10^{-5}, 0.823, 1)\), and \(\alpha = (6, 7, 10)\) for books, movies, and music, respectively. For BPR we used \(\lambda = 0.01\) for regularization and \(\eta = 0.01\) as learning rate. In the case of FISM, we used \(\lambda = 0.001\) and \(\eta = 10^{-5}\). The optimal values for our proposed cross-domain models are reported in Table 5.

5.4 Results

In this section we present the results of the conducted experiments to evaluate the proposed matrix factorization models. First, we analyze several semantic relatedness metrics to compute the inter-domain item similarities. Next, we report the ranking accuracy and diversity of the evaluated recommendation approaches, and study how the size and diversity of the source domain user profile impacts on the target recommendations.

5.5 Inter-domain item semantic similarity

The goal of our first experiment is to analyze the performance of several semantic relatedness metrics to compute the inter-domain similarities that we later exploit in our matrix factorization models. We considered the following strategies:

-

TF-IDF Semantically-enriched item profiles (see Sect. 5.1) are used to build TF-IDF vector profiles based on the metadata of each item. Given an item i, the enriched profile corresponds to a set of paths \(\langle i, \rho , e\rangle \) where \(\rho \) is a predicate (or a sequence of predicates) in the semantic network\(\mathcal {SN}\) of a domain \(\mathcal {D^i}\). The considered predicates are those depicted in Table 2, and described as attributes, related items, and extended attributes. We may build the set of all possible metadata for all items as \(F = \{\langle \rho , e \rangle \mid \langle i, \rho , e\rangle \in \mathcal {SN} \text { with } i\in I \}\). Each item can be then represented as a vector of weights \({{\omega }_{\mathbf {i}}} = [\omega _{(i,1)},\ldots ,\omega _{(i,\langle \rho ,e\rangle )},\ldots , \omega _{(i,|F|)}]\) where \(\omega _{(i,\langle \rho ,e\rangle )}\) is computed as the normalized TF-IDF value for \(\langle \rho ,e\rangle \):

$$\begin{aligned} \omega _{(i,\langle \rho ,e\rangle )} = \underbrace{ \frac{|\{\langle \rho ,e\rangle \mid \langle i , \rho , e\rangle \in \mathcal {SN}\}|}{\sqrt{\sum \limits _{\langle \rho ,e\rangle \in F} |\{\langle \rho , e\rangle \mid \langle i , \rho , e \rangle \in \mathcal {SN}\}|^2}}}_{TF } \times \underbrace{ \log {\frac{|I|}{|\{j \mid \langle j,\rho ,e\rangle \in \mathcal {SN} \text { and } j \in I\}|}} }_{IDF} \end{aligned}$$The similarity score between a source domain item and a target domain item is computed as the cosine of their corresponding TF-IDF vectors.

-

ESA The Explicit Semantic Analysis technique proposed by Gabrilovich and Markovitch (2007). Instead of using the semantic metadata, we map each item to its corresponding Wikipedia article. Then, based on the text of the article, ESA extracts a set of other related Wikipedia articles, which represent semantic concepts, and builds a TF-IDF profile from the extracted concepts. Finally, the similarity score between two items is computed as the cosine of their corresponding concept-based vectors.

-

M&W The approach proposed by Milne and Witten (2008) computes the semantic relatedness between two items using the overlap of their sets of inlinks and outlinks in the Wikipedia hyperlink graph.

-

Katz Based on Katz’s centrality measure, the relatedness between two items is computed as the accumulated probability of the top shortest paths between their corresponding entities in the semantic network (Hulpus et al. 2015).

We evaluated the previous semantic relatedness metrics indirectly by comparing their performance in the item recommendation task. For such purpose, we chose a content-based recommendation model with no parameters, so that we can fairly measure the effect of each similarity on the item ranking quality. According to this simple model, the relevance score of an item is computed as the accumulated similarity with the items in the user’s profile:

where \(s_{ij}\) is computed any of the methods described above.

The results of our experiment are shown in Table 6. For an easier comparison according to the methodology from Sect. 5.2, we averaged the MRR scores for all the cold start sizes in each source–target domain combination. From the table we conclude that M&W is the best performing metric, beating all the other approaches except when considering the movie domain as source, in which case it is still competitive. Hence, in the following experiments we evaluate our proposed matrix factorization models using M&W as the backing semantic similarity. We note that better results could be achieved in real-world systems by selecting the optimal similarity metric for each domain. However, using the same metric simplifies the experiments and can only harm the performance of our proposed models, as the baselines do not exploit such information. Finally, we note that the low values for MRR are due to the simple recommendation algorithm chosen for this experiment.

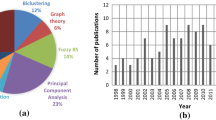

5.6 Item ranking accuracy

In our second experiment we analyze the accuracy of the item rankings generated by the evaluated recommendation approaches. We aim to understand if cross-domain variants are in general more effective than single-domain ones, and whether the proposed matrix factorization models are able to outperform the other methods in cold start settings.