Abstract

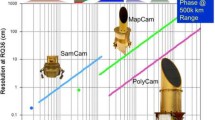

The NASA Mars InSight lander was successfully launched from Earth in May 2018 and is scheduled to land on Mars in November 2018. The key objective of the InSight mission is to investigate the interior structure and processes of Mars using a seismometer and heat flow probe that must first be placed onto the Martian surface by a robotic arm. The lander is equipped with two cameras to assist in this instrument deployment task. The Instrument Deployment Camera (IDC) is mounted to the lander robotic arm and will acquire images of the lander and surrounding terrain before, during, and after the instrument deployment activities. The IDC has a field of view (FOV) of \(45^{\circ} \times45^{\circ}\) and an angular resolution of 0.82 mrad/pixel at the center of the image. The Instrument Context Camera (ICC) is mounted to the lander and will acquire wide-angle views of the instrument deployment activities. The ICC has a FOV of \(124^{\circ} \times124^{\circ}\) and an angular resolution of 2.1 mrad/pixel at the center of the image. The IDC and ICC cameras are flight spare engineering cameras from the Mars Science Laboratory (MSL) mission. The InSight project upgraded the inherited cameras from single-channel greyscale to red/green/blue (RGB) color by replacing the detector with a Bayer-pattern version of the same \(1024~\mbox{pixel} \times1024~\mbox{pixel}\) detector. Stereo IDC image pairs, acquired by moving the arm between images, are critical for characterizing the topography of the instrument deployment workspace, a \(4~\mbox{meter} \times 6~\mbox{meter}\) area located in front of the lander. Images from the cameras are processed using software from previous Mars surface missions, with several new image products developed for InSight to support instrument placement activities. This paper provides a brief description of the IDC/ICC hardware and related image processing.

1 Introduction

The NASA (National Aeronautics and Space Administration) InSight (Interior Exploration using Seismic Investigations, Geodesy and Heat Transport) mission will place a lander on the surface of Mars in November 2018 (Banerdt et al. 2017). The key objective of the InSight mission is the investigation of the interior structure and processes of Mars using a seismometer (SEIS, Seismometer Experiment for Interior Structure, Lognonné et al. 2018) covered by a Wind and Thermal Shield (WTS), a temperature probe (Heat Flow and Physical Properties Package, HP3, Spohn et al. 2018), and a precision radio tracking system (RISE, Folkner et al. 2018) to measure the size and state of the Martian core, mantle and crust. A robotic arm, known as the Instrument Deployment System (IDS, Trebi-Ollennu et al. 2018), will lift the SEIS and HP3 instruments off the top deck of the lander and place them onto the ground at specific locations chosen by the InSight Instrument Site Selection Working Group (ISSWG, Golombek et al. 2018). To assist in this deployment task, the lander is equipped with two cameras: the Instrument Deployment Camera (IDC) mounted on the robotic arm and the Instrument Context Camera (ICC) mounted on the lander body underneath the top deck (Fig. 1).

2 Instrument Description and Performance

The IDC and ICC properties are summarized in Table 1. The cameras are modified flight spare versions of the Mars Science Laboratory (MSL) Navigation (Navcam) and Hazard avoidance (Hazcam) cameras (Maki et al. 2012) and thus are nearly identical to the MSL flight versions, with the exception of the detector and filters. Both camera designs are inherited from the Mars Exploration Rover (MER) mission (Maki et al. 2003). The InSight-specific modifications are discussed in more detail later in this section.

2.1 Instrument Objectives and Requirements

2.1.1 Engineering Objectives

The primary objectives of the IDC and ICC are to: 1) document the state of the lander and surrounding terrain, 2) support terrain assessment for the selection of the SEIS and HP3 instrument deployment locations, 3) facilitate and document the deployment activities, 4) monitor the location and state of the instruments post-deployment, and 5) measure and monitor atmospheric dust opacity.

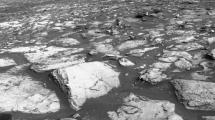

After initial imaging and assessment of the lander state, camera activities are primarily focused on the placement of the SEIS and HP3 instruments onto the surface as quickly as possible (Golombek et al. 2018). This requires careful examination of the instrument deployment workspace area, a crescent-shaped region of the terrain nominally located south of the lander and reachable by the robotic arm. Because the instruments must be placed on relatively flat, rock-free, load-bearing locations (Golombek et al. 2018), assessment of the deployment workspace relies extensively on stereo image data from the IDC. The stereo processing of these images is described in detail in Sect. 4. Camera images of the sky will be used to infer atmospheric dust optical depth (\(\tau\)) using a technique described in Sect. 3.2.2.

2.1.2 Science Objectives

Although the primary requirements for the IDC and ICC are focused on the deployment and monitoring of the SEIS and HP3 instruments on the Martian surface, images from the IDC and ICC will also be used by the InSight science team to understand the geology and physical properties of the terrain around the lander (Golombek et al. 2018). IDC images of the HP3 radiometer footprint regions on the local surface (located nominally on the north side of the lander) will provide an estimate of the thermal contributions of rocks and pebbles to diurnal temperature variations, enabling estimation of grain size and/or cohesion of the soil material. Images will also be used to measure the displacement of surface materials by the lander rocket engine plumes, characterize the deformation of surface materials from soil mechanics experiments with the arm, document eolian activity around the lander, and measure the deposition of dust onto the surface after landing. Results from these imaging experiments will be used to infer the geologic processes that have operated on the local surface and the corresponding shallow subsurface. IDC and ICC images will also serve as ground truth for orbital remote sensing data. These ground truth data will enable hypothesis testing of predictions made during the landing site selection phase, including rock size-frequency distributions, surface slopes, thermal inertia, soil properties, and eolian features and activity (Golombek et al. 2017).

2.2 Hardware Heritage

The two InSight cameras are flight spares from the Mars Science Laboratory (MSL) mission. The MSL Navcam and Hazcam cameras are build-to-print copies of the original MER designs described in Maki et al. (2003). All of these cameras share the same camera body design, with different lenses attached. A total of 34 flight cameras of this type have landed previously on Mars, including 14 MER Engineering Cameras (Maki et al. 2003), 4 MER Pancams (Bell et al. 2003), 2 MER Microscopic Imagers (Herkenhoff et al. 2003), and 12 MSL Engineering Cameras (Maki et al. 2012). Two flight spare MER camera electronics and detector assemblies flew inside the Mars Phoenix Surface Stereo Imager (SSI; Lemmon et al. 2008). Additionally, a flight spare MSL Hazcam is scheduled to fly on the Mars 2020 Rover as part of the MEDA Skycam instrument (Rodriguez-Manfredi et al. 2016).

The InSight flight IDC is an MSL flight spare Navcam (serial number 210) and the InSight flight ICC is an MSL flight spare Hazcam (serial number 203). Both of these cameras were built during the MSL engineering camera production run at the Jet Propulsion Laboratory in 2007–2009 and subsequently stored in a cleanroom at JPL until 2013, when the InSight project took possession of the hardware. The inherited cameras were single-channel greyscale cameras with a 600–800 nm visible and near-IR bandpass created from a combination of Schott OG590 and KG5 filters (Maki et al. 2003).

Throughout this text the use of the term “MER/MSL” refers only to the MER cameras and the MSL Navcam and Hazcam cameras. The MSL mission flew five additional cameras of different types that were designed and built separately from the Navcam and Hazcam cameras. These five cameras are: the two Mast Cameras (Mastcam, Malin et al. 2017), the Mars Descent Imager (MARDI, Malin et al. 2017), the Mars Hand Lens Imager (MAHLI, Edgett et al. 2012), and the Chemical Camera (ChemCam) Remote Microscopic Imager (RMI, Wiens et al. 2012; Maurice et al. 2012). The InSight cameras are not derived from these other types of cameras.

2.3 Instrument Development—Color Upgrade

Color images offer an improvement over single channel greyscale, particularly when distinguishing between different types of Martian surface materials (Maki et al. 1999). The SEIS and HP3 instruments must be placed on load bearing surfaces, and deposits of Martian dust do not offer significant load bearing capabilities. Thus the ability to distinguish between deposits of Martian dust and other surface materials is critically important, particularly given that the InSight landing site is moderately dusty (Golombek et al. 2017). Figure 2 shows an example of how a color image can be used to easily distinguish between surface materials, a task that is significantly more challenging in a single-channel broadband monochrome image. With this motivation, in early 2014 the InSight project undertook the task of upgrading the inherited greyscale MSL cameras to three-channel red/green/blue (RGB) color. The upgrade was achieved by replacing the MSL greyscale detectors with Bayer Color Filter Array (CFA) versions of the identical detector. The Bayer version of the detector was manufactured specifically for InSight and is described in Sect. 2.6.

Visual differences between a single-channel MSL Navcam greyscale image (left, 600–800 nm bandpass) and an MSL Mastcam RGB color image (right) of the same scene. This example demonstrates the utility of color information for distinguishing between materials on Mars. The three examples of interest are (A) loosely packed grey powder from drilling operations, (B) packed grey drill powder, and (C) reddish soil. The differences in material properties are more difficult to distinguish in the greyscale image but are easily distinguishable in the color image. Images: Mars Science Laboratory Planetary Data System (PDS) archive: Navcam image NLA_413665900EDR_F0060000NCAM00368M1.IMG and Mastcam image 0182ML0009890010105279E01_XXXX.DAT. Note that the spatial resolution of the Navcam image is approximately 2 mm/pixel and the spatial resolution of the Mastcam image is approximately 0.5 mm/pixel. The two images were taken approximately 2 minutes apart on MSL Sol 182.

2.4 Mechanical

As shown in Fig. 3a, each InSight camera consists of 1) a camera head containing the optics, detector, and focal plane electronics and 2) an electronics box containing the bulk of the camera electronics. The camera head is attached to the electronics box via a flex cable, which thermally isolates the camera heads from the electronics. The flex cable also offers the ability to mount the camera heads at different optical boresight angles, depending on the camera mounting configuration. The camera assemblies are attached to mounting brackets, and the brackets are mounted to the lander hardware. Each camera connects electrically to the lander through a 25-pin micro-D connector.

(a) Flight IDC (top) and ICC (bottom), with both camera lenses covered by transparent remove-before-flight covers in this photograph, (b) IDC undergoing calibration, shown without cover, (c) IDC mounted on the lander robotic arm, with cover open, (d) ICC mounted on the lander body, with cover open. The camera electronics box (silver box in each of the photos) is \(67 \times 69 \times 34~\mbox{mm}\) in size.

2.5 Electronics

The IDC and ICC electronics are unchanged from MSL. The MSL camera electronics are identical to the MER versions, with the exception of the heater circuit, which operates at a slightly higher power level on MSL compared to MER. The IDC and ICC electronics boxes both contain an MSL heater circuit that operates with a power of approximately 3.5 Watts at 28 Volts. Use of the heaters is only necessary when operating the cameras at ambient temperatures below the minimum operating temperature of \(-55~{}^{\circ}\mbox{C}\), a temperature that typically occurs during the Martian night or the early mornings on Mars. The heaters are not typically needed for mid-day camera operation. Survival heating is not required when the cameras are unpowered.

Due to engineering considerations in the lander electronics hardware, the InSight lander computer sends an 8.25 MHz clock signal to the cameras instead of the 10 MHz clock used by the MER/MSL rovers. The slower clock speed on InSight increases the exposure time step size, frame transfer time, and detector readout time compared to MER and MSL, but these differences have a negligible overall impact on camera performance. The difference in clock speed also lowers the camera signal chain bias level compared to MER and MSL, but the InSight camera bias level is trimmed back to MER/MSL levels by adjusting the camera video offset value (see Table 1). For more details on the camera electronics hardware, including a description of the video offset control circuit, see Bell et al. (2003).

2.6 Detector

The IDC and ICC cameras contain the same type of charge-coupled device (CCD) frame transfer detectors as MER/MSL, with the main difference being the addition of a Bayer CFA layer to the CCD design (see Fig. 4). Bayer CFAs consist of individual pixel-sized red, green, and blue filters arranged in a repeating pattern across the detector (Bayer 1976). As part of the InSight CCD fabrication effort, the original mask designs from the MER production were located in the design archives and modified to add color filter layers on the front-side surface. The more modern fabrication process required additional modifications to the detector layers and material properties. The InSight CCDs were manufactured in Canada at Teledyne-Dalsa by the same group that manufactured the MER CCDs (the MSL Navcam and Hazcam flew CCDs that were diced from original MER wafers). The detectors were diced and mounted in Kovar packages at Corwil Technology Corporation in Milpitas, CA by the same group that packaged the MER/MSL CCDs. Electronically, the greyscale and Bayer versions of the CCD are identical, and the color cameras produce the same raw image size as the MER/MSL detector: \(1024~\mbox{pixels} \times 1056~\mbox{pixels} \times 12~\mbox{bits}\) with a \(1024 \times 1024~\mbox{pixel}\) photosensitive area. As with the MER/MSL detectors, the InSight CCDs do not have antiblooming structures (Bell et al. 2003), causing blooming/bleeding effects when the accumulated charge in a pixel goes beyond several times the full well capacity (see Fig. 7 for an example).
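Because each pixel of the Bayer detector records only one color, the raw frame is a single mosaicked plane. The sketch below shows a generic bilinear demosaic in NumPy, offered only to illustrate the idea: it assumes an RGGB layout with red at row 0, column 0 (the InSight CFA phase is not stated in this section), assumes the input has already been cropped to the \(1024 \times 1024\) photosensitive area, and is not necessarily the interpolation used by the InSight ground software (Sect. 3.1.1). All function names are hypothetical.

```python
import numpy as np

# 3x3 binomial weights; used as a normalized convolution for bilinear interpolation.
_KERNEL = np.array([[1.0, 2.0, 1.0],
                    [2.0, 4.0, 2.0],
                    [1.0, 2.0, 1.0]])


def _filter3x3(img: np.ndarray) -> np.ndarray:
    """Apply the symmetric 3x3 kernel with zero padding at the image borders."""
    h, w = img.shape
    padded = np.pad(img, 1, mode="constant")
    out = np.zeros((h, w), dtype=np.float64)
    for dy in range(3):
        for dx in range(3):
            out += _KERNEL[dy, dx] * padded[dy:dy + h, dx:dx + w]
    return out


def bayer_masks(shape):
    """Boolean R, G, B site masks for an ASSUMED RGGB layout (red at row 0, column 0)."""
    rows, cols = np.indices(shape)
    red = (rows % 2 == 0) & (cols % 2 == 0)
    blue = (rows % 2 == 1) & (cols % 2 == 1)
    green = ~(red | blue)
    return red, green, blue


def demosaic_bilinear(raw: np.ndarray) -> np.ndarray:
    """Return an (H, W, 3) RGB image interpolated from a single-plane Bayer mosaic."""
    raw = raw.astype(np.float64)
    planes = []
    for mask in bayer_masks(raw.shape):
        weights = mask.astype(np.float64)
        # Weighted average of same-color neighbours (normalized convolution).
        plane = _filter3x3(raw * weights) / _filter3x3(weights)
        plane[mask] = raw[mask]  # keep the measured samples unchanged
        planes.append(plane)
    return np.stack(planes, axis=-1)
```

Normalized convolution avoids special cases at the borders: the zero-padded weights shrink together with the zero-padded samples, so edge pixels are averaged over only the neighbors that exist.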

InSight color CCD. (a) CCD assembly (approximately 35 mm by 26 mm in size); the lighter region is photosensitive and the darker region is the aluminum-shielded storage region, (b) image of the individual Bayer-pattern pixels under a microscope (each pixel is 12 microns in size), (c) shutter frame (contrast-stretched to bring out detail), and (d) measured QE of the IDC flight CCD without the IR cutoff filter. See Fig. 6 for a plot of the system responsivity with the IR cutoff filter included.

As with MER/MSL, the InSight flight screening process for the color CCDs consisted of three phases: 1) pre-burn-in testing, 2) burn-in, and 3) post-burn-in testing. CCD characterization was performed at temperatures of \(+20~{}^{\circ}\mbox{C}, 0~{}^{\circ}\mbox{C} \mbox{ and } -40~{}^{\circ}\mbox{C}\). Detectors that successfully passed the screening process underwent more detailed testing. A total of 13 devices passed through all InSight screening steps and were ranked in order from best to worst. The detector ranking criteria included Quantum Efficiency (QE), full well, read noise, dark current, bad pixels, linearity, and pixel response non-uniformity (PRNU). The top two ranked CCDs were selected for the flight cameras.

As with the MER/MSL cameras, images from the InSight cameras exhibit a characteristic shutter signature that is created after the CCD exposure is completed, when the charge is quickly transferred into the frame storage area and then slowly read out of the CCD. This process adds two artifacts to an image: 1) frame transfer smear due to photon collection during the transfer of charge from the imaging region to the covered storage region and 2) a dark current ramp accumulated during the readout of the storage region. Figure 4c shows a shutter image example from the IDC. As is typically done on MER/MSL, the shutter signature can be removed by acquiring a zero-second exposure (shutter) image immediately before or after an image and subtracting the shutter image from the image of interest. For more details on the shutter smear see Bell et al. (2003), Herkenhoff et al. (2003), and Maki et al. (2003).
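A minimal sketch of the shutter subtraction, assuming both frames are NumPy arrays of raw 12-bit DN values of the same scene; `subtract_shutter` is a hypothetical helper, not a mission software routine. Because a zero-second readout also contains the signal-chain bias, the difference is effectively bias-subtracted as well.

```python
import numpy as np


def subtract_shutter(image: np.ndarray, shutter: np.ndarray) -> np.ndarray:
    """Subtract a zero-second (shutter) frame from an exposure of the same scene.

    The shutter frame carries the frame-transfer smear, the readout dark-current
    ramp, and the bias, so the difference keeps only the signal accumulated
    during the commanded exposure (plus noise).
    """
    if image.shape != shutter.shape:
        raise ValueError("image and shutter frame must have the same shape")
    # Promote from unsigned storage so negative noise excursions are preserved.
    return image.astype(np.int32) - shutter.astype(np.int32)
```

The result is left signed rather than clipped at zero, so that noise in dark regions still averages correctly in later processing.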

Figure 4d shows the QE curve for an InSight CCD at \(0~^{\circ }\mbox{C}\). The short wavelength cutoff at 400 nm matches the original MER devices and is ultimately limited by the transmission through the front-side layers on the CCD. Above 900 nm the Bayer filters are transparent and the QE is once again limited by the underlying detector response (requiring an IR cutoff filter, see Fig. 6). Between 400 and 900 nm the RGB Bayer filters modulate the detector QE. The QE ranking of the devices was calculated using two metrics: the ratio of the peak blue response to the peak red response, and the ratio of the peak green response to the peak red response. These metrics favored devices with maximum blue and green sensitivities.
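The two QE ranking metrics can be sketched as follows. The peak search is restricted to an assumed 400–700 nm band so that the near-IR rise above 900 nm, where the Bayer filters are transparent, is not mistaken for a channel's visible peak. How the two ratios were combined into a single rank is not stated in the text; the sum used in `rank_by_qe` is a hypothetical choice, and both function names are illustrative.

```python
import numpy as np


def peak_ratios(wavelength_nm, qe_red, qe_green, qe_blue, band=(400.0, 700.0)):
    """Return (peak blue / peak red, peak green / peak red) within an ASSUMED band."""
    wl = np.asarray(wavelength_nm, dtype=np.float64)
    in_band = (wl >= band[0]) & (wl <= band[1])
    peak_r = np.max(np.asarray(qe_red)[in_band])
    peak_g = np.max(np.asarray(qe_green)[in_band])
    peak_b = np.max(np.asarray(qe_blue)[in_band])
    return peak_b / peak_r, peak_g / peak_r


def rank_by_qe(devices):
    """Sort device names best-first by the HYPOTHETICAL score b/r + g/r.

    devices maps a name to (wavelength_nm, qe_red, qe_green, qe_blue).
    """
    scores = {name: sum(peak_ratios(*curves)) for name, curves in devices.items()}
    return sorted(scores, key=scores.get, reverse=True)
```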

The detector full well, conversion gain and read noise of the signal chain were measured using the photon transfer curve method described by Janesick et al. (1987). The results show full well values of approximately 160,000 electrons and a conversion gain of approximately 50 electrons/DN. These values are similar to the MER/MSL detector performance.
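The photon transfer method is often applied with a pair of flat fields \(F_1, F_2\) and a pair of bias frames \(B_1, B_2\), giving the gain \(K = \left[(\bar F_1 + \bar F_2) - (\bar B_1 + \bar B_2)\right] / \left[\sigma^2(F_1 - F_2) - \sigma^2(B_1 - B_2)\right]\). This two-frame formulation is a common variant and is not necessarily the exact procedure used for the InSight CCDs; the function name is hypothetical. Differencing two frames at the same level cancels fixed-pattern structure such as PRNU, so the remaining variance is temporal noise only, and because shot-noise variance in electrons equals the mean signal, the ratio yields electrons/DN.

```python
import numpy as np


def photon_transfer_gain(flat1, flat2, bias1, bias2):
    """Conversion gain (e-/DN) and read noise (DN and e-) from two flats and two biases.

    Differencing identical frames cancels fixed-pattern noise (PRNU, bias structure),
    leaving twice the temporal variance of a single frame.
    """
    f1, f2, b1, b2 = (np.asarray(x, dtype=np.float64) for x in (flat1, flat2, bias1, bias2))
    signal_sum = (f1.mean() + f2.mean()) - (b1.mean() + b2.mean())  # 2 x mean signal (DN)
    shot_var_sum = np.var(f1 - f2) - np.var(b1 - b2)                  # 2 x shot variance (DN^2)
    gain = signal_sum / shot_var_sum
    read_noise_dn = np.std(b1 - b2) / np.sqrt(2.0)
    return gain, read_noise_dn, gain * read_noise_dn
```

Applied to real frames, saturated and hot pixels would need to be masked first, and the measurement is usually repeated at several signal levels to build the full transfer curve.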

Dark current measurements were performed on the InSight detectors by taking a series of dark exposures at several temperatures. Figure 5 shows the dark current as a function of temperature for the InSight flight CCDs. The results are similar to the MER devices (Herkenhoff et al. 2003; Bell et al. 2003). At a CCD operating temperature of \(0~^{\circ}\mbox{C}\) the dark current is 100 electrons (less than 2 DN) for a 100 msec image. Typical operating temperatures for the IDC and ICC are expected to be at or below \(0~^{\circ}\mbox{C}\), and thus dark current can be ignored for most analyses. However, if desired or needed, dark current can be modeled and removed from images.
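If dark current does need to be modeled, one simple approach scales a reference rate exponentially with temperature. In the sketch below, the reference rate of 1000 electrons/s follows from the 100 electrons in 100 ms at \(0~^{\circ}\mbox{C}\) quoted above, and the gain from the approximately 50 electrons/DN photon transfer result; the 6 °C doubling interval is a hypothetical placeholder, and a real model would be fit to the Fig. 5 measurements.

```python
def dark_signal_dn(temp_c, exposure_s, rate_ref_e_s=1000.0, temp_ref_c=0.0,
                   doubling_c=6.0, gain_e_per_dn=50.0):
    """Estimated dark signal (DN) accumulated during one exposure.

    rate_ref_e_s: ~100 e- in 100 ms at 0 degC, from the text.
    doubling_c:   HYPOTHETICAL doubling interval, not a fitted InSight value.
    """
    rate = rate_ref_e_s * 2.0 ** ((temp_c - temp_ref_c) / doubling_c)
    return rate * exposure_s / gain_e_per_dn
```

Under these assumptions a 50 ms exposure at \(0~^{\circ}\mbox{C}\) accumulates about 1 DN of dark signal, and colder operation reduces it further, which is consistent with treating dark current as negligible for most analyses.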

2.7 Optics

The optically powered elements of the MER/MSL lens assemblies (Smith et al. 2001) were not modified for InSight. The ICC retains the same \(124^{\circ} \times 124^{\circ}\) field of view (FOV) fisheye lens as the MER/MSL Hazcams, with a pixel scale of 2.1 mrad/pixel at the center of the image, and the IDC retains the same \(45^{\circ} \times45^{\circ}\) FOV as the MER/MSL Navcams, with a pixel scale of 0.82 mrad/pixel at the center of the image. Because the MER/MSL lenses were optimized for the near-IR wavelength region, the modulation transfer function (MTF) is theoretically reduced in the visible region by a few percent for the IDC and up to several tens of percent for the ICC, depending on the field angle. However, during IDC and ICC calibration this reduction in optical MTF was not measurable at the system level and thus was deemed to have a negligible impact on image quality.
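The angular pixel scales translate into surface resolution through simple geometry. The helper below, a sketch with a hypothetical name, returns the footprint of one pixel at the image center for a surface viewed head-on; it does not account for the off-axis change in the ICC fisheye scale or for oblique viewing, both of which enlarge the footprint.

```python
import math

# Center-of-image pixel scales from the text (mrad/pixel).
PIXEL_SCALE_MRAD = {"IDC": 0.82, "ICC": 2.1}


def pixel_footprint_mm(camera: str, range_m: float) -> float:
    """Width (mm) subtended by one center pixel at range_m, surface normal to the line of sight."""
    ifov_rad = PIXEL_SCALE_MRAD[camera] * 1e-3
    return 2.0 * range_m * math.tan(ifov_rad / 2.0) * 1e3
```

For example, an IDC pixel spans about 1.6 mm at a range of 2 m, while an ICC pixel spans about 2.1 mm at 1 m.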

2.8 Filters

To prevent unwanted near-IR signals from entering the focal plane the Schott OG590 longpass filter was removed from the MSL cameras and replaced with a Schott BG42 bandpass filter. This modification shifts the camera bandpass from the near-IR (600–800 nm) wavelengths to the visible wavelengths (400–700 nm). The BG42 also attenuates the red wavelengths slightly, providing a marginal improvement in the balance between red, green, and blue channel sensitivities, although the blue channel is still less sensitive than the red channel, as shown in Fig. 6. The heritage Schott KG5 filter in the cameras was retained for InSight in order to maintain total filter thicknesses from the original MER optical designs.

Because the combined Bayer RGB, BG42, and KG5 filters attenuate the overall signal more than the MER/MSL OG590 and KG5 bandpass filters, and because of a desire to improve responsivity relative to the MER/MSL designs, the MER/MSL neutral density filters (Navcam ND 1.3, Hazcam ND 1.1) were not added to the InSight cameras. The resulting InSight camera responsivities are approximately a factor of 6 higher than the MER/MSL camera responsivities. Expected exposure times for InSight will be approximately 40–60 milliseconds on Mars compared to approximately 250 milliseconds for MER/MSL, depending on the time of day, dust optical depth, and albedo of the surface material being imaged. One benefit of the shorter exposure times is a reduced chance of IDC image blur if arm oscillations occur during image exposure. Shorter exposures also reduce dark current, although this benefit is negligible because the dark current rates are expected to be \(< 1~\mbox{DN}\) at typical operating temperatures. The shorter exposure times also come with a small cost: the ratio of exposure time to frame transfer time on InSight is approximately 8, compared to 50 on MER/MSL. The decrease in this ratio increases the residual noise in an image after shutter subtraction (because the shutter signal is larger relative to the image signal); however, this effect is small compared to the shot noise in an image. Camera testing has confirmed acceptable noise performance with the shorter exposure times (see Fig. 7 for an example).

Outdoor image acquired under Earth daylight at JPL with an engineering model IDC. The exposure time for this image is 37 milliseconds. Blooming effects can be seen in the lower right side of the image. Shutter subtraction was performed on this image. The calibration target, described in more detail in Sect. 2.13, can be seen in the middle of the image. This image was color corrected with a 5700 K illuminant, resulting in approximately correct colors (the green tray at the center of the image is a 3D-printed piece of green plastic)

2.9 Color Processing

In order to render colors correctly on display media, raw unprocessed camera images must be converted to calibrated color spaces. The raw Bayer-pattern pixel intensity values are converted into separate red, green, and blue images using a two-dimensional interpolation process called demosaicking (see Sect. 3.1.1). The red, green, and blue pixels are converted into other colorspaces by expressing each pixel in the destination colorspace as a linear function of the 3 original colors, as shown below:

\[
\begin{aligned}
R_{d} &= a R_{i} + b G_{i} + c B_{i}\\
G_{d} &= d R_{i} + e G_{i} + f B_{i}\\
B_{d} &= g R_{i} + h G_{i} + i B_{i}
\end{aligned}
\]

where \(R_{d}\), \(G_{d}\), and \(B_{d}\) represent the colors of pixels in the destination colorspace, \(R_{i}\), \(G_{i}\), and \(B_{i}\) are the initial colors of the pixel, and \(a\) through \(i\) are the color conversion coefficients. In practice this operation is done as a \(3\times 3\) matrix multiplication on every pixel in a linear (decompanded) image, where the elements of the \(3\times 3\) matrix are the coefficients of the above equation. This can be expressed in matrix form as follows:

\[
\begin{bmatrix} R_{d}\\ G_{d}\\ B_{d} \end{bmatrix}
=
\begin{bmatrix} a & b & c\\ d & e & f\\ g & h & i \end{bmatrix}
\begin{bmatrix} R_{i}\\ G_{i}\\ B_{i} \end{bmatrix}
\]

The matrix multiplication allows an image to be converted into a number of relevant color spaces, including device-independent Commission Internationale de l’Eclairage (CIE) \(\mathit{XYZ}\) tristimulus values, by using the conversion matrix for that color space. The coefficients for the \(3\times 3\) conversion matrices are found by acquiring images of calibrated color charts with known illuminants and solving for the matrix coefficients using a least squares fit. For more details on the CIE colorspace and \(\mathit{XYZ}\) tristimulus values see Wyszecki and Stiles (1982).
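The least squares fit and the per-pixel matrix multiplication can be sketched in NumPy as follows. The inputs are assumed to be linear (decompanded) mean values for each chart patch, for example the 24 patches of a ColorChecker Classic, and `target` holds the reference values in the destination colorspace (such as CIE \(\mathit{XYZ}\)) for the chosen illuminant; both function names are hypothetical. The rows of the returned matrix are \((a, b, c)\), \((d, e, f)\), and \((g, h, i)\).

```python
import numpy as np


def fit_color_matrix(measured_rgb: np.ndarray, target: np.ndarray) -> np.ndarray:
    """Least-squares 3x3 matrix M with target ~= M @ measured for every chart patch.

    measured_rgb, target: (N, 3) arrays of linear patch means, N >= 3.
    """
    # lstsq solves measured @ X = target; the per-pixel matrix is X transposed.
    x, *_ = np.linalg.lstsq(measured_rgb, target, rcond=None)
    return x.T


def apply_color_matrix(image: np.ndarray, m: np.ndarray) -> np.ndarray:
    """Apply [[a, b, c], [d, e, f], [g, h, i]] to every pixel of an (..., 3) linear image."""
    return np.einsum("ij,...j->...i", m, image)
```

An unweighted fit is the simplest choice; a production pipeline might instead weight patches or constrain the matrix so that white is preserved.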

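If the color conversion matrix contains only 3 non-zero coefficients along the diagonal, the result is a simple white balance:

\[
\begin{bmatrix} R_{d}\\ G_{d}\\ B_{d} \end{bmatrix}
=
\begin{bmatrix} j & 0 & 0\\ 0 & k & 0\\ 0 & 0 & l \end{bmatrix}
\begin{bmatrix} R_{i}\\ G_{i}\\ B_{i} \end{bmatrix}
\]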

where the coefficients \(j\), \(k\), and \(l\) are chosen to properly render white objects in the converted image. The values of the white balance coefficients depend on both the camera color channel responsivities and the illuminant of the desired colorspace. Performing a white balance on an image tends to be a simpler operation than a full color conversion and also has the advantage of not introducing noise crosstalk between the R, G, and B channels. However, to properly render IDC and ICC color images into a standard colorspace such as sRGB, a full \(3\times 3\) color conversion matrix is needed. Figure 8 shows examples of color processing on a pair of raw IDC/ICC images. Because of the relatively low sensitivity of the blue channel (as shown in Fig. 6), uncalibrated raw instrument RGB (iRGB) images have an olive color cast. Once the conversion matrix is applied, however, the images reproduce colors correctly.
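A white balance reduces to per-channel gains. In the sketch below the gains are computed from the mean iRGB values of a white (or neutral grey) patch and normalized so that the green gain \(k\) equals 1; that normalization is a common convention assumed here, not necessarily the one used for the iWB products, and the function names are hypothetical.

```python
import numpy as np


def white_balance_gains(white_rgb) -> np.ndarray:
    """Diagonal coefficients (j, k, l) that map a measured white patch to neutral.

    Normalizing to the green channel (k = 1) is an ASSUMED convention.
    """
    r, g, b = (float(c) for c in white_rgb)
    return np.array([g / r, 1.0, g / b])


def apply_white_balance(image: np.ndarray, gains: np.ndarray) -> np.ndarray:
    """Scale each channel independently of the others."""
    return image * gains  # broadcasts over the trailing color axis
```

Because each channel is only scaled by its own gain, noise in one channel never leaks into another, which is the crosstalk advantage noted above.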

Color processing of IDC and ICC images. The two images on the left are raw instrument RGB images (iRGB), the two images in the middle are white-balanced iRGB images (iWB), and the two images on the right are iRGB images that have been converted to standard sRGB (ITU-R BT.709) using a \(3\times 3\) color conversion matrix. The raw source images were acquired with a 3000 K tungsten filament lamp illuminating a ColorChecker Classic test chart from X-Rite, Inc.

2.10 Flat Field

Flat field images have been acquired for both cameras, and flat field performance is within expectations. Flat field images are normalized to the central region of the image and are applied to all radiometrically corrected images on the ground during surface operations. Figure 9 shows a flat field image for the flight IDC. For decompressed JPEG color images, demosaicked R, G, and B flat field images will be applied separately to each color channel.
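A minimal sketch of normalizing a flat to its central region and applying it, assuming a hypothetical central box of 10% of the image width and height (the size of the normalization region is not stated here). For a demosaicked (H, W, 3) flat, the normalization is computed per channel, matching the per-channel application described above; the function names are illustrative.

```python
import numpy as np


def normalize_flat(flat: np.ndarray, center_frac: float = 0.1) -> np.ndarray:
    """Scale a flat so its central region averages 1 (per channel if the flat is (H, W, 3))."""
    h, w = flat.shape[:2]
    ch, cw = max(1, int(h * center_frac)), max(1, int(w * center_frac))
    y0, x0 = (h - ch) // 2, (w - cw) // 2
    center = flat[y0:y0 + ch, x0:x0 + cw].astype(np.float64)
    return flat / center.mean(axis=(0, 1))


def flat_correct(image: np.ndarray, flat_norm: np.ndarray) -> np.ndarray:
    """Divide out vignetting and pixel-to-pixel response variations."""
    return image / flat_norm
```

Dividing by a normalized flat leaves the image center essentially unchanged and brightens the edges and corners, where the raw flat falls off toward roughly 80% and 60% of the central response.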

IDC SN210 flat field image, (a) raw image, (b) shown with a histogram equalization stretch to accentuate details. The intensity of the flat field image falls off from 100% at the center of the image to approximately 80% at the horizontal/vertical center edges and approximately 60% at the diagonals. The pixel-to-pixel variation within a single color band is approximately 5%–10%. The small pixel-to-pixel crosshatch pattern visible in the images shown above is caused by the Bayer CFA

2.11 Stray Light

Stray light in an optical system commonly occurs in two ways, as described by Gauvin and Freniere (2000): 1) ghosting and 2) scattered light. Ghost images are created when light reflects off the surface of a lens element or the detector, and scattered light occurs when light reflects off the surrounding lens structure. In both cases, the stray light is re-imaged back onto the detector and appears in the image; photographers often call this lens flare. MER/MSL camera images generally do not exhibit significant amounts of stray light, although under certain solar illumination geometries, ghosts and scattered light are visible. Because the MER/MSL lens anti-reflective (AR) coatings are optimized for wavelengths between 600 and 800 nm (those cameras had bandpass filters that cut off photons below 600 nm), transmission curves for the MER/MSL coatings show a several percent increase in reflectance for wavelengths between 400 and 600 nm at near-normal angles of incidence. Shifting the camera bandpass into the visible region thus increases the amount of scattered light in an image. Because the inherited AR coatings were not modified as part of the color upgrade, the InSight cameras exhibit more scattered light artifacts than the MER/MSL versions, primarily in the blue and purple wavelengths.

The scattered light is most pronounced in the ICC partly due to the different optical design and bandpass filter locations. The IDC filters are located in the front of the lens (i.e., the front elements), while the ICC filters are located between the lens and the detector. Additionally, because the ICC has no sunshade (unlike the IDC, which has a sunshade mounted around the front portion of the lens), sunlight falls directly onto the ICC lens more often than the IDC. Figure 10 shows examples of scattered light in an InSight ICC image. Although not expected to be a problem operationally, during surface operations ICC scattered light may become visible in an image when the sun shines directly on the outer lens element (i.e., when the angle between the sun and the ICC boresight is less than 90 degrees). This condition is expected to happen before approximately 8:50 LTST (Local True Solar Time) and after 15:30 LTST during the early portion of the mission, assuming that the lander is not tilted appreciably and is facing due south. Between those times the sun is not expected to shine directly on the ICC front element and scattered light will be less noticeable in those images. Later during the mission, roughly between Sols 124 and 476, the sun will not shine on the ICC lens directly at any time during the day. The IDC scattered light is not expected to be a problem during normal operations unless the camera is pointed near the sun.
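Whether the sun shines on the ICC front element reduces to the angle between two directions. The sketch below computes that angle from azimuth/elevation pairs in a local east-north-up frame and applies the 90 degree criterion from the text. The ICC boresight azimuth and elevation and the sun position at a given LTST are inputs the caller must supply (the sun position would come from a Mars ephemeris, not shown); all names here are hypothetical.

```python
import math


def enu_unit_vector(azimuth_deg: float, elevation_deg: float):
    """(east, north, up) unit vector; azimuth clockwise from north, elevation above horizontal."""
    az, el = math.radians(azimuth_deg), math.radians(elevation_deg)
    return (math.cos(el) * math.sin(az), math.cos(el) * math.cos(az), math.sin(el))


def sun_boresight_angle_deg(sun_az, sun_el, bore_az, bore_el) -> float:
    """Angle between the sun direction and the camera boresight, in degrees."""
    s = enu_unit_vector(sun_az, sun_el)
    b = enu_unit_vector(bore_az, bore_el)
    dot = sum(si * bi for si, bi in zip(s, b))
    return math.degrees(math.acos(max(-1.0, min(1.0, dot))))  # clamp rounding error


def sun_on_front_element(sun_az, sun_el, bore_az, bore_el) -> bool:
    """True when the sun-boresight angle is below 90 degrees, the condition in the text."""
    return sun_boresight_angle_deg(sun_az, sun_el, bore_az, bore_el) < 90.0
```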

Comparison of lens flare between the MSL (left) and InSight (right) cameras. The image on the left (magnified inset, circled) shows a sun ghost in an MSL Hazcam image. The image on the right shows 10 sun ghosts (g1 through g10) in a flight InSight ICC image, including a saturated sun ghost (g2). The image also exhibits scattered light (s1 and s2) off the inside of the ICC lens. The ICC was intentionally tilted upwards towards the sun to demonstrate lens flare; the boresight-to-sun angle was approximately 83 degrees, which is equivalent to an image acquired at approximately 7:45 LTST and/or 16:40 LTST on InSight Sol 1.

2.12 Camera Covers

The IDC and ICC camera lenses are protected from dust and debris during landing by one-time deployable covers with transparent Lexan windows. The cover designs are based on the MSL Hazcam cover design with modifications for InSight. The covers are spring-loaded and released by a frangibolt heater (the MSL Hazcam covers were released with pyrotechnic devices), nominally activated two sols after landing. Once opened, the covers are kept out of the camera field of view by a retaining spring. Figure 11 shows the covers in both the closed and open positions.

2.13 Calibration Target

A camera calibration target (Fig. 12) is mounted on the lander deck. IDC images of this target (see Figs. 7 and 13e for examples) will be acquired and analyzed prior to stereo imaging of the deployment workspace. The calibration target top face is tilted 10 degrees upward from the lander deck to improve the viewing angle from the IDC (a steeper tilt angle was not possible due to volume constraints near the aeroshell when in the lander cruise configuration). The nominal calibration target image will be similar to the images shown in Figs. 7 and 13e, acquired when the IDC is placed as close to the target as possible while avoiding collisions with objects on the lander deck. The calibration target artwork contains five slanted-edge squares for modulation transfer function (MTF) measurements, five circular dots for geometric scale verification (three of which are color), and a greyscale ramp for signal/noise characterization. The target artwork also includes the flags of nations participating in the InSight mission and the space agency logos for NASA, CNES, and DLR. The calibration target will be used to verify IDC color performance and serve as a reference for relative albedo measurements. As dust settles onto the target, the white patches will redden, and the ratio of red signal to blue signal (red to blue ratio) in an image will serve as a proxy for dust deposition over time. Although there were no requirements placed on the cameras for measuring absolute radiance, IDC images of the calibration target could in principle be used for estimating relative reflectance using the technique of Reid et al. (1999) or estimating radiance factors (I/F) using the technique described in Bell et al. (2006). Any shadows cast onto the target by the robotic arm will allow direct measurements of diffuse vs. direct illumination (shadows vs. direct sunlight).
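The red-to-blue dust proxy can be sketched as a ratio of channel means over a white patch, tracked relative to an early reference image. The sketch assumes calibrated RGB images acquired under similar illumination geometry; the patch coordinates are hypothetical placeholders for wherever a white patch falls in a given image, and the function names are illustrative.

```python
import numpy as np


def red_blue_ratio(rgb: np.ndarray, region) -> float:
    """Mean red / mean blue over a white-patch region given as (row0, row1, col0, col1)."""
    r0, r1, c0, c1 = region
    patch = np.asarray(rgb, dtype=np.float64)[r0:r1, c0:c1]
    return float(patch[..., 0].mean() / patch[..., 2].mean())


def dust_trend(images, region):
    """Red/blue ratio of each image relative to the first (e.g. an early post-landing image)."""
    ratios = [red_blue_ratio(img, region) for img in images]
    return [r / ratios[0] for r in ratios]
```

A rising relative ratio indicates reddening of the white patch; changes in sun angle or sky color between images would need to be controlled for before attributing the trend to dust.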

InSight camera calibration target. The top face of the target is 17 cm by 10 cm in size. The flags on the calibration target represent the nations that were directly participating in the InSight mission as of late 2014 (when the design of the calibration target was frozen). They are (top row, from left to right): France, Germany, Switzerland, United Kingdom, Poland, Japan, Austria, Belgium, Spain, and Canada. The second row from top contains the flag of the United States of America, the NASA logo, Centre National d’Etudes Spatiales (CNES) logo, and Deutsches Zentrum für Luft- und Raumfahrt (DLR) logo. The third row from the top contains dots for color and geometric calibration. The fourth row contains slanted square edges for sharpness (MTF) measurements. The fifth row contains a greyscale intensity ramp for signal/noise measurements

Examples of images acquired during the ATLO campaign. (a) IDC image of lander footpad from above the lander deck, (b) IDC image of footpad from underneath the lander deck, (c) IDC image of grapple engagement with the WTS, (d) IDC image of the underside of the lander, showing the ICC, (e) IDC image of calibration target, and (f) ICC image of the robotic arm

2.14 ATLO (Assembly, Test, and Launch Operations)

2.14.1 Functional Testing

After integration onto the spacecraft, the cameras participated in a series of spacecraft hardware and software functional test activities. The activities included thermal vacuum testing, robotic arm testing, instrument deployment scenario testing, panorama acquisition, and geometric camera calibration. The goal of this testing was to verify the ability of the lander system to acquire the images needed during surface operations. Figure 13 shows examples of the types of images acquired during ATLO.

2.14.2 Geometric Camera Models

Both the IDC and ICC have been geometrically calibrated using the CAHV(ORE) camera model system described in Yakimovsky and Cunningham (1978) and Gennery (2001, 2006), using the same techniques as the Mars rover camera calibrations (Maki et al. 2003, 2012). Performance of the InSight camera models is in family with previous MER and MSL calibrations, producing ambient calibration residuals of approximately 0.25 pixels RMS for 3D-to-2D projection.
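To make the 3D-to-2D residual concrete, the sketch below projects points with the linear CAHV model, in which a point P maps to image coordinates x = (P − C)·H / (P − C)·A and y = (P − C)·V / (P − C)·A, and then computes an RMS pixel residual. It omits the O, R, and E distortion terms of CAHVOR/CAHVORE, and the camera parameters are illustrative values, not InSight calibration results.

```python
import numpy as np


def cahv_project(points, C, A, H, V):
    """Project N x 3 points through a CAHV (pinhole) model.

    C: camera center; A: unit optical-axis vector; H, V: horizontal and
    vertical model vectors. Returns N x 2 image coordinates (x, y).
    The O, R, E distortion terms of CAHVOR/CAHVORE are not modeled here.
    """
    d = np.atleast_2d(np.asarray(points, dtype=float)) - C   # center-to-point vectors
    along_axis = d @ A                                      # depth along the optical axis
    return np.column_stack((d @ H / along_axis, d @ V / along_axis))


def rms_residual(projected, measured):
    """RMS of the 2D distance between projected and measured image locations."""
    err = np.linalg.norm(np.asarray(projected) - np.asarray(measured), axis=1)
    return float(np.sqrt(np.mean(err ** 2)))


# Toy model: camera at the origin looking along +z, 1200 px focal length,
# optical center at pixel (512, 512). These values are illustrative only.
C = np.zeros(3)
A = np.array([0.0, 0.0, 1.0])
H = 1200.0 * np.array([1.0, 0.0, 0.0]) + 512.0 * A
V = 1200.0 * np.array([0.0, 1.0, 0.0]) + 512.0 * A

targets = np.array([[0.0, 0.0, 2.0], [0.1, -0.05, 2.0]])
projected = cahv_project(targets, C, A, H, V)        # [[512, 512], [572, 482]]
measured = projected + np.array([[0.3, 0.4], [-0.3, -0.4]])
residual = rms_residual(projected, measured)         # about 0.5 pixel
```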

2.14.3 Workspace Mosaic Verification

One of the important camera test activities conducted during ATLO was the verification of the stereo accuracy of the IDC deployment workspace mosaic. This activity involved the acquisition of stereo IDC images of a calibrated grid target (Fig. 14a) covering the deployment workspace. This grid target was surveyed with external metrology equipment in the lander frame and served as the ground truth data for the test. The standard IDC workspace mosaic consists of 56 images acquired at a standoff distance of 1.5 meters above the grid.

ATLO workspace mosaic testing. (a) Calibrated grid target (in blue) placed in the deployment workspace, (b) mosaicked IDC stereo images of the workspace grid, (c) metrology coverage of the target grid, and (d) comparison of the IDC stereo data against the metrology. Note that the requirement in panel (d) is a 1-sigma error; thus, some individual error values may exceed the requirement as long as the RMS value stays below it. Additionally, because the stereo error grows with distance, the operations team must make an engineering judgement on the quality of the data during deployment operations

The data from the ATLO test were processed by the ground image processing software described in Sect. 4, and the resulting stereo products were used to independently measure the 3D coordinates of each of the metrology grid points. Residuals between the IDC stereo data and the metrology ground truth meet the accuracy requirements: horizontal errors of approximately 8–10 mm root mean square (RMS) versus 17 mm RMS required, vertical errors of approximately 7–17 mm RMS versus 30 mm required, and relative accuracies of approximately 5 mm versus 5 mm required.
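The comparison against metrology reduces to residual statistics over matched points. The sketch below assumes that horizontal error is measured in the Lander X-Y plane and vertical error along Z, and that each figure is an RMS over all grid points; the two grid points are made up for illustration.

```python
import numpy as np


def rms(values):
    """Root mean square of a 1D array."""
    return float(np.sqrt(np.mean(np.square(values))))


def stereo_vs_metrology(stereo_xyz, truth_xyz):
    """RMS horizontal and vertical residuals between matched N x 3 point sets.

    Row i of each array is the same grid point in the Lander frame (+Z down);
    horizontal error is measured in the X-Y plane, vertical error along Z.
    """
    diff = np.asarray(stereo_xyz, dtype=float) - np.asarray(truth_xyz, dtype=float)
    horizontal = np.hypot(diff[:, 0], diff[:, 1])
    vertical = diff[:, 2]
    return rms(horizontal), rms(vertical)


# Two hypothetical grid points (meters): surveyed truth vs. stereo estimate.
truth = np.array([[1.0, 0.0, 1.05], [2.0, 0.5, 1.05]])
stereo = truth + np.array([[0.006, 0.008, 0.010], [0.0, 0.0, -0.010]])
h_rms, v_rms = stereo_vs_metrology(stereo, truth)
meets = h_rms <= 0.017 and v_rms <= 0.030   # thresholds quoted in the text
```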

3 Image Acquisition and Camera Operations

3.1 Image Acquisition

Image acquisition is initiated by flight software (FSW) running on the InSight lander computer. This software controls the power state of the cameras, handles command processing and image acquisition (including autoexposure and image readout), and performs post-acquisition image processing. The software was developed by the InSight spacecraft manufacturer, Lockheed Martin in Littleton, CO, to requirements and algorithms provided by the InSight project at JPL.

All IDC and ICC images are acquired with a “TAKE_IMAGE” spacecraft command. The command has 6 arguments that specify the image downlink priority, an image ID, exposure control, and image compression. Other parameters related to image acquisition are controlled by user-configurable parameter files onboard the lander, but these are rarely modified. After an image is read out from the camera into the lander computer memory, it is evaluated against the autoexposure criteria, and the exposure is adjusted iteratively using the algorithm described in Maki et al. (2003). After the autoexposure iteration converges, a shutter image (acquired immediately before the autoexposure sequence) is subtracted from the image of interest, and the image is ready for onboard processing.
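The acquisition loop can be sketched generically. The version below drives a chosen histogram percentile toward a target DN under a linear-response assumption, then subtracts a shutter frame; the target DN, percentile, tolerance, and simulated camera are hypothetical, and the details of the Maki et al. (2003) algorithm are not reproduced.

```python
import numpy as np

MAX_DN = 4095  # 12-bit detector full scale


def autoexpose(capture, t0, target_dn=3000.0, percentile=98.0, tol=0.05, max_iter=8):
    """Adjust exposure time until a histogram percentile lands near target_dn.

    capture(t) -> 2D array of raw DN for exposure time t (hypothetical hook).
    Assumes a linear detector response when rescaling the exposure time.
    Returns (image, exposure_time).
    """
    t = t0
    for _ in range(max_iter):
        img = capture(t)
        level = float(np.percentile(img, percentile))
        if abs(level - target_dn) <= tol * target_dn:
            return img, t
        if level >= MAX_DN:
            t *= 0.5                               # saturated: back off
        else:
            t *= target_dn / max(level, 1.0)       # linear rescale toward target
    return capture(t), t                           # best effort after max_iter


def shutter_subtract(image, shutter):
    """Subtract a shutter frame, clipping to the valid 12-bit range."""
    diff = image.astype(np.int32) - shutter.astype(np.int32)
    return np.clip(diff, 0, MAX_DN).astype(np.uint16)


# Simulated scene: per-pixel signal rate in DN per ms.
scene = np.linspace(0.5, 2.0, 10_000).reshape(100, 100)


def camera(t):
    """Simulated linear camera: rate times exposure time, clipped at full scale."""
    return np.minimum(scene * t, MAX_DN)


shutter = np.full((100, 100), 20, dtype=np.uint16)   # hypothetical shutter frame
img, t_exp = autoexpose(camera, t0=100.0)
final = shutter_subtract(np.rint(img), shutter)
```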

3.1.1 Onboard Image Processing

Shutter subtraction is performed on all images for which JPEG compression is requested (shutter subtraction is not performed on uncompressed images). After shutter subtraction, the flight software converts the raw Bayer image into a 3-channel, 12 bit/pixel RGB image using the Malvar-He-Cutler demosaicking algorithm described in Malvar et al. (2004). As part of this step, the blue pixels in the raw Bayer pattern image are scaled up by a factor of 1.7 immediately prior to demosaicking. This is done as a precautionary step to limit crosstalk during the gradient-corrected bilinear interpolation step of the Malvar-He-Cutler demosaicking, which would otherwise arise from the lower signal levels in the blue channel relative to the red and green channels. The factor of 1.7 was chosen as an approximate ratio of the expected signal levels between the green and blue channels. This “pre-demosaic boost” of the blue channel is equivalent (modulo quantization differences) to adjusting the blue filter coefficients in the Malvar-He-Cutler interpolation kernels.
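The pre-demosaic boost itself is a strided multiplication on the raw mosaic. The sketch below assumes an RGGB tile (the detector's actual Bayer phase is not given here) and clips to 12 bits, which is also an assumption; the Malvar-He-Cutler interpolation that follows is not shown.

```python
import numpy as np

BLUE_GAIN = 1.7   # pre-demosaic blue boost quoted in the text
MAX_DN = 4095     # 12-bit full scale

# Row/column offset of the blue photosite within each 2x2 Bayer tile.
BLUE_OFFSET = {"RGGB": (1, 1), "BGGR": (0, 0), "GRBG": (1, 0), "GBRG": (0, 1)}


def boost_blue(raw, pattern="RGGB", gain=BLUE_GAIN):
    """Return a copy of a raw Bayer mosaic with its blue photosites scaled by `gain`.

    The tile layout (RGGB by default) and the clip to 12 bits are assumptions.
    """
    r0, c0 = BLUE_OFFSET[pattern]
    out = np.asarray(raw, dtype=np.float64).copy()
    out[r0::2, c0::2] *= gain
    return np.clip(np.rint(out), 0, MAX_DN).astype(np.uint16)


raw = np.full((4, 4), 1000, dtype=np.uint16)
boosted = boost_blue(raw)   # blue sites become 1700, red/green stay 1000
```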

After demosaicking, each color channel is companded to 8 bits/pixel using a lookup table (LUT). The LUT is derived from the following equation:

\[ \mathrm{DN}_{8} = 255\left(\frac{\mathrm{DN}_{12}}{4095}\right)^{1/2.2} \]

where DN8 is the converted 8-bit digital number (DN) and DN12 is the input 12-bit DN. This conversion uses a gamma value of 2.2, chosen to match the gamma value adopted in ITU-R BT.709 (ITU 2015). Figure 15 shows the InSight LUT output values plotted as a function of the input values. The InSight companding function exploits the fact that higher signal levels have higher Poisson (shot) noise, proportional to the square root of the signal, by allocating fewer of the 256 output codes to these higher DN levels. Additionally, because human vision is more sensitive to changes at low brightness levels than at high brightness levels, the companding function reduces quantization noise in the lower DN levels of the 8-bit images by allocating more output codes to them.
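Companding is a single table lookup per pixel. The sketch below builds such a table assuming a pure power law with gamma 2.2 over the full 12-bit input range and round-to-nearest output; the flight table plotted in Fig. 15 may differ in these details.

```python
import numpy as np


def make_companding_lut(gamma=2.2, in_bits=12, out_bits=8):
    """Power-law LUT: out = round(out_max * (dn / in_max) ** (1 / gamma)).

    A pure power law with round-to-nearest is assumed; the flight table may
    differ in rounding or in its handling of the lowest input values.
    """
    in_max = (1 << in_bits) - 1
    out_max = (1 << out_bits) - 1
    dn = np.arange(in_max + 1, dtype=np.float64)
    return np.rint(out_max * (dn / in_max) ** (1.0 / gamma)).astype(np.uint8)


lut = make_companding_lut()
image12 = np.array([[0, 100, 1000], [2048, 4000, 4095]], dtype=np.uint16)
image8 = lut[image12]           # companding is one table lookup per pixel

# Number of the 4096 input levels that land in the lower half (0-127) of the
# output codes: roughly the lowest fifth of the inputs gets half of the codes.
low_half_inputs = np.count_nonzero(lut < 128)
```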

After the companding step, the RGB image is compressed to a user-specified quality level using lossy JPEG (Joint Photographic Experts Group) compression. The InSight JPEG compressor is based on the Independent JPEG Group (IJG) software implementation, modified to run on the lander computer. Allowable JPEG compression quality values are 1–100, with chroma subsampling options of 422 and 444. Typical compression quality values expected to be used during flight include 75, 85, and 95, which approximately correspond to compressed bit rates of 1.5, 2, and 4 bits/pixel, respectively, although the exact relationship between compression quality and bit rate is highly scene-dependent. Figure 16 shows a compressed bit rate curve derived from a set of 67 different scenes in the InSight lander testbed. Each scene was imaged by the IDC and compressed at 4 different JPEG quality values using 422 chroma subsampling. The compressed bit rate \(R\) (in bits/pixel) for these JPEG files can be approximated using the function:

\[ R(q) \approx \frac{1}{a - b\,q^{3}} \]

where \(q\) is the compression quality (1–100) and \(a\) and \(b\) are polynomial coefficients. For the InSight testbed data set, the coefficient values that provide the best fit to the data are \(a = 1.02\) and \(b = 9.1\mbox{e-}7\). This curve represents an estimate only, with scene-dependent variation from the model of \(\pm 0.5~\mbox{bits}/\mbox{pixel}\) at quality 75 and up to \(\pm 1~\mbox{bit}/\mbox{pixel}\) at quality 95. The coefficient values for images acquired on Mars are expected to differ from those derived from the testbed data. A JPEG compression quality of 100 is not recommended in practice because the compressed sizes of high-entropy images are often larger than the uncompressed size.
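The testbed fit can be evaluated directly for data volume planning. The sketch below assumes the fitted function has the form R(q) = 1/(a − b q³), which reproduces the approximately 1.5, 2, and 4 bits/pixel quoted for q = 75, 85, and 95 with the testbed coefficients; treat its output as a rough estimate, not a prediction for any particular scene.

```python
A_COEF = 1.02    # testbed best-fit values quoted in the text
B_COEF = 9.1e-7


def jpeg_bits_per_pixel(q, a=A_COEF, b=B_COEF):
    """Approximate compressed bit rate (bits/pixel) for JPEG quality q.

    Assumes the fitted form R(q) = 1 / (a - b * q**3).
    """
    if not 1 <= q <= 100:
        raise ValueError("JPEG quality must be in 1..100")
    denom = a - b * q ** 3
    if denom <= 0:
        raise ValueError("fit is not valid at this quality for these coefficients")
    return 1.0 / denom


def jpeg_megabits(q, width=1024, height=1024):
    """Estimated compressed size of one frame in megabits (10**6 bits)."""
    return jpeg_bits_per_pixel(q) * width * height / 1e6


estimates = {q: jpeg_bits_per_pixel(q) for q in (75, 85, 95)}
sizes = {q: jpeg_megabits(q) for q in (75, 85, 95)}
```

Note that the model grows steeply near q = 100, consistent with the caution above about quality 100.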

Compressed bit rate as a function of JPEG compression quality for IDC color images with 422 chroma subsampling. Images of 67 different scenes in the InSight sandbox were used for the source images, with the image in the inset being a representative example from that data set. The source images are 8 bits/pixel

Due to project development constraints, a lossless compression capability is not available. Raw images can be downlinked, but at a considerable cost in data volume: the raw 12-bit image data are stored in 16-bit integers (companding is not an allowable option for raw images), so a raw image is 16.8 Mbits in size, compared to approximately 4.2 Mbits for a JPEG-compressed image at 4 bits/pixel. Furthermore, shutter subtraction is not applied to raw images (shutter images must be acquired and transmitted separately). Because of these considerations, the downlink of raw images is expected to be rare during surface operations.
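The data volume comparison is simple arithmetic on a 1024 × 1024 frame. The sketch below additionally assumes that one raw shutter frame accompanies each raw image, which the text implies but does not quantify.

```python
PIXELS = 1024 * 1024          # one IDC/ICC frame


def megabits(bits_per_pixel, pixels=PIXELS):
    """Image size in megabits (1 Mbit = 10**6 bits)."""
    return pixels * bits_per_pixel / 1e6


raw_mbit = megabits(16)        # 12-bit samples stored in 16-bit words
jpeg_mbit = megabits(4)        # JPEG at ~4 bits/pixel
# Assumption: each raw frame needs its own raw shutter frame on the ground.
raw_with_shutter_mbit = 2 * raw_mbit
cost_ratio = raw_with_shutter_mbit / jpeg_mbit   # ~8x the JPEG volume
```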

Once compressed, images are packetized for downlink and prioritized according to the commanded downlink APID (Application Process Identifier). There are 6 downlink priorities for each camera, ordered from high to low priority. Ground operators assign downlink priorities based on the criticality of the data for surface operations activities. In general, images required for deployment operations are deemed critical and are assigned the highest priorities, while images for non-operational use cases are assigned lower priorities and may take days or weeks to become eligible for downlink. Packetized images do not incorporate error containment on InSight; thus, if any packets are not received during downlink to Earth, the data following the point of packet loss in that image cannot be reliably reconstructed until the missing packets are obtained. Such images are created as partial images until the missing packets are retransmitted from the lander upon request. These retransmission requests are typically incorporated in command uplinks sent to the lander by the engineering operations team after downlink analyses.
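The consequence of having no error containment can be sketched by treating an image as an ordered run of packets: only the contiguous prefix up to the first gap is decodable, and every gap must be requested for retransmission. The 0-based packet numbering and function name here are hypothetical and do not reflect InSight's actual packet format.

```python
def reconstructable_prefix(received, total):
    """Split an image's packets into a decodable prefix and a retransmit list.

    received: packet sequence numbers (0-based) that arrived on the ground.
    total:    number of packets in the image.
    Returns (n_ok, missing): n_ok contiguous packets starting at 0 can be
    decoded; missing lists every packet number to request again.
    """
    got = set(received)
    n_ok = 0
    while n_ok < total and n_ok in got:
        n_ok += 1
    missing = [i for i in range(total) if i not in got]
    return n_ok, missing


n_ok, missing = reconstructable_prefix([0, 1, 2, 4, 5], total=6)
# Packets 4 and 5 arrived, but only 0-2 can be decoded until packet 3 is resent.
```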

3.1.2 Image IDs

Image IDs are assigned to each image to identify and process stereo pairs. The image ID field is a 32-bit integer that is placed in the image acquisition command by the camera operators. Upon command execution, the lander FSW extracts the image ID from the command and copies it into the telemetered image metadata; operations personnel sometimes call this a “round-trip token.” Although it is in principle possible to identify overlapping images and perform stereo correlation without image IDs, this process would be significantly more complex and would not allow precise control over which images are processed for stereo. This control is important because stereo data are generally acquired using only a shoulder (azimuth actuator) rotation in order to confine relative baseline errors to a single actuator. For similar reasons, groups of images that should be mosaicked or meshed together are determined ahead of time and encoded in the image ID.

Subfields of the image ID include the “eye” (left or right) of a stereo pair, a mesh and mosaic ID that indicate which mesh or mosaic the image should be included in, and a sequence ID used for command tracking. The image_id specification is shown in Fig. 17. The image ID values are used extensively by the ground image processing pipeline to determine how to process an image.
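Packing and unpacking such subfields is simple bit manipulation. The field names below follow the text, but the widths, order, and eye encoding are hypothetical placeholders (the actual specification is in Fig. 17), as is the assumption that both eyes of a pair carry identical fields apart from the eye bit.

```python
# HYPOTHETICAL field layout, least-significant field first. The real InSight
# image ID layout is the one shown in Fig. 17; these widths are illustrative.
FIELDS = (("sequence", 16), ("mosaic", 8), ("mesh", 7), ("eye", 1))
LEFT, RIGHT = 0, 1   # hypothetical eye encoding


def pack_image_id(**values):
    """Pack named fields into a 32-bit image ID."""
    word, shift = 0, 0
    for name, width in FIELDS:
        value = values.get(name, 0)
        if not 0 <= value < (1 << width):
            raise ValueError(f"{name}={value} does not fit in {width} bits")
        word |= value << shift
        shift += width
    return word


def unpack_image_id(word):
    """Inverse of pack_image_id: return a dict of field values."""
    fields, shift = {}, 0
    for name, width in FIELDS:
        fields[name] = (word >> shift) & ((1 << width) - 1)
        shift += width
    return fields


def stereo_key(word):
    """All fields except the eye: under this sketch's assumption, both eyes share it."""
    fields = unpack_image_id(word)
    fields.pop("eye")
    return tuple(sorted(fields.items()))


left_id = pack_image_id(eye=LEFT, mesh=3, mosaic=12, sequence=4321)
right_id = pack_image_id(eye=RIGHT, mesh=3, mosaic=12, sequence=4321)
```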

3.1.3 Coordinate Frames

There are several coordinate frames defined on InSight, but only two see practical use in camera operations and image processing and are described here. The Lander frame, known alternatively as the IDA, Payload, and (sometimes, for heritage reasons) Rover frame, is the coordinate frame used for the majority of surface operations and commanding (Fig. 18). The Lander frame has its origin at the arm mounting point on the lander deck and is a right-handed Cartesian frame oriented with respect to the lander, with \(+Z\) down out the bottom of the lander, \(+X\) pointing out into the workspace and perpendicular to the yoke (i.e., the line formed by the center of the two solar panels and parallel to the plane of the top deck), and \(+Y\) completing the right-hand frame (parallel to the yoke and parallel to the deck). The origin of the lander frame (and the lander deck) is 1.05 meters above the nominal surface, thus the nominal surface \(Z\)-coordinate is \(+1.05\) meters in the Lander frame (Trebi-Ollennu et al. 2018).

Coordinate frames used by the image processing ground system. The lander deck is 1.05 meters above the nominal surface. The approximate deployment workspace is represented by the crescent-shaped blob on the surface in front of the lander (green = nominal SEIS deployment workspace, grey = SEIS boundary, blue = WTS boundary). For the actual deployment workspace boundaries, see Trebi-Ollennu et al. (2018). The IDC camera FOV is depicted as the yellow frustum emanating from the IDC and expanding to the right

The Site frame is used in cases where the characteristics of the ground are more important than their relationship to the lander (for example, determining absolute tilt of instruments, or wind or shadow effects). The Site frame is coincident with the Lander frame origin but is a North-East-Nadir oriented frame, where \(+X\) points north, \(+Y\) points east, and \(+Z\) points down according to the local gravity vector (see Fig. 18). In the nominal landing orientation, the lander will be facing south, i.e., the Lander frame \(+X\) axis will point in the Site frame \(-X\) direction. Most operations activities are performed in the Lander frame. However, the instrument placement products (Sect. 4.6) require Site frame usage in some cases, because they document absolute tilts with respect to the gravity vector.
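Because the two frames share an origin, converting a point for a level lander reduces to a rotation about their common +Z (down) axis by the lander heading. The sketch below assumes that simplification, ignoring lander tilt (which a real transform would include from measured attitude), and defines heading as the azimuth of Lander +X measured from north toward east.

```python
import numpy as np


def lander_to_site(p_lander, heading_deg):
    """Rotate a Lander-frame vector into the Site (North-East-Nadir) frame.

    heading_deg: azimuth of the Lander +X axis, from north toward east
    (180 = facing south, the nominal case). Level lander assumed: only a
    rotation about the shared +Z (down) axis is applied.
    """
    h = np.radians(heading_deg)
    rz = np.array([[np.cos(h), -np.sin(h), 0.0],
                   [np.sin(h),  np.cos(h), 0.0],
                   [0.0,        0.0,       1.0]])
    return rz @ np.asarray(p_lander, dtype=float)


# A point on the nominal surface 2 m in front of a south-facing lander.
p_site = lander_to_site([2.0, 0.0, 1.05], heading_deg=180.0)   # ~[-2, 0, 1.05]: 2 m south
```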

3.2 Camera Operations

Camera commands are generated by the Instrument Deployment System (IDS) uplink engineers in consultation with the science and operations teams. The InSight image command structure is significantly simplified relative to the MER/MSL image commands; a typical InSight image will be acquired with the camera in autoexposure mode, and most (likely all) images will be JPEG-compressed. Image ID, downlink priority and compression quality are expected to vary, although in general most commands will have been pre-written (“canned”) and will be executed many times in succession without modification (“reusable sequences”) as part of surface operations.

3.2.1 Science Panorama

In addition to regular operations in support of instrument deployment, the IDC will be commanded to acquire a “science panorama” as part of surface operations. This stereo panorama will cover a full 360-degree field of regard and will serve as a main data source for geologic properties investigations (Golombek et al. 2018). The science panorama will provide information about the surface morphology of the landing site, including rocks, dunes, pebbles, and other centimeter-scale textures, as well as color property information, including the relative brightness of surface materials. At 0.82 mrad/pixel angular resolution, the IDC science panorama will be qualitatively similar to the 0.9 mrad/pixel color panoramas acquired by the Imager for Mars Pathfinder (IMP, Smith et al. 1997). IMP panoramas were acquired from camera heights of 0.7 meters and 1.5 meters, and the InSight science panorama will be acquired at camera heights of 1.5 and 2 meters above the surface, depending on the tier.
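A pixel's footprint scales with range: its size is approximately range × IFOV, stretched by 1/cos(incidence angle) along the look direction on flat ground. The sketch below evaluates this small-angle approximation for the IDC; the 10 m viewing example is hypothetical.

```python
import math

IFOV_RAD = 0.82e-3   # IDC angular resolution at image center (from the text)


def pixel_footprint_mm(range_m, ifov_rad=IFOV_RAD, incidence_deg=0.0):
    """Approximate ground size of one pixel, in mm, at a given slant range.

    incidence_deg is the angle between the line of sight and the surface
    normal; the footprint stretches by 1/cos(incidence) along the look
    direction (small-angle, flat-ground approximation).
    """
    return range_m * ifov_rad * 1000.0 / math.cos(math.radians(incidence_deg))


near = pixel_footprint_mm(1.5)                       # ~1.2 mm
far_range = math.hypot(2.0, 10.0)                    # camera 2 m up, point 10 m out
far_cross = pixel_footprint_mm(far_range)            # ~8.4 mm across the look direction
far_along = pixel_footprint_mm(
    far_range, incidence_deg=math.degrees(math.atan2(10.0, 2.0)))  # ~43 mm along it
```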

3.2.2 Tau Measurements

Because the InSight lander is solar powered, the power produced by the solar arrays is affected by two main parameters: 1) the amount of dust suspended in the atmosphere, as measured by the cameras, and 2) the amount of dust deposited on the solar panels, expressed as a “dust factor” parameter. By monitoring the solar array power levels over time, spacecraft operators can compare changes in solar panel power levels directly to changes in the measured atmospheric dust opacity. Differences between the observed power changes and those expected from the measured opacity could imply changes to the modelled dust factor (due to dust settling and/or dust-cleaning wind events) or some other spacecraft-internal cause. To support this monitoring activity, dust optical depth images will be acquired daily during the nominal mission.

Mars landers have traditionally been instrumented to measure atmospheric optical depth (Colburn et al. 1989; Tomasko et al. 1999; Lemmon 2010; Lemmon et al. 2014, 2015). The optical depth is largely due to dust, with a minor contribution from water ice; both are time varying (Montabone et al. 2015). Opacity variations affect atmospheric heating and have been shown to change daily average surface insolation by a factor of nearly three (Lemmon et al. 2015). The latter effect is of particular importance to the InSight mission, as solar panel output will be affected by the atmosphere and by panel performance in the Martian environment (including dust deposition on the panels). Measurements of atmospheric opacity can be used to separate external contributions from intrinsic solar panel performance.

While previous missions have used dedicated solar filters for direct imaging of the Sun to measure atmospheric extinction (Spiga et al. 2018), the InSight cameras have no such capability. Instead, InSight will rely on sky images taken with the IDC and possibly the ICC, each of which can view the sky near the southern horizon. Wolfe (2016) conducted an exploratory study using one Mars year of Opportunity rover sky images and demonstrated that opacity can be reliably determined over the course of a Mars year from sky images pointed away from the Sun, acquired by a dusty camera that had been exposed to the Martian environment (the Opportunity Navcam). The intrinsic uncertainty in optical depth retrieved using this method, compared to near-simultaneous direct measurements, was less than 0.1 tau.

The expected operations plan for opacity measurements begins with images that capture the low (\(<30~\mbox{degrees}\)) southern sky when the Sun is low in the east or west. This region is accessible to each camera; a fixed camera orientation is chosen to minimize arm motion after the deployment period, and aiming at or near the Sun will not be necessary. Analysis following the Wolfe (2016) method will use the red channel of radiance-calibrated images (as a Navcam analog), subdivided into bins by scattering angle (which varies approximately laterally in the images); each bin will be modeled for the relative variation of radiance with elevation angle (which varies approximately vertically in the images). A discrete ordinates radiative transfer model (Stamnes et al. 1988) will constrain opacity from such images. Using relative changes of radiance, rather than absolute radiance, minimizes sensitivity to errors in aerosol scattering properties (e.g., ice/dust ratio), calibration, and the possible accumulation and removal of dust on the optics (Wolfe 2016). Images acquired with the camera boresight pointed above the horizon will be processed by automated ground software that extracts radiance from the images, determines inputs for and runs the radiative transfer retrieval (or accesses results via a look-up table), and writes an output “tau” text file that includes the resulting opacity, estimated error, and flags for any processing errors. The automated processing is designed to support mission operations in real time and relies on the demonstrated robustness of the Wolfe technique. It does not preclude further analysis of the images that accounts for absolute radiance or uses multiple images and color information.
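The geometric part of this approach, binning sky pixels by scattering angle and examining the relative radiance variation with elevation within each bin, can be sketched as follows. This is an illustration under stated assumptions only: pixel elevations and azimuths (from north toward east) are taken as given, a straight-line fit stands in for the radiative transfer modeling, and the discrete ordinates retrieval itself is not reproduced.

```python
import numpy as np


def scattering_angle_deg(elev_deg, az_deg, sun_elev_deg, sun_az_deg):
    """Angular distance (degrees) between each view direction and the Sun.

    For singly scattered sunlight this equals the scattering angle.
    """
    e, a = np.radians(elev_deg), np.radians(az_deg)
    es, a_sun = np.radians(sun_elev_deg), np.radians(sun_az_deg)
    cos_t = np.sin(e) * np.sin(es) + np.cos(e) * np.cos(es) * np.cos(a - a_sun)
    return np.degrees(np.arccos(np.clip(cos_t, -1.0, 1.0)))


def relative_profiles(radiance, elev_deg, theta_deg, bin_edges):
    """Per scattering-angle bin, slope of (radiance / bin mean) versus elevation.

    A first-order polynomial fit is a placeholder for the radiative transfer
    modeling; bins with too few pixels or no elevation spread are skipped.
    Returns a list of (bin_center_deg, slope_per_deg).
    """
    profiles = []
    for lo, hi in zip(bin_edges[:-1], bin_edges[1:]):
        sel = (theta_deg >= lo) & (theta_deg < hi)
        if np.count_nonzero(sel) < 2 or elev_deg[sel].max() == elev_deg[sel].min():
            continue
        rel = radiance[sel] / radiance[sel].mean()
        slope, _ = np.polyfit(elev_deg[sel], rel, 1)
        profiles.append((0.5 * (lo + hi), float(slope)))
    return profiles


# Synthetic southern sky (elevation 5-25 deg, azimuth 150-210 deg), Sun low in the east.
el, az = np.meshgrid(np.linspace(5, 25, 21), np.linspace(150, 210, 61), indexing="ij")
theta = scattering_angle_deg(el, az, sun_elev_deg=10.0, sun_az_deg=90.0)
radiance = 1.0 + 0.02 * el                     # toy radiance field
profiles = relative_profiles(radiance.ravel(), el.ravel(), theta.ravel(),
                             np.arange(50.0, 131.0, 10.0))
```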

4 Ground Image Processing

4.1 Introduction

Ground image processing will be performed by the Multimission Image Processing Laboratory (MIPL) at JPL using the Video Image Communication And Retrieval (VICAR) image processing system (VICAR 2016). Processed images are distributed to the ISSWG for deployment analysis and decision-making (Golombek et al. 2018). The VICAR software capabilities include stereo correlation, mosaic generation, terrain mesh generation, radiometric correction, pointing correction (bundle adjustment), linearization (epipolar alignment), and the creation of derived, mission-specific products such as surface normals, slope maps, arm reachability maps, \(\mathit{XYZ}\) point clouds, and roughness maps. A pipeline handles systematic, automated execution of the programs that create these products on every image and stereo pair received. The ground software, techniques, and products are similar to, and in some cases identical to, those described in LaVoie et al. (1999) and Alexander et al. (2006), and the reader is directed there for further information.

The majority of image processing is performed on stereo pairs. A total of 134 derived image products will be made from each InSight stereo pair (67 per eye), generally within 30 minutes of receipt of the data in order to support daily mission operations and planning. This section provides a brief summary of these products. For more details on InSight image processing software, product types, file naming conventions, and data formats, the reader is directed to Abarca et al. (2018).

4.1.1 Heritage from Other Missions

The VICAR Mars software suite (Alexander et al. 2006; Deen 2003) is a set of applications and libraries written specifically for processing image data from Mars rover and lander operations. The Mars software suite had its origin with Mars Pathfinder (LaVoie et al. 1999) and has since been used for every subsequent NASA Mars surface mission, including the Mars Exploration Rover (MER) mission (Alexander et al. 2006), Phoenix, MSL, and the upcoming Mars 2020 mission. This high heritage allowed the InSight project to efficiently leverage many years of development and test effort contributed by prior missions. The Mars software suite consists of about 150,000 lines of C++ code, of which approximately 3,600 lines are InSight-specific, written to enable use of the Mars software suite for InSight. Additionally, the instrument placement software tools are specific to InSight and consist of another 7,600 lines of code. Abarca et al. (2018) describes the software in more detail.

4.1.2 Image Decompression and Rotation

Upon receipt at Earth, compressed image data are decompressed and rotated so that the sky appears at the top of all ICC images and most IDC images (the IDC image orientation depends on the arm configuration). The image rotation step is performed because the camera hardware was mounted in orientations chosen for ease of mechanical accommodation (on MER and MSL the rotation was performed by software onboard the spacecraft). The original raw JPEG stream is also preserved and written to a JFIF (JPEG File Interchange Format) file. Because of the desire to keep the compressed data in an unmodified form, these JPEG images are not rotated. After the individual image files are created, they are placed into an image processing pipeline queue for further processing.

4.1.3 Image Processing Pipeline Description

For purposes of discussion, InSight derived image products fall into four main categories: 1) stereo products, processed as single stereo pairs, 2) mosaics, 3) instrument placement products, and 4) 3D visualization products. The products and processing are interrelated. Figure 19 shows the general processing flow for InSight product generation. Abarca et al. (2018) describes the pipeline in detail.

InSight image processing product flow overview. For more details see Abarca et al. (2018)

4.2 Stereo Processing

Stereo IDC images are acquired from different locations by moving only the shoulder joint of the arm to reduce arm pointing knowledge error. These stereo pairs are often acquired from multiple locations to create multi-image stereo mosaic data sets. As mentioned earlier, images are tagged with an image ID that identifies each image as the left or right eye of a horizontal stereo pair. Vertical stereo is also possible in principle, although the software maintains a left/right naming convention.

Stereo image pairs are correlated by finding, for each pixel in an image, the matching pixel location in the corresponding stereo partner image. This correlation is found by maximizing the normalized cross-correlation coefficient of a patch of pixels around the pixel of interest compared to areas in the other image. The process used for InSight is a modification of the Gruen and Baltsavias (1988) algorithm and is described more fully in Deen and Lorre (2005). The resulting disparity map file is coregistered to one image and contains at each pixel the pixel coordinates of the matching feature in the other image.
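The core of the correlation step, maximizing normalized cross-correlation over candidate positions, can be sketched as an integer-pixel search. This is a simplified stand-in: it omits the Gruen-style affine refinement to sub-pixel precision, and the template half-width and search radius are arbitrary choices.

```python
import numpy as np


def ncc(a, b):
    """Normalized cross-correlation coefficient of two equal-size patches."""
    a = a - a.mean()
    b = b - b.mean()
    denom = np.sqrt((a * a).sum() * (b * b).sum())
    return float((a * b).sum() / denom) if denom > 0 else 0.0


def match_pixel(left, right, row, col, half=3, search=10):
    """Integer-pixel match for left[row, col] in `right` by maximizing NCC.

    Searches a (2*search+1)^2 window centered on the same coordinates.
    Returns ((row, col), score), or (None, -inf) if no window fits.
    """
    size = 2 * half + 1
    tpl = np.asarray(left, dtype=float)[row - half:row + half + 1, col - half:col + half + 1]
    if row < half or col < half or tpl.shape != (size, size):
        raise ValueError("template must lie inside the left image")
    best, best_score = None, -np.inf
    for dr in range(-search, search + 1):
        for dc in range(-search, search + 1):
            r, c = row + dr, col + dc
            if r < half or c < half:
                continue
            win = right[r - half:r + half + 1, c - half:c + half + 1]
            if win.shape != tpl.shape:
                continue
            score = ncc(tpl, np.asarray(win, dtype=float))
            if score > best_score:
                best, best_score = (r, c), score
    return best, best_score


rng = np.random.default_rng(0)
left = rng.random((60, 60))
right = np.roll(left, 4, axis=1)        # simulated 4-pixel horizontal disparity
pos, score = match_pixel(left, right, 30, 30)
```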

Correlation processing is performed on the green channel of the RGB image. Green was chosen because the green channel is derived from twice as many source pixels as red or blue in the original Bayer pattern, thus it has the highest non-interpolated resolution of the three color bands. The green channel also forms the bulk of the luminance of the image. Luminance is not used directly because of concerns about increased noise levels in the blue channel relative to green. There is some amount of noise crosstalk from the blue channel to the green channel due to de-mosaicking but that is largely unavoidable.

The InSight robotic arm contains a grapple fixture at the end, and this grapple is visible in all IDC images, along with a portion of the robotic arm. Depending on the arm orientation, the grapple/arm hardware can appear in up to a quarter of the IDC image, reducing the stereo overlap. This obstruction is masked off after \(\mathit{XYZ}\) image generation (Fig. 20). When the grapple is deployed during instrument placement activities, it occludes even more of the image. The grapple can be re-stowed after the instruments are deployed to minimize this obscuration.

Example stereo IDC image products: (a) radiometrically corrected image, (b) Cartesian \(\mathit{XYZ}\) data contours, (c) range from camera, (d) surface normal, (e) local slope, and (f) instrument placement product example for SEIS/WTS. The grapple/arm hardware can be seen in the upper left of each image, along with the corresponding absence of stereo data on the right side of the arm due to the hardware occluding the view of the terrain in the stereo partner image. For more details on the image products, including the color key definitions, the reader is referred to Abarca et al. (2018)

After stereo correlation, a number of derived image products are made: 1) \(\mathit{XYZ}\) images contain the Cartesian \(\mathit{XYZ}\) coordinates of each pixel in Site or Lander frame, 2) range images contain the distance from the camera or coordinate system origin to the object, 3) surface normal images contain the orientation of the surface, expressed as a unit vector, 4) slope images contain the slope angle, slope heading, and slope magnitude (i.e., sine of the slope angle) for each point on the surface, 5) range error images contain estimated errors in both range and cross-range direction, and 6) instrument placement product images, which comprise a set of image products describing various aspects of instrument placement. For details on these products see Abarca et al. (2018).
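Two of these products can be derived with a few lines of vector arithmetic. The sketch below computes range from an XYZ image, and slope angle, heading, and magnitude from a surface normal in a +Z-down frame. The heading convention used here (azimuth of the normal's horizontal component, which points downhill, measured from +X toward +Y) is an assumption; Abarca et al. (2018) give the actual product definitions.

```python
import numpy as np


def range_image(xyz, origin):
    """Distance from `origin` (e.g. the camera center) for an H x W x 3 XYZ image."""
    return np.linalg.norm(np.asarray(xyz, dtype=float) - np.asarray(origin, dtype=float),
                          axis=-1)


def slope_from_normal(normal):
    """Return (slope angle deg, heading deg, magnitude) for one surface normal.

    The frame has +Z down (as in the Lander and Site frames); the normal is
    flipped if necessary so that it points up (negative Z). Magnitude is
    sin(slope angle). Heading is the azimuth of the normal's horizontal
    component, i.e. the downhill direction, from +X toward +Y (assumed convention).
    """
    n = np.asarray(normal, dtype=float)
    n = n / np.linalg.norm(n)
    if n[2] > 0:
        n = -n
    angle = np.degrees(np.arccos(np.clip(-n[2], -1.0, 1.0)))
    magnitude = np.hypot(n[0], n[1])
    heading = np.degrees(np.arctan2(n[1], n[0])) % 360.0
    return float(angle), float(heading), float(magnitude)


# A surface tilted 30 degrees, dipping toward +X.
angle, heading, magnitude = slope_from_normal([0.5, 0.0, -np.sqrt(0.75)])
```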

It should be noted that InSight stereo processing is performed on non-linearized images. Linearization is a process that removes geometric distortion, creating an image suitable for use with a pinhole camera model (CAHV), and performs epipolar alignment on the images. Epipolar alignment constrains the disparity between two images to a single dimension, with matching stereo features located in the same row of both images. To create a linearized, epipolar-aligned image, the location and orientation of the stereo partner must be known. This is relatively straightforward with two fixed stereo cameras: if an image exists from one camera of a stereo pair, the geometry for the other camera can be calculated without the presence of the other image, because of the fixed relationship between the two cameras. On InSight, linearization is more challenging because a single camera acquires the stereo images from varying positions and orientations that are not predictable in advance. Additionally, the use of the arm azimuth joint to create the stereo offset introduces a significant frame rotation between the left and right eyes, so linearized versions of these images must also be rotated, which introduces interpolation noise. For these reasons, stereo correlation is performed on raw, non-linearized image pairs. InSight is the first Mars surface mission to rely primarily on non-linearized stereo processing, in which raw, non-linearized image geometries are used for the primary downstream stereo products.

4.3 Pointing Correction

Because the stereo range error is proportional to the inverse of the stereo baseline (Chang et al. 1994), errors in the baseline knowledge propagate directly into the stereo range error. The absolute position error of the arm is on the order of 1 cm, which is too large to meet the end-to-end stereo accuracy requirement of 1 cm in the workspace. To reduce the stereo error, most stereo pairs are acquired by moving only the arm azimuth joint between images. This method constrains the degrees of freedom for the arm kinematics computations and thereby reduces the relative position knowledge error of the stereo baseline to approximately 5 mm.
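The dependence on baseline can be made concrete with the standard first-order error model for an idealized parallel stereo pair, R = fB/d with focal length f = 1/IFOV in pixels: a disparity error Δd gives ΔR ≈ R²·IFOV·Δd/B, and a baseline knowledge error ΔB gives ΔR ≈ R·ΔB/B. The sketch below evaluates both for a near-tier geometry; the 0.25 pixel matching error is an assumed value, and the IDC's rotational stereo geometry is only approximated by the parallel model.

```python
IFOV_RAD = 0.82e-3   # IDC angular resolution at image center (from the text)


def range_error_from_disparity(range_m, baseline_m, disparity_err_px=0.25,
                               ifov_rad=IFOV_RAD):
    """dR = R**2 * IFOV * dd / B (idealized parallel stereo geometry)."""
    return range_m ** 2 * ifov_rad * disparity_err_px / baseline_m


def range_error_from_baseline(range_m, baseline_m, baseline_err_m):
    """dR = R * dB / B: a fractional baseline error becomes the same fractional range error."""
    return range_m * baseline_err_m / baseline_m


R, B = 1.5, 0.225            # near-tier geometry: 1.5 m standoff, 22.5 cm baseline
matching = range_error_from_disparity(R, B)              # ~2 mm for 0.25 px matching error
baseline_5mm = range_error_from_baseline(R, B, 0.005)    # ~33 mm for 5 mm baseline error
```

Under these assumptions the baseline term dominates by an order of magnitude, which is why the telemetered arm pose alone is not enough.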

While this arm positioning technique improves the accuracy of the stereo data, the resulting accuracy is still not sufficient to meet the requirements. Figure 21 shows the results of an IDC DEM mosaic created using telemetered arm pointing. The shading discontinuities between the images are caused by imprecise pointing knowledge. In order to improve the stereo accuracy, the images must be “pointing corrected”. Pointing correction is a process in which features that are common to overlapping images (tiepoints) are identified and analyzed using bundle-adjustment techniques (Triggs et al. 1999). A bundle adjustment process minimizes the discrepancies between tiepoint observations by adjusting the position and orientation of each camera. For more details on the pointing correction techniques used on InSight, see Abarca et al. (2018).
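Full bundle adjustment jointly refines the positions and orientations of many cameras (and the tiepoint locations), which is beyond a short example. As a much-simplified illustration of correcting pointing from tiepoints, the sketch below solves only for a rotation of one camera, given unit rays to tiepoints from the telemetered pointing and from a reference solution, using the SVD-based Kabsch solution to Wahba's problem. Holding the camera position fixed and treating all rays as sharing one origin are assumptions that full bundle adjustment does not make.

```python
import numpy as np


def pointing_correction(cam_rays, ref_rays):
    """Rotation R minimizing sum_i ||R @ cam_rays[i] - ref_rays[i]||^2 (Kabsch/SVD)."""
    P = np.asarray(cam_rays, dtype=float)
    Q = np.asarray(ref_rays, dtype=float)
    U, _, Vt = np.linalg.svd(P.T @ Q)            # 3x3 cross-covariance
    d = np.sign(np.linalg.det(Vt.T @ U.T))       # guard against reflections
    return Vt.T @ np.diag([1.0, 1.0, d]) @ U.T


def rotation_zyx(rx, ry, rz):
    """Rotation matrix Rz @ Ry @ Rx for angles in radians (helper for the example)."""
    cx, sx = np.cos(rx), np.sin(rx)
    cy, sy = np.cos(ry), np.sin(ry)
    cz, sz = np.cos(rz), np.sin(rz)
    Rx = np.array([[1, 0, 0], [0, cx, -sx], [0, sx, cx]])
    Ry = np.array([[cy, 0, sy], [0, 1, 0], [-sy, 0, cy]])
    Rz = np.array([[cz, -sz, 0], [sz, cz, 0], [0, 0, 1]])
    return Rz @ Ry @ Rx


rng = np.random.default_rng(1)
cam = rng.normal(size=(20, 3))
cam /= np.linalg.norm(cam, axis=1, keepdims=True)    # unit rays to 20 tiepoints
true_error = rotation_zyx(0.004, -0.002, 0.010)      # hypothetical pointing error
ref = cam @ true_error.T                             # same tiepoints, reference pointing
correction = pointing_correction(cam, ref)
```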

4.4 Mosaics

The ISSWG activities are vastly facilitated by the use of image mosaics, in particular those showing overhead views of the deployment workspace. InSight IDC mosaics are generated in 6 projection types: 1) Cylindrical, with constant degrees per pixel in azimuth and elevation, 2) Point-perspective, using a pinhole camera model, 3) Cylindrical-perspective hybrid, useful for stereo panoramas, 4) Polar, an overhead view mapped to a sphere, with the origin at nadir, 5) Vertical, an overhead view with elevation angle mapped linearly to distance, and 6) Orthorectified, an overhead view with a true mapping of pixels to real-world locations. Details on these projection types can be found in Alexander et al. (2006) and Abarca et al. (2018).

The ISSWG groups the IDC mosaics into four categories, based on the use case during surface operations: 1) workspace mosaics show the instrument deployment areas reachable by the arm and are typically orthorectified and pointing-corrected, 2) deck mosaics show the state of the lander top deck, including instrument configuration, after landing, 3) placement confirmation mosaics show the instruments after placement onto the surface, and 4) the science mosaic, a full 360-degree panorama of the terrain around the lander. Figure 22 shows examples of typical InSight mosaics.

Mosaic Examples: (a) SEIS deployment mosaic, vertical projection, (b) deck panorama, cylindrical projection, (c) science panorama, cylindrical projection. See Fig. 23 for an example of a workspace mosaic

Of the four categories of mosaics, the workspace mosaic is the most critical to the ISSWG. Two workspace mosaics are planned for InSight, to be acquired for Phases 2 and 3 of ISSWG (Golombek et al. 2018). Both mosaics must be pointing-corrected. The first workspace mosaic consists of 4 tiers of images made up of 26 stereo image pairs, taken 1.5 m above the nominal ground plane. Its stereo baselines vary from 22.5 cm for the bottom tier (closest to the lander) to 9.1 cm for the top tier. The stereo baselines in each tier are constrained by the need to achieve sufficient overlap between frames while still providing the largest stereo baseline possible to maintain accuracy. While the camera height above the surface is the same in the four tiers, the camera elevation angles change considerably, varying from approximately −84 degrees in the lower (near) tier to −42 degrees in the upper (far) tier. This variation in elevation angle affects the size of the image footprint on the ground. As a result, the number of frames needed in each tier to cover the same angular extent increases from the lower to the upper tier (see Abarca et al. 2018 for the IDC stereo pair footprints shown in an ICC image). Only 3 frames are needed in the lower (near) tier, while 9 frames are required in the upper (far) tier. To achieve the same stereo overlap percentage across the tiers, the differences in azimuth angle between frames are correspondingly smaller in the far field than in the near field. Because the radial distance from the camera to the shoulder joint of the arm does not vary significantly across tiers, the difference in azimuth angle closely correlates with camera baseline distance. The net result is that the nearer tiers have longer camera baselines than the farther tiers.
In addition to the four tiers of images, four monoscopic “tiepoint” images are acquired (two between tiers 1 and 2, and two between tiers 2 and 3) to create additional overlap for tiepointing. The second workspace mosaic is acquired at a height of 1.2 m, covering the area(s) of interest for instrument placement. In both cases, the mosaics include parts of the lander deck, where fiducial markers are placed. These fiducials are at known locations in the Lander coordinate frame. They improve the absolute accuracy of the mosaic and help keep the bundle adjustment solution from drifting, which could happen without a known reference point. Figure 23 shows examples of workspace mosaics.
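
A flat-ground, pinhole-camera sketch shows how the near-tier and far-tier geometry differ. It assumes the camera is 1.5 m above a level plane, uses the 45° IDC field of view, and ignores camera roll. It reports where the lower and upper edges of the field of view meet the ground along the look direction. The `arc_baseline` helper expresses the stated link between azimuth step and baseline as the chord of a circle about the shoulder joint. The radius is left as an input because it is not given here. All names are hypothetical.

```python
import math
from typing import Tuple


def _ground_distance(height_m: float, angle_deg: float) -> float:
    """Horizontal distance from the point below the camera to where a ray at
    `angle_deg` (negative = below horizontal) meets flat ground."""
    if angle_deg >= 0.0:
        return math.inf  # a ray at or above the horizon never reaches flat ground
    # Depressions beyond 90 deg give negative values: the ray lands behind nadir.
    return height_m / math.tan(math.radians(-angle_deg))


def footprint_extent(height_m: float, elevation_deg: float,
                     fov_deg: float = 45.0) -> Tuple[float, float]:
    """Near and far ground distances covered by the vertical FOV of a pinhole camera."""
    half = fov_deg / 2.0
    near = _ground_distance(height_m, elevation_deg - half)  # lower edge of the FOV
    far = _ground_distance(height_m, elevation_deg + half)   # upper edge of the FOV
    return near, far


def arc_baseline(radius_m: float, delta_az_deg: float) -> float:
    """Chord between two camera positions on a circle about the shoulder joint."""
    return 2.0 * radius_m * math.sin(math.radians(delta_az_deg) / 2.0)


if __name__ == "__main__":
    for el in (-84.0, -42.0):  # near- and far-tier elevation angles from the text
        near, far = footprint_extent(1.5, el)
        print(f"el = {el:.0f} deg: ground from {near:+.2f} m to {far:.2f} m")
```

Under these assumptions, the −84° footprint straddles the point below the camera and spans roughly 1.3 m along the look direction. The −42° footprint extends from about 0.7 m to about 4.2 m.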

InSight workspace mosaic examples, created from stereo images acquired by an engineering model IDC in the InSight testbed at JPL. (a) Uncorrected color image mosaic, (b) Cartesian \(\mathit{XYZ}\) contours shown in red (\(X\)), green (\(Y\)) and blue (\(Z\)), with 10 cm spacing, (c) range contours, with 10 cm spacing, and (d) DEM, where darker tones are lower in elevation and lighter tones are higher. All data are from the same source images. The data gaps behind the rocks are due to occlusions; the gaps at the top of the image are due to obscuration caused by the arm/grapple. The terrain shown in this image is approximately 6 meters across by 4 meters high

Due to limited communications bandwidth from Mars, each of these two workspace mosaics will be downlinked from the lander over the course of several days. Each day, human analysts will add tiepoints from the newly received images and rerun the bundle adjustment to obtain an increasingly complete and accurate result. Pointing correction is expected to be applied to other mosaics as well, particularly the lander self-portrait (deck pan) mosaics. However, these corrections will be performed on a best-effort basis and are not part of the critical path for operations.

4.5 Polygonal Meshes

To facilitate viewing in 3D rendering software, notably RSVP (Trebi-Ollennu et al. 2018), stereo image data are rendered into 3D polygonal meshes (Fig. 24). Polygonal meshes are generated by creating surface polygons from \(\mathit{XYZ}\) point cloud images. These polygons are grouped together to form a surface, or “mesh”, that robotic arm operators can view during operations planning. In addition to allowing arm operators to view image data from multiple vantage points, meshes are used to check for collisions between arm/instrument hardware and the Martian surface.
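
One common way to build such a mesh from an organized XYZ image (one 3D point per pixel) is to split each 2×2 block of neighboring pixels into two triangles. The sketch below follows that approach and is not necessarily the algorithm used to produce InSight meshes. The 10 cm maximum edge length, which keeps the mesh from bridging range discontinuities such as the occluded areas behind rocks, is an arbitrary illustrative value. All names are hypothetical.

```python
import math
from typing import Dict, List, Optional, Tuple

Point = Tuple[float, float, float]
XYZImage = List[List[Optional[Point]]]  # None = no stereo match at that pixel
Triangle = Tuple[int, int, int]


def mesh_from_xyz(xyz: XYZImage,
                  max_edge_m: float = 0.1) -> Tuple[List[Point], List[Triangle]]:
    """Triangulate an XYZ image into a vertex list and a triangle index list.

    A triangle is kept only if all three pixels have valid XYZ values and no
    edge is longer than `max_edge_m`.
    """
    vertices: List[Point] = []
    vertex_of: Dict[Tuple[int, int], int] = {}
    for r, row in enumerate(xyz):
        for c, p in enumerate(row):
            if p is not None:
                vertex_of[(r, c)] = len(vertices)
                vertices.append(p)

    triangles: List[Triangle] = []
    for r in range(len(xyz) - 1):
        for c in range(len(xyz[0]) - 1):
            a, b, d, e = (r, c), (r, c + 1), (r + 1, c), (r + 1, c + 1)
            for tri in ((a, d, b), (b, d, e)):
                if not all(p in vertex_of for p in tri):
                    continue  # at least one corner has no stereo data
                pts = [vertices[vertex_of[p]] for p in tri]
                longest = max(math.dist(pts[0], pts[1]), math.dist(pts[1], pts[2]),
                              math.dist(pts[2], pts[0]))
                if longest <= max_edge_m:
                    triangles.append((vertex_of[tri[0]], vertex_of[tri[1]],
                                      vertex_of[tri[2]]))
    return vertices, triangles
```

Dropping long-edged triangles leaves holes at occlusions rather than filling them with surfaces that do not exist. This matters when the mesh is used for collision checks.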

4.6 Instrument Placement Products

Unique to InSight are a number of “instrument placement” products, which are used by the ISSWG to assess the suitability of an area of terrain for instrument placement. The instrument placement products contain information such as the calculated tilt of an instrument at each point in the workspace, the calculated roughness of the terrain under an instrument, the difference in tilt between the SEIS and WTS, and the extent of the reachable workspace as constrained by arm kinematics and lander tilt. These products do not describe the terrain directly; rather, they describe how the InSight instruments interact with the terrain. All of the instrument placement products use thresholds based on instrument deployment requirements to create a “goodness” status band for each product. These goodness bands are combined into a single overall goodness product for the instrument of interest. The full set of instrument placement products allows operators to find areas in the workspace that meet the defined criteria for instrument placement. Figure 25 shows examples of instrument placement products, where each pixel represents the placement state if the instrument grapple is placed above that point. Instrument placement products are typically displayed so that areas that pass thresholds are rendered in green. See Abarca et al. (2018) for a detailed discussion of the instrument placement products and their usage.
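
This section does not define how tilt and roughness are computed. The sketch below assumes that tilt is the slope of a least-squares plane fitted to the XYZ points under an instrument footprint, and that roughness is the peak-to-peak deviation of those points from that plane. Both definitions and the function names are illustrative assumptions; Abarca et al. (2018) describe the actual products. A SEIS/WTS delta-tilt value could then be derived from two such plane fits.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def _det3(m: List[List[float]]) -> float:
    """Determinant of a 3x3 matrix."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def fit_plane(points: Sequence[Point]) -> Tuple[float, float, float]:
    """Least-squares plane z = a*x + b*y + c from the normal equations, solved
    with Cramer's rule (needs at least three non-collinear points)."""
    sx = sum(p[0] for p in points)
    sy = sum(p[1] for p in points)
    sz = sum(p[2] for p in points)
    sxx = sum(p[0] * p[0] for p in points)
    syy = sum(p[1] * p[1] for p in points)
    sxy = sum(p[0] * p[1] for p in points)
    sxz = sum(p[0] * p[2] for p in points)
    syz = sum(p[1] * p[2] for p in points)
    normal = [[sxx, sxy, sx], [sxy, syy, sy], [sx, sy, float(len(points))]]
    rhs = [sxz, syz, sz]
    det = _det3(normal)
    solution = []
    for k in range(3):
        replaced = [row[:] for row in normal]
        for r in range(3):
            replaced[r][k] = rhs[r]  # replace column k with the right-hand side
        solution.append(_det3(replaced) / det)
    return solution[0], solution[1], solution[2]


def tilt_and_roughness(points: Sequence[Point]) -> Tuple[float, float]:
    """Tilt of the fitted plane (degrees) and peak-to-peak residual (units of z)."""
    a, b, c = fit_plane(points)
    tilt_deg = math.degrees(math.atan(math.hypot(a, b)))
    residuals = [z - (a * x + b * y + c) for x, y, z in points]
    return tilt_deg, max(residuals) - min(residuals)
```

Evaluating these functions with the footprint centered on each workspace pixel would give per-pixel tilt and roughness maps of the kind the text describes.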

Examples of Instrument Placement Products overlaid onto the IDC orthographic workspace mosaic shown in Fig. 23. (a) HP3 allowable placement workspace, (b) SEIS/WTS delta tilt placement, (c) HP3 roughness, and (d) SEIS goodness map. Green pixels generally indicate that placement criteria are met, orange indicates that some requirements are met but not all, and red indicates that two or more criteria are not met. Note that the placement products only measure geometrical constraints. For example, a flat rock embedded in sand with no relief would be acceptable from a geometric constraint standpoint, but would likely be ruled out by human evaluators using a multitude of additional criteria
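
The color scheme in this caption amounts to counting failed criteria. The sketch below assumes that the criteria for each pixel are evaluated independently and that the red condition (two or more failures) takes precedence over orange. The threshold names and values are hypothetical placeholders, not InSight requirements.

```python
from enum import Enum
from typing import Dict

# Hypothetical thresholds, for illustration only (not InSight requirement values).
HYPOTHETICAL_MAX_TILT_DEG = 15.0
HYPOTHETICAL_MAX_ROUGHNESS_M = 0.03


class Goodness(Enum):
    GREEN = "all placement criteria met"
    ORANGE = "one criterion not met"
    RED = "two or more criteria not met"


def placement_checks(tilt_deg: float, roughness_m: float,
                     reachable: bool) -> Dict[str, bool]:
    """Pass/fail status of each geometric criterion at one candidate grapple position."""
    return {
        "tilt": tilt_deg <= HYPOTHETICAL_MAX_TILT_DEG,
        "roughness": roughness_m <= HYPOTHETICAL_MAX_ROUGHNESS_M,
        "reachable": reachable,
    }


def goodness(checks: Dict[str, bool]) -> Goodness:
    """Collapse the per-criterion results into an overall color band."""
    failures = sum(1 for passed in checks.values() if not passed)
    if failures == 0:
        return Goodness.GREEN
    if failures == 1:
        return Goodness.ORANGE
    return Goodness.RED
```

Because only geometric criteria enter the count, a pixel marked green can still be rejected by human evaluators, as the caption notes.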

Radiometric Processing

Although detailed IDC and ICC radiometric processing is not required for instrument placement activities, basic radiometric processing is performed on the image data. The first step removes the effects of the non-linear companding lookup table (LUT) through a decompanding (or inverse LUT) operation. This operation expands the 8-bit telemetered image back to a linear 12-bit space. The result contains no more information than the 8-bit image, because only 256 distinct values are possible within the full 0–4095 range. The step is nevertheless important because a linear brightness space is required for subsequent radiometric and color processing.
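
The flight LUT is not reproduced in this section, so the sketch below assumes a square-root-shaped companding curve, a common choice for shot-noise-limited imagery. It builds the matching inverse table by mapping each 8-bit code to the midpoint of the 12-bit interval that produces it. The curve and the function names are assumptions.

```python
import math
from typing import List

MAX_DN12 = 4095  # full scale of the 12-bit linear space
MAX_CODE = 255   # full scale of the 8-bit companded space


def compand(dn12: float) -> int:
    """Hypothetical square-root companding of a 12-bit value to an 8-bit code."""
    return round(MAX_CODE * math.sqrt(dn12 / MAX_DN12))


def decompanding_lut() -> List[float]:
    """Inverse LUT: each 8-bit code maps to the middle of the 12-bit interval
    that rounds to it under `compand`."""
    table = []
    for code in range(MAX_CODE + 1):
        lo = MAX_DN12 * (max(code - 0.5, 0.0) / MAX_CODE) ** 2
        hi = MAX_DN12 * (min(code + 0.5, MAX_CODE) / MAX_CODE) ** 2
        table.append((lo + hi) / 2.0)
    return table
```

Applying the table is a single indexed lookup per pixel. The output still holds at most 256 distinct values, as the text notes, but they now lie on a linear scale.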

After conversion to a 12-bit linear space, the radiometric processing removes the pre-demosaic preboost factor described in Sect. 3.1.1. Flat field correction is then performed by dividing the image by a calibrated, normalized flat field obtained during ground calibration. This step removes image vignetting and variations in pixel-to-pixel responsivity, although the latter correction is imperfect due to the effects of compression and demosaicking. After flat field correction, the image is divided by the exposure time. This step is critical for mosaics and meshes, which are composed of images acquired using terrain-dependent autoexposure times that can vary considerably across a mosaic. Finally, the radiometric processing software multiplies each pixel by the estimated responsivity coefficient for its R, G, or B filter. The result is an approximately radiometrically calibrated product, expressed in \(\mbox{W}/\mbox{m}^{2}/\mbox{nm}/\mbox{sr}\).
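
The order of operations in this paragraph can be sketched per color plane as follows. The sketch assumes one scalar preboost factor and one conversion coefficient per R, G, B plane, and a flat field normalized to 1. It uses plain nested lists to stay dependency-free, and all names are hypothetical. The final multiplication follows the text's description of applying the per-filter coefficients multiplicatively.

```python
from typing import Dict, List

Image = List[List[float]]  # one color plane, linear 12-bit DN


def to_radiance(planes: Dict[str, Image], preboost: Dict[str, float],
                flat: Dict[str, Image], exposure_s: float,
                coeff: Dict[str, float]) -> Dict[str, Image]:
    """Apply the radiometric steps in the order described in the text."""
    radiance: Dict[str, Image] = {}
    for ch, plane in planes.items():
        result = []
        for row, flat_row in zip(plane, flat[ch]):
            result.append([
                value / preboost[ch]   # 1. undo the pre-demosaic preboost
                / flat_value           # 2. flat field: vignetting, pixel response
                / exposure_s           # 3. DN -> DN/s, so autoexposed frames agree
                * coeff[ch]            # 4. DN/s -> W/m^2/nm/sr
                for value, flat_value in zip(row, flat_row)
            ])
        radiance[ch] = result
    return radiance
```

Dividing by exposure time is what allows frames with different autoexposure settings to be combined. Under a linear detector response, two frames of the same scene taken at different exposure times give the same output once each frame's DN are divided by its own exposure time.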

4.7 Data Archiving

InSight data will be delivered to the Planetary Data System (PDS) six months after receipt of data on Earth. Imaging activities after the first six months of the mission are likely to be minimal, consisting mainly of tau measurements and long-term monitoring image data, but any data received will be released to PDS at regular intervals following the first release.

5 Summary

The InSight lander is scheduled to land on Mars in November 2018. The lander is equipped with two cameras: the robotic arm-mounted IDC and the lander-mounted ICC. Both cameras are MSL flight spare engineering cameras, upgraded from greyscale to RGB color by the InSight project. Images from the IDC and ICC will be processed and analyzed to support the deployment of the SEIS and HP3 instruments onto the Martian surface. In addition to the deployment activities, the cameras will acquire images of the local terrain around the lander and the Martian sky in support of scientific investigations.

References

H. Abarca et al., Image and data processing for InSight lander operations and science. Space Sci. Rev. (2018 this issue, submitted)

D.A. Alexander, R. Deen, P. Andres, P. Zamani, H. Mortensen, A. Chen, M. Cayanan, J. Hall, V. Klochko, O. Pariser, C. Stanley, C. Thompson, G. Yagi, Processing of Mars Exploration Rover imagery for science and operations planning. J. Geophys. Res. 111, E02S02 (2006). https://doi.org/10.1029/2005JE002462

W.B. Banerdt et al., The InSight mission for 2018. Lunar Planet. Sci. XLVIII, abs. 1896 (2017)

B.E. Bayer, Color Imaging Array, United States Patent #3,971,065 (1976)

J.F. Bell III, S.W. Squyres, K.E. Herkenhoff, J.N. Maki, H.M. Arneson, D. Brown, S.A. Collins, A. Dingizian, S.T. Elliot, E.C. Hagerott, A.G. Hayes, M.J. Johnson, J.R. Johnson, J. Joseph, K. Kinch, M.T. Lemmon, R.V. Morris, L. Scherr, M. Schwochert, M.K. Shepard, G.H. Smith, J.N. Sohl-Dickstein, R. Sullivan, W.T. Sullivan, M. Wadsworth, Mars Exploration Rover Athena Panoramic Camera (Pancam) investigation. J. Geophys. Res. 108(E12), 8063 (2003). https://doi.org/10.1029/2003JE002070

J.F. Bell III, J. Joseph, J.N. Sohl-Dickstein, H.M. Arneson, M.J. Johnson, M.T. Lemmon, D. Savransky, In-flight calibration and performance of the Mars Exploration Rover Panoramic Camera (Pancam) instruments. J. Geophys. Res. 111, E02S03 (2006). https://doi.org/10.1029/2005JE002444

C. Chang, S. Chatterjee, P.R. Kube, in Proceedings of 1st International Conference on Image Processing, 13–16 Nov. 1994, vol. 2, pp. 735–739. https://doi.org/10.1109/ICIP.1994.413668

D.S. Colburn, J.B. Pollack, R.M. Haberle, Diurnal variations in optical depth at Mars. Icarus 79, 159–189 (1989). https://doi.org/10.1016/0019-1035(89)90114-0

R.G. Deen, Cost savings through Multimission code reuse for Mars image products. Paper presented at 5th International Symposium on Reducing the Cost of Spacecraft Ground Systems and Operations, Deep Space Commun. and Navig. Syst. Cent. of Excellence (DASCANSO) (Jet Propulsion Laboratory, Pasadena, CA, 2003)

R.G. Deen, J.J. Lorre, Seeing in three dimensions: correlation and triangulation of Mars Exploration Rover imagery. Paper presented at 2005 International Conference on Systems, Man, and Cybernetics, Inst. of Electr. and Electron. Eng., Waikoloa, Hawaii, 2005

K.S. Edgett, R.A. Yingst, M.A. Ravine et al., Curiosity’s Mars Hand Lens Imager (MAHLI) investigation. Space Sci. Rev. 170, 259 (2012). https://doi.org/10.1007/s11214-012-9910-4

W.M. Folkner et al., The rotation and interior structure experiment on the InSight mission to Mars. Space Sci. Rev. 214, 100 (2018)

M.A. Gauvin, E.R. Freniere, Reducing Stray Light in Opto-Mechanical Systems (Lambda Research Corporation, Littleton, 2000)

D.B. Gennery, Least-squares camera calibration including lens distortion and automatic editing of calibration points, in Calibration and Orientation of Cameras in Computer Vision, ed. by A. Grun, T. Huang (Springer, Berlin, 2001), pp. 123–136. Chap. 5. ISBN 3-540-65283-3

D.B. Gennery, Generalized camera calibration including fish-eye lenses. Int. J. Comput. Vis. 68(3), 239–266 (2006). https://doi.org/10.1007/s11263-006-5168-1

M. Golombek, D. Kipp, N. Warner, I.J. Daubar, R. Fergason, R. Kirk, R. Beyer, A. Huertas, S. Piqueux, N.E. Putzig, B.A. Campbell, G.A. Morgan, C. Charalambous, W.T. Pike, K. Gwinner, F. Calef, D. Kass, M. Mischna, J. Ashley, C. Bloom, N. Wigton, T. Hare, C. Schwartz, H. Gengl, L. Redmond, M. Trautman, J. Sweeney, C. Grima, I.B. Smith, E. Sklyanskiy, M. Lisano, J. Benardini, S. Smrekar, P. Lognonné, W.B. Banerdt, Selection of the InSight landing site. Space Sci. Rev. 211, 5–95 (2017). https://doi.org/10.1007/s11214-016-0321-9

M. Golombek, M. Grott, G. Kargl, J. Andrade, J. Marshall, N. Warner, N.A. Teanby, H.E. Abarca, R.G. Deen, V. Ansan, E. Hauber, J. Voigt, R. Lichtenheldt, B. Knapmeyer-Endrun, A. Trebi-Ollennu, J. Singer, J. Maki, W.B. Banerdt et al., Geology and physical properties investigations by the InSight lander. Space Sci. Rev. 214, 84 (2018). https://doi.org/10.1007/s11214-018-0512-7

A.W. Gruen, E.P. Baltsavias, Geometrically constrained multiphoto matching. Photogramm. Eng. Remote Sens. 54(5), 633–641 (1988)