Abstract

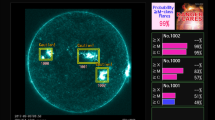

We propose a forecasting approach for solar flares based on data from Solar Cycle 24, taken by the Helioseismic and Magnetic Imager (HMI) on board the Solar Dynamics Observatory (SDO) mission. In particular, we use the Space-weather HMI Active Region Patches (SHARP) product, which provides cut-out magnetograms of solar active regions (AR) in near-realtime (NRT), taken over a five-year interval (2012 – 2016). Our approach utilizes a set of thirteen predictors, which are not included in the SHARP metadata, extracted from line-of-sight and vector photospheric magnetograms. We exploit several machine learning (ML) and conventional statistics techniques to predict flares of peak magnitude \({>}\,\mbox{M1}\) and \({>}\,\mbox{C1}\) within a 24 h forecast window. The ML methods used are multi-layer perceptrons (MLP), support vector machines (SVM), and random forests (RF). We conclude that random forests could be the prediction technique of choice for our sample, with the second-best method being multi-layer perceptrons, subject to an entropy objective function. A Monte Carlo simulation showed that the best-performing method gives accuracy \(\mathrm{ACC}=0.93(0.00)\), true skill statistic \(\mathrm{TSS}=0.74(0.02)\), and Heidke skill score \(\mathrm{HSS}=0.49(0.01)\) for \({>}\,\mbox{M1}\) flare prediction with probability threshold 15% and \(\mathrm{ACC}=0.84(0.00)\), \(\mathrm{TSS}=0.60(0.01)\), and \(\mathrm{HSS}=0.59(0.01)\) for \({>}\,\mbox{C1}\) flare prediction with probability threshold 35%.

1 Introduction

Solar flares are sudden brightenings that occur in the solar atmosphere and release enormous amounts of energy over the entire electromagnetic spectrum. Flares are quite prominent in X-rays, UV, and optical lines (Fletcher et al., 2011) and they are often (but not always) accompanied by eruptions that eject solar coronal plasma into interplanetary space (coronal mass ejections, CMEs). These very intense phenomena – the largest explosions in the solar system – are associated with regions of enhanced magnetic field, called active regions (AR), which correspond, in white light, to sunspot groups. Depending on their peak X-ray intensity, as recorded by the National Oceanic and Atmospheric Administration’s (NOAA) Geostationary Operational Environmental Satellite (GOES) system, flares are categorized in classes, the strongest and most important being X, M, and C (in decreasing order). Flare classification is logarithmic, with a base of 10, and is complemented by decimal sub-classes (M5.0, C3.2 etc.).
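The logarithmic classification can be made concrete with a short sketch. The lower flux bounds used below (in W m^-2, for the GOES 1 – 8 Å channel) follow the standard NOAA convention and are not stated in the text above; the function names are hypothetical and serve only as an illustration.

```python
# GOES soft X-ray classes and their lower flux bounds in W m^-2
# (standard NOAA convention; an assumption, not taken from the text).
GOES_CLASSES = [("X", 1e-4), ("M", 1e-5), ("C", 1e-6), ("B", 1e-7), ("A", 1e-8)]


def goes_class(peak_flux):
    """Return the GOES class string (e.g. 'M5.0') for a peak flux in W m^-2."""
    for letter, lower_bound in GOES_CLASSES:
        if peak_flux >= lower_bound:
            # The decimal sub-class is the flux in units of the class bound.
            return f"{letter}{peak_flux / lower_bound:.1f}"
    # Fluxes below the A-class bound are still reported on the A scale.
    return f"A{peak_flux / 1e-8:.1f}"


def at_least(peak_flux, letter, multiplier=1.0):
    """True if the flare reaches the given class, e.g. at_least(f, 'M') for M1."""
    bounds = dict(GOES_CLASSES)
    return peak_flux >= multiplier * bounds[letter]
```

For example, `goes_class(5e-5)` gives `'M5.0'` and `goes_class(3.2e-6)` gives `'C3.2'`: each class letter spans one decade of peak flux.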

Solar flare radiation may be detrimental to infrastructure, instruments, and personnel in space; flare forecasting is therefore an integral part of contemporary space-weather forecasting. Forecasting mainly employs measurements of the AR magnetic field in the solar photosphere. Magnetic-field-based predictors represent AR magnetic complexity or the energy budget available to power flares. Recent developments in instrumentation have led to a regular production of such measurements, offering the opportunity to produce extensive databases with properties suitable for solar flare prediction.

Machine learning (ML), meanwhile, has in recent years become an increasingly popular approach for performing cognitive tasks that were previously possible only with human intelligence. ML is a subfield of artificial intelligence (AI) that aims at using past data to train computers so that they can apply the accumulated knowledge to new, previously unseen, data. The acquisition of knowledge is the training phase, and the application of what was learned to future scenarios is the prediction phase. Typically, ML places more emphasis on prediction than conventional statistics does. ML can also interface with conventional statistics in a field called statistical learning (Hastie, Tibshirani, and Friedman, 2009). Learning is called supervised or unsupervised, depending on whether it is done with a teacher (i.e. with labeled examples) or not. Supervised learning comprises regression and classification, while unsupervised learning is typified by clustering. In our study, we focus on classification, where each set of input variables (predictors) belongs to one of two classes (binary classification). ML can be more powerful than traditional statistical techniques, such as generalized linear models (probit, logit, etc.) for binary classification, because it can model more complex nonlinear relationships. An introduction to ML research can be found in several textbooks (MacKay, 2003; Hastie, Tibshirani, and Friedman, 2009).

Several researchers have recently used ML techniques to effectively forecast solar flares. Most often, the techniques used were neural networks (Wang et al., 2008; Yu et al., 2009; Colak and Qahwaji, 2009; Ahmed et al., 2013), support vector machines (Li et al., 2008; Yuan et al., 2010; Bobra and Couvidat, 2015; Boucheron, Al-Ghraibah, and McAteer, 2015), ordinal logistic regression (Song et al., 2009), decision trees (Yu et al., 2009), and relevance vector machines (Al-Ghraibah, Boucheron, and McAteer, 2015). Very recently, random forests have also been used (Barnes et al., 2016; Liu et al., 2017).

We use predictors calculated from near-realtime (NRT) Space-weather HMI Active Region Patches (SHARP) data combined with state-of-the-art ML and statistical algorithms in order to effectively forecast flare events for an arbitrarily chosen 24-hour forecast window. Flare magnitudes of interest are \({>}\,\mbox{M1}\) and \({>}\,\mbox{C1}\). Prediction is binary, meaning that a flare of a given class is considered either to happen or not to happen within the 24 hours following the prediction. Our predictions are effective immediately, i.e. with zero latency. The analysis involves a comprehensive NRT SHARP sample including all calendar days between 2012 and 2016, at a cadence of 3 hours. Results in this work summarize the findings of the first 18 months of the “Flare Likelihood And Region Eruption foreCASTing” (FLARECAST) project and, while based on ongoing work, we have made every effort to present robust and unbiased results.

The contribution of the present work is twofold:

- The use of novel magnetogram-based predictors in a multi-parameter solar flare prediction model.

- The use of classic and novel ML techniques, such as multi-layer perceptrons (MLP), support vector machines (SVM), and especially, for one of the first times (see Footnote 1), random forests (RF), for the forecasting of \({>}\,\mbox{M1}\) and \({>}\,\mbox{C1}\) flares.

The application code is available at https://doi.org/10.17632/4f6z2gf5d6.1 , along with the benchmark dataset used in this work. The run time for all methods is on the order of a few minutes.

The analysis presented here is part of the EU Horizon 2020 FLARECAST project, aiming to develop an NRT online forecasting system for solar flares. The study is organized as follows: Section 2 describes the data selected to train and test the algorithms and presents the predictors we used, together with background information on the solar physics aspects of magnetogram-based calculations. Section 3 describes the ML algorithms in terms of their core principles, along with some additional remarks and comments. Section 4 is devoted to the forecast experiments and a comparison with similar published results and statistics. Section 5 presents the main conclusions and future integration of the present work in the FLARECAST operational system. Four appendices that describe multiple complementary aspects of this work are also included.

2 Data and Classification Predictors

2.1 Data

The Helioseismic and Magnetic Imager (HMI; Scherrer et al., 2012) on board the Solar Dynamics Observatory (SDO; Pesnell, Thompson, and Chamberlin, 2012) provides regular full-disk solar observations of the three components of the photospheric magnetic field. The HMI team has created the Space Weather HMI Active Region Patches (SHARPs), which are cut-outs of solar regions-of-interest along with a set of parameters that might be useful for solar flare prediction (Bobra et al., 2014). For our analysis, we use the near-realtime (NRT), cylindrical equal area (CEA) SHARP data to calculate a set of predictors.

To associate SHARPs with flare occurrence, we use the Geostationary Operational Environmental Satellite (GOES) soft X-ray measurements. For each SHARP we search for flares within the next 24 hours by either matching the NOAA AR numbers with those of the recorded flares or by comparing the corresponding longitude and latitude ranges, considering also the differential solar rotation.
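The NOAA-number branch of this association can be sketched as follows. This is a minimal illustration, not the FLARECAST implementation: the record layout `(start_time, noaa_number, peak_flux)`, the use of the flare start time, and the example flare list (invented for illustration) are all assumptions, and the position-based matching with differential rotation is omitted.

```python
from datetime import datetime, timedelta


def flare_label(obs_time, noaa_number, flares, min_flux, window_hours=24):
    """Return 1 if the AR produces a flare of at least min_flux (W m^-2)
    within window_hours after obs_time, else 0.

    flares: iterable of (start_time, noaa_number, peak_flux) tuples;
    this record layout is hypothetical.
    """
    window_end = obs_time + timedelta(hours=window_hours)
    for start_time, flare_noaa, peak_flux in flares:
        if (flare_noaa == noaa_number
                and obs_time < start_time <= window_end
                and peak_flux >= min_flux):
            return 1
    return 0


# Invented example records, for illustration only.
flares = [
    (datetime(2013, 10, 24, 10, 30), 11875, 2.1e-6),  # C2.1, inside the window
    (datetime(2013, 10, 26, 6, 0), 11875, 1.5e-5),    # M1.5, outside the window
]
obs = datetime(2013, 10, 24, 0, 0)
c_label = flare_label(obs, 11875, flares, min_flux=1e-6)  # C1 threshold
m_label = flare_label(obs, 11875, flares, min_flux=1e-5)  # M1 threshold
```

With these records the C-class label is 1 and the M-class label is 0, because the only M-class flare starts more than 24 hours after the observation.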

The algorithms of Section 3 were tested on a sample of the 2012 – 2016 SHARP dataset. We considered all days in the period October 1, 2012, to January 13, 2016, and for every given day, we computed the set of predictors (see Section 2.2) at a cadence of 3 hours, starting at 00:00 UT. For our analysis, only SHARP cut-outs that correspond to NOAA ARs were considered. In this way, we obtain a fairly representative sample of the solar activity, including several flares of interest, with a sufficiently high sampling frequency.

2.2 Predictors

The set of 13 predictors consists of both predictors that have previously been proposed in the literature and new ones, and comprises a subset of the parameter set developed for the FLARECAST project. In Figure 1 we show two sample magnetograms to demonstrate how the predictors reflect the complexity and size of the corresponding active region. The predictors used for this study are described below.

Figure 1. Two SHARP frames depicting ARs with very different levels of flaring activity. NOAA AR 11875 (left) produced 7 C-, 0 M-, and 0 X-class flares within 24 h, while NOAA AR 11923 (right) produced no flares. The two AR are scaled so as to retain their original relative size and, for comparison, vectors of the seven predictors used are included in the frames. The names of all \(K=7\) predictors [logR, FSPI, TLMPIL, DI, \(\mathrm{WL}_{\mathrm{SG}}\), IsinEn1, IsinEn2] are defined in Section 2.2. High values of the predictors statistically indicate a powerful AR (left), with low values indicating a quiescent, flare-quiet AR (right).

2.2.1 Magnetic Polarity Inversion Line (TLMPIL)

A magnetic polarity inversion line (MPIL) in the photosphere of an AR separates distinct patches of positive- and negative-polarity magnetic flux. Several studies have been carried out to investigate the relationship between flare occurrence and MPIL characteristics (Schrijver, 2007; Falconer et al., 2012). We determined a specific subset of an MPIL, also identified as MPIL*, that has i) a strong gradient in the vertical component of the field across the MPIL, and ii) a strong horizontal component of the field around the MPIL. MPIL* has been considered the single most likely place in an AR where magnetic instabilities, such as magnetic flux cancellation and/or magnetic flux rope formation (Fang et al., 2012), can take place. Such processes seem intimately related to flares. We used the total length \(L_{\mathrm{tot}}\) of MPIL* segments in active regions as an MPIL quantification parameter.

2.2.2 Decay Index (DI)

The decay index is a quantitative measure for the torus magnetic instability in a current-carrying magnetic flux rope (Kliem and Török, 2006). It has been found that the higher the value of the decay index in AR magnetic fields, the more likely a solar eruption involving a major solar flare (Zuccarello, Aulanier, and Gilchrist, 2015). We developed a decay index parameter defined as the ratio \(L_{\mathrm{hs}}/h_{\mathrm{min}}\), where \(L_{\mathrm{hs}}\) is the length of a highly sheared portion of an MPIL and \(h_{\mathrm {min}}\) is the minimum height at which the decay index achieves a purported critical value of 1.5. This ratio can be used to measure the degree of instability in a flux rope. Note that if there was more than one MPIL in an AR, we calculated the ratio \(L_{\mathrm {hs}}/h_{\mathrm{min}}\) for every MPIL and took the peak value at a given time, which represents the highest eruptive potential of the AR.
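The final reduction step, taking the peak ratio over all MPILs of an AR, can be sketched as below. The function name and the input layout are hypothetical, and returning 0.0 for an AR without any MPIL is an assumption of this sketch rather than something stated in the text.

```python
def decay_index_predictor(mpils):
    """Peak L_hs / h_min over all MPILs of an AR at one time step.

    mpils: list of (L_hs, h_min) pairs expressed in the same length unit.
    Returns 0.0 when no MPIL is present (an assumption of this sketch).
    """
    ratios = [l_hs / h_min for l_hs, h_min in mpils if h_min > 0]
    return max(ratios, default=0.0)
```

For two MPILs with `(L_hs, h_min)` equal to `(30.0, 40.0)` and `(50.0, 25.0)`, the predictor is the larger ratio, 2.0.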

2.2.3 Gradient-Weighted Integral Length of the Neutral Line (\(\mathit{WL}_{\mathit{SG}}\))

The gradient-weighted integral length of the neutral line, \(\mathrm{WL}_{\mathrm{SG}}\), is defined in Falconer, Moore, and Gary (2008) as

\[
\mathrm{WL}_{\mathrm{SG}} = \int \bigl|\nabla B_{z}\bigr| \,\mathrm{d}l, \tag{1}
\]

and corresponds to the line integral of the vertical-field (\(B_{z}\)) horizontal gradient over all neutral line (or MPIL) segments on which the potential horizontal field is greater than 150 G. This MPIL-related property has been reported to show a useful empirical association with the occurrence of solar eruptions (flares, CMEs, SPEs; Falconer et al., 2011, 2014) and is the main predictor used in the Magnetic Forecast (MAG4) forecasting service, developed at the University of Alabama ( http://www.uah.edu/cspar/research/mag4-page ).

For these calculations of \(\mathrm{WL}_{\mathrm{{SG}}}\), two approximations of the vertical field \(B_{z}\) are used: \(B_{\mathrm{los}}\) (line of sight; uncorrected) and \(B_{r}\), keeping in mind that in the former case, only values for regions located within \(30^{\circ}\) from the central meridian are considered accurate. For each magnetogram, an MPIL mask is determined as in the calculation of MPIL characteristics, described previously. In order to select the strong-horizontal field segments of MPILs, the potential field extrapolation method developed by Alissandrakis (1981) is used. Finally, the horizontal gradient of \(B_{z}\) is calculated numerically and integrated over all MPIL segments. The accuracy of the calculated values was estimated by comparing flare rates derived from our calculations of \(\mathrm{WL}_{\mathrm{SG}}\) (using Equation 4 along with the values in Table 1 in Falconer et al., 2011) with the flare rates from the text output of MAG4.
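The last step, numerically integrating the horizontal gradient of \(B_{z}\) over the MPIL segments, might look like the following sketch. It assumes that the strong-field MPIL mask has already been computed, uses central differences for the gradient, and lets each masked pixel contribute one pixel length to the line integral; these discretization choices and the function name are assumptions, not the project's actual implementation.

```python
import math


def wl_sg(bz, mpil_mask, pixel_size):
    """Approximate WL_SG = integral of |grad_h B_z| dl over MPIL pixels.

    bz: 2-D list of vertical-field values (G).
    mpil_mask: 2-D list of booleans marking strong-field MPIL pixels.
    pixel_size: pixel length (e.g. in Mm).
    Border pixels are skipped because central differences need neighbours.
    """
    n_rows, n_cols = len(bz), len(bz[0])
    total = 0.0
    for i in range(1, n_rows - 1):
        for j in range(1, n_cols - 1):
            if not mpil_mask[i][j]:
                continue
            # Central differences for the two horizontal derivatives.
            dbz_dy = (bz[i + 1][j] - bz[i - 1][j]) / (2.0 * pixel_size)
            dbz_dx = (bz[i][j + 1] - bz[i][j - 1]) / (2.0 * pixel_size)
            # Gradient magnitude times the path length of one pixel.
            total += math.hypot(dbz_dx, dbz_dy) * pixel_size
    return total
```

For a field that changes by 100 G per pixel across a straight MPIL three pixels long, the sketch returns 300 G, independent of the orientation of the MPIL.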

2.2.4 Ising Energy (IsinEn1, IsinEn2)

The Ising energy is a quantity that parameterizes the magnetic complexity of an AR (Ahmed et al., 2010). For a two-dimensional distribution of positive and negative interacting magnetic elements, the Ising energy is defined as

\[
E_{\mathrm{Ising}} = -\sum_{i,j} \frac{S_{i} S_{j}}{d^{2}}, \tag{2}
\]

where \(S_{i}\) (\(S_{j}\)) equals \(+1\) (−1) for positive (negative) pixels and \(d\) is the distance between opposite-polarity pairs. The interacting magnetic elements can be either the individual pixels with a minimum flux density value as in Ahmed et al. (2010) or the opposite-polarity partitions, produced using a flux-partitioning scheme (Barnes, Longcope, and Leka, 2005). The latter variation has been introduced for the first time in the FLARECAST project with promising results, and an assessment of its merit as a predictor is underway (Kontogiannis et al., in preparation). The Ising energy calculation produces four predictors, two for the line-of-sight magnetic field, and two for the radial magnetic field component.
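A minimal sketch of the pixel-based variant is given below, using the form \(-\sum S_{i} S_{j} / d^{2}\) over opposite-polarity pairs. The flux-density threshold, the use of pixel units for \(d\), and counting each pair once are assumptions made for illustration; the partition-based variant is not shown.

```python
def ising_energy(b, threshold):
    """Pixel-based Ising energy: -sum S_i S_j / d^2 over opposite-polarity pairs.

    b: 2-D list of flux densities; pixels with |b| < threshold are ignored.
    Distances are measured in pixel units and each pair is counted once.
    """
    positive = [(i, j) for i, row in enumerate(b)
                for j, value in enumerate(row) if value >= threshold]
    negative = [(i, j) for i, row in enumerate(b)
                for j, value in enumerate(row) if value <= -threshold]
    energy = 0.0
    for pi, pj in positive:
        for ni, nj in negative:
            d_squared = (pi - ni) ** 2 + (pj - nj) ** 2
            # S_i * S_j = (+1) * (-1) = -1 for an opposite-polarity pair.
            energy -= (+1) * (-1) / d_squared
    return energy
```

Because every opposite-polarity pair contributes \(+1/d^{2}\), closely packed mixed-polarity regions yield a larger value than well-separated bipoles, which is why the quantity serves as a complexity measure.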

2.2.5 Fourier Spectral Power Index (FSPI)

The spectral power index, \(\alpha\), corresponds to the power-law exponent in fitting the one-dimensional power spectral density \(E(k)\) extracted from magnetograms by the relation

\[
E(k) \sim k^{-\alpha}. \tag{3}
\]

This index parameterizes the power contained in magnetic structures of spatial scales \(l\) (\(=k^{-1}\)) belonging to the inertial range of magnetohydrodynamic (MHD) turbulence. Empirically, AR with spectral power index higher than \(5/3\) (Kolmogorov’s exponent for turbulence) are thought to display an overall high productivity of flares (e.g. see Guerra et al., 2015).

The spectral power index has been historically calculated from the vertical component of the photospheric magnetic field, as inferred from the line-of-sight component assuming perfectly radial magnetic fields. First, the magnetogram is processed using the fast Fourier transform (FFT). A two-dimensional power spectral density (PSD) is then obtained as

\[
E(k_{x}, k_{y}) = \bigl|\mathrm{FFT}\bigl[B(x,y)\bigr]\bigr|^{2}. \tag{4}
\]

In order to express \(E(k_{x}, k_{y})\) from the Fourier \(k_{x}\) and \(k_{y}\) to the isotropic wavenumber \(k = (k_{x}^{2} + k_{y}^{2})^{1/2}\), it is necessary to calculate \(E(k)'\) – the integrated PSD over angular direction in Fourier space. From this last step, the one-dimensional PSD is obtained as \(E(k) = 2\pi k E(k)'\). Finally, the power-law fit is performed as a linear fit in a logarithmic representation of \(E(k)\) versus \(k\) and \(\alpha\) is measured for the assumed turbulent inertial range of 2 – 20 Mm (i.e. \(0.05\,\mbox{--}\,0.5~\mbox{Mm}^{-1}\)).
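The final fitting step can be illustrated with a short sketch: an ordinary least-squares line through \(\log E(k)\) versus \(\log k\), restricted to the inertial range \(0.05\,\mbox{--}\,0.5~\mbox{Mm}^{-1}\). The function name is hypothetical, the one-dimensional PSD is assumed to be available already (the FFT and angular integration are not shown), and the test spectrum is synthetic.

```python
import math


def spectral_power_index(k, e_k, k_min=0.05, k_max=0.5):
    """Least-squares slope of log E(k) versus log k inside [k_min, k_max].

    k is in Mm^-1; returns alpha such that E(k) ~ k^(-alpha).
    """
    pts = [(math.log10(ki), math.log10(ei))
           for ki, ei in zip(k, e_k) if k_min <= ki <= k_max and ei > 0]
    n = len(pts)
    mean_x = sum(x for x, _ in pts) / n
    mean_y = sum(y for _, y in pts) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in pts)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in pts)
    # The fitted slope is -alpha, so flip the sign.
    return -sxy / sxx


# Synthetic Kolmogorov-like spectrum, used only to check the fit.
k = [0.01 * i for i in range(1, 101)]          # 0.01 ... 1.00 Mm^-1
e_k = [3.0 * ki ** (-5.0 / 3.0) for ki in k]
alpha = spectral_power_index(k, e_k)
```

On the synthetic spectrum the recovered `alpha` equals \(5/3\) to within rounding error, as expected for an exact power law.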

2.2.6 Schrijver’s \(R\) Value (logR)

The \(R\)-value property quantifies the unsigned photospheric magnetic flux near strong MPILs. The presence of such MPILs indicates that twisted magnetic structures carrying electrical currents have emerged into the AR through the solar surface. Therefore, \(R\) represents a proxy for the maximum free magnetic energy that is available for release in a flare. This property and its usefulness in forecasting was first investigated by Schrijver (2007).

The algorithm for calculating \(R\) is relatively simple, computationally inexpensive, and was originally developed to use line-of-sight magnetograms from the Michelson Doppler Imager (MDI) (Scherrer et al., 1995) on board the Solar and Heliospheric Observatory (SoHO). First, a bitmap is constructed for each polarity in a magnetogram, indicating where the magnitude of positive and negative magnetic flux densities exceeds the threshold value of \(\pm150~\mbox{Mx}\,\mbox{cm}^{-2}\). These bitmaps are then dilated by a square kernel of \(3 \times 3\) pixels, and the areas where the bitmaps overlap are defined as strong-field MPILs. This combined bitmap is then convolved with a Gaussian filter with a full-width at half-maximum (FWHM) of \({\approx}\,15\) Mm. This particular value is constrained by how far from MPILs flares are observed to occur in extreme ultraviolet images of the solar corona. Finally, the convolved bitmap is multiplied by the absolute flux value of the line-of-sight magnetogram, and \(R\) is calculated as the sum over all pixels. Note that since the \(R\) value was implemented by Schrijver (2007) for MDI magnetograms, the SHARP magnetograms were resampled to the spatial scale of MDI before the kernel application and subsequent calculations.
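The sequence of steps described above (polarity bitmaps, \(3 \times 3\) dilation, overlap, Gaussian weighting, flux-weighted sum) can be sketched in pure Python for a small array. This is an illustrative simplification: the Gaussian is left unnormalized, the sum is over flux density rather than flux (no pixel-area factor), the resampling to MDI resolution is omitted, and all function and parameter names are assumptions.

```python
import math


def dilate(mask):
    """Binary dilation with a 3x3 square kernel."""
    n_rows, n_cols = len(mask), len(mask[0])
    out = [[False] * n_cols for _ in range(n_rows)]
    for i in range(n_rows):
        for j in range(n_cols):
            if mask[i][j]:
                for di in (-1, 0, 1):
                    for dj in (-1, 0, 1):
                        ii, jj = i + di, j + dj
                        if 0 <= ii < n_rows and 0 <= jj < n_cols:
                            out[ii][jj] = True
    return out


def r_value(b_los, pixel_mm, threshold=150.0, fwhm_mm=15.0):
    """Schrijver-style R: |B_los| weighted by a Gaussian-smoothed MPIL map."""
    n_rows, n_cols = len(b_los), len(b_los[0])
    pos = dilate([[v > threshold for v in row] for row in b_los])
    neg = dilate([[v < -threshold for v in row] for row in b_los])
    # Strong-field MPIL pixels: where the dilated polarity bitmaps overlap.
    mpil = [(i, j) for i in range(n_rows) for j in range(n_cols)
            if pos[i][j] and neg[i][j]]
    # Convert the FWHM (Mm) to a Gaussian sigma in pixel units.
    sigma = fwhm_mm / (2.0 * math.sqrt(2.0 * math.log(2.0))) / pixel_mm
    total = 0.0
    for i in range(n_rows):
        for j in range(n_cols):
            # Direct (unnormalized) Gaussian convolution of the MPIL bitmap.
            weight = sum(math.exp(-((i - mi) ** 2 + (j - mj) ** 2)
                                  / (2.0 * sigma ** 2)) for mi, mj in mpil)
            total += weight * abs(b_los[i][j])
    return total
```

A purely unipolar patch has no overlapping bitmaps and therefore gives \(R=0\), whereas a patch with adjacent strong opposite polarities gives a positive value; the predictor name logR suggests that the logarithm of this sum is what enters the models.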

3 Machine-learning Algorithms and Conventional Statistics Models

The ML algorithms used in this study are MLPs, SVMs, and RFs. Among the hundreds of ML algorithms proposed for binary classification (e.g. Fernández-Delgado et al., 2014), these three categories of algorithms are representative of three important approaches in ML: i) artificial neural networks (ANN), ii) kernel-based methods, and iii) classification and regression trees. They were chosen for the present study for this reason, and in order to investigate whether RFs could bring any improvement in flare prediction over SVMs and MLPs. The RFs belong to the category of ensemble methods, while the MLPs use unconstrained optimization and SVMs use constrained optimization techniques (e.g. quadratic programming). In general, the working principle of ML comprises the following steps: i) train the model using a training set, ii) predict using the trained model and a testing set, and iii) check whether the algorithm predicted well, in what is called the validation of the overall ML procedure. For further study, we refer to Vapnik (1998), MacKay (2003), and Hastie, Tibshirani, and Friedman (2009).

3.1 Multi-layer Perceptrons

The MLP is a feed-forward network, thus it is described by the planar graph shown in Figure 2. It contains an input layer, a hidden layer, and an output layer of neurons. By the term neuron we denote a basic processing unit where inputs are summed using specific weights and the result is squashed via an activation function. The hidden layer might expand in a series of hidden layers. Nevertheless, the simplest MLP networks have just one hidden layer. In principle, the term hidden describes every layer that is neither the input nor the output layer, but resides in between, as presented in Figure 2. A sufficient number of hidden nodes allows the MLP to approximate any continuous nonlinear function of several inputs with a desired degree of accuracy (Hornik, Stinchcombe, and White, 1989), which is what characterizes the MLPs as universal approximators. It also holds that the greater the number of hidden nodes, the more complex the nonlinear function that can be approximated by the neural network with a desired degree of accuracy. The number of hidden nodes typically does not have to be more than twice the number of input nodes (or predictors). If too many hidden nodes are used, then the overfitting problem arises, which means that the MLP memorizes the sample observations and generalizes badly in the prediction phase. Usually, and in this study, the optimal number of hidden neurons (called size of the MLP) is determined with a fine-tuning procedure (e.g. cross-validation approach, see Section 4.2) before the training phase starts. The tuning phase is relatively time consuming, so it need not be executed every time the training starts. It can be conducted for a single realization of the training set.

An MLP network is a kind of nonlinear regression (classification) technique, equivalent to a nonlinear mapping from input \(\boldsymbol{I}\) to an output \(\boldsymbol{O} = \boldsymbol{O}(\boldsymbol{I}; \boldsymbol{\omega}, \boldsymbol{A})\). The output is a continuous function of the input and of the weights \(\boldsymbol{\omega}\). The network is described by a given architecture \(\boldsymbol{A}\), which typically defines the number of nodes in every layer (e.g. input, hidden, and output). In general, MLP networks can be used to solve regression and classification problems. The statistical model of an MLP neural network for binary outcome, as described in the following, is based on MacKay (2003). For a recent survey on neural networks, we refer to Prieto et al. (2016).

3.1.1 Classification Networks

We consider an MLP with \(l\) inputs called \(I_{l}\) and bias \(B_{1}\). The network also contains a single hidden layer with \(j\) hidden nodes \(H_{j}\) and bias \(B_{2}\). We have in general \(i\) outputs \(O_{i}\), while typically a single output is all that is needed (\(i=1\)).

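In the case of a classification problem, the propagation of the information from the inputs \(\boldsymbol{I}\) to the output \(\boldsymbol{O}\) is described by

\[
\begin{aligned}
\text{Hidden layer:}\quad a_{j}^{(1)} &= \sum_{l} \omega_{jl}^{(1)} I_{l} + B_{j}^{(1)}, & H_{j} &= f\bigl(a_{j}^{(1)}\bigr),\\
\text{Output layer:}\quad a_{i}^{(2)} &= \sum_{j} \omega_{ij}^{(2)} H_{j} + B_{i}^{(2)}, & O_{i} &= g\bigl(a_{i}^{(2)}\bigr),
\end{aligned} \tag{5}
\]

where, for example, \(f(\alpha) = \frac {1}{1+\mathrm{exp}(-\alpha)}\) and \(g(\alpha) = \frac {1}{1+\mathrm{exp}(-\alpha)}\).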

The index \(l\) is used for the inputs \(I_{1}, \dots, I_{L}\), the index \(j\) is used for the hidden units, and the index \(i\) is used for the outputs (\(i=1\)). The weights \(\omega_{jl}^{(1)}\), \(\omega_{ij}^{(2)}\), and biases \(B_{j}^{(1)}\) and \(B_{i}^{(2)}\) define the parameter vector \(\boldsymbol{\omega}\) to be estimated. The nonlinear logistic function \(f\) at the hidden layer (also known as activation function) helps the neural network approximate any generic continuous nonlinear function with a desirable degree of accuracy (Hornik, Stinchcombe, and White, 1989). Visually, a neural network can be represented as a series of layers consisting of nodes, where every node is connected to nodes of the subsequent layer only (feed-forward networks).
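The forward propagation through a single hidden layer can be sketched as follows, with logistic functions for both \(f\) and \(g\) and a single output. The argument names and the nested-list weight layout are choices of this sketch; it illustrates the mapping only, not the training performed by the nnet package.

```python
import math


def logistic(a):
    """Logistic squashing function 1 / (1 + exp(-a))."""
    return 1.0 / (1.0 + math.exp(-a))


def mlp_forward(inputs, w1, b1, w2, b2):
    """Single-hidden-layer MLP with logistic hidden and output units.

    w1[j][l]: weight from input l to hidden unit j; b1[j]: hidden biases;
    w2[j]: weight from hidden unit j to the single output; b2: output bias.
    """
    # Hidden layer: weighted sum of the inputs plus bias, then squashing.
    hidden = [logistic(sum(w * x for w, x in zip(w1_j, inputs)) + b1_j)
              for w1_j, b1_j in zip(w1, b1)]
    # Output layer: weighted sum of the hidden activations plus bias.
    return logistic(sum(w * h for w, h in zip(w2, hidden)) + b2)
```

With all weights and biases set to zero, every hidden unit outputs 0.5 and so does the network; because the output unit is logistic, the result always lies strictly between 0 and 1 and can be read as a flare probability.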

In the case of binary classification, the MLP is trained using a dataset of examples \(D = \{ \boldsymbol{I}^{(n)}, \boldsymbol{T}^{(n)} \}\) by adjusting \(\boldsymbol{\omega}\) in order to minimize \(G(\boldsymbol{\omega})\), the negative log-likelihood function,

\[
G(\boldsymbol{\omega}) = -\sum_{n=1}^{N} \Bigl[ \boldsymbol{T}^{(n)} \ln \boldsymbol{O}(\boldsymbol{I}^{(n)}; \boldsymbol{\omega}) + \bigl(1-\boldsymbol{T}^{(n)}\bigr) \ln \bigl(1-\boldsymbol{O}(\boldsymbol{I}^{(n)}; \boldsymbol{\omega})\bigr) \Bigr]. \tag{6}
\]

Note that \(\boldsymbol{I}^{(n)}\) is the matrix of the predictors and \(\boldsymbol{T}^{(n)}\) is the vector of the targets for observation \(n=1,\dots ,N\). In Equation 6, \(\boldsymbol{T}^{(n)}\) is 0 (1) for the negative (positive) class, respectively, and \(\boldsymbol{O}(\boldsymbol{I}^{(n)};\boldsymbol{\omega})\) is strictly between 0 and 1 (a probability); this is ensured by Equations 5.
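The negative log-likelihood (cross-entropy) objective, \(G = -\sum_{n} [T^{(n)} \ln O^{(n)} + (1-T^{(n)}) \ln(1-O^{(n)})]\), can be evaluated with a few lines. The function name is hypothetical, and any weight-decay penalty added during training is not included in this sketch.

```python
import math


def negative_log_likelihood(targets, outputs):
    """G = -sum_n [T ln O + (1 - T) ln(1 - O)] for targets in {0, 1}.

    outputs must lie strictly between 0 and 1, as guaranteed by a
    logistic output unit.
    """
    total = 0.0
    for t, o in zip(targets, outputs):
        total -= t * math.log(o) + (1 - t) * math.log(1.0 - o)
    return total
```

An uninformative network that always outputs 0.5 incurs \(\ln 2\) per observation, while confident correct outputs drive \(G\) towards zero and confident wrong outputs are penalized heavily.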

3.2 Support Vector Machines

The SVM variant we use is the \(C\)-Support Vector Classification (\(C\)-SVC) according to the widely used library LIBSVM (Chang and Lin, 2011; Meyer, Leisch, and Hornik, 2003).

Let us assume a vector of \(K\) predictor values at observation \(i\), \(\boldsymbol{x}_{i} \in R^{K}\), \(i=1,\dots,N\), which belongs to one of two classes, and an indicator vector \(\boldsymbol{y} \in R^{N}\) such that \(y_{i} \in\{1,-1\}\). Note that the positive class has the label \(+1\) and the negative class has the label −1. Then the \(C\)-SVC solves the optimization problem

\[
\begin{aligned}
\min_{\boldsymbol{\omega}, b, \boldsymbol{\xi}} \quad & \frac{1}{2} \boldsymbol{\omega}^{T}\boldsymbol{\omega} + C \sum_{i=1}^{N} \xi_{i} \\
\text{subject to} \quad & y_{i}\bigl(\boldsymbol{\omega}^{T}\phi(\boldsymbol{x}_{i}) + b\bigr) \geq 1 - \xi_{i}, \\
& \xi_{i} \geq 0, \quad i=1,\dots,N,
\end{aligned} \tag{7}
\]

where \(\phi(\boldsymbol{x}_{i})\) is an arbitrary unknown function that maps \(\boldsymbol{x}_{i}\) into a higher dimensional space, and \(C>0\) is the regularization parameter. The optimization in the \(C\)-SVC model is performed by changing the decision variables \(\boldsymbol{\omega}\), \(b\), and \(\boldsymbol{\xi}\). LIBSVM solves the dual of \(C\)-SVC, which depends on a quantity \(K(\boldsymbol{x}_{i},\boldsymbol{x}_{j}) = \phi(\boldsymbol{x}_{i})^{T} \phi(\boldsymbol{x}_{j})\), which is called the “kernel” function. While \(\phi(\boldsymbol{x}_{i})\) is unknown, the kernel function is known and is equal to the inner product of \(\phi(\boldsymbol{x}_{i})\) and \(\phi(\boldsymbol{x}_{j})\) for any pair of observations \(i\) and \(j\). This is the so-called kernel trick of the SVMs. As we show below, the kernel is a similarity measure and takes the maximum value of 1 when \(\mathrm{dist}(\boldsymbol{x}_{i},\boldsymbol{x}_{j})=0\).

We used the radial basis function (RBF; or Gaussian) kernel, which is defined as \(K(\boldsymbol{x},\boldsymbol{x}') = \mathrm{exp}(-\gamma\| \boldsymbol{x}-\boldsymbol{x}'\|^{2})\). A variant of the \(C\)-SVC model has been used for flare prediction in Bobra and Couvidat (2015).
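The behaviour of the RBF kernel as a similarity measure is easy to verify numerically. The following is a minimal sketch of the kernel formula itself, not of the LIBSVM solver; the function name is hypothetical.

```python
import math


def rbf_kernel(x, x_prime, gamma):
    """Gaussian (RBF) kernel exp(-gamma * ||x - x'||^2)."""
    squared_distance = sum((a - b) ** 2 for a, b in zip(x, x_prime))
    return math.exp(-gamma * squared_distance)
```

The kernel equals 1 for identical predictor vectors and decays towards 0 as their distance grows, with \(\gamma\) controlling how quickly the similarity falls off.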

For imbalanced datasets that account for rare events (e.g. in our case the \({>}\,\mbox{M1}\) flares), some researchers, e.g. Bobra and Couvidat (2015), have used two different values for the regularization parameter \(C\) in Equation 7, thereby penalizing the constraint violations for the minority class more strongly. These authors have used \(C_{1}\) and \(C_{2}\) with a ratio \(C_{2} / C_{1} \in\{2,15\}\), where \(C_{1}\) is the coefficient for the majority class (no events) and \(C_{2}\) is the coefficient for the minority class (events). While we generally use the SVM in the original unweighted version in Equation 7, in auxiliary runs we also experimented with using different values for \(C_{1}\) and \(C_{2}\) with a ratio \(C_{2} / C_{1} \in \{2,15,20\}\) to account for the imbalanced nature of the \({>}\,\mbox{M1}\) flares dataset.

3.3 Random Forests

The RF is a relatively recent ML method and was introduced by Breiman (2001). The RF approach is an ensemble of tree predictors, where we let each tree vote for the most popular class. It has been reported (Fernández-Delgado et al., 2014) that RF offers significant performance improvement over other classification algorithms. The RF approach relies on randomness and involves the concept of split purity and the Gini index for variable selection (Breiman et al., 1984).

According to Hastie, Tibshirani, and Friedman (2009), the goal of the RF algorithm is to randomly build a set (or ensemble) of trees by repeating the tree-formation process B times to create B trees. In particular, the algorithm i) chooses a bootstrap sample from the training data, and ii) grows a tree \(T_{b}\) on the bootstrapped sample by recursively applying the following two substeps at each node: Substep 1 selects m variables randomly out of the M variables, and Substep 2 splits the current node into two children nodes after selecting the best variable (and split point) from the m chosen ones. By repeating steps i) and ii) (where ii) consists of Substeps 1 – 2), the algorithm creates a set (called ensemble) of trees \(\{T_{b}\}_{1}^{B}\). Then, in the classification case studied in the present paper, a voting procedure over the trees \(T_{b}\) is followed in order to obtain the class prediction of the random forest.
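The three ingredients of the procedure (bootstrap sampling, random selection of m out of M variables, and majority voting) can be illustrated with a deliberately simplified forest in which every tree is a depth-1 stump chosen by Gini impurity. This is a teaching sketch with hypothetical names and an invented toy dataset, not the randomForest implementation: real trees are grown much deeper, with the variable subset redrawn at every node.

```python
import random
from collections import Counter


def gini(labels):
    """Gini impurity of a list of class labels."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def majority(labels):
    return Counter(labels).most_common(1)[0][0]


def fit_stump(X, y, m, rng):
    """Depth-1 tree: best Gini split among m randomly chosen variables."""
    best = None
    for var in rng.sample(range(len(X[0])), m):          # Substep 1
        for threshold in sorted({row[var] for row in X}):
            left = [yi for row, yi in zip(X, y) if row[var] <= threshold]
            right = [yi for row, yi in zip(X, y) if row[var] > threshold]
            if not left or not right:
                continue
            score = (len(left) * gini(left) + len(right) * gini(right)) / len(y)
            if best is None or score < best[0]:          # Substep 2
                best = (score, var, threshold, majority(left), majority(right))
    if best is None:                      # no valid split: constant predictor
        label = majority(y)
        return (0, float("inf"), label, label)
    return best[1:]


def fit_forest(X, y, n_trees, m, seed=0):
    rng = random.Random(seed)
    forest = []
    for _ in range(n_trees):
        idx = [rng.randrange(len(X)) for _ in X]          # bootstrap sample
        forest.append(fit_stump([X[i] for i in idx], [y[i] for i in idx], m, rng))
    return forest


def predict(forest, row):
    """Class prediction by majority vote over all trees."""
    votes = [left if row[var] <= thr else right for var, thr, left, right in forest]
    return majority(votes)


# Invented toy data: two informative predictors and one constant predictor.
X = [[0.1, 1.1, 0.0], [0.2, 1.2, 0.0], [0.3, 1.3, 0.0],
     [0.9, 1.9, 0.0], [1.0, 2.0, 0.0], [1.1, 2.1, 0.0]]
y = [0, 0, 0, 1, 1, 1]
forest = fit_forest(X, y, n_trees=25, m=2)
```

Replacing the hard vote by the fraction of trees voting for the positive class would give a probabilistic forecast to which a probability threshold can be applied.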

This is one of the first times that RF is used for flare forecasting. Other related works are Liu et al. (2017) and Barnes et al. (2016). Furthermore, three recent applications of RF in astrophysics have been reported by Vilalta, Gupta, and Macri (2013), Schuh, Angryk, and Martens (2015), and Granett (2017).

3.4 Implementation of ML Algorithms

3.4.1 Multi-layer Perceptrons

The MLPs were implemented using the R programming language and the nnet package (Venables and Ripley, 2002). The options used were \(\mbox{linout}=\mbox{FALSE}\), to ensure that sigmoid activation functions are used at the output node; entropy = TRUE, to ensure that the negative log-likelihood objective function is minimized during the training phase (and not the default sum of squares error (\(\mathrm{SSE}\)) criterion); and size = iNode, where \(\mathrm{iNode}\) for both \({>}\,\mbox{M1}\) flares and for \({>}\,\mbox{C1}\) flares was chosen with a tuning procedure.

3.4.2 Support Vector Machines

Support vector machines were implemented using the R programming language and the e1071 package (Meyer et al., 2015). The option used was probability = TRUE, in order to obtain probability estimates for every element of the training set as well as probability estimates for every element of the testing set.

3.4.3 Random Forests

Random forests were implemented using the randomForest package (Liaw and Wiener, 2002) in the R programming language. The options used were importance = TRUE, to create importance information for every predictor, and na.action = na.omit, to exclude records with missing predictor values, which appeared in preliminary versions of the dataset but are absent from the final version.

3.5 Conventional Statistics Models

The non-ML (statistical) methods also considered are i) linear regression (LM), ii) probit regression (PR), and iii) logit regression (LG). Although multiple linear regression is known to be ill-suited to binary outcomes because it can yield probabilistic predictions outside the interval \([0,1]\), we still included it in the array of tested methods. The reason is that some practitioners still use it for binary outcomes (calling it linear probability model (LPM), see Greene, 2002) and there is always interest in considering ordinary least squares (OLS) as an entry-level method for any regression analysis. An interesting article about the lack of use of probit and logit in astrophysics modeling is de Souza et al. (2015). The statistical algorithms were implemented in the statistical programming language R using the lm and glm functions.

For a description of these well-known methods we refer to Greene (2002) and Winkelmann and Boes (2006).
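The difference between the three models lies in how a linear predictor \(\eta\) is mapped to a probability. The sketch below shows only these mappings (identity for the linear probability model, the logistic function for logit, and the standard normal cumulative distribution function for probit); the function names are hypothetical and no fitting is performed.

```python
import math


def linear_probability(eta):
    """Linear probability model: no squashing, so values may leave [0, 1]."""
    return eta


def logit_probability(eta):
    """Logit model: logistic function of the linear predictor."""
    return 1.0 / (1.0 + math.exp(-eta))


def probit_probability(eta):
    """Probit model: standard normal CDF of the linear predictor."""
    return 0.5 * (1.0 + math.erf(eta / math.sqrt(2.0)))
```

For \(\eta = 1.7\), for instance, the linear probability model returns 1.7, which is not a valid probability, whereas logit and probit both return values strictly between 0 and 1.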

4 Data Preparation, Results, and Discussion

First, we implement ML predictions on \({>}\,\mbox{M1}\) flares. Second, we use statistical methods for the prediction of \({>}\,\mbox{M1}\) flares. Third, we predict \({>}\,\mbox{C1}\) flares with ML algorithms. Finally, we predict \({>}\,\mbox{C1}\) flares with the statistical algorithms. The following subsections describe these four experiments, presenting at first a single combination of a training/testing set for every flare class and category of techniques.

Results are presented for the prediction step in terms of i) skill score profiles (SSP) of ACC, TSS, and HSS as functions of the probability threshold, ii) receiver operating characteristic (ROC) curves, and iii) reliability diagrams (RD) for all methods (for an explanation of the metrics ACC, TSS, and HSS, and of ROC curves and reliability diagrams, see Section 4.3). Skill score profiles were created by a code we developed in R, ROC curves were created using the ROCR package (Sing et al., 2005), and reliability diagrams were created using the verification package (Laboratory, 2015).
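A skill score profile is obtained by thresholding the forecast probabilities and evaluating the scores at each threshold. The sketch below uses the standard contingency-table definitions of ACC, TSS, and HSS, which are assumed here to match those of Section 4.3; the function names and the convention that a probability equal to the threshold counts as a positive forecast are assumptions.

```python
def confusion_counts(probabilities, labels, threshold):
    """Count TP, FP, FN, TN for a given probability threshold."""
    tp = fp = fn = tn = 0
    for p, y in zip(probabilities, labels):
        predicted = 1 if p >= threshold else 0
        if predicted == 1 and y == 1:
            tp += 1
        elif predicted == 1 and y == 0:
            fp += 1
        elif predicted == 0 and y == 1:
            fn += 1
        else:
            tn += 1
    return tp, fp, fn, tn


def skill_scores(tp, fp, fn, tn):
    """Return (ACC, TSS, HSS) from the contingency-table counts."""
    n = tp + fp + fn + tn
    acc = (tp + tn) / n
    tss = tp / (tp + fn) - fp / (fp + tn)
    hss = (2.0 * (tp * tn - fp * fn)
           / ((tp + fn) * (fn + tn) + (tp + fp) * (fp + tn)))
    return acc, tss, hss


def skill_score_profile(probabilities, labels, thresholds):
    """ACC, TSS, and HSS as functions of the probability threshold."""
    return [(t, *skill_scores(*confusion_counts(probabilities, labels, t)))
            for t in thresholds]
```

Because TSS is built from rates within the event and no-event classes, it does not depend on the class imbalance, whereas ACC can be high for rare events simply by forecasting "no flare".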

All algorithms were implemented and run using the R programming language \(3.3.2\) (R Core Team, 2016) and the RStudio 0.99 IDE.

4.1 Data Pre-processing

The data comprise the \(K=7\) predictors [logR, FSPI, TLMPIL, DI, \(\mathrm{WL}_{\mathrm{{SG}}}\), IsinEn1, and IsinEn2] described in Section 2.2 and computed using either the line-of-sight magnetograms, \(B_{\mathrm {los}}\), of SHARP data or the respective radial component, \(B_{r}\) (Bobra et al., 2014). Hence, we tested \(K = 2 \times6 + 1 = 13\) predictors (see Footnote 2). The sample comprised \(N=23{,}134\) observations, randomly split in half into \(N_{1}=11{,}567\) observations for the training set and \(N_{2}=11{,}567\) observations for the testing set. The random split was repeated for 200 replications, and all six prediction algorithms (i.e. MLP, SVM, RF, LM, probit, and logit) of Section 3 were trained and tested on identical training and testing sets. The metrics ACC, TSS, and HSS of Section 4.3 were always computed for the testing (out-of-sample) set. We standardized all predictor variables to have a mean equal to 0 and a standard deviation equal to 1 because several ML algorithms involve non-linear optimization (e.g. MLPs). This helps to better train the ML algorithms and also makes it easier to interpret the effect of every predictor variable on the studied outcome in the case of the statistical models LM, probit, and logit.
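The two pre-processing steps described above, the random half split and the standardization, can be sketched in Python as follows. This is only an illustration under stated assumptions: the helper names, the seeds, and the synthetic predictor column are hypothetical, and the use of the sample standard deviation is our assumption, since the text does not say which estimator was used.

```python
import random
import statistics


def split_half(n_obs, seed):
    """Randomly split the indices 0..n_obs-1 into a training half and a testing half."""
    rng = random.Random(seed)
    indices = list(range(n_obs))
    rng.shuffle(indices)
    half = n_obs // 2
    return indices[:half], indices[half:]


def standardize(column):
    """Rescale one predictor column to zero mean and unit standard deviation."""
    mean = statistics.fmean(column)
    sd = statistics.stdev(column)  # sample standard deviation (assumption)
    return [(x - mean) / sd for x in column]


N = 23134  # sample size quoted in the text
train_idx, test_idx = split_half(N, seed=1)  # one of the 200 replications

# Hypothetical, synthetic predictor values used only to exercise standardize().
rng = random.Random(0)
predictor = [rng.lognormvariate(0.0, 1.0) for _ in range(1000)]
z = standardize(predictor)

print(len(train_idx), len(test_idx))
print(statistics.fmean(z), statistics.stdev(z))
```

Repeating `split_half` with 200 different seeds would mimic the replications of the Monte Carlo experiment, with every algorithm seeing the same index sets in a given replication.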

4.2 Tuning of ML Algorithms

As with any parameterized algorithm (e.g. simulated annealing, evolutionary algorithms, and other metaheuristics), the performance of ML algorithms depends on a number of crucial parameters that need to be fine-tuned before the application of the ML procedure (e.g. training, testing, and validation steps). The optimal tuning of ML algorithms is still largely an open question in the ML community and poses a great challenge for any practitioner. Choosing the options of the ML algorithms themselves is similar to choosing the parameters of other numerical models (e.g. MHD models), where the analyst also has to explore the parameter space of several crucial parameters before conducting numerical MHD simulations. The algorithms MLP, SVM, and RF have their critical hyperparameters (i.e. parameters that are critical for the forecasting performance of every algorithm) tuned via a 10-fold cross-validation study that uses only one realization of the training set. The set of plausible values for every ML algorithm is as follows: i) MLP: size (number of hidden neurons) \(\in\{4,13,26\} \) and decay (weight decay parameter) \(\in\{10^{-3},10^{-2},10^{-1}\}\), ii) SVM: \(\gamma\) (parameter in the RBF (or Gaussian) kernel) \(\in\{ 10^{-6}, 10^{-5}, 10^{-4}, \dots, 10^{-1}\}\) and cost (regularization parameter) \(\in\{10,100\}\), and iii) RF: mtry (number of variables randomly sampled as candidates at each split) \(\in\{ \lfloor\sqrt{K} \rfloor= 3\}\) and ntree (number of trees to grow) \(\in\{500\}\).

We tuned only the MLP and SVM classifiers because the default RF values mtry = 3 and ntree = 500 immediately provided satisfactory results. Tuning of the MLP and SVM was mostly needed in the \({>}\,\mbox{M1}\) flares case, which was found harder to predict than \({>}\,\mbox{C1}\) flares, but was also performed in the \({>}\,\mbox{C1}\) flares case. Thus, the hyperparameters for MLP and SVM needed tuning because, for example, the default values \(\gamma = 1\) and \(\mbox{cost} = 1\) for SVM provided unsatisfactory results. We used the tune.nnet and tune.svm functions of the R package e1071 to tune the MLP and SVM, respectively. After the tuning, both MLP and SVM improved their performance significantly.

For the \({>}\,\mbox{M1}\) flares, the selected values are \(\mbox{size} = 26\) and \(\mbox{decay} = 0.1\) for the MLP and \(\gamma = 0.1\) and \(\mbox{cost} = 10\) for the SVM. These values are used throughout the remainder of this work. For the \({>}\,\mbox{C1}\) flares case, the selected values are \(\mbox{size} = 4\) and \(\mbox{decay} = 0.1\) for the MLP and \(\gamma = 0.001\) and \(\mbox{cost} = 100\) for the SVM.
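To make the size of the tuning problem in this section concrete, the following Python sketch enumerates the candidate grids quoted above and builds a 10-fold partition of the training set. It does not fit any model (the study used tune.nnet and tune.svm from the R package e1071). The helper names and the fold-assignment rule are hypothetical illustrations.

```python
from itertools import product

# Candidate values quoted in Section 4.2.
mlp_grid = {"size": [4, 13, 26], "decay": [1e-3, 1e-2, 1e-1]}
svm_grid = {"gamma": [1e-6, 1e-5, 1e-4, 1e-3, 1e-2, 1e-1], "cost": [10, 100]}


def expand(grid):
    """Return every combination of hyperparameter values as a list of dicts."""
    names = list(grid)
    return [dict(zip(names, values)) for values in product(*(grid[n] for n in names))]


def fold_indices(n_obs, n_folds=10):
    """Assign observation i to fold i % n_folds (assumes rows are already shuffled)."""
    folds = [[] for _ in range(n_folds)]
    for i in range(n_obs):
        folds[i % n_folds].append(i)
    return folds


mlp_candidates = expand(mlp_grid)
svm_candidates = expand(svm_grid)
folds = fold_indices(11567)  # size of one training set

# Each candidate is fitted once per fold in a 10-fold cross-validation.
print(len(mlp_candidates), "MLP candidates ->", len(mlp_candidates) * len(folds), "fits")
print(len(svm_candidates), "SVM candidates ->", len(svm_candidates) * len(folds), "fits")
```

The values selected in the text, for instance size = 26 and decay = 0.1 for the MLP in the \({>}\,\mbox{M1}\) case, are members of these grids.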

4.3 Comparison Metrics

A wide variety of metrics exists to characterize the quality of binary classification, and no single one is fit for all purposes. Metrics are of two types, suitable for either categorical or probabilistic classification. In the former case, the model returns a strict class membership; in the latter case, it returns a probability of membership. In this section we concentrate on categorical forecast metrics for binary classification. In what follows, let \(\mathrm{ACC}\) denote accuracy, TSS denote true skill statistic, and HSS denote Heidke skill score. These metrics are derived from the so-called contingency table or confusion matrix, a representation of which is provided in Table 1.

Table 1 includes true positives (TP; events predicted and observed), true negatives (TN; events not predicted and not observed), false positives (FP; events predicted but not observed), and false negatives (FN; events not predicted but observed), where \(N = \mathrm{TP} + \mathrm{FP}+ \mathrm{FN}+ \mathrm {TN}\) is the sample size. From these elements, the meaning of ACC is the proportion correct, namely the number of correct forecasts of both event and non-event, normalized by the total sample size,

\[\mathrm{ACC} = \frac{\mathrm{TP} + \mathrm{TN}}{N}.\]

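The TSS (Hanssen and Kuipers, 1965) compares the probability of detection (POD) to the probability of false detection (POFD),

\[\mathrm{TSS} = \mathrm{POD} - \mathrm{POFD} = \frac{\mathrm{TP}}{\mathrm{TP} + \mathrm{FN}} - \frac{\mathrm{FP}}{\mathrm{FP} + \mathrm{TN}}.\]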

Moreover, the TSS is the maximum vertical distance from the diagonal in the ROC curve, which relates the POD and POFD for different probability thresholds; see Section 4. The TSS covers the range from −1 up to \(+1\), while the value of zero indicates lack of skill. Values below zero are linked to forecasts behaving in a contrary way, namely mixing the role of the positive class with the role of the negative class. In any negative TSS value, by exchanging the roles of YES and NO events, we can obtain the corresponding positive TSS value that would be identical in absolute value terms with the negative TSS value.

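The HSS (Heidke, 1926) measures the fractional improvement of the forecast over the random forecast,

\[\mathrm{HSS} = \frac{2\,(\mathrm{TP} \times \mathrm{TN} - \mathrm{FP} \times \mathrm{FN})}{(\mathrm{TP} + \mathrm{FN})(\mathrm{FN} + \mathrm{TN}) + (\mathrm{TP} + \mathrm{FP})(\mathrm{FP} + \mathrm{TN})},\]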

which ranges from \(- \infty\) to 1. Any negative value means that the random forecast is better, a zero value means that the method has no skill over the random forecast, and an ideal forecast method provides an HSS value equal to 1.
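The three categorical metrics can be computed directly from the four entries of the contingency table. The Python sketch below assumes the standard definitions (ACC as proportion correct, TSS as POD minus POFD, and the HSS form that the text later identifies with \(\mathrm{HSS}_{2}\) of Bobra and Couvidat, 2015). The function name and the counts are hypothetical.

```python
def skill_scores(tp, fp, fn, tn):
    """Return ACC, TSS, and HSS for one 2x2 contingency table."""
    n = tp + fp + fn + tn
    acc = (tp + tn) / n
    pod = tp / (tp + fn)    # probability of detection
    pofd = fp / (fp + tn)   # probability of false detection
    tss = pod - pofd
    hss = 2.0 * (tp * tn - fp * fn) / (
        (tp + fn) * (fn + tn) + (tp + fp) * (fp + tn)
    )
    return {"ACC": acc, "TSS": tss, "HSS": hss}


# Hypothetical counts for a rare-event forecast (N = 1000, 50 observed events).
scores = skill_scores(tp=40, fp=60, fn=10, tn=890)

# Exchanging the roles of YES and NO forecasts flips the sign of the TSS.
swapped = skill_scores(tp=10, fp=890, fn=40, tn=60)

print(scores)
print(swapped)
```

The second call illustrates the remark above on negative TSS values: relabeling every YES forecast as NO, and vice versa, gives a TSS of equal magnitude and opposite sign.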

The TSS and HSS metrics are among the most popular metrics for comparison purposes in Meteorology and Space Weather and were conceptually compared in Bloomfield et al. (2012). In probabilistic forecasting, such as that of solar flares, they must be evaluated at a chosen probability threshold and thus appear as functions of this threshold.

To summarize, ACC is the most popular classification metric, but in rare events such as flares \({>}\,\mbox{M1}\), the ACC can be artificially high for the naive model, which will always predict the majority class (“no event”). Thus, TSS and HSS are more suitable for flare prediction. Moreover, TSS has the advantage of being invariant to the frequency of events in a sample (e.g. see Bloomfield et al., 2012). Typically, both TSS and HSS need to be evaluated for a given probability threshold in order to assess the merit of a given probabilistic forecasting model, such as those we develop in this study.
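A skill score profile of the kind discussed above can be sketched in Python by converting forecast probabilities to YES/NO forecasts on a 5% threshold grid and recomputing the scores at each step. The forecast probabilities and outcomes below are hypothetical toy data, and the convention that an event is forecast when the probability is greater than or equal to the threshold is our assumption.

```python
def contingency(probs, observed, threshold):
    """Count TP, FP, FN, TN when an event is forecast whenever p >= threshold."""
    tp = fp = fn = tn = 0
    for p, y in zip(probs, observed):
        forecast = p >= threshold
        if forecast and y:
            tp += 1
        elif forecast:
            fp += 1
        elif y:
            fn += 1
        else:
            tn += 1
    return tp, fp, fn, tn


def ratio(num, den):
    """Safe division: return 0.0 when the denominator vanishes."""
    return num / den if den else 0.0


def skill_score_profile(probs, observed, step_percent=5):
    """Return rows (threshold in %, ACC, TSS, HSS) on a regular threshold grid."""
    rows = []
    for percent in range(0, 101, step_percent):
        tp, fp, fn, tn = contingency(probs, observed, percent / 100)
        acc = (tp + tn) / (tp + fp + fn + tn)
        tss = ratio(tp, tp + fn) - ratio(fp, fp + tn)
        hss = ratio(2 * (tp * tn - fp * fn),
                    (tp + fn) * (fn + tn) + (tp + fp) * (fp + tn))
        rows.append((percent, acc, tss, hss))
    return rows


# Hypothetical forecast probabilities and observed outcomes (1 = flare).
probs = [0.02, 0.06, 0.08, 0.12, 0.18, 0.22, 0.36, 0.56, 0.71, 0.91]
observed = [0, 0, 0, 0, 1, 0, 0, 1, 1, 1]

rows = skill_score_profile(probs, observed)
best_tss = max(rows, key=lambda row: row[2])
for row in rows:
    print("threshold=%3d%%  ACC=%.2f  TSS=%.2f  HSS=%.2f" % row)
```

At the 100% threshold the sketch reproduces the naive "no event" forecast: its accuracy equals the fraction of non-events, while TSS and HSS are both zero.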

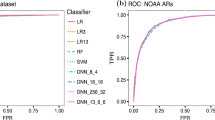

Regarding the probabilistic assessment of classifiers, we used the visual approaches of receiver operating characteristic (ROC) curves and reliability diagrams (RD) (e.g. see Section 4). The ROC describes the relationship between the POD and the POFD for different probability thresholds (e.g. see Figure 3b). The area under the curve (AUC) in the ROC has an ideal value of one. The RD describes the relationship between the returned probabilities by the model and the actual observed frequencies of the data. A binning approach is used to construct the RD, in which probabilities are assigned to intervals of arbitrary length (for example, we used 20 bins of length 0.05 each). For an example of RD, see Figure 3c. To algebraically assess the probabilistic performance of classifiers, we also used the Brier score (BS) (Brier, 1950) and Brier skill score (BSS) (Wilks, 2011), as well as the AUC (Marzban, 2004).
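The probabilistic measures mentioned above can be sketched in Python as follows. The sketch assumes the usual definitions: BS as the mean squared difference between forecast probability and outcome, BSS relative to a constant climatological forecast, AUC as the fraction of correctly ordered event/non-event pairs, and reliability bins of equal width. The function names and the four-point toy sample are hypothetical.

```python
def brier_score(probs, observed):
    """Mean squared difference between forecast probability and outcome (0 or 1)."""
    return sum((p - y) ** 2 for p, y in zip(probs, observed)) / len(probs)


def brier_skill_score(probs, observed):
    """BSS against climatology; assumes both classes occur in the sample."""
    climatology = sum(observed) / len(observed)
    bs_reference = brier_score([climatology] * len(observed), observed)
    return 1.0 - brier_score(probs, observed) / bs_reference


def auc(probs, observed):
    """Fraction of (event, non-event) pairs ranked correctly; ties count one half."""
    events = [p for p, y in zip(probs, observed) if y]
    non_events = [p for p, y in zip(probs, observed) if not y]
    wins = sum(1.0 if e > n else 0.5 if e == n else 0.0
               for e in events for n in non_events)
    return wins / (len(events) * len(non_events))


def reliability_bins(probs, observed, n_bins=20):
    """Map bin index -> (mean forecast probability, observed frequency, count)."""
    members = {}
    for p, y in zip(probs, observed):
        index = min(int(p * n_bins), n_bins - 1)  # p = 1 falls in the last bin
        members.setdefault(index, []).append((p, y))
    return {
        index: (sum(p for p, _ in pairs) / len(pairs),
                sum(y for _, y in pairs) / len(pairs),
                len(pairs))
        for index, pairs in sorted(members.items())
    }


# Hypothetical toy sample.
probs = [0.12, 0.43, 0.37, 0.81]
observed = [0, 0, 1, 1]

bs = brier_score(probs, observed)
bss = brier_skill_score(probs, observed)
area = auc(probs, observed)
bins = reliability_bins(probs, observed)
print(bs, bss, area, bins)
```

As a rough consistency check under the same definition, a climatological forecast with an event frequency of 4.8% has a reference BS of about \(0.048 \times 0.952 \approx 0.046\), so a BS near 0.027 corresponds to a BSS of about 0.42.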

4.4 Results on \({>}\,\mbox{M1}\) Flare Prediction

4.4.1 Prediction of \({>}\,\mbox{M1}\) Flare Events Using Machine Learning

Figure 3 shows the forecast performances of the three tested ML methods, using both binary scores (SSP [left]; ROC [middle]) and probabilistic ones (RD [right]).

i) Regarding the MLPs, we note a wide plateau with a more or less flat profile for HSS and less so for TSS. This occurs because the number of hidden neurons (\(\mbox{size}=26\)) is twice the number of input neurons, causing the MLP to provide probability estimates clustered around 0 and 1. The ROC curve is reasonably good, with maximum \(\mbox{TSS}=0.726\). Moreover, the RD shows a systematic overprediction above a forecast probability of 0.4.

ii) For the SVMs, the SSP plateau noted in case of the MLPs is not present here, with nearly monotonically decreasing values of TSS and HSS appearing. The ROC curve shows a maximum \(\mbox{TSS}=0.629\), while the RD seems slightly better than for MLP, with some underprediction below a forecast probability of 0.4 and generally large uncertainties. When we used the weighted version of the SVM, with a ratio of \(C_{2} / C_{1} = 20\), the ROC curve improved, providing a maximum \(\mbox{TSS}= 0.718\), but the overall forecasting ability as measured by the SSP and RD remained worse than the MLP.

iii) With respect to the RFs, the SSP behavior is such that HSS shows a plateau around its peak value, although smaller than in case of MLPs, while TSS monotonically decreases. This said, we note that the peak HSS and TSS values are higher in this case (e.g. \(\mbox{TSS}=0.780\) and \(\mbox{HSS}=0.587\)). The ROC curve is better than that of MLPs and SVMs, with a maximum \(\mbox{TSS}=0.780\). The RD, finally, appears clearly better than those of MLPs and SVMs, presenting some mild underprediction, mainly within error bars, above a forecast probability of 0.2.

4.4.2 Prediction of \({>}\,\mbox{M1}\) Flare Events Using Statistical Models

Figure 4 shows the forecast performances of the three tested statistical methods for \({>}\,\mbox{M1}\) flare prediction.

Same as Figure 3, but for statistical methods: linear regression (LM; top), probit regression (PR; middle), and logit regression (LG; bottom).

For the LM, the SSP is different between TSS and HSS, with TSS peaking more impulsively and for lower probabilities and then decreasing nearly monotonically. The ROC curve also shows a significant performance with maximum \(\mbox{TSS}=0.744\) that can also be seen in the RD, which shows a very good behavior, although with error bars, for the entire range of forecast probabilities.

The PR shows a slightly improved behavior in comparison with LM for the SSP, the ROC curves, and the RD. The RD seems also more reliable in this case compared to LM, although differences are mostly within the error bars.

We note a similar behavior for the LG as in the LM and especially PR method, and the RD in this case appears as good as the PR RD.

4.4.3 Monte Carlo Simulation for \({>}\,\mbox{M1}\) Flares

In Table 2 we provide the average values of the skill scores ACC, TSS, and HSS for all prediction methods after the 200 replications of the Monte Carlo experiment regarding \({>}\,\mbox{M1}\) flare prediction. Table 2 shows that the maximum \(\mbox{HSS}=0.57\) is obtained with the RF method for a probability threshold of 25%. The corresponding RF score values are \(\mbox{ACC}=0.96\pm0.00\), \(\mbox{TSS}=0.63\pm0.02\), and \(\mbox{HSS}=0.57\pm0.02\). The second-best method in Table 2 for the same probability threshold is MLP, with \(\mbox{ACC}=0.95\pm0.00\), \(\mbox{TSS}=0.56\pm 0.02\), and \(\mbox{HSS}=0.50\pm0.02\). For the threshold where the maximum TSS is observed, we obtain the best results for the RF method and a threshold of 10%, with values \(\mbox{ACC}=0.90\pm0.00\), \(\mbox{TSS}=0.77\pm0.01\), and \(\mbox{HSS}=0.42\pm0.01\). The second-best method may be considered the LM at 10% threshold, with \(\mbox{ACC}=0.88\pm0.00\), \(\mbox{TSS}=0.73\pm0.01\), and \(\mbox{HSS}=0.35\pm0.01\). The difference between RF and LM is statistically significant at the 0.01% level, as shown in Table 4 in row 1. For the range of thresholds 10% to 25%, the RF method yields increasing values of HSS and decreasing values of TSS. For example, an appealing forecasting model could be RF with threshold 15% and metrics \(\mbox{ACC}=0.93\pm0.00\), \(\mbox{TSS}=0.74\pm0.02\), and \(\mbox{HSS}=0.49\pm0.01\) in Table 2, but this would depend on the needs and requirements of a given decision maker.

4.5 Results on \({>}\,\mbox{C1}\) Flare Prediction

4.5.1 Prediction of \({>}\,\mbox{C1}\) Flare Events Using Machine Learning

We continued our computational experiments by training our algorithms and applying them to the prediction of GOES \({>}\,\mbox{C1}\) flares. Figure 5 shows the forecast performances of the three tested ML methods for \({>}\,\mbox{C1}\) flare prediction.

Same as Figure 3, but for \({>}\,\mbox{C1}\) flare prediction.

For the MLP, we note that since the number of hidden nodes selected for the \({>}\,\mbox{C1}\) flares is \(\mbox{size}=4\), plateaus in HSS and TSS are not as prominent as in the case of \({>}\,\mbox{M1}\) flare prediction. The ROC curve seems satisfactory with maximum \(\mbox{TSS}=0.574\), and the RD is quite good, showing no systematic over- or underprediction.

A purely monotonic decrease of TSS can be seen in the SVM, following an instantaneous peak. Some plateau in HSS is also noted, followed by a monotonic decrease. The ROC curve appears less satisfactory than in the case of MLPs with maximum \(\mbox{TSS}=0.566\), and the RD shows some systematic underprediction for most of the forecast probability range.

For the RFs, we note a behavior relatively similar to that of MLPs, although with a slightly more pronounced HSS peak. The ROC curve seems better behaved than in the previous two methods with maximum \(\mbox{TSS}=0.615\), and the RD is arguably the best achieved together with the MLP RD.

4.5.2 Prediction of \({>}\,\mbox{C1}\) Flare Events Using Statistical Models

Figure 6 shows the forecast performances of the three tested statistical methods for \({>}\,\mbox{C1}\) class flare prediction.

Same as Figure 4, but for \({>}\,\mbox{C1}\) flare prediction.

For the LM, we note a decrease in the ACC of the method and some more or less similar behavior of HSS and TSS. The ROC curve seems satisfactory with maximum \(\mbox{TSS}=0.562\), while the RD appears to show a systematic overprediction below a forecast probability of 0.4 and a systematic underprediction above a forecast probability of 0.4 (excluding probabilities > 0.9).

The SSPs of the PR behave similarly to those of the LM, while the ROC curve seems slightly better with maximum \(\mbox{TSS}=0.566\). The RD curve shows some systematic underprediction, although generally within the error bars.

Finally, for the LG, we note a similar behavior in the SSP as in the case of LM and PR, but arguably a better-behaved ROC curve with maximum \(\mbox{TSS}=0.567\). The RD seems to be the best behaved, compared to those of LM and PR.

4.5.3 Monte Carlo Simulation for \({>}\,\mbox{C1}\) Flares

In Table 3 we provide the average values of the skill scores ACC, TSS, and HSS for all prediction methods after the 200 replications of the Monte Carlo experiment regarding \({>}\,\mbox{C1}\) flare prediction. Table 3 shows that the maximum \(\mbox{HSS}=0.60\) is obtained with the RF method for a probability threshold of 40%. The corresponding skill score values are \(\mbox{ACC}=0.85\pm0.00\), \(\mbox{TSS}=0.59\pm0.01\), and \(\mbox{HSS}=0.60\pm0.01\). The second-best method in Table 3 for the same probability threshold is the LG method, with \(\mbox{ACC}=0.83\pm 0.00\), \(\mbox{TSS}=0.54\pm0.01\), and \(\mbox{HSS}=0.56\pm0.01\). Considering again the probability threshold where the maximum TSS is observed, we obtain the optimal results for the RF method and a threshold of 30%, with values \(\mbox{ACC}=0.82\pm0.00\), \(\mbox{TSS}=0.61\pm0.01\), and \(\mathrm{HSS}=0.57\pm0.01\). The second-best method may be considered the MLP (or the LG in a tie) at a 30% threshold with \(\mbox{ACC}=0.81\pm0.00\), \(\mbox{TSS}=0.57\pm0.01\), and \(\mbox{HSS}=0.53\pm0.01\). For a range of probability thresholds (30% – 40%), the RF method yields increasing values of HSS and decreasing values of TSS. As a result, it is again not clear what the best value of the threshold probability is if we choose to optimize TSS and HSS simultaneously. For example, an appealing RF forecasting model uses a threshold of 35%, with skill scores \(\mbox{ACC}=0.84\pm0.00\), \(\mbox{TSS}=0.60\pm0.01\) and \(\mbox{HSS}=0.59\pm0.01\) in Table 3. These results are generally above those reported for \({>}\,\mbox{C1}\) class flare predictability, namely TSS \(\in[0.50, 0.55]\) and HSS \(\in[0.40, 0.45]\) (Al-Ghraibah, Boucheron, and McAteer, 2015; Boucheron, Al-Ghraibah, and McAteer, 2015). In brief, our data samples, both training and testing, are comprehensive and generally unbiased.

4.6 Assessment of Prediction Methods and Predictor Strength

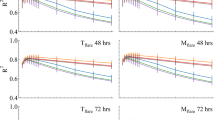

Following the presentation of results in Tables 2 and 3, we can see that both for \({>}\,\mbox{M1}\) and \({>}\,\mbox{C1}\) flare prediction, RF delivers the best skill score metrics for a wide range of probability thresholds. The second-best method is MLP, together with LG. In this setting, we performed some additional evaluation that confirms these results.

We present analytical results in Appendix A for the predictor strength. It seems that \(\mathrm{log}{{R}}\) and \(\mathrm{WL}_{\mathrm{SG}}\) rank in first place for both \({>}\,\mbox{C1}\) and \({>}\,\mbox{M1}\) flare prediction, closely followed by the Ising energy and the TLMPIL.

In order to investigate the robustness of our results, we present additional results in Appendix C, where we make predictions once a day (at 00:00 UT). The mean evolution (over 200 Monte Carlo iterations) of ACC, TSS, and HSS with respect to the probability threshold is presented. Likewise, the BS, AUC, and BSS are presented. The main finding is that issuing forecasts once a day keeps similar average skill scores as are achieved for issuing forecasts eight times a day, but the associated uncertainties (e.g. standard deviations) are higher in the case of daily predictions.

A final word on the comparison of ML algorithms versus conventional statistics models for this specific dataset and positive/negative class definitions is provided in Appendix D. There, we have included an auxiliary meta-analysis of the results in Tables 2 and 3 in order to clearly show whether the ML category of prediction algorithms performs better than the conventional statistics models in the \({>}\,\mbox{M1}\) and \({>}\,\mbox{C1}\) flare prediction cases. A multicriteria analysis using the weighted-sum (WS) method (Greco, Figueira, and Ehrgott, 2016) seems appropriate in order to aggregate the performance metrics ACC, TSS, and HSS of all classifiers as a function of the probability threshold (e.g. using equal weights for the aggregation). In this way, a composite index (CI) as a measure of overall utility is computed for every algorithm and probability threshold combination. There exist \(21 \times 6 = 126\) such alternatives when we use a 5% probability threshold grid, such as the grid in Tables 2 and 3. The ranking, in non-increasing order, of the CI reveals the overall merit of every probabilistic classifier and also allows us to draw conclusions for groups of classifiers, such as the group of ML methods (comprising RF, SVM, and MLP) and the group of conventional statistics methods (comprising LM, PR, and LG). Appendix D presents this multicriteria WS analysis, revealing that overall, in \({>}\,\mbox{C1}\) flare prediction ML outperforms conventional statistics methods by 71% versus 29% in the composition of the top \(100(1/6)\approx16.7\%\) of methods (top 21 methods out of a total of 126). Likewise, in the \({>}\,\mbox{M1}\) flare prediction case, ML outperforms conventional statistics methods by 62% versus 38% in the composition of the top \(100(1/6)\approx16.7\%\) of methods. This shows that \({>}\,\mbox{C1}\) flare prediction is more advantageous for ML versus statistical methods, in comparison to the \({>}\,\mbox{M1}\) flare case. This is due to the low performance of the SVM in \({>}\,\mbox{M1}\) flare prediction, which in turn is due to the way in which we have implemented, for simplicity, the SVM for a highly unbalanced sample in \({>}\,\mbox{M1}\) flare prediction (see Footnote 3), using a single \(C\) constant and not two different \(C_{1}, C_{2}\) constants during the SVM training with Equation 7.
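The weighted-sum aggregation described above can be sketched in Python as follows. The sketch uses equal weights and only the five \({>}\,\mbox{M1}\) alternatives whose mean scores are quoted in Section 4.4.3, not the full set of 126 alternatives from Appendix D. Whether the metrics are rescaled before aggregation is not stated here, so the unscaled sum below is an assumption, as are the names `composite_index` and `ML_METHODS`.

```python
def composite_index(acc, tss, hss, weights=(1 / 3, 1 / 3, 1 / 3)):
    """Weighted sum of the three skill scores (equal weights by default)."""
    w_acc, w_tss, w_hss = weights
    return w_acc * acc + w_tss * tss + w_hss * hss


# (method, threshold in %) -> mean (ACC, TSS, HSS) quoted in Section 4.4.3.
alternatives = {
    ("RF", 10): (0.90, 0.77, 0.42),
    ("RF", 15): (0.93, 0.74, 0.49),
    ("RF", 25): (0.96, 0.63, 0.57),
    ("MLP", 25): (0.95, 0.56, 0.50),
    ("LM", 10): (0.88, 0.73, 0.35),
}
ML_METHODS = {"RF", "SVM", "MLP"}

# Rank alternatives by composite index, best first.
ranking = sorted(alternatives,
                 key=lambda key: composite_index(*alternatives[key]),
                 reverse=True)

# Share of ML methods among the top-ranked alternatives (top 3 of 5 here).
top = ranking[:3]
ml_share = sum(method in ML_METHODS for method, _ in top) / len(top)

# With the full 5% grid there are 21 thresholds x 6 methods, and the top sixth is 21.
n_alternatives = 21 * 6
top_k = n_alternatives // 6

for key in ranking:
    print(key, round(composite_index(*alternatives[key]), 3))
print("ML share in top 3:", ml_share, "| full analysis:", top_k, "of", n_alternatives)
```

Applied to all 126 alternatives, the same ranking and share computation would yield percentages of the kind reported above.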

In auxiliary runs (available upon request), we also noted that when the sample size was very low, using ML algorithms posed no advantage over conventional statistics models. In order to have proper training, the ML algorithms need \(N > 2{,}000\) for \(K=13\), especially for the \({>}\,\mbox{M1}\) flare prediction.

4.7 Statistical Tests for Random Forest Versus MLP and Calculation of AUC and Brier Skill Scores

In Section 4.7.1 we present results of a \(t\)-test between the two best-performing methods according to maximizer thresholds for either TSS or HSS for \({>}\,\mbox{M1}\) class and \({>}\,\mbox{C1}\) class flare cases. Section 4.7.2 presents additional calculations reporting on BS, BSS, and AUC, which we used to assess the classification in the prediction.

4.7.1 Unpaired t-Tests to Compare Two Means for TSS and HSS of Random Forest versus MLP

A \(t\)-test compares the means of two groups. Here, we used the \(t\)-test to compare the mean TSS (HSS, respectively) of the RF method versus those of the MLP method (or in general the second-best performing method). Means were considered with respect to the Monte Carlo simulations performed on the 200 replications of the previous section. The TSS (HSS, respectively) values considered are those for specific probability thresholds maximizing either TSS or HSS. Table 4 presents the \(t\)-test results regarding the best and the second-best methods with respect to either TSS or HSS for these specific probability thresholds.

We find that RF is always (i.e. in 8 out of 8 cases) statistically better than the second-best method (which is the MLP in 4 out of 8 cases), with respect to both TSS and HSS.
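The size of such a test statistic can be sketched in Python from summary values alone. The example uses the mean TSS of RF and LM at the 10% threshold quoted in Section 4.4.3 and makes two assumptions that the text does not confirm: the quoted \(\pm\) values are standard deviations over the 200 replications, and the unpaired test is Welch's unequal-variance variant. The function name `welch_t` is hypothetical.

```python
import math


def welch_t(mean_a, sd_a, n_a, mean_b, sd_b, n_b):
    """Return Welch's t statistic and its approximate degrees of freedom."""
    var_a = sd_a ** 2 / n_a  # squared standard error of mean A
    var_b = sd_b ** 2 / n_b  # squared standard error of mean B
    t = (mean_a - mean_b) / math.sqrt(var_a + var_b)
    dof = (var_a + var_b) ** 2 / (
        var_a ** 2 / (n_a - 1) + var_b ** 2 / (n_b - 1)
    )
    return t, dof


# RF versus LM, mean TSS at the 10% threshold (Section 4.4.3), 200 replications each.
t_stat, dof = welch_t(0.77, 0.01, 200, 0.73, 0.01, 200)
print(t_stat, dof)
```

Under these assumptions the statistic is far larger than any conventional critical value, which is in line with the very small significance level reported for this pair of methods.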

4.7.2 Calculation of AUC and Brier Skill Scores

Tables 5 and 6 present the calculated mean values of BS, AUC, and BSS for the \({>}\,\mbox{M1}\) and \({>}\,\mbox{C1}\) flare prediction cases, respectively.

For the \({>}\,\mbox{M1}\) flare case (Table 5), results show that on average, the best BS and BSS results are achieved with the RF method (\(\mbox{BS} = 0.0266\); \(\mbox{BSS}=0.4163\)). The best AUC results are achieved with the RF method (\(\mbox{AUC} = 0.9556\)), but also for the PR (\(\mbox{AUC}=0.9392\)) and LG (\(\mbox{AUC}=0.9391\)) methods.

For the \({>}\,\mbox{C1}\) flare case (Table 6), results show that on average, the best BS and BSS results are achieved with the RF method (\(\mbox{BS}=0.1074\); \(\mbox{BSS}=0.4426\)). The best AUC results are also achieved with the RF method (\(\mbox{AUC}=0.8927\)), with other methods (except SVM) following closely. The SVM probably needs better fine-tuning, given its sensitivity to \(\gamma\) and cost (see Section 4.2).

4.8 Related Published Work and Comparison to Our Results

Ahmed et al. (2013) presented prediction results for \({>}\,\mbox{C1}\) class flares using cross-validation with 60% training and 40% testing subsets, with ten iterations in operational and segmented mode. Since our analysis focuses on the operational mode, the gold standard for near-real-time operational systems such as FLARECAST, we present here their results in the operational mode for the period April 1996 – December 2010: \(\mathrm{POD}=0.455\) and \(\mathrm{POFD}=0.010\), thus \(\mathrm{TSS}=\mathrm{POD}-\mathrm{POFD}=0.445\), and \(\mathrm{HSS}=0.539\). These results for flares \({>}\,\mbox{C1}\) were obtained using a variant of a neural network and a probability threshold of 50%.

Li et al. (2008) presented results using an SVM coupled with k-nearest neighbor (KNN) for flare prediction \({>}\,\mbox{M1}\) in a way that, unfortunately, cannot be used to recover TSS and HSS values. Instead, they reported Equal = TN + TP, High = FP, Low = FN. The accuracy achieved is only \(\mbox{ACC} = 57.02\%\) for SVM and \(\mbox{ACC}=63.91\%\) for SVM-KNN for the testing year 2002.

Song et al. (2009) presented results using an ordinal logistic regression model classifying the C-, M-, and X-class flares with response values 1, 2, and 3, respectively. The B-class flare (or no flares) category received class 0 (baseline). Their sample contained 34 X-class flares, 68 M-class flares, 65 C-class flares, and 63 B-class or no-flare cases. A clear drawback of this sample is that it was not drawn using a random number generator but seems to have been hand-picked with the aim of studying the considered 230 events during the period 1998 – 2005. As a result, the sample is biased in that the occurrence rates of the various flare classes are not representative of an actual solar cycle. Perhaps not surprisingly, these authors presented high TSS and HSS values that, given the sample, should be treated with caution. From the results of Model 4 in that study (i.e. Table 8 of Song et al., 2009), we are able to infer that for C-class flares, Song et al. computed values \(\mbox{TSS}=0.65\) and \(\mbox{HSS}=0.623\) (C1 – C9 flares). Moreover, our impression is that these numbers were obtained in-sample for the dataset with 230 events in Song et al. (2009).

Yu et al. (2009) used a sliding window approach to account for the evolution of three magnetic flare predictors with importance index above 10 (for the definition of the flare importance index, see Yu et al., 2009). The time period was 1996 to 2004, with a cadence of 96 minutes. The authors used the C4.5 decision tree algorithm and the learning vector quantization (LVQ) neural network, both implemented in WEKA (Witten et al., 2016; Hall et al., 2009). The authors used a 10-fold cross-validation approach with 90% training and 10% testing sets from the original sample. The sliding window size was 45 observations. Their results showed that the sliding window versions of the C4.5 and LVQ neural network algorithms improved the results obtained with the same algorithms for a sliding window size equal to 0. Since the authors presented only the TP rate and the TN rate results, we are not able to recover their HSS value. Their recovered TSS is \(\mbox{TSS}=0.651\) for the C4.5 algorithm with a sliding window of 45 observations and \(\mbox{TSS}=0.667\) for the LVQ, also with a sliding window of 45 observations.

Yuan et al. (2010) used the same dataset as in Song et al. (2009) and proposed a cascading approach, using first an ordinal logistic regression model to produce probabilities for GOES flare classes B, C, M, and X (associated with response levels 0, 1, 2, and 3, respectively), and second, feeding the probability values to an SVM in order to obtain the final class membership. Their results, according to Yuan et al. (2010), improved the prediction especially for X-class flares (response \(\mbox{level} = 3\) in the ordinal logistic regression), but were still not exceptionally high. For example, for \(\mbox{level} = 1\), therefore for C-class flares, we were able to recover the following TSS values for the used methods: logistic regression: \(\mbox{TSS}=0.22\), SVM: \(\mbox{TSS}=0.08\), logistic regression + SVM: \(\mbox{TSS}=0.09\). These rather modest results, as can be seen from the contingency tables presented in Yuan et al. (2010), may be due to the selection of a probability threshold value at 50% for levels 0, 1, and 2 in the ordinal logistic regression model and at 25% for the level 3 (X-class) flares in the same model. Choosing a threshold equal to 50% maximizes ACC but not TSS/HSS, as can be seen both here and in Bloomfield et al. (2012).

Colak and Qahwaji (2009) developed an online solar flare forecasting system called ASAP. Their prediction algorithm is a combination of two neural networks with the sum-of-squared error (SSE) objective function, where the first neural network predicts whether a flare of all types (C, M, or X) will occur, and if the prediction is yes, the second neural network predicts whether a C-, M-, or X-class flare will occur. The ASAP system was developed in C++ and has been validated with data from 1999 to 2002 (around the peak of Solar Cycle 23). The predictors were the sunspot area and characteristics from the McIntosh classification of sunspots (Zpc scheme). They obtained \(\mbox{HSS}=49.3\%\) (C-class flares) and \(\mbox{HSS}=47\%\) (M-class flares) for a forecast window of 24 h.

Wang et al. (2008) developed an MLP neural network using three input variables for the prediction of solar flares of class \({>}\,\mbox{M1}\). The predictors were the maximum horizontal gradient \(|{\mathrm{grad}}_{\mathrm{h}}(B_{z})|\), the length \(L\) of the neutral line, and the number of singular points \(\eta\). A limitation of the study is that only flaring active regions (at GOES C1 and above) were sampled and considered. The forecast window was 48 h. The authors presented prediction results for the period 1996 – 2002 (training set: April 1996 to December 2001, testing set: January 2002 to December 2002). The results were presented as plots of the X-ray flux associated with the predicted/observed flares for the test year 2002, therefore a comparison with the authors’ skill scores is not possible. This work reported \(\mbox{ACC}=69\%\) for the test year.

Bobra and Couvidat (2015) applied an SVM to a sample of 5,000 non-flaring and 303 flaring (at the GOES \({>}\,\mbox{M1}\) level) AR. Those 5,303 AR, with \(N=5{,}000\) negative examples and \(P=303\) positive examples (ratio \(N/P=16.5\)), were sampled from the \({\approx\,}1.5\) million patches of the SHARP product (Bobra et al., 2014) between 2010 and 2014. The authors selected 285 M-class flares and 18 X-class flares observed between 2010 May and 2014 May. By comparison, our study relies on a representative sample of flaring/non-flaring AR in the period 2012 – 2016 and for flares \({>}\,\mbox{M1}\), with a ratio \(N/P=19.9\) (\(P=1{,}108\) and \(N=22{,}026\)). By inspecting Table 3 of Bobra and Couvidat (2015), we see that the authors report the results as \(\mbox{ACC}=0.924\pm0.007\), \(\mbox{TSS}=0.761\pm0.039\), and \(\mathrm{HSS}_{2}=0.517\pm0.035\), while our results are \(\mbox{ACC}=0.93\pm0.00\), \(\mbox{TSS}=0.74\pm0.02\), and \(\mbox{HSS}=0.49\pm0.01\) (their definition of \(\mathrm{HSS_{2}}\) is the same as the HSS definition in Section 4.3). Thus, our results with random forests are competitive with those of Bobra and Couvidat (2015). We note that we used a \(50/50\) rule for splitting training/testing sets, while Bobra and Couvidat (2015) used a \(70/30\) rule. Finally, we did not fine-tune the parameters of the random forest, while Bobra and Couvidat (2015) carefully tuned the \(C\), \(\gamma\) and \(C_{1} / C_{2}\) of their Equations 2, 5, and 6, respectively. Regardless, Bobra and Couvidat (2015) still represent the state of the art in solar flare forecasting so far.

Boucheron, Al-Ghraibah, and McAteer (2015) applied support vector regression (SVR) to 38 predictors characterizing the magnetic field of solar AR in order to predict i) the flare size and ii) the time-to-flare. The forecast window they used varied between 2 and 24 hours with a step of 2 hours (12 cases of forecast windows). By using the size regression with appropriate thresholds (different from the usual probability thresholds, for example, in Bloomfield et al., 2012), the authors achieved prediction results for \({>}\,\mbox{C1}\) flares with \(\mbox{TSS}=0.55\) and \(\mbox{HSS}=0.46\), while reporting that using the same data, Al-Ghraibah, Boucheron, and McAteer (2015) achieved \(\mathrm{TSS} \approx 0.50\) and \(\mathrm{HSS} \approx0.40\), respectively, for the prediction of \({>}\,\mbox{C1}\) class flares.

Al-Ghraibah, Boucheron, and McAteer (2015) applied relevance vector machines (RVM), a technique that is a generalization of SVM, to a set of 38 magnetic properties characterizing 2,124 AR in a total of 122,060 images across different time points for all AR. They predicted \({>}\,\mbox{C1}\) flares using either the full set of properties or suitable subsets thereof. The magnetic properties are of three types: i) snapshots in space and time, ii) evolution in time, and iii) structures of multiple size scales. Al-Ghraibah, Boucheron, and McAteer (2015) reported results (e.g. see their Table 5 and Figure 6) of about \(\mathrm{TSS} \approx0.51\) and \(\mathrm{HSS} \approx0.39\), which is a baseline result for the literature when no temporal information is included in the predictor set (i.e. static images are used).

5 Conclusions

We presented a new approach for the efficient prediction of \({>}\,\mbox{M1}\) and \({>}\,\mbox{C1}\) solar flares: classic and modern machine-learning (ML) methods, such as multi-layer perceptrons (MLP), support vector machines (SVM), and random forests (RF) were used in order to build the prediction models. The predictor variables were based on the SDO/HMI SHARP data product, available since 2012.

The sample was representative of the solar activity during a five-year period of Solar Cycle 24 (2012 – 2016), with all calendar days within this period included in the sample. The cadence of properties, or predictors, within the chosen days was 3 hours.

We showed that the RF method could be our prediction method of choice, both for the prediction of \({>}\,\mbox{M1}\) flares (with a relative frequency of 4.8%, or 1,108 events) and for the prediction of \({>}\,\mbox{C1}\) flares (with a relative frequency of 26.1%, or 6,029 events). In terms of categorical skill scores, a probability threshold of 15% for \({>}\,\mbox{M1}\) flares gives rise to mean (after 200 replications) RF skill scores on the order \(\mbox{TSS}=0.74 \pm0.02\) and \(\mbox{HSS}=0.49 \pm0.01\), while a probability threshold of 35% for \({>}\,\mbox{C1}\) flares gives rise to mean \(\mbox{TSS}=0.60 \pm0.01\) and \(\mbox{HSS}=0.59 \pm0.01\). The respective accuracy values are \(\mbox{ACC}=0.93\) and \(\mbox{ACC}=0.84\). In terms of probabilistic skill scores, the ranking of the ML techniques with respect to their BSS against climatology is RF (0.42), MLP (0.29), and SVM (0.28) for \({>}\,\mbox{M1}\) flares and RF (0.44), MLP (0.39), and SVM (0.36) for \({>}\,\mbox{C1}\) flares.
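To make the two kinds of scores concrete, the following Python sketch converts probabilistic forecasts into yes/no forecasts at a chosen probability threshold (such as the 15% and 35% quoted above) and evaluates ACC, TSS, and HSS, together with the Brier skill score (BSS) against climatology. The formulas are the standard ones and are assumed to match Section 4.3. The function names, the use of `>=` at the threshold, the use of the evaluated sample's own event rate as the climatological forecast, and the toy data are all assumptions made for illustration.

```python
import numpy as np


def categorical_scores(y_true, prob, threshold):
    """ACC, TSS and HSS after thresholding forecast probabilities."""
    y_true = np.asarray(y_true, dtype=bool)
    # Assumption: a probability equal to the threshold counts as "flare"
    y_pred = np.asarray(prob, dtype=float) >= threshold
    tp = np.sum(y_pred & y_true)
    fn = np.sum(~y_pred & y_true)
    fp = np.sum(y_pred & ~y_true)
    tn = np.sum(~y_pred & ~y_true)
    acc = (tp + tn) / y_true.size
    tss = tp / (tp + fn) - fp / (fp + tn)
    hss = 2.0 * (tp * tn - fn * fp) / (
        (tp + fn) * (fn + tn) + (tp + fp) * (fp + tn)
    )
    return {"ACC": float(acc), "TSS": float(tss), "HSS": float(hss)}


def brier_skill_score(y_true, prob):
    """BSS relative to a constant forecast equal to the sample event rate."""
    y = np.asarray(y_true, dtype=float)
    p = np.asarray(prob, dtype=float)
    brier = np.mean((p - y) ** 2)
    # Assumption: climatology is the event rate of the evaluated sample
    brier_clim = np.mean((y.mean() - y) ** 2)
    return float(1.0 - brier / brier_clim)


# Hypothetical toy forecasts, not data from this study
y_toy = np.array([1, 1, 0, 0, 0])
p_toy = np.array([0.9, 0.1, 0.2, 0.6, 0.05])

scores = categorical_scores(y_toy, p_toy, threshold=0.15)
bss = brier_skill_score(y_toy, p_toy)
print(scores, bss)
```

The categorical scores depend on the chosen threshold, whereas the BSS is computed directly from the probabilities and needs no threshold; a BSS of 1 corresponds to a perfect forecast and a BSS of 0 to no improvement over climatology.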

We further note that for \({>}\,\mbox{M1}\) flare prediction, SVM and MLP need additional tuning of their hyperparameters (Section 4.2) in order to produce results comparable with those of RF. Moreover, several statistical methods (linear regression, probit, and logit) produced acceptable forecast results when compared with the ML methods. By increasing the number of hidden nodes, the MLP networks provide flatter skill score profiles (i.e. ACC, TSS, and HSS as a function of the threshold probability), but the peak values of the corresponding curves are lower than those achieved by MLP networks with fewer hidden nodes. Regarding the \({>}\,\mbox{C1}\) flares, all forecast methods work acceptably, although the best method is, again, RF. A Monte Carlo experiment showed that results are robust with respect to different realizations of the training/testing pair, with different random seeds. Monte Carlo modeling also decreases the amplitudes of the applicable standard deviations of the skill scores. Typically, standard deviations are larger for the \({>}\,\mbox{M1}\) flare case than for that of \({>}\,\mbox{C1}\) flares. This is attributed to the different occurrence frequency of flares in the two cases.
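A Monte Carlo experiment of this kind can be sketched as follows: the sample is split repeatedly into training and testing halves with different random seeds, a random forest is trained on each training half, and the mean and standard deviation of the resulting skill score are reported. This is a minimal illustration, not the code used in the study. It uses scikit-learn rather than the R `randomForest` package, synthetic data from `make_classification` in place of the SHARP-based predictors, a stratified split (an assumption), and only 10 replications with 50 trees to keep it fast, instead of the 200 replications quoted above.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import train_test_split


def tss_at_threshold(y_true, prob, threshold):
    """True skill statistic after thresholding forecast probabilities."""
    y_true = np.asarray(y_true, dtype=bool)
    y_pred = np.asarray(prob) >= threshold
    pod = np.sum(y_pred & y_true) / np.sum(y_true)      # hit rate
    pofd = np.sum(y_pred & ~y_true) / np.sum(~y_true)   # false-alarm rate
    return float(pod - pofd)


def monte_carlo_tss(X, y, n_reps=10, threshold=0.15):
    """TSS over repeated 50/50 train/test splits with different seeds."""
    values = []
    for seed in range(n_reps):
        # Assumption: splits are stratified so both halves contain positives
        X_tr, X_te, y_tr, y_te = train_test_split(
            X, y, test_size=0.5, stratify=y, random_state=seed
        )
        model = RandomForestClassifier(n_estimators=50, random_state=seed)
        model.fit(X_tr, y_tr)
        prob = model.predict_proba(X_te)[:, 1]  # probability of class 1
        values.append(tss_at_threshold(y_te, prob, threshold))
    return np.array(values)


# Synthetic, imbalanced stand-in data with 13 predictors (hypothetical)
X, y = make_classification(
    n_samples=2000, n_features=13, n_informative=6,
    weights=[0.95, 0.05], random_state=0,
)
tss_values = monte_carlo_tss(X, y)
print(f"TSS = {tss_values.mean():.2f} ({tss_values.std(ddof=1):.2f})")
```

The printed pair follows the mean (standard deviation) notation used in the Abstract. With fewer positive examples per split, as in the \({>}\,\mbox{M1}\) case, the hit rate is estimated from fewer events and the spread across replications tends to be larger.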

The RF is a relatively new approach to solar flare prediction. Nonetheless, it may be preferable over other widely used ML algorithms, at least for the datasets exploited so far, giving competitive results without much tuning of the RF hyperparameters. This generates hope for future meaningful developments in the formidable solar flare prediction problem, at the same time aligning with excellent performance for RF reported in several classification benchmarks (Fernández-Delgado et al., 2014). That said, it appears that even with the application of RF, solar flare prediction in the foreseeable future will likely continue to be probabilistic (i.e. 0.0 – 1.0, continuous), rather than binary (i.e. 0 or 1).

In terms of predictor importance, Schrijver’s \(R\) is found to be among the most statistically significant predictors, together with \(\mathrm{WL}_{\mathrm{SG}}\). The Ising energy and the TLMPIL are also important, ranking slightly below the previous two predictors. This follows from the importance calculations according to the Fisher score and RF importance for the \({>}\,\mbox{C1}\) and \({>}\,\mbox{M1}\) flare cases in Appendix A. This result is also in line with the common knowledge that flares occur mostly when strong and highly sheared MPILs are formed. Other MPIL-highlighting predictors, such as the effective connected magnetic field strength, \(B_{\mathrm{eff}}\) (Georgoulis and Rust, 2007), remain to be tested, in conjunction with \(R\) and \(\mathrm{WL}_{\mathrm{SG}}\), as their cadence was lower than 3 h at the time this study was performed.

An interesting finding for the RF technique (Appendix B) is obtained by the predictors’ ranking information according to their importance, as measured by the Fisher score. Namely, when we create prediction models with a varying number of the most important predictors included, the RF prediction performance (in terms of TSS and HSS) continues to improve monotonically with the number of included parameters. Conversely, the MLP and SVM algorithms achieve only slight improvements in prediction results (again in terms of TSS and HSS) by adding more than, say, the six most important predictors. This interesting finding may further improve forecasting when more viable predictors become available.
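The ranking-and-subset procedure described here can be sketched in Python as follows. The score is written in the F-score form of Chen and Lin (2006), which is assumed to correspond to the Fisher score of Appendix A; the function names and the synthetic data are hypothetical. Each nested subset would then be passed to the prediction method under study (RF, MLP, or SVM) and evaluated in terms of TSS and HSS.

```python
import numpy as np


def fisher_scores(X, y):
    """Per-predictor F-score: between-class separation over within-class spread."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y).astype(bool)
    pos, neg = X[y], X[~y]
    mean_all = X.mean(axis=0)
    between = (pos.mean(axis=0) - mean_all) ** 2 + (neg.mean(axis=0) - mean_all) ** 2
    within = pos.var(axis=0, ddof=1) + neg.var(axis=0, ddof=1)
    return between / within


def nested_subsets(scores):
    """Column indices of the top-1, top-2, ... predictors, best first."""
    order = np.argsort(scores)[::-1]
    return [order[:k] for k in range(1, len(order) + 1)]


# Synthetic example: predictor 0 separates the classes strongly,
# predictor 1 weakly, predictors 2 and 3 not at all (hypothetical data)
rng = np.random.default_rng(1)
n = 2000
y = rng.random(n) < 0.3
X = rng.normal(size=(n, 4))
X[:, 0] += 2.0 * y
X[:, 1] += 0.5 * y

scores = fisher_scores(X, y)
subsets = nested_subsets(scores)
for cols in subsets:
    print(len(cols), "predictors:", cols.tolist())
```

Because the score is computed one predictor at a time, it ignores correlations between predictors; this is one possible reason why adding lower-ranked predictors can still help a method such as RF.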

For future FLARECAST-supported research, we plan to enlarge our analysis sample by shortening the property cadence from 3 h to 1 h or even less (the limit is the inherent cadence of SDO/HMI SHARP data, namely 12 min). Another direction of future research is to investigate the robustness of our results for samples created with a coarser cadence of 12 h (24 h) coupled with a forecast window of 12 h (24 h). Furthermore, we plan to exploit the substantial time-series aspect of our data using recurrent neural networks, possibly trained with evolutionary algorithms. The present work, along with a series of similar concluded or still ongoing studies, is considered for possible integration in the final FLARECAST online system and forecasting tool, to be deployed by early 2018.

Notes

Liu et al. (2017) also used the random forest algorithm for solar flare prediction based on SDO/HMI data. Nevertheless, the specific details in that paper regarding the sampling strategy and the feature extraction are very different from our choices. For example, Liu et al. (2017) only considered flaring ARs (at the level \({>}\,\mbox{B1}\) class), and the sample size was \(N=845\), while we consider both flaring and non-flaring ARs with \(N=23{,}134\).

This is because we considered only the \(B_{r}\) version for predictor \(\mathrm{WL}_{\mathrm{{SG}}}\).

References

Ahmed, O., Qahwaji, R., Colak, T., Dudok De Wit, T., Ipson, S.: 2010, A new technique for the calculation and 3D visualisation of magnetic complexities on solar satellite images. Vis. Comput. 26, 385.

Ahmed, O.W., Qahwaji, R., Colak, T., Higgins, P.A., Gallagher, P.T., Bloomfield, D.S.: 2013, Solar flare prediction using advanced feature extraction, machine learning, and feature selection. Solar Phys. 283(1), 157.

Al-Ghraibah, A., Boucheron, L.E., McAteer, R.T.J.: 2015, An automated classification approach to ranking photospheric proxies of magnetic energy build-up. Astron. Astrophys. 579, A64.

Alissandrakis, C.E.: 1981, On the computation of constant alpha force-free magnetic field. Astron. Astrophys. 100, 197.

Barnes, G., Longcope, D.W., Leka, K.D.: 2005, Implementing a magnetic charge topology model for solar active regions. Astrophys. J. 629, 561.

Barnes, G., Schanche, N., Leka, K., Aggarwal, A., Reeves, K.: 2016, A comparison of classifiers for solar energetic events. Proc. Int. Astron. Union 12(S325), 201.

Bloomfield, D.S., Higgins, P.A., McAteer, R.T.J., Gallagher, P.T.: 2012, Toward reliable benchmarking of solar flare forecasting methods. Astrophys. J. Lett. 747(2).

Bobra, M.G., Couvidat, S.: 2015, Solar flare prediction using SDO/HMI vector magnetic field data with a machine-learning algorithm. Astrophys. J. 798(2), 135.

Bobra, M.G., Sun, X., Hoeksema, J.T., Turmon, M., Liu, Y., Hayashi, K., Barnes, G., Leka, K.D.: 2014, The Helioseismic and Magnetic Imager (HMI) vector magnetic field pipeline: SHARPs – Space-weather HMI active region patches. Solar Phys. 289(9), 3549.

Boucheron, L.E., Al-Ghraibah, A., McAteer, R.T.J.: 2015, Prediction of solar flare size and time-to-flare using support vector machine regression. Astrophys. J. 812(1), 51.

Breiman, L.: 2001, Random forests. Mach. Learn. 45(1), 5.

Breiman, L., Friedman, J., Stone, C.J., Olshen, R.A.: 1984, Classification and Regression Trees.

Brier, G.W.: 1950, Verification of forecasts expressed in terms of probability. Mon. Weather Rev. 78, 1.

Chang, Y.-W., Lin, C.-J.: 2008, Feature ranking using linear SVM. In: Guyon, I., Aliferis, C., Cooper, G., Elisseeff, A., Pellet, J.-P., Spirtes, P., Statnikov, A. (eds.) Proceedings of the Workshop on the Causation and Prediction Challenge at WCCI 2008, Proceedings of Machine Learning Research 3, 53.

Chang, C.-C., Lin, C.-J.: 2011, LIBSVM: A library for support vector machines. ACM Trans. Intell. Syst. Technol. 2, 27:1. Software available at http://www.csie.ntu.edu.tw/~cjlin/libsvm .

Chen, Y.-W., Lin, C.-J.: 2006, Combining SVMs with various feature selection strategies. In: Feature Extraction, Springer, Berlin, 315.

Colak, T., Qahwaji, R.: 2009, Automated solar activity prediction: A hybrid computer platform using machine learning and solar imaging for automated prediction of solar flares. Space Weather 7(6), S06001.

de Souza, R.S., Cameron, E., Killedar, M., Hilbe, J., Vilalta, R., Maio, U., Biffi, V., Ciardi, B., Riggs, J.D.: 2015, The overlooked potential of generalized linear models in astronomy, I: binomial regression. Astron. Comput. 12, 21.

Falconer, D.A., Moore, R.L., Gary, G.A.: 2008, Magnetogram measures of total nonpotentiality for prediction of solar coronal mass ejections from active regions of any degree of magnetic complexity. Astrophys. J. 689, 1433.

Falconer, D., Barghouty, A.F., Khazanov, I., Moore, R.: 2011, A tool for empirical forecasting of major flares, coronal mass ejections, and solar particle events from a proxy of active-region free magnetic energy. Space Weather 9(4), S04003.

Falconer, D.A., Moore, R.L., Barghouty, A.F., Khazanov, I.: 2012, Prior flaring as a complement to free magnetic energy for forecasting solar eruptions. Astrophys. J. 757, 32.

Falconer, D.A., Moore, R.L., Barghouty, A.F., Khazanov, I.: 2014, MAG4 versus alternative techniques for forecasting active region flare productivity. Space Weather 12(5), 306.

Fang, F., Manchester, W. IV, Abbett, W.P., van der Holst, B.: 2012, Buildup of magnetic shear and free energy during flux emergence and cancellation. Astrophys. J. 754, 15.

Fernández-Delgado, M., Cernadas, E., Barro, S., Amorim, D.: 2014, Do we need hundreds of classifiers to solve real world classification problems? J. Mach. Learn. Res. 15, 3133.

Fletcher, L., Dennis, B.R., Hudson, H.S., Krucker, S., Phillips, K., Veronig, A., Battaglia, M., Bone, L., Caspi, A., Chen, Q., Gallagher, P., Grigis, P.T., Ji, H., Liu, W., Milligan, R.O., Temmer, M.: 2011, An observational overview of solar flares. Space Sci. Rev. 159, 19.

Georgoulis, M.K., Rust, D.M.: 2007, Quantitative forecasting of major solar flares. Astrophys. J. Lett. 661, L109.

Granett, B.R.: 2017, Probing the sparse tails of redshift distributions with Voronoi tessellations. Astron. Comput. 18, 18.

Greco, S., Figueira, J., Ehrgott, M.: 2016, Multiple Criteria Decision Analysis, 2nd edn.

Greene, W.H.: 2002, Econometric Analysis, 5th edn.

Guerra, J.A., Pulkkinen, A., Uritsky, V.M., Yashiro, S.: 2015, Spatio-temporal scaling of turbulent photospheric line-of-sight magnetic field in active region NOAA 11158. Solar Phys. 290, 335.

Hall, M., Frank, E., Holmes, G., Pfahringer, B., Reutemann, P., Witten, I.H.: 2009, The WEKA data mining software: An update. ACM SIGKDD Explor. Newsl. 11(1), 10.

Hanssen, A., Kuipers, W.: 1965, On the Relationship Between the Frequency of Rain and Various Meteorological Parameters: (with Reference to the Problem of Objective Forecasting).

Hastie, T., Tibshirani, R., Friedman, J.: 2009, The Elements of Statistical Learning: Data Mining, Inference and Prediction, 2nd edn.

Heidke, P.: 1926, Berechnung des erfolges und der güte der windstärkevorhersagen im sturmwarnungsdienst. Geogr. Ann. 8, 301.

Hornik, K., Stinchcombe, M., White, H.: 1989, Multilayer feedforward networks are universal approximators. Neural Netw. 2(5), 359.

Kliem, B., Török, T.: 2006, Torus instability. Phys. Rev. Lett. 96(25), 255002.

Laboratory, N.-R.A.: 2015, Verification: Weather forecast verification utilities. R package version 1.42.

Li, R., Cui, Y., He, H., Wang, H.: 2008, Application of support vector machine combined with k-nearest neighbors in solar flare and solar proton events forecasting. Adv. Space Res. 42(9), 1469.

Liaw, A., Wiener, M.: 2002, Classification and regression by randomForest. R News 2(3), 18.

Liu, C., Deng, N., Wang, J.T.L., Wang, H.: 2017, Predicting solar flares using SDO/HMI vector magnetic data products and the random forest algorithm. Astrophys. J. 843(2), 104.

MacKay, D.J.C.: 2003, Information Theory, Inference, and Learning Algorithms.