Abstract

Considering the impact composite indicators can have on public opinion and policy development, the need for their frameworks to be methodologically sound and statistically verified is growing daily. One of the issues in the process of composite indicator construction which has generated much debate is how to choose the weighting scheme. To address this slippery step, we propose an optimization approach based on the enhanced Scatter Search (eSS) metaheuristic. In this paper, the eSS algorithm is applied to obtain a weighting scheme which will increase the stability of the composite indicator. The objective function is based on the relative contributions of indicators, while the problem constraints rely on the bootstrap Composite I-distance Indicator (CIDI) approach which is also proposed herein. The eSS-CIDI approach combines the exploration capability of eSS and the data-driven constraints devised from the bootstrap CIDI. This novel weighting approach was tested on two acknowledged composite indicators: the Academic Ranking of World Universities (ARWU) and the Networked Readiness Index (NRI). Results indicate that the composite indicators created using the eSS-CIDI weighting approach are more stable than the official ones.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

The Organisation for Economic Co-operation and Development (2004) delineates composite indicators as metrics formed “when individual indicators are compiled into a single index, on the basis of an underlying model of the multi-dimensional concept that is being measured”. At the very outset, this definition provokes a number of questions: Which individual indicators to choose? How to compile the chosen indicators? Whether or not to apply weights to the indicators? How to devise an underlying model which will best describe the observed phenomenon? It can be observed that the creation of a composite metric might be a frustrating process since there are many questions with different possible subjective answers.

Nevertheless, composite indicators or composite indexes have become popular and widely used metrics for the assessment of complex and sophisticated phenomena which cannot be captured with a single variable. According to Saisana and Tarantola (2002), there are several reasons for the increased popularity of composite indicators. Namely, they provide a single number which summarizes a multi-dimensional phenomenon, making it easier to understand and communicate. Furthermore, a single number provides a ‘bigger picture’, offers the possibility of comparison and ranking between entities, and can be used to assess progress of entities over time.

On the other hand, there are drawbacks of the idea to compile individual indicators. Namely, the results of composite indicators should be interpreted with caution and policymakers and other stakeholders who interpret them should have prior knowledge of the measured multi-dimensional phenomenon (Zornic et al. 2015). Next, they may be misused to gauge the desired policy or may even lead to inappropriate policies if certain dimensions or indicators are not included in the original framework (Nardo et al. 2005). Also, as there is no simple answer to the methodological choices, various experts can easily initiate debate (e.g. Cherchye et al. 2007; Decancq and Lugo 2013; Jeremic et al. 2011; Ray 2007).

Having in mind the pros and cons of composite indicators, their possible policy implications should be more closely observed. According to Birkmann (2007), the purposes of assessment using composite indicators are to assist policy makers in defining strategies, developing policies, and identifying priorities, and to promote the exchange of information between the government and the wider public. Also, composite indicators can be used to initiate public and political discourse on various policies, for example on national university systems (Saisana et al. 2011). It can be concluded that various stakeholders establish opinions and make decisions based on these ranking results. Therefore, composite indicators can have significant policy implications and should be created and interpreted with caution. Grupp and Mogee (2004) warn that results of composite indicators should not be blindly followed as good policymaking in one country may be poor in another one. As there is absence of transparency and clear rule for the methodological choices, the results of composite indicators should have a complementary function in policymaking (Grupp and Schubert 2010; Saltelli 2007; Sébastien et al. 2014).

On one hand, there is a clear demand for composite measures, while on the other hand the process of their creation is not straightforward and involves assumptions (Saltelli 2007). Policymakers and other stakeholders are in need of stable and methodologically sound composite indicators. This provoked a new research direction in the process of composite indicator creation: the robustness analysis of the composite indicator through uncertainty and sensitivity analysis of methodological assumptions (Saisana et al. 2005). The main purpose of the uncertainty and sensitivity analysis is to act as a ‘quality assurance’ tool which should indicate how sensitive the ranking is to methodological choices (Greco et al. 2018a). Although both analyses provide important insights on the robustness of the created metric, this step is still not universally conducted in the process of composite indicator creation (Burgass et al. 2017; Freudenberg 2003; Nardo et al. 2005; Saisana et al. 2005).

The methodological assumption within the creation of composite indicators that attracted our attention is the weighting scheme and its impact on the overall stability of the metric. The question we aim to answer is how to assign weights to indicators within a composite indicator, in a manner which will improve the stability of the metric. Therefore, herein the focus will be how to minimize the effects of the weighting scheme in terms of rank sensitivity and rank reversal. This study attempts to provide an optimization approach that proposes a new weighting scheme, which is data-driven and maximizes the stability of the composite indicator considering specific weight constraints.

The purpose of this study is to devise a fresh weighting approach using non-participatory unsupervised methods with the goal to improve the robustness of the final metric. Such methods do not depend on expert opinion inputs and therefore reduce the possible bias (Singh et al. 2007). However, one should have in mind that the results of such methods should act as a starting point for experts and decision makers upon which they will form the final decision on the weighting scheme (Burgass et al. 2017; Zanakis et al. 2016). Namely, our approach proposes a weighting scheme which should inform the experts from which ‘neighbourhood’ or ‘environment’ they should aim to choose the final weights so as to be sure that their composite indicator will be stable. One of the benefits of the proposed approach is that it might accelerate the process of assigning weights which includes expert opinion.

The proposed approach is named enhanced Scatter Search—Composite I-distance Indicator (eSS-CIDI) approach. In order to obtain weight constraints, we applied the bootstrap Composite I-distance Indicator (CIDI), to form the objective function we used relative contributions (Sect. 3.1) and to solve the optimization problem we chose the enhanced Scatter Search (eSS) (Sect. 3.2).

The optimization problem that this study addresses is formulated as:

- Minimize:

-

(a) Sum of standard deviations of relative contributions of indicators which make a composite indicator through weighting scheme

- Subject to:

-

(b) Each weight should be within the interval based on the bootstrap CIDI results

(c) Sum of weights must equal 1

(d) Weights are greater or equal to 0

The question which arises is where does this study position itself in the literature. Is it a contribution to the field of weighting schemes or the field of robustness analysis? Having in mind the rising importance of both fields in the novel literature (e.g. Becker et al. 2017; Dobbie and Dail 2013; Foster et al. 2013; Greco et al. 2018a; Van Puyenbroeck and Rogge 2017) and the need to develop tools to reduce uncertainty and sensitivity of composite scores (Dialga and Thi Hang Giang 2017) we aimed to propose an approach which will encompass both issues at the same time. Namely, in the previous researches usually a novel weighting scheme is proposed, and in the next step, the robustness analysis is performed. Such an approach has been taken in several researches so far (e.g. Dobrota et al. 2015, 2016; Saisana and D’Hombres 2008). The eSS-CIDI approach, on the other hand, does the two at once and can thus speed up the process of composite indicator creation. It proposes a weighting scheme and automatically verifies that it is the most stable one if the robustness analysis is conducted.

The paper is organized as follows: Sect. 2 presents a literature review on the issues in the process of composite indicator creation with a special overview on the weighting approaches based on optimization. The same Section also provides insights on the robustness analysis and the issue of measuring composite indicator stability. Section 3 features the theoretical background of the chosen methods which form the proposed approach, presented in detail in Sect. 4.1. In Sect. 5.3, the results of the application of the proposed approach on the Academic Ranking of World Universities (ARWU) 2017 and the Networked Readiness Index (NRI) 2016 are presented. In Sect. 6 we analyse the prospects of future research, and finally, we provide concluding remarks in Sect. 7.

2 Related Work

2.1 Issues in the process of composite indicator creation

Although composite indicators are appealing and informative, several steps in their creation raise significant concerns among academics and researchers (see e.g. Paruolo et al. 2013; Saisana and D’Hombres 2008). The commonly cited slippery steps are the choice of method of normalisation, weighting approach, and aggregation. Therefore, the uncertainty and sensitivity analysis are commonly conducted to explore the effects of different approaches to these steps (Cherchye et al. 2008a; Paruolo et al. 2013; Saisana et al. 2011, 2005). Herein, we provide a brief literature review on the most commonly chosen approaches for the above-mentioned steps.

2.1.1 Normalization

After choosing individual indicators and prior to their weighting and aggregation, the raw data usually measured in different scales (euros, percentages, meters, …) should be put on a standard scale. The step which deals with this issue is normalization. Again, the question arises which method of normalization to use? Freudenberg (2003) lists out several methods: standard deviation from the mean (z-scores), distance from the group leader, distance from the mean, distance from the best and worst performers (min–max), and categorical scale. The normalization method does not affect the ranking of entities per individual indicators, but how does it impact the overall composite indicator value? As different normalization methods produce different results (Freudenberg 2003) they might have significant effects on composite indicator scores (Cherchye et al. 2007; Ebert and Welsch 2004).

Several studies have been conducted so as to explore the impact of the normalization method on the overall composite indicator values. For example, Jovanovic et al. (2012) showed that depending on whether raw or normalized data is used different rankings could be obtained. Pollesch and Dale (2016) conducted an analysis using partial derivatives of the aggregation and normalization functions to inspect their impact. They showed that the overall composite indicator value significantly changes if the value of the indicator changes for a unit depending on the normalization method employed. Talukder et al. (2017) tested and compared various normalization and aggregation techniques for developing composite indicators and also showed that that the normalization gravely impacts the overall results. The conclusion can be made that whichever normalization method is employed it should be chosen with care (Nardo et al. 2005) and in line with the weighting approach which is to be used (Mazziotta and Pareto 2012).

2.1.2 Weighting

According to the OECD Handbook on constructing composite indicators, weights should ideally reflect the contribution of each indicator to the overall composite indicator (Nardo et al. 2005). Therefore, prior to aggregation, the composite indicator creators are given the possibility to assign weight to indicators which will reflect their relative importance to the overall composite indicator (Booysen 2002). The question arises how to choose an appropriate weighting scheme as undeniably, the weighting scheme might have an impact on the entities ranked (Greco et al. 2018a).

Decancq and Lugo (2013) distinguish three essential classes of approach to assigning weights: data-driven, normative, and hybrid. Data-driven weights allow the collected data to determine the weights associated with each indicator, thus bypassing the value judgments of experts. Data-driven methods are usually based on a statistical approach, such as Principal Component Analysis (PCA) or an optimization method such as Data Envelopment Analysis (DEA), Linear programming, or Goal programming (Nardo et al. 2005). As weighting approaches based on optimization are of great importance for this paper, we elaborate more on them in Sect. 2.2.1. Normative approaches, in contrast, rely solely on the judgements of the surveyed experts or stakeholders about the importance of the indicators which make up the composite indicator. Some of the normative weighting approaches include equal weighting, Analytic Hierarchy Process (AHP), Conjoint Analysis (CA), public opinion, budget allocation and others (Singh et al. 2007). Hybrid approaches integrate data-driven and normative approaches. Namely, weights at one level of the composite indicator can be data-driven and expert-driven at the other (Maricic et al. 2015). Another type of hybrid weighting is when expert opinion acts as a constraint for data-driven optimization procedures (Joro and Viitala 2004; Reggi et al. 2014). Although the results of data-driven and hybrid weighting approaches are promising, new approaches are needed to develop a weighting scheme which will differentiate the indicators by importance, but which will also consider the stability of the composite indicator.

Neither weighting approach is flawless (Greco et al. 2018a). Namely, data-driven methods tend to exclude expert opinion completely and introduce a high level of conceptual rigidity into the metric generated (Booysen 2002). Moreover, they are sometimes based on an over-complex multivariate analysis which final users do not understand (Cox et al. 1992). Also, the measured statistical relationships between indicators might not always indicate an actual relation between them (Saisana and Tarantola 2002). On the other hand, expert-driven weighting is often characterized by strong inter-individual disagreement (Rogge 2012) which can lead to conflicting opinions that may, in turn, compromise the validity of the suggested weights (Giannetti et al. 2009). When it comes to equal weighting, it is criticized for not being adequately justified (Greco et al. 2018a) and because it may imply unequal weighting to indicators. Initially, the equal weighting scheme considers all dimensions in the composite indicator equally important. If dimensions consist of a different number of indicators, the indicators in the dimension with the largest number of indicators will be given less weight in the calculation of the overall metric (Dobbie and Dail 2013). However, if a composite indicator has a hierarchy, meaning that each dimension consists of the same number of indicators, all indicators will be equally weighted. Another issue of equal weighting is “double counting”, which occurs when two collinear indicators are included in the composite indicator. In that case, a unique dimension of the observed multi-dimensional concept will be assigned double weight (Nardo et al. 2005).

2.1.3 Aggregation

After weighting of individual indicators, aggregation comes as a final step before obtaining the results of the composite indicator. As in case with normalization and weighting, there are multiple approaches to choose from. The first issue is how to divide aggregation methods into categories. Booysen (2002) states they can be additive or functional in nature. The OECD observes them as linear, geometric, and multi-criteria (Nardo et al. 2005), while Munda (2005a) divides them on compensatory and non-compensatory. Nevertheless, in their review on aggregation methods, Greco et al. (2018a) also list “mixed strategies” which are both weighting and aggregation and could not perfectly fit in neither category. Examples of such approaches are Mazziotta–Pareto Index (MPI) (Mazziotta and Pareto 2007), Penalty for a Bottleneck methodology (Ács et al. 2014), and Mean–Min Function (Casadio Tarabusi and Guarini 2013). Herein, we will briefly present and compare the linear and geometric methods, as they are still the most commonly used aggregation methods in the composite indicator literature (Greco et al. 2018a).

The linear approach allows trade-off, while the geometric does not. Namely, if the linear approach is taken, low results in one indicator can easily be compensated with higher result in the other (Munda and Nardo 2009). Therefore, one should be cautioned when assigning weights led by the idea of giving importance to indicators, as the perceived importance will be transformed to trade-off (Paruolo et al. 2013). A mean of skipping the possibility of constant trade-off is to use the geometric mean, as it offers inferior compensability (Van Puyenbroeck and Rogge 2017). Also, if the geometric mean is used, entities with lower results would be motivated more to increase their results, as a small increase in the indicator value will have more effect on the final results than for the entities with a higher rank (Dobbie and Dail 2013; Greco et al. 2018a).

2.2 Weighting Approaches Based on Optimization

The approach based on optimization caught our attention from different data-driven and non-participatory weighting approaches. The idea of assigning weights through optimization is to maximize or minimize a specific objective function in which the decision variables are the indicator weights. Our literature review shows that this can be achieved through the use of Data Envelopment Analysis (DEA) and various metaheuristics.

2.2.1 Data Envelopment Analysis (DEA) and DEA-Like Approaches

The basic idea which underpins DEA, devised by Charnes et al. (1978), is to calculate the relative efficiency of decision-making units (DMUs) based on their inputs and outputs. DEA can be used in the process of composite indicator development to devise an optimal set of weights. Namely, a series of multiplicative DEA models that can be transformed into equivalent linear programs are solved (Zhou et al. 2010). The key obstacle in the application of DEA and DEA-like models in composite indicator creation is that without constraints, all the observed entities can achieve a maximum or close to maximum score (Hatefi and Torabi 2010). So far, several solutions to this problem have emerged. For example, Despotis (2005) proposed a two-phase method: first, the application of a standard DEA-like model and then the application of parametric goal programming to discriminate entities which receive a performance score of 1. Zhou et al. (2010) suggested the creation of a composite indicator which will be the weighted sum of the “best” and “worst” case scenario. Both scenarios are created using a multiplicative DEA model, whereas the “best” case scenario aims to assign weights that maximise the index value, while the “worst” aims for the opposite. Their approach is valuable as it avoids the inclusion of constraints but takes the extremes into account. On the other hand, Ramón et al. (2012) suggested a DEA approach which is based on the idea of minimizing the deviations of the common set of weights from DEA profiles of weights provided by the CCR model for efficient DMUs. Another, more recent approach is to use the results of the multivariate Composite I-distance Indicator (CIDI) approach as constraints for the DEA model (Radojicic et al. 2015, 2018).

Led by the idea of DEA, Melyn and Moesen (1991) proposed the Benefit-of-Doubt (BoD) model. Namely, in essence, the BoD model is a DEA CCR model with unitary inputs. Therefore, BoD is sometimes defined as output-oriented DEA (Rogge 2012). The BoD model aims at maximizing the overall composite indicator value of each entity without prior information on the weighting scheme. Clearly, there are conceptual similarities between DEA and BoD: first, between their goals and second, in the lack of available information on weights (Cherchye et al. 2007). Recently, the entity-specific BoD weighting technique has become an established method in the composite indicator literature (Amado et al. 2016; Mariano et al. 2015; Van Puyenbroeck and Rogge 2017). Because the BoD model is a linear programming problem (Rogge 2012), the question of additional model constraints arises. There is a number of approaches to model restriction. Cherchye et al. (2007) presented four different models of constraints to the BoD: absolute restrictions, ordinal sub-indicator share restrictions, relative restrictions, and proportional sub-indicator share restrictions. Blancas et al. (2013) followed the idea of weight restrictions and suggested a common-weight model, inspired by the DEA, but included an objective in the determination of weights: to minimize the number of ties. Giambona and Vassallo (2013) proposed to restrict the weights using the proportional share of index dimensions. Maricic et al. (2016) presented the possibility of integrating the BoD and the CIDI approach. In addition to the original BoD, new types of BoD model have emerged. Directional-BoD, based on a directional distance function model (Zanella et al. 2015), the Meta-Goal Programming BoD with two sets of goals and two meta-goals (Sayed et al. 2015), and the Goal Programming BoD (GP-BoD) (Sayed et al. 2018) which aims to obtain consistent and stable rankings through BoD weights.

2.2.2 Application of Metaheuristics

Metaheuristics can be defined as solution methods that conduct an interaction between the local improvement procedures and complex strategies to create a method able to move from local optima and perform a robust search of the solution space (Glover and Kochenberger 2003). Blum and Roli (2003) outlined fundamental properties of metaheuristics. They state that metaheuristics are strategies that “guide” or “lead” the search process with the goal to find (near-) optimal solutions, that the complexity of their algorithms vary, that they are not problem specific and can easily be modified to solve a particular problem, and that some of them can incorporate mechanisms to avoid getting trapped in the local optima. The possibility of emerging from the local optima and finding a better solution is what has made metaheuristics remarkably effective and appealing (Gendreau and Potvin 2010). Due to many benefits that metaheuristics offer in solving complex problems, this research field is in constant expansion since 1980s (Sörensen et al. 2018).

Various efforts at obtaining weights using metaheuristics have been summarized in Table 1. Kim and Han (2000) proposed the application of genetic algorithm (GA) approach to feature discretization and determination of connection weights for artificial neural networks (ANNs) to predict the stock price index. Socha and Blum (2007), on the other hand, used Ant Colony Optimization (ACO) to train feed-forward neural networks for pattern classification. Again, to solve the issue of connection weights in ANNs Karaboga et al. (2007) applied Artificial Bee Colony (ABC) algorithm while Mirjalili et al. (2012) used Particle Swarm Optimization (PSO) and the Gravitational Search algorithm (GSA).

Chowdhury et al. (2008) suggested using the Predator-Prey optimization (PPO) to solve the multi-objective optimization problem. The idea in multi-objective optimization is to assign different weights to each objective so as to minimize the objective function. Again, to solve the problem of weights within a multi-objective function Taghdisian et al. (2015) used GA, Macedo et al. (2017) employed Evolutionary Algorithms (EA), while Dubey et al. (2016) used Ant Lion optimization (ALO).

Jain et al. (2015) aimed to resolve the weights flexibility problem of DEA. They implemented a GA approach to find a set of weights which maximize the DMUs’ efficiency and which are at a minimum distance from all the decision makers’ preferences.

Two especially interesting papers are those by Grupp and Schubert (2010) and Becker et al. (2017). Both aim to optimize an aspect of the composite indicator through indicator weights using different approaches. The first seeks to minimize the difference between the best and the worst achievable rank of each ranked entity when weights change arbitrarily. They formulate the problem as a non-linear, mixed-integer problem which they solve using GA. Becker et al. (2017) based their approach on a normalized correlation ratio. The objective function aims to minimize the sum of square difference between the normalized correlation ratio based on the weights suggested by experts and the normalized correlation ratio based on the newly proposed weights. To solve the problem they utilized the Nelder-Mead simplex search (Lagarias et al. 1998).

The presented overview of methods employed to optimize a goal function through the weighting scheme shows that the DEA and DEA-like approaches have been used more often in the process of creating composite indicators while metaheuristics have been employed with a lot of success to optimize weights in ANNs and in multi-objective functions. A research direction which started to develop recently is the application of metaheuristics to optimize the weighting scheme and the structure of composite indicators and DEA models. A valuable insight provided additionally by Table 1 is that the metaheuristics most commonly used to solve the issue of assigning weights are population-based metaheuristics. Research shows that the ANNs which used the weights devised from population-based metaheuristics outperformed the gradient descent algorithm (Gupta and Sexton 1999) and random search and Levenberg–Marquardt algorithms (Socha and Blum 2007). Such a result can indicate that metaheuristics can be used with success in the process of establishing weighting schemes.

2.3 Robustness Analysis of Composite Indicators

Robustness and stability are considered to be important issues in the process of composite indicator development (Dobrota et al. 2015). Nardo et al. (2005) state that there are multiple approaches to be considered when constructing a composite indicator. Therefore, analyses should be undertaken to assess the impact of the different methodological approaches employed on the values and ranks of entities. According to Greco et al. (2018a), there are three ways to conduct the robustness analysis using: uncertainty and sensitivity analysis, stochastic multicriteria acceptability analysis, and other approaches which are mostly based on linear programming and optimization. Herein, we provide a literature review on the first two approaches, as the third group will be more closely observed in the following subsection as it is based on the minimization of rank-reversals.

The most commonly used robustness analyses to evaluate the stability of a particular composite indicator are uncertainty and sensitivity analysis (Dijkstra et al. 2011; Paruolo et al. 2013; Saisana and D’Hombres 2008; Saltelli et al. 2000). Usually, uncertainty analysis is performed first to quantify the impact of alternative models on the rankings. Each model is, in fact, a different composite indicator, in which the normalization method, weighting approach, the aggregation method or another factor have been randomly chosen from the predefined methods for each of the methodological assumptions (Freudenberg 2003). Alongside uncertainty analysis lies sensitivity analysis. The goal here is to present, qualitatively and quantitatively, the variability of the scores and ranks which occur due to different methodological assumptions (Saltelli et al. 2007). The sensitivity analysis can be used to test composite indicators for robustness as it shows how much an individual source of uncertainty influences the output variance (Saisana et al. 2005). There are multiple examples in which the uncertainty and sensitivity analysis have been conducted. For example, Dijkstra et al. (2011) observed the uncertainty of the threshold for the definition of the development stage of countries and the weighting scheme of the Regional Competitiveness Index (RCI). Saisana and Saltelli (2014) observed the uncertainty of the aggregation method, missing data imputation method, and the weighting scheme of the Rule of Law Index (RoL), while Cherchye et al. (2008a) had 23 uncertain input factors in the analysis of the Technology Achievement Index (TAI). Importantly, both uncertainty and sensitivity analysis can be used to assess a single methodological assumption, for example solely for the normalization method. Namely, sometimes, it is of interest to explore the robustness of the results when solely the weights are considered. Dobrota et al. (2015) inspected the stability of the information and communication technology (ICT) Development Index (IDI), Dobrota and Dobrota (2016) observed the stability of ARWU and Alternative ARWU ranking, while Dobrota and Jeremic (2017) analysed the robustness of the Quacquarelli Symonds (QS) and University Ranking by Academic Performance (URAP) rankings. All three papers measured the stability through relative contributions of indicators (Sect. 3.1) and indicated that the original weighting scheme could be altered so as to improve the stability of the composite indicator.

Another approach is to apply the stochastic multicriteria acceptability analysis (SMAA) initially proposed by Lahdelma et al. (1998). The SMAA was first used in the field of multiple criteria decision making, but recently it has been used in the field of composite indicators. Greco et al. (2018b) observed the robustness through weights assigned to indicators. They ranked 20 Italian regions based on 65 indicators and used SMAA to analyse the whole space of possible weight vectors considering the spectrum of possible individual preferences. The result of their analysis is the probability of a region to be on a certain rank. Corrente et al. (2018) proposed a novel technique, namely SMAA for strategic management analytics and assessment, or SMAA squared (SMAA-S) for ranking entrepreneurial ecosystems. Compared to the SMAA, the SMAA-S allows the definition of additional weight constraints. They ranked 23 European countries using 12 indicators and as a result, again obtained the probability of a country to be on a certain rank. Angilella et al. (2018) went a step further and proposed a hierarchical-SMAA-Choquet integral approach to create a composite index of sustainable development. Compared to the previous two versions of SMAA, this one is based on a more complex aggregation method, the Choquet integral which finds the balance between linear and geometric aggregation (Choquet 1953). They ranked 51 Italian municipalities using 10 indicators. We can conclude that the application of SMAA in the field of robustness analysis is a new promising field of study.

As it can be observed, the robustness analyses is commonly conducted to evaluate composite indicators as it allows the assessment to what extent, and for which entities, in particular, the modelling assumptions affect ranking results (Saisana and Saltelli 2014). Thus, the analysis provides valuable feedback to both composite indicator creators and policymakers. It can be argued that the stability of a composite indicator is highly important for policymakers as it assures them that the measure they are using is not susceptible to rank changes (Dobrota et al. 2015). Therefore, it can be debated that the statistical stability is one of the key attributes of any composite indicator (Renzi et al. 2017).

2.4 Measuring Composite Indicator Stability

The question which arises is how to define and measure the stability of a composite indicator? In this paper, we observed stability through the lens of rank reversals. Namely, the more rank reversals a weighting scheme yields compared to the official weighting scheme, the less stable it is. So far, the issue of rank reversal has been explored in the sphere of multicriteria decision making (MCDM). However, recent studies show that rank reversal in the sphere of composite indicators is slowly but surely becoming a topic of research interest. In the following paragraphs, we provide a literature review on the definition of stability through rank reversals in MCDM and composite indicators sphere and on the remedies to minimize it.

In the MCDM literature there are several definitions of rank reversal. One is that rank reversal is a ranking contradiction which occurs when the original set of alternatives alters by adding new alternatives or deleting one or more alternatives (Wang and Triantaphyllou 2008; Wang and Luo 2009). Macharis et al. (2004) define rank reversal as the phenomenon which occurs when a copy or a near copy of a choice is added to the set of options. The alternative term is equivalent to a country or entity in the composite indicator context (Sayed et al. 2018). According to Mousavi-Nasab and Sotoudeh-Anvari (2018) however the rank reversal is defined, it is an undesirable phenomenon that indicates unreliability. The same authors stress out that the research on rank reversal has great practical importance and should be continued.

The issue of rank reversal in MCDA has been a topic of thorough research as it is perceived as a drawback of almost all MCDA techniques. For example, Mousavi-Nasab and Sotoudeh-Anvari (2018) showed that COPRAS (Complex Proportional Assessment), TOPSIS (Technique for Order Preference by Similarity to Ideal Solution) and VIKOR (VIseKriterijumska Optimizacija i Kompromisno Resenje, Serbian term) may produce rank reversals. Herein, we list several studies which tackled the issue of rank reversal occurring due to weighting scheme. In an interesting study by Ligmann-Zielinska and Jankowski (2008), in which they presented a framework for sensitivity analysis in multiple criteria evaluation, they devoted special attention to weights as weights “have been most often criticized”. They state that it should be possible to devise “critical weights” for which a relatively small change in the weight will cause minimal rank reversal. In their study on Analytical Hierarchy Process (AHP) Bojórquez-Tapia et al. (2005) chose, as the best solution, the structure which presented the lowest rank reversal sensitivity to weight uncertainty. Their analysis showed that the robustness of decisions based on the AHP depends on the relative weights. Munda (2005b) also pointed out the importance of weights by giving evidence that changing the weights in a multi-criterion framework for devising urban sustainability index significantly changes entity ranking.

In the composite indicator literature on rank reversal, there are several researches needed to be mentioned. The first one is the work of Cherchye et al. (2008b) in which they inspected the robustness of the Human Development Index (HDI) through the effects of weighting and aggregation on eventual country rankings. They proposed several possible weight scenarios and using a dominance criterion conducted pairwise country comparisons. Several years later, Permanyer (2011) presented innovative ways to measure the sensitivity of a composite measure due to the choice of specific weights. Namely, he proposed an approach which aims to find a “neighbourhood system”, a close, nested, and bounded neighbourhood of weights from which a decision maker can consider to choose the weighting scheme. He also proposed a robustness function which shows the percentage of rank shifts as the weighting scheme changes. Next, Foster et al. (2013) did a research in two directions and showed that a higher positive association between indicators leads to maximal robustness and that their novel robustness measure can be used to compare aggregate robustness properties of different composite indicators. In a more recent study, Sayed et al. (2018) wanted to avoid some of the drawbacks of the BoD and proposed a Goal Programming BoD (GP-BoD) model. The main benefit of the proposed method is that it overcomes the rank reversal issue by assigning data-driven weights which minimize rank reversal.

Robustness of the composite indicator in terms of capacity to produce stable measures should be conducted (Maggino 2009) to find the most stable model. Sayed et al. (2018) noted that the credibility of the composite indicator is limited if it produces inconsistent countries’ rankings. Therefore, when creating a composite indicator, the issue of rank stability should be considered. Also, it is desirable that the chosen weighting method should be such that it avoids or minimizes rank reversal (Paracchini et al. 2008). Still, robustness is not a goal in itself, and the results of weighting schemes which are most stable should be analysed with caution (Permanyer 2011).

3 Background

In this Section, we provide insights into the methods used in our proposed approach for weight determination. First, we introduce the bootstrap CIDI, the methods it builds upon, and the rationale for its implementation. Second, we present the relative contributions and their role in the measurement of composite indicator stability. Finally, we give a brief overview of the enhanced Scatter Search (eSS), the metaheuristics used to solve the optimization model and provide the optimal weighting scheme.

Before introducing the above-mentioned methodologies, we provide notations used in the model development process and in the proposed approach.

Notation

- i :

-

Index for indicators within a composite indicator, \( I = \left\{ {1, \ldots ,k} \right\} \), \( i \in I \)

- \( x_{ie} \) :

-

Value of indicator i for entity e, \( i \in I \), \( e \in E \)

- \( d_{i} \left( {e,f} \right) = x_{ie} - x_{if} \) :

-

Distance between the values of indicator i of the entity e and fictive entity f, \( i \in I \), \( e \in E \)

- \( r_{ji.12 \ldots j - 1} \) :

-

Coefficient of partial correlation between indicators i and j where j < i, \( i \in I \), \( j \in I \)

- \( D^{2} \left( {e,f} \right) \) :

-

Value of the I-distance between entity e and fictive entity f, \( e \in E \)

- \( w_{{CIDI_{i} }} \) :

-

Weight assigned to indicator i using CIDI approach, \( i \in I \)

- \( s \) :

-

Index for bootstrap samples, \( S = \left\{ {1, \ldots ,p} \right\} \), \( s \in S \)

- \( w_{\hbox{min} i} \) :

-

Minimum weight of indicator i obtained after bootstrap CIDI, \( i \in I \)

- \( w_{i} \) :

-

Weight assigned to indicator I, \( i \in I \)

- e :

-

Index for entities ranked by a composite indicator, \( E = \left\{ {1, \ldots ,n} \right\} \), \( e \in E \)

- f :

-

Fictive entity from which the distances are calculated

- \( \sigma_{i}^{2} \) :

-

Variance of indicator i, \( i \in I \)

- \( r_{ji.12 \ldots j - 1}^{2} \) :

-

Coefficient of partial determination between indicators i and j where j < i, \( i \in I \), \( j \in I \)

- \( r_{i} \) :

-

Pearson’s correlation coefficient between indicator i and the I-distance value, \( i \in I \)

- \( v_{ie} \) :

-

Relative contribution of indicator i to the overall composite indicator value of entity e, \( i \in I \), \( e \in E \)

- \( w_{{CIDI_{is} }} \) :

-

Weight assigned to indicator i using CIDI approach based on the sample s, \( i \in I \), \( s \in S \)

- \( w_{\hbox{max} i} \) :

-

Maximum weight of indicator i obtained after bootstrap CIDI, \( i \in I \)

3.1 Bootstrap Composite I-Distance Indicator (Bootstrap CIDI)

This subsection provides insights to the Composite I-distance Indicator (CIDI) approach, a brief introduction to the bootstrap method, and the rationale for combining the bootstrap and the CIDI.

In the 1970s the growing need for a multivariate statistical analysis that could rank countries based on their level of socio-economic development led to the creation of the I-distance method (Ivanovic 1977). Since then the method has been applied for ranking entities in various fields, such as in education (Zornic et al. 2015), sustainable development (Savic et al. 2016), corporate social responsibility (Maricic and Kostic-Stankovic 2016) and ICT development (Dobrota et al. 2015).

In order to rank the entities (e.g. countries, regions, universities) using the I-distance method, we first select the entity with the minimal values for all indicators as a referent one in the observed dataset (Jeremic et al. 2013; Maricic and Kostic-Stankovic 2016). If such entity does not exist, a fictive entity, depicting minimal values for each observed indicator, is created.

For a selected set of indicators k chosen to characterize the entities, the square I-distance between the entity e, \( e \in E \), and fictive entity f is defined as (Ivanovic 1977):

The I-distance stands out for its ability to incorporate a number of variables into a single number without explicitly assigning weights. Therefore, it provides the possibility of ranking entities (Jeremic et al. 2011). Another benefit is that the importance of each variable for the ranking process can be obtained through the Pearson’s correlation coefficients. The more the variable is correlated with the I-distance value, the more it contributes to the overall I-distance value and the ranking process (Maricic and Kostic-Stankovic 2016). Therefore, the obtained correlation coefficients can act as a foundation for determining weights. Accordingly, the new weights are formed using the Pearson’s correlation coefficient between the variable and the obtained I-distance value. The weights suggested by the Composite I-distance Indicator (CIDI) approach are based on the ratio of the (I) correlation coefficient of a particular variable and (II) the I-distance value and the sum of correlation coefficients of variables with the I-distance value (Dobrota et al. 2015). The rationale of this approach is in line with the Hellwig’s (1969) “weights based on the correlation matrix” approach. Namely, he proposed indicator weights as the ratio of correlation coefficient of a particular variable and the exogenous criterion and the sum of correlation coefficients of variables with the exogenous criterion (Ray 2007). Comparing the two approaches, in case of the CIDI methodology, the exogenous criterion is the I-distance value. The formula is given as:

where \( r_{i} \) is the Pearson’s correlation coefficient between the indicator i, \( i \in I \) and the obtained I-distance value and \( \sum\nolimits_{j = 1}^{k} {r_{j} } \) is the sum of all Pearson’s correlation coefficients between the indicators and the obtained I-distance value. The sum of weights obtained using CIDI is 1 (Dobrota et al. 2016) and the new weighting scheme is unbiased in the sense that it is data-driven and that no expert opinion has been included in the weighting process. The obtained CIDI weights can be used to evaluate a composite indicator or to create a novel one (Dobrota et al. 2015), but they can also be used to create weight constraints (Maricic et al. 2016; Radojicic et al. 2018).

Efron (1979) coined the term bootstrap and described both the parametric and nonparametric bootstrap. In his parametric approach, random samples are drawn for a specified probability density function. On the other hand, in the nonparametric approach, thousands of resamples are drawn with replacement from the original sample, and each resample is of the same size as the original sample (Kline 2005). The idea of both parametric and nonparametric bootstrap is to assess the quality of estimates based on finite data (Kleiner et al. 2014). The goal is to obtain confidence intervals, quality assessments that provide more information than a simple point estimate obtained using the Maximum Likelihood Method (Efron and Tibshirani 1993).

However, statistical shortcomings of the nonparametric bootstrap have been discussed (Bickel et al. 1997; Mammen 1992). These led to the development of related methods such as the m out of n bootstrap with replacement (Bickel et al. 1997) and the m out of n bootstrap without replacement, known as subsampling (Politis et al. 1999). The idea of reducing the bootstrap sample size came from Bretagnolle (1983) and Beran and Ducharme (1991). They showed that the failure of Efron’s (1979) bootstrap with resampling size equal to the original sample size may be solved in some cases by undersampling. The idea of the m out of n bootstrap is to draw samples of size m with or without replacement, instead of resampling bootstrap samples of size n, where \( m \to \infty \), and \( m/n \to 0 \).

The type of bootstrap which caught our attention is the subsampling, the m out of n bootstrap without replacement. Namely, subsampling is more general than the m-bootstrap since fewer assumptions are required (Bickel and Sakov 2008). It is based on two basic ideas – one being undersampling, the other the absence of replacement (Politis and Romano 1994). Such an approach to sampling, without replacement, results in deliberate avoidance of choosing any member of the population more than once. This process should be used when outcomes are mutually exclusive and when the researcher wants to eliminate the possibility of the same entity being repeated in the sample. Also, the bootstrap method without replacement improves the stability and accuracy of the estimate (Kawaguchi and Nishii 2007) and shows asymptotic consistency even in cases where the classical bootstrap fails (Davison et al. 2003).

On the other hand, bootstrap with replacement is easier to use and more widely used (Geyer 2013). We chose the m out of n bootstrap without replacement (subsampling) so as to avoid the possibility of choosing any entity more than once. Namely, composite indicators are used to rank different entities. Although it is always possible to get two or three entities with the same values of indicators, this cannot happen for a large percentage of the sample. Theoretically, if a bootstrap with replacement was used, no matter the sample size, we could observe a sample made out of just one or two entities. The possibility of such an occurrence is small, but we wanted to be sure no entity will repeat. Such an approach is used in medical researches, when there is a strict need that a subject is no longer eligible for consideration (Austin and Small 2014). Also, Strobl et al. (2007) showed that when bootstrap without replacement is conducted no bias occurs. Having these considerations in mind, we opted for the m out of n bootstrap without replacement.

Using a smaller bootstrap sample requires a choice of m. Several solutions have been suggested (see e.g. Arcones and Gine 1989; Bickel and Sakov 2008). An interesting approach to choosing m is to make it equal to \( 0.632 \cdot n \), namely to its nearest integer. This idea was first introduced by Efron and Tibshirani (1997) with the goal to reduce the bias of the leave-one-out bootstrap. The idea of this approach is to have a number of observations in the subsample equal to the average number of unique observations in the bootstrap sub-samples (Braga-Neto and Dougherty 2004; De Bin et al. 2016). If a bootstrap n out of n with replacement is conducted, in each of the subsamples, there will be \( 0.632 \cdot n \) original data points. Namely, the probability that each data point will not appear in the subsample is \( \left( {1 - 1/n} \right)^{n} \approx e^{ - 1} \). If a subsample of size n is drawn then \( \left( {1 - 1/n} \right)^{n} \cdot n \approx e^{ - 1} \cdot n \approx 0.632 \cdot n \). This approach to defining m has become popular because it shows low variability and moderate bias (Jiang and Simon 2007). In our study, we, as in Strobl et al. (2007), used the \( 0.632 \cdot n \) as m.

When it comes to bootstrap, one must decide on the number of replications. Again, several suggestions have been made. For example, Hedges (1992) recommended performing 400–2000 bootstrap replications, while a more recent study by Pattengale et al. (2009) suggest between 100 and 500 replicates. They also state that the stopping criteria can recommend very different numbers of replicates for different datasets of comparable size. In our study, we chose 1000 bootstrap replications.

The idea of implementing the bootstrap for various forms of multivariate analysis emerged in the 1990s. For example, Ferrier and Hirschberg (1999) combined bootstrapping methods to measure bank efficiency using DEA. Nevitt and Hancock (2001) showed that bootstrap methods could be applied in structural equations models. Kim et al. (2008) combined the bootstrap and discriminant analysis. More recently, Xu et al. (2013) conducted a parametric bootstrap approach for the two-way ANOVA, and Konietschke et al. (2015) applied parametric and nonparametric bootstrap to MANOVA. Lead by these positive experiences and reputable results, we propose the application of the bootstrap to CIDI approach.

In a typical application of the CIDI approach, the researcher would obtain new, data-driven CIDI weights (Dobrota et al. 2016). However, by performing bootstrap and repeating the CIDI approach multiple times on various samples, one obtains an interval for each weight. Such an interval can be used as constraint in GAR DEA method (Global assurance region DEA method) (Radojicic et al. 2018). Therefore, we propose the application of the CIDI approach to each of the bootstrap samples and the calculation the minimum and maximum weight assigned to each indicator. The obtained values can be used as weight constraints.

The procedure for the m out of n bootstrap CIDI without replacement with p replications is as follows:

-

1.

From the observed n entities create p random samples Ss of size m (m = \( 0.632 \cdot n \))

-

2.

Perform the CIDI approach on each of the p created random samples

-

3.

Obtain the minimum and maximum of the CIDI weights assigned to each of the k indicators.

The results of the bootstrap CIDI will be used in the next steps of the proposed hybrid weighting approach.

3.2 Relative Contributions

The weight assigned to the indicator does not guarantee its final contribution in the overall value (Saisana and D’Hombres 2008). One of the means to assess the share of an indicator in the overall value is using relative contributions. The relative contribution of each indicator to the overall composite indicator value is calculated as the proportion of the (I) product of the indicator score with the associated weight compared to the (II) total score (Murias et al. 2008). The formula is given as:

where the relative contribution \( v_{ie} \) represents the relative contribution of indicator i, \( i \in I \) to the overall index value of entity e, \( e \in E \).

The relative contribution can be interpreted as percentual impact of the weighted indicator i to the overall composite indicator value of entity e. Therefore, we can say that as the value of the relative contribution of an indicator i of entity e rises, the impact of the indicator i in the overall composite indicator value of the entity e rises. The presented analysis can be quite useful when it comes to the assessment of composite indicators, especially when the values of relative contributions are analysed per indicator. Namely, such an approach can give insight into whether certain indicators are dominating the overall composite indicator values (Dobrota et al. 2016). It can also show that the relative contribution of an indicator to the overall composite indicator value might not necessarily be captured by the weight assigned (Saisana and D’Hombres 2008). This is measured through the mean relative contribution per indicator. It can deviate from the official weight assigned to the indicator thus indicating that an indicator has a higher or smaller impact. Therefore, relative contributions can indicate the level of composite indicator and/or rank stability. Accordingly, a higher standard deviation of relative contributions leads to a greater degree of oscillation of overall composite indicator value subsequently leading to higher rank oscillation (Savic et al. 2016).

So far, relative contributions have been used with a great deal of success in the complex procedure of composite indicator creation. Besides being used to inspect the difference between nominal (explicit) and relative (implicit) weights, relative contributions can be used as input to the uncertainty and sensitivity analysis of weighting schemes such as in Dobrota and Dobrota (2016) and Dobrota et al. (2016). Another interesting application of relative contributions in uncertainty and sensitivity analysis is their use as constraints in the DEA model. Murias et al. (2008) aimed to create a composite indicator for the quality assessment of Spanish public universities using nine indicators and the DEA model. Since they wanted to allow every unit to choose its individual weights, but also to limit the flexibility of the weights, they imposed additional restrictions to the relative contributions of indicator weights. Similarly, Pérez et al. (2013) used linear programming to maximize the value of the composite indicator, while restraining the weights using relative contributions of indicators. Relative contributions can also be used as a stopping rule. Savic et al. (2016) suggested stopping post hoc I-distance (proposed by Marković et al. (2016)) when the sum of standard deviations of relative contributions starts to increase.

3.3 Enhanced Scatter Search (eSS)

Scatter Search (SS) is a population-based metaheuristics based on formulations proposed in the 1960s for combining decision rules and problem constraints. The SS was introduced by Glover (1977) for integer programming. However, the SS has recently produced promising results for solving combinatorial and nonlinear optimization problems (Glover et al. 2000). For that, it has seen several modifications of the original method such as the enhanced Scatter Search (eSS) (Egea et al. 2009), Cooperative enhanced Scatter Search (CeSS) (Villaverde et al. 2012), and the self-adaptive cooperative enhanced scatter search (saCeSS) (Penas et al. 2017). One of the reasons for the interest of academics and researchers in the SS is because it uses strategies for combining solution vectors that have proved effective in a variety of problem settings. As the modifications of SS have become more widely used, there was a growing need to implement them in the programming language R and make them more accessible (Egea et al. 2014).

The optimization algorithm used, the enhanced Scatter Search (eSS), can be considered as an evolutionary method similar to e.g. genetic algorithms but based on systematic combinations of the population members instead of recombination and mutation operators. Metaheuristic classification places Scatter Search in the group of population-based algorithms that constructs solutions by applying strategies of diversification, improvement, combination and population update (Penas et al. 2015). It is a naturally inspired and non-linear metaheuristic which is suited for global dynamic optimization of nonlinear problems. The aim of the eSS is to enable the implementation of various solution strategies that can produce new solutions from combined elements to derive better solutions than strategies whose combinations are only based on a set of original elements. The SS algorithm uses different heuristics to choose suitable initial points for the local search which helps overcome the problem of switching from global to local search (Rodriguez-Fernandez et al. 2006). The pseudo code for the eSS can be found in Egea et al. (2009).

Although SS is similar to GAs, there are crucial differences which should be emphasized. Namely, GA approaches are based on the idea of choosing parents randomly and further on applying random crossover and mutation to create a new generation (Gen and Cheng 2000). Contrarily, SS does not emphasize randomization. It does not use crossover and mutation but applies a solution combination method that operates among population members. The approach is designed to apply strategic responses, both deterministic and probabilistic, that take account of evaluations and history (Rodriguez-Fernandez et al. 2006). New solutions are generated using a systematic (partial random) combination rather than a fully random solution (Remli et al. 2017).

The eSS algorithm which we propose to implement in our study aims to find the balance between intensification (local search) and diversification (global search) using a small population size, more search directions, and an intensification mechanism in the global phase, which exploits the promising directions defined by a pair of solutions in the reference set (Egea et al. 2009). Therefore, eSS stands out for its good balance between robustness and efficiency in the global phase, and couples with a local search procedure to accelerate the convergence to optimal solutions (Penas et al. 2017). The eSS has proved to be an efficient metaheuristic in solving complex-process optimization problems from different fields, providing a good compromise between diversification (exploration by global search) and intensification (local search) (Otero-Muras and Banga 2014).

4 Proposed Approach: Hybrid Enhanced Scatter Search—Composite I-Distance Indicator (eSS-CIDI) Optimization Approach

This study aims to find a set of data-driven weights which creates the most stable composite indicator. The stability of a composite indicator, observed through the prism of its weighting scheme, is measured through the standard deviation of relative contributions of indicators. Therefore, we aim to minimize the sum of standard deviations of relative contributions of indicators which constitute the composite indicator. The goal is to adjust the weights assigned to composite indicator so as to achieve high overall composite indicator stability. However, if no constraints to each weight are imposed, the model would assign weight to just one indicator, the indicator with the minimum standard deviation. Accordingly, we impose weight restrictions using the previously introduced m out of n bootstrap CIDI without replacement with p replications. To solve the proposed optimization model, we propose the eSS.

The three-step algorithm which searches for the weights that create the most stable composite indicator via constrained optimization is as follows:

-

1.

Conduct the m out of n bootstrap CIDI without replacement with p replications. The suggested value of the parameter m is \( 0.632 \cdot n \), where n is the number of the entities ranked by the composite indicator. The suggested number of replications (p) is 1000.

-

2.

Define the weight constraints. Obtain the min and max CIDI weights of each indicator following the bootstrap CIDI procedure.

-

3.

Solve the optimization model. Use the min and max CIDI weights as constraints of the optimization model. To solve the model, we propose the eSS metaheuristic.

The issue which arises when applying optimization models relates to model constraints. Namely, as previously explained, if no constraints are assigned, the optimization model would assign weight to just one indicator (in case of BoD) or all entities would be efficient or close to efficient (in case of DEA). To conduct the eSS (Step 3), bound constraints for the decision variables must be defined.

Among various approaches for determining such bounds, the CIDI approach stood out as is was employed with success to constraint BoD and DEA models. Namely, Maricic et al. (2016) created weight constraints for the BoD model around CIDI weights. On the other side, Radojicic et al. (2018) used bootstrap CIDI to create constraints for the DEA model. Following the example of good practice, herein we use the m out of n bootstrap CIDI without replacement with p replications to create weight bounds for the optimization model (Step 1). These bounds are defined as the minimum and maximum weight assigned using the bootstrap CIDI (Step 2). Such an approach allows us to create a wide enough interval which will cover all bootstrap CIDI weights.

The proposed optimization approach is presented in Fig. 1.

The objective function of the optimization problem is given as follows:

Subject to

where \( \sigma_{{v_{i} }} \) is the standard deviation of the relative contribution of indicator i, \( i \in I \), in the composite indicator of entities \( e, e \in E \), computed as:

Next, \( v_{ie} \) is the relative contribution of indicator i to the entity e, \( w_{\hbox{max} i} \) and \( w_{\hbox{min} i} \) are the maximum and minimum weight of indicator i obtained after bootstrap CIDI. Alongside the upper and lower constraints (Eq. 5), we added the constraint that the sum of assigned weights must be one (Eq. 6). Finally, the weights must be greater or equal to 0 (Eq. 7).

4.1 Discussion on the Proposed Optimization Approach

A key ‘managerial insight’ of the eSS-CIDI approach is that it allows the composite indicator creators to be certain they have created a stable and robust composite indicator considering the chosen weight constraints. Namely, when choosing the weighting scheme, researchers are given a wide range of weighting approaches to choose from. Nevertheless, there is no guarantee that they will choose the most stable one. Furthermore, our approach provides an opportunity to improve the stability of a devised composite indicator. Besides suggesting new weights, the eSS-CIDI approach could be used to assess and inspect the official weighting scheme. The deviations in weights, as well as overall composite indicator values and ranks, could have valuable policy implications as composite indicators are often used to define strategies and policies and act as guiding lights (Dialga and Thi Hang Giang 2017). Therefore, the eSS-CIDI approach could be of use to policymakers, especially as the optimized weights are rounded to three decimal places which makes them easier to interpret and present to the wider public (Cole 2015a, b).

One of the prominent contributions of our method is that it is a data-driven weight optimization approach which builds upon methods that were verified in various applications (Dobrota et al. 2016; Egea et al. 2009; Nevitt and Hancock 2001). Furthermore, the algorithm provides a single solution, so the researcher does not need to choose between several solutions. Finally, it enables the index creators to be sure that, given the constraints, their metric will have the highest stability.

The question which arises is how we measure stability in the eSS-CIDI approach. To measure the stability of the composite indicator we used the standard deviation of relative contributions (\( \sigma_{{v_{i} }} \)). Namely, as shown in Sect. 3.1, relative contributions indicate the prevalence of an indicator in the overall composite indicator. If the values of relative contribution of indicator i for all observed entities e, \( e \in E \), vary, therefore, the values of composite indicator of all observed entities are sensitive to the weight assigned to indicator i. To measure the stability of the impact of an indicator i we used the standard deviation of its relative contribution. The smaller \( \sigma_{{v_{i} }} \) of indicator i indicates that the overall composite indicator values are stable when it comes to the impact of indicator i. Therefore, our idea is to minimize the sum of standard deviations of relative contributions through weights and thus minimize the overall indicator volatility. The proposed approach leads to maximization of rank stability and robustness, all observed through the prism of weight uncertainty.

Next, we provide comparison of the eSS-CIDI approach with related weighting approaches (Table 2). The eSS-CIDI approach like CIDI and PCA does not assign entity-specific weights. Namely, BoD and DEA assign a specific weighting scheme to each observed entity (Amado et al. 2016; Cherchye et al. 2007). The criteria for which the proposed approach stands out is because it takes into account the stability of the created composite indicator while assigning weights. For all other observed weighting approaches, the uncertainty analysis for the weighting scheme can be conducted, but after the weights have been assigned. The eSS-CIDI approach is an approach based on optimization, so it is, as BoD and DEA, based on an objective function.

Additionally, we compare the proposed eSS-CIDI to similar approaches which aim to improve or measure the stability of the composite indicator. Compared to the approach of Foster et al. (2013), the eSS-CIDI provides the decision maker with a vector of most stabile weights, while their approach provides a measure of the stability of an initial vector of weights. Their approach gives a measure of robustness of an initial or official weighting scheme, while it does not suggest a vector of weights which will be more stable. The same accounts for the approach of Permanyer (2011), whereas his approach to measuring the robustness of an initial vector of weights differs as it observes the distance from equality. The approach of Cherchye et al. (2008b) is based on the rank robustness measured through Lorenz dominance. Therefore, their results do not produce an overall composite composite indicator value, whereas they provide pairwise dominance results. The eSS-CIDI, on the other hand, provides an overall composite indicator, but it does not take into account pairwise comparisons. Paruolo et al. (2013) proposed an approach based on the actual effect of the weights to the overall metric, measured through the ‘main effect’. The eSS-CIDI is in a way similar as it measures the proportional impact of the weighted indicator in the overall composite indicator value through relative contributions. The theoretical approach of the weighting approach of Becker et al. (2017) resembles the eSS-CIDI. They aimed to optimize weights so as the impact of weights measured through the ‘main effect’ fits the pre-specified importance. In the eSS-CIDI also an optimization approach is used, but the weights are to fit the bootstrap-CIDI constraints. The provided comparison shows that the eSS-CIDI provides a continuation of the resent research in this area.

Lastly, the objective of the proposed eSS-CIDI weighting approach should be observed through the prism of policymakers. Although the objective of the eSS-CIDI is understandable to an analyst, the question rises will its objective be understood by policymakers or government representatives or field experts who are working on the process of creating a composite indicator. Namely, will they choose the eSS-CIDI weighting scheme over equal weighting or a simpler data-driven weighting approach? In an attempt to answer the question the pros and cons of this approach to the process of policymaking should be analysed. One of the first benefits of the proposed approach is that the uncertainty analysis is automatically performed. Namely, Freudenberg (2003) states that this step is usually skipped in the process of composite indicator creation. By applying the eSS-CIDI the policymakers can be sure that this step will be conducted. Another benefit is that the eSS-CIDI proposes a weighting scheme which creates a most stable indicator considering predefined weight constraints. This also reduces the possibility of critique of the composite indicator methodology which the policymakers wish to evade. Namely, the weighting scheme is often criticized step in the process of composite indicator creation (e.g. Amado et al. 2016; Despotis 2005; Dobrota et al. 2016; Jeremic et al. 2011). On the other side, there are potential drawbacks of the proposed approach. First is that the approach is not so easy understandable for ones who have no basic knowledge of statistics and operational research, which might make it difficult to adopt by wider public. Second is that the obtained weights of indicators might significantly differ from the socially acceptable weights or weights proposed by experts. Nevertheless, according to Saltelli (2007) no matter how good the statistical and theoretical background of a composite indicator is, its acceptance relies on peer acceptance. The same can be said for the weighting methodology. We believe that this discussion might persuade the policymakers to put confidence in our approach.

5 Case Studies

This section shows the application of the hybrid eSS-CIDI approach to two existing composite indicators. Herein we chose the Academic Ranking of World Universities (ARWU) and the Networked Readiness Index (NRI). We chose two composite indicators on which to implement the suggested weighting scheme because they belong to two different fields of study; one is in the field of higher education, and the other in the field of information and communication technology (ICT). Another, more important reason, is the official weighting scheme. In case of ARWU, the weighting scheme is based on expert opinion and the assigned weights are not equal, while in case of NRI, the weights are equal. Finally, the chosen indicators have different structures. Namely, NRI has a three-level structure, while ARWU is based solely on indicators.

5.1 Academic Ranking of World Universities (ARWU) 2017

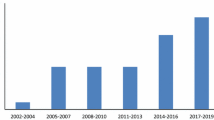

Higher education is just one of the spheres of life that has seen the introduction of quantitative metrics into its assessment through university rankings (Daraio and Bonaccorsi 2017). University rankings came into the spotlight after US News and World Report began providing rankings of US universities in 1983, while they proliferated after 2003 when the Academic Ranking of World Universities (ARWU) has been published (Moed 2017). Therefore, the general opinion is that the university rankings are here to stay (Hazelkorn 2007). The university ranking that attracted our attention was the ARWU ranking published by the Institute of Higher Education of the Jiao Tong University in Shanghai which ranks the world’s top 1000 universities (ShanghaiRanking 2018).

The ranking itself is based on six indicators which aim to rank institutions according to academic and research performance (Liu and Cheng 2005). The first two indicators, Alumni and Award, are related to the number of alumni/staff who won the Nobel Prize and/or Fields Medal. The following three indicators are bibliometric. The indicator HiCi, aims to measure the number of staff who are classified as highly cited researchers by Clarivate Analytics. The next two indicators measure the research output. The N&S, on one hand, indicates the number of papers published in Nature and Science in the last 5 years, while the PUB indicates the total number of papers indexed by the Science Citation Index-Expanded (SCIe) and the Social Science Citation Index (Social SCI) in the previous year. Both indicators take into account only publications of ‘Article’ type (ShanghaiRanking 2018). Finally, the PCP attempts to measure the academic performance of an institution by taking into account the number of full-time equivalent academic staff.

Taking a closer look at the ARWU methodology, to calculate the score of the ARWU ranking first raw indicator values are normalized. The normalization method used is the distance to a referent entity (Nardo et al. 2005), which is in this case the university with the highest indicator value. The best performing university is given a score of 100 and becomes the benchmark against which the scores of other universities are measured. In the next step, the normalized scores are weighted accordingly and aggregated using the linear sum (Dehon et al. 2010). The list of the ARWU indicators and their respective weights are given in Table 3.

Since it first appeared in 2003, the ARWU ranking has attracted both positive feedback and rigorous critique. Academics specialized in data analysis, bibliometrics, and composite indicators have tried to attract the attention of the ranking creators and the broader public to some of the methodological flaws the ARWU ranking faces. The methodological issue which is particularly significant for this research is the weighting scheme of the ARWU indicators. Namely, Dehon et al. (2010) scrutinized the ARWU and showed that its results are sensitive to the relative weight attributed to each of the indicators. Their research is also notable because it provided insight that the university rankings, as well as ARWU, are sensitive to weighting scheme alterations.

In the presented case study, we initially chose to observe the top 100 universities for the year 2017. The data was publicly available on the official site of the ARWU ranking (ShanghaiRanking 2017). As the indicators Award and Alumni are related to Nobel Prize and Fields medals winners, which not many universities can boast, many universities have zero values for these indicators. The high percentage of zero values of these indicators is seen an issue of the ARWU ranking (Docampo and Cram 2015; Maricic et al. 2017). In general, high per cent of zero values in the data can greatly complicate any statistical analysis or interpretation of results (Zuur et al. 2010). In some cases, the high percentage of zero values is inherent (e.g. murder rate), but otherwise it indicates poor data quality (Yang et al. 2015). Therefore, to improve the quality of the observed ranking, all universities within the top 100 which had zero value for any indicator were removed. In our analysis, we included 74 world-class universities within the top 100 for which all six indicator values are above 0.

According to the suggested algorithm, the first step was to perform the bootstrap CIDI and determine the allowed weight intervals needed for the eSS optimization. We performed 1000 iterations with a sample size of 47 (\( 0.632 \cdot 74 = 46.768 \approx 47 \)). The obtained intervals are also presented in Table 3. The original weights of indicators Award and N&S are covered by the interval, while the suggested weight intervals for HiCi and PUB are below the officially assigned weight. For example, PUB was assigned 20% while the bootstrap CIDI suggested weights from 7.5 to 15.4%. On the other hand, the latter two weight intervals were above the original weight. For example, the weight of the indicator Alumni, officially assigned a weight of 10%, has a suggested weight from the interval ranging from 15.2 to 19.1%. The obtained intervals give more significance to prestigious awards of alumni (Alumni) and per capita performance (PCP), while relegating the number of highly cited researchers (HiCi) and the level of publications (PUB). Bootstrap CIDI intervals are more oriented towards excellence measured through Nobel Prizes and Fields Medals.

In the following step, the eSS was performed and the results are given in Table 3. As it can be observed, for the first three indicators, the suggested weights are the lower limits of the bootstrap CIDI intervals. The weights of N&S and PCP are set as the upper limit of the bootstrap CIDI intervals. Interestingly, only one weight did not achieve the upper or lower bound of the constraint interval, the weight of PUB.

To evaluate the obtained weighting scheme, we compared the sum of standard deviations of relative contributions of the optimized, official, equal, and the CIDI weighting schemeFootnote 1 (Table 4). The sum of standard deviations of relative contributions of the official weighting scheme is 0.253385, while the sum of standard deviation of relative contributions of the eSS-CIDI weighting scheme is 0.252578. Compared with the equal weighting scheme and with the CIDI weighting scheme, the optimized weighting scheme again showed more stable results. Also, it is of interest to analyse the standard deviation of relative contributions. Comparing the official and the optimized weighting schemes, the indicators which were assigned more weight than the official have a greater standard deviation of relative contributions. Bearing in mind that university rankings, both international and national, should be stable and not subject to sharp fluctuations in the overall scores (Shattock 2017), the new eSS-CIDI weighting scheme might be very useful.

Finally, we apply the novel weighting scheme. The changes in the ARWU ranks as the result of the application of optimal weights for a selected number of universities are given in Fig. 2. We can observe that the rank difference ranges from + 9 places (University of Basel) to − 9 places (Rutgers, The State University of New Jersey—New Brunswick). Most commonly universities dropped one ranking position (23.0% of universities), while the second most common case was no rank change (17.6% of universities). For example, the Karolinska Institute and University of Munich improved their rank by 5 places. One of the reasons for such rank advancement is their high values for the indicator PCP, 53.3 and 51.7, respectively, whose importance for the ranking procedure rose. On the other side of Fig. 2 we can see that King’s College London and University of British Columbia dropped by 5 ranking places. Their overall score did not change dramatically, but other universities benefited more from the new weighting scheme and increased their overall score.