Abstract

The fractional Ornstein–Uhlenbeck process of the second kind \((\text {fOU}_{2})\) is a solution of the Langevin equation \(\mathrm {d}X_t = -\theta X_t\,\mathrm {d}t+\mathrm {d}Y_t^{(1)}, \ \theta >0\), with a Gaussian driving noise \( Y_t^{(1)} := \int ^t_0 e^{-s} \,\mathrm {d}B_{a_s}\), where \( a_t= H e^{\frac{t}{H}}\) and \(B\) is a fractional Brownian motion with Hurst parameter \(H \in (0,1)\). In this article we consider the case \(H>\frac{1}{2}\) and, using the ergodicity of the \(\text {fOU}_{2}\) process, construct consistent estimators of the drift parameter \(\theta \) based on discrete observations in two cases: \((i)\) the Hurst parameter \(H\) is known and \((ii)\) the Hurst parameter \(H\) is unknown. Moreover, using Malliavin calculus techniques, we prove central limit theorems for our estimators which are valid for the whole range \(H \in (\frac{1}{2},1)\).

1 Introduction

1.1 Motivation and overview

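Assume \(B=\{B_t\}_{ t\ge 0}\) is a fractional Brownian motion with Hurst parameter \(H \in (0,1)\), i.e. a continuous, centered Gaussian process with covariance function

$$\begin{aligned} R_H(t,s) = \mathbb {E}(B_t B_s) = \frac{1}{2}\left( t^{2H} + s^{2H} - |t-s|^{2H}\right) , \quad s,t \ge 0. \end{aligned}$$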

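Consider the following Langevin equation with a drift parameter \(\theta >0\) and a driving noise \(N\):

$$\begin{aligned} \mathrm {d}X_t = -\theta X_t\,\mathrm {d}t + \mathrm {d}N_t, \quad t \ge 0. \qquad (1.1) \end{aligned}$$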

When the driving noise \(N=B\) is a fractional Brownian motion, a solution of the Langevin equation (1.1) is called the fractional Ornstein–Uhlenbeck process of the first kind, \((\text {fOU}_{1})\) in short. The fractional Ornstein–Uhlenbeck process of the second kind is a solution of the Langevin equation (1.1) with a driving noise \(N_t = Y^{(1)}_t := \int ^t_0 e^{-s} \,\mathrm {d}B_{a_s}\), where \(a_t= H e^{\frac{t}{H}}\). The terms “of the first kind” and “of the second kind” are taken from Kaarakka and Salminen (2011). It is well known that the classical Ornstein–Uhlenbeck process, i.e. the case where the driving noise \(N=W\) is a standard Brownian motion, has the same finite dimensional distributions as the Lamperti transformation (see (2.6) for the definition) of Brownian motion. However, when one replaces Brownian motion with a fractional Brownian motion, the solution of the Langevin equation (1.1) differs from the process obtained by the Lamperti transformation of a fractional Brownian motion, see Cheridito et al. (2003), Kaarakka and Salminen (2011). The motivation behind introducing the noise process \(N=Y^{(1)}\) is related to the Lamperti transformation of fractional Brownian motion. We refer to Subsection 2.2.2 or Kaarakka and Salminen (2011, Sect. 3) for more details.
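To make the driving noise \(Y^{(1)}\) concrete, the following is a minimal simulation sketch and not a method taken from the paper. It assumes the standard covariance \(\frac{1}{2}(t^{2H}+s^{2H}-|t-s|^{2H})\) of fractional Brownian motion, samples \(B\) exactly at the time-changed points \(a_t = He^{t/H}\) through a Cholesky factorization, and then applies a plain Euler step to \(\mathrm {d}X_t = -\theta X_t\,\mathrm {d}t + e^{-t}\,\mathrm {d}B_{a_t}\) with \(X_0=0\). All function names and parameter values are hypothetical.

```python
import math
import random


def fbm_cov(s, t, hurst):
    """Covariance of fractional Brownian motion: 0.5*(s^2H + t^2H - |t-s|^2H)."""
    h2 = 2.0 * hurst
    return 0.5 * (s ** h2 + t ** h2 - abs(t - s) ** h2)


def cholesky(matrix):
    """Lower-triangular Cholesky factor of a symmetric positive definite matrix."""
    n = len(matrix)
    low = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            acc = matrix[i][j] - sum(low[i][k] * low[j][k] for k in range(j))
            if i == j:
                low[i][j] = math.sqrt(max(acc, 1e-14))  # guard against round-off
            else:
                low[i][j] = acc / low[j][j]
    return low


def simulate_fou2(theta, hurst, horizon, n_steps, seed=0):
    """Euler sketch of dX = -theta*X dt + e^{-t} dB_{a_t}, X_0 = 0, a_t = H e^{t/H}."""
    rng = random.Random(seed)
    dt = horizon / n_steps
    grid = [k * dt for k in range(n_steps + 1)]
    clock = [hurst * math.exp(t / hurst) for t in grid]  # time change a_t
    cov = [[fbm_cov(u, v, hurst) for v in clock] for u in clock]
    low = cholesky(cov)
    normals = [rng.gauss(0.0, 1.0) for _ in clock]
    # Exact Gaussian sample of B at the time-changed points.
    fbm = [sum(low[i][k] * normals[k] for k in range(i + 1)) for i in range(len(clock))]
    path = [0.0]
    for k in range(n_steps):
        noise = math.exp(-grid[k]) * (fbm[k + 1] - fbm[k])  # increment of Y^(1)
        path.append(path[-1] - theta * path[-1] * dt + noise)
    return grid, path


grid, path = simulate_fou2(theta=1.5, hurst=0.7, horizon=2.0, n_steps=100)
```

The Cholesky approach costs cubic time in the number of grid points and the clock \(a_t\) grows exponentially, so this sketch is only meant for short horizons.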

Statistical models with fractional processes usually exhibit short memory for \(H<\frac{1}{2}\) and long memory for \(H>\frac{1}{2}\), and this is true for \(\text {fOU}_{1}\) processes. In contrast, the \(\text {fOU}_{2}\) process always exhibits short range dependence regardless of the value of the Hurst parameter \(H\). This phenomenon makes \(\text {fOU}_{2}\) an interesting process for modelling in many different disciplines. For example, for applications of short memory processes in econometrics and in modelling the extremes of time series, see Mynbaev (2011) and Chavez-Demoulin and Davison (2012), respectively.

In this article we use the ergodicity of the \(\text {fOU}_{2}\) process to construct consistent estimators of the drift parameter \(\theta \) based on observations of the process at discrete times. More precisely, assume that the process is observed at discrete times \(0, \Delta _N, 2\Delta _N, \ldots , N\Delta _N\) and let \(T_N = N\Delta _N\) denote the length of the observation window. We show that:

- (i) when \(H\) is known one can construct a strongly consistent estimator \(\widehat{\theta }\), introduced in Theorem 3.2, with asymptotic normality property under the mesh conditions

$$\begin{aligned} T_N \rightarrow \infty , \quad \text {and} \quad N\Delta _{N}^{2} \rightarrow 0 \end{aligned}$$

with arbitrary mesh \(\Delta _N\) such that \(\Delta _N \rightarrow 0\) as \(N\) tends to infinity.

- (ii) when \(H\) is unknown one can construct another strongly consistent estimator \(\widetilde{\theta }\), introduced in Theorem 5.1, with asymptotic normality property under the restricted mesh condition

$$\begin{aligned} \Delta _N = N^{-\alpha }, \quad \text {with} \quad \alpha \in \left( \frac{1}{2}, \frac{1}{4H-2}\wedge 1\right) . \end{aligned}$$
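The two mesh regimes above can be explored numerically. The sketch below is an illustration only, with hypothetical helper names. It computes the admissible interval for \(\alpha \) in case (ii) and evaluates \(\Delta _N\), \(T_N = N^{1-\alpha }\) and \(N\Delta _N^2 = N^{1-2\alpha }\) for a power mesh, which shows why \(\alpha >\frac{1}{2}\) forces \(N\Delta _N^2 \rightarrow 0\) while \(\alpha <1\) keeps \(T_N \rightarrow \infty \).

```python
def alpha_range(hurst):
    """Open interval (1/2, min(1/(4H-2), 1)) of admissible exponents for H in (1/2, 1)."""
    if not 0.5 < hurst < 1.0:
        raise ValueError("this sketch assumes 1/2 < H < 1")
    return 0.5, min(1.0 / (4.0 * hurst - 2.0), 1.0)


def mesh_quantities(n_obs, alpha):
    """Return (Delta_N, T_N, N * Delta_N^2) for the power mesh Delta_N = N^(-alpha)."""
    delta = n_obs ** (-alpha)
    return delta, n_obs * delta, n_obs * delta ** 2


low, high = alpha_range(0.9)   # for H = 0.9 the upper end is 1/(4H-2), which is below 1
alpha = 0.5 * (low + high)     # any point strictly inside the interval would do
for n_obs in (10 ** 2, 10 ** 4, 10 ** 6):
    delta, window, n_delta_sq = mesh_quantities(n_obs, alpha)
    print(n_obs, delta, window, n_delta_sq)
```

For \(H < \frac{3}{4}\) the upper end of the interval is simply \(1\), since then \(\frac{1}{4H-2} > 1\).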

1.2 History and further motivations

Statistical inference for the drift parameter \(\theta \) based on data recorded from continuous or discrete trajectories of \(X\) is an interesting problem in mathematical statistics. In the case of diffusion processes driven by Brownian motion the problem is well studied (see e.g. Kutoyants (2004) and references therein). However, the estimation of the drift parameter becomes very challenging when the driving noise is a fractional process. This is mainly because fractional Brownian motion \(B\) with Hurst parameter \(H \ne \frac{1}{2}\) is neither a semimartingale nor a Markov process (we refer to the book Prakasa Rao (2010) for more details). In the case of the fractional Ornstein–Uhlenbeck process of the first kind, maximum likelihood \((\text {MLE})\) and least squares \((\text {LSE})\) estimators based on continuous observations of the process are considered in Kleptsyna and Le Breton (2002) and Hu and Nualart (2010), respectively. In this case it turns out that both MLE and LSE provide strongly consistent estimators. Moreover, the asymptotic normality of the MLE is shown in Bercu et al. (2011) for \(H > \frac{1}{2}\) and of the LSE in Hu and Nualart (2010) for \(H \in [\frac{1}{2}, \frac{3}{4})\). In the case of the fractional Ornstein–Uhlenbeck process of the second kind, Azmoodeh and Morlanes (2013) showed that the LSE is a consistent estimator using continuous observations. Moreover, they showed that a central limit theorem for the LSE holds for the whole range \(H > \frac{1}{2}\).

The main contribution of this paper is to provide strongly consistent estimators for the drift parameter \(\theta \) based on discrete observations of the process \(X\), together with CLTs obtained using the modern approach of Malliavin calculus for normal approximations (Nourdin and Peccati 2012). From a practical point of view, it is important to assume that the data are collected from the process \(X\) observed at discrete times. In addition to its applicability, this requirement makes the problem more delicate. The corresponding problem has already been addressed for the fractional Ornstein–Uhlenbeck process of the first kind. In fact, estimation of the drift parameter \(\theta \) for the \(\text {fOU}_{1}\) process with a discretization procedure of an integral transform is considered in Xiao et al. (2011) assuming that the Hurst parameter \(H\) is known. In the same setup, Brouste and Iacus (2012) introduced an estimation procedure that can be used to estimate both the drift parameter \(\theta \) and the Hurst parameter \(H\) based on discrete observations. In this paper, we also present a new estimation method that can be used to estimate the drift parameter \(\theta \) of the \(\text {fOU}_{2}\) process based on discrete observations when the Hurst parameter \(H\) is unknown (Theorem 5.1).

1.3 Plan

The paper is organized as follows. In Sect. 2 we give auxiliary facts on Malliavin calculus and fractional Ornstein–Uhlenbeck processes. Section 3 is devoted to estimation of the drift parameter when \(H\) is known. In Sect. 4 we briefly explain how the Hurst parameter \(H\) can be estimated from discrete observations. Section 5 deals with estimation of the drift parameter when \(H\) is unknown. Finally, some technical lemmas are collected in Appendix A.

2 Auxiliary facts

2.1 A brief review on Malliavin calculus

In this subsection we briefly introduce some basic facts on Malliavin calculus with respect to Gaussian processes needed in this paper. We also recall how Malliavin calculus can be used to obtain a central limit theorem for a sequence of multiple Wiener integrals. For more details on the topic, we refer to Alos et al. (2001), Nualart (2006), Nourdin and Peccati (2012). Let \(W\) be a Brownian motion and let \(G=\{ G_t \}_{t\in [0,T]}\) be a continuous centered Gaussian process of the form

$$\begin{aligned} G_t = \int _0^t K(t,s)\,\mathrm {d}W_s, \end{aligned}$$

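where the Volterra kernel \(K\), i.e. \(K(t,s)=0\) for all \(s>t\), satisfies \(\sup _{t \in [0,T]} \int _{0}^{t}K(t,s)^{2} \mathrm {d}s < \infty \). Moreover, we assume that for any \(s\) the function \(K(\cdot ,s)\) is of bounded variation on any interval \((u,T]\) for all \(u >s\). A typical example of this type of Gaussian processes is a fractional Brownian motion. It is known that for \(H>\frac{1}{2}\) the kernel takes the form

$$\begin{aligned} K_H(t,s) = c_H\, s^{\frac{1}{2}-H} \int _s^t (u-s)^{H-\frac{3}{2}} u^{H-\frac{1}{2}}\,\mathrm {d}u, \quad s<t, \end{aligned}$$

with the normalizing constant \(c_H = \left( \frac{H(2H-1)}{B(2-2H,H-\frac{1}{2})}\right) ^{\frac{1}{2}}\). Moreover, we have the following inverse relation

$$\begin{aligned} W_t = B\left( (K^{*}_{H})^{-1} \mathbf {1}_{[0,t]}\right) , \end{aligned}$$

where the operator \(K^{*}_{H}\) is defined as

$$\begin{aligned} (K^{*}_{H}\varphi )(s) = \int _s^T \varphi (t)\, \frac{\partial K_H}{\partial t}(t,s)\,\mathrm {d}t. \end{aligned}$$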

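Consider the set \(\mathcal {E}\) of all step functions on \([0,T]\). The Hilbert space \(\mathcal {H}\) associated to the process \(G\) is the closure of \(\mathcal {E}\) with respect to the inner product

$$\begin{aligned} \langle \mathbf {1}_{[0,t]}, \mathbf {1}_{[0,s]} \rangle _{\mathcal {H}} = R_G(t,s), \end{aligned}$$

where \(R_G(t,s)\) denotes the covariance function of \(G\). The mapping \(\mathbf {1}_{[0,t]} \mapsto G_t\) can be extended to an isometry between the Hilbert space \(\mathcal {H}\) and the Gaussian space \(\mathcal {H}_1\) associated with the process \(G\). Consider next the space \(\mathcal {S}\) of all smooth random variables of the form

$$\begin{aligned} F = f\left( G(\varphi _1), \ldots , G(\varphi _n)\right) , \quad \varphi _1,\ldots ,\varphi _n \in \mathcal {H}, \qquad (2.2) \end{aligned}$$

where \(f \in C_{b}^{\infty }(\mathbb {R}^n)\). For any smooth random variable \(F\) of the form (2.2), we define its Malliavin derivative \(D^{(G)}F= DF \) as an element of \(L^{2}(\Omega ;\mathcal {H})\) by

$$\begin{aligned} DF = \sum _{i=1}^{n} \partial _i f\left( G(\varphi _1), \ldots , G(\varphi _n)\right) \varphi _i. \end{aligned}$$

In particular, \(D G_t = \mathbf {1}_{[0,t]}\). We denote by \(\mathbb {D}^{1,2}_{G}= \mathbb {D}^{1,2}\) the space of all square integrable Malliavin differentiable random variables, defined as the closure of the set \(\mathcal {S}\) of smooth random variables with respect to the norm

$$\begin{aligned} \Vert F \Vert _{1,2}^{2} = \mathbb {E}|F|^{2} + \mathbb {E}\Vert DF \Vert _{\mathcal {H}}^{2}. \end{aligned}$$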

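Consider next a linear operator \(K^{*}\) from \(\mathcal {E}\) to \(L^{2}[0,T]\) defined by

$$\begin{aligned} (K^{*}\varphi )(s) = \varphi (s)K(T,s) + \int _s^T \left[ \varphi (t) - \varphi (s)\right] K(\mathrm {d}t,s), \end{aligned}$$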

where \(K(\mathrm {d}t,s)\) stands for the measure associated to the bounded variation function \(K(\cdot ,s)\). The Hilbert space \(\mathcal {H}\) generated by covariance function of the Gaussian process \(G\) can be represented as \(\mathcal {H} = (K^{*})^{-1} (L^{2}[0,T])\) and \(\mathbb {D}^{1,2}_{G}(\mathcal {H}) =(K^{*})^{-1} \big (\mathbb {D}^{1,2}_{W}(L^{2}[0,T])\big )\). Furthermore, for any \(n \ge 1\) let \(\mathscr {H}_n\) be the \(n\)th Wiener chaos of \(G\), i.e. the closed linear subspace of \(L^2 (\Omega )\) generated by the random variables \(\{ H_n \left( G(\varphi ) \right) ,\ \varphi \in \mathcal {H}, \ \Vert \varphi \Vert _{\mathcal {H}} = 1\}\) where \(H_n\) is the \(n\)th Hermite polynomial. It is well known that the mapping \(I_{n}^{G}(\varphi ^{\otimes n}) = n! H_n \left( G(\varphi )\right) \) provides a linear isometry between the symmetric tensor product \(\mathcal {H}^{\odot n}\) and the space \(\mathscr {H}_n\). The random variables \(I_{n}^{G}(\varphi ^{\otimes n})\) are called multiple Wiener integrals of order \(n\) with respect to the Gaussian process \(G\).

Let \(\mathcal {N}(0,\sigma ^2)\) denote the Gaussian distribution with zero mean and variance \(\sigma ^2\). We use notation \(\overset{\text {law}}{\longrightarrow }\) for convergence in distribution. The next proposition provides a central limit theorem for a sequence of multiple Wiener integrals of fixed order.

Proposition 2.1

Nualart and Ortiz-Latorre (2008) Let \(\{F_n\}_{n \ge 1}\) be a sequence of random variables in the \(q\)th Wiener chaos \(\mathscr {H}_q\) with \(q \ge 2\) such that \(\lim _{n \rightarrow \infty } \mathbb {E}(F_n ^2) = \sigma ^2\). Then the following statements are equivalent:

- (i) \(F_n \overset{\text {law}}{\longrightarrow }\mathcal {N}(0,\sigma ^2)\) as \(n\) tends to infinity.

- (ii) \( \Vert DF_n \Vert ^{2}_{\mathcal {H}}\) converges in \(L^{2}(\Omega )\) to \(q \sigma ^{2}\) as \(n\) tends to infinity.
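As a sanity check of the criterion in Proposition 2.1, consider a toy example that is not taken from the paper: for i.i.d. standard Gaussian \(\xi _1,\ldots ,\xi _n\), the variable \(F_n = (2n)^{-1/2}\sum _{i}(\xi _i^2-1)\) lies in the second Wiener chaos with \(\mathbb {E}F_n^2 = 1\), and \(\Vert DF_n\Vert ^2 = \frac{2}{n}\sum _i \xi _i^2\), which should concentrate around \(q\sigma ^2 = 2\). The Monte Carlo sketch below, with hypothetical names and sample sizes, illustrates this.

```python
import random


def chaos_sample(n_terms, rng):
    """One draw of (F_n, ||DF_n||^2) for F_n = (2n)^(-1/2) * sum_i (xi_i^2 - 1)."""
    xis = [rng.gauss(0.0, 1.0) for _ in range(n_terms)]
    f_n = sum(x * x - 1.0 for x in xis) / (2.0 * n_terms) ** 0.5
    # DF_n = (2/n)^(1/2) * sum_i xi_i e_i, so its squared norm is (2/n) * sum_i xi_i^2.
    deriv_norm_sq = 2.0 * sum(x * x for x in xis) / n_terms
    return f_n, deriv_norm_sq


rng = random.Random(1)
draws = [chaos_sample(400, rng) for _ in range(1000)]
# Empirical second moment of F_n; the exact value is sigma^2 = 1.
variance_f = sum(f * f for f, _ in draws) / len(draws)
# Empirical mean squared distance of ||DF_n||^2 from q * sigma^2 = 2; exact value 8/n.
mean_sq_gap = sum((d - 2.0) ** 2 for _, d in draws) / len(draws)
print(variance_f, mean_sq_gap)
```

Increasing `n_terms` should shrink the second printed quantity, in line with the \(L^2(\Omega )\) convergence in statement (ii).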

2.2 Fractional Ornstein–Uhlenbeck processes

In this subsection we briefly introduce fractional Ornstein–Uhlenbeck processes although we mostly focus on fractional Ornstein–Uhlenbeck process of the second kind for which we also provide some new results. Our main references are Cheridito et al. (2003), Kaarakka and Salminen (2011).

2.2.1 Fractional Ornstein–Uhlenbeck processes of the first kind

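Let \(B=\{B_t\}_{ t\ge 0}\) be a fractional Brownian motion with Hurst parameter \(H \in (0,1)\). To obtain a fractional Ornstein–Uhlenbeck process, consider the following Langevin equation

$$\begin{aligned} \mathrm {d}U_t = -\theta U_t\,\mathrm {d}t + \mathrm {d}B_t, \quad U_0 = \xi _0, \quad \theta > 0. \qquad (2.3) \end{aligned}$$

The solution of the SDE (2.3) can be expressed as

$$\begin{aligned} U^{(H,\xi _0)}_t = e^{-\theta t}\left( \xi _0 + \int _0^t e^{\theta s}\,\mathrm {d}B_s\right) . \qquad (2.4) \end{aligned}$$

Notice that the stochastic integral can be understood as a pathwise Riemann–Stieltjes integral or, equivalently, as a Wiener integral. Let \(\hat{B}\) denote a two sided fractional Brownian motion. The special selection

$$\begin{aligned} \xi _0 := \int _{-\infty }^{0} e^{\theta s}\,\mathrm {d}\hat{B}_s \end{aligned}$$

leads to a unique (in the sense of finite dimensional distributions) stationary Gaussian process \(U^{(H)}\) of the form

$$\begin{aligned} U^{(H)}_t = e^{-\theta t}\int _{-\infty }^{t} e^{\theta s}\,\mathrm {d}\hat{B}_s. \qquad (2.5) \end{aligned}$$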

Definition 2.1

Kaarakka and Salminen (2011) The process \(U^{(H,\xi _0)}\) given by (2.4) is called a fractional Ornstein–Uhlenbeck process of the first kind with initial value \(\xi _0\). The process \(U^{(H)}\) defined in (2.5) is called a stationary fractional Ornstein–Uhlenbeck process of the first kind.

Remark 2.1

It is shown in Cheridito et al. (2003) that the covariance function of the stationary process \(U^{(H)}\) decays like a power function, and hence \(U^{(H)}\) is ergodic. Furthermore, for \(H \in (\frac{1}{2},1)\) the process \(U^{(H)}\) exhibits long range dependence.

2.2.2 Fractional Ornstein–Uhlenbeck processes of the second kind

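Now we define a new stationary Gaussian process \(X^{(\alpha )}\) by means of the Lamperti transformation of the fractional Brownian motion \(B\). More precisely, we set

$$\begin{aligned} X^{(\alpha )}_t := e^{-\alpha t} B_{a_t}, \quad t \in \mathbb {R}, \qquad (2.6) \end{aligned}$$

where \(\alpha >0\) and \(a_t= \frac{H}{\alpha }e^{\frac{\alpha t}{H}}\). We aim to represent the process \(X^{(\alpha )}\) as a solution to the Langevin equation. To this end, we consider the process \(Y^{(\alpha )}\) defined via

$$\begin{aligned} Y^{(\alpha )}_t := \int _0^t e^{-\alpha s}\,\mathrm {d}B_{a_s}, \quad t \ge 0, \end{aligned}$$

where again the stochastic integral can be understood as a pathwise Riemann–Stieltjes integral as well as a Wiener integral. Using the self-similarity property of fractional Brownian motion one can see (Kaarakka and Salminen 2011, Proposition 6) that the process \(Y^{(\alpha )}\) satisfies the scaling property

$$\begin{aligned} \left\{ Y^{(\alpha )}_{t/\alpha }\right\} _{t \ge 0} \mathop {=}\limits ^{\text {f.d.d}} \left\{ \alpha ^{-H} Y^{(1)}_{t}\right\} _{t \ge 0}, \qquad (2.7) \end{aligned}$$

where \(\mathop {=}\limits ^{\text {f.d.d}}\) stands for equality in finite dimensional distributions. Using \(Y^{(\alpha )}\), the process \( X^{(\alpha )}\) can be viewed as a solution of the Langevin equation

$$\begin{aligned} \mathrm {d}X^{(\alpha )}_t = -\alpha X^{(\alpha )}_t\,\mathrm {d}t + \mathrm {d}Y^{(\alpha )}_t \end{aligned}$$

with random initial value \(X_0^{(\alpha )}=B_{a_0} = B_{H/\alpha }\sim \mathcal {N}(0, (\frac{H}{\alpha })^{2H})\). Taking into account the scaling property (2.7), we consider the following Langevin equation

$$\begin{aligned} \mathrm {d}X_t = -\theta X_t\,\mathrm {d}t + \mathrm {d}Y^{(1)}_t, \quad \theta > 0, \qquad (2.8) \end{aligned}$$

with \(Y^{(1)}\) as the driving noise. The solution of equation (2.8) is given by

$$\begin{aligned} X_t = e^{-\theta t}\left( X_0 + \int _0^t e^{\theta s}\,\mathrm {d}Y^{(1)}_s\right) = e^{-\theta t}\left( X_0 + \int _0^t e^{(\theta -1) s}\,\mathrm {d}B_{a_s}\right) , \qquad (2.9) \end{aligned}$$

with \(\alpha =1\) in \(a_t\). Moreover, the special selection \(X_0 = \int ^0_{-\infty } e^{(\theta -1) s} \,\mathrm {d}B_{a_s}\) for the initial value \(X_0\) leads to the following unique stationary Gaussian process

$$\begin{aligned} U_t = e^{-\theta t}\int _{-\infty }^{t} e^{(\theta -1) s}\,\mathrm {d}B_{a_s}. \qquad (2.10) \end{aligned}$$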

Definition 2.2

Kaarakka and Salminen (2011) The process \(X\) given by (2.9) is called the fractional Ornstein–Uhlenbeck process of the second kind with initial value \(X_0\). The process \(U\) defined in (2.10) is called the stationary fractional Ornstein–Uhlenbeck process of the second kind.

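For the rest of the paper we assume \(H> \frac{1}{2}\) and we take \(X_0=0\) in the general solution (2.9). Then the corresponding fractional Ornstein–Uhlenbeck process of the second kind takes the form

$$\begin{aligned} X_t = e^{-\theta t}\int _0^t e^{(\theta -1) s}\,\mathrm {d}B_{a_s}, \qquad (2.11) \end{aligned}$$

and we have the useful relation

$$\begin{aligned} U_t = X_t + e^{-\theta t}\xi , \quad \xi = \int _{-\infty }^{0} e^{(\theta -1) s}\,\mathrm {d}B_{a_s}. \qquad (2.12) \end{aligned}$$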

We start with a series of known results on fractional Ornstein–Uhlenbeck processes of the second kind required for our purposes.

Proposition 2.2

Azmoodeh and Morlanes (2013) Denote by \(\tilde{B}_t= B_{t+H} - B_{H}\) the shifted fractional Brownian motion and let \(X\) be the fractional Ornstein–Uhlenbeck process of the second kind given by (2.11). Then there exists a regular (see Alos et al. (2001), p. 767, for the definition) Volterra kernel \(\tilde{L}\) such that

where the Gaussian process \(\tilde{G}\) is given by

and \(\tilde{W}\) is a standard Brownian motion.

Remark 2.2

Notice that by a direct computation and applying Lemma 4.3 of Azmoodeh and Morlanes (2013), the inner product of the Hilbert space \(\tilde{\mathcal {H}}\) generated by the covariance function of the Gaussian process \(\tilde{G}\) is given by

where \(\varphi , \psi \in \tilde{\mathcal {H}}\) and \(\alpha _H=H(2H-1)\).

The following proposition plays an essential role in the paper. More precisely, we use it to construct our estimators for the drift parameter. In what follows, \(B(x,y)\) denotes the complete Beta function with parameters \(x\) and \(y\).

Proposition 2.3

Azmoodeh and Morlanes (2013) Let \(X\) be the fractional Ornstein–Uhlenbeck process of the second kind given by (2.11). Then

almost surely and in \(L^2(\Omega )\), where

Proposition 2.4

Kaarakka and Salminen (2011) The covariance function \(c\) of the stationary process \(U\) decays exponentially and hence \(U\) exhibits short range dependence. More precisely, we have

Let \(v_{U}\) be the variogram of the stationary process \(U\), i.e.

The following lemma tells us the behavior of the variogram function \(v_{U}\) near zero. For functions \(f\) and \(g\), the notation \(f(t) \sim g(t)\) as \(t \rightarrow 0\) means that \(f(t) = g(t) + r(t)\), where \(r(t)=o(g(t))\) as \(t \rightarrow 0\).

Lemma 2.1

The variogram function \(v_{U}\) satisfies

Proof

Due to Kaarakka and Salminen (2011, Proposition 3.11) there exists a constant \(C(H,\theta )= H(2H-1) H^{2H(1 - \theta )}\) such that

Denote the term inside parentheses by \(\Phi (t)\). Then with some direct computations, one can see that

Therefore,

where \(r(t)=o(t^{2H})\) as \(t \rightarrow 0^+\). Hence, by use of the mean value theorem, we infer that as \(t \rightarrow 0^+\) we have

Substituting (2.16) into (2.15) we obtain the claim.\(\square \)
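In practice the variogram has to be estimated from discrete data. The sketch below is a hypothetical helper, not part of the paper. It assumes the common normalization in which the variogram at a given lag is half the mean squared increment, and computes its empirical counterpart for an equally spaced series.

```python
def empirical_variogram(values, lag):
    """Half the mean squared increment of an equally spaced series at a given lag."""
    if not 1 <= lag < len(values):
        raise ValueError("lag must satisfy 1 <= lag < len(values)")
    diffs = [(values[i + lag] - values[i]) ** 2 for i in range(len(values) - lag)]
    return 0.5 * sum(diffs) / len(diffs)


# Deterministic toy series: increments at lag 1 are all 1, at lag 2 all 2.
series = [0.0, 1.0, 2.0, 3.0, 4.0]
v1 = empirical_variogram(series, 1)
v2 = empirical_variogram(series, 2)
```

For observations on a grid of width \(\Delta \), lag \(k\) corresponds to the time lag \(k\Delta \), so small lags probe the behavior near zero described in Lemma 2.1.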

The next lemma studies the regularity of sample paths of the fractional Ornstein–Uhlenbeck process of the second kind \(X\). Usually Hölder constants are almost surely finite random variables and depend on bounded time intervals where the process is considered. The next lemma gives more probabilistic information on Hölder constants.

Lemma 2.2

Let \(X\) be the fractional Ornstein–Uhlenbeck process of the second kind given by (2.11). Then for every interval \([S,T]\) and every \(0 < \epsilon < H\), there exist random variables \(Y_1=Y_1(H,\theta )\), \(Y_2 = Y_2(H,\theta ,[S,T])\), \(Y_3 = Y_3(H,\theta ,[S,T])\), and \(Y_4 = Y_4(H,\epsilon ,[S,T])\) such that for all \(s,t \in [S,T]\)

almost surely. Moreover,

- (i) \(Y_1<\infty \) almost surely,

- (ii) \(Y_k (H,\theta ,[S,T]) \mathop {=}\limits ^{\text {law}}Y_k(H,\theta ,[0,T-S]), \quad k= 2,3,\)

- (iii) \(Y_4(H,\epsilon ,[S,T]) \mathop {=}\limits ^{\text {law}}Y_4(H,\epsilon ,[0,T-S]).\)

Furthermore, all moments of random variables \(Y_2\), \(Y_3\) and \(Y_4\) are finite, and \(Y_2(H,\theta ,[0,T])\), \(Y_3(H,\theta ,[0,T])\) and \(Y_4(H,\epsilon ,[0,T])\) are increasing in \(T\).

Proof

Assume \(s<t\). By change of variables formula we obtain

where

Therefore

For the term \(I_1\), we obtain

where \(\theta |B_{a_0}|\) is an almost surely finite random variable. Similarly for the term \(I_3\) we get

Note next that \(Z\) is a differentiable process. Hence for the term \(I_4\) we get

Moreover, by using (2.12), we have

As a result we obtain

which implies

Collecting the estimates for \(I_1\), \(I_3\) and \(I_4\) we obtain

Put

and finally

Obviously the random variable \(Y_1\) fulfils property \((i)\). Notice also that \(U_t\) and \(e^{-t}B_{a_t}\) are continuous, stationary Gaussian processes, from which property \((ii)\) follows. Moreover, all moments of the supremum of a continuous Gaussian process on a compact interval are finite (see Lifshits (1995) for details on the supremum of a continuous Gaussian process). Hence it remains to consider the term \(I_2\). By Hölder continuity of the sample paths of fractional Brownian motion we obtain

To conclude, we obtain (see Nualart and Răşcanu (2002) and remark below) that the random variable \(C(\omega ,H,\epsilon ,[S,T])\) has all the moments and \(C(\omega ,H,\epsilon ,[S,T]) \mathop {=}\limits ^{\text {law}}C(\omega ,H,\epsilon ,[0,T-S])\). Now it is enough to take \(Y_4 = C(\omega ,H,\epsilon ,[S,T])\).\(\square \)

Remark 2.3

The exact form of the random variable \(C(\omega ,H,\epsilon ,[0,T])\) is given by

where \(C_{H,\epsilon }\) is a constant. Moreover, for all \(p \ge 1\) there exists a constant \(c_{\epsilon ,p}\) such that \(\mathbb {E}C(\omega ,H,\epsilon ,[0,T])^p\le c_{\epsilon ,p}T^{\epsilon p}\).

3 Estimation of the drift parameter when \(H\) is known

We start with the fact that the function \(\Psi \) is invertible. This fact allows us to construct an estimator for the drift parameter \(\theta \).

Lemma 3.1

The function \(\Psi :\mathbb {R}_+\rightarrow \mathbb {R}_+\) given by (2.14) is bijective, and hence invertible.

Proof

It is straightforward to see that \(\Psi \) is surjective. Hence the claim follows because for any fixed parameter \(y>0\), the complete Beta function \(B(x,y)\) is decreasing in the variable \(x\).\(\square \)

We continue with the following central limit theorem.

Theorem 3.1

Let \(X\) be the fractional Ornstein–Uhlenbeck process of the second kind given by (2.11). Then as \(T\) tends to infinity, we have

where the variance \(\sigma ^2\) is given by

The proof relies on two lemmas proved in the Appendix, where we also show that \(\sigma ^2<\infty \). The variance \(\sigma ^2\) is given as an iterated integral over \([0,\infty )^3\), and the given expression is probably its most compact form.

Proof of Theorem 3.1

For further use put

where the symmetric function \(\tilde{g}\) of two variables is given by

The notation \(I_2^{\tilde{G}}\) refers to the multiple Wiener integral of order 2 with respect to \(\tilde{G}\) introduced in Subsection 2.1. By Proposition 2.2 we have

Using the product formula for multiple Wiener integrals and Fubini’s theorem, we infer that

We get

Next we note that (see Azmoodeh and Morlanes 2013, Lemma 3.4)

Hence

and thus we obtain

Therefore it suffices to show that

as \(T\) tends to infinity. Now by Lemmas 5.1 and 5.2 presented in Appendix A we have

Hence the result follows by applying Proposition 2.1.\(\square \)

Now we are ready to state the main result of this section.

Theorem 3.2

Assume we observe the fractional Ornstein–Uhlenbeck process of the second kind \(X\) given by (2.11) at discrete time points \(\{t_k = k\Delta _N, k=0,1,\ldots ,N\}\) and put \(T_N = N\Delta _N\). Assume that \(\Delta _N \rightarrow 0, \ T_N\rightarrow \infty \) and \(N\Delta _N^{2}\rightarrow 0\) as \(N\) tends to infinity. Define

where \(\Psi ^{-1}\) is the inverse of the function \(\Psi \) given by (2.14). Then \(\widehat{\theta }\) is a strongly consistent estimator of the drift parameter \(\theta \) in the sense that as \(N\) tends to infinity, we have

almost surely. Moreover, we have

where

and \(\sigma ^2\) is given by (3.1).

Proof

Applying Lemma 2.2 we obtain for any \(\epsilon \in (0,H)\) that

We begin with the last term \(I_4\). Clearly we have

By Remark 2.3, we have \(\mathbb {E}Y_4(H,\epsilon ,[0,T_N])^p \le CT_N^{\epsilon p}\) for any \(p \ge 1\). Hence, thanks to Markov’s inequality, we obtain for every \(\delta >0\) that

Now by choosing \(\epsilon <\gamma \) and \(p\) large enough we obtain

Consequently, the Borel–Cantelli lemma implies that

almost surely for any \(\gamma >\epsilon \). Similarly, we obtain

almost surely for any \(\gamma >0\). Consequently, we get

almost surely for any \(\gamma > \epsilon \). Note also that by choosing \(\epsilon >0\) small enough we can choose \(\gamma \) in such a way that \(1+2\epsilon <1+2\gamma < \frac{3}{4} + \frac{H-\epsilon }{2}\). In particular, this is possible if \(\epsilon < \min \left\{ H-\frac{1}{2},\frac{H}{5}\right\} \). With this choice we have

almost surely, because the condition \(N\Delta _N^2\rightarrow 0\) and our choice of \(\gamma \) imply that

Treating \(I_1\), \(I_2\), and \(I_3\) in a similar way, we deduce that

almost surely. Moreover, we have convergence (3.6) by Proposition 2.3. To conclude the proof, we set \(\mu = \Psi (\theta )\) and use Taylor’s theorem to obtain

for some remainder function \(R_1(x)\) such that \(R_1(x)\rightarrow 0\) when \(x\rightarrow \Psi (\theta )\). Now continuity of \(\frac{\mathrm {d}}{\mathrm {d}\mu } \Psi ^{-1}\) and \(\Psi ^{-1}\) implies that \(R_1\) is also continuous. Hence the result follows by using (3.9), Theorem 3.1, Slutsky’s theorem and the fact that

\(\square \)

Remark 3.1

We remark that it is straightforward to construct a strongly consistent estimator without the mesh restriction \(\Delta _N \rightarrow 0\). However, in order to obtain a central limit theorem using Theorem 3.1, one needs to impose the condition \(\Delta _N \rightarrow 0\) to get the convergence

Remark 3.2

Note that we obtained a consistent estimator which depends on the inverse of the function \(\Psi \). However, to the best of our knowledge there exists no explicit formula for the inverse and hence the inverse has to be computed numerically.
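Because a strictly decreasing bijection of \((0,\infty )\) can be inverted by bracketing and bisection, the numerical step in Remark 3.2 is simple to sketch. The code below is an illustration under stated assumptions, not the paper's implementation: `toy_psi` is a HYPOTHETICAL stand-in for \(\Psi \) (formula (2.14) is not used), and `drift_estimate` assumes that the statistic fed into the inverse is the empirical second moment of the observations. All names are invented for this example.

```python
import math


def beta_fn(x, y):
    """Complete Beta function B(x, y), computed through log-gamma for stability."""
    return math.exp(math.lgamma(x) + math.lgamma(y) - math.lgamma(x + y))


def invert_decreasing(func, target, lo=1e-6, hi=1.0, tol=1e-10):
    """Solve func(x) = target by bisection for a continuous, strictly decreasing func."""
    while func(hi) > target:      # enlarge the bracket to the right
        hi *= 2.0
    while func(lo) < target:      # enlarge the bracket to the left
        lo *= 0.5
    while hi - lo > tol * max(1.0, hi):
        mid = 0.5 * (lo + hi)
        if func(mid) > target:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def toy_psi(theta, y=0.4):
    """HYPOTHETICAL stand-in for Psi (not formula (2.14)): decreasing, onto (0, inf)."""
    return beta_fn(theta, y)


def drift_estimate(observations, psi=toy_psi):
    """Plug the empirical second moment of the observations into the inverse of psi."""
    second_moment = sum(x * x for x in observations) / len(observations)
    return invert_decreasing(psi, second_moment)


recovered = invert_decreasing(toy_psi, toy_psi(2.0))  # round trip through the inverse
```

To use this with the actual model one would pass the true \(\Psi \) as `psi`. Bisection needs only monotonicity, which is what Lemma 3.1 provides.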

Remark 3.3

Theorem 3.2 imposes several conditions on the mesh \(\Delta _N\). One possible choice satisfying all of them is \(\Delta _N = \frac{\log N}{N}\), for which \(T_N = \log N \rightarrow \infty \) and \(N\Delta _N^{2} = \frac{(\log N)^2}{N} \rightarrow 0\).
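A quick numerical look at the logarithmic mesh from Remark 3.3 (a sketch with a hypothetical helper name) shows how slowly the observation window grows compared with how fast \(N\Delta _N^2\) vanishes.

```python
import math


def log_mesh(n_obs):
    """Return (Delta_N, T_N, N * Delta_N^2) for the mesh Delta_N = log(N) / N."""
    delta = math.log(n_obs) / n_obs
    return delta, n_obs * delta, n_obs * delta ** 2


for n_obs in (10 ** 2, 10 ** 4, 10 ** 8):
    print(n_obs, log_mesh(n_obs))
```

The logarithmic growth of \(T_N\) suggests that very large samples may be needed before the asymptotic regime is visible with this particular mesh.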

Remark 3.4

Notice that we obtained strong consistency of the estimator \(\widehat{\theta }\) without assuming uniform discretization of the partitions. The uniform discretization will play a role in estimating the Hurst parameter \(H\).

4 Estimation of the Hurst parameter \(H\)

There are different approaches to estimate the Hurst parameter \(H\) of fractional processes. Here we consider an approach which is based on filtering. For more details we refer to Istas and Lang (1997), Coeurjolly (2001).

Let \(\mathbf a =(a_0,a_1, \ldots ,a_L) \in \mathbb {R}^{L+1}\) be a filter of length \(L+1, L \in \mathbb {N}\), and of order \(p\ge 1\), i.e. for all indices \(0 \le q < p\),

We define the dilated filter \(\mathbf a ^2\) associated to the filter \(\mathbf a \) by

for \(0\le k\le 2L\). Assume that we observe the process \(X\) given by (2.11) at discrete time points \(\{t_k = k\Delta _N, k=1,\ldots ,N\}\) such that \(\Delta _N \rightarrow 0\) as \(N\) tends to infinity. We denote the generalized quadratic variation associated to filter \(\mathbf a \) by

and we consider the estimator \(\widehat{H}_N\) given by

Assumption (A):

We say the filter \(\mathbf a \) of the length \(L+1\) and order \(p\) satisfies assumption (A) if for any real number \(r\) such that \(0 < r < 2p\) and \(r\) is not an even integer, the following property holds:

Example 1

A typical example of a filter with finite order satisfying assumption (A) is \(\mathbf a = (1,-2,1)\) with order \(p=2\).
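The filtering approach can be sketched in a few lines. The code below is a hedged illustration and not the paper's definition: it assumes the conventions of the filtering literature cited above, namely that the order \(p\) is the smallest exponent with \(\sum _j a_j j^p \ne 0\), that the dilated filter places \(a_j\) at index \(2j\) and zeros elsewhere, and that the estimator is \(\frac{1}{2}\log _2\) of the ratio of the two generalized quadratic variations. Function names are hypothetical.

```python
import math
import random


def filter_order(coeffs, max_order=10, tol=1e-12):
    """Smallest p with sum_j a_j * j^p != 0 (all lower moments vanish)."""
    for p in range(max_order + 1):
        moment = sum(a * (j ** p) for j, a in enumerate(coeffs))
        if abs(moment) > tol:
            return p
    raise ValueError("order exceeds max_order")


def dilate(coeffs):
    """Dilated filter a^2: a^2_{2j} = a_j and zero at odd indices (length 2L + 1)."""
    out = [0.0] * (2 * len(coeffs) - 1)
    for j, a in enumerate(coeffs):
        out[2 * j] = a
    return out


def quadratic_variation(values, coeffs):
    """Average of the squared filtered increments sum_j a_j * X_{i+j}."""
    span = len(coeffs)
    terms = [sum(a * values[i + j] for j, a in enumerate(coeffs)) ** 2
             for i in range(len(values) - span + 1)]
    return sum(terms) / len(terms)


def hurst_estimate(values, coeffs=(1.0, -2.0, 1.0)):
    """Ratio estimator 0.5 * log2(V_{a^2} / V_a) of the Hurst parameter."""
    ratio = quadratic_variation(values, dilate(coeffs)) / quadratic_variation(values, coeffs)
    return 0.5 * math.log2(ratio)


# Sanity check on a Gaussian random walk, whose Hurst index is 1/2.
rng = random.Random(0)
walk = [0.0]
for _ in range(20000):
    walk.append(walk[-1] + rng.gauss(0.0, 1.0))
print(filter_order((1.0, -2.0, 1.0)), hurst_estimate(walk))
```

The ratio form makes the estimator insensitive to a constant scaling of the data and to the mesh size, since both cancel between numerator and denominator.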

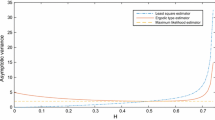

Theorem 4.1

Let \(\mathbf a \) be a filter of the order \(p\ge 2\) satisfying assumption (A) and put \(\Delta _N = N^{- \alpha }\) for some \(\alpha \in (\frac{1}{2},\frac{1}{4H-2})\). Then

almost surely as \(N\) tends to infinity. Moreover, we have

where the variance \(\Gamma \) depends on \(H\), \(\theta \) and the filter \(\mathbf a \) and is explicitly computed in Coeurjolly (2001) and also given in Brouste and Iacus (2012).

Remark 4.1

It is worth mentioning that when \(H < \frac{3}{4}\), it is not necessary to assume that the observation window \(T_N= N \Delta _N\) tends to infinity, whereas for \(H \ge \frac{3}{4}\) the condition \(T_N \rightarrow \infty \) is necessary (see Istas and Lang 1997). Notice also that \(H \ge \frac{3}{4}\) if and only if \(\frac{1}{4H-2} \le 1\).

Proof of Theorem 4.1

Let \(v_U\) denote the variogram of the process \(U\). By Lemma 2.1 we have

as \(t\rightarrow 0^+\), where \(r(t)=o(t^{2H})\). Moreover, \(r(t)\) is differentiable and direct calculations show that for \(\epsilon \in (0,1)\)

Hence the claim follows along the lines of the proof in Brouste and Iacus (2012) for the fractional Ornstein–Uhlenbeck process of the first kind, applying the results of Istas and Lang (1997, Theorem 3). To conclude, we note that the given variance is also computed in Coeurjolly (2001, p. 223).\(\square \)

5 Estimation of the drift parameter when \(H\) is unknown

In this section we consider \(\Psi (\theta ,H)\) instead of \(\Psi (\theta )\) to take into account the dependence on the Hurst parameter \(H\). Let \(\mu =\Psi (\theta ,H)\). Now the implicit function theorem implies that there exists a continuously differentiable function \(g(\mu ,H)\) such that

where \(\theta \) is the unique solution to equation \(\mu =\Psi (\theta ,H)\). Hence for every fixed \(H\), we have

Moreover, by the chain rule we obtain

and note that here \(\frac{\partial g}{\partial \mu }\) and \(\frac{\partial \mu }{\partial H}\) are known from which we can compute \(\frac{\partial g}{\partial H}\). Let \(\widehat{\mu }_{2,N}\) be given by (3.5) and let \(\widehat{H}_N\) be given by (4.1) for some filter \(\mathbf a \) of order \(p\ge 2\) satisfying assumption (A). We consider the estimator

for which we have the following result.

Theorem 5.1

Assume \(\Delta _N = N^{-\alpha }\) for some number \(\alpha \in (\frac{1}{2},\frac{1}{4H-2}\wedge 1)\). Then the estimator \(\widetilde{\theta }_N\) given by (5.1) is strongly consistent, i.e. as \(N\) tends to infinity, we have

almost surely. Moreover, we have

where the variance \(\sigma ^2_{\theta }\) is given by (3.8).

Proof

First note that

Now convergence

is in fact Theorem 3.2. Moreover, by Taylor’s theorem we get

for some remainder function \(R_2\) which converges to zero as \((\hat{\mu }_{2,N},\hat{H}_N)\rightarrow (\mu ,H)\). Therefore, by continuity and Theorem 4.1 we obtain

in probability. Hence, we also have

by Slutsky’s theorem. To conclude the proof, we obtain (5.2) from Eq. (5.4) by the continuous mapping theorem.\(\square \)
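The implicit-function step at the beginning of this section can be checked numerically. Differentiating \(\theta = g(\Psi (\theta ,H),H)\) in \(H\) with \(\theta \) fixed gives \(\frac{\partial g}{\partial H} = -\frac{\partial g}{\partial \mu }\frac{\partial \mu }{\partial H}\). The sketch below verifies this identity by finite differences for a HYPOTHETICAL toy function \(\Psi (\theta ,H)=H/\theta \), chosen only because its inverse is explicit. It is not the function \(\Psi \) of the paper, and all names are invented.

```python
def partial(func, point, index, step=1e-6):
    """Central finite-difference partial derivative of func at point along index."""
    up = list(point)
    down = list(point)
    up[index] += step
    down[index] -= step
    return (func(*up) - func(*down)) / (2.0 * step)


def toy_psi(theta, hurst):
    """HYPOTHETICAL stand-in for Psi(theta, H); chosen so the inverse is explicit."""
    return hurst / theta


def toy_g(mu, hurst):
    """Inverse of toy_psi in its first argument: theta = g(mu, H)."""
    return hurst / mu


theta, hurst = 1.5, 0.7
mu = toy_psi(theta, hurst)
# Left side: dg/dH at (mu, H). Right side: -(dg/dmu) * (dPsi/dH), as in the chain rule.
lhs = partial(toy_g, (mu, hurst), 1)
rhs = -partial(toy_g, (mu, hurst), 0) * partial(toy_psi, (theta, hurst), 1)
print(lhs, rhs)
```

With the true \(\Psi \) the same finite-difference helper could be combined with a numerical inverse to approximate \(\frac{\partial g}{\partial H}\) when no closed form is available.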

References

Alos E, Mazet O, Nualart D (2001) Stochastic calculus with respect to Gaussian processes. Ann Probab 29(2):766–801

Azmoodeh E, Morlanes I (2013) Drift parameter estimation for fractional Ornstein-Uhlenbeck process of the second kind. Statistics, doi:10.1080/02331888.2013.863888

Bercu B, Coutin L, Savy N (2011) Sharp large deviations for the fractional Ornstein–Uhlenbeck process. Teor Veroyatn Primen 55(4):732–771; translation in Theory Probab Appl 55(4):575–610

Brouste A, Iacus SM (2012) Parameter estimation for the discretely observed fractional Ornstein–Uhlenbeck process and the Yuima R package. Comput Stat. doi:10.1007/s00180-012-0365-6

Chavez-Demoulin V, Davison AC (2012) Modelling time series extremes. REVSTAT 10(1):109–133

Cheridito P, Kawaguchi H, Maejima M (2003) Fractional Ornstein–Uhlenbeck processes. Electr J Probab 8:1–14

Coeurjolly J-F (2001) Estimating the parameters of a fractional Brownian motion by discrete variations of its sample paths. Stat Inference Stoch Process 4:199–227

Hu Y, Nualart D (2010) Parameter estimation for fractional Ornstein–Uhlenbeck processes. Stat Probab Lett 80(11–12):1030–1038

Istas J, Lang G (1997) Quadratic variations and estimation of the local Hölder index of a Gaussian process. Annales de l’Institut Henri Poincaré 23(4):407–436

Kaarakka T, Salminen P (2011) On fractional Ornstein–Uhlenbeck processes. Commun Stoch Anal 5(1):121–133

Kleptsyna ML, Le Breton A (2002) Statistical analysis of the fractional Ornstein–Uhlenbeck type process. Stat Inference Stoch Process 5(3):229–248

Kutoyants Y (2004) Statistical inference for ergodic diffusion processes. Springer series in statistics. Springer, London

Lifshits MA (1995) Gaussian random functions. Mathematics and its applications, vol 322. Kluwer Academic Publishers, Dordrecht

Mynbaev KT (2011) Short-memory linear processes and econometric applications. Wiley, Hoboken

Nourdin I, Peccati G (2012) Normal approximations using Malliavin calculus: from Stein’s method to universality. Cambridge Tracts in Mathematics. Cambridge University Press, Cambridge

Nualart D (2006) The Malliavin Calculus and related topics. Probability and its application. Springer, New York

Nualart D, Ortiz-Latorre S (2008) Central limit theorems for multiple stochastic integrals and Malliavin calculus. Stoch Process Appl 118:614–628

Nualart D, Răşcanu A (2002) Differential equations driven by fractional Brownian motion. Collect Math 53:55–81

Prakasa Rao BLS (2010) Statistical inference for fractional diffusion processes. Wiley series in probability and statistics. Wiley, Chichester

Xiao W, Zhang W, Xu W (2011) Parameter estimation for fractional Ornstein–Uhlenbeck processes at discrete observation. Appl Math Model 35(9):4196–4207

Acknowledgments

The authors thank Lasse Leskelä for useful discussions and comments. Lauri Viitasaari thanks the Finnish Doctoral Programme in Stochastics and Statistics for financial support. Azmoodeh is supported by research project F1R-MTH-PUL-12PAMP. The authors thank both anonymous referees for careful reading of the previous version of this paper and for their valuable comments which improved the paper.

Appendix

1.1 Computations used in the paper

Lemma 5.1

For \(F_T\) given by (3.2) and the variance \(\sigma ^2\) given by (3.1) we have

as \(T\) tends to infinity.

Proof

It is sufficient to show that as \(T\) tends to infinity, we have

Indeed, since

we obtain that (5.6) implies (5.5). Now we have

Hence, using Remark 2.2, we can write

Let now \(K(u,v)\) denote the kernel associated to the space \(\tilde{\mathcal {H}}\) i.e.

Using the multiplication formula for multiple Wiener integrals, we see that

Here \(A_1\) is deterministic and \(A_2\) has expectation zero. Hence, in order to have (5.6), we need to show that

Therefore, by applying Fubini’s Theorem, it suffices to show that

as \(T\) tends to infinity. First we get

By plugging into (5.9) we obtain that it suffices to have

as \(T\) tends to infinity. Here we have

Note first that for every \(0\le x,y\le T\), we have that

As a consequence, we can omit the term \(e^{-\theta (2T-x-y)}\) in the function \(\tilde{g}(x,y)\). This implies that instead of

it is sufficient to consider the following integrand:

Next we consider the first term and show that

In what follows, \(C\) denotes an unimportant constant which may vary from line to line. First, it is easy to prove that

where the constant does not depend on \(y\) or \(T\). Moreover, by a change of variable we obtain

for every \(y\) and \(T\). Consider now the iterated integral in (5.12). The value of the integral depends on the order of the variables, and eight variables can be ordered in \(8! = 40320\) ways. However, it is clear that without loss of generality we can choose the smallest variable, say \(y_2\), and integrate over the region \(\{0<y_2<u_1,u_2,v_1,v_2,x_1,x_2,y_1<T\}\). The other cases can be treated similarly with obvious changes. Assume now that the smallest variable is \(y_2\) and denote the second smallest variable by \(r_7\), i.e.

Integrating first with respect to \(y_2\) and applying the upper bound \(e^{\theta y_2} \le e^{\theta r_7}\) together with (5.14), we obtain that

Next we integrate with respect to \(y_1\). In the case when \(r_7=y_1\), we have

where \(r_6\) is the third smallest variable, and in the case when \(r_7 \ne y_1\), we obtain by (5.13)

Hence we obtain the upper bound

Next we integrate first with respect to the variables \(v_1\) and \(v_2\) and then with respect to the variables \(u_1\) and \(u_2\). Together with the estimates (5.13) and (5.14) this yields

which gives (5.12). It remains to note that the other three terms in (5.11) can be treated with the same arguments, since only the “pairing” of variables in terms of the form \(e^{-\theta |x-y|}\) changes. Thus we have (5.10), and the implications (5.10)\(\Rightarrow \)(5.8)\(\Rightarrow \)(5.6)\(\Rightarrow \)(5.5) complete the proof.\(\square \)
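
As a sanity check on the counting step in the proof above, the following minimal Python sketch enumerates the strict orderings of the eight integration variables and groups them by the smallest variable. The names `VARIABLES` and `count_orderings_by_smallest` are illustrative and do not come from the paper; the sketch only confirms the combinatorics, not the integral estimates.

```python
from collections import Counter
from itertools import permutations
from math import factorial

# The eight integration variables of the iterated integral (labels only).
VARIABLES = ("u1", "u2", "v1", "v2", "x1", "x2", "y1", "y2")


def count_orderings_by_smallest(names):
    """Count the strict orderings of `names`, grouped by the smallest variable.

    Each permutation is read as a list of the variables from smallest to
    largest, so its first entry is the smallest variable in that ordering.
    """
    return Counter(order[0] for order in permutations(names))


counts = count_orderings_by_smallest(VARIABLES)
total = sum(counts.values())

print(total)         # 40320 orderings in total, i.e. 8!
print(counts["y2"])  # 5040 orderings with y2 smallest, i.e. 7!
```

Each of the eight variables is the smallest in exactly \(7!\) orderings, which is why fixing the smallest variable to be \(y_2\) covers one of eight analogous groups of cases.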

Lemma 5.2

For \(F_T\) given by (3.2) and \(\sigma ^2\) given by (3.1) we have

as \(T\) tends to infinity.

Proof

Using isometry we obtain

where

Recall that

We first show that we can omit the second term \(\frac{1}{2\theta }e^{-\theta (2T-x-y)}\) in the function \(\tilde{g}\). To see this, we have

By the changes of variables \(\tilde{v}=T-v\), \(\tilde{u}=T-u\), and then \(x=e^{-\frac{\tilde{v}}{H}}\), \(y=e^{-\frac{\tilde{u}}{H}}\), we infer that this is the same as

Let now \(x<y\). By change of variable \(z=\frac{x}{y}\) we obtain

which, after division by \(T\), converges to zero as \(T\) tends to infinity. The case \(x>y\) can be treated in a similar way, and hence it is sufficient to consider the function

instead of \(\tilde{g}(x,y)\). We shall use L’Hôpital’s rule to compute the limit. Taking the derivative with respect to \(T\), we obtain

By change of variables \(x=T-u_1\), \(y=T - u_2\) and \(z=T-v_1\), this reduces to

Therefore, we have

We end the proof by showing that this triple integral, denoted by \(I\), is finite. By use of the obvious bound \(e^{-\theta |z-y|} \le 1 \) we infer that

For the term \(I_1\), we obtain by change of variable \(u=e^{-\frac{y}{H}}\) that

For the term \(I_2\), we obtain by change of variables \(u=e^{-\frac{x}{H}}\) and \(v=e^{-\frac{z}{H}}\) that

For the term \(I_{2,1}\), we obtain by change of variable \(z=\frac{v}{u}\) that

Similarly for the term \(I_{2,2}\), we get by change of variable \(z=\frac{u}{v}\) that

\(\square \)
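
Two elementary steps used in the proof above can be written out explicitly. This is only a sketch: it assumes that the original variables \(u\) and \(v\) range over \([0,T]\), and \(G\) is a generic placeholder for a \(T\)-dependent integral, not notation from the paper. For the substitutions \(\tilde{v}=T-v\) and \(x=e^{-\frac{\tilde{v}}{H}}\) (and likewise \(\tilde{u}=T-u\), \(y=e^{-\frac{\tilde{u}}{H}}\)) one has

\[
\tilde{v}\in [0,T], \qquad x\in \big [e^{-\frac{T}{H}},1\big ], \qquad \tilde{v}=-H\log x, \qquad \mathrm {d}\tilde{v}=-H\,\frac{\mathrm {d}x}{x},
\]

and, on the region \(x<y\), the further substitution \(z=\frac{x}{y}\) gives \(z\in (0,1)\) and \(\mathrm {d}x=y\,\mathrm {d}z\). The appeal to L’Hôpital’s rule rests on the fact that, for a differentiable function \(G\),

\[
\lim _{T\rightarrow \infty }\frac{G(T)}{T}=\lim _{T\rightarrow \infty }G'(T)
\]

whenever the limit on the right-hand side exists.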

Cite this article

Azmoodeh, E., Viitasaari, L. Parameter estimation based on discrete observations of fractional Ornstein–Uhlenbeck process of the second kind. Stat Inference Stoch Process 18, 205–227 (2015). https://doi.org/10.1007/s11203-014-9111-8

DOI: https://doi.org/10.1007/s11203-014-9111-8

Keywords

- Fractional Ornstein–Uhlenbeck processes

- Malliavin calculus

- Multiple Wiener integrals

- Central limit theorem (CLT)

- Parameter estimation