Abstract

Altmetrics is an emergent research area whereby social media is applied as a source of metrics to assess scholarly impact. In the last few years, the interest in altmetrics has grown, giving rise to many questions regarding their potential benefits and challenges. This paper aims to address some of these questions. First, we provide an overview of the altmetrics landscape, comparing tool features, social media data sources, and social media events provided by altmetric aggregators. Second, we conduct a systematic review of the altmetrics literature. A total of 172 articles were analysed, revealing a steady rise in altmetrics research since 2011. Third, we analyse the results of over 80 studies from the altmetrics literature on two major research topics: cross-metric validation and coverage of altmetrics. An aggregated percentage coverage across studies on 11 data sources shows that Mendeley has the highest coverage of about 59 % across 15 studies. A meta-analysis across more than 40 cross-metric validation studies shows overall a weak correlation (ranging from 0.08 to 0.5) between altmetrics and citation counts, confirming that altmetrics do indeed measure a different kind of research impact, thus acting as a complement rather than a substitute to traditional metrics. Finally, we highlight open challenges and issues facing altmetrics and discuss future research areas.

Introduction

Since 2010, altmetrics has been emerging as a new source of metrics to measure scholarly impact (Priem et al. 2010). Traditional impact indicators, known as bibliometrics, are commonly based on the number of publications, citation counts and peer reviews of a researcher or journal or institution (Haustein and Larivière 2015). As research publications and other research outputs were increasingly placed online, usage metrics (based on download and view counts) as well as webometrics (based on web links) (Priem and Hemminger 2010; Thelwall 2012b) emerged. These days, research outputs have become more diverse and are increasingly being communicated and discussed on social media. Altmetrics are based on these activities and interactions on social media relating to research output (Weller 2015).

Although there is no formal definition of altmetrics, several definitions have been proposed. In the vision presented in the Altmetrics Manifesto by Priem et al. (2010), altmetrics is defined as: “This diverse group of activities (that reflect and transmit scholarly impact on Social Media) forms a composite trace of impact far richer than any available before. We call the elements of this trace altmetrics.” A further definition is given on altmetrics.org: “altmetrics is the creation and study of new metrics based on the Social Web for analyzing, and informing scholarship.” Footnote 1 Priem et al. (2014, p. 263) also write: “(...) altmetrics (short for “alternative metrics”), an approach to uncovering previously-invisible traces of scholarly impact by observing activity in online tools and systems.” Another definition has been proposed by Piwowar (2013, p. 159): “(...) scientists are developing and assessing alternative metrics, or ‘altmetrics’—new ways to measure engagement with research output.” A definition from Haustein et al. (2014a, p. 1145) states: “Altmetrics, indices based on social media platforms and tools, have recently emerged as alternative means of measuring scholarly impact.” More recently, Weller (2015, pp. 261–262) proposes these definitions: “Altmetrics–evaluation methods of scholarly activities that serve as alternatives to citation-based metrics (...)” and “Altmetrics are evaluation methods based on various user activities in social media environments.” In a white paper from NISO (2014) (National Information Standards Organization), Footnote 2 altmetrics is described as: ““Altmetrics” is the most widely used term to describe alternative assessment metrics. Coined by Jason Priem in 2010, the term usually describes metrics that are alternative to the established citation counts and usage stats—and/or metrics about alternative research outputs, as opposed to journal articles.”

In summary, the common understanding across all definitions is that altmetrics are new or alternative metrics to the established metrics for measuring scholarly impact. The main difference between the definitions lies in how and where altmetrics can be found: activities on Social Media, based on the Social Web, observing activity in online tools and systems, engagement with research output, based on social media platforms and tools, scholarly activities, or various user activities in social media environments.

The main advantages of altmetrics over traditional bibliometrics and webometrics are that they offer fast, real-time indications of impact, are openly accessible and transparent, include a broader non-academic audience, and cover more diverse research outputs and sources (Wouters and Costas 2012). Altmetrics, however, also face several challenges such as gaming, manipulation and data quality issues (Bornmann 2014b). As awareness of altmetrics grows, an increasing number of people from different sectors, disciplines and countries are showing interest in altmetrics and want to know their pitfalls and potential. Universities, libraries, funding agencies, and researchers have common concerns and questions regarding altmetrics. For example, what are altmetrics? When did research on altmetrics commence and what topics have been investigated? How do altmetrics compare to traditional metrics? Are there any studies measuring this and what are their findings?

This paper aims to give an overview of this emerging research area of measuring research impact based on social media and to address some of these questions. Existing altmetrics tools have been compiled by Chamberlain (2013), Peters et al. (2014), Priem and Hemminger (2010), and Wouters and Costas (2012), with more recent listings by Kumar and Mishra (2015) and by Weller (2015), who also reviews the altmetrics literature. Our paper aims to give a compact and yet comprehensive overview of the altmetrics landscape, applying and considering the frameworks and listings made in previous works. In the “The altmetrics landscape” section, an overview is given of the altmetrics landscape depicting the inter-relationships between the different aggregators, comparing their different features, data sources, and social media events. In the “Literature on altmetrics research” section, we analyse a cross-section of the academic literature relating to altmetrics research, thereby highlighting the trends and research topics handled in recent years. In the “Results of research on altmetrics” section, the consolidated results across multiple studies on coverage of altmetrics are presented, as well as the results of a meta-analysis of cross-metric validation studies comparing altmetrics to citations and to other altmetrics. We conclude in the “Conclusion and outlook” section with a discussion on the challenges facing this relatively new research area and highlight the gaps and future topics.

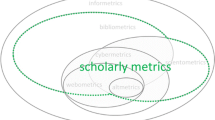

The altmetrics landscape

According to Haustein et al. (2016), a research object is an agent or document for which an event can be recorded. Events are recorded activities or actions that capture acts of accessing, appraising or applying research objects. Altmetrics are based on these events. Research documents include very diverse artifacts. Traditional documents, for example, could be journal articles, book chapters, conference proceedings, technical reports, theses, dissertations, posters, books, and patents. These are typically hosted on publishers’ websites, in online journals and in digital libraries such as PMC (PubMed Central), Scopus, PLOS, Elsevier, or Springer. Research documents hosted on social media comprise more modern research artifacts such as presentation slides on SlideShare, lecture videos on YouTube, blog posts on ResearchBlogger, datasets on Dryad, and software code on GitHub. Research agents could be individual scholars, research groups, departments, universities, institutions or funding agencies. Research agents are usually hosted on research or academic social networks such as ResearchGate and Academia.edu. The altmetrics landscape covers various social media applications and platforms as data sources for altmetrics. Data sources record events related to research objects, usually making these available via an API, an online platform, a repository, or a reference manager. Hosts of research objects often act as data sources. For example, Mendeley Footnote 3 records events on articles, such as saved or read, and provides these events to altmetrics consumers. Altmetrics consumers comprise so-called aggregators or providers of altmetrics, who track and aggregate the various events gathered from social media data sources (Haustein et al. 2016). These aggregated events are made available as altmetrics to the end-users, who are usually researchers, faculty staff, libraries, publishers, research institutions, universities, or funding agencies.

In the following, we highlight the major altmetrics aggregators and compare the features they offer and the different data sources they use.

Altmetric aggregators

In the last few years, several aggregators have been created that act as providers of altmetrics, as impact monitors, or as metric aggregators. Fig. 1 gives an overview of the altmetrics aggregators and shows how they interact with one another. Some aggregators use data from other aggregators, thus becoming secondary or tertiary aggregators. The information compiled here is based on the aggregators’ websites and blogs (accessed on 18 December, 2015) and is subject to change, as these websites and blogs are updated regularly. Of course, some hosts, such as the academic social networks ResearchGate and Academia.edu, or some publishers, could also be considered aggregators or providers, as they increasingly track events (mostly usage metrics like views or downloads) on their platforms. These hosts, however, mainly collect locally generated events and presently do not aim to aggregate events from different sources. Thus, we consider them not to be aggregators but rather hosts and, in some cases, data sources.

(a) Altmetric.com Footnote 4 (Adie and Roe 2013) was started in 2011 and provides the Altmetric Score, a quantitative measure of the attention that a scholarly article has received, derived from three major factors: volume, sources, and authors. A free bookmarklet is offered to researchers, while access to an API, embeddable badges, and an explorer is offered for a fee. Two web applications are also provided: Altmetric for Scopus, and the PLOS Altmetric Impact Explorer, which allows browsing the conversations collected for papers published by PLOS.

(b) Impactstory Footnote 5 was originally known, in 2011, as Total Impact (Priem et al. 2012a). It is an open-source, web-based tool that helps scientists explore and share the impacts of their research outputs by supporting profile-based embedding of altmetrics in their CV (curriculum vitae) (Piwowar and Priem 2013). Some of the altmetrics are reused from Altmetric.com (social mentions) and PLOS ALM (HTML and PDF views), as shown in Fig. 1.

(c) Plum Analytics Footnote 6 (Buschman and Michalek 2013) tracks more than 20 different types of artifacts and collects 5 major categories of impact metrics: usage, captures, mentions, social media and citations. Metrics are captured and correlated at the group level (e.g., lab, department, or journal). Plum Analytics compiles article level usage data from various sources including PLOS ALM as shown in Fig. 1. Plum Analytics, founded in 2011, offers several products including: PlumX ALM, Plum Print, PlumX Artifact Widget and Open API.

(d) PLOS ALM Footnote 7 (Lin and Fenner 2013b) was launched in 2009, and provides a set of metrics called Article-Level Metrics (ALM) that measure the performance and outreach of research articles published by PLOS. The ALM API and dataset are freely available for all PLOS articles. PLOS also offers an open-source application called Lagotto, that retrieves metrics from a wide set of data sources, like Twitter, Mendeley, and CrossRef.

(e) Kudos Footnote 8 is a web-based service that supports researchers in gaining higher research impact by increasing the visibility of their published work. Researchers can describe their research, share it via social media channels, and monitor and measure the effect of these activities. Kudos also offers services for institutions, publishers, and funders. Kudos compiles citation counts from WoS and altmetrics from Altmetric.com (as shown in Fig. 1). In addition, share referrals and views on Kudos are collected.

(f) Webometric Analyst Footnote 9 (Thelwall 2012a) was formerly known as LexiURL Searcher (Thelwall 2010). It uses URL citations or title mentions instead of hyperlink searches for network diagrams, link impact reports, and web environment networks. Webometric Analyst searches via Mendeley and Bing for metrics and reuses altmetrics from Altmetric.com, see Fig. 1.

(g) Snowball Metrics Footnote 10 (Colledge 2014) comprises a set of 24 metrics based on agreed and tested methodologies. It aims to become a standard for institutional benchmarking. The metrics are categorised into (i) research input metrics: applications volume (the amount of research grant applications submitted to external funding bodies) and awards volume (the number of awards granted and available to be spent); (ii) research process metrics: the volume of research income spent and the total value of contract research; and (iii) research output metrics: mainly scholarly output, field-weighted citation impact, collaboration impact, and altmetrics. As shown in Fig. 1, Snowball Metrics reuses altmetrics from Altmetric.com, Plum Analytics and Impactstory.

Some other aggregators are now offline. For example, ReaderMeter Footnote 11 was created in 2010 and estimated the impact of scientific content based on its consumption by readers on Mendeley. ScienceCard Footnote 12 was based on PLOS ALM and offered article-level metrics. Further examples are Crowdometer, a crowdsourced service for classifying tweets, and CitedIn (Priem et al. 2012a), which collected altmetrics for PubMed articles.
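The reuse relationships described for the aggregators above can be sketched as a small dependency graph. The following Python snippet is only an illustration: the dictionary encodes the aggregator-to-aggregator reuse stated in items (a) to (g), while the function name `aggregation_level` and the numbering of levels (1 for an aggregator that reuses no other aggregator in this listing, 2 for a secondary aggregator, 3 for a tertiary one) are our own assumptions. Direct use of social media data sources is not modelled.

```python
# Aggregator -> aggregators it reuses data from, as stated in items (a)-(g).
# Direct social media data sources (e.g., Mendeley, Twitter) are not modelled.
REUSES = {
    "Altmetric.com": [],
    "PLOS ALM": [],
    "Impactstory": ["Altmetric.com", "PLOS ALM"],
    "Plum Analytics": ["PLOS ALM"],
    "Kudos": ["Altmetric.com"],
    "Webometric Analyst": ["Altmetric.com"],
    "Snowball Metrics": ["Altmetric.com", "Plum Analytics", "Impactstory"],
}


def aggregation_level(name, reuses=REUSES):
    """Hypothetical helper: 1 if no other aggregator is reused, otherwise
    one more than the highest level among the reused aggregators."""
    upstream = reuses[name]
    if not upstream:
        return 1
    return 1 + max(aggregation_level(u, reuses) for u in upstream)


levels = {name: aggregation_level(name) for name in REUSES}
for name, level in sorted(levels.items(), key=lambda item: item[1]):
    print(f"level {level}: {name}")
```

Under this encoding, Snowball Metrics comes out at level 3 because it reuses Impactstory and Plum Analytics, which themselves reuse other aggregators.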

Features of altmetric aggregators

In Table 1, the various features offered by the aggregators mentioned above are presented. Similar to Wouters and Costas (2012), these features comprise technical functionality like the availability of an API, the availability of a visualisation of altmetrics data, user interfaces such as widgets and bookmarklets, and search and filter options; quality features such as gaming and spam detection, disambiguation, normalisation, data access and management; as well as other services offered to target audiences and user groups, user access to the systems, coverage of the metrics, the level of metrics offered, and whether traditional metrics such as citations are included in order to directly compare impact measures on the systems. When no information is found, we state N/A for information not available. Most aggregators cover a wide range of data sources and offer some form of visualisation of altmetrics, e.g., through widgets, bookmarklets and embedding. As part of their quality assurance strategy, Altmetric.com uses only those data sources that can be audited (Adie and Roe 2013). The blogs and news sources are manually curated. Altmetric.com is the only aggregator that offers an aggregated score, alongside the metrics, for the artifacts monitored. All the aggregators cover multiple disciplines and are transparent about what data sources they cover.

Data sources used by altmetric aggregators

In Table 2, the diverse data sources used by the altmetric aggregators are shown. These cover both social media data sources and bibliometric data sources, as altmetric aggregators report on bibliometrics as well as altmetrics, as shown in Table 1. The data sources in Table 2 were collected from the aggregators’ websites and blogs. When no information is found, we state N/A for information not available. Inspired by Priem and Hemminger (2010), the data sources are classified into several categories: social bookmarking and reference managers; video, photo and slide sharing services; social networks; blogging; microblogging; recommendation and review systems; Q&A websites and forums; online encyclopaedia; online digital libraries, repositories and information systems; dataset repositories; source code repositories; online publishing services; search engines and blog aggregators; and other less common sources such as policy documents, news sources, specialised services and the Web in general. Many of these data sources are described by Kumar and Mishra (2015) and analysed by Wouters and Costas (2012).

Mendeley is the most popular social media data source, covered directly by all aggregators in the table apart from Snowball Metrics. Other popular data sources are Twitter, YouTube, Wikipedia, Scopus and PLOS. Most of the aggregators, however, have their own preferred data sources, and only a few are used in common. Data sources shown in brackets are no longer viable. LinkedIn has closed its data stream, and Connotea discontinued its service in March 2013. Historical data from LinkedIn and Connotea are, however, still available on Altmetric.com. Rarely considered data sources are, for example, Flickr, Technorati, Sina Weibo (a Chinese microblog), CRIS (Current Research Information System), WIPO (World Intellectual Property Organization), QS (Quacquarelli Symonds, a global provider of specialist higher education and careers information and solutions), SSRN (Social Science Research Network), ADS (Astrophysics Data System), bit.ly (a URL shortening service), COUNTER (Counting Online Usage of Networked Electronic Resources), policy documents, research highlights, news outlets, and trackbacks.

Events tracked by altmetric aggregators

Altmetrics are based on events on social media, which are created as a result of actions on research objects. There are several classifications for events (Bornmann 2014b; Lin and Fenner 2013a). For example, PLOS ALM has the categories cited, discussed, viewed, and saved, while Plum Analytics has the categories usage, captures, mentions, social media, and citations. Haustein et al. (2016) propose a framework to describe the acts on research objects. Acts are defined as activities that occur on social media leading to online events. The three categories of acts have an increasing level of engagement, from just accessing, to appraising, to applying (Haustein et al. 2016).

Table 3 gives an overview of the events which the altmetrics aggregators pull from the various social media data sources and aggregate to finally provide altmetrics to the end-users. We collected these events primarily from the listings on the aggregators’ websites and blogs. We also retrieved further details and events from their APIs and application source code (on GitHub). For some of the data sources, we did not find any explicit information about which events are retrieved or how these events are aggregated or counted. When no information is found, we state N/A for information not available. We classify the events we found according to the framework from Haustein et al. (2016), inspired by PLOS ALM’s and Plum Analytics’ categories. The event categories proposed here are Usage Events and Capture Events for access acts, Mention Events and Social Events for appraisal acts, and Citation Events for apply acts. The access events would encompass PLOS ALM’s viewed and saved categories, and the usage and captures categories from Plum Analytics. The appraise events would map to PLOS ALM’s discussed category and to Plum Analytics’ mentions and social media categories. Apply events would correspond to PLOS ALM’s cited and Plum Analytics’ citations.

- Access Acts and Events: Access Acts are actions that involve accessing and showing interest in a research object. For scholarly documents, this could be viewing the metadata of an article such as its title or abstract, or downloading and storing it. For scholarly agents, access could involve viewing the researcher’s homepage, downloading a CV, sending an email, befriending, or following the researcher (Haustein et al. 2016). Access Acts lead to usage events (e.g., views, reads, downloads, link outs, library holdings, clicks) and to capture events (e.g., saves, bookmarks, tags, favorites, or code forks). Data sources for Access Acts encompass diverse online platforms and repositories, reference managers, academic social networking platforms and communication media like email or messaging (Haustein et al. 2016). In Table 3, the most common usage events are views and downloads. Nearly all aggregators track Mendeley readers. Impactstory even tracks the percentage of readers by country, discipline and career stage.

- Appraisal Acts and Events: Appraisal Acts involve mention events such as comments, reviews, mentions, links or discussions in a post. Appraisal Acts also involve social events such as likes, shares or tweets. Ratings or recommendations could either be crowdsourced and quantitative, as on Reddit, or qualitative (peer) judgements by experts, as on F1000 or PubPeer (Haustein et al. 2016). In Table 3, the most frequently collected mention events are comments and reviews.

- Apply Acts and Events: Apply Acts are actions that actively use significant parts of, adapt or transform research objects as a foundation to create new work. These could be the application or citation of theories, frameworks, methods, or results. Apply Acts formulate something new by applying knowledge, experience and reputation, such as a thorough discussion in a blog, slides adapted for a lecture, a modified piece of software, a dataset used for an evaluation, or a prototype used for commercial purposes (Haustein et al. 2016). Apply Acts may also involve collaborating with others.
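The classification described above (event type, then event category, then act) can be sketched as a two-step lookup. The snippet below is a minimal illustration, not the procedure used to build Table 3: the category-to-act mapping follows the five event categories proposed in the text, whereas the event-type lookup contains only a few example events named in the text, and the function names are hypothetical.

```python
from collections import Counter

# Event category -> act, following the categories proposed in the text.
CATEGORY_TO_ACT = {
    "usage": "access",
    "capture": "access",
    "mention": "appraise",
    "social": "appraise",
    "citation": "apply",
}

# A few example event types named in the text; deliberately non-exhaustive.
EVENT_TO_CATEGORY = {
    "view": "usage", "download": "usage", "click": "usage",
    "save": "capture", "bookmark": "capture", "fork": "capture",
    "comment": "mention", "review": "mention",
    "like": "social", "share": "social", "tweet": "social",
    "citation": "citation",
}


def classify(event_type):
    """Return the (event category, act) pair for a known event type."""
    category = EVENT_TO_CATEGORY[event_type]
    return category, CATEGORY_TO_ACT[category]


def count_by_act(event_types):
    """Count how many of the given events fall under each act."""
    return Counter(classify(e)[1] for e in event_types)


print(classify("tweet"))
print(count_by_act(["view", "save", "tweet", "citation"]))
```

Keeping the two mappings separate means that a new event type reported by an aggregator only requires one additional entry in the event-type lookup.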

The details about the data sources and events retrieved from the aggregators were scattered across diverse websites and blogs, sometimes with outdated and conflicting reports. Therefore, we do not claim that the listings in Tables 1, 2, or 3 are in any way exhaustive. When no information was found, we stated N/A (not available). It is also not clear whether F1000Prime is a data source or an aggregator, as it calculates a score based on the ratings given by the F1000 faculty members.

Literature on altmetrics research

A systematic review of the altmetrics literature was conducted with the aim of answering the following questions:

1. How has literature on altmetrics grown over the years?

2. What research topics on altmetrics have been covered over the years?

3. Which social media data sources have been investigated over the years?

We applied multi-stage sampling for the data collection for the literature review, as shown in Fig. 2. In the first stage, a search with the search term altmetric* was conducted on 14 September, 2015 in Scopus to identify the venues having at least 6 articles in the search results. A total of 13 venues were identified, namely: Scientometrics,Footnote 13 the Journal of Informetrics (JOI),Footnote 14 the Journal of the Association for Information Science and Technology (JASIST),Footnote 15 PLOS ONE,Footnote 16 Proceedings of the ASIS&T Annual Meeting,Footnote 17 Insights: the UKSG journal,Footnote 18 Aslib Journal of Information Management,Footnote 19 PLOS Biology,Footnote 20 Proceedings of the International Society of Scientometrics and Informetrics Conference (ISSI),Footnote 21 College & Research Libraries News,Footnote 22 El profesional de la información,Footnote 23 Nature,Footnote 24 and CEUR Workshop Proceedings.Footnote 25 A total of 124 articles were thus retrieved in stage one. As the most recent articles for some venues (e.g., early view articles not yet assigned to a specific volume) were sometimes not yet indexed by Scopus, a full census from the venue itself was conducted in stage two using the same search term as in stage one. This produced a total of 220 articles. After data cleaning, 177 were identified as relevant to altmetrics research. Furthermore, articles appearing in multiple venues (e.g., first in a proceeding and then in a journal) were counted only once, thus bringing the total number of articles analysed to 172.

Figure 3 shows the distribution of articles across the years from the selected venues. Publications relating to altmetrics research (explicitly mentioning the term altmetrics) commenced in 2011 and the number of publications has been steadily growing at a fast pace.

Research topics on altmetrics

In recent years, several case studies have been performed with the aim of investigating altmetrics. Figure 4 gives an overview of the research topics published. In the following, representative examples are given for the different research topics investigated. Diverse research objects have been investigated, ranging from scholarly agents like authors, scholars, departments, institutions, and countries, to scholarly documents like articles, reviews, conference papers, editorial materials, letters, notes, abstracts, books, software, annotations, blogs, blog posts, YouTube videos, and acknowledgements. The main focus of most of the research over the years has been on cross-metric validation (Bar-Ilan 2014; Bornmann 2014a, c), and the majority of these were cross-disciplinary studies (Kousha and Thelwall 2015c; Kraker et al. 2015; Thelwall and Fairclough 2015a; Zahedi et al. 2014a). The most common method used for cross-metric validation was the calculation of correlations. Most results showed a weak to medium correlation between altmetrics and traditional bibliometrics (Haustein et al. 2014a; Zahedi et al. 2014a). Another focus was on studies investigating the validity of data sources (Haustein and Siebenlist 2011; Kousha and Thelwall 2015c; Shema et al. 2014; Thelwall and Maflahi 2015a), and on the coverage of altmetrics, that is, the number of research articles for which altmetrics were available (Bornmann 2014c; Haustein et al. 2014a; Zahedi et al. 2014a).

Most studies concluded that Mendeley (Haustein et al. 2014a; Thelwall and Fairclough 2015a; Zahedi et al. 2014a) and Twitter (Bornmann 2014c; Hammarfelt 2014) have been the predominant data sources for altmetrics. There has been a growing interest in investigating the limitations of altmetrics (Hammarfelt 2014; Zahedi et al. 2014a), as well as the motivation of researchers using social media (Haustein et al. 2014a; Mas-Bleda et al. 2014), and the investigation of normalisation methods (Bornmann 2014c; Bornmann and Marx 2015; Thelwall and Fairclough 2015a). In recent years, attention has also been given to investigating differences due to country biases (Mas-Bleda et al. 2014; Ortega 2015a; Thelwall and Maflahi 2015a), demographics (Ortega 2015a), gender (Bar-Ilan 2014; Hoffmann et al. 2015), disciplines (Holmberg and Thelwall 2014; Mas-Bleda et al. 2014; Ortega 2015a), and user group differences (Bar-Ilan 2014; Hoffmann et al. 2015; Mas-Bleda et al. 2014; Ortega 2015a). Only a few articles in recent years looked at the visualisation of altmetrics (Hoffmann et al. 2015; Kraker et al. 2015; Uren and Dadzie 2015) or at detecting gaming or spamming (Haustein et al. 2015a).

Research on social media data sources

The social media altmetrics data sources investigated were: Mendeley (Zahedi et al. 2014a), CiteULike (Haustein and Siebenlist 2011), Connotea (Haustein and Siebenlist 2011; Yan and Gerstein 2011), BibSonomy (Haustein and Siebenlist 2011), Twitter (Zahedi et al. 2014a), F1000/F1000Prime (Bornmann 2014c; Bornmann and Marx 2015), ResearchGate (Hoffmann et al. 2015), Academia.edu (Mas-Bleda et al. 2014), LinkedIn (Mas-Bleda et al. 2014), Facebook (Hammarfelt 2014), YouTube and Podcasts, ResearchBlogging (Shema et al. 2014) and other blogs, Wikipedia (Zahedi et al. 2014a), Delicious (Zahedi et al. 2014a), LibraryThing (Hammarfelt 2014), SlideShare (Mas-Bleda et al. 2014), Amazon Metrics (Kousha and Thelwall 2015c), and WorldCat library holdings (Kousha and Thelwall 2015c). Figure 5 gives an overview of the social media data sources investigated in studies on altmetrics over the years.

In the last 2 years, there has been a large increase in the number of different social media data sources considered as interesting for research studies on altmetrics. Recently, Mendeley is the data source receiving the most interest. The number of studies on Mendeley has nearly doubled since 2014 as can be seen in Fig. 5. Twitter has received a rather steady amount of interest over the years, but it now seems the interest is shifting to Mendeley. F1000Prime seems to be receiving just as much attention as Twitter in 2015 (Bornmann and Marx 2015). Facebook has also received steady but low attention over the years (Hammarfelt 2014). In recent years, there have been a few new data sources studied, such as LibraryThing (Hammarfelt 2014), Amazon and WorldCat library holdings (Kousha and Thelwall 2015c). ResearchGate also seems to be gaining interest (Hoffmann et al. 2015). Amongst the altmetrics aggregators, Altmetric.com received the most interest (Bornmann 2014c; Hammarfelt 2014; Loach and Evans 2015; Maleki 2015b; Peters et al. 2015), but also Impactstory (Maleki 2015b; Peters et al. 2015; Zahedi et al. 2014a), PlumX (Peters et al. 2015), PLOS ALM (Maleki 2015b), and Webometric Analyst (Kousha and Thelwall 2015a) have been investigated.

Results of research on altmetrics

The main research investigations on altmetrics over the last 5 years have been on the coverage of altmetrics and cross-validation studies. The results of these two research topics are collated and analysed in the following sections.

Results of studies on altmetrics coverage

From the literature review in the “Literature on altmetrics research” section, 42 publications were identified as investigating the coverage of altmetrics. For a specified data source, the coverage indicates the percentage of articles in the study’s data sample that exist in the data source. Non-zero coverage considers only those articles in the study’s data sample that exist in the data source and have at least one event. Thus, for non-zero coverage, no article having zero events is included in the calculation of the coverage. As noted in Mohammadi and Thelwall (2014), articles may be available on Mendeley that have no events, and this would be considered as zero coverage. Furthermore, as discussed in Bornmann (2014c), Altmetric.com only covers articles that have at least one event from an altmetric data source. The distinction between zero and non-zero coverage is not made uniformly or consistently across all studies in our sample; thus, we only report on non-zero coverage when it is explicitly stated as such.
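The distinction between coverage and non-zero coverage can be illustrated for a single study with a short Python sketch. The input encoding (`None` for an article not found in the data source, an integer for the number of events of an article that was found) and the function name are assumptions made for illustration. Expressing non-zero coverage relative to the full sample size is likewise our reading of the definition above.

```python
def coverage(event_counts):
    """Compute coverage and non-zero coverage for one study and one data source.

    event_counts holds one entry per sampled article: None if the article
    does not exist in the data source, otherwise its number of events.
    """
    sample_size = len(event_counts)
    found = [c for c in event_counts if c is not None]
    nonzero = [c for c in found if c > 0]
    return {
        # Articles that exist in the data source, with or without events.
        "coverage_pct": 100 * len(found) / sample_size,
        # Articles that exist in the data source and have at least one event.
        "nonzero_coverage_pct": 100 * len(nonzero) / sample_size,
    }


# Hypothetical sample of five articles: three found, two of them with events.
result = coverage([12, 0, None, 3, None])
print(result)
```

In this invented sample, the article found with zero events counts towards coverage but not towards non-zero coverage, which is the case described for Mendeley above.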

We focus our analysis on altmetric data sources that have been covered in at least two studies. Thus, we report on the coverage of 11 altmetric data sources (Mendeley, Twitter, CiteULike, Blogs, F1000, Facebook, Google+, Wikipedia, News, Reddit, and ResearchBlogging), reported across 25 studies, with a total of 100 reported values. Accordingly, coverage results for the following data sources were not included in the analysis: Delicious (Zahedi et al. 2014a), LibraryThing (Hammarfelt 2014), BibSonomy (Haustein and Siebenlist 2011), Connotea (Haustein and Siebenlist 2011; García et al. 2014), ResearchGate, Academia.edu, ORCID, Xing, MySpace (Haustein et al. 2014a), Figshare, DataCite, Nature.com posts, ScienceSeeker, and WordPress (Bornmann 2015c), Amazon reviews, WorldCat holdings (Kousha and Thelwall 2015c), Altmetric mentions (Araújo et al. 2015), peer review sites, Pinterest, Q&A threads, bibliometric data (Bornmann 2014c), software mentions (Howison and Bullard 2015), data citations (Peters et al. 2015), acknowledgements (Costas and Leeuwen 2012), syllabus mentions (Kousha and Thelwall 2015b), and mainstream media discussions (Haustein et al. 2015b).

In this analysis, the following research questions are investigated:

- RQ1.1: What is the overall percentage coverage reported across studies for different data sources?

- RQ1.2: What is the overall mean event count reported across studies for different data sources?

- RQ1.3: What are the overall percentage non-zero coverage and mean non-zero event count reported across studies for different data sources?

- RQ1.4: What is the overall percentage coverage reported across studies for different disciplines?

In the analysis, we do not consider the coverage of altmetric aggregators, nor do we consider the coverage of usage metrics such as HTML views, nor downloads. We also do not consider the coverage of sources of citations, such as WoS, Scopus, PubMed, or CrossRef. Furthermore, some studies could not be considered in the analysis:

- Studies that do not report the actual sample size (e.g., Alperin 2015a; Bar-Ilan 2014; Haustein and Larivière 2014; Haustein et al. 2014a, b; Peters et al. 2012; Thelwall and Sud 2015).

- Studies that do not report the actual number of articles covered (e.g., Fairclough and Thelwall 2015; Hammarfelt 2013).

- Studies that report on coverage but whose results could not be compared to those from other studies. For example, Holmberg and Thelwall (2014) report on the coverage of tweets by researchers and not the coverage of publications. Bornmann (2014c) reports on unique tweeters mentioning articles rather than on the number of tweets.

When coverage is reported as a break down by discipline (e.g., Costas et al. 2015; Haustein et al. 2015b; Kousha and Thelwall 2015c; Maleki 2015a; Mohammadi and Thelwall 2014; Mohammadi et al. 2015a), document type (e.g., Haustein et al. 2015b; Zahedi et al. 2014a), or gender (e.g., Paul-Hus et al. 2015), only the overall values for all disciplines, document types, or genders are included in the analysis to answer RQ1.1, RQ1.2, and RQ1.3.

In response to RQ1.1, Table 4 and Table 5 show the aggregated percentage coverage across the 25 studies analysed for the 11 aforementioned altmetric data sources. A data source refers to the social media data source investigated. The total sample size is the sum of all articles that were considered by the individual studies for the calculation of the coverage. The total number of articles covered is the number of articles available on the social media platform that could potentially have altmetric events; some of these, however, have none. The overall percentage coverage is the percentage of articles covered with respect to the total sample size. Table 5 shows non-zero coverage in answer to the first part of RQ1.3; articles without events are therefore not counted as covered in these studies.
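The aggregation described above (summing sample sizes and covered articles over studies, then taking one percentage) can be sketched as follows. The study labels and numbers are hypothetical placeholders, not values from Table 4 or Table 5, and the names `StudyCoverage` and `overall_coverage` are introduced here only for illustration.

```python
from dataclasses import dataclass
from typing import Iterable, Tuple


@dataclass
class StudyCoverage:
    study: str        # hypothetical study label
    sample_size: int  # articles considered by the study
    covered: int      # articles found in the data source


def overall_coverage(studies: Iterable[StudyCoverage]) -> Tuple[int, int, float]:
    """Pool studies for one data source into an overall percentage coverage."""
    studies = list(studies)
    total_sample = sum(s.sample_size for s in studies)
    total_covered = sum(s.covered for s in studies)
    if total_sample == 0:
        raise ValueError("total sample size must be positive")
    return total_sample, total_covered, 100.0 * total_covered / total_sample


# Hypothetical input: three studies reporting on the same data source.
studies = [
    StudyCoverage("study A", 1000, 650),
    StudyCoverage("study B", 4000, 2200),
    StudyCoverage("study C", 500, 150),
]
total_sample, total_covered, pct = overall_coverage(studies)
print(f"{total_covered}/{total_sample} articles covered ({pct:.1f} %)")
```

Pooling in this way weights each study by its sample size, so the result generally differs from the unweighted mean of the per-study percentages (50 % for the placeholder values here). Large studies therefore dominate the aggregated figure.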

In Table 4, Mendeley has the highest coverage of 59.2 % across 15 studies. Twitter has a medium coverage of 24.3 % across 11 studies. Apart from CiteULike with a coverage of 10.6 % across 8 studies, all other data sources have a low coverage of below 8 %. Non-zero coverage, shown in Table 5, gives slightly different results: F1000 has a coverage of 100 % in one study, while Mendeley has 40.6 % across 3 studies.

The coverage of altmetric events is shown in Tables 6 and 7, answering RQ1.2 and the second part of RQ1.3. The total event count is the total number of altmetric events available for the sample. The average event count is the mean of the altmetric events for that sample. Different types of events are reported for individual data sources, such as: Mendeley bookmarks, readers, and readership; Twitter tweets; CiteULike bookmarks; blog mentions and posts; F1000 reviews; Facebook likes, shares, and mentions on walls or pages; Wikipedia mentions; PubMed HTML views and downloads; and Reddit posts (excluding comments). Table 6 shows that Mendeley has the highest mean event count of 6.92 across 9 studies, and Table 7 shows that Mendeley also has the highest non-zero mean event count of 15.8 across 7 studies. All other data sources have a mean event count below 1 in Table 6, and a mean non-zero event count below 4 in Table 7. The mean non-zero event counts in Table 7 are all higher than the corresponding mean event counts in Table 6.
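The relationship between the two kinds of mean can be made explicit with a short derivation. It is a sketch under the assumption, not stated explicitly in the studies, that both means are computed on the same sample and that the non-zero mean divides by the number of articles with at least one event. The symbols $E$, $N$ and $N_{+}$ are introduced here for illustration only.

```latex
% E     : total event count for the sample
% N     : sample size (all articles)
% N_{+} : number of articles with at least one event, 0 < N_{+} \le N
\[
  \bar{e} = \frac{E}{N}, \qquad
  \bar{e}_{+} = \frac{E}{N_{+}}, \qquad
  \frac{\bar{e}_{+}}{\bar{e}} = \frac{N}{N_{+}} \ge 1 .
\]
```

Under this assumption the non-zero mean can never be lower than the ordinary mean, and the ratio between them is the reciprocal of the non-zero coverage fraction. Because Tables 6 and 7 pool different sets of studies, the ratio need not hold exactly for the reported values.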

In response to RQ1.4, Table 8 gives the aggregated percentage coverage for different disciplines. The data sources Mendeley, Twitter, CiteULike, Blogs, Facebook, Google+, Wikipedia, and News have studies that report coverage values for different disciplines. Based on the most common categories used by the studies analysed, the disciplines are grouped according to: Biomedical and health sciences, Life and earth sciences, Mathematics and computer science, Natural sciences and engineering, Social sciences and humanities, and Multidisciplinary.

For Mendeley, the highest coverage is in Multidisciplinary (79.6 %) and Biomedical and health sciences (71 %). For Twitter, CiteULike, and Google+, the highest coverage is in Social sciences and humanities, with 39.1, 6.3, and 1.7 % respectively. For Blogs and News, the highest coverage is in Life and earth sciences, with 3.6 and 1.8 % respectively. For Facebook, the highest coverage is in Biomedical and health sciences, with 7.4 %. For Wikipedia, the highest coverage is in Multidisciplinary, with 6.9 %. Overall, the two technical disciplines (Natural sciences and engineering, and Mathematics and computer science) have a rather low coverage compared to the other disciplines.

Results of studies on cross-metric validation

From the data collected in the literature review in “Literature on altmetrics research” section, 58 publications were identified as having conducted studies on cross-metric validation. Twenty-seven of these studies considered cross-disciplinary differences, 9 investigated country biases and 10 looked into user groups and demographics such as the influences of professional levels, institutions, or gender. Most studies compared traditional metrics to altmetrics by calculating correlations. The limitations of applying correlations as a comparison method are however discussed in Thelwall and Fairclough (2015b), where simulations are conducted to systematically investigate the effect of heterogeneous datasets (having different years or disciplines) on correlation results.

The most popular bibliometric sources in studies on altmetrics are WoS, Scopus, and Google Scholar Citations (GSC). Other bibliometric sources include: Microsoft Academic Search (MAS), CrossRef and PubMed. Journal based citations include the Journal Impact Factor (JIF), the Journal Citation Score (JCS), the Journal Usage Factor (JUF), the Technological Impact Factor (TIF), the General Impact Factor (GIF), and the Journal to Field Impact Score (JFIS). Book citations encompass Google Books Citations and Thomson Reuters Book Citation Index (BKCI). University ranking indicators include the Times Higher Education Ranking, the QS World University Rankings, the Academic Ranking of World Universities, the CWTS Leiden Ranking, and the Webometrics Ranking of World Universities.

Several new metrics were proposed that do not directly have their sources from social media. For example, in Costas and Leeuwen (2012), funding acknowledgements have been investigated as a possible indication of impact. In Chen et al. (2015), 18 new metrics were proposed amongst them theses and dissertations advised, magazines authored, number of syllabi citations, and number of grants received. A new metric based on book reviews called reviewmetrics is presented in Zhou and Zhang (2015). Network measures based on altmetrics are proposed to rank journals in Loach and Evans (2015). Book mentions in syllabus are compared to citations from Scopus and BKCI citations in Kousha and Thelwall (2015b), while Cabezas-Clavijo et al. (2013) compare book loans and citations. In Haustein and Siebenlist (2011), social bookmarks (collected from CiteULike, BibSonomy and Connotea) are used to evaluate the global usage of journals. Bookmark based indicators (based on CiteULike bookmarks) are investigated in Sotudeh et al. (2015). Furthermore, Orduña-Malea et al. (2014) analyse the web visibility of universities using webometrics. Thomson Reuters’s Data Citation Index (DCI) is investigated and compared with altmetrics from Altmetric.com, Impactstory, and PlumX in Peters et al. (2015).

Table 9 gives an overview of the cross-metric validation studies performed comparing altmetrics to citations from Scopus, WoS, GSC, and MAS. Most studies compared altmetrics to citations from WoS or Scopus. Table 12 in “Appendix 1” gives an overview of cross-metric validation studies that compare altmetrics to citations from CrossRef, PubMed, journal based citations, book citations, and university rankings. Most of the studies compared altmetrics to journal based citations. Table 13 in “Appendix 1” gives an overview of cross-metric validation studies that compare altmetrics to usage metrics: downloads from ScienceDirect, views from PMC, article level metrics from PLOS, holdings from WorldCat, metrics from Amazon, and downloads from arXiv. Table 15 in “Appendix 1” gives an overview of the cross-metric validation studies performed that compare altmetrics to altmetrics.

We performed a meta-analysis to give an overview of the results from cross-metric validation studies collected from the literature review in “Literature on altmetrics research” section. Previous meta-analyses comparing altmetrics to citations in Bornmann (2014a) and in Bornmann (2015a) were also considered. From these two meta-analyses, 11 additional publications that were not yet covered in the original sample from the literature review were identified and included in our meta-analysis. Thus, in total, 68 publications were identified as having performed cross-metric validation of altmetrics. The studies however applied diverse statistical methods such as regression (Winter 2015; Bornmann and Leydesdorff 2015; Bornmann and Haunschild 2015; Bornmann 2015a, 2014c, 2015c), ANOVA (Allen et al. 2013), Mann-Whitney tests (Shema et al. 2014), Kendall’s Tau (Weller and Peters 2012), bivariate correlation (Tang et al. 2012), or Pearson correlation (Costas et al. 2015; Eysenbach 2012; Haustein and Siebenlist 2011; Ringelhan et al. 2015; Shuai et al. 2012; Sotudeh et al. 2015; Thelwall and Wilson 2015a; Waltman and Costas 2014; Zhou and Zhang 2015; Henning 2010). Some studies did not mention the method applied (Torres-Salinas and Milanés-Guisado 2014; Peters et al. 2015; Haustein and Larivière 2014; Bowman 2015; Costas and Leeuwen 2012). The majority of studies, however, applied Spearman correlation. Therefore, in order to have a consistent method across all studies in the meta-analysis (Hopkins 2004), only those studies applying Spearman correlation were considered. Furthermore, some studies did not report the exact correlation values (Thelwall and Fairclough 2015a; Thelwall and Maflahi 2015b; Thelwall and Fairclough 2015b; Loach and Evans 2015; Jiang et al. 2013; Haustein and Siebenlist 2011), nor the specific sample size (Bar-Ilan 2014; Cabezas-Clavijo et al. 2013; Chen et al. 2015; Fausto et al. 2012; Ortega 2015b; Thelwall and Sud 2015), thus these results could not be considered in our meta-analysis.
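Since the meta-analysis is restricted to Spearman correlation, a minimal sketch of how such a coefficient is obtained may be useful. It computes the Pearson correlation of average ranks, which is the standard tie-aware definition; the reader and citation counts are hypothetical and the function names are introduced here for illustration, not taken from any of the studies.

```python
from math import sqrt
from typing import List, Sequence


def average_ranks(values: Sequence[float]) -> List[float]:
    """Rank values from 1..n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j to the end of the current group of tied values.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2.0 + 1.0  # mean of the 1-based positions i+1..j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation of two equally long sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    if vx == 0 or vy == 0:
        raise ValueError("correlation is undefined for constant input")
    return cov / sqrt(vx * vy)


def spearman(x: Sequence[float], y: Sequence[float]) -> float:
    """Spearman rank correlation: Pearson correlation of average ranks."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    return pearson(average_ranks(x), average_ranks(y))


# Hypothetical per-article counts for five articles.
readers = [0, 2, 5, 5, 40]
citations = [1, 0, 3, 10, 8]
rho = spearman(readers, citations)
print(f"Spearman rho = {rho:.3f}")
```

Because only ranks enter the calculation, a single article with a very large count (such as the value 40 above) influences the result no more than any other top-ranked article.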

The majority of studies in our sample investigated the correlation between altmetrics and citations from WoS, Scopus and GSC. Thus, the focus of our meta-analysis was on studies comparing altmetrics with these three citation sources. Therefore, studies comparing altmetrics to other metrics like the JIF (Eyre-Walker and Stoletzki 2013; Li et al. 2012; Haustein et al. 2014b), the JCS (Zahedi et al. 2014a, 2015a), the Immediacy Factor (IF), and the Eigenfactor (EF) (Haustein et al. 2014b), BKCI and Google Books citations (Kousha and Thelwall 2015c), CrossRef and PMC cites (Liu et al. 2013; Yan and Gerstein 2011), university rankings (Thelwall and Kousha 2015), and usage metrics like downloads (Schlögl et al. 2014; Liu et al. 2013; Thelwall and Kousha 2015), or page views (Liu et al. 2013; Thelwall and Kousha 2015), were not considered in our meta-analysis.

We performed our meta-analysis using the Comprehensive Meta-Analysis (CMA) software (footnote 26), applying a mixed effects analysis. A random effects model was used to combine studies within each subgroup. The following research questions were investigated:

- RQ2.1: What is the overall correlation between altmetrics and WoS citations, Scopus citations and Google Scholar citations?

- RQ2.2: What is the overall correlation between non-zero altmetrics and WoS citations, Scopus citations and Google Scholar citations?

- RQ2.3: What is the overall correlation between altmetrics and other altmetrics?

- RQ2.4: What is the overall correlation between altmetrics and WoS citations, Scopus citations, Google Scholar citations, and other altmetrics for different disciplines?
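To give an idea of what combining studies within a subgroup with a random effects model involves, the following is a minimal sketch. It does not reproduce the CMA software. It assumes a Fisher z-transformation of each correlation, the usual variance approximation 1/(n - 3), and the DerSimonian-Laird estimate of the between-study variance. The function name `pool_random_effects` and the input values are hypothetical.

```python
from math import atanh, tanh
from typing import List, Tuple


def pool_random_effects(studies: List[Tuple[float, int]]) -> Tuple[float, float]:
    """Pool (correlation, sample size) pairs under a random effects model.

    Returns the pooled correlation and the estimated between-study
    variance tau^2 (on the Fisher z scale).
    Assumptions: Fisher z-transform, variance 1/(n - 3) per study,
    DerSimonian-Laird estimator for tau^2.
    """
    if not studies:
        raise ValueError("at least one study is required")
    for r, n in studies:
        if not -1.0 < r < 1.0 or n <= 3:
            raise ValueError("need |r| < 1 and n > 3 for every study")

    z = [atanh(r) for r, _ in studies]          # Fisher z per study
    v = [1.0 / (n - 3) for _, n in studies]     # within-study variance
    w = [1.0 / vi for vi in v]                  # fixed effect weights

    sum_w = sum(w)
    z_fixed = sum(wi * zi for wi, zi in zip(w, z)) / sum_w
    # Cochran's Q measures the heterogeneity between studies.
    q = sum(wi * (zi - z_fixed) ** 2 for wi, zi in zip(w, z))
    df = len(studies) - 1
    c = sum_w - sum(wi * wi for wi in w) / sum_w
    tau2 = max(0.0, (q - df) / c) if c > 0 else 0.0

    # Random effects weights add tau^2 to every within-study variance.
    w_re = [1.0 / (vi + tau2) for vi in v]
    z_re = sum(wi * zi for wi, zi in zip(w_re, z)) / sum(w_re)
    return tanh(z_re), tau2                     # back-transform to r


# Hypothetical subgroup: three studies reporting Spearman correlations.
example = [(0.10, 500), (0.50, 50), (0.30, 200)]
pooled_r, tau2 = pool_random_effects(example)
print(f"pooled r = {pooled_r:.3f}, tau^2 = {tau2:.4f}")
```

When the studies disagree strongly, tau^2 grows and the weights become more equal, so a single very large study dominates the pooled value less than it would under a fixed effect model.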

Table 10 gives an overview of the results from the meta-analysis answering RQ2.1, RQ2.2, and RQ2.3, for those altmetrics with correlation values reported in at least two studies. Thus, we analyse a total of 25 studies investigating 9 altmetrics: Mendeley, Twitter, CiteULike, Blogs, F1000, Facebook, Google+ (only on non-zero data samples), Wikipedia and Delicious. Some articles reported correlation values for multiple disciplines, giving individual values for each discipline as well as an aggregate value for a group of disciplines. For the meta-analysis, when an aggregate value is reported, only this value is taken (Thelwall and Wilson 2015b; Kousha and Thelwall 2015c; Mohammadi and Thelwall 2014; Mohammadi et al. 2015a). Some articles reported on correlation values for non-zero counts, i.e., for data having only non-zero metric counts. These non-zero results were analysed separately. Different events were reported for the data sources: Mendeley readership, readership count, user count, readership score, saves and bookmarks; Twitter tweets, and mentions; CiteULike user count, saves, and bookmarks; Blog mentions; F1000 recommendations, assessor scores, assigned labels, rating, and FFa Scores; Facebook shares and walls; Wikipedia cites; and Delicious bookmarks. Facebook comments, likes and clicks (Priem et al. 2012b), and the number of F1000 evaluators (Li et al. 2012) were not considered.

In Table 10, Mendeley has the highest correlation value of 0.37 with citations, whereas Google+ and Delicious have the lowest correlation value with citations of 0.07. Mendeley also has the highest correlation value of 0.547 with citations on non-zero datasets. Facebook has the lowest correlation value of 0.109 with citations on non-zero datasets. With other altmetrics, CiteULike has the highest correlation value of 0.322 and Wikipedia the lowest of 0.053. Mendeley has the highest overall correlation value across citations and altmetrics of 0.335, while Delicious has the lowest overall correlation of 0.064. Further details of the results from the meta-analysis, answering RQ2.1, RQ2.2, and RQ2.3, are shown in “Appendix 1”.

In Table 11, the results of cross-metric validation studies are shown for several data sources, comparing across different disciplines, thus answering RQ2.4. From the relevant studies considered, four common disciplinary categories could be identified: Biomedical and life sciences, Social sciences and humanities, Natural sciences and engineering, and Multidisciplinary. Most of the studies reporting correlation values focused on Biomedical and life sciences. For Mendeley and CiteULike, Biomedical and life sciences had the highest correlation compared to most of the metrics. For Twitter, the highest correlation is measured for Social sciences and humanities.

Discussion

Overall, the analysis of the results on the coverage of altmetrics shows a low coverage across all metrics and for all disciplines. The results of cross-metric validation studies also show overall a weak correlation to citations across all disciplines. These studies however faced numerous challenges and issues as further discussed in “Challenges and issues” section, ranging from challenges in data collection to issues in data integrity. We also faced some of these issues when compiling the results across the different studies. The most challenging issue when trying to consolidate the results and perform an overall analysis across so many studies was the confusing and sometimes contradictory terminology, together with the lack of standard definitions for altmetric events. Thus the results presented here need to be considered with some caution due to the many discrepancies amongst the methodologies, datasets, definitions, and goals of the various studies considered.

Conclusion and outlook

Altmetrics is still in its infancy; however, we can already detect a growing importance of this emergent application area of social media for research evaluation. This paper gives a compact overview of the key aspects relating to altmetrics. The major aggregators were analysed according to the features they offer, and the data sources they collect events from. A snapshot of the research literature on altmetrics shows a steady increase in the number of research studies and publications on altmetrics since 2011. In particular, the validity of altmetrics compared to traditional bibliometric citation counts was investigated. Furthermore, a detailed analysis of the coverage of altmetrics data sources was presented as well as a meta-analysis of the results of cross-metric validation studies. Mendeley has the highest coverage of about 59 % across 15 studies and the highest correlation value when compared to citations of 0.37 (and 0.5 on non-zero datasets). Thus, overall, results from the literature review, coverage analysis and meta-analysis show that presently, Mendeley is the most interesting and promising social media source for altmetrics, although the data sources are becoming more and more diverse.

Challenges and issues

Altmetrics is however still a controversial topic in academia and this is partly due to the challenges and issues it faces, some of which are listed as follows.

1. Data collection issues. Altmetrics are usually collected via social media APIs, for example, via Mendeley’s, Facebook’s, or Twitter’s API, or scraped from HTML websites. There are however accessibility issues with certain APIs and restrictions on the amount of data collectable per day. Thus data collection takes a long time, and inconsistencies due to delays in data updates can arise (Zahedi et al. 2014b). Finding the right search queries to use is also an issue as not all research objects (not even all published research articles) have DOIs. DOIs are also not consistent across the different registration agencies, and tracking and resolving DOIs to URLs can have complications such as accessibility issues, or difficulties with cookies (Zahedi et al. 2015b). Alternatively, the title or publication date of the publication might be used to search. This is however dependent on the quality of the metadata from the different bibliometric sources. These data collection issues are faced by the various altmetrics aggregators and this results in inconsistencies with the metrics they provide (Zahedi et al. 2015b).

2. Data processing and disambiguation issues. Altmetrics are based on the concept of tracking mentions of research output and linking them to the research objects. Resolving these links to unique identifiers can be very challenging. There might exist multiple versions of the same artifact across several sites, using different identifiers (Liu and Adie 2013). There is also the issue of missing links as some mentions do not include direct links to artifacts. A solution to this can be achieved by finding different ways to map the mentions to the articles by computing the semantics involved, also called Semantometrics (Knoth and Herrmannova 2014). Tracking multi-media data sources however still proves challenging, as most videos or podcasts do not include mentions to articles in their meta-data, but rather verbally in the audio or video content (Liu and Adie 2013). From Table 3 in “Events tracked by altmetric aggregators” section, we see that Apply Events are rarely tracked. This might be due to the fact that apply acts are hard to identify, for example, distinguishing between citations that are mentions and those that discuss results is very complex (Bornmann 2015b). In addition, some authors cannot be identified uniquely simply by using their names, and there could be variations to author names that could make tracking more complex. Some of the altmetrics aggregators, as shown in Table 1, provide features for disambiguation. Altmetric.com applies text mining mechanisms to identify missing links to articles, and disambiguates between different versions of the same output, collating all the attention into one. PLOS ALM supports author disambiguation and identity resolution by using ORCID (Open Researcher and Contributor ID) (Haak et al. 2012). Plum Analytics disambiguates both links to articles and names of authors.

3. No common definition of altmetric events and confusing terminology. There are many different ways by which altmetrics events can be measured from a data source (Liu and Adie 2013). Table 3 shows the diverse range of altmetrics events provided by altmetrics aggregators. One challenge is that there is no standard definition of a specific altmetric event, thus aggregators name their events differently, for example, the number of Mendeley readers of an article is often referred to as Mendeley readership. In addition, event counts from a single data source could be measured in different ways, and aggregators do not always explicitly state how the events are counted. For example, it is often unclear whether the likes and comments on a Facebook wall post are counted as well (Liu and Adie 2013), or whether re-tweets are counted or only tweets. This challenge is further compounded by confusing terminology such as the unclear distinction between usage metrics and altmetrics (Glänzel and Gorraiz 2015).

4. Stability, coverage and usage of social media sources. Social media data sources are liable to change or discontinue their service (Bornmann 2014b). In “Data sources used by altmetric aggregators” section, some discontinued social media data sources are mentioned, and in “The altmetrics landscape” section some altmetrics aggregators are also mentioned as no longer being in service. This fluctuation in the availability of altmetrics poses a challenge, especially regarding reproducing the evidence for the event counts. Furthermore, the usage and coverage of social media data sources depend on various factors such as country, demographics and audiences (Bornmann 2014b; Priem et al. 2014). Some data sources are popular in certain countries, for example, BibSonomy is popular in Germany (Peters et al. 2012).

5. Data integrity. There are many concerns regarding gaming, spamming and plagiarism in altmetrics. Several research studies have been conducted to investigate the manipulation of research impact. One such study on automated Twitter accounts revealed that automated bot accounts created a substantial number of tweets to scientific articles and that their tweeting criteria are usually random and non-qualitative (Haustein et al. 2015a). In Table 1, we present an overview of the various features offered by the altmetrics aggregators. Some of them offer novel tools and features that can help detect suspicious activity. Plum Analytics, Impactstory and PLOS ALM gather citation metrics as part of their data, which helps users to compare traditional metrics with altmetrics to see for themselves if there is any correlation between the two. Altmetric.com, in addition to detecting gaming, also picks up on spam accounts and excludes them from the final altmetric score. As part of their data integrity process, PLOS ALM generates alerts from Lagotto in order to determine what may be going wrong with the application, data sources, and data requests. These alerts are used to discover potential gaming activities and system operation errors. Webometric Analyst checks actively for plagiarism and supports automated spam removal by excluding URLs from suspicious websites. SSRN and PLOS ALM have set up strategies to ensure data integrity (Gordon et al. 2015). One such system is DataTrust (Lin 2012), developed by PLOS ALM, which keeps track of suspicious metrics activity. PLOS also analyses user behaviour and cross validates usage metrics with other sources in order to detect irregular usage (Gordon et al. 2015). SSRN issues warnings when fraudulent automatic downloads are detected (Edelman et al. 2009).

Future research areas

The issues listed above underline the need for common standards and best practices, especially across altmetrics aggregators (Zahedi et al. 2015b). To this end, NISO (2014) has started an initiative to formulate standards, propose best practices and develop guidelines and recommendations for using altmetrics to assess research impact. Topics include defining a common terminology for altmetrics, developing strategies to ensure data quality, and the promotion and facilitation of the use of persistent identifiers. Ensuring consistency and normalisation of altmetrics will also be an important future research topic (Wouters and Costas 2012), as well as defining a common terminology, theories and classification of altmetric events (Haustein et al. 2016; Lin and Fenner 2013a).

Altmetrics offer a unique opportunity to analyse the reach of scholarly output in society (Taylor 2013). In future, network analysis of altmetrics will be needed to study research interaction and communication (Davis et al. 2015; Priem et al. 2014). Altmetrics can be used to describe research collaboration amongst scholars, scientists, and authors, thus leading to an extension of existing bibliometric co-occurrence networks (Wouters and Costas 2012). For example, co-blogging, co-tweeting, or co-bookmarking networks could pave the way to new sources of information for finding and recommending research articles and other items (Kurtz and Henneken 2014; Mayr and Scharnhorst 2015). To understand this interplay between different elements, and to gain insights into how people use, adapt and translate research will require the interdisciplinary collaboration between the humanities and computer science (Taylor 2013). The visualisation of such networks is also a potential research area (Priem et al. 2014). There have already been works in this direction (Hoffmann et al. 2015; Kraker et al. 2015; Uren and Dadzie 2015). More collaboration is needed between information retrieval, web mining and altmetrics (Davis et al. 2015; Mayr and Scharnhorst 2015). Text mining techniques will be needed to track indirect mentions of research outputs over vast amounts of textual content on the Web, especially from blogs, news articles, or government documents (Davis et al. 2015).

Some data sources that have not received much, or any attention up till now (see Table 5), might receive more in future, for example, YouTube, SlideShare, Academia.edu, or Stack Overflow (Priem et al. 2014). Others that have received nearly no attention could be potential future altmetric data sources or aggregators, for example, Arnetminer (footnote 27), which searches and extracts researcher profiles from the Web and from academic social networks (Tang et al. 2008); Paper Critic (footnote 28), an online peer-review tool (Wouters and Costas 2012); and the Zotero reference manager, which up till now has not been studied due to the lack of collection of metrics (Priem et al. 2014; Wouters and Costas 2012). Zotero however recently announced that an API for altmetrics data will be released soon (footnote 29).

Another important topic will be to investigate who the users are who engage with scholarly outputs on social media. Social media platforms and their user communities are new and diverse and not much is known nor understood about the users’ motivations, nor the contexts in which they act on research outputs (Alperin 2015b; Haustein et al. 2016). There have been some studies on the motivation and usage of social media data sources, for example, using content analysis (Shema et al. 2015), and user surveys (Mohammadi et al. 2015b) to find out if the users are scholars or non-scholars, and to determine their geographical distribution, career stage, and demographics. These findings will make it possible to understand and explain the various altmetric events, and validate their usage in research evaluation (Haustein et al. 2016).

The results of the meta-analysis in “Results of studies on cross-metric validation” section show overall a weak correlation between altmetrics and citation counts, with Spearman correlation values ranging from 0.07 to 0.5. These results confirm that altmetrics do indeed measure a different kind of impact than citations. Thus in future, research should focus more on investigating how altmetrics measure the broader impact of research and less on comparing altmetrics with traditional metrics (Bornmann 2014b). A future challenge will be to determine alternative ways to evaluate altmetrics, and to identify possible sources of ground-truth, which could be peer judgement or recommendations from the scientific community (Davis et al. 2015).

For research evaluation, it is still questioned whether altmetrics can be viewed as an alternative to traditional metrics in academia (Cronin 2013). Further research needs to be done to determine how altmetrics should be applied and considered for various purposes (e.g., hiring processes, academic promotions, funding purposes, etc.) and at different aggregation levels (e.g., individual researcher level, departmental levels, or university levels). Meaningful combinations with traditional metrics still need to be explored (Wouters and Costas 2012). Altmetrics should rather be seen as a complement to traditional metrics and be used only to inform decisions as part of a critical peer review process (Bornmann 2014b).

Notes

1. http://altmetrics.org/about/. Accessed 18 Feb 2016.

2. http://www.niso.org/topics/tl/altmetrics_initiative. Accessed 18 Feb 2016.

3. Mendeley is an online reference manager for scholarly publications.

4. http://www.altmetric.com. Accessed 18 Feb 2016.

5. https://impactstory.org. Accessed 18 Feb 2016.

6. http://www.plumanalytics.com. Accessed 18 Feb 2016.

7. http://article-level-metrics.plos.org. Accessed 18 Feb 2016.

8. https://www.growkudos.com. Accessed 18 Feb 2016.

9. http://lexiurl.wlv.ac.uk. Accessed 18 Feb 2016.

10. http://www.snowballmetrics.com. Accessed 18 Feb 2016.

11. http://readermeter.org. Accessed 18 Feb 2016.

12. http://50.17.213.175. Accessed 18 Feb 2016.

13. http://springerlink.bibliotecabuap.elogim.com/journal/11192. Accessed 18 Feb 2016.

14. http://www.journals.elsevier.com/journal-of-informetrics. Accessed 18 Feb 2016.

15. http://onlinelibrary.wiley.com/journal/10.1002/(ISSN)2330-1643. Accessed 18 Feb 2016.

16. http://plosone.org. Accessed 18 Feb 2016.

17. https://www.asis.org/proceedings.html. Accessed 18 Feb 2016.

18. http://insights.uksg.org/. Accessed 18 Feb 2016.

19. http://www.emeraldgrouppublishing.com/products/journals/journals.htm?id=AJIM. Accessed 18 Feb 2016.

20. http://journals.plos.org/plosbiology/. Accessed 18 Feb 2016.

21. http://www.issi2015.org/en/default.asp. Accessed 18 Feb 2016.

22. http://crln.acrl.org/. Accessed 18 Feb 2016.

23. http://recyt.fecyt.es/index.php/EPI/. Accessed 18 Feb 2016.

24. http://www.nature.com. Accessed 18 Feb 2016.

25. http://ceur-ws.org/. Accessed 18 Feb 2016.

26. https://www.meta-analysis.com/. Accessed 27 November 2015.

27. https://aminer.org/. Accessed 18 Feb 2016.

28. http://www.papercritic.com/. Accessed 18 Feb 2016.

29. https://www.zotero.org/blog/studying-the-altmetrics-of-zotero-data/. Accessed 18 Feb 2016.

References

Adie, E., & Roe, W. (2013). Altmetric: Enriching scholarly content with article-level discussion and metrics. Learned Publishing, 26(1), 11–17.

Allen, H. G., Stanton, T. R., Di Pietro, F., & Moseley, G. L. (2013). Social media release increases dissemination of original articles in the clinical pain sciences. PLoS One, 8(7), e68914.

Alperin, J. P. (2015a). Geographic variation in social media metrics: An analysis of Latin American journal articles. Aslib Journal of Information Management, 67(3), 289–304.

Alperin, J. P. (2015b). Moving beyond counts: A method for surveying Twitter users. http://altmetrics.org/altmetrics15/alperin/. Accessed 18 Feb 2016.

Andersen, J. P., & Haustein, S. (2015). Influence of study type on Twitter activity for medical research papers. In Proceedings of the 15th international society of scientometrics and informetrics conference.

Araújo, R. F., Murakami, T. R., De Lara, J. L., & Fausto, S. (2015). Does the global south have altmetrics? Analyzing a Brazilian LIS journal. In Proceedings of the 15th international society of scientometrics and informetrics conference (pp. 111–112).

Bar-Ilan, J. (2012). JASIST@mendeley. In ACM web science conference 2012 workshop.

Bar-Ilan, J. (2014). Astrophysics publications on arXiv, Scopus and Mendeley: a case study. Scientometrics, 100(1), 217–225.

Bar-Ilan, J., Haustein, S., Peters, I., Priem, J., Shema, H., & Terliesner, J. (2012). Beyond citations: Scholars’ visibility on the social Web. arXiv preprint. arXiv:1205.5611.

Bornmann, L. (2014a). Alternative metrics in scientometrics: A meta-analysis of research into three altmetrics. Scientometrics, 103(3), 1123–1144.

Bornmann, L. (2014b). Do altmetrics point to the broader impact of research? An overview of benefits and disadvantages of altmetrics. Journal of Informetrics, 8(4), 895–903.

Bornmann, L. (2014c). Validity of altmetrics data for measuring societal impact: A study using data from Altmetric and F1000Prime. Journal of Informetrics, 8(4), 935–950.

Bornmann, L. (2015a). Interrater reliability and convergent validity of F1000Prime peer review. Journal of the Association for Information Science and Technology, 66(12), 2415–2426.

Bornmann, L. (2015b). Letter to the editor: On the conceptualisation and theorisation of the impact caused by publications. Scientometrics, 103(3), 1145–1148.

Bornmann, L. (2015c). Usefulness of altmetrics for measuring the broader impact of research. Aslib Journal of Information Management, 67(3), 305–319.

Bornmann, L., & Haunschild, R. (2015). Which people use which scientific papers? An evaluation of data from F1000 and Mendeley. Journal of Informetrics, 9(3), 477–487.

Bornmann, L., & Leydesdorff, L. (2013). The validation of (advanced) bibliometric indicators through peer assessments: A comparative study using data from InCites and F1000. Journal of Informetrics, 7(2), 286–291.

Bornmann, L., & Leydesdorff, L. (2015). Does quality and content matter for citedness? A comparison with para-textual factors and over time. Journal of Informetrics, 9(3), 419–429.

Bornmann, L., & Marx, W. (2015). Methods for the generation of normalized citation impact scores in bibliometrics: Which method best reflects the judgements of experts? Journal of Informetrics, 9(2), 408–418.

Bowman, T. D. (2015). Tweet or publish: A comparison of 395 professors on Twitter. In Proceedings of the 15th international society of scientometrics and informetrics conference.

Buschman, M., & Michalek, A. (2013). Are alternative metrics still alternative? Bulletin of the American Society for Information Science and Technology, 39(4), 35–39.

Cabezas-Clavijo, Á., Robinson-García, N., Torres-Salinas, D., Jiménez-Contreras, E., Mikulka, T., Gumpenberger, C., Wernisch, A., & Gorraiz, J. (2013). Most borrowed is most cited? Library loan statistics as a proxy for monograph selection in citation indexes. In Proceedings of the 14th international society of scientometrics and informetrics conference (Vol. 2, pp. 1237–1252).

Chamberlain, S. (2013). Consuming article-level metrics: Observations and lessons. Information Standards Quarterly, 25(2), 4–13.

Chen, K., Tang, M., Wang, C., & Hsiang, J. (2015). Exploring alternative metrics of scholarly performance in the social sciences and humanities in Taiwan. Scientometrics, 102(1), 97–112.

Colledge, L. (2014). Snowball metrics recipe book, 2nd ed. Amsterdam, the Netherlands: Snowball Metrics program partners.

Costas, R., & van Leeuwen, T. N. (2012). Approaching the “reward triangle”: General analysis of the presence of funding acknowledgments and “peer interactive communication” in scientific publications. Journal of the American Society for Information Science and Technology, 63(8), 1647–1661.

Costas, R., Zahedi, Z., & Wouters, P. (2015). Do “altmetrics” correlate with citations? Extensive comparison of altmetric indicators with citations from a multidisciplinary perspective. Journal of the Association for Information Science and Technology, 66(10), 2003–2019.

Cronin, B. (2013). The evolving indicator space (iSpace). Journal of the American Society for Information Science and Technology, 64(8), 1523–1525.

Davis, B., Hulpuş, I., Taylor, M., & Hayes, C. (2015). Challenges and opportunities for detecting and measuring diffusion of scientific impact across heterogeneous altmetric sources. http://altmetrics.org/altmetrics15/davis/. Accessed 18 Feb 2016.

De Winter, J. (2015). The relationship between tweets, citations, and article views for PLOS One articles. Scientometrics, 102(2), 1773–1779.

Edelman, B., Larkin, I., et al. (2009). Demographics, career concerns or social comparison: Who games SSRN download counts? Harvard Business School.

Eyre-Walker, A., & Stoletzki, N. (2013). The assessment of science: The relative merits of post-publication review, the impact factor, and the number of citations. PLoS Biol, 11(10), e1001675.

Eysenbach, G. (2012). Can tweets predict citations? Metrics of social impact based on Twitter and correlation with traditional metrics of scientific impact. Journal of Medical Internet Research, 13(4), e123.

Fairclough, R., & Thelwall, M. (2015). National research impact indicators from Mendeley readers. Journal of Informetrics, 9(4), 845–859.

Fausto, S., Machado, F. A., Bento, L. F. J., Iamarino, A., Nahas, T. R., & Munger, D. S. (2012). Research blogging: Indexing and registering the change in science 2.0. PLoS One, 7(12), e50109.

Fenner, M. (2013). What can article-level metrics do for you? PLoS Biol, 11(10), e1001687.

García, N. R., Salinas, D. T., Zahedi, Z., & Costas, R. (2014). New data, new possibilities: exploring the insides of Altmetric.com. El profesional de la información, 23(4), 359–366.

Glänzel, W., & Gorraiz, J. (2015). Usage metrics versus altmetrics: Confusing terminology? Scientometrics, 102(3), 2161–2164.

Gordon, G., Lin, J., Cave, R., & Dandrea, R. (2015). The question of data integrity in article-level metrics. PLoS Biol, 13(8), e1002161.

Haak, L. L., Fenner, M., Paglione, L., Pentz, E., & Ratner, H. (2012). ORCID: A system to uniquely identify researchers. Learned Publishing, 25(4), 259–264.

Hammarfelt, B. (2013). An examination of the possibilities that altmetric methods offer in the case of the humanities. In Proceedings of the 14th international society of scientometrics and informetrics conference (Vol. 1, pp. 720–727).

Hammarfelt, B. (2014). Using altmetrics for assessing research impact in the humanities. Scientometrics, 101(2), 1419–1430.

Haunschild, R., Stefaner, M., & Bornmann, L. (2015). Who publishes, reads, and cites papers? An analysis of country information. In Proceedings of the 15th international society of scientometrics and informetrics conference.

Haustein, S., & Larivière, V. (2014). A multidimensional analysis of Aslib proceedings-using everything but the impact factor. Aslib Journal of Information Management, 66(4), 358–380.

Haustein, S., & Larivière, V. (2015). The use of bibliometrics for assessing research: Possibilities, limitations and adverse effects. In Incentives and performance (pp. 121–139). Springer.

Haustein, S., Bowman, T. D., Holmberg, K., Tsou, A., Sugimoto, C. R., & Larivière, V. (2015a). Tweets as impact indicators: Examining the implications of automated “bot” accounts on Twitter. Journal of the Association for Information Science and Technology, 67(1), 232–238.

Haustein, S., Costas, R., & Larivière, V. (2015b). Characterizing social media metrics of scholarly papers: The effect of document properties and collaboration patterns. PLoS One, 10(3), e0120495.

Haustein, S., Bowman, T. D., & Costas, R. (2016). Interpreting ‘Altmetrics’: Viewing acts on social media through the lens of citation and social theories. In Cassidy R. Sugimoto (Ed.), Theories of informetrics and scholarly communication. A Festschrift in honor of Blaise Cronin (pp. 372–406). De Gruyter.

Haustein, S., Peters, I., Bar-Ilan, J., Priem, J., Shema, H., & Terliesner, J. (2013). Coverage and adoption of altmetrics sources in the bibliometric community. In Proceedings of the 14th international society of scientometrics and informetrics conference (Vol. 1, pp. 468–483).

Haustein, S., Peters, I., Bar-Ilan, J., Priem, J., Shema, H., & Terliesner, J. (2014a). Coverage and adoption of altmetrics sources in the bibliometric community. Scientometrics, 101(2), 1145–1163.

Haustein, S., Peters, I., Sugimoto, C. R., Thelwall, M., & Larivière, V. (2014b). Tweeting biomedicine: An analysis of tweets and citations in the biomedical literature. Journal of the Association for Information Science and Technology, 65(4), 656–669.

Haustein, S., & Siebenlist, T. (2011). Applying social bookmarking data to evaluate journal usage. Journal of Informetrics, 5(3), 446–457.

Henning, V. (2010). The top 10 journal articles published in 2009 by readership on Mendeley. Mendeley Blog. http://www.mendeley.com/blog/academic-features/the-top-10-journalarticles-published-in-2009-by-readership-on-mendeley. Accessed 18 Feb 2016.

Hoffmann, C. P., Lutz, C., & Meckel, M. (2015). A relational altmetric? Network centrality on ResearchGate as an indicator of scientific impact. Journal of the Association for Information Science and Technology, 67(4), 765–775.

Holmberg, K. (2015). Online Attention of Universities in Finland: Are the bigger universities bigger online too? In Proceedings of the 15th international society of scientometrics and informetrics conference.

Holmberg, K., & Thelwall, M. (2014). Disciplinary differences in Twitter scholarly communication. Scientometrics, 101(2), 1027–1042.

Hopkins, W. G. (2004). An introduction to meta-analysis. Sportscience, 8, 20–24.

Howison, J., & Bullard, J. (2015). Software in the scientific literature: Problems with seeing, finding, and using software mentioned in the biology literature. Journal of the Association for Information Science and Technology (in press).

Jiang, J., He, D., & Ni, C. (2013). The correlations between article citation and references’ impact measures: What can we learn? In Proceedings of the American society for information science and technology (Vol. 50, pp. 1–4). Wiley Subscription Services, Inc., A Wiley Company.

Knoth, P., & Herrmannova, D. (2014). Towards semantometrics: A new semantic similarity based measure for assessing a research publication’s contribution. D-Lib Magazine, 20(11), 8.

Kousha, K., & Thelwall, M. (2015a). Alternative metrics for book impact assessment: Can choice reviews be a useful source? In Proceedings of the 15th international society of scientometrics and informetrics conference.

Kousha, K., & Thelwall, M. (2015b). An automatic method for assessing the teaching impact of books from online academic syllabi. Journal of the Association for Information Science and Technology (in press).

Kousha, K., & Thelwall, M. (2015c). Can Amazon.com reviews help to assess the wider impacts of books? Journal of the Association for Information Science and Technology, 67(3), 566–581.

Kraker, P., Schlögl, C., Jack, K., & Lindstaedt, S. (2015). Visualization of co-readership patterns from an online reference management system. Journal of Informetrics, 9(1), 169–182.

Kumar, S., & Mishra, A. K. (2015). Bibliometrics to altmetrics and its impact on social media. International Journal of Scientific and Innovative Research Studies, 3(3), 56–65.

Kurtz, M. J., & Henneken, E. A. (2014). Finding and recommending scholarly articles. In Beyond bibliometrics: Harnessing multidimensional indicators of scholarly impact (pp. 243–259).

Li, X., Thelwall, M., & Giustini, D. (2011). Validating online reference managers for scholarly impact measurement. Scientometrics, 91(2), 461–471.

Li, X., & Thelwall, M. (2012). F1000, Mendeley and traditional bibliometric indicators. In Proceedings of the 17th international conference on science and technology indicators (pp. 451–551).

Lin, J. (2012). A case study in anti-gaming mechanisms for altmetrics: PLoS ALMs and datatrust. http://altmetrics.org/altmetrics12/lin. Accessed 18 Feb 2016.

Lin, J., & Fenner, M. (2013a). Altmetrics in evolution: defining and redefining the ontology of article-level metrics. Information Standards Quarterly, 25(2), 20.

Lin, J., & Fenner, M. (2013b). The many faces of article-level metrics. Bulletin of the American Society for Information Science and Technology, 39(4), 27–30.

Liu, C. L., Xu, Y. Q., Wu, H., Chen, S. S., & Guo, J. J. (2013). Correlation and interaction visualization of altmetric indicators extracted from scholarly social network activities: dimensions and structure. Journal of Medical Internet Research, 15(11), e259.

Liu, J., & Adie, E. (2013). Five challenges in altmetrics: A toolmaker’s perspective. Bulletin of the American Society for Information Science and Technology, 39(4), 31–34.

Loach, T. V., & Evans, T. S. (2015). Ranking journals using altmetrics. In Proceedings of the 15th international society of scientometrics and informetrics conference.

Maflahi, N., & Thelwall, M. (2015). When are readership counts as useful as citation counts? Scopus versus Mendeley for LIS journals. Journal of the Association for Information Science and Technology, 67(1), 191–199.