Abstract

This chapter describes the current state of the art in altmetrics research and practice. Altmetrics, evaluation methods of scholarly activities that serve as alternatives to citation-based metrics, are a relatively new but quickly growing area of research. For example, researchers expect that altmetrics based on social media data will reflect a broader public’s perception of science and will provide timely reactions to new scientific findings. This chapter explains how altmetrics have emerged and how they are related to the academic use of social media. It also provides an overview of current altmetric tools and potential data sources for computing alternative metrics, such as blogs, Twitter, social bookmarking services, and Wikipedia.

1 Introduction

Scientific communication is, to a large degree, built upon the process of reading existing literature and situating one’s own work within this broader research context. This context is primarily established by citing other scholars’ publications (Weller and Peters 2012), and over time, these citations have become indicators of scholarly impact (Cronin 1984). Therefore, authors, journals, and/or papers that are most frequently cited are considered to be the most influential. Up to now, the various measures used to evaluate academic performance and impact are largely based upon the counting of citations. The disciplines of bibliometrics and scientometrics (Leydesdorff 1995) deal with the challenges of providing useful indicators and balancing the shortcomings of individual metrics (see also Haustein and Larivière 2015). Classical bibliometric indicators for measuring scholarly activity, such as publication numbers and citations, have a tradition that dates back to the creation of the Science Citation Index in the 1960s. Publication output and citations can thus have very practical implications for scholars, as they are used to evaluate individuals as well as institutions and can even influence job appointments, tenure, and project funding.

In addition, citation counts are used to retrieve information from publication databases. In fact, citation metrics were introduced at the Institute for Scientific Information’s (ISI) Web of Knowledge to support new search functions in the Web of Science databases. Citations help in the identification of popular or highly recognized articles and can also be used to explore research topics by recommending similar publications; e.g., based on co-citations or bibliographic coupling. Thus, citation metrics help with both performance measurement and navigation within the enormous amount of scholarly literature.
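Co-citation and bibliographic coupling, mentioned above as bases for recommending similar publications, can be illustrated on a toy citation graph. The Python sketch below is a minimal illustration under stated assumptions: the dictionary `references` (each paper mapped to the set of works it cites) and the paper labels are hypothetical, and real citation databases compute these relations at far larger scale and in their own ways. Two citing papers are bibliographically coupled when they cite the same works; two cited works are co-cited when the same later paper cites both.

```python
from collections import Counter
from itertools import combinations

# Hypothetical toy data: each citing paper maps to the set of works it cites.
references = {
    "P1": {"A", "B", "C"},
    "P2": {"B", "C", "D"},
    "P3": {"A", "C"},
}

def bibliographic_coupling(refs):
    """Coupling strength of two citing papers = number of shared references."""
    strength = {}
    for p, q in combinations(sorted(refs), 2):
        shared = len(refs[p] & refs[q])
        if shared:
            strength[(p, q)] = shared
    return strength

def co_citation(refs):
    """Co-citation strength of two cited works = number of papers citing both."""
    counts = Counter()
    for cited in refs.values():
        for pair in combinations(sorted(cited), 2):
            counts[pair] += 1
    return dict(counts)

print(bibliographic_coupling(references))
# {('P1', 'P2'): 2, ('P1', 'P3'): 2, ('P2', 'P3'): 1}
print(co_citation(references))
# {('A', 'B'): 1, ('A', 'C'): 2, ('B', 'C'): 2, ('B', 'D'): 1, ('C', 'D'): 1}
```

High coupling suggests that two papers address related problems; high co-citation suggests that the research community perceives two works as related.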

With the growing importance of the Internet, additional approaches to calculating metrics that are based on Web links or download numbers—so-called “webometrics” (Thelwall 2008)—have also been explored. This chapter shows how webometrics have evolved into alternative metrics, or “altmetrics”. Altmetrics are evaluation methods based on various user activities in social media environments. The next section thus describes the role of social media services in academia and the types of user activities that can possibly be measured through altmetrics. We then present the current state of altmetrics, both in practical application (Sect. 3) and research (Sect. 4).

2 The Rise of Social Media and Alternative Metrics

2.1 Web 2.0 and Science 2.0

For the last few decades, the Internet has been changing the way that scholars all over the world and across disciplines carry out their work. The Internet and related technologies influence the way that scholars gather research data, retrieve information and find relevant literature, present and distribute their research results, communicate and collaborate with colleagues, and teach and interact with their students (Tokar et al. 2012). These changes are closely connected to current initiatives to foster “open science” (Bartling and Friesike 2014; see also Friesike and Schildhauer 2015), i.e., opening up scholarly processes to enhance transparency and accessibility, including open access (Sitek and Bertelmann 2014) to research publications and to data (Pampel and Dallmeier-Tiessen 2014).

Today, many popular online spaces are based on user-generated content, user networks, and user interactions. This was different 10 years ago, when Tim O’Reilly and Dale Dougherty of O’Reilly Media coined the term “Web 2.0” in a conference panel about new Internet phenomena (O’Reilly 2005). They described a new era in which the Internet no longer consisted of static Websites and content provided by just a few individuals or institutions. Instead, the “consumers” of Websites were increasingly contributing to the production of Web content [a phenomenon that has been labeled “prosumerism” (Buzzetto-More 2013) or “produsage” (Bruns 2008)] by sharing short texts, photos, videos, bookmarks, and other resources online through platforms that enabled user contributions with little effort and no programming skills. The infrastructural backbone of Web 2.0 has been a series of new platforms that enable content sharing and networking, that is, interactions and connections among groups of users. Web 2.0 soon became known as the “Social Web,” and Web 2.0 platforms were called “social media” (e.g., Kaplan and Haenlein 2010) or “social networking sites” (Nentwich and König 2014). This terminology is still used today, although the characteristics of the initial vision of Web 2.0 have been widely adopted across numerous Websites, and users may no longer see a difference between the Web in general and social media in particular. At the same time, social media is increasingly being studied across scholarly disciplines, regarding, for example, the role that it plays in political communication and online activism (e.g., Faris 2013), journalism (e.g., Papacharissi 2009), disaster relief (e.g., Silverman 2014), and health care (e.g., McNab 2009).

The suffix “2.0” was quickly combined with many other concepts: integrating social media in teaching and learning became “e-learning 2.0” (Downes 2005), knowledge management using wikis and other social networking platforms that facilitate knowledge sharing (e.g., reference management communities) became “knowledge management 2.0”, and libraries that added user-generated keywords to their catalogues were described as “library 2.0” (Maness 2006). Comparable names were coined for other concepts, both in everyday life and in academia. The term “science 2.0”, for instance, soon emerged as an umbrella term for approaches that use social media to support knowledge exchange and workflows in academic environments (Waldrop 2008; Weller et al. 2007). Science 2.0 is closely related to eScience (Hey and Trefethen 2003), cyberinfrastructures (Hey 2005), and cyberscience (Nentwich 2003; Nentwich and König 2012). Tochtermann (2014, in press) provides an up-to-date definition of Science 2.0 as well as a summary of recent developments.

Many researchers feel that social media has the potential to help scholars collaborate and communicate more easily. Nentwich and König (2014) describe the potential that social networking sites offer academics in more detail, highlighting functionalities for knowledge production, processing, and distribution, as well as applications in institutional settings. Yet the uptake of social media among scientists has been rather slow, although individual studies suggest that scholars from some disciplines are acting as ‘early adopters’ while others appear more skeptical about using social media. For example, Mahrt et al. (2014) have summarized several studies which show that Twitter is used by only a small percentage of researchers. Other studies show a higher uptake of social media tools in specific academic communities and disciplines (e.g., Haustein et al. 2013). It appears that Twitter first became popular in disciplines that are themselves related to the Web or computer-mediated communication (for example, the Semantic Web research community; see Letierce et al. 2010). In a survey of researchers in the UK, Procter et al. (2010, p. 4044) also observed that “computer science researchers are more likely to be frequent users and those in medicine and veterinary sciences less likely”. The same study, however, did not find any significant differences in social media usage based on the scholars’ age. Currently, such studies mainly consider researchers in specific countries, and more research is needed to fully understand how social media is used in academia and to explore interdisciplinary differences.

Gerber (2012) has noted that many social media platforms are unfamiliar to most scholars (he found, for example, that 35.8 % of scholars are familiar with the social networking platform ResearchGate and 16.1 % with SlideShare, a site to share and browse presentation slides) and that the “best-known tools, however, were also those that were rejected by the majority of researchers, e.g., Twitter, which was rejected by 80.5 % of the respondents” (Gerber 2012, p. 16); in other words, roughly four out of five scholars had a decidedly negative opinion of Twitter.

However, social media use should not be measured only by counting how many people have user accounts on specific platforms; those who consume content from social media sites without actively contributing to them should also be taken into consideration. Passive use of social media, e.g., reading blogs or Wikipedia, is much more common (Weller et al. 2010). Allen et al. (2013) have pointed out that the principle of social media is to push information (tailored to users’ interests) to the public instead of waiting for users to pull the information from databases by searching. In this way, the dissemination of scholarly publications has increased in the health and medical domains (Allen et al. 2013). Furthermore, other studies show that social bookmarking platforms (i.e., services that allow users to share lists of bookmarked websites or scholarly references with a community; see, e.g., Peters 2009) do indeed provide notable coverage of the literature in specific domains, with Mendeley including more than 80 % (and up to 97 %) of research papers in given samples from different domains (Bar-Ilan 2012; Haustein et al. 2013; Li et al. 2012; Priem et al. 2012).

These findings reinforce the belief that content and user behavior of social media can be used as a type of indicator for measuring scholarly activity and impact.

2.2 The Search for Alternative Metrics

There are several shortcomings and challenges inherent in classic bibliometrics that have long been acknowledged by the research community (MacRoberts and MacRoberts 1989; see also Haustein and Larivière 2015). Notable shortcomings include the following: (1) Citations do not measure readership and do not account for the impact of scholarly papers on teaching, professional practice, technology development, and nonacademic audiences; (2) publication processes are slow and it can take a long time until a publication is cited; (3) publication practices and publication channels vary across disciplines and the coverage of citation databases, such as the Web of Science and Scopus, may favor specific fields; and (4) citation behavior may not always be exact and scholars may forget to acknowledge certain publications through citations or may tend to cite those papers that are already more visible due to a high number of citations.

Therefore, taking a variety of indicators into consideration in addition to citation counts has been strongly recommended for many years, and the search for alternative metrics is older than the name “altmetrics.” This term became well-known through the Altmetrics Manifesto by Priem et al. (2010) (after Priem first suggested the name altmetrics in a tweet). The manifesto opens with the statement that “no one can read everything” (Priem et al. 2010); the authors then go on to describe a landscape of social bookmarking systems (such as Mendeley, Delicious, or Zotero) and other social networking sites where fellow researchers can act as pointers to interesting literature (e.g., via Twitter or blogs). As with classic citation counts, supporting information retrieval is a major motivation for establishing altmetrics; and, as with classic bibliometrics, altmetrics have already been considered as novel indicators for identifying influential research and for evaluation. However, to date, altmetrics are not being used to make funding decisions, for example, although some in the altmetrics community are advocating this approach (Galligan and Dyas-Correia 2013; Lapinski et al. 2013; Piwowar 2013b).

Other authors have explicitly highlighted the different nature of altmetrics obtained through social media, noting “that the most common altmetrics are not measuring impact, insofar as impact relates to the effect of research on […] practice or thinking” (Allen et al. 2013, p. 1). However, altmetrics offer their own unique type of insight into research practices; for example, “many online tools and environments surface evidence of impact relatively early in the research cycle, exposing essential but traditionally invisible precursors like reading, bookmarking, saving, annotating, discussing, and recommending articles” (Haustein et al. 2013, p. 2). They can also be obtained faster than citation metrics because “social media mentions being available immediately after publication—and even before publication in the case of preprints—offer a more rapid assessment of impact” (Thelwall et al. 2013). Piwowar (2013a, p. 9) has named the following four advantages of altmetrics: they provide “a more nuanced understanding of impact”, they provide “more timely data”, they include alternative and “web-native scholarly products like datasets, software, blog posts, videos and more”, and they serve as “indications of impacts on diverse audiences”. In line with the first of these advantages, Lapinski et al. (2013, pp. 292–293) presented the idea of different “impact flavors”, as “a product featured in mainstream media stories, blogged about, and downloaded by the public, for instance, has a very different flavor of impact than one heavily saved and discussed by scholars”. Furthermore, different activities within one platform may represent different levels (or flavors) of engagement with scholarly content; for example, tweeting a link to a scholarly article requires less commitment than writing a critical blog post about that article. Fenner (2014) lists several activities that indicate engagement and can be used for altmetrics, such as discussing, recommending, viewing, citing, and saving. An established classification of different impact flavors is not yet available, and the impact of different tools and metrics still needs to be studied. However, it may be useful to distinguish the perspectives described in the next section.
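Fenner’s list of activities suggests one simple way to organize raw counts from many sources. The Python sketch below assumes a hypothetical mapping from source names (such as `html_views` or `mendeley_readers`) to the activity types named above; both the mapping and the example counts are invented for illustration and do not represent an established classification, which, as noted, does not yet exist.

```python
from collections import defaultdict

# Hypothetical source-to-activity mapping, for illustration only.
ACTIVITY_OF_SOURCE = {
    "html_views": "viewed",
    "pdf_downloads": "viewed",
    "mendeley_readers": "saved",
    "citeulike_bookmarks": "saved",
    "tweets": "discussed",
    "blog_posts": "discussed",
    "recommendations": "recommended",
    "citations": "cited",
}

def group_by_activity(source_counts):
    """Sum raw per-source counts into activity categories.

    Sources missing from the mapping are collected under 'unclassified'
    so that no data is silently dropped.
    """
    totals = defaultdict(int)
    for source, count in source_counts.items():
        totals[ACTIVITY_OF_SOURCE.get(source, "unclassified")] += count
    return dict(totals)

# Hypothetical counts for a single article.
article = {"html_views": 950, "pdf_downloads": 120, "mendeley_readers": 34,
           "tweets": 12, "blog_posts": 1, "citations": 3, "reddit_posts": 2}
print(group_by_activity(article))
# {'viewed': 1070, 'saved': 34, 'discussed': 13, 'cited': 3, 'unclassified': 2}
```

Keeping categories separate, rather than summing everything into one number, preserves the difference between low-commitment activities (viewing) and higher-commitment ones (discussing, citing).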

2.3 Social Media Services as Candidates for Altmetrics

Altmetrics research is still in a very early phase, and since the publication of the manifesto by Priem et al. (2010), it has focused on exploring potential data sources. Here, we have organized the different dimensions of alternative metrics into four categories to provide an overview of potential sources for altmetrics data.

(1) Metrics on a new level. The first category refers to alternative sources for measuring the reach of traditional publications on an article-level basis. Altmetrics initiatives are often also described as “article-level metrics”, which highlights their difference from previous journal-level metrics, such as the impact factor. The collection and combination of various metrics for a single article (or for other types of scholarly publications, such as books and conference papers) is one of the core aims of altmetrics. Such metrics can be collected from various sources. Social bookmarking systems (Henning and Reichelt 2008) typically permit counting how often a publication has been bookmarked by users; the bookmarking systems most frequently used for altmetrics research are Mendeley (mendeley.com) and CiteULike (citeulike.org; Haustein et al. 2013). Both cover a large amount of scholarly literature. Other bookmarking services (e.g., Zotero and BibSonomy) are used less often. Social media platforms based on social networking and status updates, such as Facebook, Twitter, Google+, reddit, Pinterest, and LinkedIn, can be mined for mentions of research papers. Adie and Roe (2013) have compared how frequently scholarly papers are mentioned across different social media platforms and found Twitter to be the richest resource. Besides the popular general-purpose social media services, there are other social networking sites dedicated specifically to the academic community, including Academia.edu and ResearchGate. On most of these platforms, it is possible to count mentions of scholarly works in users’ posts and, at a further level, to count other users’ interactions with those posts, such as comments, likes, favorites, or retweets. Blogs and Wikipedia are other resources that include explicit citations to scholarly publications and may be used for evaluation purposes. Some blog platforms, such as Nature.com Blogs, Research Blogging, or ScienceSeeker, focus specifically on scientific content and are thus especially promising. Other sources from the age of webometrics that have already been studied include presentation slides (Thelwall and Kousha 2008), online syllabi (Kousha and Thelwall 2008), research highlights as identified by Nature Publishing (Thelwall et al. 2013), and article downloads (Pinkowitz 2002). Mainstream media and news outlets can also be monitored, as can discussion forums and Amazon comments. Lin and Fenner (2013) provide an overview of the article-level metrics used by PLoS, including social shares on Twitter and Facebook, academic bookmarks from Mendeley and CiteULike, and citations from blogs and Wikipedia.
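The two levels of counting described above, mentions of a work in posts and other users’ interactions with those posts, can be sketched as a small aggregation step. The Python example below assumes the posts have already been collected and matched to an article identifier; the `Post` record, the platform labels, and the example DOIs (which use the `10.1000` example prefix) are hypothetical.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass

@dataclass
class Post:
    """A hypothetical, already-collected post that mentions one article."""
    platform: str
    doi: str           # identifier of the mentioned article
    interactions: int  # e.g., likes, favorites, or retweets of this post

def article_level_metrics(posts):
    """Per article: mention counts per platform plus total interactions."""
    mentions = defaultdict(Counter)
    interactions = Counter()
    for post in posts:
        mentions[post.doi][post.platform] += 1
        interactions[post.doi] += post.interactions
    return {doi: {"mentions": dict(mentions[doi]),
                  "interactions": interactions[doi]}
            for doi in mentions}

posts = [
    Post("twitter", "10.1000/xyz123", 5),
    Post("twitter", "10.1000/xyz123", 0),
    Post("facebook", "10.1000/xyz123", 2),
    Post("blog", "10.1000/abc456", 0),
]
print(article_level_metrics(posts))
```

Mentions and interactions are kept as separate numbers, because a retweet of a post about a paper is a weaker signal than a new post about it.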

(2) Metrics for new output formats. The second dimension of altmetrics concerns ways to measure alternative types of research output: not only classic publication formats such as journal articles or books may be measured, but also other products such as blog posts, teaching material, or software. Buschman and Michalek (2013) pointed out that negative research results are increasingly not published in peer-reviewed journals but instead appear in alternative formats such as blogs; they therefore argue that these information sources also need to be considered when measuring scholars’ academic performance. Blogs are probably the main type of alternative textual research output, but nontextual output is also of interest, such as YouTube videos with scientific content or other video material like recorded lectures, presentation slides uploaded to SlideShare, research data repositories, or source code on GitHub.

(3) Aggregated metrics for researchers. Altmetrics may also refer to alternative ways to measure the popularity of individual researchers. Buschman and Michalek (2013, p. 38) have argued that the “greatest opportunity for applying these new metrics is when we move beyond just tracking article-level metrics for a particular artifact and on to associating all research outputs with the person that created them. We can then underlay the metrics with the social graph of who is influencing whom and in what ways”. Social media offers the opportunity not only to aggregate the output of individual scholars across different channels but also to monitor the mentions and the level of attention that individuals receive throughout these channels (e.g., followers on Twitter or Wikipedia articles mentioning a researcher). Bar-Ilan et al. (2012) have investigated the “footprints” that scholars leave through their activity in different online environments and related these author-level metrics to citations from Google Scholar and the Web of Science.
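Associating all research outputs with the people who created them amounts to a roll-up from output-level counts to researcher-level profiles. The Python sketch below assumes hypothetical output labels, researcher identifiers, and counts; it credits every co-author with the full count of a shared output (no fractional counting), which is a design choice for illustration rather than an established standard.

```python
from collections import defaultdict

# Hypothetical per-output metrics (articles, slides, and code alike).
output_metrics = {
    "article:10.1000/xyz123": {"tweets": 12, "readers": 40},
    "slides:deck-42": {"views": 300},
    "code:repo-7": {"forks": 5, "tweets": 3},
}

# Hypothetical authorship links between outputs and researchers.
authors_of = {
    "article:10.1000/xyz123": ["researcher-A", "researcher-B"],
    "slides:deck-42": ["researcher-A"],
    "code:repo-7": ["researcher-B"],
}

def researcher_profiles(metrics, authorship):
    """Roll output-level counts up to each researcher, per metric name.

    Every co-author receives the full count of a shared output
    (whole counting, not fractional counting).
    """
    profiles = defaultdict(lambda: defaultdict(int))
    for output, counts in metrics.items():
        for researcher in authorship.get(output, []):
            for name, value in counts.items():
                profiles[researcher][name] += value
    return {r: dict(c) for r, c in profiles.items()}

print(researcher_profiles(output_metrics, authors_of))
# {'researcher-A': {'tweets': 12, 'readers': 40, 'views': 300},
#  'researcher-B': {'tweets': 15, 'readers': 40, 'forks': 5}}
```

Fractional counting (dividing each count by the number of co-authors) would be an equally reasonable alternative; the two choices can rank researchers quite differently.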

(4) Metrics based on alternative forms of citations. Another objective of the altmetrics community is to take into account that there are different types of audiences and that scholarly activities have an impact not only within the active research community but also among the general public. Metrics based on citations, however, capture only the impact on those researchers who actively contribute to the process of writing and citing academic publications. Altmetrics look at citation-like activities by academics (e.g., linking to a journal publication in a researcher’s blog post) as well as activities by students, science journalists, or unspecified general audiences (e.g., writing a Wikipedia article about a notable researcher). Altmetrics are not necessarily based on citation-like processes but can comprise other measures that reflect “readership”, e.g., bookmarks and downloads of scholarly articles. Haustein et al. (2014b) show that publications with the most mentions on Twitter or with high readership metrics on Mendeley are often related to topics of interest to the general public (e.g., the Chernobyl accident). Of course, this last category is not isolated from the other three, because online activities form interconnected networks of interactions: a scholar may upload an academic YouTube video, which is then discussed by other scholars on Twitter, by a journalist in a blog post, or by the general public in a Facebook group.

Altogether, these four categories reflect the different motivations for establishing new metrics as research performance indicators. Altmetrics should take into account different types of academic output besides peer-reviewed journal articles, and they should do so at different levels of granularity: down to single articles and up to aggregated metrics for researchers and their entire portfolio. To do this comprehensively, they should also reflect the impact scholarly work can have on different communities, both academic and non-academic. While the exact role and significance of single altmetric indicators is still being studied (see Sect. 4), some of them have recently been transferred into practical applications that may already affect scholars and the assessment of academic performance, as we will see in the next section.

3 Applied Altmetrics in Assessing Research Performance

3.1 Stakeholders and Objectives

Altmetrics affect scholars in at least two ways: researchers can use them to retrieve interesting literature and to promote their own work (similar to the press offices of universities). Piwowar and Priem (2013, p. 10) discussed placing altmetrics on one’s CV and listed several advantages of doing so, including to “uncover the impact of just-published work” and to “legitimize all types of scholarly products”. If this becomes standard practice, funding agencies and tenure committees might one day need to consider altmetric indicators in their assessments, but, as previously mentioned, this is not yet happening. In addition, as the quality of altmetrics, especially regarding their use for assessment, is being questioned by some in the scientific community (Cheung 2013), their practical impact in the area of assessment might be small. Fenner (2014) also points out that some altmetric indicators might be easy to manipulate through self-promotion and gaming. On the other hand, Buschman and Michalek (2013) argue that funders will also profit from altmetrics if they want to evaluate the immediate impact of a project right after or during the funding period, and some research councils are considering the use of altmetrics for evaluations (Viney 2013). Liu and Adie (2013a, p. 31) emphasize that different stakeholders may have different visions for new impact metrics and that “impact is a multi-faceted concept […] and different audiences have their own views of what kind of impact matters and the context in which it should be presented: researchers may care about whether they are influencing their peers, funders may care about re-use or public engagement and universities may wish to compare their performance with competing institutions”.

Meanwhile, other stakeholders are interested in measuring scholarly communication through various online channels. For example, librarians are encouraged to keep up to date with new metrics to better help their users find specific publications (Baynes 2012; Lapinski et al. 2013). In addition, publishers, as well as certain social media companies, are interested in altmetrics. In 2013, the publisher Elsevier bought the social bookmarking platform Mendeley. As one of the largest providers of citation metrics (via its database Scopus), Elsevier appears to be particularly interested in ongoing altmetrics developments. Open access platform providers are also contributing to the discussion of altmetrics, for example the open access publisher PLoS, which uses data from Mendeley, CiteULike, Facebook, and Twitter (Lin and Fenner 2013). Lastly, a new type of stakeholder has entered the scene: companies that create new types of products and services centered specifically on altmetrics. We will take a closer look at these new products in the next section.

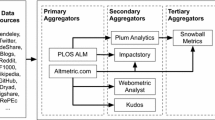

3.2 First Products Based on Altmetrics

Even though the exact meaning and informative value of altmetrics remain vague, products have been released that are based on altmetrics approaches and that mainly combine a variety of new measures on one platform. The first product in this area is Altmetric.com, which is supported by Digital Science (owned by the same company as Nature). The purpose of Altmetric.com is to aggregate all article-level metrics that refer to the same publication. It thus monitors tweets, Facebook and blog posts, bookmarks, news, and other sources for mentions of digital object identifiers (DOIs) or other standard identifiers that relate to publications and research data, such as PubMed IDs or Handles (Liu and Adie 2013a). From these mentions, an “altmetrics score” is computed for each publication. The company’s Website states that “articles for which we have no mentions are scored 0. Though the rate at which scientists are using social media in a professional context is growing rapidly, most articles will score 0; the exact proportion varies from journal to journal, but a mid-tier publication might expect 30–40 % of the papers that it publishes to be mentioned at least once, with the rate dropping rapidly for smaller, niche publications” (Altmetric.com n.d.). Altmetric.com sells access to its data to researchers and institutions who want to monitor who is mentioning them across different online channels. It also provides the so-called “altmetrics badge”, an icon that illustrates the altmetric score of a publication; this badge is also used by Elsevier’s Scopus. Finally, Altmetric.com provides information about the users who interact with scholarly publications through social media. Because social media platforms like Twitter and Mendeley include information about their users and their social networks, some of this information can be mined to learn about the audiences of scholarly publications. One may see, for example, where most people who tweet about a scholarly publication live (based on the location Twitter users mention in their profile) or whether the people who bookmarked a specific publication are students or senior researchers (based on their Mendeley profiles). Of course, these demographics have limitations, as they are entirely based on the information users include in their social media profiles, which may not always be complete or correct. See Haustein et al. (2014b) for an example of how such information can be obtained from Altmetric.com and used in altmetrics research.
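The two quantities quoted from Altmetric.com, a per-article score that is 0 when there are no mentions and the share of a journal’s articles mentioned at least once, can be illustrated with a generic weighted count. This Python sketch is not Altmetric.com’s algorithm: the weights in `WEIGHTS`, the mention types, and the article labels are all hypothetical, and mention types without a weight simply contribute nothing.

```python
# Hypothetical weights per mention type; NOT Altmetric.com's actual method.
WEIGHTS = {"news": 3.0, "blog": 2.0, "tweet": 1.0}

def attention_score(mentions):
    """Weighted sum of mention counts; no (weighted) mentions gives 0.0."""
    return sum((WEIGHTS.get(kind, 0.0) * n for kind, n in mentions.items()), 0.0)

def share_mentioned(articles):
    """Fraction of articles mentioned at least once in any tracked source."""
    if not articles:
        return 0.0
    mentioned = sum(1 for m in articles.values() if sum(m.values()) > 0)
    return mentioned / len(articles)

# Hypothetical mention counts for the articles of one journal.
journal = {
    "a1": {"tweet": 4, "blog": 1},
    "a2": {},
    "a3": {"news": 1},
    "a4": {},
    "a5": {"tweet": 0},
}
print({article_id: attention_score(m) for article_id, m in journal.items()})
# {'a1': 6.0, 'a2': 0.0, 'a3': 3.0, 'a4': 0.0, 'a5': 0.0}
print(share_mentioned(journal))  # 0.4
```

With weighting, a single news story can outweigh several tweets, which is one way of expressing different “impact flavors” in a single number, at the cost of hiding them.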

The second product, ImpactStory (impactstory.org), is offered by a not-for-profit organization founded by Jason Priem and Heather Piwowar and supported by the Alfred P. Sloan Foundation (Lapinski et al. 2013; Piwowar 2013b). ImpactStory builds metrics around individual researchers rather than single papers. Researchers who log into the platform can import their publications and other identifiable work, such as presentations on SlideShare and source code published on GitHub, and track mentions across a variety of sources as well as classic citations. ImpactStory used to be free to use but started charging a subscription fee in autumn 2014. Both its data and its source code are currently openly available. A third player in the altmetrics market is Plum Analytics, which also collects article-level metrics.

Liu and Adie (2013b, p. 153) have summed up the current state of the art as follows: “However, all of the tools are in their early stages of growth. Altmetrics measures are not standardized and have not been systematically validated; there has been no clear consensus on which data sources are most important to measure; and technical limitations currently prevent the tracking of certain sources, such as multimedia files”. Applications that work with altmetrics face several technical challenges (Liu and Adie 2013a, b). Just like the automatic detection of classic citations, the collection of social mentions is not a trivial process. Collecting data from social media platforms requires data access, which is sometimes enabled via application programming interfaces (APIs). However, APIs can have specific limitations; the Twitter API, for example, does not allow tracing back to older tweets (Gaffney and Puschmann 2014). DOIs or other unique identifiers make tracing citations easier, but they are not always available. Currently, many technical pitfalls remain, and certain information may get lost during the data collection process. These challenges affect not only the providers of altmetrics tools but also the scientific community studying the use of alternative metrics. Haustein et al. (2014c, p. 659) sum up the technical challenges of working with Twitter-based altmetrics as follows: “A general problem of social media-based analyses is that of data reliability. Although most social media services provide application programming interfaces (API) to make usage data accessible, we still do not know if it is possible to collect every tweet, if there are missing data, or what effects download or time restrictions have on available data. In addition, the Altmetric coverage of Twitter may be incomplete because of technical issues, such as server or network downtime. Moreover, an article may be tweeted in a way that is not easily automatically identified (e.g., “See Jeevan’s great paper in the current Nature!”)”.
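Identifier-based tracing, and its blind spot for informal mentions, can be illustrated with a regular expression for DOIs. The Python sketch below uses a pattern modeled on the common “10.” + 4–9 digit registrant code + “/” + suffix shape of modern DOIs; it is a heuristic that will miss some older or unusual DOIs, and the example posts are hypothetical. Trailing sentence punctuation is stripped, and DOIs are lower-cased because DOI matching is case-insensitive.

```python
import re

# Heuristic pattern for modern DOIs; it misses some older or unusual DOIs.
DOI_PATTERN = re.compile(r"10\.\d{4,9}/[-._;()/:A-Z0-9]+", re.IGNORECASE)

def extract_dois(text):
    """Return the distinct, normalized DOIs found in a post (e.g., a tweet).

    Trailing characters that usually end a sentence or a parenthesis,
    rather than the DOI itself, are stripped; DOIs are lower-cased
    because DOI matching is case-insensitive.
    """
    found = []
    for match in DOI_PATTERN.findall(text):
        doi = match.rstrip(".,;:)").lower()
        if doi not in found:
            found.append(doi)
    return found

print(extract_dois("New paper out: https://doi.org/10.1000/XYZ123. Worth a read!"))
# ['10.1000/xyz123']
print(extract_dois("(doi:10.1000/abc456) and again 10.1000/ABC456"))
# ['10.1000/abc456']
print(extract_dois("See Jeevan's great paper in the current Nature!"))
# []
```

The last example returns nothing: without an identifier or a resolvable link, a mention like this can only be matched through much less reliable techniques, such as searching for titles or author names.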

4 Altmetrics Research

Current research on altmetrics is still at a very early stage, but it is developing quickly; this section can therefore only highlight some remarkable examples. The history of altmetrics research is also summarized by Fenner (2014). Scholars with different backgrounds (but quite a few of them from library and information science or related fields and with prior experience in bibliometrics, among them Mike Thelwall and Judit Bar-Ilan) are acting as pioneers in the development of new metrics and new research approaches. Specialized workshop series, such as the Altmetrics Workshops hosted at ACM Web Science conferences, have been launched, and sessions are being organized at established scientometrics conferences such as the conference of the International Society for Scientometrics and Informetrics (ISSI). Most of this research is dedicated to assessing the quality and scope of altmetric indicators. Case studies can be broadly divided into those that compare metrics across platforms (either alternative or classic) and those that study scholars’ performance or participation in social media in specific disciplines.

One question of high interest for the entire altmetrics community is whether social media mentions predict subsequent citation rates, or at least correlate to some degree with classic metrics. The most comprehensive comparison of altmetrics and citations to date was conducted by Thelwall et al. (2013), who looked at 11 different social media resources. However, the metrics assessed in this study could not predict subsequent citations, which once more indicates that most altmetrics measure a different form of impact than citations do.

Based on the current literature, Mendeley appears to provide article-level metrics for the largest share of publications: Zahedi et al. (2014) found Mendeley readership metrics for 62.6 % of all publications in their test sample, and Priem et al. (2012) found that close to 80 % of their publication set was included in Mendeley. In contrast, Priem et al. (2012) found only around 5 % of their sample papers cited on Wikipedia, and Shuai et al. (2013) found even fewer. Different social media channels thus provide very different metrics. Haustein et al. (2014c) also showed that the field is still developing: they found that around 20 % of biomedical papers published in 2012 were mentioned in at least one tweet, twice as many as in 2011.
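
Coverage figures such as those above express the share of a sample that has at least one event (a reader, a tweet, a Wikipedia citation) in a given source. A minimal sketch, assuming event counts have already been collected per publication identifier; the identifiers, counts, and the `coverage_percent` helper are hypothetical and not taken from the cited studies.

```python
def coverage_percent(event_counts: dict[str, int], publications: list[str]) -> float:
    """Percentage of publications with at least one event in one source."""
    if not publications:
        raise ValueError("publication set must not be empty")
    # Publications missing from the counts are treated as having zero events.
    covered = sum(1 for pub_id in publications if event_counts.get(pub_id, 0) > 0)
    return 100.0 * covered / len(publications)


# Hypothetical sample of five publications and per-source event counts.
sample = ["pub-1", "pub-2", "pub-3", "pub-4", "pub-5"]
sources = {
    "Mendeley readers": {"pub-1": 12, "pub-2": 3, "pub-3": 0, "pub-4": 7},
    "Wikipedia citations": {"pub-4": 1},
}

for source, counts in sources.items():
    print(f"{source}: {coverage_percent(counts, sample):.1f} %")
```

Because coverage depends on the sample as much as on the source, figures from different studies (62.6 % vs. close to 80 % for Mendeley) are only comparable with care.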

Several studies found a moderate and significant correlation between Mendeley readership and citation counts (Bar-Ilan 2012; Haustein et al. 2013; Li et al. 2012; Priem et al. 2012; Zahedi et al. 2014). Shuai et al. (2013) also report correlations between citations and mentions in Wikipedia, whereas Samoilenko and Yasseri (2013) did not find significant correlations between Wikipedia metrics and the academic notability of researchers from four disciplines.
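
The studies above correlate two sets of counts per article. The sketch below assumes Spearman’s rank correlation, a common choice for highly skewed count data such as reader and citation counts; the cited studies may have used other coefficients, and the significance tests they report are omitted here. Function names and data are hypothetical.

```python
from typing import Sequence


def average_ranks(values: Sequence[float]) -> list[float]:
    """Assign 1-based ranks; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        # Extend the group while the next value ties with the current one.
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        mean_rank = (start + end) / 2 + 1
        for k in range(start, end + 1):
            ranks[order[k]] = mean_rank
        start = end + 1
    return ranks


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation coefficient of two equally long sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    # Root sums of squared deviations (the 1/n factors cancel in the ratio).
    norm_x = sum((a - mean_x) ** 2 for a in x) ** 0.5
    norm_y = sum((b - mean_y) ** 2 for b in y) ** 0.5
    if norm_x == 0 or norm_y == 0:
        raise ValueError("correlation undefined for constant input")
    return cov / (norm_x * norm_y)


def spearman(x: Sequence[float], y: Sequence[float]) -> float:
    """Spearman's rho: Pearson correlation of the tie-averaged ranks."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equally long sequences with at least 2 items")
    return pearson(average_ranks(x), average_ranks(y))


# Hypothetical Mendeley reader and citation counts for six articles.
readers = [45, 3, 12, 0, 27, 8]
citations = [30, 1, 15, 2, 12, 4]
print(round(spearman(readers, citations), 3))  # prints 0.886
```

Ranking first makes the coefficient insensitive to a few very highly read or cited articles, which would otherwise dominate a Pearson correlation on raw counts.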

Li and Gillet (2013) and Thelwall and Kousha (2014) have collected user data from social media platforms (from Mendeley and Academia.edu, respectively) to determine how users’ activities relate to their academic seniority. Li and Gillet (2013) found that Mendeley users with higher impact were senior scholars with many co-authorships. Thelwall and Kousha (2014) showed that more senior philosophy scholars on Academia.edu get more page views for their profile pages, but they did not observe any positive correlation between page views and citations received.

Disciplinary differences appear to underlie most current altmetrics. Zahedi et al. (2014) conducted a study on altmetrics across disciplines and found that journals from different disciplines are represented to very different extents on Mendeley (with arts and humanities being the least represented), Wikipedia, Twitter, and Delicious. Several studies have taken a close look at single disciplines and their depiction through altmetrics. Haustein et al. (2014a) focused on astrophysicists, and Haustein et al. (2014b, c) analyzed biomedicine scholars on Twitter. Holmberg and Thelwall (2014) compared Twitter usage across researchers from 10 disciplines.

All these examples show that much still needs to be studied before we fully understand what alternative metrics derived from social media platforms or other online data can tell us about research activities and scholarly impact.

Conclusion

The call for alternative approaches to measuring scholarly performance and impact has been heard, and experts from academia, the publishing business, and research councils are engaged in the current discussion of new indicators. The label “altmetrics” has allowed this community to connect and to establish venues for discussing ideas about how to make sense of user interactions with scholarly content in various online environments. Expectations for the outcome of this discussion vary: while some are skeptical about the practical relevance of altmetrics, others believe that altmetrics will become seamlessly integrated into other performance measurements. As Piwowar (2013a, p. 9) puts it: “Of course, these indicators may not be ‘alternative’ for long. At that point, hopefully we’ll all just call them metrics”.

Before this can happen, much more work is needed to better understand the nature of user behavior in social media environments and the value of the individual metrics obtained by measuring this behavior. Current research focuses on this task and is creating a map of the altmetrics landscape step by step. Meanwhile, individual publishers have started to provide aggregated altmetrics for their publications, and other stakeholders are entering the altmetrics market. These are still rather niche services, but if altmetrics become popular with a broader community and gain influence, it is quite likely that people will change their behavior to achieve better scores or even try to game the system. This is of course not new and also happens with traditional bibliometrics and citation counts (e.g., Frey and Osterloh 2011), for example through self-citations or citation cartels. But bibliometricians have found ways to adjust their indicators to account for such behavior. Consequently, independent research should monitor the use of altmetrics equally carefully and continue to study how tools, users, and metrics interact. For now, both social media platforms and publication databases offer a useful environment for browsing scholarly references and discovering interesting information based on peer recommendations, so many academics can already benefit from altmetrics in their everyday work.

Notes

1. According to the Alexa.com ranking as of June 2014, the most accessed Web sites include Facebook, YouTube, Wikipedia, Twitter, and LinkedIn.

References

Adie E, Roe W (2013) Altmetric: enriching scholarly content with article-level discussion and metrics. Learn Publish 26(1):11–17

Allen HG, Stanton TR, Di Pietro F, Moseley GL, Sampson M (2013) Social media release increases dissemination of original articles in the clinical pain sciences. PLoS ONE 8(7):e68914

Altmetrics.com (no date) What does Altmetric do? http://www.altmetric.com/whatwedo.php#score. Accessed 14 June 2014

Bar-Ilan J (2012) JASIST 2001-2010. Bull Am Soc Inf Sci Technol 38(6):24–28

Bar-Ilan J, Haustein S, Peters I, Priem J, Shema H, Terliesner J (2012) Beyond citations. Scholars’ visibility on the social Web. In: Proceedings of the 17th international conference on science and technology indicators (STI conference), Montreal, pp 98–109

Bartling S, Friesike S (eds) (2014) Opening science: the evolving guide on how the internet is changing research, collaboration and scholarly publishing. Springer, Heidelberg; New York

Baynes G (2012) Scientometrics, bibliometrics, altmetrics: some introductory advice for the lost and bemused. Insights 25(3):311–315

Bruns A (2008) Blogs, Wikipedia, second life, and beyond. From production to produsage. Peter Lang, New York

Buschman M, Michalek A (2013) Are alternative metrics still alternative? Bull Am Soc Inf Sci Technol 39(4):35–39

Buzzetto-More NA (2013) Social media and prosumerism. Issues Inf Sci Inf Technol 10:81–93

Cheung M (2013) Altmetrics: too soon for use in assessment. Nature 494(7436):176

Cronin B (1984) The citation process. The role and significance of citations in scientific communication. Taylor Graham, London

Downes S (2005) E-learning 2.0. eLearn Magazine. http://elearnmag.acm.org/featured.cfm?aid=1104968. Accessed 15 June 2014

Faris DM (2013) Dissent and revolution in a digital age. Social media, blogging and activism in Egypt. Tauris, London

Fenner M (2014) Altmetrics and other novel measures for scientific impact. In: Bartling S, Friesike S (eds) Opening science. The evolving guide on how the internet is changing research, collaboration and scholarly publishing. Springer, Heidelberg; New York, pp 179–190

Frey B, Osterloh M (2011) Ranking games. University of Zurich Department of Economics Working Paper No. 39. doi:10.2139/ssrn.1957162. Accessed 22 June 2014

Friesike S, Schildhauer T (2015) Open science: many good resolutions, very few incentives, yet. In: Welpe IM, Wollersheim J, Ringelhan S, Osterloh M (eds) Incentives and performance – governance of research organizations. Springer, Cham

Gaffney D, Puschmann C (2014) Data collection on Twitter. In: Weller K, Bruns A, Burgess J, Mahrt M, Puschmann C (eds) Twitter and society. Peter Lang, New York, pp 55–68

Galligan F, Dyas-Correia S (2013) Altmetrics: rethinking the way we measure. Ser Rev 39(1):56–61

Gerber A (2012) Online trends from the first German trend study on science communication. In: Tokar A, Beurskens M, Keuneke S, Mahrt M, Peters I, Puschmann C, van Treeck T, Weller K (eds) Science and the internet. Düsseldorf University Press, Düsseldorf, pp 13–18

Haustein S, Larivière V (2015) The use of bibliometrics for assessing research: possibilities, limitations and adverse effects. In: Welpe IM, Wollersheim J, Ringelhan S, Osterloh M (eds) Incentives and performance – governance of research organizations. Springer, Cham

Haustein S, Peters I, Bar-Ilan J, Priem J, Shema H, Terliesner J (2013) Coverage and adoption of altmetrics sources in the bibliometric community. In: Proceedings of the 14th international society of scientometrics and informetrics conference, Vienna, Austria, 15–19th July 2013, pp 1–12

Haustein S, Bowman TD, Holmberg K, Peters I, Larivière V (2014a) Astrophysicists on Twitter: an in-depth analysis of tweeting and scientific publication behavior. Aslib J Inf Manag 66(3):279–296

Haustein S, Larivière V, Thelwall M, Amyot D, Peters I (2014b) Tweets vs. Mendeley readers: how do these two social media metrics differ? Inf Technol 56(5) (in press)

Haustein S, Peters I, Sugimoto CR, Thelwall M, Larivière V (2014c) Tweeting biomedicine: an analysis of tweets and citations in the biomedical literature. J Am Soc Inf Sci Technol 65(4):656–669

Henning V, Reichelt J (2008) Mendeley. A Last.fm for research? In: Proceedings of the 4th IEEE international conference on eScience, Indianapolis, pp 327–328

Hey T (2005) Cyberinfrastructure for e-Science. Science 308(5723):817–821

Hey T, Trefethen A (2003) The data deluge: an e-Science perspective. In: Berman F, Fox CG (eds) Grid computing. Making the global infrastructure a reality. Wiley, Chichester, pp 809–824

Holmberg K, Thelwall M (2014) Disciplinary differences in Twitter scholarly communication. Scientometrics. doi:10.1007/s11192-014-1229-3

Kaplan AM, Haenlein M (2010) Users of the world, unite! The challenges and opportunities of social media. Bus Horizons 53(1):59–68

Kousha K, Thelwall M (2008) Assessing the impact of disciplinary research on teaching. An automatic analysis of online syllabuses. J Am Soc Inf Sci Technol 59:2060–2069

Lapinski S, Piwowar H, Priem J (2013) Riding the crest of the altmetrics wave: how librarians can help prepare faculty for the next generation of research impact metrics. Coll Res Libr News 74(6):292–294 + 300

Letierce J, Passant A, Breslin J, Decker S (2010) Understanding how Twitter is used to spread scientific messages. In: Proceedings of the WebSci10: extending the frontiers of society on-line, Raleigh

Leydesdorff L (1995) The challenge of scientometrics. The development, measurement and self-organization of scientific communication. DSWO, Leiden

Li N, Gillet D (2013) Identifying influential scholars in academic social media platforms. In: Proceedings of the 2013 IEEE/ACM international conference on advances in social networks analysis and mining. ACM, New York, pp 608–614

Li X, Thelwall M, Giustini D (2012) Validating online reference managers for scholarly impact measurement. Scientometrics 91(2):461–471

Lin J, Fenner M (2013) The many faces of article-level metrics. Bull Am Soc Inf Sci Technol 39(4):27–30

Liu J, Adie E (2013a) Five challenges in altmetrics: a toolmaker's perspective. Bull Am Soc Inf Sci Technol 39(4):31–34

Liu J, Adie E (2013b) New perspectives on article-level metrics: developing ways to assess research uptake and impact online. Insights UKSG J 26(2):153–158

MacRoberts M, MacRoberts BR (1989) Problems of citation analysis. A critical review. J Am Soc Inf Sci 40(5):342–349

Mahrt M, Weller K, Peters I (2014) Twitter in scholarly communication. In: Weller K, Bruns A, Burgess J, Mahrt M, Puschmann C (eds) Twitter and society. Peter Lang, New York, pp 399–410

Maness J (2006) Library 2.0 theory. Web 2.0 and its implications for libraries. Webology 3(2):Article 25. http://www.webology.ir/2006/v3n2/a25.html. Accessed 14 June 2014

McNab C (2009) What social media offers to health professionals and citizens. Bull World Health Organ 87(8):566–567

Nentwich M (2003) Cyberscience. Research in the age of the internet. Austrian Academy of Sciences Press, Vienna

Nentwich M, König R (2012) Cyberscience 2.0. Research in the age of digital social networks. Campus Verlag, Frankfurt; New York

Nentwich M, König R (2014) Academia goes facebook? The potential of social network sites in the scholarly realm. In: Bartling S, Friesike S (eds) Opening science. The evolving guide on how the internet is changing research, collaboration and scholarly publishing. Springer, Heidelberg; New York, pp 107–124

O’Reilly T (2005) What is Web 2.0? Design patterns and business models for the next generation of software. http://oreilly.com/web2/archive/what-is-web-20.html. Accessed 14 June 2014

Pampel H, Dallmeier-Tiessen S (2014) Open research data. From vision to practice. In: Bartling S, Friesike S (eds) Opening science. The evolving guide on how the internet is changing research, collaboration and scholarly publishing. Springer, Heidelberg; New York, pp 213–224

Papacharissi Z (ed) (2009) Journalism and citizenship. New agendas in communication. Routledge, New York

Peters I (2009) Folksonomies. Indexing and retrieval in Web 2.0. De Gruyter/Saur, Berlin

Pinkowitz L (2002) Research dissemination and impact. Evidence from web site downloads. J Financ 57:485–499

Piwowar H (2013a) Altmetrics. What, why and where? Bull Am Soc Inf Sci Technol 39(4):8–9

Piwowar H (2013b) Altmetrics: value all research products. Nature 493(7431):159

Piwowar H, Priem J (2013) The power of altmetrics on a CV. Bull Am Soc Inf Sci Technol 39(4):10–13

Priem J, Taraborelli D, Groth P, Neylon C (2010) Alt-metrics. A manifesto. http://altmetrics.org/manifesto/. Accessed 15 June 2014

Priem J, Piwowar H, Hemminger B (2012) Altmetrics in the wild. Using social media to explore scholarly impact. arXiv:1203.4745v1. Accessed 15 June 2014

Procter R, Williams R, Stewart JK, Poschen M, Snee H, Voss A, Asgari-Targhi M (2010) Adoption and use of Web 2.0 in scholarly communications. Philos Trans R Soc A Math Phys Eng Sci 368(1926):4039–4056

Samoilenko A, Yasseri T (2013) The distorted mirror of Wikipedia. A quantitative analysis of Wikipedia coverage of academics. http://arxiv.org/abs/1310.8508. Accessed 15 June 2014

Shuai X, Jiang Z, Liu X, Bollen J (2013) A comparative study of academic and Wikipedia ranking. In: JCDL’13 Proceedings of the 13th ACM/IEEE-CS joint conference on digital libraries. ACM, Indianapolis, pp 25–28

Silverman C (ed) (2014) Verification handbook. A definitive guide to verifying digital content for emergency coverage. European Journalism Centre, Maastricht

Sitek D, Bertelmann R (2014) Open access. A state of the art. In: Bartling S, Friesike S (eds) Opening science. The evolving guide on how the internet is changing research, collaboration and scholarly publishing. Springer, Heidelberg; New York, pp 139–145

Thelwall M (2008) Bibliometrics to webometrics. J Inf Sci 34(4):605–621

Thelwall M, Kousha K (2008) Online presentations as a source of scientific impact? An analysis of PowerPoint files citing academic journals. J Am Soc Inf Sci Technol 59:805–815

Thelwall M, Kousha K (2014) Academia.edu: social network or academic network? J Am Soc Inf Sci Technol 65(4):721–731

Thelwall M, Haustein S, Larivière V, Sugimoto C (2013) Do altmetrics work? Twitter and ten other social web services. PLoS ONE 8(5)

Tochtermann K (2014) How science 2.0 will impact on scientific libraries. Inf Technol 56(5) (in press)

Tokar A, Beurskens M, Keuneke S, Mahrt M, Peters I, Puschmann C, van Treeck T, Weller K (eds) (2012) Science and the internet. Düsseldorf University Press, Düsseldorf

Viney I (2013) Altmetrics: research council responds. Nature 494(7436):176

Waldrop MM (2008) Science 2.0. Sci Am 298(5):68–73

Weller K, Peters I (2012) Twitter for scientific communication. How can citations/references be identified and measured? In: Tokar A, Beurskens M, Keuneke S, Mahrt M, Peters I, Puschmann C, van Treeck T, Weller K (eds) Science and the internet. Düsseldorf University Press, Düsseldorf, pp 211–224

Weller K, Mainz D, Mainz I, Paulsen I (2007) Wissenschaft 2.0? Social Software im Einsatz für die Wissenschaft. In: Ockenfeld M (ed) Information in Wissenschaft, Bildung und Wirtschaft. Proceedings der 29. Online-Tagung der DGI, Frankfurt am Main, pp 121–136

Weller K, Dornstädter R, Freimanis R, Klein RN, Perez M (2010) Social software in academia: three studies on users’ acceptance of Web 2.0 services. In: Proceedings of the 2nd web science conference (WebSci10), Raleigh

Zahedi Z, Costas R, Wouters P (2014) How well developed are altmetrics? A cross-disciplinary analysis of the presence of ‘alternative metrics’ in scientific publications. http://arxiv.org/abs/1404.1301. Accessed 15 June 2014

Copyright information

© 2015 Springer International Publishing Switzerland

About this chapter

Cite this chapter

Weller, K. (2015). Social Media and Altmetrics: An Overview of Current Alternative Approaches to Measuring Scholarly Impact. In: Welpe, I., Wollersheim, J., Ringelhan, S., Osterloh, M. (eds) Incentives and Performance. Springer, Cham. https://doi.org/10.1007/978-3-319-09785-5_16

DOI: https://doi.org/10.1007/978-3-319-09785-5_16

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-09784-8

Online ISBN: 978-3-319-09785-5

eBook Packages: Business and Economics; Business and Management (R0)