Abstract

This study emphasises the disciplinary differences observed in the behaviour of citations and downloads. These differences were exemplified by studying citations over the last 10 years in four selected fields: arts and humanities; computer science; economics, econometrics and finance; and oncology. Differences in obsolescence characteristics were studied using synchronic as well as diachronic counts. Furthermore, differences between document types were taken into consideration and correlations between journal impact and journal usage measures were calculated. The number of downloads per document remains almost constant for all four observed areas within the last four years, varying from approximately 180 (oncology) to 300 (economics). The percentage of downloaded documents is higher than 90 % for all areas. The number of citations per document ranges from one (arts and humanities) to three (oncology). The percentages of cited documents range from 40 to 56 %. According to our study, 50–140 downloads correspond to one citation. A differentiation according to document type reveals further download- and citation-specific characteristics for the observed subject areas. This study points to the fact that citations can only measure the impact in the ‘publish or perish’ community; however, this approach is neither applicable to the whole scientific community nor to society in general. Downloads may not be a perfect proxy to estimate the overall usage. Nevertheless, they measure at least the intention to use the downloaded material, which is invaluable information in order to better understand publication and communication processes. Usage metrics should consider the unique nature of downloads and ought to reflect their intrinsic differences from citations.

Introduction

Due to the steadily increasing popularity of electronic journals, the tracking and collection of usage data has become much easier than it was in the print-only era. Thanks to the global availability of e-journals, it is now possible to observe scholarly communication from the reader’s perspective as well (Duy and Vaughan 2006; Rowlands and Nicholas 2007). As a result of e-journals, usage metrics, and particularly similarities and differences of reads and citations, have become a central issue in bibliometric research (Kurtz et al. 2005; Kurtz and Bollen 2010). Accordingly, usage metrics can be regarded as complementary to citation metrics (e.g. Bollen et al. 2005). In comparison to citation data, usage data have apparent advantages like easier and cheaper data collection, faster availability, and the reflection of a broader usage scope (Bollen et al. 2005; Brody et al. 2006; Duy and Vaughan 2006; Bollen and Van de Sompel 2008; Schloegl and Gorraiz 2010; Haustein 2011; Haustein 2012). Usage data, however, present a few disadvantages: (1) The availability of global usage data is restricted. (2) There is a risk of inflation by manual or automatic methods. (3) There are different access channels to scholarly resources (e.g. publisher websites vs. subject repositories vs. institutional repositories) (Gorraiz and Gumpenberger 2010).

Usage and citation analyses can be performed at both local and global levels. Since usage data are available to all libraries with existing license agreements for e-journals, examples of local usage studies are manifold (e.g. Darmoni et al. 2002; Coombs 2005; Duy and Vaughan 2006; Kraemer 2006; McDonald 2007; Bollen and Van de Sompel 2008). Unlike local usage analyses, global usage studies are uncommon and depend on the cooperation of publishers in providing data for scientific purposes, for example, within the framework of different cooperation programs like the Elsevier Bibliometric Research Program (EBRP) (e.g. Guerrero-Bote and Moya-Anegón 2013).

In contrast to journals from traditional publishing houses, gold open access journals, like all journals from the Public Library of Science, offer usage data (views, downloads) freely available on their websites. These are promising data sources for future studies on usage and alternative metrics. Furthermore, the platform SciVerse ScienceDirect offers the free service ‘Top 25 Hottest Articles’ in each category, which provides lists of most read articles, counted by article downloads from ScienceDirect either by journal (more than 2,000 titles) or by subject (24 core subject areas) level.

The correlation between citation and usage data is highly dependent on the discipline’s publication output and has been well documented in several studies (Bollen et al. 2005; Coats 2008; Moed 2005; Brody et al. 2006; Chu and Krichel 2007; O’Leary 2008; Watson 2009; Wan et al. 2010). Haustein and Siebenlist (2011) have focused on the evaluation of journal readership against the background of global download statistics by evaluating the usage of physics journals on social bookmarking platforms. Several usage indicators have been suggested in recent years. Most of them are based on the classical citation indicators from the Journal Citation Reports (JCR), using download data (usually full-text article requests) instead of citations. The corresponding usage metrics are ‘usage impact factor’ (Rowlands and Nicholas 2007; Bollen and Van de Sompel 2008), ‘usage immediacy index’ (Rowlands and Nicholas 2007) or ‘download immediacy index’ (Wan et al. 2010), and ‘usage half-life’ (Rowlands and Nicholas 2007).

The authors of the present study have already performed a few analyses focusing on usage data for oncology and pharmacology journals provided by ScienceDirect (Schloegl and Gorraiz 2010; Schloegl and Gorraiz 2011). In this study, the following issues have been addressed:

- comparison of download and citation frequencies at category level (disciplinary differences exemplified by means of four selected fields: arts and humanities, computer science, economics, econometrics and finance, and oncology),
- disciplinary differences in obsolescence characteristics between citations and downloads using synchronic and diachronic counts,
- differences between document types, and
- correlations between different journal impact and journal usage measures.

This contribution is an extension of our previous work presented at the 14th International Society of Scientometrics and Informetrics (ISSI) Conference (Gorraiz et al. 2013). In this article, we put a stronger emphasis on elaborating clear differences among the analysed disciplines. Furthermore, psychology journals, though included in the data, were not treated as a subject category of their own because their small number could distort the results.

Methodology and data

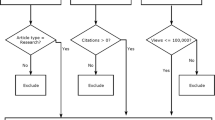

All data were provided within the scope of the EBRP, 2012. The analysed data pool includes usage data for the four ScienceDirect categories: arts and humanities (A&H, 37 journals), computer science (CS, 150 journals), economics, econometrics and finance (ECO, 133 journals), and oncology (ONC, 42 journals). The following data from ScienceDirect have been used at journal level (all for the period 2002–2011):

- total number of downloadable items for each year,
- number of downloadable items disaggregated by document types for each year,
- download counts disaggregated by document type for each download year as well as for each publication year available within the given time period, and
- citation counts from Scopus for each citation year and disaggregated by the various publication years (from citation year back to 2002).

All journals within a subject category were aggregated and considered as ‘one big journal’. This way, the number of all downloads within the category and the number of citations to all journals in the category were taken into account. Resulting values are averages per document. The metrics were applied at the synchronic level (the reference point for the calculation is the download or citation year) as well as at the diachronic level (the reference point is the publication year, followed through the subsequent citation or download years). Timelines for downloads per item as well as for citations per item have been provided in order to study the occurring obsolescence patterns.

The common document types in ScienceDirect (articles, reviews, conference papers, editorial materials, letters, notes, and short communications) were differentiated accordingly. Notes and research notes could not be distinguished. In addition, the evolution of articles in press (AIP) was analysed.

Correlations between downloads and citations were calculated at synchronic as well as at diachronic levels for each of the four ScienceDirect categories using Spearman’s correlation coefficient. Besides calculating the correlation between the absolute numbers, we also used relative journal indicators. However, because the majority of downloads take place in the current and subsequent years of publication (Schloegl and Gorraiz 2010), a usage impact factor relying on the same time window as the impact factor would be flawed. We therefore suggest a journal usage factor (JUF), which reflects not only the two retrospective years but also the current reference year. The JUF is defined as the number of downloads in the reference year of journal items published in that year and in the previous two years, divided by the number of items published in these three years. In contrast to the usual two-year time window, this three-year interval captures a significant number of downloads in most cases (Gorraiz and Gumpenberger 2010). Correspondingly, an adapted version of Garfield’s impact factor (GIF) is used in this study, which also considers the reference year along with the previous two years. This indicator is labelled total impact factor (TIF) because it also includes the immediacy index.
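The two indicators defined above can be written compactly. The notation is an assumption introduced here for illustration and does not appear in the source: $D_y(p)$ and $C_y(p)$ denote the downloads and citations received in reference year $y$ by items published in year $p$, and $N(p)$ is the number of items published in year $p$. The classical two-year impact factor is shown alongside for comparison; it differs from the TIF only in leaving out the reference year.

```latex
\mathrm{JUF}_y = \frac{\sum_{p=y-2}^{y} D_y(p)}{\sum_{p=y-2}^{y} N(p)},
\qquad
\mathrm{TIF}_y = \frac{\sum_{p=y-2}^{y} C_y(p)}{\sum_{p=y-2}^{y} N(p)},
\qquad
\mathrm{GIF}_y = \frac{\sum_{p=y-2}^{y-1} C_y(p)}{\sum_{p=y-2}^{y-1} N(p)}
```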

In order to test the stability of the JUF(2) defined above, we experimented with two versions of this indicator using longer time windows:

- JUF(5) = number of downloads in 2010 (= reference year) of documents published in the years 2005–2010 divided by the number of documents published in 2005–2010 (reference year plus 5-year window) and
- JUF(8) = number of downloads in 2010 of documents published in the years 2002–2010 divided by the number of documents published in 2002–2010 (reference year plus 8-year window).

In accordance with this and using citations instead of downloads, we calculated TIF(2), TIF(5), and TIF(8). GIF(2), GIF(5), and GIF(8) correspond to Garfield’s journal impact factor for 2, 5, and 8 years respectively, without consideration of the first year (=reference year) but including all document types. Correlations were then calculated for all journals included in each category.
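The indicator family described above (JUF, TIF and GIF with 2-, 5- and 8-year windows) reduces to one ratio with two switches: the window length and whether the reference year is included. The following is a minimal sketch under that reading. The function name `windowed_indicator` and all numbers are hypothetical and chosen only to make the arithmetic easy to follow; they are not data from the study.

```python
def windowed_indicator(counts, items, reference_year, window,
                       include_reference_year=True):
    """Events per item for one journal (or one aggregated category).

    counts[p]: downloads or citations received in the reference year
               by items published in year p.
    items[p]:  number of items published in year p.
    """
    last_year = reference_year if include_reference_year else reference_year - 1
    years = range(reference_year - window, last_year + 1)
    total_items = sum(items.get(year, 0) for year in years)
    if total_items == 0:
        raise ValueError("no items published in the selected window")
    total_counts = sum(counts.get(year, 0) for year in years)
    return total_counts / total_items


# Invented toy data for one journal, reference year 2010.
items = {2008: 100, 2009: 120, 2010: 80}
downloads_in_2010 = {2008: 9000, 2009: 15000, 2010: 21000}
citations_in_2010 = {2008: 300, 2009: 250, 2010: 50}

juf2 = windowed_indicator(downloads_in_2010, items, 2010, window=2)
tif2 = windowed_indicator(citations_in_2010, items, 2010, window=2)
gif2 = windowed_indicator(citations_in_2010, items, 2010, window=2,
                          include_reference_year=False)
print(juf2, tif2, gif2)
```

Passing `window=5` or `window=8` gives the longer-window variants, provided the dictionaries cover the corresponding publication years.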

Results and discussion

Synchronic analysis

Comparison of download and citation rates per document type among disciplines

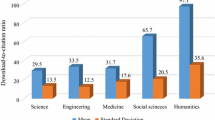

Table 1 shows the download and citation distribution for the various document types within the four investigated disciplines. The citation and download numbers are from the year 2011 and apply to documents published since 2002 (publication year window = 10 years). As can be seen, download and citation frequencies are subject specific. The citation rate per document clearly depends on the discipline. It is lowest in arts and humanities (1.18 citations per document), nearly twice as high in computer science (2 citations per document) and economics (1.85 citations per document) and three times higher in oncology (2.9 citations per document). The picture is clearly different for downloads. By far, most downloads are made in economics (approximately 250 downloads per document). For the three other disciplines, the download level per document is approximately the same: 167 in arts and humanities, 155 in oncology, and 139 in computer science. This clearly shows that the disciplines with the highest citation rates are not those with the highest download rates. Moreover, the proportion of downloaded documents is remarkably higher than the proportion of cited documents. In all four disciplines, more than 90 % of all documents published between 2002 and 2011 were downloaded in 2011. This proportion was highest in economics (96 %) and computer science (98 %). The corresponding values for the citations were between 43 % in arts and humanities and 59 % in oncology.

In each discipline, specific document types play significant roles. The number of both downloads and citations per document are higher for reviews than for research articles in computer science and oncology. Letters are more frequently downloaded and conference proceedings more often cited in arts and humanities than in other disciplines. Furthermore, short communications are most frequently downloaded in computer science, economics and oncology, and most frequently cited in computer science and economics. With a download frequency of 120–250 downloads per document, the importance of articles in press is evident in all disciplines.

In order to corroborate these results, download and citation statistics were retrieved for the download/citation years 2008–2011, considering a publication year window of five years for the downloads and seven years for the citations. As Table 2 shows, there is little variance in the four-year interval regarding the percentage of downloaded and cited documents as well as in the download and citation rates per document.

Timelines of downloads and citations per document

The timelines of downloads and citations are comparatively shown for all four categories in Figs. 1, 2, 3, and 4 below. The x-axis represents the publication years of the downloaded/cited documents, whereas the multi-coloured lines represent the different download/citation years.

Considering downloads, similar trend lines can be observed for all four categories. In all four categories, the first two years post publication account for the highest downloads. Disciplinary differences only occur regarding the absolute download values, as was illustrated previously.

Synchronic citation counts differ in their development from discipline to discipline. For oncology, the citation maximum is reached two years after publication, followed by a decrease afterwards. For computer science, this interval increases to three to four years, and for economics, econometrics and finance to five to six years. After these intervals, stagnation rather than a decrease can be observed. For arts and humanities this interval is longer overall.

Diachronic analysis: timelines of downloads and citations per document

The timelines of downloads and citations are comparatively shown for all four categories in Figs. 5, 6, 7, and 8 below. The x-axis represents the download/citation years of the downloaded/cited documents, whereas the multi-coloured lines represent the different publication years.
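The two count modes differ only in which year is held fixed. A minimal sketch, assuming the per-document rates are stored in a nested mapping `counts[publication_year][use_year]` (a hypothetical layout with invented values, not the study's data format):

```python
def synchronic_series(counts, use_year):
    """Fix the download/citation year and vary the publication year."""
    return {
        pub_year: by_use_year[use_year]
        for pub_year, by_use_year in sorted(counts.items())
        if use_year in by_use_year
    }


def diachronic_series(counts, pub_year):
    """Fix the publication year and follow it through the use years."""
    return dict(sorted(counts.get(pub_year, {}).items()))


# Invented per-document rates: counts[publication_year][use_year].
counts = {
    2009: {2009: 1.0, 2010: 2.0, 2011: 3.0},
    2010: {2010: 4.0, 2011: 5.0},
    2011: {2011: 6.0},
}

print(synchronic_series(counts, 2011))   # one line of a synchronic figure
print(diachronic_series(counts, 2009))   # one line of a diachronic figure
```

Each call returns the points of a single coloured line: in the synchronic figures the keys are publication years, in the diachronic figures they are download or citation years.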

Considering downloads, the results do not differ for the diachronic counts. The trend lines show a very similar run for all four analysed subject categories, namely a steady and steep curve progression.

Higher download averages have been identified for oncology and for economics, econometrics and finance (see Figs. 7, 8), with maximum values between 450 and 500 in 2009 for publications of the same year, followed by computer science and arts and humanities (see Figs. 5, 6) with maximum values between 300 and 350 in 2009 for publications of the same year.

For citations, the results from diachronic counts show different obsolescence patterns depending on the research field. There is a steady increase in citations within the first 10 years for arts and humanities (Fig. 5) and economics, econometrics and finance (Fig. 7). In computer science (Fig. 6), by contrast, stagnation occurs after the first six to seven years for the older articles (2002–2004); for the other years, data availability is too sparse for solid evidence. Oncology (Fig. 8) is the only exception where a decrease can be observed after the second year.

Average citation frequency is also different for the various categories. Average counts are below two for arts and humanities, below three for computer science and economics, econometrics and finance, and below 4.5 for oncology.

Diachronic counts for different document types: timelines of downloads and citations per document

The diachronic count mode with fixed publication years gives a good picture of the citation and download trends for each document type over the last 10 years. Timelines for each discipline (see Notes) can be seen in Figs. 9, 10, 11, 12, 13, and 14 below. The x-axis represents the download/citation years of the downloaded/cited documents, whereas the multi-coloured lines represent the different publication years.

Figures 9–14 show very similar download timelines for all document types. The number of downloads of review articles is about twice as high as of articles for the last three years (2009–2011). Articles, in turn, are downloaded almost twice as often as letters. The timeline results for short communications are similar to the ones observed for letters, with the difference that the latter document type is approximately downloaded three times less often. The availability of notes was restricted and therefore the obtained results were too sparse to be presented here.

Citation timelines are all similar for articles, review articles, and conference proceedings, showing a steady increase at the beginning and reaching stagnation after a while. On the one hand, review articles clearly acquire more citations than articles. On the other hand, they reach the stagnation phase earlier. Conference proceedings remain less cited than articles. Editorials and letters are mostly cited within the first three years after publication, although at a very low level.

Data about AIP were only available from 2007 onwards. Their growth and the evolution of their download rates are represented in Table 3. Although inconsistencies were observed for the data provided by Elsevier for the years 2008 and 2009, this analysis indicates an overall growth of AIPs in number and in terms of their download frequencies.

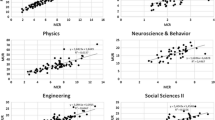

Correlations between synchronic and diachronic downloads and citations (absolute values) at journal level for each category

Spearman correlations between the total number of downloads and the total number of citations were calculated for each publication year (diachronic mode) as well as for each download/citation year (synchronic mode) for all journals with nearly complete data for the interval 2002–2011 (see Table 4).
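For readers who want to reproduce this kind of journal-level comparison, Spearman's coefficient is the Pearson correlation of the ranks, with tied values sharing their average rank. The sketch below is a plain-Python illustration with invented journal totals; it is not the authors' code, and a statistics library such as SciPy (`scipy.stats.spearmanr`) would normally be used instead.

```python
from math import sqrt


def average_ranks(values):
    """Return 1-based ranks; tied values receive the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = shared_rank
        i = j + 1
    return ranks


def spearman(x, y):
    """Spearman rank correlation between two equally long sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    rx, ry = average_ranks(x), average_ranks(y)
    mean_x, mean_y = sum(rx) / len(rx), sum(ry) / len(ry)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(rx, ry))
    sd_x = sqrt(sum((a - mean_x) ** 2 for a in rx))
    sd_y = sqrt(sum((b - mean_y) ** 2 for b in ry))
    if sd_x == 0 or sd_y == 0:
        raise ValueError("correlation undefined for constant input")
    return cov / (sd_x * sd_y)


# Invented totals for five journals in one category.
downloads = [1200, 300, 4500, 800, 2500]
citations = [15, 2, 40, 30, 22]
rho = spearman(downloads, citations)
print(round(rho, 3))
```

Because only ranks enter the calculation, a few journals with very large download totals do not dominate the coefficient.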

The diachronic mode, considering the total number of citations and downloads for each publication year, should be the most appropriate way to determine the strength of the correlation between downloads and citations at the journal level. However, the download and citation windows need to be long enough. Thus, significant correlations were expected for the earliest publication years (2002 and 2003), where the citation/download windows are large enough. Nevertheless, the results presented in Table 4 are not in agreement with this assumption. The reason might be the strong increase in e-journal usage between 2003 and 2008, consolidating afterwards, which caused a certain distortion of download counts in the transition years. The same explanation applies to the synchronic correlations reported for the later years (for instance, the 10-year window in 2011), which are considerably higher for all subject areas than the diachronic ones for 2002, the corresponding year with the largest citation/download window (10 years). In spite of these observations, high diachronic correlations were observed for economics, econometrics and finance as well as for oncology. Correlations for computer science and for arts and humanities were slightly lower. Also, for the synchronic correlations, the highest values can be observed for oncology and for economics, econometrics and finance, followed by computer science and arts and humanities.

Correlations between JUF, TIF and GIF at journal level for each category

Spearman correlations among JUF, TIF, and GIF were compiled for the year 2010 for each journal with nearly complete data availability for the interval 2002–2010 in each category (see Table 5). The same correlations were also calculated for the year 2011 with no appreciable differences.

Table 5 shows that significant correlations between JUF and TIF were observed in all subject categories. The application of different time windows (2, 5, or 8 years) has nearly no influence, since the corresponding correlations are all very high. Furthermore, it makes nearly no difference whether the reference year is considered (TIF) or not (GIF) when calculating the impact factor.

Discussion

For all four subject categories, the results of this study corroborate in most instances the findings of previous analyses by Schloegl and Gorraiz (2010, 2011), who already observed a significant increase in the usage of ScienceDirect e-journals in oncology and also pharmacology in the period 2002–2006.

There is a clear difference between downloads and citations with regard to the proportion of downloaded/cited documents. While more than 90 % of all documents published between 2002 and 2011 were downloaded in 2011, the corresponding percentage for citations is between 42 and almost 60 %. As with citations, the number of downloads depends on the discipline. While an average document in arts and humanities was cited 1.2 times, approximately twice as many citations were made per document in computer science and economics (2.0), and three times as many citations were made per document in oncology (2.9). The variance was smaller for the average number of downloads: 253 in economics, 155 in oncology, 167 in arts and humanities, and 139 in computer science. The disciplines with the highest citation rates are different from those with the highest download rates. One possible explanation is that different citation rates among disciplines are caused by different citation behaviours, whereas download frequencies in a discipline might be more strongly influenced by the size of the particular user population. According to our study and considering a publication window of 10 years (see Table 1), 50 (oncology) to 140 (arts and humanities) downloads correspond to one citation depending on the category.
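The downloads-per-citation range can be checked approximately from the per-document rates quoted in the text for 2011. The sketch below simply divides one average by the other, which is an assumption about how the ratio was obtained; the structure and names are illustrative only.

```python
# (downloads per document, citations per document) as quoted in the text for 2011.
rates = {
    "arts and humanities": (167, 1.18),
    "computer science": (139, 2.0),
    "economics, econometrics and finance": (253, 1.85),
    "oncology": (155, 2.9),
}


def downloads_per_citation(downloads_per_doc, citations_per_doc):
    """Ratio of the two per-document averages for one category."""
    if citations_per_doc <= 0:
        raise ValueError("citation rate must be positive")
    return downloads_per_doc / citations_per_doc


ratios = {
    field: downloads_per_citation(downloads, citations)
    for field, (downloads, citations) in rates.items()
}
for field, ratio in sorted(ratios.items(), key=lambda pair: pair[1]):
    print(f"{field}: about {ratio:.0f} downloads per citation")
```

With these inputs the lowest ratio falls to oncology (roughly 53) and the highest to arts and humanities (roughly 142), which is in line with the stated range of 50 to 140.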

Unfortunately, there are hardly any studies available that are based on global download data at journal and category level for comparative purposes. However, the authors themselves reported in previous studies a ratio between citations and downloads of around 1:37 in the category oncology, comprising 29 journals in the transition interval between 2004 and 2006 (Schloegl and Gorraiz 2010), and a citation download ratio of 1:49 in the category pharmacy, comprising 30 journals (Schloegl and Gorraiz 2011). These former findings correspond well with the results of this study (consider for example oncology, ratio of 1:50).

Document types seem to play different roles in different disciplines. The number of both downloads and citations per document are higher for reviews than for research articles in computer science and oncology. Letters are more frequently downloaded and conference proceedings more often cited in arts and humanities than in other disciplines. Furthermore, short communications are more frequently downloaded in computer science, economics, and oncology, and more frequently cited in computer science and economics.

The diachronic count mode with fixed publication years is more suitable to analyse the overall increase in e-journal usage over time. The trend lines for downloads are very similar for all four analysed subject categories. The steady and steep curve progressions illustrate the rapid adoption of electronic journals by the research community, which has definitely sped up the process of scholarly communication in the last decade.

Results from synchronic download counts have indicated that the first two years post publication account for the highest downloads. The exclusion of the reference year is, therefore, no longer valid for the sound construction of the journal usage factor. Usage metrics should consider the special nature of downloads and ought to reflect their intrinsic differences from citations. In citation metrics, the common non-consideration of the ‘immediacy year’ (as for Garfield’s impact factor and almost all journal impact measures like SJR or SNIP) is well grounded in the existing citation delay. This is also confirmed by our study since the inclusion of the ‘immediacy index’ in the impact factor (GIF) (which we named TIF) did not result in considerable changes in any of the disciplines.

Contrary to the download analyses, the results for diachronic and synchronic citation counts reveal not only rather different obsolescence patterns depending on the research field, but also different citation frequencies.

Regarding document types, the timelines of downloads are very similar in general. Differences only occur in the download rates per document type. For citations, similarities only exist between articles, review articles, and conference papers. Average citation frequencies differ from document type to document type. Review articles are cited more often overall than articles, but they reach the stagnation phase earlier.

The correlations between impact and usage factors were lower than those between the absolute values. Furthermore, the results of this study suggest that the choice of time window for the calculation of JUF, TIF, or GIF makes little difference. The high correlations observed for GIF(2) and GIF(5) are in agreement with the correlations calculated in the 2010 edition of the JCR for oncology (0.99; 145 journals), computer sciences (0.92; 395 journals), and economics, business, and finance (0.95; 237 journals). The correlation for all the journals of the Journal Citation Reports Science Edition (JCR-SCI) (6,717 journals) was 0.97, and for the overall Journal Citation Reports Social Sciences Edition (JCR-SSCI) (1,995 journals) 0.94.

A new document type evolved in the digital era. Articles in press have become more and more common in recent years and are particularly interesting regarding usage metrics. They could even play an important role to project future downloads or even citations. However, further analyses at publication level are required to gain more insight to underpin this argument.

General conclusions

The download differences reported in this study suggest that citations can only measure the impact in the ‘publish or perish’ community, in which the characteristics of documents and their size differ from discipline to discipline. However, the spectrum of disciplinary target groups is much broader. The audience can belong to different sectors of the ‘triple helix’ (academic, governmental, industrial) or even be the whole society (societal impact).

As a logical consequence, articles are often downloaded many times but remain uncited due to the fact that they are used for other purposes (pure information, learning, teaching, etc.) apart from the publish or perish ‘game’.

Citations are insufficient to assess the impact of the research output in many disciplines. The digital era offers the opportunity to look at the wider picture by also including downloads, views, and social bookmarks, which can then be further analysed by means of usage metrics and altmetrics. The big challenge, admittedly, is to agree on standards of what exactly these proxies are intended to measure.

It can certainly be debated whether downloads are appropriate to measure usage, since many articles might be downloaded, but will remain unread or unused. On the other hand, it is generally known that many citations find their way into reference lists without prior reading of the cited material. Nevertheless, citations have become an accepted proxy for impact.

The authors are convinced that an active download is at least a statement of intent to use the downloaded material. Taking downloads into consideration as a complementary aspect will broaden our bibliometric citation-restricted horizon and help to better understand the complex processes in scientific communication.

Notes

Besides the four subject categories already mentioned, we were also provided with the data for eight psychology journals which were included in this analysis. However, due to their small number, we did not consider them as their own subject category.

References

Bollen, J., & Van de Sompel, H. (2008). Usage impact factor: The effects of sample characteristics on usage-based impact metrics. Journal of the American Society for Information Science and Technology, 59(1), 136–149.

Bollen, J., Van de Sompel, H., Smith, J. A., & Luce, R. (2005). Toward alternative metrics of journal impact: A comparison of download and citation data. Information Processing and Management, 41, 1419–1440. http://public.lanl.gov/herbertv/papers/ipm05jb-final.pdf. Accessed 26 Nov 2008.

Brody, T., Harnad, S., & Carr, L. (2006). Earlier web usage statistics as predictors of later citation impact. Journal of the American Society for Information Science and Technology, 57(8), 1060–1072.

Chu, H., & Krichel, T. (2007). Downloads vs. citations in economics: Relationships, contributing factors and beyond. In D. Torres-Salinas & H.F. Moed (Eds.), Proceedings of the 11th International Society for Scientometrics and Informetrics Conference (pp. 207–215). Madrid, Spain.

Coats, A. J. S. (2008). The top papers by download and citations from the International Journal of Cardiology in 2007. International Journal of Cardiology, 131, e1–e3.

Coombs, K. A. (2005). Lessons learned from analyzing library database usage data. Library Hi Tech, 23(4), 598–609.

Darmoni, S. J., Roussel, F., Benichou, J., Thirion, B., & Pinhas, N. (2002). Reading factor: A new bibliometric criterion for managing digital libraries. Journal of the Medical Library Association, 90(3), 323–327.

Duy, J., & Vaughan, L. (2006). Can electronic journal usage data replace citation data as a measure of journal use? An empirical examination. The Journal of Academic Librarianship, 32(5), 512–517.

Gorraiz, J., & Gumpenberger, C. (2010). Going beyond citations: SERUM—a new tool provided by a network of libraries. Liber Quarterly, 20, 80–93.

Gorraiz, J., Gumpenberger, C., & Schlögl, C. (2013). Difference and similarities in usage versus citation behaviours observed for five subject areas. In J. Gorraiz, E. Schiebel, C. Gumpenberger, M. Hörlesberger, & H. Moed (Eds.), Proceedings of the 14th International Society of Scientometrics and Informetrics Conference, Vienna, 15th-18th July (Vol. 1, pp. 519–535). Austria: Vienna.

Guerrero-Bote, V. P., & Moya-Anegón, F. (2013). Relationship between downloads and citation and the influence of language. In J. Gorraiz, E. Schiebel, C. Gumpenberger, M. Hörlesberger, & H. Moed (Eds.), Proceedings of the 14th International Conference on Scientometrics and Informetrics, 15th-18th Jul (Vol. 2, pp. 1469–1484). Austria: Vienna.

Haustein, S. (2011). Taking a multidimensional approach toward journal evaluation. In Proceedings of the 13th ISSI Conference, Durban, South Africa, 4th–7th July (Vol. 1, pp. 280–291).

Haustein, S. (2012). Using social bookmarks and tags as alternative indicators of journal content description. First Monday, 17, 5 November 2012. http://firstmonday.org/ojs/index.php/fm/article/view/4110. Accessed 25 Feb 2014.

Haustein, S., & Siebenlist, T. (2011). Applying social bookmarking data to evaluate journal usage. Journal of Informetrics, 5(3), 446–457.

Kraemer, A. (2006). Ensuring consistent usage statistics, part 2: working with use data for electronic journals. The Serials Librarian, 50(1/2), 163–172.

Kurtz, M. J., & Bollen, J. (2010). Usage bibliometrics. Annual Review of Information Science and Technology, 44, 3–64.

Kurtz, M. J., Eichhorn, G., Accomazzi, A., Grant, C., Demleitner, M., Murray, S. S., et al. (2005). The bibliometric properties of article readership information. Journal of the American Society for Information Science and Technology, 56(2), 111–128.

McDonald, J. D. (2007). Understanding journal usage: A statistical analysis of citation and use. Journal of the American Society for Information Science and Technology, 58(1), 39–50.

Moed, H. F. (2005). Statistical relationships between downloads and citations at the level of individual documents within a single journal. Journal of the American Society for Information Science and Technology, 56(10), 1088–1097.

O’Leary, D. E. (2008). The relationship between citations and number of downloads in Decision Support Systems. Decision Support Systems, 45(4), 972–980.

Rowlands, I., & Nicholas, D. (2007). The missing link: Journal usage metrics. Aslib Proceedings, 59(3), 222–228.

Schloegl, C., & Gorraiz, J. (2010). Comparison of citation and usage indicators: The case of oncology journals. Scientometrics, 82(3), 567–580.

Schloegl, C., & Gorraiz, J. (2011). Global usage versus global citation metrics: The case of pharmacology journals. Journal of the American Society for Information Science and Technology, 61(1), 161–170.

Wan, J. K., Hua, P. H., Rousseau, R., & Sun, X. K. (2010). The download immediacy index (DII): Experiences using the CNKI full-text database. Scientometrics, 82(3), 555–566.

Watson, A. B. (2009). Comparing citations and downloads for individual articles. Journal of Vision, 9(4), 1–4.

Acknowledgments

This paper is partly based on anonymised ScienceDirect usage data and/or Scopus citation data kindly provided by Elsevier within the framework of the EBRP.

Cite this article

Gorraiz, J., Gumpenberger, C. & Schlögl, C. Usage versus citation behaviours in four subject areas. Scientometrics 101, 1077–1095 (2014). https://doi.org/10.1007/s11192-014-1271-1

DOI: https://doi.org/10.1007/s11192-014-1271-1