Abstract

It is the objective of this article to examine in which aspects journal usage data differ from citation data. This comparison is conducted both at journal level and on a paper-by-paper basis. At journal level, we define a so-called usage impact factor and a usage half-life in analogy to the corresponding Thomson citation indicators. The usage data were provided by Science Direct for the subject category “oncology”. Citation indicators were obtained from JCR; article citations were retrieved from SCI and Scopus. Our study shows that downloads and citations have different obsolescence patterns. While the average cited half-life was 5.6 years, we computed a mean usage half-life of 1.7 years for the year 2006. We identified a strong correlation between citation frequencies and the number of downloads for our journal sample. The relationship was weaker when the analysis was performed on a paper-by-paper basis because the citation-download ratio varies among articles. The correlation between the usage impact factor and Thomson’s journal impact factor was also “only” moderate because of the different obsolescence patterns of downloads and citations.


Introduction

After the advent of electronic journals, it has become much easier to collect journal usage data. Unlike in the print era, it is now possible to view scholarly communication through the eyes of the reader (Rowlands and Nicholas 2007, p. 223). Besides easy and cheap data recording, usage metrics have further advantages over citation metrics: they are available much sooner and reflect use on a much broader scope (Duy and Vaughan 2006, p. 513). While citations can be considered an indirect measure of use but a direct measure of usefulness, reads could be seen the other way round. Accordingly, usage metrics can be regarded as complementary to citation metrics (e.g. Armbruster 2007; Bollen et al. 2005). Since e-journal usage data have only been available for a few years, there is a need for further research. Kurtz et al. (2005) even expect the similarities and differences between reads and citations to become a central issue of bibliometric research.

Several usage indicators have been suggested in recent years. For instance, Darmoni et al. (2002) introduced a so-called reading factor. Most suggestions are based on the classical citation indicators from the Journal Citation Reports (JCR), using download data (usually full-text article requests) instead of citations. The corresponding usage metrics are the usage impact factor (UIF) (Rowlands and Nicholas 2007; Bollen and Van De Sompel 2008), the usage immediacy index (Rowlands and Nicholas 2007) or download immediacy index (Wan et al. 2008), and the usage half-life (Rowlands and Nicholas 2007).

According to Bollen and Van de Sompel (2008), comparisons between citation and usage data can be made at a local and at a global level. Most of the existing studies used local usage data. Darmoni et al. (2002) accessed data from the Rouen University Hospital digital library and compared download and JIF rankings. Duy and Vaughan (2006), who analysed journal titles from the subject areas of chemistry and biochemistry at the Concordia University library, found a significant correlation between electronic journal usage and local journal citation data but no relationship with the JIF. McDonald (2007) also detected a moderate relationship between local article downloads and locally collected citations for more than 1,500 journals (mainly from the sciences) owned by the California Institute of Technology. Bollen and Van de Sompel (2008) collected usage data from the entire California State University system and aggregated them for more than 6,000 journals. Their analysis shows a modest negative correlation between the UIF and the JIF. The analysis at discipline level revealed that correlations differ between disciplines. A weak positive relationship could be observed especially for disciplines with a higher proportion of graduate students and graduate faculty.

Global usage studies were mainly performed using subject repositories and open access journals. Chu and Krichel (2007) investigated the top 200 documents at RePEc, a digital library in economics. They related the number of downloads to the number of citations from SSCI and Google Scholar and found a moderate correlation in both cases. Brody et al. (2006) used download and citation data from arXiv.org and identified a significant and “sizeable” correlation between citations and downloads, which varied between 0.33 and 0.46 depending on the field considered. Kurtz et al. (2005) used the NASA Astrophysics Data System for their research. Besides examining the relationship between citation and readership numbers, the authors also compared the obsolescence function as measured by readership with the obsolescence function as measured by citations. Another study investigating the relationship between downloads and citations, as well as possible differences in obsolescence patterns, for articles published in a single journal was conducted by Moed (2005). In particular, Moed tried to determine the effect of citations on downloads and the effect of downloads made during the first three months of an article’s lifespan on later citations. Wan et al. (2008) concentrated on the download immediacy index and explored its relationships with several citation indicators. They retrieved the download data from the Chinese Academic Journal Full-Text Database and extracted the citation indicators from the Chinese Academic Journals Comprehensive Citation Report, which is a counterpart to JCR.

Methodology

It is the objective of this contribution to examine on a global scale in which aspects journal usage metrics differ from citation metrics. In particular, the following issues will be addressed:

- growth in e-journal use;
- comparison of citations and downloads (absolute and relative);
- differences in obsolescence characteristics.

We conducted the last two analyses at journal level and, for a few journals, also on a paper-by-paper basis.

In our study, we employ a usage impact factor (UIF) and a usage half-life (UHL), both of which are calculated in analogy to the corresponding Thomson/ISI citation indicators. Since the journal impact factor (JIF) only considers citable documents, we follow Bollen and Van De Sompel (2008, p. 139) and use the article counts of the Journal Citation Reports (JCR). Accordingly, the formula for calculating the UIF for journal j in the year i is

\[ {\text{UIF}}_{j}^{i} = \frac{{\text{FTA}}_{j}^{i \to i - 1} + {\text{FTA}}_{j}^{i \to i - 2}}{{\text{nart}}_{j}^{i - 1} + {\text{nart}}_{j}^{i - 2}} \]

where \( {\text{FTA}}_{j}^{i \to i - 1} \) is the number of full-text article requests in the year i to articles published in journal j in the year i-1; \( {\text{FTA}}_{j}^{i \to i - 2} \) the number of full-text article requests in the year i to articles published in journal j in the year i-2; \( {\text{nart}}_{j}^{i - 1} \) the number of (citable) articles published in journal j in the year i-1 (according to JCR); \( {\text{nart}}_{j}^{i - 2} \) the number of (citable) articles published in journal j in the year i-2 (according to JCR).
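Using the FTA and nart quantities just defined, the UIF can be sketched in a few lines of Python. This is a minimal illustration, not the authors' code: the dictionary layout (counts keyed by publication year) and the function name `usage_impact_factor` are assumptions made for the example, and the journal data are hypothetical.

```python
def usage_impact_factor(fta_by_pub_year, nart_by_pub_year, year):
    """Sketch of the UIF of one journal in `year`.

    fta_by_pub_year:  full-text article requests made in `year`, keyed by the
                      publication year of the requested articles (assumed layout).
    nart_by_pub_year: number of citable articles per publication year (JCR counts).
    """
    window = (year - 1, year - 2)  # the two preceding publication years
    downloads = sum(fta_by_pub_year.get(y, 0) for y in window)
    articles = sum(nart_by_pub_year.get(y, 0) for y in window)
    if articles == 0:
        raise ValueError("no citable articles in the two-year window")
    return downloads / articles


# Hypothetical journal: requests made in 2006, split by publication year.
fta_2006 = {2006: 9000, 2005: 18000, 2004: 12000, 2003: 5000}
nart = {2005: 160, 2004: 140}
print(usage_impact_factor(fta_2006, nart, 2006))  # 30000 / 300 = 100.0
```

Requests to current-year items (2006) and to items older than two years (2003) are deliberately ignored, mirroring the two-year window of the JIF.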

Analogously to the cited half-life, the usage half-life is defined as the median age of a journal’s articles that were downloaded in the considered year. This means that half of a journal’s downloads in that year concerned articles published more recently than the usage half-life.
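A possible way to compute the usage half-life from downloads broken down by publication year is sketched below. It assumes, for illustration, a JCR-style convention in which the current publication year counts as the first year of age and the median is linearly interpolated within the year where the cumulative share crosses 50%; the exact interpolation rule is not spelled out here, and the function name and data are hypothetical.

```python
def usage_half_life(downloads_by_pub_year, year):
    """Median age of the items downloaded in `year` (linear interpolation).

    Assumed convention: items published in `year` occupy the age interval
    [0, 1], items published in `year - 1` the interval [1, 2], and so on.
    """
    # (age at the end of the interval, downloads); only items published up to `year`.
    ages = sorted(
        (year - pub_year + 1, n)
        for pub_year, n in downloads_by_pub_year.items()
        if pub_year <= year
    )
    total = sum(n for _, n in ages)
    if total == 0:
        raise ValueError("no downloads to compute a half-life from")
    half = total / 2
    cum_prev, age_prev = 0, 0
    for age, n in ages:
        cum = cum_prev + n
        if cum >= half:
            # Interpolate linearly inside the interval where 50% is crossed.
            return age_prev + (half - cum_prev) / (cum - cum_prev) * (age - age_prev)
        cum_prev, age_prev = cum, age


downloads_2006 = {2006: 400, 2005: 300, 2004: 200, 2003: 100}
print(round(usage_half_life(downloads_2006, 2006), 2))  # 1.33
```

Under this convention, values below 1 year arise when more than half of a year's downloads concern items from the current publication year.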

The download data were provided by Science Direct (SD). We received the usage data for all SD oncology journals from 2001 to 2006 at journal level. For each journal and each year in this period, the number of downloaded full-text articles (FTAs) was given per publication year, back to 1995 (where data extended that far back). Furthermore, article-level access data were made available for a few journals. For each article, the number of downloads per month was itemized up to the year 2006.

Citation data were obtained from both JCR and Scopus. From JCR, we used the number of (citable) documents, the number of citations, the impact factor, the cited half-life and the cited journal data on which the calculation of the cited half-life is based. For reasons of data reliability, we also retrieved the number of articles and reviews and the number of citations from Scopus and computed the impact factor with the Scopus data.

Results

Growth of article downloads

Table 1 reveals that e-journal use has strongly accelerated, at least for SD oncology journals. Between 2001 and 2006 the number of full-text article requests (FTAs) increased more than tenfold. This strong increase is only partly due to the longer time window of the access data in recent years (see obsolescence characteristics). Calculating the growth rate on the basis of the 19 journals that were already included in Science Direct in 2001 shows that their use increased sevenfold. This suggests that the overall growth was only to some extent due to the larger number of e-journals available. Consequently, a large part of the increase might have been caused by the generally increased acceptance of e-journals at that time. As can also be seen, growth consolidated in recent years, with growth rates no longer as high.
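The distinction between overall growth and growth on a constant set of journals can be illustrated with a small calculation. The sketch below, with hypothetical journal names and download counts, computes a growth factor either over all journals or over a fixed panel (here, the journals that already had downloads in the start year). It only illustrates the comparison described above and is not the authors' procedure.

```python
def growth_factor(fta, start, end, panel=None):
    """Ratio of total downloads in `end` to total downloads in `start`.

    fta:   {journal: {year: downloads}} (assumed layout)
    panel: optional set of journals to restrict the sums to; if None, all
           journals are used, so newly added journals inflate the growth.
    """
    journals = fta.keys() if panel is None else panel

    def total(year):
        return sum(fta[j].get(year, 0) for j in journals)

    base = total(start)
    if base == 0:
        raise ValueError("no downloads in the start year")
    return total(end) / base


fta = {
    "Journal A": {2001: 100, 2006: 700},
    "Journal B": {2006: 400},  # not yet on the platform in 2001
}
panel_2001 = {j for j, years in fta.items() if years.get(2001, 0) > 0}
print(growth_factor(fta, 2001, 2006))              # 11.0
print(growth_factor(fta, 2001, 2006, panel_2001))  # 7.0
```

The gap between the two factors is the part of the growth attributable to journals joining the platform after the start year.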

Analyses at journal level

Usage data versus citation data

Figure 1 displays the citation frequencies (left y-axis) in the period 2004–2006 and the number of downloads (right y-axis) in 2004 for 29 SD journals, which are arranged from left to right according to their FTA values. It can be seen that, although the ratio between citations and FTAs is highly unbalanced (1:37 with 2004 cites, 1:33 with 2005 cites, 1:29 with 2006 cites, n = 29), journals with higher download values are generally cited more often. This is also reflected by a high correlation between these two indicators: we computed Spearman rank correlation coefficients of 0.89, 0.92 and 0.92 between the 2004 downloads and the 2004, 2005 and 2006 cites, respectively. The corresponding correlations between downloads and citations for the years 2005 (n = 31) and 2006 (n = 33) were similar (between 0.9 and 0.92).
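Spearman’s rho is Pearson’s correlation computed on ranks, with tied values given their average rank. The dependency-free sketch below shows that calculation and, separately, a downloads-per-citation ratio computed from pooled totals. Pooling is an assumption, since it is not stated whether the 1:37-style ratios were pooled or averaged per journal; the input lists are hypothetical.

```python
def average_ranks(values):
    """1-based ranks; tied values receive the mean of the ranks they span."""
    order = sorted(range(len(values)), key=lambda k: values[k])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values equal to values[order[i]].
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def spearman_rho(x, y):
    """Spearman rank correlation: Pearson correlation of the average ranks."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    rx, ry = average_ranks(x), average_ranks(y)
    mx, my = sum(rx) / len(rx), sum(ry) / len(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = sum((a - mx) ** 2 for a in rx) ** 0.5
    sy = sum((b - my) ** 2 for b in ry) ** 0.5
    if sx == 0 or sy == 0:
        raise ValueError("correlation undefined for constant input")
    return cov / (sx * sy)


def pooled_downloads_per_citation(downloads, citations):
    """x in the ratio 1:x, from summed downloads and summed citations."""
    return sum(downloads) / sum(citations)


# Hypothetical journals: downloads in one year and citations received.
downloads = [52000, 18000, 31000, 9000, 24000]
citations = [1500, 520, 700, 260, 810]
print(round(spearman_rho(downloads, citations), 2))                # 0.9
print(round(pooled_downloads_per_citation(downloads, citations), 1))  # 35.4
```

In practice a library routine such as SciPy's `spearmanr` would normally be used; the hand-written version only makes the rank-then-correlate step explicit.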

The correlations (Spearman-Rho) between the usage impact factor (UIF) and Thomson’s JIF (see Table 2) are not as high as those between FTAs and citations for the period 2004–2006, which might partly be due to the different obsolescence characteristics of downloads and citations (see following section).

As noted before, we also computed the journal impact factor with Scopus data. As in Gorraiz and Schloegl (2008), it turned out that there are data quality problems for a few journals (“Drug Resistance Update”, “EJC Supplements”, “Journal of Urology”, “Lancet Oncology”, “Seminars in Radiation Oncology”). After removing these journals from the analysis, we identified a very high correlation between Thomson’s and the “Scopus impact factor” (Spearman-Rho = 0.96 in 2004 and 2006, and 0.97 in 2005, n = 26). As a consequence, the correlations (Spearman-Rho) of the latter with the UIF are similar to those of the JIF (see Table 3).

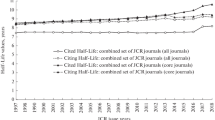

Obsolescence characteristics

Timeliness seems to be crucial when downloading articles (see Table 4). Fifty-six percent of all downloads in 2006 concerned articles published after 2004. Only 19% of the downloaded articles were older than 5 years. This obsolescence pattern is also illustrated in Fig. 2 for a few selected journals. As can be seen, the UHL is between 1 and 2 years for most of the journals for which access data to volumes from the past 10 years were available and which were included in JCR (mean = 1.7, max = 2.8, min = 0.8 years in 2006, n = 24).
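Shares such as these follow directly from downloads broken down by publication year. The helper below is a hypothetical sketch; reading “older than 5 years” as “published in 2000 or earlier” for the 2006 downloads is an interpretation, and the counts are invented for illustration.

```python
def download_share(downloads_by_pub_year, first=None, last=None):
    """Share of downloads to items published between `first` and `last` (inclusive).

    Either bound may be omitted to leave that side of the range open.
    """
    total = sum(downloads_by_pub_year.values())
    if total == 0:
        raise ValueError("no downloads")
    selected = sum(
        n
        for pub_year, n in downloads_by_pub_year.items()
        if (first is None or pub_year >= first) and (last is None or pub_year <= last)
    )
    return selected / total


downloads_2006 = {2006: 400, 2005: 200, 2004: 200, 2000: 100, 1999: 100}
print(download_share(downloads_2006, first=2005))  # published after 2004 -> 0.6
print(download_share(downloads_2006, last=2000))   # older than 5 years -> 0.2
```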

Comparing Figs. 2 and 3 illustrates the differences in obsolescence characteristics between citations and downloads quite well. Unlike downloads, articles are hardly cited in the year of publication. Furthermore, it takes a few years until articles reach their citation maximum. Accordingly, the cited half-life is also much longer (mean = 5.6 years in 2006, n = 24). This also implies that the difference between the download immediacy index, defined by Wan et al. (2008) as “the number of downloads of a journal’s articles within one publication year, divided by the number of published articles by that journal in that same year”, and Thomson’s (citation) immediacy index is even greater than the difference between the half-lives.
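Following the definition quoted from Wan et al. (2008), both immediacy indices divide same-year events by the number of items published that year; only the events differ (downloads vs. citations). A minimal sketch with hypothetical counts and a hypothetical function name shows why the gap between the two is so large.

```python
def immediacy_index(same_year_events, items_published):
    """Events (downloads or citations) in year y to items published in y,
    divided by the number of items published in y."""
    if items_published == 0:
        raise ValueError("no items published in that year")
    return same_year_events / items_published


# Hypothetical journal, year 2006: 200 articles published in 2006.
articles_2006 = 200
dii = immediacy_index(30000, articles_2006)  # downloads in 2006 to 2006 articles
cii = immediacy_index(60, articles_2006)     # citations in 2006 to 2006 articles
print(dii, cii)  # 150.0 0.3
```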

Analyses at article level

Obsolescence characteristics

The download pattern of individual articles is similar to the aggregated pattern at journal level in recent years, i.e. articles receive the most download requests immediately after they become available electronically (see Fig. 4). Interestingly, the age distribution is different for older volumes. As the case of “Cancer Letters” shows (see Figs. 5 and 6), the download curve does not decline as quickly. Moreover, downloads may even increase again briefly in the following months or even years, as can be observed for Articles 2, 6, and 8 in Fig. 5. One possible explanation could be that citations have a direct influence on downloads, which is analysed in more detail in the following section. Another explanation might be the onset of widespread e-journal use at that time: articles from older journal issues were downloaded more often because they had not previously been used or accessed electronically.

Figure 6 reveals a further feature of e-journals. Often their articles are made available online earlier than in print. For instance, the first article of issue 1, volume 203 (January 2004) of “Cancer Letters” was placed online in September 2003, followed by eight articles in October. Five out of 13 articles even had their download peak 1 month before they appeared in print.
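Whether an article peaks before its print issue can be read off its monthly download counts. The sketch below assumes downloads keyed by (year, month) tuples, a hypothetical layout, and returns the offset of the peak month from the print month; a negative value means the peak occurred before the issue appeared in print. The counts are invented.

```python
def month_offset(a, b):
    """Number of months from (year, month) `a` to (year, month) `b`."""
    return (b[0] - a[0]) * 12 + (b[1] - a[1])


def peak_offset(monthly_downloads, print_month):
    """Offset of the download peak relative to the print month.

    If several months share the maximum, the earliest one is taken.
    """
    top = max(monthly_downloads.values())
    peak = min(m for m, n in monthly_downloads.items() if n == top)
    return month_offset(print_month, peak)


# Hypothetical article placed online in October 2003, printed in January 2004.
monthly = {(2003, 10): 35, (2003, 12): 120, (2004, 1): 80, (2004, 2): 40}
print(peak_offset(monthly, (2004, 1)))  # -1
```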

Usage data versus citation data

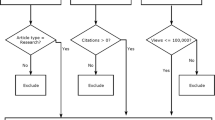

In order to relate the download data of the articles to their citations, we retrieved from SCI the number of citations each article had received up to 2006, the last year for which download data were available. As Tables 5, 6 and 7 show, the number of articles differs between SCI and Science Direct (e.g. 404 vs. 383 articles for “Cancer Cell”). The main reason for this difference is that Science Direct did not collect download data for editorial materials, corrections and letters. A small part of the deviation can also be attributed to wrong or missing data (see also Gorraiz and Schloegl 2008).

Tables 5, 6 and 7 also clearly show that the number of citations is considerably lower than the number of downloads. This is especially true for the publication year 2006, in which an average article was cited only 0.4 times in “Cancer Letters” and 0.3 times in “Gynecologic Oncology” (72% of the articles in “Cancer Letters” and 81% of those in “Gynecologic Oncology” were not cited at all in 2006). In “Cancer Cell”, which is more heavily cited than the two other journals, an average article received two citations in 2006. The situation is different for downloads, which usually reach their maximum in the year of publication; their cumulative frequency then increases only gradually in the following years. The lower download frequencies for articles from 2001 and 2002 (“Cancer Letters” and “Gynecologic Oncology”) might be due, as mentioned before, to the lower use of e-journals at that time.

Because of the different obsolescence characteristics of citations and downloads, the average citation-download ratio depends strongly on the publication year considered: downloads far outnumber citations for recently published articles (for instance 1:1354 for “Cancer Letters” in 2006), and the ratio becomes less extreme as the time window lengthens (e.g. 1:42 for articles published in “Cancer Letters” in 2001).
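The dependence of the ratio on publication year can be made explicit by pooling per-article downloads and citations by publication year. Whether counts were pooled or per-article ratios averaged is not stated; pooling is assumed here, and the function name and article records are hypothetical.

```python
from collections import defaultdict


def ratio_by_pub_year(articles):
    """Downloads per citation (x in 1:x) for each publication year.

    articles: iterable of (pub_year, downloads, citations) tuples, where both
    counts are cumulative up to the same end year.
    Years whose articles have no citations at all map to None.
    """
    downloads = defaultdict(int)
    citations = defaultdict(int)
    for pub_year, d, c in articles:
        downloads[pub_year] += d
        citations[pub_year] += c
    return {
        y: (downloads[y] / citations[y] if citations[y] else None)
        for y in sorted(downloads)
    }


records = [
    (2001, 900, 25), (2001, 600, 15),  # older articles: citations have accrued
    (2006, 1500, 1), (2006, 1200, 0),  # recent articles: barely cited yet
]
print(ratio_by_pub_year(records))  # {2001: 37.5, 2006: 2700.0}
```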

The citation-download ratio can differ hugely among articles. In the case of “Cancer Cell” we computed a minimum of 1:22.4 and a maximum of 1:1581 for 2004 articles. As a consequence, the correlation between downloads and citations is lower at article level than at journal level. Because of the large differences between downloads and citations, especially in the publication year, a high correlation cannot be expected for 2006 (for instance 0.32 for “Cancer Letters” and 0.41 for “Gynecologic Oncology”). When considering longer time windows, we identified moderate relationships (Spearman-Rho between 0.47 and 0.7).
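Per-article ratios make this spread visible. In the sketch below (hypothetical function name and data), uncited articles are excluded because their ratio is undefined; how such articles were treated in the analysis is not stated.

```python
def per_article_ratio_range(articles):
    """Smallest and largest downloads-per-citation ratio among cited articles.

    articles: iterable of (downloads, citations) pairs; uncited articles
    (citations == 0) are skipped because 1:x is undefined for them.
    """
    ratios = [d / c for d, c in articles if c > 0]
    if not ratios:
        raise ValueError("no cited articles")
    return min(ratios), max(ratios)


sample = [(1000, 2), (500, 0), (300, 1), (90, 4)]
print(per_article_ratio_range(sample))  # (22.5, 500.0)
```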

Finally, as mentioned before, we investigated whether citations have an impact on article downloads (Figures 7 and 8 are representative of the various analyses we performed). Figure 7 shows the monthly distribution of downloads and citations for Article 2 of Fig. 5, whose downloads clearly increased again several months and even years after their initial maximum. As can be seen, it is difficult to determine a direct impact of citations on downloads (excluding self-citations did not lead to different insights either). One possible explanation for the missing effect is the low number of citations. We therefore repeated the comparisons with the most cited articles of our journal sample (see Fig. 8 for the most cited article in “Cancer Cell”). However, these analyses also failed to demonstrate a direct impact of citations on downloads.

Conclusions

Our article aimed to investigate the similarities and differences between citation and usage data, both at journal level and on a paper-by-paper basis. It was shown that downloads and citations have different obsolescence patterns. Most articles are downloaded immediately after they are put online; in many cases, they even reach their download maximum before they appear in print. In contrast, it takes a few years until articles reach their citation peak. This is reflected by different half-lives at journal level: while the average cited half-life was 5.6 years, we computed a mean usage half-life of 1.7 years for the 2006 Science Direct data. There is some distortion when analysing the obsolescence characteristics of older volumes because of the strong increase in e-journal use between 2001 and 2006, which is mainly due to the generally increased acceptance of e-journals at that time.

There is a moderate correlation between full-text article requests and article citations. Because of the different obsolescence patterns, however, it is essential to consider a time window of several years. The relationship is strong when comparing downloads and citations at journal level because variations at article level compensate each other. The correlation between UIF and JIF is weaker again because, unlike for article downloads, a time frame covering only the past two years is too short to capture citations adequately. Even when considering a longer time span (for instance, a five-year impact factor), a very strong relationship cannot be expected because article downloads have a much broader “meaning” than citations in the Web of Science, which reflect only a small aspect of science communication. Or, in the words of Rowlands and Nicholas (2007, p. 222f): “authors and readers are not the same … They have different needs and different requirements of the journals literature.”

References

Armbruster, C. (2007). Access, usage and citation metrics: What function for digital libraries and repositories in research evaluation? Retrieved 26 November 2008 from http://www.lub.lu.se/fileadmin/user_upload/pdf/NCSC/ncsc2008_chris_armbruster.pdf.

Bollen, J., & Van De Sompel, H. (2008). Usage impact factor: the effects of sample characteristics on usage-based impact metrics. Journal of the American Society for Information Science and Technology, 59(1), 136–149.

Bollen, J., Van De Sompel, H., Smith, J. A., & Luce, R. (2005). Toward alternative metrics of journal impact: A comparison of download and citation data. Retrieved 26 November 2008 from http://public.lanl.gov/herbertv/papers/ipm05jb-final.pdf.

Brody, T., Harnad, S., & Carr, L. (2006). Earlier web usage statistics as predictors of later citation impact. Journal of the American Society for Information Science and Technology, 57(8), 1060–1072.

Chu, H., & Krichel, T. (2007). Downloads vs. citations in economics: Relationships, contributing factors & beyond. In: Proceedings of the 11th International Society for Scientometrics and Informetrics conference, pp. 207–215, Madrid, Spain, June 25–27.

Darmoni, S. J., Roussel, F., Benichou, J., Thirion, B., & Pinhas, N. (2002). Reading factor: a new bibliometric criterion for managing digital libraries. Journal of the Medical Library Association, 90(3), 323–327.

Duy, J., & Vaughan, L. (2006). Can electronic journal usage data replace citation data as a measure of journal use? An empirical examination. The Journal of Academic Librarianship, 32(5), 512–517.

Gorraiz, J., & Schloegl, C. (2008). A bibliometric analysis of pharmacology and pharmacy journals: Scopus versus Web of Science. Journal of Information Science, 34(5), 715–725.

Kurtz, M. J., Eichhorn, G., Accomazzi, A., Grant, C., Demleitner, M., Murray, S. S., et al. (2005). The bibliometric properties of article readership information. Journal of the American Society for Information Science and Technology, 56(2), 111–128.

McDonald, J. D. (2007). Understanding journal usage: A statistical analysis of citation and use. Journal of the American Society for Information Science and Technology, 58(1), 39–50.

Moed, H. F. (2005). Statistical relationships between downloads and citations at the level of individual documents within a single journal. Journal of the American Society for Information Science and Technology, 56(10), 1088–1097.

Rowlands, I., & Nicholas, D. (2007). The missing link: Journal usage metrics. Aslib Proceedings, 59(3), 222–228.

Wan, J.-K., Hua, P.-H., Rousseau, R., & Sun, X.-K. (2008). The download immediacy index (DII): Experiences using the CNKI full-text database (in press).

Acknowledgements

The authors would like to thank Mr. Niels Weertman from Science Direct for providing the necessary usage data.


Cite this article

Schloegl, C., Gorraiz, J. Comparison of citation and usage indicators: the case of oncology journals. Scientometrics 82, 567–580 (2010). https://doi.org/10.1007/s11192-010-0172-1


DOI: https://doi.org/10.1007/s11192-010-0172-1