Abstract

We study the asymptotic behavior of the tail probabilities of the waiting time and the busy period for the \(M/G/1/K\) queues with subexponential service times under three different service disciplines: FCFS, LCFS, and ROS. Under the FCFS discipline, the result on the waiting time is proved for the more general \(GI/G/1/K\) queue with subexponential service times and lighter interarrival times. Using the well-known Laplace–Stieltjes transform (LST) expressions for the probability distribution of the busy period of the \(M/G/1/K\) queue, we decompose the busy period into a sum of a random number of independent random variables. The result is used to obtain the tail asymptotics for the waiting time distributions under the LCFS and ROS disciplines.


1 Introduction

In this paper, we study the tail asymptotic behavior of the distribution function (d.f.) of the busy period and of the waiting time for the \(M/G/1\) queueing system with a fixed capacity \(K\ge 1\) (including the service position). Specifically, we assume that the i.i.d. service time variables have a subexponential distribution. The family of subexponential distributions is an important class of heavy-tailed distributions, both in theory and in queueing applications.

The \(M/G/1\) queueing system, with either a finite or infinite capacity, is a classical queueing system whose basic properties can be found in many queueing textbooks. For the finite-capacity model, which is closely related to our study of the busy period and waiting time, we refer readers to Miller [25] and Takagi and LaMaire [31], among others, for standard results, and particularly to the book by Takagi [30] and the references therein. It is worth mentioning that tail asymptotic properties are not a focus of these references.

Studying tail asymptotic properties has a twofold significance: its own theoretical importance and its applications. In either case, and particularly in applications, one often seeks simple explicit expressions for important performance measures such as the waiting time and the busy period. This is usually accomplished by employing transform methods. Our focus is on finding a simple explicit characterization of the tail asymptotics of the distribution function of the busy period and the waiting time under various service disciplines for the \(M/G/1/K\) queue when \(G\) is heavy-tailed. Tail asymptotic properties can often lead to approximations and performance bounds.

When \(G\) is heavy-tailed, comprehensive studies on asymptotic properties for the \(M/G/1\) (and also for the more general \(GI/G/1\)) queueing system with an infinite system capacity can be found in the literature. Most of these references focus on the waiting time distribution. For example, Cohen [13] considered the \(GI/G/1\) queue when the service time is regularly varying, Pakes [27] studied the \(M/G/1\) queue when the service time is subexponential, and Veraverbeke [32] tackled the problem through Wiener–Hopf factorizations. Borst et al. [10] considered the impact of the service discipline on delay asymptotics and Boxma and Zwart [12] reviewed the impact of scheduling on tail behavior. Recently, Boxma and Denisov [11] considered the \(GI/G/1\) queue with a regularly varying service time distribution of index \(\alpha \) and proved that under a proper service discipline, the waiting time is regularly varying with any given index value between \(-\alpha \) and \(1-\alpha \). Abate and Whitt [1] considered the tail behavior of the waiting time in the \(M/G/1\) priority queue (both the heavy-tailed and light-tailed cases, which are also closely related to the busy period).

For the busy period of the infinite-capacity queue, De Meyer and Teugels [14] obtained a tail asymptotic result for the \(M/G/1\) queue with regularly varying service times. However, it was shown in Asmussen et al. [5] that the result proven in [14] cannot be true for the entire class of subexponential distributions. Zwart [38] extended the result in [14] to the \(GI/G/1\) queue under the assumption that the tail of the service time distribution is of intermediate regular variation. His method is probabilistic and reveals an insightful relationship between the busy period and the cycle maximum. Jelenković and Momčilović [20] derived an asymptotic result for the busy period distribution in the stable \(GI/G/1\) queue with square-root-insensitive service times, and Baltrunas et al. [9] considered the \(GI/G/1\) queue with Weibull service times.

Also for the busy period, but for light-tailed behavior, Kyprianou [22] investigated the asymptotic for the \(M/G/1\) queue where a service time has a meromorphic moment generating function and Palmowski and Rolski [28] studied the \(GI/G/1\) work-conserving queues and provided exact tail asymptotics for the distribution of the busy period under light-tailed assumptions.

References on asymptotic properties, either light- or heavy-tailed, for the \(M/G/1\) queue with a processor sharing server are also abundant, and mainly focus on the tail probability of the sojourn time, either conditional or unconditional. These include Zwart and Boxma [39], Egorova et al. [16], Mandjes and Zwart [24], Yashkov [33], Egorova and Zwart [15], Zhen and Knessl [35], Yashkov and Yashkova [34], and Zhen and Knessl [36].

From the stochastic process point of view, finite-capacity models can be studied through reflected barriers at both 0 and \(K\), such as reflected random walks for discrete models and reflected Brownian motion/Lévy processes for continuous models. For asymptotic properties of the \(M/G/1/K\) (or for the more general single server) queues with a finite capacity \(K\), much attention has been given to the blocking probability or the loss rate as \(K\) goes to infinity. For example, Baiocchi considered the \(M/G/1/K\) and \(GI/M/1/K\) models, and the \(MAP/G/1/K\) model in [7] and [8], respectively; Jelenković [19] obtained subexponential loss rates for a \(GI/G/1\) queue; Zwart [37] studied a \(GI/G/1\) queue (and also a fluid model) with a subexponential input; Pihlsgård [29] obtained loss rate asymptotics for a \(GI/G/1\) queue; Miyazawa et al. [26] studied asymptotic behavior for a finite buffer queue with QBD structure; Asmussen and Pihlsgård [6] studied the loss rate associated with a reflected Lévy process; Kim and Kim [21] carried out an asymptotic analysis for the loss probability of queues of the \(GI/M/1\) type; Andersen and Asmussen [3] examined loss rate asymptotics for centered Lévy processes; Andersen [2] derived subexponential loss rate asymptotics for a reflected Lévy process when the mean is negative and the positive jumps are subexponential; and Liu and Zhao [23] considered an \(M/G/1/N\) queue with server vacations and exhaustive service discipline.

In contrast to the above-mentioned studies, our focus in this paper is on tail asymptotic properties of the busy period and the waiting time of the \(M/G/1/K\) queue when \(G\) is a subexponential random variable with distribution \(B(x)\). Our main contributions are: (1) based on the recursive formula with respect to the capacity \(K\) for the busy period, we connect the LST of the busy period distribution with a geometric sum of random variables and derive the tail asymptotics for the busy period; (2) we study the tail asymptotic behavior of the waiting time distribution under three service disciplines: first-come-first-served (FCFS), last-come-first-served (LCFS), and random-order-service (ROS). Under the FCFS discipline, the result is proved for the more general \(GI/G/1/K\) queue with lighter interarrival times. The main results are reported in Theorems 2.1, 3.1, 4.1, and 5.1.

Throughout the paper, for a given non-negative r.v. \(X\), its distribution function is denoted by \(F(x)=P(X\le x)\) and its tail (or, survival) function is denoted by \(\overline{F}(x)=1- F(x)=P(X> x)\). A d.f. \(F\) has a light tail if there exists \(\varepsilon >0\) such that \(E(e^{\varepsilon X})<\infty \). Otherwise, the d.f. \(F\) is referred to as heavy-tailed. In this paper, we focus on a special class, \(\mathcal S \), of heavy-tailed distributions, called subexponential distributions.
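As a quick illustration of the light-tailed versus heavy-tailed dichotomy, the following worked equations contrast an exponential law with a Pareto-type law. Both example distributions, with rate \(\mu \) and index \(\alpha \), are assumptions chosen for illustration only and are not used elsewhere in the analysis.

```latex
% Exponential law with rate \mu > 0 (light-tailed):
E\left(e^{\varepsilon X}\right)
  = \int_0^\infty e^{\varepsilon x}\,\mu e^{-\mu x}\,dx
  = \frac{\mu}{\mu-\varepsilon} < \infty
  \quad \text{for } 0<\varepsilon<\mu .

% Pareto-type tail \overline{F}(x) = (1+x)^{-\alpha}, \alpha > 0 (heavy-tailed):
% for every x \ge 0, restricting the expectation to \{X > x\} gives
E\left(e^{\varepsilon X}\right) \ge e^{\varepsilon x}\,\overline{F}(x)
  = e^{\varepsilon x}(1+x)^{-\alpha} \rightarrow \infty
  \quad \text{as } x\rightarrow\infty, \text{ for every } \varepsilon>0 ,
% so E(e^{\varepsilon X}) = \infty for every \varepsilon > 0.
```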

Definition 1.1

Let \(F^{*n}\) denote the \(n\)-fold convolution of \(F\) with corresponding tail \(\overline{F^{*n}}=1-F^{*n}\). The d.f. \(F\) is called subexponential (or \(F\in \mathcal S \)) if \(\overline{F}(x)>0\) for all \(x\ge 0\), and for all \(n\ge 2\),

\[\lim _{x\rightarrow \infty }\frac{\overline{F^{*n}}(x)}{\overline{F}(x)}=n.\]

It can be shown that if the condition holds for \(n=2\) then it holds for all \(n\ge 2\). The reader is referred to [17] or [18] for details and further references about subexponential distributions.
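A standard consequence of subexponentiality is that the ratio \(\overline{F^{*2}}(x)/\overline{F}(x)\) approaches 2 for large \(x\). The sketch below checks this numerically for one member of \(\mathcal S \), a Pareto-type (Lomax) law with tail \((1+x)^{-\alpha }\). The choice of distribution, the index `alpha`, the function names, and the midpoint-rule step count are all assumptions made for illustration.

```python
def lomax_tail(x, alpha=2.0):
    """Tail P(X > x) = (1 + x)^(-alpha) for x >= 0 (illustrative heavy-tailed law)."""
    return (1.0 + x) ** (-alpha)


def lomax_density(x, alpha=2.0):
    """Density of the same law: alpha * (1 + x)^(-alpha - 1)."""
    return alpha * (1.0 + x) ** (-alpha - 1.0)


def two_fold_tail(x, alpha=2.0, steps=200_000):
    """P(X1 + X2 > x) for i.i.d. X1, X2 with the Lomax law above.

    On {X1 + X2 > x} at least one summand exceeds x/2, which gives
        P(X1 + X2 > x) = 2 * int_0^{x/2} P(X > x - y) f(y) dy + P(X > x/2)^2.
    The integral is evaluated with the midpoint rule.
    """
    half = x / 2.0
    h = half / steps
    integral = 0.0
    for i in range(steps):
        y = (i + 0.5) * h
        integral += lomax_tail(x - y, alpha) * lomax_density(y, alpha)
    return 2.0 * integral * h + lomax_tail(half, alpha) ** 2


def two_fold_tail_ratio(x, alpha=2.0):
    """Ratio of the two-fold convolution tail to the single tail."""
    return two_fold_tail(x, alpha) / lomax_tail(x, alpha)


if __name__ == "__main__":
    for x in (10.0, 100.0, 1000.0):
        print(x, two_fold_tail_ratio(x))
```

The printed ratios should move toward 2 as \(x\) grows; the convergence is slow, which is typical for heavy-tailed laws.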

Also, throughout the paper the notations \(a(x)\sim b(x)\) and \(a(x)=o( b(x))\) mean that \(a(x)/b(x)\rightarrow 1\) and \(a(x)/b(x)\rightarrow 0\) as \(x\rightarrow \infty \), respectively. For two random variables, \(X\) and \(Y\), with d.f.s \(F(x)\) and \(G(x)\), respectively, we say \(X\) has a lighter tail if \(\overline{F}(x) = o (\overline{G}(x))\) as \(x\rightarrow \infty \). We denote by \(\overset{d}{=}\) the equality in distribution, i.e., \(X\overset{d}{=}Y\) means that the d.f.s of r.v.s \(X\) and \(Y\) are the same.

The rest of this paper is organized as follows. In Sect. 2, we obtain a tail asymptotic result on the waiting time of the \(GI/G/1/K\) queue for the FCFS case. In Sect. 3, the tail asymptotic result is proved for the busy period of the \(M/G/1/K\) queue. Sections 4 and 5 are devoted to the tail asymptotic results on the waiting time of the \(M/G/1/K\) queue for the LCFS and ROS cases, respectively. The Appendix contains results from the literature that are used in our analysis.

2 Waiting Time for FCFS System

In this section, we prove a tail asymptotic result on the waiting time distribution for the \(GI/G/1/K\) system with subexponential service times and lighter interarrival times under the FCFS discipline. The system is assumed to be stable. Let \(W\) be the waiting time of an arriving customer (the tagged customer) who is accepted into the system. We first prove the following fact:

Lemma 2.1

Let \(Z_1\) and \(Z_2\) be independent r.v.s with d.f.s \(F_1\) and \(F_2\), respectively. Define \(Z=Z_1+Z_2\). Assume that \(Z\) has a d.f. \(F \in \mathcal S \) and \(\overline{F}_2(t)=o(\overline{F}(t))\). Then, \(F_1\in \mathcal S \) and \(\overline{F}_1(t) \sim \overline{F}(t)\) as \(t\rightarrow \infty \).

Proof

We can write

Note that

where we have used the facts: \(\lim _{t\rightarrow \infty }\overline{F}(t-y)/\overline{F}(t)=1\) (by Lemma 6.1) and the dominated convergence theorem for interchanging the limit and the integral. Now, \(\lim _{t\rightarrow \infty }\overline{F}_1(t)/\overline{F}(t)=1\) follows from (2.1) and (2.2). \(\square \)

Remark 2.1

The above fact is useful and is very likely available in the literature; see, for example, [18].
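The conclusion of Lemma 2.1, \(\overline{F}_1(t)\sim \overline{F}(t)\), can be illustrated numerically in a case where both summands are known. The sketch below assumes that \(Z_1\) has a Lomax tail \((1+t)^{-\alpha }\) and \(Z_2\) is exponential; these distributions, the parameter values, and the function names are illustrative assumptions, not part of the lemma.

```python
import math


def z1_tail(t, alpha=2.0):
    """P(Z1 > t) = (1 + t)^(-alpha) for t >= 0 (assumed heavy-tailed summand)."""
    return (1.0 + t) ** (-alpha)


def sum_tail(t, alpha=2.0, rate=1.0, steps=200_000):
    """P(Z1 + Z2 > t) with Z2 exponential(rate), independent of Z1.

    Conditioning on Z2 = y gives
        P(Z > t) = P(Z2 > t) + int_0^t P(Z1 > t - y) * rate * exp(-rate * y) dy,
    and the integral is evaluated with the midpoint rule.
    """
    h = t / steps
    integral = 0.0
    for i in range(steps):
        y = (i + 0.5) * h
        integral += z1_tail(t - y, alpha) * rate * math.exp(-rate * y)
    return math.exp(-rate * t) + integral * h


def lemma_tail_ratio(t, alpha=2.0, rate=1.0):
    """Ratio P(Z1 > t) / P(Z1 + Z2 > t), expected to approach 1 for large t."""
    return z1_tail(t, alpha) / sum_tail(t, alpha, rate)


if __name__ == "__main__":
    for t in (10.0, 100.0, 1000.0):
        print(t, lemma_tail_ratio(t))
```

In this example the lighter-tailed summand \(Z_2\) shifts the sum only by a bounded amount on the scale that matters, so the ratio drifts toward 1.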

Theorem 2.1

For the \(GI/G/1/K\) queueing system with a subexponential service time distribution \(B(x)\) and a lighter interarrival time under the FCFS discipline, \(P(W >x) \sim (K-1) \overline{B}(x)\) as \(x\rightarrow \infty \).

Proof

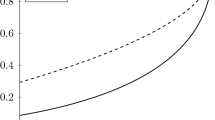

We refer to the tagged customer as \(C_0\), and denote by \(C_{-j}\) (\(j = 1,2,\ldots \)) the \(j\)th last customer who enters the system before \(C_0\) (blocked arrivals are not counted). Let \(s_{-j}\) represent the service starting time of \(C_{-j}\) (\(j = 0, 1,\ldots \)), \(X_{-j}\) represent the length of the service time of \(C_{-j} \) (\(j = 1,2,\ldots \)), and \(a_0\) represent the arrival time of \(C_0\). Figure 1 depicts a typical order of the service starting times of \(C_{-(K-1)},C_{-(K-2)},\ldots , C_0\) and the arrival time of \(C_0\). The following observations are straightforward.

(1) The start of the service of customer \(C_{-(K-1)}\) must be before the arrival of customer \(C_0\), i.e., \(s_{-(K-1)}<a_0\). This is because \(C_{-(K-1)},C_{-(K-2)},\ldots ,C_{-1}\) must have entered the system before \(C_{0}\) does.

(2) All customers that arrived during \((s_{-(K-1)},\ a_0)\) should be accepted into the system without blocking. The number of arrivals during \((s_{-(K-1)},\ a_0)\) is \(K-\tau -1\), where \(\tau \) is the number of customers in the system at time \(t=s_{-(K-1)}\) and \(1\le \tau \le K-1\). Precisely, \(C_{-(K-\tau -1)},C_{-(K-\tau -2)},\ldots ,C_{-1}\) arrive during \((s_{-(K-1)},\ a_0)\).

By (2), we know that \(a_{0}-s_{-(K-1)} \) equals the sum of \(K-\tau \) (\(1\le \tau \le K-1\)) interarrival times, where the first interarrival time should be interpreted as a partial interarrival time. Hence

On the other hand, \(s_{-(j-1)}-s_{-j}\) is the length of time between the service starting times of customers \(C_{-j}\) and \(C_{-(j-1)}\). Note that there may be an idle period between two consecutive services before \(t=a_0\), so \(s_{-(j-1)}-s_{-j}\) equals \(X_{-j}\) plus at most one idle period. Since \(C_{-(K-1)},C_{-(K-2)},\ldots ,C_{-(K-\tau )}\) are already in the system at time \(t=s_{-(K-1)}\) and they are continuously served without any idle period in between, we have

It follows from (2.3) and (2.4) that

where \(Y_1\overset{\triangle }{=}\) sum of \(K-\tau \) interarrival (or partial interarrival) times, and \(Y_2\overset{\triangle }{=}\) sum of at most \(K-\tau \) idle periods.

By Lemma 6.3 and Corollary 6.1, we have \(P(\sum _{j=1}^{K-1}X_{-j}+Y_2 >t)\sim P(\sum _{j=1}^{K-1}X_{-j} >t)\sim (K-1)\overline{B}(t)\) because an idle period has a lighter tail than \(\overline{B}(t)\). So, \(P(W+Y_1>t)\sim (K-1)\bar{B}(t)\) as \(t\rightarrow \infty \). Again, by Lemma 6.3, \(\lim _{t\rightarrow \infty }P(Y_1>t)/\overline{B}(t)=0\) because an interarrival time has a lighter tail than \(\overline{B}(t)\). Applying Lemma 2.1, we have \(P\{W>t\}\sim (K-1)\bar{B}(t)\) as \(t\rightarrow \infty \). \(\square \)
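Theorem 2.1 suggests the large-\(x\) approximation \(P(W>x)\approx (K-1)\overline{B}(x)\). The sketch below evaluates this approximation for a hypothetical service-time tail; the Lomax tail, its index, and the function names are assumptions for illustration, and the approximation is only meaningful for large \(x\).

```python
def lomax_service_tail(x, alpha=2.0):
    """Hypothetical subexponential service-time tail: (1 + x)^(-alpha), x >= 0."""
    return (1.0 + x) ** (-alpha)


def fcfs_waiting_tail_approx(x, capacity, service_tail):
    """Asymptotic approximation (K - 1) * B-bar(x) for P(W > x) under FCFS.

    `capacity` is K, the system capacity including the service position.
    """
    if capacity < 1:
        raise ValueError("capacity K must be at least 1")
    return (capacity - 1) * service_tail(x)


if __name__ == "__main__":
    # K = 3: up to two service times can be ahead of an accepted customer.
    print(fcfs_waiting_tail_approx(9.0, 3, lomax_service_tail))
```

For \(K=1\) the approximation is identically zero, which is consistent with an accepted customer never waiting when there is no waiting room.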

3 Busy Period of \(M/G/1/K\) Queue

In this section, we investigate the asymptotic behavior for the busy period for the \(M/G/1/K\) system. Assume that customers arrive according to a Poisson process with rate \(\lambda \). Let \(X\) be the length of a generic service time, and \(N(X)\) be the number of arrivals during \((0,X)\). Let \(c_k=P(N(X)=k)=\int _0^{\infty }\frac{(\lambda x)^k}{k!}e^{-\lambda x}dB(x),\ k\ge 0\), which is the probability that \(k\) customers arrive during a service time. Further, we let \(\overline{c}_k=P(N(X)\ge k)=1-\sum _{j=0}^{k-1}c_j\), \(k\ge 1\), which is the probability that \(k\) or more customers arrive during a service time.
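To make the quantities \(c_k\) and \(\overline{c}_k\) concrete, the sketch below computes them when the service time takes finitely many values, so that the integral against \(dB(x)\) reduces to a finite sum. The discrete service law, the rate `lam`, and the function names are illustrative assumptions; a discrete law with finitely many atoms is not subexponential and is used here only because the formula can then be evaluated exactly. For a continuous \(B\), the sum would be replaced by numerical integration.

```python
import math


def arrival_count_pmf(k, lam, atoms):
    """c_k = P(N(X) = k) when P(X = x) = p for each (x, p) in `atoms`.

    Given X = x, the number of Poisson(lam) arrivals during (0, x) is
    Poisson distributed with mean lam * x.
    """
    return sum(
        p * math.exp(-lam * x) * (lam * x) ** k / math.factorial(k)
        for x, p in atoms
    )


def arrival_count_tail(k, lam, atoms):
    """c-bar_k = P(N(X) >= k) = 1 - sum_{j=0}^{k-1} c_j, for k >= 1."""
    return 1.0 - sum(arrival_count_pmf(j, lam, atoms) for j in range(k))


if __name__ == "__main__":
    lam = 1.0
    # Hypothetical service law: short jobs are common, long jobs are rare.
    atoms = [(0.5, 0.9), (10.0, 0.1)]
    for k in range(4):
        print(k, arrival_count_pmf(k, lam, atoms))
    print("c-bar_1 =", arrival_count_tail(1, lam, atoms))
```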

Define \(h_1=1\) and \(h_n\), recursively, by

The main result in this section is the following theorem.

Theorem 3.1

Let \(A_n\) be the length of the busy period of the \(M/G/1/n\) queueing system with a subexponential service time distribution \(B(x)\). Then, \(P(A_n > x) \sim h_n \overline{B}(x)\) as \(x\rightarrow \infty \), where \(h_n\) is given by (3.1).

To prove the above tail asymptotic result, let us use \(\alpha _n(s)\) to represent the LST of the distribution function of \(A_n\). It is well known that \(\alpha _n(s)\) can be expressed in a recursive form with respect to the system capacity \(n\) as follows (see for example, p. 225 in Takagi [30], or Miller [25]):

where \(\beta (s)\) is the LST of the service time distribution and

It is a convention that for \(b<a\), \(\sum _a^b\equiv 0\) and \(\prod _a^b\equiv 1\).

The proof of Theorem 3.1 is carried out through the following steps, using the properties collected in the Appendix: (a) rewrite \(\alpha _n(s)\) as the LST of the distribution function of the sum of two independent random variables, one of which is a geometric sum of i.i.d. random variables; (b) properly define the random variables associated with the geometric sum; and (c) carry out the tail asymptotic analysis for the components of the random variables defined in (b).

For the first step, we note that \(u_k(s)\) is not the LST of a probability distribution function (since \(u_k(0)\not =1\)). However, we can write \(u_k(s)=c_k \beta _k(s)\), where \(\beta _k(s)\) is the LST of the conditional distribution function

Now, we rewrite (3.3) in terms of \(\beta _k(s)\) as

where

Next, we will show that \(\tau _{n}(s)\), \(\delta _n(s)\), and \(\sigma _n(s)\) can be viewed as the LSTs of probability distributions. To this end, let \(B_n\) be a random variable having distribution \(B_n(x)\) (by abusing the notation). Define

By Lemma 6.6, it is easy to see that the LSTs of the d.f.s of random variables \(T_n\) and \(D_n\) are \(\tau _{n}(s)\) and \(\delta _n(s)\), respectively. According to Lemma 6.4, \(\sigma _n(s)\) can be viewed as the LST of the d.f. of a geometric sum (denoted by \(S_n\)) with parameter \(\overline{c}_1\) of i.i.d. random variables (each equal to \(D_n\) in distribution). By (3.5), \(\alpha _n(s)\) is the LST of the d.f. of random variable \(A_n\overset{d}{=}S_n+B_0\), the sum of the geometric sum \(S_n\) and the variable \(B_0\) (which is independent of the geometric sum \(S_n\)).

Now, let us characterize the tail property for random variables \(B_k\), \(T_n\), and \(D_n\). It is easy to verify that

Namely, \(B_k(x)\) (\(k=0, 1, 2,\ldots \)) is a light-tailed distribution. So, \(\overline{B}_k(x)=o(1)\overline{B}(x)\) as \(x\rightarrow \infty \).

It follows from the definition of \(T_n\) in (3.9) and (3.11) that

For the tail behavior of \(D_n\), it follows from its definition given in (3.10) that

We are now ready to prove the theorem, i.e., \(P\{A_n >x\}\sim h_n \overline{B}(x)\) as \(x\rightarrow \infty \) for \(n\ge 1\).

Proof of Theorem 3.1

The proof of case \(n=1\) is immediate since \(A_1\overset{d}{=}X\) (a service time). We employ the induction on \(n\) to prove the general case of \(n\ge 2\).

For the case of \(n=2\), \(D_2 \overset{d}{=} T_1\) by the definition of \(D_n\) in (3.10). It follows from (3.12) that \( P\{D_2>x\}= P\{T_1>x\} \sim \overline{B}(x)/ \overline{c}_1, \) which implies that \(D_2\) has a subexponential distribution according to Lemma 6.2. Therefore, by Lemma 6.5,

Furthermore, since \(B_0\) is light-tailed, \(P\{A_2 >x\}\sim P\{B_0+S_2>x\}\sim h_2 \overline{B}(x)\) according to Corollary 6.1. The proof for the case of \(n=2\) is complete.

Assuming that \(P(A_k>x) \sim h_k \overline{B}(x)\) for all \(k\) \((1\le k\le n-1)\), let us verify that \(P(A_n>x) \sim h_n \overline{B}(x)\) is also true. It follows from (3.13), Lemma 6.3, Corollary 6.1 and (3.12) that

Recall that \(A_n=S_n+B_0\). By (3.11), Corollary 6.1 and (3.14),

where the second last equation holds after interchanging the summations: \(\sum _{k=2}^{n-2}\) \(\sum _{j=n-k+1}^{n-1}=\sum _{j=3}^{n-1} \sum _{k=n-j+1}^{n-2}\) and noticing that: \(\overline{c}_{n-j+1}=\overline{c}_{n-1}+\sum _{k=n-j+1}^{n-2}c_{k}\).

The proof is complete. \(\square \)
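The interchange of summations and the tail identity used in the last step of the proof of Theorem 3.1 can be checked mechanically. The sketch below enumerates both index sets and verifies \(\overline{c}_{n-j+1}=\overline{c}_{n-1}+\sum _{k=n-j+1}^{n-2}c_{k}\) in exact arithmetic. The geometric probabilities \(c_k=2^{-(k+1)}\) are a hypothetical choice made only so that \(\overline{c}_k=2^{-k}\) has a closed form; the function names are likewise assumptions.

```python
from fractions import Fraction


def pairs_k_outer(n):
    """Index pairs (k, j) of sum_{k=2}^{n-2} sum_{j=n-k+1}^{n-1}."""
    return {(k, j) for k in range(2, n - 1) for j in range(n - k + 1, n)}


def pairs_j_outer(n):
    """Index pairs (k, j) of sum_{j=3}^{n-1} sum_{k=n-j+1}^{n-2}."""
    return {(k, j) for j in range(3, n) for k in range(n - j + 1, n - 1)}


def c(k):
    """Hypothetical arrival-count probabilities c_k = 2^-(k+1), k >= 0."""
    return Fraction(1, 2 ** (k + 1))


def c_bar(k):
    """Matching tail c-bar_k = sum_{i >= k} c_i = 2^-k."""
    return Fraction(1, 2 ** k)


def tail_identity_holds(n, j):
    """Check c-bar_{n-j+1} = c-bar_{n-1} + sum_{k=n-j+1}^{n-2} c_k exactly."""
    partial = sum((c(k) for k in range(n - j + 1, n - 1)), Fraction(0))
    return c_bar(n - j + 1) == c_bar(n - 1) + partial


if __name__ == "__main__":
    for n in range(4, 10):
        same_sets = pairs_k_outer(n) == pairs_j_outer(n)
        identities = all(tail_identity_holds(n, j) for j in range(3, n))
        print(n, same_sets, identities)
```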

4 Waiting Time for LCFS System

In this section, we prove a tail asymptotic result for the waiting time distribution for the \(M/G/1/K\) system with non-preemptive LCFS queueing discipline. Clearly, if \(K=1\) or \(2\), the waiting time of a customer remains the same regardless of the service discipline. So, we assume that \(K\ge 3\). The system is assumed to be stable. Definitions and notations used in the previous sections remain valid here, such as \(W\), \(X\), \(N(X)\), \(A_n\), \(B_n\), \(B_k(t)\), \(c_k\), \(\overline{c}_k\), \(h_n\), and \(\alpha _n(s)\). Unlike the case of the FCFS, the waiting time under the LCFS is closely related to the number of arrivals during the remaining service time, and therefore an explicit expression of the joint stationary distribution of the queue length and the remaining service time is required.

Consider the stationary system at an arbitrary time. Let \(L\) be the number of customers in the system, \(X_-\) be the elapsed service time, and \(X_+\) be the remaining service time for the customer who is in service (if any). Denote \(p_k\overset{\triangle }{=}P(L=k)\), \(0\le k\le K\), and

By (1.16) on p. 202 and (1.60a) on p. 213 in Takagi [30],

where \(\rho = \lambda \int \limits _0^\infty x dB(x)\). Note that \(p_k\) (\(0\le k\le K)\) are determined by independent Eqs. (4.1) and (4.2) combined with the normalizing condition \(\sum _{k=0}^{K}p_k=1\).

By (1.61a) on p. 214 in Takagi [30], we have

A formula for \(k=K\) is also available, but we do not need it here.

We study \(P(W>t)\), the tail probability of the waiting time of an arriving customer (the tagged customer) who is accepted into the system. Because Poisson arrivals see time averages (PASTA), the tagged customer sees the system with \(L\) (\(0\le L\le K\)) customers (excluding itself) and the remaining service time \(X_+\) if \(L\ne 0\). Let \(W^{\prime }\) be the waiting time of this customer before receiving its service (we define \(W^{\prime } =\infty \) if it is blocked). So \(P(W>t)=P(W^{\prime }>t|0 \le L \le K-1)\).

Let \(J\) be the number of customers that arrived (including those blocked) to the system during the remaining service time \(X_+\) seen by the tagged customer. Define the following conditional distribution functions

By the definition of \(G_k(t)\), we can write

Let

We will prove the following result:

Theorem 4.1

For the stable \(M/G/1/K\) with subexponential service times and non-preemptive LCFS discipline, \(P(W >x) \sim w_{lcfs}\cdot \overline{B}(x)\) as \(x\rightarrow \infty \), where

It is obvious that \(W^{\prime }=X_+\) if \(L=K-1\) or \(J=0\). Otherwise, there are \(J \ge 1\) arrivals (accepted or blocked, depending on whether or not the system is full) during the remaining service time. When \(1\le L\le K-2\) and \(J \ge K-L\), after the remaining service time \(X_+\) the server will start serving the last customer accepted into the system, and after time \(A_{2}\) the server will start serving the second last accepted customer that arrived during the remaining service time. This continues until all customers accepted into the system during the remaining service time have been served, and then the server will start serving the tagged customer. The total time is

When \(1\le L\le K-2\) and \(J\le K-L-1\), the waiting time for the server to start serving the tagged customer can be similarly expressed in terms of the remaining service time \(X_+\) and the busy period \(A_n\). So, we have

Let \(T_{k,n}\), \(G_{k,j}\) and \(G_{k}\) be random variables having d.f.s \(T_{k,n}(t)\), \(G_{k,j}(t)\) and \(G_{k}(t)\), respectively. It follows from the above expression of \(W^{\prime }\) that

Next, we will study the tail probabilities of \(T_{k,n}\), \(G_{k,j}\) and \(G_{k}\). For this purpose, we consider the probability of event \(\{L=k, X_+>t\}\), which will be used in the proof.

For a fixed \(a>0\), it follows from (4.8) that

For \(t\ge 0\),

According to Lemma 6.1, \(\overline{B}(x+t)/\overline{B}(t)\rightarrow 1\) uniformly on \(0\le x\le a\) as \(t\rightarrow \infty \). Therefore, from (4.9), (4.10) and (4.3)

By the definitions of \(G_{k}(t)\), \(G_{k,j}(t)\) and \(T_{k,j}(t)\), we have, as \(t\rightarrow \infty \),

It follows from (4.7), Theorem 3.1 and (4.12–4.14) that

where the last equation holds after interchanging the summations: \(\sum _{j=1}^{K-k-1} \sum _{n=K-(k+j-1)}^{K-k} =\sum _{n=2}^{K-k} \sum _{j=K-(k+n-1)}^{K-k-1}\) and noticing: \(\overline{c}_{k,K-k-n+1}=\overline{c}_{k,K-k}+ \sum _{j=K-(k+n-1)}^{K-k-1}c_{k,j}\).

5 Waiting Time for ROS System

In this section, we provide a tail asymptotic result on the waiting time for the \(M/G/1/K\) system with ROS service discipline. The same method used for LCFS systems will be used here. Again, the system is assumed to be stable. Definitions and notations introduced so far remain valid in this section. For the same reason as in the previous section, we assume that \(K\ge 3\).

To state the main theorem, let

The main result is given in the following theorem.

Theorem 5.1

For the stable \(M/G/1/K\) with subexponential service times and ROS discipline, \(P(W >x) \sim w_{ros}\cdot \overline{B}(x)\) as \(x\rightarrow \infty \), where

In a ROS system, suppose that the tagged customer sees the system with \(L=k\). If \(k \ne 0\) or \(K\), and \(j\) customers arrived (not necessarily accepted to the system) during the remaining service time \(X_+\), then there are \(\min (k+j-1,K-2)\) other customers (excluding the tagged one) in the system at the end of the remaining service time \(X_+\). Therefore, we can write \(W^{\prime }=X_++ W_{\min (k+j-1,K-2)}\), where \(W_k\) is the length of the time period starting from the completion of a service to the end of the waiting time of the tagged customer, given that there are \(k\) other customers (excluding the tagged one) left behind in the system at the completion of that service. We therefore have

To characterize the tail behavior of the waiting time: \(P(W>t)= P(W^{\prime }>t|0 \le L \le K-1)\), the key is to characterize the tail behavior of \(P(W_k>t)\) for \(0\le k\le K-2\). Because of the random selection, it is clear that \(W_k=0\) with probability \(1/(k+1)\) and \(W_k>0\) with probability \(k/(k+1)\). In the latter case, the tagged customer has to wait one service time before the next selection made by the server. Hence,

where \(0\le k\le K-2\).
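The count \(\min (k+j-1,K-2)\) of other customers left in the system at the end of the remaining service time can be recovered by stepping through the events one at a time. The sketch below does this bookkeeping and compares it with the closed form; the function name and the loop bounds in the usage example are illustrative assumptions.

```python
def others_after_remaining_service(k, j, capacity):
    """Customers other than the tagged one right after the current service ends.

    k        : customers seen by the tagged customer on arrival (1 <= k <= K - 1)
    j        : arrivals (accepted or blocked) during the remaining service time
    capacity : system capacity K, including the service position
    """
    if not 1 <= k <= capacity - 1:
        raise ValueError("the tagged customer must be accepted into a busy system")
    in_system = k + 1                         # the tagged customer joins
    in_system = min(in_system + j, capacity)  # arrivals beyond capacity are blocked
    in_system -= 1                            # the customer in service departs
    return in_system - 1                      # exclude the tagged customer


if __name__ == "__main__":
    K = 5
    for k in range(1, K):
        for j in range(0, 6):
            assert others_after_remaining_service(k, j, K) == min(k + j - 1, K - 2)
    print("count matches min(k + j - 1, K - 2) for K =", K)
```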

Proposition 5.1

(1) For \(x>0\), \(P\{W_0 >x\}= 0\); (2) For \(1\le k\le K-2\), the asymptotic tail probability of \(W_k\) is given by

where the recursive expression for \(w_k\) is given in (5.2).

Proof

Since \(W_0\equiv 0\), part (1) is obvious. To prove (5.6), the key is to verify the existence of \(\lim _{x\rightarrow \infty } P(W_k >x)/\overline{B}(x)\) for all \(1\le k\le K-2\), which can be done by mathematical induction on \(K\) (\(K\ge 3\)). When \(K=3\), \(W_1\) equals the sum of a light-tailed number of service times, so \(\lim _{x\rightarrow \infty } P(W_1 >x)/\overline{B}(x)\) exists by Lemma 6.5. Now, assuming that all \(\lim _{x\rightarrow \infty } P(W_k >x)/\overline{B}(x)\) exist when \(K=n\), let us consider the case of \(K=n+1\). Conditioning on the event \(E=\{\)no other customers in the system when the tagged customer starts its service\(\}\) and its complement \(E^c\), we have \(P(W_k >x)=P(E)P(W_k >x|E)+P(E^c)P(W_k >x|E^c)\). The existence of \(\lim _{x\rightarrow \infty } P(W_k >x|E^c)/\overline{B}(x)\) follows from the induction assumption (\(K=n\)) because one position is always occupied by a customer other than the tagged customer before its service. The existence of \(\lim _{x\rightarrow \infty } P(W_k >x|E)/\overline{B}(x)\) follows from the fact that \(P(W_k >x|E)=P(A_{n+1-k}+A_{n+1-(k-1)}+\cdots +A_{n} >x)\) and from Theorem 3.1, where \(A_m\) is the busy period of the \(M/G/1/m\) queue. So, all \(\lim _{x\rightarrow \infty } P(W_k >x)/\overline{B}(x)\) exist for the case \(K=n+1\).

Let \(\lim _{x\rightarrow \infty } P(W_k >x)/\overline{B}(x)\overset{\triangle }{=}w_k\). It follows from (5.5), (3.11) and (3.12) that \(w_k\) satisfies (5.2). \(\square \)

Proof of Theorem 5.1

Recall that \(W\) is the waiting time of an arriving customer who is accepted to a steady state system and \(P(W>t)=P(W^{\prime }>t|0 \le L \le K-1)\). Following the same treatment as that in the previous section, we have

Finally, by Proposition 5.1, (4.13) and (4.14),

\(\square \)

References

Abate, J., Whitt, W.: Asymptotics for M/G/1 low-priority waiting-time tail probabilities. Queueing Syst. 25, 173–233 (1997)

Andersen, L.N.: Subexponential loss rate asymptotics for Lévy processes. Math. Meth. Oper. Res. 73, 91–108 (2011)

Andersen, L.N., Asmussen, S.: Local time asymptotics for centered Lévy processes with two-sided reflection. Stochastic Models 27, 202–219 (2011)

Asmussen, S.: Applied Probability and Queues. Springer, New York (2003)

Asmussen, S., Klüppelberg, C., Sigman, K.: Sampling at subexponential times, with queueing applications. Stoch. Processes Their Appl. 79, 265–286 (1999)

Asmussen, S., Pihlsgård, M.: Loss rates for Lévy processes with two reflecting barriers. Math. Oper. Res. 32, 308–321 (2007)

Baiocchi, A.: Asymptotic behavior of the loss probability of the M/G/1/K and G/M/1/K queues. Queueing Syst. 10, 235–248 (1992)

Baiocchi, A.: Analysis of the loss probability of the MAP/G/1/K queue Part I: asymptotic theory. Commun. Stat. Stoch. Models 10, 867–893 (1994)

Baltrunas, A., Daley, D.J., Klüppelberg, C.: Tail behavior of the busy period of GI/G/1 queue with subexponential service times. Stoch. Processes Appl. 111, 237–258 (2004)

Borst, S.C., Boxma, O.J., Núñez-Queija, R., Zwart, A.P.: The impact of the service discipline on delay asymptotics. Perform. Eval. 54, 175–206 (2003)

Boxma, O., Denisov, D.: Sojourn time tails in the single server queue with heavy-tailed service times. Queueing Syst. 69, 101–119 (2011)

Boxma, O., Zwart, B.: Tails in scheduling. ACM SIGMETRICS Perform. Eval. Rev. 34, 13–20 (2007)

Cohen, J.W.: Some results on regular variation for distributions in queueing and fluctuation theory. J. Appl. Probab. 10, 343–353 (1973)

De Meyer, A., Teugels, J.L.: On the asymptotic behavior of the distributions of the busy period and service time in M/G/1. J. Appl. Probab. 17, 802–813 (1980)

Egorova, R., Zwart, B.: Tail behavior of conditional sojourn times in processor-sharing queues. Queueing Syst. 55, 107–121 (2007)

Egorova, R., Zwart, A.P., Boxma, O.J.: Sojourn time tails in the M/D/1 processor sharing queue. Probab. Eng. Inf. Sci. 20, 429–446 (2006)

Embrechts, P., Klüppelberg, C., Mikosch, T.: Modelling Extremal Events for Insurance and Finance. Springer, Heidelberg (1997)

Foss, S., Korshunov, D., Zachary, S.: An Introduction to Heavy-Tailed and Subexponential Distributions. Springer, New York (2011)

Jelenković, P.R.: Subexponential loss rates in a GI/GI/1 queue with applications. Queues with heavy-tailed distributions. Queueing Syst. 33, 91–123 (1999)

Jelenković, P.R., Momčilović, P.: Large deviations of square root insensitive random sums. Math. Oper. Res. 29(2), 398–406 (2004)

Kim, J., Kim, B.: Asymptotic analysis for loss probability of queues with finite GI/M/1 type structure. Queueing Syst. 57, 47–55 (2008)

Kyprianou, E.K.: On the quasi-stationary distribution of the virtual waiting time in queues with Poisson arrivals. J. Appl. Probab. 8, 494–507 (1971)

Liu, Y., Zhao, Y.Q.: Asymptotic behavior of the loss probability for an M/G/1/N queue with vacations. Appl. Math. Model. 37, 1768–1780 (2013)

Mandjes, M.R.H., Zwart, A.P.: Large deviations of sojourn times in processor sharing queues. Queueing Syst. 52, 237–250 (2006)

Miller, L.W.: A note on the busy period of an M/G/1 finite queue. Oper. Res. 23(6), 1179–1182 (1975)

Miyazawa, M., Sakuma, Y., Yamaguchi, S.: Asymptotic behaviors of the loss probability for a finite buffer queue with QBD structure. Stoch. Models 23, 79–95 (2007)

Pakes, A.G.: On the tails of waiting-time distributions. J. Appl. Probab. 12, 555–564 (1975)

Palmowski, Z., Rolski, T.: On the exact asymptotics of the busy period in GI/G/1 queues. Adv. Appl. Probab. 38(3), 792–803 (2006)

Pihlsgård, M.: Loss rate asymptotics in a \(GI/G/1\) queue with finite buffer. Stoch. Models 21, 913–931 (2005)

Takagi, H.: Queueing Analysis: A Foundation of Performance Evaluation, vol. 2: Finite Systems. North-Holland, Amsterdam (1993)

Takagi, H., LaMaire, R.O.: On the busy period of an M/G/1/K queue. Oper. Res. 42(1), 192–193 (1994)

Veraverbeke, N.: Asymptotic behavior of Wiener–Hopf factors of a random walk. Stoch. Processes Appl. 5, 27–37 (1977)

Yashkov, S.F.: On asymptotic property of the sojourn time in the M/G/1-EPS queue. Inf. Process 6, 256–257 (2006). (in Russian)

Yashkov, S.F., Yashkova, A.S.: Processor sharing: a survey of the mathematical theory. Autom. Remote Control 68, 1662–1731 (2007)

Zhen, Q., Knessl, C.: Asymptotic expansions for the conditional sojourn time distribution in the M/M/1-PS queue. Queueing Syst. 57, 157–168 (2007)

Zhen, Q., Knessl, C.: Asymptotic expansions for the sojourn time distribution in the M/G/1-PS queue. Math. Meth. Oper. Res. 71, 201–244 (2010)

Zwart, A.P.: A fluid queue with a finite buffer and subexponential input. Adv. Appl. Probab. 32, 221–243 (2000)

Zwart, A.P.: Tail asymptotics for the busy period in the GI/G/1 queue. Math. Oper. Res. 26(3), 485–493 (2001)

Zwart, A.P., Boxma, O.J.: Sojourn time asymptotics in the M/G/1 processor sharing queue. Queueing Syst. 35, 141–166 (2000)

Acknowledgments

The authors thank an anonymous referee for suggestions that led to a significantly improved proof of Theorem 2.1 and to the generalization of our result to the \(GI/G/1/K\) queue. The authors also thank Drs. O. Boxma and B. Zwart for suggesting references. This research was supported in part by Summer Fellowships of the University of Northern Iowa, the National Natural Science Foundation of China (Grant No. 11171019), and an NSERC Discovery Grant.

Appendix: Useful Literature Results

For convenience, this appendix collects the results from the literature that are used in this paper.

The following two basic properties of the subexponential distribution can be found in Embrechts et al. [17].

Lemma 6.1

(Lemma 1.3.5 in [17]) If \(F \in \mathcal S \), let \(X\) be a random variable having \(F\) as its distribution. Then, (a)

and (b)

The following lemma says that the class \(\mathcal S \) is closed under tail-equivalence.

Lemma 6.2

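(Lemma A 3.15 in [17]) Suppose that \(F\) and \(G\) are distributions on \((0,\infty )\). If \(F \in \mathcal S \) and

$$\begin{aligned} \lim _{x\rightarrow \infty } \frac{\overline{G}(x)}{\overline{F}(x)} = c \in (0,\infty ), \end{aligned}$$

then \(G \in \mathcal S \).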

The following result is useful for obtaining the asymptotic tail probability of the sum of independent random variables having subexponential distributions.

Lemma 6.3

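(Lemma A 3.28 in [17]) Let \(Y_1, Y_2, \ldots , Y_m\) be independent r.v.s and \(F \in \mathcal S \). Assume

$$\begin{aligned} \lim _{x\rightarrow \infty } \frac{P(Y_i>x)}{\overline{F}(x)} = c_i \in [0,\infty ), \quad i=1,2,\ldots ,m. \end{aligned}$$

Then,

$$\begin{aligned} \lim _{x\rightarrow \infty } \frac{P(Y_1+Y_2+\cdots +Y_m>x)}{\overline{F}(x)} = \sum _{i=1}^m c_i. \end{aligned}$$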

Corollary 6.1

If \(F_1\) and \(F_2\) are two distributions such that \(F_1\in \mathcal S \) and \(\overline{F}_2(x)=o(\overline{F}_1(x))\), then \(F_1*F_2\in \mathcal S \) and \(\overline{F_1*F_2}(x) \sim \overline{F}_1(x)\) as \(x\rightarrow \infty \).
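Corollary 6.1 can be illustrated numerically. The sketch below is not part of the original argument and rests on assumptions made only for this example: \(\overline{F}_1(x)=(1+x)^{-2}\) (a Lomax tail, which is subexponential) and \(\overline{F}_2(x)=e^{-x}\), so that \(\overline{F}_2(x)=o(\overline{F}_1(x))\). The helper names `tail_f1` and `conv_tail` and the trapezoidal step count are hypothetical choices.

```python
import math

def tail_f1(x, alpha=2.0):
    """Assumed heavy tail: Lomax, P(Y1 > x) = (1 + x)^(-alpha) for x >= 0."""
    return (1.0 + x) ** (-alpha) if x >= 0 else 1.0

def conv_tail(x, steps=20000):
    """P(Y1 + Y2 > x) for independent Y1 ~ Lomax and Y2 ~ Exp(1).

    Uses P(Y1 + Y2 > x) = P(Y2 > x) + int_0^x P(Y1 > x - y) e^{-y} dy,
    with the integral approximated by the trapezoidal rule.
    """
    h = x / steps
    # Endpoint terms of the trapezoidal rule: integrand at y = 0 and y = x.
    total = 0.5 * (tail_f1(x) + tail_f1(0.0) * math.exp(-x))
    for k in range(1, steps):
        y = k * h
        total += tail_f1(x - y) * math.exp(-y)
    return math.exp(-x) + h * total

# Ratio of the convolution tail to the heavier tail at a few points.
ratios = {x: conv_tail(x) / tail_f1(x) for x in (10.0, 50.0, 200.0)}
for x, r in ratios.items():
    print(x, r)
```

Under these assumptions the ratio \(\overline{F_1*F_2}(x)/\overline{F}_1(x)\) should drift toward 1 as \(x\) grows, which is what the corollary asserts in the limit; the finite-\(x\) values depend on the particular tails chosen here.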

Lemma 6.4

(Geometric Sum, p. 580 in [17]) Let \(Y_1, Y_2, \ldots \) be a sequence of i.i.d. r.v.s with a common d.f. \(F(x)\). Let \(N\) be an integer-valued random variable, which is independent of the sequence \(Y_i\) and has a geometric distribution \(P(N=k) = (1-p)p^{k}\), \(k=0, 1,2,\ldots \). Define \(S_0\equiv 0\) and \(S_n = Y_1+ Y_2+ \cdots + Y_n\) for \(n \ge 1\). Then,

$$\begin{aligned} G(x) \overset{\mathrm{def}}{=} P(S_N \le x) = (1-p)\sum _{n=0}^{\infty } p^n F^{*n}(x), \end{aligned}$$

where \(F^{*n}\) denotes the \(n\)-fold convolution of \(F\) (\(F^{*0}\) is defined as the Heaviside unit step function), and the LST of \(G(x)\) is given by

$$\begin{aligned} E\left( e^{-sS_N}\right) = \frac{1-p}{1-pf(s)}, \end{aligned}$$

where \(f(s)\) is the LST of \(F(x)\).
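Conditioning on \(N=n\) gives the LST of the geometric sum as the series \(\sum _{n\ge 0}(1-p)p^n f(s)^n\), which sums to \((1-p)/(1-pf(s))\). The following sketch checks this numerically under an assumption that is ours rather than the paper's: \(F\) is exponential with rate `mu`, so \(f(s)=\mu /(\mu +s)\). The function names are hypothetical.

```python
def lst_exp(s, mu):
    """LST of an Exp(mu) d.f.: E[exp(-s*Y)] = mu / (mu + s)."""
    return mu / (mu + s)

def lst_geometric_sum_series(s, p, mu, terms=2000):
    """Truncated series sum_{n < terms} (1-p) p^n f(s)^n, from conditioning on N = n."""
    f = lst_exp(s, mu)
    return sum((1.0 - p) * (p * f) ** n for n in range(terms))

def lst_geometric_sum_closed(s, p, mu):
    """Closed form (1-p) / (1 - p f(s)) obtained by summing the geometric series."""
    return (1.0 - p) / (1.0 - p * lst_exp(s, mu))

p, mu = 0.7, 2.0  # illustrative parameter values
checks = [
    (s, lst_geometric_sum_series(s, p, mu), lst_geometric_sum_closed(s, p, mu))
    for s in (0.0, 0.5, 3.0)
]
for s, series_value, closed_value in checks:
    print(s, series_value, closed_value)
```

The exponential case is chosen only because its LST is explicit; the series argument itself does not depend on the form of \(F\).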

Lemma 6.5

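(p. 296 in Asmussen [4]) Let \(Y_1, Y_2, \ldots \) be i.i.d. r.v.s with a common subexponential distribution \(F\) and let \(N\) be an integer-valued random variable, independent of the sequence \(Y_i\), with \(Ez^N < \infty \) for some \(z>1\). Then,

$$\begin{aligned} P(Y_1+Y_2+\cdots +Y_N>x) \sim E(N)\, \overline{F}(x), \quad x\rightarrow \infty . \end{aligned}$$

Specifically, if \(P(N=k) = (1-p)p^{k}\), \(k=0, 1,2, \ldots \), then

$$\begin{aligned} P(Y_1+Y_2+\cdots +Y_N>x) \sim \frac{p}{1-p}\, \overline{F}(x), \quad x\rightarrow \infty . \end{aligned}$$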
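For the geometric case, \(E(N)=p/(1-p)\), so the lemma gives \(P(Y_1+\cdots +Y_N>x)\sim \frac{p}{1-p}\overline{F}(x)\). A rough Monte Carlo sketch of this statement follows. It assumes, purely for illustration, Pareto summands with \(\overline{F}(y)=y^{-\alpha }\) for \(y\ge 1\); the parameter values, the seed and the function names are hypothetical, and a simulation at one finite \(x\) can only suggest the limit, not verify it.

```python
import random

def sample_geometric_sum(rng, p, alpha):
    """Draw Y_1 + ... + Y_N with P(N = k) = (1 - p) p^k and Pareto(alpha) summands."""
    total = 0.0
    while rng.random() < p:                  # each success (prob. p) adds one summand
        total += rng.paretovariate(alpha)    # P(Y > y) = y^(-alpha) for y >= 1
    return total

def tail_ratio(x, p=0.5, alpha=1.5, n_samples=200_000, seed=12345):
    """Empirical P(sum > x) divided by the asymptotic value p/(1-p) * x^(-alpha)."""
    rng = random.Random(seed)
    hits = sum(sample_geometric_sum(rng, p, alpha) > x for _ in range(n_samples))
    empirical = hits / n_samples
    asymptotic = p / (1.0 - p) * x ** (-alpha)
    return empirical / asymptotic

ratio = tail_ratio(50.0)
print(ratio)
```

The printed ratio need not equal 1 at a finite threshold; the lemma only states that it tends to 1 as \(x\rightarrow \infty \). Larger thresholds require more samples, because the event becomes rarer.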

The following results can be easily verified.

Lemma 6.6

(1) Let \(Y_i\) be a r.v. whose d.f. has the LST \(g_i(s)\), \(i=1, 2,\ldots ,n\). Define

$$\begin{aligned} X = Y_i \text{ with probability } p_i,\quad 1\le i \le n, \end{aligned}$$

where \(\sum _{i=1}^n p_i=1\). Then, the d.f. of \(X\) has the LST \(g_X(s)=\sum _{i=1}^n p_i g_i(s)\);

(2) Let \(Y_1, Y_2, \ldots , Y_n\) be independent r.v.s whose d.f.s have the LSTs \(g_1(s),g_2(s),\ldots ,g_n(s)\), respectively. Then, \(g(s)=\prod _{i=1}^n g_i(s)\) can be viewed as the LST of the d.f. of the r.v. \(X\overset{d}{=}\sum _{i=1}^n Y_i\).
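Both parts of Lemma 6.6 can be checked directly on small examples. The sketch below assumes discrete r.v.s, represented as hypothetical `{value: probability}` dictionaries, so that each LST is a finite sum \(\sum _y P(Y=y)e^{-sy}\); the distributions, weights and helper names are made up for this illustration.

```python
import math
from itertools import product

def lst(dist, s):
    """LST of a discrete d.f. given as {value: probability}: E[exp(-s*Y)]."""
    return sum(prob * math.exp(-s * y) for y, prob in dist.items())

def mixture(dists, weights):
    """D.f. of X = Y_i with probability p_i, as in part (1)."""
    out = {}
    for dist, w in zip(dists, weights):
        for y, prob in dist.items():
            out[y] = out.get(y, 0.0) + w * prob
    return out

def independent_sum(dists):
    """D.f. of Y_1 + ... + Y_n for independent Y_i, as in part (2)."""
    out = {}
    for combo in product(*(d.items() for d in dists)):
        value = sum(y for y, _ in combo)
        prob = math.prod(pr for _, pr in combo)
        out[value] = out.get(value, 0.0) + prob
    return out

dists = [{0.0: 0.2, 1.0: 0.8}, {0.5: 0.5, 2.0: 0.5}, {1.0: 1.0}]
weights = [0.3, 0.5, 0.2]
s = 0.7

# Part (1): LST of the mixture versus the weighted sum of the LSTs.
mix_gap = abs(lst(mixture(dists, weights), s)
              - sum(w * lst(d, s) for d, w in zip(dists, weights)))
# Part (2): LST of the independent sum versus the product of the LSTs.
sum_gap = abs(lst(independent_sum(dists), s)
              - math.prod(lst(d, s) for d in dists))
print(mix_gap, sum_gap)
```

Both gaps should be zero up to floating-point rounding. Discrete distributions are used only so that the mixture and the convolution can be enumerated exactly.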

Cite this article

Liu, B., Wang, J. & Zhao, Y.Q. Tail asymptotics of the waiting time and the busy period for the \({{\varvec{M/G/1/K}}}\) queues with subexponential service times. Queueing Syst 76, 1–19 (2014). https://doi.org/10.1007/s11134-013-9348-8

DOI: https://doi.org/10.1007/s11134-013-9348-8

Keywords

- \(M/G/1/K\) queue

- \(GI/G/1/K\) queue

- Waiting time

- Busy period

- Subexponential distribution

- Tail asymptotics