Abstract

This paper uses “double DEA” to assess how accounting for quality influences the DEA technical efficiency scores of a sample of 1,074 US hospitals. In the first use of DEA, quality indices are estimated using a variety of process and outcome measures of quality. In the second use of DEA, technical efficiency is assessed while controlling for quality. A variety of DEA quality indices and a variety of DEA efficiency models are compared to determine how the treatment of quality influences findings regarding technical efficiency. Controlling for quality does matter, with outcome measures having an apparently greater impact than process measures. Given the call for improved quality and better cost containment, controlling for quality is an important contribution to efficiency analysis.

1 Introduction

The “iron triangle” of health care (access, cost, and quality) has received considerable attention in both the academic and popular literature. With estimates that perhaps more than 40 million people lack health insurance, the first side of the triangle, access to health care, has been a major story in the US in recent years. Access to health care was addressed by the Patient Protection and Affordable Care Act, which began to take effect in the US on March 23, 2010. The law provides several vehicles for extending health care insurance coverage and mandates that by 2014 all Americans must have health care coverage or pay a fine. Cost containment, the second side of the iron triangle, has been a research and public policy concern for decades. Much of the attention has focused on hospitals, which represent the largest single block of health care spending in most industrialized countries (Zweifel et al. 2009). For example, 31% of US national health expenditures in 2010 were spent on hospitals (Martin et al. 2012). In the 1980s, Medicare introduced its Prospective Payment System (PPS) as a cost control measure to replace its generous Fee for Service (FFS) policy, which allegedly contributed to escalating costs. Managed care was introduced in the private sector shortly thereafter in another attempt to control health care costs. Numerous studies of hospital efficiency have been undertaken to determine how much of hospital cost was attributable to “waste” (see Hollingsworth et al. 1999; Hollingsworth 2003, 2008, for reviews); the academic literature was voluminous enough to be characterized by Hollingsworth (2008, p. 1107) as a “small industry.” The third side of the iron triangle, concern about quality, is in part an outgrowth of the move to contain health care costs. Critics have argued that health care providers trade off quality against cost control, with patients leaving hospitals “quicker but sicker” as a result of the quest to contain costs.Footnote 1

While the “iron triangle” was intended to drive home the point that access, cost, and quality are connected, they have often been treated separately in the academic literature. Until recently, this was especially true in the field of hospital cost function estimation and efficiency measurement. Two early efforts to account for quality while measuring hospital efficiency are Morey et al. (1992), who incorporated quality measures into a DEA efficiency analysis of hospitals, and Carey and Burgess (1999), who argued that leaving quality out of cost function estimation would result in omitted variable bias. Part of the reason that quality has largely been ignored in hospital efficiency studies is that quality is difficult to define and quantify. While the issue of how to measure “quality” in health care has not been resolved definitively, a variety of measures of quality have now become available. This is due in part to the efforts of the Centers for Medicare & Medicaid Services (CMS), which offers “pay-for-performance” (P4P) incentives to hospitals,Footnote 2 as well as to patient advocacy groups, such as the Leapfrog Group, which seek to provide consumers with information on hospital quality so that they can make better health care decisions. The problem is no longer one of not having measures of quality, but rather one of how to incorporate the wealth of quality measures into an analysis of hospital efficiency.

In this paper we propose a new approach by which multiple quality measures can be incorporated into the analysis of hospital efficiency.Footnote 3 Specifically, we propose a two-step procedure in which both steps involve the use of DEA, but for different purposes. As a first step, DEA is used to construct indices of hospital quality based on a variety of procedural and outcome indicators of quality. As a second step, these DEA-based quality indices are incorporated in a variety of ways into a DEA analysis of hospital technical efficiency. We dub this a “double DEA” approach. The results found in both stages are analyzed and contrasted. The remainder of the paper is organized as follows. Section 2 provides additional background on the need to jointly monitor efficiency and quality in the hospital sector and motivates our contribution. Section 3 offers a brief review of the literature on the relationship between efficiency and quality in the hospital sector and recent approaches used to measure efficiency while accounting for quality. Section 4 describes a set of three quality indices that we generate using DEA; these indices are used to account for quality when measuring hospital efficiency in Sect. 5. Section 6 summarizes the paper.

2 Background and motivation

The current system of pricing and financing medical care in the United States appears to be the result of the evolution of several public policies with varying objectives and intentions. Among the multitude of factors contributing to the complexity of the current US health care market is widespread, but not universal, insurance coverage, much of which is provided by the government. Adverse selection makes private health insurance markets inefficient, but a large percentage of the US workforce has had access to group health insurance through their employer since the 1940s, an artifact of wage controls imposed during WW II. Beneficial tax treatment, employer subsidies of the premium, and mitigation of adverse selection help the group health insurance market work fairly well in providing coverage for employees and their families (Thomasson 2002).

Medicare and Medicaid are the two major government health insurance programs. Medicare was created in 1965 to ensure adequate access to appropriate medical care for senior citizens no longer in the workforce and who were unlikely to have group coverage through an employer.Footnote 4 Medicare originally reimbursed medical care providers retrospectively for the cost of all care provided to its covered beneficiaries (i.e., Fee for Service [FFS] reimbursement). Like all medical insurance, this program essentially lowered the marginal cost of medical care for covered beneficiaries (to near zero in some cases). Since rational, optimizing economic agents will consume care up to the quantity where the marginal benefit equals the marginal cost, medical insurance encourages beneficiaries to consume a greater quantity of medical care. Additionally, health care providers have minimal incentives to ration the care provided or to provide less than the highest quality of care when they are reimbursed for the full cost of all care provided. It is not surprising that the use of medical services and benefits paid out for Medicare have continuously increased and are projected to continue to increase unless incentives for patients to reduce the use of medical care are introduced (Fuchs 1999; MedPAC 2010).

In 1983 Medicare converted from FFS to a Prospective Payment System (PPS) based on diagnosis related groups (DRGs) for inpatient care.Footnote 5 Under PPS, providers are paid a fixed amount of money to treat a patient based on the DRG, severity, and procedure codes regardless of the actual cost incurred to treat that patient.Footnote 6 While not necessarily the primary goal of the conversion, PPS does provide an incentive for providers to give appropriate care in the most cost-effective manner.Footnote 7 In the production of most goods, incentives that encourage production with the lowest resource usage would seem to be unambiguously good. In the case of medical care, however, where providers heavily influence consumer choices, and consumers have imperfect, unverifiable information and hence face considerable uncertainty, the PPS reimbursement system may have had the unintended consequence of encouraging providers to reduce the quality of care in order to lower costs. Lower quality of care could cause adverse health outcomes in the covered population.

The introduction of PPS (and negotiated pricing contracts for large managed care providers) substantially changed the pricing power and pricing structure of hospitals by subjecting them to yardstick competition (Shleifer 1985). Yardstick competition is an attempt to replicate the results of perfect competition in non-competitive markets. Under yardstick competition, the fee paid to hospitals is based on the average cost across hospitals of treating a patient with a given DRG. In principle, this should encourage those hospitals with higher than average costs to become more efficient, with all hospitals approaching the efficient frontier over time.

Though not all hospital patients are covered by Medicare, Medicare payments were 29% of hospital spending in 2008; Medicaid and all State Children’s Health Insurance Program (SCHIP) programs represented another 17% of hospital spending (MedPAC 2010). While it is possible that the conversion to PPS may have acted as an exogenous shock that encouraged all hospitals to provide care more efficiently to all patients, as yardstick competition theories would predict (Shleifer 1985), it is also possible that it encouraged cost shifting, such that the care provided to Medicare patients is subsidized by higher prices charged to non-Medicare patients. Changes in Medicare reimbursement, however, were not the only compensatory changes taking place. The growth in employer-sponsored plans involving contracts with managed care (MC) and health maintenance organizations (HMO) further increased the fiscal incentives for hospitals to control the cost of health care for covered plan participants, thus reducing the ability to shift costs to other payers. If hospitals respond to the incentives as intended, the result would be higher efficiency, including technical, allocative, and cost efficiencies. However, if the quality of care is difficult for consumers to assess accurately and cost is increasing in quality, as some studies indicate (e.g., Carey 2003; Morey et al. 1992), then it is entirely plausible that reimbursement mechanisms intended to control the cost of health care may also lead to consumers receiving a lower quality of care. If quality is not included in the analysis of cost/efficiency, the omission would cause an omitted variable problem that would bias any results found or conclusions drawn from the data. The primary concern is that failure to account for quality could cause hospitals that provide a lower quality of care to appear to be relatively more efficient, while the hospitals providing the best care could appear to utilize resources sub-optimally. For these reasons, hospital efficiency and the quality of care provided by hospitals should be considered together when evaluating public policy on health care.

While many studies have been done on hospital quality and many others on hospital efficiency, this paper addresses the omitted variable bias by measuring the efficiency of hospitals while controlling for the quality of care provided. It also improves on previous quality measures by incorporating outcome and process quality indicators into quality indices using data envelopment analysis (DEA), which minimizes the a priori assumptions and weights needed to form an aggregate measure of quality.
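To illustrate why a DEA-based index needs no externally imposed weights, consider one common formulation, often called a “benefit-of-the-doubt” model. It is offered here only as a generic sketch and is not necessarily the specification used in this paper. The model treats each hospital as having a single unit input and its quality indicators as outputs. The notation is assumed for this illustration: $q_{rj}$ is the value of quality indicator $r$ for hospital $j$ (scaled so that larger is better), $w_r$ is the weight on indicator $r$, $s$ is the number of indicators, and $n$ is the number of hospitals.

```latex
Q_o = \max_{w_1,\dots,w_s} \; \sum_{r=1}^{s} w_r \, q_{ro}
\quad \text{subject to} \quad
\sum_{r=1}^{s} w_r \, q_{rj} \le 1 \;\; (j = 1,\dots,n), \qquad
w_r \ge 0 \;\; (r = 1,\dots,s).
```

Under this sketch each hospital $o$ is scored with the weights most favorable to it, subject to no hospital exceeding a score of one under those same weights. With nonnegative indicators, $0 \le Q_o \le 1$, and the weights are an outcome of the optimization, not an assumption supplied by the researcher.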

3 Hospital quality and efficiency literature

The potential for PPS to generate unintended consequences was quickly recognized. Several studies were done shortly after the introduction of PPS to assess whether the quality and/or efficiency of care changed as a result. Rogers et al. (1990) found no change in mortality following hospitalization but found an increased likelihood of an unstable patient being discharged. Kosecoff et al. (1990) found an improvement in quality with the exception of discharge planning. Finally, Keeler et al. (1990) found that Medicare patients were sicker at time of admission after the introduction of PPS. All of these findings could be the result of a shift from inpatient to outpatient care treatments for less critically ill patients, since outpatient services continued to be covered on a retrospective FFS basis until 2000.

Chirikos and Sear (2000) found little change in efficiency in Florida hospitals from 1983 to 1992 with significant inefficiency present regardless of the technique used to estimate the efficiency. Rosko (2001), in contrast, found improvements in efficiency from 1990 to 1996 for urban US hospitals when efficiency was estimated using stochastic frontier analysis (SFA). Since all of these studies were done using data available after the introduction of PPS financing, it is possible that their results captured the residual response to the financing shock, but that other trends might emerge over time. Further study is also warranted given the increasing market share of managed care, HMO, and other private insurance plans with strong cost control mechanisms.

Variations in the quality of care provided by hospitals have been evaluated with mixed results. Sloan et al. (2001) found no significant difference in the mortality rate based on hospital characteristics, primarily ownership status. But Jha et al. (2005) found not-for-profit status, academic status, and location in the Northeast or Midwest to be statistically significant determinants of hospital quality when quality was based on process measures. These two studies suggest that findings regarding quality may depend on how quality is measured or proxied.

Ideally, the quality of medical care is measured as the improvement in health as a direct result of the care provided, or a measure of the ultimate health outcomes of care. In reality, measuring outcomes in this way is problematic for many reasons. First, many illnesses appear or subside somewhat randomly. Some patients may experience adverse outcomes despite receiving the best possible care while other patients fully recover even in the absence of care. The stochastic nature of many conditions makes it difficult to determine the effects of care without observing a large number of patients receiving similar medical care for the same illness. A second consideration is that many complications or illnesses may not manifest immediately. Exposure to some risks or illnesses may not lead to observable symptoms or complications until several months or years after the exposure or treatment. This requires the observation of patients for sufficiently long periods of time to observe any non-immediate outcomes. Finally, patient characteristics other than the medical care received (e.g., socioeconomic factors) play a significant role in the health outcome of the patient. The patient’s compliance with discharge instructions, genetic predispositions, home environment, and lifestyle all influence the health status of the patient and are largely outside the control of the care provider, so they must be controlled for if the outcomes as a result of the care are to be accurately measured. These confounding characteristics make it necessary to observe large numbers of patients with similar conditions over long time periods to measure health consequences truly attributable to the medical care received. Risk-adjusted mortality rates are intended to control for patient characteristics, but the validity of risk adjustments has been questioned (Thomas and Hofer 1999).

Donabedian (1978) suggested that there are three components of quality that need to be accounted for in modern medical settings. The first component measures the quality of the structure of the care delivery system, or the quantity and quality of resources or inputs available for providing care. The second component is the process of the care, or how the inputs are combined to provide care which measures the quality of the clinical decision-making process. The third component measures the health outcomes resulting from the care as discussed above. Donabedian argues that any overall measure of medical care must include all three components to adequately account for the quality of care patients receive. While outcome quality measures are natural metrics for patients to appreciate and may come close to measuring the health effects in which we are ultimately interested, medical professionals may find process quality measures more useful. Hospitals facing a higher than expected mortality rate may not be able to determine whether this is the result of patient risks that were not accounted for, or whether it is due to inadequate clinical practices. If it is determined to result from inadequate care, the recognition does not indicate the best method for improving the quality of care or suggest modifications that care providers could make to provide better quality patient outcomes. Process quality measures, on the other hand, provide hospitals with insights as to specific steps to take to improve the quality of patient care. Furthermore, medical care providers have direct control over the clinical decision making process, but have little or no control over the quality and quantity of resources available and only indirect or partial control over the outcomes. In our analysis below, all three components of quality are considered by using data on hospital inputs, processes, and outcomes.

Given the potential for negative outcomes of poor medical care, the quality of care provided by hospitals is of immediate concern. However, most hospitals are incredibly complex organizations treating a wide variety of patient types with a variety of illnesses and no single quality measure has been derived that captures all the dimensions of the quality of care provided by the hospital. DesHarnais et al. (1991) examined three different outcome measures and calculated an index for each of the three risk-adjusted measures: mortality, complication rates, and re-admission rates. They found no correlation among a hospital’s ranks on the three quality measures. Further inconsistencies in quality measures have been found. Farsi (2004) examined changes in mortality rates of patients admitted through the ER with diagnoses of acute myocardial infarction (AMI, or heart attack) or congestive heart failure (CHF) after an ownership conversion to determine whether financial incentives affect quality. He found that conversion to for-profit status increased mortality rates for AMI patients, while conversion to non-profit status increased mortality rates for CHF patients. Hartz et al. (1993) found high risk-adjusted mortality to be correlated with process quality indicators only when compared to like hospitals; comparisons across diverse groups of hospitals are uncorrelated. These studies suggest that different measures of quality contain different information and that a measure that accounts for the multiple facets of quality is needed.

To account for the multiple aspects of quality, Jacobs et al. (2005) created a quality index number reflecting 10 quality measures using a variety of aggregation methods, such as an equally weighted sum, a variable weights sum, and transforming the quality measures using non-linear rules to assign a categorical value (which was intended to replicate the star ratings of English hospitals). Jacobs et al. (2005) found that the quality indices and rankings derived from them were quite sensitive to the aggregation method used and the scales used to measure the individual quality components, particularly when some measurement error is present or when there is random variation. The aggregation method used to generate the index number also required the researchers to make assumptions regarding the weights or priorities used in the process. Using a separate metric for the quality of each component of care quickly generates too much data to be easily processed by consumers seeking care, but an aggregated quality measure needs to be free from the types of sensitivity and weighting concerns documented by Jacobs et al. (2005). Several other attempts to measure the quality of care empirically have also concluded that a single measure of quality does not adequately capture the separate components of quality (e.g., Farsi 2004; Pine et al. 1997; Hines 2003).
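The sensitivity of rankings to the aggregation method can be seen in a small sketch. The hospitals, scores, and weights below are hypothetical values chosen for illustration; they are not data from Jacobs et al. (2005) or from this paper. The sketch compares an equally weighted sum with a sum that places more weight on the first measure.

```python
# Hypothetical quality scores (higher is better) on three measures.
scores = {
    "A": [0.90, 0.60, 0.70],
    "B": [0.70, 0.80, 0.75],
    "C": [0.60, 0.95, 0.60],
}


def weighted_index(values, weights):
    """Weighted sum of quality measures; weights are assumed to sum to 1."""
    return sum(v * w for v, w in zip(values, weights))


def rank_hospitals(all_scores, weights):
    """Return hospital names ordered from highest to lowest index value."""
    return sorted(
        all_scores,
        key=lambda name: weighted_index(all_scores[name], weights),
        reverse=True,
    )


equal_weights = [1 / 3, 1 / 3, 1 / 3]   # equally weighted sum
first_heavy = [0.6, 0.2, 0.2]           # hypothetical priority on measure 1

equal_ranking = rank_hospitals(scores, equal_weights)
first_heavy_ranking = rank_hospitals(scores, first_heavy)

print("Equal weights:   ", equal_ranking)
print("Measure-1 heavy: ", first_heavy_ranking)
```

In this illustration hospitals A and B swap places when the weights change, even though the underlying quality data are identical, which is the kind of sensitivity that motivates an aggregation method with fewer a priori weighting assumptions.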

Despite the evidence that no single quality measure easily captures overall hospital quality, the current consumer-driven care initiatives rely on hospitals competing for consumers and require consumers to make sophisticated decisions on how to best spend health care dollars. Several states have begun requiring hospitals to make various quality statistics available to consumers, but how well consumers can interpret the information available and manage large amounts of quality information remains uncertain.

Although several studies have considered hospital quality, and many more have evaluated hospital efficiency, very few have considered both efficiency measurements and the quality of care provided. Carey (2003) considered efficiency and quality simultaneously by using a hospital mortality index from The Guide to Hospital Performance as a proxy for quality when estimating a cost function using SFA. Carey (2003) found that increasing quality was associated with increases in costs. Ferrier and Valdmanis (1996) used the quality of care, as measured by the ratio of risk-adjusted predicted mortality to actual mortality, as one of the determinants of efficiency scores for rural hospitals. They found quality to be positively and significantly related to technical efficiency, but negatively and significantly related to allocative and scale efficiency. Deily and McKay (2006) examined the relationship between cost efficiency and the quality of care in a two-stage procedure. Efficiency was determined using SFA, which was then used as an explanatory variable to examine quality as measured by the risk-adjusted mortality rates. Using data from Florida they found that higher cost inefficiency was associated with higher inpatient mortality rates. These latter two studies involved a two-stage methodology that required the authors to make assumptions regarding the direction of causality between efficiency and quality. Despite the causality assumptions, these constructs should reveal whether or not quality variations show up as inefficiency.

Some earlier studies of hospital efficiency that accounted for quality were discussed above (Morey et al. 1992; Carey and Burgess 1999). Another way to account for quality when measuring efficiency is the use of quality indicators (e.g., teaching status) as a control in either the first or second stage of efficiency analysis. Recently there has been an increased interest in bringing quality into the analysis of hospital efficiency, and we have identified three methods for accounting for quality in the recent hospital efficiency literature: including quality measures as extra outputs in a DEA model, the use of various controls for quality in the estimation of stochastic cost frontiers, and the use of a DEA-congestion model to examine quality.

A DEA model that specifies quality measures as extra outputs was used by Nayar and Ozcan (2008) to compare efficiency for a 2003 sample of 53 Virginia hospitals. Their baseline DEA model included only standard inputs (total beds, scaled expenses, total staff FTEs, and total assets) and standard outputs (adjusted discharges, training FTEs, and outpatient visits). Their second DEA model specification included the same inputs and outputs plus three pneumonia-related process measures of quality: the percentage of pneumonia patients who received their initial dose of antibiotics within 4 hours of admission, the percentage of pneumonia patients given an oxygen assessment, and the percentage of elderly pneumonia patients who were given a pneumonia vaccine, if appropriate, before being discharged. Nayar and Ozcan report a Spearman rank correlation of 0.77 between the efficiency scores produced by their two models. They conclude that this offers evidence that quality is not sacrificed in the name of efficiency in the provision of hospital care.
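The comparison of two sets of efficiency scores by Spearman rank correlation can be sketched as follows. The scores are hypothetical and do not reproduce the Nayar and Ozcan (2008) data; the helper names are assumptions made for this illustration. The statistic is computed as the Pearson correlation of the ranks, with tied values given their average rank.

```python
def average_ranks(values):
    """Rank values from 1 (smallest) upward; ties receive their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sd_x = sum((a - mean_x) ** 2 for a in x) ** 0.5
    sd_y = sum((b - mean_y) ** 2 for b in y) ** 0.5
    return cov / (sd_x * sd_y)


def spearman(x, y):
    """Spearman rank correlation: Pearson correlation of the ranks."""
    return pearson(average_ranks(x), average_ranks(y))


# Hypothetical DEA efficiency scores for five hospitals under two models.
baseline = [0.62, 0.85, 1.00, 0.74, 0.91]       # without quality measures
with_quality = [0.70, 0.88, 1.00, 0.95, 0.90]   # with quality as extra outputs

rho = spearman(baseline, with_quality)
print(f"Spearman rank correlation: {rho:.2f}")
```

A coefficient well below one, as in this illustration, indicates that some hospitals change position in the efficiency ranking once quality is added, even when the two sets of scores remain positively related.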

Mutter et al. (2008) estimated cost functions for 1,290 urban hospitals operating in 20 US states during 2001 using stochastic frontier analysis. Mutter et al. (2008) contrast the results of five models that vary in the degree to which, and the means by which, they account for both patient burden of illness and hospital quality. Their cost functions include the prices of capital (proxied by the area mean value of depreciation and interest expense per bed) and labor (proxied by area mean salary and benefits per FTE employee). Outputs included inpatient admissions, outpatient visits, and patient days in non-acute care units. Their base model accounted for patient burden of illness using Medicare’s Case-Mix Index and included two binary variables related to the teaching status of hospitals to control for quality. The second model of Mutter et al. (2008) built on their first model by adding an “all-causes, risk-adjusted, excess in-hospital mortality rate index for Medicare patients” (p. 2000) that they obtained from Solucient. Their third model employed a more specific quality measure than their second model, namely “condition-specific, risk-adjusted mortality rates” (p. 2000) generated using the QI (quality indicator) software from the Agency for Healthcare Research and Quality (AHRQ). Their fourth model added 30 hospital-level comorbidity rates per admission to their first model. The fifth and final model combined their second and fourth models. They found that their first three models returned highly correlated estimates of inefficiency (Pearson correlation coefficients of 0.99 or higher). However, the results of their last two models suggest that including the comorbidity rates leads to increased estimates of efficiency. While the last two models also were highly correlated with one another (Pearson correlation coefficient of 0.999), correlations between the first three models and the last two models were around 0.80. Mutter et al. (2008) conclude “that in under-specified models variation in patient burden of illness might masquerade as inefficiency” (p. 2005).

Valdmanis et al. (2008) used congestion analysis, a modification of DEA, to examine whether there was a trade-off between efficiency and quality in a sample of 1,377 US urban hospitals operating in 34 states in 2004. The congestion model treats some “outputs” as undesirable; in this case, the undesirable outputs are “patient safety events.” Valdmanis et al. (2008) used DEA models in each step of their two-step procedure. First, they used DEA to generate a measure of congestion (output loss associated with patient safety events in their case) in an output-oriented model. The inputs included bassinets, two measures of bed types, FTE registered nurses (RNs), licensed practical nurses (LPNs), medical residents, and other personnel. The desirable outputs included case-mix adjusted admissions, total surgeries, total outpatient visits, total births, and total other patient days. The undesirable outputs (indicators of poor quality) included failure to rescue, infection due to medical care, post-operative respiratory failure, and post-operative sepsis. These “nurse sensitive” quality measures were generated using AHRQ’s QI software. After adjusting desirable outputs by “quality congestion,” DEA was performed a second time. By comparing results between the first (unadjusted) and second (adjusted) DEA models, they were able to examine whether increases in inputs would lead to increases in quality or to increases in inefficiency. They concluded that a reallocation of resources could improve relative quality among hospitals. For example, low quality hospitals tended to have too few labor inputs while high quality hospitals tended to have excess labor inputs. Overall, they found that there is not necessarily a tradeoff between efficiency and quality. Mutter et al. (2010) also used the congestion approach and concluded that low-performing hospitals could potentially improve both quality and efficiency by adopting the characteristics of best-practice hospitals.

4 The quality indices

The sample for this study consists of 1,074 US general acute-care hospitals operating in FY2005 for which sufficient data on quality measures and input and output data could be collected. To be included in the sample, a hospital had to treat a minimum of 25 patients for each of the four outcome measures used as quality indicators and also had to report compliance rates to the Centers for Medicare & Medicaid Services (CMS) for the eight process quality measures used. The process measures used are part of the original ten compliance rates that CMS began collecting in 2003. Prior to 2004, data reporting was strictly voluntary and those hospitals choosing to report likely were not representative of all hospitals. Beginning in 2004, Medicare linked participation to annual payment updates. The participation rate is now over 98% (Lindenauer et al. 2007), so the financial incentive to “voluntarily” participate should mitigate selection bias concerns. By including only those hospitals that treated at least 25 patients without contraindications for each of the four outcome indicators used, this study excludes many small hospitals. This is necessary to prevent one adverse outcome from unduly affecting a hospital’s complication or mortality rates, which would lead to problems associated with measurement error in the outcome quality measures. Despite excluding very small and specialty hospitals, the hospitals included represent most of the acute care hospital beds and the hospital care provided to a majority of the US population.
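The inclusion rule described above can be sketched as a simple filter. The record layout, field names, and example hospitals are hypothetical and are not the structure of the HealthGrades or CMS files; only the threshold of 25 patients and the counts of four outcome and eight process measures come from the text. The helper `one_event_shift` shows why the threshold matters: a single adverse outcome moves a rate by 100/n percentage points.

```python
MIN_PATIENTS = 25   # minimum patients per outcome measure (from the text)
N_OUTCOME = 4       # AMI, CHF, pneumonia, cholecystectomy
N_PROCESS = 8       # process compliance measures


def eligible(hospital):
    """True if the hospital meets both inclusion criteria (hypothetical fields)."""
    counts = hospital["outcome_patient_counts"]
    rates = hospital["process_compliance_rates"]
    enough_patients = (
        len(counts) == N_OUTCOME and all(c >= MIN_PATIENTS for c in counts)
    )
    all_reported = (
        len(rates) == N_PROCESS and all(r is not None for r in rates)
    )
    return enough_patients and all_reported


def one_event_shift(n_patients):
    """Percentage-point change in a rate caused by one additional adverse event."""
    return 100.0 / n_patients


# Hypothetical records: H2 has too few patients for one outcome measure,
# and H3 is missing one process compliance rate.
hospitals = [
    {"id": "H1", "outcome_patient_counts": [120, 80, 200, 45],
     "process_compliance_rates": [0.9] * 8},
    {"id": "H2", "outcome_patient_counts": [120, 24, 200, 45],
     "process_compliance_rates": [0.9] * 8},
    {"id": "H3", "outcome_patient_counts": [60, 70, 90, 30],
     "process_compliance_rates": [0.9] * 7 + [None]},
]

sample = [h["id"] for h in hospitals if eligible(h)]
print(sample)
print(one_event_shift(5), one_event_shift(25))
```

With only 5 patients, one adverse outcome shifts the observed rate by 20 percentage points; at the 25-patient threshold the shift is 4 percentage points, which limits the measurement error that a single case can introduce.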

While the definition of hospital output is far from straightforward, for hospitals “[q]uality may be even more difficult to measure than output…” (Olesen and Petersen 2002, p. 106). Conceptually difficult to define and practically hard to quantify, “quality of care” could refer to any aspect of a hospital’s service that influences the well-being of a patient both during treatment and beyond. Quality includes the amenities of the “hotel” aspects of a hospital and the “humanness” of care as well as procedural compliance rates and mortality and complication rates. In this study, we use a variety of outcome measures (indicators of the quality of outputs) as well as process measures (indicators of the quality of inputs) as measures of quality. The quality measures we use come from two sources: (1) the outcome quality data come from HealthGrades, Inc.,Footnote 8 and (2) the process quality data come from the CMS website.Footnote 9 The four outcome measures used include the risk-adjusted predicted and observed mortality rates for acute myocardial infarction (AMI), congestive heart failure (CHF), pneumonia, and the risk-adjusted predicted and observed complication rates for cholecystectomy surgery (gall-bladder removal). The first three measures are associated with commonly presented serious ailments and are often used to assess hospital qualityFootnote 10 and the last ailment, the cholecystectomy, is a common surgical procedure. All four ailments are commonly presented at general acute care hospitals. (See Table 1 for definitions of the outcome quality measures.) HealthGrades provided the data in June 2005, with the predicted and observed measures based on the most recent data available at that time.Footnote 11 There were 4,228 hospitals in the HealthGrades data set; however, many of those observations contained outcome data for one or more but not all four conditions. 
The process quality measures related to AMI, CHF, and pneumonia, were downloaded from the CMS website in June 2005 to reflect patient care in the same time period as the HealthGrades, Inc. data. The CMS website included compliance rates from 4,747 hospitals, but again most hospitals do not report compliance rates for all three conditions (and eight measures) used in this study. (See Table 1 for definitions of the eight process measures used.) As noted above, our final data sample consisted of 1,074 hospitals.

Recall that DesHarnais et al. (1991) concluded that because quality is multidimensional, no single aspect of quality was sufficient for ranking hospitals. While some of the quality measures we employ have been used in previous studies, typically only a single or small number of aspects of a hospital’s quality of care have been considered (e.g., Carey and Burgess 1999, used mortality rates, readmission rates, and outpatient follow-up visits as three measures of quality in estimating a cost function for Veterans Administration [VA] hospitals). Because there are myriad measures of quality we combine several different measures into a single, aggregate measure of quality using quantifiable outcome and process measures as our data. While there are several methods that could be used to create a single, aggregate measure, almost all of them require the researcher to have a previous measure or to make assumptions about weights assigned to the individual measures used to create the index. It is reasonable to assume that, ceteris paribus, hospitals seek to maximize the quality of care for every patient; however, constraints on resources may force them to make trade-offs between the quality and quantity of care provided within a diagnosis and across different diagnoses and patient groups.Footnote 12 Equally weighting each patient group or diagnosis seems inadequate since not all diagnoses are equally common or equally severe. However, weighting by patient count may also be inappropriate if it reflects patient choice or hospital strategy in metropolitan areas where patients may have several hospitals to choose from and patients in less populated areas do not have similar choices.

To minimize the a priori assumptions needed, two aggregation methods were considered: factor analysis and DEA. DEA has the advantage of not assigning weights a priori. Instead, the linear program solved by DEA results in what Cherchye (2001) described as “benefit of the doubt weighting” (p. 408)—weights are chosen to make each “decision making unit” (DMU) appear to be as efficient as possible.Footnote 13 In contrast, factor analysis uses the weights that best condense the information contained in the data. In our case, factor analysis proved to be an unsatisfactory option. An unrestricted exploratory analysis determined there were 6 independent factors in the 12 quality measures used. Limiting the analysis to one factor produced factor weights that were highly sensitive to the sample used.Footnote 14 Based on this, we opted to use DEA as the means to aggregate the multiple dimensions of quality into several indices of quality.

DEA is most frequently applied to measure “efficiency” in a production setting; but it has also been used for a number of other purposes. For example, Doyle and Green (1991) specified a DEA model with a single “input” (cost) and multiple “outputs” (features) to estimate a composite indicator of the price/quality relationship of the computer printers in their sample, providing a means for consumers to more easily compare products. Oh et al. (2010) consider the price/quality trade-off for Korean automobiles. Thompson et al. (1986) explored the optimal location for the superconducting-supercollider from among six sites in Texas. They specified three inputs in their DEA model and assigned each potential site the same quantity—1—of a single output—the superconducting supercollider. Lovell (1995) suggested the use of a special case of DEA, the non-convex Free Disposal Hull (FDH), to create an aggregated measure of macroeconomic performance that is not sensitive to measurement scale and does not assign weights a priori. Rather than a single, unitary output as in the case of Thompson et al. (1986), in Lovell (1995) each country had a unitary, single input available—“its macroeconomic decision making apparatus” (Lovell 1995, p. 167), which he referred to as a country’s “helmsman”—those collectively responsible for “steering” the economy. Lovell et al. (1995) used an additive DEA model to create indices of macroeconomic performance. Olesen and Petersen (1995) utilized a stochastic dominance approach to control for quality where some output is good and some output is bad. This overcomes the strong disposability assumption, but it requires quality to be measured discretely and quantities of each level of quality to be known, which is not feasible with data currently available for hospitals. 
More recently, Cherchye (2001) used DEA to merge four separate measures of macroeconomic performance into a single “synthetic indicator” of performance.Footnote 15 He suggests that the weights applied to each aspect of economic performance provide proxies that reflect policy priorities.Footnote 16

In this paper we use DEA as suggested in Cherchye (2001) to create a “single statistic” of quality based on multiple outcome and process quality measures for each hospital in our sample. This approach allows us to integrate multiple components of quality into a single measure, or index, without imposing a priori weights or strong assumptions on the model. Under the assumption that hospitals would like to maximize quality, we use a radial, output-oriented DEA model with a single, constant unitary input (a hospital’s “decision-making apparatus” used to guide quality, i.e., the “helmsman” of Lovell 1995) and a variety of process and outcome measures specified as the outputs. Because most of the quality indicators are measured as ratios, we use a variable returns to scale model (Hollingsworth and Smith 2003). For hospital “o,” the output-oriented, VRS DEA model for n hospitals, k quality indicators for each hospital (y_ij), and a single, unitary input (the “helmsman”) is given by the following linear programming problem:
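A standard way to write an output-oriented VRS program with a single unitary input is sketched below. It is offered as a reading consistent with the description in the text, not as the authors' exact notation. The symbols \( \phi \) (the radial expansion factor) and \( \lambda_j \) (the intensity weights) are assumed here, as is the convention that \( y_{ij} \) denotes quality indicator i for hospital j.

\[
\begin{aligned}
\max_{\phi,\,\lambda}\quad & \phi \\
\text{s.t.}\quad & \sum_{j=1}^{n} \lambda_j\, y_{ij} \ge \phi\, y_{io}, \qquad i = 1,\dots,k, \\
& \sum_{j=1}^{n} \lambda_j \cdot 1 \le 1, \\
& \sum_{j=1}^{n} \lambda_j = 1, \\
& \lambda_j \ge 0, \qquad j = 1,\dots,n.
\end{aligned}
\]

In this conventional form the optimal \( \phi^{*} \) is at least 1. One common convention, assumed here, reports its reciprocal \( 1/\phi^{*} \), which lies in (0,1] and rises with quality.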

Note that the second inequality constraint (representing the single unitary input available to all hospitals) is redundant since it will automatically be satisfied given the assumptions of variable returns to scale (VRS) and non-negativity of the weights that form the convex combination of hospitals that define the best practice frontier. The resulting solution, θ*, provides a “single statistic” aggregate measure of the quality of a hospital, that is, a quality index. The greater the value of θ*, the higher the quality of hospital “o.” Different quality indices can be constructed, depending upon the particular quality indicators that are used in the implementation of the above DEA model.

Eight of the twelve outputs in the analysis are the compliance rates from the CMS data. They measure the percentage of patients without a contraindication that received the recommended diagnostic test or treatment within the specified time frame. The compliance rates range from 0.05 to 0.99 and are procedural, or process, quality measures. The other four quality measures are the ratios of risk-adjusted predicted to observed mortality rates or complication rates for each of the four conditions noted above. The risk-adjusted predicted mortality rates and complication rates incorporate patient demographic characteristics and co-morbidities to account for patient risks outside the hospital’s control. The ratio is used since all outputs must be positive for DEA and so that a higher number represents better than expected patient outcomes, presumably due to higher quality of care. These mortality and complication ratios are the outcome quality measures. The summary statistics for the twelve quality measures shown in Table 2 suggest that quality varies considerably across hospitals. Table 3 provides both the Pearson and Spearman correlations among the twelve quality measures. Though the correlations are relatively low, many of the measures are positively and statistically significantly correlated with one another, especially with measures from the same category (outcomes vs. processes). However, some of the quality measures are negatively and statistically significantly correlated, while others are not correlated, suggesting that the quality measures are contributing different pieces of information about overall hospital quality. Recall that DesHarnais et al. (1991) found their quality measures to be uncorrelated.

Using DEA to measure the efficiency of producing quality on multiple dimensions, there are several logically justifiable ways the data can be analyzed. We have chosen to examine three different aggregate quality measures to see whether the method for creating the quality measure drives any changes in technical efficiency observed when controlling for quality.

The first quality index (QI1) is generated using all twelve quality performance measures as separate outputs generated by a single unitary input (the “helmsman”). This measures how efficiently the hospital produces quality based on all the available quality measures simultaneously. The second quality index (QI2) is generated using only the eight procedural performance measures as outputs, again with a single unitary input. This score can be thought of as a measure of how effectively a hospital’s inputs, primarily labor, and processes lead to compliance with stated treatment targets. The third quality index (QI3) is derived using the four outcome quality measures as outputs and the single unitary input. It represents how effectively the hospital avoids complications or how efficiently it generates additional patient health. All three quality indices are estimated using the bootstrapping procedure of Simar and Wilson (1998), and only bias corrected measures are used in the analysis.Footnote 17

The three quality indices offer an overall measure of quality (QI1), a process quality measure (QI2), and an outcomes quality measure (QI3), respectively. In each case the index is the proportional radial distance from the origin to a given hospital relative to the best-practice quality frontier. For QI1, QI2, and QI3, the quality indices can be interpreted as the effectiveness of the “helmsman” in generating quality; scores range from 0 to 1, with hospitals on the efficient quality frontier receiving a score of 1.
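As a rough illustration of how an index of this kind could be computed, the sketch below solves an output-oriented VRS program with a single unitary input using SciPy's `linprog`. The function name `quality_index`, the toy data, and the choice to report the reciprocal of the radial expansion factor (so that scores fall in (0, 1]) are assumptions made for this example. The bootstrap bias correction of Simar and Wilson (1998) used in the paper is not included.

```python
import numpy as np
from scipy.optimize import linprog


def quality_index(Y, o):
    """Quality score in (0, 1] for hospital `o` (hypothetical helper).

    Y is an (n_hospitals, k_indicators) array of positive quality indicators.
    Every hospital is assumed to hold the same unitary input, so only the
    output constraints and the VRS convexity constraint are needed.
    """
    n, k = Y.shape
    # Decision variables: [phi, lambda_1, ..., lambda_n]; maximize phi.
    c = np.zeros(n + 1)
    c[0] = -1.0
    # Output constraints: phi * y_io - sum_j lambda_j * y_ij <= 0
    A_ub = np.hstack([Y[o].reshape(k, 1), -Y.T])
    b_ub = np.zeros(k)
    # VRS convexity constraint: sum_j lambda_j = 1
    A_eq = np.concatenate([[0.0], np.ones(n)]).reshape(1, n + 1)
    res = linprog(c, A_ub=A_ub, b_ub=b_ub, A_eq=A_eq, b_eq=[1.0],
                  bounds=[(0, None)] * (n + 1), method="highs")
    phi = res.x[0]      # radial expansion factor, >= 1
    return 1.0 / phi    # reciprocal, so frontier hospitals score 1


# Made-up data: three hospitals, two quality indicators.
Y_toy = np.array([[0.90, 0.80],
                  [0.45, 0.40],
                  [0.60, 0.95]])
scores = [quality_index(Y_toy, o) for o in range(len(Y_toy))]
print(np.round(scores, 3))
```

In this toy data the first and third hospitals lie on the frontier, while the second produces exactly half of the first hospital's indicator levels and so receives a score of one half.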

Descriptive statistics of and correlations among the quality indices are reported in Tables 4 and 5, respectively. The descriptive statistics indicate wide dispersion in the quality of hospitals as well as variability in the indices themselves. As indicated in Table 5, all of the quality indices and their rankings are positively and statistically significantly correlated, though the correlations are sometimes low, especially across the process and outcome based indices. This suggests that while the indices are related, they likely provide different insights into the quality of hospital performance. It is worth noting that the mean and the minimum values of QI3 are substantially smaller than those of QI1 and QI2. While each quality index has a different number of outputs, the total dimensionality of the DEA models (number of inputs plus number of outputs) was essentially the same and small relative to the number of observations used in the estimation of the quality indices, suggesting that differences in the magnitude of the quality indices are not driven by the “curse of dimensionality.”

Paired t tests and sign-tests (Table 6) show that all of the quality indices are significantly different from each other; therefore, the relative distance to the frontier varies with how the quality index is specified. However, it is still possible that the same hospitals are farthest from the frontier in each of the models and that the same hospitals are on, or very close to, the frontier, in which case the efficiency rankings would be quite similar in all models. A Spearman rank correlation test rejects the hypothesis of unrelated rankings at the 0.01 level for each pair of models (Table 5).Footnote 18 However, the model used to generate the quality frontier will influence which hospitals are deemed to be most efficient. It is noteworthy that none of the hospitals in the study was efficient for all three of the quality index measures.
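The two kinds of comparison described above, a test of whether index levels differ and a test of whether rankings are related, can be sketched with SciPy as follows. The index values are invented for illustration and are not taken from Tables 4 to 6; the sign test is omitted for brevity.

```python
import numpy as np
from scipy.stats import spearmanr, ttest_rel

# Hypothetical index values for five hospitals (not the paper's data).
qi1 = np.array([0.90, 0.80, 0.70, 0.60, 0.95])
qi3 = np.array([0.50, 0.45, 0.30, 0.35, 0.60])

# Paired t test: do the two indices differ in level for the same hospitals?
t_stat, t_pvalue = ttest_rel(qi1, qi3)

# Spearman rank correlation: do the two indices order hospitals similarly?
rho, rho_pvalue = spearmanr(qi1, qi3)

print(f"paired t = {t_stat:.2f} (p = {t_pvalue:.4f})")
print(f"Spearman rho = {rho:.2f} (p = {rho_pvalue:.4f})")
```

With these made-up numbers the levels differ markedly while the rankings remain closely related, which is the pattern the text reports for the actual indices.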

5 Technical efficiency incorporating quality

Once we estimated the quality indices using DEA, we evaluated technical efficiency both with and without controlling for the quality of care for all of the hospitals in our sample using a radial, input-oriented, variable returns to scale BCC DEA model (Banker et al. 1984). While there is no consensus on which inputs and outputs to include in an efficiency analysis of hospitals (see Hollingsworth 2008), our specification is fairly typical. Inputs included hospital beds, full-time equivalent (FTE) registered nurses (RNs), FTE licensed practical nurses (LPNs), FTE other labor, and medical residents.Footnote 19 Measuring the output of hospitals is an unsettled question. The ultimate output of health care is its contribution to patients’ health. Given that this is inherently difficult to measure, and not fully in the control of health care providers, outputs here are measured in terms of the tasks and services provided to patients. In particular, hospital outputs are measured by inpatient days broken down by Medicare, Medicaid, and other payers, emergency room visits, other outpatient visits, outpatient surgeries, and inpatient surgeries. All of the input and output data come from the American Hospital Association’s (AHA) Annual Survey of Hospitals. Summary statistics of the inputs and outputs, as well as for the case mix index (CMI), which is used to adjust outputs for patient severity, are reported in Table 7.Footnote 20

The first model estimated (Model 1) uses the inputs and outputs noted above without adjusting for either case mix or quality; Model 1 thus serves as a baseline model. Model 2 does not control for quality, but like all remaining models, it does adjust for case mix. Models 3a–3d included quality as an additional output. The rationale for this is that hospitals are assumed to value a reputation for providing a high quality of care but are conscious of costs. Because the reporting of quality data, such as the CMS process measures, is essentially mandatory and the results are publicly available and widely promoted, hospitals have an incentive to maximize their compliance with the guidelines since customers can use the reported compliance rates to judge a hospital’s quality of care.Footnote 21 There is evidence that hospitals are aware of their relative quality rankings and take steps to intentionally improve their CMS compliance rates (e.g., Williams et al. 2005).Footnote 22 Model 3a includes QI1 (all quality indicators) as an additional output measuring quality; Model 3b includes QI2 (process indicators only) as the additional quality output; Model 3c includes QI3 (outcome indicators only) as the output measure of quality; and Model 3d includes both QI2 and QI3 as measures of quality. Models 3a–3d are simply Model 2 with an additional output (or 2 in the case of Model 3d) included to capture quality. These models are similar to the DEA model of Morey et al. (1992), which included the ratio of actual deaths to predicted deaths as an additional output to control for quality for a cross-section of 300 hospitals, except that our indices of quality are more comprehensive than the single measure of quality used by Morey et al. (1992).
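The idea of adding a quality index as an extra output can be illustrated with a minimal input-oriented BCC sketch. The function name `bcc_input_efficiency`, the single input and single output, and all data values are hypothetical. Case-mix adjustment and the bootstrap bias correction are left out, so this is not a reproduction of Models 2 or 3a–3d.

```python
import numpy as np
from scipy.optimize import linprog


def bcc_input_efficiency(X, Y, o):
    """Input-oriented VRS (BCC) efficiency of unit `o`, in (0, 1].

    X is an (n, m) array of inputs and Y an (n, s) array of outputs.
    """
    n, m = X.shape
    s = Y.shape[1]
    # Decision variables: [theta, lambda_1, ..., lambda_n]; minimize theta.
    c = np.zeros(n + 1)
    c[0] = 1.0
    # Input constraints: sum_j lambda_j * x_ij - theta * x_io <= 0
    A_in = np.hstack([-X[o].reshape(m, 1), X.T])
    # Output constraints: -sum_j lambda_j * y_rj <= -y_ro
    A_out = np.hstack([np.zeros((s, 1)), -Y.T])
    A_ub = np.vstack([A_in, A_out])
    b_ub = np.concatenate([np.zeros(m), -Y[o]])
    # VRS convexity constraint: sum_j lambda_j = 1
    A_eq = np.concatenate([[0.0], np.ones(n)]).reshape(1, n + 1)
    res = linprog(c, A_ub=A_ub, b_ub=b_ub, A_eq=A_eq, b_eq=[1.0],
                  bounds=[(0, None)] * (n + 1), method="highs")
    return res.x[0]


# Hypothetical data: one input (e.g. beds) and one output (e.g. inpatient days).
X = np.array([[2.0], [4.0], [8.0], [6.0]])
Y = np.array([[1.0], [3.0], [4.0], [2.0]])
quality = np.array([[0.5], [0.6], [0.7], [1.0]])  # made-up quality index

theta_without = bcc_input_efficiency(X, Y, 3)
theta_with = bcc_input_efficiency(X, np.hstack([Y, quality]), 3)
print(round(theta_without, 3), round(theta_with, 3))
```

In this toy data the fourth hospital looks only half as efficient as its peers when quality is ignored, but it sits on the frontier once its (highest) quality score is counted as an output. This is the mechanism by which unmeasured quality can register as inefficiency.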

While it is reasonable to imagine that hospitals must make some tradeoff between quantity and quality of care with constrained resources (especially those operating on the frontier), measuring quality as a separate output may not be appropriate. It may be more appropriate to think of hospitals as producing outputs that fall along a continuum of quality so that output and quality are not separable. To estimate efficiency when quantity and quality are observed jointly, inputs and outputs are scaled by the quality indices to obtain quality adjusted measures of inputs and outputs. The logic is similar to that behind the adjustment of outputs for case mix—to create a “level playing field” when assessing the relative efficiency of hospitals against their peers. As is the case for adjusting for case mix, adjusting for quality assumes that the quality of care in a hospital is consistent over all patient types and diagnoses even though we only observe a few specific diagnoses. However, the medical conditions used to generate the quality indices are all fairly common, but serious, conditions that typically require treatment as an inpatient.Footnote 23

Thus, for Models 4a-4b, the quality indices were also used to adjust inputs and outputs to produce “quality adjusted” values of the resources used and outputs produced by hospitals. Model 4a adjusts variable inputs (all inputs except beds) and outputs using QI1, the overall measure of quality. Model 4b adjusts variable inputs by the process quality index (QI2) and outputs by the outcomes quality index (QI3). Without the adjustments for quality, Models 4a-4b would reduce to Model 2.

Two final DEA models were estimated for the sake of comparison and completeness. Another perspective on how quality of care should be modeled, perhaps more consistent with process quality measures, is that it is the skill and effort of the decision-makers and care givers that drive the quality of care received. Employing care givers with higher levels of education, more training on quality control processes, or other such characteristics may allow hospitals to provide a higher quality of care independent of the number of employees, where the quality of care is a direct reflection of the quality of the care providers. To evaluate this potential explanation for quality of care, Model 5 is estimated where the labor inputs (RNs, LPNs, and Other) are scaled by QI2 to reflect the quality of labor, or the “effective FTEs.” Finally, Model 6 includes only an “output quality adjustment”: outputs are adjusted by QI3. Without these adjustments, Models 5 and 6 would be equivalent to Model 2.

For ease of interpretation, all bias corrected technical efficiency scores were converted to the (0,1) scale. Descriptive statistics of the efficiency scores from all of the DEA models appear in Table 8. The mean technical efficiency scores range from a high of 0.7941 for Model 1 to a low of 0.6547 for Model 4b. Recall that Model 1, which gives the highest average level of efficiency, does not adjust for either case mix or quality. Model 2, which adjusts for case mix, has a mean efficiency score of 0.7173. Thus, adjusting for case mix reduces mean efficiency by almost 10 % (Model 2 versus Model 1). This suggests that accounting for case mix is quite important. Recall that all remaining models adjusted for case mix while also accounting for quality.
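The size of the case-mix effect follows directly from the two reported means:

\[
\frac{0.7941 - 0.7173}{0.7941} = \frac{0.0768}{0.7941} \approx 0.097,
\]

that is, a drop of about 7.7 points on the efficiency scale, or roughly 9.7 % of the Model 1 mean.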

Adding a quality index as an additional output (Models 3a-3d) produced mean efficiency scores smaller than that of Model 1 but greater than that of Model 2. While the increase in scores relative to Model 2 could be the result of including an extra output variable in the analysis, it is also consistent with the argument that higher quality shows up as inefficiency when it is not controlled for in the analysis. Model 4a, which adjusts inputs and outputs by the overall quality index (QI1), produces a mean efficiency score of 0.7171, which is almost identical to Model 2’s mean of 0.7173. Adjusting inputs and outputs by their own quality indices (QI2 and QI3, respectively), as in Model 4b, generates a substantially lower mean efficiency score of 0.6547. This suggests that additional heterogeneity is introduced into the data by these approaches.

The mean efficiency under Model 5, 0.7178, is very similar to the mean efficiency under Model 2, despite the fact that Model 5 adjusts inputs for quality whereas Model 2 contains no adjustment for quality. This suggests that input, or “process,” quality may have little impact on efficiency. This is corroborated by the fact that both the Pearson and Spearman correlations between Model 2 and Model 5 are very close to 1 (0.996 and 0.959, respectively) as reported in Table 9.

Model 6, which adjusts for only output (outcome) quality, produced a mean efficiency score of 0.6552, which is almost identical to the mean of Model 4b which adjusted separately for both input and output quality. The standard deviations of these two models are also very similar—0.148 (Model 4b) and 0.149 (Model 6). This finding, coupled with the argument in the previous paragraph, suggests that accounting for output quality may be more important than adjusting for input quality. This is further corroborated by the very high Pearson (0.996) and Spearman (0.992) correlations between Model 4b and Model 6 (see Table 9).

Table 9 reports the Pearson and Spearman correlations for all pairs of estimated models. All correlations are positive and statistically significant at better than the 0.01 % level. Each of the quality adjusted models is much more highly correlated with Model 2, which adjusts for case mix but not quality, than with Model 1, which adjusts for neither case mix nor quality. This suggests the need to adjust for both case mix and quality.

Efficiency studies often examine the relationship between efficiency scores and various potential correlates of efficiency. Rather than performing a second stage regression on the DEA scores, we examine the differences in mean values of one of the quality indices, QI1, and the DEA model scores, for two frequently studied hospital characteristics: teaching status and ownership status. As reported in Table 10, the majority of hospitals are non-teaching hospitals, and the vast majority of hospitals are privately owned, not-for-profit hospitals. Keeler et al. (1992) compared the quality of care at different types of hospitals using explicit criteria, implicit reviews, and sickness-adjusted outcomes; they found that teaching hospitals offered a higher quality of care than did non-teaching hospitals. Focusing on AMI, Allison et al. (2000) also found that teaching hospitals were of higher quality than non-teaching hospitals based on both process (input) measures and outcomes (output) measures of quality. Our results confirm these previous findings: while the difference was small, we found that the mean quality indices for teaching hospitals were statistically significantly higher than those for non-teaching hospitals; the standard deviations of the quality index were also smaller for teaching hospitals than for non-teaching hospitals (see Table 11, Panel a). A small but statistically significant difference was also found in the quality indices by ownership form: private for profit hospitals had the highest mean quality indices, and the smallest standard deviations, followed by private, for-profit hospitals and public hospitals (see Table 11, Panel b).

The higher quality of care at teaching hospitals comes at the cost of efficiency. As shown in Table 12, teaching hospitals were found to be statistically significantly less efficient than non-teaching hospitals, even after controlling for quality.Footnote 24 In all but two cases, technical efficiency was not found to differ statistically significantly across ownership forms at the 5 % level or better (see Table 13). The two exceptions, Model 4b and Model 6, are the DEA models for which output quality adjustment appears to play a prominent role (see above). In these two cases, public hospitals were, on average, the most efficient, followed by private, not-for-profit hospitals and private, for-profit hospitals.

Finally, we compare the relative efficiencies of the “production” of hospital quality and the production of hospital outputs. Just as productivity can be measured by the ratio of an index of outputs to an index of inputs, we examine the ratio of an index of quality to an “index” of efficiency. To do so, we use the ratio of the first quality index, QI1, which is based on Lovell’s (1995) concept of a helmsman as the single input into the process of producing our twelve indicators of quality, and DEA Model 2, which adjusts for case mix, but not for quality: \( \text{QI1} / \left( 1 / \text{DEA}^{\text{Model 2}} \right). \) (The reciprocals of the technical efficiency scores from Model 2 were used so that both the numerator and the denominator have the same (0,1] range.) This ratio indicates how efficiently a hospital produces quality relative to how efficiently it produces output; this ratio may indicate the importance a hospital attaches to quality vis-à-vis cost control.
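As a purely hypothetical illustration (the numbers below are invented, and the Model 2 score is assumed to be reported on a scale of 1 or more so that its reciprocal lies in (0,1]), a hospital with QI1 = 0.90 and a Model 2 score of 1.50 would have

\[
\frac{\text{QI1}}{1/\text{DEA}^{\text{Model 2}}} = \frac{0.90}{1/1.50} = \frac{0.90}{0.667} \approx 1.35 .
\]

A value above 1 means the hospital sits closer to the quality frontier than to the efficiency frontier; a value below 1 means the reverse.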

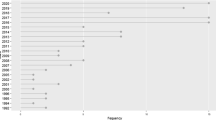

The mean of this ratio for all hospitals was 1.4028 (see Table 14), suggesting that hospitals are relatively more efficient at producing quality than at controlling costs. It should be noted that the histogram plotted in Fig. 1, which approximately follows a lognormal distribution, shows that the mode is not far from 1. Comparing teaching and non-teaching hospitals, teaching hospitals (1.4694) are relatively stronger in quality relative to cost control compared to non-teaching hospitals (1.3625). The Kruskal–Wallis test of the difference of means for teaching versus non-teaching hospitals has a test statistic of 47.5916, with a p value less than 0.0001, suggesting that the difference is statistically significant. This finding is consistent with theory and earlier empirical studies that suggest that teaching hospitals have higher costs, ceteris paribus, than non-teaching hospitals and place a greater emphasis on quality.Footnote 25

Comparing private for-profit and private not-for-profit hospitals (see Table 14), the results suggest that the ratios of their quality and efficiency indices are quite similar (1.3957 versus 1.4185, respectively) and that, for both, quality achievement exceeds efforts at cost control. The similarity of these ratios is somewhat surprising: theory and previous empirical evidence suggest that private for-profit hospitals might be more concerned with cost control while private not-for-profit hospitals might be more concerned with quality provision. The mean value of this ratio does not differ statistically significantly across the two forms of private ownership (Kruskal–Wallis \( \chi_{(1)}^{2} \) = 0.3921 with a p value of 0.5312). The mean ratios for both private forms of ownership are higher than the mean ratio for public hospitals (1.3545), suggesting that while public hospitals also perform better at quality control than at cost control, the emphasis on quality is less strong. The Kruskal–Wallis test that the means of all three ratios are the same has a test statistic of 8.0803, with a p value of 0.0176, suggesting that the difference in means is statistically significant at better than the 2 % level of significance.Footnote 26

6 Conclusion

This study does not produce a definitive answer to how quality should be incorporated into efficiency studies; however, some insight into the issue has been gained. First, none of the findings of this study contradict previous research indicating that there is a significant variation in the quality of care provided by different hospitals nationwide and that the measurement or definition of quality influences which hospitals appear to provide the highest quality of care. Second, the results of this study are also consistent with previous research in observing considerable inefficiency in many hospitals. Third, adjusting for quality clearly changes the efficiency scores, and the magnitude of the change depends on which quality adjustment method is used. Adding quality as an additional output accounts for some of the previously observed inefficiency and improves the efficiency of teaching hospitals more than non-teaching hospitals. Fourth, it is not obvious which method is the “right” way to control for quality, but pay-for-performance plans will have to define and measure quality in order to reward it. Clearly, the definition of quality may influence which hospitals see the largest rewards under pay-for-performance programs for quality and likely will affect how hospitals respond to new incentives. Finally, adjusting for outcome quality appears to matter more than adjusting for process quality, so efficiency analyses may need to pay closer attention to differences in outcome measures of quality than to differences in process measures.

Just as earlier studies found that specification of inputs and outputs (e.g., Magnussen 1996; Halsteinli et al. 2010) affects efficiency measurement, controlling for the quality of care also affects the observed efficiency. Efficiency measures that do not control for quality of care therefore should not be used to infer potential cost savings, since relying on them could encourage a lower quality of care. However, the stability of the efficiency rankings, regardless of the method used to control for quality, indicates that efficiency scores have some valid applications. Since generally the same hospitals are identified as relatively efficient or relatively inefficient regardless of the quality adjustment used, efficiency scores are probably an appropriate tool to identify hospitals that have considerable room for improvement and hospitals that could serve as benchmarks for achieving that improvement. Additionally, when examining the correlates of quality adjusted technical efficiency, the same hospital characteristics were found to be significant and to the same degree as in the unadjusted model.

While informative, this research is not exhaustive and there are several directions future research could pursue. As consumer driven care expands, the importance of simple but informative quality measures will only intensify. The implications of using different quality adjustments for outputs and inputs and the distribution of quality adjusted efficiency scores should be examined. Furthermore, since the outcome quality measures in this study ultimately come from outcomes of Medicare patients, it remains to be seen how outcome quality for non-elderly patient populations compares. As more states require reporting for procedures common to other populations, such as childbirth and appendectomy, quality outcomes for different patient groups can be evaluated and compared. Additionally, only four diagnoses were considered to generate the quality indices, but the growing body of quality performance measures will allow a wider scope of health conditions to be used in measuring overall quality.

Nurses and physicians are arguably the most important decision makers in the provision of hospital care. Physicians, however, are rarely hospital employees. Currently there is little quality information available on specific physicians; although, a recent pact between Congress and the AMA promises to generate data on physician quality (Pear 2006).Footnote 27 As more information on physician quality becomes available, the quality of admitting physicians should heavily influence the quality of care measurements of hospitals and make efficiency scores more informative. Another interesting extension would be to compare the efficient peers for inefficient hospitals under the different models to see how often the different quality adjustment models produce the same hospitals as benchmarks for inefficient hospitals.

The high rate of compliance with voluntary quality data disclosure when it is tied to Medicare reimbursement rates and the convergence to target CMS compliance rates observed over the past few years for selected diagnoses indicate that hospitals do respond to incentives. The widening outcome quality gap occurring simultaneously (HealthGrades 2006) reinforces the importance of making sure the incentive plan rewards the desired actions as pay-for-performance plansFootnote 28 are implemented in the reimbursement of hospital services. With proper incentives in place, quality of hospital care can improve. This paper provides a method for aggregating quality measures into a quality index and explores how these indices can be incorporated into a DEA efficiency analysis.

Notes

There is some empirical evidence to support this; for example, Maniadakis et al. (1999) found that following the reform of the National Health Service, the productivity and quality of a sample of Scottish hospitals followed opposite trends. Others, however (e.g., Clement et al. 2008), have found that technical efficiency and quality are positively related.

Historically, CMS hospital payments reflected processes; under the P4P program outcomes also affect payments.

Given appropriate data, measures of access as well as quality could be included in the efficiency estimates. At this time we are not aware of any reliable measures of access to care or a way to observe non-patients who do not receive care for lack of access. Improvements in efficiency while controlling for quality should reduce costs and improve access to care.

Medicaid is a means-tested health care program for individuals and families. It is jointly funded by the U.S. federal and state governments and is administered by the states. It was created in 1965.

A similar system was implemented for outpatient services in 2000.

The payment amounts are adjusted for regional input price differences and complex cases that require extreme amounts of resources to treat.

Alternatively, providers could choose the treatment option with highest reimbursement relative to costs incurred when an illness has multiple treatment options (i.e., “upcoding”).

The outcome data were graciously provided by HealthGrades, Inc. for this research; the data cover the twelve-month period June 2004–May 2005.

http://www.hospitalcompare.hhs.gov/hospital-search.aspx?AspxAutoDetectCookieSupport=1; 2005 measures were used.

The Hospital Quality Alliance, a public–private group that collects and shares information on hospital quality, includes information on these three conditions as their “starter kit” for measuring hospital quality. CMS’s Hospital Quality Initiative initially focused on these three conditions as well.

At the time HealthGrades, Inc. provided the data for this study, predicted and observed mortality and complication rates were available to consumers on their website. Since that time they have converted to a star system to report the relative quality of outcomes for hospital patients and no longer show actual mortality or complication rates. For more information see www.healthgrades.com.

It is also possible that higher quality care could result in greater efficiency and lower costs. For example, reducing adverse drug events would increase the quality of care and could save hospitals millions of dollars (Agency for Healthcare Research and Quality [AHRQ] 2001).

Factor analysis was run on the full sample and again on each half when the sample was randomly divided. Results of the factor analysis are available from the authors on request.

As noted above, changing or restricting weights would change DEA scores. One reason for restricting weights would be to incorporate value judgments into the analysis (Allen et al. 1997).

The correlations between the “raw” DEA scores and the bias corrected scores were very high.

Though not reported, Pearson and Spearman correlations were calculated separately for each quartile of the data; in all cases, the correlations remained statistically significant.

All three labor categories (excluding residents) were proportionally adjusted for hospitals that operate a long-term care or skilled nursing facility in conjunction with the hospital. AHA data report skilled nursing beds separately, but labor is aggregated. The analysis was run using adjusted and unadjusted labor values with results being qualitatively equivalent. The results reported here use adjusted labor inputs.

The CMI reflects the severity of patients seen by a hospital throughout the year relative to the “average” patient for all hospitals. Adjusting for case mix creates a “level playing field” when evaluating hospital performance. For more information, see Grosskopf and Valdmanis (1993).

“Compliance competition” can create undesirable consequences. For example, Metersky et al. (2006) documented that some patients receive antibiotics before a conclusive diagnosis is made to ensure the hospital complies with care guidelines that all patients ultimately diagnosed with pneumonia receive antibiotics within 4 h of arrival. This maximizes compliance rates, but is a concern of hospitals with public disclosure of compliance rates.

The Medicare claims data researchers use to calculate mortality and complication rates are also publicly available; however, relatively few potential hospital patients are likely to analyze the claims data to determine quality.

If the quality of care for fairly serious conditions is not closely correlated with the quality of more minor procedures, which would be consistent with the findings of DesHarnais et al. (1991), then the quality indices used here would be more appropriate for inpatient services and less appropriate for outpatient services.

The relative inefficiency of teaching hospitals may arise because the “teaching” output of these hospitals is not included in the analysis.

For teaching status, similar results, both qualitatively and in terms of statistical significance, were obtained when the ratio was based on QI2 or QI3 rather than QI1. Quantitatively, the results were very similar when QI2 was used to form the ratio rather than QI1. When QI3 was used to form the ratio, the means for both teaching and non-teaching hospitals were smaller, but still above 1.

For ownership status, similar results, both qualitatively and in terms of statistical significance, were obtained when the ratio was based on QI2 rather than QI1. Quantitatively, the results were very similar when QI2 was used to form the ratio rather than QI1. When QI3 was used to form the ratio, the means were smaller for all three ownership forms but still above 1. In the case of QI3, the ratio was statistically significantly higher for the two not-for-profit ownership forms (private and public) than for the (private) for-profit hospitals.

Physician quality is likely related to how physicians are compensated; i.e., physicians respond to incentives. For a thorough discussion of payment incentives offered to physicians, see Robinson (2001).

For example, the Medicare Prescription Drug, Improvement, and Modernization Act of 2003 includes a provision that hospitals failing to provide data for ten specific quality indicators will receive a 0.4 percentage point smaller annual payment increase compared to hospitals that do submit the data. Increasingly, third party payers are including financial incentives to encourage higher quality. For example, to learn about Blue Cross Blue Shield’s hospital pay-for-performance plans see http://www.bcbsm.com/provider/value_partnerships/hpp/.

References

Agency for Healthcare Research and Quality (2001) Reducing and preventing adverse drug events to decrease hospital costs. Res Action (1) (AHRQ Publication Number 01-0020). http://www.ahrq.gov/qual/aderia/aderia.htm

Allen R, Athanassopoulos AD, Dyson RG, Thanassoulis E (1997) Weights restrictions and value judgements in DEA: evolution, development and future directions. Ann Oper Res 73:13–34

Allison JJ, Kiefe CI, Weissman NW, Person SD, Rousculp M, Canto JG, Bae S, Williams OD, Farmer R, Centor RM (2000) Relationship of hospital teaching status with quality of care and mortality for Medicare patients with acute MI. J Am Med Assoc 284:1256–1262

Banker RD, Charnes A, Cooper WW (1984) Models for estimating technical and scale inefficiencies in data envelopment analysis. Manage Sci 30:1078–1092

Carey K (2003) Hospital cost efficiency and system membership. Inquiry 40:25–38

Carey K, Burgess JF Jr (1999) On measuring the hospital cost-quality trade-off. Health Econ 8:509–520

Cherchye L (2001) Using data envelopment analysis to assess macroeconomic policy performance. Appl Econ 33:407–416

Chirikos TN, Sear AM (2000) Measuring hospital efficiency: a comparison of two approaches. Health Serv Res 34:1389–1408

Clement JP, Valdmanis VG, Bazzoli GJ, Zhao M, Chukmaitov A (2008) Is more better? An analysis of hospital outcomes and efficiency with a DEA model of output congestion. Health Care Manag Sci 11:67–77

Deily ME, McKay NL (2006) Cost inefficiency and mortality rates in Florida hospitals. Health Econ 15:419–431

DesHarnais S, McMahon LF Jr, Wroblewski R (1991) Measuring outcomes of hospital care using multiple risk-adjusted indexes. Health Serv Res 26:425–445

Donabedian A (1978) The quality of medical care: methods for assessing and monitoring the quality of care for research and for quality assurance programs. Science 200:856–864

Doyle JR, Green RH (1991) Comparing products using data envelopment analysis. Omega 19:631–638

Dyson RG, Thanassoulis E (1988) Reducing weight flexibility in data envelopment analysis. J Operat Res Soc 39:563–576

Farsi M (2004) Changes in hospital quality after conversion in ownership status. Int J Health Care Finance Econ 4:211–230

Ferrier GD, Valdmanis V (1996) Rural hospital performance and its correlates. J Prod Anal 7:63–80

Fuchs VR (1999) Health care for the elderly: how much? Who will pay for it? Health Aff 18:11–21

González E, Cárcaba A, Ventura J, García J (2011) Measuring quality of life in Spanish municipalities. Local Gov Stud 37:171–197

Grosskopf S, Valdmanis V (1993) Evaluating hospital performance with case-mix-adjusted outputs. Med Care 31:525–532

Halsteinli V, Kittelsen SA, Magnussen J (2010) Productivity growth in outpatient child and adolescent mental health services: the impact of case-mix adjustment. Soc Sci Med 70:439–446

Hartz AJ, Gottlieb MS, Kuhn EM, Rimm AA (1993) The relationship between adjusted hospital mortality and the results of peer review. Health Serv Res 27:765–777

Hashimoto A, Ishikawa H (1993) Using DEA to evaluate the state of society as measured by multiple social indicators. Socio-Econ Plann Sci 27:257–268

HealthGrades (2006) The ninth annual HealthGrades hospital quality in America study. www.healthgrades.com

Hines S (2003) Using publicly reported data to choose a hospital: do different websites and different measures give consistent answers? Delmarva Insights, Delmarva Foundation

Hollingsworth B (2003) Non-parametric and parametric applications measuring efficiency in health care. Health Care Manag Sci 6:203–218

Hollingsworth B (2008) The measurement of efficiency and productivity of health care delivery. Health Econ 17:1107–1128

Hollingsworth B, Smith P (2003) Use of ratios in data envelopment analysis. Appl Econ Lett 10:733–735

Hollingsworth B, Dawson PJ, Maniadakis N (1999) Efficiency measurement of health care: a review of non-parametric methods and applications. Health Care Manag Sci 2:161–172

Jacobs R, Goddard M, Smith P (2005) How robust are hospital ranks based on composite performance measures? Med Care 43:1177–1184

Jha AK, Li Z, Orav EJ, Epstein AM (2005) Care in U.S. hospitals—the hospital quality alliance program. N Engl J Med 353:265–274

Keeler EB, Kahn KL, Draper D, Sherwood MJ, Rubenstein LV, Reinisch EJ, Kosecoff J, Brook RH (1990) Changes in sickness at admission following the introduction of the prospective payment system. J Am Med Assoc 264:1962–1968

Keeler EB, Rubenstein LV, Kahn KL, Draper D, Harrison ER, McGinty MJ, Rogers WH, Brook RH (1992) Hospital characteristics and quality of care. J Am Med Assoc 268:1709–1714

Kosecoff J, Kahn KL, Rogers WH, Reinisch EJ, Sherwood MJ, Rubenstein LV, Draper D, Roth CP, Chew C, Brook RH (1990) Prospective payment system and impairment at discharge: the ‘quicker-and-sicker’ story revisited. J Am Med Assoc 264:1980–1983

Lindenauer PK, Remus D, Roman S, Rothberg MB, Benjamin EM, Ma A, Bratzler DW (2007) Public reporting and pay for performance in hospital quality improvement. N Engl J Med 356:486–496

Lovell CAK (1995) Measuring the macroeconomic performance of the Taiwanese economy. Int J Prod Econ 39:165–178

Lovell CAK, Pastor JT, Turner JA (1995) Measuring macroeconomic performance in the OECD: a comparison of European and non-European countries. Eur J Oper Res 87:507–518

Magnussen J (1996) Efficiency measurement and the operationalization of hospital production. Health Serv Res 31:21–37

Maniadakis N, Hollingsworth B, Thanassoulis E (1999) The impact of the internal market on hospital efficiency, productivity and service quality. Health Care Manag Sci 2:75–85

Martin AB, Lassman D, Washington B, Catlin A, the National Health Expenditure Accounts Team (2012) Growth in US health spending remained slow in 2010; health share of gross domestic product was unchanged from 2009. Health Aff 31:208–219

MedPAC (2010) A data book: health care spending and the Medicare program. http://www.medpac.gov/documents/Jun10DataBookEntireReport.pdf

Metersky ML, Sweeney TA, Getzow MB, Siddiqui F, Nsa W, Bratzler DW (2006) Antibiotic timing and diagnostic uncertainty in Medicare patients with pneumonia: is it reasonable to expect all patients to receive antibiotics within 4 hours? Chest 130:16–21

Morey RC, Fine DJ, Loree SW, Retzlaff-Roberts DL, Tsubakitani S (1992) The trade-off between hospital cost and quality of care: an exploratory empirical analysis. Med Care 30:677–698

Mutter RL, Rosko MD, Wong HS (2008) Measuring hospital inefficiency: the effects of controlling for quality and patient burden of illness. Health Serv Res 43:1992–2013

Mutter R, Valdmanis V, Rosko M (2010) High versus lower quality hospitals: a comparison of environmental characteristics and technical efficiency. Health Serv Outcomes Res Method 10:134–153

Nayar P, Ozcan Y (2008) Data envelopment analysis comparison of hospital efficiency and quality. J Med Syst 32:193–199

Oh I, Lee J-D, Hwang S, Heshmati A (2010) Analysis of product efficiency in the Korean automobile market from a consumer’s perspective. Empir Econ 38:119–137