Abstract

Using a sample of Virginia hospitals, performance measures of quality were examined as they related to technical efficiency. Efficiency scores for the study hospitals were computed using Data Envelopment Analysis (DEA). The study found that the technically efficient hospitals also performed well on the quality measures. Some of the technically inefficient hospitals also performed well with respect to quality. DEA can be used to benchmark both dimensions of hospital performance: technical efficiency and quality. The results have policy implications in view of growing concern that hospitals may be improving their efficiency at the expense of quality.


In an era of cost containment and resource constraints, the US hospital industry faces incentives to improve technical efficiency. Improvements in efficiency lead to better resource utilization and, it is hoped, to improved organizational performance. Hospital administrators are thus concerned with assessing their organizations’ technical efficiency. With an increasing emphasis on quality by third-party payers such as Medicare, hospitals are now also focused on improving quality. While hospitals stress efficiency, are quality outcomes being compromised? Is there an “inevitable” efficiency–quality trade-off? The answers to these questions are far from resolved.

Data Envelopment Analysis (DEA) is a useful technique to assess organizational performance in terms of technical and allocative efficiency. However, little work has addressed quality issues or included quality measures in DEA analyses. The purpose of this study is to analyze the efficiency of acute care hospitals using measures of quality in the DEA models and to compare the results with the standard technical efficiency DEA model that uses technical inputs and outputs. The research questions that this study attempts to address are: (1) How does the inclusion of quality measures as outputs in the DEA models change the results of the analysis of efficiency? and (2) How are hospitals that are technically efficient performing with respect to quality?

Background and literature review

DEA is a quantitative technique developed by Charnes, Cooper and Rhodes [1] that computes efficiency scores for decision making units (DMUs) relative to their peer units. DEA has been used by researchers to evaluate the efficiency of various organizational forms in the health care industry including hospitals [2–6]; physicians [7–9] and health maintenance organizations [10, 11]. DEA was also found to be a useful technique to analyze the efficiency of renal dialysis units [12, 13]. However, all these previous studies have used quantitative outcomes as outputs in the DEA models. There have been few attempts to include quality measures in the outputs of the DEA models. One of the possible reasons could be the paucity of validated measures of quality and the lack of a composite measure for evaluating overall quality [14]. Also, if mortality rates are used as outcome measures of quality there is a concern that hospitals that treat the sickest patients may be unfairly labeled as inefficient as compared to their peers. However, with Medicare’s Quality Initiative that seeks to reward hospitals for improved quality outcomes, it would be interesting to examine how hospitals are faring on quality; and whether by focusing on technical efficiency hospitals are compromising their quality.

The classical notion has been that when resources are constrained there is an inevitable quantity–quality trade-off [15]. Laine et al. [16] used stochastic frontier analysis to examine the association between productive efficiency and clinical quality in institutional long-term care for elderly patients and found no systematic association between technical efficiency and clinical quality of care. However, some long-term care units were found to attain high scores on some dimensions of quality (depression medication and treatment) while also having high levels of technical efficiency. Laine et al. [17] used DEA models to calculate technical efficiency and explore its association with quality, and found a significant association between technical efficiency and what the authors termed “unwanted dimensions of quality”.

Sherman and Zhu [18] used quality-adjusted DEA models to evaluate the effectiveness of bank branches. They incorporated quality into DEA in two different models. The first model added a quality variable as an additional output into the standard DEA model. They found that, using this approach, there was a quality–efficiency trade-off. The second approach was to evaluate quality and efficiency independently. However, no systematic study incorporating quality measures as outputs in the efficiency models was found in the DEA literature in the health care sector.

The specific focus of this study is to compare two dimensions of hospital performance, technical efficiency and quality, using DEA. Although quality measures have not been directly used as outputs in DEA models of hospital efficiency, evidence from other industries, specifically banking, has shown that quality measures can be used as outputs in DEA, and with this approach an efficiency–quality trade-off has been found. This study follows Sherman and Zhu [18] in using quality measures as additional outputs in the DEA models, in order to determine whether there is an efficiency–quality trade-off among hospitals. The results of this model are then compared with those of the standard technical efficiency DEA model, which uses only technical inputs and outputs, to examine whether the quality–efficiency trade-off found in Sherman and Zhu’s study of banks also appears among hospitals.

Medicare’s health care quality improvement program

In 1992, the Health Care Financing Administration (HCFA), since renamed the Centers for Medicare and Medicaid Services (CMS), initiated the Health Care Quality Improvement Program. The objectives of the program were to develop quality indicators, to identify opportunities to improve care, to foster quality improvement, and to re-measure in order to evaluate success and redirect efforts. HCFA’s national quality improvement activities focus on six clinical priority areas: acute myocardial infarction, breast cancer, diabetes, heart failure, pneumonia and stroke. These priorities were chosen because of their public health importance; all of these conditions are important causes of mortality and morbidity in the US.

In November 2001, Health and Human Services Secretary Tommy G. Thompson announced the Quality Initiative, a commitment to assure quality health care for all Americans through published consumer information coupled with health care quality improvement support through Medicare’s Quality Improvement Organizations. Hospitals participating in the Quality Initiative voluntarily report on a set of ten clinical quality measures. The enactment of Section 501(b) of the Medicare Prescription Drug, Improvement, and Modernization Act of 2003 has given hospitals an incentive to submit data for the ten quality measures, because the law stipulates that a hospital that does not submit performance data for the quality measures will receive an annual payment update 0.4 percentage points lower than that of a hospital that submits data. CMS will also reward top-performing hospitals by increasing their payment for Medicare patients. The Hospital Quality Alliance (HQA) is a public–private collaboration led by the American Hospital Association (AHA), the Federation of American Hospitals, and the Association of American Medical Colleges that collects and reports hospital quality performance information and makes it available to consumers through CMS information channels. The HQA starter set of quality measures relates to three serious medical conditions: acute myocardial infarction (AMI) or heart attack, heart failure and pneumonia. Data on the quality performance of participating hospitals are posted at www.cms.hhs.gov and are publicly available (http://www.hospitalcompare.hhs.gov/Hospital/Static/Data-Professionals.asp?dest=NAV).

For the purpose of this study, the quality measures for pneumonia were chosen as outputs in the analysis of hospital efficiency. The HQA starter set also includes measures for AMI and heart failure, but a preliminary examination of the data reported on the CMS website found too much missing data for these conditions to allow a meaningful analysis; therefore, the measures for pneumonia were chosen. The measures for pneumonia include: oxygenation assessment, initial antibiotic consistent with current recommendations, blood culture collected prior to first antibiotic administration, influenza screening/vaccination, pneumococcal screening/vaccination, initial antibiotic timing and smoking cessation advice/counseling.

Materials and methods

Study design

The study design was cross-sectional and the sample included all non-federal acute care hospitals in the state of Virginia in 2003. The unit of analysis was the non-federal acute care hospital. Data for this study were extracted from secondary databases including the AHA survey database for 2003; the CMS database for FY2003; the Virginia Health Information database and the CMS website www.cms.hhs.gov.

Sample

There were a total of 117 non-federal acute care hospitals in Virginia with non-missing data in the AHA survey database in 2003. Of these, 40 were rural in location and 77 were urban in location. Only 78 of the 117 hospitals reported data on quality measures to the CMS. Of these 78 hospitals only 53 hospitals had non-missing data for all of the input and output measures used in the DEA models. Of the hospitals reporting quality measures for pneumonia, only three of the measures (initial antibiotic timing, oxygenation assessment and pneumococcal vaccination) had non-missing data and hence, these three measures were used as outputs in the DEA models.

Evaluation technique

Data Envelopment Analysis is an application of linear programming that constructs an efficiency frontier from the DMUs with the best observed performance. These best-performing DMUs are considered technically efficient; they lie on the efficiency frontier and have an efficiency score of 1. DMUs with efficiency scores less than 1 are considered inefficient. DEA can also identify slacks, that is, output shortfalls or excess inputs, for inefficient hospitals. To become efficient, an inefficient hospital would have to increase its outputs by the output slacks or reduce its inputs by the input slacks. Thus, DEA is a very useful technique for hospital administrators seeking to identify opportunities for performance improvement.
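As a minimal sketch of how such a score can be computed, the code below solves the input-oriented constant returns to scale (CCR) envelopment program, the variant specified for this study's models, for one DMU using SciPy's `linprog`. The helper name `ccr_input_efficiency` and the toy data are illustrative assumptions, not the study's software or data, and the second-stage program that would identify slacks is not shown.

```python
import numpy as np
from scipy.optimize import linprog


def ccr_input_efficiency(X, Y, k):
    """Input-oriented CRS (CCR) efficiency of DMU k (hypothetical helper).

    X: inputs, shape (m, n); Y: outputs, shape (s, n); one column per DMU.
    Solves  min theta  s.t.  X @ lam <= theta * X[:, k],
                             Y @ lam >= Y[:, k],  lam >= 0.
    """
    X = np.asarray(X, dtype=float)
    Y = np.asarray(Y, dtype=float)
    m, n = X.shape
    s = Y.shape[0]
    # Decision vector z = [theta, lam_1, ..., lam_n]; the objective is theta.
    c = np.zeros(n + 1)
    c[0] = 1.0
    # Input rows:  sum_j lam_j * x_ij - theta * x_ik <= 0
    A_in = np.hstack([-X[:, [k]], X])
    # Output rows: -sum_j lam_j * y_rj <= -y_rk
    A_out = np.hstack([np.zeros((s, 1)), -Y])
    A_ub = np.vstack([A_in, A_out])
    b_ub = np.concatenate([np.zeros(m), -Y[:, k]])
    bounds = [(0, None)] * (n + 1)  # theta >= 0 and every lam_j >= 0
    res = linprog(c, A_ub=A_ub, b_ub=b_ub, bounds=bounds, method="highs")
    if not res.success:
        raise RuntimeError(res.message)
    return res.x[0]


# Toy data: one input and one output for three DMUs.
X = [[2.0, 4.0, 4.0]]
Y = [[2.0, 2.0, 4.0]]
scores = [ccr_input_efficiency(X, Y, k) for k in range(3)]
print([round(v, 3) for v in scores])  # [1.0, 0.5, 1.0]
```

In the toy data the second DMU uses twice the input per unit of output of its peers, so it could, in principle, cut its input in half and still match its output, which is what a score of 0.5 expresses. For the study's models, X would hold the four inputs and Y the technical (and, for Model 2, quality) outputs, one column per hospital.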

Measures

Output measures

In this study, hospitals were assumed to produce three main types of outputs: (1) adjusted discharges, i.e., total hospital inpatient discharges for 2003, adjusted using the Medicare case mix index for each hospital for that year; (2) total outpatient visits, i.e., all visits to hospital emergency and outpatient facilities during 2003; and (3) training full-time equivalents (FTEs), including medical and dental trainee FTEs and other professional FTEs trained during 2003. These are consistent with the output measures used in the DEA literature [2, 3].
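The case-mix adjustment of discharges can be illustrated as a simple scaling. This sketch assumes that “adjusted using the Medicare case mix index” means multiplying raw discharges by the hospital’s case mix index (CMI); the function name and the figures are hypothetical.

```python
def case_mix_adjusted_discharges(discharges, case_mix_index):
    """Assumed adjustment: raw inpatient discharges scaled by the Medicare CMI."""
    if case_mix_index <= 0:
        raise ValueError("case mix index must be positive")
    return discharges * case_mix_index


# Two hypothetical hospitals with equal raw volume but different case mix.
print(case_mix_adjusted_discharges(10_000, 1.25))  # 12500.0
print(case_mix_adjusted_discharges(10_000, 0.90))  # 9000.0
```

Under this assumption, a hospital treating a more complex mix of patients is credited with more output per discharge, so volume is not compared across hospitals without regard to patient acuity.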

Input measures

The input measures used in this study were: (1) hospital size, i.e., the total number of hospital beds set up and staffed in 2003; (2) supply, i.e., operational expenses excluding payroll, capital and depreciation expenses; (3) total full-time and part-time staff; and (4) total assets. Measures of total assets and staffing had a lot of missing data, so technical efficiency and quality were evaluated on a reduced sample of 53 hospitals with non-missing data on staffing and total assets. These input measures are consistent with the DEA literature.

Quality measures

The quality measures used as outputs in the DEA models were: (1) percent of patients given initial antibiotic timing, i.e., pneumonia inpatients who received their first dose of antibiotic within 4 h of arrival to the hospital (qual1); (2) percent of patients given oxygenation assessment, i.e., pneumonia patients who had an assessment of arterial oxygenation by arterial blood gas measurement or pulse oximetry within 24 h prior to or after arrival at the hospital (qual2) and (3) percent of patients given pneumococcal vaccination: pneumonia patients age 65 and older who were screened for pneumococcal vaccine status and were administered the vaccine prior to discharge, if indicated (qual3).
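Each quality output is a percentage of eligible patients. As a sketch for qual1 only, the code below assumes a hypothetical record layout of (arrival time, first antibiotic time) pairs, counts a dose given exactly 4 h after arrival as timely, and ignores the exclusion rules that the official CMS specification applies; none of these details come from the study.

```python
from datetime import datetime, timedelta


def pct_antibiotic_within_4h(records):
    """Percent of eligible patients whose first antibiotic dose came within
    4 h of arrival. records: (arrival, first_dose) pairs; first_dose is None
    when no dose was recorded. CMS exclusion criteria are not modeled."""
    if not records:
        raise ValueError("no eligible patients")
    window = timedelta(hours=4)
    met = sum(
        1
        for arrival, first_dose in records
        if first_dose is not None and timedelta(0) <= first_dose - arrival <= window
    )
    return 100.0 * met / len(records)


t0 = datetime(2003, 1, 15, 8, 0)
records = [
    (t0, t0 + timedelta(hours=2)),  # within the window
    (t0, t0 + timedelta(hours=5)),  # too late
    (t0, None),                     # no dose recorded
    (t0, t0 + timedelta(hours=4)),  # exactly 4 h, assumed to count as timely
]
print(pct_antibiotic_within_4h(records))  # 50.0
```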

Data analysis strategy

Analysis of technical efficiency and quality

Technical efficiency and quality of the study hospitals were examined using input-oriented constant returns to scale (CRS) models. The following DEA models were examined:

1) Model 1: a three-output / four-input CRS model of technical efficiency (n = 53). The technical inputs used in this model were bed size, non-payroll, non-capital (supply) expenses, total assets and staffing FTEs. The technical outputs used were total adjusted discharges, total outpatient visits and training FTEs.

2) Model 2: a three-technical-output + three-quality-output / four-input model of technical efficiency and quality (n = 53). The quality outputs used in the model were: percent of patients given initial antibiotic timing, percent of patients given oxygenation assessment and percent of patients given pneumococcal vaccination. The technical inputs and outputs used were the same as in model 1.
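Because Model 2 evaluates each hospital against the same inputs and technical outputs as Model 1 and only adds quality outputs, the two scores are linked. The derivation below uses notation introduced here for illustration: X holds the four inputs, Y the three technical outputs and Q the three quality outputs, one column per hospital, and x_0, y_0, q_0 are the columns of the hospital being evaluated. In the input-oriented CRS envelopment form, the quality rows are extra constraints, so the Model 2 feasible set is contained in the Model 1 feasible set and the minimized score cannot fall.

```latex
\begin{aligned}
\theta_1^* &= \min_{\theta,\lambda}\ \theta \quad \text{s.t.}\quad X\lambda \le \theta x_0,\; Y\lambda \ge y_0,\; \lambda \ge 0 && \text{(Model 1)}\\
\theta_2^* &= \min_{\theta,\lambda}\ \theta \quad \text{s.t.}\quad X\lambda \le \theta x_0,\; Y\lambda \ge y_0,\; Q\lambda \ge q_0,\; \lambda \ge 0 && \text{(Model 2)}\\
&\Rightarrow\; \theta_1^* \le \theta_2^* \le 1
\end{aligned}
```

The upper bound of 1 holds because setting \theta = 1 and placing all weight on the evaluated hospital itself satisfies every constraint. One consequence is that a hospital scoring 1 in Model 1 necessarily scores 1 in Model 2.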

Descriptive statistics

Out of 117 acute care hospitals, only 78 hospitals reported quality data to the HQA. Of these, only 53 hospitals had non-missing data on all of the technical input and output and quality measures and this constituted the final empirical sample. There was no statistically significant difference in size (total hospital beds), staffing (total hospital staff) and inpatient days between the population of Virginia hospitals and the final sample. The average bed size for the sample was 220.2 beds. Nearly 40% of the empirical sample was rural in location.

Analysis of technical efficiency and quality

Model 1: Technical efficiency constant returns to scale input oriented

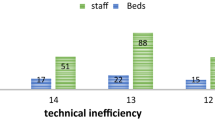

Using a four-input / three-output constant returns to scale DEA model, it was found that of the 53 DMUs with non-missing data for all of the quality outputs and technical inputs, 16 were efficient (efficiency score = 1) and 37 were inefficient (efficiency score < 1). The average efficiency score of the inefficient DMUs was 0.72, and the average efficiency score for all DMUs in the sample was 0.81 (Table 1). Using a two-input (total beds, scaled expenses), three-output (adjusted discharges, training FTEs, outpatient visits) CRS input-oriented model of all 117 hospitals, 11 were found to be efficient. Since this model did not include all of the technical input variables used in model 1, its results are not reported separately. Notably, however, only 4 of these 11 technically efficient hospitals reported quality measures and were included in the final empirical sample.

The average inputs for the efficient hospitals in model 1 were 275.68 beds, $96,051,820 in non-payroll expenses, 1,801.62 total staffing FTEs and $33,859,000 in total assets. The average outputs produced by the efficient hospitals were 235,071.87 outpatient visits, 124,134.88 adjusted discharges and 89.93 training FTEs. The average inputs for the inefficient hospitals in the model were 195.34 beds, 1,130.70 total staffing FTEs, $60,144,354 in total assets and $61,207,470 in non-payroll expenses. The average outputs produced by the inefficient hospitals were 127,733.4 outpatient visits, 63,615.92 adjusted discharges and 3.82 training FTEs (Table 2).

Model 2: Technical efficiency and quality CRS input oriented

Four Input / three output + three quality output model

Using a four input / three output + three quality output constant returns to scale model it was found that out of 53 DMUs in the sample that had non-missing data for the quality outputs, 21 DMUs were efficient and 32 DMUs were inefficient. It was found that five of the inefficient and near-efficient DMUs (efficiency score less than 1) in model 1 had efficiency scores of 1 in model 2; indicating that although they were not maximizing efficiency in terms of quantitative outcomes, they were maximizing their quality outcomes. The average efficiency score of the inefficient DMUs was 0.78. The average efficiency score for all the DMUs in the sample was 0.86 (Table 3).
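The overall means reported for Models 1 and 2 can be cross-checked from the subgroup figures, because every efficient DMU scores exactly 1. This sketch (hypothetical helper name) pools the reported counts and subgroup means; the small gaps from the reported 0.81 and 0.86 are presumably due to rounding of the subgroup means to two decimals.

```python
def pooled_mean_score(n_efficient, n_inefficient, mean_inefficient):
    """Overall mean DEA score when all efficient DMUs score exactly 1."""
    total = n_efficient * 1.0 + n_inefficient * mean_inefficient
    return total / (n_efficient + n_inefficient)


model1 = pooled_mean_score(16, 37, 0.72)  # Model 1: 16 efficient, 37 inefficient
model2 = pooled_mean_score(21, 32, 0.78)  # Model 2: 21 efficient, 32 inefficient
print(round(model1, 3), round(model2, 3))  # 0.805 0.867
```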

The average inputs for the efficient hospitals in model 2 were 246.61 beds, 1,480.76 total staffing FTEs, $59,024,248 in total assets and $7,605,580 in non-payroll expenses. The average outputs produced by the efficient hospitals were 189,656.3 outpatient visits, 100,556.5 adjusted discharges and 68.52 training FTEs. The quality outcomes were 70.90% for qual1 (initial antibiotic timing), 99.38% for qual2 (oxygenation assessment) and 41.28% for qual3 (pneumococcal vaccination). The average inputs for the inefficient hospitals in the model were 205.65 beds, 1,229.62 total staffing FTEs, $68,698,039 in total assets and $69,664,000 in non-payroll expenses. The average outputs produced by the inefficient hospitals were 139,270.1 outpatient visits, 71,535.41 adjusted discharges and 4.90 training FTEs. The quality outcomes were 65.65% for qual1, 98.68% for qual2 and 45.43% for qual3 (Table 4).

The degree of correlation between the efficiency scores for each DMU in the technical efficiency model (model 1) and the scores obtained with the technical efficiency and quality model (model 2) was examined using the Spearman rank correlation coefficient. The correlation coefficient obtained was 0.770. Figure 1 plots the technical efficiency and technical efficiency combined with quality scores for each of the DMUs in the empirical sample (n = 53).
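Because many DEA scores are tied at exactly 1, a rank correlation needs a rule for ties. A common choice, sketched below as an assumption (the study does not state how it computed the coefficient), gives tied values the average of their ranks and takes the Pearson correlation of those ranks; `scipy.stats.spearmanr` follows the same average-rank convention. The score pairs in the example are invented.

```python
def average_ranks(values):
    """1-based ranks in which tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        # Extend the run while the next sorted value ties with this one.
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        shared = (start + end) / 2 + 1  # mean of 1-based positions start..end
        for pos in range(start, end + 1):
            ranks[order[pos]] = shared
        start = end + 1
    return ranks


def spearman_rho(x, y):
    """Spearman's rho as the Pearson correlation of average ranks.
    Undefined (division by zero) if either input has all values tied."""
    if len(x) != len(y):
        raise ValueError("x and y must have the same length")
    rx, ry = average_ranks(x), average_ranks(y)
    n = len(rx)
    mx, my = sum(rx) / n, sum(ry) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    vx = sum((a - mx) ** 2 for a in rx)
    vy = sum((b - my) ** 2 for b in ry)
    return cov / (vx * vy) ** 0.5


# Hypothetical Model 1 and Model 2 scores for four hospitals (ties at 1).
rho = spearman_rho([1.0, 0.8, 0.6, 1.0], [1.0, 1.0, 0.7, 1.0])
print(round(rho, 3))  # 0.816
```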

Forty of the 117 hospitals were rural. With the two-input / three-output DEA model, the average technical efficiency score was 0.64 for the rural hospitals and 0.75 for the urban hospitals. However, in the empirical sample of 53 hospitals, the 19 rural hospitals had technical efficiency scores comparable to those of the urban hospitals (0.9 vs. 0.86) and a higher combined technical efficiency and quality score (0.6 vs. 0.3).

Discussion

Interpretation of the results

The most significant finding of the analysis of the DEA models was that hospitals that efficiently produced the quantitative outputs (outpatient visits, adjusted discharges and training FTEs) also efficiently produced the quality outputs (percent of pneumonia patients receiving oxygenation assessment, initial antibiotic timing and pneumococcal vaccination). Sixteen of the hospitals in the sample had a score of 1 in both the technical efficiency model and the technical efficiency combined with quality model (models 1 and 2). These hospitals were the best performers, in that they were maximizing technical efficiency, or quantitative outcomes, as well as quality outcomes. Also, five hospitals that were inefficient or near-efficient with regard to quantitative outcomes (score less than 1) were efficient once quality outputs were included (score = 1). These hospitals need to enhance their technical efficiency in order to improve their performance. Thirty-two of the hospitals were poor performers with respect to both technical efficiency and quality. None of the 53 hospitals was found to be technically efficient but in need of quality improvement (Table 5).
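The four-way grouping summarized in Table 5 can be written as a rule on each hospital’s pair of scores. This is an illustrative sketch: the function name, the category labels and the tolerance (used to absorb solver round-off near 1) are assumptions, and the score pairs are invented.

```python
from collections import Counter


def performance_category(te_score, teq_score, tol=1e-6):
    """Hypothetical labels for the Table 5 categories described in the text.

    te_score:  Model 1 (technical efficiency) score.
    teq_score: Model 2 (technical efficiency + quality) score.
    """
    te_efficient = te_score >= 1 - tol
    teq_efficient = teq_score >= 1 - tol
    if te_efficient and teq_efficient:
        return "best performer"
    if teq_efficient:
        return "needs technical efficiency improvement"
    if te_efficient:
        # Cannot occur when Model 2 only adds outputs to an input-oriented CRS Model 1.
        return "needs quality improvement"
    return "poor performer"


# Hypothetical (Model 1, Model 2) score pairs for four hospitals.
pairs = [(1.0, 1.0), (0.85, 1.0), (0.70, 0.82), (1.0, 1.0)]
counts = Counter(performance_category(a, b) for a, b in pairs)
print(counts["best performer"],
      counts["needs technical efficiency improvement"],
      counts["poor performer"])  # 2 1 1
```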

Thus, the evidence from this study indicates that quality outcomes were not being compromised by the efficient hospitals in the study. This indicates that the hospitals were behaving as not only quantity maximizing units, but as quality maximizing units as well. This does not provide strong evidence for an ‘inevitable’ efficiency–quality trade-off. This is indeed heartening, especially in view of Medicare’s Quality Initiative that seeks to reward high performing hospitals with bonuses.

The other significant finding was a high degree of correlation (Spearman’s rho > 0.75) between the efficiency scores obtained using only technical outputs and those obtained using technical combined with quality outputs. This supports the inclusion of quality measures as outputs in DEA models of hospital efficiency, as has been found in the banking industry. With an increasing emphasis on providing value (efficiency combined with quality), the inclusion of quality outputs in the measurement of organizational performance offers promise as a benchmarking tool for organizations seeking to maximize performance and value. However, the quality measures used in the study were self-reported process measures of quality. Further research using validated outcome measures of quality on larger samples is required to see whether these preliminary findings hold consistently.

Another incidental finding was that although rural hospitals in the original sample of 117 hospitals were not performing as well as their urban counterparts, in the smaller empirical sample of 53 hospitals, they were found to be performing as well or even better in terms of technical efficiency and quality. Considering the small sample size, this finding needs to be further examined in larger samples.

Limitations of the study

One limitation of the study was the secondary databases themselves, which had a lot of missing data on some of the input variables (staffing and assets) and thereby limited their use in the DEA models. Since the AHA database is based on self-reported data from member hospitals, this is an inevitable limitation. The CMS quality database, which is also self-reported by the hospitals, had missing data on some of the quality measures for pneumonia; only the three pneumonia measures with non-missing responses could be used in the analysis of technical efficiency and quality.

As the quality measures used in this study were self-reported, another issue was non-response. Hospitals report quality data voluntarily, and only 78 of the 117 Virginia hospitals reported quality measures to the HQA. Of these 78 hospitals, only 53 had non-missing data for all of the inputs and outputs used to estimate the DEA models. This small sample size may limit the generalizability of the results. It is also notable that of the 11 hospitals found efficient among all 117 Virginia hospitals, only four reported quality data.

Finally, DEA has been criticized for being sensitive to random noise, whereas parametric techniques like stochastic frontier analysis allow for statistical noise. However, in a comparison of both techniques, Jacobs [19] concludes that each of these methods has strengths and weaknesses and they measure different aspects of efficiency. Further, Linna [20] found that both methods gave comparable results for individual efficiency scores.

Conclusion/policy implications

This study represents a significant contribution to the DEA literature as few previous studies have analyzed quality measures as outputs in the technical efficiency analysis. It also has implications for policy because the findings indicate that a managerial focus on improving technical efficiency is not likely to compromise quality. With an increasing emphasis on quality by third party payers like Medicare with pay for performance initiatives, the findings of this study are especially significant for policy makers and administrators alike, as the evidence is that hospitals can maximize both quality and quantity; and efficiency need not be at the expense of quality. The inclusion of quality measures in DEA offers promise as a benchmarking tool for hospitals.

References

Charnes, A., Cooper, W. W., and Rhodes, E., Measuring the efficiency of decision making units. Eur. J. Oper. Res 2:429, 1978.

Ozcan, Y. A., Luke, R. D., and Haksever, C., Ownership and organizational performance: A comparison of technical efficiency across hospital types. Med. Care 30(9):781–794, 1992.

Ozcan, Y. A., Sensitivity analysis of hospital efficiency under alternative output/input and peer groups: A review. Knowl. Policy 5(4):1–29, 1993.

Ozcan, Y. A. et al., Trends in labor efficiency among American hospital markets. Ann. Oper. Res 67:61–82, 1996.

White, K. R., and Ozcan, Y. A., Church ownership and hospital efficiency. Hosp. Health Serv. Adm 41(3):297–310, 1996.

Harris, J. M., Ozgen, H., and Ozcan, Y. A., Do mergers enhance the performance of hospital efficiency? J. Oper. Res. Soc 51:801–811, 2000.

Chilingerian, J. A., Evaluating physician efficiency in hospitals: A multivariate analysis of best practices. Eur. J. Oper. Res 80:548–574, 1995.

Ozcan, Y. A., Physician benchmarking: measuring variation in practice behavior in treatment of otitis media. Health Care Manag. Sci 1(1):5–17, 1998.

Ozcan, Y. A., Jiang, H. J., and Pai, C. W., Do primary care physicians or specialists provide more efficient care? Health Serv. Manag. Res 13:90–96, 2000.

Rosenman, R., Siddharthan, K., and Ahern, M., Output efficiency of Health Maintenance Organizations in Florida. Health Econ 6:295–302, 1997.

Draper, D. A., Solti, I., and Ozcan, Y. A., Characteristics of Health Maintenance Organizations and their influence on efficiency. Health Serv. Manag. Res 13:40–56, 2000.

Ozgen, H., and Ozcan, Y. A., A national study of efficiency for dialysis centers: an examination of market competition and facility characteristics for production of multiple dialysis outputs. Health Serv. Res 37(3):711–732, 2002.

Ozgen, H., and Ozcan, Y. A., Longitudinal analysis of efficiency in multiple output dialysis markets. Health Care Manag. Sci 7(4):253–261, 2004.

Ikegami, N., Hirdes, J. P., and Carpenter, I., Measuring the quality of long-term care in institutional and community setting. In: Smith, P. (Ed.), Measuring up: Improving health system performance in OECD Countries. OECD, Paris, 2002.

Newhouse, J., Toward a theory of non-profit institutions: An economic model of a hospital. Am. Econ. Rev 60:64–74, 1970.

Laine, J. et al., Measuring the productive efficiency and clinical quality of institutional long term care for the elderly. Health Econ 14:245–256, 2005.

Laine, J. et al., The association between quality of care and technical efficiency in long term care. Int. J. Qual. Health Care 17(3):259–267, 2005.

Sherman, D. H., and Zhu, J., Service productivity management: Improving service performance using data envelopment analysis (DEA). Springer, New York, 2006.

Jacobs, R., Alternative methods to examine hospital efficiency: Data envelopment analysis and stochastic frontier analysis. Health Care Manag. Sci 4:103–115, 2001.

Linna, M., Measuring hospital cost efficiency with panel data models. Health Econ 7:415–427, 1997.


Cite this article

Nayar, P., Ozcan, Y.A. Data Envelopment Analysis Comparison of Hospital Efficiency and Quality. J Med Syst 32, 193–199 (2008). https://doi.org/10.1007/s10916-007-9122-8


DOI: https://doi.org/10.1007/s10916-007-9122-8