Abstract

Early detection and counting of immature green citrus fruit using computer vision can help growers produce a predictive yield map, which could be used to adjust management practices during the fruit maturing stages. However, such detection and counting is difficult because of varying illumination, random occlusion and color similarity with leaves. An immature fruit detection algorithm was developed to identify and count fruit in a citrus grove under varying illumination and random occlusion, using images acquired by a regular red–green–blue (RGB) color camera. The acquired citrus images included front-lighting and back-lighting illumination conditions. The Retinex image enhancement algorithm and the two-dimensional discrete wavelet transform were used for image illumination normalization. Color-based K-means clustering and the circular Hough transform (CHT) were applied to detect potential fruit regions. A Local Binary Patterns (LBP) feature-based Adaptive Boosting (AdaBoost) classifier was built to remove false positives. A sub-window was used to scan the difference image between the illumination-normalized image and the resulting image from CHT detection in order to detect small and partially occluded fruit. An overall accuracy of 85.6% was achieved on the validation set, showing the promising potential of the proposed method.


Introduction

Florida contributed more than 65% of annual U.S. citrus production during the last decade (USDA-NASS 2015). Early yield estimation of immature green citrus fruit using agricultural machinery is of great significance: it may help growers identify the growth conditions of immature citrus fruit, implement precise site-specific fertilization based on those conditions, and allocate labor based on yield maps. Detection and counting of immature green citrus fruit are critical steps in creating yield maps (Li et al. 2016).

Computer vision may provide a promising tool for agricultural machinery-based fruit detection, and many researchers have proposed image processing methods for detecting immature green fruit. Stajnko et al. (2004) used a thermal camera to capture apple tree images for estimating the number and diameter of immature green apples in an orchard during the growing season. Wachs et al. (2010) designed a method based on thermal infrared and color images for immature green apple detection using low- and high-level visual features. Kane and Lee (2007) captured immature green citrus fruit images with a monochromatic near-infrared camera and detected the fruit using morphological image processing techniques. Okamoto and Lee (2009) proposed another green citrus fruit detection method based on hyperspectral images; however, some occluded or high-contrast fruit were identified incorrectly.

The above methods, which analyzed thermal and hyperspectral images of the fruit using visual and infrared information, have potential for detecting immature green fruit. However, the detection equipment is expensive, and hyperspectral images contain much redundant information. Direct analysis of fruit color images from a much less expensive red–green–blue (RGB) camera may be more readily accepted by fruit growers for estimating fruit yield. Many articles have focused on RGB color image-based detection algorithms for immature green fruit. Linker et al. (2012) developed a four-step algorithm that combined the segmentation results of high-probability apple areas to determine the number of green apples in an orchard. Kurtulmus et al. (2014) proposed a feature-based algorithm for immature peach detection using statistical classifiers. Similarly, several algorithms for detecting immature green citrus fruit have been developed using RGB color images of the natural outdoor citrus canopy. Kurtulmus et al. (2011) implemented an ‘eigenfruit’ method with color and Gabor texture features to identify and count immature green citrus in a natural environment. However, three factors contributed to a high miss rate: (1) varying illumination affected the color and texture of the fruit; (2) partial occlusion made citrus fruit appear smaller; and (3) the same fruit was detected multiple times. Bansal et al. (2013) proposed a green citrus detection method based on fast Fourier transform (FFT) leakage; however, this algorithm could produce faulty results when the image included sky background or other symmetric areas. Sengupta and Lee (2014) developed another scheme in which the fruit were first detected using shape analysis and then identified by a support vector machine based on citrus texture. The algorithm detected and counted 80.4% of the citrus fruit in natural canopy validation images. However, the percentage of false positives was high (27.1%), possibly caused by round objects found by the Circular Hough Transform (CHT) and by partial occlusion. After segmenting images with an adaptive red and blue chromatic map (ARB), Zhao et al. (2016) trained a support vector machine using color and the sum of absolute transformed differences to detect immature green citrus, and more than 83% of the fruit in the validation set were successfully detected. In another study, Li et al. (2016) first used a fast normalized cross correlation (FNCC) method to detect potential green citrus fruit positions and then identified the fruit by combining color, texture and shape feature analysis, reaching a successful detection rate of 84.4%. However, their algorithm had difficulty detecting fruit with more than half of their edges missing or with a branch occluding the middle.

Comparing the above studies, the detection accuracies for immature green citrus fruit obtained by analyzing RGB color images did not differ greatly from those obtained by analyzing thermal and hyperspectral images. A regular RGB color camera may be much easier to use and costs less than a thermal or hyperspectral camera, so it may be a better choice for growers acquiring citrus fruit images for early yield estimation. However, varying illumination, random occlusion and color similarity with leaves may present many challenges for immature green citrus fruit detection based on regular RGB image analysis.

Because of occlusion by leaves and branches and illumination variation, some fruit surfaces usually appear darker, which increases the difficulty of fruit identification. Many researchers have used a logarithm transform to enhance image illumination (Savvides and Kumar 2003; Vishwakarma et al. 2009; Cheng et al. 2010; Kurtulmus et al. 2014). Retinex theory was proposed by Edwin (1977); the word Retinex is a compound of retina and cortex. Established through scientific experiments and analysis, Retinex is regarded as an image enhancement algorithm based on the human visual system. Its balance of edge enhancement, color constancy and dynamic range compression distinguishes it from conventional image enhancement algorithms, and it has been widely used in many fields. However, halo artifacts commonly appear at high-contrast edges with this algorithm. Bilateral filtering is a potential tool for dealing with this drawback of Retinex, but the original bilateral filtering algorithm is too time-consuming and has therefore not been widely used, especially in computationally intensive tasks such as fruit detection (Yang et al. 2015). Durand and Dorsey (2002) proposed two acceleration strategies for the original bilateral filtering: piece-wise linear approximation and multi-scale implementation.

In face recognition research, illumination variation is one of the critical factors affecting accuracy, since it can make several images of the same person appear dramatically different. Algorithms for eliminating the effects of illumination variation have therefore become a topic of interest. Similarly, varying illumination is a significant factor limiting the performance of citrus fruit recognition. Illumination normalization can be used to eliminate illumination variation, and among the various illumination normalization techniques, the wavelet transform has been widely researched. Duan et al. (2004) used a wavelet transform to decompose a face image into an approximation component and an illumination component, and set every pixel of the illumination component to the same gray value, the mean of the approximation component, thereby removing illumination variation from the approximation component. Du and Ward (2005) proposed a wavelet-based illumination compensation method that applied a one-level wavelet transform to decompose the image and used histogram equalization for contrast enhancement, with satisfactory results. Emadi et al. (2012) decomposed an input image into high- and low-frequency components, set the low-frequency component to zero, and reconstructed the image by inverse wavelet transform; the resulting image had enhanced edges and details as well as enhanced contrast.

Texture features may be more suitable than color features for distinguishing immature green citrus fruit from non-fruit objects. The Local Binary Patterns (LBP) operator, initially proposed by Ojala et al. (1996), is a powerful tool for texture description and has been widely used in many fields. Sun et al. (2011) extracted an overall face feature histogram with the LBP operator and then trained a least squares support vector machine (LS-SVM) on face image samples to improve the face recognition rate. Lei et al. (2012) combined the LBP operator and a Bayesian classifier to track visual objects. Mazo et al. (2017) used the LBP operator to describe the texture of cardiovascular tissues, so that the tissues could be classified automatically using texture information and support vector machines (SVM). LBP feature-based classification usually requires a classifier. The Adaptive Boosting (AdaBoost) algorithm proposed by Freund and Schapire (1995) achieves robust classification by boosting a large number of weak classifiers, each with only limited classification ability, into a strong classifier. AdaBoost has been applied to classification in several studies (e.g., Wang et al. 2004; Khammari et al. 2005; Luo et al. 2016).

Motivated by these studies, this study used a regular RGB color camera to obtain immature green citrus fruit images in a citrus grove with the aim of identifying the fruit under varying illumination and random occlusion conditions as accurately as possible. Specific objectives of this study were to:

(1) develop a fast bilateral filtering-based Retinex for immature green citrus image enhancement;

(2) develop a method for normalizing illumination as much as possible based on the wavelet transform;

(3) develop a method for detecting potential fruit positions using color analysis and CHT; and

(4) develop a classification method by combining LBP features and an AdaBoost-based classifier for removing false positives and identifying as much immature green citrus fruit as possible, including partially occluded fruit.

Materials and methods

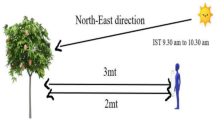

Image acquisition

Citrus canopy images (e.g., Fig. 1) containing leaves, branches and immature green citrus fruit were acquired for algorithm development using a typical digital color camera (Power Shot SD880IS, Canon Inc., USA) in JPEG format with a resolution of 3648 × 2736 pixels. The citrus variety was Orlando Tangelo. One hundred canopy images under both front-lighting and back-lighting conditions were acquired over a 1-week period, at various times during daylight in October 2015, in an experimental citrus grove of the University of Florida, Gainesville, Florida, USA (29°38′11.9″N, 82°21′52.5″W). Each image covered an actual scene of approximately 240 × 180 mm. For computational efficiency, all images were resized to 800 × 600 pixels by bilinear interpolation. Matlab (Ver. R2016b, Mathworks, Natick, MA, USA) and OpenCV (Ver. 3.0, Intel Corporation, Santa Clara, California, USA) were used to develop the immature green citrus fruit detection algorithm in this study.

Algorithm description

The proposed algorithm for detecting and counting immature green citrus fruit on trees consisted of three stages: preprocessing, training and testing. As shown in Fig. 2a, the preprocessing stage, a crucial part of the algorithm intended to remove as much noise as possible, consisted of image resizing followed by illumination enhancement and normalization. The algorithm first enhanced the illumination of the resized images using a Retinex image enhancement algorithm based on fast bilateral filtering, and then applied a wavelet transform-based algorithm to normalize their illumination. The illumination-normalized image was the output of the preprocessing stage and the input to both the training and testing stages; details are given in the following sections. As shown in Fig. 2b, in the training stage, 24 illumination-normalized images were randomly selected, and fruit and non-fruit regions were manually extracted from them so that their LBP features could be calculated; 200 fruit regions and 200 non-fruit regions, each 24 × 24 pixels, were extracted. In parallel, in step 1 of the testing stage, potential citrus fruit regions were extracted by segmenting the illumination-normalized images using color-based K-means clustering and CHT. Each image was thus divided into two parts: the image containing the CHT detection results and the image without them. In step 2 of the testing stage, the classifier from the training stage was used to decide whether each CHT detection was a fruit region. Because some partially occluded and small fruit were still missed after CHT detection, in step 3 a 10 × 10 pixel window scanned the whole remaining image to search for missed fruit regions. The final fruit count was the sum of the results of steps 2 and 3 (Fig. 2b).

Image enhancement using a fast bilateral filtering-based Retinex

The first strategy proposed by Durand and Dorsey (2002), piece-wise linear approximation, was used to accelerate the bilateral filtering for citrus image illumination enhancement in this study. Based on Retinex theory, the resized citrus fruit image S(x, y) can be understood as the product of the reflectance image R(x, y) and the illumination image L(x, y), as shown in Eq. 1.

\[ S(x, y) = R(x, y) \cdot L(x, y) \qquad (1) \]

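Applying a logarithmic operation to Eq. 1 turns the product into a sum, so that the illumination image of the citrus fruit image can be separated and estimated by filtering. The intensity range of the illumination image L(x, y) was divided into a set of discrete values \( \{ i^j \} \), and the filter output for each value \( i^j \) was first calculated using Eq. 2,

\[ B_s^j = \frac{1}{k_j(s)} \sum_{p \in \Omega} f_1(p - s)\, f_2(I_p - i^j)\, I_p \qquad (2) \]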

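where Ω was the set of all pixels, f1 and f2 were both Gaussian functions (the spatial and intensity kernels, respectively), Ip was the intensity (brightness) value of pixel p, and kj(s) was a normalization term defined by Eq. 3.

\[ k_j(s) = \sum_{p \in \Omega} f_1(p - s)\, f_2(I_p - i^j) \qquad (3) \]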

The final output Is of the filter at pixel s was a linear interpolation between the outputs \( B_s^j \) of the two values \( i^j \) closest to the input value at s.

After the fast bilateral filtering described above, the new illumination image I(x, y) was obtained. A new image was then formed by adding the contrast-enhanced reflectance image R′(x, y) to I(x, y) in the logarithmic domain, and the illumination-enhanced image S′(x, y) was finally obtained by applying an exponential operation to this new image.
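The piece-wise linear acceleration of Eqs. 2 and 3 and the log-domain Retinex step can be sketched in NumPy as follows. This is a minimal illustration, not the authors' Matlab code: the Gaussian widths (`sigma_s`, `sigma_r`), the number of intensity levels, and the way the illumination layer is compressed before recombination (`base_gain`) are assumptions chosen for illustration, and all function names are hypothetical.

```python
import numpy as np


def gaussian_blur(img, sigma):
    """Separable Gaussian blur, used here as the spatial kernel f1 (zero padding)."""
    radius = max(1, int(3 * sigma))
    x = np.arange(-radius, radius + 1, dtype=float)
    k = np.exp(-0.5 * (x / sigma) ** 2)
    k /= k.sum()
    rows = np.apply_along_axis(np.convolve, 1, img, k, mode="same")
    return np.apply_along_axis(np.convolve, 0, rows, k, mode="same")


def fast_bilateral(I, sigma_s=2.0, sigma_r=0.4, n_levels=8):
    """Piece-wise linear bilateral filter (Durand and Dorsey 2002, first strategy)."""
    lo, hi = float(I.min()), float(I.max())
    if hi - lo < 1e-12:
        return I.copy()                               # flat image: nothing to smooth
    levels = np.linspace(lo, hi, n_levels)            # the discrete values i^j
    B = np.empty((n_levels,) + I.shape)
    for j, ij in enumerate(levels):
        G = np.exp(-0.5 * ((I - ij) / sigma_r) ** 2)  # range kernel f2(I_p - i^j)
        k = gaussian_blur(G, sigma_s)                 # normalization term k_j(s), Eq. 3
        B[j] = gaussian_blur(G * I, sigma_s) / np.maximum(k, 1e-12)  # B_s^j, Eq. 2
    # Linear interpolation between the two levels closest to each pixel value.
    pos = (I - lo) / (levels[1] - lo)
    idx = np.clip(np.floor(pos).astype(int), 0, n_levels - 2)
    w = np.clip(pos - idx, 0.0, 1.0)
    lower = np.take_along_axis(B, idx[None], axis=0)[0]
    upper = np.take_along_axis(B, idx[None] + 1, axis=0)[0]
    return (1 - w) * lower + w * upper


def retinex_enhance(S, base_gain=0.5, **bilateral_args):
    """Log-domain Retinex with the bilateral filter as the illumination estimator."""
    log_S = np.log(S + 1e-6)                          # log S = log R + log L (Eq. 1)
    log_L = fast_bilateral(log_S, **bilateral_args)   # illumination estimate
    log_R = log_S - log_L                             # reflectance (detail) layer
    # Assumption: compress the illumination range, keep the reflectance unchanged.
    out = np.exp(base_gain * (log_L - log_L.max()) + log_R)
    return out / out.max()
```

Each intensity level costs two ordinary Gaussian blurs, so the sketch avoids the per-pixel, data-dependent kernel of the brute-force bilateral filter; a larger `n_levels` trades speed for accuracy.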

Wavelet transformation-based illumination normalization

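Although the algorithm of the previous section enhances image edges and fruit surface illumination, uneven illumination still remains on the fruit surfaces. The illumination-enhanced citrus image S′(x, y) was first decomposed into its three RGB color channels SR′(x, y), SG′(x, y) and SB′(x, y). Treating each color channel as C(x, y), a first-level two-dimensional discrete wavelet transform (the two-dimensional Mallat algorithm) was used to decompose it into low-frequency and high-frequency components. The fast decomposition formulas are given in Eq. 4:

\[ \begin{aligned} c_{j+1}(x, y) &= \sum_{k} \sum_{l} h(k - 2x)\, h(l - 2y)\, c_j(k, l) \\ d^{H}_{j+1}(x, y) &= \sum_{k} \sum_{l} h(k - 2x)\, g(l - 2y)\, c_j(k, l) \\ d^{V}_{j+1}(x, y) &= \sum_{k} \sum_{l} g(k - 2x)\, h(l - 2y)\, c_j(k, l) \\ d^{D}_{j+1}(x, y) &= \sum_{k} \sum_{l} g(k - 2x)\, g(l - 2y)\, c_j(k, l) \end{aligned} \qquad (4) \]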

where x and y were the co-ordinates of the pixel, k and l were integers, and g and h were a high-pass filter and a low-pass filter, respectively. \( c_j \) was the original color channel; \( d^{V}_{j+1} \), \( d^{H}_{j+1} \) and \( d^{D}_{j+1} \) were the vertical, horizontal and diagonal high-frequency components; and \( c_{j+1} \) was the low-frequency component. Histogram equalization and contrast enhancement were then applied to the low-frequency component and the high-frequency components, respectively. Illumination normalization was completed by recombining the low-frequency and high-frequency components into a single gray-scale image through two-dimensional wavelet reconstruction, Eq. 5.

\[ c_j(x, y) = \sum_{k} \sum_{l} \big[ h(x - 2k)\, h(y - 2l)\, c_{j+1}(k, l) + h(x - 2k)\, g(y - 2l)\, d^{H}_{j+1}(k, l) + g(x - 2k)\, h(y - 2l)\, d^{V}_{j+1}(k, l) + g(x - 2k)\, g(y - 2l)\, d^{D}_{j+1}(k, l) \big] \qquad (5) \]

The parameters in Eq. 5 were the same as in Eq. 4. Programming was carried out in Matlab, and a biorthogonal wavelet from the Matlab wavelet toolbox was selected as the wavelet function. The illumination-normalized color image was then obtained by recombining the three illumination-normalized color channels of the citrus color image.
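A compact sketch of the per-channel normalization is shown below. For brevity it uses the Haar wavelet, which coincides with the simplest biorthogonal wavelet (bior1.1), instead of the unspecified biorthogonal wavelet from the Matlab toolbox. The rank-based histogram equalization of the low-frequency band and the fixed gain `detail_gain` used as contrast enhancement of the high-frequency bands are assumptions, and all function names are hypothetical.

```python
import numpy as np


def haar_dwt2(c):
    """One-level 2-D Haar DWT of an even-sized array: c_{j+1}, d^H, d^V, d^D."""
    a, b = c[0::2, 0::2], c[0::2, 1::2]
    e, f = c[1::2, 0::2], c[1::2, 1::2]
    approx = (a + b + e + f) / 2     # low-frequency component
    d_h = (a + b - e - f) / 2        # horizontal detail (change from row to row)
    d_v = (a - b + e - f) / 2        # vertical detail (change from column to column)
    d_d = (a - b - e + f) / 2        # diagonal detail
    return approx, d_h, d_v, d_d


def haar_idwt2(approx, d_h, d_v, d_d):
    """Inverse of haar_dwt2 (perfect reconstruction)."""
    out = np.empty((2 * approx.shape[0], 2 * approx.shape[1]))
    out[0::2, 0::2] = (approx + d_h + d_v + d_d) / 2
    out[0::2, 1::2] = (approx + d_h - d_v - d_d) / 2
    out[1::2, 0::2] = (approx - d_h + d_v - d_d) / 2
    out[1::2, 1::2] = (approx - d_h - d_v + d_d) / 2
    return out


def equalize(x):
    """Rank-based histogram equalization, mapped back to the input's value range."""
    flat = x.ravel()
    cdf = np.searchsorted(np.sort(flat), flat, side="right") / flat.size
    return (x.min() + cdf * (x.max() - x.min())).reshape(x.shape)


def normalize_channel(c, detail_gain=1.5):
    """Equalize the low-frequency band, amplify the detail bands, then reconstruct."""
    approx, d_h, d_v, d_d = haar_dwt2(c)
    return haar_idwt2(equalize(approx), detail_gain * d_h,
                      detail_gain * d_v, detail_gain * d_d)


def normalize_image(rgb, detail_gain=1.5):
    """Normalize the R, G and B channels separately and stack them again."""
    h, w = rgb.shape[0] // 2 * 2, rgb.shape[1] // 2 * 2   # crop to an even size
    channels = [normalize_channel(rgb[:h, :w, k].astype(float), detail_gain)
                for k in range(3)]
    return np.stack(channels, axis=2)
```

With `detail_gain=1.0` and the equalization step removed, `haar_idwt2(*haar_dwt2(c))` returns `c` unchanged, which is a quick check that the decomposition and reconstruction pair is consistent.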

AdaBoost classifier based on LBP features

The LBP operator works much like a filter template operation. For every pixel in the image, the gray value of that pixel is used as a threshold to binarize its eight neighboring pixels. Read in a fixed order, these bits form an 8-bit binary number, which is converted to its corresponding decimal value (0–255) and used as the response of the center pixel. As shown in Fig. 3, ‘5’ is the gray value of the center pixel. Using it as a threshold, each of the eight adjacent pixels is labeled 1 if its gray value is greater than or equal to 5, and 0 otherwise. Reading the labels in a clockwise direction gives the binary number 00010011, i.e., the decimal value 19, which is the LBP value of the center pixel. In image recognition, the histogram of LBP patterns can be used as a texture descriptor on which a robust classifier can be trained.
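The basic 3 × 3 operator can be sketched in NumPy as below. The text only states that the bits are read in a fixed ("certain") order, so the clockwise order starting at the top-left neighbor, with the first neighbor as the most significant bit, is an assumption; `lbp_map` is a hypothetical name.

```python
import numpy as np

# Neighbor offsets (row, col), clockwise from the top-left pixel (assumed order);
# the first neighbor gives the most significant bit.
OFFSETS = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]


def lbp_map(gray):
    """Basic 3 x 3 LBP code (0-255) for every interior pixel of a 2-D gray image."""
    g = np.asarray(gray, dtype=float)
    h, w = g.shape
    center = g[1:-1, 1:-1]
    codes = np.zeros(center.shape, dtype=np.int64)
    for bit, (dy, dx) in enumerate(OFFSETS):
        neighbor = g[1 + dy:h - 1 + dy, 1 + dx:w - 1 + dx]
        # neighbor >= center -> bit set to 1, otherwise 0
        codes |= (neighbor >= center).astype(np.int64) << (7 - bit)
    return codes
```

For a patch with center 5 and neighbors 1, 2, 3, 6, 4, 7, 2, 9 read clockwise from the top-left, the bits are 00010101, so `lbp_map(patch)[0, 0]` returns 21.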

LBP features extraction of fruit and non-fruit objects

Positive samples (the LBP features of fruit) were built using a 24 × 24 pixel sub-window, smaller than the smallest fruit, to capture green citrus fruit areas. Each fruit area was divided into 576 (= 24 × 24) small non-overlapping regions R0, R1, …, R575. The LBP pattern histogram Hi of each region Ri can be calculated using Eqs. 6 and 7,

\[ H_{i,m} = \sum_{(x, y) \in R_i} f\big( \mathrm{LBP}(x, y) = m \big), \quad m = 0, 1, \ldots, q - 1 \qquad (6) \]

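where f(A) was defined as

\[ f(A) = \begin{cases} 1, & A \text{ is true} \\ 0, & A \text{ is false} \end{cases} \qquad (7) \]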

m represented one LBP pattern and q was the number of LBP pattern categories. The normalized histogram \( \overline{h_i} \) of each region, obtained by normalizing Hi, was concatenated region by region, row by row, to form a vector Z, which was the LBP histogram feature of one positive sample. The LBP histogram feature ξ of each negative sample was extracted using the same procedure.
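The region-wise histogram feature of Eqs. 6 and 7 can be sketched as follows, assuming an LBP code map for one window is already available. The region grid is left as a parameter (the hypothetical default `grid=(3, 3)` is only for illustration; `grid=(24, 24)` gives the 576-region split described above), and normalization is taken to mean dividing each histogram by its pixel count.

```python
import numpy as np


def lbp_histogram_feature(lbp, grid=(3, 3), q=256):
    """Concatenate normalized LBP histograms of non-overlapping regions, row by row.

    lbp  : 2-D integer array of LBP codes in [0, q), e.g. one 24 x 24 window.
    grid : number of region rows and columns (illustrative default).
    """
    row_blocks = np.array_split(np.arange(lbp.shape[0]), grid[0])
    col_blocks = np.array_split(np.arange(lbp.shape[1]), grid[1])
    feats = []
    for r in row_blocks:                     # regions row by row ...
        for c in col_blocks:                 # ... and column by column within a row
            region = lbp[np.ix_(r, c)]
            H = np.bincount(region.ravel(), minlength=q).astype(float)  # H_{i,m}
            feats.append(H / H.sum())        # normalized histogram of region i
    return np.concatenate(feats)             # feature vector Z (or xi for negatives)
```

The feature length is the number of regions times q, so the region grid directly controls the dimensionality the AdaBoost stage has to search over.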

Classifier construction based on AdaBoost algorithm

The classifier construction process, trained on the LBP features of fruit and non-fruit samples, is given as pseudo code in Table 1.

In Table 1, xi represented sample i (a fruit LBP feature Z or a non-fruit LBP feature ξ), yi = 0 or yi = 1 indicated that sample i was a negative or positive sample, respectively, and N was the total number of positive and negative samples. First, the weights D1(xi) of all samples were initialized. For the jth feature, a weak classifier hj(xi), given by Eq. 8, was obtained by choosing the threshold and direction that minimized its weighted classification error,

\[ h_j(x) = \begin{cases} 1, & p_j\, g_j(x) < p_j\, \theta_j \\ 0, & \text{otherwise} \end{cases} \qquad (8) \]

where θj was a threshold, pj was a direction (parity) parameter indicating the direction of the inequality, and gj was the jth LBP feature. Among the resulting weak classifiers, the one with the minimum weighted error ɛt was selected as ht(x). The weights of all samples were then updated from Dt(xi) to Dt+1(xi) as

\[ D_{t+1}(x_i) = \frac{D_t(x_i)\, \beta_t^{\,1 - e_i}}{Z_t} \]

where βt = ɛt/(1 − ɛt), Zt was a normalization factor chosen so that \( \sum_{i=1}^{N} D_{t+1}(x_i) = 1 \), and ei = 0 if xi was correctly classified by ht(x) and ei = 1 otherwise. Finally, after T rounds, the strong classifier H(x) was constructed as in Eq. 9,

\[ H(x) = \begin{cases} 1, & \sum_{t=1}^{T} \alpha_t h_t(x) \ge \frac{1}{2} \sum_{t=1}^{T} \alpha_t \\ 0, & \text{otherwise} \end{cases} \qquad (9) \]

where \( \alpha_t = \log(1/\beta_t) = \log \frac{1 - \varepsilon_t}{\varepsilon_t} \).
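The training loop described above (weak classifiers of Eq. 8, the β-based weight update and the strong classifier of Eq. 9) can be sketched with decision stumps on feature columns. This is a minimal illustration rather than the authors' Table 1: uniform initial weights, the exhaustive threshold search over observed feature values, and clipping ɛt away from 0 are assumptions, and the function names are hypothetical.

```python
import numpy as np


def best_stump(X, y, D):
    """Evaluate Eq. 8 for every feature j; return (error, j, theta, p) with minimum error."""
    best = (np.inf, 0, 0.0, 1)
    for j in range(X.shape[1]):
        for theta in np.unique(X[:, j]):
            for p in (1, -1):                       # direction of the inequality
                h = (p * X[:, j] < p * theta).astype(int)
                err = D[h != y].sum()               # weighted error
                if err < best[0]:
                    best = (err, j, theta, p)
    return best


def train_adaboost(X, y, T=10):
    """Discrete AdaBoost with y in {0, 1}; returns a list of (alpha, j, theta, p)."""
    D = np.full(len(y), 1.0 / len(y))               # assumed uniform initial weights D_1
    model = []
    for _ in range(T):
        D = D / D.sum()                             # normalization (the role of Z_t)
        err, j, theta, p = best_stump(X, y, D)
        err = float(np.clip(err, 1e-10, 1 - 1e-10))  # avoid log(0) for a perfect stump
        beta = err / (1 - err)
        h = (p * X[:, j] < p * theta).astype(int)
        e = (h != y).astype(int)                    # e_i = 0 if correct, 1 otherwise
        D = D * beta ** (1 - e)                     # shrink weights of correct samples
        model.append((np.log(1 / beta), j, theta, p))
    return model


def predict(model, X):
    """Strong classifier of Eq. 9: weighted vote compared with half the total alpha."""
    score = sum(a * (p * X[:, j] < p * t) for a, j, t, p in model)
    return (score >= 0.5 * sum(a for a, *_ in model)).astype(int)
```

Because correctly classified samples are down-weighted by βt < 1, each new round concentrates on the samples the previous stumps got wrong, which is what lets many weak LBP-feature stumps add up to a strong classifier.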

Training of the classifier using LBP feature

The proposed method of this study (Method 3) used the LBP features of 200 fruit samples and 200 non-fruit samples from illumination-normalized images to train the AdaBoost-based classifier in the training stage. To build the training data, the fruit and non-fruit samples were first manually extracted from the illumination-normalized color images of the citrus canopy. These samples were then uniformly converted into 24 × 24 sub-window images, as shown in Fig. 4a, and the sub-window images were transformed into LBP texture maps, as shown in Fig. 4c. Finally, the LBP features of both fruit and non-fruit were extracted from the texture maps following the procedure described in the previous section. In the testing stage, the classifier was used to determine whether the potential fruit regions extracted by the algorithm were fruit or non-fruit, as detailed in the following sections.

To demonstrate the effect of illumination normalization and the performance of the proposed algorithm, two other methods were implemented for comparison, as follows.

Method 1 Following the sample selection requirements of the AdaBoost algorithm (Freund and Schapire 1997), 300 fruit samples and 600 non-fruit samples were manually extracted. These samples were taken from the original citrus canopy color images (Fig. 4b) instead of illumination-normalized images, and their LBP texture maps are shown in Fig. 4d. The classifier was trained on these LBP features in the training stage and, in the testing stage, was used to detect immature green citrus fruit in the original citrus canopy color images instead of illumination-normalized images.

Method 2 The training stage was the same as in Method 3. However, in the testing stage, the trained AdaBoost-based classifier was applied directly to the original citrus canopy color images to detect immature green citrus fruit.

Potential fruit region detection based on K-means clustering and CHT

Immature green citrus fruit are usually light green, which distinguishes them from branches and the background. Color analysis therefore has potential for locating fruit regions through a simple procedure that retains green components and discards other colors. Clustering is an unsupervised learning technique that automatically divides a data set into classes, so that members of the same class are similar to one another and differ substantially from members of other classes. K-means clustering is a commonly used clustering algorithm that partitions the data into K clusters so as to minimize the within-cluster sum of squared errors. It gives better results when the clusters are compact and clearly separated, and it is relatively scalable and efficient for large data sets. K-means clustering requires the number of clusters and a distance metric that quantifies how close two data points are. Because immature green citrus fruit and leaves are green whereas peduncles, branches and most of the background are other colors, the number of clusters was set to two based on multiple trials. Therefore, in the testing stage, the illumination-normalized citrus color image was first segmented into green and non-green components using K-means clustering, and fruit regions were sought in the green component. Since the fruit are roughly circular, a basic shape analysis was then applied to extract fruit regions from the green component. The CHT, first applied by Duda and Hart (1972), is a variant of the standard Hough transform, a feature extraction technique widely used in the computer vision community, and it determines the parameters of the detected circles. The CHT is easier to implement when the circle radii are known beforehand. In the training stage, the minimum and maximum fruit radii were found to be approximately 20 and 50 pixels, respectively. Using this radius range, a fast circle search was performed in Matlab on the gray-level image of the segmented green component. The Matlab function ‘imfindcircles’ was used for the CHT, with the parameter ‘Sensitivity’ set to 0.91 as determined from the training set. The detection results of K-means clustering and CHT were overlaid on the illumination-normalized citrus image.
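The two detection steps can be sketched in NumPy as follows. The K-means part clusters RGB pixel values into two groups and picks the green cluster by the largest G − (R + B)/2 of its center, which is an assumed rule. The CHT part is a plain voting accumulator over a radius range and is only a stand-in for Matlab's `imfindcircles`: it has no equivalent of the ‘Sensitivity’ parameter and returns only the strongest circle. All function names are hypothetical.

```python
import numpy as np


def kmeans(pixels, k=2, n_iter=20, seed=0):
    """Plain K-means on (N, 3) RGB vectors with farthest-point initialization."""
    rng = np.random.default_rng(seed)
    centers = [pixels[rng.integers(len(pixels))]]
    for _ in range(1, k):
        # next center: the pixel farthest from all centers chosen so far
        d = np.min([((pixels - c) ** 2).sum(axis=1) for c in centers], axis=0)
        centers.append(pixels[d.argmax()])
    centers = np.array(centers, dtype=float)
    for _ in range(n_iter):
        dist = ((pixels[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
        labels = dist.argmin(axis=1)                  # assign to the nearest center
        for c in range(k):
            if np.any(labels == c):
                centers[c] = pixels[labels == c].mean(axis=0)  # move center to mean
    return labels, centers


def green_cluster(centers):
    """Index of the cluster whose center is the most green (assumed rule)."""
    return int(np.argmax(centers[:, 1] - centers[:, [0, 2]].mean(axis=1)))


def circular_hough(edges, radii, n_angles=360):
    """Vote for circle centers; return (radius, row, col) of the strongest circle."""
    h, w = edges.shape
    ys, xs = np.nonzero(edges)
    theta = np.linspace(0, 2 * np.pi, n_angles, endpoint=False)
    acc = np.zeros((len(radii), h, w))
    for ri, r in enumerate(radii):
        # every edge pixel votes for all candidate centers at distance r from it
        cy = np.round(ys[:, None] - r * np.sin(theta)[None, :]).astype(int)
        cx = np.round(xs[:, None] - r * np.cos(theta)[None, :]).astype(int)
        ok = (cy >= 0) & (cy < h) & (cx >= 0) & (cx < w)
        np.add.at(acc[ri], (cy[ok], cx[ok]), 1)
    ri, row, col = np.unravel_index(acc.argmax(), acc.shape)
    return int(radii[ri]), int(row), int(col)
```

Restricting `radii` to the observed range (about 20 to 50 pixels here) keeps the accumulator small, which is why knowing the radius range in advance makes the search fast.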

Removal of false detections and determination of the final number of fruit

After extraction of the potential fruit regions, the illumination-normalized citrus image was split into the image containing the CHT detection results and the image without them, stored as image_CHT and image_WCHT, respectively. False positives could occur in image_CHT, and false negatives could remain in image_WCHT. In image_CHT, the maximum inscribed rectangle of each circle was divided into multiple 20 × 20 sub-windows, and each sub-window was classified by the trained AdaBoost-based classifier to determine whether it matched the citrus fruit features. If more sub-windows in the rectangle satisfied the fruit features than did not, the circumscribed circle of the rectangle was determined to be a fruit region by majority voting; otherwise, it was determined to be a non-fruit region and the circle region was set to ‘0’. All circles in the image were processed in this way. The center co-ordinates of the identified circles were stored as (xi, yi), where i was the number of identified circles. The Euclidean distance was used to measure the distance between any two centers; if the distance was less than 20 pixels, the two centers were regarded as the same center and i was updated to i − 1. This distance threshold (20 pixels) was determined from the training data set. Following this procedure, i was finally updated to i′, the final number of fruit regions in image_CHT after false positive removal, and the rectangle tangent to each identified circle was used to represent the fruit.
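The voting and de-duplication rules described above can be sketched as below. Tiling the inscribed square (side r√2) into non-overlapping 20 × 20 sub-windows and merging centers greedily in input order are assumptions about details the text leaves open; the function names are hypothetical.

```python
import numpy as np


def inscribed_subwindows(cx, cy, r, size=20):
    """Top-left corners of non-overlapping size x size tiles inside a circle's inscribed square."""
    half = r / np.sqrt(2)                      # the inscribed square has side r * sqrt(2)
    x0, y0 = int(np.ceil(cx - half)), int(np.ceil(cy - half))
    x1, y1 = int(np.floor(cx + half)), int(np.floor(cy + half))
    return [(x, y) for y in range(y0, y1 - size + 1, size)
            for x in range(x0, x1 - size + 1, size)]


def is_fruit_by_vote(window_labels):
    """Majority vote: 1 (fruit) only if fruit sub-windows outnumber non-fruit ones."""
    votes = np.asarray(window_labels)
    return int((votes == 1).sum() > (votes == 0).sum())


def merge_close_centers(centers, min_dist=20.0):
    """Keep a center only if it is at least min_dist from every center already kept."""
    kept = []
    for c in np.asarray(centers, dtype=float):
        if all(np.hypot(*(c - k)) >= min_dist for k in kept):
            kept.append(c)
    return kept
```

In this sketch the count i′ corresponds to `len(merge_close_centers(centers))` over the circles that passed the vote; a tie in the vote is treated as non-fruit, matching the "greater than" rule in the text.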

In image_WCHT, a 10 × 10 sub-window scanned the whole image from left to right and from top to bottom with a step of 10 pixels to search for regions satisfying the fruit features, and the classifier was used to determine whether each region was fruit. If a sub-window satisfied the fruit features, the co-ordinates of its four vertices were recorded, and its center was calculated and stored as (xi′, yi′), where i was the number of identified sub-windows. If the Euclidean distance between two identified sub-window centers was smaller than 10 pixels, the two sub-windows were considered to belong to the same fruit and were combined into a larger rectangle representing the fruit, whose vertices were given by the minimum and maximum abscissas and the minimum and maximum ordinates of the two sub-windows; i was then updated to i − 1. Finally, i was updated to i″, the final number of fruit regions in image_WCHT after false negative removal. Thus, the final number of immature green citrus fruit detected by the proposed algorithm was the sum of i′ and i″.
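A sketch of the scan and of the grouping of neighboring sub-windows follows. `classify` stands in for the trained AdaBoost classifier and is a hypothetical callback. With a 10-pixel step, horizontally or vertically adjacent windows have centers exactly 10 pixels apart, so this sketch compares distances with `<=` and groups windows transitively (union-find); both choices are assumptions rather than details confirmed by the text.

```python
import numpy as np


def scan_windows(image_shape, classify, size=10, step=10):
    """Slide a window left-to-right, top-to-bottom; keep windows classified as fruit."""
    h, w = image_shape[:2]
    boxes = []
    for y in range(0, h - size + 1, step):
        for x in range(0, w - size + 1, step):
            if classify(x, y, size):
                boxes.append((x, y, x + size, y + size))   # (x_min, y_min, x_max, y_max)
    return boxes


def group_windows(boxes, max_dist=10.0):
    """Group windows whose centers are within max_dist; one bounding rectangle per group."""
    centers = np.array([((x0 + x1) / 2, (y0 + y1) / 2) for x0, y0, x1, y1 in boxes])
    parent = list(range(len(boxes)))                      # union-find forest

    def find(i):
        while parent[i] != i:
            parent[i] = parent[parent[i]]                 # path halving
            i = parent[i]
        return i

    for i in range(len(boxes)):
        for j in range(i + 1, len(boxes)):
            if np.hypot(*(centers[i] - centers[j])) <= max_dist:
                parent[find(i)] = find(j)
    rects = {}
    for i, (x0, y0, x1, y1) in enumerate(boxes):
        r = rects.setdefault(find(i), [x0, y0, x1, y1])
        r[:] = [min(r[0], x0), min(r[1], y0), max(r[2], x1), max(r[3], y1)]
    return [tuple(r) for r in rects.values()]
```

The number of returned rectangles plays the role of i″, and its sum with i′ gives the final fruit count.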

Results

Example images, including Fig. 1, were randomly chosen from the 76 validation images to demonstrate how the proposed method worked. The intermediate results are shown in the following sub-sections.

Illumination enhancement

To illustrate algorithm performance under different illumination conditions, citrus canopy images were captured under both front-lighting and back-lighting conditions, as shown in Fig. 5a and b. The image enhancement method used in this study enhanced the illumination of the whole image while preserving fruit edges. The resulting images of the fast bilateral filtering-based Retinex are shown in Fig. 5c and d: darker areas in the original image became brighter, and fruit edges remained clear after illumination enhancement.

Result from illumination normalization

Figure 6a and b show the wavelet-based illumination-normalized images of Fig. 5c and d. There were no apparent brightness differences between most shadowed and lit areas, apart from two regions with much stronger illumination than the rest: the upper left corner of Fig. 6a and a group of bright bands in the middle of Fig. 6b. The histograms of Fig. 6a and b, shown in Fig. 6e and f, have a more even intensity distribution than Fig. 6c and d, which are the histograms of Fig. 5c and d. These results indicate that the proposed illumination normalization algorithm was effective.

Potential fruit regions detection results from K-means clustering and CHT

Figure 7 shows the extraction of potential fruit regions by K-means clustering and CHT after illumination normalization. Figure 7a is the green color component of Fig. 6b extracted by K-means clustering, and Fig. 7b is the remaining part, belonging to the red and blue color components. The green color components, including citrus fruit and leaves, were almost completely segmented from the illumination-normalized image, and most of the fruit in Fig. 6b can be seen in Fig. 7a. However, some small holes remained in the fruit surfaces and leaves. After these holes were filled, the fruit shapes became clear, so most fruit regions could be detected by CHT, as shown in Fig. 7c. The K-means clustering and CHT results were then overlaid on the illumination-normalized image. Although most fruit regions were identified, false positives and false negatives also occurred, as shown in Fig. 7d.
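The text does not say how the holes were filled; Matlab's `imfill` with the 'holes' option is a common choice. An equivalent minimal flood-fill sketch in Python is shown below: background pixels reachable from the image border (4-connectivity, an assumption) stay background, and every other background pixel is treated as a hole and filled. `fill_holes` is a hypothetical name.

```python
from collections import deque

import numpy as np


def fill_holes(mask):
    """Fill background regions of a binary mask that do not touch the image border."""
    mask = np.asarray(mask, dtype=bool)
    h, w = mask.shape
    outside = np.zeros_like(mask)
    # seed the flood fill with every background pixel on the border
    queue = deque((y, x) for y in range(h) for x in range(w)
                  if (y in (0, h - 1) or x in (0, w - 1)) and not mask[y, x])
    for y, x in queue:
        outside[y, x] = True
    while queue:
        y, x = queue.popleft()
        for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
            if 0 <= ny < h and 0 <= nx < w and not mask[ny, nx] and not outside[ny, nx]:
                outside[ny, nx] = True
                queue.append((ny, nx))
    return ~outside          # foreground plus enclosed holes
```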

LBP feature-based fruit detection result and removal of false detections

Figure 8a and b show the detection results of Method 1 and Method 2, respectively. The figures show that fruit detection using the LBP feature-based AdaBoost classifier was effective. However, obvious false negatives appeared in the result of Method 1, and although Method 2 had fewer false negatives, more false positives occurred in its detection result. After potential fruit region detection, the proposed algorithm divided Fig. 7d into image_CHT and image_WCHT, shown in Fig. 8c and d, respectively. The false positive removal result is shown in Fig. 8e, where red rectangles represent the final fruit detections in image_CHT. In image_WCHT, false negatives, including three partially occluded fruit, were recovered (Fig. 8f). The final detection result of immature green citrus fruit by the proposed algorithm is shown in Fig. 8g.

Fig. 8 Results of LBP feature-based detection and false detection removal: a detection result based on LBP features using the original citrus canopy image; b detection result based on LBP features using the illumination-normalized image of the citrus canopy; c image_CHT; d image_WCHT; e false positive removal result in image_CHT; f false negative removal result in image_WCHT; g detection result based on the proposed method

Performance of the algorithm

Successful detections and misclassifications

The algorithm detected fruit successfully under varying illumination. Figure 9a shows eleven citrus fruit with different surface illumination, including five fruit partially occluded by leaves. All of them were detected under front-lighting conditions. Figure 9b shows successful detections of smaller fruit partially occluded by leaves or by other fruit. Figures 9c and d show images taken from a closer distance under different illumination conditions, in which the fruit appear larger than in the previous images. All fruit in these images were detected, including some fruit partially occluded by branches crossing their middle (Fig. 9d). However, three partially occluded fruit were missed in Fig. 10a because their visible parts were much smaller than half of a fruit. Similar errors occurred in Fig. 10b. There, two small, blurred fruit outside the main view were missed, and two small background regions were mistaken for fruit. Illumination conditions and the limited visibility of the fruit may have caused these errors. Such unfavorable factors are inevitable in some citrus canopy images acquired under varying illumination. Overall, these results indicate that the algorithm has potential for detecting immature green citrus fruit under varying illumination and random partial occlusion.

Fig. 9 Successful detection results under different conditions: a detection result at a large capturing distance under front-lighting conditions; b detection result at a large capturing distance under back-lighting conditions; c detection result at a closer capturing distance under front-lighting conditions; d detection result at a closer capturing distance under back-lighting conditions

Three detection methods were applied to the validation dataset of 76 citrus canopy images, and Table 2 summarizes the results. The validation images contained a total of 458 citrus fruit. The proposed method correctly identified 392 fruit, considerably more than the 329 and 351 fruit identified by Method 1 and Method 2, respectively. Its detection accuracy was 85.6%, compared with 71.8% for Method 1 and 76.6% for Method 2. Table 2 also shows that Method 1 produced 113 false positives, fewer than the 121 produced by Method 2. However, Method 1 missed 129 fruit, compared with 107 for Method 2. These results imply that the LBP feature-based AdaBoost classifier can detect immature green citrus fruit under natural conditions. They also show that it performs better when based on LBP features of illumination-normalized images.
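The percentages above can be reproduced from the counts. Each accuracy equals the number of correctly detected fruit divided by the 458 fruit present, and the missed count is the difference between the two. This ratio does not penalize false positives, which are reported separately. The sketch below checks the arithmetic. The class and field names are illustrative, and the 54 false positives of the proposed method are taken from the discussion of Table 3.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DetectionSummary:
    total_fruit: int      # fruit actually present in the validation images
    correct: int          # fruit correctly detected
    false_positives: int  # non-fruit regions reported as fruit

    @property
    def missed(self) -> int:
        # Every fruit that was not correctly detected counts as missed
        return self.total_fruit - self.correct

    @property
    def accuracy(self) -> float:
        # Correct detections over fruit present, in percent (ignores false positives)
        return 100.0 * self.correct / self.total_fruit


results = {
    "Method 1": DetectionSummary(458, 329, 113),
    "Method 2": DetectionSummary(458, 351, 121),
    "Proposed": DetectionSummary(458, 392, 54),
}

for name, s in results.items():
    print(f"{name}: accuracy {s.accuracy:.1f}%, missed {s.missed}, FP {s.false_positives}")
```

This prints accuracies of 71.8%, 76.6% and 85.6%, with 129, 107 and 66 missed fruit, matching the text.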

Table 3 breaks down the 54 false positives and 66 missed fruit of the proposed method. A false positive is a non-fruit object incorrectly identified as fruit. For false positives in this study, partial occlusion means that the identified region includes more than two non-fruit objects. False positives occurred more often under unoccluded conditions (11 and 23) than under partial occlusion (7 and 13). Conversely, missed fruit were more common under partial occlusion (30 and 15) than under unoccluded conditions (15 and 6).
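The occlusion breakdown is consistent with the totals: the false positive counts sum to 54 and the missed fruit counts sum to 66. The snippet below tallies them and computes the share of each error type under its dominant occlusion state. The dictionary layout is illustrative. Each pair holds the two values quoted in the text for one occlusion state, and their column labels in Table 3 are not given here.

```python
# Counts from Table 3 as quoted in the text; each pair holds the two
# values reported for one occlusion state (column labels not given here).
false_positives = {"unoccluded": (11, 23), "partially occluded": (7, 13)}
missed_fruit = {"unoccluded": (15, 6), "partially occluded": (30, 15)}


def total(breakdown):
    """Sum every cell of an occlusion -> (value, value) breakdown."""
    return sum(sum(pair) for pair in breakdown.values())


def share(breakdown, occlusion):
    """Fraction of all errors in the breakdown that fall under one occlusion state."""
    return sum(breakdown[occlusion]) / total(breakdown)


print(total(false_positives), total(missed_fruit))          # 54 66
print(round(share(false_positives, "unoccluded"), 2))       # 0.63
print(round(share(missed_fruit, "partially occluded"), 2))  # 0.68
```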

Real-time performance of the proposed algorithm

Most of the processing time of the proposed algorithm was spent on false positive removal. The algorithm was run on a 2.40 GHz CPU. The fast bilateral filtering-based Retinex algorithm is faster than the original Retinex algorithm, yet its average processing time was still 6765 ms over the 76 citrus images. The wavelet-based illumination normalization took 1186 ms on average. CHT is generally computationally expensive. The potential fruit region detection used the packaged CHT functions in Matlab to search for circles in the green color component extracted by K-means clustering, and this step took 965 ms on average. In contrast, false detection removal was time-consuming. This step had to decide whether each CHT detection in image_CHT was a fruit region. It also had to slide a sub-window across image_WCHT to find regions whose LBP features matched fruit. The average processing time from image preprocessing to final fruit counting was 16 928 ms.
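Only three stages are timed individually. A simple time budget therefore shows how much of the 16 928 ms remains for the other steps. The remainder, about 8.0 s per image, covers K-means clustering, hole filling and false detection removal together. How it splits among those steps is not reported, so the label "all other steps" below is deliberately coarse.

```python
# Average per-image times (ms) reported in the text
stage_ms = {
    "fast bilateral filtering-based Retinex": 6765,
    "wavelet-based illumination normalization": 1186,
    "CHT": 965,
}
total_ms = 16928  # preprocessing through final fruit counting

reported_ms = sum(stage_ms.values())
# Everything not timed separately: K-means, hole filling, false detection removal, ...
unreported_ms = total_ms - reported_ms

for stage, ms in stage_ms.items():
    print(f"{stage:42s} {ms:6d} ms  {100 * ms / total_ms:5.1f}%")
print(f"{'all other steps':42s} {unreported_ms:6d} ms  {100 * unreported_ms / total_ms:5.1f}%")
```

The timed stages account for 8916 ms, leaving 8012 ms (about 47% of the total) for the remaining steps.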

Discussions

This study used only regular RGB images of the citrus canopy to detect immature green citrus fruit in a natural environment. This posed three major challenges: varying illumination, random occlusion and color similarity to leaves. RGB images are more susceptible to varying illumination than hyperspectral or thermal images, so the appearance of the fruit surface can change from image to image. Random occlusion by fruit or other objects can also prevent correct detection. Because fruit and leaves share a similar color, detection based on color alone is difficult. This study therefore used LBP features to describe the texture of fruit and non-fruit objects and trained an AdaBoost classifier on these features. False positives were then removed by combining potential fruit region detection with the classifier, and immature green citrus fruit were finally detected.
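The basic 3 × 3 LBP operator of Ojala et al. (1996) illustrates the texture descriptor. Each neighbor is compared with the center pixel, and the eight comparison bits form a code from 0 to 255. The histogram of these codes serves as the feature vector. This section does not state the neighborhood, radius, uniform-pattern mapping or block layout used in the study. This basic variant and the names `lbp_codes` and `lbp_histogram` are therefore assumptions. The codes are unchanged by any monotonic gray-level change applied to the whole patch. Shadows or highlights that reorder neighboring pixels do change them, however.

```python
import numpy as np

# Offsets of the 8 neighbors, clockwise from top-left; bit i is set
# when neighbor i is >= the center pixel (basic 3x3 LBP).
OFFSETS = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]


def lbp_codes(gray):
    """LBP code for every interior pixel of a 2-D gray-level array."""
    g = np.asarray(gray, dtype=np.int32)
    h, w = g.shape
    center = g[1:-1, 1:-1]
    codes = np.zeros(center.shape, dtype=np.int32)
    for bit, (dr, dc) in enumerate(OFFSETS):
        # Shifted view aligned so that each element is the neighbor of `center`
        neighbor = g[1 + dr:h - 1 + dr, 1 + dc:w - 1 + dc]
        codes |= (neighbor >= center).astype(np.int32) << bit
    return codes


def lbp_histogram(gray):
    """Normalized 256-bin histogram of LBP codes, usable as a feature vector."""
    codes = lbp_codes(gray)
    hist = np.bincount(codes.ravel(), minlength=256).astype(float)
    return hist / hist.sum()
```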

The LBP operator is invariant to monotonic gray-level changes. Even so, a classifier based only on LBP features of the original citrus color images could not detect the fruit with reasonable accuracy under natural conditions (Method 1). Fruit detection in a natural environment may be more complicated than face recognition, where LBP features are widely used. Nevertheless, LBP proved to be a useful tool when combined with other methods. Normalizing the varying illumination of the original color images before detection improved the overall accuracy, even when only LBP features of the illumination-normalized images were used (Method 2). Color-based K-means clustering and shape-based CHT helped detect potential fruit regions because the fruit are usually green and circular. After potential fruit region detection, the classifier based on LBP features of the illumination-normalized images determined whether the CHT detections were fruit and searched for missed fruit. This method not only combined color, shape and texture features but also reduced the interference of varying illumination. The classifier based on LBP features of illumination-normalized images also played an important role. Finally, combining the two partial results of false detection removal gave a satisfactory overall accuracy of 85.6%.
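The classifier can be sketched as discrete AdaBoost (Freund and Schapire 1997) with decision stumps as weak learners. This section does not specify the weak learner, the number of boosting rounds or the LBP feature layout. The stumps, `rounds=20` and the function names are therefore assumptions. Each row of `X` would be the LBP feature vector of a candidate patch, labeled +1 for fruit and -1 for non-fruit.

```python
import numpy as np


def stump_predict(X, feature, threshold, polarity):
    # polarity +1: predict fruit (+1) when x >= threshold; -1: when x <= threshold
    return np.where(polarity * (X[:, feature] - threshold) >= 0, 1, -1)


def fit_stump(X, y, w):
    """Exhaustively pick the decision stump with the lowest weighted error."""
    best = (np.inf, 0, 0.0, 1)  # (weighted error, feature, threshold, polarity)
    for j in range(X.shape[1]):
        for t in np.unique(X[:, j]):
            for polarity in (1, -1):
                err = w[stump_predict(X, j, t, polarity) != y].sum()
                if err < best[0]:
                    best = (err, j, t, polarity)
    return best


def adaboost_fit(X, y, rounds=20):
    """Discrete AdaBoost; y must contain +1 (fruit) and -1 (non-fruit)."""
    w = np.full(len(y), 1.0 / len(y))
    model = []
    for _ in range(rounds):
        err, j, t, p = fit_stump(X, y, w)
        if err >= 0.5:  # weak learner no better than chance: stop
            break
        err = max(err, 1e-10)  # guard against log(1/0) for a perfect stump
        alpha = 0.5 * np.log((1 - err) / err)
        model.append((alpha, j, t, p))
        # Up-weight misclassified samples, down-weight correctly classified ones
        w *= np.exp(-alpha * y * stump_predict(X, j, t, p))
        w /= w.sum()
        if err <= 1e-10:  # training set already separated perfectly
            break
    return model


def adaboost_predict(model, X):
    """Sign of the alpha-weighted vote of all stumps."""
    score = np.zeros(len(X))
    for alpha, j, t, p in model:
        score += alpha * stump_predict(X, j, t, p)
    return np.where(score >= 0, 1, -1)
```

Stumps keep each boosting round cheap and interpretable. The exhaustive threshold search, however, is one reason classifier-based false detection removal can dominate run time when many candidate windows are scored.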

Future research will address two improvements. The first is an adaptive algorithm for fruit detection under variable illumination. The second is faster image processing through a shorter false positive removal step.

Conclusions

Detection and counting of immature green citrus fruit based on the LBP feature using illumination-normalized images was proposed in this research. The major conclusions can be summarized as follows:

(1) The fast bilateral filtering-based Retinex algorithm enhanced the illumination of the citrus canopy images.

(2) The wavelet transform-based illumination normalization algorithm helped normalize the illumination of the citrus canopy images.

(3) Combining K-means clustering with CHT improved the detection of potential fruit regions after illumination normalization.

(4) An AdaBoost classifier trained on LBP features of fruit and non-fruit was able to identify immature green citrus fruit. The results were better when the classifier was based on LBP features of fruit and non-fruit in illumination-normalized images.

(5) The proposed method used the same AdaBoost classifier (based on LBP features of fruit and non-fruit in illumination-normalized images) to remove false detections after potential fruit region detection. This yielded better detection results under varying illumination and partial occlusion.

(6) A fruit detection accuracy of 85.6% was achieved in this study, indicating that the proposed algorithm has the potential to support an early citrus yield mapping system.

Change history

17 May 2018

The original version of this article unfortunately contained a mistake: the affiliation “China West Normal University” should be removed from the affiliations of Dr. Chenglin Wang. The correct affiliation details are given below.

Abbreviations

- LBP: Local Binary Patterns
- RGB: Red, green and blue
- CHT: Circular Hough transform
- FFT: Fast Fourier transform
- ARB: Adaptive red and blue chromatic map
- FNCC: Fast normalized cross correlation
- Retinex: A compound word from ‘retina’ and ‘cortex’
- SVM: Support vector machines
- LS-SVM: Least squares support vector machine
- AdaBoost: Adaptive boosting

References

Bansal, R., Lee, W. S., & Satish, S. (2013). Green citrus detection using fast Fourier transform (FFT) leakage. Precision Agriculture, 14(1), 59–70.

Cheng, Y., Hou, Y., Zhao, C., Li, Z., Hu, Y., & Wang, C. (2010). Robust face recognition based on illumination invariant in nonsubsampled contourlet transform domain. Neurocomputing, 73, 2217–2224.

Du, S., & Ward, R. (2005). Wavelet-based illumination normalization for face recognition. In 2005 International Conference on Image Processing (Vol. 3, pp. 954–957). IEEE Computer Society, Genova, Italy.

Duan, J., Zhou, C. G., Liu, X. H., Zhang, L. B., & Liu, M. (2004). The methods of improving variable illumination for face recognition. In Proceedings of 2004 International Conference on Machine Learning and Cybernetics (pp. 3918–3923). IEEE Computer Society, Shanghai City, China.

Duda, R. O., & Hart, P. E. (1972). Use of the Hough transformation to detect lines and curves in pictures. Communications of the ACM, 15(1), 11–15.

Durand, F., & Dorsey, J. (2002). Fast bilateral filtering for the display of high dynamic range images. SIGGRAPH, 2002, 257–266.

Emadi, M., Khalid, M., Yusof, R., & Navabifar, F. (2012). Illumination Normalization using 2D Wavelet. Procedia Engineering, 41, 854–859.

Freund, Y., & Schapire, R. E. (1995). A decision-theoretic generalization of on-line learning and an application to boosting. In P. Vitányi (Ed.), Computational Learning Theory. EuroCOLT 1995. Lecture Notes in Computer Science (Lecture Notes in Artificial Intelligence) (Vol. 904). Berlin, Heidelberg, Germany: Springer.

Freund, Y., & Schapire, R. E. (1997). A decision-theoretic generalization of on-line learning and an application to boosting. Journal of Computer and System Sciences, 55(1), 119–139.

Kane, K. E., & Lee, W. S. (2007). Multispectral imaging for in-field green citrus identification. ASABE Paper No. 073025. St. Joseph, MI, USA: ASABE.

Khammari, A., Nashashibi, F., Abramson, Y., & Laurgeau, C. (2005). Vehicle detection combining gradient analysis and AdaBoost classification. In Proceedings of 2005 Intelligent Transportation Systems (pp. 66–71). IEEE Computer Society, Vienna, Austria.

Kurtulmus, F., Lee, W. S., & Vardar, A. (2011). Green citrus detection using “eigenfruit”, color and circular Gabor texture features under natural outdoor conditions. Computers and Electronics in Agriculture, 78(2), 140–149.

Kurtulmus, F., Lee, W. S., & Vardar, A. (2014). Immature peach detection in color images acquired in natural illumination conditions using statistical classifiers and neural network. Precision Agriculture, 15, 57–79.

Land, E. H. (1977). The retinex theory of color vision. Scientific American, 237(6), 108–129.

Lei, J., Han, H., & Tong, M. (2012). Efficient visual tracking by using LBP descriptor. International Conference on Artificial Intelligence and Computer Engineering, 7530, 391–399.

Li, H., Lee, W. S., & Wang, K. (2016). Immature green citrus fruit detection and counting based on fast normalized cross correlation (FNCC) using natural outdoor color images. Precision Agriculture, 17, 678–697.

Linker, R., Cohen, O., & Naor, A. (2012). Determination of the number of green apples in RGB images recorded in orchards. Computers and Electronics in Agriculture, 81(2), 45–57.

Luo, L. F., Tang, Y. C., Zou, X. J., Wang, C. L., Zhang, P., & Feng, W. X. (2016). Robust grape cluster detection in a vineyard by combining the AdaBoost framework and multiple color components. Sensors, 16(12), 1–20.

Mazo, C., Alegre, E., & Trujillo, M. (2017). Classification of cardiovascular tissues using LBP based descriptors and a cascade SVM. Computer Methods and Programs in Biomedicine, 147, 1–10.

Ojala, T., Pietikäinen, M., & Harwood, D. (1996). A comparative study of texture measures with classification based on feature distributions. Pattern Recognition, 29, 51–59.

Okamoto, H., & Lee, W. S. (2009). Green citrus detection using hyperspectral imaging. Computers and Electronics in Agriculture, 66, 201–208.

Savvides, M., & Kumar, V. (2003). Illumination normalization using logarithm transforms for face authentication. In Proceedings of the 4th International Conference on Audio- and Video-Based Biometric Person Authentication (pp. 549–556). Guildford, UK: Springer.

Sengupta, S., & Lee, W. S. (2014). Identification and determination of the number of immature green citrus fruit in a canopy under different ambient light conditions. Biosystems Engineering, 117, 51–61.

Stajnko, D., Lakota, M., & Hočevar, M. (2004). Estimation of number and diameter of apple fruit in an orchard during the growing season by thermal imaging. Computers and Electronics in Agriculture, 42, 31–42.

Sun, J. G., Li, Y., Yang, X. N., & Wang, J. T. (2011). Face recognition based on improved LBP and LS-SVM. Advanced Materials Research, 403–408, 3249–3252.

United States Department of Agriculture-National Agricultural Statistics Service (USDA-NASS). (2015). Forecasting Florida citrus production (brochure). Retrieved June 28, 2017 from http://usda.mannlib.cornell.edu/usda/nass/CitrFrui//2010s/2015/CitrFrui-09-17-2015.pdf.

Vishwakarma, V. P., Pandey, S., & Gupta, M. N. (2009). Adaptive histogram equalization and logarithm transform with rescaled low-frequency DCT coefficients for illumination normalization. International Journal of Recent Trends in Engineering, Issue on Computer Science, 1, 318–322.

Wachs, J. P., Stern, H. I., Burks, T., & Alchanatis, V. (2010). Low and high-level visual feature-based apple detection from multi-modal images. Precision Agriculture, 11, 717–735.

Wang, Y. B., Ai, H. Z., Wu, B., & Huang, C. (2004). Real time facial expression recognition with AdaBoost. In Proceedings of the 17th International Conference on Pattern Recognition (pp. 926–929). IEEE Computer Society, Cambridge, UK.

Yang, Y. N., Jiang, Z. H., Yang, C. H., Xia, Z. Q., & Liu, F. (2015). Improved retinex image enhancement algorithm based on bilateral filtering. In Proceedings of the 4th International Conference on Mechatronics, Materials, Chemistry and Computer Engineering (Vol. 39, pp. 2250–2256). Xi’an, China: Atlantis Press.

Zhao, C. Y., Lee, W. S., & He, D. (2016). Immature green citrus detection based on color feature and sum of absolute transformed difference (SATD) using color images in the citrus grove. Computers and Electronics in Agriculture, 124, 243–253.

Acknowledgements

The authors would like to thank the National Natural Science Foundation of China, the Science and Technology Project of Guangdong Province and the Science and Technology Project of Huizhou (Nos. 31571568, 2015A020209111, 2014B040008006) for funding, and the University of Florida for its support.

Cite this article

Wang, C., Lee, W.S., Zou, X. et al. Detection and counting of immature green citrus fruit based on the Local Binary Patterns (LBP) feature using illumination-normalized images. Precision Agric 19, 1062–1083 (2018). https://doi.org/10.1007/s11119-018-9574-5

DOI: https://doi.org/10.1007/s11119-018-9574-5