Abstract

Site-specific weed management can allow more efficient weed control from both an environmental and an economic perspective. Spectral differences between plant species may lead to the ability to separate wheat from weeds. The study used ground-level image spectroscopy data, with high spectral and spatial resolutions, for detecting annual grasses and broadleaf weeds in wheat fields. The image pixels were used to cross-validate partial least squares discriminant analysis classification models. The best model was chosen by comparing the cross-validation confusion matrices in terms of their variances and Cohen’s Kappa values. This best model used four classes: broadleaf, grass weeds, soil and wheat and resulted in Kappa of 0.79 and total accuracy of 85 %. Each of the classes contains both sunlit and shaded data. The variable importance in projection method was applied in order to locate the most important spectral regions for each of the classes. It was found that the red-edge is the most important region for the vegetation classes. Ground truth pixels were randomly selected and their confusion matrix resulted in a Kappa of 0.63 and total accuracy of 72 %. The results obtained were reasonable although the model used wheat and weeds from different growth stages, acquisition dates and fields. It was concluded that high spectral and spatial resolutions can provide separation between wheat and weeds based on their spectral data. The results show feasibility for up-scaling the spectral methods to air or spaceborne sensors as well as developing ground-level application.

Introduction

Site-specific weed control and management could economically benefit farmers and consumers without diminishing weed control efficiency (Pinter et al. 2003; Slaughter et al. 2008). Another reason to reduce the amount of applied herbicides is weed resistance to herbicides (Marshall and Moss 2008). Site-specific weed management has reduced herbicide use by 11–90 % without affecting crop yield (Feyaerts and van Gool 2001; Gerhards and Christensen 2003). Weed distribution in fields is non-uniform and confined to patches of varying size in field as well as along field borders (Gerhards et al. 1997; Weis et al. 2008) and, since there is significant variation in weeds also between different fields, the need for site-specific weed monitoring and management is emphasized (Moran et al. 2004).

Non-selective weed detection and control can be implemented by detection of green vegetation (Biller 1998). This approach can be applied to entire fields before crop emergence or between the crop rows after emergence (Moran et al. 1997; Alchanatis et al. 2005). Selective sensing methods are designed to detect the shape of weed leaves against the soil background and, thus, can be applied only in early growing stages when leaves are not overlapping (Weis et al. 2008). Selective ground-based sensing methods can also rely upon the spectral characteristics of the weed as well as of the crop. The reflectance data of canopy will probably include shaded leaves and might include stems, flowers, fruits and a background that is very likely to be soil that might be partly shaded and of different humidity levels.

Plant spectra are mainly affected by leaf pigmentation in the visible region (400–700 nm) (Yoder and Pettigrew-Crosby 1995), while the near infrared (NIR) region (700–1 100 nm) is highly influenced by the leaf or canopy structure, which can be affected by phenology as well as species (Gausman 1985). A dicotyledonous leaf has more air spaces among its spongy mesophyll tissue than a monocotyledonous leaf (Raven et al. 2005) of the same thickness and age, resulting in a higher reflectance in the NIR region (Gausman 1985). The red-edge region is the slope connecting the low red and high NIR reflectance values in the spectrum of vegetation and is an important indicator for spectral separation of different plant species (Herrmann et al. 2011; Shapira et al. 2013).

The first step required in order to spectrally distinguish between crops and weeds is to obtain continuous spectra of pure plant for each species or group of species. This can be implemented by using high spatial and spectral resolutions, as shown by Vrindts et al. (2002), whose conclusions demonstrated the need to employ relative reflectance values in order to classify crops and weeds and to minimize the effect of different lighting conditions on the spectral data. Lopez-Granados et al. (2008) classified the ground-level spectral reflectance of wheat, four grass weeds and soil, and concluded that one sampling date per growth season, when phenological distinction is maximal, can provide high quality classification. It is important to mention that relying on phenology for spectral separation will be less efficient in cases when the optimal time for herbicide application precedes the date of maximal phenological variability among crop and weeds. Slaughter et al. (2008) noted, in their review, that the greatest portion of the studies were conducted in ideal conditions, with no overlapping of crop and weeds, and resulted in classification accuracies of 65–95 %. Zwiggelaar (1998) mentions, in his review, that using selected wavelengths for discriminating between crops and weeds in a row environment has not been demonstrated so far, and analyzing images with a limited number of wavelengths might not be sufficient. Okamoto et al. (2007) worked in the visible and NIR regions in order to separate sugar beet from four weeds: two broadleaf weeds and two grass weeds. The validation results showed 75–97 % success for the five classes for sampled pure vegetation pixels. Predictions for an entire image and ground truth analyses were not mentioned. In other studies that applied hyperspectral cameras, the soil background was excluded and the classifications of crop and weeds were applied to young plants with one or two layers of leaves (Borregaard et al. 2000; Feyaerts and van Gool 2001; Nieuwenhuizen et al. 2010).

The current research used ground-level image spectroscopy data, with high spectral and spatial resolutions, for detecting annual grasses and broadleaf weeds in wheat fields. Specific objectives were threefold: (1) to choose the best class determinations, for this dataset, in order to separate broadleaf weed (BLW), grass weed (GW) and wheat; (2) to find the most important spectral bands needed for this separation; and (3) to examine the potential of using high spectral and spatial resolution ground-level reflectance from the wheat fields to predict categories of wheat and weeds.

Materials and methods

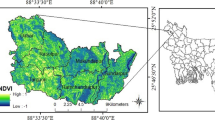

Study area

Field measurements were performed in rainfed as well as irrigated wheat experimental plots in winter 2009 at the Gilat Research Center in the northwest Negev, Israel (31°20′ N, 34°40′ E). This region is defined as semi-arid with a short rainy season (November–April; Har Gil et al. 2011). Soils are Calcic Xerosols with sandy loam texture formed from alluvium and loess on shallow hills with average elevation of 80–150 m above sea level (Kafkafi and Bonfil 2008).

Field work and pre-processing

Ground-level images were obtained by the Spectral Camera HS (V10E, Specim, Oulu, Finland), a pushbroom sensor, with 1 600 pixels per line and 849 narrow spectral bands (~ 0.67 nm wide) in the visible and NIR regions. The images were obtained from 2 h before until 2 h after midday in order to minimize changes in the solar zenith angle and shadow effects. Since herbicides are usually applied before closure of the crop canopy (Thorp and Tian 2004), images were acquired 10–54 days after the emergence of the wheat. The growth stages of the wheat were Zadoks 12–47, i.e., from seedling growth to flag leaf sheath opening (Zadoks et al. 1974), and the canopy height was up to 0.35 m. The camera was mounted on a tripod, 1.35 m above the top of the canopy, pointing down to cover an area of 0.5 by 0.5 m delimited by a metal frame at the canopy level (Fig. 1). At this height, the spatial resolution was approximately 0.5 mm. The square frame was divided into 16 equally sized squares to visually estimate the relative coverage of different features, such as wheat, weeds and soil. The assessment was carried out for each of the squares and accumulated, with 6.25 % weight per square, to include the entire area surrounded by the frame. All assessments were performed by the same person. The relative coverage of the BLW category included all broadleaf plants in the frame area, mainly Chenopodium, Malva, potato, knapweed and chrysanthemum. The relative coverage of the GW category included all grasses that are not wheat, mainly Lolium rigidum and Hordeum glaucum.
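The accumulation of the per-square field estimates can be illustrated with a minimal sketch. It assumes that each of the 16 squares is recorded as a row of class fractions; the function name `frame_coverage` and the array layout are hypothetical and are not taken from the study.

```python
import numpy as np

def frame_coverage(square_estimates):
    """Combine per-square visual estimates into whole-frame relative coverage.

    square_estimates: array-like of shape (16, n_classes); each row holds the
    estimated fraction of every class in one square (hypothetical layout).
    """
    est = np.asarray(square_estimates, dtype=float)
    if est.shape[0] != 16:
        raise ValueError("expected one row per square (16 squares)")
    weight = 1.0 / 16  # 6.25 % weight per square, as stated in the text
    return est.sum(axis=0) * weight
```

With equal weights this is simply the mean over the 16 squares, so the frame-level fractions sum to one whenever each row does.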

A Coolpix S10 (Nikon) digital camera, mounted at the same height as the hyperspectral camera, was used to acquire true-color images (RGB). These photos were used as references for the cross-validation classification, as well as for obtaining ground truth. It should be noted that, since the integration time of each scene, resulting from the push broom instrument, was 28–35 s (depending on the frame rate and number of lines acquired), and since all images were acquired in an open field, gusts of wind could influence the relative location of leaves. Consequently, a slight difference might exist between the hyperspectral image and the RGB photo.

The image preprocessing included the subtraction of the sensor electronic noise (dark current) and radiometric correction by the AISATools software (Specim, Oulu, Finland). Then, the images were converted to relative reflectance values in the ENVI 4.3 (EXELIS, Boulder, Colorado, USA) software environment. This process was based on the flat field calibration method by white referencing to a barium sulfate (BaSO4) panel positioned on the frame underneath the camera (Hatchell 1999), as presented in Fig. 1. The barium sulfate panel was prepared by pressing BaSO4 powder into a 20 mm deep round box with a diameter of 52 mm. The powder was smoothed with glass to create an even surface. The panel was smoothed at the beginning of every working day and checked after every image. The flat field calibration method was performed for each image by its own white reference; this also provided atmospheric correction. The images were spectrally resampled to obtain 91 bands by averaging the original spectra every 5 nm in the range of 400–850 nm. The images were rectangularly clipped to include only the area within the frame boundaries. In cases where the frame was not parallel to the image borders, the images were clipped to include the maximal area, while the frame itself was not included. Twenty-one images were acquired this way for further processing and statistical analysis.
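The flat field conversion and the spectral resampling described above can be sketched as follows. This is an illustration only, not the ENVI procedure: the function names are hypothetical, the white reference is assumed to be a mean panel spectrum, and the 5 nm averaging is assumed to use windows centered on 400, 405, ..., 850 nm.

```python
import numpy as np

def flat_field(radiance, white_spectrum):
    """Relative reflectance: divide each pixel spectrum by the white-panel spectrum.

    radiance: (rows, cols, bands); white_spectrum: (bands,), e.g. the mean
    spectrum over the BaSO4 panel pixels of the same image (assumption).
    """
    return radiance / white_spectrum

def resample_to_5nm(spectra, wavelengths, start=400.0, stop=850.0, step=5.0):
    """Average the narrow bands falling within step/2 of each 5 nm center."""
    centers = np.arange(start, stop + step, step)  # 400, 405, ..., 850 -> 91 bands
    out = np.empty(spectra.shape[:-1] + (centers.size,))
    for i, c in enumerate(centers):
        in_window = np.abs(wavelengths - c) <= step / 2
        out[..., i] = spectra[..., in_window].mean(axis=-1)
    return centers, out
```

Because every image is divided by its own panel spectrum, illumination differences between acquisitions cancel to first order, which is why relative rather than absolute values are used.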

PLS-DA analysis

The partial least squares discriminant analysis (PLS-DA) applies a partial least squares model to the discriminant function analysis problem in order to allow maximal separation among classes (Musumarra et al. 2004). Since the partial least squares method was not initially designed for classification, it has rarely been used for this purpose. Nevertheless, the PLS-DA method can produce plausible separation (Barker and Rayens 2003). In order to relate the PLS (numerical) to the DA (categorical) in a two-class case, each sample was assigned an arbitrary number that indicated to which class it belonged (Xie et al. 2007). These two arbitrary numbers are the only values acceptable for one artificial variable. When more than two classes are to be separated, more binary artificial variables are needed (one per class) to indicate to which class each sample belongs (Musumarra et al. 2004).
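The binary artificial variables for the multi-class case can be illustrated with a short sketch. The function name `dummy_matrix` and the class ordering are assumptions made for the example; the coding values 0 and 1 are one common choice of the arbitrary numbers mentioned above.

```python
import numpy as np

def dummy_matrix(labels, classes):
    """Build one binary column per class: 1 if the sample belongs to it, else 0."""
    labels = np.asarray(labels)
    classes = np.asarray(classes)
    return (labels[:, None] == classes[None, :]).astype(float)

# Example with the four categories used in this study
classes = ["BLW", "GW", "soil", "wheat"]
Y = dummy_matrix(["BLW", "soil", "wheat", "GW"], classes)
```

Each row of `Y` contains a single 1, so the PLS regression is fitted against four response columns and the predicted values can be compared across classes.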

A total of 1857 spectra from pure pixels (i.e., containing one class) were arbitrarily selected from the 21 raw images, as presented in Table 1. Each spectrum was obtained from one pixel as a vector of reflectance values in all 91 bands. The BLW category included spectra of three species: Chenopodium, Malva, and potato. The GW category included spectra of Lolium rigidum and Hordeum glaucum. The PLS-DA was applied for the categories and not for specific species. Approximately half of the spectra were obtained from sunlit pixels and the rest from shaded pixels. In Table 1, the data are divided into four classes: BLW, GW, soil and wheat with 799, 364, 330 and 364 spectra, respectively. Six models were examined:

- Model #1 separates three classes: BLW, G (including GW and wheat) and soil;
- Model #2 separates two classes: BLW and G (including GW and wheat);
- Model #3 separates four classes: BLW, GW, soil and wheat;
- Model #4 separates three classes: BLW, GW and wheat;
- Model #5 separates the classes from model #3 and divides them into sunlit and shaded pixels (i.e., eight classes);
- Model #6 separates the classes from model #4 and divides them into sunlit and shaded pixels (i.e., six classes).

The sample distribution to classes for each of the models is presented in Table 1.

In order to evaluate the relative importance of each band in the chosen PLS-DA model, the variable importance in projection (VIP) after Wold et al. (1993) was computed. The VIP summarizes the importance of each predictor’s projections onto the latent components of the PLS model (Chong and Jun 2005; Cohen et al. 2010). The VIP values are evaluated on a “the higher the better” basis, where VIP = 1 is considered to be the putative threshold since it is the average value of the PLS model predictors’ VIP values. Therefore, in order to separate between wheat and weeds, as well as to determine the most important wavelengths for the separation, each PLS-DA model was cross-validated (including VIP analysis) and the best model was used to perform a prediction for each of the images. This process was applied in a Matlab 7.6 (MathWorks, Natick, Massachusetts, USA) environment by the PLS-toolbox (Eigenvector, Wenatchee, Washington, USA). Building PLS-DA models included pre-processing the X-block by mean centering the data. Mean centering is applied as pre-processing for PLS models to reduce variation within the data (Navalon et al. 1999). It operates by subtracting the mean reflectance value for each wavelength from each reflectance value. Then the models were validated by cross-validation, as is customary for empirical models (Borregaard et al. 2000). The cross-validation used every 10th sample in order to set the number of latent variables to be applied for the model (Nason 1996; Wu et al. 1997). Classification quality assessment methods were applied to the resulting confusion matrices in order to obtain the most suitable model for the prediction of weeds in a wheat field. The chosen model was applied for the prediction of all images. The PLS-DA classification prediction intermediately resulted in a two-dimensional image per class, in which the value of each pixel is the probability of the pixel to belong to this class. 
A pixel was determined to belong to a certain class if the probability of this class was higher than the others. The threshold for classification was set to be 0.3, meaning pixels with probability values smaller than 0.3 were defined as unclassified. Pixels that were unclassified for all classes were determined as unclassified in the final classification result image. The PLS-DA classification prediction resulted in 21 two-dimensional images, in which each pixel is related to one of the classes or defined as unclassified.
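The pixel assignment rule can be sketched as below, assuming the per-class probability images are stacked into one array. The function name, the array layout and the code -1 for unclassified pixels are hypothetical choices for the illustration.

```python
import numpy as np

UNCLASSIFIED = -1  # hypothetical code for pixels below the threshold in every class

def classify_pixels(prob, threshold=0.3):
    """Assign each pixel to its most probable class, or mark it unclassified.

    prob: (rows, cols, n_classes) stack of per-class probability images.
    """
    best_class = prob.argmax(axis=-1)
    best_prob = prob.max(axis=-1)
    # Probabilities smaller than the threshold leave the pixel unclassified
    return np.where(best_prob >= threshold, best_class, UNCLASSIFIED)
```

Checking only the highest probability is sufficient: if it is below 0.3, all other classes are below 0.3 as well.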

Classification quality assessment

The quality of PLS-DA models was compared based on the cross-validation confusion matrices. Cohen’s Kappa was computed as defined by Cohen (1960), i.e., as the proportion of agreement after chance agreement is removed from consideration. Cohen’s Kappa is a unit-less value ranging from 1 for perfect agreement to −1 for complete disagreement. Computation of Cohen’s Kappa is based on a confusion matrix and is presented in Eq. (1):

$$\mathrm{Kappa} = \frac{d - q}{N - q} \qquad (1)$$

where d is the sum of pixels that were correctly classified, q is the sum over all classes of the product of each class’s row total and column total in the confusion matrix, divided by the total number of samples, and N is the total number of samples. The confidence limit (CL) units are percent and were calculated for overall accuracy as shown by Foody (2008) and presented in Eq. (2):

where p is the overall accuracy, $t_{N,d-1}$ is the statistical value of a 95 % two-tailed test for d samples, N is the total number of samples, and d is the sum of ground truth pixels that were correctly classified. The CL of the total accuracy can allow comparisons between models that are based on total accuracy and, therefore, show if there is a model that is significantly better or worse than others (Foody 2008). A comparison of the quality of coupled PLS-DA classification models was performed as described by Congalton and Mead (1986) and presented in Eq. (3):

$$Z = \frac{\mathrm{Kappa}_1 - \mathrm{Kappa}_2}{\sqrt{\mathrm{Var}_1 + \mathrm{Var}_2}} \qquad (3)$$

where Z is the normal curve deviation, and if it is > 1.96 or < −1.96, the difference between the confusion matrices is significant at 95 % probability (2.58 and −2.58 are the thresholds for significance at 99 %); Kappa is defined in Eq. (1); Var is the variance of the confusion matrix, as shown by Hudson and Ramm (1987); and the subscripts 1 and 2 denote the two confusion matrices being compared. The comparison was done for two confusion matrices at a time, and their Cohen’s Kappa and variance were calculated in order to determine if they are significantly different from one another.
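A minimal sketch of the Kappa and Z computations follows, assuming the conventional forms Kappa = (d − q)/(N − q) and Z = (Kappa1 − Kappa2)/sqrt(Var1 + Var2) that match the symbol definitions above. The function names are hypothetical, and the variances are taken as inputs because the Hudson and Ramm (1987) variance formula is not reproduced here.

```python
import numpy as np

def cohen_kappa(cm):
    """Cohen's Kappa from a square confusion matrix."""
    cm = np.asarray(cm, dtype=float)
    n = cm.sum()                                        # N: total number of samples
    d = np.trace(cm)                                    # correctly classified samples
    q = (cm.sum(axis=1) * cm.sum(axis=0)).sum() / n     # chance agreement term
    return (d - q) / (n - q)

def z_statistic(kappa1, kappa2, var1, var2):
    """Normal curve deviation between two independent Kappa estimates."""
    return (kappa1 - kappa2) / np.sqrt(var1 + var2)

def is_significant(z, level=1.96):
    """Two-tailed test: 1.96 for 95 %, 2.58 for 99 %."""
    return abs(z) > level
```

For a hypothetical two-class matrix `[[20, 5], [10, 15]]`, d = 35, q = 25 and N = 50, giving Kappa = 0.4.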

The quality of the prediction by the PLS-DA model was assessed by ground truth data. For each spectral image, 50 pixels, not included in the calibration data set, were randomly selected to be ground truth data. These pixels were identified in the digital photos taken in field and have been determined to belong to one of the classes. The classification results, in comparison with the ground truth, were analyzed as confusion matrices. The quality of the classification was assessed by Cohen’s Kappa coefficient, overall accuracy, user’s accuracy and producer’s accuracy for each confusion matrix. The classification quality was assessed for each image separately (i.e., 50 ground truth pixels) and for all the images together (i.e., 1 050 ground truth pixels).
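The overall, user’s and producer’s accuracies can be derived from a confusion matrix as sketched below. The orientation is an assumption made for the example (rows hold the classified labels and columns the ground truth labels), and classes with no pixels are reported as 0.

```python
import numpy as np

def accuracies(cm):
    """Return (overall, user's, producer's) accuracies from a confusion matrix.

    Assumed orientation: cm[i, j] counts pixels classified as class i whose
    ground truth is class j.
    """
    cm = np.asarray(cm, dtype=float)
    diag = np.diag(cm)
    classified_tot = cm.sum(axis=1)   # pixels assigned to each class
    truth_tot = cm.sum(axis=0)        # ground truth pixels of each class
    overall = diag.sum() / cm.sum()
    users = np.divide(diag, classified_tot,
                      out=np.zeros_like(diag), where=classified_tot > 0)
    producers = np.divide(diag, truth_tot,
                          out=np.zeros_like(diag), where=truth_tot > 0)
    return overall, users, producers
```

User’s accuracy answers how reliable a class label on the map is, while producer’s accuracy answers how much of a true class was found; the two can differ substantially for the same class.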

Relative coverage assessment

Relative coverage assessment was performed by three methods: field estimation, counting pixels in the PLS-DA classification results, and by a simple classification decision tree (DT). The field estimation was described earlier, and the classes were BLW, GW, soil and wheat. The final results of the PLS-DA classification for each image were used to count the pixels related to each of the four classes (i.e., BLW, GW, soil and wheat) and to divide this number by the number of pixels in the image in order to obtain relative coverage. The DT classified each image into one of five classes: sunlit vegetation, shaded vegetation, specularly reflected vegetation, sunlit soil and shaded soil. The DT classification was applied in ENVI software environment for all the images and resulted in 21 two-dimensional images, in which each pixel is related to one of the five classes.
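Counting pixels to obtain relative coverage can be sketched as follows, assuming a classified image of integer class codes in which unclassified pixels carry a negative code. The function name and the coding are hypothetical.

```python
import numpy as np

def relative_coverage(class_map, n_classes):
    """Fraction of image pixels assigned to each class.

    class_map: 2-D integer array of class codes 0..n_classes-1; negative codes
    mark unclassified pixels (assumed convention).
    """
    class_map = np.asarray(class_map)
    valid = class_map[class_map >= 0]
    counts = np.bincount(valid, minlength=n_classes)
    # Divide by all pixels in the image, including unclassified ones
    return counts / class_map.size
```

Because the denominator is the full image size, the fractions sum to less than one whenever some pixels remain unclassified.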

The DT, presented in Fig. 2, is based on conditions applied for the relative reflectance values of three narrow bands (i.e., 470, 555 and 670 nm) that reflect differences between the classes. The first condition checks if the reflectance values in bands 470 and 555 nm are both lower than 0.05. In case the condition is fulfilled, the pixel is assumed to be shaded and the next condition will determine if it is soil or vegetation. In case the condition is not fulfilled, the pixel is assumed to be sunlit, and the other two conditions will determine if it is sunlit soil, specularly reflected vegetation, or sunlit vegetation.

Fig. 2 Decision tree for separating five classes: sunlit vegetation, shaded vegetation, specularly reflected vegetation, sunlit soil, and shaded soil. Each condition, written in the rectangular boxes, has two options: yes or no. Each option leads to another condition or to a classification product. The ρ stands for the relative reflectance value at the specified wavelength (in nm).
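Only the first condition of the decision tree is stated explicitly in the text, so the sketch below covers that split alone (shaded versus sunlit). The function name and the nearest-band lookup are assumptions; the remaining conditions of the tree are not reproduced.

```python
import numpy as np

def shaded_mask(cube, wavelengths, limit=0.05):
    """First decision-tree split: True where a pixel is assumed to be shaded.

    cube: (rows, cols, bands) relative reflectance; wavelengths: (bands,) in nm.
    A pixel is treated as shaded when reflectance at both 470 and 555 nm
    is lower than `limit`.
    """
    wavelengths = np.asarray(wavelengths, dtype=float)
    b470 = int(np.argmin(np.abs(wavelengths - 470.0)))  # nearest band to 470 nm
    b555 = int(np.argmin(np.abs(wavelengths - 555.0)))  # nearest band to 555 nm
    return (cube[..., b470] < limit) & (cube[..., b555] < limit)
```

The complement of the mask gives the pixels assumed to be sunlit, which the later conditions of the tree split further.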

Results and discussion

Figure 3 presents the averaged reflectance values with standard deviation of the eight classes: four classes obtained from pure pixels that were sunlit and four classes obtained from pure pixels that were shaded. Note that, as expected, the reflectance values of the sunlit pixels (Fig. 3a) are higher than those of the shaded ones (Fig. 3b). These eight classes were used for testing the different class combinations in the six PLS-DA models.

Tables 2–7 present the cross-validation confusion matrices of the six PLS-DA models. These six models are divided into three couples; within each couple, the classes are the same except that one model includes a soil class and the other does not. In order to find the best model, a comparison of the confusion matrices was computed by the normal curve deviation (Z; Eq. 3) for all model couplings (Table 8). In Model #2, the classes were either broadleaf or grass (including wheat data); therefore, it did not distinguish between crop and weeds. For Model #1, a soil class was added. These models were analyzed and presented in order to learn more about the importance of soil as a distinguishable class. The overall accuracy of Model #1 is higher than that of Model #2 (Tables 2 and 3), and the difference between these confusion matrices is significant (Table 8). Similarly, in the other couples of models, i.e., Models #3 and #4 (Tables 4 and 5) and Models #5 and #6 (Tables 6 and 7), the only difference between them is an additional soil class. The overall accuracy is higher for each model containing a soil class, and the difference between the coupled models is significant, as presented in Table 8. These three couplings show that the total accuracy is significantly better (at more than 99 % confidence) when soil is added as a class. Since the canopy in the current study is denser than mentioned in the literature for operating similar sensors (Borregaard et al. 2000; Feyaerts and van Gool 2001; Okamoto et al. 2007; Nieuwenhuizen et al. 2010) and since the current study is expected to be a step towards up-scaling (soil will be included in the larger pixel), the influence of soil on classification quality was explored. It could be assumed that, for the coupled models (i.e., Models #1 and #2, #3 and #4, and #5 and #6), the improvement in the overall accuracy for models including the soil class is mainly influenced by the accuracies of the soil classes. This assumption is incorrect based on the user’s and producer’s accuracies in Tables 2–7.

Models #5 and #6 were also analyzed in order to demonstrate the effect of sunlit and shaded pixels. In most of the cases, the sunlit class yielded better user’s and producer’s accuracies than the shaded class (Tables 6 and 7). The user’s accuracy values of the shaded soil and, to a lesser extent, the shaded GW classes of Model #5 are similar to those of the soil and GW classes in Model #3. When comparing the user’s accuracy of sunlit classes from Model #5 to the classes of Model #3, the values are similar. Since the images used for the prediction include 21–65 % shade and shaded vegetation out of the total area of the image (obtained by the DT and not presented), Model #3, including sunlit and shaded pixels together, is a more efficient classifier than Model #5. The distribution of sunlit and shaded pixels is presented in Table 1. Table 8 shows the significant superiority of Model #3 over Models #5 and #6. Model #3 comprises, in addition to wheat and weeds, a class of soil, and each class combines data from sunlit and shaded pixels. Therefore, it was chosen as the model for predicting the images with the aim of identifying weeds in a wheat field.

It is important to emphasize that, although the cross-validation was obtained by pure pixels (Fig. 3), the spectral samples of vegetation classes were acquired from the canopy, and those of the soil class were acquired next to canopy or in its shade. As mentioned above, leaves are semi-transparent for NIR radiation. Therefore, the spectrum of the soil shaded by vegetation will most likely include vegetation signals; hence, a spectrum from each of the classes also includes the spectral signals of the neighboring objects (Borregaard et al. 2000) e.g., leaves and soil, GW and BLW, and sunlit as well as shaded. These objects can be the same class of the sample, another class, or even a target that is not included in any of the classes (e.g., stems, specular reflection and stones). Therefore, each of the presented models includes some amount of error that was caused by field conditions. Consequently, Model #3, that includes in each of its classes sunlit and shaded spectra, is believed to provide prediction results that are based on real field conditions.

In order to find the important wavelengths for separating the classes in Model #3, the VIP analysis was applied for each of the classes and is presented in Fig. 4. For the three vegetation classes, the most important spectral region is the red-edge with the highest peak at 730 nm, while for the soil, the highest peak is at 685 nm on the edge of the red and red-edge spectral regions. This makes sense since the soil does not absorb radiation in the red region, as vegetation does, due to the photosynthetic process (Gausman 1985) and the 685 nm wavelength is the reflectance depth for the three sunlit vegetation classes (data not presented). This is in agreement with the perfect producer’s accuracy for the soil and sunlit soil classes in Tables 2, 4 and 6. Spectra of shaded soil, in a vegetated area, can include elements of vegetation (e.g., absorption in the red region and enhanced reflectance in the NIR region). This might be part of the reason why the soil and shaded soil classes do not have perfect user’s accuracy in these three tables. For the wheat and GW classes, besides the red-edge, the important regions are the blue and green ones. In most of the cases, the GW plants, in the field as well as in the digital pictures, have a lighter green hue than the wheat plants, which seem to be more bluish. This combination of four spectral regions (i.e., blue, green, red and red-edge) became the most important for separating the four classes of Model #3.
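For readers who wish to reproduce a VIP analysis outside the PLS-toolbox, the scores can be computed from the fitted PLS matrices as sketched below. This follows the commonly used formulation after Wold et al. (1993) and Chong and Jun (2005); the function name and the argument layout (scores `T`, weights `W`, Y-loadings `Q`) are assumptions and not the toolbox interface.

```python
import numpy as np

def vip_scores(T, W, Q):
    """Variable importance in projection for a fitted PLS model.

    T: (n_samples, n_lv) X-scores; W: (n_bands, n_lv) X-weights;
    Q: (n_responses, n_lv) Y-loadings.
    """
    n_bands = W.shape[0]
    # Y variance explained by each latent variable
    ss = (T ** 2).sum(axis=0) * (Q ** 2).sum(axis=0)
    # Squared weights, normalized so each latent variable's weights have unit length
    w2 = (W / np.linalg.norm(W, axis=0)) ** 2
    return np.sqrt(n_bands * (w2 @ ss) / ss.sum())
```

With this normalization the squared VIP values average to exactly one over the bands, which is the basis for using 1 as the threshold for an important band.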

There are few hyperspectral satellites that are active today (e.g., Hyperion and CHRIS with two planned, HyspIRI and EnMAP) and they might not be able to meet the spatial requirements for site-specific weed management. Currently, there are only two operational multi-spectral satellites, WorldView-2 and RapidEye, which can meet the four-band requirements (including a red-edge band) along with high spatial resolution for site-specific agricultural applications. The other two forthcoming satellites are the vegetation and environmental new micro spacecraft (VENμS) and Sentinel-2 to be launched in 2014, at the earliest. There is a need to further explore the influence of reduced spatial resolution on the quality of prediction, in other words, to deal with the mixed pixel issue in order to find out what the ideal pixel size is for weed analysis. This is suggested to be a specific airborne (hyper- or multi-spectral) mission for calibrating several common weeds and crops in relation to the economic benefits of weed control. Although satellites can obtain coverage of several fields in one image, it seems that high spatial resolution that allows identifying early weed infestation, similar to ground level, is beyond the goals of current or near future satellites. Therefore, air- and ground-level application based on similar sensors, with a higher spatial resolution, is also a course to be concentrated on in the near future.

Table 9 presents the total accuracy and its confidence interval, Cohen’s Kappa, and user’s and producer’s accuracies obtained from 21 confusion matrices computed in order to assess the quality of prediction by Model #3 for the 21 images. The total accuracies range from 54 to 90 % and, when considering the confidence intervals, can range from 40 to 98 %. The highest total accuracies of 88 and 90 % were both obtained in images that do not contain all four classes to begin with, or in which the randomly picked ground truth pixels did not cover all available classes, as can be seen by the 0 values in both user’s and producer’s accuracies. Correlating the total accuracy to vegetation coverage by field assessment, PLS-DA and DT, for the images presented in Table 9, resulted in very weak, non-significant, negative relations with R2 < 0.025. Therefore, it can be assumed that Model #3 predictions are not influenced by vegetation cover within the range of values in the current data. The vegetation cover was obtained by the three methods mentioned above and resulted in 35–95 % by the field assessment, 28–100 % by the PLS-DA model, and 15–99 % by the DT.
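The R2 of such a relation can be obtained as the squared Pearson correlation between per-image accuracy and vegetation cover. The sketch below uses a hypothetical helper name and does not reproduce the values of Table 9.

```python
import numpy as np

def r_squared(x, y):
    """Squared Pearson correlation, returned with the sign of the relation."""
    r = np.corrcoef(np.asarray(x, dtype=float), np.asarray(y, dtype=float))[0, 1]
    return r ** 2, np.sign(r)
```

The sign is returned separately because R2 alone does not show whether the relation is positive or negative.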

The confusion matrix for all the ground truth points together is presented in Table 10. The 1050 ground truth pixels were distributed among the classes almost evenly, and the number of unclassified pixels is negligible. Soil is the class whose accuracy of prediction is the highest. Pixels predicted as BLW are correct in 81 % of the cases (user’s accuracy), but only 52 % of the actual BLW pixels are predicted as BLW (producer’s accuracy). For the wheat class, it is the other way around: pixels predicted as wheat are correct in 60 % of the cases, but 79 % of the actual wheat pixels are predicted as wheat. Pixels of BLW, GW and soil that are mistakenly classified are, in most cases, classified as wheat (68, 49 and 21 %, respectively). Therefore, the user’s accuracy of wheat is the lowest. In Table 6, most of the misclassifications are between shaded vegetation classes and, since in Model #3 the classes include sunlit and shaded data in the same class, this might be the source of the misclassifications in the vegetation classes presented in Table 10. The 1857 cross-validation pixels (used for calibration) were collected from homogeneous regions and were not picked from stones, stems, specular reflection and upside down leaves, nor those adjacent to leaf edges. The 1050 ground truth pixels were randomly selected and, therefore, might be of a target whose spectrum is similar to more than one class (e.g., pixel on the edge of a leaf). In order to generalize the model, spectral data were collected in several plots and growing stages. Tyystjarvi et al. (2011) obtained the best results for species classification by training and testing leaf fluorescence measurements that were obtained on the same date. Therefore, it is assumed that the classification results could have been improved if the cross validation was applied to data obtained from one date, one plot, or even one image. 
However, the applied system must be generalized in the sense of location and unlimited to specific growth stage before crop closure (i.e., the optimal time for herbicide application).

Figure 5 displays two images and their classification results by Model #3. Figure 5a presents an image with the lowest total accuracy and Fig. 5c presents an image with above average values (Table 9). The relative coverage assessed in the field, for both images, shows 30 and 25 % of GW, 40 and 50 % of wheat, 27 and 22 % of soil, and 3 and 3 % of BLW, respectively. The DT analysis resulted in similar relative coverage with differences that are not higher than 4 % for each of the five classes. The quality of the prediction confusion matrices’ comparison resulted in a significant difference (more than 95 %), based on Z = 2.27. The distributions of ground truth pixels in both images are similar, with differences of 1, 3, 6 and 4 pixels for the four classes BLW, GW, soil and wheat, respectively. Both images were acquired in the same field on the same day with less than half an hour difference between acquisitions. Therefore, besides human assessment or other errors or inaccuracies and the limitations of the model itself, the difference between the confusion matrices is assumed to be related to the fact that the model was cross-validated from a variety of spectral, growth stages and environmental conditions combined with infield spectral variability.

For Model #3, the number of cross-validation pixels obtained from an image explains only 11.3 and 0.2 % of the variation in the total accuracy and Cohen’s Kappa of prediction, respectively; neither relationship is significant. Therefore, it is assumed that the influence of the number of cross-validation pixels on Cohen’s Kappa, as well as on the total accuracy, is negligible and that the Model #3 classification prediction results for the 21 images are not influenced by the distribution of cross-validation pixels among the images. Figure 6 presents the user’s and producer’s accuracy of each class for each image in relation to the number of cross-validation pixels of the same class acquired from that image. The user’s accuracy of GW and soil (i.e., the share of correctly identified pixels among those classified as GW and soil, respectively) is significantly influenced by the number of cross-validation pixels acquired in the image, which explains 39 and 22 % of its variation, respectively. The producer’s accuracy of BLW (i.e., the share of correctly classified pixels among those known to be BLW) is significantly influenced by the number of cross-validation pixels acquired in the image, which explains 47 % of its variation. When only the images from which cross-validation pixels were acquired were included in the analysis, the R² values of the user’s accuracy for BLW, GW, soil and wheat were 0.01, 0.27, 0.83 and 0.1, respectively, and those of the producer’s accuracy were 0.29, 0.06, 0.31 and 0.03, respectively. Compared with the R² values in Fig. 6, this is a reduction in the correlation between the number of pixels and both the user’s and producer’s accuracies for the three vegetation classes. Therefore, the vegetation classification quality is hardly influenced by the distribution of pixels among the images. In the case of soil, the tendency is the opposite and, therefore, it seems that, for soil, it is important to distribute the cross-validation pixels among more images.
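The share of variation in an accuracy measure that is explained by the number of cross-validation pixels corresponds to the coefficient of determination of a simple linear regression. The sketch below shows that calculation under the assumption that an ordinary least-squares line with one predictor is meant; the function name `r_squared` and the per-image values are hypothetical.

```python
def r_squared(x, y):
    """Coefficient of determination of a simple linear regression of y on x.

    For one predictor this equals the squared Pearson correlation.
    Both x and y must vary, otherwise a division by zero occurs.
    """
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    syy = sum((yi - mean_y) ** 2 for yi in y)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    return sxy ** 2 / (sxx * syy)


# Hypothetical per-image data: number of cross-validation pixels of one
# class and the user's accuracy (%) obtained for that class in the image.
pixels_per_image = [0, 12, 25, 40, 58, 73]
users_accuracy = [62.0, 70.0, 66.0, 81.0, 78.0, 90.0]
print(f"R2 = {r_squared(pixels_per_image, users_accuracy):.2f}")
```

Restricting the input lists to the images that actually contributed cross-validation pixels reproduces the second type of analysis described above.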

In order to decide whether to apply weed control, the relative coverage of weeds in the field needs to be known (Slaughter et al. 2008). Figure 5b and d present examples of classification results by Model #3; such images were used to obtain the relative coverage of each of the four classes. There is a positive, significant correlation between the relative coverage obtained by PLS-DA classification and by field assessment (Fig. 7). BLW and soil, which show relatively better user’s accuracy results (Table 9), correlate better with field assessment. Therefore, it can be assumed that the relative coverage of the four classes can be assessed by Model #3, within the limitations presented above. Besides human error in assessment and misclassifications by the model, differences in relative coverage can partly be an outcome of the clipping process: since the frame used for field assessment was not always parallel to the image borders, narrow triangles within the frame area were clipped out of the images that were used for prediction and ground truthing of the PLS-DA model.
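Deriving relative coverage from a classified image amounts to counting pixels per class. A minimal sketch follows, assuming the classification result is available as a grid of class labels and that unclassified pixels are left out of the total; the function name `relative_coverage`, the label strings and the small example grid are hypothetical.

```python
from collections import Counter


def relative_coverage(label_map, classes=("BLW", "GW", "soil", "wheat")):
    """Percentage of classified pixels belonging to each class.

    label_map is a list of rows, each row a list of class labels. Labels
    not listed in `classes` (e.g. unclassified pixels) are ignored, which
    is an assumption of this sketch.
    """
    counts = Counter(label for row in label_map for label in row)
    total = sum(counts[c] for c in classes)
    return {c: 100.0 * counts[c] / total for c in classes}


# Hypothetical 2 x 4 classification result.
example_map = [
    ["wheat", "wheat", "GW", "soil"],
    ["wheat", "wheat", "GW", "unclassified"],
]
for name, share in relative_coverage(example_map).items():
    print(f"{name}: {share:.1f} %")
```

The resulting percentages per class are the quantities that would be compared with the field-assessed coverage.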

Conclusions

Classification of wheat and weeds was the aim of the current study. Pixels were selected from ground-level images and used to build six PLS-DA models. The best model was found to be the one that included soil as an additional class and combined sunlit and shaded pixels in each of its four classes: BLW, GW, soil, and wheat. The important wavelengths for each of the classes of the best model were obtained by the VIP method. The best model was applied to the images and ground truthing was performed using RGB photos. The total ground truth resulted in an overall accuracy of 72 %. These results indicate that differentiation between wheat and weeds is possible using PLS-DA, potentially contributing to practical site-specific herbicide application. Specific conclusions are:

- The composition of four classes (BLW, GW, soil and wheat) was the best for weed detection for the current data set.
- Sunlit vegetation can be better separated into classes than shaded vegetation.
- The red-edge is the most important region for separation among wheat, BLW and GW. The blue region is also important for wheat, and the green region for GW. For separating soil from vegetation, the boundary between the red and red-edge regions is spectrally the most important.
- Although the model’s cross-validation and ground truth data were heterogeneous, the model obtained reasonable results and, therefore, is potentially applicable.
- High spectral and spatial resolutions can provide separation between wheat and weeds based on spectral data alone.

Future work aimed at economic thresholds for applying weed control should concentrate on one of two directions: covering wider areas, with coarser spatial resolution, by satellites (e.g., WorldView-2, RapidEye, VENμS and Sentinel-2) while exploring the mixed pixel issue; or covering relatively smaller areas, with fine spatial resolution, by ground-level sensors in order to deal with the sunlit and shaded parts of the canopy and soil separately or to better understand their mutual effects.

References

Alchanatis, V., Ridel, L., Hetzroni, A., & Yaroslavsky, L. (2005). Weed detection in multi-spectral images of cotton fields. Computers and Electronics in Agriculture, 47, 243–260.

Barker, M., & Rayens, W. (2003). Partial least squares for discrimination. Journal of Chemometrics, 17, 166–173.

Biller, R. H. (1998). Reduced input of herbicides by use of optoelectronic sensors. Journal of Agricultural Engineering Research, 71, 357–362.

Borregaard, T., Nielsen, H., Norgaard, L., & Have, H. (2000). Crop-weed discrimination by line imaging spectroscopy. Journal of Agricultural Engineering Research, 75, 389–400.

Chong, I. G., & Jun, C. H. (2005). Performance of some variable selection methods when multicollinearity is present. Chemometrics and Intelligent Laboratory Systems, 78, 103–112.

Cohen, J. (1960). A coefficient of agreement for nominal scales. Educational and Psychological Measurement, 20, 37–46.

Cohen, Y., Alchanatis, V., Zusman, Y., Dar, Z., Bonfil, D., Karnieli, J., et al. (2010). Leaf nitrogen estimation in potato based on spectral data and on simulated bands of the VENuS satellite. Precision Agriculture, 11, 520–537.

Congalton, R. G., & Mead, R. A. (1986). A review of three discrete multivariate analysis techniques used in assessing the accuracy of remotely sensed data from error matrices. IEEE Transactions on Geoscience and Remote Sensing, 24, 169–174.

Feyaerts, F., & van Gool, L. (2001). Multi-spectral vision system for weed detection. Pattern Recognition Letters, 22, 667–674.

Foody, G. M. (2008). Harshness in image classification accuracy assessment. International Journal of Remote Sensing, 29, 3137–3158.

Gausman, H. (1985). Plant leaf optical properties in visible and near infrared light. Lubbock: Texas Tech Press.

Gerhards, R., & Christensen, S. (2003). Real-time weed detection, decision making and patch spraying in maize, sugarbeet, winter wheat and winter barley. Weed Research, 43, 385–392.

Gerhards, R., Sokefeld, M., Schulze-Lohne, K., Mortensen, D. A., & Kuhbauch, W. (1997). Site specific weed control in winter wheat. Journal of Agronomy and Crop Science, 178, 219–225.

Har Gil, D., Bonfil, D. J., & Svoray, T. (2011). Multi scale analysis of the factors influencing wheat quality as determined by gluten index. Field Crops Research, 123, 1–9.

Hatchell, D. (1999). Reflectance, ASD technical guide. Periodical reflectance, ASD technical guide. Available online at: http://www.gep.uchile.cl/Biblioteca/radiometr%C3%ADa%20de%20campo/TechGuide.pdf (last accessed 1 December, 2012).

Herrmann, I., Pimstein, A., Karnieli, A., Cohen, Y., Alchanatis, V., & Bonfil, D. J. (2011). LAI assessment of wheat and potato crops by VENμS and Sentinel-2 bands. Remote Sensing of Environment, 115, 2141–2151.

Hudson, W. D., & Ramm, C. W. (1987). Correct formulation of the kappa-coefficient of agreement. Photogrammetric Engineering and Remote Sensing, 53, 421–422.

Kafkafi, U., & Bonfil, D. J. (2008). Integrated nutrient management: Experience and concepts from the Middle East. In M. Aulakh & C. A. Grant (Eds.), Integrated nutrient management for sustainable crop production (pp. 523–565). Binghamton: The Haworth Press, Inc.

Lopez-Granados, F., Pena-Barragan, J. M., Jurado-Exposito, M., Francisco-Fernandez, M., Cao, R., Alonso-Betanzos, A., et al. (2008). Multispectral classification of grass weeds and wheat (Triticum durum) using linear and nonparametric functional discriminant analysis and neural networks. Weed Research, 48, 28–37.

Marshall, R., & Moss, S. R. (2008). Characterisation and molecular basis of ALS inhibitor resistance in the grass weed Alopecurus myosuroides. Weed Research, 48, 439–447.

Moran, M. S., Inoue, Y., & Barnes, E. M. (1997). Opportunities and limitations for image-based remote sensing in precision crop management. Remote Sensing of Environment, 61, 319–346.

Moran, M. S., Maas, S. J., Vanderbilt, V. C., Barnes, M., Miller, S. N., & Clarke, T. R. (2004). Application of image-based remote sensing to irrigated agriculture. In S. L. Ustin (Ed.), Remote sensing for natural resource management and environmental monitoring (pp. 617–676). Hoboken: Wiley.

Musumarra, G., Barresi, V., Condorelli, D. F., Fortuna, C. G., & Scire, S. (2004). Potentialities of multivariate approaches in genome-based cancer research: identification of candidate genes for new diagnostics by PLS discriminant analysis. Journal of Chemometrics, 18, 125–132.

Nason, G. P. (1996). Wavelet shrinkage using cross-validation. Journal of the Royal Statistical Society Series B-Methodological, 58, 463–479.

Navalon, A., Blanc, R., del Olmo, M., & Vilchez, J. L. (1999). Simultaneous determination of naproxen, salicylic acid and acetylsalicylic acid by spectrofluorimetry using partial least-squares (PLS) multivariate calibration. Talanta, 48, 469–475.

Nieuwenhuizen, A. T., Hofstee, J. W., & Van Henten, E. J. (2010). Adaptive detection of volunteer potato plants in sugar beet fields. Precision Agriculture, 11, 433–447.

Okamoto, H., Murata, T., Kataoka, T., & Hata, S. I. (2007). Plant classification for weed detection using hyperspectral imaging with wavelet analysis. Weed Biology and Management, 7, 31–37.

Pinter, P. J., Hatfield, J. L., Schepers, J. S., Barnes, E. M., Moran, M. S., Daughtry, C. S. T., et al. (2003). Remote sensing for crop management. Photogrammetric Engineering and Remote Sensing, 69, 647–664.

Raven, P. H., Everet, H., Everet, R. F., & Eichhorn, S. E. (2005). Biology of plants (7th ed.). New York: W. H. Freeman and Company.

Shapira, U., Herrmann, I., Karnieli, A., & Bonfil, D. J. (2013). Field spectroscopy for weed detection in wheat and chickpea fields. International Journal of Remote Sensing. doi:10.1080/01431161.2013.793860.

Slaughter, D. C., Giles, D. K., & Downey, D. (2008). Autonomous robotic weed control systems: A review. Computers and Electronics in Agriculture, 61, 63–78.

Thorp, K. R., & Tian, L. F. (2004). A review on remote sensing of weeds in agriculture. Precision Agriculture, 5, 477–508.

Tyystjarvi, E., Norremark, M., Mattila, H., Keranen, M., Hakala-Yatkin, M., Ottosen, C. O., et al. (2011). Automatic identification of crop and weed species with chlorophyll fluorescence induction curves. Precision Agriculture, 12, 546–563.

Vrindts, E., De Baerdemaeker, J., & Ramon, H. (2002). Weed detection using canopy reflection. Precision Agriculture, 3, 63–80.

Weis, M., Gutjahr, C., Rueda Ayala, V., Gerhards, R., Ritter, C., & Scholderle, F. (2008). Precision farming for weed management: techniques. Gesunde Pflanzen, 60, 171–181.

Wold, S., Johansson, E., & Cocchi, M. (1993). PLS - partial least squares projections to latent structures. In H. Kubinyi (Ed.), 3D QSAR in drug design: Theory, methods, and applications (pp. 523–550). Leiden: ESCOM.

Wu, W., Massart, D. L., & de Jong, S. (1997). Kernel-PCA algorithms for wide data, part 2: Fast cross-validation and application in classification of NIR data. Chemometrics and Intelligent Laboratory Systems, 37, 271–280.

Xie, L., Ying, Y., & Ying, T. (2007). Quantification of chlorophyll content and classification of nontransgenic and transgenic tomato leaves using visible/near-infrared diffuse reflectance spectroscopy. Journal of Agricultural and Food Chemistry, 55, 4645–4650.

Yoder, B. J., & Pettigrew-Crosby, R. E. (1995). Predicting nitrogen and chlorophyll content and concentrations from reflectance spectra (400–2500 nm) at leaf and canopy scales. Remote Sensing of Environment, 53, 199–211.

Zadoks, J. C., Chang, T. T., & Konzak, C. F. (1974). A decimal code for growth stages in cereals. Weed Research, 14, 415–421.

Zwiggelaar, R. (1998). A review of spectral properties of plants and their potential use for crop/weed discrimination in row-crops. Crop Protection, 17, 189–206.

Acknowledgments

This study was supported partially by the Chief Scientist of the Ministry of Science and Technology Grant, Chief Scientist of the Ministry of Agriculture Grant and the Israeli Field Crops Board Grant.

Cite this article

Herrmann, I., Shapira, U., Kinast, S. et al. Ground-level hyperspectral imagery for detecting weeds in wheat fields. Precision Agric 14, 637–659 (2013). https://doi.org/10.1007/s11119-013-9321-x
