Abstract

Modeling the behavior of air plasma spray (APS) process, one of the challenges nowadays is to identify the parameter interdependencies, correlations and individual effects on coating properties, characteristics and influences on the in-service properties. APS modeling requires a global approach which considers the relationships between coating characteristics/ in-service properties and process parameters. Such an approach permits to reduce the development costs. This is why a robust methodology is needed to study these interrelated effects. Artificial intelligence based on fuzzy logic and artificial neural network concepts offers the possibility to develop a global approach to predict the coating characteristics so as to reach the required operating parameters. The model considered coating properties (porosity) and established the relationships with power process parameters (arc current intensity, total plasma gas flow rate, hydrogen content) on the basis of artificial intelligence rules. Consequently, the role and the effects of each power process parameter were discriminated. The specific case of the deposition of alumina–titania (Al2O3–TiO2, 13% by weight) by APS was considered.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

Introduction

Air plasma spraying (APS) is a versatile technique to produce coatings of powder material at high deposition rates 3 kg · h−1 [1]. Powder particles are injected into a plasma jet, where they are melted and accelerated towards a substrate [2]. The coating microstructures and properties depend strongly on the characteristics of the plasma jet, which can be controlled by the adjustment of the process parameters. The coating quality control generally considers the monitoring of the feedstock particle characteristics (i.e., velocity and temperature) before their impact onto the substrate [3]. These influence significantly the coating in-service properties [4] and microstructure features. Among these features, porosity is a key parameter describing the anisotropy of the sprayed coatings and controlling their properties. Porosity increase generally leads to decrease the breaking strength, the elasticity module and the thermal conductivity [5]. Thus, the porosity formed during the process is also an important microstructure feature that must be controlled. However, many interactions between their operating parameters made these processes more complicated. A full understanding of the relationships between the coating properties and the process parameters is important for optimizing the technique and the product quality.

Artificial Intelligence (AI) offers new insights for the control, optimization and prediction of the APS process. Such a methodology is an adequate tool for the study of the complex processes with parameter interdependencies [6]. In addition, this technique proved to be viable in the field of materials science [7] and especially in the case of thermal spraying [8].

Artificial neural networks (ANN) and fuzzy logic (FL) were implemented in parallel to solve the same problem aiming at predicting porosity as a function of the power process parameter (arc current intensity, total plasma gas flow rate and hydrogen content). AI computational models (ANN and FL) were developed in matlab language. Experimental data were used to define AI model. Subsequently, this model was used to predict the coating properties (porosity). Alumina–titania (Al2O3–TiO2, 13% by weight) coatings were produced and the porosity was measured for various power process parameters.

Experimental

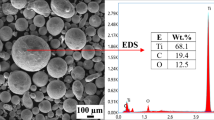

Metco 130 (Sulzer-Metco, Switzerland) fused and crushed grey alumina–titania (Al2O3–TiO2, 13% by weight) powder was selected as the feedstock powder. A Sulzer F4 torch (Sulzer-Metco, Switzerland) of 50 kW maximum operating power equipped with a 6 mm internal diameter anode nozzle was selected to carry out the experiments. Argon was used as a carrier gas. The carrier gas flow rate was systematically adjusted by spray deposit control (SPCTS, university of Limoges, France). This system is a non-intrusive diagnostic tool (CCD camera equipped with filters and a short, aperture duration, i.e., a few ms) for each spray parameter set so as to obtain an “ideal” particle trajectory within the plasma flow, that is to say a penetration of the particles into the hot core of the flow and a resulting deviation angle of about 4°.

Several sets of power operating parameters were defined to spray the coatings. These sets permitted a study of the effects of the arc current intensity, the total plasma gas flow rate and the hydrogen content (i.e., hydrogen percentage in the plasma gas). Table 1 summarizes the other parameters.

The coatings were sprayed onto 25 mm diameter and 20 mm thick button-type AISI 304L (stainless steel) samples. Prior to spraying, the substrates were manually grit-blasted using white corundum (α-Al2O3) of an average diameter ranging from 425 μm to 600 μm (supplier data, Saint-Gobain, Avignon, France). After grit-blasting, roughness was measured by Altisurf 500 (ALTIMET, 74200 Thonon-Les-Bains). The substrate exhibited an average roughness (Ra) of 3.6 μm, average value, and a peak-to-valley height (Rz) of 31 μm, average value.

After a metallographic preparation performed on automatic Vanguard-BUEHLER (Buehler, Ltd., 41 Waukegan Road, Lake Bluff, IL 60044, USA) system (sample cutting and sample polishing), coatings were observed on their cross sections by JEOL JSM 5800LV (JEOL, 78 290 Croissy sur seine) Scanning Electron Microscopy (SEM) in the secondary electron (SE) mode, leading to a resolution of 0.2 μm. In SEM, electrons were emitted from a cathode and were accelerated towards an anode. The electron beam, which had an energy of 15 keV was focused by two condenser lenses into a beam. The energy exchange between the electron beam and the sample results in the emission of electrons and electromagnetic radiation, which can be detected to produce an image. To improve contrast and to prevent the accumulation of static electric fields around the specimen due to the electron irradiation required during imaging, gold was coated on the sample surface. The sample was deposited on a support which permitted rotational and translational movements necessary to guide the sample towards the beam or the detectors. The sample supported the vacuum, the intense electronic bombardment and it was sufficiently conductor to dissipate the charges.

Ten digital images randomly captured along the cross-section were treated per sample using the software of image analysis “Image J” developed by the American health department (NIH, Bethesda, NH, USA). Images were scanned with a filter. From these scanned images, a correction of the automatic levels was carried out. In order to accentuate the difference, the contrast treatment was necessary. The objective was to dissociate the elements by identifying the pores and by defining their limits. This process was called thresholding or binarization of the image (24 bit image transformation into binary image). After image binarization all pores were in white color and the material was black. The threshold choice was not imposed. A meticulous comparison permitted to eliminate the few remaining differences between what appeared to be porosity and what was classified as porosity on the binary image. To discriminate the porosities, it was just supposed that the pores presented a continuous distribution. The total porosity was the sum of all voids, i.e. small pores, large pores, delaminations and small vertical cracks. The morphology of the residual α phase consisted of two types: a partially melted particle type prevailing as rounded or nearly spherical shaped form and the other splat type, a disc shaped caused by the high impact and rapid solidification of the fully molten plume. The relationship between the white pixels of the image (pores) and the total number of pixels in the image corresponds to the porosity which was expressed as a percentage. The experimental results are summarized in Table 2.

Artificial Intelligence Background

APS process modeling is a complex method which requires the analysis and control of many parameters. Moreover, some parameters (intrinsic) on which depends the process reproducibility, such as electrode wear and fluctuations are uncontrollable [9]. Numerous analytical or statistical models aim at studying the process mechanisms. However, these models suffer from the hypotheses simplification required to process more easily the parameters/properties correlations and from the limited number of operating conditions that can be processed. Another concept based on AI offers the possibility to discover the complex and non-linearity correlations, without physical interpretation, by taking into account the concepts of uncertainty, imprecision, imperfection. The limit of the concept is to be redefined for each kind of process and it needs in the first time database to train (ANN model) or to define the rules (FL model).

Fuzzy Logic Concept

A fuzzy logic (FL) concept was implemented in this study to predict the porosity by varying the power operating parameters. The model was empirically based and provided a simple way to reach a definite conclusion based upon imprecise input information (Fig. 1). The main interest of this approach is to code the desired behaviors in the form of rules expressed in a language close to the human language. FL model is typically divided into three categories: fuzzification, inference engine and defuzzification [10].

Fuzzification and Membership Functions

The membership function (MF) associates a weighting with each of the inputs that are processed, defines functional overlap between inputs, and ultimately determines an output response.

In fuzzification step, MF defined on the input variables were applied to their actual values to determine the degree of truth for each rule premise. This process aimed at translating the numerical values into linguistic descriptions. Figure 2 illustrates the input and output variables in fuzzy partition. This was achieved by simply evaluating all the input MF (power parameters) with respect to the current set of input values in order to establish the degree of activation of each MF. The true value for the premise of each rule was computed and applied to the conclusion part of each rule. This result in one fuzzy subset to be assigned to each output variable for each rule. At the end of the fuzzification process, a list of activations was obtained and can be carried forward to the next stage [11].

Rule Base

An algorithm was coded using fuzzy statements in the block containing the knowledge base by taking into account the objectives and the system behavior. The idea was to make use of expert knowledge and experience to build a rule base with linguistic rules [12]. The rules were in the familiar if-then format, and formally the if-side is called the condition and the then-side is called the conclusion (more often, perhaps, the pair is called antecedent—consequent or premise—conclusion).

The rules used the input membership (power parameters) values as weighting factors to determine their influence on the fuzzy output sets of the final output conclusion (porosity). Once the functions were inferred, scaled, and combined, they were defuzzified (by calculating the coordinate of the centroid surface response) into a crisp output (converted into a real value). Each rule was activated as soon as the membership degree of its premise was not null.

Mamdani’s method [13] which was based on Zadeh’s [14] was applied in this study. A typical rule can be composed in a general point of view as follows:

where A, B, C are the fuzzy partition of the process parameters (null, very low, low, medium, high, very high) and D corresponds to the porosity fuzzy partitions.

Mathematically, this becomes:

where μ is the membership degree (truth value).

Inference Engine

In a fuzzy inference engine, the actions are encoded by means of fuzzy inference rules. The appropriate fuzzy sets are defined in the fields of the involved variables, and fuzzy logic operators and inference methods are formalized in computational terms. The basic function of the inference engine is to compute the overall value of the fuzzy output based on the individual contributions of each rule in the rule base. Each individual contribution represents the value of the fuzzy output as computed by a single rule. The truth value for the premise of each rule is computed and applied to the conclusion part of each rule.

However, several rules can be activated simultaneously and recommend actions with various degrees of validities. These actions can be contradictory in this case. It is appropriate to aggregate the rules. The Max–Min composition operation was used in this study. The output membership function of each rule was indeed given by the Min (minimum) operator and Max (maximum) operator [15].

Defuzzification

Defuzzification is the procedure that produces a real value from the result of the inference, which could be used as a fuzzy control input. The set of modified control output values is converted into a single point value. Characteristically, a fuzzy system has a number of rules that transform a number of variables into a fuzzy result, that is to say that the result is described in terms of membership in fuzzy sets. The defuzzification method that was used was performed by combining the results of the inference process and then computing the fuzzy centroid of the area [16]. The coordinate of the centroid corresponds to the defuzzified value that was calculated as follows:

where U represents all output values which are considered.

Artificial Neural Network Model

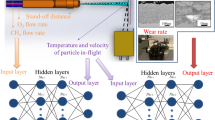

ANN is a mathematical architecture of units called neurons (elementary processors). It comprises an input vector of neurons (input layer) and an output vector of neurons (output layer). A set of neurons, organized in so-called hidden layers, connects these two vectors following different possible schemes [6]. Figure 3 represents an ANN structure in a general point of view. Each neuron receives a variable number of inputs coming from neurons belonging to a level located upstream (upstream neurons). The neuron xi is characterized by, Fig. 4:

-

the input being the sum of flow coming from the other neurons connected upstream;

-

the activation function making the input nonlinear [6] and animating the neuron by determining its activation. The principal role of this function is to encode the non-linearity of the problem on the scale of the neurons. The sigmoid function was used;

-

the output resulting from the transformation allowing to supply the neurons connected downstream. Each neuron is equipped with a single output, which then ramifies to feed a variable number of neurons belonging to a level located downstream (downstream neuron) [17].

The problem specificities and the process parameters were encoded in the connections between neurons by features called weights [6]. These weights represent the fundamental concept in discovering the parameter relationships. They translate the opportunity to communicate the result of a “within unit manipulation” to another unit by a coefficient fixing the contribution of a parameter influence on the final result. The training process primarily involved the determination of the connection weights and the patterns of connections.

Experimental data were used to train and validate the model. In this way, the data sets were organized in training and test samples. The training category was used to tune neural network weights and the test category to test the network configuration. The input layer was constituted by three neurons which symbolized the tree process parameters (arc current intensity, total plasma gas flow rate and hydrogen). The ANN output layer (system response) was composed by one neuron, i.e., the porosity.

Training and Test Procedures

An important step in developing an ANN model was the determination of its weight matrix through training. This part intended at minimizing the difference between the network outputs and the target outputs [18]. Mathematically, a neuron is an algebraic operator which summed the inputs. The hidden layer output was expressed by Eq. 4 and the signal of the network \(y_{{\text{net}}} \,(k)\) was approximated by Eq. 5.

where the activation function

{xi} were the n neurons inputs. The parameters {wi} were called weight, w ij was a weight for an input hidden layer, w jl was a weight for an input component x l .

The training algorithms adopted in this study optimized the weights by attempting to minimize the sum of the square differences between the desired values (experimental values) and actual values (network predicted value) of the output neurons [19] (Eq. 6). The prediction error associated with the output response was computed by:

where y net(k) was the network value and y(k) the target value.

This error was then propagated back, layer by layer, from the output layer to the input layer. The weights were adjusted to reduce the prediction errors through a back propagation algorithm where the error was back distributed to the previous layers across the network [20]. At the beginning of the training, the value of each weight was initially unknown and the computation started with a random set of weights. The optimization of the connection weights was indeed performed by minimizing the error according to:

where \( w_{jk}^0 \) was the initial connection weight and μ the learning rate parameter.

Once the weights were modified, the next dataset was fed to the network and a new estimation was performed. The error was calculated again and back distributed across the network for the next modification. This iterative process was repeated until the prediction error decreased to defined criteria [21].

After the training step, the model was tested using the test data to verify whether the network generalized the results. If the network output for each test pattern was almost close to the respective target, the network was considered to have acquired the underlying dynamics from the training patterns [22].

Neural Network Optimization

For the modeling process, user-defined parameters including the iteration number, the learning rate, momentum rate and the number of neurons in the hidden layer have to be determined and optimized. The optimization steps consist in, Table 3:

-

the parameter value was specified by a number representing a nerve pulse, or excitation, normalized in the range (0–1). The boundary values corresponded in this case to the minimal and maximal values that could be reached; i.e., the process limitations [23]:

$$ x_{{\text{normalised}}} = \frac{{x - \min \left( x \right)}} {{\max \left( x \right) - \min \left( x \right)}} $$(8)where x normalized represented the formatted expression, and x was the real value;

-

dividing the database into two categories: a training category required to tune weight and a test category to test the validity of the predicted results without modifying weight values;

-

initializing weight structure;

-

submitting to the structure a number of input/output examples from the database for training and testing;

-

weighting values correction with backpropagation method.

Results

Several sets of power operating parameters were defined to produce the coatings. These sets allowed the effect of the selected power process parameters on the coating properties to be evaluated. In this way, the effect of the arc current intensity was varied from 350 A to 650 A and by fixing respectively the mass percentage of hydrogen and the total plasma gas mass flow rate at 1.25% (25% at volume percentage) and 72.3 g · min−1 (50 Nl · min−1 at volume flow rate). The effect of the total plasma gas mass flow rate was studied between 50 g · min−1 and 100 g · min−1 (34–70 Nl · min−1 at volume flow rate) by keeping the mass percentage of hydrogen and the arc current intensity constant at 1.25% (25% at volume percentage) and 530 A, respectively. The effect of the mass percentage of hydrogen in the plasma gas was studied between 0.25% and 1.75% (5–35% at volume percentage) whereas the total plasma gas mass flow rate and the arc current intensity were kept constant at 72.3 g · min−1 (50 Nl · min−1 at volume flow rate) and 530 A, respectively.

The FL and ANN models were computed and the results are summarized in Figs. 5–7. AI permitted to relate the processing parameters to the porosity.

Results were consistent with experimental data and the tolerance permits to generalize the methodology to predict the coating structural and/or mechanical attributes from the required operating parameters. Table 4 compares the ANN and FL predicted results.

Arc Current Intensity Effect

Porosity presents a linear relationship versus the arc current intensity. Nevertheless, from a general point of view, the arc current intensity increases the plasma energies (both enthalpy and momentum) [4], consequently the porosity decreases when the arc current increases, Fig. 5. Electric energy increases the in-flight particle characteristics (mainly temperature), thus, the coating density [24]. In the same way, a high current improves the flattening process [4], this is why at the lowest current levels (lower than 500 A), the porosity values are higher than 5%.

Total Plasma Gas Mass Flow Rate Effect

Indeed, the plasma gas flow rate increases the particle velocity and decreases their temperature in the plasma jet [9]. Increasing this flow rate above a critical value leads to decrease the largest particle melting state. Thus, only few particles will succeed in penetrating the hot core region of the plasma jet. In the same way, increasing the total plasma gas flow rate leads to decrease the plasma/particle interaction duration, thus the molecular ionization and dissociation in the plasma jet [25]. The particles are not well heated before impact onto substrate [26], thus, the lamellae flattening ratio decreases. This is the main reason why the porosity increases, Fig. 6.

Hydrogen Effect

The hydrogen modifies the plasma jet characteristics [27] i.e. the plasma enthalpy, thermal conductivity and velocity increases while the plasma viscosity decreases [9]. For low values, hydrogen still improves the thermal conductivity of the plasma jet and facilitates the increased thermal exchange between the plasma jet and the powder particles. For high hydrogen values, porosity does not vary significantly due to the fact that the enthalpy of the plasma jet reaches a critical value inducing significant feedstock evaporation [28], Fig. 7. The same tendencies of evolution were demonstrated by Friis et al. [9].

AI, able to approximate any function, presents the advantage to be a powerful statistical procedure which permits to relate the parameter of a given problem to its desired result. Moreover, AI needs less data compared to least square fit and it can be performed continually. For example when the experimental data have only few parameter numbers (performing regression analysis using simple relationships) correlation factors are small and these translate the difficulty to fit non-linear relationships. In the opposite, choosing high polynomial degrees fit better the experimental results. However, they do not represent a meaningful function and require generally more experimental data to generalize the correlations. Table 5 compares the ANN and FL models.

Conclusions

Artificial intelligence model permits to determine and hence to understand the inter-relationships between power process parameters and deposit properties in plasma spray process. They were implemented in this study to predict the porosity.

The proposed model is a convenient and powerful tool for the practical optimization of the coating characteristics and processing parameters in order to obtain the desired combination.

From a general point of view, the ANN model is better than that of FL with regard to the prediction and simulation concept. On the other hand FL represents a better asset in the field of system control.

References

Fauchais P, Montavon G, Vardelle M, Cedelle J (2006) Surf Coat Technol 201:1908–1921

Smith RW, Knight R (1995) JOM 47(8):32–39

Moreau C, Gougeon P, Lamontagne M, Lacasse V, Vaudreuil G, Cielo P (1994) In: Berndt CC, Sampath S (eds) Thermal spray industrial applications. ASM International, Materials Park, pp 431–437

Prystay M, Gougeon P, Moreau C (2001) J Therm Spray Technol 10:67–75

Smith RD, Harlan HU (1976) J Am Ceram Soc 55:979–982

Nelson MM, Illingworth WT (1991) A practical guide to neural networks, 3rd edn. Addison-Wesley, New York

Bhadeshia HKDH (1999) ISIJ Int 39:966–979

Kanta A-F, Montavon G, Planche M-P, Coddet C (2006) Adv Eng Mater 8 (7):628–635

Friis M, Persson C, Wigren J (2001) Surf Coat Technol 141(1–2):115–127

Ogaji SOT, Marinai L, Sampath S, Singh R, Prober SD (2005) Appl Energy 82:81–89

Liang M, Yeap T, Hermansyah A, Rahmati S (2003) Int J Mach Tools Manufact 43:1497–1508

Mamdani EH (1974) Proc IEE 121:1585–1588

Mamdani EH, Assilian S (1975) Int J Man-Machine Studies 7:1–13

Zadeh LA (1973) IEEE Trans Syst Man Cybern 3:28–44

Eker I, Torun Y (2006) Energy Conv Manag 47:377–394

Fraichard T, Garnier P (2001) Robot Auton Syst 34:1–22

Pham DT, Xing L (1995) Neural networks for identification, prediction and control, 2nd printing. Springer, London

Attoh-Okine NO (1999) Adv Eng Softw 30(4):291–302

Bishop CM (1995) Neural Comput 7(1):108–116

Celikoglu HB (2006) Math Comput Model 44:640–658

Kanta A-F, Montavon G, Planche M-P, Coddet C (2007) Adv Eng Mater 9:105–113

Reich Y, Barai SV (1999) Artif Intell Eng 13(3):257–272

Romeo LM, Gareta R (2006) Appl Therm Eng 26:1530–1536

Varacalle DJ, Herman H, Bancke GA, Riggs WL (1992) Surf Coat Technol 54–55:19–24

Fisher IA (1972) Int Met Rev 17:117–129

Steeper TJ, Varacalle DJ, Wilson GC, Riggs WL, Rotolico AJ, Nerz JE (1992) In: Berndt CC (ed) Thermal spray: international advances in coatings technology. ASM International, Materials Park, pp 415–420

Pfender E (1988) Surf Coat Technol 34:1–14

Marsh DR, Weare NE, Walker DL (1961) J Met 2:473–478

Acknowledgements

The French National Agency for Innovation (ANVAR) and the CNRS MRCT “Plasma network” (Réseau Plasma Froid) are gratefully acknowledged for their financial support.

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Kanta, AF., Montavon, G., Planche, MP. et al. Artificial Intelligence Computation to Establish Relationships Between APS Process Parameters and Alumina–Titania Coating Properties. Plasma Chem Plasma Process 28, 249–262 (2008). https://doi.org/10.1007/s11090-007-9116-9

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11090-007-9116-9