Abstract

In consideration of the initial singularity of the solution, a temporally second-order fast compact difference scheme with unequal time-steps is presented and analyzed for simulating the subdiffusion problems in several spatial dimensions. On the basis of sum-of-exponentials technique, a fast Alikhanov formula is derived on general nonuniform meshes to approximate the Caputo’s time derivative. Meanwhile, the spatial derivatives are approximated by the fourth-order compact difference operator, which can be implemented by a fast discrete sine transform via the FFT algorithm. So the proposed algorithm is computationally efficient with the computational cost about \(O(MN\log M\log N)\) and the storage requirement \(O(M\log N)\), where M and N are the total numbers of grids in space and time, respectively. With the aids of discrete fractional Grönwall inequality and global consistency analysis, the unconditional stability and sharp H1-norm error estimate reflecting the regularity of solution are established rigorously by the discrete energy approach. Three numerical experiments are included to confirm the sharpness of our analysis and the effectiveness of our fast algorithm.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

In recent decades, fractional partial differential equations have attracted many attentions for its superiority in describing the phenomenon related to nonlocality and spatial heterogeneity. The time fractional differential equations are one of the important fractional models developed in various fields [1]. We consider the following reaction-subdiffusion problem

where \({\Omega }\subset \mathbb {R}^{d}\) is a bounded interval (d = 1), rectangle (d = 2), or cube (d = 3), and ∂Ω denotes its boundary. Δ be the d-dimensional Laplacian operator and the reaction coefficient κ is a positive constant. In (1.1), \(\partial ^{\alpha }_{t}={}_{0}^{C}\mathcal {D}^{\alpha }_{t}\) represents the Caputo fractional derivative of order α with respect to t, defined by

where the weakly singular kernel ω1−α(t − s) is defined by ωμ(t) := tμ− 1/Γ(μ) for t > 0.

Despite there are some theoretical methods to solve the fractional diffusion equations analytically, we may still have to explore numerical methods to obtain the solutions in general. In previous literatures, a large number of numerical methods are presented to approximate the Caputo derivative (1.4) availably. For instance, the well-known L1 formula [2, 3], which is constructed by using a piecewise linear interpolation on each subinterval and has the accuracy of order 2 − α. High-order approximations are constructed recently in [4,5,6,7] by using the piecewise quadratic polynomial interpolation. Most of discrete approximations for the Caputo derivative depend on the uniform time-steps and restrict the solutions to be sufficiently smooth. Nevertheless, studies in [8,9,10,11,12] show that the existence of the singular kernel makes the exact solution u always has an initial layer at t = 0, which is typically for fractional differential equations and has great influence on the convergence rate. In [10], the authors prove that the uniform L1 formula is accurate of orders \(O(t_{n}^{\alpha -1}\tau )\) and \(O(t_{n}^{-1}\tau )\) for smooth and nonsmooth initial data respectively, which are far from the desired goals. Recently, there appears several interesting works, including the specified mesh techniques [12,13,14,15,16] and the (generally) nonuniform mesh approaches [17,18,19,20], to fill this gap by resolving the initial regularity and restoring the optimal convergence rate.

However, due to the intrinsically nonlocal property and historical dependence of the fractional derivative, numerical applications of the aforementioned methods are always time-consuming, especially for the problems on two- or three-dimensional space domain. Therefore, it is imperative and necessary to develop efficient algorithms to reduce the huge storage and computational cost. Many efforts have been made in the literatures to develop fast time-stepping methods. Ke et al. [21] apply the fast Fourier transform for block triangular Toeplitz-like systems and propose a fast direct method for solving the time-fractional PDEs. Another idea is suggested by Baffet and Hesthaven [22], where the compression is carried out in the Laplace domain by solving the equivalent ODE with some one-step A-stable scheme. Recently, Jiang et al. [23] use the sum-of-exponentials (SOEs) technique to approximate the kernel and reduce the computational complexity significantly. The overall computational cost O(MN2) and storage O(MN) of the L1 formula are reduced to \(O(MN\log N)\) and \(O(M\log N)\), respectively, where M is the grid points in space and N is the total levels in time. Yan et al. [24] propose a second-order fast formula on uniform meshes to approximate the Caputo derivative. They also investigate the stability and convergence of the resulting second-order time-stepping scheme for the subdiffusion equations, corresponding to the case of κ = 0 of (1.1) by assuming that the solution is sufficiently smooth. Moreover, a fast L1 finite difference scheme on the graded mesh is reported in [25] for time-fractional diffusion equations by considering both the initial singularity and the long-time historical memory. Liao et al. [26] propose a two-level nonuniform L1 scheme for solving semilinear subdiffusion equations and prove that the fast linearized scheme is unconditionally stable on generally nonuniform meshes. To the best of our knowledge, no nonuniform fast algorithms for high-order numerical Caputo derivatives have been published yet.

The main contribution of this paper is to construct and analyze a computationally efficient difference algorithm on a general class of nonuniform time meshes for linear reaction-subdiffusion equations in multi-dimensions. More precisely, the nonuniform Alikhanov formula with the SOEs approximation is employed for the Caputo fractional derivative, and the fourth-order compact difference operator with a fast discrete sine transform (DST) is utilized for spatial discretization so that our algorithm is computationally efficient in both time direction and spatial dimensions. Consider the (possibly nonuniform) time levels 0 = t0 < t1 < ⋯ < tk− 1 < tk < ⋯ < tN = T with the weighted time tn−𝜃 := 𝜃tn− 1 + (1 − 𝜃)tn for an offset parameter 𝜃 ∈ [0,1). Denote the time-step sizes τk = tk − tk− 1 for 1 ≤ k ≤ N and the maximum time-step size \(\tau :=\max \limits _{1\le k\le N }\tau _{k}\). Let the step size ratios ρk := τk/τk+ 1 for 1 ≤ k ≤ N − 1 and the maximum time-step ratio

Our accelerated discrete Caputo formula for the Caputo derivative (1.4) takes a form of

where \(\triangledown _{\tau } v^{k}=v^{k}-v^{k-1}\) and the discrete convolution kernels \(\textsf {A}_{n-k}^{(n)}\) are determined later. Let \(\bar {{\Omega }}_{h}\) be the discrete spatial grid covered by \(\bar {{\Omega }}\) with uniform spacings in each direction. For 0 ≤ n ≤ N, denote the numerical solution \({u_{h}^{n}}\approx u(\mathbf {x}_{h},t_{n})\), and \(u_{h}^{n-\theta }:=\theta u_{h}^{n-1}+(1-\theta ){u_{h}^{n}}\approx u(\mathbf {x}_{h},t_{n-\theta })\) for \(\mathbf {x}_{h} \in \bar {{\Omega }}_{h}\). We take 𝜃 = α/2 and have the following 𝜃-weighted compact difference scheme for the reaction-subdiffusion problem (1.1)–(1.3)

Here, the compact difference operator \(\mathfrak {D}_{h}\) will be defined in the next section, in which the numerical implementation of (1.6)–(1.8) is also described.

The stability and convergence analysis of (1.6)–(1.8) rely on the recently developed fractional Grönwall inequality in [18], which is applicable for any discrete fractional derivative having the discrete convolution form (1.5). For the completeness of presentation, we combine three previous results from [18, Lemma 2.2, Theorems 3.1, and 3.2] into the following lemma.

Lemma 1.1

Assume that discrete convolution kernels \(\textsf {A}_{n-k}^{(n)}\) satisfy the following two criteria:

-

A1. There is a constant πA > 0 such that \(\textsf {A}_{n-k}^{(n)}\geq \frac {1}{\pi _{A}}{\int \limits }_{t_{k-1}}^{t_{k}}\frac {\omega _{1-\alpha }(t_{n}-s)}{\tau _{k}}\mathrm {d} s\) for 1 ≤ k ≤ n ≤ N.

-

A2. The discrete kernels are monotone, i.e., \(\textsf {A}_{n-k-1}^{(n)}-\textsf {A}_{n-k}^{(n)}\geq 0\) for 1 ≤ k ≤ n − 1 ≤ N − 1.

Define also a sequence of complementary discrete convolution kernels \(\textsf {P}^{(n)}_{n-k}\) by

Then the complementary kernels \(\textsf {P}^{(n)}_{n-k}\ge 0\) are well-defined and fulfill

Suppose that the offset parameter 0 ≤ ν < 1, λ is a nonnegative constant independent of the time-steps and the maximum step size

If the nonnegative sequences \((v^{k})_{k=0}^{N}\) and \((\eta ^{k})_{k=1}^{N}\) satisfy

or

then it holds that for 1 ≤ n ≤ N,

where \(E_{\alpha }(z):={\sum }_{k=0}^{\infty }\frac {z^{k}}{{\Gamma }(1+k\alpha )}\) is the Mittag–Leffler function.

This fractional discrete Grönwall lemma suggests that these two criteria A1-A2 should be verified to guarantee the stability of the fully discrete scheme (1.6)–(1.8). In Section 3, we will prove some properties of the discrete convolution kernels \(\textsf {A}^{(n)}_{n-k}\) by assuming

-

M1. The maximum time-step ratio ρ = 3/2.

Then Lemma 1.1 leads to the unconditional stability, see Theorem 3.1. Note that, M1 is a mild mesh condition, but it implies that a sudden, drastic reduction of time-steps should be avoided to ensure the stability.

The present analysis is also built on a new concept named global consistency analysis, developed recently by Liao et al. in [19], where a sharp L2-norm error estimate of the nonuniform Alikhanov scheme for the problem (1.1)–(1.3) is established systematically. To make the analysis extendable (such as for distributed-order subdiffusion problems), we assume that the continuous solution u satisfies the regularity assumptions

where ν ∈{1,2,3} and 0 < t ≤ T, σ ∈ (0,1) ∪ (1,2) is a regularity parameter and Cu is a positive constant. For instance [9, 11, 12], this assumption holds with σ = α for the original problem (1.1) if f(x, t) = 0 and \(u_{0}\in {H_{0}^{1}}({\Omega })\cap H^{2}({\Omega })\). Invoking to the new global consistency error analysis of the accelerated Alikhanov formula presented in Lemma 4.2 and the fractional discrete Grönwall lemma described earlier, we establish sharp H1-norm error estimate by means of the time-space error splitting technique, see Theorem 4.1. This theorem implies that the convergence analysis is applicable to a general family of nonuniform time meshes satisfying M1. To resolve the initial singularity of solution and solve it efficiently, we choose the time-steps that satisfy the following condition:

- AssG.:

-

There is a positive constant Cγ independent of k, such that \(\tau _{k}\le C_{\gamma }\tau \min \limits \{1,t_{k}^{1-1/\gamma }\}\) for 1 ≤ k ≤ N, with tk ≤ Cγtk− 1 and τk/tk ≤ Cγτk− 1/tk− 1 for 2 ≤ k ≤ N.

Here, the parameter γ ≥ 1 controls the extent to which the time levels are concentrated near t = 0. If the mesh is quasi-uniform, then AssG holds with γ = 1. As γ increases, the initial step sizes become smaller compared with the later ones. A simple example satisfying AssG is the graded mesh tk = T(k/N)γ. Under the assumption of AssG, Theorem 4.1 shows the error estimate of the time-stepping scheme (1.6)–(1.8) as

and the second-order accuracy in time is achieved if γ ≥ 2/σ, see Corollary 4.1 and Remark 2. In the last section, three numerical examples are given to confirm the theoretical results and verify the accuracy and effectiveness of the fast scheme. Throughout the paper, any subscripted C, such as Cu, Cv, and CΩ, denotes a general positive constant, which has different values in different circumstances, and always depends on the given data and the continuous solution but is independent of the time and spatial steps.

2 Accelerated Alikhanov formula and fully discrete scheme

In this section, based on the SOEs approximation, fast Alikhanov formula on generally nonuniform time meshes is considered at first. Then, we develop a fully discrete second-order compact scheme for the problem (1.1)–(1.3) combined with the compact difference operator. Furthermore, an efficient numerical implementation is given at the end of this section.

2.1 Fast Alikhanov formula on nonuniform grids

Denote π1,kv and π2,kv are the linear interpolation of a function v with respect to tk− 1, tk and the quadratic interpolation of v with respect to tk− 1, tk, and tk+ 1, respectively. Recalling the definition \(\triangledown _{\tau } v^{k}\), it is easy to check that for k ≥ 1,

For simplicity of presentation, we always denote ϖn(ξ) := −ω2−α(tn−𝜃 − ξ) for 0 ≤ ξ ≤ tn−𝜃 and \(\varpi _{n}^{\prime }(\xi )=\omega _{1-\alpha }(t_{n-\theta }-\xi )\) for 0 ≤ ξ < tn−𝜃. The nonuniform Alikhanov approximation to the Caputo fractional derivative (1.4) is defined as [19]

where the discrete coefficients \(a_{n-k}^{(n)}\) and \(b_{n-k}^{(n)}\) are defined by

Rearranging the terms in (2.1), we reformulate (2.1) in the following discrete convolution form

where the discrete convolution kernels \(a_{n-k}^{(n)}\) are defined as: \(A_{0}^{(1)}:=a_{0}^{(1)}\) if n = 1, and for n ≥ 2,

Due to the essentially nonlocal property of the fractional derivative, the formula mentioned above involves the solution information at all historical time-levels and it is time-consuming in practical calculations. Here, the SOEs technique is employed to deduce an accelerated Alikhanov formula to improve the efficiency. The key point of the SOEs approximation (see [23, Theorem 2.5] or [26, Lemma 2.2]) is as follows:

Lemma 2.1

Given α ∈ (0,1), an absolute tolerance error 𝜖 ≪ 1, a cut-off time Δt > 0, and a final time T, there exist a positive integer Nq, positive quadrature nodes sℓ, and positive weights 𝜗ℓ (1 ≤ ℓ ≤ Nq) such that

where the quadrature nodes number Nq satisfies

In [24], the authors illustrate that \(N_{q}=O(\log N)\) for T ≫ 1 and \(N_{q}=O(\log ^{2}N)\) for T ≈ 1. Moreover, they also list a table to show the relationships between Nq and various parameters α, τ, and 𝜖, see Table 1 in [24]. These results indicate the SOEs strategy efficiently reduces the computational complexity and storage requirement. By virtue of this lemma, we divide (2.1) into a sum of a local part (an integral over [tn− 1, tn−𝜃]) and a historical part (an integral over [0,tn− 1]). In the former part, linear interpolation is utilized to approximate \(v^{\prime }(\xi ) (\xi \in (t_{n-1}, t_{n-\theta }))\) as before, and in the latter one, SOEs technique is applied to the convolution kernel \(\varpi _{n}^{\prime }(\xi )\), i.e.,

where

To compute \({\mathcal{H}}^{\ell }(t_{k})\) efficiently, we attempt to obtain the recurrence formulation. Applying the quadratic interpolation to approximate \(v^{\prime }(\xi )\) in each subinterval [tk− 1, tk] (1 ≤ k ≤ n − 1) yields

where the coefficients are given by

Note that these coefficients are calculated exactly based on the integral property of exponential functions. Overall, the accelerated nonuniform Alikhanov formula can be formulated as

where \({\mathcal{H}}^{\ell }(t_{k})\) satisfies \({\mathcal{H}}^{\ell }(t_{0})=0\) and the recurrence relation

2.2 Second-order compact scheme and implementation

We discretize the spatial domain \({\Omega } \in \mathbb {R}^{d}\) by equal spatial step sizes hk = (xk, R − xk, L)/Mk for the positive integers Mk (1 ≤ k ≤ d) and denote \(h:=\max \limits _{1\leq k \leq d}h_{k}\) is the maximum spatial length. Let \(x_{k,i_{k}}=x_{k,L}+i_{k}h_{k}\) for 0 ≤ ik ≤ Mk, 1 ≤ k ≤ d, then the fully discrete grids in space can be defined as \(\bar {{\Omega }}_{h}:=\{\mathbf {x}_{h}=(x_{1,i_{1}},x_{2,i_{2}},\cdots ,x_{d,i_{d}})|0\leq i_{k}\leq M_{k},1\leq k \leq d\}\). Set \({\Omega }_{h}=\bar {{\Omega }}_{h}\cap {\Omega }\) and the boundary \(\partial {\Omega }_{h}=\bar {{\Omega }}_{h}\cap \partial {\Omega }\). Also, \(M={\prod }_{k=1}^{d}(M_{k}+1)\) represents the total number of grid points in space. Denote an index vector h = (i1, i2, ⋯ ,id) and for any grid function vh at the k th position (1 ≤ k ≤ d), we introduce the following difference operators:

and the compact difference operator is denoted as \(\mathfrak {D}_{k}v_{i_{k}}:=\frac {{\delta _{k}^{2}}}{\mathcal {I}_{k}}v_{i_{k}}\). As a result, the second-order and fourth-order spatial approximations of Δv(xh) for xh ∈Ωh can be denoted separately as \({\Delta }_{h}v_{h}:={\sum }_{k=1}^{d}{\delta _{k}^{2}}v_{h}\) and \(\mathfrak {D}_{h}v_{h}:={\sum }_{k=1}^{d}\mathfrak {D}_{k}v_{h}\).

Let \(\mathbb {V}_{h}=\{v=(v_{h})_{\mathbf {x}_{h} \in {\Omega }_{h}}|v_{h}=0\ \text {when}\ \mathbf {x}_{h} \in \partial {\Omega }_{h} \}\) be the space of grid functions. For any \(v,w \in \mathbb {V}_{h}\), we define the discrete inner product \(\langle v, w\rangle _{h}:=({\prod }_{k=1}^{d}h_{k}){\sum }_{\mathbf {x}_{h} \in {\Omega }_{h}}v_{h}w_{h}\) and the corresponding discrete L2-norm \(\|v\|:=\sqrt {\langle v,v\rangle _{h}}\). Also, the discrete H1 semi-norm is defined as \(|{v}|_{1}:=\sqrt {{\sum }_{k=1}^{d}\|\delta _{k}v_{h}\|^{2}}\). Based on the embedding theorem, there exist a positive constant CΩ dependent on the domain Ω such that

Combining with the fast Alikhanov formula derived earlier, one has a fully discrete second-order compact scheme (1.6)–(1.8) for the problem (1.1)–(1.3). To speed up the numerical computations, we introduce the fast discrete sine transform (DST) [27, 28] in spatial direction and bypass the direct calculations. Based on the DST and compact difference operator, for any grid function vh at the k th position, fourth-order spatial approximation can be drawn:

After simple calculations, for 1 ≤ jk ≤ Mk − 1 and 1 ≤ k ≤ d, we have

Denote an index set ν = {(j1, j2, ⋯ ,jd)|1 ≤ jk ≤ Mk − 1,1 ≤ k ≤ d}. Resorting to the fast Alikhanov formula in (2.5) and the fast DST mentioned above, numerical scheme (1.6) can be reformulated in the following way for implementation:

where \(\widehat {{\mathcal{H}}}^{\ell }(t_{0})=0\). \(\widehat {u}_{\nu }^{n-1}\) and \(\widehat {f}^{n-\theta }\) are obtained from \(u_{h}^{n-1}\) and fn−𝜃 via the DST, respectively. The current solution \({u_{h}^{n}}\) is computed from \(\widehat {u}_{\nu }^{n}\) via the inverse DST.

The fast DST is essentially implemented via the fast Fourier transform (FFT). In [29], the authors illustrate that FFT algorithm can reduce the numerical cost from O(M2) to \(O(M\log M)\). Therefore, compared with traditional algorithms, the presented one reduces the overall computational cost from O(M2N2) to \(O(MN\log M \log N)\) and the overall storage from O(MN) to \(O(M\log N)\).

3 Properties of discrete convolution kernels and stability

To prepare for the subsequent analysis, in this section, we show that the discrete kernels \(\textsf {A}_{n-k}^{(n)}\) of the fast approximation \((\partial _{a\tau }^{\alpha }v)^{n-\theta }\) satisfy A1-A2 in Lemma 1.1. Then, the stability in the H1-norm can be verified rigorously via the fractional discrete Grönwall lemma.

3.1 Properties of discrete kernels

We eliminate the historical term \({\mathcal{H}}^{\ell }(t_{n-1})\) in (2.5) and reformulate the recursive relationship into the discrete convolution form. The recurrence relation in (2.5) leads to

Replacing k by n − 1 and plugging (3.1) into (2.5), we use the definitions (2.2)–(2.3) to obtain an alternative formula

where the positive coefficients \(\textsf {A}_{n-k}^{(n)}\) and \(\textsf {b}_{n-k}^{(n)}\) are defined by

Correspondingly, we rearrange (3.2) and obtain the following discrete convolution form

where the discrete convolution kernels \(\textsf {A}_{n-k}^{(n)}\) are defined as: \(\textsf {A}_{0}^{(1)}:=a_{0}^{(1)}\) if n = 1, and for n ≥ 2,

Comparing the coefficients between (2.2)–(2.3) and (3.3)–(3.4), we see that the latter ones are obtained by replacing the SOEs approximations with the integral kernels in the formers. Then Lemma 2.1 gives

and when M1 (\(\rho _{k}\leq \rho =\frac {3}{2}\)) holds, for 1 ≤ k ≤ n − 1,

Next, we show that discrete convolution kernels \(\textsf {A}_{n-k}^{(n)}\) satisfy the assumptions A1-A2. The following bounds which can be proved by the integral mean-value theorem will be utilized in several places,

Lemma 3.1

Let M1 holds. Denote \(\epsilon ^{*}=\frac {\alpha }{2(1-\alpha )}\omega _{1-\alpha }(T)\) and \(\epsilon ^{**}=\frac {1}{26}\omega _{1-\alpha }(T)\). If the tolerance error \(\epsilon \leq \min \limits \{\epsilon ^{*},\epsilon ^{**}\}\), then the discrete convolutional kernels \(\textsf {A}_{n-k}^{(n)}\) satisfy A1 with πA = 2 for 1 ≤ k ≤ n. Furthermore, \(\textsf {A}_{n-k}^{(n)}\) has an upper bound with \(\textsf {A}_{n-k}^{(n)}\leq \frac {2}{\tau _{n}}\omega _{2-\alpha }(\tau _{n})\).

Proof

The definition of \(\textsf {A}_{n-k}^{(n)}\) in (3.3) and the integral mean-value theorem yield

where sℓ,𝜗ℓ are positive in Lemma 2.1 and the monotonicity of exponential function was also used. For k = n − 1, the definition (2.2) and (3.7) yield

Since ex > 1 + x for \(x\in \mathbb {R}\) and \(\ln (1-\frac {x}{2})<0\) for 0 < x < 1, one has

Therefore, we obtain \(\textsf {a}_{0}^{(n)}=a_{0}^{(n)}> a_{1}^{(n)}+\epsilon \geq \textsf {a}_{1}^{(n)}\) by using the definitions in (3.3) and the inequality (3.5). It means that \(\textsf {a}_{n-k-1}^{(n)}>\textsf {a}_{n-k}^{(n)}\) for 1 ≤ k ≤ n − 1.

In consideration of [19, Lemma 4.3] and \(0<\theta <\frac {1}{2}\), it is easy to check

For 1 ≤ k ≤ n − 1, the definition of \(\textsf {A}_{n-k}^{(n)}\) and the inequalities (3.5)–(3.6) yield

Note that \(\frac {2+2x-x^{2}}{2(1+x)}\) is increasing for \(x\in (0,\frac {3}{2}]\), and \(\epsilon \leq \epsilon ^{**}\leq \frac {1}{26}w_{1-\alpha }(t_{n-\theta }-t_{k-1})<\frac {1}{26}a_{n-k}^{(n)}\), then we find

For the case of k = n, it is clear that \(\textsf {A}_{0}^{(n)}\geq \textsf {a}_{0}^{(n)}=a_{0}^{(n)}>\frac {1}{2}{\int \limits }_{t_{k-1}}^{t_{k}}\frac {\omega _{1-\alpha }(t_{n}-\xi )}{\tau _{k}}\mathrm {d} \xi \). In summary, by choosing πA = 2, the discrete convolution kernels \(\textsf {A}_{n-k}^{(n)}\) satisfy A1 for 1 ≤ k ≤ n.

Now we derive an upper bound of \(\textsf {A}_{n-k}^{(n)}\). From (3.6) and (3.8), one gets

Note that \(\frac {x^{3}}{2(1+x)}\) is increasing for \(x\in (0,\frac {3}{2}]\), we apply (3.7) and M1 to get

Since \(a_{0}^{(n)}=\frac {1}{\tau _{n}}(1-\theta )^{1-\alpha }{\int \limits }_{t_{n-1}}^{t_{n}}\omega _{1-\alpha }(t_{n}-\xi )\mathrm {d} \xi \leq \frac {1}{\tau _{n}}{\int \limits }_{t_{n-1}}^{t_{n}}\omega _{1-\alpha }(t_{n}-\xi )\mathrm {d} \xi \), we obtain an upper bound, i.e., \(\textsf {A}_{n-k}^{(n)}\leq \frac {2}{\tau _{n}}{\int \limits }_{t_{n-1}}^{t_{n}}\omega _{1-\alpha }(t_{n}-\xi )\mathrm {d} \xi =\frac {2}{\tau _{n}}\omega _{2-\alpha }(\tau _{n})\). This completes the proof. □

Next, we establish the monotonicity of discrete kernels. For simplicity of presentation, two positive coefficients are introduced [19]

Some properties of the coefficients are also given as follows:

Lemma 3.2

The positive coefficients in (3.9) satisfy

Proof

We refer to [19, Lemma 4.4] for (i)–(ii) and [19, Lemma 4.5] for (iii). □

Next, we are ready to prove the following monotonicity lemma.

Lemma 3.3

Let M1 holds. Denote \(\epsilon _{k}=\frac {\alpha (1+5\rho _{k})\tau _{k}}{105T}\omega _{1-\alpha }(T)\) for 1 ≤ k ≤ n − 1. If the tolerance error \(\epsilon \leq \min \limits _{1\leq k \leq n-1}\epsilon _{k}\), then the discrete convolutional kernels \(\textsf {A}_{n-k}^{(n)}\) satisfy A2 for 1 ≤ k ≤ n.

Proof

Comparing the discrete convolution kernels between two formulas and invoking to the inequalities (3.5)–(3.6), one has \(\textsf {A}_{0}^{(1)}=A_{0}^{(1)}\) if n = 1, and for n ≥ 2,

where M1 was also used. Also, we can derive a similar estimate of \(\left |{\textsf {A}_{n-k-1}^{(n)}-A_{n-k-1}^{(n)}}\right |\). Combining these two inequalities, we have \(A_{0}^{(1)}=\textsf {A}_{0}^{(1)}\) if n = 1. For n ≥ 2, \(A_{0}^{(n)}\leq \textsf {A}_{0}^{(n)}+\frac {29\epsilon }{20}\) and

According to [19, Theorem 2.2 (II)] and Lemma 3.2 (i), it holds that

Recalling the definition of ϖn(ξ), we get the second derivative \(\varpi _{n}^{\prime \prime }(\xi )=-\omega _{-\alpha }(t_{n-\theta }-\xi )>0\) and the third derivative \(\varpi _{n}^{\prime \prime \prime }(\xi )=\omega _{-\alpha -1}(t_{n-\theta }-\xi )>0\) for 0 ≤ ξ < tn−𝜃. By means of [19, Lemma 2.1], for 1 ≤ k ≤ n − 1, it is not difficult to check

Substituting (3.13) into (3.12) and applying (3.11), one gets

When the hypothesis holds, i.e., \(\epsilon \leq \min \limits _{1\leq k \leq n-1}\frac {\alpha (1+5\rho _{k})\tau _{k}}{105T}\omega _{1-\alpha }(T)\), we obtain

This completes the proof of Lemma 3.3. □

Remark 1

In numerical simulations, the SOEs tolerance error 𝜖 is always set to be small enough so that the convergence order should not be degraded. Hence, the condition of 𝜖 in Lemma 3.3 is allowed to guarantee the monotonicity of discrete convolutional kernels \(\textsf {A}_{n-k}^{(n)}\).

In addition to the boundedness and monotonicity described above, the following auxiliary lemma is also indispensable in the subsequent analysis.

Lemma 3.4

Under the conditions of Lemma 3.3, it holds that

Proof

The conclusion is equivalent to \(\frac {1-2\theta }{1-\theta }\textsf {A}_{0}^{(n)}-\textsf {A}_{1}^{(n)}>0\). For n ≥ 2, (3.10) yields

Resorting to the proof of [19, Theorem 2.2 (III)], we have

By means of Lemma 3.2 (ii)–(iii) with k = n − 2, it is easy to check

where M1 and \(\frac {2+2x-x^{3}}{2(1+x)}\geq \frac {13}{40}\) for \(x\in (0,\frac {3}{2}]\) were used. Then, it follows that

where Lemma 3.2 (ii) with k = n − 1 and (3.13) were used. Under the conditions of Lemma 3.3, one has \(\epsilon \leq \epsilon _{n-1}=\frac {\alpha (1+5\rho _{n-1})\tau _{n-1}}{105T}\omega _{1-\alpha }(T)\). This together with M1 leads to

Overall, for n ≥ 2, we have \(\frac {1-2\theta }{1-\theta }\textsf {A}_{0}^{(n)}-\textsf {A}_{1}^{(n)}>0\). This completes the proof of Lemma 3.4. □

If the tolerance error \(\epsilon \leq \min \limits \{\epsilon ^{*}, \epsilon ^{**}, \min \limits _{1\leq k\leq n-1}\epsilon _{k}\}\) and M1 holds, Lemmas 3.1 and 3.3 state that the discrete convolution kernels \(\textsf {A}_{n-k}^{(n)}\) satisfy the assumptions A1-A2 and have the property in Lemma 3.4. According to Lemmas 3.3 and 3.4, one has the following result.

Corollary 3.1

Under the conditions of Lemma 3.3, the fast Alikhanov formula (2.5) satisfies

Proof

Lemma 3.3 implies that the discrete convolution kernels \(\textsf {A}_{n-k}^{(n)}\) are monotone, and Lemma 3.4 shows that 𝜃(n) ≥ 𝜃 for 1 ≤ n ≤ N, where

Then, the proof of [18, Lemma 4.1] leads to the claimed result. □

3.2 H 1-norm stability

In the following analysis, we also need some results on the spatial approximation \(\mathfrak {D}_{h}\). Recalling the compact difference operator, it is well-known that the matrix corresponding to \(\mathcal {I}_{k}\) is a tridiagonal, real, symmetric, and positive definite matrix, and the eigenvalues are in the form of

Denote Hk is the matrix associated with the operator \(\mathcal {I}_{k}^{-1}\) and \(\lambda _{H_{k},j_{k}}\) represents the eigenvalues of Hk. For 1 ≤ k ≤ d, it is easy to check \(1<\lambda _{H_{k},j_{k}}< \frac {3}{2}\) and

Now we establish the stability of the time-stepping scheme (1.6)–(1.8). Taking the discrete inner product of (1.6) with \(2(\partial _{a\tau }^{\alpha }u_{h})^{n-\theta }\) and applying Cauchy-Schwarz inequality yield

With the aids of (3.14) and embedding inequality (2.6), we apply Corollary 3.1 with \(v:={\Delta }_{h}^{1/2}u_{h}\) and obtain

which has the form of (1.12) with λ := κ2CΩ, \(v^{k}:=|{{u_{h}^{k}}}|_{1}^{2}\), and ηn := ∥fn−𝜃∥2. Under the conditions of Lemmas 3.1 and 3.3, we see that Lemma 1.1 holds with πA = 2. Consequently, Lemma 1.1 indicates the H1-norm stability of the presented scheme in the following sense.

Theorem 3.1

Let the conditions of Lemmas 3.1 and 3.3 hold. If the restriction of maximum time-step \(\tau \leq 1/\sqrt [\alpha ]{4\kappa ^{2}{\Gamma }(2-\alpha )C_{{\Omega }}}\), then the numerical solution \({u_{h}^{n}}\) of the scheme (1.6)–(1.8) is stable in the sense that

4 Global consistency analysis and convergence

4.1 Global consistency error analysis

We now proceed with the global consistency error analysis of the fast Alikhanov formula (2.5). First of all, the local consistency error of the standard Alikhanov formula on nonuniform girds in (2.1) is given (slightly simplified) from [19, Theorem 3.4].

Lemma 4.1

Let M1 holds. Assume that v ∈ C3(0,T], and there exists a positive constant Cv such that \(|v^{\prime \prime \prime }(t)|\leq C_{v}(1+t^{\sigma -3})\) for 0 < t ≤ T, where σ ∈ (0,1) ∪ (1,2) is a regularity parameter. For the standard Alikhanov formula on nonuniform girds, there exist

where two quantities \(G_{\text {loc}}^{n}\) and \(G_{\text {his}}^{k}\) are defined as

The main difference between the fast Alikhanov formula and the standard one is that the convolution kernels are approximated by SOEs with the tolerance error 𝜖. Denote \(\hat {t}_{j}=\max \limits \{1,t_{j}\}\) for 1 ≤ j ≤ N. When j ≥ 2, the regularity assumptions (1.14) and (3.10) lead to

From Lemma 4.1 and (3.11), we have \(|{(\partial ^{\alpha }_{t}v)(t_{1-\theta })-(\partial _{\tau }^{\alpha }v)^{1-\theta }}|\leq \textsf {A}_{0}^{(1)}G_{\text {loc}}^{1}\) if j = 1, and for j ≥ 2,

In view of the regularity assumptions (1.14) and the definition of \(G_{\text {loc}}^{n}\), it is not difficult to find \(G_{\text {loc}}^{1}\leq C_{v}\sigma ^{-1}\tau _{1}^{\sigma }\) and \(G_{\text {loc}}^{k}\leq C_{v}t_{k-1}^{\sigma -3}{\tau _{k}^{3}}\) for 2 ≤ k ≤ N. Accordingly, the regularity assumptions (1.14) and the definition of \(G_{\text {his}}^{k}\) mentioned above imply that \(G_{\text {his}}^{1}\leq C_{v}(\sigma ^{-1}\tau _{1}^{\sigma }+t_{1}^{\sigma -3}{\tau _{2}^{3}})\) and \(G_{\text {his}}^{k}\leq C_{v}(t_{k-1}^{\sigma -3}{\tau _{k}^{3}}+t_{k}^{\sigma -3}\tau _{k+1}^{3})\) for 2 ≤ k ≤ N − 1. Hence, we have

Denote the local consistency error

a triangle inequality leads to \(|{{\Upsilon }^{1-\theta }[v]}|\leq \textsf {A}_{0}^{(1)}G_{\text {loc}}^{1}\) if j = 1, and for j ≥ 2,

Multiplying the inequality (4.2) by \(\textsf {P}_{n-j}^{(n)}\) and summing the index j from 1 to n, we exchange the order of summation and apply the definition (1.9) of \(\textsf {P}_{n-j}^{(n)}\) to get

where (1.11) with m = 1 and πA = 2 was used in the last step. Under the conditions of Lemma 3.1, the boundedness implies that \(\textsf {A}_{0}^{(k)}\leq \frac {2}{\tau _{k}}\omega _{2-\alpha }(\tau _{k})\), \(\textsf {A}_{k-2}^{(k)}\geq \frac {1}{2}\omega _{1-\alpha }(t_{k}-t_{1})\) and

Moreover, the identity (1.10) for the complementary discrete kernels \(\textsf {P}_{n-j}^{(n)}\) gives

Then, it follows from (4.3) that

The first term on the right-hand side is bounded by \(C_{v}\left (\sigma ^{-1}\tau _{1}^{\sigma }+t_{1}^{\sigma -3}{\tau _{2}^{3}}\right )\leq C_{v}\frac {\tau _{1}^{\sigma }}{\sigma }\), and two terms in the middle can be bounded by

Combining these inequalities together, one has

In conclusion, by taking the initial singularity into account, we have the following global consistency error analysis of the fast Alikhanov approximation on nonuniform meshes.

Lemma 4.2

Assume that v ∈ C3(0,T] and there exist Cv > 0 such that \(|v^{\prime }(t)|\leq C_{v}(1+t^{\sigma -1})\) and \(|v^{\prime \prime \prime }(t)|\leq C_{v}(1+t^{\sigma -3})\) for 0 < t ≤ T, where σ ∈ (0,1) ∪ (1,2) is a regularity parameter. Denote \(\hat {t}_{n}=\max \limits \{1,t_{n}\}\). Under the conditions of Lemma 3.1, the global consistency error of the fast Alikhanov approximation (2.5) satisfies

4.2 Sharp H 1-norm error analysis

As described in section 3.1 of [20], under the assumption of AssG, the traditional H1-norm analysis together with the discrete Grönwall inequality in Lemma 1.1 shows the error estimate of the time-stepping scheme (1.6)–(1.8) as

It is obvious that a loss of theoretical accuracy \(O(\tau ^{\gamma \frac {\alpha }{2}})\) exists in time under the regularity assumption (1.14), which leads to a suboptimal error estimate due to the initial singularity and the discrete convolution form. The authors utilize the time-space error splitting technique to overcome this problem (see [20] for more details). In the following analysis, an alternative two-stage error analysis is applied to establish sharp H1-norm error estimate for the fully discrete scheme (1.6)–(1.8) under the realistic assumption of solution.

Define the continuous inner product \((v,w):={\int \limits }_{{\Omega }}v(\mathbf {x})w(\mathbf {x})\mathrm {d} \mathbf {x}\) with the associated L2-norm \(\|v\|_{L^{2}}:=\sqrt {(v,v)}\). Also, the H1 semi-norm is defined as \(|{v}|_{{H}^{1}}:=\sqrt {(-{\Delta } v, v)}\). It is easy to check \(\|v\|_{L^{2}}\leq C_{{\Omega }}|{v}|_{H^{1}}\) via the embedding inequality. Additionally, we have \(|{v}|_{1}\leq C_{{\Omega }}|{v}|_{H^{1}}\) by using the Cauchy-Schwarz inequality.

Stage 1: Temporal error analysis for time-discrete system We apply the fast Alikhanov formula (3.2) to approximate the problem (1.1)–(1.3) and obtain the time-discrete system

The existence and uniqueness of the solution un can be proved straightforward since it is a linear elliptic problem. Denote the solution error en(x) := u(x, tn) − un for x ∈Ω, then the governing equation reads

where Υn−𝜃[u] is the local consistency error described in (4.1), and

Taking the continuous inner product of (4.7) with −Δen−𝜃, we have

In terms of the first Green formula and Cauchy-Schwarz inequality, we apply Corollary 3.1 with v := Δ1/2e and obtain

which takes the form of (1.13) with λ := 2κ, \(v^{k}:=|{e^{k}}|_{H^{1}}\), and \(\eta ^{n}:=2\left (|{{\Upsilon }^{n-\theta }[u]}|_{H^{1}}+|{R_{\tau }^{n-\theta }}|_{H^{1}}\right )\). By virtue of Lemmas 3.1 and 3.3, we see that Lemma 1.1 is satisfied with πA = 2. Thus, under the restriction of maximum time-step size \(\tau \le 1/\sqrt [\alpha ]{8\kappa {\Gamma }(2-\alpha )}\), Lemma 1.1 gives

Similar to the proof of [19, Lemma 3.8], it is easy to find

Therefore, Lemma 4.2 and (4.9) show that

Stage 2: Spatial error analysis for fully discrete system Now return to the fully discrete system, which can be viewed as the spatial approximation of time-discrete system (4.4)–(4.6). Under the priori assumptions in (1.14), this system has a unique solution un ∈ H4(Ω) for 1 ≤ n ≤ N. Denote the solution error \({e_{h}^{n}}:=u^{n}-{u_{h}^{n}}\) for xh ∈Ωh, the governing equation reads

where \(R_{s}^{n-\theta }=(\mathfrak {D}_{h}-{\Delta })u^{n-\theta }\). By means of Taylor’s expansion with integral reminder, it is easy to check that \(\|R_{s}^{n-\theta }\|\leq C_{u}h^{4}\).

Taking the discrete inner product of (4.11) with 2\((\partial _{a\tau }^{\alpha }e_{h})^{n-\theta }\), one has

By means of (3.14) and the embedding inequality (2.6), we apply Corollary 3.1 with \(v:={\Delta }_{h}^{1/2}e_{h}\) and obtain

which has the form of (1.12) with λ := κ2CΩ, \(v^{k}:=|{{e_{h}^{k}}}|_{1}^{2}\), and \(\eta ^{n}=\|R_{s}^{n-\theta }\|^{2}\). Under the conditions of Lemmas 3.1 and 3.3, we see that Lemma 1.1 holds with πA = 2. If the maximum time-step size \(\tau \le 1/\sqrt [\alpha ]{4\kappa ^{2}{\Gamma }(2-\alpha )C_{{\Omega }}}\), Lemma 1.1 yields

Combining (4.10) with (4.12), we utilize the triangle inequality

Overall, we establish the convergence of the numerical solution.

Theorem 4.1

Assume that the exact solution u satisfies the regularity property in (1.14) with the parameter σ ∈ (0,1) ∪ (1,2). Let M1 holds and \(\hat {t}_{n}=\max \limits \{1,t_{n}\}\) for 1 ≤ n ≤ N. Under the restriction of maximum time-step \(\tau \le 1/\sqrt [\alpha ]{4\kappa \max \limits \{2, \kappa C_{{\Omega }}\}{\Gamma }(2-\alpha )}\) and the SOEs tolerance error \(\epsilon \leq \min \limits \{\epsilon ^{*}, \epsilon ^{**}, \min \limits _{1\leq k\leq n-1}\epsilon _{k}\}\), the numerical solution \({u_{h}^{n}}\) is convergent with respect to the discrete H1-norm,

As mentioned in Section 1, the aforesaid analysis is applicable to a wider class of unequal time-steps satisfying M1. On the other hand, if AssG holds, one has

where \(\beta :=\min \limits \{2,\gamma \sigma \}\). Obviously, \(\tau _{1}^{\sigma }\leq C_{\gamma }\tau ^{\gamma \sigma }\leq C_{\gamma }\tau ^{\beta }\). Moreover, it is easy to check

Then, Theorem 4.1 gives the following corollary.

Corollary 4.1

Let the conditions of Theorem 4.1 hold. If the time mesh satisfies AssG, the discrete solution \({u_{h}^{n}}\) is convergent in the sense that

It says that the optimal grading parameter γopt = 2/σ.

Remark 2

Actually, an alternative estimate of (4.13) can be obtained similarly

This means that the fast nonuniform Alikhanov formula \((\partial _{a\tau }^{\alpha }v)^{n-\theta }\) approximates \((\partial _{t}^{\alpha }v)(t_{n-\theta })\) to order \(O(\tau ^{\min \limits \{\gamma \sigma , 3-\alpha \}})\), and the optimal grading parameter γopt = (3 − α)/σ. However, restricted by the term (4.14) arising from \(R_{\tau }^{n-\theta }\) in (4.8), the temporal convergence rate of the new scheme is to order O(τ2) if γ ≥ 2/σ.

5 Numerical examples

In this section, three examples are presented to verify the effectiveness and accuracy of the new scheme. For comparisons, we denote the numerical scheme using the standard Alikhanov (Alikhanov) approximation (2.4) as Scheme 1 in the tables, while the fully discrete scheme (1.6)–(1.8) using the accelerated Alikhanov (AccA) formula is called Scheme 2 for simplicity. To illustrate superiority of the fast algorithm, comparisons of computational cost between Scheme 1 and Scheme 2 are also indicated.

Example 1

To test the validity and accuracy of the fast Alikhanov approximation, we begin by considering a fractional ordinary differential equation \(\mathcal {D}^{\alpha }_{t}u=\omega _{1+\sigma -\alpha }(t)\). The time interval is (0,1) and the exact solution is u = ω1+σ(t) which satisfies the regularity assumptions (1.14). To trade off the accuracy and efficiency, here and in what follows, we choose 𝜖 = 10− 12 in the simulations for the fast algorithm.

Tables 1 and 2 display the temporal error and convergence order in uniform mesh (γ = 1). Due to the lack of smoothness near the initial time, we find that all convergence orders are hard to achieve O(τ3−α). Orders in two tables can be described as O(τσ) which supports the sharp error estimate in Remark 2. By comparison test, we observe that the convergence rate is improved with the increase of the regularity parameter σ. It is also in agreement with the theoretical analysis. Additionally, computational errors in two tables indicate that the accelerated formula has the same accuracy as well as the standard one.

The graded grids are employed to improve the convergence order. Tables 3, 4, and 5 list the temporal error and convergence order for σ = 0.8 in nonuniform partitions with different parameters α and γ. The effectiveness of the fast Alikhanov formula is still held in this situation due to the same temporal error between two formulas. Comparing with Table 1, it is not difficult to find that the convergence rate is increased with different γ. Numerical results in Tables 3, 4, and 5 witness the predicted time accuracy in Remark 2. Moreover, invoking to the data in three tables, one gets the optimal mesh parameter γopt = (3 − α)/σ which is also coincide with our analysis. All these results substantiate the validity of nonuniform meshes in resolving the initial singularity and the sharpness of error estimate in Remark 2. Also, we see that the fast Alikhanov approximation is effective no matter in uniform grid or nonuniform ones.

Example 2

We consider the problem (1.1)–(1.3) in Ω = (0,π)2 to witness the effectiveness and accuracy of Scheme 2. Let T = 1, κ = 2, and \(f=\omega _{1+\sigma -\alpha }(t){\sin \limits } x {\sin \limits } y\). The continuous problem has an exact solution \(u=\omega _{1+\sigma }(t){\sin \limits } x {\sin \limits } y\) which satisfies the regularity assumptions (1.14).

Define the \(L_{\infty }(H^{1})\) semi-norm solution error \(e(N,h)=\max \limits _{1\leq k\leq N}|{u^{k}-{u_{h}^{k}}}|_{1}\). In numerical tests, temporal order and spatial order are investigated by supposing that e(N, h) ≈ C(τp + hq) and evaluating the convergence rates \(p\approx \log [e(N,h)/e(2N,h)]\) and \(q\approx \log [e(N,h)/e(N,h/2)]\). To check the spatial accuracy, we choose the parameters α = 0.4,σ = 1.6 and set N = 1000 to avoid contamination of the temporal error. Spatial errors and convergence rates for both uniform partition and nonuniform ones are recorded in Table 6. We see that the spatial errors of Schemes 1 and 2 are equal and both two schemes preserve the fourth-order accuracy well. This is consistent with our predicted space accuracy in Corollary 4.1. Table 7 displays the errors and orders in time for different parameters γ with α = 0.4,σ = 1.6 and a fine mesh size h = π/200. Temporal errors of Schemes 1 and 2 remain the same which confirms the effectiveness and accuracy of the fully discrete scheme. Unfortunately, numerical results in the third part of Table 7 are different from the first two cases. The convergence rates in this part are higher than 2, maybe can reach γσ. This amusing phenomenon shows that there are certain differences between theory and experiment. It seems that the truncation error of u(tn−𝜃) − un−𝜃 in (4.9) can be further enhanced at least for the coarse meshes.

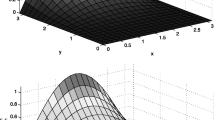

The left panel of Fig. 1 shows the errors with the parameters α = 0.5,σ = 0.5,h = π/10 at T = 1 for different γ. We can conclude that the performance of nonuniform grids is better than the uniform one. Error curves on different nonuniform partitions are indicated in the right panel. These curves illustrate that the fully discrete scheme is unconditionally stable.

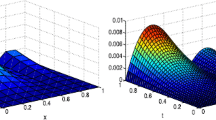

Since the computational cost in space had been proved in [29], we just examine the computational cost in time. Taking the parameters α = 0.6,σ = 1.6,γ = 1.5,h = π/10, and T = 1, we consider the relationship between CPU time and the time-step size N. The left panel of Fig. 2 reports the CPU time in seconds for Schemes 1 and 2 versus N. We find that the CPU time increase via the number of N is linear with the slopes of one and two in the logarithm for Scheme 2 and Scheme 1, respectively. It verifies that the new scheme is much faster than the normal one.

Example 3

Consider the three-dimensional problems (1.1)–(1.3) in Ω = (0,π)3 and the time interval is (0,1]. Let κ = 3 and \(f=\omega _{1+\sigma -\alpha }(t){\sin \limits } x {\sin \limits } y {\sin \limits } z\), so that the reaction-subdiffusion problem has an exact solution \(u=\omega _{1+\sigma }(t){\sin \limits } x {\sin \limits } y {\sin \limits } z\). This solution still satisfies the regularity assumptions (1.14).

We choose the paremeters α = 0.8,σ = 0.8 and still test the temporal error and spatial error separately. For spatial accuracy, N = 1000 is taken as Example 2 to ignore the temporal error. Errors and convergence orders are reported in Table 8 by varying mesh sizes from h = π/5 to π/40. For the case of uniform partition, we observe that the errors are almost invariable when the mesh size is refined to h = π/10. This problem might be caused by too small regularity parameter σ, so that the temporal error contaminates the final data. On the contrary, numerical results of nonuniform partitions are close to the fourth-order accuracy which agrees well with our theoretical prediction. In general, the nonuniform grids outperform the uniform one for the problem with singularity solutions. Always, the same parameters are selected to check the temporal convergence rates in Table 9. We choose h = π/200 enable to avoid the errors in space and double increase the number of N. Numerical results in the first two cases maintain the theoretical analysis in Corollary 4.1 for both uniform partition and nonuniform ones. However, the third part in this table arises an interesting phenomenon similar to Example 2, namely, the convergence rate is higher than 2, approaches 3 − α.

Analogously, comparisons of error curves between two types of meshes and unconditional stability of the proposed scheme are listed in Fig. 3. In the left panel, error curve of the uniform grid is still higher than the other nonuniform ones. On the other side, the performances of error curves on different nonuniform grids illustrate the unconditionally stability of the new scheme.

Comparisons of computational complexity are also reported for 3D case. CPU times with the parameters α = 0.6,σ = 1.6,h = π/10 at T = 1 and different numbers of time steps N are shown in the right panel of Fig. 2. The fast scheme has almost linear complexity in N and is much more efficient than the normal one.

In conclusion, compared with Scheme 1, the fully discrete scheme (Scheme 2) not only has the same accuracy but also reduces the computational cost and storage requirement significantly for long time calculation and spatial multi-dimensional cases.

6 Concluding remarks

By taking the intrinsically initial singularity of solution into account, an accelerated nonuniform version of Alikhanov formula is presented for approximating the Caputo fractional derivative. In the finite difference framework, we utilize the discrete sine transform and develop a second-order fast compact scheme for solving the subdiffusion problems. Unconditional stability and sharp H1-norm error estimate are established by employing the discrete fractional Grönwall inequality and global consistency analysis. Some numerical examples are presented to support the effectiveness of this scheme and the sharpness of our analysis. The theoretical results in time approximation together with their proofs here would be also valid for some other spatial discretizations such as finite element method and spectral method.

References

Hilfer, R.: Applications of Fractional Calculus in Physics. World Scientific, Singapore (2000)

Sun, Z.Z., Wu, X.N.: A fully discrete difference scheme for a diffusion-wave system. Appl. Numer. Math. 56, 193–209 (2006)

Lin, Y.M., Xu, C.J.: Finite difference/spectral approximations for the time-fractional diffusion equation. J. Comput. Phys. 225, 1533–1552 (2007)

Li, C.P., Chen, A., Ye, J.J.: Numerical approaches to fractional calculus and fractional ordinary differential equation. J. Comput. Phys. 230, 3352–3368 (2011)

Gao, G.H., Sun, Z.Z., Zhang, H.W.: A new fractional numerical differentiation formula to approximate the Caputo fractional derivative and its applications. J. Comput. Phys. 259, 33–50 (2014)

Alikhanov, A.A.: A new difference scheme for the time fractional diffusion equation. J. Comput. Phys. 280, 424–438 (2015)

Lv, C.W., Xu, C.J.: Error analysis of a high order method for time-fractional diffusion equations. SIAM J. Sci. Comput. 38, A2699–A2724 (2016)

Brunner, H., Ling, L., Yamamoto, M.: Numerical simulations of 2D frational subdiffusion problems. J. Comput. Phys. 229, 6613–6622 (2010)

McLean, W.: Regularity of solutions to a time-fractional diffusion equation. ANZIAM J. 52, 123–138 (2010)

Jin, B.T., Lazarov, R., Zhou, Z.: An analysis of the l1 scheme for the subdiffusion equation with nonsmooth data. IMA J. Numer. Anal. 36(1), 197–221 (2016)

Sakamoto, K., Yamamoto, M.: Initial value/boundary value prolems for fractional diffusion-wave equations and applications to some inverse problems. J. Math. Anal. Appl. 382, 426–447 (2011)

Stynes, M., O’Riordan, E., Gracia, J.L.: Error analysis of a finite difference method on graded meshes for a time-fractional diffusion equation. SIAM J. Numer. Anal. 55, 1057–1079 (2017)

Yuste, S.B., Quintana-Murillo, J.: A finite difference method with non-uniform timesteps for fractional diffusion equation. Comput. Phys. Commun. 183, 2594–2600 (2012)

Mustapha, K., Aimutawa, J.: A finite difference method for an anomalous sub-diffusion equation, theory and applications. Numer. Algo. 61, 525–543 (2012)

Zhang, Y.N., Sun, Z.Z., Liao, H.-L.: Finite difference methods for the time fractional diffusion equation on nonuniform meshes. J. Comput. Phys. 265, 195–210 (2014)

Li, C.P., Yi, Q., Chen, A.: Finite difference methods with non-uniform meshes for nonlinear fractional differential equations. J. Comput. Appl. Math. 316, 614–631 (2016)

Liao, H.-L., Li, D.F., Zhang, J.W.: Sharp error estimate of the nonuniform l1 formula for linear reaction-subdiffusion equation. SIAM J. Numer. Anal. 56, 1112–1133 (2018)

Liao, H.-L., McLean, W., Zhang, J.W.: A discrete grönwall inequality with application to numerical schemes for subdiffusion problems. SIAM J. Numer. Anal. 57, 218–237 (2019)

Liao, H.-L., McLean, W., Zhang, J.W.: A second-order scheme with nonuniform time steps for a linear reaction-subdiffusion problem. arXiv:1803.09873v2 (2018)

Ren, J.C., Liao, H.-L., Zhang, J.W., Zhang, Z.M.: Sharp h1-norm error estimates of two time-stepping schemes for reaction-subdiffusion problems. arXiv:1811.08059v1 (2018)

Ke, R.H., Ng, M.K., Sun, H.W.: A fast direct method for block triangular Toeplitz-like with tri-diagonal block systems from time-fractional partial differential equation. J. Comput. Phys. 303, 203–211 (2015)

Baffet, D., Hesthaven, J.S.: A kernel compression scheme for fractional differential equations. SIAM J. Numer. Anal. 55, 496–520 (2017)

Jiang, S.D., Zhang, J.W., Zhang, Q., Zhang, Z.M.: Fast evaluation of the Caputo fractional derivative and its applications to fractional diffusion equations. Commun. Comput. Phys. 21, 650–678 (2017)

Yan, Y.G., Sun, Z.Z., Zhang, J.W.: Fast evaluation of the Caputo fractional derivative and its applications to fractional diffusion equations: a second-order scheme. Commun. Comput. Phys. 22, 1028–1048 (2017)

Shen, J.Y., Sun, Z.Z., Du, R.: Fast finite difference schemes for time-fractional diffusion equations with a weak singularity at initial time. East Asia J. Appl. Math. 8, 834–858 (2018)

Liao, H.-L., Yan, Y.G., Zhang, J.W.: Unconditional convergence of a fast two-level linearized algorithm for semilinear subdiffusion equations. J. Sci. Comput. 80, 1–25 (2019)

Wang, H.Q., Zhang, Y., Ma, X., Qiu, J., Liang, Y.: An efficient implementation of fourth-order compact finite difference scheme for Poisson equation with Dirichlet boundary conditions. Commun. Math. Appl. 71, 1843–1860 (2016)

Wang, H.Q., Ma, X., Lu, J.L., Cao, W.: An efficient time-splitting compact finite difference method for Gross-Pitaevskii equation. Appl. Math. Comput. 297, 131–144 (2017)

Shen, J., Tang, T., Wang, L.L.: Spectral Methods: Algorithms, Analysis and Applications. Springer, Berlin (2011)

Author information

Authors and Affiliations

Corresponding author

Additional information

Fundding information

Xin Li is financially supported by a grant KJ2018A0523 from the University Natural Science Research Key Project of Anhui Province. Hong-lin Liao is financially supported by a grant 1008-56SYAH18037 from NUAA Scientific Research Starting Fund of Introduced Talent and a grant DRA2015518 from 333 High-level Personal Training Project of Jiangsu Province.

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Li, X., Liao, Hl. & Zhang, L. A second-order fast compact scheme with unequal time-steps for subdiffusion problems. Numer Algor 86, 1011–1039 (2021). https://doi.org/10.1007/s11075-020-00920-x

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11075-020-00920-x