Abstract

The Floater–Hormann family of barycentric rational interpolants has recently gained popularity because of its excellent stability properties and high order of convergence. The purpose of this paper is to design highly accurate and stable schemes based on this family of interpolants for the numerical solution of stiff Volterra integral equations of the second kind.


1 Introduction

Volterra integral equations (VIEs) of the second kind are often used as mathematical models of physical or biological phenomena with history, arising in many practical applications in science and engineering (see [14, 16]), and have the form

\[y(t)=g(t)+\int_{t_{0}}^{t}K(t,s,y(s))\,ds,\qquad t\in I:=[t_{0},T], \qquad (1)\]

in which \(g:I\rightarrow \mathbb {R}^{D}\) and \(K:S\times \mathbb {R}^{D}\rightarrow \mathbb {R}^{D}\) are assumed to be given functions, D denotes the dimension of the system, and S := {(t, s) : t0 ≤ s ≤ t ≤ T}. In what follows, we assume that the given functions g and K are smooth enough for the system of equations (1) to have a unique solution [14, 16, 32].

Over the last few decades, numerous methods for the numerical solution of (1) have been introduced. They fall into different classes, such as global or spectral methods, multistage (and multivalue) methods, and direct quadrature methods, which rely on a considerable variety of foundations and methodologies. Although the convergence of spectral methods may be exponential for equations involving analytic functions g and K (see [33]), and their order adapts automatically to the degree of smoothness of these data, they are of limited use for Volterra-type equations. Indeed, they treat Volterra equations like Fredholm equations, which means that they require the value of T in advance, and this is not natural in time-dependent problems.

In contrast, multistage methods such as collocation methods [13], two-step almost collocation methods [18], and multistep collocation methods [19] are more popular and efficient for solving VIEs (1). Another class of multistage methods for VIEs is the class of general linear methods, introduced by Izzo et al. in [27] and investigated further in [1, 3]. Although multistage methods have desirable stability and accuracy properties, their computational cost is considerable, since they are usually fully implicit in the internal stage values (for instance, see [1, 3, 27]). In fact, they require the values of y, g, and K at the stage abscissae and the solution of a (nonlinear) system of dimension sD, in which s is the number of internal stages.

The direct quadrature methods [6, 16, 32] for solving (1) are the simplest to implement and the most familiar. Recently, two direct quadrature methods (a global and a composite version) based on linear barycentric rational interpolants (LBRI) have been introduced in [10]. The linear stability properties of the composite one have been investigated in [26]. It has been shown there that its stability regions, for various choices of (n, d), with respect to both the basic test equation of the form

\[y(t)=1+\lambda \int_{0}^{t}y(s)\,ds,\qquad t\geq 0, \qquad (2)\]

with \(\lambda \in \mathbb {C}\), and a more significant test equation (the convolution test equation) of the form

\[y(t)=1+\int_{0}^{t}\bigl(\lambda +\mu (t-s)\bigr)y(s)\,ds,\qquad t\geq 0, \qquad (3)\]

with \(\lambda ,\mu \in \mathbb {R}\), are bounded, and the construction of methods with unbounded stability regions in this class was posed as an open problem. In this paper, we introduce new methods in this class with unbounded stability regions with respect to both test equations (2) and (3).

The paper is organized as follows. In Section 2, we briefly review the LBRI and their applications in approximating the definite integral and the first derivative of a given function on an arbitrary set of nodes. Section 3 contains the main contribution of the paper: the design of LBRI-based methods for (1) with desirable stability properties, suited to the numerical solution of stiff VIEs. The convergence of the methods and their order of convergence are investigated in Section 4. Section 5 provides a discussion on the linear stability of the methods with respect to both the basic and convolution test equations. The robustness and efficiency of the methods and the theoretical results on their order of convergence are illustrated by some numerical experiments in Section 6. Finally, the paper is summarized in Section 7.

2 A review on the barycentric rational finite differences and quadrature

The barycentric form of Lagrange’s interpolation formula for the n + 1 data values fj := f(tj), j = 0,1,…, n, of a real function \(f:[a,b]\rightarrow \mathbb {R}\) at the n + 1 distinct nodes t0, t1, …, tn takes the form

\[p_{n}(t)=\frac{\displaystyle \sum_{j=0}^{n}\frac{\lambda_{j}}{t-t_{j}}f_{j}}{\displaystyle \sum_{j=0}^{n}\frac{\lambda_{j}}{t-t_{j}}}, \qquad (4)\]

with the barycentric weights

\[\lambda_{j}=\prod_{k=0,\,k\neq j}^{n}\frac{1}{t_{j}-t_{k}},\qquad j=0,1,\ldots ,n, \qquad (5)\]

which is eminently suitable for machine computation [11, 23]. Note that if the barycentric weights λj in (5) are replaced by arbitrary nonzero numbers βj, the interpolation property of pn in (4) is preserved, but the resulting function is usually no longer a polynomial but a rational function. In [9], it has been proved that the signs of the barycentric weights βj must alternate for the rational function to have no poles in [a, b].

Berrut [8] has introduced two LBRI schemes for ordered distinct nodes \(t_{0}<t_{1}<\cdots <t_{n}\) in which the barycentric weights are chosen as \(\beta _{j}=(-1)^{j}\) and \(\beta _{j}=(-1)^{j}\delta _{j}\) with δj equal to 1/2 for j = 0, n and 1 for j = 1,2,…, n − 1; the latter are equal to the weights of polynomial interpolation for Chebyshev points of the second kind. A major drawback of these choices of weights is their low rate of convergence. It was proved in [20] that the first one converges as \(\mathcal {O}(h)\) and the second one as \(\mathcal {O}(h^{2})\) for small values of the stepsize \(h := \max \{t_{j+1} - t_{j} \}\). These low rates of convergence make these schemes rather inadequate for the numerical solution of equations.

Floater and Hormann [20] have introduced a new family of LBRI, depending on a parameter d, as a rational blend of n − d + 1 polynomials, each interpolating at d + 1 consecutive nodes, which converges at the rate \(\mathcal {O}(h^{d+1})\). For uniformly spaced points tj, the barycentric weights of this LBRI take the form, up to a constant factor,

\[\beta_{j}=(-1)^{j-d}\sum_{i=\max (0,\,j-d)}^{\min (j,\,n-d)}\binom{d}{j-i},\qquad j=0,1,\ldots ,n,\]

which is used throughout the paper. This family of LBRI does not exhibit Runge’s phenomenon for d fixed [21]. The convergence rate together with the accuracy and desirable stability properties of this LBRI make it an interesting choice for solving smooth problems on the basis of equispaced samples.
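As an illustration of the weights just described, the following minimal sketch builds the Floater–Hormann weights for equispaced nodes and evaluates the resulting barycentric rational interpolant. The function names `fh_weights` and `bary_eval`, the test function, and the grid sizes are hypothetical choices made for this example, and the weight formula is the standard one from [20], taken here as an assumption.

```python
import numpy as np
from math import comb


def fh_weights(n, d):
    """Floater-Hormann weights for n+1 equispaced nodes, up to a constant factor."""
    beta = np.empty(n + 1)
    for j in range(n + 1):
        total = sum(comb(d, j - i) for i in range(max(0, j - d), min(j, n - d) + 1))
        beta[j] = total if (j - d) % 2 == 0 else -total   # sign (-1)^(j-d)
    return beta


def bary_eval(t, nodes, values, beta):
    """Evaluate the barycentric rational interpolant at the points t."""
    t = np.atleast_1d(np.asarray(t, dtype=float))
    diff = t[:, None] - nodes[None, :]
    hit = diff == 0.0                 # evaluation point coincides with a node
    diff[hit] = 1.0                   # placeholder, overwritten below
    terms = beta / diff
    r = (terms @ values) / terms.sum(axis=1)
    rows, cols = np.nonzero(hit)
    r[rows] = values[cols]            # interpolation property at the nodes
    return r


# Hypothetical example: Runge's function on 41 equispaced nodes with d = 3.
nodes = np.linspace(-5.0, 5.0, 41)
f = lambda x: 1.0 / (1.0 + x**2)
fine = np.linspace(-5.0, 5.0, 1001)
err = np.max(np.abs(bary_eval(fine, nodes, f(nodes), fh_weights(40, 3)) - f(fine)))
print(f"max error on the fine grid: {err:.2e}")
```

For d = 0 the sketch returns Berrut's alternating weights, and for d = n it returns the weights of polynomial interpolation on equispaced nodes, so the two extremes of the family can be compared by changing a single argument.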

A natural way to approximate the derivative and/or integral of a given function is to use the derivative and/or the integral of its interpolant. Because of the good accuracy and excellent stability properties of these LBRI [29], the methods proposed in Section 3 for the numerical solution of VIE (1) build on two applications of the LBRI

\[r_{n}(t)=\frac{\displaystyle \sum_{j=0}^{n}\frac{\beta_{j}}{t-t_{j}}f_{j}}{\displaystyle \sum_{j=0}^{n}\frac{\beta_{j}}{t-t_{j}}}, \qquad (6)\]

namely the approximation of the derivative and of the integral of a smooth real function f sampled at n + 1 equispaced nodes. Both are briefly reviewed here. Differentiating the LBRI (6) and evaluating it at the node ti, i = 0,1,…, n, leads to the barycentric rational finite differences (RFD) formula to approximate \(f^{\prime }(t_{i})\) [29, 31] as

\[f^{\prime }(t_{i})\approx \frac{1}{h}\sum_{j=0}^{n}d_{i,j}f_{j}, \qquad (7)\]

with

\[d_{i,j}=\frac{\beta_{j}}{\beta_{i}}\,\frac{1}{i-j},\quad j\neq i,\qquad d_{i,i}=-\sum_{k=0,\,k\neq i}^{n}d_{i,k}. \qquad (8)\]

The convergence rate of the RFD formula (7) is given by the following theorem.

Theorem 1

[29, 31] Suppose n and d, d ≤ n, are positive integers, t0, t1, …, tn are equispaced nodes in the interval [a, b], and \(f\in C^{d+2}[a,b]\). Then the absolute error of the approximation formula (7) with weights (8) goes to zero as \(\mathcal {O}(h^{d})\).
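The RFD weights can be sketched numerically as follows. This is a hedged illustration that assumes the standard differentiation-matrix form of [31] on equispaced nodes, namely off-diagonal entries (β_j/β_i)/(i − j) and diagonal entries chosen so that every row sums to zero, with the factor 1/h applied separately. The names `fh_weights` and `rfd_matrix` and the sample stepsizes are hypothetical.

```python
import numpy as np
from math import comb


def fh_weights(n, d):
    """Floater-Hormann weights for n+1 equispaced nodes, up to a constant factor."""
    beta = np.empty(n + 1)
    for j in range(n + 1):
        total = sum(comb(d, j - i) for i in range(max(0, j - d), min(j, n - d) + 1))
        beta[j] = total if (j - d) % 2 == 0 else -total
    return beta


def rfd_matrix(n, d):
    """Scaled RFD weights: f'(t_i) is approximated by (1/h) * sum_j D[i, j] * f(t_j)."""
    beta = fh_weights(n, d)
    idx = np.arange(n + 1)
    gap = (idx[:, None] - idx[None, :]).astype(float)   # i - j
    np.fill_diagonal(gap, 1.0)                           # avoid 0/0 on the diagonal
    dmat = (beta[None, :] / beta[:, None]) / gap
    np.fill_diagonal(dmat, 0.0)
    np.fill_diagonal(dmat, -dmat.sum(axis=1))            # each row sums to zero
    return dmat


# Hypothetical check: differentiate sin on n + 1 = 9 nodes for two stepsizes.
n, d = 8, 3
for h in (0.1, 0.05):
    t = h * np.arange(n + 1)
    approx = rfd_matrix(n, d) @ np.sin(t) / h
    print(f"h = {h}: max nodal error = {np.max(np.abs(approx - np.cos(t))):.2e}")
```

For d = n = 2 the last row of the matrix is (1/2, −2, 3/2), the familiar BDF2 coefficients, which is a convenient sanity check on the construction.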

Another application of the LBRI is to approximate the definite integral

for a real integrable function f in the interval \([t_{0},t_{n}]\) and sampled at the distinct ordered nodes t0, t1, …, tn, through a quadrature rule. Integrating the LBRI (6) leads to the direct linear rational quadrature (DRQ) [29, 30] as

with

The weights wj can be evaluated to machine precision by means of an accurate quadrature rule such as Gauss or Clenshaw–Curtis quadrature, both of which are provided in the Chebfun system [7, 34, 35]. The convergence rate of the DRQ rule (9), for d ≤ n/2 − 1 and \(f\in C^{d+3}[t_{0},t_{n}]\), is \(\mathcal {O}(h^{d+2})\) [30].
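A minimal sketch of how such quadrature weights might be computed is given below. It swaps Chebfun for NumPy's Gauss–Legendre rule applied on each subinterval, and it defines each weight as the unscaled integral of the corresponding rational basis function over [t0, tn], which may differ from the normalization used in (9) by a factor of h. The names `fh_weights` and `drq_weights` and the number of Gauss points are assumptions of this example, and the nodes are assumed equispaced.

```python
import numpy as np
from math import comb


def fh_weights(n, d):
    """Floater-Hormann weights for n+1 equispaced nodes, up to a constant factor."""
    beta = np.empty(n + 1)
    for j in range(n + 1):
        total = sum(comb(d, j - i) for i in range(max(0, j - d), min(j, n - d) + 1))
        beta[j] = total if (j - d) % 2 == 0 else -total
    return beta


def drq_weights(nodes, d, gauss_pts=16):
    """Integrate each rational basis function over [nodes[0], nodes[-1]]."""
    n = len(nodes) - 1
    beta = fh_weights(n, d)                       # valid for equispaced nodes only
    x, w = np.polynomial.legendre.leggauss(gauss_pts)
    q = np.zeros(n + 1)
    for a, b in zip(nodes[:-1], nodes[1:]):
        t = 0.5 * (b - a) * x + 0.5 * (a + b)     # Gauss points inside (a, b)
        terms = beta / (t[:, None] - nodes[None, :])
        basis = terms / terms.sum(axis=1, keepdims=True)
        q += 0.5 * (b - a) * (w @ basis)
    return q


# Hypothetical check: integrate sin over [0, 1] with n = 10 and d = 3.
nodes = np.linspace(0.0, 1.0, 11)
q = drq_weights(nodes, 3)
print(f"quadrature error: {abs(q @ np.sin(nodes) - (1.0 - np.cos(1.0))):.2e}")
```

Because the Gauss points lie strictly inside each subinterval, the basis functions are never evaluated at a node, so no special handling of the removable singularities is needed.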

3 Description of the LBRI-based methods

In this section, considering a uniform grid

for the given interval [t0, T] with the constant stepsize \(h=t_{j+1}-t_{j}\), j = 0,1,…, N − 1, we are interested in developing methods based on the LBRI (6) for the numerical solution of (1). In each step of the proposed methods, say the step number m ≤ N, we require a quadrature rule to approximate a definite integral on the interval \([t_{0},t_{m}]\). In order to avoid computing new quadrature weights for every step, a composite version of (9), introduced in [10], should be considered in which the quadrature weights are scale and translation invariant. To define the composite barycentric rational quadrature (CBRQ) rule, let d and n be positive integers with d ≤ n ≤ m/2 and set p := ⌊m/n⌋− 1. Then, the CBRQ rule can be written as

where

Notice that in the case of n ≤ m < 2n, we just have the last term of (10) which is the global quadrature rule, i.e., DRQ rule (9) with m + 1 points.
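The way the composite rule splits the interval can be sketched as an index computation. The sketch assumes that the first p panels each cover n subintervals and that the last panel takes the remaining m − pn subintervals, treated by the global rule; this reading follows the description above, and the helper name `cbrq_blocks` is hypothetical.

```python
def cbrq_blocks(m, n):
    """Return (start, end) node indices of the panels covering t_0, ..., t_m."""
    if m < n:
        raise ValueError("the composite rule needs m >= n")
    p = m // n - 1                      # p = floor(m / n) - 1 panels of n subintervals
    blocks = [(j * n, (j + 1) * n) for j in range(p)]
    blocks.append((p * n, m))           # last panel: between n and 2n - 1 subintervals
    return blocks


print(cbrq_blocks(10, 3))
print(cbrq_blocks(5, 3))
```

Since only panels with n subintervals and a last panel with between n and 2n − 1 subintervals occur, a fixed and finite set of weight vectors suffices for all steps.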

The convergence order of the rule (10) is given in the following theorem.

Theorem 2

[10] Suppose n, d and m, d ≤ n ≤ m/2, are positive integers, \(f\in C^{d+2}[t_{0},T]\). Then the absolute error in the approximation of the integral of f with (10) goes to zero as \(\mathcal {O}(h^{d+1})\).

Remark 1

According to Thm. 2 in [20], in the case of odd n − d, the bound on the interpolation error involves an additional factor nh, so that the orders of the related RFD formula and quadrature rule are one unit larger than stated in Theorems 1 and 2.

In [26], it has been shown that the stability regions of the direct quadrature method based on the CBRQ rule (10), called CBRQM, are bounded. Here, we introduce new methods in this class with unbounded stability regions for various choices of (n, d). Differentiating (1), cf. [36], yields Volterra integro-differential equations of the second kind

\[y^{\prime }(t)=g^{\prime }(t)+K(t,t,y(t))+\int_{t_{0}}^{t}\frac{\partial K}{\partial t}(t,s,y(s))\,ds,\qquad t\in I, \qquad (12)\]

with initial condition \(y(t_{0})=g(t_{0})\). We start by approximating the left-hand side of (12) at tm by an application of the RFD formula. At time tm, only the values of y at tk for k ≤ m are known, so left one-sided differences should be used, as follows

where ym denotes an approximation for the exact solution y of (1) at tm and the function Fm(t) is given by

In order to avoid calculating the derivative of the functions in (1), we approximate \(F^{\prime }_{m}(t_{m})\), in (13), numerically. There are two options: One is to use the left one-sided RFD formula which leads to

and the other one is to use the right one-sided RFD formula, in which the weights are the same as those of the left one-sided RFD formula, though taken from right to left and with reversed sign, i.e.,

Now, to have fully discretized methods, it is required to use a quadrature rule finding an approximation to Fm(t), denoted by Qm(t;h), in equations (14) and (15). We use the CBRQ (10) with the weights given by (11) as

which gives the methods fully based on the LBRI with Floater–Hormann weights inheriting all the advantages of this family of interpolation. Therefore, two versions of the method take the form

which uses the left one-sided RFD formulae to approximate both of \(y^{\prime }(t_{m})\) and \(F^{\prime }_{m}(t_{m})\) and will be referred to as LBRILLM, and

which uses the left one-sided RFD formula for \(y^{\prime }(t_{m})\) and right one-sided RFD formula for \(F^{\prime }_{m}(t_{m})\) and will be referred to as LBRILRM.

The nature of the LBRILLM (17) and LBRILRM (18) is multistep, hence they require a starting procedure of adequate accuracy. Indeed, the values y1, y2, …, yn− 1 should be known to compute yn, yn+ 1, …, yN by the LBRILLM and LBRILRM. In what follows, we design a starting procedure which is again based on the LBRI (6). Evaluating (12) at the mesh point tm, m = 1,2,…, n − 1, and using the RFD formula (7) to approximate the values \(y^{\prime }(t_{m})\) and \(F^{\prime }_{m}(t_{m})\), leads to

wherein \({Q_{m}^{S}}(t_{j};h)\) is an approximation to \(F_{m}(t_{j})\) given by

with

Notice that, in addition to the unknown values y1, y2, …, yn− 1, another unknown value yn is included in the system of equations (19), while there are only n − 1 equations in this system. So, the upper bound for m in equations (19), (20), and (21) must be increased to n. In this way, the value yn is computed by the starting procedure, and so the LBRILLM (17) and LBRILRM (18) should start from m = n + 1 instead of m = n.

4 Convergence analysis

In this section, we analyze the convergence of the LBRILLM (17) and LBRILRM (18) and obtain their order of convergence in terms of the parameters of the method. Since the convergence rate of the starting procedure affects directly that of the main method, we shall first investigate the order of convergence of the starting procedure. In the following theorem, we obtain the convergence rate of the starting procedure (19) in terms of the parameter d.

Theorem 3

Assume that \(g\in C^{d+2}[t_{0},T]\) and \(K\in C^{d+2}(S\times \mathbb {R}^{D})\), and let \(y_{1},y_{2},\ldots ,y_{n}\) be the approximate values of the exact solution of (1) at the points \(t_{1},t_{2},\ldots ,t_{n}\), obtained by the starting procedure (19) with d ≤ n. Then the norm of the starting error vector \(\mathbf {e}_{n}=(e_{1},e_{2},\ldots ,e_{n})^{T}\) with ei = y(ti) − yi, i = 1,2,…, n, tends to zero as \(\mathcal {O}(h^{d+\delta })\) with δ = 1 for even n − d and δ = 2 for odd n − d.

Proof

Substituting the exact values for y in the starting procedure (19), and including their consistency error Rm(h), we get

where

Subtracting (12), in which t is substituted by tm, from (22), for m = 1,2,…, n, and adding the terms \(\pm \frac {1}{h}{\sum }_{j=0}^{n} d_{m,j}F_{m}(t_{j})\) gives

Now, by using Theorems 1 and 2 together with Remark 1, we find that \(R_{m}(h)=O(h^{d+\delta })\), for m = 1,2,…, n, with δ = 1 for even n − d and δ = 2 for odd n − d.

Subtracting (19) from (22) and using the mean-value theorem, we find that, for m = 1,2,…, n,

where the hat indicates that each row of ∂K/∂y is evaluated at a different mean value ξm, each lying in the internal part of the line segment joining y(tm) and ym, and the same case for ζk. Equation (23) can be written in the matrix form

in which \(\mathbf {R}_{n}(h):=[R_{1}(h),R_{2}(h),\ldots ,R_{n}(h)]^{T}\), the matrix \(\mathcal {W}_{n}\) is a diagonal matrix with \(\widehat {\frac {\partial K}{\partial y}}(t_{m},t_{m},\xi _{m})\), m = 1,2,…, n, on the diagonal, and the matrices \(\mathcal {D}_{n}\) and \(\mathcal {\overline {W}}_{n}\) have \(d_{m,k}\) and \({\sum }_{j=0}^{n}d_{m,j}w_{k}^{(m)}\widehat {\frac {\partial K}{\partial y}}(t_{j},t_{k},\zeta _{k})\) as their (m, k) elements, respectively. Because of the differentiability hypothesis on the function K, \(\widehat {\frac {\partial K}{\partial y}}\) is bounded in absolute value, and so are the weights \(d_{m,j}\) and \(w_{k}^{(m)}\), since in the starting procedure n is fixed and only the stepsize h varies. This means that the norms of the matrices \(\mathcal {W}_{n}\) and \(\mathcal {\overline {W}}_{n}\) are bounded, so that \(h\|\mathcal {W}_{n}+\mathcal {\overline {W}}_{n}\|_{\infty }\) can be made as small as necessary by decreasing h. Therefore, for a small enough stepsize h, and by the nonsingularity of the matrix \(\mathcal {D}_{n}\) [30], there exists a positive constant \(\mathcal {C}\) such that

which implies \(\|\mathbf {e}_{n}\|_{\infty }=\mathcal {O}(h^{d+\delta })\). □

In a similar way to the convergence theory of Volterra linear multistep (VLM) methods for the numerical solution of VIEs (1), we first introduce the polynomial ρ defined by

\[\rho (w):=\sum_{j=0}^{n}d_{n,j}w^{j},\]

and note that for every \(z\in \mathbb {C}\), the coefficients dn, j, j = 0,1,…, n, satisfy

which gives ρ(1) = 0 and \(\rho ^{\prime }(1)=1\).

Zero-stability is essential for multistep methods, such as the LBRILLM (17) and LBRILRM (18), to be convergent, and it requires that the polynomial ρ satisfies the root condition, i.e., all its zeros lie in the closed unit disk |w| ≤ 1, with only simple zeros on the boundary |w| = 1. The simple and elegant Bistritz stability criterion [12] can be used to determine how many zeros of \(\rho (w)/(1-w)\) lie strictly inside the unit disk. This is discussed in [2] for various pairs (n, d), where it was found that the polynomial ρ satisfies the root condition for every choice of n ≥ d with n ≤ 20, d ≤ 6, except for (n, d) = (7,6), (8,6), (11,6), and (12,6).
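The root condition can also be explored numerically. The sketch below is a rough floating-point check based on `numpy.roots`, not the Bistritz criterion cited above; it assumes that ρ(w) is the polynomial with coefficients d_{n,j} (consistent with ρ(1) = Σ d_{n,j}) and that these coefficients are the last row of the standard RFD matrix of [31]. The names `fh_weights`, `rho_coeffs`, `satisfies_root_condition`, and the tolerance are hypothetical.

```python
import numpy as np
from math import comb


def fh_weights(n, d):
    """Floater-Hormann weights for n+1 equispaced nodes, up to a constant factor."""
    beta = np.empty(n + 1)
    for j in range(n + 1):
        total = sum(comb(d, j - i) for i in range(max(0, j - d), min(j, n - d) + 1))
        beta[j] = total if (j - d) % 2 == 0 else -total
    return beta


def rho_coeffs(n, d):
    """Coefficients d_{n,0}, ..., d_{n,n} of rho(w) = sum_j d_{n,j} w^j."""
    beta = fh_weights(n, d)
    j = np.arange(n)
    c = np.empty(n + 1)
    c[:n] = (beta[:n] / beta[n]) / (n - j)   # assumed form (beta_j / beta_n) / (n - j)
    c[n] = -c[:n].sum()                      # row sum zero, so rho(1) = 0
    return c


def satisfies_root_condition(n, d, tol=1e-8):
    """Heuristic check: zeros in the closed unit disk, simple on the boundary."""
    roots = np.roots(rho_coeffs(n, d)[::-1])   # np.roots expects highest degree first
    mod = np.abs(roots)
    if np.any(mod > 1.0 + tol):
        return False
    on_circle = roots[np.abs(mod - 1.0) <= tol]
    for a in range(len(on_circle)):
        for b in range(a + 1, len(on_circle)):
            if abs(on_circle[a] - on_circle[b]) <= np.sqrt(tol):
                return False                   # (nearly) repeated boundary zero
    return True


for n, d in [(3, 2), (4, 3), (5, 3)]:
    print((n, d), satisfies_root_condition(n, d))
```

A tolerance-based test like this cannot replace an exact criterion when zeros lie very close to the unit circle, so borderline cases should be confirmed by other means.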

We are now in a position to state the convergence theorem of the LBRILLM (17) and LBRILRM (18) which can be deduced by using Theorem 3.2.6(b) in [16] as a general convergence theorem for VLM methods. This theorem establishes an upper bound for the global error em, m = n + 1, n + 2,…, N, defined by

Theorem 4

Assume that g and K are continuous on [t0, T] and \(S\times \mathbb {R}^{D}\), respectively, and let the functions K and ∂K/∂t satisfy a Lipschitz condition with respect to their third argument. Assume also that n and d are such that the LBRILLM (17) (or the LBRILRM (18)) is zero-stable. Then there exists a constant C independent of the stepsize h such that, for all sufficiently small h,

wherein

in the case of the LBRILLM and

in the case of the LBRILRM, with

It should be noted that \({\mathscr{L}}[y(t_{m});h]\), introduced in Theorem 4, may be considered as the local truncation error of the LBRILLM (17) or LBRILRM (18). For \(g\in C^{d+2}[t_{0},T]\) and \(K\in C^{d+2}(S\times \mathbb {R}^{D})\), we have

\[{\mathscr{L}}[y(t_{m});h]=\mathcal {O}(h^{d+\delta }), \qquad (24)\]

with δ introduced in Theorem 3, which can be proved by adapting the analysis employed for the consistency error of the starting procedure Rm(h), m = 1,2,…, n, in the proof of Theorem 3.

On the basis of Theorem 4, we have the following corollary regarding the order of convergence of the methods (17) and (18) which can be deduced from Corollary 3.2.2 stated in [16].

Corollary 1

Let the conditions of Theorem 4 together with additional conditions \(g\in C^{d+2}[t_{0},T]\) and \(K\in C^{d+2}(S\times \mathbb {R}^{D})\) be satisfied. Then the order of convergence of the methods (17) and (18) is \(\min \limits \{d_{1},d_{2}\}\) where d1 and d2 are the largest positive integers such that \(R_{m}(h)=O(h^{d_{1}})\), m = 1,2,…, n, and \({\mathscr{L}}[y(t_{m});h]=O(h^{d_{2}+1})\).

By Theorem 3 and (24), we find that the integers d1 and d2 appearing in Corollary 1 are respectively d + δ and d + δ − 1. Therefore, the order of convergence of the methods (17) and (18) is \(\min \limits \{d_{1},d_{2}\}=d+\delta -1\) (see Table 1).
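The resulting order is simple enough to encode directly. The helper name `convergence_order` is hypothetical; the rule itself, order d + δ − 1 with δ = 2 for odd n − d and δ = 1 for even n − d, is the one derived above.

```python
def convergence_order(n, d):
    """Order d + delta - 1, with delta = 2 for odd n - d and delta = 1 for even n - d."""
    delta = 2 if (n - d) % 2 == 1 else 1
    return d + delta - 1


for n, d in [(6, 3), (5, 4), (13, 6), (4, 2)]:
    print((n, d), convergence_order(n, d))
```

In other words, the order is d + 1 when n − d is odd and d when n − d is even, which favors pairs (n, d) of opposite parity.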

5 Stability analysis

As is known, the convergence property for a numerical method tells us only what happens in the limit as \(h\rightarrow 0\) and does not give us any guarantee that the numerical solution will be acceptable for a sufficiently small fixed non-zero stepsize h. Actually, the numerical stability analysis is absolutely necessary in assessing the fitness of the used method in the numerical solution. Here, we study the linear stability of the methods (17) and (18) with respect to both the basic and convolution test equations (2) and (3). These test equations have an exponentially decaying solution for Re(λ) < 0 and for \(\lambda ,\mu \in \mathbb {R}^{-}\), respectively [6, 15, 32]. The study of linear stability of the numerical methods provides a general framework for understanding the condition under which the numerical solution exhibits the same behavior.
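The decay of the exact solutions can be verified by a short calculation, sketched here under the assumption that the test equations are taken in their standard forms \(y(t)=1+\lambda \int_{0}^{t}y(s)\,ds\) and \(y(t)=1+\int_{0}^{t}(\lambda +\mu (t-s))y(s)\,ds\). Differentiating the basic test equation gives

\[y^{\prime }(t)=\lambda y(t),\quad y(0)=1\quad \Longrightarrow \quad y(t)=e^{\lambda t},\]

which decays for Re(λ) < 0. Differentiating the convolution test equation twice gives

\[y^{\prime }(t)=\lambda y(t)+\mu \int_{0}^{t}y(s)\,ds,\qquad y^{\prime \prime }(t)=\lambda y^{\prime }(t)+\mu y(t),\qquad y(0)=1,\quad y^{\prime }(0)=\lambda ,\]

so that y is a combination of exponentials whose rates are the roots of the characteristic equation

\[r^{2}-\lambda r-\mu =0,\qquad r_{+}+r_{-}=\lambda ,\qquad r_{+}r_{-}=-\mu .\]

For λ < 0 and μ < 0, the sum of the roots is negative and their product is positive, so both roots have negative real part and the solution decays.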

Applying the LBRILLM (17) and LBRILRM (18) to the basic test equation (2), we get

and

with z := hλ, respectively, which by considering \({\sum }_{j=0}^{n} d_{n,j}=\rho (1)=0\), both of them are reduced to

So, the linear stability properties of both methods (17) and (18) with respect to the basic test equation (2) are determined by the “stability polynomial” Φ(w, z) defined by

which is the same as that of the LBRI-based method introduced in [5] for the numerical solution of ordinary differential equations. In [5], it has been shown that the method is A(α)-stable with wider angles α than BDFs of the same order. Thus, the LBRILLM (17) and LBRILRM (18) resolve the open problem posed in [26]. In Table 1, the values of α for A(α)-stability for various pairs (n, d) are presented and compared with those for BDFs, which shows that the stability properties of the methods (17) and (18) are better than those of the BDFs for VIEs introduced in [36].
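A pointwise stability test can be sketched as follows. It rests on an assumption that is inferred from the discussion rather than quoted: that the reduced recurrence has the BDF-like form Σ_j d_{n,j} y_{m−n+j} = z y_m, so that the stability polynomial is ρ(w) − z wⁿ. The names `fh_weights`, `rho_coeffs`, and `is_linearly_stable` are hypothetical, and the coefficients d_{n,j} are taken from the standard RFD form of [31].

```python
import numpy as np
from math import comb


def fh_weights(n, d):
    """Floater-Hormann weights for n+1 equispaced nodes, up to a constant factor."""
    beta = np.empty(n + 1)
    for j in range(n + 1):
        total = sum(comb(d, j - i) for i in range(max(0, j - d), min(j, n - d) + 1))
        beta[j] = total if (j - d) % 2 == 0 else -total
    return beta


def rho_coeffs(n, d):
    """Coefficients d_{n,0}, ..., d_{n,n} (assumed last row of the RFD matrix)."""
    beta = fh_weights(n, d)
    c = np.empty(n + 1)
    c[:n] = (beta[:n] / beta[n]) / (n - np.arange(n))
    c[n] = -c[:n].sum()
    return c


def is_linearly_stable(z, n, d):
    """True if all roots of the assumed polynomial rho(w) - z * w**n satisfy |w| < 1."""
    c = rho_coeffs(n, d).astype(complex)
    c[n] -= z                               # lowest degree first
    roots = np.roots(c[::-1])               # np.roots expects highest degree first
    return bool(np.all(np.abs(roots) < 1.0))  # boundary points count as unstable here


for z in (-1.0, -100.0, -1.0e6):
    print(z, is_linearly_stable(z, 5, 3))
```

For n = d = 1 the recurrence is the backward Euler method, whose single root is w = 1/(1 − z), which gives a quick way to validate the helper before scanning a grid of z values.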

Now, we investigate the stability properties of the methods (17) and (18) with respect to the convolution test (3). To this end, in a way similar to what is done for investigating the stability properties of the proposed methods in [26] and [4], let us introduce y[m− 1], y[m] and Y[m− 1], for any positive integer m, as

where δjk denotes the Kronecker delta and also introduce the n × n matrices W0, W1, V, and \(\overline {V}\) with

where the n dimensional vectors e and e1 are the all-ones vector and the first standard unit vector, respectively. Now, by using the relations \({\sum }_{j=0}^{n} d_{n,j}=\rho (1)=0\) and \({\sum }_{j=0}^{n} jd_{n,j}=\rho ^{\prime }(1)=1\), it is easy to show that both methods (17) and (18) applied to the test (3) lead to

where ξ := λh, η := μh2, \(\overline {w}\) is the first row of the matrix W1 and In stands for the identity matrix of order n. Equation (25) can be written more compactly as

with the “stability matrix” M(ξ, η) given by

which is exactly the same as that of the method based on the LBRI introduced in [4] for the numerical solution of Volterra integro-differential equations

when its stability properties are analyzed with respect to the test equation

The stability properties of both the LBRILLM (17) and LBRILRM (18) with respect to the convolution test (3) are governed by the “stability polynomial” Φ(w, ξ, η) defined by

So, the set of values of \((\xi ,\eta )\in \mathbb {R}^{-}\times \mathbb {R}^{-}\) for which every root w of the equation Φ(w, ξ, η) = 0 lies in the closed unit disk, with only simple roots on the boundary, is known as the stability region. By (28), we find that the roots of Φ for w are rational functions of ξ and η in which the degrees of ξ in the numerator and denominator are n − 1 and n, respectively. So, for an arbitrary fixed η < 0, all the roots w of Φ tend to zero as \(\xi \rightarrow -\infty \). This means that the stability regions of the methods are unbounded. Although we did not find V0-stable methods within this class, the proposed methods (17) and (18) are V0(α)-stable with a large value of α. We recall that a method is said to be V0-stable if its stability region with respect to (3) contains all pairs (ξ, η) with ξ ≤ 0 and η ≤ 0, and V0(α)-stable, for α ∈ [0, π/2), if it contains the set \(\{(\xi ,\eta ): 0\leq \arctan (\eta /\xi )< \alpha , \xi <0\}\) [3, 27]. In Table 2, the values of α for V0(α)-stability for various pairs (n, d) are presented. The boundary locus technique, described in [28], has been used to obtain the values of α. Moreover, the stability regions of the methods are plotted in the (ξ, η)-plane over the domain [− 50,0] × [− 50,0], for various zero-stable choices of n and d, in Fig. 1.

Although the stability analysis shows that both methods (17) and (18) have the same stability properties, with almost identical accuracy (see Tables 3, 4, and 5), the LBRILLM (17) has the advantage that it only needs the kernel function K on [t0, T] in its first variable, whereas the LBRILRM (18) requires K to be defined on the larger interval [t0, T + nh].

6 Numerical experiments

In this section we present the results of numerical experiments with various values of n and d for which our methods are zero-stable, to validate the stability results of both methods (17) and (18) and show their capability and efficiency in solving stiff VIEs. For comparison, we also add the results from the CBRQM introduced in [10].

Efficiency and accuracy of the proposed methods are shown by measuring

and

yielding the number of correct decimal digits at the starting values and at the endpoint tN, respectively. To verify the theoretical results on the order of accuracy, we compute an experimental estimate of the order of accuracy of the starting procedure by the formula \(O_{S}:=\log _{2}(10)\cdot \left (\kappa _{S}(h/2)-\kappa _{S}(h)\right )\), and the analogous estimate ON for the methods (17) and (18). In our numerical experiments, we use the superscripts LL and LR on κN and ON to refer to the results of the LBRILLM and LBRILRM, respectively.
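The order estimate can be sketched in a few lines. The sketch assumes that the number of correct decimal digits is defined as the negative base-10 logarithm of the error norm, which is the usual convention and is taken here as an assumption; the function names and the sample errors are hypothetical.

```python
import math


def correct_digits(err):
    """Number of correct decimal digits, assumed to be -log10 of the error norm."""
    return -math.log10(err)


def estimated_order(err_h, err_half_h):
    """log2(10) * (kappa(h/2) - kappa(h)), i.e. log2(err_h / err_half_h)."""
    return math.log2(10.0) * (correct_digits(err_half_h) - correct_digits(err_h))


# Hypothetical errors behaving like C * h**4 when the stepsize is halved.
print(estimated_order(1.0e-4, 1.0e-4 / 16.0))
```

The factor log2(10) only converts the gain in decimal digits into a power of two, so the estimate equals the base-2 logarithm of the error ratio between stepsizes h and h/2.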

As a first example, we consider the VIE

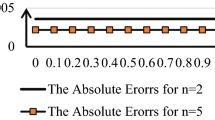

to illustrate the stability regions of the methods proposed in Section 3. Here, the function g is chosen such that the exact solution is \(y(t)=2-\exp (-t)\). For this example, the parameters λ and μ appearing in (3) can be approximated by λ ≈− 300 and μ ≈− 4500. We solve this example with (n, d) = (5,3) and (4,3), and two different fixed stepsizes h1 = 0.1 and h2 = 0.05. Considering the stability regions for the methods with (n, d) = (5,3) and (4,3), plotted in Fig. 1, \((\xi _{1},\eta _{1})=(\lambda h_{1},\mu {h_{1}^{2}})\) lies outside the stability regions while \((\xi _{2},\eta _{2})=(\lambda h_{2},\mu {h_{2}^{2}})\) lies inside them. Also, both of these points lie outside the stability regions of the CBRQM, which are plotted in [26]. Figure 2 shows the stable and unstable behavior of the LBRILLM and CBRQM through the error over the whole interval, which agrees with the theoretical results and demonstrates the superiority of the LBRILLM over the CBRQM.
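The two points used in this example follow from the scaling ξ = λh and η = μh². The short sketch below evaluates them together with the angle arctan(η/ξ) that appears in the definition of V0(α)-stability; the helper names are hypothetical, and no claim is made here about where the region boundaries lie.

```python
import math


def scaled_parameters(lam, mu, h):
    """Return (xi, eta) = (lambda * h, mu * h**2)."""
    return lam * h, mu * h * h


def sector_angle_deg(xi, eta):
    """Angle arctan(eta / xi) in degrees, for xi < 0."""
    return math.degrees(math.atan(eta / xi))


for h in (0.1, 0.05):
    xi, eta = scaled_parameters(-300.0, -4500.0, h)
    print(f"h = {h}: xi = {xi:.2f}, eta = {eta:.2f}, angle = {sector_angle_deg(xi, eta):.2f} deg")
```

Since η/ξ = μh/λ, halving the stepsize halves this ratio, which moves the point toward the ξ-axis and hence into a narrower sector.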

Fig. 2 Errors versus t for example (29) solved by the LBRILLM and CBRQM with the parameters (n, d) = (5,3) (left) and (4,3) (right) and the stepsizes h = 0.1 and h = 0.05

Next we study the nonlinear stiff VIE [36]

with the exact solution y(t) = t. The stiffness parameters \(\partial K/\partial y\) and \(\partial ^{2}K/\partial t\,\partial y\) for this example increase with t. The numerical results are reported in Table 3 and Fig. 3. Table 3 illustrates the efficiency and capability of the methods in solving stiff VIEs and confirms their theoretical orders. The CBRQM fails to solve this example for all choices of (n, d) and the corresponding stepsizes h reported in Table 3. Indeed, considering the approximate values of λ and μ at T = 10, which are − 22026 and − 352423, and the stability regions of the CBRQM, for instance for (n, d) = (3,2), the stepsize h must be less than \(1.01\times 10^{-4}\). Figure 3 shows that the CBRQM becomes unstable at t ≈ 5, whereas the LBRILLM and LBRILRM are stable over the whole interval. This discussion makes clear that the proposed methods outperform the CBRQM in terms of stability when solving stiff VIEs.

Fig. 3 Errors versus t for example (30) solved by the LBRILRM, LBRILLM, and CBRQM with (n, d) = (3,2) and the stepsize \(h=2^{-8}\)

We also study the system of VIEs

with t ∈ [0,50], \(a=\exp ((s-t)/20)\), and \(b=\exp (-t/20)\), which models an endemic infectious disease [24, 25]. A reference solution at the endpoint of integration T = 50 is given by [25]

The numerical results of the LBRILLM and LBRILRM for various choices of n and d are reported in Table 4. This table shows that, as expected, the order of convergence appears to be d + 1 for odd n − d and d for even n − d.

Finally, we study the nonlinear VIE

arising in the analysis of neural networks with post inhibitory rebound [17, 22]. A reference solution at the endpoint of integration T = 10 is given by y(10) = 1.25995582337233 [17]. The numerical results of the LBRILLM and LBRILRM with (n, d) equal to (6,3), (5,4), and (13,6) are reported in Table 5. As expected, the observed orders are four, five, and seven, respectively.

7 Conclusion

The stability region of the LBRI-based method introduced in [10] for solving VIEs of the second kind is bounded, which makes it unsuitable for solving stiff problems. This motivated the present work, which derives methods in this class with unbounded stability regions. We proved that the stability regions of the proposed methods are unbounded and obtained their order of convergence in terms of the parameters of the methods. The effectiveness of our methods was confirmed by some numerical experiments.

References

Abdi, A.: General linear methods with large stability regions for Volterra integral equations. Comp. Appl. Math. 38(52), 1–16 (2019)

Abdi, A., Berrut, J.-P., Hosseini, S. A.: The linear barycentric rational method for a class of delay Volterra integro-differential equations. J. Sci. Comput. 75, 1757–1775 (2018)

Abdi, A., Fazeli, S., Hojjati, G.: Construction of efficient general linear methods for stiff Volterra integral equations. J. Comput. Appl. Math. 292, 417–429 (2016)

Abdi, A., Hosseini, S. A.: The barycentric rational difference-quadrature scheme for systems of Volterra integro-differential equations. SIAM J. Sci. Comput. 40, A1936–A1960 (2018)

Abdi, A., Hosseini, S.A., Podhaisky, H.: Adaptive linear barycentric rational finite differences method for stiff ODEs. J. Comput. Appl. Math. 357, 204–214 (2019)

Baker, C. T. H., Keech, M. S.: Stability regions in the numerical treatment of Volterra integral equations. SIAM J. Numer. Anal. 15, 394–417 (1978)

Battles, Z., Trefethen, L. N.: An extension of Matlab to continuous functions and operators. SIAM J. Sci. Comput. 25, 1743–1770 (2004)

Berrut, J.-P.: Rational functions for guaranteed and experimentally well-conditioned global interpolation. Comput. Math. Appl. 15, 1–16 (1988)

Berrut, J.-P.: Linear barycentric rational interpolation with guaranteed degree of exactness. In: Fasshauer, G.E., Schumaker, L.L. (eds.) Approximation Theory XV: San Antonio 2016, Springer Proceedings in Mathematics & Statistics, 1–20 (2017)

Berrut, J.-P., Hosseini, S. A., Klein, G.: The linear barycentric rational quadrature method for Volterra integral equations. SIAM J. Sci. Comput. 36, A105–A123 (2014)

Berrut, J.-P., Trefethen, L. N.: Barycentric Lagrange interpolation. SIAM Rev. 46, 501–517 (2004)

Bistritz, Y.: A circular stability test for general polynomials. Syst. Control Lett. 7, 89–97 (1986)

Blom, J. G., Brunner, H.: The numerical solution of nonlinear Volterra integral equations of the second kind by collocation and iterated collocation methods. SIAM J. Sci. Stat. Comput. 8, 806–830 (1987)

Brunner, H.: Collocation methods for Volterra integral and related functional equations. Cambridge University Press, Cambridge (2004)

Brunner, H., Nørsett, S.P., Wolkenfelt, P.H.M.: On V0-stability of numerical methods for Volterra integral equations of the second kind. Report NW84/80. Mathematisch Centrum, Amsterdam (1980)

Brunner, H., van der Houwen, P. J.: The numerical solution of Volterra equations. CWI Monographs, North-Holland (1986)

Capobianco, G., Conte, D., Del Prete, I., Russo, E.: Fast Runge–Kutta methods for nonlinear convolution systems of Volterra integral equations. BIT 47, 259–275 (2007)

Conte, D., Jackiewicz, Z., Paternoster, B.: Two-step almost collocation methods for Volterra integral equations. Appl. Math. Comput. 204, 839–853 (2008)

Conte, D., Paternoster, B.: Multistep collocation methods for Volterra integral equations. Appl. Numer. Math. 59, 1721–1736 (2009)

Floater, M. S., Hormann, K.: Barycentric rational interpolation with no poles and high rates of approximation. Numer. Math. 107, 315–331 (2007)

Güttel, S., Klein, G.: Convergence of linear barycentric rational interpolation for analytic functions. SIAM J. Numer. Anal. 50, 2560–2580 (2012)

Hairer, E., Lubich, C., Schlichte, M.: Fast numerical solution of nonlinear Volterra convolution equations. SIAM J. Sci. Stat. Comput. 6, 532–541 (1985)

Henrici, P.: Essentials of Numerical Analysis. John Wiley, New York (1982)

Hethcote, H. W., Tudor, D. W.: Integral equation models for endemic infectious diseases. J. Math. Biol. 9, 37–47 (1980)

Hoppensteadt, F. C., Jackiewicz, Z., Zubik-Kowal, B.: Numerical solution of Volterra integral and integro-differential equations with rapidly vanishing convolution kernels. BIT 47, 325–350 (2007)

Hosseini, S. A., Abdi, A.: On the numerical stability of the linear barycentric rational quadrature method for Volterra integral equations. Appl. Numer. Math. 100, 1–13 (2016)

Izzo, G., Jackiewicz, Z., Messina, E., Vecchio, A.: General linear methods for Volterra integral equations. J. Comput. Appl. Math. 234, 2768–2782 (2010)

Izzo, G., Russo, E., Chiapparelli, C.: Highly stable Runge–Kutta methods for Volterra integral equations. Appl. Numer. Math. 62, 1002–1013 (2012)

Klein, G.: Applications of Linear Barycentric Rational Interpolation. University of Fribourg, PhD thesis (2012)

Klein, G., Berrut, J.-P.: Linear barycentric rational quadrature. BIT 52, 407–424 (2012)

Klein, G., Berrut, J.-P.: Linear rational finite differences from derivatives of barycentric rational interpolants. SIAM J. Numer. Anal. 50, 643–656 (2012)

Linz, P.: Analytical and Numerical Methods for Volterra Equations. SIAM, Philadelphia (1985)

Tang, T., Xu, X., Cheng, J.: On spectral methods for Volterra integral equations and the convergence analysis. J. Comput. Math. 26, 825–837 (2008)

Trefethen, L. N.: Is Gauss quadrature better than Clenshaw–Curtis? SIAM Rev. 50, 67–87 (2008)

Trefethen, L.N., et al.: Chebfun Version 5.6.0, The Chebfun Development Team. http://www.chebfun.org (2016)

van der Houwen, P. J., te Riele, H. J. J.: Backward differentiation type formulas for Volterra integral equations of the second kind. Numer. Math. 37, 205–217 (1981)

Acknowledgments

The results reported in this paper were obtained during the visit of the first and second authors to Martin-Luther-Universität Halle-Wittenberg in 2018, which was supported by the German Academic Exchange Service, DAAD. These authors wish to express their gratitude to H. Podhaisky for making this visit possible. Also, the work of the first author was supported by the University of Tabriz, Iran under Grant No. 816.


Cite this article

Abdi, A., Hosseini, S.A. & Podhaisky, H. Numerical methods based on the Floater–Hormann interpolants for stiff VIEs. Numer Algor 85, 867–886 (2020). https://doi.org/10.1007/s11075-019-00841-4
