Abstract

Slow convergence and low accuracy are two main drawbacks of nonlinear system identification methods, and the problem becomes more complicated when time delays and noise are considered. In this paper, an online identification method is proposed for a fractional-order Hammerstein model. A combination of an evolutionary optimization method and the recursive least squares algorithm is used to estimate the system parameters and orders in the presence of unknown noise. Simulation results demonstrate the effectiveness of the proposed algorithm.

1 Introduction

Traditionally, physical systems and control systems were modeled as integer-order systems, regardless of their true nature. Recent research, however, has shown that some real-world systems are fractional order. In recent years, an increasing number of physical systems have been described better by fractional-order differential equations (FODEs) than by classical integer-order models, so researchers and engineers increasingly use fractional-order dynamical models to represent real physical systems.

Identifying the mathematical model of a system is essential both for analyzing its properties and for designing a suitable controller. System identification is a standard tool for modeling unknown systems; its main purpose is to determine the structure and parameters of a mathematical model that reproduces the dynamic behavior of the system. This process becomes more difficult when physical systems are described by FODEs instead of integer-order models. The high complexity and the lack of sufficient mathematical tools long limited attention to fractional-order (FO) dynamical systems in theory and practice [1, 2]. Today, however, with the growth of computing power and the ability to evaluate complex integrals and FO derivatives numerically, this problem has been partly solved, and fractional calculus has become an attractive research topic in the scientific and industrial communities. In recent decades, this growth, together with theoretical research on fractional integrals and derivatives [3, 4], has significantly extended the use of fractional operators in various fields.

Fractional calculus has been applied in many fields of science and engineering [5, 6], including identification of thermal systems [7], identification of biological tissues [8], image processing [9], signal processing [10], path planning [11], path tracking [12], robotics [13], mechanical damping [14], batteries [15], control theory and its applications [16, 17], mechanics [18] and diffusion [19]. In addition, systems with long-memory transient characteristics and frequency-independent behavior [14, 20, 21], transmission and distribution lines, electromechanical processes, dielectric polarization, viscoelastic materials such as polymers and rubbers, relaxation phenomena of organic dielectric materials, flexible structures, traffic in information networks and biological systems [7, 19, 22,23,24,25,26,27,28,29], colored noise, chaos [30], controllers [31], etc., can be modeled more appropriately by FO models than by integer-order models. This indicates that a significant number of real systems are fractional in nature, although for many of them the degree of fractionality is very low. The application of fractional calculus has therefore become a focus of international academic research, and the identification of fractional-order systems has attracted growing interest in the scientific community. Although FO models describe dynamic systems better than integer-order models, they require suitable methods for the analytical or numerical solution of FODEs [3, 4].

The aim of this paper is to present a new online method for identifying nonlinear systems that simultaneously increases accuracy and reduces computation. For this purpose, nonlinear system identification based on an FO Hammerstein model is considered. The proposed method uses a recursive identification algorithm, which enables online identification.

The Hammerstein model (Fig. 1) is used because it does not require much prior information about the system, which makes identification from process data relatively easy. Fractional calculus is used in the identification so that the FO model can capture features and phenomena that integer-order models ignore, and because the dynamic behavior of an increasing number of real processes is expressed more accurately by fractional models.

When an FO Hammerstein model is identified from input/output data, the unknown system parameters include:

1. the coefficients of the numerator and denominator of the transfer function and the fractional orders in the linear dynamic part;
2. the Bezier–Bernstein polynomial (BBP) coefficients, or the radial basis function neural network (RBFNN) parameters (centers, widths and connection weights), in the nonlinear static part.

The recursive identification method updates the unknown parameters as new input/output data arrive, using a recursive optimization algorithm. In this paper, the recursive least squares (RLS) algorithm is used for this purpose.

The fractional Hammerstein model is developed to identify multi-input–single-output (MISO) nonlinear systems with the structure shown in Fig. 2. The identification of MISO systems has many practical applications, and the approach can be generalized to multi-input–multi-output (MIMO) systems.

Modeling the nonlinear static part is a major challenge in the Hammerstein model. In this paper, two approaches are used to represent this part:

1. Bezier–Bernstein polynomials: from a numerical-analysis point of view, different types of polynomial functions can be used to approximate a function, but [32] shows that Bernstein basis functions are the best and most stable polynomial basis functions.
2. Artificial neural networks: an important advantage of an artificial neural network is that it overcomes limitations such as slow convergence and structural complexity [33].

The weaknesses and limitations of existing methods for identifying the Hammerstein model, which motivate the new method, are as follows:

1. restriction to commensurate (proportional) fractional orders [34,35,36,37,38,39,40,41];
2. unsuitability for online identification because of computational complexity [34,35,36,37,38,39,40,41];
3. neglect of time delays, especially in online mode [34,35,36,37,38,39,40,41,42,43,44,45,46,47];
4. lack of generality of the models; for example, most methods apply only to nonlinear systems with quasi-linear properties [37, 38];
5. inability to identify systems in the presence of noise [43,44,45,46,47,48].

In this paper, a recursive method that extends to online identification is used, and the Hammerstein model has the following features:

1. The transfer function of the linear part is fractional order, which, in addition to identifying the system more accurately, reduces the number of parameters, a characteristic feature of FO systems.
2. Because BBPs and RBFNNs can model nonlinear dynamics accurately, these two function classes are used to represent the nonlinear part of the Hammerstein model.
3. The FO Hammerstein model is developed in MISO form.
4. Because the method is recursive, and owing to the other features above, the proposed identification method extends to online operation.

2 Mathematical background

2.1 Fractional-order models

A continuous-time FO dynamical system can be expressed by an FODE as follows [27, 49]:

where \(a_{k} ,b_{k} \in {\mathbf{\mathbb{R}}}\). In explicit form:

By applying the Laplace transform with zero initial conditions, the input–output representation of the FO system can be obtained in the form of a transfer function:

2.2 The structure of Hammerstein model

As mentioned, the MISO Hammerstein model consists of a nonlinear static subsystem followed by a linear dynamic part. In general, the system is modeled as follows:

where \(y(t)\) is the output of the system, \(u_{k} (t),k = 1,2, \ldots ,r\) are the inputs, \(w_{k} (t),k = 1,2, \ldots ,r\) are the outputs of the nonlinear part and the inputs to the dynamic part, and \(n,m\) are the output and input orders of the linear subsystem.

In this paper, for the linear dynamics part, the FO transfer function is used as follows:

where \(a_{1} , \ldots ,a_{n} ,b_{0} , \ldots ,b_{m} \in R\) are coefficients and \(\alpha_{1} , \ldots ,\alpha_{n} ,\beta_{0} , \ldots ,\beta_{m} \in R\) are fractional orders. The corresponding FODE is shown as follows:

The fractional orders in this transfer function are not constrained to be commensurate (proportional); that is, they need not be integer multiples of a common base order.
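With zero initial conditions, \(\mathcal{L}\{D^{\alpha} f(t)\} = s^{\alpha}F(s)\), so each fractional derivative becomes a non-integer power of \(s\). Because the orders are not restricted to a common base order, the transfer function is simplest to evaluate directly on the imaginary axis. The sketch below computes \(G(j\omega)\) under the assumed normalized form \(G(s) = \sum_{j} b_j s^{\beta_j} / (1 + \sum_{i} a_i s^{\alpha_i})\), with the constant denominator term taken as 1 to match the coefficient list \(a_1, \ldots, a_n\). This form, the function name `fo_freq_response` and the example values are assumptions for illustration, not the paper's implementation.

```python
import numpy as np

def fo_freq_response(b, beta, a, alpha, omega):
    """Evaluate G(j*omega) of a fractional-order transfer function.

    Assumed (illustrative) form:
        G(s) = sum_j b[j] * s**beta[j] / (1 + sum_i a[i] * s**alpha[i])
    The orders may be arbitrary non-negative reals (no commensurate constraint).
    """
    s = 1j * np.asarray(omega, dtype=float)
    # (j*omega)**order uses the principal branch: omega**order * exp(j*pi*order/2)
    num = sum(bj * s**bt for bj, bt in zip(b, beta))
    den = 1.0 + sum(ai * s**al for ai, al in zip(a, alpha))
    return num / den

# Hypothetical example with non-commensurate orders 1.3 and 0.45.
w = np.logspace(-2, 2, 5)
G = fo_freq_response(b=[2.0], beta=[0.0], a=[0.5, 0.3], alpha=[1.3, 0.45], omega=w)
print(np.abs(G), np.angle(G, deg=True))
```

Evaluating each power of \(s\) separately is what lets arbitrary, unrelated orders be handled without first converting to a commensurate form.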

In order to use the output estimation method, Eq. (6) must be written in the regression form:

In this paper, three different structures are considered for the nonlinear static part:

2.2.1 Bezier–Bernstein polynomials

Reference [32] has shown that, among the various types of polynomial functions for approximating a function, Bernstein basis functions are the best and most stable. Therefore, the first method considered for modeling the nonlinear static part uses BBP functions. In 1912, S.N. Bernstein introduced the following polynomials for a function defined on the interval [0, 1] [50]:

Bernstein’s polynomials can be defined on an interval [a, b] by the following equation [50]:

These polynomials can be used to estimate any continuous function in the interval [a, b] and have the following properties [50]:

A general Bezier curve of degree n, defined by n + 1 vertices, can be expressed as follows [50]:

where \(a_{i}\) defines the \(i{\text{th}}\) vertex and determines the shape of the Bezier curve. Bezier extended the idea from approximating a function to approximating a polygon: the n + 1 vertices of a control polygon are combined through the Bernstein basis. The resulting curve is therefore called the Bezier–Bernstein polynomial curve.

The Bezier–Bernstein polynomial used for the nonlinear static part in this paper is considered in the following form:

where \(j,{\text{d}}\) are non-negative integers, \(\delta_{j}\) are weights to be determined, \(x(u(t))\) maps the input range onto the interval [0, 1] (\(x \in \left[ {0,1} \right]\)), and \(B_{{j,{\text{d}}}} (.)\) are the BBPs, defined as:

The number of Bernstein univariate polynomials of degree d is d + 1.

In [40], a formula for Bernstein polynomials defined on the interval [a, b] is given as follows:

With this definition, the relation (12) can be written as follows:

Using BBPs to represent the nonlinear part, we have in relation (4):

\(B_{{k\;i,{\text{d}}}} \left( {u_{k} \left( t \right)} \right),i = 0, \ldots ,{\text{d}},k = 1, \ldots ,r\) are the BBPs related to the kth input, and \(\delta_{k\;i} ,i = 0,1, \ldots ,{\text{d}},k = 1, \ldots ,r\) are the corresponding weights to be determined.
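The Bernstein basis and the resulting static nonlinearity can be sketched in a few lines. This assumes the standard basis \(B_{j,d}(x) = \binom{d}{j} x^{j} (1-x)^{d-j}\) on [0, 1] and a linear map \(x = (u - a)/(b - a)\) from the input range [a, b]; the mapping and the function names are illustrative assumptions. For a MISO model, the same function would be applied to each input \(u_k\) with its own weights \(\delta_{k\,i}\).

```python
import numpy as np
from math import comb

def bernstein_basis(x, d):
    """Values of the d + 1 Bernstein basis polynomials B_{j,d}(x), j = 0..d, for x in [0, 1]."""
    x = np.asarray(x, dtype=float)
    return np.stack([comb(d, j) * x**j * (1.0 - x) ** (d - j) for j in range(d + 1)], axis=-1)

def bbp_nonlinearity(u, delta, a, b):
    """w = sum_j delta[j] * B_{j,d}(x(u)), with x(u) = (u - a) / (b - a) and d = len(delta) - 1."""
    x = (np.asarray(u, dtype=float) - a) / (b - a)   # map the input range [a, b] onto [0, 1]
    return bernstein_basis(x, len(delta) - 1) @ np.asarray(delta, dtype=float)

# Partition of unity: the basis values sum to 1 at every x.
xs = np.linspace(0.0, 1.0, 11)
print(bernstein_basis(xs, 4).sum(axis=-1))

# Weights delta_j = j / d reproduce the linear map x(u) exactly (a known Bernstein property).
u = np.linspace(-2.0, 2.0, 9)
print(bbp_nonlinearity(u, [0.0, 0.25, 0.5, 0.75, 1.0], a=-2.0, b=2.0))
```

Because the output is linear in the weights \(\delta_{k\,i}\), they can be estimated by least squares once the basis values are computed.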

2.2.2 Radial basis function neural network (RBFNN)

In this case, the identification of the fractional-order Hammerstein model with RBFNN in the nonlinear static part and the fractional-order transfer function in the linear dynamic part is investigated.

Using an RBFNN for the nonlinear gain function has two advantages: the model can be adapted quickly when the process dynamics change, and constraints such as slow convergence and structural complexity are overcome [33]. In effect, the ability of Hammerstein models to capture nonlinear dynamics with relatively simple models is combined with the ability of fractional-order transfer functions to describe the system more accurately and compactly. As a result, the unsuitability of existing methods for online identification and their high estimation error are addressed. Also, because of the function-approximation capability of the RBFNN, using it increases the accuracy of the nonlinear-part estimate and thus reduces the estimation error. In this case, the output of the nonlinear part, which is the input to the linear part, is obtained from the following relation:

\(\phi_{hk} (.),h = 1, \ldots ,M,k = 1, \ldots ,r\) are the Gaussian functions of the hth hidden node related to the kth input, and \(\omega_{hk} (.),h = 1, \ldots ,M,k = 1, \ldots ,r\) are the connection weights, to be identified, from the hth hidden node to the output for the kth input. M is the number of radial basis functions (hidden nodes).

\(c_{hk} ,{\text{d}}_{hk} ,h = 1, \ldots ,M,k = 1, \ldots ,r\) are the centers and widths of the hth RBF hidden unit associated with the kth input and \(\left\| . \right\|\) defines the Euclidean norm.
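A minimal sketch of the RBFNN static nonlinearity for one scalar input follows. The Gaussian form \(\phi_{hk}(u) = \exp(-\|u - c_{hk}\|^2 / d_{hk}^2)\) is an assumption (some conventions use \(2 d_{hk}^2\) in the denominator), and `rbf_nonlinearity` is a hypothetical helper; for a scalar input the Euclidean norm reduces to an absolute value.

```python
import numpy as np

def rbf_nonlinearity(u_k, centers, widths, weights):
    """w_k = sum_h weights[h] * exp(-(u_k - centers[h])**2 / widths[h]**2) for a scalar input u_k.

    The Gaussian width convention (d**2 rather than 2*d**2) is an illustrative assumption.
    """
    u = np.asarray(u_k, dtype=float)[..., None]      # add a trailing axis to broadcast over hidden nodes
    c = np.asarray(centers, dtype=float)
    d = np.asarray(widths, dtype=float)
    phi = np.exp(-((u - c) ** 2) / d**2)             # shape (..., M): one activation per hidden node
    return phi @ np.asarray(weights, dtype=float)    # weighted sum over the M hidden nodes

# Hypothetical network with M = 3 hidden nodes.
print(rbf_nonlinearity(np.linspace(-1, 1, 5), centers=[-0.5, 0.0, 0.5],
                       widths=[0.4, 0.4, 0.4], weights=[1.0, -2.0, 0.5]))
```

The output is linear in the weights \(\omega_{hk}\) but nonlinear in the centers and widths, which is why the text splits these two groups between different estimators.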

2.2.3 Modified radial basis function neural network (MRBFNN)

An RBFNN consists of a single layer of radially symmetric activation functions. In the standard form, the number of RBFNN parameters increases exponentially with the number of inputs. In the modified RBFNN (MRBFNN), the Gaussian functions share a single adjustable center and width instead of having different ones, and only the weights differ. Also, in an RBFNN the number of hidden nodes equals the number of sampled training data, whereas in the MRBFNN the number of hidden nodes is limited and selectable. These two features significantly reduce the number of unknown parameters in the identification process. In this case, the Gaussian functions associated with the kth input no longer depend on the hidden node and have a constant center and width for each input:

That is, \(\phi_{hk} (.)\) Gaussian functions have the same centers and widths and are simplified to \(\phi_{k} (.),k = 1, \ldots ,r\).
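One consequence of sharing a single center \(c_k\) and width \(d_k\) is that the hidden-node weights can no longer be told apart: \(\sum_h \omega_{hk}\phi_k(u_k) = \Omega_k \phi_k(u_k)\) with \(\Omega_k = \sum_h \omega_{hk}\). This is an interpretation, consistent with the single gain \(\Omega_k\) listed for the MRBFNN in Section 3.3, and it explains why only four parameters per input remain (\(c_k, d_k, \Omega_k, \gamma_k\)). The numerical check below assumes the same illustrative Gaussian form as a standard RBF unit; the helper name is hypothetical.

```python
import numpy as np

def mrbf_nonlinearity(u_k, center, width, omega_sum):
    """w_k = Omega_k * phi_k(u_k), with one shared Gaussian phi_k per input (assumed form)."""
    u = np.asarray(u_k, dtype=float)
    return omega_sum * np.exp(-((u - center) ** 2) / width**2)

# Hypothetical weights omega_hk for M = 3 hidden nodes that all share c_k = 0.2, d_k = 0.7.
omega = np.array([0.4, -1.2, 2.0])
u = np.linspace(-1.0, 1.0, 5)
full = sum(w * np.exp(-((u - 0.2) ** 2) / 0.7**2) for w in omega)   # node-by-node sum
collapsed = mrbf_nonlinearity(u, 0.2, 0.7, omega.sum())             # single gain Omega_k
print(np.allclose(full, collapsed))                                  # True
```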

3 System identification algorithms

In the proposed identification method, the modified genetic algorithm (MGA) [51] is applied to identify the fractional orders and time delays in the dynamic part and the centers and widths of the RBF units (Eqs. 17–19) in the nonlinear part, and to produce initial estimates of the coefficients of the fractional-order transfer function, the BBP weights (Eq. 16) and the NN weights (Eqs. 17–19).

A GA with this innovative strategy is called the modified genetic algorithm (MGA). In the classic GA, the best solutions (chromosomes) are selected and transferred to the next generation; in the MGA, the best characteristics (genes) are selected and transferred instead. The idea is inspired by genetic manipulation of agricultural products, in which the best properties of different varieties are transferred to a new variety. In this algorithm, crossover is performed by exchanging the best genes between chromosomes [51].

Crossover is the main difference between the classic GA and the MGA. In the MGA, after the best chromosomes are selected, genes are exchanged among them, so that the process searches for the best genes rather than the best chromosomes. Artificial parents are then generated by putting the best genes together. Better solutions, escape from local optima and faster convergence are some advantages of the MGA that are vital in online identification. These advantages are illustrated by examples [51].
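The gene-level idea can be illustrated with a small sketch. The procedure of [51] is not reproduced here: `best_gene_parent` is a hypothetical, greedy reading of "exchanging the best genes", in which the best elite chromosome is improved position by position by borrowing the gene from another elite chromosome whenever that swap lowers the cost.

```python
import numpy as np

def best_gene_parent(elite, cost):
    """Assemble an 'artificial parent' gene by gene (hypothetical, greedy reading of MGA crossover).

    elite: array of shape (E, G), one elite chromosome per row.
    cost:  callable mapping a chromosome to a scalar cost to be minimized.
    """
    elite = np.asarray(elite, dtype=float)
    costs = np.array([cost(ch) for ch in elite])
    parent = elite[np.argmin(costs)].copy()        # start from the best whole chromosome
    best = costs.min()
    for g in range(parent.size):                   # visit each gene position
        for donor in elite:                        # offer the gene carried by every elite chromosome
            trial = parent.copy()
            trial[g] = donor[g]
            c = cost(trial)
            if c < best:                           # keep the swap only if it lowers the cost
                parent, best = trial, c
    return parent, best

# Each elite chromosome carries one "good" gene; none is good as a whole.
target = np.array([1.0, 2.0, 3.0])

def sq_cost(x):
    return float(np.sum((x - target) ** 2))

parent, c = best_gene_parent([[1.0, 9.0, 9.0], [9.0, 2.0, 9.0], [9.0, 9.0, 3.0]], sq_cost)
print(parent, c)
```

In this toy case, selecting whole chromosomes would keep a poor solution, while assembling the best genes reaches the optimum; this is the intuition behind the MGA's gene-level crossover.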

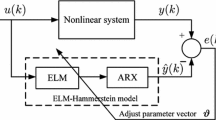

For identification, part of the input/output data is used to obtain initial estimates of all unknown parameters with the MGA. Then, starting from these estimates, the recursive least squares (RLS) algorithm updates and refines them using the rest of the data. The combination of these two algorithms makes it possible to track the nonlinear, time-varying behavior of the system. The proposed online system identification structure is shown in Fig. 3.

3.1 MISO Hammerstein model using BBPs

Considering Eq. (15), Eq. (7) becomes:

Equation (20) for MISO mode can be written in the following form:

According to Eq. (9), in the general case, for a BBP of degree d, we have:

Using the Grünwald–Letnikov approximation:

where

If we consider the measured data \(u(t)\) and \(y^{*} (t) = y(t) + p(t)\) where \(p(t)\) is the disturbance signal, Eqs. (3–43) can be rewritten as follows:

while

Equation (26) is linear with respect to coefficients and can be expressed as follows:

where:

The estimation vector \(\hat{\theta }_{k}\) is obtained by minimizing the following quadratic least squares criterion:

The solution to this problem can be obtained by the least squares estimation:

provided that the inverse \(\left[ {\sum\limits_{i = 1}^{k} {\phi (i - 1)\phi^{T} (i - 1)} } \right]^{ - 1}\) exists.
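The Grünwald–Letnikov approximation used in this derivation replaces each fractional derivative by a weighted sum of past samples, which is why the model becomes linear in the coefficients once the orders are fixed. A minimal sketch on uniformly sampled data follows. It uses the standard coefficients \(w_j = (-1)^j \binom{\alpha}{j}\), computed with the recursion \(w_0 = 1\), \(w_j = (1 - (\alpha+1)/j)\,w_{j-1}\), takes the first sample as the lower terminal, and uses illustrative function names.

```python
import numpy as np

def gl_weights(alpha, K):
    """Grünwald–Letnikov coefficients w_j = (-1)**j * binom(alpha, j) for j = 0..K."""
    w = np.empty(K + 1)
    w[0] = 1.0
    for j in range(1, K + 1):
        w[j] = w[j - 1] * (1.0 - (alpha + 1.0) / j)   # standard recursive form
    return w

def gl_derivative(f, alpha, h):
    """Approximate D^alpha f at every sample of f (step h), with the lower terminal at the first sample."""
    f = np.asarray(f, dtype=float)
    w = gl_weights(alpha, f.size - 1)
    # sample k: h**(-alpha) * sum_{j=0}^{k} w_j * f[k - j]
    return np.array([w[: k + 1] @ f[k::-1] for k in range(f.size)]) / h**alpha

h = 1e-3
t = np.arange(1001) * h
print(gl_derivative(t, 1.0, h)[1:4])                              # alpha = 1: backward difference of t
print(gl_derivative(t, 0.5, h)[-1], 2.0 * np.sqrt(1.0 / np.pi))   # D^0.5 t at t = 1 vs exact 2*sqrt(t/pi)
```

For \(\alpha = 1\) the weights reduce to \([1, -1, 0, \ldots]\), the ordinary backward difference; for fractional \(\alpha\) every past sample contributes, which is the long-memory property of FO models.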

In order to provide the online identification possibility, the recursive algorithms are required. For this purpose, a recursive version of Eq. (33) is proposed, which can be written as follows:

where the initial value of the adaptation gain matrix \(G_{k}\) is generally selected as:

For systems with slowly time-varying parameters, a forgetting factor \(\lambda\) (\(0 < \lambda < 1\)) can be introduced, and the RLS algorithm becomes:

Using this recursive algorithm, the coefficients \(a^{\prime}_{i} ,bv^{\prime}_{jl}\) are determined; the values of \(a_{i} ,b_{j}\) are then obtained using Eq. (24). Finally, Eq. (23) is used to compute the estimated output. The convergence of the RLS algorithm is proven in [51].
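The recursion estimates products of the original parameters (the \(bv'_{jl}\) coefficients, which appear to combine \(b_j\) with the nonlinear-part weights), so a separate step is needed to recover the factors, whose scale is only defined up to a normalization. One simple way to do this is sketched below, assuming the product estimates are arranged as a matrix \(\Psi_{jl} \approx b_j v_l\): fix one factor (here \(b_r = 1\), the normalization used in Section 3.2), read the \(v_l\) from row \(r\), and obtain each remaining \(b_j\) by the one-dimensional least-squares fit \(b_j = \Psi_j v / (v^T v)\). The matrix arrangement and the helper name `split_products` are assumptions; the paper's Eq. (24) may differ.

```python
import numpy as np

def split_products(Psi, r):
    """Recover b_j and v_l from product estimates Psi[j, l] ~ b_j * v_l, normalized so that b_r = 1.

    Hypothetical helper: row r estimates v because b_r = 1; every b_j is then the
    least-squares scalar that best maps v onto row j.
    """
    Psi = np.asarray(Psi, dtype=float)
    v = Psi[r].copy()
    b = Psi @ v / (v @ v)            # b_j = <Psi_j, v> / <v, v>; gives b_r = 1 exactly
    return b, v

# Noisy products from hypothetical true factors with b_1 = 1.
rng = np.random.default_rng(1)
b_true = np.array([0.5, 1.0, -2.0])
v_true = np.array([1.5, -0.3, 0.8, 2.0])
Psi_hat = np.outer(b_true, v_true) + 0.01 * rng.normal(size=(3, 4))
b_hat, v_hat = split_products(Psi_hat, r=1)
print(b_hat, v_hat)
```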

3.2 MISO Hammerstein model using RBFNN

As mentioned, to capture the system dynamics more accurately, time delays must be taken into account when identifying practical processes or systems. In this case, the system is considered with time delays in the inputs and is modeled as follows:

where \(y(t)\) is the system output, \(w_{k} (t),k = 1,2, \ldots ,r\) are the RBFNN outputs and the inputs to the linear dynamic part, \(\gamma_{k} ,k = 1,2, \ldots ,r\) are the time delays, and \(n,m\) are the output and input orders of the linear subsystem. The nonlinear static functions in this case are:

where \(u_{1} ,u_{2} , \ldots ,u_{r}\) are the inputs. As shown in the following equation, \(\phi_{hk} (.),h = 1, \ldots ,M,k = 1, \ldots ,r\) are the Gaussian functions related to the kth input. Also, \(\omega_{hk} (.),h = 1, \ldots ,M,k = 1, \ldots ,r\) are the connection weights, to be determined, from the hth hidden node to the output for the kth input. M is the number of RBFs.

\(c_{hk} ,{\text{d}}_{hk} ,h = 1, \ldots ,M,k = 1, \ldots ,r\) are the centers and widths of the hth RBF hidden unit associated with the kth input, and \(\left\| . \right\|\) denotes the Euclidean norm.

Also, the linear subsystem is considered as follows:

where \(a_{1} , \ldots ,a_{n} ,b_{0} , \ldots ,b_{m} \in R\) are the coefficients and \(\alpha_{1} , \ldots ,\alpha_{n} ,\beta_{0} , \ldots ,\beta_{m} \in R\) are the fractional orders.

Considering Eqs. (38) to (40), the input–output relationship is equal to:

If the measured inputs and outputs are \(u(t)\) and \(y^{*} (t) = y(t) + q(t)\), respectively, where \(q(t)\) is zero-mean Gaussian random noise with variance \(\sigma^{2}\), Eq. (41) is rewritten as follows:

The unknown parameters are divided into two subsets. The first subset includes the time delays \(\gamma_{k} ,k = 1,2, \ldots ,r\), the fractional orders \(\alpha_{1} , \ldots ,\alpha_{n} ,\beta_{0} , \ldots ,\beta_{m}\), and the centers and widths of the RBF units \({\text{d}}_{hk} ,c_{hk} ,h = 1, \ldots ,M,k = 1, \ldots ,r\). The second subset includes the transfer-function coefficients \(a_{1} , \ldots ,a_{n} ,b_{0} , \ldots ,b_{m}\) and the connection weights \(\omega_{hk} \left( \cdot \right),h = 1, \ldots ,M,k = 1, \ldots ,r\) from the hth hidden node to the output. In this paper, the input/output data are divided into two parts. The first part is used to estimate both subsets with the MGA; then, starting from these estimates, the RLS algorithm uses the second part of the data to update and refine the second subset.

The standard RLS algorithm requires initial values for the unknown parameters. In the standard approach, these initial values are computed with the batch least squares algorithm from an initial block of samples, and the dimension of the regressor determines the minimum number of samples needed for a unique solution. In this paper, the MGA is used for this task instead.
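A generic RLS recursion with forgetting factor, of the kind referred to in this section, can be sketched as follows: gain vector \(k = G\varphi/(\lambda + \varphi^T G\varphi)\), update \(\hat\theta \leftarrow \hat\theta + k(y - \varphi^T\hat\theta)\), and \(G \leftarrow (G - k\varphi^T G)/\lambda\), starting from \(G_0 = g_0 I\) with a large \(g_0\). The class name, the default \(g_0 = 10^6\) and the synthetic data are assumptions; the optional `theta0` argument is where initial estimates, such as those produced by the MGA, could be supplied. With \(\lambda = 1\) and a large \(g_0\), the recursive estimate should closely match the batch least-squares solution.

```python
import numpy as np

class RLS:
    """Recursive least squares with forgetting factor lam (lam = 1 gives ordinary RLS)."""

    def __init__(self, n_params, lam=1.0, g0=1e6, theta0=None):
        self.lam = lam
        self.G = g0 * np.eye(n_params)              # initial adaptation gain G_0 = g0 * I
        self.theta = (np.zeros(n_params) if theta0 is None
                      else np.asarray(theta0, dtype=float).copy())

    def update(self, phi, y):
        """Process one regressor/output pair and return the updated estimate."""
        phi = np.asarray(phi, dtype=float)
        G_phi = self.G @ phi
        k = G_phi / (self.lam + phi @ G_phi)        # gain vector
        err = y - phi @ self.theta                  # a priori prediction error
        self.theta = self.theta + k * err
        # G is symmetric, so k phi^T G equals outer(k, G phi)
        self.G = (self.G - np.outer(k, G_phi)) / self.lam
        return self.theta

# Check against batch least squares on synthetic data (lam = 1, large initial gain).
rng = np.random.default_rng(0)
Phi = rng.normal(size=(200, 3))
theta_true = np.array([0.5, -1.0, 2.0])
y = Phi @ theta_true + 0.01 * rng.normal(size=200)

est = RLS(3)
for phi_t, y_t in zip(Phi, y):
    est.update(phi_t, y_t)
theta_batch, *_ = np.linalg.lstsq(Phi, y, rcond=None)
print(est.theta, theta_batch)
```

The recursion never inverts the accumulated matrix explicitly, which is what makes it suitable when that matrix is ill-conditioned or when a new estimate is needed at every sample.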

In order to use the output error estimation method, the input–output relationship (41) must be written in regression form:

where \(z\) is the vector of known quantities (regressors) built from the input–output data:

with:

and \(\theta\) is the vector of unknown parameters:

including:

where, in (50):

Provided that the inverse \(\left[ {\sum\limits_{i = 1}^{k} {z(i - 1)z^{T} (i - 1)} } \right]^{ - 1}\) exists, the LS solution of this problem is:

When the matrix \(\sum\limits_{i = 1}^{k} {z(i - 1)z^{T} (i - 1)}\) is singular or ill-conditioned, computing its inverse is very difficult. To avoid this inversion in online identification, Eq. (53) is written in recursive form as follows [51]:

In general, the initial value of the adaptation gain matrix P is selected as follows [51]:

Without loss of generality, we assume that \(\hat{b}_{r} (k) = 1\):

The unknown coefficients \(a_{i} ,i = 1,...,n\) are obtained directly from \(\hat{\theta }_{a}\). The values \(b_{j} ,0 \le j \le m\) are calculated by linear least squares, with \(\hat{b}_{r} (k) = 1\), as follows:

3.3 MISO Hammerstein model using MRBFNN

In this case, Eq. (41) is modified as:

If the measured inputs and outputs are \(u(t)\) and \(y^{*} (t) = y(t) + q(t)\), respectively, where \(q(t)\) is zero-mean Gaussian random noise with variance \(\sigma^{2}\), Eq. (58) is rewritten as follows:

And the relation (46) is modified as:

In this case, the time delays \(\gamma_{k} ,k = 1,2, \ldots ,r\), the fractional orders \(\alpha_{1} , \ldots ,\alpha_{n} ,\beta_{0} , \ldots ,\beta_{m}\), and the centers and widths of the RBF units \(d_{hk} ,c_{hk} ,h = 1, \ldots ,M,k = 1, \ldots ,r\) are identified using the MGA. This evolutionary algorithm also provides initial estimates of the transfer-function coefficients \(a_{1} , \ldots ,a_{n} ,b_{0} , \ldots ,b_{m}\) and of the connection weights \(\omega_{hk} \left( \cdot \right),h = 1, \ldots ,M,k = 1, \ldots ,r\) from the hth hidden node to the output. These estimates are then updated and refined with the RLS algorithm.

For each input, the classic RBFNN has \(3M + 1\) unknown parameters (\(c_{hk} ,d_{hk} ,\omega_{hk}\) for \(h = 1, \ldots ,M\), plus \(\gamma_{k}\), where M is the number of data samples), whereas the modified RBFNN has only 4 (\(d_{k} ,c_{k} ,\Omega_{k} ,\gamma_{k}\)).

4 Simulation results

The main reason for using a recursive method is that it extends to online identification. To demonstrate online identification, two examples are considered:

1. a Hammerstein model with a piecewise nonlinear characteristic, such as a dead-zone characteristic [52];
2. the continuous-time linear parameter-varying (CT LPV) nonlinear benchmark system [53].

Both systems have been identified online using all three proposed structures. One hundred samples were used to estimate the initial model. Then, as each new input/output pair arrives in online mode, the model is updated using RLS. In online mode, the MGA is executed only once, at the beginning of the identification process, after which only the RLS algorithm is executed recursively. Therefore, only the recursive LS update is required at each sampling instant.

In addition, a second case is considered in which, as each new input/output pair is added, the MGA runs for a small number of generations (15 generations). In this case, the MGA updates all unknown parameters at each sample, starting from the best chromosome of the run at the previous sample instead of from random chromosomes. Then one RLS step updates the transfer-function coefficients and connection weights.

4.1 Hammerstein model with piecewise nonlinear characteristic

In this Hammerstein model, the linear dynamic part is given by the following recursive relation:

And the discrete nonlinear part is described by the following equation:

The actual and estimated outputs at the last sample (using 200 sampled data) are shown in Fig. 4 for the Hammerstein model with Bezier–Bernstein polynomials, in Fig. 5 for the model with the RBFNN and in Fig. 6 for the model with the MRBFNN. The corresponding estimation errors for the three nonlinear functions (Bezier–Bernstein, RBFNN and MRBFNN) are presented in Figs. 7, 8 and 9, respectively.
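For readers unfamiliar with the characteristic used in this example, a generic dead-zone is sketched below: the output is zero for inputs inside a band and grows linearly outside it. The slope and breakpoints are hypothetical placeholders, not the values used in this example. The kink at each breakpoint plausibly makes this characteristic a demanding test for smooth approximators such as Bernstein polynomials and Gaussian RBFs.

```python
import numpy as np

def dead_zone(u, slope=1.0, b_left=-0.5, b_right=0.5):
    """Generic dead-zone: zero on [b_left, b_right], linear with the given slope outside (hypothetical values)."""
    u = np.asarray(u, dtype=float)
    return np.where(u > b_right, slope * (u - b_right),
                    np.where(u < b_left, slope * (u - b_left), 0.0))

print(dead_zone([-1.0, -0.2, 0.0, 0.3, 1.0]))
```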

To compare the estimation accuracy of the three structures, the evolution of the estimation error during the identification process (over successive samples) is shown in Figs. 10, 11 and 12, and the error values are given in Table 1.

The estimation errors in Table 1 and the corresponding Figs. 10–12 show that the Hammerstein model with the MRBFNN identifies the system more accurately than the other two structures.

Also, to compare the convergence speed of the parameters in the three structures, the evolution of the identified parameters is presented in Figs. 13 and 14 for the Hammerstein model with Bezier–Bernstein polynomials, in Figs. 15, 16 and 17 for the model with the RBFNN and in Figs. 18, 19 and 20 for the model with the MRBFNN. The figures show that, among the three proposed structures, identification of the Hammerstein model with the MRBFNN converges fastest, owing to its smaller number of unknown parameters.

4.2 Continuous-time linear parameter-varying (CT LPV) nonlinear benchmark system

The second system is a benchmark problem proposed by Rao and Garnier in [54]. This problem is inspired by the "moving pole" parameter-varying system: a fourth-order system with non-minimum-phase frozen dynamics and a pair of complex poles that depend on the parameter \(p\). The system is defined as follows:

where \(d\) is the time-derivative operator, \(p\) is a time-varying scheduling variable, and \(A_{0}\) and \(B_{0}\) are polynomials in \(d\) with coefficients \(a_{i}^{0}\) and \(b_{i}^{0}\), which are functions of \(p\). \(v_{0}\) is a quasi-stationary noise process with bounded spectral density, uncorrelated with the input, given by:

Here, \(e_{0} (t)\) is a zero-mean white-noise process, \(q^{ - 1}\) is the backward time-shift operator, and \(C_{0}\) and \(D_{0}\) are polynomials with constant coefficients. The parameter values used in this paper are as follows [53]:

For coefficients:

The input signal is a uniform distribution sequence in the interval \([ - 1,1]\), \(p\) is selected as \(p(t) = \sin (\pi t)\), and the sampling time is \(1{\text{ms}}\).
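The excitation and scheduling signals described above are straightforward to generate; the sketch below also colours white noise through a rational filter \(C_0(q^{-1})/D_0(q^{-1})\), implemented as a difference equation. The filter coefficients, the noise level and the function name are hypothetical placeholders, not the parameter values of the benchmark.

```python
import numpy as np

def lpv_benchmark_signals(n_samples, ts=1e-3, c=(1.0,), d=(1.0, -0.5), noise_std=0.1, seed=0):
    """Input u ~ U[-1, 1], scheduling p(t) = sin(pi t), coloured noise v = (C(q^-1) / D(q^-1)) e.

    c, d and noise_std are hypothetical placeholders, not the benchmark's values.
    """
    rng = np.random.default_rng(seed)
    t = np.arange(n_samples) * ts                  # sampling time ts = 1 ms
    u = rng.uniform(-1.0, 1.0, n_samples)          # uniformly distributed excitation
    p = np.sin(np.pi * t)                          # scheduling variable
    e = noise_std * rng.standard_normal(n_samples) # zero-mean white noise
    v = np.zeros(n_samples)
    for k in range(n_samples):
        # difference equation: d0 v[k] = sum_i c[i] e[k-i] - sum_{i>=1} d[i] v[k-i]
        acc = sum(c[i] * e[k - i] for i in range(len(c)) if k - i >= 0)
        acc -= sum(d[i] * v[k - i] for i in range(1, len(d)) if k - i >= 0)
        v[k] = acc / d[0]
    return t, u, p, v, e

t, u, p, v, e = lpv_benchmark_signals(500)
print(u[:3], p[:3], v[:3])
```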

The actual and estimated outputs at the last sample (using 500 sampled data) are shown in Fig. 21 for the Hammerstein model with Bezier–Bernstein polynomials, in Fig. 22 for the model with the RBFNN and in Fig. 23 for the model with the MRBFNN. The corresponding estimation errors for the three nonlinear functions (Bezier–Bernstein, RBFNN and MRBFNN) are presented in Figs. 24, 25 and 26, respectively.

To compare the estimation accuracy of the three structures, the evolution of the estimation error during the identification process (over successive samples) is shown in Figs. 27, 28 and 29, and the error values are given in Table 2.

The estimation errors in Table 2 and the corresponding Figs. 27, 28 and 29 show that the Hammerstein model with the MRBFNN identifies the system more accurately than the Hammerstein model with the classic RBFNN.

Also, to compare the convergence speed of the parameters in the three structures, the evolution of the identified parameters is presented in Figs. 30 and 31 for the Hammerstein model with Bezier–Bernstein polynomials, in Figs. 32, 33 and 34 for the model with the RBFNN and in Figs. 35, 36 and 37 for the model with the MRBFNN. The figures show that, among the three proposed structures, identification of the Hammerstein model with the MRBFNN converges fastest, owing to its smaller number of unknown parameters.

5 Conclusion

In this paper, a numerical example and a benchmark problem were used to evaluate the accuracy of the proposed online identification method. Each of the three proposed structures was used to identify these systems, and the results were presented. These results confirm the ability of the proposed method to identify the systems accurately and to reject noise. A comparison of the three structures in online identification, in terms of convergence speed and estimation accuracy, shows the relative accuracy advantage of the modified structure: with the MRBFNN, both the estimation accuracy and the convergence speed of the parameters in online mode increase compared with the classic NN. The estimation accuracy of the modified NN is 63.05% higher than that of the Bezier–Bernstein polynomials in the first example and 18.7% lower in the second example. Considering the faster convergence of the modified NN compared with Bezier–Bernstein polynomials, the Hammerstein structure with the modified NN is recommended.

References

Oustaloup, A.: From fractality to non-integer derivation through recursivity, a property common to these two concepts: a fundamental idea from a new process control strategy. In: Proceeding of the 12th IMACS World Congress, Paris, pp. 18–22. (1988)

Manabe, S.: The non-integer integral and its application to control systems. Mitsubishi Denki Lab. Rep. 2(2), 1–4 (1961)

Miller, K.S., Ross, B.: An Introduction to the Fractional Calculus and Fractional Differential Equations. Wiley, New York (1993)

Podlubny, I.: Fractional Differential Equations: An Introduction to Fractional Derivatives, Fractional Differential Equations, to Methods of their Solution and some of their Applications. Academic Press, Cambridge (1998)

Baleanu, D., Güvenç, Z.B., Machado, J.T. (eds.): New Trends in Nanotechnology and Fractional Calculus Applications, p. 978. Springer, New York (2010)

Sabatier, J., Agrawal, O.P., Machado, J.T.: Advances in Fractional Calculus. Springer, Dordrecht, The Netherlands (2007)

Gabano, J.D., Poinot, T., Kanoun, H.: Identification of a thermal system using continuous linear parameter-varying fractional modelling. IET Control Theory Appl. 5(7), 889–899 (2011)

Ionescu, C.M., Hodrea, R., De Keyser, R.: Variable time-delay estimation for anesthesia control during intensive care. IEEE Trans. Biomed. Eng. 58(2), 363–369 (2011)

Mathieu, B., Melchior, P., Oustaloup, A., Ceyral, C.: Fractional differentiation for edge detection. Signal Process. 83(11), 2421–2432 (2003)

Barbosa, R.S., Machado, J.T., Silva, M.F.: Time domain design of fractional differintegrators using least-squares. Signal Process. 86(10), 2567–2581 (2006)

Melchior, P., Orsoni, B., Lavialle, O., Poty, A., Oustaloup, A.: Consideration of obstacle danger level in path planning using A∗ and fast-marching optimisation: comparative study. Signal Process. 83(11), 2387–2396 (2003)

Yousfi, N., Melchior, P., Rekik, C., Derbel, N., Oustaloup, A.: Design of centralized CRONE controller combined with MIMO-QFT approach applied to non square multivariable systems. Int. J. Comput. Appl. 45(16), 0975–8887 (2012)

Silva, M.F., Machado, J.T., Lopes, A.M.: Fractional order control of a hexapod robot. Nonlinear Dyn. 38(1–4), 417–433 (2004)

Cao, J., Ma, C., Xie, H., Jiang, Z.: Nonlinear dynamics of duffing system with fractional order damping. J. Comput. Nonlinear Dyn. 5(4), 041012 (2010)

Cugnet, M., Sabatier, J., Laruelle, S., Grugeon, S., Sahut, B., Oustaloup, A., Tarascon, J.M.: On lead-acid-battery resistance and cranking-capability estimation. IEEE Trans. Ind. Electron. 57(3), 909–917 (2010)

Monje, C.A., Vinagre, B.M., Feliu, V., Chen, Y.: Tuning and auto-tuning of fractional order controllers for industry applications. Control Eng. Pract. 16(7), 798–812 (2008)

Zhao, M., Wang, J.: Outer synchronization between fractional-order complex networks: a non-fragile observer-based control scheme. Entropy 15(4), 1357–1374 (2013)

Baleanu, D., Golmankhaneh, A., Nigmatullin, R., Golmankhaneh, A.: Fractional newtonian mechanics. Open Phys. 8(1), 120–125 (2010)

Chen, W., Ye, L., Sun, H.: Fractional diffusion equations by the Kansa method. Comput. Math. Appl. 59(5), 1614–1620 (2010)

Suchorsky, M.K., Rand, R.H.: A pair of van der Pol oscillators coupled by fractional derivatives. Nonlinear Dyn. 69(1–2), 313–324 (2012)

Yang, J.H., Zhu, H.: Vibrational resonance in duffing systems with fractional-order damping. Chaos Interdiscip. J. Nonlinear Sci. 22(1), 013112 (2012)

Rossikhin, Y.A., Shitikova, M.V.: Analysis of damped vibrations of thin bodies embedded into a fractional derivative viscoelastic medium. J. Mech. Behav. Mater. 21(5–6), 155–159 (2013)

Bagley, R.L., Calico, R.A.: Fractional order state equations for the control of viscoelastically damped structures. J. Guid. Control Dyn. 14(2), 304–311 (1991)

Battaglia, J.L., Le Lay, L., Batsale, J.C., Oustaloup, A., Cois, O.: Heat flux estimation through inverted non integer identification models. Int. J. Therm. Sci. 39(3), 374–389 (2000)

Zaborovsky, V., Meylanov, R.: Informational network traffic model based on fractional calculus. In: Proceedings of the 2001 International Conferences on Info-tech and Info-net (ICII 2001), Beijing, vol. 1, pp. 58–63. IEEE (2001)

Vinagre, B.M., Feliu, V.: Modeling and control of dynamic system using fractional calculus: application to electrochemical processes and flexible structures. In: Proceedings of the 41st IEEE Conference on Decision and Control, vol. 1, pp. 214–239 (2002)

Sjöberg, M.M., Kari, L.: Non-linear behavior of a rubber isolator system using fractional derivatives. Veh. Syst. Dyn. 37(3), 217–236 (2002)

Reyes-Melo, M.E., Martinez-Vega, J.J., Guerrero-Salazar, C.A., Ortiz-Mendez, U.: Application of fractional calculus to modelling of relaxation phenomena of organic dielectric materials. In: Proceedings of the 2004 IEEE International Conference on Solid Dielectrics (ICSD 2004), vol. 2, pp. 530–533. IEEE (2004)

Ionescu, C., Desager, K., De Keyser, R.: Fractional order model parameters for the respiratory input impedance in healthy and in asthmatic children. Comput. Methods Programs Biomed. 101(3), 315–323 (2011)

Li, C., Peng, G.: Chaos in Chen’s system with a fractional order. Chaos Solitons Fractals 22(2), 443–450 (2004)

Chen, Y., Petras, I., Xue, D.: Fractional order control – a tutorial. In: 2009 American Control Conference, pp. 1397–1411. IEEE (2009)

Farouki, R., Goodman, T.: On the optimal stability of the Bernstein basis. Math. Comput. Am. Math. Soc. 65(216), 1553–1566 (1996)

Pislaru, C., Shebani, A.: Identification of nonlinear systems using radial basis function neural network. Int. J. Comput. Inf. Syst. Control Eng. 8(9), 1528–1533 (2014)

Hong, X., Mitchell, R.J.: Hammerstein model identification algorithm using Bezier-Bernstein approximation. IET Control Theory Appl. 1(4), 1149–1159 (2007)

Aoun, M., Malti, R., Cois, O., Oustaloup, A.: System identification using fractional Hammerstein models. In: Proceedings of the 15th IFAC World Congress, pp. T-We-M21 (2002)

Liao, Z., Zhu, Z., Liang, S., Peng, C., Wang, Y.: Subspace identification for fractional order Hammerstein systems based on instrumental variables. Int. J. Control Autom. Syst. 10(5), 947–953 (2012)

Vanbeylen, L.: A fractional approach to identify Wiener-Hammerstein systems. Automatica 50(3), 903–909 (2014)

Zhao, Y., Li, Y., Chen, Y.: Complete parametric identification of fractional order Hammerstein systems. In: 2014 International Conference on Fractional Differentiation and its Applications (ICFDA), pp. 1–6. IEEE (2014)

Li, Y., Zhai, L., Chen, Y., Ahn, H.S.: Fractional-order iterative learning control and identification for fractional-order Hammerstein system. In: 2014 11th World Congress on Intelligent Control and Automation (WCICA), pp. 840–845. IEEE (2014)

Hammar, K., Djamah, T., Bettayeb, M.: Fractional Hammerstein system identification using polynomial non-linear state space model. In: 2015 3rd International Conference on Control, Engineering & Information Technology (CEIT), pp. 1–6. IEEE (2015)

Hammar, K., Djamah, T., Bettayeb, M.: Fractional Hammerstein system identification using particle swarm optimization. In: 2015 7th International Conference on Modelling, Identification and Control (ICMIC), pp. 1–6. IEEE (2015)

Ivanov, D.V.: Identification discrete fractional order Hammerstein systems. In: 2015 International Siberian Conference on Control and Communications (SIBCON), pp. 1–4. IEEE (2015)

Karimshoushtari, M., Novara, C.: Design of experiments for nonlinear system identification: a set membership approach. Automatica 119, 109036 (2020)

Karagoz, R., Batselier, K.: Nonlinear system identification with regularized tensor network B-splines. Automatica 122, 109300 (2020)

Kaltenbacher, B., Nguyen, T.T.N.: A model reference adaptive system approach for nonlinear online parameter identification. Inverse Probl. 37(5), 055006 (2021)

Du, Y., Liu, F., Qiu, J., Buss, M.: A novel recursive approach for online identification of continuous-time switched nonlinear systems. Int. J. Robust Nonlinear Control 31(15), 7546–7565 (2021)

Mania, H., Jordan, M.I., Recht, B.: Active learning for nonlinear system identification with guarantees. J. Mach. Learn. Res. 23, 32–41 (2022)

Leylaz, G., Wang, S., Sun, J.Q.: Identification of nonlinear dynamical systems with time delay. Int. J. Dyn. Control 10(1), 13–24 (2022)

Monje, C.A., Chen, Y., Vinagre, B.M., Xue, D., Feliu-Batlle, V.: Fractional-order Systems and Controls: Fundamentals and Applications. Springer, Cham (2010)

Mohammed, A.A.: Approximate solution of differential equations of fractional orders using Bernstein-Bézier polynomial. Al-Mustansiriya J. Sci. 23(1), 65–74 (2012)

Jahani Moghaddam, M., Mojallali, H., Teshnehlab, M.: A multiple-input–single-output fractional-order Hammerstein model identification based on modified neural network. Math. Methods Appl. Sci. 41(16), 6252–6271 (2018)

Elleuch, K., Chaari, A.: Modeling and identification of Hammerstein system by using triangular basis functions. Int. J. Electr. Comput. Energ. Electron. Commun. Eng. 1, 1 (2011)

Laurain, V., Tóth, R., Gilson, M., Garnier, H.: Direct identification of continuous-time linear parameter-varying input/output models. IET Control Theory Appl. 5(7), 878–888 (2011)

Rao, G.P., Garnier, H.: Numerical illustrations of the relevance of direct continuous-time model identification. In: 15th Triennial IFAC World Congress on Automatic Control, Barcelona, Spain (2002)

Funding

The authors declare that no funds, grants, or other support were received during the preparation of this manuscript.

Author information

Contributions

Study concept and design, analysis and interpretation of data, drafting of the manuscript, critical revision of the manuscript for important intellectual content, and statistical analysis were all performed by the corresponding author.

Ethics declarations

Competing interests

The authors have no relevant financial or non-financial interests to disclose.

About this article

Cite this article

Jahani Moghaddam, M. Online system identification using fractional-order Hammerstein model with noise cancellation. Nonlinear Dyn 111, 7911–7940 (2023). https://doi.org/10.1007/s11071-023-08249-5
