Abstract

In this paper, we focus on Boubaker polynomials in the area of fractional calculus and obtain, for the first time, the operational matrix of the Caputo fractional derivative and the operational matrix of the Riemann–Liouville fractional integration. A general formulation for the operational matrix of multiplication of these polynomials is also derived in order to handle nonlinear problems. These matrices are then applied to solve fractional optimal control problems directly: the functions in the constraint equations, the performance index and the conditions are approximated by Boubaker polynomials with unknown coefficients. Thus, a fractional optimal control problem is converted into an optimization problem, which can then be solved easily. Convergence of the algorithm is proved. Numerical results for several test examples demonstrate the applicability and efficiency of the method.


1 Introduction

Polynomial expansion methods are applied to many mathematical and engineering problems with good results. Among the frequently used polynomials, the Boubaker polynomials are a non-orthogonal polynomial sequence introduced by Boubaker in 2007 [1]. In [2, 3], the authors presented theoretical investigations of temperature profiling in cylindrical coordinates and solved the related problems using Boubaker polynomials. Kumar solved Love’s equation via the Boubaker polynomials expansion scheme [4], and the Boltzmann diffusion equation was solved using these polynomials in [5]. In [6], the authors used these polynomials to solve optimal control problems, a field with a long history. That paper motivated us to use Boubaker polynomials for solving fractional order optimal control problems (FOCPs), which involve at least one fractional derivative and form a very new field in mathematics.

Optimality conditions for FOCPs with the Riemann–Liouville derivative were obtained by Agrawal in [7]. Since the analytical solution of the resulting Hamiltonian system is very complicated, several numerical methods have been presented to solve it. For example, in [8] the author approximated fractional dynamic systems using the Grünwald–Letnikov definition and then applied a numerical method to solve the resulting algebraic equations, and in [9] Agrawal introduced Hamiltonian equations for a class of FOCPs with the Caputo derivative and presented an approach based on the equivalence of the Euler–Lagrange and Volterra integral equations. In [10], a central difference numerical scheme was used to solve these equations. In [11], the authors used a rational approximation to solve FOCPs. In [12], the Legendre multiwavelet collocation method was introduced, and in [13] the authors applied another numerical method to solve a class of FOCPs. In [14], a quadratic numerical scheme was presented, and in [15] the Legendre spectral collocation method was used to solve some types of fractional optimal control problems. A discrete method was used to solve fractional optimal control problems in [16]. Recently, some authors have solved FOCPs directly, without using the Hamiltonian equations [17, 18]; in these papers, the FOCP is converted into a system of algebraic equations, which is then solved by methods similar to those presented in [19,20,21,22] for nonlinear systems.

The organization of this paper is as follows. Section 2 contains three subsections that present preliminaries needed later. In Sects. 3 and 4, the Boubaker operational matrices of the Caputo fractional derivative and the Riemann–Liouville fractional integration are presented. In Sect. 5, we introduce the operational matrix of multiplication. Section 6 states the problem and applies the obtained operational matrices to it. In Sect. 7, the convergence analysis is discussed, and Sect. 8 presents several numerical examples that show the validity of our method. Finally, conclusions are given in Sect. 9.

2 Preliminaries and notations

In this section, we recall some basic definitions and auxiliary results that will be needed later. Here we give the standard definitions of the left and right Riemann–Liouville fractional integrals and Caputo fractional derivatives.

2.1 The fractional integral and derivative

Definition 1

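Let \(x:[a,b] \rightarrow {\mathbb {R}}\) be a function, \(\alpha > 0\) a real number and \(n=\left\lceil { \alpha }\right\rceil \), where \(\left\lceil { \alpha }\right\rceil \) denotes the smallest integer greater than or equal to \(\alpha \). The left and right Riemann–Liouville fractional integrals are defined by [23]:

\[ {}_aI_t^{\alpha }x(t)=\frac{1}{\Gamma (\alpha )}\int _a^t (t-\tau )^{\alpha -1}x(\tau )\,\mathrm {d}\tau ,\qquad {}_tI_b^{\alpha }x(t)=\frac{1}{\Gamma (\alpha )}\int _t^b (\tau -t)^{\alpha -1}x(\tau )\,\mathrm {d}\tau . \]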

For the Riemann–Liouville fractional integrals, we have:

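Moreover, for non-integer \(\alpha \) (so that \(n-1<\alpha <n\)), the left and right Caputo fractional derivatives are defined by means of:

\[ {}^{C}_{a}D_t^{\alpha }x(t)=\frac{1}{\Gamma (n-\alpha )}\int _a^t (t-\tau )^{n-\alpha -1}x^{(n)}(\tau )\,\mathrm {d}\tau ,\qquad {}^{C}_{t}D_b^{\alpha }x(t)=\frac{(-1)^n}{\Gamma (n-\alpha )}\int _t^b (\tau -t)^{n-\alpha -1}x^{(n)}(\tau )\,\mathrm {d}\tau , \]

and for \(\alpha =n\) they reduce to \(x^{(n)}(t)\) and \((-1)^n x^{(n)}(t)\), respectively.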

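With \(a=0\), the left Caputo fractional derivative, denoted \(D_t^\alpha \), has the property

\[ D_t^{\alpha }t^{\beta }=\begin{cases} 0, & \beta \in N_0,\ \beta <\left\lceil { \alpha }\right\rceil ,\\ \dfrac{\Gamma (\beta +1)}{\Gamma (\beta +1-\alpha )}\,t^{\beta -\alpha }, & \beta \in N_0,\ \beta \ge \left\lceil { \alpha }\right\rceil , \end{cases} \]

where \(N_0=\lbrace 0,1,2,\ldots \rbrace \); in particular, \(D_t^\alpha C = 0\) holds for any constant C.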
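As a small numerical illustration (a sketch, not code from this paper), the power rule \(D_t^{\alpha }t^{\beta }=\Gamma (\beta +1)/\Gamma (\beta +1-\alpha )\,t^{\beta -\alpha }\) for integer \(\beta \ge \lceil \alpha \rceil \), and \(0\) for \(\beta <\lceil \alpha \rceil \), can be evaluated pointwise with the standard gamma function. The helper name `caputo_power` is hypothetical.

```python
import math


def caputo_power(beta: int, alpha: float, t: float) -> float:
    """Left Caputo derivative (lower limit 0) of t**beta, for integer beta >= 0."""
    if beta < 0 or alpha <= 0:
        raise ValueError("need integer beta >= 0 and alpha > 0")
    if beta < math.ceil(alpha):
        # Powers below ceil(alpha), including constants, are annihilated.
        return 0.0
    return math.gamma(beta + 1) / math.gamma(beta + 1 - alpha) * t ** (beta - alpha)


# alpha = 1 reproduces the classical derivative: d/dt t^3 = 3 t^2.
print(caputo_power(3, 1.0, 2.0))
# Fractional order: D^{1/2} t = t^{1/2} / Gamma(3/2), evaluated at t = 1.
print(caputo_power(1, 0.5, 1.0))
```

Choosing \(\alpha =1\) is a convenient sanity check, because the rule must then coincide with ordinary differentiation.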

2.2 Boubaker polynomials

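In this subsection, the Boubaker polynomials, which are used in the next sections, are briefly reviewed. The Boubaker polynomials were first established by Boubaker et al. to solve the heat equation inside a physical model. The first monomial definition of the Boubaker polynomials on the interval \(t \in [0,1] \) was introduced in [1]:

\[ B_0(t)=1,\qquad B_n(t)=\sum _{r=0}^{\lfloor n/2\rfloor }\frac{n-4r}{n-r}\binom{n-r}{r}(-1)^r\,t^{n-2r},\quad n\ge 1, \]

where \(\lfloor \cdot \rfloor \) is the floor function. The Boubaker polynomials also satisfy the recurrence relation

\[ B_m(t)=t\,B_{m-1}(t)-B_{m-2}(t),\qquad m>2, \]

and the first few Boubaker polynomials are

\[ B_0(t)=1,\quad B_1(t)=t,\quad B_2(t)=t^2+2,\quad B_3(t)=t^3+t,\quad B_4(t)=t^4-2,\quad B_5(t)=t^5-t^3-3t. \]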
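The explicit floor-sum formula and the three-term recurrence \(B_m=tB_{m-1}-B_{m-2}\) (\(m>2\), starting from \(B_0=1\), \(B_1=t\), \(B_2=t^2+2\)) can be cross-checked numerically. The sketch below builds the matrix of monomial coefficients (row i holds the coefficients of \(B_i(t)\) in ascending powers, which is the role the coefficient matrix \(\varLambda \) plays in Sect. 3); the function names are hypothetical.

```python
from math import comb

import numpy as np


def boubaker_matrix(m: int) -> np.ndarray:
    """Row i holds the coefficients of B_i(t) in ascending powers 1, t, ..., t^m."""
    lam = np.zeros((m + 1, m + 1))
    lam[0, 0] = 1.0                          # B_0(t) = 1
    if m >= 1:
        lam[1, 1] = 1.0                      # B_1(t) = t
    if m >= 2:
        lam[2, 0], lam[2, 2] = 2.0, 1.0      # B_2(t) = t^2 + 2
    for i in range(3, m + 1):                # B_i = t * B_{i-1} - B_{i-2}
        lam[i, 1:] = lam[i - 1, :-1]         # multiplying by t shifts every power up by one
        lam[i] -= lam[i - 2]
    return lam


def boubaker_explicit(n: int) -> np.ndarray:
    """Coefficients of B_n(t), n >= 1, from the explicit floor-sum formula."""
    c = np.zeros(n + 1)
    for r in range(n // 2 + 1):
        c[n - 2 * r] += (n - 4 * r) / (n - r) * comb(n - r, r) * (-1) ** r
    return c


lam = boubaker_matrix(8)
for n in range(1, 9):
    # Both constructions agree coefficient by coefficient.
    assert np.allclose(lam[n, : n + 1], boubaker_explicit(n))
print(lam[5])  # coefficients of B_5(t) = t^5 - t^3 - 3t, padded with zeros
```

The recurrence is the cheaper route for large m; the explicit sum is kept here only as an independent check.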

2.3 Function approximation

It is clear that

is a finite-dimensional, closed subspace of the Hilbert space \(H=L^2[0,1]\). Therefore, Y is a complete subspace, and for each \(f \in H\) there is a unique best approximation \({\tilde{f}}\in Y\) out of Y, that is

Since \({\tilde{f}} \in Y,\) there exist unique coefficients \(c_0,c_1,\ldots ,c_m\), such that [24]

where T denotes transposition, and C and \(\varPsi (t)\) are the following vectors:

We let

where \(\langle \cdot ,\cdot \rangle \) denotes the inner product; then we have

with

Now we suppose that

where

so we can write

and C can be calculated as

where D is the following \((m+1) \times (m+1)\) matrix
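A minimal sketch of this best-approximation step follows, assuming that \(F_i=\langle f,B_i\rangle \) and that D is the Gram matrix \(D_{ij}=\langle B_i,B_j\rangle \), so that \(C=D^{-1}F\). The inner products with f are computed here with a Gauss–Legendre rule on [0,1], which is our choice rather than something prescribed by the text; all function names are hypothetical.

```python
import numpy as np


def boubaker_matrix(m):
    """Row i: coefficients of B_i(t) in ascending powers (B_i = t*B_{i-1} - B_{i-2}, i > 2)."""
    lam = np.zeros((m + 1, m + 1))
    lam[0, 0] = 1.0
    if m >= 1:
        lam[1, 1] = 1.0
    if m >= 2:
        lam[2, 0], lam[2, 2] = 2.0, 1.0
    for i in range(3, m + 1):
        lam[i, 1:] = lam[i - 1, :-1]
        lam[i] -= lam[i - 2]
    return lam


def gram_matrix(lam):
    """D_{ij} = <B_i, B_j> on [0, 1]; in the monomial basis <t^j, t^k> = 1/(j+k+1)."""
    k = np.arange(lam.shape[0])
    return lam @ (1.0 / (k[:, None] + k[None, :] + 1)) @ lam.T


def boubaker_coefficients(f, m, n_quad=40):
    """Best L2[0,1] approximation f ~ C^T Psi(t): solve D C = F with F_i = <f, B_i>."""
    lam = boubaker_matrix(m)
    x, w = np.polynomial.legendre.leggauss(n_quad)
    t, w = 0.5 * (x + 1.0), 0.5 * w                       # map Gauss nodes/weights to [0, 1]
    psi = lam @ np.vander(t, m + 1, increasing=True).T    # Psi at the nodes, shape (m+1, n_quad)
    F = psi @ (w * f(t))                                  # F_i = <f, B_i> by quadrature
    return np.linalg.solve(gram_matrix(lam), F), lam


def evaluate(C, lam, t):
    """Evaluate C^T Psi(t) at the points t."""
    t = np.atleast_1d(np.asarray(t, dtype=float))
    return C @ (lam @ np.vander(t, lam.shape[0], increasing=True).T)


C, lam = boubaker_coefficients(np.exp, 5)
ts = np.linspace(0.0, 1.0, 11)
print(np.max(np.abs(evaluate(C, lam, ts) - np.exp(ts))))  # small for m = 5
```

Solving \(DC=F\) rather than forming \(D^{-1}\) explicitly is the usual numerically safer choice; for large m the Gram matrix becomes ill-conditioned, as for any monomial-like basis.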

3 Boubaker operational matrix of the Caputo fractional derivative

The Caputo fractional derivative of the vector \(\varPsi (t)\) defined in Eq. (3) can be expressed as

where \(D_\alpha \) is the \((m+1)\times (m+1)\) Caputo fractional operational matrix of derivative, which will be constructed in this section. The Boubaker basis can be written as

where \(T_m(t) = [1,t,\ldots ,t^m]^\mathrm{T}\) and \(\varLambda =(\varUpsilon _{i,j})_{i,j=0}^m\) is an \((m+1)\times (m+1)\) matrix.

Considering the following terms

or

we can obtain the entries of the matrix \(\varLambda \) for \(i\ge 2, j= i-2\lfloor i/2\rfloor ,\ldots ,i,\) as:

From the definitions of \(B_0(t)\) and \(B_1(t)\), this formula is also valid for \(i=1\), and for \(i=0\) we have

By using Eqs. (5) and (6), we set

Using the following property of the Caputo fractional derivative,

we define

where \({\tilde{\varSigma }} =({\tilde{\varSigma }}_{i+1,j+1})\) is a diagonal \((m+1)\times (m+1)\) matrix with entries

and \( {\tilde{T}}=({\tilde{T}} _{i+1})_{(m+1)\times 1},\) with

Now, \({\tilde{T}}\) is expanded in terms of Boubaker polynomials as

where

The entries of the vector \({\hat{P}}_i\) can be calculated as

then we have

In Eq. (9), \(D_\alpha \) is the operational matrix of Caputo derivative.
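The construction of this section can be sketched numerically as follows. We assume \(\varPsi (t)=\varLambda T_m(t)\), \({\tilde{\varSigma }}=\mathrm{diag}(\Gamma (i+1)/\Gamma (i+1-\alpha ))\) for \(i\ge \lceil \alpha \rceil \) (zero otherwise), and we store the Boubaker coefficients of the best approximation of \(t^{i-\alpha }\) as row i of a matrix P, so that \({\tilde{T}}(t)\approx P\varPsi (t)\) and \(D_\alpha =\varLambda {\tilde{\varSigma }}P\) in this layout; the transpose convention used in Eq. (9) may differ. Function names are hypothetical.

```python
import math

import numpy as np


def boubaker_matrix(m):
    """Row i: coefficients of B_i(t) in ascending powers 1, t, ..., t^m."""
    lam = np.zeros((m + 1, m + 1))
    lam[0, 0] = 1.0
    if m >= 1:
        lam[1, 1] = 1.0
    if m >= 2:
        lam[2, 0], lam[2, 2] = 2.0, 1.0
    for i in range(3, m + 1):                  # B_i = t * B_{i-1} - B_{i-2}
        lam[i, 1:] = lam[i - 1, :-1]
        lam[i] -= lam[i - 2]
    return lam


def psi(lam, t):
    """Psi(t) = Lambda @ [1, t, ..., t^m]."""
    return lam @ (float(t) ** np.arange(lam.shape[0]))


def caputo_operational_matrix(m, alpha):
    """D_alpha = Lambda @ Sigma @ P, so that D^alpha Psi(t) ~ D_alpha @ Psi(t)."""
    lam = boubaker_matrix(m)
    k = np.arange(m + 1)
    gram = lam @ (1.0 / (k[:, None] + k[None, :] + 1)) @ lam.T   # D_{ij} = <B_i, B_j>
    sigma = np.zeros(m + 1)                    # stays zero for i < ceil(alpha)
    P = np.zeros((m + 1, m + 1))
    for i in range(math.ceil(alpha), m + 1):
        sigma[i] = math.gamma(i + 1) / math.gamma(i + 1 - alpha)
        moments = 1.0 / (i - alpha + k + 1)    # <t^(i-alpha), t^k>, k = 0..m
        P[i] = np.linalg.solve(gram, lam @ moments)   # Boubaker coefficients of t^(i-alpha)
    return lam @ np.diag(sigma) @ P, lam


# f(t) = B_1(t) = t, i.e. C = e_1; then D^alpha f ~ C^T D_alpha Psi(t).
D_half, lam = caputo_operational_matrix(5, 0.5)
C = np.zeros(6)
C[1] = 1.0
approx = C @ D_half @ psi(lam, 0.5)
exact = 0.5 ** 0.5 / math.gamma(1.5)           # D^{1/2} t = t^{1/2} / Gamma(3/2)
print(approx, exact)
```

For \(\alpha =1\) every \(t^{i-1}\) lies in the span of the basis, so the matrix is exact on polynomials of degree at most m; for fractional \(\alpha \) the only error comes from approximating \(t^{i-\alpha }\).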

4 Boubaker operational matrix of the Riemann–Liouville fractional integration

In this section, we derive the \((m+1)\times (m+1)\) Riemann–Liouville fractional operational matrix of integration \(F^{(\alpha )}\) expressed by:

By using Eq. (1) and linearity of Riemann–Liouville fractional integral, for \(i\ge 2,\) we have

where

Now we can expand \(t^{i-2r+\alpha }\) in terms of Boubaker polynomials as

with

and substituting into Eq. (11), we get

Now let

and rewrite Eq. (12) for \( i=2,\ldots ,m,\) as

For \(i=0,1\), we have

so, as in the previous case, \( t^{\alpha }\) and \( t^{\alpha +1}\) are expanded in terms of Boubaker polynomials as

Hence, we have
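A compact numerical sketch of \(F^{(\alpha )}\) is given below. Instead of expanding the terms \(t^{i-2r+\alpha }\) of Eq. (11) one by one, it applies \(I_t^{\alpha }t^{i}=\Gamma (i+1)/\Gamma (i+1+\alpha )\,t^{i+\alpha }\) to every monomial and expands each \(t^{i+\alpha }\) in the Boubaker basis, which gives the same matrix by linearity: \(F^{(\alpha )}=\varLambda \varSigma Q\), where row i of Q holds the coefficients of \(t^{i+\alpha }\). Function names are hypothetical.

```python
import math

import numpy as np


def boubaker_matrix(m):
    """Row i: coefficients of B_i(t) in ascending powers 1, t, ..., t^m."""
    lam = np.zeros((m + 1, m + 1))
    lam[0, 0] = 1.0
    if m >= 1:
        lam[1, 1] = 1.0
    if m >= 2:
        lam[2, 0], lam[2, 2] = 2.0, 1.0
    for i in range(3, m + 1):                  # B_i = t * B_{i-1} - B_{i-2}
        lam[i, 1:] = lam[i - 1, :-1]
        lam[i] -= lam[i - 2]
    return lam


def psi(lam, t):
    """Psi(t) = Lambda @ [1, t, ..., t^m]."""
    return lam @ (float(t) ** np.arange(lam.shape[0]))


def rl_integration_matrix(m, alpha):
    """F_alpha = Lambda @ Sigma @ Q, so that I^alpha Psi(t) ~ F_alpha @ Psi(t)."""
    lam = boubaker_matrix(m)
    k = np.arange(m + 1)
    gram = lam @ (1.0 / (k[:, None] + k[None, :] + 1)) @ lam.T   # <B_i, B_j>
    # I^alpha t^i = Gamma(i+1) / Gamma(i+1+alpha) * t^(i+alpha)
    sigma = np.array([math.gamma(i + 1) / math.gamma(i + 1 + alpha) for i in range(m + 1)])
    Q = np.zeros((m + 1, m + 1))
    for i in range(m + 1):
        moments = 1.0 / (i + alpha + k + 1)    # <t^(i+alpha), t^k>, k = 0..m
        Q[i] = np.linalg.solve(gram, lam @ moments)   # Boubaker coefficients of t^(i+alpha)
    return lam @ np.diag(sigma) @ Q, lam


F_half, lam = rl_integration_matrix(5, 0.5)
approx = F_half[0] @ psi(lam, 0.5)             # row 0: I^{1/2} B_0 = I^{1/2} 1
exact = 0.5 ** 0.5 / math.gamma(1.5)           # t^{1/2} / Gamma(3/2) at t = 0.5
print(approx, exact)
```

Row i of the result approximates \(I_t^{\alpha }B_i(t)\); with \(\alpha =1\), the rows for \(B_0\) and \(B_1\) are exact whenever \(m\ge 2\).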

5 The operational matrix of multiplication

The product of two Boubaker function vectors satisfies the following equation

where A is an arbitrary vector and \({\tilde{A}}\) is an \((m+1)\times (m+1)\) matrix. To calculate the entries of this matrix, we note that \(A^\mathrm{T}\varPsi (t)\varPsi (t)^\mathrm{T}\) can be approximated in terms of \(\varPsi (t)\) as follows

where

Using Eq. (13), we obtain

where \( a_i^k=\langle a_i,B_k\rangle ,d_{j,k}=\langle B_j,B_k\rangle \). So by considering \(A_i=[a_i^0,a_i^1,\ldots ,a_i^m]^\mathrm{T},\) we have

or

Therefore, the operational matrix of multiplication is obtained.
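The following sketch computes a multiplication matrix under the assumed convention \(\varPsi (t)\varPsi (t)^\mathrm{T}A\approx {\tilde{A}}\varPsi (t)\): with \(a(t)=A^\mathrm{T}\varPsi (t)\), row i of \({\tilde{A}}\) holds the Boubaker coefficients of the best approximation of \(a(t)B_i(t)\), obtained from the inner products \(\langle aB_i,B_k\rangle \) and the Gram matrix. The product polynomial is formed exactly in the monomial basis; the function names are hypothetical.

```python
import numpy as np


def boubaker_matrix(m):
    """Row i: coefficients of B_i(t) in ascending powers 1, t, ..., t^m."""
    lam = np.zeros((m + 1, m + 1))
    lam[0, 0] = 1.0
    if m >= 1:
        lam[1, 1] = 1.0
    if m >= 2:
        lam[2, 0], lam[2, 2] = 2.0, 1.0
    for i in range(3, m + 1):                  # B_i = t * B_{i-1} - B_{i-2}
        lam[i, 1:] = lam[i - 1, :-1]
        lam[i] -= lam[i - 2]
    return lam


def multiplication_matrix(A, lam):
    """A_tilde with Psi(t) Psi(t)^T A ~ A_tilde Psi(t), where a(t) = A^T Psi(t)."""
    m = lam.shape[0] - 1
    k = np.arange(m + 1)
    gram = lam @ (1.0 / (k[:, None] + k[None, :] + 1)) @ lam.T   # <B_i, B_j>
    s = np.arange(2 * m + 1)
    moments = 1.0 / (s[:, None] + k[None, :] + 1)   # <t^s, t^k>, shape (2m+1, m+1)
    a_mono = lam.T @ np.asarray(A, dtype=float)     # a(t) in ascending monomials
    A_tilde = np.zeros((m + 1, m + 1))
    for i in range(m + 1):
        prod = np.convolve(a_mono, lam[i])          # coefficients of a(t) B_i(t), degree <= 2m
        rhs = lam @ (moments.T @ prod)              # <a B_i, B_k> for k = 0..m
        A_tilde[i] = np.linalg.solve(gram, rhs)
    return A_tilde


lam4 = boubaker_matrix(4)
At = multiplication_matrix(np.eye(5)[1], lam4)      # a(t) = B_1(t) = t
print(At[1])                                        # t * B_1 = t^2 = B_2 - 2 B_0
```

Because \(a(t)B_i(t)\) has degree up to 2m, the top rows are genuine projections; rows whose product stays within degree m are reproduced exactly.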

6 Problem statement

In this section, we consider the following FOCPs,

where

are the state and control vectors, and A(t), B(t) and C(t) are matrices of appropriate dimensions, with B(t) a symmetric positive semidefinite matrix and A(t) a symmetric positive definite matrix. The problem is subject to the dynamical system

and conditions

where \(M_i,\ i=1,\ldots ,n,\) and \(N_j,\ j=1,\ldots ,m,\) are real numbers, at least one of which is nonzero. P(t), Q(t) and F(t) are continuous matrix functions of time as follows

and

and \(x_k, k=0,\ldots ,n-1,\) are specified constant vectors. The solution procedure using the operational matrices is discussed in the following subsections.

6.1 Application of operational matrix of derivative

We expand the state and control variables with respect to the Boubaker polynomials as:

where \(X_i\) and \(U_j\) are the following unknown coefficient vectors

so we derive

Suppose that \( {\hat{\varPsi }}(t) \) and \( {\hat{\varPsi }}^{*}(t) \) are the following \( l(m+1)\times l \) and \( q(m+1) \times q \) matrices, respectively

where \( I_{l} \) and \( I_{q} \) are \( l \times l \) and \( q\times q \) identity matrices, respectively, and \( \otimes \) denotes the Kronecker product [25]. So we can write

where X, U are vectors of order \( l(m+1) \times 1 \) and \( q(m+1) \times 1 \), respectively, given by

and

We also expand the other functions of t in the problem in terms of Boubaker polynomials as

where the vectors

can be obtained using Eq. (4).
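The Kronecker-product matrix \({\hat{\varPsi }}(t)=I_l\otimes \varPsi (t)\) introduced above simply places \(\varPsi (t)\) in each diagonal block, so that \({\hat{\varPsi }}(t)^\mathrm{T}X\) returns the l components \(X_k^\mathrm{T}\varPsi (t)\) at once. The sketch below illustrates this with made-up coefficient values (m = 2, l = 2); the helper name `psi_hat` is hypothetical.

```python
import numpy as np


def psi_hat(psi_t, l):
    """I_l (Kronecker) Psi(t): an l(m+1) x l block-diagonal matrix with Psi(t) in each block."""
    return np.kron(np.eye(l), np.asarray(psi_t, dtype=float).reshape(-1, 1))


# Hypothetical values: [B_0, B_1, B_2] at t = 0.3, using B_2(t) = t^2 + 2.
psi_t = np.array([1.0, 0.3, 2.09])
X1 = np.array([1.0, -1.0, 0.5])           # made-up coefficients of x_1(t)
X2 = np.array([0.0, 2.0, 1.0])            # made-up coefficients of x_2(t)
X = np.concatenate([X1, X2])              # X = [X_1^T, X_2^T]^T
x_t = psi_hat(psi_t, 2).T @ X             # [X_1^T Psi(t), X_2^T Psi(t)]
print(x_t)
```

The same helper with q in place of l gives \({\hat{\varPsi }}^{*}(t)\) for the control vector.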

Then, one can expand \(x_i^{(k)}(t)\) as

where \(D^1\) is the \((m+1)\times (m+1)\) operational matrix of the first-order derivative, which is obtained by choosing \(\alpha =1\) in \(D_\alpha \).

Now, substituting these approximations into the performance index, we have

which can be evaluated numerically by Gauss–Legendre quadrature.
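Once x(t) and u(t) are replaced by their expansions, the integrand of the performance index is an explicit function of t for given coefficient vectors, and an n-point Gauss–Legendre rule mapped from [-1,1] to [0,1] evaluates it. The sketch below uses hypothetical trial functions x(t) = 1 - t and u(t) = -1 with the integrand \(\frac{1}{2}(x^2+u^2)\); it is not one of the paper's problems.

```python
import numpy as np


def gauss_legendre_01(g, n=20):
    """Approximate int_0^1 g(t) dt with an n-point Gauss-Legendre rule mapped from [-1, 1]."""
    x, w = np.polynomial.legendre.leggauss(n)
    t = 0.5 * (x + 1.0)                     # nodes on [0, 1]
    return 0.5 * np.sum(w * g(t))           # the factor 0.5 is the Jacobian of the map


# Hypothetical trial functions x(t) = 1 - t, u(t) = -1, integrand (x^2 + u^2) / 2.
J = gauss_legendre_01(lambda t: 0.5 * ((1.0 - t) ** 2 + np.ones_like(t)))
print(J)   # exact value: (1/2)(1/3 + 1) = 2/3
```

An n-point rule integrates polynomials up to degree 2n - 1 exactly, so for polynomial integrands n only needs to exceed roughly the degree of \(x^2\) and \(u^2\).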

This functional is minimized subject to the system

or

Now using

we obtain the following system of algebraic equations

Also, we need to write the initial conditions

in terms of the Boubaker basis as

To solve the resulting optimization problem, let

where \(\mu _i\) and \(\lambda _k=[\lambda _{k0},\lambda _{k1},\ldots ,\lambda _{km}]^\mathrm{T}\) are unknown Lagrange multipliers, which are determined by Newton’s iterative method. The necessary conditions for \((X,U,\lambda _k,\mu _i)\) to be an extremum of \(J^\star \) are

As a result, u(t) and x(t) can be calculated by substituting the values obtained from (18) into Eq. (14).
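The necessary conditions form a nonlinear algebraic system in the unknown coefficients and multipliers. A generic sketch of Newton's method for such stationarity conditions is shown below: it solves \(\nabla J(z)+\nabla g(z)^\mathrm{T}\lambda =0\), \(g(z)=0\), with a forward-difference Jacobian (our simplification; an analytic Jacobian could be used instead). The function `newton_kkt` and the toy problem are hypothetical and only stand in for the larger system obtained from the Boubaker expansions.

```python
import numpy as np


def newton_kkt(grad_J, g, jac_g, z0, lam0, tol=1e-12, max_iter=50, h=1e-7):
    """Solve grad_J(z) + jac_g(z)^T lam = 0 and g(z) = 0 by Newton's method."""
    n = len(z0)
    w = np.concatenate([np.asarray(z0, float), np.asarray(lam0, float)])

    def residual(v):
        z, lam = v[:n], v[n:]
        return np.concatenate([grad_J(z) + jac_g(z).T @ lam, g(z)])

    for _ in range(max_iter):
        r = residual(w)
        if np.linalg.norm(r) < tol:
            break
        # Forward-difference approximation of the residual's Jacobian.
        jac = np.empty((w.size, w.size))
        for k in range(w.size):
            step = np.zeros_like(w)
            step[k] = h
            jac[:, k] = (residual(w + step) - r) / h
        w = w - np.linalg.solve(jac, r)
    return w[:n], w[n:]


# Toy problem (hypothetical): minimize z0^2 + z1^2 subject to z0 + z1 - 1 = 0.
z, lam = newton_kkt(
    grad_J=lambda z: 2.0 * z,
    g=lambda z: np.array([z[0] + z[1] - 1.0]),
    jac_g=lambda z: np.array([[1.0, 1.0]]),
    z0=[0.0, 0.0],
    lam0=[0.0],
)
print(z, lam)
```

For a quadratic functional with linear constraints the iteration converges in essentially one step; for the nonlinear systems of Sect. 8, a reasonable starting guess matters.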

6.2 Application of operational matrix of integration

In this subsection, the operational matrix of integration is applied to solve the FOCP stated above. For this purpose, we approximate

therefore we have

Now we write

and we have

also for \(j=1,\ldots ,n-1,\) and \(k=1,\ldots ,l,\) we have

and for \(j=1,\ldots ,m,\) and \(k=1,\ldots ,l,\) we have

By substituting the approximations of x(t) and u(t) into the performance index J, this functional can be calculated numerically as in the previous subsection. Also, using the approximations of this subsection, the system

can be written as

Now using

we obtain the following linear system of algebraic equations

To solve the resulting optimization problem, let

where \(\lambda _k=[\lambda _{k0},\lambda _{k1},\ldots ,\lambda _{km}]^\mathrm{T}\) are unknown Lagrange multipliers determined by an iterative method. The necessary conditions for \((X,U,\lambda _k)\) to be an extremum of \(J^\star \) are

As a result, u(t) and x(t) can be calculated by solving (20) with Newton’s iterative method and substituting the values obtained into Eq. (19).

7 Convergence analysis

In this section, we study the convergence of the method. First, we consider the operational matrix of derivative and show that \(X_k^\mathrm{T}D_\alpha \varPsi (t)\) in Eq. (15) tends to \(D_t^\alpha x_k(t)\) as m tends to infinity, for \(k=1,\ldots ,l\). For this purpose, note that

Since \(B_j^{(n)}(t)\) is a continuous function, we have:

We can write the above equation as

by Eqs. (2), (21) and (22), for \(n-1<\alpha \le n\), we obtain

or

Now recall from Eq. (9) that \(D_\alpha =\varLambda {\tilde{\varSigma }} P^\mathrm{T},\) where the ith column of \(P^\mathrm{T}\) is the coefficient vector of the Boubaker approximation of \(t^{i-\alpha }\) for \(i=1,\ldots ,m.\)

By the Weierstrass approximation theorem, the Boubaker approximation of every continuous function converges uniformly to that function, so, considering Eq. (8), we have

or

where \(\varPsi _n(t)=[B_0(t),B_1(t),\ldots ,B_n(t)]^\mathrm{T},\) so we can write,

Now we use Eqs. (6) and (7) and insert the following term into the above equation,

and obtain

Then, the following result can be achieved from Eqs. (23) and (24)

Finally, choosing \(n\ge m\) completes the proof. It should be noted that this result is valid for any \(\alpha _j\) and can be extended to the general form of the dynamical system presented in this article.

Next, we consider the operational matrix of integration: we find an upper bound for the error of the operational matrix of fractional integration \(F^{(\alpha )}\) and show that, as the number of Boubaker polynomials increases, \(F^{(\alpha )}\varPsi (t)\) tends to \(I_t^{\alpha }\varPsi (t)\). To obtain this result, we recall the following lemma and theorems.

Lemma 1

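Suppose \(f\in C^{m+1}[0,1]\) and \(Y=\mathrm{span}\lbrace B_0(t),B_1(t),\ldots ,B_m(t)\rbrace \).

Let \(y_0\) be the best approximation for f out of Y. Then

\[ \Vert f-y_0\Vert _2\le \frac{K}{(m+1)!\sqrt{2m+3}}, \]

where \(K=\max _{t\in [0,1]}\vert f^{(m+1)}(t)\vert .\)

Proof

The set \(\lbrace 1, t,\ldots , t^m\rbrace \) is a basis for the space \(S_m\) of polynomials of degree at most m, so \(S_m=Y\). We define

\[ y_1(t)=f(0)+tf'(0)+\frac{t^2}{2!}f''(0)+\cdots +\frac{t^m}{m!}f^{(m)}(0). \]

From Taylor's theorem, we have

\[ \vert f(t)-y_1(t)\vert =\left| f^{(m+1)}(\tau )\frac{t^{m+1}}{(m+1)!}\right| \le K\frac{t^{m+1}}{(m+1)!}, \]

where \(\tau \in (0,1)\). Since \(y_0\) is the best approximation to f(t) out of \(S_m\) and \(y_1(t)\in S_m\), the above inequality gives

\[ \Vert f-y_0\Vert _2^2\le \Vert f-y_1\Vert _2^2=\int _0^1\vert f(t)-y_1(t)\vert ^2\,\mathrm {d}t\le \frac{K^2}{((m+1)!)^2}\int _0^1 t^{2m+2}\,\mathrm {d}t=\frac{K^2}{((m+1)!)^2(2m+3)}. \]

Taking square roots completes the proof. \(\square \)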
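The bound of Lemma 1, \(\Vert f-y_0\Vert _2\le K/((m+1)!\sqrt{2m+3})\), can be checked numerically for a smooth test function. The sketch below takes \(f(t)=e^t\) (so \(K=e\)) as an illustrative assumption, uses the monomial basis (which spans the same space Y as \(B_0,\ldots ,B_m\)), and replaces the \(L^2[0,1]\) inner product by a weighted least-squares fit on Gauss–Legendre nodes. Function names are hypothetical.

```python
import math

import numpy as np


def best_l2_error(f, m, n_quad=40):
    """||f - y_0||_2 on [0, 1], with y_0 the best approximation of degree <= m.

    span{1, t, ..., t^m} equals span{B_0, ..., B_m}, so monomials are used here.
    """
    x, w = np.polynomial.legendre.leggauss(n_quad)
    t, w = 0.5 * (x + 1.0), 0.5 * w                 # Gauss nodes/weights on [0, 1]
    V = np.vander(t, m + 1, increasing=True)
    sw = np.sqrt(w)
    coef, *_ = np.linalg.lstsq(sw[:, None] * V, sw * f(t), rcond=None)
    r = f(t) - V @ coef                             # residual at the quadrature nodes
    return math.sqrt(np.sum(w * r ** 2))


def lemma1_bound(K, m):
    """K / ((m+1)! sqrt(2m+3)) from Lemma 1."""
    return K / (math.factorial(m + 1) * math.sqrt(2 * m + 3))


# f(t) = e^t has f^(m+1) = e^t, so K = e on [0, 1].
for m in range(1, 7):
    print(m, best_l2_error(np.exp, m), lemma1_bound(math.e, m))
```

The actual error is typically far below the bound, since the Taylor polynomial \(y_1\) used in the proof is a much poorer approximation than \(y_0\).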

Theorem 1

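Suppose that H is a Hilbert space, Y is a finite-dimensional, closed subspace of H and \(\lbrace y_1,y_2,\ldots ,y_n\rbrace \) is a basis for Y. Let x be an arbitrary element in H and \(y_0\) be the unique best approximation to x out of Y. Then [24]

\[ \Vert x-y_0\Vert _2^2=\frac{G(x,y_1,y_2,\ldots ,y_n)}{G(y_1,y_2,\ldots ,y_n)}, \]

where G denotes the Gram determinant,

\[ G(x,y_1,\ldots ,y_n)=\det \begin{pmatrix} \langle x,x\rangle & \langle x,y_1\rangle & \cdots & \langle x,y_n\rangle \\ \langle y_1,x\rangle & \langle y_1,y_1\rangle & \cdots & \langle y_1,y_n\rangle \\ \vdots & \vdots & \ddots & \vdots \\ \langle y_n,x\rangle & \langle y_n,y_1\rangle & \cdots & \langle y_n,y_n\rangle \end{pmatrix}. \]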
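Theorem 1 can be illustrated with \(x=t^n\) and \(Y=\mathrm{span}\lbrace 1,t,\ldots ,t^{n-1}\rbrace \) in \(L^2[0,1]\), which is the same space as \(\mathrm{span}\lbrace B_0,\ldots ,B_{n-1}\rbrace \); the Gram-determinant ratio does not depend on the chosen basis of Y, because a change of basis scales numerator and denominator by the same factor. The sketch compares the ratio with the known closed form \((n!)^4/(((2n)!)^2(2n+1))\) for this distance; function names are hypothetical.

```python
import math

import numpy as np


def monomial_gram(powers):
    """Gram matrix of t^p (p in powers) in L2[0,1]: <t^p, t^q> = 1/(p+q+1)."""
    p = np.asarray(powers, dtype=float)
    return 1.0 / (p[:, None] + p[None, :] + 1.0)


def distance_sq_via_gram(n):
    """||t^n - y_0||^2, y_0 the best approximation out of span{1, ..., t^(n-1)} (Theorem 1)."""
    basis = list(range(n))
    return np.linalg.det(monomial_gram([n] + basis)) / np.linalg.det(monomial_gram(basis))


for n in range(1, 5):
    closed_form = math.factorial(n) ** 4 / (math.factorial(2 * n) ** 2 * (2 * n + 1))
    print(n, distance_sq_via_gram(n), closed_form)
```

For n = 2 both sides equal 1/180, the squared norm of \(t^2-t+1/6\) on [0,1]. Gram determinants become tiny and ill-conditioned quickly, so this formula is a theoretical tool rather than a practical way to compute errors for large n.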

Theorem 2

Suppose that \(f\in L^2[0,1]\) and f(t) is approximated by \(\sum _{i=0}^{m}c_i B_i(t)\), then we have [24]

Now, using these theorems, we show that \(F^{(\alpha )}B_i(t)-I_t^{\alpha }B_i(t)\) tends to zero for \(i=0,1,\ldots ,m,\) as follows. For \(i=0,\)

For \(i=1\)

and for \(i\ge 2\)

We can now conclude from Theorem 2 and Eqs. (25), (26) and (27) that the difference between \(F^{(\alpha )}\varPsi (t)\) and \(I_t^{\alpha }\varPsi (t)\) tends to zero as the number of Boubaker basis functions tends to infinity, so this approximation can be used for each element of the state vector.

8 Numerical examples

We now present some examples of optimal control problems and solve them with the algorithms described above.

Example 1

Assume that we wish to minimize the functional

subject to dynamical system

and the condition

The exact optimal cost for \(\alpha =1\) is \(J=0.3807971,\) and the exact control variable is [26]

Here we solve this problem by using the Boubaker integration operational matrix for \(m=5\), as follows:

where

So we have

with \(d=[1,0,0,0,0,0]^\mathrm{T}\). Substituting these into the problem, we get

D is introduced in Eq. (4).

where \(\lambda _1\) is

Now we solve the following system using Newton’s iterative method

and, for example, for \(\alpha =1\) we obtain

and

The results for different values of \(\alpha \) are presented in Table 1; as \(\alpha \) approaches 1, the numerical values of J converge to the optimal value for \(\alpha =1\).

Also, Fig. 1 shows the exact values of the control variable and the numerical values of u(t) and x(t) for \(\alpha =0.5, 0.8, 0.9, 1\).

Fig. 1 Curves for \(\alpha =0.5, 0.8, 0.9,1\), Example 1. a Numerical and exact values of x(t). b Numerical and exact values of u(t)

This problem is solved in [26] for \(\alpha =1\) with a Chebyshev finite difference method, and the result obtained there for \(m=7\) is as accurate as ours for \(m=5\).

Example 2

We consider the FOCP

subject to

with the following exact solution in the case of \(\alpha =1,\)

The absolute errors of \(x_1(t), x_2(t)\) and u(t) for different values of m and \(\alpha =1\) were reported in [12] using the Legendre multiwavelet collocation method. We give the corresponding results obtained with the operational matrix of integration in Tables 2, 3 and 4: our results for \(x_2(t)\) are better, those for \(x_1(t)\) are as accurate as the Legendre multiwavelet results, and those for u(t) are more accurate almost everywhere. Note that our method uses \(m+1\) basis polynomials, whereas the multiwavelet method uses \(m+1\) basis functions on each subinterval, i.e., \(m+1\) times the number of subintervals.

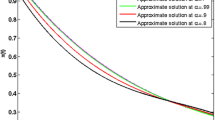

Tables 2, 3 and 4 show that as the number of Boubaker basis functions increases, the absolute errors decrease, and therefore the numerical solution converges to the exact solution. In Fig. 2, we show the curves of the unknown functions of this example for different values of \(\alpha \), to show that the fractional-order solutions converge to the integer-order one.

Fig. 2 Curves for \(\alpha =0.5, 0.8, 0.9,1\), Example 2. a Numerical and exact values of \(x_1(t)\). b Numerical and exact values of \(x_2(t)\). c Numerical and exact values of u(t)

Also, Table 5 shows the values of J for different \(\alpha \), which converge as \(\alpha \) approaches 1.

Example 3

The next example under consideration is as follows [27]:

subject to dynamical system

and the conditions

with the exact solution

for \(\alpha =1,\) and in this case \(J=6.149258\).

Using the operational matrix of the fractional derivative with \(m=5\), we obtain \(J=6.149258977,\) as follows; for comparison, the best result obtained in [27] is \(J=6.149061,\) with 32 nodes.

where

So we have

Substituting these into the problem, we get

D is introduced in Eq. (4).

where \(\lambda _2,\lambda _3\) are real numbers and \(\lambda _1\) is

Now we solve the following system

and for \(\alpha =1,\) we obtain

and

In Fig. 3, the exact and numerical values of state and control functions are shown.

Fig. 3 Curves for \(\alpha =0.5, 0.8, 0.9, 1\), Example 3. a Numerical and exact values of x(t). b Numerical and exact values of u(t)

Example 4

Now consider the following time-varying problem [18]

subject to

Table 6 compares the values of J obtained via the Boubaker operational matrix of the fractional derivative with the results reported in [18]. Our method uses fewer basis functions and less computation than [18]. In addition, the values of J obtained by our method for different \(\alpha \) show better convergence.

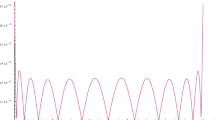

Figure 4 demonstrates the values of state and control functions for different values of \(\alpha \).

Fig. 4 Curves for \(\alpha =0.5, 0.8, 0.9,1\), Example 4. a Numerical and exact values of x(t). b Numerical and exact values of u(t)

9 Conclusion

In this paper, we have presented, for the first time, the Boubaker operational matrices of the Caputo fractional derivative and the Riemann–Liouville fractional integration. A general formulation for the operational matrix of multiplication has also been derived, and these matrices have been used to obtain approximate numerical solutions of fractional optimal control problems. The problem is solved directly from the performance index, without solving the fractional Hamiltonian equations: using these matrices, the fractional optimal control problem is reduced to a system of algebraic equations. Several numerical examples, solved with Wolfram Mathematica 10, show the validity of the method. It is also shown that the method converges as the number of Boubaker basis functions increases.

References

[1] Boubaker, K.: On modified Boubaker polynomials: some differential and analytical properties of the new polynomial issued from an attempt for solving bi-varied heat equation. Trends Appl. Sci. Res. 2, 540–544 (2007)

[2] Karem Ben Mahmoud, B.: Temperature 3D profiling in cryogenic cylindrical devices using Boubaker polynomials expansion scheme (BPES). Cryogenics 49, 217–220 (2009)

[3] Dada, M., Awojoyogbe, O.B., Boubaker, K.: Heat transfer spray model: an improved theoretical thermal time-response to uniform layers deposit using Bessel and Boubaker polynomials. Curr. Appl. Phys. 9, 622–624 (2009)

[4] Kumar, A.S.: An analytical solution to applied mathematics-related Love’s equation using the Boubaker polynomials expansion scheme. J. Frankl. Inst. 347(9), 1755–1761 (2010)

[5] Boubaker, K.: Boubaker polynomials expansion scheme (BPES) solution to Boltzmann diffusion equation in the case of strongly anisotropic neutral particles forward–backward scattering. Ann. Nucl. Energy 38, 1715–1717 (2011)

[6] Kafash, A., Delavarkhalafi, A., Karbassi, A.S., Boubaker, K.: A numerical approach for solving optimal control problems using the Boubaker polynomials expansion scheme. J. Interpolat. Approx. Sci. Comput. 2014, 1–18 (2014). doi:10.5899/2014/jiasc-00033

[7] Agrawal, O.P.: A general formulation and solution scheme for fractional optimal control problem. Nonlinear Dyn. 38, 323–337 (2004)

[8] Agrawal, O.P.: A Hamiltonian formulation and a direct numerical scheme for fractional optimal control problem. J. Vib. Control 13, 1269–1281 (2007)

[9] Agrawal, O.P.: A formulation and numerical scheme for fractional optimal control problems. J. Vib. Control 14, 1291–1299 (2008)

[10] Baleanu, D., Defterli, O., Agrawal, O.P.: A central difference numerical scheme for fractional optimal control problems. J. Vib. Control 15, 583–597 (2009)

[11] Tricaud, C., Chen, Y.Q.: An approximation method for numerically solving fractional order optimal control problems of general form. Comput. Math. Appl. 59, 1644–1655 (2010)

[12] Yousefi, S.A., Lotfi, A., Dehghan, M.: The use of Legendre multiwavelet collocation method for solving the fractional optimal control problems. J. Vib. Control 13, 2059–2065 (2011)

[13] Lotfi, A., Yousefi, S.A., Dehghan, M.: Numerical solution of a class of fractional optimal control problems via the Legendre orthonormal basis combined with the operational matrix and the Gauss quadrature rule. J. Comput. Appl. Math. 250, 143–160 (2013)

[14] Agrawal, O.P.: A quadratic numerical scheme for fractional optimal control problems. ASME J. Dyn. Syst. Meas. Control. 130(011010–1), 011010–6 (2008)

[15] Sweilam, N.H., Alajmi, T.M.: Legendre spectral collocation method for solving some type of fractional optimal control problem. J. Adv. Res. 6, 393–403 (2015)

[16] Almeida, R., Torres, D.F.M.: A discrete method to solve fractional optimal control problems. Nonlinear Dyn. 80(4), 1811–1816 (2015)

[17] Lotfi, A., Dehghan, M., Yousefi, S.A.: A numerical technique for solving fractional optimal control problems. Comput. Math. Appl. 62, 1055–1067 (2011)

[18] Keshavarz, E., Ordokhani, Y., Razzaghi, M.: A numerical solution for fractional optimal control problems via Bernoulli polynomials. J. Vib. Control 22(18), 3889–3903 (2015)

[19] Ding, F., Ma, J., Xiao, Y.: Newton iterative identification for a class of output nonlinear systems with moving average noises. Nonlinear Dyn. 74, 21–30 (2013)

[20] Mao, Y., Ding, F.: Multi-innovation stochastic gradient identification for Hammerstein controlled autoregressive autoregressive systems based on the filtering technique. Nonlinear Dyn. 79, 1745–1755 (2015)

[21] Wang, Y., Ding, F.: Recursive least squares algorithm and gradient algorithm for Hammerstein-Wiener systems using the data filtering. doi:10.1007/s11071-015-2548-5

[22] Xu, L., Chen, L., Xiong, W.: Parameter estimation and controller design for dynamic systems from the step responses based on the Newton iteration. Nonlinear Dyn. 79, 2155–2163 (2015)

[23] Podlubny, I.: Fractional Differential Equations. Academic Press, New York (1999)

[24] Kreyszig, E.: Introductory Functional Analysis with Applications. Wiley, New York (1978)

[25] Lancaster, P.: Theory of Matrices. Academic Press, New York (1969)

[26] El-Kady, M.: A Chebyshev finite difference method for solving a class of optimal control problems. Int. J. Comput. Math. 7, 883–895 (2003)

[27] Hosseinpour, S., Nazemi, A.: Solving fractional optimal control problems with fixed or free final states by Haar wavelet collocation method. IMA J. Math. Control Inf. 33(2), 543–561 (2016)

Acknowledgements

We would like to thank the four referees for their valuable comments and helpful suggestions to improve the earlier version of this article.


Cite this article

Rabiei, K., Ordokhani, Y. & Babolian, E. The Boubaker polynomials and their application to solve fractional optimal control problems. Nonlinear Dyn 88, 1013–1026 (2017). https://doi.org/10.1007/s11071-016-3291-2


DOI: https://doi.org/10.1007/s11071-016-3291-2