Abstract

Identification of nonlinear Hammerstein models has received much attention due to its ability to describe a wide variety of nonlinear systems. This paper considers the problem of the parameter estimation of the ARMAX models for the Hammerstein systems. A novel nonlinear recursive instrumental variables method, which is simple and easy for practical applications, is proposed to deal with the problem. In order to make the instrumental variables uncorrelated with the colored noise and to obtain better identification effect, three approaches for choosing the instrumental variables usually used in the linear RIV method are introduced. Furthermore, the procedure of the nonlinear RIV method and its property of the mean square convergence of the nonlinear RIV method are rigorously derived. Finally, an example is carried out as illustration, where the ARMAX-RLS method is compared as the basis, and the results show that the nonlinear RIV method is superior to ARMAX-RLS method in terms of identification accuracy and convergence speed under colored noise, which reveals the effectiveness of the proposed method.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Most real-life systems can be modeled quite well with a linear model, but in practice, almost all processes are nonlinear if they are considered not merely in a small vicinity of their working points, so better results can be obtained by using a nonlinear model. As is known, nonlinear models are commonly used to describe the behavior of many industrial processes because of the increased accuracy and performances of the system behavior, which has drawn great attention of many researchers. And the nonlinear time series analysis is usually a very useful and widely used tool to study the observed nonlinear behavior [1–5]. Among the nonlinear models, the so-called block-oriented models such as the Hammerstein model, Wiener model and Hammerstein–Wiener model have turned out to be very useful for the estimation of nonlinear systems. A great deal of work on the identification of these models has been proposed [6–20]. The Hammerstein model, which consists of a static nonlinear block followed by a linear time-invariant subsystem, is the focus of this paper.

The identification of Hammerstein systems has been extensively studied in the recent years, so there exist a large number of studies on this topic in the literature, which can be roughly classified into several categories, namely the over-parameterization method [21–24], the nonparametric method [25–28], the iterative method [29–32], the gradient algorithm [33–35], the kernel machine and space projection method [36–38] and the recursive algorithm [23, 39–42]. Recently, Ding et al. [43] put forward decomposition-based Newton iterative method for the identification of a Hammerstein nonlinear FIR system with ARMA noise. Li [44] developed an algorithm based on Newton iteration for the identification of Hammerstein model. Hong and Mitchell [45] used the Bezier–Bernstein approximation of the nonlinear static function to identify the model. Han and Raymond [46] extended the rank minimization approach to Hammerstein system identification by using reconstructing the models. And Zhang et al. [47] proposed a hierarchical gradient-based iterative parameter estimation algorithm for multivariable output error moving average systems. Chen and Chen [41] designed a weighted least squares (WLSs)-based adaptive tracker for a class of Hammerstein systems. Vanbeylen et al. [48] showed a method about the identification of discrete-time Hammerstein systems from output measurements only. Ding et al. [35] proposed a modified stochastic gradient-based parameter estimation algorithm for dual-rate sampled-data systems. Sun and Liu [49] proposed a novel APSO-aided maximum-likelihood identification method for Hammerstein systems. Furthermore, some new nonparametric identification methods are presented, such as the using of neural network to model the static nonlinear part [50, 51] and identification without explicit parameterization of nonlinearity driven by piecewise constant inputs [52].

Ding and Chen [23] presented an excellent recursive least squares (RLSs) method to deal with the Hammerstein models, which has good identification accuracy and convergence rate. And many researchers take Ding’s method and models as a basis to compare with their methods [23, 49, 53]. However, Ding’s [23] RLS approach is not applicable, when the noise model is unknown. And better identification accuracy and faster convergence rate still remain the targets both in theory and applications.

In this paper, the nonlinear recursive instrumental variables (RIVs) method is proposed to the identification of a classic Hammerstein model. Better identification results can be obtained in comparison with RLS method, and the method can be applied when the noise structure is unknown, which has shown that the proposed method is more flexible than RLS method. Furthermore, the property of the mean square convergence of the nonlinear RIV method is rigorously proved.

This paper is organized as follows: Sect. 2 describes the problem formulation based on Hammerstein models. Section 3 depicts the instrumental variable method for the Hammerstein models and three approaches to choose instrumental variables in linear RIV method. Section 4 presents the derivation of the nonlinear RIV method and proves its property of the mean square convergence, respectively. Section 5 illustrates the proposed approach with a classic model based on Hammerstein systems, where the nonlinear RIV method and the ARMAX-RLS method reported in the open literature [23] are compared in detail to show the effectiveness of the proposed method, and finally some conclusions from the above analysis are achieved in Sect. 6.

2 Problem statement

In a deterministic setting, the linear part of the system is characterized by a rational transfer function, and the system output \(y(t)\) is exactly observed. However, in practice, the system itself may be random and the observations may be corrupted by noise. So, it is of practical importance to consider stochastic Hammerstein systems as shown in Fig. 1, which is composed of a nonlinear memoryless block \(f(\cdot )\) followed by a linear subsystem. \(u(t)\) is the system input, \(y(t)\) is the system output and \(v(t)\) is a white noise sequence, respectively. The true output \(x(t)\), colored noise \(e(t)\) and the inner variable \(\overline{u} (t)\), which is the output of the nonlinear block, are unmeasurable. \(N(z)\) is the transfer function of the noise model, and \(G(z)\) is the transfer function of the linear part in the model [49].

The linear dynamical block in Fig. 1 is an ARMAX subsystem, the nonlinear model in Fig. 1 has the following input–output relationship [23, 33]:

This can be transformed as

where \(v(t)\) is a white noise sequence with the normal distribution \(v(t)\sim N(0,\sigma _v^2 )\) for the nonparametric \(f(\cdot )\), the value \(f(u)\) is estimated for any fixed \(u\). In the parametric case, \(f(\cdot )\) either is expressed by a linear combination of known basis functions with unknown coefficients, or is a piecewise linear function with unknown joints and slopes, and hence, identification of the nonlinear block in this case is equivalent to estimating unknown parameters. The nonlinear part is considered as a known basis \((\omega _1 ,\omega _2 ,\ldots ,\omega _{n_c })\) with coefficients \((c_1 ,c_2 ,\ldots ,c_{n_c } )\) in this paper:

Notice that the parameterization is actually not unique. In order to get a unique parameter estimate, without loss of generality, one of the gains of \(f(\cdot )\) must be fixed. Here, the first coefficient of the nonlinear function is assumed to equal 1, i. e., \(c_1 =1\) [12, 54].

Substitute Eq. (8) into Eq. (7) gives

This paper is aimed at presenting the nonlinear RIV method to get the estimation of the unknown parameters \(a_i (i=1,2,\ldots ,n_a ), b_j (j=1,2,\ldots ,n_b )\) and \(c_k (k=1,2,\ldots ,n_c )\) of the nonlinear ARMAX model by using the input and output data \(\{u(t)\},\{y(t)\}\) and to find the property of the algorithm.

3 Instrumental variables

Define the parameter vector, referring to [23, 33], as

Equation (9) can be written as

or

where

The least squares estimation formula is as follows:

where

The Frechet Theorem [55] is introduced here.

Theorem 1

Assume that \(\{x(t)\}\) is a sequence of random variables which converges to a constant \(x_0 \), then, there are

or

where \(f(\cdot )\) is continuous scalar function.

Theorem 2

Assume that matrices \({\varvec{A}}_t \) and \({\varvec{B}}_t\) exist the probability limit, and the dimension of them does not change with the increase of \(t\), apply Theorem 1, it gives

Apply the two convergence theorems above, there are

If \(e(t)\) is white noise, \(E\{\mathbf{h}(t)e(t)\}=\mathbf{0}\), thus \({\hat{\varvec{\uptheta }}}_{LS} \mathop {\longrightarrow }\limits ^{W.P.1}_{L\rightarrow \infty } \varvec{\uptheta }\),then the unbiased estimation can be obtained.

If \(e(t)\) is not white noise, \(E\{\mathbf{h}(t)e(t)\}\ne \mathbf{0}\). In order to get the unbiased estimation of the parameters, or to say \({\hat{\varvec{\uptheta }}}_{LS} \mathop {\longrightarrow }\limits ^{W.P.1}_{L\rightarrow \infty } \varvec{\uptheta }\) holds, an instrumental matrix is defined as

Two conditions are given as follows:

-

(a)

\(\frac{1}{L}\mathbf{H}_L^{*T} \mathbf{H}_L \mathop {\longrightarrow }\limits \limits ^{W.P.1}_{L\rightarrow \infty } E\{\mathbf{h}^{*}(t)\mathbf{h}^{T}(t)\}\) is a nonsingular matrix;

-

(b)

\(\mathbf{h}^{*}(t)\) is independent of \(e(t)\), which means \(\frac{1}{L}\mathbf{H}_L^{*T} \mathbf{e}_L \mathop {\longrightarrow }\limits ^{W.P.1}_{L\rightarrow \infty }\) \(E\{\mathbf{h}^{*}(t)e(t)\}=\mathbf{0}\), where \(\mathbf{h}^{*}(t)\) are the instrumental variables.

If the instrumental variables meet the two conditions above, it gives

where \({\hat{\varvec{\uptheta }}}_\mathrm{IV} \) is the parameter estimation with instrumental variables method.

It can be seen that if the chosen instrumental variables are suitable to satisfy the two conditions above, then unbiased and consistent parameter estimation can be obtained. So, how to choose suitable instrumental variables is crucial to instrumental variables method, the following three approaches, usually used in the linear RIV method [56], are introduced first to choose instrumental variables.

\(m(t)\) is noted as the instrumental variables in Fig. 2. \(\mathbf{h}^{*}(t)\) is given as follows:

-

1.

Adaptive filtering method

When \(u(t)\) is persistent excitation signal, then \(E\{\mathbf{h}^{*}(t)\mathbf{h}^{T}(t)\}\) is nonsingular. Since \(m(t)\) is only related to \(u(t)\), that is to say \(\mathbf{h}^{*}(t)\) must be uncorrelated with the noise, hence \(E\{\mathbf{h}^{*}(t)e(t)\}=0\).

The instrumental model can be regarded as an adaptive filter. The instrumental variables can be obtained by the following method:

or

where \(\alpha \in (0.01,0.1), d\in (0,10),{\hat{\varvec{\uptheta }}}(t)\) is the parameter estimation at moment \(t\) with instrumental variable method, it can be calculated by recursive method. If the two conditions (a) and (b) are satisfied, then Eqs. (23) and (24) are equivalent.

-

2.

Tally principle

If noise \(e(t)\) can be regarded as following model:

where \(w(t)\) is uncorrelated stochastic noise with zero mean value and

The instrumental variables can be chosen as

-

3.

Pure lag method

The instrumental model can be regarded as pure lag segment. The instrumental variables can be chosen as follows:

where \(n_b \) is the order of polynomial \(B(z^{-1})\). It is evident that if \(u(t)\) is persistent excitation signal and is unrelated to \(e(t)\), the instrumental variables will meet the two conditions above.

Detailed description about the three methods to choose instrumental variables can be found in [56].

4 The nonlinear recursive instrumental variables method

4.1 The nonlinear RIV algorithm

Following gives the derivation of the nonlinear RIV algorithm.

Replace \(\mathbf{h}(t)\) by \(\mathbf{h}^{*}(t)\) in Eq. (14) gives

Define

Then,

Substitute Eqs. (30)–(31) into Eq. (28) gives

Apply the inversion formula of matrix \(({\varvec{A}}+{\varvec{BC}})^{-1}={\varvec{A}}^{-1}-{\varvec{A}}^{-1}{\varvec{B}}({\varvec{I}}+ {\varvec{CA}}^{-1}{\varvec{B}})^{-1}{\varvec{CA}}^{-1}\) to (30) yields

Substitute Eq. (33) into Eq. (29) gives

And finally the nonlinear RIV algorithm is obtained as follows:

where \(\mathbf{h}^{*}(t)\) are the instrumental variables shown in Eq. (20)–(21), the choice of \(\mathbf{h}^{*}(t)\) can use the three methods described above. If the instrumental variables are in adaptive filtering form, it needs least squares method to calculate a few steps to obtain the initial parameter estimation \({\hat{\varvec{\uptheta }}}(t)\) as the initial state of the nonlinear RIV method, to initialize the algorithm, \(p_0 \) is taken as a large positive real number, e.g., \(p_0 =10^{6}{\varvec{I}}\), and \({\hat{\varvec{\uptheta }}}(0)=10^{-6}{\varvec{I}}_{n_0 \times 1} \).

4.2 Mean square convergence of the nonlinear RIV method

Firstly, two lemmas [57] are introduced.

Lemma 1

Assume the eigenvalues of matrix \(A\in R^{n\times n}\) are \(\lambda _i [A], i=1,2,\ldots ,n\), then the eigenvalues of matrix \(A+s{\varvec{I}}\) are \(\lambda _i [A+s{\varvec{I}}]=\lambda _i [A]+s,i=1,2,\ldots ,n, s\) is a constant

Lemma 2

Assume the eigenvalues of matrix \(A\in R^{n\times n}\) are \(\lambda _i [A], i=1,2,\ldots ,n, \mathop {\min }\nolimits _i \{\lambda _i [A]\}=\alpha ,\) then \(A^{T}A\ge \alpha ^{2}{\varvec{I}}\), \((A+s{\varvec{I}})^{T}(A+s{\varvec{I}})\ge (\alpha -s)^{2}{\varvec{I}}\), where \(0<s<\alpha \).

Theorem 3

Assume that \(\{e(t)\}\) is a random noise vector sequence with zero mean and bounded variance, namely \(E[||e(t)||^{2}]=\sigma _e^2 (t)\le \sigma ^{2}<\infty \); the input vectors \(\{u(t)\}\) and the instrumental vectors \(\{\mathbf{h}^{*}(t)\}\) are uncorrelated with \(\{e(t)\}\) and the system meets the weak persistence of excitation, which is to ensure that the matrix \(\frac{1}{t}\mathbf{H}_t^*\mathbf{H}_t \) is nonsingular, that is

Define \(||X||^{2}=tr[XX^{T}]\).

Assume \(E[||\hat{\varvec{\uptheta }}(0)-\varvec{\uptheta } ||^{2}]\le M_0 <\infty \), and \(\hat{\varvec{\uptheta }}(0)\) is uncorrelated with \(\{e(t)\}\), then the parameter estimation error using the nonlinear RIV method converges to zero at the rate of \({O}(\frac{1}{\sqrt{t}})\), that is

where \(n\) is the rank of matrix \(\mathbf{H}_t^*{\varvec{P}}^{T}(t){\varvec{P}}(t)\mathbf{H}_t^{*^{T}}, \beta \) is the largest eigenvalue of matrix \(\mathbf{H}_t^*{\varvec{P}}^{T}(t){\varvec{P}}(t)\mathbf{H}_t^{*^{T}}, {\varvec{P}}(0)=P_0 =\frac{1}{a}I, 0<a<1\), say \(a=10^{-6}\).

Refer to [58, 59], the following gives the proof of Theorem 3.

Define

Combine Eqs. (30) and (35) gives

Multiplying both sides of the Eq. (30) by \({\varvec{P}}^{-1}(t)\) gives

Substituting Eq. (41) in Eq. (40) gives

where \(\mathbf{H}_t^{*^{T}} e_t =\sum \nolimits _{i=1}^t {\mathbf{h}^{*}(t)e^{T}(t)} \), \({\varvec{P}}^{-1}(t)=\mathbf{H}_t^*\mathbf{H}_t +{\varvec{P}}^{-1}(0)\), \(\gamma _1 (t)={\varvec{P}}(t){\varvec{P}}^{-1}(0)\tilde{\varvec{\uptheta } }(0)\), \(\gamma _2 (t)={\varvec{P}}(t)\mathbf{H}_t^{*^{T}} e_t \).

Apply Lemmas 1 and 2, then for any \(t\rightarrow \infty \), it gives \({\varvec{P}}^{-T}(t)=(\mathbf{H}_t^*\mathbf{H}_t )^{T}+{\varvec{P}}^{-1}(0){\varvec{I}}\).

Define

Then,

Thus, it gives

Substitute Eq. (46)–(47) into Eq. (42)

Then the mean square convergence of the nonlinear RIV method is completely proved.

From what is discussed above, it can be seen that \(e(t)\) is colored noise, but as long as the system is persistently excited, and the noise \(e(t)\) is zero mean and bounded variance and uncorrelated with \(\hat{\varvec{\uptheta }}(0)\), which means condition \(A_1\) and \(A_2 \) are satisfied, then the nonlinear RIV method has the property of mean square convergence for identification of Hammerstein models, that is to say the parameter estimation error \(\tilde{\varvec{\uptheta }}(t)\) using the proposed method converges to zero at the rate of \({O}(\frac{1}{\sqrt{t}})\), which guarantees that the nonlinear RIV method has good capability against colored noise.

5 Example

Due to the commonly recognized effectiveness of Ding’s RLS algorithm, Ding’s example [23] is hereby taken as the model to demonstrate the improved identification performance of the new algorithm. This is a Hammerstein ARMAX system as follows:

\(\varvec{\uptheta } =[a_1 ,a_2 ,b_1 ,b_2 ,c_2 ,c_3 ]=[-1.60,0.8\), \(0.85,0.65, 0.5,0,25]\) are the parameters to be identified. \(\{u(t)\}\) is taken as a persistent excitation signal sequence with zero mean and unit variance, and \(\{v(t)\}\) as a white noise sequence with zero mean and constant variance \(\sigma _v^2 \). The noise-to-signal-ratio (NSR) is defined by the standard deviation of the ratio of input-free output and noise-free output, namely \(\mathrm{NSR}=\sqrt{\frac{\hbox {var}[v(t)]}{\hbox {var}[u(t)]}}\times 100\,\% \).

When \(\sigma _v^2 =0.3^{2}, \sigma _v^2 =0.5^{2}\) and \(\sigma _v^2 =0.7^{2}\), the corresponding NSRs are 16.34, 25.45 and 35.70 %, respectively. The accuracy of identification of the proposed models is assessed by comparing overall output response of estimated model and the true output, and also the relative parameter estimation error, which is

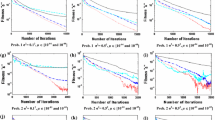

For the model discussed above, the nonlinear RIV method is chosen to be compared with ARMAX-RLS [23] under three NSRs mentioned above and sampling data length 3,000 to get the results. The comparison of the relative parameter estimation errors between real parameters and identified parameters of the nonlinear RIV and ARMAX-RLS methods with NSR 16.34, 25.45 and 37.50 % is shown in Figs. 3, 4 and 5 (dashed line for the errors by RLS method and solid line for the errors by the nonlinear RIV method), respectively. And Tables 1, 3 and 5 are the records of the parameter estimation and the related relative error of nonlinear RIV method with NSR 16.34, 25.45 and 37.50 %, respectively. And Tables 2, 4 and 6 keep record of the parameter estimation and the related relative error of ARMAX-RLS method with NSR 16.34, 25.45 and 37.50 %, respectively.

Figure 3 shows the changing process of relative error between nonlinear RIV method and ARMAX-RLS method when NSR is 16.34 %. And the relative error is obtained through Eq. (54). When the sampling time is between 0 and 400, the identification error of ARMAX-RLS method is smaller than that of the nonlinear RIV method. However, the identification error of nonlinear RIV method shows decline trend and is lower than that of the ARMAX-RLS method afterward. This has shown that the advantage of the proposed method may not be obvious at first, but with the increase of the sampling time, the advantage becomes more and more obvious.

Tables 1 and 2 have shown that the concrete values of the parameters with 16.34 %. \(\theta _1 ,\theta _2 ,\theta _3 ,\theta _4 \), which can be directly obtained through Eqs. (35)–(37), represent the parameter \(a_1 ,a_2 ,b_1 ,b_2 \), respectively. \(\theta _5 ,\theta _6 ,\theta _7 ,\theta _8 \) are not the parameters of the real system but obtained by linear transform and they represent \(b_1 c_2 ,b_2 c_2 ,b_1 c_3 ,b_2 c_3 \), respectively. And \(c_2 ,c_3 \) can be obtained by the following method:

After iteration of 3,000, the final error of the nonlinear RIV method is 2.54 %, the final error of ARMAX-RLS method is 3.32 %.

The final parameter identification result is:

Figure 4 depicts the changing process of relative error between predicted output and real output by the nonlinear RIV method and ARMAX-RLS method with 25.45 % NSR. From Fig. 4, it can be seen that the advantage of the nonlinear RIV method is still significant and the error curve of this method is sharply declining when the sampling time is about 300 and keeps under the error curve of the ARMAX-RLS method afterward.

Tables 3 and 4 have shown that the concrete values of the parameters with 25.45 %. After iteration of 3,000, the final error of the nonlinear RIV method is 3.73 %, the final error of ARMAX-RLS method is 5.51 %.

Based on Eq. (55), the final parameter identification result is:

Figure 5 depicts the changing process of relative error between predicted output and real output by nonlinear RIV method and ARMAX-RLS method when NSR is 35.70 %. From Fig. 5, it can be shown that the advantage of the proposed method is not significant at first. The overlapping of the two curves when the sampling time is between 0 and 700 indicates that the two methods show their advantages at different sampling times, and when the sampling time is over 700, the error curve of the nonlinear RIV method keeps under the error curve of the ARMAX-RLS method all through. The identification results of the nonlinear RIV method are all better than those of ARMAX-RLS method with the three different NSRs.

Tables 5 and 6 have shown that the concrete values of the parameters with 35.70 %. After iteration of 3,000, the final error of the nonlinear RIV method is 4.25 %, the final error of ARMAX-RLS method is 6.17 %.

The final parameter identification result is:

From the identification results above, the following conclusions can be obtained:

-

(1)

The proposed nonlinear RIV method for Hammerstein system identification is effective, and the identification errors with three NSRs are lower than that of ARMAX-RLS method after sampling time about 400, 300 and 700, respectively. From Figs. 3, 4 and 5, we get the identification error curve of the nonlinear RIV method is basically under that of ARMAX-RLS method in the whole identification course. When NSR is 16.34 %, the identification error of the nonlinear RIV method is lower than that of ARMAX-RLS by 30.7 % after 3,000 iteration, when NSR is 25.45 %, the identification error of the proposed method is lower than that of ARMAX-RLS by 47.7 %. When NSR is 35.70 %, the identification error of the nonlinear RIV is lower than that of ARMAX-RLS by 45.2 %. So, the larger NSR is, the better advantage of the proposed method over ARMAX-RLS method is.

-

(2)

With the increase of the NSR, the deviation of identification error of predicted output with the real output is enlarging, which means the proposed method is applicable with small noise signals. When the noise signal is getting larger, the identification results are getting worse or the system may not be identified. But the nonlinear RIV method is superior to ARMAX-RLS method with noise interference.

-

(3)

As is shown in the error curves, at the beginning of identification, the parameter identification results converge very quickly; the cutoff point appears almost at 1,000 iterations. After 1,000 iterations, with the increase of iteration number, the speed of convergence is getting slower, and there exists some steady-state error between identification parameters and real parameters under different NSRs.

6 Conclusions

A nonlinear recursive instrumental variable method in identification of nonlinear system is obtained. It is applied to Hammerstein ARMAX model under different NSRs and is compared with ARMAX-RLS method in detail. The results show that the nonlinear RIV method is not only an effective method but also superior to ARMAX-RLS method in terms of identification accuracy and convergence speed especially under colored noise. ARMAX-RLS method is not applicable when noise model is unknown, while the proposed method is far more appropriate. In other words, the nonlinear RIV method is more flexible in identification of nonlinear systems with colored noise.

With the increase of the NSR, the deviation of identification error of predicted output with the real output is enlarging, which means the proposed method is applicable with small noise signals. When the noise signal is getting larger, the identification results are getting worse or the system may not be identified. But the nonlinear RIV method is superior to ARMAX-RLS method with noise interference.

The procedure of the proposed method and its mean square convergence for identification of Hammerstein models are also established, and its convergence analysis is worth further research.

Abbreviations

- \(y(t)\) :

-

System output

- \(x(t)\) :

-

True output, not corrupted by noise

- \(v(t)\) :

-

White noise

- \(e(t)\) :

-

Colored noise

- \(\bar{{u}}(t)\) :

-

Inner variable

- \(a_i, b_i, c_i, d_i\) :

-

System parameters

- \(f(u(t))\) :

-

Nonlinear function

- \(w_1 ,w_2,\ldots ,w_{n_c}\) :

-

Basis of a nonlinear function

- \(\sigma _\mathrm{v}^2\) :

-

Variance of white noise

- \(h^{*}(t)\) :

-

The instrumental variable

- \({\hat{\varvec{\uptheta }}}_{\mathrm{IV}}\) :

-

The identification result using instrumental variable method

- \({\hat{\varvec{\uptheta }}}_{\mathrm{RIV}}\) :

-

The identification result using nonlinear RIV method

- \({\hat{\varvec{\uptheta }}}_{\mathrm{ARMAX}\text {-}\mathrm{RLS}}\) :

-

The identification result using ARMAX-RLS method

- NSR:

-

Noise-to-Signal-Ratio, defined as \(\mathrm{NSR}=\sqrt{\frac{\hbox {var}[v(t)]}{\hbox {var}[u(t)]}}\times 100\,\% \)

- \(P\) :

-

Probability

References

Perc, M.: Nonlinear time series analysis of the human electrocardiogram. Eur. J. Phys. 26, 757–768 (2005)

Perc, M.: The dynamics of human gait. Eur. J. Phys. 26, 525–534 (2005)

Kodba, S., Perc, M., Marhl, M.: Detecting chaos from a time series. Eur. J. Phys. 26, 205–215 (2005)

Gao, J., Hu, J., Mao, X., Perc, M.: Culturomics meets random fractal theory: insights into long-range correlations of social and natural phenomena over the past two centuries. J. R. Soc. Interface 9, 1956–1964 (2012)

Perc, M.: Self-organization of progress across the century of physics. Sci. Rep. 3, 1720 (2013)

Li, J.H., Ding, F., Hua, L.: Maximum likelihood Newton recursive and the Newton iterative estimation algorithms for Hammerstein CARAR systems. Nonlinear Dyn. 75, 235–245 (2014)

Westwick, D., Verhaegen, M.: Identifying MIMO Wiener systems using subspace model identification methods. Sig. Process. 52, 235–258 (1999)

Shi, Y., Fang, H.: Kalman filter based identification for systems with randomly missing measurements in a network environment. Int. J. Control 83, 538–551 (2010)

MacArthur, J.W.: A new approach for nonlinear process identification using orthonormal bases and ordinal splines. J. Process Contr. 22, 375–389 (2012)

Ding, F., Liu, X.P., Liu, G.J.: Identification methods for Hammerstein nonlinear systems. Digit. Signal Proc. 21, 215–238 (2012)

Liu, X.G., Chen, L., Hu, Y.Q.: Solution of chemical dynamic optimization using the simultaneous strategies. Chin. J. Chem. Eng. 21, 55–63 (2013)

Bai, E.W.: A blind approach to the Hammerstein–Wiener model identification. Automatica 38, 967–979 (2002)

Michalkiewicz, J.: Modified kolmogorov’s neural network in the identification of Hammerstein and Wiener systems. IEEE Trans. Neural Netw. Learn. 23, 657–662 (2012)

Cerone, V., Piga, D., Regruto, D.: Bounded error identification of Hammerstein systems through sparse polynomial optimization. Automatica 48, 2693–2698 (2012)

Gallman, P.G.: A comparison of two Hammerstein model identification algorithms. IEEE Trans. Autom. Control 21, 124–126 (1976)

Li, G.Q., Wen, C.Y.: Convergence of normalized iterative identification of Hammerstein systems. Syst. Control Lett. 60, 919–935 (2011)

Liu, X.G., Qian, J.: Modeling, control and optimization of ideal internal thermally coupled distillation columns. Chem. Eng. Technol. 23, 235–241 (2000)

Liu, Y.J., Sheng, J., Ding, R.F.: Convergence of stochastic gradient estimation algorithm for multivariable ARX-like systems. Comput. Math. Appl. 59, 2615–2627 (2010)

Maarten, S., Rik, P., Yves, R.: Parametric identification of parallel Hammerstein systems. IEEE Trans. Instrum. Meas. 60, 3931–3938 (2011)

Vazquez Feijoo, J.A., Worden, K., Stanway, R.: System identification using associated linear equations. Mech. Syst. Signal Process. 18, 431–455 (2004)

Chang, F., Luns, R.: A noniterative method for identification using Hammerstein model. IEEE Trans. Autom. Control 16, 464–468 (1971)

Greblicki, W.: Recursive identification of continuous-time Hammerstein systems. Int. J. Syst. Sci. 33, 969–977 (2002)

Ding, F., Chen, T.W.: Identification of Hammerstein nonlinear ARMAX systems. Automatica 41, 1479–1489 (2005)

Ding, F., Shi, Y., Chen, T.W.: Auxiliary model based least-square identification methods for Hammerstein output error systems. Syst. Control Lett. 56, 373–380 (2007)

Crama, P., Schoukens, J.: Initial estimates of Wiener and Hammerstein systems using multisine excitation. Syst. Control Lett. 50, 1791–1795 (2001)

Greblicki, W.: Stochastic approximation in nonparametric identification of Hammerstein systems. IEEE Trans. Automat. Control 47, 1800–1810 (2002)

Greblicki, W., Pawlak, M.: Nonparametric System Identification. Cambridge University Press, Cambridge (2008)

Hasiewicz, Z., Mzyk, G.: Hammerstein system identification by non-parametric instrumental variables. Int. J. Control 82, 440–455 (2009)

Narendra, K., Gallman, P.: An iterative method for the identification of nonlinear systems using a Hammerstein model. IEEE Trans. Automat. Control 11, 546–550 (1966)

Vörös, J.: Iterative algorithm for parameter identification of Hammerstein systems with two-segment nonlinearities. IEEE Trans. Automat. Control 44, 2145–2149 (1999)

Liu, Y., Bai, E.W.: Iterative identification of Hammerstein systems. Automatica 43, 346–354 (2007)

Liu, X.G., Bai, E.W.: Iterative identification of Hammerstein systems. Automatica 46, 549–554 (2010)

Ding, F., Shi, Y., Chen, T.W.: Gradient-based identification methods for Hammerstein nonlinear ARMAX models. Nonlinear Dyn. 45, 31–43 (2005)

Wang, D.Q., Ding, F.: Extended stochastic gradient identification algorithms for Hammerstein–Wiener ARMAX systems. Comput. Math. Appl. 56, 3157–3164 (2008)

Ding, J., Shi, Y., Wang, H., Ding, F.: A modified stochastic gradient based parameter estimation algorithm for dual-rate sampled-data systems. Digit. Signal Proc. 20, 1238–1247 (2010)

Greblicki, W., Pawlak, M.: Identification of discrete Hammerstein systems using kernel regression estimates. IEEE Trans. Automat. Control 31, 74–77 (1986)

Li, G., Wen, C., Zheng, W., Chen, Y.: Identification of a class of nonlinear autoregressive with exogenous inputs models based on kernel machines. IEEE Trans. Signal Process. 59, 2146–2158 (2011)

Huang, B., Ding, S.X., Qin, S.J.: Closed-loop subspace identification: an orthogonal projection approach. J. Process Control 15, 53–66 (2005)

Vörös, J.: Recursive identification of Hammerstein systems with discontinuous nonlinearities containing dead-zones. IEEE Trans Autom. Control 48, 2203–2206 (2003)

Zhao, W.X., Chen, H.F.: Adaptive tracking and recursive identification for Hammerstein systems. Automatica 45, 2773–2783 (2009)

Chen, X.M., Chen, H.M.: Recursive identification for MIMO Hammerstein systems. IEEE Trans. Autom. Control 56, 895–902 (2011)

Söderström, T., Ljung, L., Gustavsson, I.: A theoretical analysis of recursive identification methods. Automatica 14, 231–244 (1978)

Ding, F., Deng, K.P., Liu, X.M.: Decomposition based Newton iterative identification method for a Hammerstein nonlinear FIR system with ARMA noise. Circ. Syst. Signal Proc. 33, 2881–2893 (2014)

Li, J.H.: Parameter estimation for Hammerstein CARARMA systems based on the Newton iteration. Appl. Math. Lett. 26, 91–96 (2013)

Hong, X., Mitchell, R.J.: Hammerstein model identification algorithm using Bezier–Bernstein approximation. IET Control Theory Appl. 1, 1149–1159 (2007)

Han, Y., Raymond, A.: Hammerstein system identification using nuclear norm minimization. Automatica 48, 2189–2193 (2012)

Zhang, Z.N., Ding, F., Liu, X.G.: Hierarchical gradient based iterative parameter estimation algorithm for multivariable output error moving average systems. Comput. Math. Appl. 61, 672–682 (2011)

Vanbeylen, L., Pintelon, R., Schoukens, J.: Blind maximum likelihood identification of Hammerstein systems. Automatica 44, 3139–3146 (2008)

Sun, J.L., Liu, X.G.: A novel APSO-aided maximum likelihood identification method for Hammerstein systems. Nonlinear Dyn. 73, 449–462 (2013)

LL-Duwaish, H., Karim, M.: A new method for the identification of Hammerstein model. Automatica 33, 1871–1875 (1997)

Lang, Z.: A nonparametric polynomial identification algorithm for the Hammerstein system. IEEE Trans. Autom. Control 42, 1435–1441 (1997)

Wang, J.D., Sano, A., Chen, T.W., Huang, B.: Identification of Hammerstein systems without explicit parameterization of non-linearity. Int. J. Control 82, 937–952 (2009)

Wang, D.Q., Ding, F., Chu, Y.Y.: Data filtering based recursive least squares algorithm for Hammerstein systems using the key-term separation principle. Inform. Sciences. 222, 203–212 (2013)

Gallman, P.G.: A comparison of two Hammerstein model identification algorithms. IEEE Trans. Autom. Control 21, 124–126 (1976)

Golfberger, A.S.: Econometric Theory. Wiley, New York (1964)

Fang, C.Z.: Process Identification. Tsinghua University Press, Beijing (1988)

Ding, F.: System Identification-New Theory and Methods. Science Press, Beijing (2013)

Ding, F., Chen, T.W.: Performance bounds of forgetting factor least squares algorithm for time-varying systems with finite measurement data. IEEE Trans. Circuits 52, 555–566 (2005)

Ding, F., Chen, T.W.: Parameter estimation for dual-rate system with finite measurement data. Dyn. Cont. Dis. Ser. B 11, 101–121 (2004)

Acknowledgments

The authors would like to thank the anonymous reviewers very much for their helpful suggestions for improving the manuscript.

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Ma, L., Liu, X. A nonlinear recursive instrumental variables identification method of Hammerstein ARMAX system. Nonlinear Dyn 79, 1601–1613 (2015). https://doi.org/10.1007/s11071-014-1763-9

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11071-014-1763-9