Abstract

The Volterra series is a powerful mathematical tool for nonlinear system analysis that extends the convolution integral of linear systems to nonlinear systems. A wide range of nonlinear engineering systems and structures can be modeled by Volterra series. A key problem in modeling the functional relationship between the input and output of a system with a Volterra series is identifying the Volterra kernel functions. In this article, an approach based on the wavelet balance method is proposed to identify the Volterra kernel functions from observations of the input and output signals. From the system outputs under multilevel excitations, the Volterra series outputs of different orders are first estimated with the wavelet balance method; the Volterra kernel functions of different orders are then estimated separately from their corresponding Volterra series outputs by expanding them with fourth-order B-spline wavelets on the interval. Simulation studies verify the effectiveness of the proposed Volterra kernel identification method.


1 Introduction

Linear systems, which have been widely studied in many fields, provide the basis for most control system synthesis and mechanical system analysis and design. However, it is well known that nonlinear systems can exhibit dynamic behaviors that linear system theory cannot explain [1], e.g., frequency distortion, generation of sub- and superharmonic components, sub-resonance, limit cycle oscillation, bifurcation, and chaos. To understand these behaviors, various theories and methods have been developed, such as chaos theory [2–4], nonlinear time series analysis [5–9], the method of multiple scales [10], the KBM method [11], the homotopy analysis method [12, 13], and the harmonic balance method [14]. Unfortunately, no canonical representation encompasses all conceivable systems. The Volterra series [15] is a powerful mathematical tool for nonlinear system analysis and one of the earliest approaches to a systematic characterization of nonlinear systems. Essentially, it extends the standard convolution description of linear systems to nonlinear systems. The Volterra series is particularly appropriate for systems with smooth nonlinearities [16], such as those described by differential equations with polynomial nonlinearities. The Weierstrass approximation theorem [17] guarantees that any continuous function on a closed and bounded interval can be uniformly approximated on that interval by a polynomial to any desired accuracy. Therefore, a wide class of nonlinear systems can be well represented by the Volterra series, which has been applied to aeroelastic systems [18], biomedical engineering [19], fluid dynamics [20], electrical engineering [21], mechanical engineering [22–24], and other fields.

The key issue in modeling a nonlinear system with the Volterra series is the identification of its kernel functions, which has proven to be a challenge in practice. Volterra kernel identification is fundamentally an ill-posed problem, since the objective is to determine the structure of the system from its input and output signals. To address this problem, Wiener [25] developed a variant of the Volterra series whose terms are orthogonal when the input is Gaussian white noise. A number of statistical approaches, such as the cross-correlation technique [25], have been developed to identify Wiener kernels. A drawback of Wiener kernel identification is that it is difficult, if not impossible, to generate a Gaussian white noise input in an experimental system. The identification of Volterra kernel functions is also difficult because the number of terms needed to model the kernels is usually very large. For example, consider a system with a memory of \(N\) samples. A discrete Volterra model of such a system requires \(N^{p}\) coefficients to represent the \(p\)th-order kernel, so with \(N=100\) the second- and third-order kernels alone already require \(10^{4}\) and \(10^{6}\) coefficients. Therefore, a Volterra model requires a large number of coefficients even if only the first few kernels are included. Furthermore, not only is such a model difficult to identify, but it is also too large for many applications such as control design. Clearly, a reduced-order kernel representation is needed.

In consideration of these limitations, many other kernel identification approaches have been developed in both the time and frequency domains. Some techniques identify the kernels by applying specific input excitations to the system. For example, Bedrosian and Rice [26] developed the harmonic probing technique in the frequency domain, and Worden et al. [27] extended it to multi-input multi-output Volterra functional series. Pavlenko [28] applied impulse inputs to identify the time-domain kernels from impulse response data. To reduce the number of parameters to be estimated, another common approach is to expand the kernels in a set of basis functions. For example, Marmarelis [29] estimated the kernels of biological systems in terms of discrete Laguerre functions. Moodi and Bustan [30] used Laguerre functions and wavelet packets as orthogonal bases to represent Volterra kernel functions. Da Rosa et al. [31] derived an analytic solution for expanding the Volterra kernels in a set of Kautz functions. These methods represent the kernels with a relatively compact set of globally or locally supported basis functions. Their advantage is that they do not require any specific input excitation; they only require an input with sufficient bandwidth for accurate kernel identification.

Wavelets and multiresolution analysis offer time and frequency localization of functions and signals. In many cases, a function can be represented by a relatively small number of wavelet coefficients, which can greatly reduce the computational complexity of the identification process while improving the accuracy of the results. Moreover, once the basis is properly selected, identifying the coefficients is a linear problem. Beylkin et al. [32] demonstrated that wavelets are particularly effective for compressing various integral operators, and many subsequent researchers have sought to take advantage of this result. For example, Nikolaou and Mantha [33] used biorthogonal wavelets to express the first- and second-order Volterra kernels, and Chou and Guthart [34] derived sparse representations of Green's function operators in an orthogonal wavelet basis. Wei et al. [35] derived a nonparametric NARX model by expanding each of its functional components into wavelet multiresolution expansions. Coca and Billings [36, 37] identified NARMAX models from noise-contaminated data based on semi-orthogonal wavelet multiresolution approximations. When a wavelet basis is used to represent the Volterra kernels, the expansion coefficients are constant, so each Volterra output is a linear function of these constant coefficients. Based on these advantages, Kurdila et al. [38] considered reduced-order Volterra kernel representations in terms of the family of biorthogonal wavelets proposed by Cohen et al. [39]. These wavelets possess several desirable properties such as biorthogonality, symmetry or antisymmetry, and relatively compact support. However, these biorthogonal wavelets have no explicit expression and are usually defined by a procedure.
This makes the biorthogonal wavelets difficult to apply, particularly when quadratures must be evaluated [39]. Prazenica and Kurdila [40] constructed wavelets over the triangular domain that supports the triangular form of the second-order Volterra kernel. These triangular wavelets are orthogonal, compactly supported, and symmetric or antisymmetric. However, they are piecewise constant and consequently do not yield very smooth kernel estimates. In addition, the triangular wavelet construction is specific to the second-order kernel and is not straightforward to extend to higher-order kernels. To overcome these problems, Prazenica and Kurdila [41] presented an approach in which multiwavelets, constructed from classical finite element basis functions using the technique of intertwining, are used to obtain low-order estimates of the first-, second-, and third-order Volterra kernels. Their results indicate the potential of multiwavelet-based algorithms for obtaining reduced-order models of a large class of weakly nonlinear systems. As pointed out by Prazenica and Kurdila [41], one problem in applying the algorithm is that the first-, second-, and third-order Volterra kernel functions must be identified simultaneously, which reduces the identification accuracy. To overcome this problem, this article proposes an approach based on the wavelet balance method. From the system outputs under multilevel excitations, the Volterra series outputs of different orders are first estimated with the wavelet balance method; the Volterra kernel functions of different orders are then estimated separately from their corresponding Volterra series outputs by expanding them with fourth-order B-spline wavelets on the interval (BSWI).

The outline of this article is as follows. Section 2 briefly introduces the wavelet analysis and BSWI, and presents the wavelet balance method in detail. The detailed procedure of Volterra kernel identification is presented in Sect. 3. Numerical simulations are presented in Sect. 4. Finally, the conclusions are given in Sect. 5.

2 BSWI and wavelet balance method

2.1 Wavelet analysis

A wavelet function \(\psi (x)\) is a function whose integral over \((-\infty ,+\infty )\) is zero:

\[ \int_{-\infty}^{+\infty} \psi(x)\,dx = 0. \]

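\(\psi (x)\) is known as the mother wavelet. By translating it by \(k\) and dilating it by \(2^{j}\), we obtain the family

\[ \psi_{j,k}(x) = 2^{j/2}\,\psi(2^{j}x-k), \qquad j,k\in Z, \]

which, for a suitable \(\psi\), forms a basis of the space of square-integrable functions \(L^{2}(R)\).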

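An arbitrary function \(f(x)\in L^{2}(R)\) can be represented as

\[ f(x) = \sum_{j\in Z}\sum_{k\in Z} c_{j,k}\,\psi_{j,k}(x), \]

where, for an orthonormal wavelet basis,

\[ c_{j,k} = \int_{-\infty}^{+\infty} f(x)\,\psi_{j,k}(x)\,dx. \]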

A multiwavelet basis for \(L^{2}(R)\) is derived by translating and dilating multiple wavelet functions \([\psi ^{1},\ldots ,\psi ^{r}]\). These multiwavelets are generated from \(r\) scale functions \([\phi ^{1},\ldots ,\phi ^{r}]\). The multiwavelets \([\psi ^{1},\ldots ,\psi ^{r}]\) and their associated scale functions \([\phi ^{1},\ldots ,\phi ^{r}]\) satisfy the two-scale equations

\[ \phi^{s}(x)=\sum_{p}\sum_{t=1}^{r} a_p^{s,t}\,\phi^{t}(2x-p), \qquad \psi^{s}(x)=\sum_{p}\sum_{t=1}^{r} b_p^{s,t}\,\phi^{t}(2x-p), \qquad s=1,\ldots,r. \]

The coefficients \(\left[ {a_p^{s,t} } \right] ,\left[ {b_p^{s,t}} \right] \) are elements of the scale function and wavelet filter matrices, respectively. The translates and dilates of the scale functions and multiwavelets are defined as

\[ \phi_{j,k}^{s}(x)=2^{j/2}\,\phi^{s}(2^{j}x-k), \qquad \psi_{j,k}^{s}(x)=2^{j/2}\,\psi^{s}(2^{j}x-k), \]

where \(j,k\in Z\) and \(Z\) is the set of all integers.

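In multiwavelet-based multiresolution analysis, an arbitrary function \(f(x)\in L^{2}(R)\) can be approximated in a fine-resolution space \(V_{j}\) as [41]

\[ f(x)\approx f_j(x)=\sum_{s=1}^{r}\sum_{k}\alpha_{j,k}^{s}\,\phi_{j,k}^{s}(x), \]

where \(\alpha _{j,k}^s \) are the scale function expansion coefficients and \(f_j (x)\) denotes the approximation on level \(j\). An equivalent multiscale representation of \(f_j (x)\) can be expressed as [41]

\[ f_j(x)=\sum_{s=1}^{r}\sum_{k}\alpha_{j_0,k}^{s}\,\phi_{j_0,k}^{s}(x)+\sum_{i=j_0}^{j-1}\sum_{s=1}^{r}\sum_{k}\beta_{i,k}^{s}\,\psi_{i,k}^{s}(x), \]

where \(\beta_{i,k}^{s}\) are the multiwavelet expansion coefficients.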

That is, \(f_j(x)\) can be represented by level-\(j_{0}\) scale functions and level-\(j_{0}\) through level-\((j-1)\) multiwavelets. In most cases, many of the coefficients in Eq. (8) are zero or close to zero, so the function can be represented accurately by relatively few multiwavelet coefficients.
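As an illustration of this sparsity, the following Python sketch uses the orthonormal Haar wavelet as a stand-in for the BSWI multiwavelets (an assumption made only to keep the code short) and treats samples of a hypothetical bump function as the level-\(j\) single-scale coefficients. It splits them into level-\(j_0\) scale coefficients plus detail coefficients on levels \(j_0,\ldots,j-1\), mirroring the multiscale form above, and counts how many detail coefficients are negligible.

```python
import numpy as np

def haar_decompose(c, levels):
    """Split single-scale coefficients c into coarse scale coefficients
    plus detail (wavelet) coefficients, coarsest detail level first."""
    c = np.asarray(c, dtype=float)
    details = []
    for _ in range(levels):
        even, odd = c[0::2], c[1::2]
        details.append((even - odd) / np.sqrt(2.0))  # wavelet coefficients
        c = (even + odd) / np.sqrt(2.0)              # next-coarser scale coefficients
    return c, details[::-1]

def haar_reconstruct(coarse, details):
    """Invert haar_decompose; the transform is orthonormal, so nothing is lost."""
    c = np.asarray(coarse, dtype=float)
    for d in details:
        finer = np.empty(2 * c.size)
        finer[0::2] = (c + d) / np.sqrt(2.0)
        finer[1::2] = (c - d) / np.sqrt(2.0)
        c = finer
    return c

# Hypothetical test function: a localized bump sampled at level j = 10
x = np.arange(1024) / 1024.0
alpha = np.exp(-((x - 0.5) / 0.02) ** 2)
coarse, details = haar_decompose(alpha, levels=6)   # j0 = 4, j = 10
all_details = np.concatenate(details)
small = np.mean(np.abs(all_details) < 1e-8)
print(f"{small:.0%} of {all_details.size} wavelet coefficients are below 1e-8")
```

Only the coefficients whose supports overlap the bump are significant; smoother wavelets with more vanishing moments, such as the fourth-order B-spline wavelets, would also compress smooth regions far better than Haar does.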

The first-order Volterra kernel function can be represented conveniently by these one-dimensional multiwavelets. In order to represent higher-order Volterra kernel functions, higher dimensional multiwavelets are required. Higher dimensional multiwavelets can be constructed by the tensor products of one-dimensional scale functions and multiwavelets [41].

2.2 B-spline wavelet on the interval (BSWI)

On a bounded interval, the BSWI basis has the desirable properties of compact support, symmetry, smoothness [42, 43], and an explicit expression. Moreover, B-spline wavelets have the best approximation properties among all known wavelets of a given order \(L\) [44]. Therefore, the Volterra kernel functions can be conveniently expressed by BSWI, and we use BSWI multiwavelets to represent the Volterra kernel functions of each order. The fourth-order B-spline scale functions on the interval at \(j=0\), \(\phi _{4,k}^0 \,\,(k=-3,-2,-1,0)\), can be expressed as [42]

The fourth-order B-spline scale functions on the interval at \(j=0\), \(\phi _{4,k}^0 \,\,(k=-3,-2,-1,0)\), are shown in Fig. 1.

The fourth-order BSWI at \(j=0\), \(\psi _{4,k}^0 \,(k=-3,-2,-1,0)\), can be expressed as [42, 45]

The coefficients \(a_{0} ,a_{1} ,a_{2} ,a_{3} \) for the different values of \(k\) in Eq. (13) are not listed for brevity; interested readers can find them in [42, 45].

For an arbitrary scale \(j\), the \(m\)th-order B-spline scale functions \(\phi _{m,k}^j (x)\) and wavelet functions \(\psi _{m,k}^j (x)\) can be computed using the following formulae [42, 45]:

The fourth-order B-spline scale functions on the interval at \(j=3\), \(\phi _{4,k}^3 \,\,(k=-3,\ldots ,7)\), are shown in Fig. 2, and the two-dimensional fourth-order B-spline scale functions \(\Phi (t_{1} ,t_{2} )\) on the interval at \(j=3\) are shown in Fig. 3.
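To reproduce plots like Figs. 1 and 2, the sketch below evaluates fourth-order B-spline scale functions on [0, 1] with the Cox–de Boor recursion. It assumes that the level-\(j\) scale functions can be modelled as B-splines on a clamped knot sequence (knots of multiplicity four at both ends and simple knots at \(k/2^{j}\)); this reproduces the count of \(2^{j}+3\) functions, \(k=-3,\ldots,2^{j}-1\), but the normalization used in [42, 45] may differ, and the wavelet functions are not constructed here.

```python
import numpy as np

def bswi_scale_functions(x, j, order=4):
    """Evaluate order-`order` B-spline scale functions on [0, 1) at level j.

    Assumption: clamped knots 0 (order times), k/2**j, 1 (order times).
    Rows correspond to k = -(order-1), ..., 2**j - 1.
    """
    x = np.atleast_1d(np.asarray(x, dtype=float))
    interior = np.arange(1, 2 ** j) / 2 ** j
    knots = np.concatenate([np.zeros(order), interior, np.ones(order)])
    # Order 1: indicator functions of the knot intervals [t_i, t_{i+1})
    B = np.array([(knots[i] <= x) & (x < knots[i + 1])
                  for i in range(len(knots) - 1)], dtype=float)
    # Cox-de Boor recursion; terms with a zero-length denominator are dropped (0/0 := 0)
    for k in range(2, order + 1):
        B_next = np.zeros((len(knots) - k, x.size))
        for i in range(len(knots) - k):
            left = knots[i + k - 1] - knots[i]
            right = knots[i + k] - knots[i + 1]
            if left > 0:
                B_next[i] += (x - knots[i]) / left * B[i]
            if right > 0:
                B_next[i] += (knots[i + k] - x) / right * B[i + 1]
        B = B_next
    return B

B = bswi_scale_functions(np.linspace(0.0, 1.0, 400, endpoint=False), j=3)
print(B.shape)  # (11, 400)
```

At \(j=0\) there are no interior knots, and the four functions reduce to the cubic Bernstein polynomials \((1-x)^3,\ 3x(1-x)^2,\ 3x^2(1-x),\ x^3\), which is a convenient sanity check.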

2.3 Wavelet balance method

The basic principle of the wavelet balance method is to expand the state variables in a wavelet basis [46], such as a biorthogonal wavelet basis. To illustrate the method, consider the following general ordinary differential equation [46]:

\[ \frac{dX(t)}{dt} = F(X,t), \]

where \(X(t)=[X_1 (t),X_2 (t),\ldots ,X_n (t)]^{T}\) are the state variables, and \(F(X,t)\) is a given nonlinear vector function.

The state variables \(X(t)\) can be expanded in a wavelet basis as follows:

\[ X(t) = C_{n\times M}\,\Phi(t), \qquad \Phi(t) = [\phi_1(t),\phi_2(t),\ldots,\phi_M(t)]^{T}, \]

where \(C_{n\times M} \) is the coefficient matrix, \(\{ \phi _i (t),\,i=1,2,\ldots ,M \}\) is the wavelet basis, and \(M\) is the total number of basis functions.

Substituting Eq. (17) into Eq. (16) gives

with

Then, because the left- and right-hand sides of Eq. (18) must be equal at all times, we obtain

Consequently, the coefficient matrix \(C\) can be determined by a least-squares approach as

Finally, according to the estimated \(C\) and Eq. (17), the state variables \(X(t)\) can be calculated.
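A minimal sketch of the balance idea for a scalar linear equation \(\dot{x}=ax+b(t)\) follows. Assumptions: piecewise-linear hat functions (order-2 B-spline scale functions) stand in for the wavelet basis, and the balance is enforced at collocation points (element midpoints) together with the initial condition, after which the coefficients are obtained by least squares. A nonlinear \(F(X,t)\) would make the balance equations nonlinear and require an iterative solver, which is not shown.

```python
import numpy as np

def hat_basis(t, nodes):
    """Values and time derivatives of piecewise-linear hat functions
    centred at uniformly spaced nodes (order-2 B-spline scale functions)."""
    h = nodes[1] - nodes[0]
    s = (np.atleast_1d(t)[:, None] - nodes[None, :]) / h
    phi = np.clip(1.0 - np.abs(s), 0.0, None)
    dphi = (np.where((s > -1) & (s < 0), 1.0 / h, 0.0)
            - np.where((s > 0) & (s < 1), 1.0 / h, 0.0))
    return phi, dphi

def balance_linear_ode(a, b, x0, T=1.0, n_elements=50):
    """Solve dx/dt = a*x + b(t), x(0) = x0, with x(t) = sum_i C_i phi_i(t),
    balancing both sides at element midpoints (least-squares solve)."""
    nodes = np.linspace(0.0, T, n_elements + 1)
    t_col = 0.5 * (nodes[:-1] + nodes[1:])        # collocation points
    phi, dphi = hat_basis(t_col, nodes)
    A = dphi - a * phi                             # row: C.phi'(t) - a C.phi(t) = b(t)
    rhs = b(t_col)
    phi0, _ = hat_basis(0.0, nodes)                # initial-condition row
    A = np.vstack([A, phi0])
    rhs = np.append(rhs, x0)
    C, *_ = np.linalg.lstsq(A, rhs, rcond=None)
    return nodes, C

nodes, C = balance_linear_ode(-1.0, np.zeros_like, 1.0)
print(np.max(np.abs(C - np.exp(-nodes))))          # error against exp(-t)
```

Because hat-function coefficients equal nodal values, they can be compared with \(e^{-t}\) directly; for this example, midpoint collocation reproduces the trapezoidal rule, so the error decreases quadratically with the element size.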

Compared with the harmonic balance method, which adopts the globally supported Fourier basis, the main feature of the wavelet balance method is that the wavelet basis has compact or local support in the time domain, which brings several advantages [46–48]. First, the method works in the time domain, so that many problems that are complex in the frequency domain, such as those involving higher-order harmonics, can be handled efficiently. Second, an adaptive scheme can automatically select the proper wavelet basis functions for a given accuracy, with high-level wavelet basis functions selected only near singularities; because the Fourier basis has global support, such a selection in the time domain is infeasible. Consequently, the wavelet balance method has been studied by numerous researchers. For example, Barmada et al. [49] used it to solve nonlinear equations. Soveiko and Nakhla [47, 48] used it for the steady-state analysis of nonlinear microwave circuits under periodic excitation. Zhou et al. [50, 51] pointed out that the wavelet balance method significantly increases the sparsity of the equation matrices and consequently decreases CPU cost and storage requirements, while retaining the accuracy and convergence of the traditional approach.

3 Volterra series and Volterra kernel function identification

3.1 Volterra series

If a system is linear and time-invariant, the first-order Volterra series represents its input–output relation [25]:

\[ y(t) = \int_{-\infty}^{+\infty} h(\tau)\,x(t-\tau)\,d\tau, \]

where \(x(t)\) is the input and \(y(t)\) is the output. Eq. (23) can be interpreted as the Duhamel integral, so the system is uniquely determined by its impulse response function \(h(t)\). For a large class of nonlinear systems, the input–output relation is nonlinear and can be represented by a higher-order Volterra series, which extends this familiar convolution integral for linear systems to a series of multidimensional convolution integrals [25]:

\[ y(t)=\sum_{n=1}^{\infty}\int_{-\infty}^{+\infty}\!\cdots\!\int_{-\infty}^{+\infty} h_n(\tau_1,\ldots,\tau_n)\prod_{i=1}^{n}x(t-\tau_i)\,d\tau_i, \]

where the functions \(h_n (\tau _1 ,\ldots ,\tau _n ),\,n=1,2,\ldots ,\infty \), which extend the impulse response function of linear systems to nonlinear systems, are generally called Volterra kernel functions.

To use the Volterra series to model the input–output relation of a nonlinear system, the Volterra kernel functions of each order must be identified. Rugh [15] pointed out that the curse of dimensionality makes the identification of Volterra kernel functions difficult. Volterra kernel identification has the typical characteristics of an ill-posed problem, and the difficulty is exacerbated by the fact that all kernel functions must be identified simultaneously. To improve the identification accuracy, sub-band Volterra kernels have been introduced [52]. For example, for a second-order Volterra series, the first- and second-order Volterra series outputs can be derived from the following equations [52]:

\[ Y_1(n)=y_1(n)+y_2(n)+e_1(n), \qquad Y_2(n)=a\,y_1(n)+a^{2}\,y_2(n)+e_2(n), \]

where \(a\ne 1\), and \(Y_1 (n),\,Y_2 (n)\) are the system's total output signals for the input signals \(x(t)\) and \(ax(t)\), respectively; \(y_1 (n),\,y_2 (n)\) are the first- and second-order output signals, and \(e_1 (n),\,e_2 (n)\) are the errors between the ideal and practical outputs. Neglecting \(e_1 (n)\) and \(e_2 (n)\), \(y_1 (n)\) and \(y_2 (n)\) can be solved for by matrix inversion. However, because this method is implemented directly in the time domain, the Vandermonde matrix inversion may amplify the errors in \(Y_1 (n),\,Y_2 (n)\), which reduces the identification accuracy of the Volterra kernel functions. To overcome this disadvantage, the wavelet balance method is used here to derive the sub-band Volterra series outputs.
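The time-domain separation above amounts to solving a small Vandermonde-type system at every sample. The Python sketch below (synthetic signals and a hypothetical helper `separate_orders`) recovers the order outputs for \(N\) amplitude levels and reports the condition number of the matrix, which indicates how strongly errors in the measured responses can be amplified.

```python
import numpy as np

def separate_orders(responses, amplitudes):
    """Recover Volterra-order outputs from total responses to scaled inputs.

    responses[n] is the total response to amplitudes[n] * x(t); the series is
    assumed to be truncated at order N = len(amplitudes).  Returns the rows
    y_1, ..., y_N and the condition number of the Vandermonde-type matrix.
    """
    a = np.asarray(amplitudes, dtype=float)
    V = a[:, None] ** np.arange(1, a.size + 1)[None, :]   # V[n, i-1] = a_n**i
    y = np.linalg.solve(V, np.asarray(responses, dtype=float))
    return y, np.linalg.cond(V)

# Synthetic two-order example: Y1 = y1 + y2, Y2 = a*y1 + a**2*y2 (no noise)
t = np.linspace(0.0, 10.0, 500)
y1_true, y2_true = np.sin(t), 0.3 * np.cos(2 * t) ** 2
for a in (0.5, 0.9):
    Y = np.vstack([y1_true + y2_true, a * y1_true + a ** 2 * y2_true])
    (y1, y2), cond = separate_orders(Y, [1.0, a])
    print(f"a = {a}: cond = {cond:.1f}, max error in y1 = {np.max(np.abs(y1 - y1_true)):.1e}")
```

The condition number grows as \(a\) approaches 1 (roughly 9 for \(a=0.5\) versus roughly 38 for \(a=0.9\)), so measurement errors are amplified more when the excitation levels are close together.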

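From Eq. (24), when the input is \(u(t)\), the system response is

\[ y(t) = \sum_{i=1}^{\infty} y_i(t), \]

where

\[ y_i(t) = \int_{-\infty}^{+\infty}\!\cdots\!\int_{-\infty}^{+\infty} h_i(\tau_1,\ldots,\tau_i)\prod_{l=1}^{i} u(t-\tau_l)\,d\tau_l. \]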

When the system is excited \(N\) times by multilevel inputs amplitudes of which are \(a_{(1)} ,\ldots ,a_{(N)} \), respectively, \(N\) output responses \(Y_{(1)} ,\ldots ,Y_{(N)} \) are obtained. From Eq. (24), the output response under the excitation of amplitude \(a_{(n)} \) can be determined as

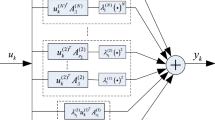

Then, the output responses under multilevel excitations can be expressed as follows:

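Expanding \(Y_1 (t),Y_2 (t),\ldots ,Y_N (t)\) and \(y_1 (t),y_2 (t),\ldots ,y_N (t)\) in the wavelet basis yields

\[ Y_n(t)=\sum_{k} Y_{n,j,k}\,\phi_{j,k}(t), \qquad y_i(t)=\sum_{k} y_{i,j,k}\,\phi_{j,k}(t), \qquad n,i=1,\ldots,N. \]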

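Substituting Eq. (30) into Eq. (29) and, according to the wavelet balance method, equating the coefficients of each basis function \(\phi_{j,k}(t)\) gives

\[ \begin{bmatrix} Y_{1,j,k}\\ \vdots\\ Y_{N,j,k} \end{bmatrix} = \begin{bmatrix} a_{(1)} & \cdots & a_{(1)}^{N}\\ \vdots & & \vdots\\ a_{(N)} & \cdots & a_{(N)}^{N} \end{bmatrix} \begin{bmatrix} y_{1,j,k}\\ \vdots\\ y_{N,j,k} \end{bmatrix}, \]

and hence

\[ \begin{bmatrix} y_{1,j,k}\\ \vdots\\ y_{N,j,k} \end{bmatrix} = \begin{bmatrix} a_{(1)} & \cdots & a_{(1)}^{N}\\ \vdots & & \vdots\\ a_{(N)} & \cdots & a_{(N)}^{N} \end{bmatrix}^{-1} \begin{bmatrix} Y_{1,j,k}\\ \vdots\\ Y_{N,j,k} \end{bmatrix}. \]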

Then, based on the identified \(y_{1,j,k} ,y_{2,j,k} ,\ldots ,y_{N,j,k} \), the sub-band Volterra series outputs \(y_1 (t),y_2 (t),\ldots , y_N (t)\) can be derived using Eq. (30).

By performing the Vandermonde matrix inversion on the wavelet coefficients, the sub-band Volterra series outputs are calculated with the wavelet balance method. From the estimated Volterra series outputs \(y_1 (t),y_2 (t),\ldots , y_N (t)\), the Volterra kernels of different orders can then be identified separately. Because the identification accuracy of the Volterra kernel functions depends on the accuracy of the Volterra series output of each order, the identification method presented in this article can improve the identification accuracy of the Volterra kernel functions.

3.2 The first-order kernel function

The first-order Volterra series can be represented by [15]

To derive a discrete form of Eq. (33), the input \(u\) is approximated using a zero-order hold. The input and output are sampled at \(2^{j}\) Hz, and the number of data points is \(N\). The zero-order hold approximation of the input can be written as [41],

Define function \(\chi _{j,k} (t)\) as [41],

where \(\chi \) is the characteristic function of the interval [0, 1]. The coefficients \(\left\{ {u_{j,k}}\right\} \) are equivalent to scaled samples of the input [41],

By discretizing the output at a sampling rate of \(2^{j}\) Hz, Eq. (33) yields \(N\) equations for the discrete first-order outputs [41]:

where \(t_n =2^{-j}n\); the translated zero-order hold approximation of the input can be expressed as [41],

The first-order kernel can be approximated by the B-spline scale functions on level \(j_{1}\):

Substituting Eqs. (38) and (39) into Eq. (37), the discrete first-order outputs are derived:

with

Equation (40) can be written in matrix form as [41],

where \(\underline{y}_{1,j} \) is a vector of discrete first-order outputs, and \(\underline{\alpha }_1 \) is a vector of scale function coefficients that represent the first-order kernel. The \(N\times N_{1}\) matrix [\(M_{1}\)] is calculated by using Eq. (40), where the integrals are calculated using Gauss–Legendre quadrature.
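The following sketch illustrates how a system like Eq. (41) can be assembled and solved. Several simplifying assumptions are made: the convolution integral is discretized with a rectangle rule on the sampled input instead of exact zero-order-hold integrals evaluated by Gauss–Legendre quadrature, piecewise-linear hat functions replace the fourth-order B-spline scale functions, and the input, kernel, and helper names are hypothetical. Plain least squares is used; a regularized solver would normally replace it.

```python
import numpy as np

def lagged_input_matrix(u, n_lags):
    """X[n, k] = u[n - k], with zero initial conditions."""
    X = np.zeros((u.size, n_lags))
    for k in range(n_lags):
        X[k:, k] = u[:u.size - k]
    return X

def hat_functions(tau, nodes):
    """Piecewise-linear hat functions centred at uniformly spaced nodes."""
    h = nodes[1] - nodes[0]
    return np.clip(1.0 - np.abs(tau[:, None] - nodes[None, :]) / h, 0.0, None)

def identify_first_order_kernel(u, y1, dt, memory, node_spacing):
    """Fit y1 ~ [M1] alpha1 with the kernel expanded in coarse hat functions."""
    tau = np.arange(int(round(memory / dt))) * dt
    nodes = np.arange(0.0, memory + 0.5 * node_spacing, node_spacing)
    Phi = hat_functions(tau, nodes)                 # kernel basis sampled on tau
    M1 = dt * lagged_input_matrix(u, tau.size) @ Phi
    alpha1, *_ = np.linalg.lstsq(M1, y1, rcond=None)
    return tau, Phi @ alpha1

dt = 1.0 / 64                                      # 2**6 Hz, as in Sect. 4
rng = np.random.default_rng(0)
u = rng.standard_normal(2048)                      # hypothetical broadband input
tau = np.arange(256) * dt
h1_true = np.exp(-tau) * np.sin(np.pi * tau)       # hypothetical kernel, 4 s memory
y1 = dt * lagged_input_matrix(u, tau.size) @ h1_true
tau_est, h1_est = identify_first_order_kernel(u, y1, dt, memory=4.0, node_spacing=1 / 16)
print(np.max(np.abs(h1_est - h1_true)))
```

Here the 256 kernel samples are described by only 65 coefficients, which is the kind of reduction the basis expansion is meant to provide.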

Equivalent to the single-scale expansion in Eq. (39), the multiscale representation of the kernel can be expressed as [41],

where \(j_{0}\) is the coarsest level chosen in the wavelet decomposition. Denoting \(\underline{\beta }_1 \) as the vector of multiscale kernel coefficients, and denoting [\(T_{1}\)] as the invertible matrix that performs the discrete wavelet transform, Eq. (42) can be written as

3.3 The second-order kernel

The second-order Volterra series can be represented by [15]

The second-order kernel can be represented by a combination of two-dimensional tensor product scale functions as [41],

The second-order kernel is assumed to decay to zero after finite time \(T_{2}\). Then, the symmetric second-order kernel is supported on the [\(0,\, T_{2}]\,\times \,[0,\, T_{2}\)] square domain.

Substituting Eq. (46) and the zero-order hold approximation of the input (38) into Eq. (45), \(N\) equations for the discrete second-order outputs can be obtained [41]:

where \(n=1,\ldots ,N\). The upper limit in the summations over \(k\) and \(m\) is given by [41],

Equation (47) can be written in the matrix form:

where \(\underline{y}_{2,j} \) is a vector of discrete second-order outputs and \(\underline{\alpha }_2 \) is a vector of single-scale second-order kernel coefficients.

Equivalent to the single-scale expansion in Eq. (46), the multiscale representation of the second-order kernel can be expressed as [41],

Similar to the first-order case, it is possible to construct an invertible matrix [\(T_{2}\)] that decomposes the vector of single-scale coefficients into a vector of multiscale coefficients. Then, Eq. (49) can be represented as [41],

Taking advantage of the symmetry of the second-order kernel, the number of unknown coefficients in Eq. (47) can be significantly reduced. The symmetry of the kernel means that the number of coefficients can be reduced from \(N_2^2 \) to \(\tilde{N}_2 =(N_2^2 +N_2 )/2\). Therefore, an \(\tilde{N}_2 \)-dimensional vector of second-order kernel coefficients can be defined as \(\hat{{\underline{\alpha }}}_2 \). This vector can be related to \(\underline{\alpha }_2 \) by a transformation matrix \([P_2]\) [41],
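A transformation matrix of the \([P_2]\) type can be built by listing the unique index tuples. The sketch below, with a hypothetical helper `symmetric_expansion_matrix`, handles a fully symmetric kernel of any order, so the same construction also covers the third-order case. It assumes that the full coefficient vector is the row-major (C-order) flattening of the coefficient array; the ordering used in [41] may differ.

```python
import itertools
import math
import numpy as np

def symmetric_expansion_matrix(n, order):
    """Matrix P with vec(H) = P @ h_unique for a fully symmetric tensor H of
    shape (n,)*order; h_unique is indexed by non-decreasing index tuples."""
    unique = list(itertools.combinations_with_replacement(range(n), order))
    P = np.zeros((n ** order, len(unique)))
    for col, idx in enumerate(unique):
        # every permutation of the index tuple shares the same unique coefficient
        for perm in set(itertools.permutations(idx)):
            P[np.ravel_multi_index(perm, (n,) * order), col] = 1.0
    return P

N2 = 5
P2 = symmetric_expansion_matrix(N2, 2)
print(P2.shape, (N2 ** 2 + N2) // 2)   # (25, 15) 15
P3 = symmetric_expansion_matrix(4, 3)
print(P3.shape, math.comb(4 + 2, 3))   # (64, 20) 20
```

With such a matrix, \([M_2]\underline{\alpha}_2=[M_2][P_2]\hat{\underline{\alpha}}_2\), so only \(\tilde{N}_2\) columns enter the least-squares problem; for the third order the count drops from \(N_3^3\) to \(N_3(N_3+1)(N_3+2)/6\).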

Then, the discrete second-order Volterra model can be expressed in the following form [41]:

3.4 The third-order kernel

The third-order Volterra series output can be represented by [15]

where \(h_3 (\tau _1 ,\tau _2 ,\tau _3 )\) is the symmetric third-order kernel. The single-scale representation of the third-order kernel can be written as [41]

Due to computational constraints, the discretization level \(j_{3 }\) is usually chosen to be much coarser than the input/output discretization. The third-order kernel is assumed to decay to zero after finite time \(T_{3}\). Then, the symmetric third-order kernel is supported on a [\(0,\, T_{3}]\,\times \,[0,\,T_{3}]\,\times \,[0,\,T_{3}\)] three-dimensional domain.

The zero-order hold approximation of the input in Eq. (38) and the single-scale representation of the kernel in Eq. (55) are substituted into Eq. (54). \(N\) equations for the discrete third-order outputs can be obtained [41]

where \(n=1,\ldots ,N\). The upper limits in the summations over \(k,\,m\), and \(f\) are given by [41]

Equation (56) can be written in the matrix form [41],

where \(\underline{y}_{3,j}\) is a vector of discrete third-order outputs, and \(\underline{\alpha }_3 \) is a vector of third-order kernel coefficients.

As usual, there is an equivalent multilevel representation of the third-order kernel: an invertible matrix [\(T_{3}\)] can be formed that decomposes the single-scale coefficients into the vector of multiscale coefficients. However, just as in the second-order case, it is not practical to form this matrix. Therefore, low-order approximations of the third-order kernel are obtained by choosing an appropriately coarse resolution level \(j_{3}\) in Eq. (56), and consequently the multiscale kernel coefficients do not appear explicitly in the discrete third-order model.

Then, similar to the second-order case, we get

where \(\hat{{\underline{\alpha }}}_3 \) is a vector of unique third-order kernel coefficients. The discrete third-order Volterra model can be written in the form:

Using the derived \({y}_{1,j} ,\,{y}_{2,j} \), and \({y}_{3,j} \), the coefficients of the first-, second-, and third-order kernels can be identified through Eqs. (44), (53), and (60), respectively, and the kernels themselves can then be reconstructed from the identified coefficients. Therefore, with the method proposed in this article, the first-, second-, and third-order Volterra kernel functions are identified separately. This is quite different from the method of Prazenica and Kurdila [41], in which the first-, second-, and third-order kernels must be identified simultaneously, which may degrade the accuracy of the estimated third-order kernel.

It should be noted that this identification problem is ill-posed, since the objective is to determine the structure of the system from its input and output signals [41]. Therefore, to obtain stable kernel estimates, a regularization technique must be used to solve the least-squares problem. Many regularization methods are discussed in the literature [53, 54]. In this article, truncated singular value decomposition (TSVD) is used to compute the least-squares solutions of Eqs. (44), (53), and (60).
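A minimal truncated-SVD least-squares solver is sketched below. The truncation rule (a relative threshold on the singular values, or a fixed rank) is an illustrative choice rather than the criterion used in this article, and the Hilbert matrix is only a stand-in for an ill-conditioned identification matrix.

```python
import numpy as np

def tsvd_solve(A, b, rel_tol=1e-8, rank=None):
    """Truncated-SVD least-squares solution of A x ~ b.

    Singular values below rel_tol * s_max are discarded unless an explicit
    rank is given."""
    U, s, Vt = np.linalg.svd(A, full_matrices=False)
    if rank is None:
        rank = int(np.sum(s > rel_tol * s[0]))
    coeffs = (U[:, :rank].T @ b) / s[:rank]        # project b onto kept singular vectors
    return Vt[:rank].T @ coeffs

# Ill-conditioned test problem: a 10 x 10 Hilbert matrix with slightly noisy data
n = 10
i = np.arange(n)
A = 1.0 / (i[:, None] + i[None, :] + 1.0)
x_true = np.ones(n)
rng = np.random.default_rng(1)
b = A @ x_true + 1e-6 * rng.standard_normal(n)

x_full = tsvd_solve(A, b, rank=n)          # no truncation: noise is amplified
x_tsvd = tsvd_solve(A, b, rel_tol=1e-6)    # truncated: stable estimate
print(np.linalg.norm(x_full - x_true), np.linalg.norm(x_tsvd - x_true))
```

Discarding the smallest singular values trades a small bias for a large reduction in noise amplification; in practice, the threshold would be tuned to the noise level of the separated Volterra outputs.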

4 Numerical simulations

In this section, two nonlinear dynamical systems are used to demonstrate the feasibility of Volterra kernel function identification based on the wavelet balance method.

Case 1: The system is a nonlinear oscillator considered in [41], having the following equation of motion,

where \(m\) is the mass, \(c\) is the damping coefficient, \(k_{1}\) is the linear stiffness coefficient, and \(k_2 \) is a quadratic spring stiffness. The system parameters are chosen as

Because the equation of motion is known, analytic expressions of the Volterra kernels can be derived in the frequency domain using harmonic probing [26]. The resulting first-, second-, and third-order kernels in the frequency domain are as follows:
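The following sketch evaluates harmonic-probing kernels numerically. It assumes that the equation of motion has the form \(m\ddot{x}+c\dot{x}+k_1x+k_2x^{2}=u(t)\), consistent with the parameter descriptions above, and uses hypothetical parameter values. For that form, probing gives \(H_1(\omega)=1/(k_1-m\omega^2+ic\omega)\), \(H_2(\omega_1,\omega_2)=-k_2H_1(\omega_1)H_1(\omega_2)H_1(\omega_1+\omega_2)\), and a symmetric \(H_3\) built from \(H_1\) and \(H_2\); these expressions are derived under that assumption and are not copied from Eqs. (62)–(64).

```python
import numpy as np

# Hypothetical parameters; the oscillator is assumed to be
#   m x'' + c x' + k1 x + k2 x**2 = u(t)
m, c, k1, k2 = 1.0, 0.5, 10.0, 1.0

def H1(w):
    """Linear FRF (first-order kernel in the frequency domain)."""
    return 1.0 / (k1 - m * w ** 2 + 1j * c * w)

def H2(w1, w2):
    """Second-order kernel obtained by harmonic probing."""
    return -k2 * H1(w1) * H1(w2) * H1(w1 + w2)

def H3(w1, w2, w3):
    """Symmetric third-order kernel obtained by harmonic probing."""
    return -(2.0 * k2 / 3.0) * H1(w1 + w2 + w3) * (
        H1(w1) * H2(w2, w3) + H1(w2) * H2(w1, w3) + H1(w3) * H2(w1, w2))

# At zero frequency the kernels reduce to the static expansion of
# k1 x + k2 x**2 = u:  x = u/k1 - k2 u**2/k1**3 + 2 k2**2 u**3/k1**5 + ...
print(H1(0.0), H2(0.0, 0.0), H3(0.0, 0.0, 0.0))
```

The zero-frequency limit provides a simple consistency check: the kernels must reproduce the power-series solution of the static equation \(k_1x+k_2x^2=u\).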

From the expression of the first-order kernel in the frequency domain, it can be seen that the linear FRF is exactly the FRF of the associated linear system, which is obtained by keeping the linear parameters unchanged and setting all nonlinear parameters to zero. The associated linear system of system (61) can be expressed as

Time-domain Volterra kernels can be derived by taking inverse Fourier transforms of the expressions in Eqs. (62)–(64). The first-order kernel equals the impulse response function of Eq. (65), whose expression is

\[ h_1(t) = \frac{1}{m\,\omega_{\text{d}}}\,e^{-\xi\omega_{\text{n}} t}\sin(\omega_{\text{d}} t), \qquad t\ge 0, \]

where \(\xi \) is the damping ratio, \(\omega _{\text{ n }} \) is the natural frequency, and \(\omega _{\text{ d }} \) is the damped natural frequency, defined as

\[ \omega_{\text{d}} = \omega_{\text{n}}\sqrt{1-\xi^{2}}. \]
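The impulse response can be checked numerically. The sketch below evaluates \(h_1(t)\) for an underdamped \(m\ddot{x}+c\dot{x}+k_1x=u(t)\) with hypothetical parameters (not the values used in this case study), taking \(\omega_{\text{n}}=\sqrt{k_1/m}\) and \(\xi=c/(2\sqrt{mk_1})\), and verifies that its integral equals the static gain \(1/k_1\) and that it satisfies the free equation of motion.

```python
import numpy as np

def impulse_response(t, m, c, k1):
    """h1(t) = exp(-xi*wn*t) * sin(wd*t) / (m*wd) for an underdamped
    m x'' + c x' + k1 x = u(t); zero for t < 0."""
    wn = np.sqrt(k1 / m)                    # natural frequency
    xi = c / (2.0 * np.sqrt(m * k1))        # damping ratio
    wd = wn * np.sqrt(1.0 - xi ** 2)        # damped natural frequency
    t = np.asarray(t, dtype=float)
    return np.where(t >= 0.0, np.exp(-xi * wn * t) * np.sin(wd * t) / (m * wd), 0.0)

m, c, k1 = 1.0, 0.5, 10.0                   # hypothetical parameters
dt = 1e-3
t = np.arange(0.0, 60.0, dt)
h1 = impulse_response(t, m, c, k1)
static_gain = h1.sum() * dt                  # approximates H1(0) = 1/k1
# Residual of m h'' + c h' + k1 h = 0 by central differences (t > 0)
res = (m * (h1[2:] - 2 * h1[1:-1] + h1[:-2]) / dt ** 2
       + c * (h1[2:] - h1[:-2]) / (2 * dt) + k1 * h1[1:-1])
print(static_gain, np.max(np.abs(res)))
```

The static-gain check ties the time-domain kernel back to the frequency-domain result \(H_1(0)=1/k_1\).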

In this case study, the parameters in Eq. (67) are set to

Similarly, the time domain forms of the second- and third-order kernels are obtained by taking multidimensional inverse Fourier transforms of Eqs. (63) and (64). This calculation is performed in Maple. The analytic expressions for these time domain kernels are extremely complicated and not given here.

Since the linear chirp input used by Prazenica and Kurdila [40] contains only one frequency at a time, it is insufficient for identifying the higher-order kernels of a nonlinear system. Therefore, to generate an input signal that contains multiple frequencies at all times, the input to the system is chosen as follows [55]:

The input signal is shown in Fig. 4.

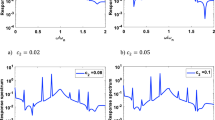

The response of system (61) to input (69) is simulated by numerical integration using the MATLAB function ode45. The amplitudes A of the multilevel inputs are chosen as 1, 0.7, and 0.5. Correspondingly, three sets of output responses are obtained and then used to identify the kernels. The data are sampled at 64 Hz (\(j= 6\)), and the simulation lasts 32 s, so each dataset contains 2048 samples.
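A rough Python analogue of this simulation is sketched below. Assumptions: a fixed-step fourth-order Runge–Kutta integrator replaces ode45, the multi-tone input and the oscillator parameters are hypothetical (the input of Eq. (69) is not reproduced), the equation of motion is assumed to be \(m\ddot{x}+c\dot{x}+k_1x+k_2x^{2}=u(t)\), and the order separation is applied directly to the samples rather than to wavelet coefficients. The amplitudes 1, 0.7, and 0.5, the 64 Hz sampling rate, and the 32 s duration follow the text.

```python
import numpy as np

M, C, K1, K2 = 1.0, 0.5, 10.0, 1.0        # hypothetical oscillator parameters

def u(t):
    """Hypothetical multi-tone input (not the paper's Eq. (69))."""
    return (np.sin(2 * np.pi * 0.3 * t) + np.sin(2 * np.pi * 0.7 * t)
            + np.sin(2 * np.pi * 1.9 * t))

def simulate(amplitude, k2=K2, fs=64.0, duration=32.0):
    """Fixed-step RK4 integration of M x'' + C x' + K1 x + k2 x**2 = amplitude*u(t),
    sampled at fs (a stand-in for MATLAB's ode45)."""
    dt = 1.0 / fs
    n = int(round(fs * duration))
    def rhs(t, s):
        x, v = s
        return np.array([v, (amplitude * u(t) - C * v - K1 * x - k2 * x * x) / M])
    s = np.zeros(2)                          # start at rest
    out = np.empty(n)
    for i in range(n):
        t = i * dt
        out[i] = s[0]
        r1 = rhs(t, s)
        r2 = rhs(t + dt / 2, s + dt / 2 * r1)
        r3 = rhs(t + dt / 2, s + dt / 2 * r2)
        r4 = rhs(t + dt, s + dt * r3)
        s = s + dt / 6 * (r1 + 2 * r2 + 2 * r3 + r4)
    return out

amps = np.array([1.0, 0.7, 0.5])            # multilevel amplitudes from the text
Y = np.vstack([simulate(a) for a in amps])   # shape (3, 2048)
V = amps[:, None] ** np.arange(1, 4)[None, :]
y_orders = np.linalg.solve(V, Y)             # rows: estimated y1, y2, y3
# With k2 = 0 the simulation gives the first-order output for comparison
y1_ref = simulate(1.0, k2=0.0)
rel_err = np.linalg.norm(y_orders[0] - y1_ref) / np.linalg.norm(y1_ref)
print(Y.shape, rel_err)
```

Because the first-order output is what remains when the quadratic term is removed, rerunning the simulation with \(k_2=0\) gives a convenient reference for checking the separated \(y_1\); the residual mismatch comes from the fourth- and higher-order terms neglected by the three-level separation.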

Using the wavelet balance method described in Sect. 3, the first-, second-, and third-order Volterra outputs of system (61) are derived with the db4 wavelet. These three Volterra outputs are shown in Fig. 5.

From the estimated Volterra outputs, the first three Volterra kernels are identified using the procedure introduced in Sect. 3. The identified and analytic first-order kernels, shown in Fig. 6, agree very well. The identified and analytic second- and third-order kernels are shown in Figs. 7 and 8, respectively; the identified kernels generally match the analytic kernels.

To validate the identification method, the output predicted by the identified first-order Volterra kernel \(h_1 (\tau )\) is compared with the first-order Volterra output \(\hbox {y}_{1}\) in Fig. 9, and the output predicted by the identified second-order Volterra kernel \(h_2 (\tau _1 ,\tau _2 )\) is compared with the second-order Volterra output \(\hbox {y}_{2}\) in Fig. 10. The predicted first- and second-order Volterra outputs closely match the simulated outputs, so the first two identified kernels accurately predict the first two Volterra outputs for the given input. The output predicted by the identified third-order Volterra kernel \(h_3 (\tau _1 ,\tau _2 ,\tau _3 )\) is compared with the simulated third-order Volterra output \(\hbox {y}_{3}\) in Fig. 11. The predicted third-order Volterra output generally matches the simulated one; therefore, the identified third-order kernel captures most of the third-order Volterra output.

Case 2: The system is a second-order discrete Volterra series, which is commonly regarded as a nonlinear filter [56]. Such nonlinear system representations have been used to study nonlinear channel equalization [57] and nonlinear distortion in electronic devices [58], and Koh and Powers [59] used one to study the nonlinear drift oscillations of moored vessels subject to random sea waves. One example of a second-order Volterra filter is described as follows:

The input to the system is,

The amplitudes A of the multilevel inputs are chosen as 1 and 0.5. Correspondingly, two sets of output responses are obtained and used to identify the kernels. The data are sampled at 64 Hz (i.e., \(2^j\) Hz with \(j = 6\)), and the simulation lasts 32 s, so each dataset contains 2048 samples.
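
Two amplitude levels suffice here because the Case 2 filter is exactly second order. Idealizing the separation as pointwise in time (the article performs it with the wavelet balance method) and writing \(y(n; A)\) for the response to the input scaled by \(A\), the two responses determine \(y_1\) and \(y_2\) in closed form; the sampling arithmetic is included as a check.

```latex
\begin{aligned}
y(n;A)   &= A\,y_1(n) + A^2\,y_2(n),\\
y(n;1)   &= y_1(n) + y_2(n),\\
y(n;0.5) &= 0.5\,y_1(n) + 0.25\,y_2(n),\\
\Rightarrow\quad y_1(n) &= 4\,y(n;0.5) - y(n;1),\\
y_2(n)   &= 2\,y(n;1) - 4\,y(n;0.5),\\
f_s &= 2^{j} = 2^{6} = 64\ \text{Hz},\qquad N = 64\ \text{Hz}\times 32\ \text{s} = 2048.
\end{aligned}
```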

Using the Volterra kernel function identification method described in the preceding sections, the first- and second-order Volterra kernel functions are derived. The identified and exact first-order kernels \(h(n)\) are listed in Table 1, and the identified and exact second-order kernels in Table 2. Tables 1 and 2 show that the identified first- and second-order kernels are very close to their true values.
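
Because a discrete second-order Volterra filter \(y(n) = \sum_i h_1(i)\,x(n-i) + \sum_i \sum_j h_2(i,j)\,x(n-i)\,x(n-j)\) is linear in its kernel values, its kernels can also be estimated by ordinary least squares on lagged inputs and their pairwise products. The Python sketch below shows this direct regression as a baseline against which results like those in Tables 1 and 2 could be compared. It is not the wavelet balance method of the article, and the memory length, random input, and kernel values are hypothetical values chosen for illustration.

```python
import numpy as np

def delay_matrix(x, memory):
    """Row n holds [x[n], x[n-1], ..., x[n-memory+1]], zero before n = 0."""
    n = len(x)
    X = np.zeros((n, memory))
    for m in range(memory):
        X[m:, m] = x[:n - m]
    return X

def identify_volterra2(x, y, memory):
    """Least-squares h1 and symmetric h2 of a discrete second-order Volterra filter.

    Model: y[n] = sum_i h1[i] x[n-i] + sum_i sum_j h2[i, j] x[n-i] x[n-j].
    """
    X = delay_matrix(x, memory)
    pairs = [(i, j) for i in range(memory) for j in range(i, memory)]
    quad = np.column_stack([X[:, i] * X[:, j] for i, j in pairs])
    theta, *_ = np.linalg.lstsq(np.hstack([X, quad]), y, rcond=None)
    h1 = theta[:memory]
    h2 = np.zeros((memory, memory))
    for (i, j), coef in zip(pairs, theta[memory:]):
        # An off-diagonal product x[n-i] x[n-j] appears twice in the double sum
        if i == j:
            h2[i, i] = coef
        else:
            h2[i, j] = h2[j, i] = coef / 2.0
    return h1, h2

# Hypothetical kernels and random input (2048 samples, as in Case 2)
rng = np.random.default_rng(2)
h1_true = np.array([0.5, -0.3, 0.1])
h2_true = np.array([[0.20, 0.05, 0.00],
                    [0.05, -0.10, 0.02],
                    [0.00, 0.02, 0.04]])
x = rng.standard_normal(2048)
Xd = delay_matrix(x, 3)
y = Xd @ h1_true + np.einsum("ni,ij,nj->n", Xd, h2_true, Xd)
h1_est, h2_est = identify_volterra2(x, y, memory=3)
print(np.round(h1_est, 6))
```

The number of quadratic regressors grows as M(M+1)/2 with the memory length M, which is one motivation for compressing kernels with a basis expansion such as BSWI.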

Overall, the results of Cases 1 and 2 verify the effectiveness of the Volterra kernel function identification method based on the wavelet balance method.

5 Conclusion

In this article, a wavelet basis expansion-based approach is proposed to identify Volterra kernel functions through multilevel excitations. From the system outputs under multilevel excitations, the approach first estimates the Volterra series outputs of different orders using the wavelet balance method. The Volterra kernel functions of each order are then estimated separately from their corresponding Volterra series outputs by expanding them with the fourth-order B-spline wavelet on the interval (BSWI). Two simulation studies verify the effectiveness of the proposed Volterra kernel identification method. This work is primarily a theoretical study of a novel Volterra kernel function identification approach. Future work will apply the proposed technique to identify the Volterra kernel functions of various engineering structures under different conditions. The approach can also be used in structural health monitoring.

References

Nayfeh, A.H., Balachandran, B.: Applied Nonlinear Dynamics. Wiley, New York (1995)

Perc, M.: Visualizing the attraction of strange attractors. Eur. J. Phys. 26, 579–587 (2005)

Perc, M., Marhl, M.: Synchronization of regular and chaotic oscillations: the role of local divergence and the slow passage effect: a case study on calcium oscillations. Int. J. Bifurcation Chaos Appl. Sci. Eng. 14, 2735–2751 (2004)

Perc, M., Marhl, M.: Chaos in temporarily destabilized regular systems with the slow passage effect. Chaos Solitons Fractals 27, 395–403 (2006)

Kantz, H., Schreiber, T.: Nonlinear Time Series Analysis. Cambridge University Press, Cambridge (2004)

Perc, M.: The dynamics of human gait. Eur. J. Phys. 26, 525–534 (2005)

Perc, M.: Nonlinear time series analysis of the human electrocardiogram. Eur. J. Phys. 26, 757–768 (2005)

Kodba, S., Perc, M., Marhl, M.: Detecting chaos from a time series. Eur. J. Phys. 26, 205–215 (2005)

Perc, M.: Introducing nonlinear time series analysis in undergraduate courses. Fizika A (Zagreb) 15, 91–112 (2006)

Nayfeh, A.H.: Perturbation Methods. Wiley, New York (2000)

Cai, J.P., Wu, X.F., Li, Y.P.: Comparison of multiple scales and KBM methods for strongly nonlinear oscillators with slowly varying parameters. Mech. Res. Commun. 31, 519–524 (2004)

Liao, S.J.: On the homotopy analysis method for nonlinear problems. Appl. Math. Comput. 147, 499–513 (2004)

Liao, S.J.: Comparison between the homotopy analysis method and homotopy perturbation method. Appl. Math. Comput. 169, 1186–1194 (2005)

Eigoli, A.K., Vossoughi, G.R.: Dynamic analysis of microrobots with Coulomb friction using harmonic balance method. Nonlinear Dyn. 67, 1357–1371 (2012)

Rugh, W.J.: Nonlinear System Theory: The Volterra–Wiener Approach. Johns Hopkins University Press, Baltimore (1980)

Franz, M.O., Schölkopf, B.: A unifying view of Wiener and Volterra theory and polynomial kernel regression. Neural Comput. 18, 3097–3118 (2006)

Jeffreys, H., Jeffreys, B.S.: Methods of Mathematical Physics, 3rd edn. Cambridge University Press, Cambridge (1988)

Silva, W.: Identification of nonlinear aeroelastic systems based on the Volterra theory: progress and opportunities. Nonlinear Dyn. 39, 25–62 (2005)

Korenberg, M.J., Hunter, I.W.: The identification of nonlinear biological systems: Volterra kernel approaches. Ann. Biomed. Eng. 24, A250–A268 (1996)

Raveh, D.E.: Computational fluid dynamics based aeroelastic analysis and structural design optimization—a researcher’s perspective. Comput. Methods Appl. Mech. Eng. 194, 3453–3471 (2005)

Safari, N., Roste, T., Fedorenko, P., et al.: An approximation of Volterra series using delay envelopes, applied to digital predistortion of RF power amplifiers with memory effects. IEEE Microw. Wirel. Compon. Lett. 18, 115–117 (2008)

Jing, X.J., Lang, Z.Q.: Frequency domain analysis of a dimensionless cubic nonlinear damping system subject to harmonic input. Nonlinear Dyn. 58, 469–485 (2009)

Khan, A.A., Vyas, N.S.: Application of Volterra and Wiener theories for nonlinear parameter estimation in a rotor-bearing system. Nonlinear Dyn. 24, 285–304 (2001)

Tawfiq, I., Vinh, T.: Nonlinear behaviour of structures using the Volterra series-signal processing and testing methods. Nonlinear Dyn. 37, 129–149 (2004)

Schetzen, M.: The Volterra and Wiener Theories of Nonlinear Systems. Wiley, New York (1980)

Bedrosian, E., Rice, S.O.: Output properties of Volterra systems (nonlinear systems with memory) driven by harmonic and Gaussian inputs. Proc. IEEE 59, 1688–1707 (1971)

Worden, K., Manson, G., Tomlinson, G.R.: Harmonic probing algorithm for the multi-input Volterra series. J. Sound Vib. 201, 67–84 (1997)

Pavlenko, V.D.: Estimation of the Volterra kernels of a nonlinear system using impulse response data. In: Signal/Image Processing and Pattern Recognition: Proceedings of the Eighth All-Ukrainian International Conference UkrOBRAZ, 28–31 August 2006

Marmarelis, V.Z.: Identification of nonlinear biological systems using Laguerre expansions of kernels. Ann. Biomed. Eng. 21, 573–589 (1993)

Moodi, H., Bustan, D.: On identification of nonlinear systems using Volterra kernels expansion on Laguerre and wavelet function. In: 2010 Chinese Control and Decision Conference, pp. 1141–1145 (2010)

Da Rosa, A., Campello, R.J.G.B., Amaral, W.C.: Choice of free parameters in expansions of discrete-time Volterra models using Kautz functions. Automatica 43, 1084–1091 (2007)

Beylkin, G., Coifman, R., Rokhlin, V.: Fast wavelet transforms and numerical algorithms I. Commun. Pure Appl. Math. 44, 141–183 (1991)

Nikolaou, M., Mantha, D.: Efficient nonlinear modeling using wavelets and related compression techniques. In: NSF Workshop on Nonlinear Model Predictive Control (1998)

Chou, K.C., Guthart, G.S.: Representation of Green’s function integral operators using wavelet transforms. J. Vib. Control 6, 19–48 (2000)

Wei, H.L., Billings, S.A., Balikhin, M.A.: Wavelet based non-parametric NARX models for nonlinear input–output system identification. Int. J. Syst. Sci. 37, 1089–1096 (2006)

Coca, D., Billings, S.A.: Continuous-time system identification for linear and nonlinear systems using wavelet decompositions. Int. J. Bifurcation Chaos 7, 87–96 (1997)

Coca, D., Billings, S.A.: Non-linear system identification using wavelet multiresolution models. Int. J. Control 74, 1718–1736 (2001)

Kurdila, A.J., Prazenica, R.J., Rediniotis, O., et al.: Multiresolution methods for reduced-order models for dynamical systems. J. Guid. Control Dyn. 24, 193–200 (2001)

Cohen, A., Daubechies, I., Feauveau, J.C.: Biorthogonal bases of compactly supported wavelets. Commun. Pure Appl. Math. 45, 485–560 (1992)

Prazenica, R.J., Kurdila, A.J.: Volterra kernel identification using triangular wavelets. J. Vib. Control 10, 597–622 (2004)

Prazenica, R.J., Kurdila, A.J.: Multiwavelet constructions and Volterra kernel identification. Nonlinear Dyn. 43, 277–310 (2006)

Xiang, J.W., Chen, X.F., He, Z.J., et al.: A new wavelet-based thin plate element using B-spline wavelet on the interval. Comput. Mech. 41, 243–255 (2008)

Xiang, J.W., Chen, X.F., Li, B., et al.: Identification of a crack in a beam based on the finite element method of a B-spline wavelet on the interval. J. Sound Vib. 296, 1046–1052 (2006)

Cohen, A.: Numerical Analysis of Wavelet Methods. Elsevier, Amsterdam (2003)

He, Z.J., Chen, X.F., Li, B.: Wavelet Finite Element Theory and Its Engineering Application. Science Press, Beijing (2006)

Li, X., Bo, H., Ling, X., et al.: A wavelet-balance approach for steady-state analysis of nonlinear circuits. IEEE Trans. Circuits Syst. I Fundam. Theory Appl. 49, 689–694 (2002)

Soveiko, N., Nakhla, M.: Wavelet harmonic balance. IEEE Microw. Wirel. Compon. Lett. 13, 232–234 (2003)

Soveiko, N., Nakhla, M.: Steady-state analysis of multitone nonlinear circuits in wavelet domain. IEEE Trans. Microw. Theory Tech. 52, 785–797 (2004)

Barmada, S., Musolino, A., Tucci, M.: Study of nonlinearly loaded microwave circuits by an innovative multiresolution time-frequency analysis. In: IET Seminar Digest (2007)

Zhou, D., Cai, W.: A fast wavelet collocation method for high-speed circuit simulation. IEEE Trans. Circuits Syst. I Fundam. Theory Appl. 46, 920–930 (1999)

Zhou, D., Cai, W., Zhang, W.: An adaptive wavelet method for nonlinear circuit simulation. IEEE Trans. Circuits Syst. I Fundam. Theory Appl. 46, 931–938 (1999)

Deng, Y., Shi, Y.B., Zhang, W.: An approach to locate parametric faults in nonlinear analog circuits. IEEE Trans. Instrum. Meas. 61, 358–367 (2012)

Hansen, P.C.: Rank-Deficient and Discrete Ill-Posed Problems. SIAM, Philadelphia (1998)

Hansen, P.C.: Rank-Deficient and Discrete Ill-Posed Problems: Numerical Aspects of Linear Inversion. SIAM, Philadelphia (1987)

Peng, Z.K., Lang, Z.Q., Wolters, C., et al.: Feasibility study of structural damage detection using NARMAX modelling and nonlinear output frequency response function based analysis. Mech. Syst. Signal Process. 25, 1045–1061 (2011)

Mathews, V.J., Sicuranza, G.L.: Volterra and general polynomial related filtering. In: IEEE Winter Workshop on Nonlinear Digital Signal Processing, pp. T_2.1–T_2.8 (1993)

Cowan, C., Adams, P.: Non-linear system modelling: concept and application. IEEE Int. Conf. Acoust. Speech Signal Process. 9, 444–447 (1984)

Reiss, W.: Nonlinear distortion analysis of pin diode attenuators using Volterra series representations. IEEE Trans. Circuits Syst. 31, 535–542 (1984)

Koh, T., Powers, E.J.: Second-order Volterra filtering and its application to nonlinear system identification. IEEE Trans. Acoust. Speech Signal Process. 33, 1445–1455 (1985)

Acknowledgments

The authors gratefully acknowledge the support of the NSFC for Distinguished Young Scholars (11125209) and the NSFC (11322215, 51121063).

Cite this article

Cheng, C.M., Peng, Z.K., Zhang, W.M. et al. Wavelet basis expansion-based Volterra kernel function identification through multilevel excitations. Nonlinear Dyn 76, 985–999 (2014). https://doi.org/10.1007/s11071-013-1182-3

DOI: https://doi.org/10.1007/s11071-013-1182-3