Abstract

This paper focuses on the problem of the adaptive neural control for a class of a perturbed pure-feedback nonlinear system. Based on radial basis function (RBF) neural networks’ universal approximation capability, an adaptive neural controller is developed via the backstepping technique. The proposed controller guarantees that all the signals in the closed-loop system are bounded and the tracking error eventually converges to a small neighborhood around the origin. The main advantage of this note lies in that a control strategy is presented for a class of pure-feedback nonlinear systems with external disturbances being bounded by functions of all state variables. A numerical example is provided to illustrate the effectiveness of the suggested approach.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

During the past decades, adaptive backstepping control has become one of the most popular design methods for nonlinear systems in triangular structure, and many significant results have been obtained; see, for instance [1–3] and the reference therein. These researches provide a systematic methodology of solving tracking or regulation control problems of nonlinear systems without satisfying the matching condition. However, it is assumed that an accurate model of the system is available and the unknown parameters appear linearly with respect to known nonlinear functions. This assumption is not sufficient for many practical situations, since it is a difficult work to precisely describe a nonlinear system by known nonlinear functions. Therefore, the investigation on the problem of controlling nonlinear systems with incomplete model knowledge is a meaningful issue.

In order to deal with highly uncertain nonlinear and complex systems, approximator-based adaptive control approaches have also been extensively studied in the past decades by using Lyapunov stability theory. In control design procedure, RBF neural networks (or fuzzy logic systems) are used to approximate uncertain nonlinear functions in dynamic systems because of their universal approximation capability, and then the backstepping technique is applied to construct an adaptive controller. Based on this idea, many interesting control schemes have been proposed for a large class of strict-feedback nonlinear system with uncertain nonlinear functions [5–24]. Using the universal approximation capability of fuzzy logic systems, Wang [4] first proposed a stable adaptive fuzzy control approach for a class of nonlinear systems with unknown functions. Then many adaptive fuzzy control schemes were obtained for uncertain nonlinear systems [7–17]. Alternatively, some adaptive control approaches were investigated for nonlinear systems based on RBF neural networks [5, 6, 18–24]. In the aforementioned papers, however, the research results are obtained under the condition that the considered systems are of affine nonlinear systems, in which the system inputs appear linearly in the state equation.

The nonaffine pure-feedback system stands for a more general class of triangular systems, which have no affine appearance of the variables to be utilized as virtual control inputs. In practice, many nonlinear systems are of nonaffine structure, such as mechanical systems [25], biochemical processes [3], and so on. Therefore, the control of nonaffine pure-feedback nonlinear systems is a meaningful issue and has received increasing attention in the control community in recent years [26–35]. In [26, 27], a class of much simpler pure-feedback systems where the last one or two equations were assumed to be affine were investigated. Using input-to-state stability analysis and the small gain theorem, an improved adaptive neural control approach was presented for a class of completely nonaffine pure-feedback systems [28]. Furthermore, many researchers further considered other types of pure-feedback nonlinear systems, such as pure-feedback systems with time-delay [32], with dead-zone [31], and discrete-time pure-feedback systems [34]. More recently, in [35], an novel observer-based adaptive fuzzy output-feedback control scheme is proposed for a class of MIMO pure-feedback nonlinear systems. Nevertheless, only a few results have been reported for the problem of adaptive control for pure-feedback nonlinear systems with external disturbance [31–33], in which the unknown external disturbance d i (x,t) maybe the function of all states, but its bounding function must be the function of \(\bar{x}_{i}=[x_{1}, x_{2}, \ldots, x_{i}]^{T}\), such as in [31, 32], or an unknown constant [33].

Motivated by the above observations, the problem of adaptive neural control for a class of pure-feedback nonlinear systems with external disturbance is investigated based on backstepping. It is shown that the proposed controller guarantees that all signals in the closed-loop systems remain bounded and the tracking error eventually converges to a small neighborhood around the origin. The main contributions of this paper lie in that (i) an adaptive neural control scheme is systematically derived to control a class of nonlinear pure-feedback systems with external disturbances bounded by functions of all system state variables which is more general than the existing ones; (ii) only one adaptive parameter is needed to be estimated online for n order nonlinear systems. As a result, computational burden is significantly alleviated, which might render this control design more suitable for practical application.

The remainder of this paper is organized as follows. The problem formulation and preliminaries are given in Sect. 2. A novel adaptive neural control scheme is presented in Sect. 3. The simulation examples are given in Sect. 4, followed by Sect. 5, which concludes the work.

2 Preliminaries and problem formulation

Consider a class of perturbed pure-feedback nonlinear system in the following form:

where x=[x 1,x 2,…,x n ]T∈R n, u∈R, and y∈R are state variable, system input, and system output, respectively, \(\bar{x}_{i}=[x_{1}, x_{2}, \ldots, x_{i}]^{T}\in R^{i}\); f i (⋅): R i+1→R with f i (0)=0 are unknown, but smooth nonaffine nonlinear functions; ψ i (⋅):R n→R with ψ i (0)=0 can be viewed as model uncertainties or unknown external disturbance functions.

The control objective in this paper is to design an adaptive neural tracking controller u for the system (1), such that the system output y converges to a small neighborhood of the reference signal y d , and all the signals in the closed-loop remain bounded.

For the control of pure-feedback system (1), define

with x n+1=u.

Assumption 1

The signs of \(g_{i}(\bar{x}_{i}, x_{i+1})\), i=1,2,…,n are known, and there exist constants b m and b M , such that for 1≤i≤n,

Remark 1

Assumption 1 implies that the unknown smooth functions \(g_{i}(\bar{x}_{i+1})\), i=1,2,…,n, are strictly either positive or negative. Without loss of generality, it is assumed that \(0<b_{m}\leq g_{i}(\bar{x}_{i+1})\leq b_{M}\), \(\forall \bar{x}_{i+1}\in R^{i+1}\). Moreover, since the constants b m and b M are not used for controller design, their true values are not necessary to be known.

Assumption 2

For functions ψ i (⋅)s in (1), there exist strict increasing smooth functions ϕ i (⋅):R +→R + with ϕ i (0)=0 such that for i=1,2,…,n,

Remark 2

The increasing property of ϕ i (⋅) means that if a k ≥0, for k=1,2,…,n, then \(\phi_{i}(\sum_{k=1}^{n}a_{k})\leq \sum_{k=1}^{n}\phi_{i}(na_{k})\). Note that ϕ i (s) is a smooth function with ϕ i (0)=0, so there exists a smooth function q i (s) such that ϕ i (s)=sq i (s), which results in

Assumption 3

The desired trajectory y d (t) and its time derivatives up to the nth order \(y_{d}^{(n)}(t)\) are continuous and bounded. It is further assumed that there exists a positive constant d ∗ such that |y d (t)|≤d ∗.

Throughout this paper, the following RBF neural networks will be used to approximate any continuous function f(Z):R n→R,

where Z∈Ω Z ⊂R q is the input vector with q being the neural networks input dimension, weight vector W=[w 1,w 2,…,w l ]T∈R l, l>1 is the neural networks node number, and S(Z)=[s 1(Z),s 2(Z),…,s l (Z)]T means the basis function vector with s i (Z) being chosen as the commonly used Gaussian function of the form:

where μ i =[μ i1,μ i2,…,μ iq ]T is the center of the receptive field and η i is the width of the Gaussian function. In [36], it has been indicated that with sufficiently large node number l, the RBF neural networks (5) can approximate any continuous function f(Z) over a compact set Ω Z ⊂R q to arbitrary any accuracy ε>0 as

where W ∗ is the ideal constant weight vector and defined as

where δ(Z) denotes the approximation error and satisfies |δ(Z)|≤ε.

Lemma 1

[37]

Consider the Gaussian RBF networks (5) and (6). Let \(\rho:=\frac{1}{2}\min_{i\neq j}\|\mu_{i}-\mu_{j}\|\), then an upper bound of ∥S(Z)∥ is taken as

It has been shown in [28] that the constant s in Lemma 3 is a limited value and is independent of the variable Z and the dimension of neural weights l.

Lemma 2

[38]

For any η∈R and ϵ>0, the following inequality holds:

In Sect. 3, an adaptive neural control via backstepping technique is proposed for perturbed pure-feedback nonlinear systems (1). The backstepping design with n steps is developed based on the following coordinate transformation:

where α 0=y d , \(\bar{y}_{d}^{(i)}\) denotes the vector of y d and up to its ith order time derivative and α i is the virtual control law. \(\hat{\theta}\) is the estimation of unknown constant θ which is specified as

with b m and b M being defined in Assumption 1, and \(W^{*}_{i}\) will be given later.

The adaptive neural controller and adaptive law will be constructed in the following forms:

where k i , a i , k 0, and λ are positive design parameters, S i (Z i ) is the basis function vector with \(Z_{i}=[\bar{x}_{i}^{T}, \hat{\theta}, \bar{y}_{d}^{(i)T}]^{T}\in \varOmega_{Z_{i}} \subset R^{2i+2}\) (i=2,…,n). Note that, when i=n, α n is the actual control input u(t).

Remark 3

It is easy to prove from (13) that if initial condition \(\hat{\theta}(0)\geq 0\), then \(\hat{\theta}(t)\geq 0\) for all t≥0. In fact, it is always reasonable to choose \(\hat{\theta}(0)\geq 0\) in a practical situation, as \(\hat{\theta}\) is an estimation of θ. This property will be used in each design step.

Lemma 3

For the coordinate transformations z i =x i −α i−1, i=1,2,…,n, the following result holds:

with \(\varphi_{i}(\hat{\theta})=(k_{i}+1)+\frac{1}{2a_{i}^{2}}s^{2}\hat{\theta}\), for i=1,2,…,n−1, and φ n =1.

Proof

From α 0=y d , (10), (12), and Lemma 1, we have

□

3 Adaptive neural tracking control

In the following, for simplicity, the time variable t will be omitted from the corresponding functions and let S i (Z i )=S i .

Step 1. Let us consider the first differential equation of system (1). Noting z 1=x 1−y d , its derivative is

To design a stabilization control law for (15), consider a Lyapunov function candidate as

where \(\tilde{\theta}=\theta-\hat{\theta}\) is the parameter error. Then the time derivative of V 1 along (15) is given by

By using Assumption 2, (4), (14), and the completion of squares, the following result can be obtained easily:

where \(\bar{\phi}^{2}_{1}(z_{l},\hat{\theta})=\frac{1}{2}{(n+1)^{2}} \varphi^{2}_{l}(\hat{\theta}) q^{2}_{1}({(n+1)}|z_{l}|\*\varphi_{l}(\hat{\theta}))\). Further, applying Lemma 2 to the last term on the right-hand side in (18) gives

Substituting (18) into (17) and using (19) yields

Define a new function \(w_{1}=-\dot{y}_{d} +\phi_{1}((n+1)d^{*})\*\tanh(\frac{z_{1}\phi_{1}((n+1)d^{*})}{\epsilon_{1}}) +\frac{1}{2}(n+2)z_{1}+k_{1}z_{1}+ z_{1}\sum_{k=1}^{n-1}\sum_{j=1}^{k}\bar{\phi}^{2}_{j}(z_{1},\hat{\theta})\). Then (20) can be rewritten as

Considering that \(\frac{\partial w_{1}}{\partial x_{2}}=0\), the following inequality based on Assumption 1 will be obtained:

According to Lemma 1 [6], for every value of x 1 and w 1, there exists a smooth ideal control input \(x_{2}=\bar{\alpha}_{1}(x_{1}, w_{1})\) such that

Applying mean value theorem [39], there exists μ 1 (0<μ 1<1) such that

where \(g_{\mu_{1}}:=g_{1}(x_{1}, x_{\mu_{1}})\), \(x_{\mu_{1}}=\mu_{1}x_{2}+(1-\mu_{1})\bar{\alpha}_{1}\). Obviously, Assumption 1 on g 1(x 1,x 2) is still valid for \(g_{\mu_{1}}\).

Next, substituting (24) into (21) and using the fact of (23) produces

with z 2=x 2−α 1 and α 1 being virtual control signal which will be defined later.

Since \(\bar{\alpha}_{1}\) contains unknown function w 1, an RBF neural network \(W_{1}^{T}S_{1}(Z_{1})\) is used to model \(\bar{\alpha}_{1} \) such that

where δ 1(Z 1) refers to the approximation error and ε 1 is a given constant.

Furthermore, the following inequality is true:

where θ has been defined in (11).

By constructing virtual control law α 1 in (12) with i=1, and using the fact of \(\hat{\theta}\geq 0\) and Assumption 1, the following result can be obtained:

Subsequently, by combining (25) together with (27) and (28), we have

where \(\rho_{1}=\delta\epsilon_{1}+\frac{1}{2}a_{1}^{2} +\frac{1}{2}b_{M}^{2}\varepsilon_{1}^{2}\).

Step 2. The derivative of z 2=x 2−α 1 is

where

Choose the following Lyapunov function:

The time derivative of V 2 is

Following the same line as that used in (18) results in

where \(\bar{\phi}^{2}_{j}(z_{l},\hat{\theta})=\frac{1}{2}{(n+1)^{2}}\varphi^{2}_{l}(\hat{\theta}) q^{2}_{j}({(n+1)}|z_{l}|\*\varphi_{l}(\hat{\theta}))\), j=1,2.

Let \(U_{2}=|\frac{\partial \alpha_{1}}{\partial x_{1}}|\phi_{1}((n+1)d^{*})+\phi_{2}((n+1)d^{*})\). Then, applying Lemma 2, we obtain

In addition, by applying the definition of \(\dot{\hat{\theta}}\) in (13), one has

Furthermore, by substituting (29), (34), and (35) into (33) and using (36)–(37), we can rewrite (33) as

where

Noting the fact that \(\frac{\partial w_{2}}{\partial x_{3}}=0\), the following inequality holds:

For every value of \(\bar{x}_{2}\) and w 2, there exists a smooth ideal virtual control input \(x_{3}=\bar{\alpha}_{2}(\bar{x}_{2}, w_{2})\) such that

Using mean value theorem [39], there exists μ 2 (0<μ 2<1) such that

where \(g_{\mu_{2}}:=g_{2}(\bar{x}_{2}, x_{\mu_{2}})\), \(x_{\mu_{2}}=\mu_{2}x_{3}+(1-\mu_{2})\bar{\alpha}_{2}\). Apparently, Assumption 1 is still valid for \(g_{\mu_{2}}\). Combining (38), (40), and (41), we have

By employing an RBF neural network \(W^{*T}_{2}S_{2}(Z_{2})\) to approximate \(\bar{\alpha}_{2}\), for any given constant ε 2>0, \(\bar{\alpha}_{2}\) can be expressed as

where the approximate error δ 2(Z 2) satisfies |δ 2(Z 2)|≤ε 2. Repeating the method utilized in (27) gives

Then, by choosing virtual control signal α 2 in (12) with i=2 and following the similar method to (28), we have

By adding and subtracting virtual control signal α 2 in (42) and using (44)–(45), (42) can be rewritten as

where z 2=x 3−α 2, \(\rho_{j}=\delta\epsilon_{j}+\frac{1}{2}a_{j}^{2} +\frac{1}{2}b_{M}^{2}\varepsilon_{j}^{2}\), j=1,2.

Remark 4

The adaptive law \(\dot{\hat{\theta}}\) in (13) is a function of all the error variables. So, unlike the conventional approximation-based adaptive control schemes, the term \(\frac{\partial \alpha_{1}}{\partial\hat{\theta}}\dot{\hat{\theta}}\) in (31) cannot be approximated directly by the RBF neural networks \(W^{*T}_{2}S_{2}(Z_{2})\). To solve this problem, in (37), \(\frac{\partial \alpha_{1}}{\partial\hat{\theta}}\dot{\hat{\theta}}\) is decomposed into two parts. The first term on the right-hand side of (37) can be contained in \(\bar{f}_{2}(Z_{2})\) modeled by \(W^{*T}_{2}S_{2}(Z_{2})\), and the last one in (37), which is the function of the latter error variables, namely z i , i=3,…,n, will be dealt with in the later design steps. This design idea will be repeated at the following steps.

Step i (3≤i≤n−1). For z i =x i+1−α i , the time derivative of z i is given by

where

Define the following Lyapunov function candidate:

Then the derivative of V i in (49) along with (47) can be expressed as

where the term \(\dot{V}_{i-1}\) in (50) can be obtained in the below form by following the procedures outlined in Step 2:

Following the procedures outlined in Step 2, one can get the following two inequalities:

where \(\bar{\phi}^{2}_{j}(z_{l},\hat{\theta})=\frac{1}{2}{(n+1)^{2}}\varphi^{2}_{l}(\hat{\theta}) q^{2}_{j}({(n+1)}|z_{l}|\*\varphi_{l}(\hat{\theta}))\), j=1,2,…,i. Furthermore, similar to (36), the following result is true:

where \(U_{i}=\sum_{j=1}^{i-1}|\frac{\partial \alpha_{i-1}}{\partial x_{j}}|\phi_{j}((n+1)d^{*})+\phi_{i}((n+1)d^{*})\).

For the term \(\frac{\partial \alpha_{i-1}}{\partial \hat{\theta}}\dot{\hat{\theta}}\), by using (13), we have

Subsequently, combining (50) with (51)–(55) produces

where

Considering the fact that \(\frac{\partial w_{i}}{\partial x_{i+1}}=0\), one has

According to Lemma 1 [6], by viewing x i+1 as a virtual control input, for every value of \(\bar{x}_{i}\) and w i , there exists a smooth ideal control input \(x_{i+1}=\bar{\alpha}_{i}(\bar{x}_{i}, w_{i})\) such that

Applying mean value theorem [39], there exists μ i (0<μ i <1) such that

where \(g_{\mu_{i}}:=g_{i}(\bar{x}_{i}, x_{\mu_{i}})\), \(x_{\mu_{i}}=\mu_{i}x_{i+1}+(1-\mu_{i})\bar{\alpha}_{i}\). Note that Assumption 1 is still valid for \(g_{\mu_{2}}\).

Substituting (59) into (56) and using (58) results in

Next, using an RBF neural networks \(W_{i}^{*T}S_{i}(Z_{i})\) to approximate \(\bar{\alpha}_{i}\), then constructing virtual control signal α i in (12) and following the same line as the procedures used from (43)–(46), one has

where z i+1=x i+1−α i , \(\rho_{j}=\delta\epsilon_{j}+\frac{1}{2}a_{j}^{2} +\frac{1}{2}b_{M}^{2}\varepsilon_{j}^{2}\), j=1,2,…,i.

Step n. This is the final step; the actual control input u will be constructed. For z n =x n −α n−1, we have

where \(\dot{\alpha}_{n-1}\) is given in (48) with i=n Take the Lyapunov function as

With the straightforward derivation similar to those employed in Step i, the derivative of V n satisfies the following inequality:

where w n is defined as

From (65), Assumption 1 and Lemma 1 [6], for every value \(\bar{x}_{n}\) and w n , there exists a smooth ideal control input \(u=\bar{\alpha}_{n}(\bar{x}_{n}, w_{n})\) such that

Using mean value theorem [39], there exists μ n (0<μ n <1) such that

with \(g_{\mu_{n}}:=g_{n}(\bar{x}_{n}, x_{\mu_{n}})\), \(x_{\mu_{n}}=\mu_{n}u+(1-\mu_{n})\bar{\alpha}_{n}\). Assumption 1 is still valid for \(g_{\mu_{n}}\).

By combining (64) together with (66) and (67), one has

Similarly, for any given positive constant ε n , an RBF neural network \(W_{n}^{*T}S_{n}(Z_{n})\) is utilized to approximate the unknown function \(\bar{\alpha}_{n}\). Following the same line as used in (27), we have

Now, construct the actual control signal u in (12) with i=n, then the following inequality holds:

Further, substituting (69) and (70) into (68) and taking (13) into account result in

where the inequality \(\frac{k_{0}b_{m}}{\lambda}\tilde{\theta}\hat{\theta}\leq-\frac{b_{m}}{2\lambda}k_{0}\tilde{\theta}^{2}+ \frac{b_{m}}{2\lambda}k_{0}\theta^{2}\) has been used in (71) and \(\rho_{j}=\delta\epsilon_{j}+\frac{1}{2}a_{j}^{2} +\frac{1}{2}b_{M}^{2}\varepsilon_{j}^{2}\), j=1,2,…,n−1, \(\rho_{n}=\frac{1}{2}a_{n}^{2} +\frac{1}{2}b_{M}^{2}\varepsilon_{n}^{2}+\frac{b_{m}}{2\lambda}k_{0}\theta^{2}\).

To date, the adaptive neural control design has been completed based on backstepping technique. The main result of this note will be summarized by the following theorem.

Theorem 1

Consider the pure-feedback nonlinear system (1), the controller (12), and the adaptive law (13) under Assumptions 1–3. Assume there exist sufficiently large compacts \(\varOmega_{Z_{i}}\), i=1,2,…,n such that \(Z_{i}\in\varOmega_{Z_{i}}\) for all t≥0. Then, for bounded initial conditions with \(\hat{\theta}(0)\geq0\), all signals in the closed-loop system remain bounded and the following inequality holds:

with a 0=min{2(1+b m )k j ,k 0,j=1,2,…,n} and \(b_{0}=\sum_{j=1}^{n}\rho_{j}\).

Proof

For the stability analysis of the closed-loop system, choose the Lyapunov function as V=V n . From (71), it follows

where a 0=min{2(1+b m )k j ,k 0,j=1,2,…,n} and \(b_{0}=\sum_{j=1}^{n}\rho_{j}\).

Then the following result is true:

which implies that all the signals in the closed-loop system are bounded.

Especially, from definition of V, one has

As a result, (72) can be obtained immediately. The proof is thus completed. □

Remark 5

In this research, the external disturbance ψ i (x,t) in (1) and its bounding function are assumed to be the function of all states. However, in many practical situations, the disturbance may be only the function of time, i.e., ψ i (x,t)=d i (t). In this case, a common restriction to external disturbance d i (t) is that there exists a constant d i such that |d i (t)|≤d i . Then, similar to the method proposed in [33], with a minor change of the virtual control signal (12), the similar result can be obtained by repeating the aforementioned procedures.

4 Simulation example

Example 1

To demonstrate the proposed control scheme, consider the following second-order pure-feedback nonlinear system:

where x 1 and x 2 are the state variables, y and u denote the system output, and the actual control input, respectively. The control objective is to design an adaptive neural controller such that all the signals in the closed-loop system remain bounded and the system output y follows the given reference signal y d =0.5(sin(t)+sin(0.5t)). According to Theorem 1, the virtual control law, the actual control law, and the adaptive laws are designed as

where z 1=x 1−y d ,z 2=x 2−α 1 and \(Z_{i}=[\bar{x}_{i}^{T}, \hat{\theta},\allowbreak \bar{y}_{d}^{(i)T}]^{T}\) (i=1,2), and the design parameters are taken as follows: k 1=k 2=5, a 1=a 2=2, k 0=0.3, and λ=1. The simulation are run with the initial conditions [x 1(0),x 2(0)]T=[0.2,0.1]T, and \(\hat{\theta}(0)=0\).

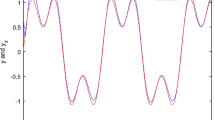

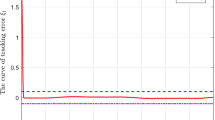

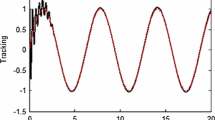

The simulation results are shown in Figs. 1–4. Figure 1 shows the system output y and the reference signal y d . From Fig. 1, we can see that the good tracking performance has been achieved. Figure 2 shows that the state variable x 2 is bounded. Figure 3 displays the control signal u. Figure 4 shows that the adaptive parameters \(\hat{\theta}\) is bounded.

5 Conclusion

In this paper, an adaptive neural control scheme has been proposed for a class of nonaffine pure-feedback nonlinear system with external disturbance. The developed adaptive neural tracking controller guarantees that all the signals involved are bounded, while the tracking error eventually converges to a small neighborhood of the origin. Moreover, the suggested controller contains only one adaptive parameter needed to be updated online. This makes our design scheme may be easily implemented in practical applications. Simulation results further illustrate the effectiveness of the proposed scheme.

References

Kanellakopoulos, I., Kokotovic, P.V., Morse, S.: Systematic design of adaptive controllers for feedback linearizable systems. IEEE Trans. Autom. Control 36(11), 1241–1253 (1991)

Annaswamy, A.M., Baillieul, J.: Adaptive control of nonlinear systems with a triangular structure. IEEE Trans. Autom. Control 39(7), 1411–1428 (1994)

Krstic, M., Kanellakopoulos, I., Kokotovic, P.V.: Nonlinear and Adaptive Control Design. Wiley, New York (1995)

Wang, L.X.: Stable adaptive fuzzy control of nonlinear systems. IEEE Trans. Fuzzy Syst. 1(2), 146–155 (1993)

Ge, S.S., Huang, C.C., Lee, T., Zhang, T.: Stable Adaptive Neural Network Control. Kluwer Academic, Norwell (2002)

Ge, S.S., Wang, C.: Direct adaptive NN control for a class of nonlinear systems. IEEE Trans Neutral Netw. 13(1), 214–221 (2002)

Chen, B., Liu, X.P., Liu, K.F., Lin, C.: Direct adaptive fuzzy control of nonlinear strict-feedback systems. Automatica 45(6), 1530–1535 (2009)

Chen, B., Liu, X.P., Liu, K.F., Lin, C.: Fuzzy-approximation-based adaptive control of strict-feedback nonlinear systems with time delays. IEEE Trans. Fuzzy Syst. 18(5), 883–892 (2010)

Tong, S.C., Li, Y.M.: Observer-based fuzzy adaptive control for strict-feedback nonlinear systems. Fuzzy Sets Syst. 160(12), 1749–1764 (2009)

Tong, S.C., He, X.L., Zhang, H.G.: A combined backstepping and small-gain approach to robust adaptive fuzzy output feedback control. IEEE Trans. Fuzzy Syst. 17(5), 1059–1069 (2009)

Wang, T., Tong, S.C., Li, Y.M.: Robust adaptive fuzzy control for nonlinear system with dynamic uncertainties based on backstepping. Int. J. Innov. Comput., Inf. Control 5(9), 2675–2688 (2009)

Tong, S.C., Li, Y.M., Feng, G., Li, T.S.: Observer-based adaptive fuzzy backstepping dynamic surface control for a class of MIMO nonlinear systems. IEEE Trans. Syst. Man Cybern., Part B, Cybern. 41(4), 1121–1135 (2011)

Tong, S.C., Liu, C.L., Li, Y.M.: Adaptive fuzzy decentralized control for large-scale nonlinear systems with time-varying delays and unknown high-frequency gain sign. IEEE Trans. Syst. Man Cybern., Part B, Cybern. 41(2), 474–485 (2011)

Li, Y.M., Ren, C.E., Tong, S.C.: Adaptive fuzzy backstepping output feedback control for a class of MIMO time-delay nonlinear systems based on high-gain observer. Nonlinear Dyn. 67(2), 1175–1191 (2012)

Li, T.S., Tong, S.C., Feng, G.: A novel robust adaptive fuzzy tracking control for a class of MIMO systems. IEEE Trans. Fuzzy Syst. 18(1), 150–160 (2010)

Li, T.S., Wang, D., Chen, N.X.: Adaptive fuzzy control of uncertain MIMO nonlinear systems in block-triangular forms. Nonlinear Dyn. 63(1), 105–123 (2011)

Li, T.S., Li, R.H., Li, J.F.: Decentralized adaptive neural control of nonlinear systems with unknown time delays. Nonlinear Dyn. 67(3), 2017–2026 (2012)

Li, T.S., Wang, D., Feng, G., Tong, S.C.: A DSC approach to robust adaptive NN tracking control for strict-feedback nonlinear systems. IEEE Trans. Syst. Man Cybern., Part B, Cybern. 40(3), 915–927 (2010)

Wang, M., Zhang, S.Y., Chen, B., Luo, F.: Direct adaptive neural control for stabilization of nonlinear time-delay systems. Sci. China Inf. 53(4), 800–812 (2010)

Hua, C.C., Guan, X.P.: Output feedback stabilization for time-delay nonlinear interconnected systems using neural network. IEEE Trans. Neural Netw. 19(4), 673–688 (2008)

Chen, M., Ge, S.S., How, B.: Robust adaptive neural network control for a class of uncertain MIMO nonlinear systems with input nonlinearities. IEEE Trans. Neural Netw. 21(5), 796–812 (2010)

Chen, M.: Robust adaptive tracking control of the underwater robot with input nonlinearity using neural networks. Int. J. Comput. Intell. Syst. 3(5), 646–655 (2010)

Chen, W.S., Li, J.M.: Decentralized output-feedback neural control for systems with unknown interconnections. IEEE Trans. Syst. Man Cybern., Part B, Cybern. 38(1), 258–266 (2008)

Zhang, H.G., Wang, Z.S., Liu, D.R.: Global asymptotic stability of recurrent neural networks with multiple time-varying delays. IEEE Trans. Neural Netw. 19(5), 855–873 (2008)

Ferrara, A., Giacomini, L.: Control of a class of mechanical systems with uncertainties via a constructive adaptive second order VSC approach. Trans. ASME, J. Dyn. Syst. Meas. Control 122(1), 33–39 (2000)

Ge, S.S., Wang, C.: Adaptive NN control of uncertain nonlinear pure-feedback systems. Automatica 38(4), 671–682 (2002)

Wang, D., Huang, J.: Adaptive neural network control for a class of uncertain nonlinear systems in pure-feedback form. Automatica 38(8), 1365–1372 (2002)

Wang, C., Hill, D.J., Ge, S.S., Chen, G.: An ISS-modular approach for adaptive neural control of pure-feedback systems. Automatica 42(5), 723–731 (2006)

Liu, Y.J., Wang, Z.F.: Adaptive fuzzy controller design of nonlinear systems with unknown gain sign. Nonlinear Dyn. 58(4), 687–695 (2009)

Liu, Y.J., Tong, S.C., Li, Y.M.: Adaptive neural network tracking control for a class of non-linear systems. Int. J. Inf. Syst. Sci. 41(2), 143–158 (2010)

Zhang, T.P., Ge, S.S.: Adaptive dynamic surface control of nonlinear systems with unknown dead zone in pure feedback form. Automatica 44(7), 1895–1903 (2008)

Wang, M., Liu, X., Shi, P.: Adaptive neural control of pure-feedback nonlinear time-delay systems via dynamic surface technique. IEEE Trans. Syst. Man Cybern., Part B, Cybern. 41(6), 1681–1692 (2011)

Du, H.B., Shao, H.H., Yao, P.J.: Adaptive neural network control for a class of low-triangular-structured nonlinear systems. IEEE Trans. Neural Netw. 17(2), 509–514 (2006)

Chen, W.S.: Adaptive NN control for discrete-time purefeedback systems with unknown control direction under amplitude and rate actuator constraints. ISA Trans. 48(3), 304–311 (2009)

Tong, S.C., Li, Y.M., Shi, P.: Observer-based adaptive fuzzy backstepping output feedback control of uncertain MIMO pure-feedback nonlinear systems. IEEE Trans. Fuzzy Syst. 20(4), 771–785 (2012)

Sanner, R.M., Slotine, J.E.: Gaussian networks for direct adaptive control. IEEE Trans. Neural Netw. 3(6), 837–863 (1992)

Kurdila, A.J., Narcowich, F.J., Ward, J.D.: Persistency of excitation in identification using radial basis function approximants. SIAM J. Control Optim. 33(2), 625–642 (1995)

Ploycarpou, M.M., Ioannou, P.A.: A robust adaptive nonlinear control design. Automatica 32(3), 423–427 (1996)

Apostol, T.M.: Mathematical Analysis. Addison-Wesley, Reading (1963)

Acknowledgements

This work is partially supported by the Natural Science Foundation of China (61074008, 61174033, and 11201037).

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Wang, H., Chen, B. & Lin, C. Adaptive neural tracking control for a class of perturbed pure-feedback nonlinear systems. Nonlinear Dyn 72, 207–220 (2013). https://doi.org/10.1007/s11071-012-0705-7

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11071-012-0705-7