Abstract

Predicting the temporal evolution of landslides is typically supported by numerical modelling. Dynamic sensitivity analysis aims at assessing the influence of the landslide properties on the time-dependent predictions (e.g. time series of landslide displacements). Yet, two major difficulties arise: (1) global sensitivity analysis requires running the landslide model a high number of times (>1,000), which may become impracticable when the landslide model has a high computation time cost (several hours or more); (2) landslide model outputs are not scalar, but functions of time, that is, they are n-dimensional vectors with n usually ranging from 100 to 1,000. In this article, I explore the use of a basis set expansion, such as principal component analysis, to reduce the output dimensionality to a few components, each of them being interpreted as a dominant mode of variation in the overall structure of the temporal evolution. The computationally intensive calculation of the Sobol’ indices for each of these components is then achieved through meta-modelling, that is, by replacing the landslide model by a “costless-to-evaluate” approximation (e.g. a projection pursuit regression model). The methodology combining “basis set expansion–meta-model–Sobol’ indices” is then applied to the Swiss La Frasse landslide to investigate the dynamic sensitivity of the surface horizontal displacements to the slip surface properties during pore pressure changes. I show how to extract information on the sensitivity of each main mode of temporal behaviour using a limited number (a few tens) of long-running simulations.



1 Introduction

Landslides are by nature dynamic processes that, in many cases, show acceleration phases. One of the major causal factors is related to time-dependent groundwater pressure changes. Large Swiss landslides (the La Frasse case, Laloui et al. 2004 and Tacher et al. 2005; the Steinernase case, Laloui et al. 2009) exemplify such complex hydro-mechanical behaviours. Predicting the temporal evolution of landslides is typically supported by numerical modelling; for instance, the landslide model output can take the form of time-varying surface displacements given pore pressure temporal changes.

One of the greatest challenges when building such predictive models is the estimation of parameter values and of the associated uncertainty (Bell and Glade 2004; Narasimhan and Faber 2011). Investigating the impact of parameter uncertainty on the landslide model outputs and ranking the sources of uncertainty in terms of importance can be very useful for risk management purposes, especially to guide future laboratory or in situ characterizations and studies (Saltelli 2002).

Global sensitivity analysis (denoted GSA) can provide such valuable information. GSA is based on the functional analysis of variance decomposition of the model output and provides the Sobol’ indices (Sobol’ 1993; Sobol’ and Kucherenko 2005). See “Appendix 1” for more details. In particular, the first-order Sobol’ indices (also named “main effects”) give a measure of importance for each input parameter, which is useful to rank the sources of uncertainty within a “factors’ prioritization setting” as described by Saltelli et al. (2008). Such an analysis has the advantages of exploring the sensitivity to input parameters over their whole range of variation (i.e. in a global manner, contrary to local sensitivity analysis), of fully accounting for possible interactions between the input parameters, and of being applicable without introducing a priori assumptions on the mathematical formulation of the landslide model, for example, linear or second-order polynomial (Saltelli et al. 2008). Despite these advantages, very few studies (e.g. Hamm et al. 2006 and Rohmer and Foerster 2011) have implemented GSA for landslide models. To the author’s knowledge, the most widespread method remains the “one-factor-at-a-time” (OAT) approach (also named local sensitivity analysis). This consists in analysing variations from the results of a base model by varying the input parameters in turn or by considering different scenarios. Applications of OAT to investigate the sensitivity to input parameters related to soil/rock properties (e.g. density, cohesion and angle of internal friction) or to slide characteristics (e.g. sizes and failure mechanisms) are illustrated, for instance, by Fine et al. (2005) for large submarine slope failure models, by Gorsevski et al. (2006) for landslide susceptibility models, and by D’Ambrosio et al. (2007) for debris-flow numerical simulations.
The latter study also illustrates a sensitivity analysis for input parameters related to the numerical procedure, such as cell sizes. Although OAT is simpler to implement than GSA, it presents several shortcomings, as pointed out in the statistical literature (see Saltelli and Annoni 2010 and references therein). The characteristics of GSA described above can overcome these limitations (Saltelli et al. 2008).

Yet, conducting GSA for landslide models is hindered by two major difficulties. On the one hand, the algorithms available for estimating the Sobol’ indices, such as the (extended) Fourier amplitude sensitivity test (E)FAST (Saltelli et al. 1999) or the Sobol’ algorithm (Sobol’ 1993), require a large number of model evaluations (of the order of thousands). Their implementation is hardly achievable when the landslide model is long running, that is, computationally intensive, with a computation time cost (CPU time) of the order of several hours (depending on the size of the mesh or on the complexity of the modelled phenomena). This problem can be solved by meta-modelling techniques (see for instance Storlie et al. 2009), which consist in replacing the complex landslide model by a mathematical approximation referred to as a “meta-model” (also named “response surface” or “surrogate model”). These approximations have a very low CPU time and can easily be run a high number of times. In the field of landslide modelling, the study by Rohmer and Foerster (2011) illustrates the application of a meta-modelling strategy to analyse the sensitivity of the horizontal surface displacements to the slip surface properties in the Swiss La Frasse case.

On the other hand, the dynamic nature of landslide models adds a further level of difficulty to the combined “GSA–meta-modelling” procedure. The landslide model output is not a single quantity (scalar), but a function of time, which can be represented by an n-dimensional vector (i.e. a curve), where n is the number of calculation time steps. To investigate the “dynamic” sensitivity of the time-dependent model output, a possible approach (named time-varying GSA) consists in calculating the Sobol’ indices separately at each time step. An illustration is provided by Rohmer and Foerster (2011) on the Swiss La Frasse case. Yet, this approach presents several disadvantages (see discussion in Campbell et al. 2006; Auder et al. 2011): it may become intractable for long time series (n typically ranging from 100 to 1,000), and it introduces a high level of redundancy because of the strong relationship between outputs at successive time steps. An alternative is to compute the generalized sensitivity index proposed by Lamboni et al. (2011), which explains the influence of each input parameter on the overall variability of the functional output. Though this approach proves to be very efficient, it may miss important dynamic features of the output, that is, the general structure (or form) of the temporal evolution (Campbell et al. 2006). In other words, the questions of primary interest for dynamic sensitivity analysis are as follows: What shifts the temporal evolution up or down? What makes a possible peak wider or narrower? What reverses the temporal evolution? What accelerates the behaviour? That is, what is the influence of the input parameters on the dominant modes of temporal evolution of the model output? To address these questions, I rely on the strategy proposed by Campbell et al. (2006) and recently applied in the field of systems biology modelling (Summer et al. 2012).
This strategy consists in reducing the dimensionality by expanding the functional model output in an appropriate functional coordinate system, followed by GSA of the coefficients of the expansion. I then apply meta-modelling techniques to the coefficients of the expansion to overcome the computational burden associated with the calculation of the Sobol’ indices (Auder et al. 2011).

The remainder of the present paper is organized as follows. In Sect. 2, I describe the motivating real-case example, namely the Swiss “La Frasse” landslide. In Sect. 3, I describe the principles of basis set expansion of functional data and how to extract the main modes of variation (i.e. general structures of the temporal evolution), using the La Frasse case as an application. In Sect. 4, I propose a methodology for dynamic sensitivity analysis of long-running landslide models by combining the techniques described in Sect. 3 with meta-modelling techniques. Finally, in Sect. 5, I apply the methodology to the La Frasse case and show how the proposed procedure can be useful for landslide risk management.

2 Motivating real-case example

In this section, I present the La Frasse case, which motivated the present work and is used to illustrate the application of the techniques described in the following sections.

2.1 Study site

The La Frasse landslide is located in the Prealps of the Canton of Vaud in Switzerland (~20 km east of Lake Geneva). This landslide has experienced several crises in the past, especially in its lower part, notably in 1910–1914, 1966, 1981–1982 and 1993–1994 (Tacher et al. 2005). During crises, displacements may reach up to 4 m (with a maximum observed velocity of 1 m/week in the lower part of the landslide). The active mass has a total volume of ~73 million m³, a length of 2,000 m, a width varying from 500 m (upper and middle parts) to 1,000 m (lower part) and an average thickness varying from 80 m (upper part) to 40 m (lower part).

In the present article, I focus on the 1994 crisis, which was studied by Laloui et al. (2004). During this crisis (over a period of nearly 300 days), the evolution of the groundwater table is considered to be at the origin of the sliding. The effect of the hydraulic regime on the geomechanical behaviour of the landslide was investigated by Laloui et al. (2004) through finite-element simulations considering a 2D cross-section through the centre of the landslide and using the finite-element program GEFDYN (Aubry et al. 1986).

2.2 Model set-up and parameters

The model is composed of 1,694 nodes, 1,530 quadrangular elements and six soil layers derived from the geotechnical investigations (see Fig. 1, adapted from Laloui et al. 2004). The pore pressure temporal changes were applied at the base of the slide (see Laloui et al. 2004 and Tacher et al. 2005 for further details). Figure 2a provides an example of such pore pressure changes at node 292 of the model (see location in Fig. 1). Several phases can be distinguished: a first peak of pore pressure between 75 and 100 days; a second phase composed of several peaks between 150 and 200 days, and finally a last peak around 275 days.

Fig. 1 Overview of the landslide numerical model for the La Frasse case (adapted from Laloui et al. 2004). The maximum horizontal surface displacements analysed in the study are computed in the upper and lower parts of the landslide. Node 292, at which the pore pressure changes depicted in Fig. 2a are shown, is indicated.

Fig. 2 (a) Example of pore pressure changes imposed at the base of the slide, here shown at node 292 (see location in Fig. 1); maximum time-dependent horizontal displacements computed for 30 different values of the slip surface properties (b) in the upper part (as defined in Fig. 1) and (c) in the lower part. The red curve corresponds to the mean temporal function.

The complex behaviour of the slip surface material was modelled using the Hujeux elastoplastic multi-mechanism constitutive model (see details in Aubry et al. 1982; Lopez-Caballero et al. 2007 and references therein). The Hujeux constitutive model can account for soil behaviour over a large range of deformations and for plastic mechanisms such as progressive friction mobilization, Coulomb-type failure, critical state and a dilatancy/contractance flow rule. The main parameters for the slip surface material are: the bulk modulus K and the shear modulus G, which are assumed to depend on the mean effective stress through a power-type law of exponent \( n_{e} \) (named nonlinearity coefficient); the critical state (linked with the initial critical pressure \( p_{c0} \)) and plasticity parameters, essentially the friction angle ϕ at perfect plasticity and the plastic compressibility β; and the dilatancy angle Ψ, appearing in the flow rule and defining the limit between soil dilatancy and contractance. The Mohr–Coulomb constitutive law was assumed for the other soil materials.

2.3 Objectives of the dynamic sensitivity analysis

In the present article, the dynamic sensitivity analysis primarily focused on the soil formation of the slip surface, which mainly controls the hydro-mechanical behaviour of the landslide (Laloui et al. 2004; Tacher et al. 2005). The objective was to understand the influence of the seven input parameters of the Hujeux constitutive model on the temporal evolution of the maximum horizontal surface displacements calculated in the upper and lower parts of the landslide (Fig. 1), that is, to identify which properties contribute most to the overall uncertainty in the temporal evolution of the surface displacements. To this end, the main effects (first-order Sobol’ indices) were calculated within a factors’ prioritization setting (as described by Saltelli et al. 2008). Note that I restricted the analysis to time-dependent landslide model outputs; using the maximum displacements was a way of accounting for the fact that the landslide model outputs also vary in space. The sensitivity analysis of spatio-temporal outputs constitutes a direction for further work.

Assuming a situation where the same “level of uncertainty” is assigned to the parameters of the Hujeux model, a 25 % variation around the original values identified by Laloui and co-authors (Laloui et al. 2004) was assigned to each of the seven input parameters (Table 1). A uniform probability distribution was assigned to each of these input parameters (assumed to be independent). More sophisticated representations of uncertainty can be considered; for instance, in a calibration setting, the uncertainty on each slip surface property should be adequately represented using any kind of information related to the measurement procedure (number of samples, measurement error and possibility of constructing an empirical probability distribution), but also to the inadequacy of the model to perfectly fit the observations (i.e. differences between observed and simulated curves). Accounting for these multiple sources of information in a calibration procedure using long-running simulations has recently been tackled within a Bayesian framework combining basis set expansion and meta-modelling (Higdon et al. 2008).

The other parameters (appearing in the flow rule and in the definition of the hardening and threshold domains, and categorized as “not directly measurable” by Lopez-Caballero et al. 2007) were kept constant. The properties of the six other soil layers were assumed to be constant as well. An in-depth exploration of all these sources of uncertainty (more than 50 in total) is beyond the scope of the present article and can be addressed using the screening-based approach recently proposed by Auder et al. (2011).

A total of 30 input parameter configurations were randomly generated using the procedure described in Sect. 4.1. The corresponding 30 time-dependent displacement curves were then computed for both parts of the landslide (see Fig. 2b, c). Each landslide model output was discretized into ~300 time steps, each representing a time interval of 1 day. A single simulation required ~96 h (~4 days) of calculation on a single CPU (2.6 GHz dual-core processor with 1 GB of RAM). All simulations were performed in parallel on a computer cluster (grid computing architecture) composed of 30 CPUs.

3 Handling time-dependent model outputs

In this section, I focus on reducing the dimensionality of the time-dependent output of the landslide model using basis set expansion techniques (Sect. 3.1), and more specifically multivariate principal component analysis (PCA) (Sect. 3.2). In Sect. 3.3, I show how this technique can be used to extract the key features of the temporal evolution of the landslide model output, with an application to the set of temporal curves generated in the La Frasse case.

3.1 Basis set expansion of functional data

The objective is to reduce the dimension of the functional (time-dependent) output of the landslide model. In the present study, I do not consider continuous functions of time, but the most common case in landslide modelling, namely functional data discretized on a regular grid of time points, that is, vectors of large but finite dimension.

Formally, consider a set of \( n_{0} \) functional model outputs \( \varvec{y}_{i} (t) \) (with i = 1, …, \( n_{0} \)) discretized as T-vectors, that is, the time step t takes finite values in the set {1, 2, …, T}. Define \( \mathbf{Y} \) as the \( n_{0} \times T \) matrix whose ith row is the model output \( \varvec{y}_{i} (t) \).

In the La Frasse case (see Sect. 2), the set of functional model outputs corresponds to \( n_{0} = 30 \) vectors of horizontal displacements of dimension T = 300 (number of time steps). The objective of the basis set expansion is then to reduce each temporal curve to a small number d ≪ 300 of scalar values that describe the key features of the temporal evolution of the calculated displacements, that is, their dominant modes of variation.

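This can be achieved by expanding the functional model output in an appropriate functional coordinate system, that is, in terms of basis functions of time \( \phi_{k} (t) \) (with k = 1, …, d). The basis set expansion of the set of centred temporal curves \( \varvec{y}^{C} (t) \) reads as Eq. (1):

\[ \varvec{y}_{i}^{C} (t) = \varvec{y}_{i} (t) - \overline{\varvec{y}} (t) \approx \sum\limits_{k = 1}^{d} h_{ik} \, \phi_{k} (t), \quad i = 1, \ldots, n_{0} \tag{1} \]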

where the mean temporal function \( \overline{\varvec{y}} (t) \) is computed as the mean of the \( \varvec{y}_{i} (t) \) at each time step t. The scalar expansion coefficients \( h_{ik} \) indicate the “weight” (contribution) of each of the d basis functions in each of the \( n_{0} \) temporal curves. Usually, the dimension d is chosen so that most of the information is concentrated in the first d basis functions, that is, so that these explain at least a given share of the variance in the set of temporal curves, say 99.9 %.

The basis functions can take various forms, such as pre-defined Legendre polynomials, trigonometric functions, Haar functions or wavelet bases (Ramsay and Silverman 2005). Their disadvantage is that they impose the form of the modes of variation beforehand. An alternative is to use adaptive basis functions, which are determined from the data. The classical data-driven method is multivariate principal component analysis, denoted PCA (Jolliffe 2002), which can be applied to the time-dependent model outputs viewed as vectors of finite dimension. A continuous version of the method, functional principal component analysis, also exists. Note that more advanced methods of basis set expansion may be used (see, e.g., Auder et al. 2011) when the structure of the model outputs is very complex (e.g. highly nonlinear).
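To make Eq. (1) concrete with a pre-defined basis, the following minimal Python sketch (assuming NumPy) centres a set of discretized curves and projects them by least squares onto the first d Legendre polynomials, with the time steps rescaled to [−1, 1]. The function name `legendre_expansion` and the synthetic curves are illustrative assumptions, not part of the original study.

```python
import numpy as np
from numpy.polynomial import legendre


def legendre_expansion(Y, d):
    """Expand centred curves on the first d Legendre polynomials (fixed basis).

    Y : (n0, T) array, one discretized temporal curve y_i(t) per row.
    Returns the mean curve (T,), the coefficients h_ik (n0, d) and the basis (T, d).
    """
    T = Y.shape[1]
    y_mean = Y.mean(axis=0)             # mean temporal function, time step by time step
    Yc = Y - y_mean                     # centred curves y_i^C(t)
    s = np.linspace(-1.0, 1.0, T)       # time steps 1..T rescaled onto [-1, 1]
    Phi = legendre.legvander(s, d - 1)  # column k holds the Legendre polynomial P_k(s)
    # Least-squares coefficients so that Yc is approximately H @ Phi.T
    coef, *_ = np.linalg.lstsq(Phi, Yc.T, rcond=None)
    return y_mean, coef.T, Phi


# Synthetic example: 30 curves of 300 daily time steps (not La Frasse data).
rng = np.random.default_rng(1)
t = np.arange(1, 301)
a, b = rng.uniform(0.5, 1.5, size=(2, 30, 1))
Y = a * t + b * np.sqrt(t)
y_mean, H, Phi = legendre_expansion(Y, d=5)
Y_hat = y_mean + H @ Phi.T              # curves rebuilt from the d coefficients
print(H.shape)                          # (30, 5)
```

Because the basis is fixed in advance, the shapes of the modes are imposed rather than learned from the curves, which is the drawback that motivates the data-driven PCA alternative.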

3.2 Principal component analysis

PCA is a multivariate statistical procedure aiming at reducing the dimensionality of a data set while minimizing the loss of information. I introduce here the basic concepts. For a more complete introduction and full derivation of equations, the interested reader can refer to Jolliffe (2002) and Ramsay and Silverman (2005).

Let us denote by \( \varvec{\Sigma} = \frac{1}{n_{0}} \mathbf{Y}_{C}^{'} \mathbf{Y}_{C} \) the variance–covariance matrix of the columns of \( \mathbf{Y}_{C} \), the matrix composed of the discretized centred temporal curves \( \varvec{y}_{i}^{C} (t) \). The PCA decomposition is based on the following expansion of \( \varvec{\Sigma} \):

\[ \varvec{\Sigma} = \sum\limits_{k = 1}^{T} \lambda_{k} \, \varvec{v}_{k} \varvec{v}_{k}^{'} \tag{2} \]

where \( \lambda_{1} \ge \lambda_{2} \ge \cdots \ge \lambda_{T} \) are the eigenvalues of \( \varvec{\Sigma} \) and \( \varvec{v}_{1}, \varvec{v}_{2}, \ldots, \varvec{v}_{T} \) are the associated normalised and mutually orthogonal eigenvectors. The principal components (PCs) \( \varvec{h}_{k} = \mathbf{Y}_{C} \varvec{v}_{k} \), with k = 1, 2, …, T, are then mutually orthogonal linear combinations of the columns of \( \mathbf{Y}_{C} \), with coefficients given by the eigenvectors \( \varvec{v}_{k} \). Using the general formulation of Eq. (1), the scalar expansion coefficients \( h_{ik} \) (named PC scores) indicate the “weight” of each of the T basis functions \( \phi_{k} (t) = \varvec{v}_{k} (t) \) in each of the \( n_{0} \) centred temporal curves \( \varvec{y}_{i}^{C} (t) \).

The inertia, defined as the trace of \( \varvec{\Sigma} \), measures the total dispersion (variability) among the rows of \( \mathbf{Y} \). Given that \( \left\| \varvec{h}_{k} \right\|^{2} = n_{0} \lambda_{k} \) (i.e. the variance of the scores on the kth PC equals \( \lambda_{k} \)) and that \( \sum\nolimits_{k = 1}^{T} \lambda_{k} = \text{trace}(\varvec{\Sigma}) \), the amount of variation described by the PCs declines, by construction, as k increases, and d ≪ T can be chosen to reach a given “level of explained variability”.
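The PCA steps of this section can be sketched in a few lines of Python, assuming NumPy. The sketch forms \( \varvec{\Sigma} \) with the 1/\( n_0 \) normalisation used above, sorts its eigenpairs in decreasing order, computes the scores \( \varvec{h}_{k} = \mathbf{Y}_{C} \varvec{v}_{k} \) and picks the smallest d reaching a target share of trace(\( \varvec{\Sigma} \)). The function name `pca_basis`, the default level of 99.9 % and the synthetic two-mode curves are illustrative assumptions; this is not the code used in the study.

```python
import numpy as np


def pca_basis(Y, level=0.999):
    """PCA of discretized temporal curves (rows of Y) as described in Sect. 3.2.

    level : minimum share of the inertia trace(Sigma) explained by the first d PCs.
    Returns the mean curve, eigenvalues, eigenvectors (columns), PC scores and d.
    """
    n0 = Y.shape[0]
    y_mean = Y.mean(axis=0)
    Yc = Y - y_mean                          # centred curves, matrix Y_C
    Sigma = Yc.T @ Yc / n0                   # (T, T) variance-covariance matrix
    lam, V = np.linalg.eigh(Sigma)           # eigh returns ascending eigenvalues
    order = np.argsort(lam)[::-1]            # reorder so that lambda_1 >= lambda_2 >= ...
    lam, V = lam[order], V[:, order]
    H = Yc @ V                               # PC scores: column k is h_k = Y_C v_k
    pos = np.clip(lam, 0.0, None)            # guard against tiny negative round-off
    explained = np.cumsum(pos) / pos.sum()   # cumulative share of trace(Sigma)
    d = int(np.searchsorted(explained, level)) + 1
    return y_mean, lam, V, H, d


# Synthetic curves built from two temporal modes (not La Frasse data).
rng = np.random.default_rng(2)
t = np.arange(1, 301)
a, b = rng.uniform(0.0, 1.0, size=(2, 30, 1))
Y = 10 + a * t / 300 + b * np.sin(2 * np.pi * t / 100)
y_mean, lam, V, H, d = pca_basis(Y)
Y_rec = y_mean + H[:, :d] @ V[:, :d].T      # truncated reconstruction on d PCs
```

With \( n_0 = 30 \) curves and T = 300 time steps, an SVD of \( \mathbf{Y}_{C} \) would give the same basis more cheaply; the eigendecomposition is kept here only to mirror the formulas of the text.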

3.3 Interpreting the basis set expansion

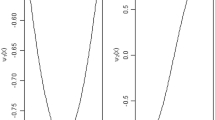

The application of PCA to the set of time-dependent displacements in the upper part of the La Frasse landslide (Fig. 2b) shows that the first two principal components, PC1 and PC2, account for 99.0 and ~1 % of the variation in the set, respectively. Figure 3a, c shows the temporal evolution of both PCs during the pore pressure changes at the base of the landslide. PC1 is negative throughout the whole duration of the crisis and alternates between phases of steep decrease (approximately corresponding to the times of pore pressure peaks) and phases of constant evolution (approximately corresponding to the time intervals between pore pressure peaks). PC2 decreases from 0 to a negative constant and then increases steeply above zero after the second major period of pore pressure peaks (at ~175 days).

Fig. 3 (a) First principal component PC1 for the set of time-dependent horizontal displacements computed in the upper part of the landslide. (b) Interpretation of PC1 as a perturbation of the mean temporal function (black curve): plus (green curve) and minus (red curve) a multiple of PC1 (here set at 0.5). (c) Second principal component PC2. (d) Interpretation of PC2 as a perturbation of the mean temporal function.

To get a better physical picture, Campbell et al. (2006) advocate plotting the mean temporal function plus and minus some multiple of the PC (this multiplicative constant is set to 0.5 in Fig. 3b, d). This allows interpreting the PCs as perturbations of the mean temporal function, that is, deviations from the “average” temporal behaviour of the landslide. In the upper part (see red curve in Fig. 2b), this average behaviour corresponds to successive phases of sharp increase in the horizontal displacements (“destabilized” phases) and of quasi-horizontal evolution (“stabilized” phases). Figure 3 shows that the first PC corresponds to a vertical up–down shift relative to the mean function over the whole time duration, with a magnitude of shift increasing with time. In other words, model runs with negative scores for PC1 will have higher than average displacement values across the whole time duration. From a risk assessment perspective, such model simulations might lead to horizontal displacements increasing over time, that is, this mode of temporal behaviour can be viewed as the most unstable one overall. The second PC accounts for the same behaviour as PC1 before the time instant of 175 days. After this date, the behaviour is reversed: a model run with a negative score for PC2 will have lower than average displacements, so that this mode can be viewed as a stabilization mode.

The application of PCA to the set of time-dependent displacements in the lower part of the La Frasse landslide (Fig. 2c) shows that 99.9 % of the variation can be explained by three PCs (contributing, respectively, 98.3, 1.4 and 0.3 %). The “average” behaviour captured by the mean temporal function (see red curve in Fig. 2c) differs from the one in the upper part and corresponds to a monotonically increasing function, showing that the average behaviour in the lower part of the landslide is “destabilized” over the whole time period (contrary to the upper part). The interpretation of the first two PCs is similar to that in the upper part (but here relative to the average destabilized behaviour). The interpretation of the third PC (Fig. 4) is more complex: considering model simulations with negative scores on PC3, it can be understood as a phase of acceleration, followed by a phase of deceleration and finally by a new phase of acceleration.

Fig. 4 (a) Third principal component PC3 for the set of temporal curves computed in the lower part of the landslide. (b) Interpretation of PC3 as a perturbation of the mean temporal function (black curve): plus (green curve) and minus (red curve) a multiple of PC3 (here set at 0.5). The first two PCs are very similar to those computed for the upper part (as shown in Fig. 3).

The basic idea of dynamic sensitivity analysis through PCA is then to assess the sensitivity of the scores of each PC to the input parameters; for instance, if the scores of PC1 are sensitive to a particular input parameter, this parameter is important in producing the type of behaviour in the model output described above for PC1. Sensitivity can be assessed using Sobol’ indices, as proposed for instance by Summer et al. (2012). Yet, as underlined in the Introduction, the algorithms used to compute such sensitivity indices require a large number of simulations, which may be impractical for a landslide model with a CPU time of several hours (in the La Frasse case, a single simulation takes ~4 days of CPU time).

4 Meta-modelling strategy for dynamic sensitivity analysis using PCA

To overcome the computational challenge related to the estimation of Sobol’ indices using a long-running landslide model, I describe in this section a methodology relying on meta-modelling for dynamic sensitivity analysis using PCA. The basic idea of meta-modelling is to replace the long-running numerical model f by a mathematical approximation (denoted \( \tilde{f} \)) referred to as a “meta-model” (also named “response surface” or “surrogate model”). The meta-model is a “costless-to-evaluate” function that aims at reproducing the behaviour of the “true” model f over the domain of the model input parameters and at predicting the model responses with negligible CPU time.

The main steps of the methodology are summarized in Table 2.

4.1 Step 1: selecting the training samples

The first step is to run f for a limited number \( n_{0} \) of different configurations (named training samples) of m-dimensional vectors of input parameters \( \varvec{x}_{i} = (x_{1}, x_{2}, \ldots, x_{m}) \), with i = 1, 2, …, \( n_{0} \). To choose them, a trade-off should be found between maximizing the exploration of the input parameters’ domain and minimizing the number of simulations, that is, between the accuracy of the approximation (directly linked with \( n_{0} \)) and the CPU cost. To fulfil these requirements, I propose to randomly select the training samples by means of the Latin hypercube sampling (LHS) method (McKay et al. 1979) combined with the “maxi–min” space-filling design criterion (Koehler and Owen 1996).
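A minimal Python sketch of Step 1, assuming NumPy: it draws many random Latin hypercube designs in \( [0, 1]^{m} \) and keeps the one with the largest minimum pairwise distance (the maxi–min criterion), then rescales it to the parameter bounds. Selecting the best of a fixed number of random candidates is a simple, assumed optimisation strategy, not necessarily the one used in the study; the function names, the 200 candidates, the reading of the 25 % variation as ±25 % and the placeholder nominal values (all ones, not the Table 1 values) are illustrative assumptions.

```python
import numpy as np


def lhs(n, m, rng):
    """One Latin hypercube sample of n points in [0, 1]^m."""
    # Each column is a random permutation of the n strata, jittered inside each stratum.
    strata = np.column_stack([rng.permutation(n) for _ in range(m)])
    return (strata + rng.uniform(size=(n, m))) / n


def min_distance(X):
    """Smallest pairwise Euclidean distance between the rows of X."""
    diff = X[:, None, :] - X[None, :, :]
    dist = np.sqrt((diff ** 2).sum(axis=-1))
    return dist[np.triu_indices(len(X), k=1)].min()


def maximin_lhs(n, m, n_candidates=200, seed=0):
    """Keep, among random LHS designs, the one maximizing the minimum distance."""
    rng = np.random.default_rng(seed)
    best, best_d = None, -np.inf
    for _ in range(n_candidates):
        X = lhs(n, m, rng)
        d = min_distance(X)
        if d > best_d:
            best, best_d = X, d
    return best


# Seven inputs, interpreted here as +/-25 % around nominal values; the nominal
# values are hypothetical placeholders (all equal to 1), not the Table 1 values.
x_nominal = np.ones(7)
lower, upper = 0.75 * x_nominal, 1.25 * x_nominal
X_unit = maximin_lhs(n=30, m=7)
X_train = lower + X_unit * (upper - lower)   # 30 training configurations
```

Each column of `X_unit` keeps exactly one point per stratum (the Latin hypercube property), while the maxi–min selection discourages designs in which two configurations nearly coincide, which matters when each run costs days of CPU time.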

4.2 Step 2: reducing the model output dimensionality

In a second step, for each of the randomly selected training samples \( \varvec{x}_{i} \), a functional model output \( \varvec{y}_{i} (t) \) is calculated by running the computationally intensive landslide model. The set of \( n_{0} \) pairs of the form {\( \varvec{x}_{i} \); \( \varvec{y}_{i} (t) \)}, with i = 1, 2, …, \( n_{0} \), constitutes the training data on which the meta-model is constructed. As the model output is functional, the procedure described in Sect. 3 is applied to reduce its dimensionality. Step 2 thus results in a set of \( n_{0} \) pairs of the form {\( \varvec{x}_{i} \); \( h_{ik} \)}, where \( h_{ik} \) is the weight of the kth PC (i.e. the PC score), with k = 1, 2, …, d. See Sect. 3 for further discussion on the choice of d and on the interpretation of each PC.

4.3 Step 3: constructing the meta-model

Using the training data, the scores of each PC can then be approximated as a function of the input parameters x, that is, by a meta-model. Several types of meta-models exist: simple polynomial regression, nonparametric regression techniques (Storlie et al. 2009), kriging (Forrester et al. 2008), artificial neural networks (Papadrakakis and Lagaros 2002), polynomial chaos expansions (Ghanem and Spanos 1991), etc. The choice of the meta-model type is guided by the expected (a priori) degree of nonlinearity of f, as well as by the number of input parameters.
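As an illustration of Step 3 with the simplest option listed above, the following Python sketch (assuming NumPy) fits one polynomial response surface per PC by least squares. It is a stand-in, not the projection pursuit regression retained later in the study; the function names and the synthetic scores are hypothetical.

```python
import numpy as np


def quad_features(X):
    """Design matrix with columns 1, x_j and x_j**2 (no interaction terms)."""
    X = np.atleast_2d(X)
    return np.column_stack([np.ones(len(X)), X, X ** 2])


def fit_pc_metamodel(X, h):
    """Least-squares quadratic response surface for one vector of PC scores h."""
    beta, *_ = np.linalg.lstsq(quad_features(X), h, rcond=None)
    return lambda X_new: quad_features(X_new) @ beta


# Synthetic training data: 30 runs, 7 inputs, two "PC scores" (not La Frasse data).
rng = np.random.default_rng(3)
X = rng.uniform(0.75, 1.25, size=(30, 7))
H = np.column_stack([2 * X[:, 0] - X[:, 3] ** 2,
                     0.5 * X[:, 1] + X[:, 6] ** 2])
models = [fit_pc_metamodel(X, H[:, k]) for k in range(H.shape[1])]  # one meta-model per PC
H_pred = np.column_stack([f(X) for f in models])
```

Interaction terms are deliberately left out: with seven inputs, a full quadratic has 1 + 7 + 7 + 21 = 36 coefficients, more than the 30 training runs available in the La Frasse case, whereas this reduced form has 15.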

4.4 Step 4: validating the meta-model

As the methodology involves replacing f by an approximation \( \tilde{f} \), it introduces a new source of uncertainty. Thus, a fourth step consists in assessing the impact of this uncertainty, that is, validating the meta-model quality. Two issues should be addressed: (1) the approximation quality, that is, the extent to which \( \tilde{f} \) reproduces the observed PC scores (i.e. those calculated from the set of long-running simulations); (2) the predictive quality, that is, the extent to which \( \tilde{f} \) predicts the PC scores at “yet-unseen” input parameter configurations.

Regarding the first issue, the differences between the approximated and the true quantities of interest (i.e. the residuals) are usually used. On this basis, the coefficient of determination R² can be computed:

\[ R^{2} = 1 - \frac{\sum\nolimits_{i = 1}^{n_{0}} \left( h_{i} - \tilde{h}_{i} \right)^{2}}{\sum\nolimits_{i = 1}^{n_{0}} \left( h_{i} - h_{m} \right)^{2}} \tag{3} \]

where \( h_{i} \) corresponds to the observed scores for a given PC (for i from 1 to \( n_{0} \)), \( h_{m} \) to their mean and \( \tilde{h}_{i} \) to the PC scores estimated using the meta-model. A coefficient R² close to 1 indicates that the meta-model successfully matches the observations.

However, a coefficient of determination estimated for each PC score may not be easily interpretable (what is the physical meaning of an R² of 98 % on the first PC?). Thus, I advocate computing the temporal evolution of R², which can be achieved by reconstructing the functional model output, that is, by transforming the estimated scores of each PC back into the “physical” domain of the functional model output (using Eq. 1, i.e. adding the weighted basis functions to the mean temporal function). This procedure has the advantage of clearly highlighting the time domains where the approximation is of poor quality.
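The two diagnostics above can be sketched in Python, assuming NumPy: `r2` applies the coefficient of determination to one vector of PC scores, `reconstruct` maps scores back to curves (mean curve plus scores times basis functions) and `r2_over_time` evaluates R² separately at each time step. The function names, the synthetic curves and the Gaussian noise standing in for meta-model errors are illustrative assumptions.

```python
import numpy as np


def r2(h_obs, h_est):
    """Coefficient of determination for one PC (observed vs meta-model scores)."""
    return 1.0 - np.sum((h_obs - h_est) ** 2) / np.sum((h_obs - h_obs.mean()) ** 2)


def reconstruct(y_mean, V_d, H_d):
    """Back to the physical domain: mean curve plus scores times basis functions."""
    return y_mean + H_d @ V_d.T


def r2_over_time(Y, Y_est):
    """R^2 evaluated separately at each time step (column) of the curves."""
    ss_res = np.sum((Y - Y_est) ** 2, axis=0)
    ss_tot = np.sum((Y - Y.mean(axis=0)) ** 2, axis=0)
    # Time steps where all curves coincide (e.g. zero initial displacement) get NaN.
    safe = np.where(ss_tot > 0, ss_tot, 1.0)
    return np.where(ss_tot > 0, 1.0 - ss_res / safe, np.nan)


# Synthetic check (not La Frasse data): perturb the scores and see where R^2(t) drops.
rng = np.random.default_rng(4)
t = np.arange(1, 301)
Y = rng.uniform(0.5, 1.5, size=(30, 1)) * np.sqrt(t) + rng.uniform(size=(30, 1)) * np.sin(t / 30)
y_mean = Y.mean(axis=0)
_, _, Vt = np.linalg.svd(Y - y_mean, full_matrices=False)
V_d = Vt[:2].T                                        # first two basis functions
H_d = (Y - y_mean) @ V_d                              # "observed" PC scores
H_est = H_d + rng.normal(scale=0.05, size=H_d.shape)  # stand-in for meta-model output
R2_t = r2_over_time(Y, reconstruct(y_mean, V_d, H_est))
```

Plotting `R2_t` against time shows directly which periods of the crisis the meta-model reproduces poorly, which a single R² per PC cannot reveal.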

Regarding the second quality issue, a first approach would consist in using a test sample of new data. Although this is the most efficient approach, it is often impracticable because additional numerical simulations are costly to obtain. An alternative relies on cross-validation procedures (see, e.g., Hastie et al. 2009).

In the case of functional outputs, this technique can be performed as follows: (1) the initial training data are randomly split into q equal subsets; (2) one subset is removed from the initial set and the basis set expansion is performed using the q − 1 remaining subsets, so that the eigenvectors and eigenvalues are re-evaluated (see Sect. 3); (3) a new meta-model is constructed for the resulting PC scores; (4) the removed subset constitutes the validation set, whose PC scores are estimated using the new meta-model; (5) the functional observations of the validation set are then “reconstructed” from the estimated PC scores, and the time-dependent residuals (i.e. the residuals at each time step) are computed. This procedure is carried out in turn for each of the q subsets; it reduces to “leave-one-out” cross-validation when each subset is composed of a single observation.

Using the time-dependent residuals (computed over the q iterations of the cross-validation procedure), the predictive quality can be assessed through the temporal evolution of the coefficient of determination \( R_{\text{CV}}^{2} \) [computed with a formula similar to Eq. (3)].
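The five cross-validation steps can be sketched as follows. This is a minimal illustration, assuming that the basis set expansion is a PCA computed by SVD of the centred training curves and that \( R_{\text{CV}}^{2} \) uses the usual 1 − SSE/SST form at each time step. To keep the example self-contained, a hypothetical least-squares linear regression (`fit_linear`) stands in for the meta-model; the application in Sect. 5 uses projection pursuit regression instead, and any function returning a predictor can be passed as `fit`.

```python
import numpy as np


def fit_linear(X, H):
    """Hypothetical stand-in meta-model: least-squares linear regression of each PC score on X."""
    A = np.column_stack([np.ones(len(X)), X])
    coef, *_ = np.linalg.lstsq(A, H, rcond=None)
    return lambda X_new: np.column_stack([np.ones(len(X_new)), X_new]) @ coef


def cv_r2_over_time(X, Y, n_pc, q, fit=fit_linear, seed=0):
    """q-fold cross-validated R^2_CV(t) for a PCA + meta-model of functional outputs Y (n0 x T)."""
    n0 = len(Y)
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(n0), q)       # (1) random split into q subsets
    residuals = np.empty(Y.shape)
    for val in folds:
        train = np.setdiff1d(np.arange(n0), val)         # (2) drop one subset ...
        mean = Y[train].mean(axis=0)
        _, _, Vt = np.linalg.svd(Y[train] - mean, full_matrices=False)
        basis = Vt[:n_pc]                                # ... and re-evaluate the eigenvectors
        scores = (Y[train] - mean) @ basis.T
        predict = fit(X[train], scores)                  # (3) new meta-model of the PC scores
        scores_val = predict(X[val])                     # (4) estimate validation scores
        Y_val_hat = mean + scores_val @ basis            # (5) reconstruct the curves
        residuals[val] = Y[val] - Y_val_hat              # residuals at every time step
    sst = np.sum((Y - Y.mean(axis=0)) ** 2, axis=0)
    with np.errstate(divide="ignore", invalid="ignore"):
        return np.where(sst > 0, 1.0 - np.sum(residuals ** 2, axis=0) / sst, np.nan)
```

Setting `q` equal to the number of training curves gives the leave-one-out variant used for the La Frasse case.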

4.5 Step 5: conducting the global sensitivity analysis

Once validated, the costless-to-evaluate meta-models can be used to estimate the PC scores at any “yet-unseen” values of the input parameters, and hence to conduct the GSA using the Sobol’ indices. For further details on GSA, see Appendix 1.

In a factors’ prioritization setting, the first-order Sobol’ indices (main effects) of each PC can be computed using, for instance, the algorithm of Sobol’ (1993). This Monte Carlo sampling strategy requires N(m + 1) model evaluations, with N the number of Monte Carlo samples and m the number of input parameters. As underlined in Sect. 3.3, if the considered PC is sensitive to a particular input parameter, this parameter is important in producing the type of behaviour in the model output that this PC represents (as analysed in step 2).
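A pick-freeze estimator with this N(m + 1) budget can be sketched as below. It is a minimal sketch, not the implementation of the R "sensitivity" package: it uses a common variant of the Sobol’ (1993) estimator in which the squared mean \( f_{0}^{2} \) is replaced by the product of two sample means (i.e. a sample covariance), and `model` is assumed to be any vectorised callable, such as a meta-model of one PC score. The input sampler `sample` is a hypothetical argument that must reflect the input distributions.

```python
import numpy as np


def sobol_first_order(model, sample, n, m, seed=0):
    """First-order Sobol' indices with a pick-freeze estimator (n * (m + 1) model calls).

    model  : callable mapping an (n, m) input array to n outputs (e.g. a PC-score meta-model)
    sample : callable (n, rng) -> (n, m) array of independent input draws
    """
    rng = np.random.default_rng(seed)
    A = sample(n, rng)                  # first independent sample
    B = sample(n, rng)                  # second independent sample
    yA = model(A)                       # n evaluations
    var = yA.var()
    S = np.empty(m)
    for i in range(m):
        C = B.copy()
        C[:, i] = A[:, i]               # C shares only X_i with A
        yC = model(C)                   # n further evaluations per input
        # Cov(f(A), f(C)) estimates Var(E[Y | X_i])
        S[i] = (np.mean(yA * yC) - yA.mean() * yC.mean()) / var
    return S
```

For Y = X1 + 2 X2 with independent U(0, 1) inputs, the exact main effects are 0.2 and 0.8, and an unused third input gets an estimate close to 0.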

5 Application to the La Frasse case

The methodology described above (see summary in Table 2) is applied to the La Frasse case (described in Sect. 2). In practice, two packages of the R software (R Development Core Team 2011) were used: “sensitivity” (available at http://cran.r-project.org/web/packages/sensitivity/index.html) and “modelcf”, developed by Auder et al. (2011) for meta-modelling of functional model outputs.

Thirty time series of horizontal displacements were calculated for 30 different configurations of the slip surface properties (seven input parameters, see description in Sect. 2), randomly chosen using a Latin hypercube sampling (LHS) technique (see Sect. 4.1). Using these training data, the PCA described in Sect. 3.3 was carried out, yielding the scores of two PCs for the upper part and three PCs for the lower part.
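The LHS design can be sketched as follows: each parameter range is cut into n equal strata, one point is drawn in each stratum, and the strata are shuffled independently for each parameter. This is a minimal sketch of basic LHS (McKay et al. 1979), assuming uniformly distributed parameters; the bounds used in the check below are placeholders, since the actual ranges of the seven slip surface properties are those given in Sect. 2.

```python
import numpy as np


def latin_hypercube(n, bounds, seed=0):
    """n samples in a box: each parameter range is cut into n strata, one point per stratum."""
    rng = np.random.default_rng(seed)
    bounds = np.asarray(bounds, dtype=float)    # shape (m, 2): lower, upper bound per parameter
    m = len(bounds)
    u = np.empty((n, m))
    for j in range(m):
        # one uniform point inside each stratum [k/n, (k+1)/n), then shuffle the strata
        u[:, j] = rng.permutation((np.arange(n) + rng.random(n)) / n)
    return bounds[:, 0] + u * (bounds[:, 1] - bounds[:, 0])
```

With `n = 30` and seven rows of bounds, this produces a 30 × 7 design comparable in size to the one used here.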

The scores of each PC were approximated using a meta-model of the seven input parameters. Different types of meta-model were tested (not shown here), and their approximation and predictive quality were assessed following the procedure described in Sect. 4.4. In this case, the projection pursuit regression technique offered a good trade-off between high approximation and predictive quality (see discussion below) and a simple mathematical formulation of the meta-model. Details on this nonparametric regression technique are given in Appendix 2.

Regarding the approximation quality, the coefficient of determination R² (Fig. 5a) increases steeply over time and rapidly reaches very high values (>99.9 %) after 50 days for both parts of the landslide. Regarding the predictive quality, a leave-one-out procedure was conducted; Fig. 5b provides the temporal evolution of \( R_{\text{CV}}^{2} \). After 50 days, this measure of predictive quality reaches high values (~98 % on average) for the upper part of the landslide (solid line in Fig. 5b), whereas the values are lower (85 % on average) for the lower part, although they can still be considered “satisfactory” (Storlie et al. (2009), for instance, use a threshold of 80 %).

Fig. 5 a Temporal evolution of the coefficient of determination R² calculated from the approximated scores of PC1 and PC2 (upper part of the La Frasse landslide, solid line) and of PC1, PC2 and PC3 (lower part of the La Frasse landslide, dashed line); b temporal evolution of the coefficient of determination \( R_{\text{CV}}^{2} \) resulting from the leave-one-out cross-validation procedure in the upper (solid line) and lower (dashed line) parts of the La Frasse landslide

Though most temporal outputs cover the range 0–0.1 m in the upper part (only six samples cover the range 0.1–0.7 m, see Fig. 2b), the overall temporal evolution of the horizontal displacements appears to be well captured (in terms of both approximation and prediction). In the lower part, by contrast, the whole range of displacement variation is quite well covered (see Fig. 2c), but the predictive quality is lower. The explanation probably lies in a few steeply increasing temporal curves (those reaching displacements >1.0 m at the end of the time period): their temporal mode of variation (related to PC3, see Fig. 4) is harder to capture because there are too few observations of this type. Improvements could be achieved through additional simulations, for instance relying on an adaptive sampling strategy (see, e.g., Gramacy and Lee 2009).

Finally, the main effects of each PC were computed with the costless-to-evaluate meta-model, using the algorithm of Sobol’ (1993) (see Sect. 4.5). Preliminary convergence tests showed that N = 5,000 yields satisfactory convergence of the sensitivity measures to two decimal places. Confidence intervals computed with bootstrap techniques were very narrow and are therefore not shown in the following. The total number of model evaluations reaches N(m + 1) = 40,000 (with m = 7 input parameters). Given a CPU time of nearly 4 days for a single simulation, the GSA would obviously not have been achievable with the “true” numerical landslide model. With the meta-model, the CPU time of the sensitivity analysis reduces to the time needed to compute the 30 training runs (i.e. 4 days on a computer cluster of 30 CPUs) plus the time needed to validate the approximation and predictive quality (CPU time <1 h).
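The order of magnitude behind this statement can be made explicit with a short worked calculation. It takes the stated “nearly 4 days” per run at face value and assumes one run per CPU with no further speed-up, so the figures are indicative only:

\[ N(m + 1) = 5{,}000 \times (7 + 1) = 40{,}000 \ \text{runs} \]

\[ 40{,}000 \times 4 \ \text{days} = 160{,}000 \ \text{CPU-days} \approx 438 \ \text{years on a single CPU} \]

\[ 160{,}000 / 30 \approx 5{,}333 \ \text{days} \approx 14.6 \ \text{years on the 30-CPU cluster} \]

By comparison, the 30 training runs required about 4 days on the same cluster.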

Figure 6 provides the GSA results for the upper (Fig. 6a) and lower (Fig. 6b) parts of the landslide for each PC, as analysed in Sect. 3.3. I propose to interpret these results in light of the goal of the risk practitioner. For instance, if the goal is to understand the global, dominant mode of temporal behaviour and to plan future investigations and characterization studies accordingly, the analysis of the La Frasse case should primarily focus on PC1.

Considering the upper part of the landslide (Fig. 6a), I show that the nonlinearity coefficient \( n_{e} \) has the greatest influence on the main mode of variation accounted for by PC1 (main effect of almost 60 %). This means that this slip surface property controls the overall vertical up–down shift behaviour of the time-dependent displacements discussed in Sect. 3.3, that is, a possible unstable mode of variation over the whole time period. Interestingly, this result agrees well with the analysis of Rohmer and Foerster (2011) based on time-varying GSA. This result can have strong implications for future characterization tests, because \( n_{e} \) is known to be difficult to measure: it requires laboratory tests at small strains, where the behaviour is truly elastic (e.g. strains lower than \( 10^{-4} \)). This condition is not met by classical triaxial tests, whose accuracy is not better than \( 10^{-3} \) (e.g. Biarez and Hicher 1994).

Though PC2 contributes much less to the variability of the time-dependent displacements (<1 %, see Sect. 3.3), its analysis can be of great interest if the risk practitioner aims at understanding the occurrence of an “acceleration” behaviour after the second pore pressure peak. The GSA shows that the coefficient \( n_{e} \) also influences this mode of variation, but to a lesser extent (main effect of ~25 %). The next most influential parameters are the shear modulus G, the friction angle ϕ, the dilatancy angle Ψ and the initial critical pressure \( p_{c0} \), with main effects ranging between 10 and 15 %.

Considering the lower part of the landslide (Fig. 6b), I also show that the nonlinearity coefficient \( n_{e} \) clearly influences the first mode of variation (main effect of ~85 %). The second mode is influenced by both \( n_{e} \) and ϕ, with main effects of 32 and 24.5 %, respectively. The influence of the latter property may be related to the occurrence of irreversible deformation in the lower part of the landslide during the pore pressure changes. If the risk practitioner aims at understanding the occurrence of an “acceleration” behaviour between the second and third pore pressure peaks, the analysis of the third mode can be useful (see interpretation of PC3 in Sect. 3.3). Figure 6b shows that the third mode is highly influenced by the initial critical pressure \( p_{c0} \) (main effect of ~30 %). This parameter is related to the initial void ratio and the compaction ratio through a relationship that depends on the soil type (clays or sands, see, e.g., Lopez-Caballero et al. 2007).

6 Concluding remarks and further work

In the present article, I described a methodology to carry out dynamic (global) sensitivity analysis of landslide models. Two major difficulties were addressed: (1) the functional nature of the landslide model output; (2) the computational burden of calculating the global sensitivity measures (Sobol’ indices) with long-running landslide numerical models (CPU time > several hours). To this end, I adopted a strategy combining: (1) basis set expansion, to reduce the dimensionality of the functional model output and extract the dominant modes of variation in the overall structure of the temporal evolution; (2) meta-modelling techniques, to compute the Sobol’ indices associated with each mode of variation from a limited number of simulations. Using the La Frasse landslide as an application case, I showed how to extract useful information on dynamic sensitivity from a limited number (a few tens) of long-running simulations. I proposed to interpret such an analysis in light of the goal of the risk practitioner, for instance in the following fashion: “identifying the properties that most influence the possible occurrence of a destabilization phase (acceleration) over the whole time period or on a particular time interval”.

However, I acknowledge that a great difficulty of the methodology is the physical interpretation of the dominant modes of variation (even when viewed as perturbations of the mean temporal function), especially compared with traditional time-varying GSA (which is more easily interpretable, but intractable for very long time series). To investigate this issue further, the methodology should be applied to other real cases, using the present work as a basis. More specifically, future research should focus on combining global sensitivity analysis and calibration against observed time series of surface displacements (see Fig. 1 in Laloui et al. 2004), relying, for instance, on the recently developed Bayesian approach of Higdon et al. (2008).

A second difficulty is related to the different sources of uncertainty introduced not only by the meta-model (accounted for by the cross-validation procedure), but also by the basis set expansion performed on a very limited number of simulations. A future direction of work could be to adapt the bootstrap methodology of Storlie et al. (2009) to associate the sensitivity measures with confidence intervals reflecting such uncertainty.

Finally, the present article focused on time-dependent landslide model outputs. However, landslides are, by nature, spatio-temporal processes. This issue has only been tackled very recently in the computer experiments research community and may build, for instance, on the recent works of Marrel et al. (2011) and Antoniadis et al. (2012).

References

Antoniadis A, Helbert C, Prieur C, Viry L (2012) Spatio-temporal metamodeling for West African monsoon. Environmetrics 23:24–36

Aubry D, Chouvet D, Modaressi A, Modaressi H (1986) Gefdyn software—logiciel d’analyse du comportement mécanique des sols par éléments finis avec prise en compte du couplage sol-eau-air (Gefdyn software, Finite element analysis of soil mechanical behaviour taking into account the soil-water-air coupling), Technical Report of Ecole Centrale Paris (in French)

Aubry D, Hujeux J-C, Lassoudière F, Meimon Y (1982) A double memory model with multiple mechanisms for cyclic soil behaviour. In: Proceedings of the International symposium on numerical models. Balkema, pp 3–13

Auder B, De Crecy A, Iooss B, Marquès M (2011) Screening and metamodeling of computer experiments with functional outputs. Application to thermal–hydraulic computations. Reliab Eng Syst Saf (in press). doi:10.1016/j.ress.2011.10.017

Bell R, Glade T (2004) Quantitative risk analysis for landslides—examples from Bíldudalur, NW-Iceland. Nat Hazards Earth Syst Sci 4:117–131

Biarez J, Hicher P-Y (1994) Elementary mechanics of soil behaviour, saturated and remolded soils. Balkema, Amsterdam 184 pp

Campbell K, McKay MD, Williams BJ (2006) Sensitivity analysis when model outputs are functions. Reliab Eng Syst Saf 91:1468–1472. doi:10.1016/j.ress.2005.11.049

D’Ambrosio D, Iovine G, Spataro W, Miyamoto H (2007) A macroscopic collisional model for debris-flows simulation. Environ Model Softw 22:1417–1436

Fine IV, Rabinovich AB, Bornhold BD, Thomson RE, Kulikov EA (2005) The Grand Banks landslide-generated tsunami of November 18, 1929: preliminary analysis and numerical modelling. Mar Geol 215:45–57

Forrester AIJ, Sobester A, Keane AJ (2008) Engineering design via surrogate modelling: a practical guide. Wiley, Chichester, UK 210 pp

Friedman JH, Stuetzle W (1981) Projection pursuit regression. J Am Stat Assoc 76:817–823

Ghanem RG, Spanos PD (1991) Stochastic finite elements—a spectral approach. Springer, New York 214 pp

Gorsevski PV, Gessler PE, Boll J, Elliot WJ, Foltz RB (2006) Spatially and temporally distributed modeling of landslide susceptibility. Geomorphology 80:178–198

Gramacy RB, Lee HKH (2009) Adaptive design and analysis of supercomputer experiments. Technometrics 51:130–145

Hamm NAS, Hall JW, Anderson MG (2006) Variance-based sensitivity analysis of the probability of hydrologically induced slope instability. Comput Geosci 32:803–817

Hastie T, Tibshirani R, Friedman J (2009) The Elements of statistical learning: data mining, inference, and prediction, 2nd edn. Springer, New York 746 pp

Higdon D, Gattiker J, Williams B, Rightley M (2008) Computer model calibration using high-dimensional output. J Am Stat Assoc 103:570–583

Jolliffe IT (2002) Principal component analysis, 2nd edn. Springer, Berlin 487 pp

Koehler JR, Owen AB (1996) Computer experiments. In: Ghosh S, Rao CR (eds) Handbook of statistics. Elsevier Science, New York, USA, pp 261–308

Laloui L, Tacher L, Moreni M, Bonnard C (2004) Hydromechanical modeling of crises of large landslides: application to the La Frasse Landslide. In: Proceedings of the 9th international symposium on landslides. Rio de Janeiro, Brazil, pp 1103–1110

Laloui L, Ferrari A, Bonnard C (2009) Geomechanical modeling of the Steinernase landslide (Switzerland). In: Proceedings of the 1st Italian workshop on Landslides. Naples, June 8–10, 2009

Lamboni M, Monod H, Makowski D (2011) Multivariate sensitivity analysis to measure global contribution of input factors in dynamic models. Reliab Eng Syst Saf 96:450–459. doi:10.1016/j.ress.2010.12.002

Lopez-Caballero F, Modaressi Farahmand-Razavi A, Modaressi H (2007) Nonlinear numerical method for earthquake site response analysis—elastoplastic cyclic model and parameter identification strategy. Bull Earthq Eng 5:303–323

Marrel A, Iooss B, Jullien M, Laurent B, Volkova E (2011) Global sensitivity analysis for models with spatially dependent outputs. Environmetrics 22:383–397

McKay MD, Beckman RJ, Conover WJ (1979) A comparison of three methods for selecting values of input variables in the analysis of output from a computer code. Technometrics 21:239–245

Narasimhan H, Faber M (2011) Quantification of uncertainties in the risk assessment and management process. Deliverable D5.4 of the 7th Framework Programme project SafeLand. Available at: http://www.safeland-fp7.eu/results/Documents/D5.4.pdf

Papadrakakis M, Lagaros ND (2002) Reliability-based structural optimization using neural networks and Monte Carlo simulation. Comput Methods Appl Mech Eng 191:3491–3507

R Development Core Team (2011) R: a language and environment for statistical computing. R Foundation for Statistical Computing, Vienna, Austria. ISBN 3-900051-07-0, http://www.R-project.org/

Ramsay JO, Silverman BW (2005) Functional data analysis. Springer, New York 428 pp

Rohmer J, Foerster E (2011) Global sensitivity analysis of large scale landslide numerical models based on the Gaussian Process meta-modelling. Comput Geosci 37:917–927

Saltelli A (2002) Sensitivity analysis for importance assessment. Risk Anal 22:579–590

Saltelli A, Annoni P (2010) How to avoid a perfunctory sensitivity analysis. Environ Model Softw 25:1508–1517

Saltelli A, Tarantola S, Chan K (1999) A quantitative, model independent method for global sensitivity analysis of model output. Technometrics 41:39–56

Saltelli A, Ratto M, Andres T, Campolongo F, Cariboni J, Gatelli D, Saisana M, Tarantola S (2008) Global sensitivity analysis: the primer. Wiley, Chichester, UK 304 pp

Sobol’ IM (1993) Sensitivity estimates for nonlinear mathematical models. Math Model Comput Exp 1:407–414

Sobol’ IM, Kucherenko SS (2005) Global sensitivity indices for nonlinear mathematical models. Review. Wilmott Mag 1:56–61

Storlie CB, Swiler LP, Helton JC, Sallaberry CJ (2009) Implementation and evaluation of nonparametric regression procedures for sensitivity analysis of computationally demanding models. Reliab Eng Syst Saf 94:1735–1763

Sumner T, Shephard E, Bogle IDL (2012) A methodology for global-sensitivity analysis of time-dependent outputs in systems biology modelling. J R Soc Interface. doi:10.1098/rsif.2011.0891

Tacher L, Bonnard Ch, Laloui L, Parriaux A (2005) Modelling the behaviour of a large landslide with respect to hydrogeological and geomechanical parameter heterogeneity. J Int Consortium Landslides 2:3–14

Acknowledgments

The author thanks the BRGM-funded “Uncertainty” project for contributing to the financial support of the present work. The application (La Frasse landslide case) is based on the finite-element landslide model built by the LMS of EPFL.


Appendices

Appendix 1: Variance-based global sensitivity analysis

I introduce here the basic concepts of variance-based global sensitivity analysis. For a more complete introduction and full derivation of the equations, the interested reader can refer to Saltelli et al. (2008) and references therein.

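Let us define f as the numerical model. Considering X as an m-dimensional random vector of independent random variables \( X_{i} \) (i = 1, 2, …, m), the output Y = f(X) is also a random variable (as a function of a random vector). A variance-based sensitivity analysis aims at determining the part of the total unconditional variance Var(Y) of the output Y resulting from each input random variable \( X_{i} \). This analysis relies on the functional analysis of variance (ANOVA) decomposition of f, from which the Sobol’ indices (ranging between 0 and 1) can be defined:

\[ S_{i} = \frac{\operatorname{Var}\left( E\left[ Y \mid X_{i} \right] \right)}{\operatorname{Var}(Y)}, \qquad S_{ij} = \frac{\operatorname{Var}\left( E\left[ Y \mid X_{i}, X_{j} \right] \right)}{\operatorname{Var}(Y)} - S_{i} - S_{j}, \qquad \ldots \]

with \( \sum_{i} S_{i} + \sum_{i<j} S_{ij} + \cdots + S_{12 \ldots m} = 1 \).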

The first-order index \( S_{i} \) is referred to as “the main effect of \( X_{i} \)” and can be interpreted as the expected fraction of Var(Y) (i.e. of the uncertainty in Y) that would be removed if the true value of \( X_{i} \) could be learnt. This index provides a measure of importance that can be used to rank the input parameters (Saltelli et al. 2008). The second-order index \( S_{ij} \) measures the combined (interaction) effect of \( X_{i} \) and \( X_{j} \) beyond their main effects. Higher-order terms can be defined in a similar fashion. The total number of sensitivity indices reaches \( 2^{m} - 1 \).

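In practice, the sensitivity analysis is generally limited to the pair of indicators corresponding to the main effect \( S_{i} \) and the total effect \( S_{Ti} \) of \( X_{i} \) (Saltelli et al. 2008). The latter is defined as follows:

\[ S_{Ti} = \frac{E\left[ \operatorname{Var}\left( Y \mid \mathbf{X}_{-i} \right) \right]}{\operatorname{Var}(Y)} = 1 - \frac{\operatorname{Var}\left( E\left[ Y \mid \mathbf{X}_{-i} \right] \right)}{\operatorname{Var}(Y)} \]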

where \( \mathbf{X}_{-i} = (X_{1}, \ldots, X_{i-1}, X_{i+1}, \ldots, X_{m}) \). The total index corresponds to the fraction of the uncertainty in Y that can be attributed to \( X_{i} \) and its interactions with all other input parameters. \( S_{Ti} = 0 \) means that the input factor \( X_{i} \) has no effect, so that \( X_{i} \) can be fixed at any value over its uncertainty range in a “factors’ fixing” setting (as described in Saltelli et al. 2008).
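The total effect can be estimated by Monte Carlo in the same spirit as the main effect. The sketch below uses the Jansen estimator, one standard choice among several, in which only \( X_{i} \) is resampled while \( \mathbf{X}_{-i} \) is kept fixed, so that half the mean squared output difference estimates \( E[\operatorname{Var}(Y \mid \mathbf{X}_{-i})] \). It is an illustration under the stated assumption of independent inputs; `model` and the input sampler `sample` are hypothetical arguments.

```python
import numpy as np


def sobol_total_effects(model, sample, n, m, seed=0):
    """Total-effect indices S_Ti with the Jansen estimator (n * (m + 1) model calls).

    model  : callable mapping an (n, m) input array to n outputs
    sample : callable (n, rng) -> (n, m) array of independent input draws
    """
    rng = np.random.default_rng(seed)
    A = sample(n, rng)
    B = sample(n, rng)
    yA = model(A)
    var = yA.var()
    ST = np.empty(m)
    for i in range(m):
        ABi = A.copy()
        ABi[:, i] = B[:, i]             # resample X_i only; X_{-i} is kept fixed
        # E[(f(A) - f(ABi))^2] / 2 estimates E[Var(Y | X_{-i})]
        ST[i] = 0.5 * np.mean((yA - model(ABi)) ** 2) / var
    return ST
```

For Y = X1 + X2 + X1 X2 with independent U(−1, 1) inputs, the exact values are \( S_{1} = S_{2} = 3/7 \) and \( S_{T1} = S_{T2} = 4/7 \); the gap of 1/7 is the interaction index \( S_{12} \), which a main-effect analysis alone would not reveal.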

Appendix 2: Projection pursuit regression

I introduce here the basic concepts of projection pursuit regression modelling. For a more complete introduction and full derivation of the equations, the interested reader can refer to Friedman and Stuetzle (1981). Let us define \( \tilde{f} \) as the meta-model and \( \boldsymbol{x} \) the m-dimensional vector of input parameters. This nonparametric regression technique assumes that \( \tilde{f}(\boldsymbol{x}) \) takes the form:

\[ \tilde{f}(\boldsymbol{x}) = \sum_{k = 1}^{M} g_{k}\left( \boldsymbol{\alpha}_{k} \boldsymbol{x} \right) \]

where the m-dimensional vectors \( \boldsymbol{\alpha}_{k} \) and \( \boldsymbol{\alpha}_{l} \) are orthogonal for k ≠ l; the term \( \boldsymbol{\alpha}_{k} \boldsymbol{x} \) corresponds to a linear combination (projection) of the elements of \( \boldsymbol{x} \); and \( g_{k} \) is an unspecified univariate (ridge) function estimated from the data. The vectors \( \boldsymbol{\alpha}_{k} \), the functions \( g_{k} \) and the number of terms M are determined iteratively (see the algorithm described in Friedman and Stuetzle 1981).

The projection pursuit regression technique combines additive modelling (with the projections \( \boldsymbol{\alpha}_{k} \boldsymbol{x} \) replacing the elements of \( \boldsymbol{x} \) as the independent variables) and dimension reduction, as M is usually smaller than m. By using functions of linear combinations of the elements of \( \boldsymbol{x} \), this technique can account for interactions between variables and for nonlinearity in the true numerical model f.
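The structure of the model can be illustrated with a deliberately simplified fitting scheme. This sketch is not the Friedman and Stuetzle (1981) algorithm (nor R's implementation): each direction \( \boldsymbol{\alpha}_{k} \) is chosen by random search over unit vectors instead of numerical optimization, each ridge function \( g_{k} \) is a least-squares polynomial instead of a nonparametric smoother, terms are added forward-stagewise on the residuals without back-fitting, orthogonality between directions is not enforced, and the sample mean is added as an intercept. The function names and defaults are hypothetical.

```python
import numpy as np


def fit_ppr(X, y, n_terms=2, n_candidates=2000, degree=3, seed=0):
    """Simplified forward-stagewise projection pursuit regression.

    Each term g_k(alpha_k . x) is found by random search over unit directions
    alpha_k, with g_k a least-squares polynomial of the projection; each new
    term is fitted to the residuals left by the previous ones.
    """
    rng = np.random.default_rng(seed)
    intercept = y.mean()
    residual = y - intercept
    terms = []
    for _ in range(n_terms):
        dirs = rng.normal(size=(n_candidates, X.shape[1]))
        dirs /= np.linalg.norm(dirs, axis=1, keepdims=True)   # unit directions
        best_sse, best = np.inf, None
        for alpha in dirs:
            z = X @ alpha                                     # projection alpha . x
            coef = np.polyfit(z, residual, degree)            # ridge function g
            sse = np.sum((residual - np.polyval(coef, z)) ** 2)
            if sse < best_sse:
                best_sse, best = sse, (alpha, coef)
        alpha, coef = best
        residual = residual - np.polyval(coef, X @ alpha)
        terms.append(best)
    return intercept, terms


def predict_ppr(model, X):
    """Evaluate intercept + sum_k g_k(alpha_k . x)."""
    intercept, terms = model
    out = np.full(X.shape[0], intercept, dtype=float)
    for alpha, coef in terms:
        out += np.polyval(coef, X @ alpha)
    return out
```

Random search is adequate in two or three dimensions; with seven inputs, it would need far more candidates, which is one reason the original algorithm optimizes each direction directly.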


Cite this article

Rohmer, J. Dynamic sensitivity analysis of long-running landslide models through basis set expansion and meta-modelling. Nat Hazards 73, 5–22 (2014). https://doi.org/10.1007/s11069-012-0536-3


DOI: https://doi.org/10.1007/s11069-012-0536-3