Abstract

Natural hazard assessments are always subject to uncertainties due to missing knowledge about the complexity of hazardous processes as well as their natural variability. Decision-makers in the field of natural hazard management need to understand the concept, components, sources, and implications of existing uncertainties in order to reach informed and transparent decisions. Until now, however, only a few hazard maps include uncertainty visualizations, although these are much needed to improve communication among experts and decision-makers and thus to make informed decisions possible. In this paper, an analysis of how uncertainty is currently treated and communicated by Swiss natural hazards experts is presented. The conducted expert survey confirmed that the communication of uncertainty has to be enhanced, possibly with the help of uncertainty visualizations. However, in order to visualize the spatial characteristics of uncertainty, existing uncertainties need to be quantified. This challenge is addressed by simulating an exemplary snow avalanche event with a deterministic model and quantifying the uncertainties with a sensitivity analysis. Suitable visualization methods for the resulting spatial variability of the uncertainties are suggested, and the advantages and disadvantages of their implementation in an interactive cartographic information system are discussed.

1 Introduction

Natural hazards assessments often comprise estimations of trends, frequencies, and intensities of potential future events. These estimations are based on hypotheses and models, even if they are supported by observations of past events. As a consequence, aleatory uncertainties (caused by natural, unpredictable variation in the performance of the system under study) as well as epistemic uncertainties (caused by lack of knowledge about the behavior of the system) are always present in hazard assessment results. Epistemic uncertainties can be reduced; they vary depending on available historical data and used models. The presence of uncertainty is acknowledged by many natural hazards and risk specialists and is reflected in sound discussions about uncertainty inherent to natural hazards in general (e.g., Todini 2004; Pappenberger and Beven 2006; Ramsey 2009), issues of uncertainty definition and typology (e.g., Thomson et al. 2005; MacEachren et al. 2005) as well as location and quantification of existing uncertainty (e.g., Apel et al. 2008). In some fields, such as seismic or tsunami hazard management, probabilistic methods are widely used (Wiemer et al. 2009) and uncertainty distributions of input parameters are taken into account and propagated through the model. In recent research, the use of probabilistic analyses has been expanded to gravitational natural hazards processes such as landslides (e.g., Refice and Capolongo 2002; Xie et al. 2004; Guzzetti et al. 2005), flooding (e.g., Krzysztofowicz 2002; Bates et al. 2004; Werner et al. 2005; Most and Wehrung 2005; Apel et al. 2006), snow avalanches (e.g., Bakkehøi 1987; Straub and Grêt-Regamey 2006; Jomelli et al. 2007), or rock fall (e.g., Straub 2006; Straub and Schubert 2008).

Cartographic visualizations are valuable tools for the presentation and assessment of spatial data (Merz et al. 2007). Consequently, hazard assessment results are often illustrated by maps. However, only a few maps include information about existing uncertainties (Pang 2008), and map users are usually not aware of existing uncertainties and limitations of the underlying geospatial information (Goodchild and Gopal 1989; Roth 2009).

Implications of this shortcoming for natural hazards management are discussed in the analysis of recent flooding in Switzerland (Bezzola and Hegg 2008), which concludes by calling for the localization and communication of uncertainty and fuzziness. Agumya and Hunter (2002) and Roth (2009) also consider the communication of uncertainty important because fully informed decisions can only be made if the uncertainty intrinsic to the input dataset is acknowledged. The effect of uncertainty visualization on decision-making has been the subject of many research projects (MacEachren and Brewer 1995; Leitner and Buttenfield 2000; Cliburn et al. 2002) that have demonstrated the supporting effects of visualizations on the process of decision-making (Deitrick 2007).

The objective of this paper is to bridge the discrepancies between theory and practice in uncertainty visualization in the field of natural hazards. The focus will be on gravitational natural hazards with local reach, including snow avalanches, debris-flows, landslides, rock fall, and flooding. These processes are spatially confined and are therefore often assessed in detail. The resulting assessment outputs are available in a high spatial resolution and can be presented in maps on a local scale (called large-scale maps in cartography), allowing for the incorporation of detailed uncertainty visualizations.

This paper is structured in seven sections. Following this introductory section, the second section treats the issue of uncertainty inherent to natural hazards assessment in general: after a short review of existing uncertainty definitions, the framework used for this research is defined, sources of assessment uncertainties are identified, and potential methods for the quantification of model uncertainties are presented. The third section addresses the state of the art in uncertainty visualization: after an overview of uncertainty visualization, existing methods for the field of natural hazards are described. The results of an expert survey concerning the existence of uncertainty and the inclusion of uncertainty representations in natural hazard maps are presented in section four. In the fifth section, an exemplary method to quantify parameter uncertainty of a deterministic snow avalanche model is suggested, followed by the presentation of cartographic visualizations of the results and a discussion of advantages and weaknesses of the suggested methods. The potential of interactive maps is demonstrated in the sixth section: the advantages of interactive systems are summarized, and it is shown how the interpretation and comprehension of uncertainty visualizations can be facilitated. Finally, the seventh section contains concluding remarks.

2 Uncertainty inherent to natural hazards assessments

2.1 Definition of uncertainty

Information uncertainty is a complex concept with many interpretations across knowledge domains and application contexts (MacEachren et al. 2005). Discussions about the quality of spatial data have been ongoing for the last 30 years, mostly performed by members of the Geographic Information Science (GIScience) community (Goodchild 1980). While early GIS research only rarely included uncertainty management, it has become an important topic and the unavoidability of uncertainty and error is acknowledged in GIScience (Veregin 1999; Sadahiro 2003; Kyriakidis 2008).

However, while some communities consistently differentiate uncertainty into specific categories (e.g., aleatory and epistemic uncertainties in probabilistic modeling), no harmonized terminology exists in the context of general geospatial uncertainty. As a consequence, various categorizations and frameworks have been developed by different authors. Thomson et al. (2005) as well as MacEachren et al. (2005) provide sound overviews of the different approaches. Since spatial data are mostly presented in the form of maps, uncertainty research in GIScience usually includes the question of how uncertainty can be visualized. For visualization purposes, typologies based on the spatial data transfer standards (SDTS, overview by Fegeas et al. 1992) have persisted.

In the context of this research, the focus lies on epistemic uncertainties. The term uncertainty is used in accordance with the framework of MacEachren et al. (2005) because, according to Roth (2009), this typology is an appropriate model of uncertainty categorization in the domain of floodplain mapping. It extends the framework of Thomson et al. (2005) and suggests that uncertainty of geospatial information consists of the components of data quality (accuracy/error, precision, completeness, consistency, lineage, and currency) as well as key elements from intelligence information assessment (credibility, subjectivity, and interrelatedness).

2.2 Sources of uncertainty in natural hazard assessments

During a hazard assessment process, different sources contribute to the total uncertainty. For visualization processes, Pang (2008) suggests splitting the visualization pipeline into three stages: acquisition, transformation, and visualization. Following this framework, the sources of uncertainty in a typical gravitational hazard assessment can, by way of example, be ordered as follows:

2.2.1 Uncertainty in acquisition

Most natural hazards assessments include the use of numerical simulations. Conducting such simulations requires the choice of a model that represents the natural process as accurately as possible. Models can be of empirical, probabilistic, or deterministic nature and simulate processes in one, two, or even three dimensions. Apart from the dimensionality, the mathematical equations describing the real world are also relevant. As these mathematical models are only simplifications of the complex natural processes, uncertainties are introduced. The responsible expert has to choose a model and judge whether it is suitable to simulate the natural process to be assessed. After a model is chosen, input parameters for the model have to be acquired. Uncertainties inherent to the input parameters arise during measurement (e.g., instrument or reading errors, ambiguities in radar measurements for DTM generation), extrapolation or interpolation (e.g., definition of input parameters for events with a recurrence interval of 300 years, interpolation of measurement points to a continuous DTM), or estimation (when parameters have to be estimated based on evidence of historical events or experiments). Once a first set of input parameters is defined, the spatial and temporal resolution of the computation has to be chosen. Often lower resolutions are chosen because high resolutions increase computation times. Since the definition of input parameters and boundary conditions is difficult even if observations of historical events are available, models are usually calibrated using parameters of observed events as constraints. The interpretation of calibrations has to be conducted with care, as dependencies of input parameters and model components can have an effect on the results (Pappenberger and Beven 2006). Such calibrations can limit the uncertainty introduced by uncertain input parameters; however, models may perform poorly when used to predict events different from those used for calibration (Di Baldassarre et al. 2010).

2.2.2 Uncertainty in transformation

Output parameters of numerical models are available in a specific format and spatial resolution. Often these results have to be transformed and edited in order to reach the form in which they are presented to customers or other researchers. Possible transformations encompass for example the transformation into a different coordinate system, transformations from raster to vector data or vice versa, classifications, generalizations, data filtering, interpolations, or smoothing of raw raster data. If an assessment is conducted by more than one person, the compiling of data and the conversion into a uniform format can contribute to uncertainty introduction.

2.2.3 Uncertainty in visualization

Once the assessment results are available in a uniform format and the appropriate scale, they can be presented in the form of cartographic visualizations. During this visualization step, uncertainty is introduced actively (e.g., positional errors by generalizations and choice of coarse resolutions) or more passively in the form of different approaches in volume rendering or in-between-frame interpolation for animated visualizations (Pang 2008).

2.3 Quantification of uncertainty in natural hazard assessments

After sources of uncertainty introduction are identified, existing uncertainties have to be quantified in order to allow for uncertainty visualizations. Existing approaches include, for example, the application of Bayesian networks (Bates et al. 2004; Straub 2006; Straub and Grêt-Regamey 2006), Monte Carlo simulations (Zischg et al. 2004; Apel et al. 2008), sensitivity analyses (Borstad and McClung 2009), and the Generalized Likelihood Uncertainty Estimation (GLUE, Beven and Binley 1992).
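Of the approaches listed above, Monte Carlo simulation is perhaps the easiest to illustrate in a few lines. The following is only a minimal sketch under stated assumptions: `toy_runout` is a made-up stand-in for a deterministic process model, and the input distributions (a normal distribution for fracture depth, a uniform distribution for a friction coefficient) are hypothetical and not taken from any of the cited studies.

```python
import random
from statistics import mean, pstdev


def toy_runout(fracture_depth_m, friction):
    """Hypothetical deterministic model: returns a runout distance in metres.

    This formula is invented for illustration and has no physical basis.
    """
    return 500.0 * fracture_depth_m / friction


def monte_carlo(n_runs, seed=42):
    """Sample uncertain inputs and propagate them through the model."""
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    results = []
    for _ in range(n_runs):
        # Assumed input distributions (hypothetical values).
        depth = max(0.1, rng.gauss(1.0, 0.2))  # fracture depth in m
        friction = rng.uniform(0.2, 0.3)       # dimensionless coefficient
        results.append(toy_runout(depth, friction))
    return results


runouts = monte_carlo(1000)
print("mean runout:", round(mean(runouts), 1), "m")
print("standard deviation:", round(pstdev(runouts), 1), "m")
```

The spread of the sampled outputs then serves as a quantitative uncertainty measure, provided that defensible input distributions are available.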

As probabilistic models require input parameter distributions, the design of such models makes sense primarily when sound statistical data about the input parameters are available. With respect to natural processes, this is the case for hydrological data as input for hydraulic flood models, and many researchers are engaged in probabilistic flood modeling. For other processes, however, the determination of input parameter distributions is more difficult due to the lack of observations. Consequently, most simulations are based on deterministic models only, and many hazard maps are generated without accounting for uncertainty. Swiss hazard maps are an example: while they are considered to be among the most advanced in Europe for the inclusion of three hazard zones derived from intensities and frequencies (Hagemeier-Klose and Wagner 2009), experts can freely choose the assessment method, and the consideration of uncertainties is not specifically mentioned in the national guidelines. Since these maps are only about to be finished and the use of deterministic models is standard practice, it is not to be expected that a transition toward the use of probabilistic models will take place anytime soon.

3 Uncertainty visualization

Although the necessity to communicate uncertainty information has been identified (e.g., Bezzola and Hegg 2008), there is no consensus about the best means of communication. Some experts argue that the inclusion of uncertainty information only confuses the map reader and can lead to misunderstandings. Evans (1997), however, conducted a study and concluded that all participants were able to interpret the visualized uncertainty information. Pappenberger and Beven (2006) also reject the argument that decision-makers are not capable of understanding uncertainty distributions or measures and suggest that the communication may be the major problem, rather than any real lack of understanding (Sayers et al. 2002).

Maps give a more direct and stronger impression of the spatial distribution of data than other forms of presentation (Merz et al. 2007). The visualization of uncertainty as a means of communication has therefore been considered early in the uncertainty discussion (Buttenfield and Beard 1991). According to Pang et al. (1997), uncertainty visualization strives to present data together with auxiliary uncertainty information, and the ultimate goal is to provide users with visualizations that incorporate and reflect uncertainty information to aid in data analysis and decision-making.

The main challenge of uncertainty visualization research is to find suitable visual variables to depict single elements of uncertainty. The term “visual variables” was introduced by Bertin (1983) and encompasses eight variables divided into ordering and differential variables. Ordering variables encompass the two dimensions of the plane, size, and color value (also referred to as brightness by Wilkinson (2005) or lightness by Slocum et al. (2005)). Differential variables were defined to be color hue, texture (pattern), orientation, and shape. Visual variables define the characteristics of point, linear, and areal symbolization. Several extensions to Bertin’s (1983) definition have been suggested; the most important additions in terms of uncertainty visualizations are color saturation (also called intensity; added by Morrison 1984), transparency (added by Wilkinson 2005), and clarity (depending on the variables crispness, resolution, and transparency; added by MacEachren 1995). Figure 1 shows an overview of selected visual variables.

Fig. 1 Overview of the visual variables color hue, color value, color saturation, shape, size, orientation, texture, transparency, and clarity (illustrations: Schnabel 2007)

Buttenfield and Ganter (1990) were among the first researchers who categorized the components of data quality with focus on uncertainty visualization; they matched the five SDTS categories to three data types (discrete, categorical, and continuous) and considered which visual variables are most suitable for the representation of the resulting categories. However, uncertainty visualization techniques not only include the modification of visual variables. Some methods also make use of added geometry in the form of glyphs (compound point symbols), isolines, or other shapes (including simple point symbols).

3.1 Uncertainty visualization methods for uncertainties in natural hazards assessments

While research of scientific visualization communities produced sophisticated visualizations of multivariate data in multiple dimensions (e.g., Pang and Freeman 1996), most applied uncertainty visualizations in the field of natural hazards are simplistic univariate representations (meaning that hazard related data are displayed in one map and inherent uncertainties are depicted in a second map display) (e.g., Leedal et al. 2010). Trau and Hurni (2007) analyzed the suitability of visual variables and visualization techniques for uncertainty depictions in hazard prediction maps. Pang (2008) discusses the issue of uncertainty inherent to natural hazards in detail and suggests methods for multiple dimensions and data types.

Table 1 presents visual variables and visualization techniques that were found suitable for the depiction of uncertainty in natural hazards assessment by Trau and Hurni (2007) and Pang (2008).

Apart from visualization methods for the illustration of scalar uncertainty, Pang (2008) presents various potential methods for the depiction of vector and multi-value uncertainty. Many of them are complex, difficult to implement, and hard to interpret. However, Pang (2008) states that it is most important that presentations are kept simple and reserved for the most critical information in a decision process. For this reason and because many practitioners still fear that uncertainty visualizations cannot be interpreted by decision-makers, it is suggested to limit the displayed visualizations to the depiction of scalar values of the most critical uncertainty information. Further components can be presented in the form of textual specifications in special windows or tooltip information.

4 Expert survey

In order to find out whether the theoretically presented solutions for addressing uncertainty are reflected in practice, the opinions of natural hazards experts on uncertainty inherent to natural hazard assessments and their visualizations were collected. An online questionnaire was sent to 65 natural hazards experts. The survey was answered by 34 experts in October and November 2009. It started with four introductory questions that concerned the level of experience, the range of assessed processes, and the methods used for hazard assessments: all experts worked in the field of natural hazards management and had an average experience of 14 years. The processes they assessed comprised floods, debris-flows, landslides, rock fall, snow avalanches, and sink holes. Two-thirds conducted the numerical modeling of the processes themselves; the other third was more engaged in management than in assessment activities. None of the experts reported using probabilistic methods.

Since only Swiss experts were interviewed, the answers refer to existing hazard maps in Switzerland. It is obvious that the experience of this small circle of specialists does not allow for a universal conclusion. However, since hazard mapping in Switzerland has a long history and the Swiss system has been adopted by other countries or regions (Zimmermann et al. 2005), the gathered opinions can serve as a basis for the definition of user needs as well as the location of existing shortcomings.

The main part of the survey covered the issue of uncertainty visualizations in hazard representations. An overview of the questions and answers is presented in Fig. 2.

In summary, it can be concluded that the majority of the experts agree that natural hazard assessments are associated with uncertainties that are often considerable or even serious. The improvement of the communication of these uncertainties among experts is considered important, and the use of interactive digital environments for the presentation of hazard related data and uncertainty is welcomed. However, there is no consensus about the ideal means of communication (some experts think that visualizations are a suitable tool, others prefer textual representations, graphs, or tables), or about whether quantitative uncertainty information should be provided at all.

If the results of our expert survey are compared with those of Roth’s (2009) focus groups, they both agree that uncertainty exists and that visualizations are a potential way to communicate uncertainties. While the participants of Roth’s focus groups are used to the ideas of quality checks according to guidelines (e.g., FEMA 2009), Swiss experts are not obliged to comply with any quality standard. Both groups, however, do not systematically represent uncertainties in their hazard maps and also ignore them during their natural hazards management tasks. Consequently, the discrepancy between theoretical uncertainty/uncertainty visualization research and practical realization as observed by Roth (2009) is confirmed by our expert survey.

Concerning this survey, it has to be mentioned that personal comments made by the experts revealed that many of them are reluctant to communicate uncertainties to the general public but would welcome an open discussion among experts. The opinion that the legally regulated hazard maps should stay as they are (and should therefore not contain uncertainty visualizations) was expressed repeatedly. This indicates that some of the more conservative views (i.e., reluctance to include uncertainty visualizations) might have been more open if hazard visualizations for expert users, and not the legally regulated hazard maps, had been discussed in this survey.

5 Exemplary quantification of model uncertainties and potential visualization methods

One reason for the existing discrepancy between the need for the communication of existing uncertainty and fuzziness in natural hazards assessments (Bezzola and Hegg 2008) and the fact that Swiss experts (and many of their international colleagues) currently are not obliged to include quantitative information about uncertainty inherent to the assessment process may be the challenge of uncertainty quantification. Although suitable approaches for the visualization of uncertainties have been suggested, they can only be applied to available uncertainty information. As none of the questioned experts seems to be using probabilistic models for the assessment of the natural hazard situation, the quantification of uncertainty can be an issue. Consequently, a potential way to generate quantitative measures for the model uncertainty inherent to natural hazard simulations is presented.

5.1 Sensitivity analysis of a snow avalanche event

The initial and boundary conditions for the simulation of an extreme event are difficult to forecast with great confidence (Borstad and McClung 2009). Apart from the friction coefficients, fracture depth and release zones are the most important input parameters to the dynamic snow avalanche model RAMMS:avalanche (Christen et al. 2010) that was used for this study. In practice, friction parameters are chosen according to experience (expert opinion or existing guidelines, e.g., Salm et al. 1990). Prior to a calculation, release zones and fracture depths have to be determined by an expert. This poses a challenge since pictures of release areas taken shortly after a historical event only rarely exist. GIS can be of help in defining potential release areas according to slope angles and aspects.

As input parameters are difficult to determine, numerical models are usually calibrated against an observed event with a certain recurrence interval. Calibrations of snow avalanche models are mostly conducted using observed runout distances of historical events as boundary condition. A clear distinction between the influences of the friction parameters and the release volume, however, cannot be made since multiple parameter combinations might lead to the same results. For this study, it was neither the goal to accurately predict potential future snow avalanche events nor to conduct a complete sensitivity analysis of the used model, but to give an idea about the influences of input parameter variations on model outputs.

In order to measure the effects of input parameter uncertainty on the resulting output parameters, a sensitivity analysis was conducted for a snow avalanche simulation in the Stampbach gully in Blatten VS, Switzerland. The RAMMS:avalanche model was calibrated with the help of the runout distance of the historical event of April 4, 1999, which was estimated to have a recurrence interval of 30 years. The calibrated friction parameters were adopted for all subsequent calculations. The sensitivity of the results with respect to input parameter uncertainty was determined by exemplarily varying the release zone area by 1 m (moderate input uncertainty assumed) and 10 m (strong uncertainty) and the fracture depth by 10% (moderate uncertainty) and 50% (strong uncertainty). This variation resulted in 25 parameter combinations. The 25 resulting sets of output parameters (max. snow height, max. velocity, and max. impact pressure) are used as the basis for the calculation of existing model uncertainties. As uncertainty measures, min–max spread, standard deviation, and variation coefficient were estimated for each output parameter and each 5 × 5 m raster cell. The min–max spread reflects the total range within which the output parameters vary. If this variation is large, the model reacts sensitively to the varied input parameter. The standard deviation conveys not only a picture of the spread but also how the values are distributed around the mean. These two measures, however, can only be interpreted if they are compared with the mean value of the output parameter (e.g., a standard deviation of 0.5 m might be acceptable for a raster cell where the total snow height is 10 m, while the same standard deviation is quite large for a snow height of 0.2 m). In the case of the variation coefficient (ratio of standard deviation to mean), this comparison is included in the measure. Due to this advantage, variation coefficients were used for all subsequent visualizations.
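The per-cell uncertainty measures described above can be sketched in a few lines of Python. This is a minimal illustration, not the procedure used in the study: `toy_model` is a made-up stand-in for a single simulation run (it is not RAMMS), the three cell values are invented, and the reading of the 25 combinations as five levels per parameter (reference run plus moderate and strong variation in both directions) is an assumption, as is the use of the population standard deviation.

```python
from itertools import product
from statistics import mean, pstdev

# Assumed perturbation levels (0 m and factor 1.0 = calibrated reference run).
RELEASE_SHIFTS_M = [-10.0, -1.0, 0.0, 1.0, 10.0]   # release zone variation
DEPTH_FACTORS = [0.5, 0.9, 1.0, 1.1, 1.5]          # fracture depth variation


def toy_model(shift_m, depth_factor):
    """Hypothetical stand-in for one simulation run; NOT the RAMMS model.

    Returns an invented max. snow height (m) for three raster cells.
    """
    base_heights = [10.0, 2.0, 0.2]
    scale = depth_factor * (1.0 + shift_m / 100.0)
    return [height * scale for height in base_heights]


def cell_statistics(values):
    """Min-max spread, standard deviation, and variation coefficient."""
    m = mean(values)
    sd = pstdev(values)  # population standard deviation (assumption)
    cv = sd / m if m > 0 else float("nan")
    return {"spread": max(values) - min(values), "std": sd, "cv": cv}


combinations = list(product(RELEASE_SHIFTS_M, DEPTH_FACTORS))  # 5 x 5 runs
runs = [toy_model(shift, factor) for shift, factor in combinations]

# Transpose the runs so that each entry holds all values of one raster cell.
per_cell = [cell_statistics(list(cell_values)) for cell_values in zip(*runs)]

for index, stats in enumerate(per_cell):
    print(index, {key: round(value, 3) for key, value in stats.items()})
```

In this toy setup the standard deviation differs strongly between the cells, whereas the variation coefficient is identical for all of them, which mirrors the argument for preferring the variation coefficient when cells with very different mean values are compared.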

5.2 Implementation and assessment of visualizations for natural hazards assessment uncertainty

The visualization methods suggested in Table 1 were chosen from the many existing uncertainty visualization techniques from different fields. To evaluate their suitability for use in the field of natural hazards, they need to be applied to a real data set so that the advantages and weaknesses of each method can be compared and assessed. For this purpose, different methods have been applied to the data set of the Stampbach avalanche.

Following Trau and Hurni’s (2007) approach, a distinction was made between univariate displays, where data and uncertainty are displayed in separate maps that have to be compared, and bivariate displays, where thematic data and inherent uncertainty are displayed in one single map. Bivariate approaches are further divided into extrinsic techniques, where additional geometry is added to the symbolization, and intrinsic techniques, where a visual variable of the symbolization is modified to depict uncertainty.

Figure 3a shows an intrinsic approach where impact pressure and uncertainty are both mapped to the color of the raster cell; pressures are mapped to color hue (yellow, orange, and red) and variation coefficients to color value (100, 80, and 50%). In Fig. 3b, pressures are mapped to the same color hues as in Fig. 3a; uncertainty, however, is mapped to color saturation (70, 40, and 15%).
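An intrinsic mapping of this kind (hue for the pressure class, color value for the uncertainty class) can be sketched with Python's standard `colorsys` module. The hues and the color values of 100, 80, and 50% follow the description above; the class thresholds, the function names, and the choice to assign darker colors to higher uncertainty are assumptions made for illustration only.

```python
import colorsys

# Hue per impact pressure class in degrees: yellow, orange, red.
PRESSURE_HUES_DEG = {"low": 60.0, "medium": 30.0, "high": 0.0}

# Color value per uncertainty class (100, 80, and 50 %).
# Assumption: the darker the color, the higher the uncertainty.
UNCERTAINTY_VALUES = {"low": 1.0, "medium": 0.8, "high": 0.5}


def classify(x, lower, upper):
    """Assign x to one of three classes using two thresholds."""
    if x < lower:
        return "low"
    if x < upper:
        return "medium"
    return "high"


def cell_color(pressure_kpa, variation_coefficient):
    """Return a hex color that encodes pressure (hue) and uncertainty (value)."""
    # Hypothetical class thresholds, chosen only for this sketch.
    pressure_class = classify(pressure_kpa, 3.0, 30.0)
    uncertainty_class = classify(variation_coefficient, 0.1, 0.5)
    hue = PRESSURE_HUES_DEG[pressure_class] / 360.0
    value = UNCERTAINTY_VALUES[uncertainty_class]
    r, g, b = colorsys.hsv_to_rgb(hue, 1.0, value)  # full saturation
    return "#{:02x}{:02x}{:02x}".format(
        round(r * 255), round(g * 255), round(b * 255)
    )


print(cell_color(50.0, 0.05))  # high pressure, low uncertainty
print(cell_color(50.0, 0.90))  # high pressure, high uncertainty
```

The saturation variant of Fig. 3b could be obtained in the same way by keeping the value fixed and passing the uncertainty-dependent saturation as the second argument of `hsv_to_rgb`.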

In general, any visual variable presented in Table 1 can be used for the visualization of uncertainty in intrinsic approaches. Table 2 lists these visual variables and offers comments about their use for uncertainty visualization.

All intrinsic approaches have in common that slight changes in uncertainty are difficult to identify, especially for data sets with great variability. Solutions to mitigate this problem with the help of interactive functionality will be presented in the next section.

In Fig. 4, impact pressures and variation coefficients are displayed using bivariate approaches. Impact pressures are mapped to a blue color scheme (light blue = low pressures, dark blue = high pressures), overlaid by the variation coefficients (extrinsic approach). In Fig. 4a, this overlay consists of a red point symbol (small diameter = low variation coefficient, large diameter = high variation coefficient) for each raster cell. Fig. 4b shows a different extrinsic approach, where variation coefficients are displayed by scattered point symbols. In regions with low variation coefficients, points are small and loosely scattered while regions with high variation coefficients are covered by larger points that are densely scattered. A third extrinsic method is shown in Fig. 4c where uncertainty is added in the form of isolines.
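For the extrinsic point symbol overlay, the diameter of each symbol has to be derived from the variation coefficient of its raster cell. A minimal sketch of one possible scaling is given below; the linear interpolation, the function name, and the diameter limits are assumptions and are not taken from the figures.

```python
def symbol_diameter(cv, cv_min, cv_max, d_min=1.0, d_max=5.0):
    """Linearly scale a variation coefficient to a point symbol diameter.

    d_min and d_max are hypothetical diameters in map units; d_max would
    typically not exceed the raster cell size so that symbols do not overlap.
    """
    if cv_max <= cv_min:
        return d_min  # degenerate range: use the smallest symbol
    t = (cv - cv_min) / (cv_max - cv_min)
    t = min(1.0, max(0.0, t))  # clamp values outside the range
    return d_min + t * (d_max - d_min)


for cv in (0.0, 0.25, 0.5, 1.0):
    print(cv, symbol_diameter(cv, 0.0, 1.0))
```

A classed scaling with a small number of diameters could be used instead if readers are expected to compare symbols against a legend.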

While the visualization of uncertainty in intrinsic approaches is realized by the variation of one visual variable, the visual techniques used for extrinsic approaches are combinations of several visual variables. Table 3 summarizes the visualization techniques suggested to depict uncertainty and offers comments about strengths and weaknesses.

In Fig. 5, impact pressures and variation coefficients are displayed using univariate methods. Fig. 5a shows a 2D split display where impact pressures are shown in the top display, mapped to a blue color scheme (light blue = low pressures, dark blue = high pressures). Variation coefficients of the impact pressures are displayed beneath, mapped to a color scheme ranging from yellow (low variation coefficients) over orange to red (high variation coefficients). In Fig. 5b, a 3D approach was applied; on the left, impact pressures are displayed in the form of 3D bar charts placed on a block diagram (short bars = low pressures, tall bars = high pressures). As a comparison of bar heights is hindered by the terrain, the magnitude of impact pressures is additionally indicated by color saturation (unsaturated purple = low pressures, saturated purple = high pressures). Variation coefficients are shown on the right, also mapped to bar charts and color saturation (orange color scheme).

Univariate methods can make use of either the variation of a visual variable (presented in Table 2) or the application of visual techniques (presented in Table 3).

In the next section, it is discussed how the interpretation and comprehension of uncertainty visualizations can be facilitated by interactive functionality within a cartographic information system.

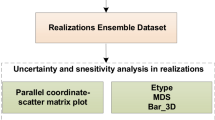

6 Interactive cartographic information system

Interactive cartographic information systems encompass numerous characteristics and functionalities that facilitate the presentation of complex information. Hurni (2008) defines the components of such systems (alternatively called Multimedia Atlas Information Systems, MAIS) and gives an overview of advantages for the exploration and visualization of spatial data. One advantage in the context of uncertainty visualization is the interactive querying of available data: information about the assessment results as well as uncertainty information can be displayed in tooltip windows. Conveying exact quantitative measures for each symbolized data point in this way is especially helpful when the visualization method does not allow for a quantitative analysis. Additional information windows or bars can offer important details about assessment methods and uncertainty calculations. If the wealth of information is overwhelming for users, they can exclude layers from the display and only select data of interest. In addition, different visualization methods can be offered, for example 2D or 2.5D methods or different symbolizations such as bar charts, interpolated areas, flat textures, or colored areas. Sophisticated systems can even allow for a user-tailored customization of the offered visualizations; colors, class boundaries, or thresholds can be set by the user. Such a customization aims at facilitating the interpretation and understanding of complex data, including uncertainties (Kunz et al. 2011).
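Two of the interactive functions mentioned above, user-defined class boundaries and tooltips with exact values, can be sketched as follows. This is only an illustration of the idea and not the implementation of the system described in this paper; the function names, the tooltip wording, and the example boundaries are hypothetical.

```python
from bisect import bisect_right


def classify(value, boundaries):
    """Return the class index of value for sorted, user-defined boundaries.

    With n boundaries there are n + 1 classes; a value equal to a boundary
    falls into the upper class.
    """
    return bisect_right(boundaries, value)


def tooltip(pressure_kpa, variation_coefficient, boundaries):
    """Compose the text shown when a raster cell is queried."""
    index = classify(variation_coefficient, boundaries)
    return (
        f"Impact pressure: {pressure_kpa:.1f} kPa | "
        f"variation coefficient: {variation_coefficient:.2f} "
        f"(class {index + 1} of {len(boundaries) + 1})"
    )


user_boundaries = [0.1, 0.5]  # hypothetical class boundaries set by the user
print(tooltip(12.34, 0.27, user_boundaries))
```

Keeping the class boundaries as plain data makes it straightforward to redraw the map whenever the user changes them, while the tooltip always reports the unclassified value.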

In order to make the aforementioned advantages available for natural hazard management tasks, a cartographic information system for the exploration and visualization of natural hazards assessments and associated uncertainties has been developed. The GUI of the system is based on the Swiss World Atlas interactive (Cron et al. 2009) and is characterized by its lean layout without many buttons or menus.

Figure 6 shows the graphical user interface of this system.

Apart from choosing among different cartographic methods for the visualization of the assessment results and the inherent uncertainties, users can customize these visualizations according to their needs. This flexibility is intended to accommodate the different needs of the heterogeneous user group of natural hazards specialists and thus to prevent misunderstandings caused by incomprehensible maps. A complete decision structure of the system was provided by Kunz et al. (2011).

Opinions of natural hazards experts concerning the integrated interactive functionality and visualization methods have been gathered by expert interviews (Kunz et al. 2011); overall, the system was well received by the experts and the suggested functionalities as well as the offered visualizations were found to facilitate the interpretation of natural hazards assessment results.

7 Conclusions

The various existing uncertainty definitions and typologies in the field of natural hazards hinder clear communication and consequently the understanding of existing uncertainties. Furthermore, the fear of natural hazards experts that uncertainty information and visualizations will not be correctly interpreted, as well as the lack of guidelines or codes for standardized visualizations, inhibits clear communication of uncertainties associated with natural hazards assessment results. As long as no standard or legal regulation exists and deterministic models are the standard method for hazard assessments, the generation of uncertainty information results in more work for the assessing expert and therefore presents an economic disadvantage. In this paper, it was shown how uncertainties in natural hazard modeling can be quantified with the help of a sensitivity analysis and how these uncertainties can be visualized. The suggested uncertainty visualizations were implemented in a cartographic information system that has been developed for natural hazards experts with the purpose of exploring and visualizing assessment data including uncertainties. The web-based technology of this system allows for easy, platform-independent access without additional software. Finally, such an interactive system can help to interpret and understand complex assessments including associated uncertainty and thus facilitate communication among experts.

References

Agumya A, Hunter GJ (2002) Responding to the consequences of uncertainty in geographical data. Int J Geogr Inf Sci 16:405–417. doi:10.1080/13658810210137031

Apel H, Thieken A, Merz B, Blöschl G (2006) A probabilistic modelling system for assessing flood risks. Nat Hazards 38(1):79–100. doi:10.1007/s11069-005-8603-7

Apel H, Merz B, Thieken AH (2008) Quantification of uncertainties in flood risk assessment. Int J River Basin Manage 6(2):149–162. doi:10.1080/15715124.2008.9635344

Bakkehøi S (1987) Snow avalanche prediction using a probabilistic method. Avalanche formation, movement and effects IAHS Special Publication (162):549–556. ISBN: 0-947571-96-5

Bates PD, Horritt MS, Aronica G, Beven K (2004) Bayesian updating of flood inundation likelihoods conditioned on flood extent data. Hydrol Process 18:3347–3370. doi:10.1002/hyp.1499

Bertin J (1983) Semiology of graphics: diagrams, networks, maps. University of Wisconsin Press, Madison. ISBN: 978-0299090609

Beven K, Binley A (1992) The future of distributed models: model calibration and uncertainty prediction. Hydrol Process 6(3):279–298. doi:10.1002/hyp.3360060305

Bezzola GR, Hegg C (2008) Ereignisanalyse Hochwasser 2005, Teil 2—Analyse von Prozessen, Massnahmen und Gefahrengrundlagen. BAFU, Bern. http://www.bafu.admin.ch/publikationen/publikation/00100/index.html

Borstad CP, McClung DM (2009) Sensitivity analyses in snow avalanche dynamics modeling and implications when modeling extreme events. Can Geotech J 46(9):1024–1033. doi:10.1139/T09-042

Buttenfield BP, Beard MK (1991) Visualizing the quality of spatial information. Paper presented at AutoCarto X, International symposium on computer-assisted cartography, Baltimore, MD. http://mapcontext.com/autocarto/proceedings/auto-carto-10/pdf/visualizating-the-quality-of-spatial-information.pdf

Buttenfield BP, Ganter JH (1990) Visualisation and GIS: what should we see? What might we miss? Paper presented at the 4th international symposium on spatial data handling, Zurich, Switzerland

Christen M, Kowalski J, Bartelt P (2010) RAMMS: numerical simulation of dense snow avalanches in three-dimensional terrain. Cold Reg Sci Technol 63(1–2):1–14. doi:10.1016/j.coldregions.2010.04.005

Cliburn DC, Feddema JJ, Miller JR, Slocum TA (2002) Design and evaluation of a decision support system in a water balance application. Comput Gr 26(2002):931–949. doi:10.1016/S0097-8493(02)00181-4

Cron J, Marty P, Bär H, Hurni L (2009) Navigation in school atlases: Functionality, design and implementation in the “Swiss world atlas interactive”. Paper presented at the 24th international cartographic conference ICC, Santiago, Chile. http://icaci.org/documents/ICC_proceedings/ICC2009/html/nonref/14_5.pdf

Deitrick SA (2007) Uncertainty visualization and decision making: Does visualizing uncertain information change decisions? Paper presented at the 23rd international cartographic conference ICC, Moscow, Russia. http://icaci.org/documents/ICC_proceedings/ICC2007/html/Proceedings.htm

Di Baldassarre G, Schumann G, Bates PD, Freer JE, Beven KJ (2010) Flood-plain mapping: a critical discussion of deterministic and probabilistic approaches. Hydrol Sci J 55(3):364–376. doi:10.1080/02626661003683389

Evans BJ (1997) Dynamic display of spatial data-reliability: does it benefit the map user? Comput Geosci 23(4):409–422. doi:10.1016/S0098-3004(97)00011-3

Fegeas RG, Cascio JL, Lazar RA (1992) An overview of FIPS 173, the spatial data transfer standard. Cartogr Geogr Info Sci 19(5):278–293. doi:10.1559/152304092783762209

FEMA (2009) Guidelines and specifications for flood hazard mapping partners. Map modernization. Federal Emergency Management Agency, US Department of Homeland Security, Washington DC. http://www.fema.gov/library/viewRecord.do?id=2206

Goodchild MF (1980) Fractals and the accuracy of geographical measures. Math Geol 12:85–98. doi:10.1007/BF01035241

Goodchild MF, Gopal S (eds) (1989) The accuracy of spatial databases. Taylor & Francis, London. ISBN: 978-0850668476

Guzzetti F, Reichenbach P, Cardinali M, Galli M, Ardizzone F (2005) Probabilistic landslide hazard assessment: An example in the Collazzone area, central Italy. In: Bergmeister K, Strauss A, Rickenmann D (eds) 3rd probabilistic workshop, technical systems and natural hazards. Schriftenreihe des Departments, Vol 7, pp 173–182. http://nbn-resolving.de/urn:nbn:de:bsz:14-ds-1232900322783-70671

Hagemeier-Klose M, Wagner K (2009) Evaluation of flood hazard maps in print and web mapping services as information tools in flood risk communication. Nat Hazards Earth Syst Sci 9:563–574. doi:10.5194/nhess-9-563-2009

Hurni L (2008) Multimedia atlas information systems. In: Shekhar S, Xiong H (eds) Encyclopedia of GIS. Springer Science+Business Media, New York, pp 759–763. ISBN: 978-0-387-30858-6

Jomelli V, Delval C, Grancher D, Escande S, Brunstein D, Hetu B, Filion L, Pech P (2007) Probabilistic analysis of recent snow avalanche activity and weather in the French Alps. Cold Reg Sci Technol 47(1–2):180–192. doi:10.1016/j.coldregions.2006.08.003

Krzysztofowicz R (2002) Bayesian system for probabilistic river stage forecasting. J Hydrol 268(1–4):16–40. doi:10.1016/S0022-1694(02)00106-3

Kunz M, Grêt-Regamey A, Hurni L (2011) Customized visualization of natural hazards assessment results and associated uncertainties through interactive functionality. Cartogr Geogr Inf Sci (in press)

Kyriakidis P (2008) Spatial uncertainty and imprecision. In: Shekhar S, Xiong H (eds) Encyclopedia of GIS. Springer Science+Business Media, New York. ISBN: 978-0-387-30858-6

Leedal D, Neal J, Beven K, Young P, Bates P (2010) Visualization approaches for communicating real-time flood forecasting level and inundation information. J Flood Risk Manag 3(2):140–150. doi:10.1111/j.1753-318X.2010.01063.x

Leitner M, Buttenfield BP (2000) Guidelines for the display of attribute certainty. Cartogr Geogr Inf Sci 27(1):3–14. doi:10.1559/152304000783548037

MacEachren AM (1995) How maps work: representation, visualization, and design. Guilford Press, New York. ISBN: 978-1572300408

MacEachren AM, Brewer CA (1995) Mapping health statistics: Representing data reliability. Paper presented at the 17th international cartographic conference ICC, Barcelona, Spain. http://www.geovista.psu.edu/publications/ica1995/MacEachren_%20Mapping%20health%20statistics.pdf

MacEachren AM, Robinson A, Hopper S, Gardner S, Murray R, Gahegan M, Hetzler E (2005) Visualizing geospatial information uncertainty: what we know and what we need to know. Cartogr Geogr Inf Sci 32(3):139–160. doi:10.1559/1523040054738936

Merz B, Thieken AH, Gocht M (2007) Flood risk mapping at the local scale: concepts and challenges. In: Begum S, Stive MJF, Hall JW (eds) Flood risk management in Europe, Vol 25. Advances in natural and technological hazards research. Springer, Netherlands, pp 231–251. doi:10.1007/978-1-4020-4200-3_13

Morrison JL (1984) Applied cartographic communication: map symbolization for atlases. Cartographica 21(1):44–84. doi:10.3138/X43X-4479-4G34-J674

Most HVD, Wehrung M (2005) Dealing with uncertainty in flood risk assessment of dike rings in the Netherlands. Nat Hazards 36(1):191–206. doi:10.1007/s11069-004-4548-5

Pang A (2008) Visualizing uncertainty in natural hazards. In: Bostrom A, French SP, Gottlieb SJ (eds) Risk assessment, modeling and decision support. Springer, Berlin Heidelberg. doi:10.1007/978-3-540-71158-2

Pang A, Freeman A (1996) Methods for comparing 3D surface attributes. In: SPIE Vol 2656 Visual data exploration and analysis III, pp 58–64. ISBN: 9780819420305

Pang A, Wittenbrink CM, Lodha SK (1997) Approaches to uncertainty visualization. Vis Comput 13:370–390. doi:10.1007/s003710050111

Pappenberger F, Beven KJ (2006) Ignorance is bliss: or seven reasons not to use uncertainty analysis. Water Resour Res 42. doi:10.1029/2005WR004820

Ramsey M (2009) Uncertainty in the assessment of hazard, exposure and risk. Environ Geochem Health 31(2):205–217. doi:10.1007/s10653-008-9211-8

Refice A, Capolongo D (2002) Probabilistic modeling of uncertainties in earthquake-induced landslide hazard assessment. Comput Geosci 28(6):735–749. doi:10.1016/S0098-3004(01)00104-2

Roth RE (2009) A qualitative approach to understanding the role of geographic information uncertainty during decision making. Cartogr Geogr Inf Sci 36:315–330. doi:10.1559/152304009789786326

Sadahiro Y (2003) Stability of the surface generated from distributed points of uncertain location. Int J Geogr Inf Sci 17:139–156. doi:10.1080/713811751

Salm B, Burkhard A, Gubler HU (1990) Berechnung von Fliesslawinen, vol 47. Mitteilungen des Eidgenössischen Instituts für Schnee- und Lawinenforschung, SLF, Davos

Sayers PB, Gouldby BP, Simm JD, Meadowcroft I, Hall J (2002) Risk, performance and uncertainty in flood and coastal defence: a review. Flood and Coastal Defence R&D programme, Tech Rep. FD230/TR1. DEFRA/EA, Wallingford, UK. http://www.safecoast.org/editor/databank/File/risk,%20performance%20and%20uncertainty%20in%20flood%20defence.pdf

Schnabel O (2007) Benutzerdefinierte Diagrammsignaturen in Karten. Dissertation, ETH Zurich. http://e-collection.library.ethz.ch/eserv/eth:29352/eth-29352-02.pdf

Slocum TA, McMaster RB, Kessler FC, Howard HH (2005) Thematic cartography and geovisualization. Prentice Hall series in geographic information science, 2nd edn. Pearson-Prentice Hall, Upper Saddle River. ISBN: 978-0130351234

Straub D (2006) Natural hazards risk assessment using Bayesian networks. In: Augusti G (ed) Safety and reliability of engineering systems and structures (ICOSSAR 05). Millpress, Rotterdam, pp 2535–2542. ISBN: 90-5966-056-0

Straub D, Grêt-Regamey A (2006) A Bayesian probabilistic framework for avalanche modelling based on observation. Cold Reg Sci Technol 46(2006):192–203. doi:10.1016/j.coldregions.2006.08.024

Straub D, Schubert M (2008) Modeling and managing uncertainties in rock-fall hazards. Georisk: Assess Manag Risk Eng Syst Geohazards 2(1):1–15. doi:10.1080/17499510701835696

Thomson J, MacEachren AM, Gahegan M, Pavel M (2005) A typology for visualizing uncertainty. Paper presented at the conference on visualization and data analysis 2005, San Jose, CA. doi:10.1117/12.587254

Todini E (2004) Role and treatment of uncertainty in real-time flood forecasting. Hydrol Process 18:2743–2746. doi:10.1002/hyp.5687

Trau J, Hurni L (2007) Possibilities of incorporating and visualizing uncertainty in natural hazard prediction. Paper presented at the 23rd international cartographic conference ICC, Moscow, Russia. http://icaci.org/documents/ICC_proceedings/ICC2007/html/Proceedings.htm

Veregin H (1999) Data quality parameters. In: Longley PA, Goodchild MF, Maguire DJ, Rhind DW (eds) Geographical information systems, principles and technical issues. Wiley, New York, pp 177–189. ISBN: 978-0-471-73545-8

Werner M, Reggiani P, Roo AD, Bates P, Sprokkereef E (2005) Flood forecasting and warning at the river basin and at the European scale. Nat Hazards 36(1):25–42. doi:10.1007/s11069-004-4537-8

Wiemer S, Giardini D, Fäh D, Deichmann N, Sellami S (2009) Probabilistic seismic hazard assessment of Switzerland: best estimates and uncertainties. J Seismol 13(4):449–478. doi:10.1007/s10950-008-9138-7

Wilkinson L (2005) The grammar of graphics. Statistics and computing, 2nd edn. Springer, New York. doi:10.1007/0-387-28695-0

Xie M, Esaki T, Zhou G (2004) GIS-based probabilistic mapping of landslide hazard using a three-dimensional deterministic model. Nat Hazards 33(2):265–282. doi:10.1023/B:NHAZ.0000037036.01850.0d

Zimmermann M, Pozzi A, Stoessel F (2005) Vademecum—hazard maps and related instruments—the Swiss system and its application abroad—capitalization of experience. DEZA, Bern. http://www.deza.admin.ch/ressources/resource_en_25123.pdf

Zischg A, Fuchs S, Stötter J (2004) Uncertainties and fuzziness in analysing risk related to natural hazards: a case study in the Ortles Alps, South Tyrol, Italy. In: Brebbia CA (ed) Risk analysis IV. WIT Press, Southampton. ISBN: 1-85312-736-1

Acknowledgments

The authors would like to thank Christian Omlin and Sascha Thöni of the Swiss World Atlas interactive team for the technical implementation of the cartographic information system. Their effort is much appreciated. Thanks also go to Christoph Graf of the Swiss Federal Institute for Forest, Snow and Landscape Research WSL for his valuable comments, his help concerning the choice of the study area, and the provision of base data for the numerical simulation. André Henzen of the WSL Institute for Snow and Avalanche Research SLF assisted in the reconstruction of the historical snow avalanche event with advice and input data, for which we would like to thank him. This paper is part of research project 20020-125183, funded by the Swiss National Science Foundation.

Cite this article

Kunz, M., Grêt-Regamey, A. & Hurni, L. Visualization of uncertainty in natural hazards assessments using an interactive cartographic information system. Nat Hazards 59, 1735–1751 (2011). https://doi.org/10.1007/s11069-011-9864-y
