Abstract

Discrete network design problem (DNDP) is generally formulated as a bi-level programming. Because of non-convexity of bi-level formulation of DNDP which stems from the equilibrium conditions, finding global optimal solutions are very demanding. In this paper, a new branch and bound algorithm being able to find exact solution of the problem is presented. A lower bound for the upper-level objective and its computation method are developed. Numerical experiments show that our algorithm is superior to previous algorithms in terms of both computation time and solution quality. The conducted experiments indicate that in most cases the first incumbent solution which is obtained within a few seconds is superior to the final solution of some of previous algorithms.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

The network design problem (NDP) concerns with modifying a transportation network (infrastructure) configuration by adding new links or improving existing ones, so that certain social welfare objectives (e.g. total travel time over the network) are maximized. How to locate new links and how to increase the capacity of existing links are motivating problems. The overall objective is to minimize the total system costs under limited budget, while accounting for the route choice behavior of network users. NDP can be roughly classified into three categories: the discrete network design problem (DNDP) dealing with adding new links or road-way segments to an existing road network; the continuous network design problem (CNDP) dealing with the optimal capacity expansion of a subset of existing links; and the mixed network design problem (MNDP) combining both CNDP and DNDP in a network (Yang and Bell 1998). The importance of the NDP problem has been highlighted in both academic and practical literature. From a practical view, NDP becomes more important as increasing populations coupled with economic growth produce travel demand exceeding the existing capacity of transportation infrastructures. Meanwhile, resources available to enhance capacity remain limited. Therefore, enhancing capacity needs a great deal of investments that could be hardly met in developing countries. Accordingly, it is urgent to study how to efficiently allocate limited investment to improve transport efficiency and maximize the social and economic benefits.

From an optimization point of view, the NDP can be viewed as a hierarchical decision making problem that includes system planners in the upper-level and users in the lower-level. System planners try to influence users’ choices by adding or expanding some links to minimize total system costs. Total costs of the system are affected by decision variables of both system planners and users (LeBlanc and Boyce 1986). The partition of the control over the decision variables between two hierarchical levels requires the formulation of the NDP as a bi-level programming problem (BLPP). In the resulted BLPP the system planner makes decisions about network configuration to improve the performance of the system, and the network users make choices about the routes of their travel in response to the upper-level decision. Since users are assumed to make their choices to maximize their individual utility functions, their choices do not necessarily align, and often conflict, with the choices that are optimal for the system planners. Lower-level problem is generally described by a user equilibrium model.

Previous studies give practical evidence implying that even small and experimental scale NDPs are difficult to solve (Gao et al. 2005; Magnanti and Wong 1984). There is also theoretical evidence supporting these observations since the BLPP is NP-hard even in its linear form. Because of the intrinsic complexity of NDP in the form of bi-level formulation, the problem has been recognized as one of the most difficult ones, yet challenging problems for global optimality in transportation. This often leads to computation times too high for practical purposes. Due to the practical importance of NDP, many heuristic/meta-heuristic algorithms to tackle it have been employed. Meta-heuristic algorithms have enjoyed a considerable popularity in the last decades (Poorzahedy and Abulghasemi 2005; Poorzahedy and Rouhani 2007). The guarantee of finding an optimal solution in heuristic/meta-heuristic algorithms is sacrificed for the sake of a good solution in a significantly reduced amount of time.

Although good progress and algorithms have been achieved during past decades, currently the most promising solution methods dealing with realistic scale problems are heuristics/meta-heuristics which do not guarantee the optimality or near-optimality of the solution. On the other hand, some global optimization approaches have been proposed based on converting the bi-level form to a single-level one and exploiting decomposition methods (Gao et al. 2005; LeBlanc 1975). These approaches need a lot of computation time.

This paper proposes a new branch and bound algorithm for the bi-level DNDP being able to provide an optimal solution for the real-scale network design problems. To cope with explicit or implicit path enumeration, the link-node network representation with multicommodity flows is employed. The main motivation is presenting an algorithm being able to find a global optimal solution for medium to large-scale DNDP while guarding against Braess’ paradox.

The remainder of this paper is organized as follows: Section 2 reviews the literature on network design problems. The proposed formulation is then described in Section 3. In Section 4, the numerical results on four test problems are reported. Finally, concluding remarks are offered in Section 5.

2 Literature review

LeBlanc (1975) made a pioneer study and proposed a branch-and-bound algorithm for solving DNDP. The bounding step was designed so that the Braess’ paradox could not occur. This algorithm is relatively inefficient and becomes computationally prohibitive, even for small networks, in cases where there is a relatively large number of new links to add to the network. Our algorithm could be considered as an improvement of LeBlanc’s B&B algorithm whose most building blocks including bounding, branching, fathoming, first incumbent, and some other minor parts have been improved. Poorzahedy and Turnquist (1982) developed a branch and bound algorithm for solving the integer programming model of the DNDP by replacing the objective function with another well-behaved function, which makes the problem a convex nonlinear mixed-integer program. They argued that since a strong linear relationship between the system and user optimal objective function exists, the system optimal objective function could be approximately replaced with the user optimal one. However, in situations where severe traffic congestion exists in the network, a significant gap appears between the mentioned functions. In addition, in mathematical programming, even in the presence of exact linear relationships between two objective functions, there is no guarantee that both objective functions lead to the same solution even if their objective values are very close to each other.

Steinbrink (1974a), Boyce (1984), Freisz (1985), and Magnanti and Wong (1984) conducted surveys on the modeling and algorithmic development for mathematical programming based NDP. Specifically, Steenbrink (1974a) reviewed the branch-and-bound techniques for solving DNDP. He presented an introduction to modeling DNDP and discussed techniques for the traffic assignment and Braess’ paradox. He proposed a method to approximate user equilibrium flows by the system optimal flows using an iterative decomposition algorithm. In their comprehensive survey paper, Magnanti and Wong (1984) presented a unified framework of modeling DNDP and described a number of algorithms including Benders’ decomposition and some of its accelerated versions, Lagrange relaxation, and dual ascent procedures as successful algorithms in providing a solution for the special cases of DNDP. As a more recent review, Yang and Bell (1998) presented a review analysis of the models and algorithms based on bi-level formulation for NDP in which MNDP was also mentioned. They classified the models based on the characteristics of the lower-level problem (deterministic or stochastic user equilibrium), the type of the decision variables at the upper-level, the objective function of the upper-level, and the type of the solution algorithm.

As previously mentioned, the bi-level programming program is very difficult to solve. Thus, designing efficient algorithms for NDP in the bi-level formulation is recognized as one of the most challenging problems in transportation (Meng et al. 2001). Therefore, many researchers used some simplifying assumptions and developed algorithms to the simplified problem. The rationale for this is mathematical tractability at the expense of realism of assumptions which, in turn, limits the applicability of the algorithms. Some of the simplifying assumptions are as follows (Poorzahedy and Rouhani 2007): (a) replacing user equilibrium flows by system equilibrium flows in the lower-level and obtaining a convex mixed integer nonlinear programming (Dantzig et al. 1979); (b) using linear functions for user travel time and make the problem less complex by assuming constant link travel time function (Boyce et al. 1973; Holmberg and Hellstrand 1998). This assumption is more suitable for intercity transportation networks owing to the fact that intercity roads are rarely congested. By assuming no congestion, there is no difference between SO and UE flows. Therefore, the objective function of the lower-level becomes like the upper-level one and this, in turn, leads to a single-level problem which is much easier to solve; (c) constraint relaxation, mostly the integrality of decision variables which may lead to an impractical solution in case of DNDP (Abdulaal and LeBlanc 1979; Dantzig et al. 1979; Steenbrink 1974b), and (d) aggregation of the network at different levels by link and node extraction procedure to make network simpler (Haghani and Daskin 1983).

Up to date, studies have mostly focused on the developing heuristics/meta-heuristics algorithm. Within the last two decades, meta-heuristic algorithms for optimization problems generally, and NDP specially, have been increasingly exploited. Meta-heuristics such as simulated annealing (Friesz et al. 1992; Lee and Yang 1994) and ant colony (Poorzahedy and Abulghasemi 2005; Poorzahedy and Rouhani 2007), have been previously used to solve NDP under deterministic conditions. Xiong and Schneider (1995) combined genetic algorithm with neural networks to solve NDP with special constraints such as mutually exclusive projects. Drezner and Salhi (2002) compared the performance of heuristics/meta-heuristics, such as descent algorithm, tabu search, simulated annealing, and genetic algorithm, for one-way and two-way network design problems. They found that genetic algorithm is superior in finding the best solution, while needing longer computation time to achieve such solutions. Karoonsoontawong and Waller (2006) used genetic algorithm, simulated annealing, and random search to solve CNDP when it is modeled as a linear bi-level programming with a lower-level of dynamic user-optimal traffic assignment model. Their study shows that genetic algorithm outperforms other algorithms. Lin et al. (2011) employed the cell transmission model to formulate CNDP as a bi-level linear program. They proposed a heuristic algorithm based on Dantzig-Wolf decomposition to solve the resulted problem. Their algorithm can obtain a local optimal solution for large-scale CNDP. However, the scope of their work was limited to single destination network.

Gao et al. (2005) presented an algorithm by using the support function concept (Floudas 1995) which in turn helps to express the relationship between user flows (the lower-level) and the new additional links in the existing urban network (the upper-level). They compared the performance of the proposed algorithm with LeBlanc’s Branch and Bound (B&B) (LeBlanc 1975) on the well-known Sioux-Falls test problem with 5 projects. It should be noted that although LeBlanc’s B&B is not efficient in solving real scale problems due to its loose lower bound, it guards against Braess’ paradox. However, we show by a counterexample that the algorithm proposed by Gao et al. (2005) cannot guarantee the optimality of the solution. It especially does not guard against Braess’ paradox. More specifically, their algorithm offers solutions, which really make system-level objective worse although the algorithm converges to a specious solution with a better objective. Zhang et al. (2009) formulated DNDP as a mathematical program with complementarity constraints where UE was represented in the form of a variational inequality problem. They proposed an active set algorithm to solve the problem. Conducted numerical experiments on three networks showed the effectiveness of their algorithm. However, they have not provided any comparative results with previous algorithms. Chung et al. (2011) formulated a single-level CNDP as a robust optimization problem explicitly incorporating traffic dynamics and demand uncertainty. They used cell transmission model and a box uncertainty set for modeling uncertain demands to have a tractable linear programming based CNDP. They considered a single-destination network.

Wang and Lo (2010) formulated CNDP as a single-level optimization problem. The main deficiency of their work is that the path set between all OD pairs should be known in advance and explicitly imported to the model. This feature prohibits employing their formulation to DNDP because adding new links changes the path set of many OD pairs. In addition, enumerating all paths between all OD pairs in a graph is an intractable problem by itself. They also used piece-wise linear regression to linearize the nonlinear travel time. Their experimental results show that because of the adopted linearization scheme, the presented formulation is not efficient in dealing even with small to medium CNDP problems. Farvaresh and Sepehri (2011) transformed the bi-level DNDP into a single-level MILP using representing UE condition as KKT conditions and employing an efficient linearization scheme to linearizing non-linear terms. They generate two sets of valid inequality which significantly strengthen the formulation. The performance of their formulation having generated valid inequalities when it solved by CPLEX on small networks, even with a relatively large number of new links, is acceptable; but it cannot deal with real problems. Luathep et al. (2011) formulated MNDP as a mathematical programming with equilibrium constraints and proposed a global optimization algorithm. They transformed the problem into a MILP problem using piecewise-linear approximation. They proposed a global optimization algorithm based on a cutting constraint method to solve the resulted MILP problem. Numerical results show the superior efficiency of the proposed method in comparison with the previous algorithms. An interesting result of their work is that solving MNDP on a given budget level most likely makes the system-level objective better than solving DNDP or CNDP separately on the same budget level. As they demonstrated, solving DNDP, CNDP, and MNDP for Sioux Falls network takes around 20, 27, and 35 min. Nevertheless, it seems that their algorithm is not efficient in dealing with large-scale real NDP problems, because it requires one relatively large MILP to be solved in each iteration.

Kim and Kim (2006) proposed a DNDP model in which total travel time was replaced by a comprehensive social cost. Their suggestion for such a social cost function seems rational since the upper-level decision maker (mostly government) should consider all issues of social costs. However, neglecting the Braess’ paradox is a practical limitation of their model. They used a genetic algorithm to solve their model and presented some numerical results based on a small network. Like Poorzahedy and Rouhani (2007), a small network was used to evaluate the performance of the suggested algorithm. Both studies compared the their best-found solutions with the global one obtained by full enumeration. Nevertheless, evaluation of the computational efficiency and the solution quality of a meta-heuristic algorithm by a test problem, whose all feasible solutions can be easily enumerated, is not without its pitfalls. Xu et al. (2009) employed genetic algorithm and simulated annealing to solve CNDP. They showed that simulated annealing algorithm outperforms genetic algorithm in solution quality in a shorter time. Sun et al. (2009) introduced an immune clone annealing algorithm, which is designed by combining annealing tactic of the simulated annealing and the immune clone algorithm to solve the MNDP problem. They provide some numerical results on a small network problem. Zhang and Gao (2009) transformed the bi-level MNDP to a single-level MNDP and proposed a locally convergent algorithm using penalty function method. Miandoabchi et al. (2011) formulated a bi-modal multi-objective DNDP considering the concurrent urban road and bus network design. They developed a hybrid genetic algorithm and a hybrid clonal selection algorithm to solve the resulted problem.

Most of the heuristics/meta-heuristics based studies used the well-known Sioux-Falls test problem to evaluate the computational aspects of their algorithms. However, due to the small number of projects (5 or 10 projects) which implies that the solution space of the NDP is small, using such a simple problem could not be a good examination. Because it is more likely a stochastic search method finds the optimal solution in a small solution space. It should be noted that due to budget constraint all subsets of projects do not need to be enumerated. In fact, meta-heuristics with well-tuned parameters probably enumerate all feasible solutions in such problems.

3 Problem formulation

In this section, used notations and definitions are presented, and following that the bi-level formulation of DNDP is introduced.

The following is a summary of the notes and symbols used in this paper.

- G(N, A):

-

a connected transportation network, with N and A being the sets of nodes and links, respectively. Here, N=O ∪ D ∪ T where O, D and T are the set of all origin, destination, and transition nodes respectively. A = A 1 ∪ A 2 where A 1 is the set of all existing links and A 2 is the set of all proposed new links (projects) which the model decides to be built or not

- (i, j):

-

link designation (i, j) ∈ A

- \( A_i^{+} \) :

-

set of links originating from node i ∈ N

- \( A_i^{-} \) :

-

set of links pointing to node i ∈ N

- (r, s):

-

origin–destination (OD) pairs where r ∈ O and s ∈ D are origin and destination nodes, respectively

- W :

-

the set of all OD pairs where W ⊆ N × N

- \( d_r^s \) :

-

the demand between OD pair (r, s) ∈ W which is assumed to be nonnegative constant

- x a :

-

the link flow on link a ∈ A

- x :

-

vector of x a’s with dimention equal to |A|, x = (…, x a, …)T

- \( x_a^s \) :

-

flow along link a ∈ A with destination s ∈ D

- y a :

-

a binary decision variable related to project link a ∈ A 2, taking value 1 or 0 depending on acceptance or rejection of project link a ∈ A 2

- y :

-

vector of y a ’s with dimension equal to |A2|, y = (…, y a , …)T

- t a (x a ):

-

the travel cost on link a ∈ A defined as a positive and continuous function of link flow x a which is often representing average travel time on link a ∈ A

- g a :

-

cost of establishing link a ∈ A 2

- B:

-

total available budget

Based on the above notations, the DNDP problem under the deterministic user equilibrium can be formulated as the following bi-level programming model:

Where ZU and ZL in (1) and (4) are the upper and lower-level objective functions, respectively. The upper-level decision maker decides which links should be established considering the response of users to resulted changes in the form of user optimum equilibrium. Constraint (2) indicates budget limitation; constraints (4)–(9) assert UE conditions; constraints (5) prohibit flow on non-constructed new links; constraints (6) are flow conservation and assure that the demands of all commodities will be satisfied and the balance of receive and delivery is held in each node; constraints (7) are flow aggregators so that the total flow on each link is the sum of the flows of all commodities on that link; and Constraints (3) and (8) show the positiveness and binary mode of decision variables. In constraint (5), M is an arbitrarily large positive number. Formulation (1)–(9) is based on the link-node flows such that the lower-level problem is formulated as a multicommodity network flow problem in a link-node representation. A commodity is associated with each destination node s ∈ D, and the demand of commodity s in node i is represented by \( d_i^s \).

As can be seen, in the multicommodity formulation the path information is not needed. It should be noted that, based on the definitions and assumptions stated before, the optimality conditions of the link-node formulation is equivalent to the UE condition (Patriksson 1994). The lower-level problem is a convex mathematical program which, because of the strict convexity of the objective function with respect to the link flows, has a unique optimal solution in terms of link flows (Sheffi 1985).

4 Branch and bound (B&B) for DNDP

The general B&B method for DNDP of the form (1)–(9) is based on the key ideas of branching (separating), bounding, and fathoming which are explained in the following (For more information on B&B terminology and technical details, the interested readers are referred to (Floudas and Pardalos 2009; Ibaraki 1987):

4.1 Branching

Let formulation (1)–(9) be denoted as (P) and let its set of feasible solutions be denoted as FS(P). A set of sub-problems (SP 1), (SP 2), …, (SP n ) of (P) is defined as a separation of (P) if (a) A feasible solution of any of the sub-problems (SP 1), (SP 2), …, (SPn) is a feasible solution of (P); and (b) Every feasible solution of (P) is a feasible solution of exactly one of the sub-problems. In fact, conditions (a) and (b) imply that the feasible solutions of sub-problems FS(SP 1), FS(SP 2),…, FS(SP n ) are a partition of the feasible solutions of problem (P). In B&B terminology, the original problem (P) is called a root (parent) node problem while sub-problems are called the child node problems (Floudas 1995). The relationships of the parent and child nodes in DNDP could be represented by a binary tree. In this binary tree, each node corresponds to a sub-problem; and from each non-leaf node two new nodes (sub-problem) are produced by branching on one of the binary decision variables y a , a ∈ A 2; that is, in the right child node y a = 1, while in the left child node y a = 0. The original problem P could be considered as sub-problem (SP 0 ) in root node. Sub-problem (SP n ) based on its local position in the tree is a restricted version of the original problem (P):

(5), (6), (7), (8), and (9)

where Φ n is the set of fixed variables y a from root node to node n along with their values; B n is the budget available at node n which is obtained from subtracting the summation of the cost of constructed projects from root node to node n. That is,

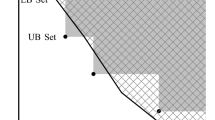

4.2 Bounding

A key issue in every B&B is its bounding scheme. Due to the Braess’ paradox bounding in bi-level formulated DNDP has its special difficulties. More specifically, in node n, for example, one cannot set all unfixed variables y a , ∀a ∈ A 2\Φ n equal to one and find user equilibrium flows on the resulted network, because there is no guarantee that the system level objective is improved by adding new links to the network. Therefore, to avoid invalid lower-bounds, the bounding scheme should be carefully designed. The following lemma is used to compute a lower bound in node n of B&B search tree:

Lemma

For any node n in the B&B tree, the optimal solution of sub-problem (SP n ) is greater than or equal to the optimal value of \( (SP_n^\prime ) \), which gives the least possible congestion at the system optimal flows:

(5), (6), (7), (8), and (9)

Proof

See (LeBlanc 1975).

Sub-problem \( (SP_n^\prime ) \) is a single-level mixed integer non-linear programming (MINLP). In other words, it is a network design problem with system optimal flows. LeBlanc (1975) did not solve sub-problem \( (SP_n^\prime ) \) and in turn, used a more relaxed problem for bounding in which all unfixed variables are set equal to 1. In fact, fixing all unfixed variables at 1 and computing system optimal flows underestimate the upper-level objective with a high gap. This leads to inefficient lower bounds. More details and computational implications of his work will be presented in the next section. Sub-problem \( (SP_n^\prime ) \) can be optimally solved by an algorithm which will be outlined in the Section 4.5. In each node, the optimal value of corresponding sub-problem’s objective function should be obtained. As will be discussed later, in the presented B&B, solving only one of two resulted sub-problems (left and right child nodes) is needed, because the solution of one of the two resulted child nodes’ sub-problems equals to the solution of the parent node’s sub-problem. This property significantly decreases the computation efforts in B&B search algorithm. Based on the bound of a node, in order to decide to expand or to stop expanding of the node, some tests are done in the fathoming step.

4.3 Fathoming

The fathoming criteria are checked in each resulted node. In fact, in each node n it is checked if the feasible solution of the sub-problem (SP n ) can contain an optimal solution of the problem (P). If the response to this question is no, then the node is fathomed; and if the response is yes, then the solution should be found and the node is fathomed. If no certain decision is made, then the node must be considered as a candidate to more expansion in the continuation of the searching B&B tree. More specifically, in each node n these criteria are evaluated: (a) Is there any unfixed variable? If not, then the user optimal flows of the resulted network are computed; the upper-level objective is determined, and the node is fathomed; (b) Is there enough budget to construct more projects, or equally to set more y a =1, ∀ a ∈ A 2\Φ n ? If not, then all unfixed variables are fixed at 0, and user optimal flows of the resulted network are computed; the upper-level objective is determined, and the node is fathomed; and (c) Is the lower bound larger than or equal to the current incumbent? If yes, the node is fathomed. The criterion (b) is satisfied if B n < min{g a | a ∈ A 2\Φ n }. In cases (a) and (b) where a new solution with a better upper-level objective is obtained, it is kept as incumbent; and the search tree is scanned and all nodes having lower bounds higher than or equal to the resulted incumbent are fathomed.

The pseudo-code of the proposed B&B algorithm is depicted in Fig. 1.

4.4 Algorithm description

The key steps of the algorithm are shortly explained in the following.

The proposed algorithm starts from the root node where no decision has been made on building or not building new projects. The algorithm iteratively selects a node from the list of active nodes based on the node selection strategy. There are three main strategies to select a node from the active node list L: depth first search, breathe first search, and best first search. There is a vector of decision variables associated with each active node n indicating the status of the all projects from the root node to node n. The elements of this vector, each of which corresponds to one project, take three values 1, 0, and −1 which, in turn, indicate fixed at 1 (build), fixed at 0 (not to build), and unfixed (no decision made yet), respectively. The status of decision variables in each node is shown by a column vector of size |A 2| in column n of matrix Λ. Matrix Λ is a matrix of dimension |A 2| × N max where N max is the maximum number of active nodes in the B&B search tree. It could be approximately set to a fixed number and in cases where more active nodes appear in the tree, the matrix is rescaled. In our conducted experiments, it was set to 100, and it was not violated by the algorithm. It does not seem to be so memory restrictive in today’s computers.

First, the sub-problem\( (SP_0^\prime ) \),\( (SP_n^\prime ) \)at root node (n = 0), is solved, and this leads to the lowest lower-bound for the original problem P because all sub-problems\( (SP_n^\prime ) \), n ≠ 0, are the restricted versions of sub-problem\( (SP_0^\prime ) \). The optimal solution of sub-problem\( (SP_0^\prime ) \)which is indicated by ŷ in pseudo-code is stored in the column n of matrix y so. Matrix y so, like matrix Λ, is of dimension |A 2| × N max . It is obvious that the optimal solution of sub-problem\( (SP_0^\prime ) \)is also a feasible solution for the original problem P. Therefore, if the user optimal flows of the resulted network are computed and put into the objective function of problem P, a solution is obtained in the root node. This solution (incumbent in the root node) is an upper-bound (UB) for problem P. In the pseudo-code, the current incumbent, its associated solution, and user optimal flows are stored in UB and y *, x *, respectively. In most cases, the gap between the resulted incumbent and the optimal solution of problem P is small and, hence, the incumbent in root node is very useful in the fathoming step.

In each iteration, one node from active nodes is selected; and in the selected node, one unfixed variable is fixed, or simply the decision on building a project is separated. This leads to two new active nodes, namely, right and left child nodes. Once a node is created, it is checked to see if all variables are fixed (the created node is a leaf node) or not. In the case of arriving at a leaf node, user optimal flows are computed, and the corresponding upper-level objective is compared with the current UB. If the upper-level objective is lower than UB, then UB and y * are updated. If the node is not a leaf node, then the bounding step is performed. Since the lower bound of one of the child nodes equals to that of the parent node, only one lower bound is computed in each iteration. This is because the value of the branching variable in the solution of the sub-problem corresponding to the parent node is either 0 or 1. In the former case, it does not need to compute the lower bound for the left child node, and in the latter case this holds for the right child node. This property improves computation costs significantly. Once a right child node is added to the search tree, the level of available budget at that node is checked. If the available budget does not suffice to build any other project, all unfixed variables are fixed at 0, and user optimal flows are computed, and the corresponding upper-level objective is compared with the current UB. If the upper-level objective is lower than UB, UB and y * are updated, and the node is fathomed. Since the available budget of the left child node is the same as that of the parent node, the available budget is not checked when a left child node is added to the search tree.

4.5 Computing lower bounds

The method of computing the lower bounds in the search tree is the main concern of any B&B algorithm. In each iteration, two new nodes are added to the search tree, but only one lower bound needs to be computed through solving problem (SP′). For the ease of reference, let rewrite the formulation of \( (SP_n^\prime ) \)in node n as follows:

where,

Problem \( (SP_n^\prime ) \) is a MINLP formulation such that: (a) objective function is convex and differentiable, (b) constraints are linear, X is a compact convex set, and Y is a finite set of binary elements and (c) for any fixed y, the resulted NLP, which is a system optimal equilibrium problem, has an optimal solution. Therefore, this problem can be optimally solved by algorithms like generalized Benders’ decomposition (GBD) (Floudas 1995; Geoffrion 1972), and outer approximation (OA) (Duran and Grossmann 1986; Fletcher and Leyffer 1994). The key idea underlying these algorithms is that in each iteration they generate an upper-bound and a lower-bound on the MINLP optimal solution. They alternate between solving a nonlinear programming (sub-problem) and solving a mixed integer linear programming (master-problem). The sub-problems of both methods for \( (SP_n^\prime ) \) are similar, and the major difference between them lies in the different derivations of the master problem. Both algorithms were experimentally tested; however, since OA had superior performance, only the OA related formulation is presented. Problem \( (SP_n^\prime ) \) can be written as:

Since the inner problem is bounded (it is a parametric in y system optimal equilibrium), infimum could be replaced by minimum. Let

For any y ∈ V, Let υ(y) be the optimal value of the following nonlinear sub-problem:

NLP(y) is the system optimal equilibrium flow problem for the network corresponding to the y ∈ V. In fact, NLP(y) is a restricted version of problem \( (SP_n^\prime ) \) because only a specific feasible y out of all y ∈ V has been chosen. Therefore, the optimal solution of the NLP(y) is a feasible solution for problem \( (SP_n^\prime ) \) and more importantly, υ(y) is an upper bound to the optimal value of problem\( (SP_n^\prime ) \).

Then problem \( (SP_n^\prime ) \) is equivalent to the following problem:

Note that Y∩V represents the projection of the feasible space of problem \( (SP_n^\prime ) \) onto the y-space. Problem (21a, 21b) is difficult to solve because both V and υ(y) are known implicitly (Duan and Xiaoling 2006; Floudas 1995). To cope with this difficulty, Duran and Grossmann (1986) used the outer linearization of υ(y) and a particular representation of V. Then based on Duran and Grossmann’s (1986) arguments for the general formulation of MINLP, it is concluded that problem \( (SP_n^\prime ) \) is equal to the following problem:

In view of the fact that g(x, y) is linear, constraints (24c) is simplified to \( {0} \geqslant g(x,{y^k}) \). Now, let

Then the following master problem is equivalent to problem \( (SP_n^\prime ) \) (Duran and Grossmann 1986).

Note that since the function f(x, y) is convex and g(x, y) is linear, the linearizations in M-OA correspond to outer approximations of the nonlinear feasible space of problem\( (SP_n^\prime ) \). It is assumed that the current transportation network is a connected network and, hence, for any y k ∈ V there exist system optimal equilibrium flows x k, or equally, NLP(y k) sub-problem is always feasible. Therefore, constraint y k ∈ V can be replaced by y k ∈ Y. Nevertheless, if NLP(y k) sub-problem is infeasible, the algorithm can solve the problem by adding one infeasible cut to the master problem. In addition, since solving the master problem M-OA requires enumerating all feasible binary variables y k, another relaxed version of M-OA is considered in which the solution of previous NLP(y k) sub-problems, k = 1,2, …,K, is employed and thus:

Since problem RM-OA is a relaxed version of M-OA, the solution of problem RM-OA is a lower-bound for problem\( (SP_n^\prime ) \). Furthermore, since function linearizations are accumulated as iterations k proceed, the sequence of the lower-bounds resulting from solving the master problem RM-OA is non-decreasing. Adding the following integer cut can improve convergence time because it prevents master problem from choosing the previous 0–1 values examined at the previous K iteration (Duran and Grossmann 1986).

where \( {B^k} = \left\{ {a|y_a^k = 1} \right\} \) and \( {N^k} = \left\{ {a|y_a^k = 0} \right\} \)}.

As the iterations proceed, two sequences of updated upper-bounds and lower-bounds are obtained, which are shown to be non-increasing and non-decreasing, respectively. Duran and Grossmann (1986), have shown that these two sequences converge in a finite number of iterations. The pseudo-code of OA based algorithm to solve problem \( (SP_n^\prime ) \) is presented in Fig. 2.

When the network is not small, solving NLP(y k) sub-problems and computing system optimal equilibrium flows x k at iteration k using off-the-shelf NLP optimization softwares is very difficult. This is not prohibitive because there are efficient algorithms to compute system optimal flows for large networks (e.g. Frank-Wolf (LeBlanc et al. 1975) and Bush-based algorithms like DBA (Nie 2010)). In all conducted experiments, Frank-Wolf was used to solve NLP sub-problems.

Remark 1

In each node of the B&B search tree, variables have been partitioned in two disjoint sub-sets, namely fixed variables and unfixed variables. While the search proceeds to deeper nodes, the number of unfixed variables decreases, and number of fixed variables increases. This leads to a simpler master problem of \( (SP_n^\prime ) \). Therefore, solving problem \( (SP_n^\prime ) \) by OA is more likely to be easier in deeper nodes of B&B search tree -in order to obtain the lower-bound- than it is in shallow nodes of the tree. In other words, while the search visits deeper nodes, the computation time of bounding potentially decreases or at least does not increase.

4.6 Variable selection for branching

Variable selection for branching on the selected node can have a significant influence on the performance of the B&B algorithm. Because robust techniques for identifying branching variables have not been established yet, a common way of choosing a branching variable is using heuristics. In the proposed algorithm, the bounding step provides useful information to guide the choice of a high-impact branching variable. A heuristic impact index is assigned to unfixed variables based on the solution of problem \( (SP_n^\prime ) \) in the node n. This index reflects branching priority of unfixed variables. First, unfixed variables are sorted based on the solution of problem \( (SP_n^\prime ) \) such that variables having value 1 are put at the higher level comparing to the variables having value 0. Then, variables are sorted decreasingly based on the total travel cost of their associated links, namely, x a t(x a ). Therefore, the branching variable is one which has value 1 in the solution of \( (SP_n^\prime ) \) and has the highest associated total travel cost in the resulted network.

5 Numerical results

In this section, the validity of the proposed algorithm is evaluated, and the results are compared with those of the previous works. To this end, four test problems are presented. The first test problem is a small network with a low number of new links. The first test problem is a case in which Braess’ paradox may occur; the second one is the well-known Sioux-Falls network with 5 new links; the third problem is a small network with a relatively large number of proposed new links; and the fourth one is a relatively large network with a moderate number of new links. The details of the tested problems are reported in Table 1.

All experiments were carried out on a HP Pavilion dv3 with 4 GB RAM and a 2.26 GHz Core2Duo CPU. The main body of B&B was coded in MATLAB. CPLEX 12.2 was used to solve MIP master problems in the lower bounding step. The parallel optimization mode of CPLEX has been set to deterministic mode. In all conducted experiments, Frank-Wolf algorithm coded in C++ language with convergence rate 0.01 or maximum 20 iterations was used to solve NLP sub-problems. Hereafter, we abbreviate our proposed algorithm as FS-B&B. In all experiments, a column vector of size |A 2| with zero elements is given as initial solution to G-GBD.

5.1 Comparative results

To validate the efficiency of the proposed algorithm, the performance of the proposed algorithm is compared with those of the previous ones proposed for DNDP including LeBlanc’s B&B (LeBlanc 1975) (hereafter abbreviated as L-B&B), Gao et al.’s GBD based algorithm (Gao et al. 2005) (hereafter abbreviated as G-GBD), and Poorzahedy and Abulghasemi (2005) and Poorzahedy and Rouhani’s ( 2007) ant system based meta-heuristics (hereafter abbreviated as PAR-AS). LeBlanc’s B&B is an exact algorithm guarding against Braess’ paradox. G-GBD algorithm is an exact algorithm (as claimed in the published paper) which uses GBD main concepts as well as the relationship between user and system optimal flows. However, we experimentally show that the algorithm proposed by Gao et al. (2005) cannot guarantee the optimality of the solution. It especially does not guard against the Braess’ paradox. More specifically, their algorithm offers solutions, which really make the system-level objective worse, although the algorithm converges to a specious solution with a better objective. PAR-AS is an ant system based meta-heuristics which was introduced in (Poorzahedy and Abulghasemi 2005) and was later improved in (Poorzahedy and Rouhani 2007) through being hybridized with genetic algorithm, simulated annealing, and tabu search. We only report the best results obtained from the base ant system in their earlier work (Poorzahedy and Abulghasemi 2005) and its improvement 1 in their later work (Poorzahedy and Rouhani 2007).

Test problem 1

The network of the first test problem is shown in Fig. 3. This problem is a modified network of the one which has been reported in (LeBlanc 1975). The travel time function of each link is depicted close to the link. There are three new links (3, 2), (5, 6), and (6, 7) with construction cost 8, 5, and 4 units of money, respectively. The decision variables corresponding to these new links are denoted by vector (y 1, y 2, y 3)T. There are three OD pairs (1, 7), (5, 1), (3, 8) with demand 2, 5, and 5, respectively. The problem is solved in two budget scenarios 9 and 17 units of money.

The detailed results of all four mentioned algorithms on test problem 1 are presented in Table 2. Column 1 and 2 show the ratio of available budget to the total cost needed to build all projects (B/C), and the absolute value of available budget, respectively. Column 3 contains the optimal value of the system-level objective of each scenario. Column 4 includes the names of the algorithms. Column 5 and 6 show the objective value corresponding to each algorithm and the final solution they provide, respectively. As shown in Table 1, all algorithms except G-GBD converge to the optimal solution in both budget scenarios. The solution (y 1, y 2, y 3)T = (0, 0, 0)T is given to G-GBD as the initial solution. G-GBD converges to solutions with the system level objective 2554.964 and 2514.028 in budget levels 9 and 17, respectively. In budget level 9 the new link (3, 2) is suggested to be established, and in budget level 17 all the three new links (3, 2), (5, 6) and (6, 7) are suggested to be established as well. However, if the user equilibrium flows and the associated system-level objective of the resulted network are computed, it is found that the true value of the system-level objective equals 2895.925 and 2946.683 for budget levels 9 and 17, respectively. In fact, the algorithm converges to a point different form the true objective value. But the main fault of G-GBD is that it suggests the worst solution (y 1, y 2, y 3)T = (1, 1, 1)T, because the optimal solution is (y 1, y 2, y 3)T = (0, 0, 0)T with system-level objective value 2686.516. As a matter of fact, the algorithm falls in the trap of Braess’ paradox. This shows that G-GBD may converge to a point whose value is not the true system-level objective. By inspecting the generated bounds in each iteration, one can find that the provided upper bounds are not valid and this, in turn, makes the algorithm converge to a non-optimal solution. Therefore, the G-GBD algorithm should be considered as a local optimal algorithm unless it is explicitly assumed that conditions like the Braess’ paradox do not happen. There is no guarantee for PAR-AS to guard against the Braess’ paradox, although in this specific case it found the optimal solution.

Test problem 2

Sioux-Falls network is a well-known test problem for both DNDP and CNDP. We use almost the same data as reported in (LeBlanc 1975).Footnote 1 Here, we assume that all the proposed projects are new links, and that their associated head and tail nodes are not directly connected. This test problem is somewhat different from that of (LeBlanc 1975) where the proposed projects are links which should be upgraded to a specific level. Briefly, in this test problem |N| = 24, |A| = 76, |A 1| = 66, |A 2| = 10, and the available budget B = 3000,000. It is assumed that the proposed projects are two-way streets and, hence, the total binary variables decreased to 5 variables each of which corresponds to a new two-way street. Due to the network’s low number of new projects, all the above-mentioned algorithms obtain the optimal solution. Set A 2 and cost of projects are assumed to be as follows:

The detailed results of employing all algorithms are presented in Table 3. Column 1 and 2 are the same as those of Table 2. Column 3–5 show the algorithm abbreviation, objective value, and the solution provided by each algorithm, respectively. Column 6 shows either the total iteration to converge (for G-GBD) or the total number of lower bound computations (for FS-B&B and L-B&B). These two indices are the main indicators of computational costs of the mentioned algorithms. No index is reported for PAR-AS because, firstly, there is no guarantee to obtain an optimal solution, and secondly, the number of runs or ants is usually chosen by the user. Column 7 shows the total CPU time of algorithms to terminate. As can be seen from Table 3, all algorithms successfully obtain the optimal solution in a reasonable time. In fact, there is no significant difference between the computational costs of G-GBD, PAR-AS, and FS-B&B in any of the budget levels. Algorithm L-B&B needs more time in low budget levels and less time in high budget levels to find the optimal solution or optimal confirmation of the found solution. As a result, reviewing previous studies reveals that the performance of the developed algorithms has been evaluated by small to medium-scale networks having a low number of projects being the candidate to be added to the network. Since the computational efficiency of these algorithms is mostly affected by the number of candidate projects, such test problems could not be a good examination to evaluate the performance of algorithms dealing with DNDP. Therefore, we devise a simple test problem with a relatively high number of new projects and a large network with a medium number of new projects to evaluate and compare the computational efficiency of the proposed and the previous algorithms. These networks are presented in test problems 3 and 4.

Test problem 3

This problem has 16 nodes, 17 existing links, and 25 new links (see Fig. 4.). The link travel time function is assumed as \( t({x_a}) = T_a^0 + {B_a}x_a^4 \), where \( T_a^0 \) and B a are free flow travel time and constant of link a, respectively. The values of \( T_a^0 \) are depicted on links, and the values of B a are assumed to be 10−6×\( T_{ij}^0. \)There are two OD pairs (1, 16) and (4, 13) each of which with demand 100.

In this test problem, there are totally more than 33 × 106 solutions in the solution space when budget constraint is relaxed; This number of points is much larger than 32 solutions in case of 5 new projects and 1024 solutions in case of having 10 new projects. The performance of previous algorithms and our proposed algorithm on this test problem are compared. Table 4 shows the results of employing G-GBD, PAR-AS, L-B&B, and FS-B&B on test problem 3. The results reported for PAR-AS algorithm are the best obtained results from the base ant system and its improvement 1. Column 1 and 2 are the same as those of Table 2. Column 3 shows algorithms by their abbreviations; and column 4 and 5 show their corresponding objective values as well as the relative deviation of the resulted objective from optimal objective values (relative deviation from optimal) in each scenario, correspondingly. The frequency of finding the optimal solution by PAR-AS is shown next to its associate objective value in column 4. Column 6 indicates the solution of each method in each scenario in terms of selected projects to be built. Column 7 reflects the consumed budget which is a portion of the available budget. This column was deliberately inserted to show how each algorithm effectively consumes the available budget. Column 8 shows the total CPU time for each method to solve related DNDP in each scenario. A limit of 10 h of CPU time is imposed for L-B&B algorithm in each budget level. The total number of nodes that L-B&B and FS-B&B need to compute lower bound, and the total number of iterations that G-GBD needs to converge are the main factors of their computational efficiency. Therefore, the number of computed lower bounds (L-B&B and FS-B&B) or iterations (G-GBD) is shown in column 9. The best value for the metric of each column in each budget scenario on the condition that the optimal solution is obtained in the shortest time, is printed in bold.

Examining the PAR-AS by the provided simple test problem reveals that unlike the reported results on small test problems of previous studies (Poorzahedy and Abulghasemi 2005; Poorzahedy and Rouhani 2007), the algorithm is not able to find the optimal solution in most budget levels. The algorithm was carried out over 50 runs in each budget level. It only finds the optimal solution in budget levels 100 in 4 runs out of 50 runs. Furthermore, in cases where the budget level is moderately tight, and by implication, where there are enough combinations of projects satisfying budget constraint, the gap between the solutions of PAR-AS and the optimal solution is significant. G-GBD finds the optimal solution in budget levels 100, 200, 300, and 400 in a time much longer than that of FS-H&B. It is worthy to note that in all four budget scenarios, the convergent point of G-GBD is different from the system-level objective, although the solution in terms of the selected projects was optimal. Hence, to compute the objective value, the user optimal flows corresponding to the resulted network is obtained and put in the system-level objective. This algorithm cannot converge to the optimal solution in the next three budget levels. As mentioned in test problem 1, G-GBD does not guarantee to obtain the optimal solution due to its invalid upper-bound. In budget levels 500, 600, and 700, there relative deviation of G-GBD objective value from the optimal solution is 12.48 %, 3.93 %, and 1.69 %, respectively.

L-B&B can find global solutions in all cases in a CPU time much longer than that of FS-B&B. In budget level 700 where the budget constraint is not so restrictive, L-B&B terminates in a reasonable time. However, it is evident that in most practical cases, the budget constraint is very restrictive. In cases with moderate budget level L-B&B finds the optimal solution within 3 to 6 h. The number of computed bounds in L-B&B is high. This is due to the significant gap between the lower bounds of predecessor nodes and the incumbent solution. In fact, the lower bounds are not effective. This has also been previously pointed by (Poorzahedy and Turnquist 1982). In contrast, FS-B&B, due to its tight lower bounds, explores fewer nodes and computes fewer lower-bounds.

As Table 4 shows, FS-B&B finds the optimal solution of the test problem 3 in all cases in the shortest CPU time. Briefly, it outperforms all other algorithms in both computational cost and solution quality. The time FS-B&B needs to compute lower bounds varies around 0.5 to 2 s in different budget scenarios. The total number of computed lower-bounds is small. This is partly due to effective branching variable selection and node selection, and partly due to relatively tight lower bounds in predecessor nodes.

Based on the above comparisons, test problem 3 reveals some drawbacks of the existing algorithms, including disability of finding optimal or near optimal solution in many cases in a reasonable time. This simple test problem, the key feature of which is having enough number of new projects to challenge enumerative algorithms, exhibits the shortcomings of the previous algorithms.

Test problem 4

This test problem is designed so that the network has enough nodes, links, and OD pairs to challenge the proposed algorithm (Fig. 5). We arranged 4 copies of Sioux-Falls network in a 4-cell layout and connected some adjacent nodes. We also added some new nodes and links to the network. The parameters of the links are similar to those of Sioux-Falls network. The results of employing all above-mentioned algorithms have been presented in Table 5. Columns of Table 5 are same as those of Table 4. This problem is challenging for all algorithms due to the number of links and OD pairs.

The results show that G-GBD does not find the optimal solution in any budget level. Since the user optimal flows are very different from the system optimal ones, the computed Lagrangian multipliers from the lower-level problem are not valid for the upper-level problem and this, in turn, leads G-GBD to generate invalid upper-bounds. It should be noted that computing optimal Lagrangian multipliers in the lower-level problem has its own computational costs. G-GBD does not converge even in 3000 iterations in budget levels 500 and 600. The gap between the objective value resulted from G-GBD and the optimal one, considering the system-level costs, is significant. It is worthy to note that even 1 % improvement in system-level costs in the network for real cases is remarkable. Algorithm PAR-AS provides the worst quality solutions. By considering solutions of PAR-AS in test problem 3 and 4 over various budget levels, it is implied that this algorithm tends to select more projects than other algorithms. Comparing the performance of L-B&B with G-GBD and PAR-AS indicates that this algorithm outperforms both other algorithms in terms of CPU time and solution quality. In test problem 4 and in the budget level 700, L-B&B has the best performance in terms of CPU time. Nonetheless, high budget levels are not the main cases where practitioners need decision support tools for network design purposes.

Algorithm FS-B&B solves test problem 4 in 6 budget levels having the ratio B/C smaller than 0.6 with the best performance. The algorithm particularly works well in budget levels ranging from small to medium (with a B/C ratio smaller than 0.4). An interesting note about FS-B&B is its good quality first incumbent solution which is obtained in the root node. The first incumbent solution in all budget levels for test problem 4 and its relative deviation with the solution of FS-B&B (optimal solution), G-GBD, and PAR-AS is presented in Table 6. Relative deviation of first incumbent with a given algorithm X is calculated by:

Reviewing the results shows that except budget level 100, the first incumbent of FS-B&B was better than the solution of PAR-AS with a significant deviation. It was also better than the solution of G-GBD in 5 budget levels out of 7 budget levels with a considerable gap, and was the same as G-GBD’s solution in the 2 remaining budget levels. Therefore, the first incumbent solution of FS-B&B can be a very good heuristic solution for DNDP. This seems worthy when the CPU time of obtaining the first incumbent is considered. The CPU time of obtaining the first incumbent in all budget scenarios of test problem 3 and 4 was shorter than 3 and 10 s, respectively.

Another important point regarding the proposed algorithm is that most of its total CPU time is spent on proving that the found incumbent solution is optimal. In other words, a common situation in the proposed algorithm is spending a large amount of computing time to improve the solution from a near-optimal to an optimal one. This is also true about lower bounds such that during the search most of the nodes have a tight lower bound which is close to the incumbent solution. If, for example, a maximum deviation of 0.2 % from the optimum solution is tolerable for the upper-level decision maker, the CPU time of algorithm termination in budget scenarios 6 and 7 drops from 769 and 1840 to 541 and 1283 s, respectively, while the optimal solutions remain unchanged.

The reported results for FS-B&B on all test problems are the best solutions obtained from the three before mentioned node selection strategies. In all test problems, the best first search as the node selection strategy was the most effective in terms of the number of both explored nodes and the total computed lower-bounds. In other words, choosing nodes with the lowest lower bound in each iteration leads to a node selection strategy with lowest computational cost. In other words, it is more likely to have the optimal solution in nodes with the smallest system objective under the system optimal flows. This is supported by (Poorzahedy and Turnquist, 1982) work where they found that system-level objective under the user and system optimal flows has a high correlation in about 500 different experimental networks.

The effectiveness of the proposed heuristic for finding branching variable against random branching variable selection was evaluated. In test problems 2–4 the average computation time saved by using the proposed heuristic against random variable selection was around 28 %, 39 %, and 47 %, respectively. Consequently, it is concluded that the proposed heuristic for branching variable selection has a significant effect on the total computation time of the algorithm.

5.2 Effects of congestion level on the performance of the algorithm

The computational cost of the proposed algorithm is strongly affected by the total number of computed lower bounds. The number of computed lower bounds is proportionate to the number of explored nodes of the B&B tree. The size of B&B tree is significantly affected by the quality of the lower bounds and the incumbent solution. The quality of the lower bounds and incumbent solution, however, is related to the level of congestion. To examine the effect of congestion, the algorithm is evaluated in different scenarios of congestion. To do that, Sioux-Falls network with 15 new one-way links (15 projects) is considered. It should be noted that we consider more new links in the network to explore more aspects of the algorithm, although these new links may not be possible to be added to the real Sioux-Falls network. The solution space of the corresponding DNDP consists of 215 points when the budget constraint is neglected.

We used the demand matrix of Sioux-Fall network (Poorzahedy and Rouhani 2007) as the basis. To study the congestion effect, we consider multiple demand scenarios. These scenarios results from multiplying the basis demand matrix by a certain factor (Demand Factor). Demand factor varies in the range [0.25, 2]. Besides, available budget varies from 0.1 to 0.6 of the total cost of all proposed projects. Table 7 shows the result of applying the algorithm on all generated scenarios. Column 1 shows demand factor. Column 2 shows B/C ratio indicating the available budget over total cost of all projects. Columns 3, 4, and 5 show computation time, the number of solved lower bounding problems, and the optimal system-level objective (vehicle-hour) of each scenario, respectively. Columns 6–9 show basic statistics minimum, maximum, mean and standard deviation of volume/capacity of links of the network corresponding to each demand factor. These columns indicate some aspects of congestion level over the network. For example, in case of demand factor 1, the minimum and maximum of v/c ratio of all links are 0.25 and 2.78, respectively; while the mean and standard deviation of v/c ratio of all links are 1.75 and 0.61, respectively. These indexes show that in scenarios corresponding to demand factor 1 the level of congestion is high (level of service is low). On the other hand, these statistics show the low level of congestion in the case of demand factor 0.25.

By reviewing the results of Table 7 some conclusions are drawn. The performance of the algorithm is significantly related to the level of congestion. In the low to medium congestion level (demand factor 0.25 and 0.5) algorithm converges to the optimal solution rapidly; while it converges to the optimal solution more slowly in the high congestion levels (demand factor 1 and 1.5). In cases where the congestion level is extremely high (demand factor 1.75 and 2) the performance of the algorithm in terms of both the computation time and the solved lower bounding problems is better than cases of high congestion level. In other words, in the networks with low to medium congestion, the quality of lower bounds is good. In highly congested networks, the quality of lower bounds gets worse, and it get improved again in severely congested networks. The level of available budget affects the convergence time of the algorithm in all demand scenarios negatively. Nevertheless, it seems that the impact of congestion on the performance of the algorithm is higher than the available budget. However, to scrutinize this feature of the algorithm more test problems and conditions should be examined.

Figure 6 shows the variations of the lower and upper bounds as the algorithm proceeds. In most cases, the algorithm finds the optimal solution in root node. In fact, most of its search is devoted to prove the optimality of the incumbent solution through updating the lower bound.

6 Conclusion

In this paper, a new branch and bound algorithm was proposed for the bi-level discrete network design problem. The computational performance and the solution quality of the proposed algorithm were evaluated on four test problems and compared with previous relevant algorithms. The results showed that the proposed algorithm outperforms previous algorithms in both CPU time and solution quality. It was experimentally shown that previous simple test problems are not good examinations to evaluate the performance of the existing algorithms. Therefore, two new test problems were devised to shed light on some drawbacks of the previous algorithms.

The main features of the proposed algorithm are: (a) it is complete, i.e. it can provide the optimal solution if there is any, although its time complexity may be high in large-scale problems. As a characteristic feature, unlike some previous algorithms, it guards against the Braess’ paradox; (b) it benefits from good strategies of node selection and branching variable selection; (c) it benefits from a good lower-bounding scheme, although it imposes extra computational costs to the algorithm. However, a part of these computational costs is compensated by exploiting the lower-bounding step through extracting effective guides to node as well as branching variable selection.

The computational cost of the proposed algorithm is strongly affected by the total number of computed lower bounds. Further studies can focus on different parts of the lower bounding step. In the present work, a good algorithm was presented to compute lower bound based on outer approximation. The algorithm is composed of two parts, namely, master problem and sub-problem which are iteratively solved until they converge. Sub-problems are efficiently solved by today’s traffic assignment algorithms, but master problems may be difficult in cases having a high number of new candidate links in a large network (e.g. more than 100 new links in a network with more than 1000 links). Employing Bush-based algorithms, like OBA (Bar-Gera, 2002) and DBA (Nie 2010) for solving sub-problems can lead to faster convergence, especially for large urban networks due to their superiority in memory usage and computation time and also producing highly precise UE solutions. In the current study, CPLEX solver was employed to solve master problems. Considering the structure of the problem having a lot of multicommodity network flow constraints, it is promising to develop specialized algorithms to solve the master problems more efficiently. This can be studied in future works. The quality of lower bounds seems good. By considering the fact that generally no explicit relationship has yet been found between system and user optimal flows (Karakostas et al. 2011), lower bounds are not expected to be improved significantly. Node selection and branching variable selection strategies could be improved. In this research best first strategy had the best performance comparing to depth first and breathe first search strategies. Yet, evaluating a combination of these search strategies needs more investigation. The branching variable selection is also a candidate to be more studied in order to adopt more effective variable selection strategy. In the current setting of the algorithm half of the lower bounds of the total explored nodes need to be computed because of the relationship between child and parent nodes of the B&B search tree. However, the problems which are solved to compute lower bounds seem to have the potential to reduce the computed lower bounds through finding better relationships among downstream and upstream nodes. Finding such relations among the solutions of lower bounding problems in different locations of the B&B search tree can be an area which merits further research.

In the present work we focused on DNDP with deterministic traffic assignment in the lower-level. The proposed algorithm can be easily adapted to non-deterministic traffic assignments. In this case, the lower bounding step is affected such that the sub-problems are non-deterministic user optimal traffic assignments. The computational efficiency and more customization of the algorithm to such a model should be studied in future studies. Finally, employing heuristics/meta-heuristics algorithms to have a cooperative search seems to be good practice to make the algorithm more scalable. The support to this idea is the feasible and near-optimal solution of DNDP in the root node of the proposed B&B. This is appropriate for neighborhood search to move to better solutions in a shorter time. Thus, as an area for further research, combining heuristics/meta-heuristics algorithm with the proposed global method to exploit advantages of both methods in order to provide a scalable method for large-scale DNDP is suggested.

Notes

Sioux-Falls network data are those that available on-line at: http://www.bgu.ac.il/bargera/tntp/.

References

Abdulaal M, LeBlanc LJ (1979) Continous equilibrium network design models. Transp Res 13B:19–32

Bar-Gera H (2002) Origin-based algorithm for the traffic assignment problem. Transp Sci 36:398–417

Boyce DE (1984) Urban transportation netwrok equilibrium and design models: recent achievements and future prospectives. Environ Plann A 16:1445–1474

Boyce DE, Farhi A, Weischedel R (1973) Optimal network problem: a branch-and-bound algorithm. Environ Plann 5:519–533

Chung BD, Yao T, Xie C, Thorsen A (2011) Robust optimization model for a dynamic network design problem under demand uncertainty. Netw Spat Econ 11:371–389

Dantzig GB, Harvey RP, Lansdowne ZF, Robinson DW, Maier SF (1979) Formulating and solving the network design problem by decomposition. Transp Res 13B:5–17

Drezner T, Salhi S (2002) Using hybrid metaheuristics for the one-way and two-way network design problem. Nav Res Log 49:449–463

Duan L, Xiaoling S (2006) Nonlinear integer programming. Springer, New York

Duran MA, Grossmann IE (1986) An outer algorithm for a class of mixed integer nonlinear programs. Math Program 36:307

Farvaresh H, Sepehri MM (2011) A single-level mixed integer linear formulation for a bi-level discrete network design problem. Transp Res 47E:623–640

Fletcher R, Leyffer S (1994) Solving mixed integer nonlinear programs by outer approximation. Math Program 66:327

Floudas C (1995) Nonlinear and mixed-integer optimization-fundamentals and applications. Oxford University Press, New York

Floudas CA, Pardalos PM (2009) Encyclopedia of optimization, 2nd edn. Springer, New York

Friesz TL (1985) Transportation network equilibrium, design and aggregation: key developments and research opportunities. Transp Res 19A:413–427

Friesz TL, Cho HJ, Mehta NJ, Tobin RL, Anadalingam G (1992) A simulated annealing approach to the network design problem with variational inequality constraints. Transp Sci 26:18–26

Gao Z, Wu J, Sun H (2005) Solution algorithm for the bi-level discrete network design problem. Transp Res 39B:479–495

Geoffrion AM (1972) Generalized Benders decomposition. J Optimiz Theory App 10:237–260

Haghani AE, Daskin MS (1983) Network design application of an extraction algorithm for network aggregation. Transp Res Rec 944:37–46

Holmberg K, Hellstrand J (1998) Solving the uncapacitated network design problem by a Lagrangian heuristic and branch-and-bound. Oper Res 46:247–259

Ibaraki T (1987) Enumerative approaches to combinatorial optimization. Ann Oper Res 10(11)

Karakostas G, Taeyon K, Anastasios V, Xia H (2011) On the degradation of performance for traffic networks with oblivious users. Transp Res 45B: 364–371

Karoonsoontawong A, Waller ST (2006) Dynamic continuous network design problem: linear bilevel programming and metaheuristic approaches. Transp Res Rec 1964:104–117

Kim BJ, Kim W (2006) An equilibrium network design model with a social cost function for multimodal networks. Ann Regional Sci 40:473–491

Leblanc LJ (1975) An algorithm for the discrete network design problem. Transp Sci 9:183–199

LeBlanc LJ, Boyce DE (1986) A bilevel programming algorithm for exact solution of the network design problem with user-optimal flows. Transp Res 20B:259–265

Leblanc LJ, Morlok EK, Pierskalla WP (1975) An efficient approach to solving the road network equilibrium traffic assignment problem. Transp Res 9B:309–318

Lee CK, Yang KI (1994) Network design of one-way streets with simulated annealing. Pap Reg Sci 32(2):119–134

Lin DY, Karoonsoontawong A, Waller ST (2011) Dantzig-Wolfe decomposition based heuristic scheme for bi-level dynamic network design problem. Netw Spat Econ 11:101–126

Luathep P, Sumalee A, Lam WHK, Li Z-C, Lo HK (2011) Global optimization method for mixed transportation network design problem: a mixed-integer linear programming approach. Transp Res 45B:808–827

Magnanti TL, Wong RT (1984) Network design and transportation planning: models and algorithms. Transp Sci 18:1–55

Meng Q, Yang H, Bell MGH (2001) An equivalent continuously differentiable model and a locally convergent algorithm for the continuous network design problem. Transp Res 35B:83–105

Miandoabchi E, Zanjirani FR, Dullaert W, Szeto WY (2011) Hybrid evolutionary metaheuristics for concurrent multi-objective design of urban road and public transit networks. Netw Spat Econ. doi:10.1007/s11067-011-9163-x

Nie YM (2010) A class of bush-based algorithms for the traffic assignment problem. Transp Res 44B:73–89

Patriksson M (1994) The traffic assignment problems: models and methods. VSP, Utercht

Poorzahedy H, Abulghasemi F (2005) Application of ant system to network design problem. Transportation 32:251–273

Poorzahedy H, Rouhani OM (2007) Hybrid meta-heuristic algorithms for solving network design problem. Eur J Oper Res 182:578–596

Poorzahedy H, Turnquist MA (1982) Approximate algorithms for the discrete network design problem. Transp Res 16B:45–55

Sheffi Y (1985) Urban transportation network: equilibrium analysis with mathematical programming methods. Prentice Hall, England Cliffs

Steenbrink A (1974a) Transport network optimization in the Dutch integral transportation study. Transp Res 8B:11–27

Steenbrink PA (1974b) Optimization of transport networks. John Wiley & Sons, New York

Sun Y, Song R, He S, Chen Q (2009) Mixed transportation network design based on immune clone annealing algorithm. J Transp Syst Eng Inf Technol 9(3):103–108

Wang DZW, Lo HK (2010) Global optimum of the linearized network design problem with equilibrium flows. Transp Res 44B:482–492

Xiong Y, Schneider JB (1995) Transportation network design using a cumulative genetic algorithm and neural network. Transp Res Rec 1364:37–44

Xu T, Wei H, Hu G (2009) Study on continuous network design problem using simulated annealing and genetic algorithm. Expert Syst Appl 36:1322–1328

Yang H, Bell MGH (1998) Models and algorithms for road network design: a review and some new developments. Transport Rev 18:257–278

Zhang H, Gao Z (2009) Bilevel programming model and solution method for mixed transportation network design problem. J Syst Sci Complex 22:446–459

Zhang L, Lawphongpanich S, Yin Y (2009) An active-set algorithm for discrete network design problems. The 18th International Symposium on Transportation and Traffic Theory, Hong Kong, Springer. In: Lam, W. H. K., Wong, S. C., Lo H. K., 2009. Transportation and Traffic Theory 2009: Golden Jubilee, 283–300, Springer

Acknowledgments

The authors would like to thank the three anonymous referees for many thoughtful and constructive comments. We also wish to thank Mr. Fouad Behzadpour for his grammatical and lexical revisions and comments. We accept full responsibility for all the remaining errors and shortcomings.

Author information

Authors and Affiliations

Corresponding author

Appendix A. The network data of test problem 4

Appendix A. The network data of test problem 4

The link parameters and OD trips data of test problem 4 are presented in Tables 8 and 9, respectively. In Table 8 each link is shown in the form of i <=> j: (T, B, C, g), where i and j are tail and head nodes, T is free-flow, B is the congestion factor, C is the capacity corresponding to each link, respectively. The forth element, g, is the cost of new projects. For existing links the value of g is left blank. The symbols <=> and -> show two-way and one-way streets, respectively.

Rights and permissions

About this article

Cite this article

Farvaresh, H., Sepehri, M.M. A Branch and Bound Algorithm for Bi-level Discrete Network Design Problem. Netw Spat Econ 13, 67–106 (2013). https://doi.org/10.1007/s11067-012-9173-3

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11067-012-9173-3