Abstract

Visual perception of traffic scenes is a key technology for intelligent transportation. Although the emerging panoptic segmentation is the most desirable perception technology, object detection and semantic segmentation are more mature and have lighter data annotation requirements. In this paper, a joint object detection and semantic segmentation perception method is proposed that balances practicability and accuracy. The method operates on the outputs of a basic object detector and a basic semantic segmentation model. Firstly, the basic semantic segmentation result is preprocessed according to the principle of information entropy. Secondly, candidate bounding boxes of pedestrians and vehicles are extracted by object detection. Thirdly, the candidate bounding boxes are optimized with a K-means based vertex clustering algorithm. Finally, the contours of scene elements are matched with the semantic segmentation results. Experimental results on the Cityscapes dataset show that the final perception effect depends more strongly on the semantic segmentation results than on the object detection results. The theoretical upper limit of the perception effect is 95.4% of the panoptic segmentation ground truth. The proposed method effectively combines object detection and semantic segmentation, and achieves perception results similar to panoptic segmentation without additional data annotation.

1 Introduction

Visual perception of traffic scenes is one of the research hotspots in the field of intelligent transportation. Since an actual traffic scene contains a large number of elements, holistic scene perception is a huge challenge. Following an early study [1], traffic scene elements can be divided into two categories: the things class, i.e., countable elements (e.g., pedestrians, vehicles and animals), and the stuff class, i.e., regions of uniform texture (e.g., sky, road and grass). After decades of research, great progress has been made in the visual perception of traffic scenes. Before the ubiquitous use of deep learning, researchers targeted specific perception tasks, such as the detection and recognition of vehicles, pedestrians and traffic signs. Although holistic traffic scene perception has profound practical significance, it did not become a research hotspot because of the limits of knowledge and technology at that time. The development of deep learning has raised the perception effect to a new level. In addition to single-task deep neural networks, multi-task deep neural networks are continually springing up and show impressive scene perception performance. Panoptic segmentation [2] has revived research interest in holistic traffic scene perception.

For intelligent transportation, it is difficult for visual perception algorithms to achieve both practicability and high accuracy. At present, in the field of visual perception of traffic scenes, object detection [3,4,5,6,7] and semantic segmentation [8,9,10,11,12,13,14,15] algorithms are relatively mature and useful, and their data annotation requirements are simple. However, object detection algorithms often produce false positive and false negative results, and cannot obtain the contours of the objects. Semantic segmentation algorithms can obtain the contours of the scene elements, but may misclassify image pixels and cannot identify the individuals within object groups (an individual person in a crowd, a single car in a group of vehicles, etc.). Instance segmentation algorithms [16] can identify the individual objects in groups by combining object detection and semantic segmentation, but focus only on the foreground objects in the scene and ignore the background elements. Panoptic segmentation algorithms [2, 17,18,19,20,21,22,23,24,25,26,27,28] compensate for this disadvantage of instance segmentation and perceive the whole scene by combining semantic segmentation and instance segmentation. However, panoptic segmentation algorithms require complex data annotation that is detailed down to the contour of each object in the scene. Due to the complexity and variability of actual traffic scenes, the data labeling workload is huge. Deep learning models need a relatively large number of labeled training samples, and although training samples can be generated by generative adversarial networks, such samples differ from the real samples captured by cameras. Panoptic segmentation algorithms have a promising future, but currently still lack practicality.

In this paper, a joint object detection and semantic segmentation algorithm is proposed. The practicability of the method is guaranteed because only the data labeling for basic object detection and semantic segmentation is needed, which is much simpler than that for panoptic segmentation. At the same time, perception results with accuracy similar to panoptic segmentation can be achieved. Firstly, under the principle of information entropy, semantic binary images of pedestrians and vehicles are extracted from the basic semantic segmentation results, and then denoised and enhanced. Secondly, the candidate bounding boxes of pedestrians and vehicles obtained from these images are supplemented with the basic object detection results. Thirdly, the quality of each candidate bounding box is evaluated, and a K-means based vertex clustering algorithm is used to optimize the qualified candidate bounding boxes. Finally, based on the semantic segmentation results, the contours of the scene elements belonging to the stuff class are retained, and the contours of the scene elements belonging to the things class are matched with the candidate bounding boxes.

Since the perception results obtained by the proposed algorithm are similar to those of panoptic segmentation algorithms, the panoptic quality (PQ) metric [2] is also suitable for evaluating the proposed algorithm. On the Cityscapes dataset [29], three groups of experiments are carried out: upper limit verification experiments, lower limit verification experiments, and cross verification experiments. The experimental results show that precise scene perception results can be obtained by the proposed method without additional complex instance-level data annotation. In addition, the basic semantic segmentation results contribute more to the final perception effect than the basic object detection results.

The main contributions of this paper are summarized as follows:

(1) A joint perception method is proposed that obtains perception results similar to panoptic segmentation by combining object detection and semantic segmentation, achieving both practicability and high accuracy;

(2) A grid-based contour vertex clustering algorithm is designed to iteratively refine the candidate bounding boxes, extracting the main body of each scene element from noise;

(3) The feasibility of the proposed method is verified by the upper limit and lower limit verification experiments, and the cross verification experiments show that the basic semantic segmentation results are the main factor influencing the final perception effect.

The rest of this paper is organized as follows. Section 2 introduces some preliminaries and related work. Section 3 presents the principle and implementation details of the proposed method. Section 4 reports experimental results and analyses that demonstrate the effectiveness of the method. Section 5 gives concluding remarks and discusses prospects for future work.

2 Related Work

Scene perception is also known as scene parsing [1], image parsing [30], or holistic scene understanding [31]. For the visual perception of traffic scenes, various algorithms have been proposed for identifying pedestrians, vehicles and roads. Before the widespread use of deep learning, traditional methods focused on perceiving specific target information in traffic scenes. Compared with traditional methods, deep learning algorithms for visual perception have clear advantages. Single-task deep neural networks greatly improve perception performance, while multi-task deep neural networks achieve impressive scene perception performance and capture complete traffic scene information at the same time.

2.1 Traditional Scene Perception Algorithms

Traditional algorithms for traffic scene perception can be categorized into two classes: image processing based algorithms and machine learning based algorithms. Image processing based perception algorithms normally use basic image operations to realize scene perception. These basic image operations include image pre-processing (e.g., smoothing, sharpening and histogram equalization), image space conversion (e.g., color space conversion and spatial domain transformation), edge detection (e.g., the Canny and Sobel operators), morphological operations (e.g., dilation, erosion and skeletonization) and image segmentation (e.g., flood-fill and graph-cut). By combining various image processing operations, Fan et al. [32] proposed a real-time lane detection algorithm using binocular stereo vision. Machine learning based perception algorithms normally adopt a framework that combines a front-end image feature extractor with a back-end object classifier. Traditional feature extractors include Haar-like, HOG, LBP and SIFT. Decision trees, Bayesian networks, artificial neural networks, and support vector machines can be used as object classifiers. To achieve good results, traditional methods usually begin with an analysis of the specific problem, and as a consequence their robustness is difficult to guarantee. Yao et al. [31] combined traditional object detection, scene classification and semantic segmentation, transforming the whole problem into the prediction of primitive structures to achieve holistic scene perception. However, traffic scene perception systems based on traditional methods are large and cumbersome, and their final performance and robustness fall short of expectations.

2.2 Scene Perception Deep Neural Networks

Entity-level object detection is the most common technology for scene perception tasks. With the development of deep learning algorithms, a series of significant breakthroughs have been made in the field of multi-object detection. Faster R-CNN, proposed by Ren et al. [3], can perceive many elements in traffic scenes. However, two-stage object detection networks of this kind need to verify a large number of candidate objects, which makes the overall efficiency unsatisfactory. Therefore, single-stage object detection networks such as RetinaNet [4], SSD [5], YOLO [6] and CenterNet [7] were proposed to address the efficiency issue. Although rectangular detection boxes are suitable for most scene elements, they can hardly describe the specific contours of the elements. Moreover, only detecting scene elements without identifying their attributes leaves much to be desired. It is also a big challenge to achieve high accuracy in traffic scene perception because false positive and false negative results are unavoidable.

Pixel-level semantic segmentation plays a very important role in scene visual perception. Thanks to the development of deep learning, researchers have made great breakthroughs in semantic segmentation. A variety of derivative and variant neural networks have emerged since FCN [8] was proposed as the first deep neural network for image semantic segmentation. Papers [9] and [15] review many deep neural networks designed for semantic segmentation. U-Net [10], Tiramisu [11], SegNet [12], DeepLab [13] and BiSeNet [14] are the most representative semantic segmentation architectures. Yang et al. [33] discussed some defects of scene understanding methods based on object detection, and proposed a semantic segmentation based neural network model for terrain perception that can assist the navigation of blind people. Zhou et al. [34] proposed a real-time perception method for drivable path prediction based on semantic segmentation. Although the contours of scene elements can be identified clearly, the labels of pixels are not always correct. In addition, when scene elements of the same kind gather together to form a group, the individuals in the group cannot be distinguished.

In order to overcome the inherent defects of each individual perception technology, multi-task perception is becoming a trend. Teichmann et al. [35] proposed a multi-task learning network named MultiNet, which combines object detection and semantic segmentation. In a unified neural network, MultiNet handles object detection, object classification and semantic segmentation, and achieves scene perception in real time. It was found that the performance of a multi-task learning network is closely related to the weights assigned to the loss of each branch task. Therefore, Kendall et al. [36] proposed a multi-task learning method that uses uncertainty to weigh the losses, which achieved advanced performance in scene understanding. Hu et al. [37] proposed a multi-task neural network based on Faster R-CNN to perform vehicle identification and body attribute prediction simultaneously. Dvornik et al. [38] designed BlitzNet, a deep neural network structure similar to Mask R-CNN, for real-time scene understanding. Since most current algorithms train each procedure of the traffic scene recognition task separately, Cheng et al. [39] proposed an end-to-end multi-task neural network, Dense-ACSSD, to implement multi-object detection and drivable area segmentation.

Sometimes, multi-task perception results can be improved by fusion processing. Panoptic segmentation achieves the most advanced scene perception performance by fusing semantic segmentation results and instance segmentation results. The existing neural networks for panoptic segmentation vary mainly in two aspects: how the basic semantic segmentation and instance segmentation results are acquired, and how the two results are fused. The acquisition of the basic results is quite similar across networks, while the fusion of the basic results shows some differences. Panoptic segmentation deep neural networks such as PFPNet [17], AdaptIS [18], Seamless [20], TASCNet [22], AUNet [23], DeeperLab [24], Panoptic FCN [26], MaX-DeepLab [27] and EfficientPS [28] simply fuse the results of semantic segmentation and instance segmentation under hand-crafted heuristic rules. UPSNet [21] introduced a parameter-free panoptic decoder to fuse the basic results through pixel classification. OANet [25] designed a spatial sorting module to achieve fusion. Inspired by the representation of scene graphs, SOGNet [19] used the categories, geometries and attributes of each scene element to build a unified spatial embedding representation, and guided the fusion processing by modeling overlap relations among instances. Panoptic FCN [26] presents a conceptually simple, strong, and efficient framework for panoptic segmentation, which aims to represent and predict foreground things and background stuff in a unified fully convolutional pipeline. MaX-DeepLab [27] is an end-to-end model for panoptic segmentation that simplifies the current pipeline, which depends heavily on surrogate sub-tasks and hand-designed components such as box detection, non-maximum suppression and thing-stuff merging. EfficientPS [28] adopts an architecture with a shared backbone that efficiently encodes and fuses semantically rich multi-scale features.

Different from panoptic segmentation, the joint perception method proposed in this paper combines the results of basic object detection and semantic segmentation. Similar to the panoptic segmentation algorithm TASCNet, the proposed method also uses binary mask image processing, but with different processing targets. The proposed method also differs from the method in paper [1] in how the basic results are combined: the proposed method focuses on the parallel fusion of basic perception results obtained by deep learning, while the method in paper [1] focuses on cascaded semantic inference based on traditional methods. Since object detection results are less precise than instance segmentation results, the candidate bounding boxes are optimized in the proposed method so that they can be matched with more detailed contours.

3 The Method

In this paper, a traffic scene perception method based on joint object detection and semantic segmentation is proposed. The overview of the method is shown in Fig. 1. Firstly, the basic object detection and semantic segmentation results are obtained by deep neural networks. Secondly, under the principle of information entropy, the basic perception results are preprocessed. Thirdly, candidate bounding boxes are supplemented by the joint algorithm. Fourthly, the quality of each candidate bounding box is evaluated and further optimized within a manageable range. Finally, contour matching is applied to achieve perception results similar to those achieved by panoptic segmentation methods.

3.1 Basic Perceptual Information Capturing

Basic perceptual information of traffic scenes includes object detection results and semantic segmentation results. There are two main ways to obtain this information: one is to use two independent deep neural network models, one for each task; the other is to train a single multi-task neural network model for both tasks. Both ways require the same training data. The scene object detection task needs entity-level annotations in the form of bounding boxes, while the scene semantic segmentation task requires pixel-level category annotations. Compared with the data annotation required by panoptic segmentation, the annotations required by basic object detection and semantic segmentation are simpler and more practical. The concrete perception models are introduced as follows.

The output of the object detection model is a series of bounding boxes and the corresponding category numbers of the detected objects. For an input image X, the goal is to maximize the likelihood pd(O|X) of the target sequence O = {oi|i = 1, 2, …, no} and the confidence level of each object oi. Here oi is usually a tuple (c, x, y, w, h, t), where c represents the category of the object, x and y represent the abscissa and ordinate of the bounding box center, w and h represent the width and height of the bounding box, t represents the confidence level (t ∈ [0,1]) with which oi is predicted as category c, and no is the number of detected objects. Note that x, y, w and h are relative values. The training task can be described as maximizing the probability of the target sequence by solving Eq. (1):

where θ represents the weights of the neural network model, and O* is the sequence predicted from the input image X when the optimal trained weights θ* are used. It should be noted that the tuple form of oi used in this paper is only one of several common forms.
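One way to write an objective that is consistent with the definitions above is sketched here. The exact notation is an assumption: only the symbols pd, O, X, θ, θ* and O* come from the text, and the second expression simply states that the prediction is made with the trained weights.

```latex
\theta^{*} = \arg\max_{\theta}\; p_d\left(O \mid X;\, \theta\right),
\qquad
O^{*} = \arg\max_{O}\; p_d\left(O \mid X;\, \theta^{*}\right)
```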

The output of the semantic segmentation model is a scene semantic segmentation image. For an input image X, the goal is to assign a unique label ci,j (ci,j = 1, …, nc) to each pixel (i, j), and to output a scene semantic segmentation image Y, where nc is the number of categories of the semantic labels. The overall training process of the semantic segmentation model is shown in Eq. (2):

where w represents the weight parameters of the semantic segmentation neural network, ps is the predicted probability distribution, yi,j is the predicted value of the pixel (i, j) in the output image Y corresponding to the weights w, and Y* is the predicted result derived from the optimal weights w*.
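A pixel-wise maximum-likelihood form that matches these definitions is sketched below. It is an assumed formulation rather than the authors' exact expression: training maximizes the log-probability of the annotated label ci,j at every pixel, and Y* takes the most probable category at each pixel under w*.

```latex
w^{*} = \arg\max_{w} \sum_{(i,j)} \log p_s\left(c_{i,j} \mid X;\, w\right),
\qquad
y^{*}_{i,j} = \arg\max_{c \in \{1,\dots,n_c\}} p_s\left(c \mid X;\, w^{*}\right)_{(i,j)}
```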

3.2 Perceptual Information Pre-Processing

Perceptual information preprocessing aims to analyze the topological structure of the semantic output image Y* to obtain locations of scene objects, or object groups, which belong to the things class.

In this method, an image boundary tracking algorithm is used for semantic analysis. The boundary tracking algorithm encodes the boundary contour of each object as a chain code over a series of points, which yields structured information. Since the scene semantic segmentation image Y* is too complex to be analyzed directly, Y* is divided into a binary image sequence {Yi|i = 1, …, nc} according to the pixel value. As shown in Fig. 2, the original semantic segmentation image is split into a series of binary images, each representing one category. Let event A in scene perception mean that the contour of a scene object is clearly defined, and let p(A) denote the probability of event A. Then the information entropy H(A) of the scene semantic perception task can be formulated in the standard Shannon form as Eq. (3):

$$H(A) = -\sum_{i=1}^{n} p(A_i)\,\log p(A_i) \tag{3}$$

where Ai represents the event that the i-th object in the scene can be matched with its contour, and n is the number of objects. Under the principle of information entropy, the smaller the probability of an event, the more information its occurrence carries. To obtain more information, attention should therefore be focused on those objects whose contours are difficult to obtain.
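The split of Y* into per-category binary images can be illustrated with a minimal sketch. It assumes the label map is a plain row-major grid of integer category ids; the function name and the toy category ids are hypothetical.

```python
from typing import Dict, List

LabelMap = List[List[int]]  # row-major grid of category ids


def split_into_binary_masks(label_map: LabelMap, num_classes: int) -> Dict[int, LabelMap]:
    """Return one 0/1 mask per category id in 1..num_classes."""
    return {
        c: [[1 if pixel == c else 0 for pixel in row] for row in label_map]
        for c in range(1, num_classes + 1)
    }


# Toy 2x3 label map with two hypothetical categories: 1 (e.g. road) and 2 (e.g. car).
toy_labels = [[1, 1, 2],
              [1, 2, 2]]
masks = split_into_binary_masks(toy_labels, num_classes=2)
```

Each mask can then be analyzed independently, which is what makes contour extraction per category tractable.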

Based on information entropy theory, the scene elements belonging to the things class (pedestrians and vehicles), whose semantic contours are dense, are given higher priority. Specifically, according to the pixel category, Y* is scanned to form the vehicle mask image Yv = {yi,j ∈ Tagv} and the pedestrian mask image Yp = {yi,j ∈ Tagp}, where yi,j represents the category of the pixel (i, j) in Y*, and Tagv and Tagp are the sets of vehicle related tags (car, bus, truck, etc.) and pedestrian related tags (man, woman, child, etc.) respectively. Then image scaling is applied to improve the computational efficiency, and a morphological closing operation is used to denoise the mask images. In this way, Yv is enhanced to Yv′, and Yp is enhanced to Yp′. Afterwards, a boundary tracking algorithm is applied to Yv′ and Yp′, and two output sets are obtained, i.e., the set of vehicle contours Dv = {dvi|i = 1,2,…,nv} and the set of pedestrian contours Dp = {dpi|i = 1,2,…,np}, where dvi represents the contour of the i-th vehicle, dpi represents the contour of the i-th pedestrian, and nv and np are the total numbers of vehicles and pedestrians with contours respectively. Finally, the minimum rectangular bounding box of each object in Dv and Dp is calculated from its contour, and two new sets are formed as Dv′ = {vdi = box(dvi)|i = 1, 2, …, nv} and Dp′ = {pdi = box(dpi)|i = 1, 2, …, np}, where box(*) is the function that calculates the bounding rectangle. Figure 3 shows the actual effect of mask image preprocessing: the locations of candidate vehicles and pedestrians are perceived successfully.
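The mask extraction and bounding rectangle steps can be sketched as follows. This is a simplified illustration, not the procedure described above: it substitutes a 4-connected flood fill for boundary tracking, and it omits image scaling and morphological closing. The tag ids 13 and 14 are hypothetical placeholders for vehicle categories.

```python
from collections import deque
from typing import List, Set, Tuple

Box = Tuple[int, int, int, int]  # (x_min, y_min, x_max, y_max), inclusive pixels


def things_mask(label_map: List[List[int]], tags: Set[int]) -> List[List[int]]:
    """Binary mask of all pixels whose category id is in `tags`."""
    return [[1 if p in tags else 0 for p in row] for row in label_map]


def component_boxes(mask: List[List[int]]) -> List[Box]:
    """Bounding rectangle of every 4-connected foreground region, in scan order."""
    h, w = len(mask), len(mask[0])
    seen = [[False] * w for _ in range(h)]
    boxes: List[Box] = []
    for y0 in range(h):
        for x0 in range(w):
            if mask[y0][x0] == 0 or seen[y0][x0]:
                continue
            seen[y0][x0] = True
            queue = deque([(x0, y0)])
            x_min = x_max = x0
            y_min = y_max = y0
            while queue:  # breadth-first flood fill of one region
                x, y = queue.popleft()
                x_min, x_max = min(x_min, x), max(x_max, x)
                y_min, y_max = min(y_min, y), max(y_max, y)
                for nx, ny in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
                    if 0 <= nx < w and 0 <= ny < h and mask[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((nx, ny))
            boxes.append((x_min, y_min, x_max, y_max))
    return boxes


labels = [[0, 13, 13, 0, 0],
          [0, 13, 13, 0, 14],
          [0, 0, 0, 0, 14]]
vehicle_tags = {13, 14}  # hypothetical ids standing in for Tagv
boxes = component_boxes(things_mask(labels, vehicle_tags))
```

Note that touching objects of related categories fall into a single region here, which mirrors the object-group limitation of semantic segmentation that the later combination step addresses.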

3.3 Perceptual Information Combination

Perception information combination is based on the semantic perception results Dv′ and Dp′, and the object detection results O*. Before the combination procedure, O* is divided into a set of candidate vehicles Ov = {voj = oi|oi ∈ Tagv, j = 1, 2, …, nv′} and a set of candidate pedestrians Op = {poj = oi|oi ∈ Tagp, j = 1, 2, …, np′}, where oi ∈ Tagv means that the category of oi is a vehicle related tag, and nv′ and np′ are the numbers of detected vehicles and pedestrians respectively. The way in which the combination enhances the perception effect is detailed as follows.
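The partition of O* into Ov and Op can be sketched with the tuple (c, x, y, w, h, t) from Sect. 3.1. The class name, the helper names and the concrete tag sets below are assumptions for illustration, and the conversion to pixel corners assumes that the relative values are fractions of the image width and height.

```python
from dataclasses import dataclass
from typing import Iterable, List, Tuple


@dataclass(frozen=True)
class Detection:
    c: str    # category
    x: float  # relative abscissa of the box center
    y: float  # relative ordinate of the box center
    w: float  # relative width
    h: float  # relative height
    t: float  # confidence in [0, 1]

    def to_pixel_box(self, img_w: int, img_h: int) -> Tuple[float, float, float, float]:
        """Corner form (x_min, y_min, x_max, y_max) in pixels."""
        cx, cy = self.x * img_w, self.y * img_h
        half_w, half_h = self.w * img_w / 2, self.h * img_h / 2
        return (cx - half_w, cy - half_h, cx + half_w, cy + half_h)


TAG_V = {"car", "bus", "truck"}  # assumed vehicle related tags
TAG_P = {"person", "rider"}      # assumed pedestrian related tags


def split_detections(dets: Iterable[Detection]) -> Tuple[List[Detection], List[Detection]]:
    """Return (Ov, Op); detections of any other category are dropped."""
    dets = list(dets)
    ov = [d for d in dets if d.c in TAG_V]
    op = [d for d in dets if d.c in TAG_P]
    return ov, op


detections = [
    Detection("car", 0.5, 0.5, 0.25, 0.125, 0.9),
    Detection("person", 0.25, 0.5, 0.125, 0.5, 0.8),
    Detection("traffic light", 0.125, 0.125, 0.0625, 0.125, 0.7),
]
ov, op = split_detections(detections)
```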

A pairing matching algorithm is used to filter the false positives. First, the elements of both Dv′ and Ov are unified into the same image coordinate system. Then, every object in Dv′ is compared with the objects in Ov. For vdi ∈ Dv′ and voj ∈ Ov, if IOU(vdi, voj) > Tiou, the pair (vdi, voj) is added to the candidate vehicle queue V, where IOU(*) is the function that calculates the spatial overlap (intersection over union) of two rectangular boxes and Tiou is a threshold parameter. Since there is a one-to-one correspondence between vdi from Dv′ and dvi from Dv, for each pair of elements (vdi, voj) in V, a new set Gv = {gvj|j = 1, 2, …, ngv} can be constructed, with gvj = {(xp,yp)|(xp,yp) ∈ dvi and φ((xp,yp), voj) = 1} consisting of the points of contour dvi that lie in region voj. Here (xp,yp) represents the coordinates of a boundary point, φ((xp,yp), voj) is an indicator function of whether the point (xp,yp) is in region voj, and ngv is the number of elements of the set Gv. Meanwhile, voj is added to the final bounding box set of vehicles Vf. It is worth noting that the pairing matching operation not only filters the false positives in the object detection results, but also prepares for recalling the false negatives.
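A minimal sketch of the pairing matching step is given below. Boxes are assumed to be in corner form (x_min, y_min, x_max, y_max) in a shared coordinate system, and the threshold value 0.5 is an assumption, since the value of Tiou is not stated here.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = min(a[2], b[2]) - max(a[0], b[0])
    inter_h = min(a[3], b[3]) - max(a[1], b[1])
    if inter_w <= 0 or inter_h <= 0:
        return 0.0
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    return inter / (area_a + area_b - inter)


def pair_match(seg_boxes: Sequence[Box], det_boxes: Sequence[Box],
               t_iou: float = 0.5) -> List[Tuple[int, int]]:
    """Index pairs (i, j) with IOU(seg_boxes[i], det_boxes[j]) > t_iou."""
    return [
        (i, j)
        for i, vd in enumerate(seg_boxes)
        for j, vo in enumerate(det_boxes)
        if iou(vd, vo) > t_iou
    ]


seg_boxes = [(0, 0, 10, 10), (50, 50, 60, 60)]    # boxes from Dv'
det_boxes = [(1, 1, 10, 10), (80, 80, 90, 90)]    # boxes from Ov
pairs = pair_match(seg_boxes, det_boxes)
kept_detections = sorted({j for _, j in pairs})   # detections confirmed by segmentation
```

In this toy case the second detection has no overlapping segmentation box and is dropped as a false positive, while the second segmentation box remains unmatched and becomes a candidate for the recall step.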

Based on the pairing matching results, the false negatives can be recalled by finding missing objects in the pre-processed mask images. First of all, in the mask image Yv′, the mask regions covered by the boxes in Vf are removed by setting their pixel values to 0. Secondly, a boundary tracking algorithm is applied to the updated image Yv′ to obtain the supplementary candidate vehicle contour set Dw = {dwi|i = 1, 2, …, nvw}, where dwi represents the contour of the i-th candidate vehicle, and nvw is the total number of supplementary candidate vehicles. Thirdly, the rectangular bounding boxes of the contours in Dw are calculated and form the set Vw = {vwi|i = 1, 2, …, nvw}. As shown in Fig. 4, the recalled false negatives may introduce new noise, which reduces the accuracy of the recall results.
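The first recall step, clearing the regions already explained by Vf, can be sketched as below. The function name is hypothetical, and boxes are assumed to be inclusive integer pixel rectangles.

```python
from typing import List, Sequence, Tuple

Box = Tuple[int, int, int, int]  # (x_min, y_min, x_max, y_max), inclusive pixels


def erase_boxes(mask: List[List[int]], boxes: Sequence[Box]) -> List[List[int]]:
    """Return a copy of `mask` with every pixel inside any box set to 0."""
    out = [row[:] for row in mask]
    h, w = len(out), len(out[0])
    for x_min, y_min, x_max, y_max in boxes:
        # Clip the box to the image so out-of-range boxes are handled safely.
        for y in range(max(0, y_min), min(h - 1, y_max) + 1):
            for x in range(max(0, x_min), min(w - 1, x_max) + 1):
                out[y][x] = 0
    return out


vehicle_mask = [[1, 1, 0, 0, 1],
                [1, 1, 0, 0, 1]]
final_boxes = [(0, 0, 1, 1)]  # boxes already accepted into Vf
remaining = erase_boxes(vehicle_mask, final_boxes)
```

Whatever foreground survives in `remaining` is a candidate missed detection, to be turned into boxes by the same contour extraction used in preprocessing.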

In order to filter the noise and extract the main body of each object, the recalled false negatives need to be post-processed. Specifically, the recalled results with a very small area are filtered out first. Then, for every vwi ∈ Vw, the proportion rwi of pixels belonging to the vehicle category within the total rectangular area is calculated. If rwi > Trate, vwi is added to the set Vf, the final bounding box set of vehicles; otherwise, the current bounding box is not ideal because it includes a large number of irrelevant pixels.
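The area filter and the fill-ratio test can be sketched as follows. The threshold values `min_area` and `t_rate` are assumed for illustration only, since the values of the area limit and Trate are not given in the text.

```python
from typing import List, Sequence, Tuple

Box = Tuple[int, int, int, int]  # (x_min, y_min, x_max, y_max), inclusive pixels


def box_area(box: Box) -> int:
    x_min, y_min, x_max, y_max = box
    return (x_max - x_min + 1) * (y_max - y_min + 1)


def fill_ratio(mask: List[List[int]], box: Box) -> float:
    """Share of foreground pixels inside the box (r_wi in the text)."""
    x_min, y_min, x_max, y_max = box
    hits = sum(mask[y][x] for y in range(y_min, y_max + 1) for x in range(x_min, x_max + 1))
    return hits / box_area(box)


def triage(mask: List[List[int]], boxes: Sequence[Box],
           min_area: int = 4, t_rate: float = 0.6) -> Tuple[List[Box], List[Box]]:
    """Split recalled boxes into (accepted, needs_refinement); tiny boxes are dropped."""
    accepted: List[Box] = []
    needs_refinement: List[Box] = []
    for box in boxes:
        if box_area(box) < min_area:
            continue
        if fill_ratio(mask, box) > t_rate:
            accepted.append(box)
        else:
            needs_refinement.append(box)
    return accepted, needs_refinement


mask = [[1, 1, 0, 0, 0, 0],
        [1, 1, 0, 1, 0, 0],
        [0, 0, 0, 1, 1, 1],
        [0, 0, 0, 0, 0, 1]]
recalled = [(0, 0, 1, 1), (3, 1, 5, 3), (0, 0, 0, 0)]
accepted, needs_refinement = triage(mask, recalled)
```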

For the latter case, a grid-based contour vertex clustering algorithm is designed to iteratively refine the candidate bounding box. In the image coordinate system, the current region vwi is divided into four sub-regions by a grid. Accordingly, the number of clusters for the K-means algorithm is set to 4, and each cluster center is initialized as the center of the corresponding grid cell. The Euclidean distance is adopted as the distance measure for the boundary points, and the final center points of the four clusters are denoted pc1, pc2, pc3 and pc4. Afterwards, the enclosing bounding box, denoted vwi*, is calculated and added to the set Vf. Since there is a one-to-one correspondence between vwi* and dwi from Dw, the set Gv is supplemented with gv = {(xp,yp)|(xp,yp) ∈ dwi and φ((xp,yp), vwi*) = 1}. As shown in Fig. 5, although the original recalled false negative result is rough, the result refined by the proposed K-means based vertex clustering algorithm is much more detailed.
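The refinement step can be sketched under one stated reading of the text: the refined box vwi* is taken to be the enclosing rectangle of the four final cluster centers. That reading, the iteration limit and all names below are assumptions rather than the authors' implementation.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]
Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def enclosing_box(points: Sequence[Point]) -> Box:
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    return (min(xs), min(ys), max(xs), max(ys))


def grid_kmeans(points: Sequence[Point], box: Box, max_iter: int = 20) -> List[Point]:
    """K-means with k = 4, initialized at the cell centers of a 2x2 grid over `box`."""
    x_min, y_min, x_max, y_max = box
    w, h = x_max - x_min, y_max - y_min
    centres = [(x_min + fx * w, y_min + fy * h)
               for fy in (0.25, 0.75) for fx in (0.25, 0.75)]
    for _ in range(max_iter):
        clusters: List[List[Point]] = [[] for _ in centres]
        for px, py in points:
            # Assign each contour point to the nearest center (squared Euclidean distance).
            nearest = min(range(4),
                          key=lambda k: (px - centres[k][0]) ** 2 + (py - centres[k][1]) ** 2)
            clusters[nearest].append((px, py))
        # An empty cluster keeps its previous center.
        updated = [
            (sum(x for x, _ in c) / len(c), sum(y for _, y in c) / len(c)) if c else centres[k]
            for k, c in enumerate(clusters)
        ]
        if updated == centres:
            break
        centres = updated
    return centres


# Contour points of a compact body plus two outlying noise points on the right.
contour = [(0, 0), (2, 0), (4, 0), (0, 2), (4, 2), (0, 4), (2, 4), (4, 4), (12, 0), (12, 1)]
rough_box = enclosing_box(contour)
centres = grid_kmeans(contour, rough_box)
refined_box = enclosing_box(centres)
```

Because every center is either a mean of contour points or an initial grid cell center, the refined box always lies inside the rough box, so repeating the step can only tighten it.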

After the above joint algorithm, the set Vf stores the jointly enhanced vehicle bounding boxes, the set Gv records the vehicle contours, and the elements of Vf and Gv are mapped one-to-one. By using the same algorithm, the jointly enhanced results for pedestrians can also be obtained and stored in Pf and Gp. In Fig. 6, each row is a scene sample that compares the results before processing with those obtained from the joint pre-perception algorithm. The joint pre-perception algorithm removes the false positive objects and recalls the false negative ones.

Finally, perception results in the panoptic segmentation style can be obtained after scene element contour matching. Specifically, according to the one-to-one relationship between Vf and Gv, together with the correspondence between Pf and Gp, instance-like segmentation results can be generated. Fusing these instance-like segmentation results with the basic semantic perception information under heuristic rules then provides results similar to panoptic segmentation. As shown in Fig. 7, scene elements of both the stuff class and the things class are presented in a panoptic segmentation style.
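A simplified sketch of such a fusion is given below. It assigns instance ids by bounding box only, whereas the method described above matches contours, and the rule that the first box wins on overlap is an assumed heuristic. The category ids 7 and 13 are hypothetical.

```python
from typing import List, Sequence, Set, Tuple

Box = Tuple[int, int, int, int]  # (x_min, y_min, x_max, y_max), inclusive pixels


def fuse(semantic: List[List[int]], instance_boxes: Sequence[Box],
         things_tags: Set[int]) -> List[List[Tuple[int, int]]]:
    """Return a grid of (category, instance_id); stuff pixels keep instance_id 0."""
    out = [[(c, 0) for c in row] for row in semantic]
    for inst_id, (x_min, y_min, x_max, y_max) in enumerate(instance_boxes, start=1):
        for y in range(y_min, y_max + 1):
            for x in range(x_min, x_max + 1):
                category, current = out[y][x]
                # Only unassigned things pixels receive an instance id.
                if category in things_tags and current == 0:
                    out[y][x] = (category, inst_id)
    return out


semantic = [[7, 13, 13, 13],
            [7, 13, 13, 13]]          # 7: a stuff category, 13: a things category
instance_boxes = [(1, 0, 2, 1), (3, 0, 3, 1)]
panoptic = fuse(semantic, instance_boxes, things_tags={13})
```

In this toy case a single connected vehicle region is separated into two instances, while the stuff pixels pass through unchanged.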

4 Experiments

The proposed joint perception algorithm effectively combines the results of object detection and semantic segmentation and achieves an effect similar to panoptic segmentation. Therefore, the three criteria defined for panoptic segmentation in paper [2] are used for evaluation, i.e., PQ (panoptic quality), SQ (segmentation quality) and RQ (recognition quality). The formulations are shown in Eq. (4):

$$\mathrm{PQ} = \frac{\sum_{(p,g)\in TP}\mathrm{IOU}(p,g)}{|TP| + \frac{1}{2}|FP| + \frac{1}{2}|FN|} = \underbrace{\frac{\sum_{(p,g)\in TP}\mathrm{IOU}(p,g)}{|TP|}}_{\mathrm{SQ}} \times \underbrace{\frac{|TP|}{|TP| + \frac{1}{2}|FP| + \frac{1}{2}|FN|}}_{\mathrm{RQ}} \tag{4}$$

where p is a predicted segment, g is a ground-truth segment, TP is the set of true positives (matched pairs of p and g), FP is the set of false positives (unmatched predicted segments), FN is the set of false negatives (unmatched ground-truth segments), and IOU(p, g) is the ratio of the number of pixels in the intersection of p and g to the number of pixels in their union. RQ is the F1-score widely used in object detection, and measures the accuracy of object recognition over the elements of the scene. SQ is the average IOU of the matched predicted and ground-truth segments. As discussed in paper [2], the decomposition PQ = SQ × RQ provides insight for analysis, and PQ measures the performance of all classes in a uniform way using a simple and interpretable formula. Generally, IOU(p, g) > 0.5 is regarded as the matching condition.
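The three metrics can be computed from the IOU values of the matched pairs and the counts of unmatched segments, following the definitions in [2]. The function name and the convention of returning zeros when there are no segments at all are assumptions of this sketch.

```python
from typing import Sequence, Tuple


def panoptic_quality(matched_ious: Sequence[float], num_fp: int, num_fn: int) -> Tuple[float, float, float]:
    """Return (PQ, SQ, RQ) for one category.

    matched_ious holds IOU(p, g) for each true positive pair (each > 0.5),
    num_fp counts unmatched predictions, num_fn counts unmatched ground truths.
    """
    num_tp = len(matched_ious)
    denominator = num_tp + 0.5 * num_fp + 0.5 * num_fn
    if denominator == 0:
        return 0.0, 0.0, 0.0  # assumed convention for an empty category
    sq = sum(matched_ious) / num_tp if num_tp else 0.0
    rq = num_tp / denominator
    return sq * rq, sq, rq


pq, sq, rq = panoptic_quality([0.75, 0.625, 0.875], num_fp=1, num_fn=1)
```

With three matches averaging an IOU of 0.75 and one unmatched segment on each side, both SQ and RQ equal 0.75, which shows how either segmentation errors or recognition errors alone can pull PQ down.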

In order to demonstrate the effectiveness and accuracy of the proposed method, three groups of experiments are carried out: upper limit verification, lower limit verification, and cross verification. The upper limit verification is carried out when the results of semantic segmentation and object detection are completely consistent with the actual annotations, i.e., the ground truth is used as input to the proposed joint perception algorithm. The lower limit verification is carried out when the two basic perception results are poor, i.e., the perception models are trained on different datasets. The additional cross verification is designed to evaluate how each kind of basic perceptual information influences the final performance.

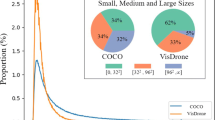

In order to facilitate the comparison with panoptic segmentation methods, the proposed method is tested on the validation set of the Cityscapes dataset. All tested methods follow the same protocol: the 19 categories in the original data annotation are used, including 11 stuff categories (road, sidewalk, building, wall, fence, pole, traffic light, traffic sign, vegetation, terrain and sky) and 8 things categories (person, rider, car, truck, bus, train, motorcycle and bicycle). Specifically, the upper limit verification directly uses the pixel-level semantic segmentation annotation and the bounding-box-level object detection annotation, and the lower limit verification uses two models released with OpenVINO: semantic-segmentation-adas-0001 and person-vehicle-bike-detection-crossroad-0078. The object detection model was trained on data from fixed-viewpoint traffic scenes, which meets the requirements for the perception model in the lower limit evaluation. The cross verification uses the two remaining combinations, A + O and O + A, shown in Table 1.

As shown in Table 1, the upper limit validation experiment indicates that the theoretical upper limit of the proposed method approaches the ground truth of panoptic segmentation. In other words, when the basic semantic segmentation and object detection results are perfect, the proposed joint algorithm works well. When both basic results are quite poor, the lower limit remains acceptable, although there is a gap between the proposed algorithm and the state of the art. As shown in Table 2, the PQ values in the upper limit validation experiment are generally high but not ideal for some semantic categories. Specifically, PQ is relatively low for categories with many instances, such as pedestrians and vehicles, because instances in these categories are likely to occlude one another. As shown in Table 3, in the lower limit validation experiment the SQ values of the proposed method are not low, but poor RQ values can lead to unsatisfactory PQ values. This means that the perception results contain many false positives and false negatives, which can be attributed to the basic semantic segmentation results. In addition to these metrics, Fig. 7 shows the panoptic-level segmentation results of the lower limit validation experiment. The scale of a scene element is clearly an important factor affecting perception performance. The additional mixed experiments in Table 1 show that when the semantic segmentation result is perfect, the proposed algorithm maintains high performance even if the object detection result is poor; conversely, when the semantic segmentation result is poor and the object detection result is perfect, the result of the proposed joint algorithm is close to that of the lower limit evaluation. The quality of the basic semantic segmentation is therefore the dominant factor in perception performance, whereas the quality of the basic object detection has a much smaller influence. In general, the proposed joint perception algorithm is effective and has high accuracy.
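The relationship between SQ, RQ and PQ discussed above can be illustrated with the standard panoptic quality definition from [2], where PQ = SQ × RQ for each class. The following is a minimal sketch rather than the evaluation code used in the experiments; the function name `panoptic_quality` and the sample numbers are hypothetical and serve only to show how a reasonable SQ can coexist with a low PQ when false positives and false negatives are numerous.

```python
def panoptic_quality(matched_ious, num_fp, num_fn):
    """Return (PQ, SQ, RQ) for a single class.

    matched_ious: IoU of each true-positive match between a predicted
                  and a ground-truth segment (a match requires IoU > 0.5).
    num_fp:       number of unmatched predicted segments.
    num_fn:       number of unmatched ground-truth segments.
    """
    num_tp = len(matched_ious)
    denominator = num_tp + 0.5 * num_fp + 0.5 * num_fn
    if denominator == 0:
        # No predicted and no ground-truth segments for this class.
        return 0.0, 0.0, 0.0
    # Segmentation quality: mean IoU over the matched segments.
    sq = sum(matched_ious) / num_tp if num_tp else 0.0
    # Recognition quality: an F1-style score over segments.
    rq = num_tp / denominator
    return sq * rq, sq, rq


# Hypothetical example: two well-segmented matches,
# but three false positives and three false negatives.
pq, sq, rq = panoptic_quality([0.8, 0.8], num_fp=3, num_fn=3)
print(f"PQ={pq:.2f} SQ={sq:.2f} RQ={rq:.2f}")
```

In this hypothetical case SQ is 0.80 while RQ is only 0.40, so PQ drops to 0.32, which mirrors the pattern reported for the lower limit experiment.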

5 Conclusion

In this paper, a joint perception algorithm for traffic scenes is proposed, which combines object detection and semantic segmentation. This is an attempt at holistic traffic scene parsing. Guided by the principle of information entropy, the algorithm targets the perception of pedestrians and vehicles. Through the flexible application of image processing techniques, the joint perception algorithm achieves panoptic-level segmentation performance. The proposed method balances accuracy and practicality without the complex data annotation that panoptic segmentation requires. Competitive performance is achieved on the Cityscapes dataset, and the importance of the basic semantic segmentation results in the joint process is verified. Since no particular basic perception model needs to be specified, the algorithm is widely applicable. However, compared with state-of-the-art panoptic segmentation techniques, the proposed method still has shortcomings in instance segmentation. Because the proposed method relies on the results of both basic semantic segmentation and object detection, mistakes in either will lead to undesirable results. Occlusions or similar-looking traffic scene elements may also cause errors when the semantic boundaries of adjacent elements are calculated. Thus, there is still room for improvement in traffic scene perception algorithms based on joint object detection and semantic segmentation.

References

Tighe J, Niethammer M, Lazebnik S. (2014) Scene parsing with object instances and occlusion ordering. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 3748–3755

Kirillov A, He K, Girshick R, et al. (2019) Panoptic segmentation. In: 2019 IEEE/CVF conference on computer vision and pattern recognition (CVPR), Long Beach, CA, USA, pp 9396–9405

Ren S, He K, Girshick R, Sun J (2017) Faster R-CNN: towards real-time object detection with region proposal networks. IEEE Trans Pattern Anal Machine Intell. https://doi.org/10.1109/TPAMI.2016.2577031

Lin T, Goyal P, Girshick R, et al. (2017) Focal loss for dense object detection. In: Proceedings of the IEEE international conference on computer vision pp 2980–2988

Liu W, Anguelov D, Erhan D et al (2016) SSD: single shot multibox detector. In: Leibe Bastian, Matas Jiri, Sebe Nicu, Welling Max (eds) Computer Vision – ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, October 11–14, 2016, Proceedings, Part I. Springer International Publishing, Cham, pp 21–37

Redmon J, Divvala S, Girshick R, Farhadi A. (2016) You only look once: Unified, real-time object detection. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 779–788

Duan K, Bai S, et al. (2019) Centernet: Keypoint triplets for object detection. In: Proceedings of the IEEE/CVF international conference on computer vision, pp 6569–6578

Long J, Shelhamer E, Darrell T. (2015) Fully convolutional networks for semantic segmentation. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 3431–3440

Garcia-Garcia A, Orts-Escolano S, Oprea S et al (2018) A survey on deep learning techniques for image and video semantic segmentation. Appl Soft Comput 70:41–65

Ronneberger O, Fischer P, Brox T (2015) U-Net: convolutional networks for biomedical image segmentation. In: International conference on medical image computing and computer-assisted intervention, Munich, Germany, pp 234–241

Jégou S, Drozdzal M, Vazquez D, Romero A, Bengio Y. (2017) The one hundred layers tiramisu: Fully convolutional densenets for semantic segmentation. In: Proceedings of the IEEE conference on computer vision and pattern recognition workshops, pp 11–19

Badrinarayanan V, Kendall A, Cipolla R (2017) Segnet: A deep convolutional encoder-decoder architecture for image segmentation. IEEE Trans Pattern Anal Mach Intell 39(12):2481–2495

Chen L, Papandreou G, Kokkinos I, Murphy K, Yuille AL (2018) DeepLab: semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected CRFs. IEEE Trans Pattern Anal Machine Intel 40(4):834–848

Yu C, Wang J, Peng C, et al. (2018) BiSeNet: bilateral segmentation network for real-time semantic segmentation. In: European conference on computer vision, Munich, Germany, pp 334–349

Siam M, Gamal M, Abdel-Razek M, et al. (2018) A comparative study of real-time semantic segmentation for autonomous driving. In: IEEE/CVF conference on computer vision and pattern recognition workshops (CVPRW), Salt Lake City, UT, pp 700–710.

He K, Gkioxari G, Dollár P, Girshick R. (2017) Mask R-CNN. In: Proceedings of the IEEE international conference on computer vision, Venice, Italy, pp 2961–2969

Kirillov A, Girshick R, He K, Dollár P. (2019) Panoptic feature pyramid networks. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, Long Beach, CA, USA, pp 6399–6408

Sofiiuk K, Barinova O, Konushin A. (2019) Adaptis: Adaptive instance selection network. In: Proceedings of the IEEE/CVF international conference on computer vision, Seoul, Korea pp 7355–7363

Yang Y, Li H, Zhao Q et al (2020) SOGNet: scene overlap graph network for panoptic segmentation. Proc AAAI Conf Artif Intel 34(07):12637–12644

Porzi L, Bulò SR, Colovic A, Kontschieder P (2019) Seamless scene segmentation. 2019 IEEE/CVF conference on computer vision and pattern recognition (CVPR), Long Beach, CA, USA, pp 8269–8278

Xiong Y, Liao R, Zhao H, Hu R, Bai M, Yumer E, Urtasun R. (2019) Upsnet: a unified panoptic segmentation network. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 8818–8826

Li J, Bhargava A, Tagawa T et al. (2019) Learning to fuse things and stuff. arXiv preprint arXiv:1812.01192v2

Li Y et al. (2019) Attention-guided unified network for panoptic segmentation. In: 2019 IEEE/CVF conference on computer vision and pattern recognition (CVPR), Long Beach, CA, USA, 2019, pp 7019–7028

Yang TJ, Collins MD, Zhu Y, Hwang JJ, Liu T, Zhang X, Sze V, Papandreou G, Chen LC. (2019) Deeperlab: Single-shot image parser. arXiv preprint arXiv:1902.05093

Liu H, Peng C, Yu C, Wang J, Liu X, Yu G, Jiang W. (2019) An end-to-end network for panoptic segmentation. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition pp 6172–6181

Li Y, Zhao H, Qi X, et al. (2021) Fully convolutional networks for panoptic segmentation. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition. pp 214–223

Wang H, Zhu Y, Adam H, Yuille A, Chen LC. (2021) Max-deeplab: End-to-end panoptic segmentation with mask transformers. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition pp 5463–5474

Mohan R, Valada A (2021) Efficientps: Efficient panoptic segmentation. Int J Comput Vision 129(5):1551–1579

Cordts M, Omran M, Ramos S, et al. (2016) The cityscapes dataset for semantic urban scene understanding. In: Proceedings of the IEEE conference on computer vision and pattern recognition pp 3213–3223

Tu Z, Chen X, Yuille AL, Zhu SC et al. (2003) Image parsing: unifying segmentation, detection, and recognition. In: Proceedings ninth IEEE international conference on computer vision, Nice, France, pp 18–25

Yao J, Fidler S, Urtasun R. (2012) Describing the scene as a whole: Joint object detection, scene classification and semantic segmentation. In: 2012 IEEE conference on computer vision and pattern recognition, Providence, RI, pp 702–709. IEEE.

Fan R, Dahnoun N (2018) Real-time stereo vision-based lane detection system. Measure Sci Technol 29(7):074005

Yang K, Wang K, Zhao X, Cheng R, Bai J, Yang Y, Liu D (2017) IR stereo RealSense: decreasing minimum range of navigational assistance for visually impaired individuals. J Ambient Intel Smart Environ 9(6):743–755. https://doi.org/10.3233/AIS-170459

Zhou W, Worrall S, Zyner A, Nebot E. (2018) Automated process for incorporating drivable path into real-time semantic segmentation. In: 2018 IEEE International Conference on Robotics and Automation (ICRA) Brisbane, QLD pp 6039–6044. IEEE

Teichmann M, Weber M, Zoellner M, Cipolla R, Urtasun R. (2018) Multinet: Real-time joint semantic reasoning for autonomous driving. In: 2018 IEEE intelligent vehicles symposium (IV) Changshu pp. 1013–1020

Kendall A, Gal Y, Cipolla R. (2018) Multi-task learning using uncertainty to weigh losses for scene geometry and semantics. In: 2018 IEEE/CVF conference on computer vision and pattern recognition, Salt Lake City, UT, pp 7482–7491

Huo Z, Xia Y, Zhang B (2016) Vehicle type classification and attribute prediction using multi-task RCNN. In: 9th International congress on image and signal processing, biomedical engineering and informatics (CISP-BMEI), Datong, pp 564–569

Dvornik N, Shmelkov K, Mairal J, Schmid C. (2017) Blitznet: A real-time deep network for scene understanding. In: Proceedings of the IEEE international conference on computer vision pp 4154–4162

Cheng Z, Wang Z, Huang H, Liu Y. (2019) Dense-acssd for end-to-end traffic scenes recognition. In: 2019 IEEE Intelligent Vehicles Symposium (IV) Paris, France, pp 460–465

Acknowledgements

This work was supported by the National Key Research and Development Project of China under Grant No. 2020AAA0104001, the Zhejiang Lab under Grant No. 2019KD0AD011005 and the Zhejiang Provincial Natural Science Foundation of China under Grant No. LQ22F020008.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Cite this article

Weng, L., Wang, Y. & Gao, F. Traffic Scene Perception Based on Joint Object Detection and Semantic Segmentation. Neural Process Lett 54, 5333–5349 (2022). https://doi.org/10.1007/s11063-022-10864-z
